A Generalized Method for Filtering Noise in Open-Source Project Selection

Abstract

1. Introduction

- Identifying Limitations in Existing Methods: We uncover critical shortcomings (e.g., missing essential keywords) in prior approaches, which hinder their effectiveness across different datasets.

- Proposing an Automated Solution: We introduce a robust method that overcomes the limitations of existing techniques, achieving an F-measure ranging from 0.780 to 0.893 in CCP classification, as validated on multiple datasets.

2. Related Work

2.1. Datasets Used in Studying GitHub Repositories

2.2. Risk Avoidance Strategies in Selecting Project Samples

2.3. Project Sample Selection Process in Studies on GHTorrent Dataset

3. Collaborative Coding Projects

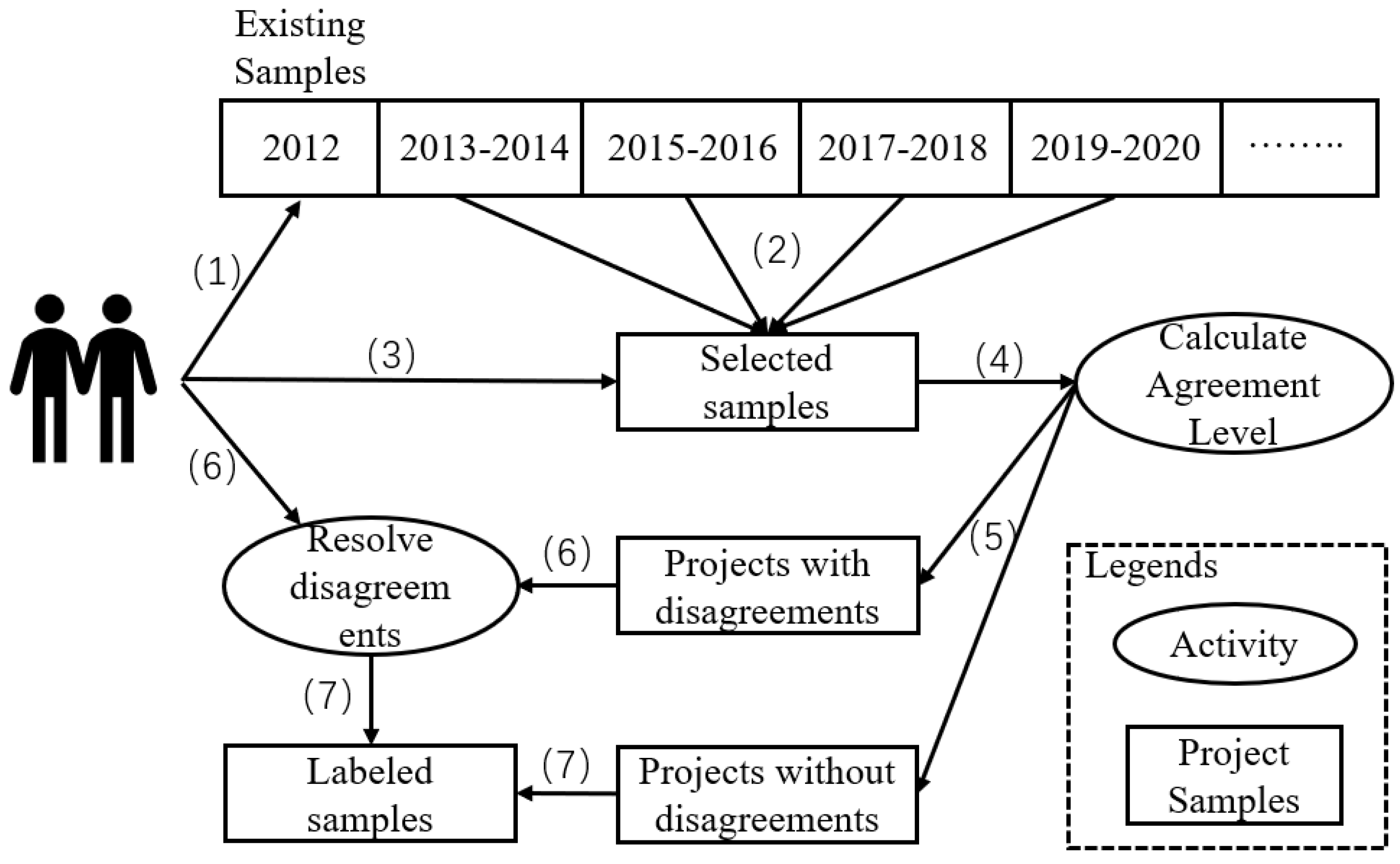

3.1. Labeling Process

- (1) Learn annotation standards: We provided the labeled dataset to two participants, identified ambiguous items, and discussed the findings to finalize the labeling details.

- (2) Randomly select samples: Based on the aforementioned discussion, we chose 1/1000 of the items every two years as the samples.

- (3) Label samples: Following Definition 1 and Definition 2, as well as the labeling details from the first step, the two participants labeled the samples independently.

- (4) Calculate agreement level: We used Cohen’s Kappa coefficient to measure the agreement between the labels of the two participants.

- (5) Divide samples: We computed the set of samples on which the participants agreed and the set on which they disagreed.

- (6) Resolve disagreements: We settled labeling disagreements through discussion with the two participants.

- (7) Generate complete labels for samples: We merged the two label sets to form the final labeled dataset.
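
Steps (4) and (5) can be illustrated with a minimal sketch. It assumes binary labels (1 for a CCP, 0 otherwise) stored in two equal-length lists; the function names `cohens_kappa` and `split_by_agreement` are hypothetical and are not taken from the study's tooling.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


def split_by_agreement(labels_a, labels_b):
    """Return (agreed, disagreed) lists of item indices."""
    agreed, disagreed = [], []
    for i, (a, b) in enumerate(zip(labels_a, labels_b)):
        (agreed if a == b else disagreed).append(i)
    return agreed, disagreed


# Toy example with ten items (illustrative data only).
annotator_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
annotator_2 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 1]
kappa = cohens_kappa(annotator_1, annotator_2)
agreed, disagreed = split_by_agreement(annotator_1, annotator_2)
```

The indices in `disagreed` are the items that would go to the discussion in step (6).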

3.2. Labeling Results

4. Analysis of Existing Methods

4.1. Costs of Existing Methods

4.2. Accuracy of Existing Methods

4.3. Weaknesses of Existing Methods

4.3.1. Weaknesses of the Baseline Method

4.3.2. Weaknesses of SADTM

5. Our Method

5.1. Overcoming the Weaknesses of SADTM

- (1) We examined the existing keywords employed by SADTM and observed that these terms often reflect the development types of projects (e.g., app, tool, and plugin). This insight motivated us to explore methods of automatically tagging CCPs in an OSS environment.

- (2) We examined existing methods of tagging projects. However, traditional tagging approaches primarily generate generalized tags (e.g., Java) for projects [40,41]. These methods require an additional training dataset (e.g., projects with manually assigned tags) and do not meet our requirement of extracting keywords directly from project descriptions. Therefore, we explored more general text mining techniques. Term Frequency–Inverse Document Frequency (TF-IDF) is widely used as a weighting factor in information retrieval and text mining [42]. Meanwhile, the Latent Dirichlet Allocation (LDA) model is commonly employed to analyze topics in software development [43]. Both methods require only a corpus (in this study, the corpus consists of CCP descriptions) and can generate the keywords that we need.

- (1) The Corpus: The descriptions of the environment projects of the sample projects in the standard dataset. (Note that the samples that we labeled are only some of the projects established in a given period. The descriptions of the labeled projects alone are not sufficient, because text mining needs a large amount of training data to ensure the stability of the training results. Therefore, we collected all projects established in the corresponding period (e.g., 2013–2014) as the training corpus for LDA or TF-IDF.)

- (2) Keyword Selection: Cheng et al. proposed SADTM, which employs dozens of keywords and achieved accurate project classification [34] on the 2012 dataset. This demonstrates that a limited set of keywords is sufficient for identifying CCPs. Accordingly, we selected 80 keywords, a number comparable to SADTM’s keyword count, to ensure a fair comparison between our method and the existing approaches (SADTM and the baseline method) while avoiding the curse of dimensionality. (This concept refers to the phenomenon whereby fitting a model becomes inefficient if the dataset contains too many features. For the prediction of CCPs, the more keywords our model includes, the more information it can leverage and the better the results may be. However, an excessive number of features seriously reduces the efficiency of model generation.)

- (3) TF-IDF: We evaluated TF-IDF and IDF on the corpus and observed that ranking the TF-IDF scores from high to low is essentially equivalent to ranking DF (the document frequency, i.e., the number of documents in which a word appears) from low to high. This is because, in CCP descriptions, a word typically appears only once. Consequently, we applied IDF to the corpus and manually assessed the interpretability of the resulting keywords. For instance, the keyword “framework” is meaningful, as a CCP containing this term is likely a reusable framework for developers. In contrast, the keyword “2015” is uninformative, as it frequently appears in CCPs created that year.

- (4) LDA: Our analysis reveals that the keywords in SADTM effectively summarize project types. Consequently, when applying LDA, it is essential to select the most representative term for each topic as the key identifier. In this study, we performed LDA on the corpus and extracted the top words for each topic (i.e., those most semantically relevant) to serve as topic labels. As with TF-IDF, we engaged participants in manually evaluating the interpretability of these keywords. Parameters: (this is the parameter that can control the number of generated topics), , and .
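
The relationship between TF-IDF, IDF, and DF described in the TF-IDF item can be checked with a small sketch. It assumes the common definition idf(w) = log(N / df(w)); the exact IDF variant, the tokenizer, and the stop-word list used in the study are not stated here, so `tokenize`, `STOP_WORDS`, and the toy descriptions below are hypothetical. When a word occurs at most once per description, its raw term frequency is 1 and its TF-IDF score equals its IDF score, which decreases as DF increases.

```python
import math
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption, not the study's list).
STOP_WORDS = {"a", "an", "the", "for", "of", "and", "to", "in", "with"}


def tokenize(description):
    """Lowercase, split on non-alphanumeric characters, drop stop words."""
    words = re.findall(r"[a-z0-9]+", description.lower())
    return [w for w in words if w not in STOP_WORDS]


def document_frequency(descriptions):
    """Number of descriptions in which each word appears at least once."""
    df = Counter()
    for description in descriptions:
        df.update(set(tokenize(description)))
    return df


def idf_scores(descriptions):
    """IDF per word, using idf(w) = log(N / df(w))."""
    n = len(descriptions)
    return {w: math.log(n / c) for w, c in document_frequency(descriptions).items()}


corpus = [
    "A framework for web apps",
    "Simple web framework",
    "My dotfiles",
    "Tool for web scraping",
]
df = document_frequency(corpus)
idf = idf_scores(corpus)
# Ranking by IDF (high to low) is the same as ranking by DF (low to high).
by_idf_desc = sorted(idf, key=lambda w: (-idf[w], w))
by_df_asc = sorted(df, key=lambda w: (df[w], w))
```

How the top 80 words per period are then chosen from these scores and manually vetted is left out of the sketch.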

5.2. Method Design

- (1) Generate keywords for each year (2012–2020). We first collected environment projects (e.g., 2013–2014 projects on GitHub) and extracted their descriptions. Then, we preprocessed these descriptions by separating words and deleting stop words. Next, we applied the IDF method to these descriptions and collected the top 80 words for each year. Finally, we merged these keywords into a single keyword set reflecting the most popular keywords of these years (a total of 127 keywords).

- (2) Construct data matrices for sample projects. First, we preprocessed the project descriptions in the standard dataset by performing word segmentation and removing stop words using the Lucene library. Next, we employed MySQL’s REGEXP operator to check whether a project description contained a specific keyword (e.g., “tool”). In addition to keyword-based features, we enriched the dataset by incorporating basic project metadata and URL information, following the same structure as in Section 4.2.

- (3) Create a balanced training dataset. First, we randomly selected 100 projects per keyword (repetitions are possible because some projects contain two or more keywords). Then, we randomly added 3000 projects without any keywords to balance the number of projects with keywords against the number of projects without keywords. This yielded a balanced training dataset with 9745 samples.

- (4) Fit a model. We fitted a J48 model on the balanced training dataset using Weka, with all parameters left at their default values.
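
Steps (2) and (3) can be sketched as follows. This is only an illustration under stated assumptions: the study uses MySQL's REGEXP operator and Lucene, whereas the sketch uses Python's `re` module with whole-word matching (the actual pattern is not given in the text), omits the metadata and URL features, and uses scaled-down sample sizes. The names `keyword_features` and `balanced_sample` are hypothetical. Fitting J48 in Weka is not reproduced.

```python
import random
import re


def keyword_features(description, keywords):
    """Binary vector: 1 if the keyword occurs as a whole word, else 0."""
    text = description.lower()
    return [
        1 if re.search(r"\b" + re.escape(k) + r"\b", text) else 0
        for k in keywords
    ]


def balanced_sample(projects, keywords, per_keyword, n_without, seed=0):
    """Pick up to `per_keyword` projects per keyword plus `n_without`
    projects that contain no keyword. `projects` maps id -> description."""
    rng = random.Random(seed)
    rows = {pid: keyword_features(d, keywords) for pid, d in projects.items()}
    selected = set()
    for j in range(len(keywords)):
        matching = sorted(pid for pid, row in rows.items() if row[j])
        # A project with several keywords may be drawn more than once;
        # the set keeps a single copy.
        selected.update(rng.sample(matching, min(per_keyword, len(matching))))
    without = sorted(pid for pid, row in rows.items() if not any(row))
    selected.update(rng.sample(without, min(n_without, len(without))))
    return {pid: rows[pid] for pid in sorted(selected)}


# Toy data (illustrative only).
keywords = ["tool", "plugin", "app"]
projects = {
    1: "A build tool for Java",
    2: "tool and plugin manager",
    3: "my homework",
    4: "notes",
    5: "Android app",
    6: "toolkit experiments",
}
sample = balanced_sample(projects, keywords, per_keyword=1, n_without=2)
```

With the sizes reported above, the call would use `per_keyword=100` and `n_without=3000`; overlapping keyword matches explain why the final dataset is smaller than 127 × 100 + 3000.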

6. Study Design and Results

6.1. Research Question

6.2. Study Design

6.3. Study Results

7. Discussion

7.1. Overview of This Work

7.2. Analysis of Misclassified Projects

7.3. How to Use Our Method

- (1) Apply our model directly. Researchers studying CCPs who adopt our labeling standards can directly use our model to identify CCPs from candidate samples.

- (2) Adjust the model based on researchers’ needs. Researchers studying CCPs who disagree with our labeling standards should identify the disputed labels, relabel the corresponding data in our training dataset, and retrain the model on the revised dataset. For instance, we classified most projects containing the keyword “small” as negative, but some researchers may contest this criterion. In such cases, they should locate these projects in the training dataset and reclassify them as positive. Given that our training dataset includes 100 projects with the keyword “small”, this adjustment process is not overly labor-intensive.

- (3) Combine our model with existing methods. For researchers studying projects beyond CCPs, our model remains useful. For example, some researchers investigate stakeholder behavior in top projects (e.g., [27]); our model enables them to filter out private and non-development projects. We evaluated this on the 2015–2016 dataset: when selecting the top 15% of projects by star count, the initial sample contained 26.3% negative projects. After applying our model, this proportion fell to 8.9% in the final result set.

- (4) Enhance our model with additional information. As discussed in Section 7.2, a significant number of classification errors can be mitigated by incorporating new data sources. Researchers may manually review CCPs lacking keywords or integrate additional information such as README files to substantially improve the model performance. Since a large proportion of misclassifications in our method stem from data limitations rather than model flaws, these issues can be addressed independently.
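
The combination described in item (3) can be sketched as a two-stage filter. The sketch assumes each project is a dictionary with a `stars` count and a ground-truth `is_ccp` label, and that `predict_ccp` is a stand-in for the trained classifier; these names and the toy data are hypothetical and do not reproduce the reported 26.3% and 8.9% figures.

```python
def top_fraction_by_stars(projects, fraction):
    """Return the top `fraction` of projects ranked by star count."""
    k = max(1, int(len(projects) * fraction))
    return sorted(projects, key=lambda p: p["stars"], reverse=True)[:k]


def filter_with_model(projects, predict_ccp):
    """Keep only the projects that the classifier predicts to be CCPs."""
    return [p for p in projects if predict_ccp(p)]


def negative_share(projects):
    """Share of projects whose ground-truth label is negative."""
    if not projects:
        return 0.0
    return sum(not p["is_ccp"] for p in projects) / len(projects)


# Toy data (illustrative only).
projects = [
    {"name": "a", "stars": 50, "is_ccp": True},
    {"name": "b", "stars": 40, "is_ccp": False},
    {"name": "c", "stars": 5, "is_ccp": True},
    {"name": "d", "stars": 1, "is_ccp": False},
]


def predict_ccp(project):
    # Stand-in for the trained model: rejects project "b" only.
    return project["name"] != "b"


top = top_fraction_by_stars(projects, 0.5)
before = negative_share(top)
after = negative_share(filter_with_model(top, predict_ccp))
```

Comparing `before` and `after` on a labeled sample is one way to measure how much noise the second stage removes from a popularity-based selection.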

8. Implications

9. Threats to Validity

9.1. Construct Validity

9.2. External Validity

9.3. Reliability

10. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gousios, G.; Spinellis, D. GHTorrent: Github’s data from a firehose. In Proceedings of the 9th Working Conference on Mining Software Repositories (MSR), Zurich, Switzerland, 2–3 June 2012; pp. 12–21.

- Kalliamvakou, E.; Gousios, G.; Blincoe, K.; Singer, L.; German, D.M.; Damian, D. An in-depth study of the promises and perils of mining GitHub. Empir. Softw. Eng. 2016, 21, 2035–2071.

- Zhou, M.; Mockus, A. What make long term contributors: Willingness and opportunity in OSS community. In Proceedings of the 34th International Conference on Software Engineering (ICSE), Zurich, Switzerland, 2–9 June 2012; pp. 518–528.

- Kalliamvakou, E.; Gousios, G.; Blincoe, K.; Singer, L.; German, D.M.; Damian, D. The promises and perils of mining github. In Proceedings of the 11th Working Conference on Mining Software Repositories (MSR), Hyderabad, India, 31 May–1 June 2014; pp. 92–101.

- Jing, J.; Li, Z.; Lei, L. Understanding project dissemination on a social coding site. In Proceedings of the 20th Working Conference on Reverse Engineering (WCRE), Koblenz, Germany, 14–17 October 2013; pp. 132–141.

- Cosentino, V.; Izquierdo, J.L.C.; Cabot, J. A Systematic Mapping Study of Software Development with GitHub. IEEE Access 2017, 5, 7173–7192.

- Manikas, K.; Hansen, K.M. Software ecosystems—A systematic literature review. J. Syst. Softw. 2013, 86, 1294–1306. Available online: http://www.sciencedirect.com/science/article/pii/S016412121200338X (accessed on 10 July 2025).

- Yu, Y.; Wang, H.; Yin, G.; Wang, T. Reviewer recommendation for pull-requests in GitHub: What can we learn from code review and bug assignment? Inf. Softw. Technol. 2016, 74, 204–218.

- Yu, Y.; Wang, H.; Filkov, V.; Devanbu, P.; Vasilescu, B. Wait for It: Determinants of Pull Request Evaluation Latency on GitHub. In Proceedings of the 12th Working Conference on Mining Software Repositories (MSR), Florence, Italy, 16–17 May 2015; pp. 367–371.

- Gousios, G.; Pinzger, M.; Deursen, A.V. An exploratory study of the pull-based software development model. In Proceedings of the 36th International Conference on Software Engineering (ICSE), Hyderabad, India, 31 May–7 June 2014; pp. 345–355.

- Vasilescu, B.; Yu, Y.; Wang, H.; Devanbu, P.; Filkov, V. Quality and productivity outcomes relating to continuous integration in GitHub. In Proceedings of the 10th Joint Meeting on Foundations of Software Engineering (FSE), Bergamo, Italy, 30 August–4 September 2015; pp. 805–816.

- Murphy, G.C.; Terra, R.; Figueiredo, J.; Serey, D. Do developers discuss design? In Proceedings of the 11th Working Conference on Mining Software Repositories (MSR), Hyderabad, India, 31 May–1 June 2014; pp. 340–343.

- Kikas, R.; Dumas, M.; Pfahl, D. Using dynamic and contextual features to predict issue lifetime in GitHub projects. In Proceedings of the 13th Working Conference on Mining Software Repositories (MSR), Austin, TX, USA, 14–15 May 2016; pp. 291–302.

- Constantinou, E.; Mens, T. Socio-technical evolution of the Ruby ecosystem in GitHub. In Proceedings of the 24th IEEE International Conference on Software Analysis, Evolution and Reengineering (SANER), Klagenfurt, Austria, 20–24 February 2017; pp. 34–44.

- Bertoncello, M.V.; Pinto, G.; Wiese, I.S.; Steinmacher, I. Pull Requests or Commits? Which Method Should We Use to Study Contributors’ Behavior? In Proceedings of the 27th International Conference on Software Analysis, Evolution and Reengineering (SANER), London, ON, Canada, 18–21 February 2020; pp. 592–601.

- Tantithamthavorn, C.; Hassan, A.E.; Matsumoto, K. The impact of class rebalancing techniques on the performance and interpretation of defect prediction models. IEEE Trans. Softw. Eng. 2018, 46, 1200–1219.

- Pascarella, L.; Palomba, F.; Penta, M.D.; Bacchelli, A. How Is Video Game Development Different from Software Development in Open Source. In Proceedings of the IEEE/ACM International Conference on Mining Software Repositories (MSR), Gothenburg, Sweden, 28–29 May 2018.

- Goyal, R.; Ferreira, G.; Kästner, C.; Herbsleb, J. Identifying unusual commits on GitHub. J. Softw. Evol. Process 2018, 30, e1893.

- Fronchetti, F.; Wiese, I.; Pinto, G.; Steinmacher, I. What attracts newcomers to onboard on oss projects? In Proceedings of the 15th Open Source Systems—IFIP WG 2.13 International Conference (OSS), Montreal, QC, Canada, 26–27 May 2019; pp. 91–103.

- Zhao, G.; Da Costa, D.A.; Zou, Y. Improving the pull requests review process using learning-to-rank algorithms. Empir. Softw. Eng. 2019, 24, 2140–2170.

- Zhou, P.; Liu, J.; Liu, X.; Yang, Z.; Grundy, J. Is Deep Learning Better than Traditional Approaches in Tag Recommendation for Software Information Sites? Inf. Softw. Technol. 2019, 109, 1–13.

- Jiarpakdee, J.; Tantithamthavorn, C.K.; Dam, H.K.; Grundy, J. An empirical study of model-agnostic techniques for defect prediction models. IEEE Trans. Softw. Eng. 2020, 48, 166–185.

- Vale, G.; Schmid, A.; Santos, A.R.; De Almeida, E.S.; Apel, S. On the relation between Github communication activity and merge conflicts. Empir. Softw. Eng. 2020, 25, 402–433.

- Malviya-Thakur, A.; Mockus, A. The Role of Data Filtering in Open Source Software Ranking and Selection. In Proceedings of the 1st IEEE/ACM International Workshop on Methodological Issues with Empirical Studies in Software Engineering, Lisbon, Portugal, 15 April 2024; pp. 7–12.

- Padhye, R.; Mani, S.; Sinha, V.S. A study of external community contribution to open-source projects on GitHub. In Proceedings of the 11th Working Conference on Mining Software Repositories (MSR), Hyderabad, India, 31 May–1 June 2014; pp. 332–335.

- Hilton, M.; Tunnell, T.; Huang, K.; Marinov, D.; Dig, D. Usage, costs, and benefits of continuous integration in open-source projects. In Proceedings of the 31st International Conference on Automated Software Engineering (ASE), Singapore, 3–7 September 2016; pp. 426–437.

- Xavier, J.; Macedo, A.; Maia, M.D.A. Understanding the popularity of reporters and assignees in the Github. In Proceedings of the 26th International Conference on Software Engineering & Knowledge Engineering (SEKE), Vancouver, BC, Canada, 1–3 July 2014; pp. 484–489.

- Elazhary, M.S.N.E.O.; Zaidman, A. Do as I Do, Not as I Say: Do Contribution Guidelines Match the GitHub Contribution Process. In Proceedings of the 35th IEEE International Conference on Software Maintenance and Evolution (ICSME), Cleveland, OH, USA, 29 September–4 October 2019; pp. 286–290.

- Cheng, C.; Li, B.; Li, Z.-Y.; Liang, P.; Yang, X. An in-depth study of the effects of methods on the dataset selection of public development projects. IET Softw. 2021, 16, 146–166.

- Montandon, J.E.; Valente, M.T.; Silva, L.L. Mining the Technical Roles of GitHub Users. Inf. Softw. Technol. 2021, 131, 106485.

- Baltes, S.; Ralph, P. Sampling in software engineering research: A critical review and guidelines. Empir. Softw. Eng. 2022, 27, 94.

- Wang, Y.; Wang, J.; Zhang, H.; Ming, X.; Shi, L.; Wang, Q. Where is your app frustrating users? In Proceedings of the 44th International Conference on Software Engineering (ICSE), Pittsburgh, PA, USA, 21–29 May 2022; pp. 2427–2439.

- Wang, Y.; Zhang, P.; Sun, M.; Lu, Z.; Yang, Y.; Tang, Y.; Qian, J.; Li, Z.; Zhou, Y. Uncovering bugs in code coverage profilers via control flow constraint solving. IEEE Trans. Softw. Eng. 2023, 49, 4964–4987.

- Cheng, C.; Li, B.; Li, Z.-Y.; Liang, P. Automatic Detection of Public Development Projects in Large Open Source Ecosystems: An Exploratory Study on GitHub. In Proceedings of the 30th International Conference on Software Engineering and Knowledge Engineering (SEKE), San Francisco, CA, USA, 1–3 July 2018; pp. 193–198.

- Munaiah, N.; Kroh, S.; Cabrey, C.; Nagappan, M. Curating GitHub for engineered software projects. Empir. Softw. Eng. 2016, 22, 3219–3253.

- Pickerill, P.; Jungen, H.J.; Ochodek, M.; Maćkowiak, M.; Staron, M. PHANTOM: Curating GitHub for Engineered Software Projects Using Time-Series Clustering. Empir. Softw. Eng. 2020, 25, 2897–2929.

- Tim Menzies, M.S.; Smith, A. “Bad Smells” in Software Analytics Papers. Inf. Softw. Technol. 2019, 112, 35–47.

- Gousios, G. The GHTorrent dataset and tool suite. In Proceedings of the 10th Working Conference on Mining Software Repositories (MSR), San Francisco, CA, USA, 18–19 May 2013; pp. 233–236.

- Fu, W.; Menzies, T. Easy over hard: A case study on deep learning. In Proceedings of the 12th Joint Meeting on Foundations of Software Engineering (FSE), Paderborn, Germany, 4–8 September 2017; pp. 49–60.

- Saha, A.K.; Saha, R.K.; Schneider, K.A. A discriminative model approach for suggesting tags automatically for Stack Overflow questions. In Proceedings of the 10th Working Conference on Mining Software Repositories (MSR), San Francisco, CA, USA, 18–19 May 2013; pp. 73–76.

- Wang, T.; Wang, H.; Yin, G.; Ling, C.X.; Li, X.; Zou, P. Tag recommendation for open source software. Front. Comput. Sci. 2014, 8, 69–82.

- Beel, J.; Gipp, B.; Langer, S.; Breitinger, C. Research-paper recommender systems: A literature survey. Int. J. Digit. Libr. 2015, 17, 1–34.

- Barua, A.; Thomas, S.W.; Hassan, A.E. What are developers talking about? An analysis of topics and trends in Stack Overflow. Empir. Softw. Eng. 2014, 19, 619–654.

- Falessi, D.; Smith, W.; Serebrenik, A. STRESS: A Semi-Automated, Fully Replicable Approach for Project Selection. In Proceedings of the 11th International Symposium on Empirical Software Engineering & Measurement (ESEM), Toronto, ON, Canada, 9–10 November 2017; pp. 151–156.

| Problem | Strategy | Automated Method |
|---|---|---|
| A repository is not necessarily a project | Consider the activity in both the base repository and all associated forked repositories. | Yes |
| Most projects have low activity | Consider the number of recent commits on a project to select projects with an appropriate activity level. | Yes |
| Most projects are inactive | Consider the number of recent commits and pull requests. | Yes |
| Many projects are not software development | Review descriptions and README files to ensure that the projects fit the research needs. | No, this strategy needs researchers to review descriptions and README files of project samples. |
| Most projects are personal | Consider the number of committers. | No, this strategy cannot effectively remove personal projects because many public projects have only one committer. |
| Many active projects do not use GitHub exclusively | Avoid projects that have a high number of committers who are not registered GitHub users and projects with descriptions that explicitly state that they are mirrors. | Yes |
| Few projects use pull requests | Consider the number of pull requests before selecting a project. | Yes |

| Method | Literature |
|---|---|
| Remove projects demonstrating poor performance in key metrics (e.g., pull request activity) | [8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24] |
| Select projects ranking highly in significant dimensions (e.g., star count) | [15,20,25,26,27,28,29,30,31,32,33] |
| Employ decision tree classification to determine CCP status (using project statistics and description keywords as features) | [29,34] |
| Apply score-based and random forest classifiers through the Reaper tool to identify engineered software projects | [35] |
| Propose the PHANTOM approach, which extracts multidimensional time-series features from Git logs and applies k-means clustering to automatically and effectively identify “engineered” projects from a large number of GitHub repositories | [36] |

| Period | No. of Samples | Cohen’s Kappa Coefficient |
|---|---|---|
| 1 January 2012–15 January 2012 | 6715 | / |
| 1 January 2013–1 January 2014 | 1385 | 0.857 |
| 1 January 2015–1 January 2016 | 3991 | 0.835 |
| 1 January 2017–1 January 2018 | 6930 | 0.812 |
| 1 January 2019–1 January 2020 | 7721 | 0.885 |

| Method | Best Parameter for Precision, Recall, or F-Measure | Precision | Recall | F-Measure |
|---|---|---|---|---|
| 2012.1.1–2012.1.16 | | | | |
| Baseline method | Select top 2% projects regarding watcher number | 0.880 | 0.027 | 0.052 |
| Baseline method | Remove bottom 1% projects regarding community member number | 0.644 | 0.992 | 0.781 |
| SADTM | J48, Confidencefactor = 0.05 | 0.847 | 0.927 | 0.885 |
| 2013–2014 | | | | |
| Baseline method | Select top 2% projects regarding star number | 0.963 | 0.033 | 0.064 |
| Baseline method | Remove bottom 1% projects regarding watcher number | 0.564 | 0.992 | 0.720 |
| SADTM | J48, Confidencefactor = 0.05 | 0.703 | 0.789 | 0.743 |
| 2015–2016 | | | | |
| Baseline method | Select top 2% projects regarding watcher number | 0.862 | 0.038 | 0.074 |
| Baseline method | Remove bottom 1% projects regarding watcher number | 0.443 | 0.990 | 0.612 |
| SADTM | J48, Confidencefactor = 0.05 | 0.463 | 0.790 | 0.583 |
| 2017–2018 | | | | |
| Baseline method | Select top 1% projects regarding star number | 0.855 | 0.019 | 0.037 |
| Baseline method | Remove bottom 1% projects regarding star number | 0.437 | 0.991 | 0.606 |
| SADTM | J48, Confidencefactor = 0.05 | 0.575 | 0.801 | 0.669 |
| 2019–2020 | | | | |
| Baseline method | Select top 1% projects regarding star number | 0.857 | 0.022 | 0.044 |
| Baseline method | Remove bottom 1% projects regarding star number | 0.374 | 0.992 | 0.544 |
| SADTM | J48, Confidencefactor = 0.05 | 0.469 | 0.844 | 0.602 |

| Method | 2012 | 2013–2014 | 2015–2016 | 2017–2018 | 2019–2020 |
|---|---|---|---|---|---|
| LDA | 60/80 | 61/80 | 60/80 | 60/80 | 59/80 |
| IDF | 61/80 | 61/80 | 62/80 | 59/80 | 60/80 |

| Year | Precision (Ours) | Recall (Ours) | F-Measure (Ours) | F-Measure (Baseline) | F-Measure (SADTM) |
|---|---|---|---|---|---|
| 2012 | 0.845 | 0.946 | 0.893 | 0.781 | 0.885 |
| 2013–2014 | 0.849 | 0.885 | 0.866 | 0.720 | 0.743 |
| 2015–2016 | 0.752 | 0.848 | 0.797 | 0.612 | 0.583 |
| 2017–2018 | 0.813 | 0.800 | 0.806 | 0.606 | 0.669 |
| 2019–2020 | 0.740 | 0.825 | 0.780 | 0.544 | 0.602 |
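
As a consistency check on the table above, the F-measure can be recomputed from the reported precision and recall. The sketch assumes that the F-measure is the balanced F1 score, F = 2PR / (P + R), which the text does not state explicitly; `f_measure` and `reported` are hypothetical names, and the numbers are copied from the rows for our method.

```python
def f_measure(precision, recall):
    """Balanced F-measure (F1): harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F-measure) for our method, per period.
reported = {
    "2012": (0.845, 0.946, 0.893),
    "2013-2014": (0.849, 0.885, 0.866),
    "2015-2016": (0.752, 0.848, 0.797),
    "2017-2018": (0.813, 0.800, 0.806),
    "2019-2020": (0.740, 0.825, 0.780),
}

# Absolute gap between the recomputed and the reported F-measure.
gaps = {
    period: abs(f_measure(p, r) - f)
    for period, (p, r, f) in reported.items()
}
largest_gap = max(gaps.values())
```

Under this assumption the recomputed values agree with the reported ones to within about 0.001, a gap that is compatible with precision and recall having been rounded to three decimals before publication.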

| Year | Model Bias | Inadequate Information | Limited Keyword Set |
|---|---|---|---|
| 2012 | 25.1% | 67.7% | 7.2% |
| 2013–2014 | 23.4% | 61.9% | 14.7% |
| 2015–2016 | 23.3% | 63.9% | 12.7% |
| 2017–2018 | 20.1% | 69.0% | 10.8% |
| 2019–2020 | 27.1% | 66.2% | 6.7% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ding, Y.; Fang, Q.; Liu, X. A Generalized Method for Filtering Noise in Open-Source Project Selection. Information 2025, 16, 774. https://doi.org/10.3390/info16090774
