Technologies for Reflective Assessment in Knowledge Building Communities: A Systematic Review

Abstract

1. Introduction

1.1. Knowledge Building

1.2. Reflective Assessment

1.3. Knowledge Building Analytics

- Collaborative efforts. This category underscores knowledge construction as an inherently social and collaborative process. Learning analytics plays a crucial role by making group interactions visible, which fosters equitable participation, coordinated efforts, and collective responsibility for advancing shared understanding (Gutiérrez-Braojos et al., 2023) [4].

- Idea improvement. This dimension highlights the continuous improvement of ideas and epistemic agency. It involves the ability to identify promising contributions, explore deeper explanations, and synthesize diverse perspectives—processes that enhance the epistemic quality of the community’s knowledge (Hong et al., 2015) [39].
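
To make the first dimension concrete, the following minimal sketch computes three indicators of collaborative effort from a hypothetical list of notes: notes per participant, a Gini coefficient as one possible index of participation equity (0 means perfectly even contributions), and author-to-author build-on ties, from which members with no ties are flagged as isolated. The note format, the field names, and the use of the Gini coefficient are illustrative assumptions. They are not the indicators of any specific tool covered in this review.

```python
from collections import Counter

# Hypothetical note records (not a Knowledge Forum export format): each note has
# an id, an author, and the id of the note it builds on (None starts a thread).
NOTES = [
    {"id": 1, "author": "ana", "builds_on": None},
    {"id": 2, "author": "ben", "builds_on": 1},
    {"id": 3, "author": "ana", "builds_on": 2},
    {"id": 4, "author": "cleo", "builds_on": 1},
    {"id": 5, "author": "ana", "builds_on": 4},
    {"id": 6, "author": "dev", "builds_on": None},
]


def notes_per_author(notes):
    """Count how many notes each participant contributed."""
    return Counter(n["author"] for n in notes)


def gini(values):
    """Gini coefficient of non-negative counts: 0 = perfectly even, higher = more concentrated."""
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Closed form on ascending values: G = 2 * sum(i * x_i) / (n * total) - (n + 1) / n
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


def build_on_ties(notes):
    """Directed author -> author ties (who built on whose note), ignoring self-builds."""
    author_of = {n["id"]: n["author"] for n in notes}
    ties = Counter()
    for n in notes:
        target_author = author_of.get(n["builds_on"])
        if target_author is not None and target_author != n["author"]:
            ties[(n["author"], target_author)] += 1
    return ties


def isolated_authors(notes):
    """Participants who neither build on others nor are built on."""
    connected = {a for pair in build_on_ties(notes) for a in pair}
    return sorted({n["author"] for n in notes} - connected)


counts = notes_per_author(NOTES)
print(dict(counts))             # {'ana': 3, 'ben': 1, 'cleo': 1, 'dev': 1}
print(gini(counts.values()))    # 0.25
print(isolated_authors(NOTES))  # ['dev']
```

In this toy data, ana writes half of the notes (Gini 0.25) and dev is flagged as isolated because no build-on link connects dev to anyone else.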

1.4. The Current Review

2. Method

- Type of study. Empirical studies using quantitative, qualitative, or mixed methods approaches.

- Pedagogical context. Implementation within knowledge building environments, with or without the use of Knowledge Forum.

- Analytical technology. Use of analytics tools or technologies specifically linked to reflective assessment during knowledge building implementation.

- Study objective. Focus on reflective assessment as an integral part of the knowledge building process.

- Participants. Students at any educational level (primary, secondary, or higher education).

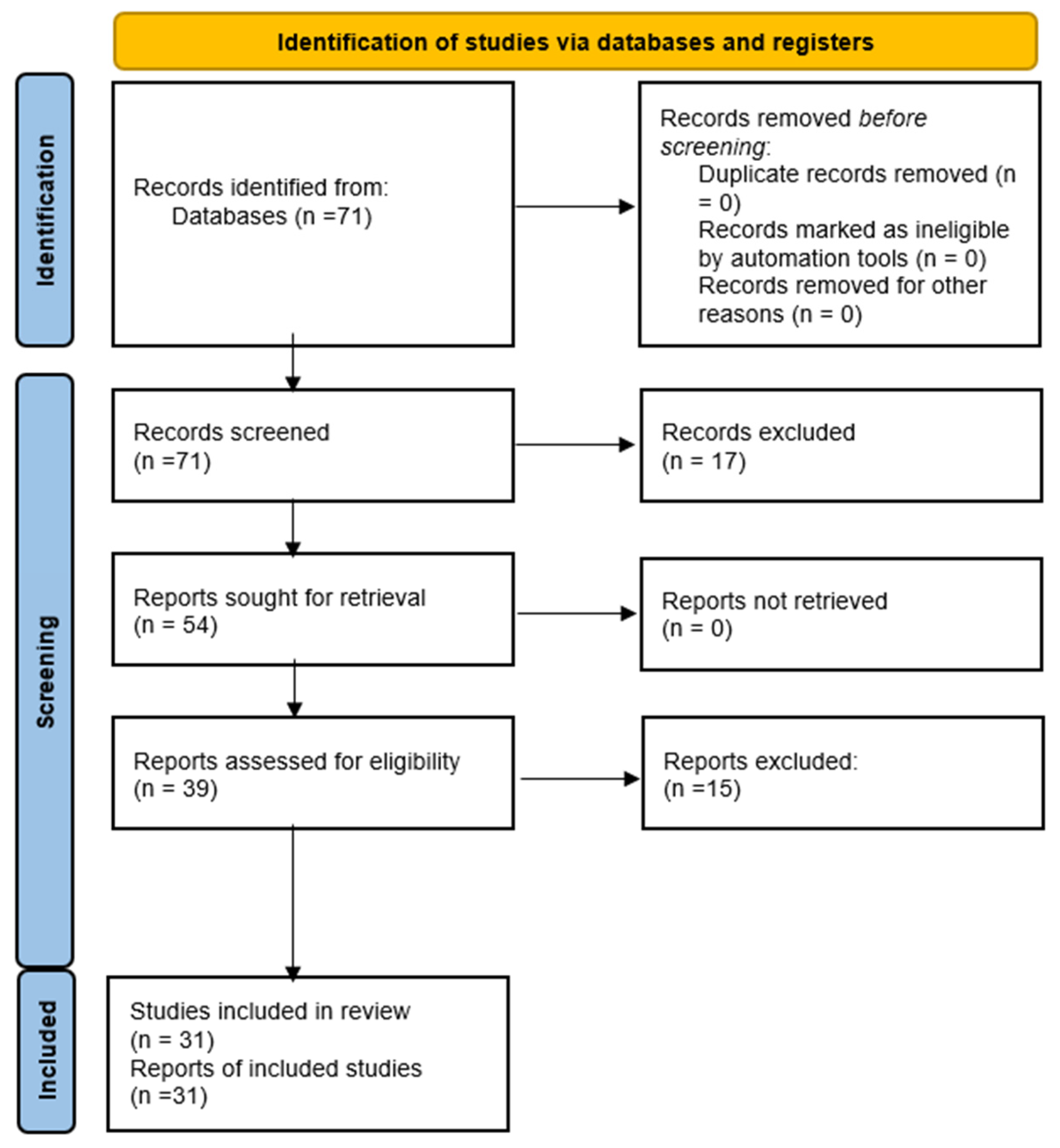

2.1. Search Strategy and Inclusion Criteria

2.2. Data Extraction

3. Results

- Academic course. The educational level or stage at which the analytics technology is implemented.

- Participants. The total number of participants involved in the study or educational experience.

- Participant setup. The organization of participants during the intervention, including large groups (whole class), small groups (subdivided), or individual work.

- Platform used. The platforms used to record community contributions, from which the data for analytics are extracted.

- Implementation time. The duration of the implementation during the educational intervention.

- Assessment moment. The phase of the educational process when assessment occurs (initial, formative, or summative).

- Learning analytic assessment agent. The agent responsible for conducting the assessment with analytics technologies: students, teachers, or both.
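
The extraction categories above can be captured as a typed record so that every study is coded against the same closed vocabularies. The sketch below assumes that a study may report more than one participant setup and more than one assessment moment (the categories are not stated to be mutually exclusive); all class names, field names, and the example values are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Setup(Enum):
    WHOLE_CLASS = "whole class"
    SMALL_GROUPS = "small groups"
    INDIVIDUAL = "individual"


class AssessmentMoment(Enum):
    INITIAL = "initial"
    FORMATIVE = "formative"
    SUMMATIVE = "summative"


class AssessmentAgent(Enum):
    STUDENTS = "students"
    TEACHERS = "teachers"
    BOTH = "both"


@dataclass
class ExtractionRecord:
    """One coded study. Field names are hypothetical labels for the review's categories."""
    academic_course: str                         # educational level or stage
    participants: int                            # total number of participants
    setups: list[Setup]                          # several setups allowed (assumption)
    platform: str                                # platform recording community contributions
    implementation_time: str                     # free text, e.g. "one semester"
    assessment_moments: list[AssessmentMoment]   # several moments allowed (assumption)
    assessment_agent: AssessmentAgent

    def __post_init__(self):
        # Reject impossible participant counts at coding time.
        if self.participants < 0:
            raise ValueError("participants must be non-negative")


# Hypothetical example record, not a study from this review.
example = ExtractionRecord(
    academic_course="higher education",
    participants=40,
    setups=[Setup.SMALL_GROUPS, Setup.WHOLE_CLASS],
    platform="Knowledge Forum",
    implementation_time="one semester",
    assessment_moments=[AssessmentMoment.FORMATIVE],
    assessment_agent=AssessmentAgent.STUDENTS,
)
```

Using enumerations for the closed categories means a coder cannot enter, for example, "formative" under one spelling and "Formative" under another.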

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Correction Statement

References

- Zheng, L.; Niu, J.; Zhong, L. Effects of a Learning Analytics-Based Real-Time Feedback Approach on Knowledge Elaboration, Knowledge Convergence, Interactive Relationships and Group Performance in CSCL. Br. J. Educ. Technol. 2022, 53, 130–149.

- Oshima, J.; Shaffer, D.W. Learning Analytics for a New Epistemological Perspective of Learning. Inf. Technol. Educ. Learn. 2021, 1, Inv-p003.

- Ong, E.T.; Lim, L.H.; Chan, C.K.K. Collective Reflective Assessment for Knowledge Building. Comput. Educ. 2023, 190, 104639.

- Gutiérrez-Braojos, C.; Rodríguez-Domínguez, C.; Daniela, L.; Carranza-García, F. An Analytical Dashboard of Collaborative Activities for the Knowledge Building. Technol. Knowl. Learn. 2023, 1, 1–27.

- Stoyanov, S.; Kirschner, P.A. Text Analytics for Uncovering Untapped Ideas at the Intersection of Learning Design and Learning Analytics: Critical Interpretative Synthesis. J. Comput. Assist. Learn. 2023, 39, 899–920.

- Mangaroska, K.; Sharma, K.; Gašević, D.; Giannakos, M. Multimodal Learning Analytics to Inform Learning Design: Lessons Learned from Computing Education. J. Learn. Anal. 2020, 7, 79–97.

- Stahl, G. A Model of Collaborative Knowledge-Building. In Proceedings of the Fourth International Conference of the Learning Sciences; Fishman, B., O’Connor-Divelbiss, S., Eds.; Erlbaum: Mahwah, NJ, USA, 2000; pp. 70–77.

- Gutiérrez-Braojos, C.; Rodríguez-Chirino, P.; Vico, B.P.; Fernández, S.R. Teacher Scaffolding for Knowledge Building in the Educational Research Classroom. RIED-Rev. Iberoam. Educ. Distancia 2024, 27, 1–25.

- Scardamalia, M. Collective Cognitive Responsibility for the Advancement of Knowledge. In Liberal Education in a Knowledge Society; Smith, B., Ed.; Open Court: Chicago, IL, USA, 2002; pp. 67–98.

- Cress, U.; Stahl, G.; Ludvigsen, S.; Law, N. The Core Features of CSCL: Social Situation, Collaborative Knowledge Processes and Their Design. Int. J. Comput. Support. Collab. Learn. 2015, 10, 109–116.

- Scardamalia, M.; Bereiter, C. Knowledge Building. In The Cambridge Handbook of the Learning Sciences; Sawyer, R.K., Ed.; Cambridge University Press: Cambridge, UK, 2006; pp. 97–118.

- Scardamalia, M. CSILE/Knowledge Forum®. In Encyclopedia of Education and Technology; Guthrie, J.W., Ed.; ABC-CLIO: Santa Barbara, CA, USA, 2004; pp. 183–192.

- Zhu, G.; Kim, M.S. A Review of Assessment Tools of Knowledge Building towards the Norm of Embedded and Transformative Assessment. In Proceedings of the Knowledge Building Summer Institute, Philadelphia, PA, USA, 18–22 June 2017.

- Apiola, M.V.; Lipponen, S.; Seitamaa, A.; Korhonen, T.; Hakkarainen, K. Learning Analytics for Knowledge Creation and Inventing in K-12: A Systematic Review. In Proceedings of the Science and Information Conference, Cham, Switzerland, 14–16 July 2022; Springer: Cham, Switzerland, 2022; pp. 238–257.

- Sánchez-Serrano, S.; Navarro, I.P.; González, M.D. ¿Cómo Hacer una Revisión Sistemática Siguiendo el Protocolo PRISMA?: Usos y Estrategias Fundamentales para su Aplicación en el Ámbito Educativo a Través de un Caso Práctico [How to Conduct a Systematic Review Following the PRISMA Protocol? Uses and Fundamental Strategies for Its Application in Education through a Practical Case]. Bordón Rev. Pedagog. 2022, 74, 51–66.

- Scardamalia, M.; Bereiter, C. Knowledge Building: Advancing the State of Community Knowledge. In International Handbook of Computer-Supported Collaborative Learning; Springer: Cham, Switzerland, 2021; pp. 261–279.

- Scardamalia, M.; Bereiter, C. Smart Technology for Self-Organizing Processes. Smart Learn. Environ. 2014, 1, 1.

- Scardamalia, M.; Bereiter, C. Computer Support for Knowledge-Building Communities. J. Learn. Sci. 1994, 3, 265–283.

- Gutiérrez-Braojos, C.; Montejo-Gámez, J.; Ma, L.; Chen, B.; Muñoz de Escalona-Fernández, M.; Scardamalia, M.; Bereiter, C. Exploring Collective Cognitive Responsibility through the Emergence and Flow of Forms of Engagement in a Knowledge Building Community. In Didactics of Smart Pedagogy; Springer: Cham, Switzerland, 2019; pp. 213–232.

- Zhang, J.; Scardamalia, M.; Reeve, R.; Messina, R. Designs for Collective Cognitive Responsibility in Knowledge-Building Communities. J. Learn. Sci. 2009, 18, 7–44.

- Ma, L.; Scardamalia, M. Teachers as Designers in Knowledge Building Innovation Networks. In The Learning Sciences in Conversation; Shanahan, M.C., Kim, B., Takeuchi, M.A., Koh, K., Preciado-Babb, A.P., Sengupta, P., Eds.; Routledge: New York, NY, USA, 2022; pp. 107–120.

- Chen, B. Fostering Scientific Understanding and Epistemic Beliefs through Judgments of Promisingness. Educ. Technol. Res. Dev. 2017, 65, 255–277.

- Cacciamani, S.; Perrucci, V.; Fujita, N. Promoting Students’ Collective Cognitive Responsibility through Concurrent, Embedded and Transformative Assessment in Blended Higher Education Courses. Technol. Knowl. Learn. 2021, 26, 1169–1194.

- Chen, B.; Hong, H.Y. Schools as Knowledge-Building Organizations: Thirty Years of Design Research. Educ. Psychol. 2016, 51, 266–288.

- Gutiérrez-Braojos, C.; Montejo-Gámez, J.; Poza Vilches, F.; Marín-Jiménez, A. Evaluation of Research on the Knowledge Building Pedagogy: A Mixed Methodological Approach. Relieve Rev. Electrón. Investig. Eval. Educ. 2020, 26, 1–22.

- Yang, Y.; Van Aalst, J.; Chan, C.K.K. Collective Reflective Assessment for Shared Epistemic Agency by Undergraduates in Knowledge Building. Br. J. Educ. Technol. 2020, 51, 423–437.

- Lei, C.; Chan, C.K. Developing Metadiscourse through Reflective Assessment in Knowledge Building Environments. Comput. Educ. 2018, 126, 153–169.

- Tan, S.C.; Chan, C.; Bielaczyc, K.; Ma, L.; Scardamalia, M.; Bereiter, C. Knowledge Building: Aligning Education with Needs for Knowledge Creation in the Digital Age. Educ. Technol. Res. Dev. 2021, 69, 2243–2266.

- Van Aalst, J.; Chan, C.K. Student-Directed Assessment of Knowledge Building Using Electronic Portfolios. J. Learn. Sci. 2007, 16, 175–220.

- Yang, Y.; Chan, C.K.; Zhu, G.; Tong, Y.; Sun, D. Reflective Assessment Using Analytics and Artifacts for Scaffolding Knowledge Building Competencies among Undergraduate Students. Int. J. Comput. Support. Collab. Learn. 2024, 19, 231–272.

- Yang, Y.; Yuan, K.C.; Zhang, J. Analytics-Supported Reflective Assessment for Fostering Collective Knowledge Advancement. Int. J. Comput. Support. Collab. Learn. 2022, 17, 375–400.

- Chan, C.K.; Van Aalst, J. Learning, Assessment and Collaboration in Computer-Supported Environments. In What We Know About CSCL: And Implementing It in Higher Education; Strijbos, J.W., Kirschner, P.A., Martens, R.L., Eds.; Springer: Dordrecht, The Netherlands, 2004; pp. 87–112.

- Yan, Z.; Zhang, K.C.; Brown, G.T.L.; Panadero, E. Students’ Perceptions of Self-Assessment: A Systematic Review of Empirical Studies. Educ. Psychol. Rev. 2023, 36, 35–81.

- Bereiter, C.; Scardamalia, M. Knowledge Building and Knowledge Creation: One Concept, Two Hills to Climb. In Knowledge Creation in Education; Tan, S.C., So, H.J., Yeo, J., Eds.; Springer: Singapore, 2014; pp. 35–52.

- Avella, J.T.; Kebritchi, M.; Nunn, S.G.; Kanai, T. Learning Analytics Methods, Benefits, and Challenges in Higher Education: A Systematic Literature Review. J. Asynch. Learn. Netw. 2016, 20, 13–35.

- Sergis, S.; Sampson, D.G. Teaching and Learning Analytics to Support Teacher Inquiry: A Systematic Literature Review. Learn. Res. Pract. 2016, 2, 42–64.

- Oliva-Córdova, V.; Martínez-Abad, F.; Rodríguez-Conde, M.J. Learning Analytics to Support Teaching Skills: A Systematic Literature Review. Int. J. Educ. Technol. High. Educ. 2021, 18, 58351–58363.

- Sonderlund, A.L.; Hughes, E.; Smith, J. The Efficacy of Learning Analytics Interventions in Higher Education: A Systematic Review. Br. J. Educ. Technol. 2018, 50, 2594–2618.

- Hong, H.Y.; Scardamalia, M.; Messina, R.; Teo, C.L. Fostering Sustained Idea Improvement with Principle-Based Knowledge Building Analytic Tools. Comput. Educ. 2015, 87, 227–240.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. Declaración PRISMA 2020: Una Guía Actualizada para la Publicación de Revisiones Sistemáticas [The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews]. Rev. Esp. Cardiol. 2021, 74, 790–799.

- Matsuzawa, Y.; Oshima, J.; Oshima, R.; Niihara, Y.; Sakai, S. KBDeX: A Platform for Exploring Discourse in Collaborative Learning. Procedia-Soc. Behav. Sci. 2011, 26, 198–207.

- Zhang, J.; Chen, M.H. Idea Thread Mapper: Designs for Sustaining Student-Driven Knowledge Building across Classrooms. In Proceedings of the 13th International Conference on Computer Supported Collaborative Learning (CSCL 2019), École Normale Supérieure de Lyon, France, 17–21 June 2019; Lund, K., Niccolai, G., Lavoué, E., Hmelo-Silver, C., Gweon, G., Baker, M., Eds.; International Society of the Learning Sciences: Lyon, France, 2019; pp. 144–151.

- Shaffer, D.W.; Collier, W.; Ruis, A.R. A Tutorial on Epistemic Network Analysis: Analyzing the Structure of Connections in Cognitive, Social, and Interaction Data. J. Learn. Anal. 2016, 3, 9–45.

- Van Aalst, J.; Mu, J.; Yang, Y. Formative Assessment of Computer-Supported Collaborative Learning and Knowledge Building. In Measuring and Visualizing Learning in the Information-Rich Classroom; Shum, S.B., Ferguson, R., Martínez-Maldonado, R., Eds.; Routledge: London, UK, 2015; pp. 154–166.

- Gutiérrez-Braojos, C.; Rodríguez-Domínguez, C.; Daniela, L.; Rodríguez-Chirino, P. Evaluating a New Knowledge Building Analytics Tool (KBAT). In Proceedings of the 17th International Conference on Computer-Supported Collaborative Learning (CSCL 2024), Buffalo, NY, USA, 10–14 June 2024; International Society of the Learning Sciences: Bodo, Norway, 2024; pp. 413–414.

- Tan, S.C.; Lee, A.V.Y.; Lee, M. Ideations for AI-Supported Knowledge Building. In Proceedings of the Knowledge Building Summer Institute, Wageningen, The Netherlands, 15–19 August 2022.

- Chen, B.; Scardamalia, M.; Bereiter, C. Advancing Knowledge-Building Discourse through Judgments of Promising Ideas. Int. J. Comput. Support. Collab. Learn. 2015, 10, 345–366.

- Gutiérrez-Braojos, C.; Daniela, L.; Montejo-Gámez, J.; Aliaga, F. Developing and Comparing Indices to Evaluate Community Knowledge Building in an Educational Research Course. Sustainability 2022, 14, 10603.

- Burtis, J. Analytic Toolkit for Knowledge Forum; Centre for Applied Cognitive Science, Ontario Institute for Studies in Education, University of Toronto: Toronto, ON, Canada, 1998.

- Oshima, J.; Oshima, R.; Matsuzawa, Y. Knowledge Building Discourse Explorer: A Social Network Analysis Application for Knowledge Building Discourse. Educ. Technol. Res. Dev. 2012, 60, 903–921.

- Park, H.; Zhang, J. Learning Analytics for Teacher Noticing and Scaffolding: Facilitating Knowledge Building Progress in Science. In Proceedings of the 15th International Conference on Computer-Supported Collaborative Learning (CSCL 2022), Hiroshima, Japan, 6–10 June 2022; International Society of the Learning Sciences: Hiroshima, Japan, 2022; pp. 147–154.

- Yang, Y.; Feng, X.; Zhu, G.; Xie, K. Effects and Mechanisms of Analytics-Assisted Reflective Assessment in Fostering Undergraduates’ Collective Epistemic Agency in Computer-Supported Collaborative Inquiry. J. Comput. Assist. Learn. 2024, 40, 1098–1122.

- Zhang, J.W.; Hong, H.Y.; Scardamalia, M.; Teo, C.; Morley, E. Sustaining Knowledge Building as a Principle-Based Innovation at an Elementary School. Int. J. Comput. Support. Collab. Learn. 2018, 13, 361–384.

- Gutiérrez-Braojos, C.; Daniela, L.; Rodríguez, C.; Berrocal, E. Resignifying Assessment through Knowledge Building: A Grounded Theory on Students’ Perceived Value of Reflective Evaluation with KBAT and AI. In press.

- Yuan, G.; Zhang, J.; Chen, M.H. Cross-Community Knowledge Building with Idea Thread Mapper. Int. J. Comput. Support. Collab. Learn. 2022, 17, 293–326.

- Bramer, W.M.; Rethlefsen, M.L.; Kleijnen, J.; Franco, O.H. Optimal Database Combinations for Literature Searches in Systematic Reviews: A Prospective Exploratory Study. Syst. Rev. 2017, 6, 245.

- Harari, M.B.; Parola, H.R.; Hartwell, C.J.; Riegelman, A. Literature Searches in Systematic Reviews and Meta-Analyses: A Review, Evaluation, and Recommendations. J. Vocat. Behav. 2020, 118, 103377.

| No. | Year | Authors | Title | Study Design | Type of Document | Times Cited (All Databases) |
|---|---|---|---|---|---|---|
| 1 | 2010 | Erkunt, H | Emergence of Epistemic Agency in College Level Educational Technology Course for Pre-Service Teachers Engaged in CSCL | Mixed | Article | 10 |
| 2 | 2011 | Chan, CKK; Chan, YY | Students’ views of collaboration and online participation in Knowledge Forum | Mixed | Article | 87 |
| 3 | 2012 | Oshima, Jun; Oshima, Ritsuko; Matsuzawa, Yoshiaki | Knowledge Building Discourse Explorer: a social network analysis application for knowledge building discourse | Mixed | Article | 111 |
| 4 | 2015 | Chen, B; Scardamalia, Marlene; Bereiter, C | Advancing knowledge building discourse through judgments of promising ideas | Mixed | Article | 77 |
| 5 | 2015 | Hong, HY; Scardamalia, M; Messina, R; Teo, CL | Fostering sustained idea improvement with principle-based knowledge building analytic tools | Mixed | Article | 66 |
| 6 | 2016 | Yang, Yuqin; van Aalst, Jan; Chan, Carol K. K.; Tian, Wen | Reflective assessment in knowledge building by students with low academic achievement | Mixed | Article | 45 |
| 7 | 2017 | Chen, Bodong | Fostering scientific understanding and epistemic beliefs through judgments of promisingness | Mixed | Article | 29 |
| 8 | 2018 | Zhang, JW; Tao, D; Chen, MH; Sun, YQ; Judson, D; Naqvi, S | Co-Organizing the Collective Journey of Inquiry with Idea Thread Mapper | Mixed | Article | 74 |
| 9 | 2019 | Yang, Yuqin | Reflective assessment for epistemic agency of academically low-achieving students | Mixed | Article | 6 |
| 10 | 2019 | Khanlari, Ahmad; Zhu, Gaoxia; Scardamalia, Marlene | Knowledge Building Analytics to Explore Crossing Disciplinary and Grade-Level Boundaries | Mixed | Article | 4 |
| 11 | 2020 | Yang, YQ; Chen, QQ; Yu, YW; Feng, XQ; van Aalst, J | Collective reflective assessment for shared epistemic agency by undergraduates in knowledge building | Mixed | Article | 33 |
| 12 | 2020 | Hod, Y; Katz, S; Eagan, B | Refining qualitative ethnographies using Epistemic Network Analysis: A study of socioemotional learning dimensions in a Humanistic Knowledge Building Community | Mixed | Article | 24 |
| 13 | 2021 | Yang, Yuqin; van Aalst, Jan; Chan, Carol | Examining Online Discourse Using the Knowledge Connection Analyzer Framework and Collaborative Tools in Knowledge Building | Mixed | Article | 7 |
| 14 | 2021 | Ong, Aloysius; Teo, Chew Lee; Tan, Samuel; Kim, Mi Song | A knowledge building approach to primary science collaborative inquiry supported by learning analytics | Qualitative | Article | 32 |
| 15 | 2021 | Tao, D; Zhang, JW | Agency to transform: how did a grade 5 community co-configure dynamic knowledge building practices in a yearlong science inquiry? | Qualitative | Article | 20 |
| 16 | 2021 | Gutiérrez-Braojos, Calixto; Rodriguez-Dominguez, Carlos; Carranza-Garcia, Francisco; Navarro-Garulo, Gabriel | Computer-supported knowledge building community A new learning analytics tool | Mixed | Book Chapter | 0 |
| 17 | 2022 | Yang, Yuqin; Zhu, Gaoxia; Sun, Daner; Chan, Carol K. K. | Collaborative analytics-supported reflective Assessment for Scaffolding Pre-service Teachers’ collaborative Inquiry and Knowledge Building | Mixed | Article | 0 |
| 18 | 2022 | Yuan, GJ; Zhang, JW; Chen, MH | Cross-community knowledge building with idea thread mapper | Mixed | Article | 12 |
| 19 | 2022 | Yang, YQ; Yuan, KC; Feng, XQ; Li, XH; van Aalst, J | Fostering low-achieving students’ productive disciplinary engagement through knowledge building inquiry and reflective assessment | Mixed | Article | 14 |
| 20 | 2023 | Ong, Aloysius; Teo, Chew Lee; Lee, Alwyn Vwen Yen; Yuan, Guangji | Epistemic Network Analysis to assess collaborative engagement in Knowledge Building discourse | Mixed | Proceedings Paper | 8 |
| 21 | 2023 | Gutiérrez-Braojos, C.; Rodriguez-Dominguez, C.; Daniela, L.; Carranza-Garcia, F. | An Analytical Dashboard of Collaborative Activities for the Knowledge Building | Mixed | Article | 5 |
| 22 | 2023 | Yang, Yuqin; Zheng, Zhizi; Zhu, Gaoxia; Salas-Pilco, Sdenka Zobeida | Analytics-supported reflective assessment for 6th graders’ knowledge building and data science practices: An exploratory study | Mixed | Article | 3 |
| 23 | 2023 | Jiang, JP; Xie, WL; Wang, SY; Zhang, YB; Gao, J | Assessing team creativity with multi-attribute group decision-making in a knowledge building community: A design-based research | Mixed | Article | 1 |
| 24 | 2023 | Chai, SM; Oon, EPT; Chai, Y; Li, ZK | Examining the role of metadiscourse in collaborative knowledge building community | Mixed | Article; Early Access | 2 |
| 25 | 2024 | Yang, Yuqin; Chen, Yewen; Feng, Xueqi; Sun, Daner; Pang, Shiyan | Investigating the mechanisms of analytics-supported reflective assessment for fostering collective knowledge | Mixed | Article | 2 |
| 26 | 2024 | Yang, Yuqin; Chan, Carol K. K.; Zhu, Gaoxia; Tong, Yuyao; Sun, Daner | Reflective assessment using analytics and artifacts for scaffolding knowledge building competencies among undergraduate students | Mixed | Article | 3 |
| 27 | 2024 | Yang, Yuqin; Feng, Xueqi; Zhu, Gaoxia; Xie, Kui | Effects and mechanisms of analytics-assisted reflective assessment in fostering undergraduates’ collective epistemic agency in computer-supported collaborative inquiry | Mixed | Article | 1 |
| 28 | 2024 | Yu, Yawen; Tao, Yang; Chen, Gaowei; Sun, Can | Using learning analytics to enhance college students shared epistemic agency in mobile instant messaging: A new way to support deep discussion | Mixed | Article | 2 |
| 29 | 2024 | Gutiérrez-Braojos, Calixto; Rodríguez-Chirino, Paula; Vico, Beatriz Pedrosa; Fernández, Sonia Rodriguez | Teacher scaffolding for knowledge building in the educational research classroom | Mixed | Article | 2 |
| 30 | 2024 | Gutiérrez-Braojos, C; Rodríguez-Domínguez, C; Daniela, L; Rodríguez-Chirino, P | Evaluating a New Knowledge Building Analytics Tool (KBAT) | Mixed | Proceedings Paper | 0 |
| 31 | 2025 | Tong, YY; Chen, GW; Jong, MSY | Video-based analytics-supported formative feedback for enhancing low-achieving students’ conception of collaboration and classroom discourse engagement | Mixed | Article | 0 |

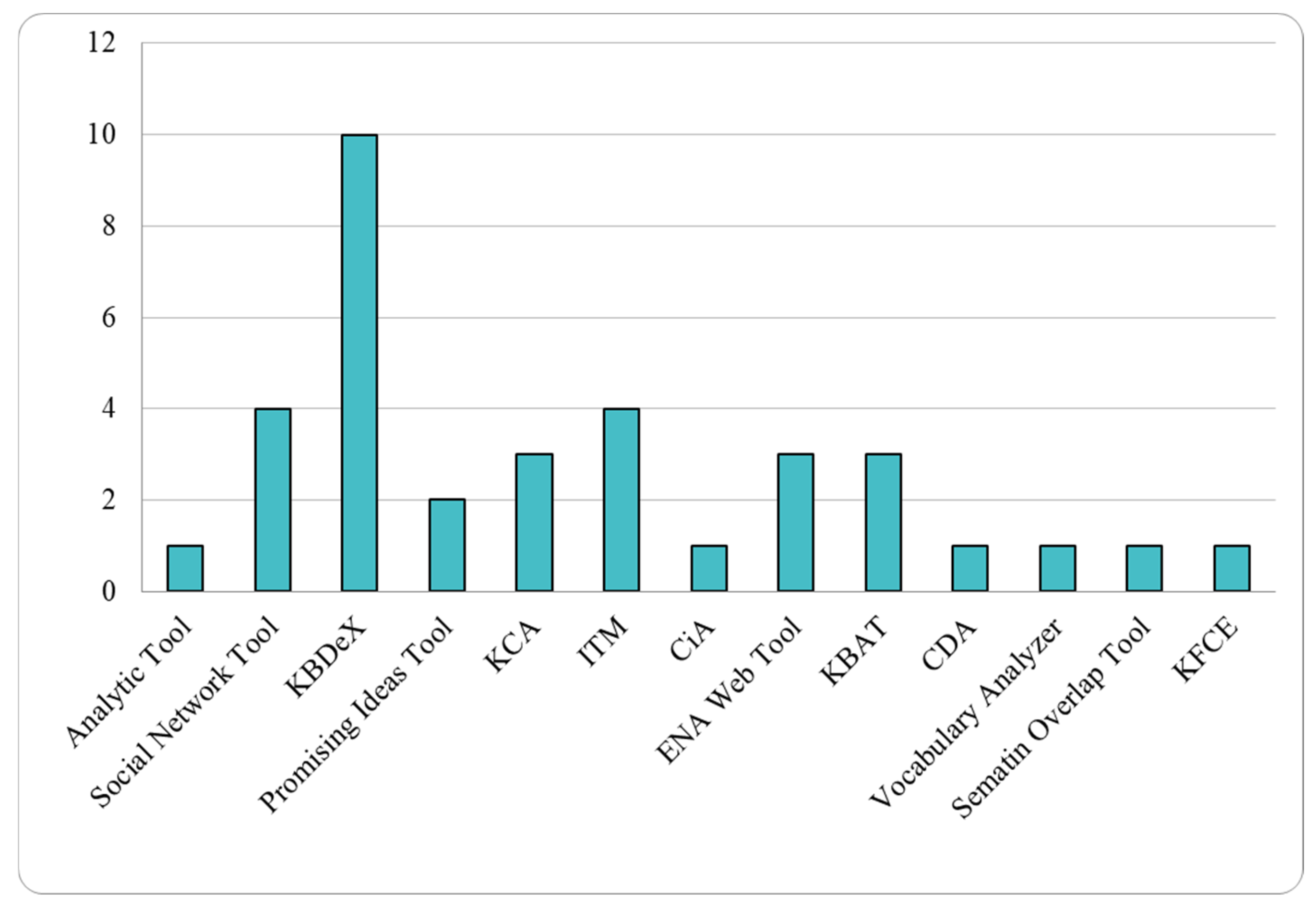

| Analytical Technology | Description | Types of Analysis Enabled |
|---|---|---|
| KBDeX | KBDeX is a discourse analysis tool designed to explore collaborative learning processes by visualizing network structures that link participants, discourse units, and key terms. Based on a bipartite graph model, it allows researchers to investigate how ideas and interactions develop within a community of learners (Matsuzawa et al., 2011) [41]. | Discourse visualization, network structure analysis, idea flow tracking. |
| Social Network Tool | A tool used to analyze group dynamics and the interactivity of community members. It identifies who collaborates with whom based on responses, links, references, or annotations, and highlights members who work in isolation because they do not interact with others. It also shows how many notes each participant builds on, connects to, references, or otherwise interacts with, providing an overview of collaboration patterns within the community (Hong et al., 2015) [39]. | Social network analysis, participation mapping, community structure. |
| ITM | Idea Thread Mapper (ITM) is a research-based digital platform that enables students to engage collaboratively in sustained, self-directed knowledge building. It supports learners in visualizing and organizing idea threads over time, fostering metacognitive reflection and collective inquiry within and across classrooms. The platform also includes shared community spaces, such as “Super Talk,” designed to promote cross-group interaction and comparison of idea development trajectories (Zhang & Chen, 2019) [42]. | Content sequence analysis, idea development, cross-thread connections. |
| ENA Web Tool | The ENA Web Tool is an online platform designed to support researchers in modeling and analyzing discourse data using the principles of Epistemic Network Analysis. While not extensively defined, the tool allows users to upload coded datasets, construct networks of co-occurring elements (such as skills or concepts), and visualize patterns of connections across individuals or groups. It is positioned as a key resource for exploring how knowledge is built through interactions (Shaffer et al., 2016) [43]. | Epistemic Network Analysis, co-occurrence patterns, connections between discourse elements, epistemic frame mapping. |
| KCA | The Knowledge Connections Analyzer (KCA) is a web-based analytical tool designed to support student reflection and metacognitive awareness within knowledge building environments. It is integrated into a broader framework that includes four guiding inquiry questions, and it extracts discourse data from the Knowledge Forum platform to generate visualizations and indicators. These outputs are intended to facilitate collective evaluation and refinement of online collaborative work (van Aalst et al., 2015) [44]. | Concept frequency, semantic overlap, principle-specific mapping. |
| KBAT | The Knowledge Building Analytics Tool (KBAT) enables users to build customized analytical dashboards within the Knowledge Forum environment. It includes visual and interactive components for analyzing key dimensions of participation, such as discourse patterns, conceptual progress, equity of engagement, and leadership dynamics. These dashboards support metacognitive self-assessment and collaborative reflection aligned with knowledge building principles (Gutiérrez-Braojos et al., 2024) [45]. | Discourse moves classification, contribution patterns. |
| CiA | Curriculum-ideas Analytics (CiA) is an educational analytics tool developed to support teachers and students in identifying and reflecting on “big ideas” that span multiple subjects and grade levels. It facilitates the analysis of discourse and curriculum content by mapping conceptual trajectories across science and humanities domains, promoting a deeper understanding of interdisciplinary knowledge development (Tan et al., 2022) [46]. | Interaction types, contribution quality, reflection indicators. |
| Promising Ideas Tool | The Promising Ideas Tool was developed as a component of the Knowledge Forum platform to enhance student engagement in knowledge building discourse. It enables participants to identify, highlight, and track promising contributions within online discussions. The tool includes features for tagging key ideas, aggregating and ranking them based on peer recognition, and exporting selected ideas to new collaborative spaces for further development (Chen et al., 2015) [47]. | Idea tagging and ranking, promising idea identification, idea aggregation and export for further development. |
| Vocabulary Analyzer | A tool developed to track the growth of a user’s vocabulary over time and to assess their vocabulary level against a predefined dictionary. This dictionary typically contains key terms relevant to a specific domain or important concepts identified in curriculum guidelines, helping monitor students’ conceptual development (Hong et al., 2015) [39]. | Vocabulary growth tracking, lexical diversity, term frequency, domain-specific term analysis, conceptual depth and development monitoring. |
| CDA | The Classroom Discourse Analyzer (CDA) is a practical tool designed to help educators overcome common challenges in analyzing classroom discourse. By facilitating the coding and examination of student–teacher interactions, the CDA enables teachers to reflect on and improve their instructional practices using data derived from authentic classroom environments (Chen et al., 2015) [47]. | Critical discourse features, power relations in discourse. |
| Semantic Overlap Tool | The Semantic Overlap Tool is an analytic feature that compares notes or sets of notes to detect shared terms and phrases, thereby identifying conceptual similarities across student discourse. It is particularly useful for analyzing the degree to which students’ contributions align with curriculum goals by highlighting overlapping key vocabulary (Hong et al., 2015) [39]. | Semantic similarity, conceptual alignment. |
| KFCE | KFCE is an analytical tool integrated into Knowledge Forum that extracts and organizes key information about a learning community’s activity. It supports the evaluation of indicators such as individual participation, connections between contributions, and collective cognitive responsibility. These data provide a basis for monitoring progress, identifying interaction patterns, and supporting reflective assessment processes in knowledge building contexts (Gutiérrez-Braojos et al., 2022) [48]. | Data extraction, community participation metrics, content export. |
| Analytic Toolkit | The Analytic Toolkit, developed for the Knowledge Forum environment, was designed to help users examine their participation in collaborative discourse. It offered metrics such as the number and type of contributions, response patterns, and lexical overlap, allowing students and educators to reflect on idea development and engagement patterns within the online learning community (Burtis, 1998) [49]. | Contribution frequency analysis, response pattern analysis, lexical overlap analysis, participation monitoring. |
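
KBDeX's bipartite model can be illustrated with a minimal sketch, assuming plain-text notes and a hand-picked keyword list. It links each author to the keywords they used, projects those links onto an author network in which two authors are tied when they share at least one keyword, and computes degree centrality. This is not KBDeX's implementation: the tokenizer, the keyword list, and the unweighted centrality are simplifying assumptions, and the tool itself also builds networks of discourse units and of key terms.

```python
import re
from itertools import combinations

# Hypothetical discourse units (author, note text) and a hand-picked keyword list.
NOTES = [
    ("ana", "Plants need light to make food through photosynthesis."),
    ("ben", "Maybe light is not enough; plants also need water."),
    ("cleo", "Water and carbon dioxide are used in photosynthesis."),
    ("dev", "I think soil gives plants their food."),
]
KEYWORDS = {"light", "water", "photosynthesis", "food", "soil", "carbon"}


def tokens(text):
    """Lowercased alphabetic word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def author_keyword_links(notes, keywords):
    """Bipartite author-keyword links: the set of keywords each author has used."""
    links = {}
    for author, text in notes:
        links.setdefault(author, set()).update(tokens(text) & keywords)
    return links


def project_authors(links):
    """One-mode projection: authors are tied if they share keywords; weight = shared count."""
    edges = {}
    for a, b in combinations(sorted(links), 2):
        shared = len(links[a] & links[b])
        if shared:
            edges[(a, b)] = shared
    return edges


def degree_centrality(links, edges):
    """Number of neighbours divided by (n - 1), ignoring edge weights."""
    n = len(links)
    degree = dict.fromkeys(links, 0)
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    return {a: d / (n - 1) if n > 1 else 0.0 for a, d in degree.items()}


links = author_keyword_links(NOTES, KEYWORDS)
edges = project_authors(links)
print(edges)  # {('ana', 'ben'): 1, ('ana', 'cleo'): 1, ('ana', 'dev'): 1, ('ben', 'cleo'): 1}
print(degree_centrality(links, edges)["ana"])  # 1.0
```

Here ana shares a keyword with every other participant and is therefore the most central author, while dev connects to the group only through "food".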
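
The comparison performed by the Semantic Overlap Tool can be approximated as follows, assuming single-word terms, a small hypothetical stopword list, and the Jaccard index as the similarity score. How the original tool matches phrases or scores overlap is not specified here, so these choices are illustrative. Comparing a set of student notes with curriculum text shows which key terms the two share.

```python
import re

# Hypothetical stopword list; a real tool may also match phrases or word stems.
STOPWORDS = frozenset({"the", "a", "an", "of", "and", "is", "to", "in", "by"})


def key_terms(text, stopwords=STOPWORDS):
    """Lowercased word tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in stopwords}


def semantic_overlap(texts_a, texts_b, stopwords=STOPWORDS):
    """Shared terms between two sets of notes and their Jaccard similarity."""
    a = set().union(*(key_terms(t, stopwords) for t in texts_a))
    b = set().union(*(key_terms(t, stopwords) for t in texts_b))
    union = a | b
    shared = a & b
    return shared, (len(shared) / len(union) if union else 0.0)


student_notes = ["Evaporation of water forms clouds.", "Clouds release rain."]
curriculum = ["The water cycle: evaporation, condensation, and precipitation."]
shared, score = semantic_overlap(student_notes, curriculum)
print(sorted(shared), round(score, 3))  # ['evaporation', 'water'] 0.222
```

The shared terms point to curriculum concepts the students already use, while curriculum terms missing from the notes (here "condensation" and "precipitation") suggest where discourse has not yet reached.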
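
The Vocabulary Analyzer's core computation, tracking which dictionary terms a user has used over time, can be sketched as a running set union. The dictionary, the exact-match tokenizer (there is no stemming, so "forces" would not match "force"), and the coverage ratio are assumptions for illustration rather than details of the original tool.

```python
import re

# Hypothetical domain dictionary, e.g. key terms drawn from curriculum guidelines.
DICTIONARY = frozenset({"force", "mass", "acceleration", "friction", "gravity"})


def vocabulary_growth(notes_in_order, dictionary=DICTIONARY):
    """Cumulative count of distinct dictionary terms used after each note."""
    used, growth = set(), []
    for text in notes_in_order:
        used |= set(re.findall(r"[a-z]+", text.lower())) & dictionary
        growth.append(len(used))
    return growth, used


def coverage(used, dictionary=DICTIONARY):
    """Share of the dictionary the student has used so far."""
    return len(used) / len(dictionary) if dictionary else 0.0


# Hypothetical notes by one student, in the order they were written.
notes = [
    "A push is a force.",
    "More mass means less acceleration for the same force.",
    "Friction slows the cart.",
]
growth, used = vocabulary_growth(notes)
print(growth, coverage(used))  # [1, 3, 4] 0.8
```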
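
ENA starts from coded discourse and counts how often codes co-occur within a recent stretch of conversation (a moving stanza window). The sketch below shows only that accumulation step, assuming one set of codes per utterance and a window of two utterances; the code labels are hypothetical. The full ENA method, as implemented in the ENA Web Tool, goes further by normalizing these counts and projecting them into a low-dimensional space so that individuals or groups can be compared visually.

```python
from collections import Counter
from itertools import combinations


def ena_cooccurrence(coded_lines, window=2):
    """Accumulate code co-occurrences using a moving stanza window.

    coded_lines: one set of codes per utterance, in temporal order.
    For each utterance, a pair of codes is counted when both occur within the
    window (the utterance plus the window - 1 preceding ones) and at least one
    of them occurs in the current utterance.
    """
    counts = Counter()
    for i, current in enumerate(coded_lines):
        context = set().union(*coded_lines[max(0, i - window + 1): i + 1])
        for a, b in combinations(sorted(context), 2):
            if a in current or b in current:
                counts[(a, b)] += 1
    return counts


# Hypothetical coded utterances from one small-group discussion.
lines = [{"question"}, {"explanation"}, {"evidence", "explanation"}, {"question"}]
print(dict(ena_cooccurrence(lines)))
# {('explanation', 'question'): 2, ('evidence', 'explanation'): 1, ('evidence', 'question'): 1}
```

Requiring one code of each pair to occur in the current utterance keeps earlier turns from being counted again on their own when the window moves forward.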
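
The aggregation and ranking step described for the Promising Ideas Tool can be sketched as counting distinct peers per tagged idea. Counting each peer once per idea and breaking ties by idea id are assumptions, since the tool's actual ranking rule is not described here, and the export step is only indicated in a comment.

```python
from collections import defaultdict


def rank_promising(tags, top_k=None):
    """Rank ideas by how many distinct peers tagged them as promising.

    tags: iterable of (tagger, idea_id) pairs; repeated tags by the same
    tagger on the same idea are counted once. Ties are broken by idea id.
    """
    taggers = defaultdict(set)
    for tagger, idea in tags:
        taggers[idea].add(tagger)
    ranked = sorted(taggers.items(), key=lambda item: (-len(item[1]), item[0]))
    result = [(idea, len(who)) for idea, who in ranked]
    return result if top_k is None else result[:top_k]


# Hypothetical tags; the top-ranked ideas could then be exported to a new view.
tags = [("ana", "n3"), ("ben", "n3"), ("ben", "n3"), ("cleo", "n7"),
        ("dev", "n3"), ("dev", "n7"), ("ana", "n1")]
print(rank_promising(tags, top_k=2))  # [('n3', 3), ('n7', 2)]
```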

| Year | Title | Learning Analytics |
|---|---|---|
| Collaborative Efforts | | |
| 2011 | Students’ views of collaboration and online participation in Knowledge Forum | Analytic Toolkit |
| 2020 | Refining qualitative ethnographies using Epistemic Network Analysis: A study of socioemotional learning dimensions in a Humanistic Knowledge Building Community | ENA Web Tool |
| Idea Improvement | | |
| 2015 | Advancing knowledge building discourse through judgments of promising ideas | Promising Ideas Tool |
| 2020 | Collective reflective assessment for shared epistemic agency by undergraduates in knowledge building | KBDeX/Promising Ideas Tool |
| 2022 | Fostering low-achieving students’ productive disciplinary engagement through knowledge building inquiry and reflective assessment | ENA Web Tool |
| 2023 | Epistemic Network Analysis to assess collaborative engagement in Knowledge Building discourse | ENA Web Tool |
| 2023 | Analytics-supported reflective assessment for 6th graders’ knowledge building and data science practices: An exploratory study | KBDeX |
| 2024 | Reflective assessment using analytics and artifacts for scaffolding knowledge building competencies among undergraduate students | KBDeX |
| 2024 | Effects and mechanisms of analytics-assisted reflective assessment in fostering undergraduates’ collective epistemic agency in computer-supported collaborative inquiry | KBDeX |
| Collaborative Efforts and Idea Improvement | | |
| 2010 | Emergence of Epistemic Agency in College Level Educational Technology Course for Pre-Service Teachers Engaged in CSCL | Social Network Tool |
| 2012 | Knowledge Building Discourse Explorer: a social network analysis application for knowledge building discourse | KBDeX/Social Network Tool |
| 2015 | Fostering sustained idea improvement with principle-based knowledge building analytic tools | Social Network Tool, Vocabulary Analyzer, Semantic Overlap Tool |
| 2016 | Reflective assessment in knowledge building by students with low academic achievement | KCA |
| 2017 | Fostering scientific understanding and epistemic beliefs through judgments of promisingness | ITM |
| 2018 | Co-Organizing the Collective Journey of Inquiry with Idea Thread Mapper | ITM |
| 2019 | Reflective assessment for epistemic agency of academically low-achieving students | KCA |
| 2019 | Knowledge Building Analytics to Explore Crossing Disciplinary and Grade-Level Boundaries | KBDeX |
| 2021 | Examining Online Discourse Using the Knowledge Connection Analyzer Framework and Collaborative Tools in Knowledge Building | KBDeX/Promising Ideas Tool |
| 2021 | A knowledge building approach to primary science collaborative inquiry supported by learning analytics | CiA |
| 2021 | Agency to transform: how did a grade 5 community co-configure dynamic knowledge building practices in a yearlong science inquiry? | ITM |
| 2021 | Computer-supported knowledge building community A new learning analytics tool | KFCE |
| 2022 | Collaborative analytics-supported reflective Assessment for Scaffolding Pre-service Teachers’ collaborative Inquiry and Knowledge Building | KBDeX |
| 2022 | Cross-community knowledge building with idea thread mapper | ITM |
| 2023 | An Analytical Dashboard of Collaborative Activities for the Knowledge Building | KBAT |
| 2023 | Assessing team creativity with multi-attribute group decision-making in a knowledge building community: A design-based research | Social Network Tool |
| 2023 | Examining the role of metadiscourse in collaborative knowledge building community | KBDeX |
| 2024 | Investigating the mechanisms of analytics-supported reflective assessment for fostering collective knowledge | KBDeX |
| 2024 | Using learning analytics to enhance college students shared epistemic agency in mobile instant messaging: A new way to support deep discussion | KBDeX |
| 2024 | Teacher scaffolding for knowledge building in the educational research classroom | KBAT |
| 2024 | Evaluating a New Knowledge Building Analytics Tool (KBAT) | KBAT |

| 2025 | Video-based analytics-supported formative feedback for enhancing low-achieving students’ conception of collaboration and classroom discourse engagement | CDA |

| Year | Title | Participants | Participant Setup | Platform Used | Period | Assessment Moment | Assessment Agent |
|---|---|---|---|---|---|---|---|
| Primary Education | |||||||
| 2015 | Fostering sustained idea improvement with principle-based knowledge building analytic tools | 22 | Not specified | KF | 17 weeks | Formative and summative | Student and teacher |
| 2015 | Advancing knowledge-building discourse through judgments of promising ideas | 40 | Small group | KF | 8 weeks | Formative and summative | Student and teacher |
| 2017 | Fostering scientific understanding and epistemic beliefs through judgments of promisingness | 26 | Big group, small group, and individual | KF | 10 weeks | Formative and summative | Student and teacher |
| 2018 | Co-Organizing the Collective Journey of Inquiry with Idea Thread Mapper | 47 | Big group and small group | KF | 12 weeks | Formative and summative | Student and teacher |
| 2019 | Reflective assessment for epistemic agency of academically low-achieving students | 33 | Big group and small group | KF | 21 weeks | Formative and summative | Student and teacher |
| 2019 | Knowledge Building Analytics to Explore Crossing Disciplinary and Grade-Level Boundaries | 40 | Big group and individual | KF | 13 weeks | Formative and summative | Teacher |
| 2021 | A knowledge building approach to primary science collaborative inquiry supported by learning analytics | 25 | Big group and individual | KF | Not specified | Formative and summative | Student and teacher |
| 2021 | Agency to transform: how did a grade 5 community co-configure dynamic knowledge building practices in a yearlong science inquiry? | 24 | Big group, small group, and individual | KF | 1 year | Formative and summative | Student and teacher |
| 2022 | Cross-community knowledge building with idea thread mapper | 76 | Small group | KF | 26 weeks | Formative and summative | Student and teacher |
| 2023 | Epistemic Network Analysis to assess collaborative engagement in Knowledge Building discourse | 6 | Big group | KF | 2 weeks | Summative | Student and teacher |
| 2023 | Analytics-supported reflective assessment for 6th graders’ knowledge building and data science practices: An exploratory study | 56 | Big group and individual | KF | 8 weeks | Formative and summative | Teacher |
| 2023 | Assessing team creativity with multi-attribute group decision-making in a knowledge building community: A design-based research | 37 | Big group and individual | KF | 39 weeks | Formative and summative | Student and teacher |
| 2024 | Investigating the mechanisms of analytics-supported reflective assessment for fostering collective knowledge | 93 | Small group | KF | 18 weeks | Formative and summative | Student and teacher |
| Secondary Education | |||||||
| 2011 | Students’ views of collaboration and online participation in Knowledge Forum | 23 | Small group | KF | 8 weeks | Summative | Teacher |
| 2016 | Reflective assessment in knowledge building by students with low academic achievement | 20 | Small group and individual | KF | 2 weeks | Formative and summative | Student and teacher |
| 2021 | Examining Online Discourse Using the Knowledge Connection Analyzer Framework and Collaborative Tools in Knowledge Building | 353 | Big group and individual | KF | 21 weeks | Formative and summative | Student and teacher |
| 2022 | Fostering low-achieving students’ productive disciplinary engagement through knowledge-building inquiry and reflective assessment | 34 | Big group, small group, and individual | KF | 17 weeks | Summative | Student and teacher |
| 2025 | Video-based analytics-supported formative feedback for enhancing low-achieving students’ conception of collaboration and classroom discourse engagement | 98 | Small group | Not specified | 7 weeks | Formative | Student and teacher |
| Higher Education | |||||||
| 2010 | Emergence of Epistemic Agency in College Level Educational Technology Course for Pre-Service Teachers Engaged in CSCL | 44 | Big group | KF | 6 weeks | Formative and summative | Teacher |
| 2012 | Knowledge Building Discourse Explorer: a social network analysis application for knowledge building discourse | 6 | Small group | Not specified | Not specified | Formative | Teacher |
| 2020 | Collective reflective assessment for shared epistemic agency by undergraduates in knowledge building | 73 | Big group, small group, and individual | KF | 17 weeks | Formative and summative | Student and teacher |
| 2020 | Refining qualitative ethnographies using Epistemic Network Analysis: A study of socioemotional learning dimensions in a Humanistic Knowledge Building Community | 18 | Big group, small group, and individual | KF | 13 weeks | Summative | Teacher |
| 2022 | Computer-supported knowledge building community A new learning analytics tool | 59 | Small group | KF | 16 weeks | Formative | Student and teacher |
| 2022 | Collaborative analytics-supported reflective Assessment for Scaffolding Pre-service Teachers’ collaborative Inquiry and Knowledge Building | 68 | Big group and small group | KF | 18 weeks | Formative and summative | Student and teacher |
| 2023 | An Analytical Dashboard of Collaborative Activities for the Knowledge Building | 126 | Big group and individual | KF | 16 weeks | Formative and summative | Student and teacher |
| 2023 | Examining the role of metadiscourse in collaborative knowledge building community | 35 | Small group | Not specified | 12 weeks | Formative | Student and teacher |
| 2024 | Reflective assessment using analytics and artifacts for scaffolding knowledge building competencies among undergraduate students | 41 | Big group and individual | KF | 2 weeks | Formative | Student and teacher |
| 2024 | Effects and mechanisms of analytics-assisted reflective assessment in fostering undergraduates’ collective epistemic agency in computer-supported collaborative inquiry | 81 | Big group and individual | KF | 16 weeks | Formative and summative | Student and teacher |
| 2024 | Using learning analytics to enhance college students shared epistemic agency in mobile instant messaging: A new way to support deep discussion | 40 | Small group | | 14 weeks | Formative | Student and teacher |
| 2024 | Teacher scaffolding for knowledge building in the educational research classroom | 59 | Small group | KF | 16 weeks | Formative | Student and teacher |
| 2024 | Evaluating a New Knowledge Building Analytics Tool (KBAT) | 122 | Small group | KF | 16 weeks | Formative | Student and teacher |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rodríguez-Chirino, P.; Gutiérrez-Braojos, C.; Martínez-Gámez, M.; Rodríguez-Domínguez, C. Technologies for Reflective Assessment in Knowledge Building Communities: A Systematic Review. Information 2025, 16, 762. https://doi.org/10.3390/info16090762
