Low-Cost Smart Cane for Visually Impaired People with Pathway Surface Detection and Distance Estimation Using Weighted Bounding Boxes and Depth Mapping

Abstract

1. Introduction

- A novel Pathway Surface Transition Point Detection (PSTPD) method that detects transition points between different walking surfaces and estimates their distances using a weighted center calculation derived from bounding boxes and a calibrated depth mapping technique.

- Integration of the PSTPD method with enhanced obstacle detection in a smart cane that delivers distance-based alerts indicating the severity and proximity of both obstacles and pathway surface transition points.

- A cost-effective smart cane built from a Raspberry Pi 4, camera modules, and an ultrasonic sensor, providing a low-cost assistive tool intended for reliable use in real-world conditions.
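The first contribution combines a weighted center computed from bounding boxes with a calibrated depth mapping. The exact formulas are not given in this outline, so the following is only a minimal sketch under stated assumptions: the weighted center is taken to be a weight-averaged box center (with detection confidence as a possible weight), and the depth mapping is taken to be a piecewise-linear lookup from image row to distance. The function names and the calibration pairs are hypothetical placeholders, not values from the paper.

```python
from bisect import bisect_left


def box_center(box):
    """Return the (x, y) center of a box given as (x1, y1, x2, y2) in pixels."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def weighted_center(boxes, weights):
    """Weight-averaged center of several boxes (assumed form of the weighting)."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    centers = [box_center(b) for b in boxes]
    cx = sum(w * c[0] for w, c in zip(weights, centers)) / total
    cy = sum(w * c[1] for w, c in zip(weights, centers)) / total
    return cx, cy


def row_to_distance_cm(y, calibration):
    """Map an image row to a distance by linear interpolation.

    `calibration` is a list of (pixel_row, distance_cm) pairs sorted by
    pixel_row. Rows outside the calibrated span are clamped to the ends.
    """
    rows = [r for r, _ in calibration]
    if y <= rows[0]:
        return calibration[0][1]
    if y >= rows[-1]:
        return calibration[-1][1]
    i = bisect_left(rows, y)
    (r0, d0), (r1, d1) = calibration[i - 1], calibration[i]
    t = (y - r0) / (r1 - r0)
    return d0 + t * (d1 - d0)


# Hypothetical calibration: lower image rows (larger y) are closer to the cane.
CALIBRATION = [(200, 160.0), (300, 120.0), (400, 80.0)]

if __name__ == "__main__":
    boxes = [(0, 0, 100, 100), (100, 100, 200, 300)]
    cx, cy = weighted_center(boxes, [0.5, 0.5])
    print(cx, cy, row_to_distance_cm(350, CALIBRATION))
```

In practice the calibration pairs would be measured for the specific camera mounting height and tilt, since the row-to-distance relation depends on both.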

2. Related Works

3. Problem Analysis

4. System Overview

4.1. Hardware Unit

4.2. Software Unit

5. Proposed Method

5.1. Input

5.2. Processing Unit

5.2.1. Obstacle Detection Process

- Obstacle detection model

- Obstacle severity assessment process

5.2.2. Pathway Surface Transition Point Detection (PSTPD) Process

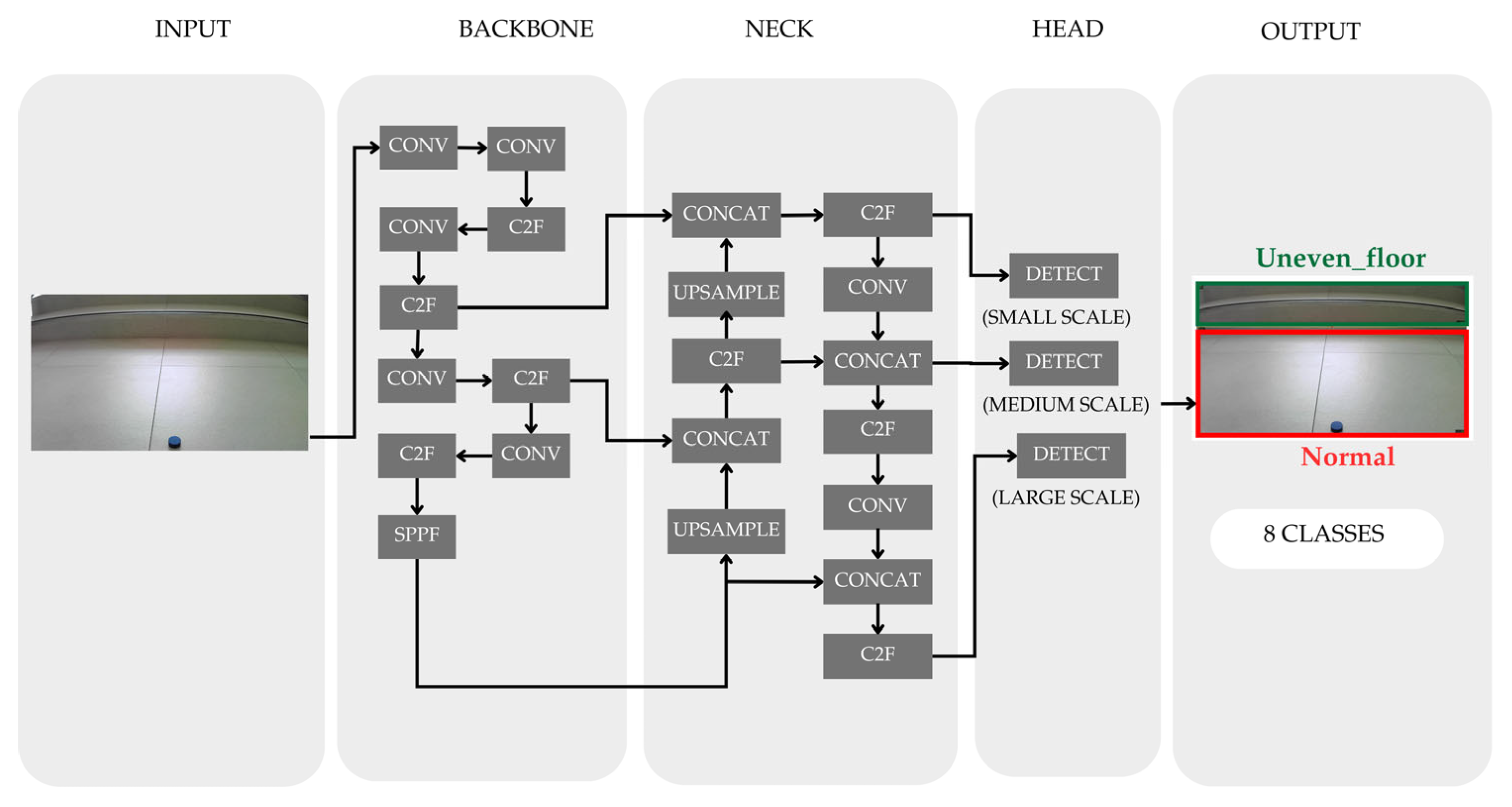

- Pathway surface detection model

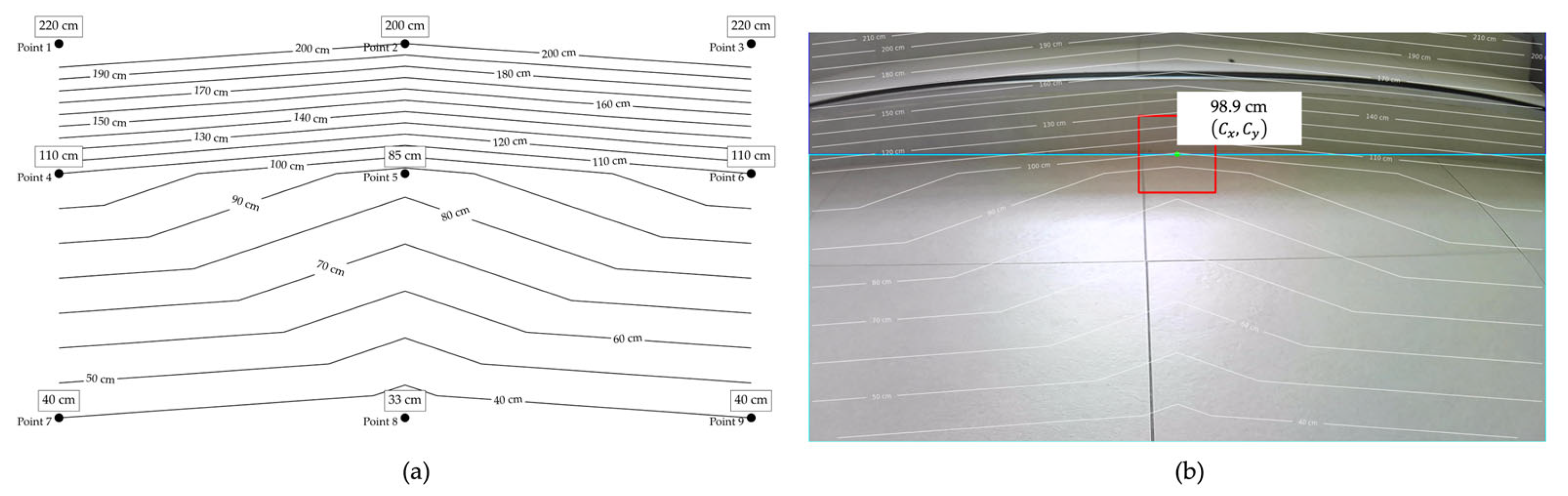

- Pathway surface transition point distance estimation process

- Pathway surface transition point severity assessment process

5.3. Notification

5.3.1. Obstacle Detection Alerts

5.3.2. Pathway Surface Transition Point Detection Alerts

6. Experiments and Results

6.1. Experiments

6.1.1. Dataset

6.1.2. Configuration Parameters

6.1.3. Evaluation

- Mean Average Precision (mAP)

- Intersection over Union (IoU)
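As a brief illustration of the second metric, IoU is the area of overlap between a predicted and a ground-truth box divided by the area of their union. The sketch below assumes axis-aligned boxes in `(x1, y1, x2, y2)` pixel form; the function name is illustrative.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    print(iou((0, 0, 10, 10), (5, 5, 15, 15)))
```

A detection counts as a true positive at mAP@50 when its IoU with a ground-truth box of the same class is at least 0.5; mAP@50:95 averages over IoU thresholds from 0.5 to 0.95.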

6.2. Results

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- World Health Organization. Blindness and Visual Impairment; WHO: Geneva, Switzerland, 2024; Available online: https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment (accessed on 15 March 2025).

- Daga, F.B.; Diniz-Filho, A.; Boer, E.R.; Gracitelli, C.P.; Abe, R.Y.; Fajpo, M. Fear of Falling and Postural Reactivity in Patients with Glaucoma. PLoS ONE 2017, 12, e0187220.

- Williams, J.S.; Kowal, P.; Hestekin, H.; O’Driscoll, T.; Peltzer, K.; Yawson, A.E.; Biritwum, R.; Maximova, T.; Salinas Rodríguez, A.; Manrique Espinoza, B.; et al. Prevalence, Risk Factors, and Disability Associated with Fall-Related Injury in Older Adults in Low- and Middle-Income Countries: Results from the WHO Study on Global AGEing and Adult Health (SAGE). BMC Med. 2015, 13, 147.

- Patino, M.; McKean-Cowdin, R.; Azen, S.P.; Allison, J.C.; Choudhury, F.; Varma, R. Central and Peripheral Visual Impairment and the Risk of Falls and Falls with Injury. Ophthalmology 2010, 117, 199–206.

- Khan, S.; Nazir, S.; Khan, H.U. Analysis of Navigation Assistants for Blind and Visually Impaired People: A Systematic Review. IEEE Access 2021, 9, 26712–26729.

- Beingolea, J.R.; Zea-Vargas, M.A.; Huallpa, R.; Vilca, X.; Bolivar, R.; Rendulich, J. Assistive Devices: Technology Development for the Visually Impaired. Designs 2021, 5, 75.

- Mai, C.; Xie, D.; Zeng, L.; Li, Z.; Li, Z.; Qiao, Z.; Qu, Y.; Liu, G.; Li, L. Laser Sensing and Vision Sensing Smart Blind Cane: A Review. Sensors 2023, 23, 869.

- Hersh, M. Wearable Travel Aids for Blind and Partially Sighted People: A Review with a Focus on Design Issues. Sensors 2022, 22, 5454.

- Buckley, J.G.; Panesar, G.K.; MacLellan, M.J.; Pacey, I.E.; Barrett, B.T. Changes to Control of Adaptive Gait in Individuals with Long-Standing Reduced Stereoacuity. Investig. Ophthalmol. Vis. Sci. 2010, 51, 2487–2495.

- Zafar, S.; Maqbool, H.F.; Ahmad, N.; Ali, A.; Moeizz, A.; Ali, F.; Taborri, J.; Rossi, S. Advancement in Smart Cane Technology: Enhancing Mobility for the Visually Impaired Using ROS and LiDAR. In Proceedings of the 2024 International Conference on Robotics and Automation in Industry (ICRAI), Lahore, Pakistan, 18–19 December 2024; pp. 1–8.

- Panazan, C.-E.; Dulf, E.-H. Intelligent Cane for Assisting the Visually Impaired. Technologies 2024, 12, 75.

- Sipos, E.; Ciuciu, C.; Ivanciu, L. Sensor-Based Prototype of a Smart Assistant for Visually Impaired People—Preliminary Results. Sensors 2022, 22, 4271.

- Cardillo, E.; Li, C.; Caddemi, A. Empowering Blind People Mobility: A Millimeter-Wave Radar Cane. In Proceedings of the 2020 IEEE International Workshop on Metrology for Industry 4.0 and IoT (MetroInd4.0&IoT), Rome, Italy, 3–5 June 2020; pp. 390–395.

- Sibu, S.F.; Raina, K.J.; Kumar, B.S.; Joseph, V.P.; Joseph, A.T.; Thomas, T. CNN-Based Smart Cane: A Tool for Visually Impaired People. In Proceedings of the 2023 9th International Conference on Smart Computing and Communications (ICSCC), Palai, India, 17–19 August 2023; pp. 126–131.

- Li, J.; Xie, L.; Chen, Z.; Shi, L.; Chen, R.; Ren, Y.; Wang, L.; Lu, X. An AIoT-Based Assistance System for Visually Impaired People. Electronics 2023, 12, 3760.

- Chen, L.B.; Pai, W.Y.; Chen, W.H.; Huang, X.R. iDog: An Intelligent Guide Dog Harness for Visually Impaired Pedestrians Based on Artificial Intelligence and Edge Computing. IEEE Sens. J. 2024, 24, 41997–42008.

- Rahman, M.W.; Tashfia, S.S.; Islam, R.; Hasan, M.M.; Sultan, S.I.; Mia, S.; Rahman, M.M. The Architectural Design of Smart Blind Assistant Using IoT with Deep Learning Paradigm. Internet Things 2021, 13, 100344.

- Patankar, N.S.; Patil, H.P.; Aware, B.H.; Maind, R.V.; Dhorde, P.S.; Deshmukh, Y.S. An Intelligent IoT-Based Smart Stick for Visually Impaired Person Using Image Sensing. In Proceedings of the 2023 14th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Delhi, India, 6–8 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Ma, Y.; Shi, Y.; Zhang, M.; Li, W.; Ma, C.; Guo, Y. Design and Implementation of an Intelligent Assistive Cane for Visually Impaired People Based on an Edge-Cloud Collaboration Scheme. Electronics 2022, 11, 2266.

- Leong, X.; Ramasamy, R.K. Obstacle Detection and Distance Estimation for Visually Impaired People. IEEE Access 2023, 11, 136609–136627.

- Nataraj, B.; Rani, D.R.; Prabha, K.R.; Christina, V.S.; Abinaya, R. Smart Cane with Object Recognition System. In Proceedings of the 5th International Conference on Smart Electronics and Communication (ICOSEC 2024), Coimbatore, India, 25–27 September 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1438–1443.

- Raj, S.; Srivastava, K.; Nigam, N.; Kumar, S.; Mishra, N.; Kumar, R. Smart Cane with Object Recognition System. In Proceedings of the 2023 10th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 23–24 February 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 704–708.

- Dang, Q.K.; Chee, Y.; Pham, D.D.; Suh, Y.S. A Virtual Blind Cane Using a Line Laser-Based Vision System and an Inertial Measurement Unit. Sensors 2016, 16, 95.

- Chang, W.-J.; Chen, L.-B.; Sie, C.-Y.; Yang, C.-H. An Artificial Intelligence Edge Computing-Based Assistive System for Visually Impaired Pedestrian Safety at Zebra Crossings. IEEE Trans. Consum. Electron. 2021, 67, 3–11.

- Bai, J.; Liu, Z.; Lin, Y.; Li, Y.; Lian, S.; Liu, D. Wearable Travel Aid for Environment Perception and Navigation of Visually Impaired People. Electronics 2019, 8, 697.

- Joshi, R.C.; Yadav, S.; Dutta, M.K.; Travieso-Gonzalez, C.M. Efficient Multi-Object Detection and Smart Navigation Using Artificial Intelligence for Visually Impaired People. Entropy 2020, 22, 941.

- Farooq, M.S.; Shafi, I.; Khan, H.; De La Torre Díez, I.; Breñosa, J.; Martínez Espinosa, J.C.; Ashraf, I. IoT Enabled Intelligent Stick for Visually Impaired People for Obstacle Recognition. Sensors 2022, 22, 8914.

- Veena, K.N.; Singh, K.; Ullal, B.S.; Biswas, A.; Gogoi, P.; Yash, K. Smart Navigation Aid for Visually Impaired Person Using a Deep Learning Model. In Proceedings of the 2023 3rd International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 23–25 February 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1049–1053.

- Mai, C.; Chen, H.; Zeng, L.; Li, Z.; Liu, G.; Qiao, Z.; Qu, Y.; Li, L.; Li, L. A Smart Cane Based on 2D LiDAR and RGB-D Camera Sensor—Realizing Navigation and Obstacle Recognition. Sensors 2024, 24, 870.

- Scalvini, F.; Bordeau, C.; Ambard, M.; Migniot, C.; Dubois, J. Outdoor Navigation Assistive System Based on Robust and Real-Time Visual–Auditory Substitution Approach. Sensors 2024, 24, 166.

- Raspberry Pi Ltd. Raspberry Pi 4 Model B. Available online: https://www.raspberrypi.com/products/raspberry-pi-4-model-b/ (accessed on 17 December 2024).

- HOCO. Web Camera GM101 2K HD. Available online: https://hocotech.com/product/home-office/pc-accessories/web-camera-gm101-2k-hd/ (accessed on 4 December 2024).

- Advice IT Infinite Public Company Limited. WEBCAM OKER (OE-B35). Available online: https://www.advice.co.th/product/webcam/webcam-hd-/webcam-oker-oe-b35- (accessed on 4 December 2024).

- Arduitronics Co., Ltd. Ultrasonic Sensor Module (HC-SR04) 5V. Available online: https://www.arduitronics.com/product/20/ultrasonic-sensor-module-hc-sr04-5v (accessed on 4 December 2024).

- Nubwo Co., Ltd. NBL06. Available online: https://www.nubwo.co.th/nbl06/ (accessed on 5 December 2024).

- ModuleMore. DS-212 Mini Push Button Switch. Available online: https://www.modulemore.com/p/2709 (accessed on 5 December 2024).

- Ultralytics. YOLOv5 Models. Available online: https://docs.ultralytics.com/models/yolov5/ (accessed on 5 December 2024).

- Ultralytics. YOLOv8 Models. Available online: https://docs.ultralytics.com/models/yolov8/ (accessed on 5 December 2024).

- Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015, arXiv:1405.0312v3.

- Roboflow. Test_YOLO8s Dataset. Available online: https://universe.roboflow.com/testyolo5-ro4zc/test_yolo8s (accessed on 10 December 2024).

- Roboflow. PotholeKerta Dataset. Available online: https://universe.roboflow.com/pothole-yzqlw/potholekerta (accessed on 5 December 2024).

- Roboflow. Damaged-Sidewalks Dataset. Available online: https://universe.roboflow.com/damagedsidewalks/damaged-sidewalks (accessed on 5 December 2024).

- Roboflow. Grass-Mrude Dataset. Available online: https://universe.roboflow.com/aut/grass-mrude (accessed on 7 December 2024).

- Roboflow. Block-Wawkg Dataset. Available online: https://universe.roboflow.com/braille-block/block-wawkg (accessed on 3 December 2024).

- Roboflow. Crosswalk-Ognxu Dataset. Available online: https://universe.roboflow.com/edgar1019-naver-com/crosswalk-ognxu (accessed on 14 December 2024).

- Roboflow. Puddle-Detection Dataset. Available online: https://universe.roboflow.com/hanyang-university-bd2kb/puddle-detection (accessed on 10 December 2024).

- Roboflow. Pothole 2-7kwss Dataset. Available online: https://universe.roboflow.com/perception-hmwbz/pothole-2-7kwss (accessed on 9 December 2024).

- Roboflow. DataAdd-Bkykc Dataset. Available online: https://universe.roboflow.com/data-ksqzc/dataadd-bkykc (accessed on 5 December 2024).

- Ultralytics. Performance Metrics Deep Dive. Available online: https://docs.ultralytics.com/guides/yolo-performance-metrics/ (accessed on 1 January 2025).

- Ultralytics. YOLOv5 vs. YOLOv8: A Detailed Comparison. Available online: https://docs.ultralytics.com/compare/yolov5-vs-yolov8/ (accessed on 5 December 2024).

- Testbook. Midpoint Formula. Available online: https://testbook.com/maths/midpoint-formula (accessed on 15 July 2025).

- Masoumian, A.; Rashwan, H.A.; Cristiano, J.; Asif, M.S.; Puig, D. Monocular Depth Estimation Using Deep Learning: A Review. Sensors 2022, 22, 5353.

| Method | Year | Components | Cost | Weight | Obstacle Distance | Pathway Transition Point Distance | Danger Level (Obstacle/Pathway) | Limitations |
|---|---|---|---|---|---|---|---|---|
| [25] | 2019 | RGB-D Camera, IMU Sensor, Earphones, Smartphone | High | Light | No | No | No | Does not detect or measure pathway transition distances; high computational resources |
| [26] | 2020 | RGB Camera, Raspberry Pi 4, Distance Sensor, Headphones | Low | Light | Yes | No | No | Does not detect or measure pathway transition distances |
| [27] | 2022 | RGB Camera, Distance Sensors, GPS, Raspberry Pi 4, Water Sensor, Earphones | Low | Light | Yes | No | No | Does not detect or measure pathway transition distances |
| [28] | 2023 | RGB Camera, Raspberry Pi, Distance Sensor, Buzzer, Earphone | Low | Light | Yes | No | No | Does not detect or measure pathway transition distances; limited effectiveness in obstacle detection |
| [29] | 2024 | LiDAR, RGB-D Camera, IMU, GPS, Jetson Nano | High | Light | Yes | No | No | Does not detect or measure pathway transition distances; high computational resources |
| [30] | 2024 | RGB-D Camera, GPS, IMU Sensor, Laptop | High | Bulky | No | No | Both | Does not detect or measure pathway transition distances; high computational resources |

| Component | Specification |
|---|---|
| Raspberry Pi [31] | Model: Raspberry Pi 4 Model B; CPU: Broadcom BCM2711, Quad-core Cortex-A72 (ARM v8) 64-bit SoC @ 1.5 GHz; Memory Size: 8 GB LPDDR4-3200 SDRAM |
| RGB Camera 1 [32] | Model: Hoco Webcam GM101; Resolution: 2560 × 1440 pixels; Frame Rate: 30 FPS |
| RGB Camera 2 [33] | Model: OE-B35; Resolution: 640 × 480 pixels; Frame Rate: 30 FPS |
| Ultrasonic Sensor [34] | Model: HC-SR04; Detection Range: 2 cm–400 cm; Measuring Angle: <15° |
| Battery [35] | Capacity: 10,000 mAh; Battery: Lithium Polymer; Output USB: 5 V/3 A; Dimensions (Width × Depth × Height): 6.7 × 1.5 × 13.4 cm; Weight: 0.21 kg |
| Push Button Switch [36] | Model: DS-212 Mini No Lock Round Switch; Voltage: 3.3 V DC (GPIO logic level) |
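For context on how an HC-SR04-style sensor yields a distance, the sensor reports the round-trip time of an ultrasonic pulse, which is converted using the speed of sound. The sketch below is a generic illustration and not the authors' code; it assumes a speed of sound of roughly 343 m/s (air at about 20 °C) and uses the 2 cm to 400 cm range from the specification table as a validity check. The function names are illustrative, and reading the echo pulse from GPIO is deliberately left out.

```python
# Approximate speed of sound in air at about 20 degrees C (assumption).
SPEED_OF_SOUND_CM_PER_S = 34300.0

# Detection range from the hardware specification table.
MIN_RANGE_CM = 2.0
MAX_RANGE_CM = 400.0


def echo_to_distance_cm(echo_duration_s):
    """Convert a round-trip echo time in seconds to a one-way distance in cm."""
    if echo_duration_s < 0:
        raise ValueError("echo duration must be non-negative")
    # The pulse travels to the obstacle and back, hence the division by 2.
    return echo_duration_s * SPEED_OF_SOUND_CM_PER_S / 2.0


def is_valid_reading(distance_cm):
    """Return True when the distance lies inside the sensor's rated range."""
    return MIN_RANGE_CM <= distance_cm <= MAX_RANGE_CM


if __name__ == "__main__":
    d = echo_to_distance_cm(0.001)  # a 1 ms echo
    print(d, is_valid_reading(d))
```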

| Severity Level | Object Classes | Description |

|---|---|---|

| Mild | Bench, Backpack, Umbrella, Handbag, Suitcase, Cat, Dog, Bird, Bottle, Chair, Potted Plant | Generally static and easy to detect; pose minimal threat to navigation. |

| Moderate | Bicycle, Motorcycle, Stop Sign, Parking Meter, Fire Hydrant, Couch, Bed, Dining Table, Toilet, Sink, Refrigerator | May partially obstruct the path or exist at elevations not consistently detected; present moderate risk. |

| Severe | Person, Car, Bus, Train, Truck, Boat, Traffic Light | Dynamic, large, or linked to hazardous environments; high risk, requiring immediate user awareness. |
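The severity table above can be expressed as a simple lookup from detected class name to severity level. The sketch below transcribes the 29 classes from the table; the helper names and the rule of reporting the highest severity among several detections are illustrative assumptions, not something the table itself specifies.

```python
# Class-to-severity assignment transcribed from the severity table.
SEVERITY_CLASSES = {
    "mild": [
        "bench", "backpack", "umbrella", "handbag", "suitcase", "cat",
        "dog", "bird", "bottle", "chair", "potted plant",
    ],
    "moderate": [
        "bicycle", "motorcycle", "stop sign", "parking meter", "fire hydrant",
        "couch", "bed", "dining table", "toilet", "sink", "refrigerator",
    ],
    "severe": [
        "person", "car", "bus", "train", "truck", "boat", "traffic light",
    ],
}

SEVERITY_RANK = {"mild": 0, "moderate": 1, "severe": 2}

# Flattened lookup: class name -> severity level.
SEVERITY_BY_CLASS = {
    name: level for level, names in SEVERITY_CLASSES.items() for name in names
}


def severity_of(label):
    """Return the severity level for a class label, or None if unlisted."""
    return SEVERITY_BY_CLASS.get(label.strip().lower())


def most_severe(labels):
    """Return the highest severity among the labels (assumed alert policy)."""
    levels = [s for s in map(severity_of, labels) if s is not None]
    if not levels:
        return None
    return max(levels, key=SEVERITY_RANK.__getitem__)


if __name__ == "__main__":
    print(severity_of("Person"), most_severe(["bench", "bicycle"]))
```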

| Experiment No. | Experiment Description |

|---|---|

| 1 | Evaluation of object detection performance for obstacle identification |

| 2 | Measurement of obstacle detection distance using ultrasonic sensor |

| 3 | Evaluation of object detection performance for pathway surface classification |

| 4 | Distance estimation for mild-level pathway surface transition points |

| 5 | Distance estimation for moderate-level pathway surface transition points |

| 6 | Distance estimation for severe-level pathway surface transition points |

| 7 | Performance comparison of object detection models and surface transition estimation methods |

| 8 | Evaluation of the effectiveness of each component via ablation experiments |

| Dataset | Number of Classes | Original Images | Augmented Images | Training Set (Images) | Testing Set (Images) |
|---|---|---|---|---|---|
| COCO2017 [39] | 29 | 102,184 | - | 98,057 | 4127 |
| Pathway Surface [40,41,42,43,44,45,46,47,48] | 8 | 1200 (150 images per class) | 6400 | 4800 | 1600 |
| PSTP—Mild Cases [Ours] | - | 50 | - | - | 50 |
| PSTP—Moderate Cases [Ours] | - | 300 | - | - | 300 |
| PSTP—Severe Cases [Ours] | - | 100 | - | - | 100 |

| Parameter | Value |
|---|---|
| Image size | 640 × 640 |
| Learning rate | 0.0001 |
| Optimizer | AdamW |
| Batch size | 27 |
| Epochs | 200 |
| Weights | yolov8n.pt (pathway surface detection); yolov5x.pt (obstacle detection) |

| Classes | mAP@50 | mAP@50:95 | Precision | Recall |

|---|---|---|---|---|

| person | 0.84 | 0.61 | 0.82 | 0.76 |

| bicycle | 0.66 | 0.40 | 0.76 | 0.58 |

| car | 0.74 | 0.50 | 0.76 | 0.68 |

| motorcycle | 0.78 | 0.52 | 0.79 | 0.70 |

| bus | 0.86 | 0.73 | 0.87 | 0.79 |

| train | 0.94 | 0.75 | 0.92 | 0.90 |

| truck | 0.63 | 0.45 | 0.67 | 0.53 |

| boat | 0.59 | 0.33 | 0.71 | 0.49 |

| traffic light | 0.63 | 0.33 | 0.71 | 0.58 |

| stop sign | 0.83 | 0.74 | 0.88 | 0.73 |

| parking meter | 0.68 | 0.53 | 0.79 | 0.63 |

| fire hydrant | 0.91 | 0.74 | 0.93 | 0.83 |

| bench | 0.47 | 0.32 | 0.67 | 0.43 |

| cat | 0.92 | 0.75 | 0.92 | 0.88 |

| backpack | 0.40 | 0.22 | 0.61 | 0.37 |

| umbrella | 0.71 | 0.48 | 0.74 | 0.65 |

| handbag | 0.38 | 0.22 | 0.60 | 0.35 |

| suitcase | 0.72 | 0.49 | 0.70 | 0.65 |

| dog | 0.84 | 0.70 | 0.80 | 0.78 |

| bird | 0.61 | 0.41 | 0.79 | 0.51 |

| bottle | 0.62 | 0.44 | 0.67 | 0.56 |

| chair | 0.80 | 0.67 | 0.81 | 0.76 |

| potted plant | 0.60 | 0.39 | 0.68 | 0.53 |

| couch | 0.67 | 0.44 | 0.74 | 0.63 |

| bed | 0.88 | 0.71 | 0.84 | 0.82 |

| dining table | 0.53 | 0.38 | 0.64 | 0.49 |

| toilet | 0.72 | 0.50 | 0.77 | 0.60 |

| sink | 0.68 | 0.50 | 0.75 | 0.59 |

| refrigerator | 0.58 | 0.36 | 0.65 | 0.54 |

| Average | 0.70 | 0.50 | 0.76 | 0.63 |

| Classes | [29] (YOLOv5) | [30] (YOLOv8) | [Ours] (YOLOv8-nano) | [Ours] (YOLOv5s) | Proposed Method (YOLOv5x) |

|---|---|---|---|---|---|

| person | 0.67 | 0.88 | 0.77 | 0.75 | 0.84 |

| bicycle | 0.39 | 0.90 | 0.54 | 0.53 | 0.66 |

| car | 0.73 | 0.96 | 0.64 | 0.63 | 0.74 |

| motorcycle | 0.51 | 0.89 | 0.70 | 0.68 | 0.78 |

| bus | 0.67 | 0.89 | 0.80 | 0.76 | 0.86 |

| train | - | - | 0.85 | 0.84 | 0.94 |

| truck | 0.75 | 0.92 | 0.51 | 0.50 | 0.63 |

| boat | - | - | 0.40 | 0.43 | 0.59 |

| traffic light | 0.37 | 0.85 | 0.50 | 0.53 | 0.63 |

| stop sign | - | - | 0.73 | 0.74 | 0.83 |

| parking meter | - | 0.91 | 0.64 | 0.64 | 0.68 |

| fire hydrant | - | - | 0.84 | 0.83 | 0.91 |

| bench | - | 0.72 | 0.32 | 0.31 | 0.47 |

| cat | - | - | 0.85 | 0.82 | 0.92 |

| backpack | - | - | 0.23 | 0.25 | 0.40 |

| umbrella | - | - | 0.56 | 0.56 | 0.71 |

| handbag | - | - | 0.24 | 0.22 | 0.38 |

| suitcase | - | - | 0.56 | 0.53 | 0.72 |

| dog | - | - | 0.70 | 0.67 | 0.84 |

| bird | - | - | 0.43 | 0.42 | 0.61 |

| bottle | - | - | 0.53 | 0.48 | 0.62 |

| chair | - | 0.86 | 0.70 | 0.65 | 0.80 |

| potted plant | - | 0.82 | 0.42 | 0.43 | 0.60 |

| couch | - | - | 0.55 | 0.54 | 0.67 |

| bed | - | - | 0.78 | 0.77 | 0.88 |

| dining table | - | - | 0.47 | 0.42 | 0.53 |

| toilet | - | - | 0.58 | 0.53 | 0.72 |

| sink | - | - | 0.60 | 0.59 | 0.68 |

| refrigerator | - | - | 0.43 | 0.39 | 0.58 |

| Average | 0.58 | 0.87 | 0.58 | 0.57 | 0.70 |

| Actual Distance (cm) | Measurement 1 (cm) | Measurement 2 (cm) | Measurement 3 (cm) | Measurement 4 (cm) | Measurement 5 (cm) | Mean Distance (cm) | Mean Error (cm) | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| 25 | 24.8 | 24.6 | 24.9 | 24.5 | 24.7 | 24.7 | 0.3 | 98.8 |
| 50 | 49.9 | 50.1 | 49.8 | 50.4 | 49.8 | 50.0 | 0.0 | 100.0 |
| 100 | 100.6 | 100.3 | 100.7 | 100.2 | 100.4 | 100.4 | 0.4 | 99.6 |
| 150 | 150.1 | 150.3 | 150.0 | 150.4 | 150.3 | 150.2 | 0.2 | 99.8 |
| 200 | 200.7 | 200.5 | 200.6 | 200.3 | 200.9 | 200.6 | 0.6 | 99.7 |
| 300 | 299.8 | 299.7 | 300.3 | 300.5 | 301.1 | 300.3 | 0.3 | 99.9 |
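The derived columns in the ultrasonic measurement table can be reproduced from the raw readings. The sketch below assumes that the mean error is the absolute difference between the mean reading and the actual distance, and that accuracy is one minus the relative error, expressed as a percentage. This definition is inferred from the reported numbers rather than stated in the table; it reproduces the 25 cm row, and small rounding differences may appear on other rows.

```python
def summarize_measurements(actual_cm, readings_cm):
    """Return (mean distance, mean error, accuracy %) for repeated readings.

    Assumed definitions (inferred from the reported values):
      mean error = |mean reading - actual distance|
      accuracy   = (1 - mean error / actual distance) * 100
    """
    if not readings_cm:
        raise ValueError("at least one reading is required")
    mean_cm = sum(readings_cm) / len(readings_cm)
    error_cm = abs(mean_cm - actual_cm)
    accuracy_pct = (1.0 - error_cm / actual_cm) * 100.0
    return mean_cm, error_cm, accuracy_pct


if __name__ == "__main__":
    # Readings for the 25 cm row of the table.
    mean_cm, error_cm, accuracy_pct = summarize_measurements(
        25.0, [24.8, 24.6, 24.9, 24.5, 24.7]
    )
    print(round(mean_cm, 1), round(error_cm, 1), round(accuracy_pct, 1))
```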

| Actual Distance (cm) | Mean Error [11] | Mean Error (Our Method) | Accuracy (%) [11] | Accuracy (%) (Our Method) |

|---|---|---|---|---|

| 25 | 0 | 0.3 | 100 | 98.8 |

| 50 | 1 | 0.0 | 98 | 100.0 |

| 100 | 3.2 | 0.4 | 96.8 | 99.6 |

| 150 | 3.8 | 0.2 | 97.5 | 99.8 |

| 200 | 4.4 | 0.6 | 97.8 | 99.7 |

| 300 | 5.8 | 0.3 | 98.1 | 99.9 |

| Average | 3.0 | 0.3 | 98.0 | 99.6 |

| Classes | mAP@50 | mAP@50:95 | Precision | Recall |

|---|---|---|---|---|

| Braille block | 0.69 | 0.51 | 0.87 | 0.57 |

| Crosswalk | 0.93 | 0.66 | 0.87 | 0.86 |

| Grass | 0.96 | 0.85 | 0.96 | 0.92 |

| Hole | 0.99 | 0.66 | 0.98 | 1.00 |

| Normal | 0.94 | 0.82 | 0.92 | 0.93 |

| Puddle | 0.94 | 0.73 | 0.96 | 0.88 |

| Rough | 0.95 | 0.80 | 0.96 | 0.88 |

| Uneven floor | 0.94 | 0.68 | 0.96 | 0.88 |

| Average | 0.92 | 0.71 | 0.94 | 0.87 |

| Classes | [29] (YOLOv5) | [30] (YOLOv8) | [Ours] (YOLOv5x) | [Ours] (YOLOv5s) | Proposed Method (YOLOv8n) |

|---|---|---|---|---|---|

| Braille block | 0.83 | 0.87 | 0.73 | 0.68 | 0.69 |

| Crosswalk | 0.82 | 0.86 | 0.96 | 0.95 | 0.93 |

| Grass | - | - | 0.96 | 0.97 | 0.96 |

| Hole | - | - | 0.99 | 0.99 | 0.99 |

| Normal | - | - | 0.95 | 0.95 | 0.94 |

| Puddle | - | - | 0.94 | 0.96 | 0.94 |

| Rough | - | - | 0.96 | 0.95 | 0.95 |

| Uneven floor | - | - | 0.97 | 0.95 | 0.94 |

| Average | 0.82 | 0.86 | 0.93 | 0.93 | 0.92 |

| Actual Distance (cm) | Estimate 1 (cm) | Estimate 2 (cm) | Estimate 3 (cm) | Estimate 4 (cm) | Estimate 5 (cm) | Estimate 6 (cm) | Estimate 7 (cm) | Estimate 8 (cm) | Estimate 9 (cm) | Estimate 10 (cm) | Mean Estimated Distance (cm) | Error (cm) | Average Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 84.4 | 84.7 | 84.8 | 84.9 | 84.9 | 85.0 | 87.8 | 87.8 | 88.1 | 88.2 | 86.1 | 6.1 | 0.5 |
| 100 | 106.0 | 108.2 | 105.3 | 106.6 | 104.4 | 105.5 | 107.6 | 104.7 | 106.6 | 106.9 | 106.2 | 6.2 | 0.5 |
| 120 | 129.1 | 129.1 | 129.1 | 129.1 | 129.1 | 129.1 | 129.4 | 129.4 | 129.4 | 129.5 | 129.2 | 9.2 | 0.6 |
| 140 | 145.9 | 146.6 | 146.8 | 146.9 | 147.0 | 147.1 | 147.1 | 147.5 | 147.8 | 148.2 | 147.1 | 7.1 | 0.6 |
| 160 | 169.0 | 169.1 | 170.1 | 170.3 | 170.3 | 170.7 | 171.0 | 171.0 | 171.0 | 171.2 | 170.4 | 10.4 | 0.6 |
| Average | | | | | | | | | | | | 7.8 | 0.6 |

| Actual Distance (cm) | Estimate 1 (cm) | Estimate 2 (cm) | Estimate 3 (cm) | Estimate 4 (cm) | Estimate 5 (cm) | Estimate 6 (cm) | Estimate 7 (cm) | Estimate 8 (cm) | Estimate 9 (cm) | Estimate 10 (cm) | Mean Estimated Distance (cm) | Error (cm) | Average Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 80.2 | 80.2 | 80.2 | 80.3 | 80.3 | 80.5 | 80.4 | 80.5 | 80.9 | 81.0 | 80.4 | 0.4 | 0.6 |
| 100 | 100.7 | 99.0 | 98.2 | 98.1 | 97.9 | 97.7 | 97.3 | 96.8 | 95.7 | 95.7 | 97.7 | 2.3 | 0.6 |
| 120 | 135.0 | 130.3 | 136.3 | 129.1 | 131.4 | 131.7 | 129.9 | 134.4 | 132.6 | 127.5 | 131.8 | 11.8 | 0.6 |
| 140 | 147.1 | 147.4 | 147.4 | 147.5 | 147.8 | 150.6 | 150.4 | 149.7 | 149.2 | 149.1 | 148.6 | 8.6 | 0.6 |
| 160 | 161.5 | 161.9 | 162.6 | 164.2 | 164.2 | 164.9 | 164.9 | 165.9 | 165.7 | 165.0 | 164.1 | 4.1 | 0.6 |
| Average | | | | | | | | | | | | 5.4 | 0.6 |

| Actual Distance (cm) | Estimate 1 (cm) | Estimate 2 (cm) | Estimate 3 (cm) | Estimate 4 (cm) | Estimate 5 (cm) | Estimate 6 (cm) | Estimate 7 (cm) | Estimate 8 (cm) | Estimate 9 (cm) | Estimate 10 (cm) | Mean Estimated Distance (cm) | Error (cm) | Average Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 81.0 | 81.0 | 81.1 | 81.1 | 81.1 | 81.2 | 81.2 | 81.3 | 81.3 | 81.4 | 81.2 | 1.2 | 0.6 |
| 100 | 99.9 | 100.4 | 99.3 | 98.9 | 98.8 | 98.5 | 98.5 | 98.2 | 98.1 | 98.0 | 98.9 | 1.1 | 0.6 |
| 120 | 116.5 | 116.3 | 116.2 | 116.0 | 115.4 | 114.8 | 114.3 | 114.2 | 113.8 | 113.8 | 115.1 | 4.9 | 0.6 |
| 140 | 130.0 | 129.3 | 129.2 | 129.1 | 128.4 | 127.6 | 127.5 | 127.3 | 127.2 | 127.2 | 128.3 | 11.7 | 0.6 |
| 160 | 157.1 | 157.0 | 156.5 | 156.1 | 155.6 | 155.1 | 154.5 | 154.0 | 154.0 | 153.8 | 155.4 | 4.6 | 0.6 |
| Average | | | | | | | | | | | | 4.7 | 0.6 |

| Actual Distance (cm) | Estimate 1 (cm) | Estimate 2 (cm) | Estimate 3 (cm) | Estimate 4 (cm) | Estimate 5 (cm) | Estimate 6 (cm) | Estimate 7 (cm) | Estimate 8 (cm) | Estimate 9 (cm) | Estimate 10 (cm) | Mean Estimated Distance (cm) | Error (cm) | Average Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 80.4 | 80.4 | 80.5 | 80.5 | 80.5 | 80.8 | 80.8 | 80.8 | 80.8 | 80.9 | 80.6 | 0.6 | 0.6 |
| 100 | 100.2 | 100.2 | 100.3 | 99.6 | 99.6 | 100.4 | 100.5 | 100.6 | 100.8 | 100.9 | 100.3 | 0.3 | 0.6 |
| 120 | 117.5 | 116.6 | 116.5 | 116.3 | 116.0 | 115.9 | 115.8 | 115.8 | 115.7 | 115.7 | 116.2 | 3.8 | 0.6 |
| 140 | 136.1 | 136.1 | 135.6 | 135.2 | 135.1 | 135.0 | 134.9 | 134.7 | 134.2 | 133.6 | 135.1 | 4.9 | 0.6 |
| 160 | 157.1 | 156.5 | 156.4 | 156.0 | 155.8 | 155.6 | 155.4 | 155.2 | 155.1 | 154.5 | 155.7 | 4.2 | 0.6 |
| Average | | | | | | | | | | | | 2.8 | 0.6 |

| Actual Distance (cm) | Estimate 1 (cm) | Estimate 2 (cm) | Estimate 3 (cm) | Estimate 4 (cm) | Estimate 5 (cm) | Estimate 6 (cm) | Estimate 7 (cm) | Estimate 8 (cm) | Estimate 9 (cm) | Estimate 10 (cm) | Mean Estimated Distance (cm) | Error (cm) | Average Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 79.9 | 80.2 | 80.7 | 80.8 | 80.8 | 80.9 | 81.4 | 81.5 | 81.5 | 81.6 | 80.9 | 0.9 | 0.6 |
| 100 | 101.0 | 98.9 | 102.3 | 96.7 | 96.5 | 96.4 | 96.4 | 96.3 | 96.2 | 96.0 | 97.7 | 2.3 | 0.6 |
| 120 | 117.8 | 114.9 | 114.0 | 113.3 | 112.9 | 111.9 | 111.8 | 111.5 | 111.2 | 111.1 | 113.0 | 7.0 | 0.6 |
| 140 | 140.0 | 138.6 | 136.7 | 136.6 | 136.6 | 136.2 | 136.0 | 135.7 | 135.7 | 135.0 | 136.7 | 3.3 | 0.6 |
| 160 | 155.5 | 155.4 | 155.2 | 154.7 | 154.0 | 153.7 | 153.3 | 153.2 | 152.7 | 152.6 | 154.0 | 6.0 | 0.6 |
| Average | | | | | | | | | | | | 3.9 | 0.6 |

| Actual Distance (cm) | Estimate 1 (cm) | Estimate 2 (cm) | Estimate 3 (cm) | Estimate 4 (cm) | Estimate 5 (cm) | Estimate 6 (cm) | Estimate 7 (cm) | Estimate 8 (cm) | Estimate 9 (cm) | Estimate 10 (cm) | Mean Estimated Distance (cm) | Error (cm) | Average Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 80.8 | 80.8 | 80.9 | 81.2 | 81.4 | 81.5 | 81.9 | 82.4 | 82.5 | 82.9 | 81.6 | 1.6 | 0.6 |
| 100 | 100.4 | 100.5 | 101.7 | 101.8 | 102.0 | 102.0 | 102.2 | 102.4 | 102.4 | 102.4 | 101.8 | 1.8 | 0.6 |
| 120 | 127.3 | 127.6 | 128.1 | 128.2 | 128.5 | 128.8 | 128.8 | 128.9 | 129.2 | 129.2 | 128.5 | 8.5 | 0.6 |
| 140 | 136.8 | 136.5 | 136.5 | 136.5 | 136.4 | 136.3 | 136.3 | 136.0 | 136.0 | 135.9 | 136.3 | 3.7 | 0.6 |
| 160 | 161.8 | 161.9 | 161.9 | 162.0 | 157.6 | 162.8 | 165.9 | 166.9 | 169.4 | 149.9 | 162.0 | 2.0 | 0.6 |
| Average | | | | | | | | | | | | 3.5 | 0.6 |

| Actual Distance (cm) | Estimate 1 (cm) | Estimate 2 (cm) | Estimate 3 (cm) | Estimate 4 (cm) | Estimate 5 (cm) | Estimate 6 (cm) | Estimate 7 (cm) | Estimate 8 (cm) | Estimate 9 (cm) | Estimate 10 (cm) | Mean Estimated Distance (cm) | Error (cm) | Average Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 83.6 | 83.9 | 84.0 | 84.0 | 84.1 | 84.1 | 84.1 | 84.3 | 84.4 | 84.4 | 84.1 | 4.1 | 0.6 |
| 100 | 100.0 | 100.0 | 99.8 | 100.6 | 99.4 | 99.3 | 100.7 | 99.2 | 100.8 | 99.0 | 99.9 | 0.1 | 0.6 |
| 120 | 126.0 | 129.6 | 129.8 | 129.9 | 130.2 | 130.3 | 130.5 | 131.1 | 131.2 | 131.2 | 130.0 | 10.0 | 0.6 |
| 140 | 141.4 | 141.5 | 142.0 | 142.4 | 144.5 | 144.8 | 145.1 | 145.4 | 145.8 | 146.0 | 143.9 | 3.9 | 0.6 |
| 160 | 160.0 | 160.1 | 159.8 | 160.3 | 161.3 | 161.4 | 161.6 | 161.9 | 162.7 | 163.7 | 161.3 | 1.3 | 0.6 |
| Average | | | | | | | | | | | | 3.9 | 0.6 |

| Actual Distance (cm) | Estimate 1 (cm) | Estimate 2 (cm) | Estimate 3 (cm) | Estimate 4 (cm) | Estimate 5 (cm) | Estimate 6 (cm) | Estimate 7 (cm) | Estimate 8 (cm) | Estimate 9 (cm) | Estimate 10 (cm) | Mean Estimated Distance (cm) | Error (cm) | Average Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 80.1 | 79.8 | 79.6 | 79.4 | 79.4 | 80.7 | 79.2 | 78.9 | 78.6 | 78.5 | 79.4 | 0.6 | 0.6 |
| 100 | 97.8 | 103.9 | 94.8 | 105.6 | 92.7 | 92.7 | 92.5 | 92.5 | 92.3 | 92.2 | 95.7 | 4.3 | 0.6 |
| 120 | 127.0 | 127.0 | 127.0 | 127.1 | 127.4 | 127.7 | 128.3 | 129.0 | 129.1 | 129.3 | 127.9 | 7.9 | 0.6 |
| 140 | 140.4 | 141.1 | 141.8 | 141.9 | 142.0 | 142.1 | 142.2 | 142.2 | 142.4 | 144.3 | 142.0 | 2.0 | 0.6 |
| 160 | 157.8 | 157.2 | 157.0 | 155.3 | 154.5 | 154.4 | 151.9 | 151.2 | 150.9 | 150.3 | 154.0 | 6.0 | 0.6 |
| Average | | | | | | | | | | | | 4.1 | 0.6 |

| Actual Distance (cm) | Estimate 1 (cm) | Estimate 2 (cm) | Estimate 3 (cm) | Estimate 4 (cm) | Estimate 5 (cm) | Estimate 6 (cm) | Estimate 7 (cm) | Estimate 8 (cm) | Estimate 9 (cm) | Estimate 10 (cm) | Mean Estimated Distance (cm) | Error (cm) | Average Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 82.5 | 82.6 | 82.8 | 82.9 | 83.0 | 83.1 | 83.3 | 83.4 | 83.4 | 83.4 | 83.0 | 3.0 | 0.6 |
| 100 | 99.5 | 100.6 | 99.3 | 99.1 | 100.9 | 100.9 | 101.3 | 98.0 | 97.5 | 97.5 | 99.5 | 0.5 | 0.6 |
| 120 | 120.7 | 121.1 | 122.0 | 122.1 | 117.3 | 122.9 | 123.0 | 123.7 | 124.1 | 124.1 | 122.1 | 2.1 | 0.6 |
| 140 | 141.7 | 142.0 | 142.2 | 142.3 | 142.4 | 142.9 | 143.8 | 143.9 | 144.1 | 144.2 | 142.9 | 2.9 | 0.6 |
| 160 | 159.9 | 160.1 | 159.7 | 160.6 | 160.6 | 160.7 | 161.4 | 161.6 | 162.1 | 162.4 | 160.9 | 0.9 | 0.6 |
| Average | | | | | | | | | | | | 1.9 | 0.6 |

| Model | Method | Mean Error (cm) | FPS | Avg CPU% | Peak CPU% | Peak Memory MB |

|---|---|---|---|---|---|---|

| YOLOv5s | simple midpoint | 38.89 | 0.92 | 78.84 | 82.30 | 965.67 |

| YOLOv5s | monocular depth networks | 48.13 | 0.47 | 79.45 | 82.60 | 965.67 |

| YOLOv5s | weighted center | 4.26 | 0.91 | 78.83 | 82.20 | 965.54 |

| YOLOv5x | simple midpoint | 37.66 | 0.15 | 90.74 | 95.10 | 1011.39 |

| YOLOv5x | monocular depth networks | 49.25 | 0.13 | 89.16 | 95.00 | 1116.46 |

| YOLOv5x | weighted center | 4.25 | 0.15 | 90.57 | 95.00 | 1069.09 |

| YOLOv8-nano | simple midpoint | 38.81 | 1.8 | 71.19 | 74.80 | 492.40 |

| YOLOv8-nano | monocular depth networks | 47.75 | 0.62 | 76.83 | 79.30 | 647.01 |

| YOLOv8-nano (Ours) | weighted center | 4.22 | 1.72 | 67.32 | 74.50 | 483.38 |

| Experimental Setup | Obstacle Detection (mAP@50) | Pathway Surface Detection (mAP@50) | Mean Obstacle Distance Error (cm) | Mean Transition Distance Error (cm) |

|---|---|---|---|---|

| Camera 1 + Camera 2 + Ultrasonic | 0.70 | 0.92 | 0.30 | 4.22 |

| Camera 1 + Camera 2 | - | 0.92 | - | 4.22 |

| Camera 1 + Ultrasonic | - | 0.92 | 0.30 | 4.22 |

| Camera 2 + Ultrasonic | 0.70 | - | 0.30 | - |

| Camera 1 | - | 0.92 | - | 4.22 |

| Camera 2 | - | - | - | - |

| Ultrasonic | - | - | 0.30 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mungdee, T.; Ramsiri, P.; Khabuankla, K.; Khambun, P.; Nupim, T.; Chophuk, P. Low-Cost Smart Cane for Visually Impaired People with Pathway Surface Detection and Distance Estimation Using Weighted Bounding Boxes and Depth Mapping. Information 2025, 16, 707. https://doi.org/10.3390/info16080707
