TSRACE-AI: Traffic Sign Recognition Accelerated with Co-Designed Edge AI Based on Hybrid FPGA Architecture for ADAS

Abstract

1. Introduction

2. Related Work

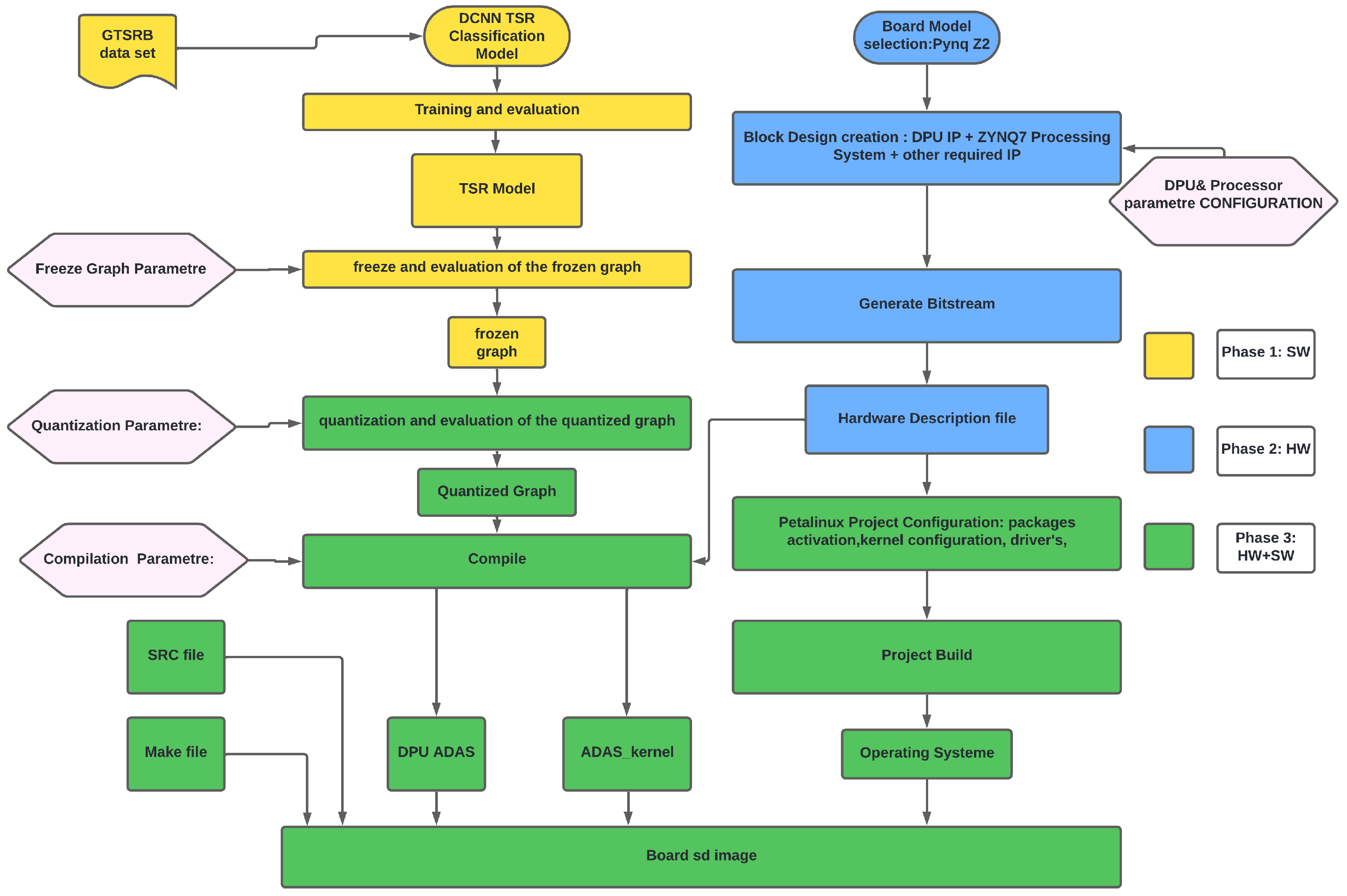

3. Design Approach

3.1. Phase 1: Software Preprocessing and Model Training

- Model Adjustment: The neural network architecture was tailored to align with the constraints and capabilities of the DNNDK framework, ensuring compatibility with the DPU for hardware acceleration. This involved optimizing the model’s structure to minimize computational overhead and enhance execution efficiency. Various data augmentation techniques, including random rotations, scaling, and flipping, were applied to improve the model’s robustness. These augmentations enabled the model to generalize effectively, preparing it for real-world conditions.

- Preprocessing: Extensive preprocessing was conducted to standardize the input data. The GTSRB dataset, containing traffic sign images of varying sizes and lighting conditions, was resized to uniform dimensions of 32 × 32 × 3. Pixel values were normalized to the range [0, 1], facilitating faster convergence during training. These preprocessing steps ensured uniformity and enhanced the model’s ability to handle data efficiently in both software and hardware environments.

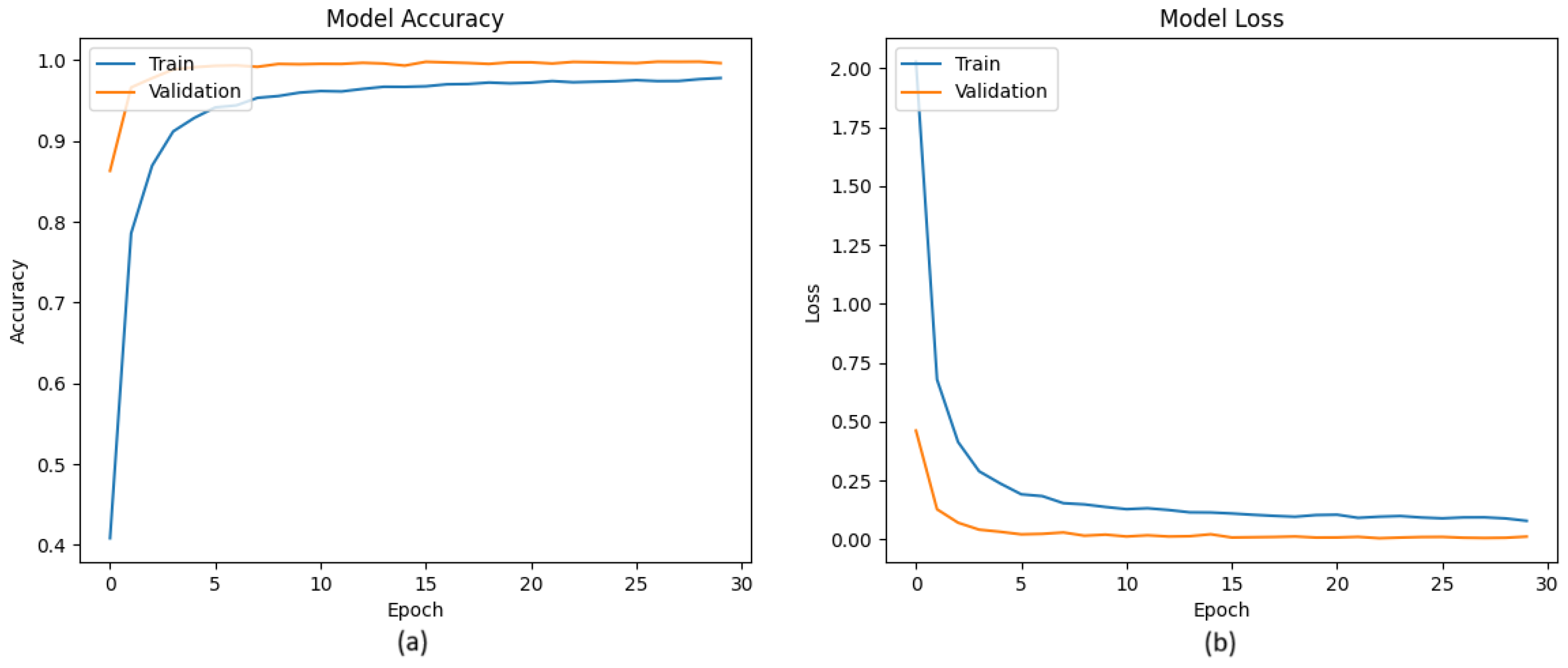

- Network Training: The adjusted model was trained using the preprocessed GTSRB dataset. Key performance indicators such as accuracy, precision, recall, and loss were closely monitored to guide model fine-tuning. Learning rate scheduling, the Adam optimizer, and dropout were employed to accelerate convergence and mitigate overfitting. The training process involved multiple iterative experiments to achieve optimal performance while maintaining compatibility with the DNNDK environment.

- Evaluation: The trained model was rigorously evaluated across various metrics, including accuracy, precision, recall, and F1-score, to confirm its suitability for real-time traffic sign recognition. Inference latency tests were also conducted within the DNNDK framework to assess the model’s efficiency when deployed on FPGA hardware. The design emphasized balancing computational efficiency and accuracy, ensuring the model’s readiness for deployment in resource-constrained environments such as mobile devices and automotive applications.
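
The preprocessing step described above (resizing to 32 × 32 × 3 and scaling pixel values) can be illustrated with a minimal NumPy sketch. The nearest-neighbour resize and the function names `resize_nearest` and `preprocess` are assumptions made for illustration; the paper does not state which resizing routine was used.

```python
import numpy as np

def resize_nearest(image: np.ndarray, size: int = 32) -> np.ndarray:
    """Nearest-neighbour resize of an H x W x 3 image to size x size x 3.

    Assumption: the interpolation method is illustrative only.
    """
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size   # source row for each output row
    cols = np.arange(size) * w // size   # source column for each output column
    return image[rows[:, None], cols[None, :]]

def preprocess(image: np.ndarray) -> np.ndarray:
    """Resize to 32 x 32 x 3 and scale 8-bit pixel values to [0, 1]."""
    resized = resize_nearest(image, 32)
    return resized.astype(np.float32) / 255.0

# Synthetic stand-in for a GTSRB image of arbitrary size.
rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(47, 53, 3), dtype=np.uint8)
x = preprocess(raw)
print(x.shape, x.dtype)
```

The output tensor has the same shape as the network input listed in the layer table, so it could be passed to either the floating-point or the quantized model.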

3.1.1. Model Architecture and Training

Model Architecture

Explanation of Layers

Model Training Process

- Preprocessing: All images were resized to 32 × 32 pixels, normalized to the range [0, 1], and augmented with techniques such as horizontal flipping, rotation, zooming, random shifts, and brightness adjustments. These techniques improved the model’s generalization ability and helped prevent overfitting by exposing it to a more diverse set of input conditions. Data preprocessing and augmentation were implemented during training using the ImageDataGenerator pipeline in Keras.

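- Loss Function: The model’s predictions were evaluated using the categorical cross-entropy loss function, defined as follows:

  $$L = -\sum_{i} y_i \log(\hat{y}_i)$$

  where the sum runs over the classes, $y_i$ denotes the true label, and $\hat{y}_i$ represents the predicted probability for the corresponding class.

- Optimization: The Adam optimizer was utilized to minimize the loss function with an initial learning rate of 0.001, which was linearly decayed to 0.00005 over the 30 training epochs. This algorithm adaptively adjusts the learning rates for individual parameters by computing moving averages of the gradients and their squared values, thereby accelerating convergence. The update rules are given as follows [29]:

  $$m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t$$

  $$v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2$$

  $$\hat{m}_t = \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t}$$

  $$\theta_t = \theta_{t-1} - \eta\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}$$

  where $g_t$ represents the gradient at time step $t$, and $m_t$ and $v_t$ denote the first and second moment estimates, respectively; $\hat{m}_t$ and $\hat{v}_t$ are their bias-corrected forms, $\eta$ is the learning rate, and $\epsilon$ is a small constant for numerical stability. The hyperparameters $\beta_1$ and $\beta_2$, which control the exponential moving averages of the gradient and its square, were fixed at their default values of 0.9 and 0.999 throughout the training process.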
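
As a rough illustration of the loss and optimizer described above, the following NumPy sketch implements categorical cross-entropy, a standard Adam step, and a linear learning-rate decay from 0.001 to 0.00005 over 30 epochs. It is not the authors' training code: the function names, the per-epoch granularity of the decay, the value `eps=1e-8`, and the toy objective are all assumptions.

```python
import numpy as np

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    return float(-np.sum(y_true * np.log(np.clip(y_pred, eps, 1.0))))

def linear_lr(epoch, total_epochs=30, lr_start=1e-3, lr_end=5e-5):
    """Linear decay from lr_start (epoch 0) to lr_end (last epoch).

    Assumption: the decay is applied once per epoch.
    """
    frac = epoch / (total_epochs - 1)
    return lr_start + frac * (lr_end - lr_start)

def adam_step(theta, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One standard Adam update; t is the 1-based step index."""
    m = beta1 * m + (1 - beta1) * grad        # first moment estimate
    v = beta2 * v + (1 - beta2) * grad ** 2   # second moment estimate
    m_hat = m / (1 - beta1 ** t)              # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v

# Toy objective f(theta) = theta**2, used only to exercise the update.
theta, m, v = np.array([1.0]), np.zeros(1), np.zeros(1)
for epoch in range(30):
    grad = 2.0 * theta
    theta, m, v = adam_step(theta, grad, m, v, t=epoch + 1, lr=linear_lr(epoch))

loss = categorical_cross_entropy(np.array([0.0, 1.0, 0.0]),
                                 np.array([0.1, 0.8, 0.1]))
print(theta, loss)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly one learning rate; this is why the initial rate of 0.001 directly bounds the early step size.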

3.1.2. Evaluation and Results

Model Accuracy and Loss

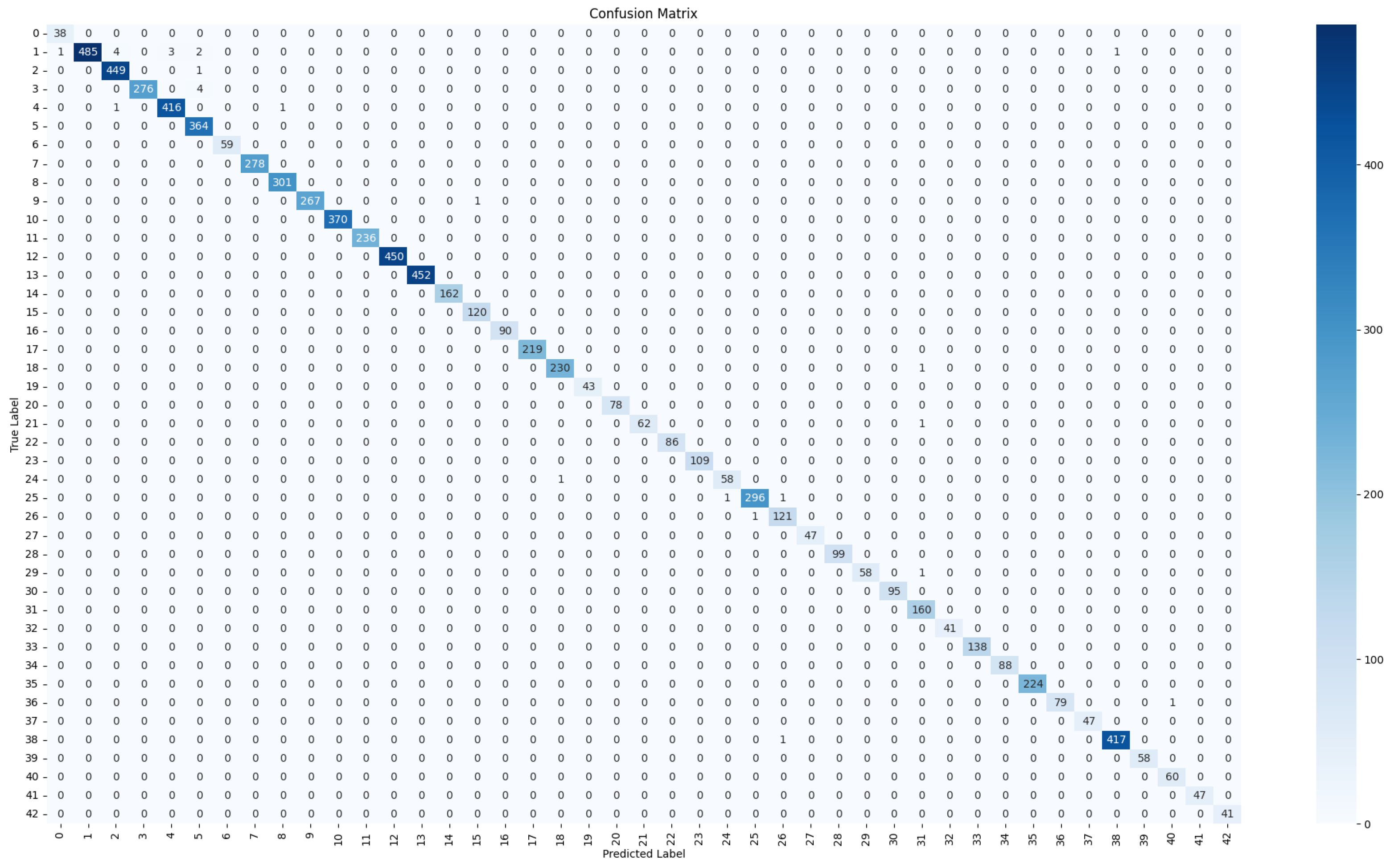

Confusion Matrix

- High Accuracy: The model exhibits strong classification performance across most classes, with very few misclassifications.

- Misclassification Patterns: Errors, though rare, primarily occur between visually similar traffic signs, highlighting areas for potential refinement.

- Diagonal Dominance: The high concentration along the diagonal reflects a significant proportion of correct predictions, confirming the robustness of the training process.

Evaluation Metrics

- TP (True Positives): The number of correctly predicted samples for a given class, represented by the value on the main diagonal of the confusion matrix in Figure 3.

- FP (False Positives): For a given class, these are the samples incorrectly predicted as belonging to that class. In the confusion matrix, FP for a specific class is the sum of the corresponding column values excluding the diagonal element.

- FN (False Negatives): For a given class, these are the samples from that class that were incorrectly predicted as another class. In the confusion matrix, FN for a specific class is the sum of the corresponding row values excluding the diagonal element.

- TP = 485 (main diagonal value at row 0, column 0)

- FP = sum of all values in column 0 except row 0 (e.g., 0 from row 1, 0 from row 2, etc.)

- FN = sum of all values in row 0 except column 0 (e.g., 3 from column 1, 0 from column 2, etc.)
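
The TP/FP/FN definitions above translate directly into array operations on the confusion matrix (rows as true classes, columns as predicted classes). The sketch below uses a small hypothetical 3-class matrix, not the paper's 43-class results; only the first row mirrors the class-0 example (485 correct, 3 assigned to class 1), and the function name `per_class_metrics` is an assumption.

```python
import numpy as np

def per_class_metrics(cm):
    """Per-class TP, FP, FN, precision, recall and F1 from a confusion matrix.

    Rows are true classes and columns are predicted classes.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # column sum without the diagonal element
    fn = cm.sum(axis=1) - tp   # row sum without the diagonal element
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, tp + fp, out=zeros.copy(), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=zeros.copy(), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return tp, fp, fn, precision, recall, f1

# Hypothetical toy matrix for illustration only.
cm = [[485, 3, 0],
      [0, 200, 2],
      [0, 1, 150]]
tp, fp, fn, precision, recall, f1 = per_class_metrics(cm)
print(fp, fn, precision.round(4), recall.round(4), f1.round(4))
```

Averaging the per-class values (macro averaging) gives a single score that weights every sign class equally, which matters when class frequencies are imbalanced.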

Misclassification Analysis

3.1.3. Software Phase Outcome

3.2. Phase 2: Hardware Process

3.2.1. Overview of Zynq Architecture

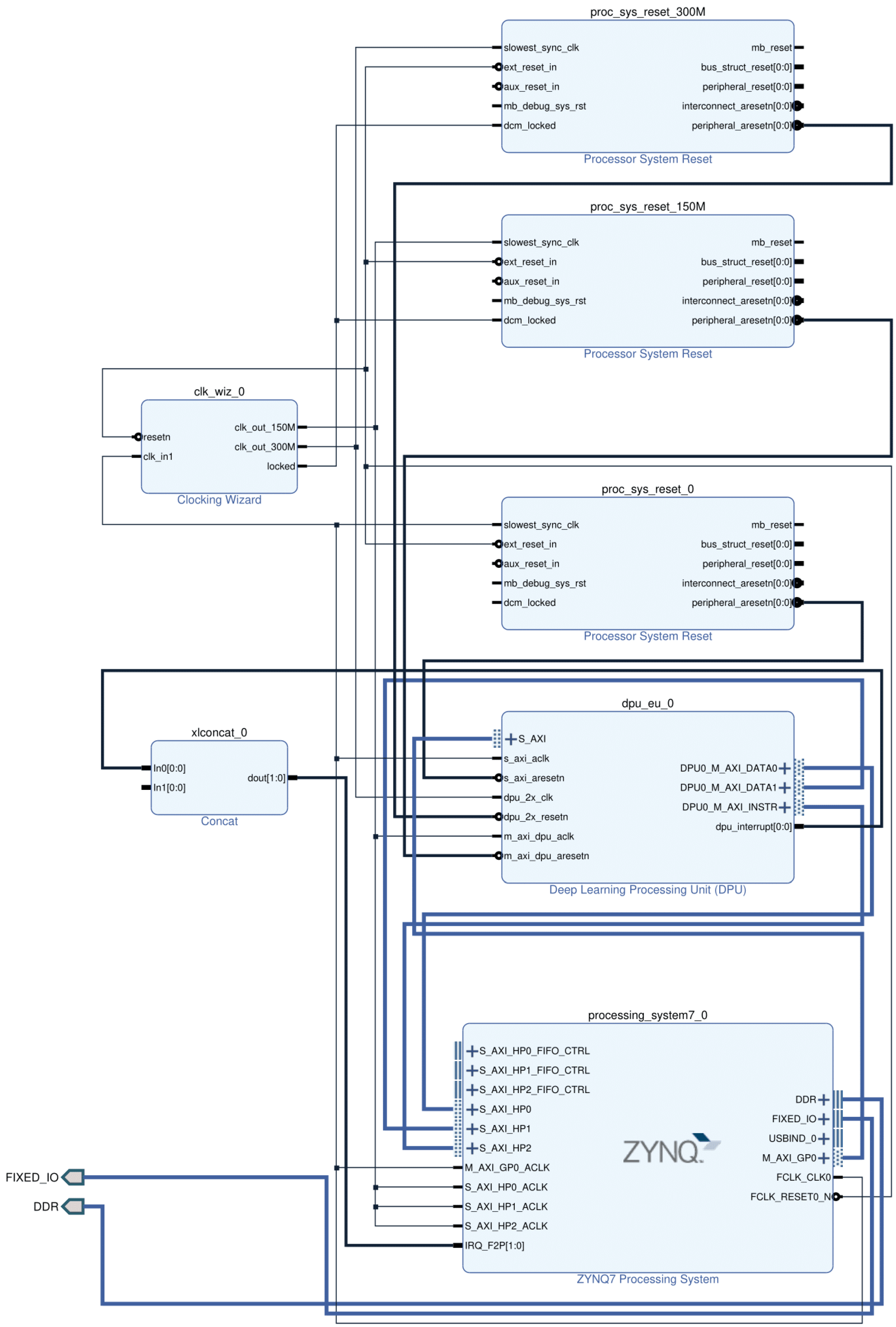

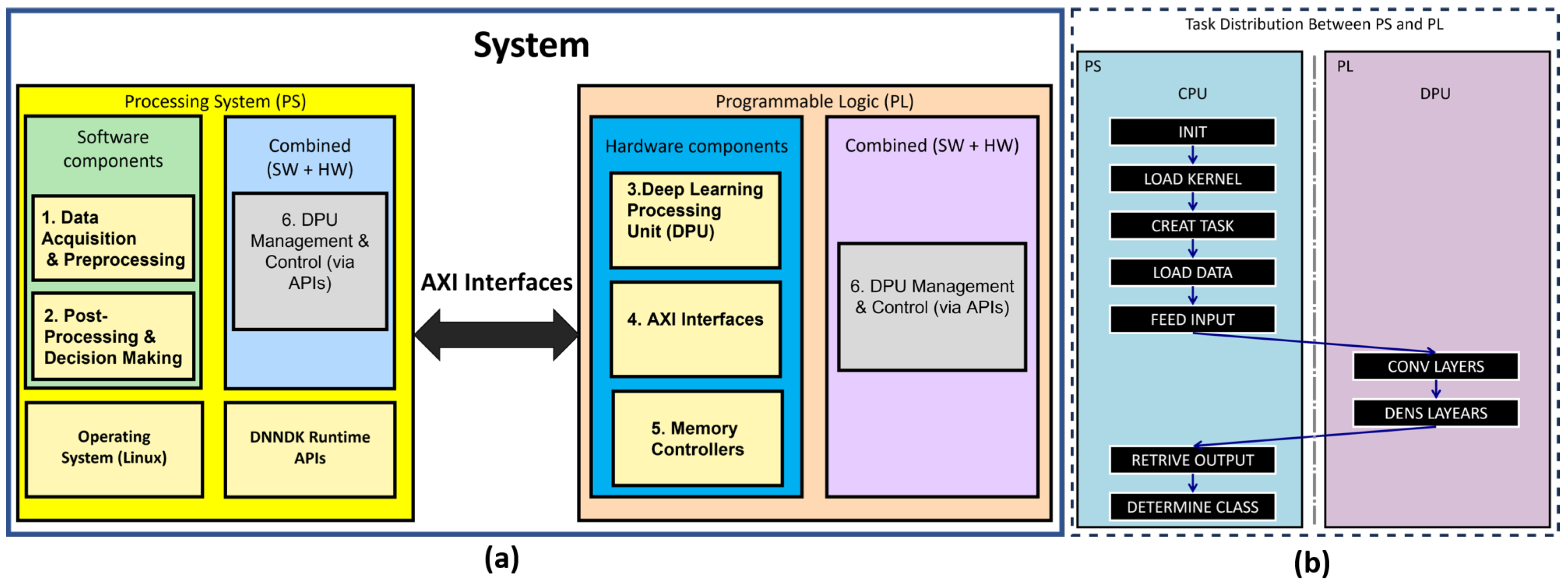

- Hardware–Software Co-Design: The Zynq SoC facilitates a seamless co-design approach, enabling software and hardware to work in tandem. The ARM cores handle control flow and lighter computational tasks, while the FPGA’s programmable logic accelerates compute-intensive operations like matrix multiplications and convolutions.

- Efficient Resource Utilization: By using both the processing system (PS) and programmable logic (PL), the Zynq platform maximizes resource efficiency, significantly enhancing performance while maintaining design flexibility.

- Low Power and High Performance: The PL is optimized for power-efficient deep learning computations, making it ideal for resource-constrained applications, including Advanced Driver Assistance Systems (ADASs) and Edge AI solutions.

3.2.2. DPU and DNNDK Integration

3.2.3. Hardware Design Workflow

- Clean startup behavior across all clock regions,

- Safe transition of control and data signals between PS and PL,

- Reliable handshaking over AXI interfaces (M_AXI_INSTR, M_AXI_DATA0, M_AXI_DATA1),

- Glitch-free operation, even during partial reconfiguration or low-power modes.

3.2.4. Hardware Phase Outcome

3.3. Phase 3: HW/SW Co-Design

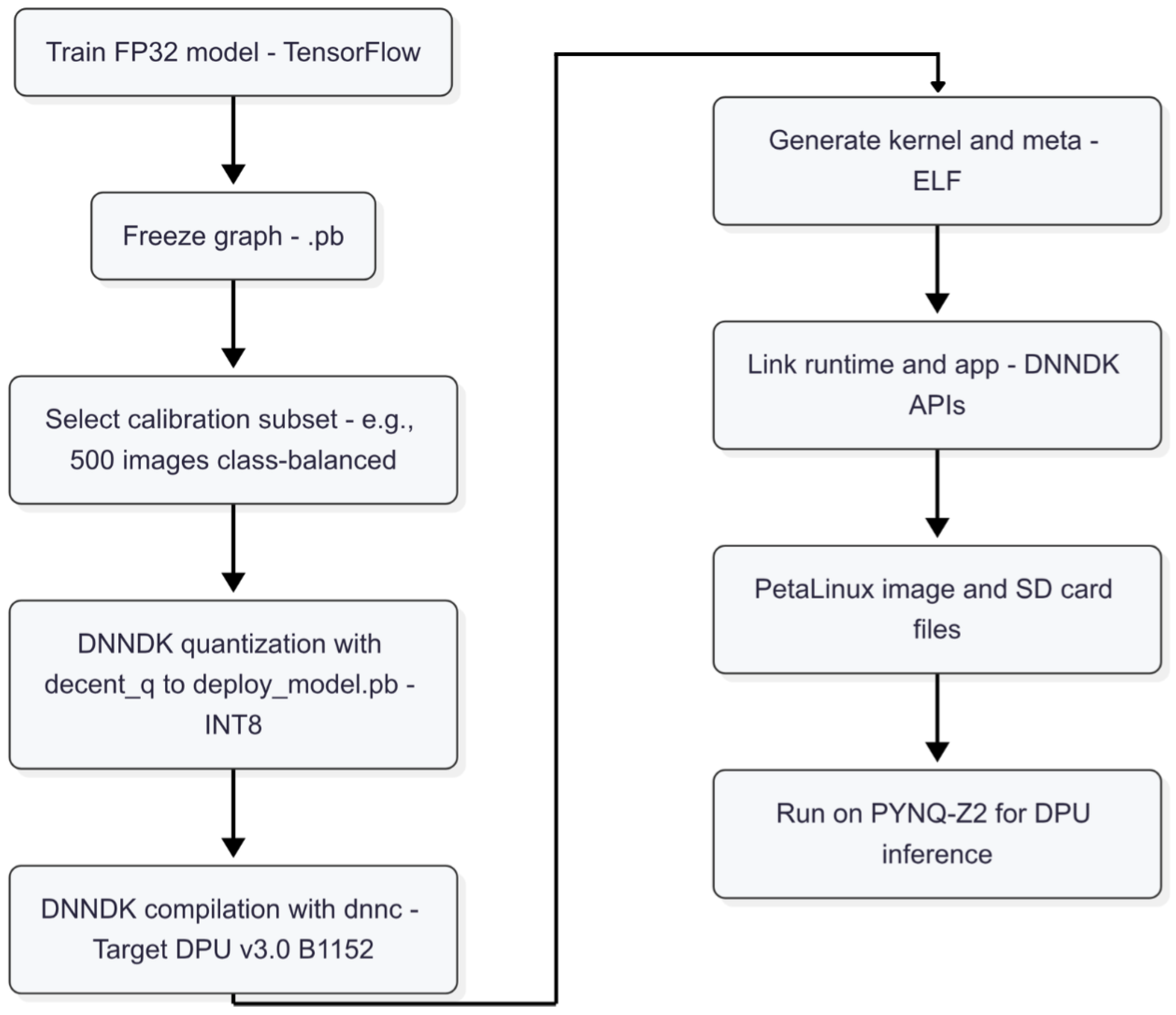

3.3.1. Quantization and Compilation

- Model Adjustment: The network architecture was adapted to ensure compatibility with the DPU, addressing unsupported layers and optimizing for the 8-bit fixed-point format. Adjustments were made to ensure that all layers and operations complied with the DNNDK’s supported layer types, and that quantization could be applied effectively across the model.

- Calibration: A subset of 500 images from the training dataset was used to calibrate the quantized model. These images were randomly and uniformly sampled across all 43 classes to ensure balanced representation of traffic sign types. The calibration process was conducted using the DNNDK quantization tool (decent_q), which collected statistical activation data (min, max, histogram) during forward passes through the floating-point model. This enabled the computation of appropriate quantization parameters such as scale and zero-point, ensuring minimal performance degradation compared to the original floating-point version.
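
To make the calibration idea concrete, the sketch below derives a scale and zero-point from the min/max of activations observed on a calibration set and applies 8-bit affine quantization. It is a generic illustration and does not reproduce the internal algorithm of decent_q; the function names and the synthetic activations are assumptions.

```python
import numpy as np

def calibrate_min_max(batches):
    """Collect the global min and max over all calibration batches."""
    lo = min(float(b.min()) for b in batches)
    hi = max(float(b.max()) for b in batches)
    return lo, hi

def affine_params(lo, hi, qmin=-128, qmax=127):
    """Scale and zero-point mapping [lo, hi] onto the int8 range."""
    lo, hi = min(lo, 0.0), max(hi, 0.0)   # keep 0.0 exactly representable
    scale = (hi - lo) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0                       # degenerate all-zero tensor
    zero_point = int(round(qmin - lo / scale))
    return scale, max(qmin, min(qmax, zero_point))

def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    q = np.round(x / scale) + zero_point
    return np.clip(q, qmin, qmax).astype(np.int8)

def dequantize(q, scale, zero_point):
    return (q.astype(np.float32) - zero_point) * scale

# Synthetic activations standing in for 500 calibration samples.
rng = np.random.default_rng(0)
calib = [rng.normal(0.0, 1.0, size=(50, 16)) for _ in range(10)]
lo, hi = calibrate_min_max(calib)
scale, zp = affine_params(lo, hi)
x = calib[0]
q = quantize(x, scale, zp)
err = float(np.abs(dequantize(q, scale, zp) - x).max())
print(scale, zp, err)
```

For values inside the calibrated range the round-trip error stays within about half a quantization step, which is why a representative calibration subset limits the accuracy loss relative to the floating-point model.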

3.3.2. Software Design, System Partitioning, and Scheduling

- DPU Execution: The DPU handled compute-intensive operations such as convolutional and fully connected layers, forming the core of the TSR system.

- CPU Execution: The CPU managed preprocessing and post-processing tasks, including handling layers incompatible with the DPU (e.g., custom pooling).

- System Partitioning: CPU-DPU communication was optimized using high-speed AXI interfaces, ensuring efficient data transfers with minimal latency.

- Task Scheduling: Parallel execution was employed, with the DPU processing one batch while the CPU prepared the next, creating a pipeline effect to maximize throughput.
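
The overlap between CPU-side preparation and accelerator execution described above can be sketched with a bounded producer/consumer queue. The functions `cpu_prepare` and `dpu_run` are hypothetical stand-ins and are not DNNDK runtime calls; the buffer depth of 2 is likewise an assumption.

```python
import queue
import threading

def cpu_prepare(batch_id):
    """Stand-in for CPU-side preprocessing (hypothetical)."""
    return [batch_id * 10 + i for i in range(4)]

def dpu_run(batch):
    """Stand-in for the accelerator call (hypothetical, not a DNNDK API)."""
    return sum(batch)

def run_pipeline(num_batches, depth=2):
    """Prepare batch k+1 on a worker thread while batch k is being processed."""
    ready = queue.Queue(maxsize=depth)
    results = []

    def producer():
        for b in range(num_batches):
            ready.put(cpu_prepare(b))   # blocks while the buffer is full
        ready.put(None)                 # sentinel: no more batches

    worker = threading.Thread(target=producer)
    worker.start()
    while True:
        batch = ready.get()
        if batch is None:
            break
        results.append(dpu_run(batch))
    worker.join()
    return results

results = run_pipeline(3)
print(results)
```

The bounded queue provides back-pressure: the CPU never runs more than `depth` batches ahead, which keeps buffer memory fixed while hiding preparation time behind accelerator time.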

3.3.3. System Integration and Deployment

- DPU Configuration: Parameters such as clock frequency, architecture, RAM usage, and memory mappings were optimized to ensure efficient execution and data handling.

- PetaLinux Customization: The embedded Linux environment on the PYNQ-Z2 board was tailored to support the DPU, including custom kernel drivers, device tree modifications, and runtime APIs provided by the DNNDK framework.

- Boot Image Generation: The complete system, including the quantized CNN model, compiled DPU bitstream, and Linux environment, was packaged into a bootable SD image and deployed on the PYNQ-Z2 platform for functional testing and validation.

3.3.4. System Architecture and Task Allocation

3.3.5. HW/SW Co-Design Outcome

4. Experiment and Results

4.1. Resource Utilization

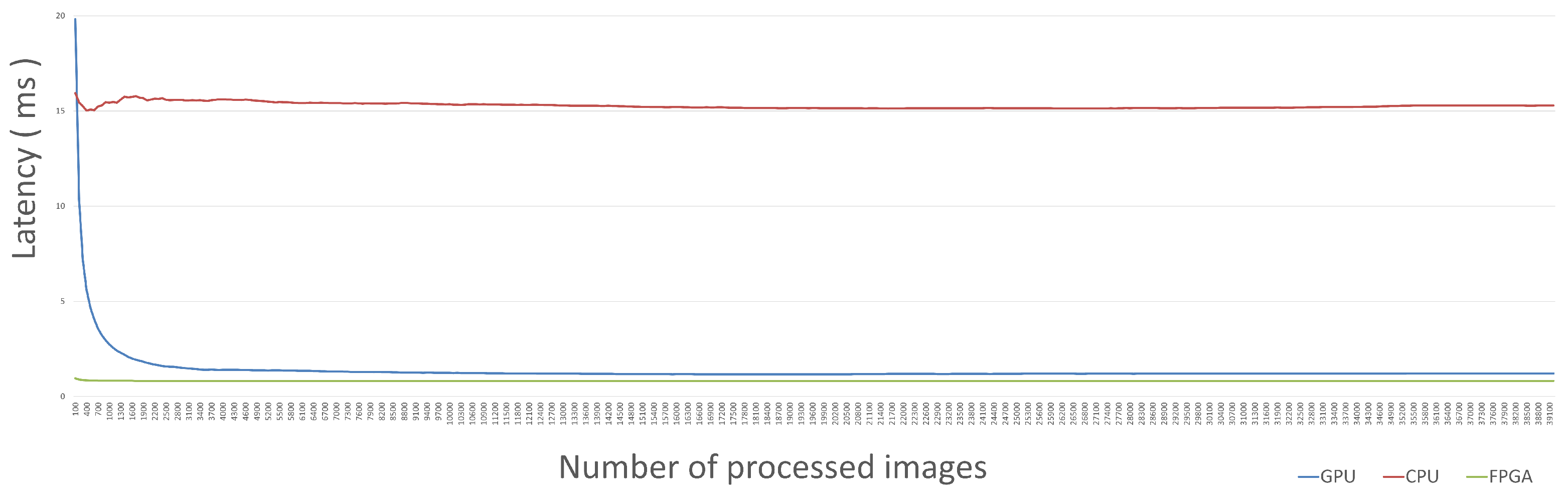

4.2. Latency Analysis

- Input Latency: As shown in Figure 9, the FPGA demonstrates consistently low input latency, averaging around 0.3 ms across all processed images. This stability is crucial for real-time systems, allowing for predictable response times. In contrast, the CPU and GPU exhibit higher and more variable latencies due to their general-purpose architectures and data handling pipelines.

4.3. Throughput and Real-Time Feasibility

4.4. Power Efficiency

4.5. Comparative Analysis with Related Work

4.5.1. Device and Resource Utilization

4.5.2. Frequency and Network

4.5.3. Throughput and Latency

- Tatar and Bayar [21] achieve 25 GOPS throughput with a latency of 39.37 ms. Measured against TSRACE-AI (205.39 GOPS, 1.17 ms), TSRACE-AI is therefore over 33× faster in latency and about 8× higher in throughput.

- Kim et al. [20] report 0.96 GOPS throughput with a latency of 25 ms, optimized for low power rather than speed. TSRACE-AI outperforms this by over 21× in latency and approximately 214× in throughput.

4.5.4. Power Efficiency

- BNN-PYNQ and H2PIPE show stronger GOPS/W on larger or specialized devices at higher operating frequencies and with greater resource budgets.

- TSRACE-AI presents a practical trade-off on DDR-based, low-power hardware: it sacrifices a small efficiency margin to achieve a 98.85% reduction in latency and a ∼3× gain in throughput versus [10] while providing a plug-in path to standard baselines (VGG-16, ResNet-20) under identical deployment constraints.

4.5.5. Framework Rationale and Unique Value

- Plug-in flexibility on a fixed overlay. The same PYNQ-Z2 DPU (INT8, 150/300 MHz) runs multiple networks without regenerating hardware. Swapping among VGG-16, ResNet-20, and TSRACE-AI exposes accuracy/latency trade-offs under identical platform settings.

- Deterministic, real-time response at ≤5 W. TSRACE-AI delivers 1.17 ms per decision (ResNet-20: 0.40 ms; VGG-16: 2.78 ms) within a 4.3 W budget, meeting strict ADAS latency targets on a low-cost board.

- Edge-centric memory assumptions. Our design is tuned for DDR bandwidths typical of embedded platforms; it avoids reliance on HBM2 or oversized devices while still achieving 205.39 GOPS sustained throughput.

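- Transparent, comparable reporting. By separating hardware constants (board/overlay/clock/precision) from model complexity C (GOPs/inf) and using the identities latency $= C/\text{throughput}$, energy per inference $= \text{power} \times \text{latency}$, and power efficiency $= \text{throughput}/\text{power}$, we provide reproducible, apples-to-apples comparisons across plug-in models on the same platform.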

- Practical time/energy trade-offs. Under identical settings, the energies per inference are 1.72 mJ (ResNet-20), 5.03 mJ (TSRACE-AI), and 11.95 mJ (VGG-16). These figures, together with millisecond-scale response (0.40 ms to 2.78 ms), reflect application-level utility beyond peak GOPS/W.
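
The reported latency and energy figures are consistent with three simple relations: latency is model complexity divided by sustained throughput, energy per inference is power times latency, and power efficiency is throughput divided by power. The short sketch below recomputes them from the values in the comparison table; the variable and function names are illustrative.

```python
POWER_W = 4.3              # board power from the comparison table
THROUGHPUT_GOPS = 205.39   # sustained throughput from the comparison table

# Model complexity (GOP per inference) and reported latency (ms) per model.
models = {
    "TSRACE-AI": {"gop": 0.241952, "latency_ms": 1.17},
    "VGG-16 (32x32)": {"gop": 0.5703, "latency_ms": 2.78},
    "ResNet-20 (32x32)": {"gop": 0.0816, "latency_ms": 0.40},
}

def latency_ms(gop, throughput_gops):
    """Latency in ms implied by complexity and sustained throughput."""
    return gop / throughput_gops * 1e3

def energy_mj(power_w, lat_ms):
    """Energy per inference in mJ (watts times milliseconds)."""
    return power_w * lat_ms

def efficiency_gops_per_w(throughput_gops, power_w):
    return throughput_gops / power_w

for name, m in models.items():
    implied = latency_ms(m["gop"], THROUGHPUT_GOPS)
    energy = energy_mj(POWER_W, m["latency_ms"])
    print(f"{name}: implied latency {implied:.3f} ms, "
          f"reported {m['latency_ms']:.2f} ms, energy {energy:.2f} mJ")

print(f"efficiency: {efficiency_gops_per_w(THROUGHPUT_GOPS, POWER_W):.2f} GOPS/W")
```

Because power and throughput are fixed by the overlay, the energy per inference scales linearly with model complexity, which is why ResNet-20 at 32 × 32 is the cheapest of the three per decision.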

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Levinson, J.; Askel, J.; Becker, J.; Dolson, J.; Held, D.; Kammel, S.; Kolter, J.Z.; Langer, D.; Pink, O.; Pratt, V.; et al. Towards fully autonomous driving: Systems and algorithms. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 163–168.

- Garikapati, D.; Shetiya, S.S. Autonomous vehicles: Evolution of artificial intelligence and the current industry landscape. Big Data Cogn. Comput. 2024, 8, 42.

- Bhatt, D.; Patel, C.; Talsania, H.; Patel, J.; Vaghela, R.; Pandya, S.; Modi, K.; Ghayvat, H. CNN variants for computer vision: History, architecture, application, challenges and future scope. Electronics 2021, 10, 2470.

- Cho, M.; Kim, Y. FPGA-based convolutional neural network accelerator with resource-optimized approximate multiply-accumulate unit. Electronics 2021, 10, 2859.

- Abbas, N.; Zhang, Y.; Taherkordi, A.; Skeie, T. Mobile edge computing: A survey. IEEE Internet Things J. 2017, 5, 450–465.

- Possa, P.; Schaillie, D.; Valderrama, C. FPGA-based hardware acceleration: A CPU/accelerator interface exploration. In Proceedings of the 2011 18th IEEE International Conference on Electronics, Circuits, and Systems, Beirut, Lebanon, 11–14 December 2011; pp. 374–377.

- García, G.J.; Jara, C.A.; Pomares, J.; Alabdo, A.; Poggi, L.M.; Torres, F. A survey on FPGA-based sensor systems: Towards intelligent and reconfigurable low-power sensors for computer vision, control and signal processing. Sensors 2014, 14, 6247–6278.

- Ghaffar, M.A.; Li, Z.; Chen, T.; Haider, S.A.; Pokharel, M.; Hanifi, S.; Subedi, N. A traffic sign recognition algorithm for ADAS based on CNN for complex scenarios. In Proceedings of the 2023 7th International Conference on Transportation Information and Safety (ICTIS), Xi’an, China, 4–6 August 2023; pp. 1760–1766.

- Triki, N.; Karray, M.; Ksantini, M. A real-time traffic sign recognition method using a new attention-based deep convolutional neural network for smart vehicles. Appl. Sci. 2023, 13, 4793.

- Gundrapally, A.; Shah, Y.A.; Alnatsheh, N.; Choi, K.K. A high-performance and ultra-low-power accelerator design for advanced deep learning algorithms on an FPGA. Electronics 2024, 13, 2676.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Krizhevsky, A.; Nair, V.; Hinton, G. CIFAR-10 (Canadian Institute for Advanced Research). 2010. Available online: https://academictorrents.com/details/463ba7ec7f37ed414c12fbb71ebf6431eada2d7a (accessed on 15 August 2025).

- Doumet, M.; Stan, M.; Hall, M.; Betz, V. H2PIPE: High Throughput CNN Inference on FPGAs with High-Bandwidth Memory. In Proceedings of the 2024 34th International Conference on Field-Programmable Logic and Applications (FPL), Torino, Italy, 2–6 September 2024; pp. 69–77.

- Gao, X.; Wu, B.; Li, P.; Jing, Z. 1D-CNN-Transformer for Radar Emitter Identification and Implemented on FPGA. Remote Sens. 2024, 16, 2962.

- Ajili, M.T.; Hara-Azumi, Y. Multimodal neural network acceleration on a hybrid CPU-FPGA architecture: A case study. IEEE Access 2022, 10, 9603–9617.

- Mansouri, A.; Elzaar, A.; Madani, M.; Bakir, T. Design and Hardware Implementation of CNN-GCN Model for Skeleton-Based Human Action Recognition. WSEAS Trans. Comput. Res. 2024, 12, 318–327.

- Wang, Y.; Liao, Y.; Yang, J.; Wang, H.; Zhao, Y.; Zhang, C.; Xiao, B.; Xu, F.; Gao, Y.; Xu, M. An FPGA-based online reconfigurable CNN edge computing device for object detection. Microelectron. J. 2023, 137, 105805.

- Yao, Y.; Duan, Q.; Zhang, Z.; Gao, J.; Wang, J.; Yang, M.; Tao, X.; Lai, J. An FPGA-based hardware accelerator for multiple convolutional neural networks. In Proceedings of the 2018 14th IEEE International Conference on Solid-State and Integrated Circuit Technology (ICSICT), Qingdao, China, 31 October–3 November 2018; pp. 1–3.

- Gschwend, D. Zynqnet: An FPGA-accelerated embedded convolutional neural network. arXiv 2020, arXiv:2005.06892.

- Kim, J.; Kang, J.-K.; Kim, Y. A low-cost fully integer-based CNN accelerator on FPGA for real-time traffic sign recognition. IEEE Access 2022, 10, 84626–84634.

- Tatar, G.; Bayar, S. Real-time multi-task ADAS implementation on reconfigurable heterogeneous MPSoC architecture. IEEE Access 2023, 11, 80741–80760.

- ResNet-50 PYNQ GitHub. Available online: https://github.com/Xilinx/ResNet50-PYNQ/blob/master/host/README.md (accessed on 6 July 2025).

- Nguyen, V.C.; Nakashima, Y. Implementation of fully-pipelined CNN inference accelerator on FPGA and HBM2 platform. IEICE Trans. Inf. Syst. 2023, 106, 1117–1129.

- Han, Y.; Oruklu, E. Real-time traffic sign recognition based on Zynq FPGA and ARM SoCs. In Proceedings of the IEEE International Conference on Electro/Information Technology, Milwaukee, WI, USA, 5–7 June 2014; pp. 373–376.

- DNNDK User Guide (UG1327) v1.6. Available online: https://docs.amd.com/v/u/en-US/ug1327-dnndk-user-guide (accessed on 14 October 2024).

- Maillard, P.; Chen, Y.P.; Vidmar, J.; Fraser, N.; Gambardella, G.; Sawant, M.; Voogel, M.L. Radiation-tolerant deep learning processor unit (DPU)-based platform using Xilinx 20-nm Kintex UltraScale FPGA. IEEE Trans. Nucl. Sci. 2022, 70, 714–721.

- Abdi, L.; Meddeb, A. Deep learning traffic sign detection, recognition and augmentation. In Proceedings of the Symposium on Applied Computing, Marrakech, Morocco, 4–6 April 2017; pp. 131–136.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 4th ed.; Pearson Education Limited: New York, NY, USA, 2018.

- Nwankpa, C.E. Advances in optimisation algorithms and techniques for deep learning. Adv. Sci. Technol. Eng. Syst. J. 2020, 5, 563–577.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Howard, A.G. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- DPUCZDX8G for Zynq UltraScale+ MPSoCs Product Guide (PG338). Available online: https://docs.amd.com/r/en-US/pg338-dpu/Introduction?tocId=3xsG16y_QFTWvAJKHbisEw (accessed on 7 August 2025).

- Venieris, S.I.; Fernandez-Marques, J.; Lane, N.D. Mitigating memory wall effects in CNN engines with on-the-fly weights generation. ACM Trans. Des. Autom. Electron. Syst. 2023, 28, 1–31.

- Dong, Q.; Xie, X.; Wang, Z. SWAT: An efficient Swin Transformer accelerator based on FPGA. In Proceedings of the Asia and South Pacific Design Automation Conference (ASP-DAC), Incheon, Republic of Korea, 22–25 January 2024; pp. 515–520.

- Huang, M.; Luo, J.; Ding, C.; Wei, Z.; Huang, S.; Yu, H. An integer-only and group-vector systolic accelerator for efficiently mapping vision transformer on edge. IEEE Trans. Circuits Syst. I Regul. Pap. 2023, 70, 5289–5301.

- Salameh, A.A.; Baharum, F. Adaptive VLSI design using dynamic voltage and frequency scaling (DVFS) for low-latency IoT communication networks. J. VLSI Circuits Syst. 2025, 7, 19–25.

- AMD. Zynq UltraScale+ MPSoC Data Sheet: Overview (DS891) (Rev. 1.11.1, March 18, 2025); AMD Inc.: Santa Clara, CA, USA, 2025; Available online: https://docs.amd.com/v/u/en-US/ds891-zynq-ultrascale-plus-overview (accessed on 10 August 2025).

| Layer | Input Shape | Output Shape | Number of Parameters | Operations (M) | GOPs |
|---|---|---|---|---|---|
| Input Layer | (None, 32, 32, 3) | (None, 32, 32, 3) | 0 | 0 | 0 |
| Conv2D (Layer 1) | (None, 32, 32, 3) | (None, 28, 28, 32) | 2432 | 56.63 | 0.0566 |
| Conv2D (Layer 2) | (None, 28, 28, 32) | (None, 24, 24, 32) | 25,632 | 113.66 | 0.1137 |
| MaxPooling2D | (None, 24, 24, 32) | (None, 12, 12, 32) | 0 | 0 | 0 |
| Dropout | (None, 12, 12, 32) | (None, 12, 12, 32) | 0 | 0 | 0 |
| Conv2D (Layer 3) | (None, 12, 12, 32) | (None, 10, 10, 64) | 18,496 | 33.12 | 0.0331 |
| Conv2D (Layer 4) | (None, 10, 10, 64) | (None, 8, 8, 64) | 36,928 | 37.99 | 0.0380 |
| MaxPooling2D | (None, 8, 8, 64) | (None, 4, 4, 64) | 0 | 0 | 0 |
| Dropout | (None, 4, 4, 64) | (None, 4, 4, 64) | 0 | 0 | 0 |
| Flatten | (None, 4, 4, 64) | (None, 1024) | 0 | 0.53 | 0.0005 |
| Dense (Layer 5) | (None, 1024) | (None, 256) | 262,400 | 0.53 | 0.0005 |
| Dropout | (None, 256) | (None, 256) | 0 | 0 | 0 |
| Dense (Output) | (None, 256) | (None, 43) | 11,051 | 0.022 | 0.00002 |
| Total | | | 356,939 | 242.48 | 0.2425 |
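
The parameter counts in the layer table can be cross-checked with the usual formulas for convolutional and dense layers (weights plus biases). The kernel sizes used below (5 × 5 for the first two convolutions, 3 × 3 for the last two) are not stated in the table; they are inferred from the input/output shapes under the assumption of unpadded, stride-1 convolutions, and they reproduce the listed counts.

```python
def conv2d_params(kh, kw, c_in, c_out):
    """Weights plus one bias per output channel."""
    return kh * kw * c_in * c_out + c_out

def dense_params(n_in, n_out):
    """Weights plus one bias per output unit."""
    return n_in * n_out + n_out

def valid_conv_out(size, k):
    """Output width/height of an unpadded, stride-1 convolution."""
    return size - k + 1

# Kernel sizes are inferred from the shapes in the table (assumption).
layers = [
    ("Conv2D (Layer 1)", conv2d_params(5, 5, 3, 32)),
    ("Conv2D (Layer 2)", conv2d_params(5, 5, 32, 32)),
    ("Conv2D (Layer 3)", conv2d_params(3, 3, 32, 64)),
    ("Conv2D (Layer 4)", conv2d_params(3, 3, 64, 64)),
    ("Dense (Layer 5)", dense_params(4 * 4 * 64, 256)),
    ("Dense (Output)", dense_params(256, 43)),
]

total = sum(count for _, count in layers)
for name, count in layers:
    print(f"{name}: {count:,}")
print(f"Total: {total:,}")
```

Most of the parameters sit in the first dense layer (262,400 of 356,939), so the flattened feature size of 1024 is the main lever on model size.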

| Parameter | Settings |
|---|---|
| Number of DPUs | 1 |
| Architecture | B1152 |
| RAM Usage | Low |
| DSP Usage | Low DSP Mode |
| Low Power Mode | Off |
| Extra Operations | ElementwiseAdd, LeakyReLU |

| Resource | Utilization | Available | Utilization % |
|---|---|---|---|
| LUT | 35,495 | 53,200 | 66.72% |
| LUT RAM | 1744 | 17,400 | 10.02% |
| FF | 63,779 | 106,400 | 59.94% |
| BRAM | 123 | 140 | 87.86% |
| DSP | 212 | 220 | 96.36% |
| BUFG | 4 | 32 | 12.5% |
| MMCM | 1 | 4 | 25% |

| Platform | CNN Size (GOP) | Throughput (GOPS) | Power (W) | Power Efficiency (GOPS/W) |
|---|---|---|---|---|
| FPGA (PYNQ-Z2) | 0.24 | 205.39 | 4.3 | 47.76 |
| GPU (RTX 4060 Ti) | 0.24 | 2500 | 14.3 | 174.83 |
| CPU (i7-14700K) | 0.24 | 67 | 9.8 | 6.83 |

| Reference | [33] | [13] | [33] | [22] | [23] | [10] | [34] | [35] | [14] | [21] | [20] | TSRACE-AI | TSRACE-AI (VGG-16) | TSRACE-AI (ResNet-20) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Device | Z7045 | Stratix 10 NX | ZU7EV | Alveo U250 | Alveo U280 | PYNQ-Z2 | Alveo U50 | ZCU102 | XCKU040 | XCK26 | XC7Z045 | PYNQ-Z2 | PYNQ-Z2 | PYNQ-Z2 |
| Device BRAM (Mb) | 19.2 | 140 | 38 | 432 | 357 | 16.2 | 47.3 | 32.1 | - | 135 | - | 16.2 | 16.2 | 16.2 |
| DSPs | 900 | 3960 | 1728 | 11,508 | 9024 | 220 | 5952 | 2520 | - | 774 | 40 | 220 | 220 | 220 |
| Logic Utilization (%) | - | 67 | 81 | 77 | 55 | 23 | 31.1 | 52.7 | 57.3 | 54.17 | - | 66.72 | 66.72 | 66.72 |
| BRAM Utilization (%) | - | 98 | 91 | 97 | 92 | 31 | 45.3 | - | 64.33 | 93.75 | 25 | 87.86 | 87.86 | 87.86 |
| Used DSPs (%) | 100 | 51 | 33 | 14 | 96 | 30 | 31.3 | 50.3 | 51.7 | 62.02 | - | 96.36 | 96.36 | 96.36 |
| Frequency (MHz) | 150 | 300 | 200 | 195 | 250 | 50 | 200 | 300 | 150 | 300 | 250 | 150/300 | 150/300 | 150/300 |
| Network | ResNet-18 | ResNet-18 | ResNet-50 | ResNet-50 | VGG-16 | ResNet-20 | Swin-T | ViT-S | lw-ct PART | YOLO ADAS | Custom CNN | Custom CNN | VGG-16 (32×32) | ResNet-20 (32×32) |
| Precision | 16-bit | 8-bit | 16-bit | 1-bit | 16-bit | 16-bit | int16/int8 | int8 | int16 | QAT-INT8 | INT8 | 8-bit | 8-bit | 8-bit |
| Throughput (GOPS) | 59.7 | 4174 | 1004 | 527 | 912.7 | 68.4 | 301.9 | 153.2 | 153.2 | 25 | 0.96 | 205.39 | 205.39 | 205.39 |
| Latency (ms) | 16.75 | 1.01 | 9.48 | 1.9 | 0.704 | 102 | - | 0.3752 | 0.3752 | 39.37 | 25 | 1.17 | 2.78 | 0.40 |
| GOPs | 236 | 15,109 | 7731 | 3567 | 0.643 | 6.97 | - | - | - | 25 | - | 0.241952 | 0.5703 | 0.0816 |
| Power (W) | - | - | - | - | 40.6 | 1.331 | 14.35 | 29.6 | 5.72 | 7.19 | 6.95 | 4.3 | 4.3 | 4.3 |
| Power Efficiency (GOPS/W) | - | - | - | 51.38 | 22.48 | 51.38 | 21.04 | 25.76 | 26.78 | 3.47 | 0.138 | 47.77 | 47.77 | 47.77 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Smaali, A.; Ben Alla, S.; Touhafi, A. TSRACE-AI: Traffic Sign Recognition Accelerated with Co-Designed Edge AI Based on Hybrid FPGA Architecture for ADAS. Information 2025, 16, 703. https://doi.org/10.3390/info16080703
