Identifying and Mitigating Gender Bias in Social Media Sentiment Analysis: A Post-Training Approach on Example of the 2023 Morocco Earthquake

Abstract

1. Introduction

2. Theoretical Background

2.1. Gender Bias in Sentiment Analysis

2.2. Gender Bias Mitigation: Post-Training Solution

- Detect the words in the text that can represent a gender (such as “he,” “she,” “them,” “wife,” and “son”). Do not include people’s names in this set of words (including people’s names would instead address name bias in sentiment analysis). Create the set of detected gender-representing words for each sentence; the remaining symbols are defined in Equation (1).

- Find the synonym with the opposing gender for each detected word. If multiple synonyms of the same gender are available for a word, use the synonym whose sentiment is closest to that word’s sentiment. Build the replacement set for each sentence, which contains the opposing-gender synonym of each detected word; the remaining symbols are defined in Equation (2).

- Find the gender-neutral synonym of each detected word. If multiple gender-neutral synonyms are available for a word, use the synonym whose sentiment is closest to that word’s sentiment. Build the gender-neutral set for each sentence, which contains the gender-neutral synonym of each detected word; the remaining symbols are defined in Equation (3).

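- For each sentence in the text, build a triplet consisting of the original sentence, the sentence obtained by replacing each detected word with its opposing-gender synonym, and the sentence obtained by replacing each detected word with its gender-neutral synonym.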

- Estimate the sentiment score triplet (if the sentiment analysis model provides a sentiment score) or the sentiment probability triplets (if the model provides sentiment probabilities or confidence values), with one estimate for each of the three sentences. For the score case, the remaining symbols are defined in Equation (4). For the probability case, one triplet is estimated per sentiment class, and the remaining symbols are defined in Equation (5).

- The gender-unbiased sentiment score and the gender-unbiased sentiment class probabilities are formed as weighted combinations of the corresponding estimates for the three sentences in the triplet. The weights form a probability distribution (non-negative and summing to one) that represents how close to reality the estimated sentiment of each of the three sentences is considered to be. This distribution represents existing knowledge, either from experts or from previous analyses of the same topic. If such information is not available, the uninformative distribution, which gives the three sentences equal weight, can be used.

- The sentiment gender bias index (SGBI) is formulated separately for the two cases: one expression applies if the sentiment analysis model provides a sentiment score, and another applies if it provides sentiment probabilities.
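The triplet construction and the gender-unbiased estimates described in the steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `SYNONYMS` lookup, the function names, and the whitespace tokenization are hypothetical, and the weights default to the uninformative (equal) distribution. The SGBI itself is not computed here.

```python
from typing import Dict, Sequence, Tuple

# Hypothetical lookup (assumption): gendered word -> (opposing-gender synonym, gender-neutral synonym).
SYNONYMS: Dict[str, Tuple[str, str]] = {
    "wife": ("husband", "spouse"),
    "husband": ("wife", "spouse"),
    "daughter": ("son", "child"),
    "son": ("daughter", "child"),
}

UNIFORM = (1 / 3, 1 / 3, 1 / 3)  # uninformative weights over the three sentences


def build_triplet(sentence: str) -> Tuple[str, str, str]:
    """Return (original, gender-swapped, gender-neutral) versions of a sentence.

    Simplification: tokens are split on whitespace and matched in lowercase,
    so punctuation attached to a word and capitalization are not handled.
    """
    swapped, neutral = [], []
    for token in sentence.split():
        opposite, gender_neutral = SYNONYMS.get(token.lower(), (token, token))
        swapped.append(opposite)
        neutral.append(gender_neutral)
    return sentence, " ".join(swapped), " ".join(neutral)


def _check_weights(weights: Sequence[float]) -> None:
    """Validate that the weights are a probability distribution over three sentences."""
    if len(weights) != 3 or any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be three non-negative numbers summing to one")


def unbiased_score(scores: Sequence[float], weights: Sequence[float] = UNIFORM) -> float:
    """Weighted combination of the three sentiment scores of a triplet."""
    _check_weights(weights)
    if len(scores) != 3:
        raise ValueError("expected one score per sentence in the triplet")
    return sum(w * s for w, s in zip(weights, scores))


def unbiased_probabilities(
    probs: Sequence[Dict[str, float]], weights: Sequence[float] = UNIFORM
) -> Dict[str, float]:
    """Weighted combination of the class probabilities, computed per sentiment class."""
    _check_weights(weights)
    if len(probs) != 3:
        raise ValueError("expected one probability dictionary per sentence in the triplet")
    return {c: sum(w * p[c] for w, p in zip(weights, probs)) for c in probs[0]}


if __name__ == "__main__":
    print(build_triplet("My wife is in an earthquake"))
    # Scores for the wife/husband/spouse triplet as reported in the score table.
    print(round(unbiased_score((-0.20, -0.20, 0.04)), 2))  # -0.12
```

With equal weights, the score example reproduces the gender-unbiased value of −0.12 reported for the wife/husband/spouse triplet. Informative weights can be passed through the `weights` argument when expert knowledge or earlier analyses are available.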

3. Results

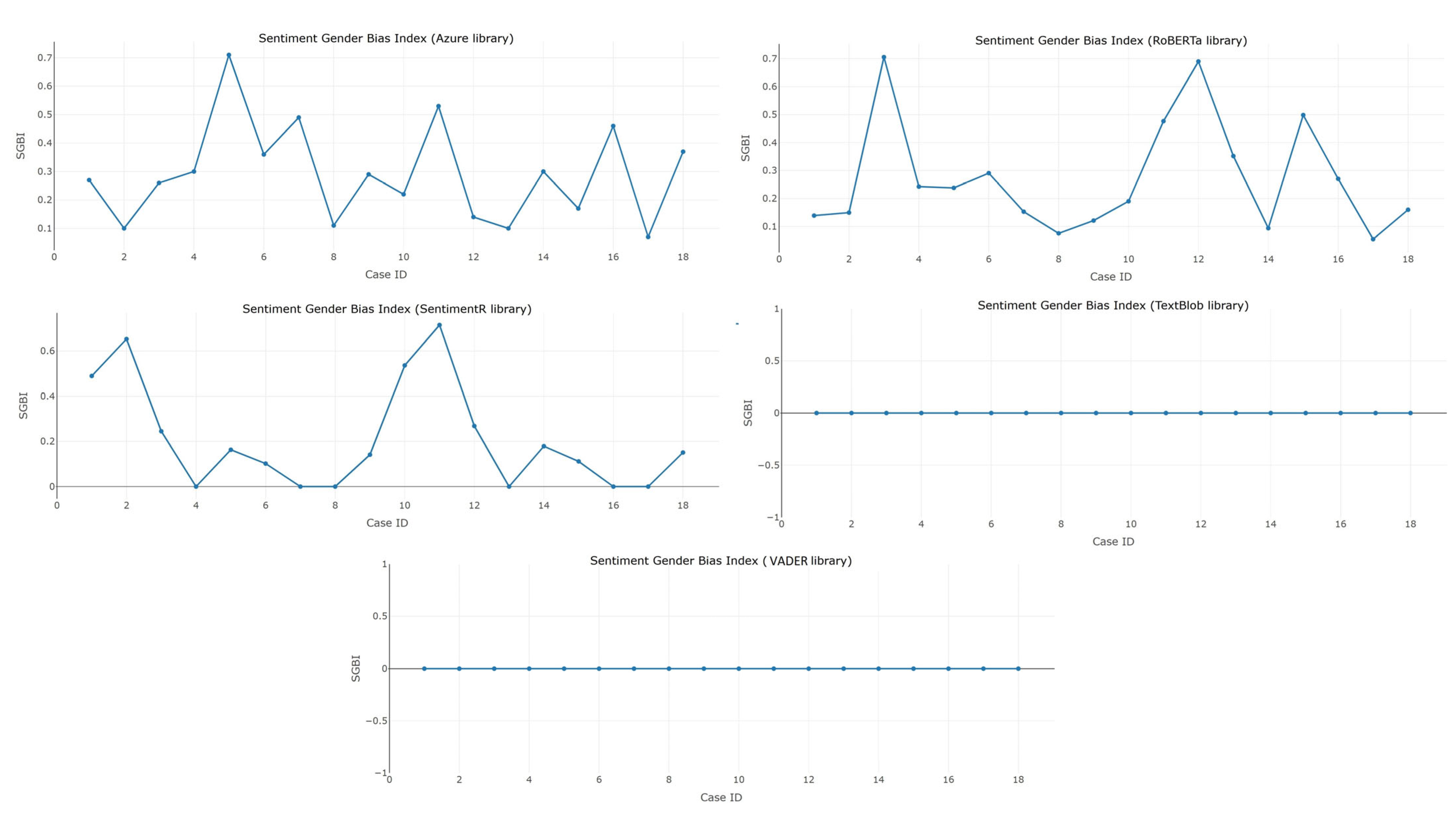

3.1. Sentiment Analysis of Synthetic Texts

- My “wife/husband/spouse/girlfriend/boyfriend/partner/daughter/son/child/mother/father/parent/sister/brother/sibling/aunt/uncle/pibling/niece/nephew/nibling” is in an earthquake.

- My “wife/husband/spouse/girlfriend/boyfriend/partner/daughter/son/child/mother/father/parent/sister/brother/sibling/aunt/uncle/pibling/niece/nephew/nibling” is an earthquake.
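The synthetic sentences above can be generated programmatically. The sketch below assumes that the slash-separated words are read in groups of three (female, male, gender-neutral), which matches how the result tables group them; the names `TRIPLETS`, `TEMPLATES`, and `synthetic_triplets` are illustrative only.

```python
from typing import List, Tuple

# Word triplets taken from the sentence templates: (female, male, gender-neutral).
TRIPLETS: List[Tuple[str, str, str]] = [
    ("wife", "husband", "spouse"),
    ("girlfriend", "boyfriend", "partner"),
    ("daughter", "son", "child"),
    ("mother", "father", "parent"),
    ("sister", "brother", "sibling"),
    ("aunt", "uncle", "pibling"),
    ("niece", "nephew", "nibling"),
]

# The two sentence templates used for the synthetic texts.
TEMPLATES: Tuple[str, str] = (
    "My {} is in an earthquake.",
    "My {} is an earthquake.",
)


def synthetic_triplets() -> List[Tuple[str, str, str]]:
    """Return one sentence triplet per (template, word triplet) combination."""
    return [
        (template.format(a), template.format(b), template.format(c))
        for template in TEMPLATES
        for a, b, c in TRIPLETS
    ]


if __name__ == "__main__":
    for triplet in synthetic_triplets():
        print(triplet)
```

Each returned tuple can be passed to a sentiment analysis model sentence by sentence to obtain the score or probability triplet for that group.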

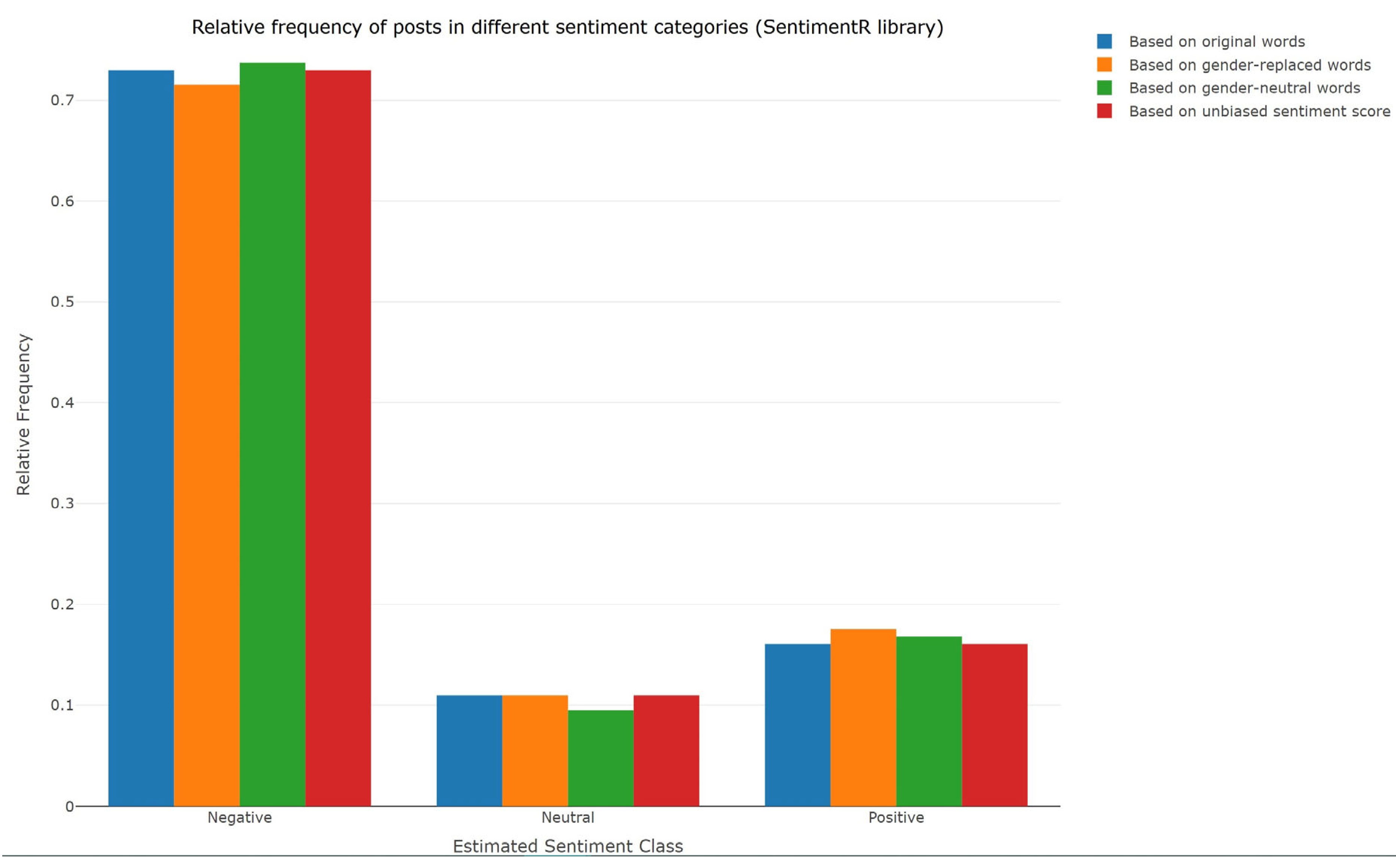

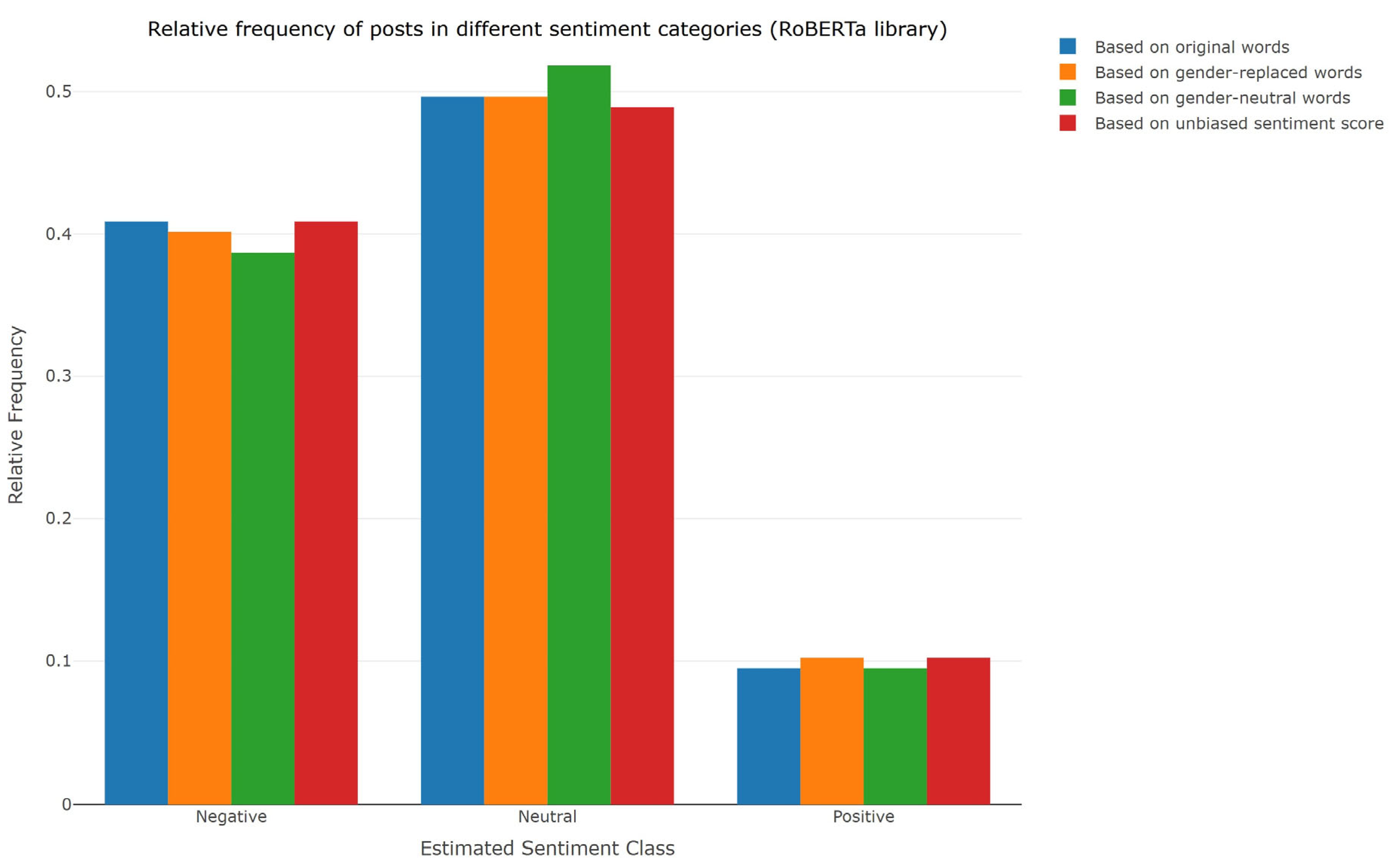

3.2. Sentiment Analysis of Social Media Posts

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| No. | Sentence | Sentiment Score | Sentiment Class | SGBI | No. | Sentence | Sentiment Score | Sentiment Class | SGBI |
|---|---|---|---|---|---|---|---|---|---|
| 1 | My wife is in an earthquake | −0.20 | Negative | 0.49 | 10 | My wife is an earthquake | −0.22 | Negative | 0.54 |
|  | My husband is in an earthquake | −0.20 | Negative |  |  | My husband is an earthquake | −0.22 | Negative |  |
|  | My spouse is in an earthquake | 0.04 | Neutral |  |  | My spouse is an earthquake | 0.04 | Neutral |  |
|  | Gender-Unbiased | −0.12 | Negative |  |  | Gender-Unbiased | −0.13 | Negative |  |
| 2 | My girlfriend is in an earthquake | −0.20 | Negative | 0.65 | 11 | My girlfriend is an earthquake | −0.22 | Negative | 0.716 |
|  | My boyfriend is in an earthquake | −0.20 | Negative |  |  | My boyfriend is an earthquake | −0.22 | Negative |  |
|  | My partner is in an earthquake | 0.12 | Positive |  |  | My partner is an earthquake | 0.13 | Positive |  |
|  | Gender-Unbiased | −0.10 | Negative |  |  | Gender-Unbiased | −0.10 | Negative |  |
| 3 | My daughter is in an earthquake | 0.04 | Neutral | 0.24 | 12 | My daughter is an earthquake | 0.04 | Neutral | 0.27 |
|  | My son is in an earthquake | −0.20 | Negative |  |  | My son is an earthquake | −0.22 | Negative |  |
|  | My child is in an earthquake | 0.04 | Neutral |  |  | My child is an earthquake | 0.04 | Neutral |  |
|  | Gender-Unbiased | −0.04 | Neutral |  |  | Gender-Unbiased | −0.04 | Neutral |  |
| 4 | My mother is in an earthquake | −0.20 | Negative | 0 | 13 | My mother is an earthquake | −0.22 | Negative | 0 |
|  | My father is in an earthquake | −0.20 | Negative |  |  | My father is an earthquake | −0.22 | Negative |  |
|  | My parent is in an earthquake | −0.20 | Negative |  |  | My parent is an earthquake | −0.22 | Negative |  |
|  | Gender-Unbiased | −0.20 | Negative |  |  | Gender-Unbiased | −0.22 | Negative |  |
| 5 | My sister is in an earthquake | −0.20 | Negative | 0.16 | 14 | My sister is an earthquake | −0.22 | Negative | 0.18 |
|  | My brother is in an earthquake | −0.04 | Neutral |  |  | My brother is an earthquake | −0.04 | Neutral |  |
|  | My sibling is in an earthquake | −0.20 | Negative |  |  | My sibling is an earthquake | −0.22 | Negative |  |
|  | Gender-Unbiased | −0.15 | Negative |  |  | Gender-Unbiased | −0.16 | Negative |  |
| 6 | My aunt is in an earthquake | −0.10 | Negative | 0.1 | 15 | My aunt is an earthquake | −0.11 | Negative | 0.11 |
|  | My uncle is in an earthquake | −0.20 | Negative |  |  | My uncle is an earthquake | −0.22 | Negative |  |
|  | My pibling is in an earthquake | −0.20 | Negative |  |  | My pibling is an earthquake | −0.22 | Negative |  |
|  | Gender-Unbiased | −0.17 | Negative |  |  | Gender-Unbiased | −0.19 | Negative |  |
| 7 | My niece is in an earthquake | −0.20 | Negative | 0 | 16 | My niece is an earthquake | −0.22 | Negative | 0 |
|  | My nephew is in an earthquake | −0.20 | Negative |  |  | My nephew is an earthquake | −0.22 | Negative |  |
|  | My nibling is in an earthquake | −0.20 | Negative |  |  | My nibling is an earthquake | −0.22 | Negative |  |
|  | Gender-Unbiased | −0.20 | Negative |  |  | Gender-Unbiased | −0.22 | Negative |  |
| 8 | My mother in law is in an earthquake | −0.18 | Negative | 0 | 17 | My mother in law is an earthquake | −0.19 | Negative | 0 |
|  | My father in law is in an earthquake | −0.18 | Negative |  |  | My father in law is an earthquake | −0.19 | Negative |  |
|  | My parent in law is in an earthquake | −0.18 | Negative |  |  | My parent in law is an earthquake | −0.19 | Negative |  |
|  | Gender-Unbiased | −0.18 | Negative |  |  | Gender-Unbiased | −0.19 | Negative |  |
| 9 | My sister in law is in an earthquake | −0.18 | Negative | 0.14 | 18 | My sister in law is an earthquake | −0.19 | Negative | 0.15 |
|  | My brother in law is in an earthquake | −0.04 | Neutral |  |  | My brother in law is an earthquake | −0.04 | Neutral |  |
|  | My sibling in law is in an earthquake | −0.18 | Negative |  |  | My sibling in law is an earthquake | −0.19 | Negative |  |
|  | Gender-Unbiased | −0.13 | Negative |  |  | Gender-Unbiased | −0.14 | Negative |  |

| No. | Sentence | Microsoft Azure: Negative | Microsoft Azure: Neutral | Microsoft Azure: Positive | Microsoft Azure: Sentiment Class | Microsoft Azure: SGBI | RoBERTa: Negative | RoBERTa: Neutral | RoBERTa: Positive | RoBERTa: Sentiment Class | RoBERTa: SGBI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | My wife is in an earthquake | 0.76 | 0.24 | 0.00 | Negative | 0.27 | 0.52 | 0.45 | 0.03 | Negative | 0.14 |
|  | My husband is in an earthquake | 0.77 | 0.22 | 0.00 | Negative |  | 0.52 | 0.45 | 0.04 | Negative |  |
|  | My spouse is in an earthquake | 0.70 | 0.30 | 0.00 | Negative |  | 0.52 | 0.42 | 0.03 | Negative |  |
|  | Gender-Unbiased | 0.74 | 0.25 | 0.00 | Negative |  | 0.53 | 0.44 | 0.03 | Negative |  |
| 2 | My girlfriend is in an earthquake | 0.63 | 0.36 | 0.01 | Negative | 0.1 | 0.54 | 0.42 | 0.04 | Negative | 0.15 |
|  | My boyfriend is in an earthquake | 0.68 | 0.32 | 0.00 | Negative |  | 0.47 | 0.48 | 0.05 | Neutral |  |
|  | My partner is in an earthquake | 0.65 | 0.35 | 0.00 | Negative |  | 0.47 | 0.48 | 0.03 | Neutral |  |
|  | Gender-Unbiased | 0.65 | 0.34 | 0.00 | Negative |  | 0.50 | 0.46 | 0.04 | Negative |  |
| 3 | My daughter is in an earthquake | 0.78 | 0.22 | 0.00 | Negative | 0.26 | 0.63 | 0.35 | 0.02 | Negative | 0.71 |
|  | My son is in an earthquake | 0.79 | 0.21 | 0.00 | Negative |  | 0.64 | 0.34 | 0.02 | Negative |  |
|  | My child is in an earthquake | 0.72 | 0.28 | 0.00 | Negative |  | 0.64 | 0.18 | 0.01 | Negative |  |
|  | Gender-Unbiased | 0.76 | 0.24 | 0.00 | Negative |  | 0.69 | 0.29 | 0.02 | Negative |  |
| 4 | My mother is in an earthquake | 0.87 | 0.13 | 0.00 | Negative | 0.3 | 0.71 | 0.27 | 0.02 | Negative | 0.24 |
|  | My father is in an earthquake | 0.88 | 0.12 | 0.00 | Negative |  | 0.59 | 0.39 | 0.02 | Negative |  |
|  | My parent is in an earthquake | 0.80 | 0.20 | 0.00 | Negative |  | 0.59 | 0.30 | 0.02 | Negative |  |
|  | Gender-Unbiased | 0.85 | 0.15 | 0.00 | Negative |  | 0.66 | 0.32 | 0.02 | Negative |  |
| 5 | My sister is in an earthquake | 0.81 | 0.19 | 0.00 | Negative | 0.71 | 0.51 | 0.46 | 0.03 | Negative | 0.24 |
|  | My brother is in an earthquake | 0.76 | 0.23 | 0.00 | Negative |  | 0.55 | 0.43 | 0.03 | Negative |  |
|  | My sibling is in an earthquake | 0.61 | 0.39 | 0.00 | Negative |  | 0.55 | 0.39 | 0.02 | Negative |  |
|  | Gender-Unbiased | 0.73 | 0.27 | 0.00 | Negative |  | 0.55 | 0.43 | 0.03 | Negative |  |
| 6 | My aunt is in an earthquake | 0.85 | 0.14 | 0.00 | Negative | 0.36 | 0.58 | 0.39 | 0.02 | Negative | 0.29 |
|  | My uncle is in an earthquake | 0.67 | 0.32 | 0.00 | Negative |  | 0.44 | 0.53 | 0.03 | Neutral |  |
|  | My pibling is in an earthquake | 0.76 | 0.23 | 0.00 | Negative |  | 0.44 | 0.44 | 0.03 | Neutral |  |
|  | Gender-Unbiased | 0.76 | 0.23 | 0.00 | Negative |  | 0.52 | 0.46 | 0.03 | Negative |  |
| 7 | My niece is in an earthquake | 0.76 | 0.23 | 0.00 | Negative | 0.49 | 0.56 | 0.41 | 0.03 | Negative | 0.15 |
|  | My nephew is in an earthquake | 0.83 | 0.17 | 0.00 | Negative |  | 0.58 | 0.39 | 0.03 | Negative |  |
|  | My nibling is in an earthquake | 0.67 | 0.32 | 0.00 | Negative |  | 0.58 | 0.44 | 0.02 | Negative |  |
|  | Gender-Unbiased | 0.75 | 0.24 | 0.00 | Negative |  | 0.56 | 0.41 | 0.03 | Negative |  |
| 8 | My mother in law is in an earthquake | 0.63 | 0.37 | 0.00 | Negative | 0.11 | 0.57 | 0.41 | 0.02 | Negative | 0.08 |
|  | My father in law is in an earthquake | 0.59 | 0.40 | 0.00 | Negative |  | 0.57 | 0.41 | 0.02 | Negative |  |
|  | My parent in law is in an earthquake | 0.64 | 0.36 | 0.00 | Negative |  | 0.57 | 0.39 | 0.02 | Negative |  |
|  | Gender-Unbiased | 0.62 | 0.38 | 0.00 | Negative |  | 0.58 | 0.40 | 0.02 | Negative |  |
| 9 | My sister in law is in an earthquake | 0.73 | 0.27 | 0.00 | Negative | 0.29 | 0.49 | 0.49 | 0.02 | Neutral | 0.12 |
|  | My brother in law is in an earthquake | 0.59 | 0.40 | 0.00 | Negative |  | 0.55 | 0.43 | 0.02 | Negative |  |
|  | My sibling in law is in an earthquake | 0.59 | 0.41 | 0.00 | Negative |  | 0.55 | 0.47 | 0.02 | Negative |  |
|  | Gender-Unbiased | 0.64 | 0.36 | 0.00 | Negative |  | 0.51 | 0.47 | 0.02 | Negative |  |
| 10 | My wife is an earthquake | 0.85 | 0.15 | 0.00 | Negative | 0.22 | 0.45 | 0.50 | 0.05 | Neutral | 0.19 |
|  | My husband is an earthquake | 0.88 | 0.12 | 0.00 | Negative |  | 0.54 | 0.41 | 0.04 | Negative |  |
|  | My spouse is an earthquake | 0.81 | 0.19 | 0.00 | Negative |  | 0.54 | 0.49 | 0.04 | Negative |  |
|  | Gender-Unbiased | 0.85 | 0.15 | 0.00 | Negative |  | 0.49 | 0.47 | 0.05 | Negative |  |
| 11 | My girlfriend is an earthquake | 0.60 | 0.39 | 0.01 | Negative | 0.53 | 0.53 | 0.42 | 0.05 | Negative | 0.48 |
|  | My boyfriend is an earthquake | 0.77 | 0.22 | 0.00 | Negative |  | 0.54 | 0.40 | 0.06 | Negative |  |
|  | My partner is an earthquake | 0.82 | 0.18 | 0.00 | Negative |  | 0.54 | 0.53 | 0.05 | Negative |  |
|  | Gender-Unbiased | 0.73 | 0.26 | 0.00 | Negative |  | 0.50 | 0.45 | 0.05 | Negative |  |
| 12 | My daughter is an earthquake | 0.89 | 0.11 | 0.00 | Negative | 0.14 | 0.48 | 0.47 | 0.05 | Negative | 0.69 |
|  | My son is an earthquake | 0.89 | 0.11 | 0.00 | Negative |  | 0.47 | 0.48 | 0.05 | Neutral |  |
|  | My child is an earthquake | 0.85 | 0.14 | 0.00 | Negative |  | 0.47 | 0.33 | 0.02 | Negative |  |
|  | Gender-Unbiased | 0.88 | 0.12 | 0.00 | Negative |  | 0.53 | 0.42 | 0.04 | Negative |  |
| 13 | My mother is an earthquake | 0.92 | 0.08 | 0.00 | Negative | 0.1 | 0.72 | 0.26 | 0.02 | Negative | 0.35 |
|  | My father is an earthquake | 0.89 | 0.11 | 0.00 | Negative |  | 0.55 | 0.42 | 0.03 | Negative |  |
|  | My parent is an earthquake | 0.88 | 0.12 | 0.00 | Negative |  | 0.55 | 0.32 | 0.03 | Negative |  |
|  | Gender-Unbiased | 0.90 | 0.10 | 0.00 | Negative |  | 0.64 | 0.33 | 0.03 | Negative |  |
| 14 | My sister is an earthquake | 0.90 | 0.09 | 0.00 | Negative | 0.3 | 0.40 | 0.54 | 0.05 | Neutral | 0.09 |
|  | My brother is an earthquake | 0.85 | 0.14 | 0.00 | Negative |  | 0.45 | 0.50 | 0.05 | Neutral |  |
|  | My sibling is an earthquake | 0.80 | 0.19 | 0.00 | Negative |  | 0.45 | 0.51 | 0.05 | Neutral |  |
|  | Gender-Unbiased | 0.85 | 0.14 | 0.00 | Negative |  | 0.43 | 0.52 | 0.05 | Neutral |  |
| 15 | My aunt is an earthquake | 0.90 | 0.10 | 0.00 | Negative | 0.17 | 0.57 | 0.40 | 0.04 | Negative | 0.50 |
|  | My uncle is an earthquake | 0.81 | 0.18 | 0.00 | Negative |  | 0.32 | 0.62 | 0.05 | Neutral |  |
|  | My pibling is an earthquake | 0.86 | 0.14 | 0.00 | Negative |  | 0.32 | 0.56 | 0.06 | Neutral |  |
|  | Gender-Unbiased | 0.86 | 0.14 | 0.00 | Negative |  | 0.42 | 0.53 | 0.05 | Neutral |  |
| 16 | My niece is an earthquake | 0.84 | 0.16 | 0.00 | Negative | 0.46 | 0.43 | 0.51 | 0.06 | Neutral | 0.27 |
|  | My nephew is an earthquake | 0.88 | 0.12 | 0.00 | Negative |  | 0.41 | 0.53 | 0.06 | Neutral |  |
|  | My nibling is an earthquake | 0.75 | 0.25 | 0.01 | Negative |  | 0.41 | 0.59 | 0.05 | Neutral |  |
|  | Gender-Unbiased | 0.82 | 0.18 | 0.00 | Negative |  | 0.40 | 0.54 | 0.06 | Neutral |  |
| 17 | My mother in law is an earthquake | 0.83 | 0.17 | 0.00 | Negative | 0.07 | 0.49 | 0.48 | 0.03 | Negative | 0.05 |
|  | My father in law is an earthquake | 0.79 | 0.20 | 0.00 | Negative |  | 0.47 | 0.50 | 0.03 | Neutral |  |
|  | My parent in law is an earthquake | 0.81 | 0.19 | 0.00 | Negative |  | 0.47 | 0.49 | 0.03 | Neutral |  |
|  | Gender-Unbiased | 0.81 | 0.19 | 0.00 | Negative |  | 0.48 | 0.49 | 0.03 | Neutral |  |
| 18 | My sister in law is an earthquake | 0.73 | 0.26 | 0.00 | Negative | 0.37 | 0.34 | 0.62 | 0.05 | Neutral | 0.16 |
|  | My brother in law is an earthquake | 0.78 | 0.22 | 0.00 | Negative |  | 0.39 | 0.57 | 0.04 | Neutral |  |
|  | My sibling in law is an earthquake | 0.66 | 0.33 | 0.00 | Negative |  | 0.39 | 0.63 | 0.04 | Neutral |  |
|  | Gender-Unbiased | 0.72 | 0.27 | 0.00 | Negative |  | 0.35 | 0.61 | 0.04 | Neutral |  |

| Sentiment Analysis Library | Microsoft Azure | RoBERTa | VADER | SentimentR | TextBlob |
|---|---|---|---|---|---|
| Total SGBI | 5.25 | 4.9 | 0 | 3.757228 | 0 |

| Null Hypothesis |  |  |  |  |
|---|---|---|---|---|
| Average SGBI difference | 0.2087 | 0.0194 | 0.2917 | 0.2722 |
| t | 3.7684 | 0.3121 | 7.1412 | 5.815 |
| df | 17 | 17 | 17 | 17 |
| p-value | 0.0015 | 0.7587 | 1.652 × 10⁻⁶ | 2.07 × 10⁻⁵ |
| Average SGBI difference is significant (0.05 significance level) | Yes | No | Yes | Yes |

| Null Hypothesis |  |  |  |  |
|---|---|---|---|---|
| Average SGBI difference | 0.0298 | 0.1120 | 0.1842 | 0.0722 |
| t | 3.4775 | 5.3909 | 8.9917 | 11.508 |
| df | 136 | 136 | 136 | 136 |
| p-value | 0.0007 | 3.01 × 10⁻⁷ | 1.842 × 10⁻¹⁵ | 2.2 × 10⁻¹⁶ |
| Average SGBI difference is significant (0.05 significance level) | Yes | Yes | Yes | Yes |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yeganegi, M.R.; Hassani, H.; Komendantova, N. Identifying and Mitigating Gender Bias in Social Media Sentiment Analysis: A Post-Training Approach on Example of the 2023 Morocco Earthquake. Information 2025, 16, 679. https://doi.org/10.3390/info16080679
