Beyond Polarity: Forecasting Consumer Sentiment with Aspect- and Topic-Conditioned Time Series Models

Abstract

1. Introduction

1.1. The Evolution of Sentiment Analysis

1.2. The Gap: From Static Classification to Dynamic Forecasting

1.3. Proposed Framework and Contributions

- A Novel Multi-Feature Framework: We propose and implement a framework that fuses three distinct types of textual signals (polarity scores, aspect-based confidence scores, and low-dimensional topic embeddings) into a multivariate representation of daily public sentiment.

- Application of Exogenous Forecasting Models: We demonstrate the successful application of a SARIMAX (Seasonal AutoRegressive Integrated Moving Average with eXogenous variables) model, using the extracted contextual features as predictive exogenous variables to enhance forecasting accuracy.

- An Interpretable and Reproducible Pipeline: We present an end-to-end, reproducible methodology that not only improves predictive performance but also provides interpretable insights into which conversational features are statistically significant drivers of sentiment change.

2. Literature Review

2.1. Evolution of Sentiment Analysis

2.1.1. Lexicon-Based and Traditional Machine Learning Approaches

2.1.2. Deep Learning: CNNs, LSTMs, and Beyond

2.1.3. Transformers and Aspect-Based Sentiment Analysis (ABSA)

2.2. Time Series Forecasting Models

2.2.1. Traditional and Hybrid Models

2.2.2. Exogenous Variables with SARIMAX

2.3. Research Gap and Our Contribution

3. Methodology

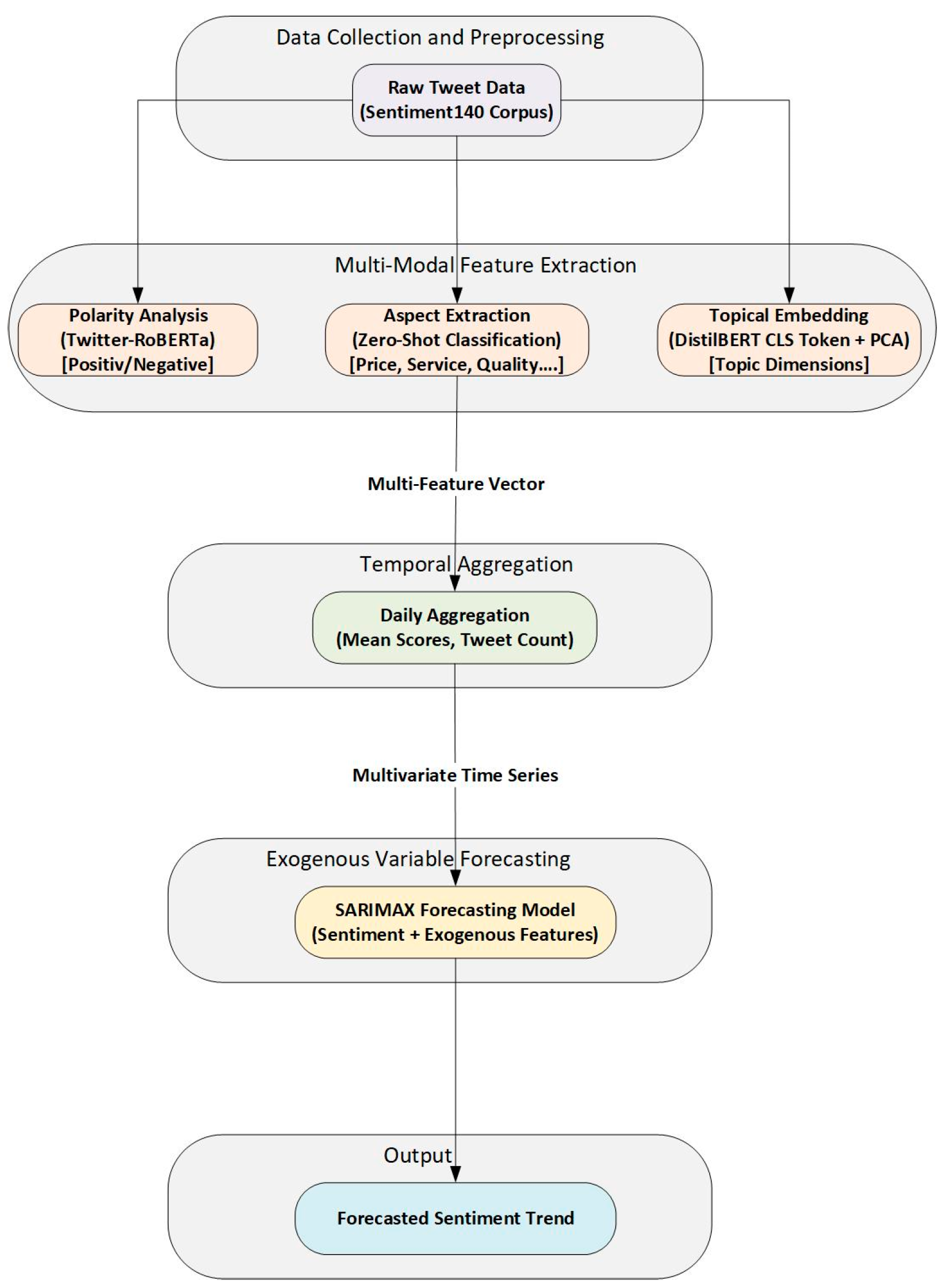

3.1. MFSF System Architecture

3.2. Data Collection and Preprocessing

3.3. Multi-Modal Feature Extraction

3.3.1. Polarity Score Extraction

3.3.2. Aspect Score Extraction

3.3.3. Topical Embedding and Dimensionality Reduction

3.4. Algorithmic Procedure and Model Formulation

| Algorithm 1: The Multi-Feature Sentiment Forecasting (MFSF) Procedure |
|---|
| Input: Raw tweet dataset. Output: Forecasted sentiment series; model performance metrics (RMSE, MAE). |

- Daily Average Polarity Score (S_T)

- Daily Tweet Volume (V_T)

- Daily Average Aspect Vector (A_T)

- Daily Average Topic Vector (T_T)
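The four daily series listed above can be illustrated with a minimal aggregation sketch. It assumes each tweet has already been scored and carries a date, a polarity score, a dictionary of aspect scores, and a two-dimensional topic embedding. The record layout, the sample values, and the function name `aggregate_daily` are hypothetical and are not taken from the study's implementation.

```python
from collections import defaultdict
from datetime import date
from statistics import fmean

# Hypothetical per-tweet records: (day, polarity, aspect scores, 2-D topic embedding).
# The layout and the values are illustrative assumptions, not data from the study.
tweets = [
    (date(2009, 5, 1), 0.5, {"service": 0.25, "quality": 0.5}, (0.5, -0.5)),
    (date(2009, 5, 1), -0.5, {"service": 0.75, "quality": 1.0}, (1.5, 0.5)),
    (date(2009, 5, 2), 1.0, {"service": 0.5, "quality": 0.25}, (-1.0, 2.0)),
]


def aggregate_daily(records):
    """Group tweets by calendar day and average each feature within the day."""
    by_day = defaultdict(list)
    for day, polarity, aspects, topic in records:
        by_day[day].append((polarity, aspects, topic))

    daily = {}
    for day, rows in sorted(by_day.items()):
        aspect_names = sorted(rows[0][1])
        topic_dims = len(rows[0][2])
        daily[day] = {
            # S: daily average polarity score
            "S": fmean(r[0] for r in rows),
            # V: daily tweet volume
            "V": len(rows),
            # A: daily average aspect vector
            "A": {a: fmean(r[1][a] for r in rows) for a in aspect_names},
            # T: daily average topic vector
            "T": tuple(fmean(r[2][i] for r in rows) for i in range(topic_dims)),
        }
    return daily


daily = aggregate_daily(tweets)
for day, features in daily.items():
    print(day, features)
```

In a sketch like this, S would serve as the forecasting target, while V, A, and T would be candidate exogenous inputs.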

3.5. Forecasting Models

3.5.1. Baseline Model: Univariate ARIMA

3.5.2. Proposed Model: Multivariate SARIMAX

3.6. Evaluation Metrics

- RMSE: The square root of the mean squared prediction error. Because errors are squared before averaging, it is sensitive to large errors; it equals the standard deviation of the errors only when their mean is zero.

- MAE: The average magnitude of the errors, providing an easily interpretable measure of typical error size.
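As a minimal sketch of the two metrics, the functions below compute RMSE and MAE from paired actual and predicted values. The function names and the toy numbers are illustrative assumptions chosen so that one large error dominates; they are not results from the study.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error: square root of the mean of squared errors."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mae(actual, predicted):
    """Mean absolute error: mean of the absolute errors."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Toy data (assumed): three errors of magnitude 1 and one of magnitude 3.
actual = [0.0, 0.0, 0.0, 0.0]
predicted = [1.0, -1.0, 1.0, -3.0]

print("MAE :", mae(actual, predicted))
print("RMSE:", rmse(actual, predicted))
```

With these toy values the absolute errors average 1.5, while the squared errors average 3, giving an RMSE of about 1.73. The gap between the two reflects the extra weight RMSE places on the single large error.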

4. Results

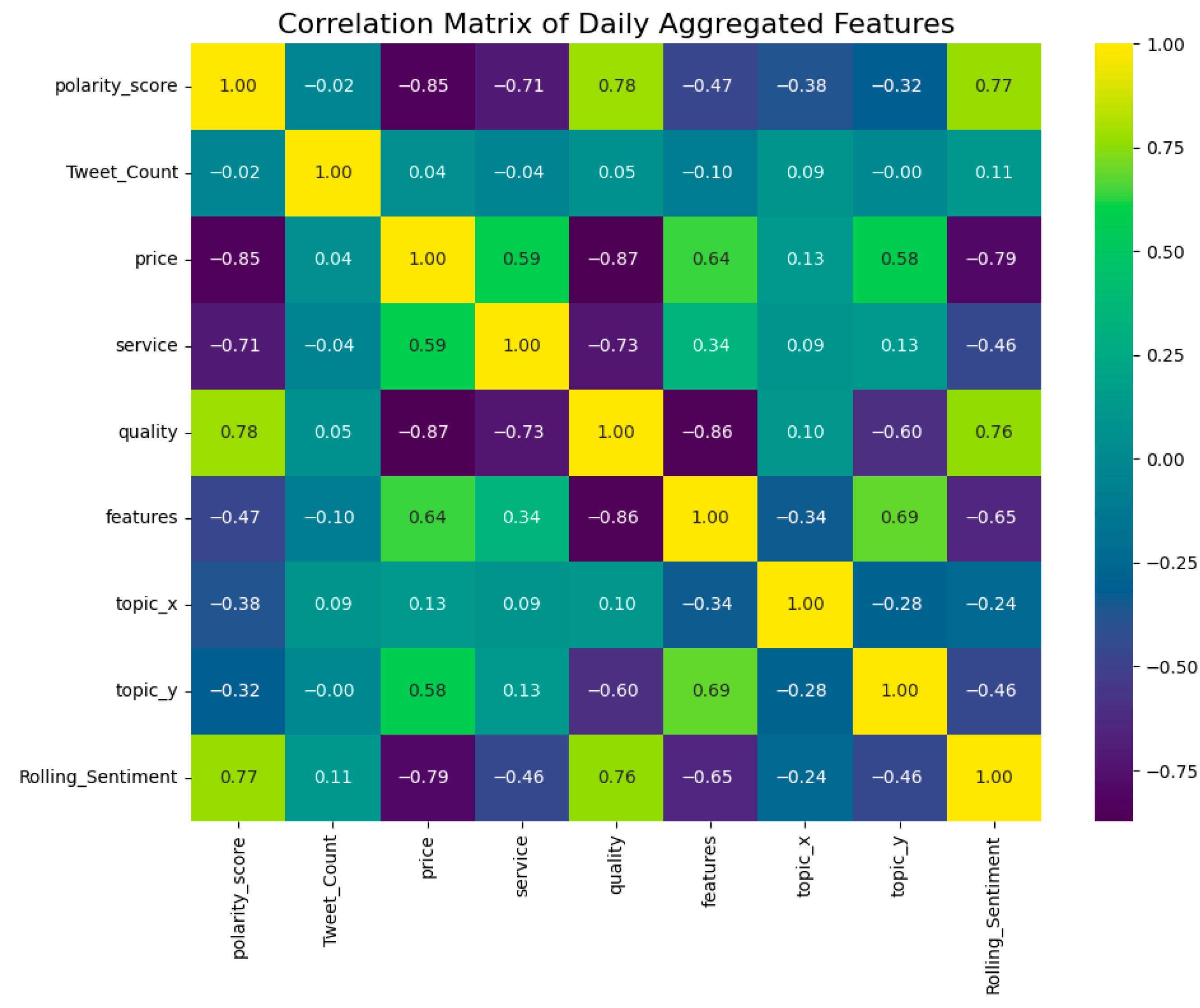

4.1. Exploratory Data Analysis of Aggregated Features

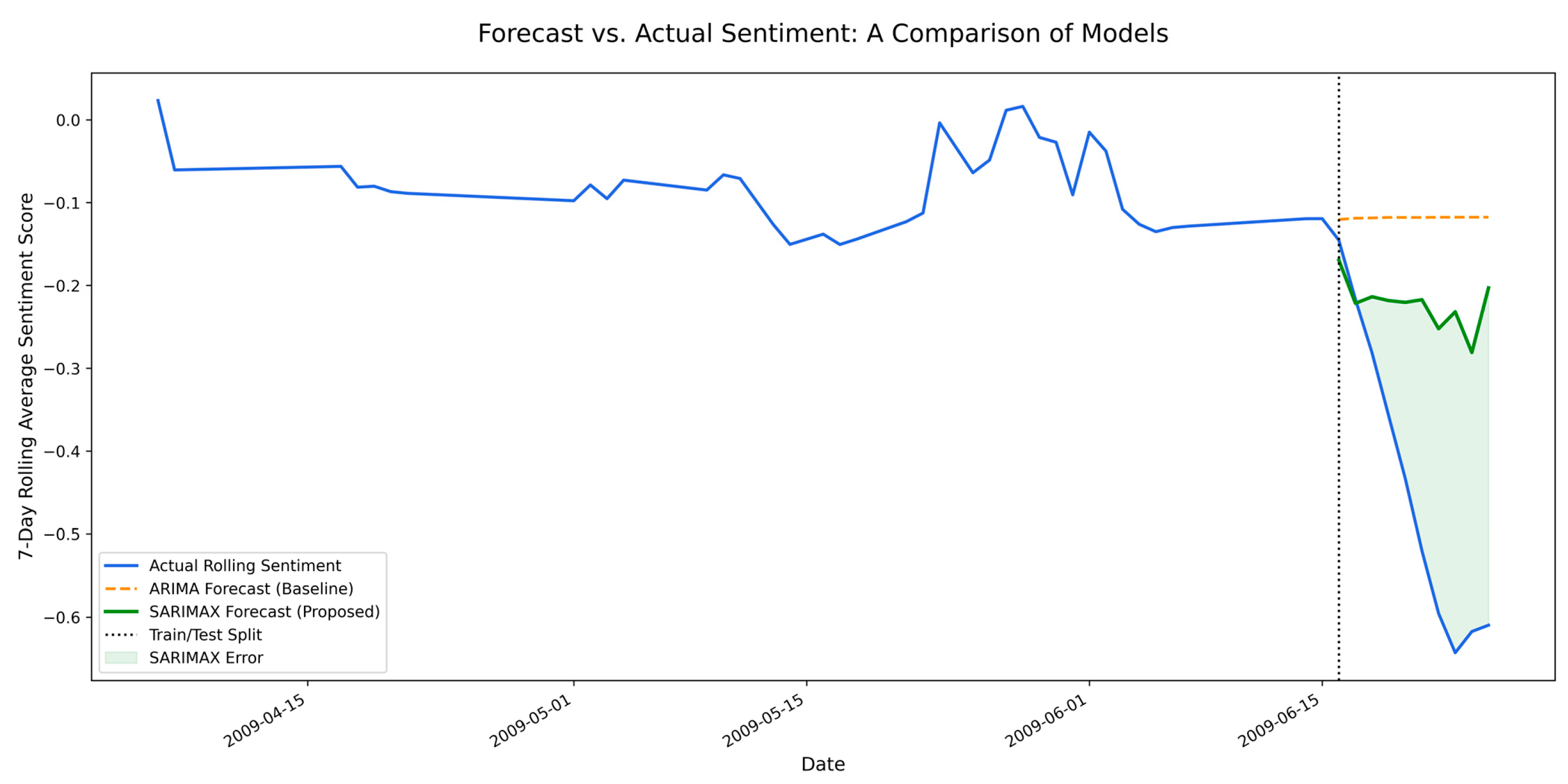

4.2. Forecasting Performance: Baseline vs. Proposed Model

4.3. Statistical Analysis of the SARIMAX Model

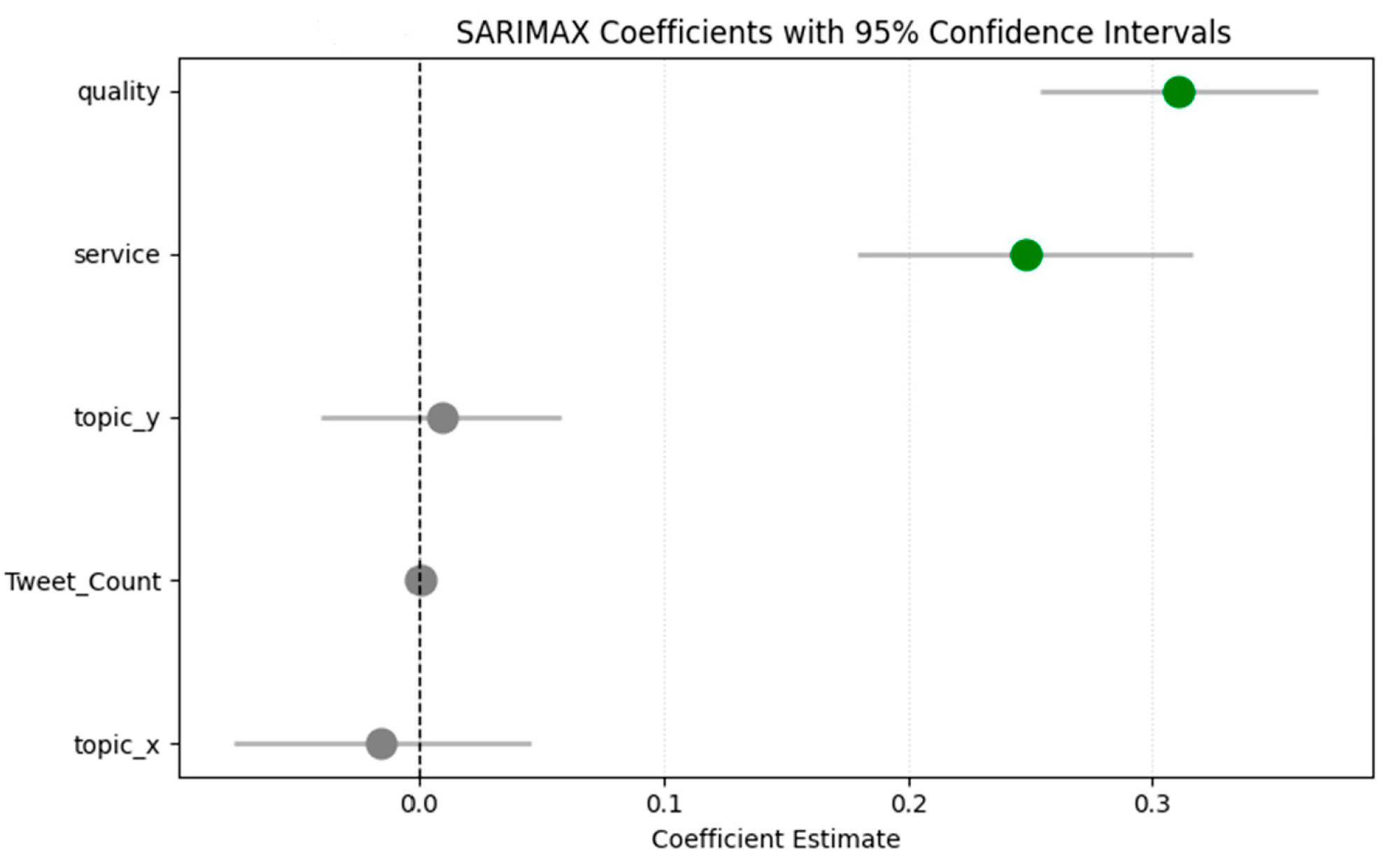

4.3.1. Model Coefficient Analysis

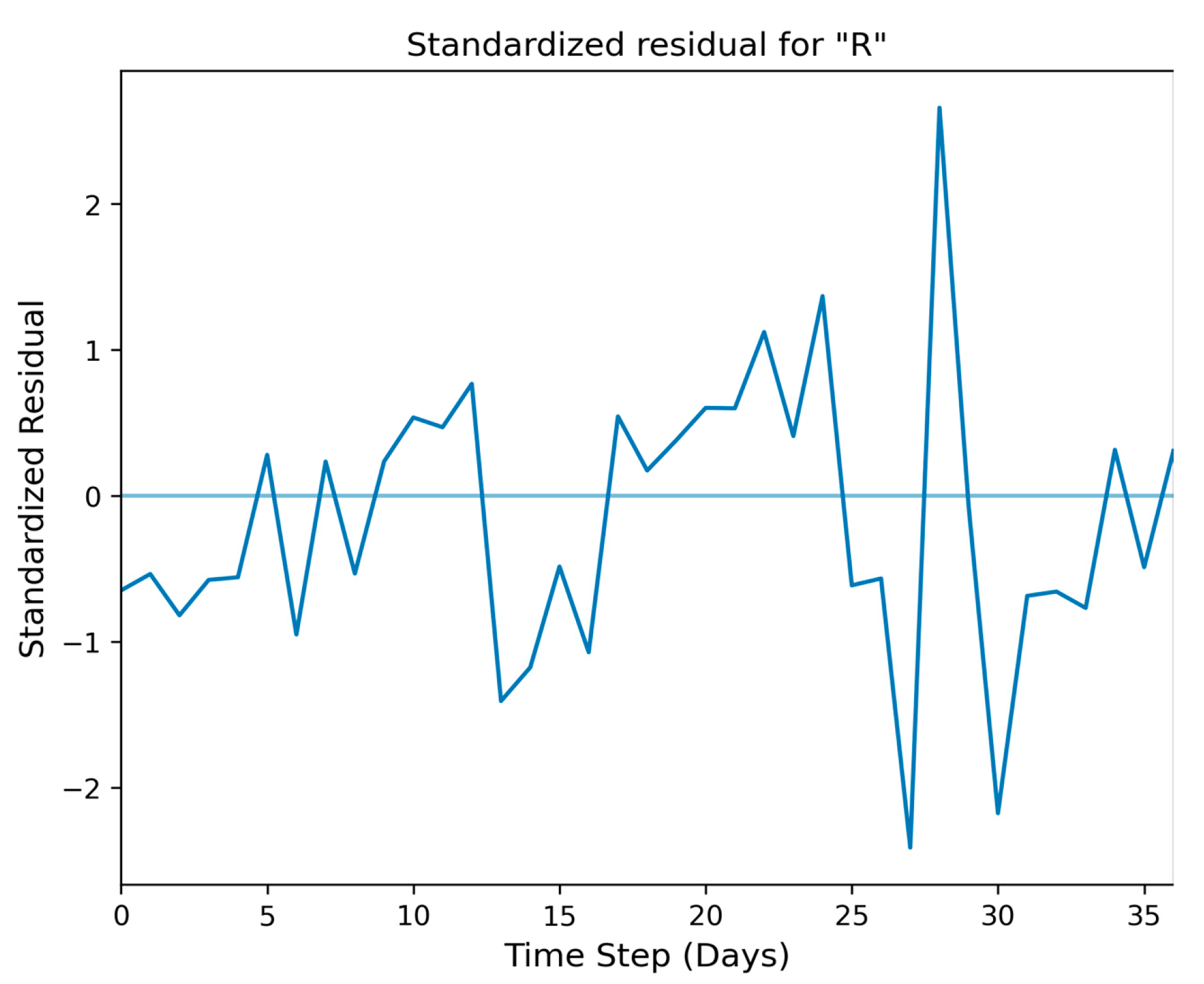

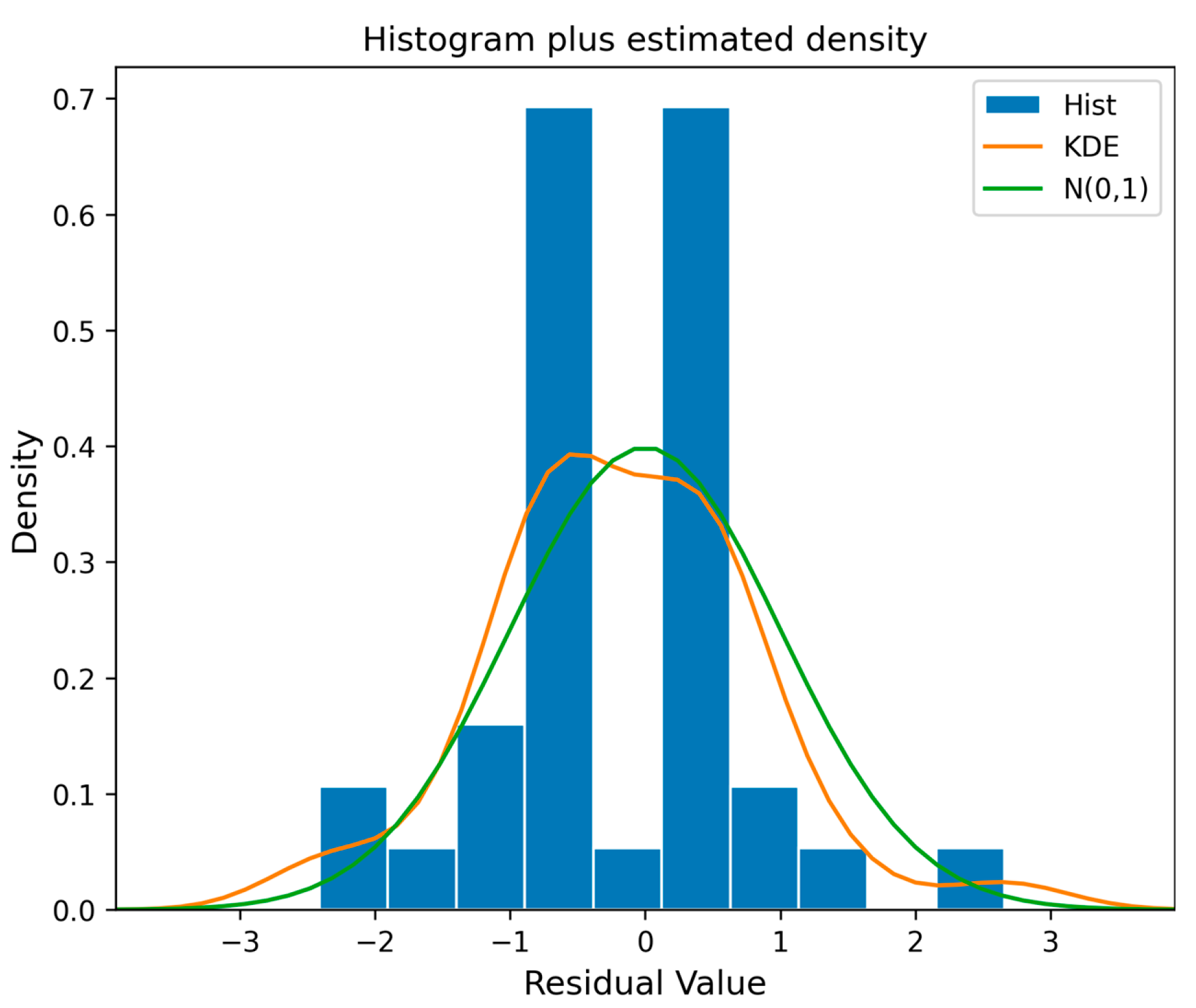

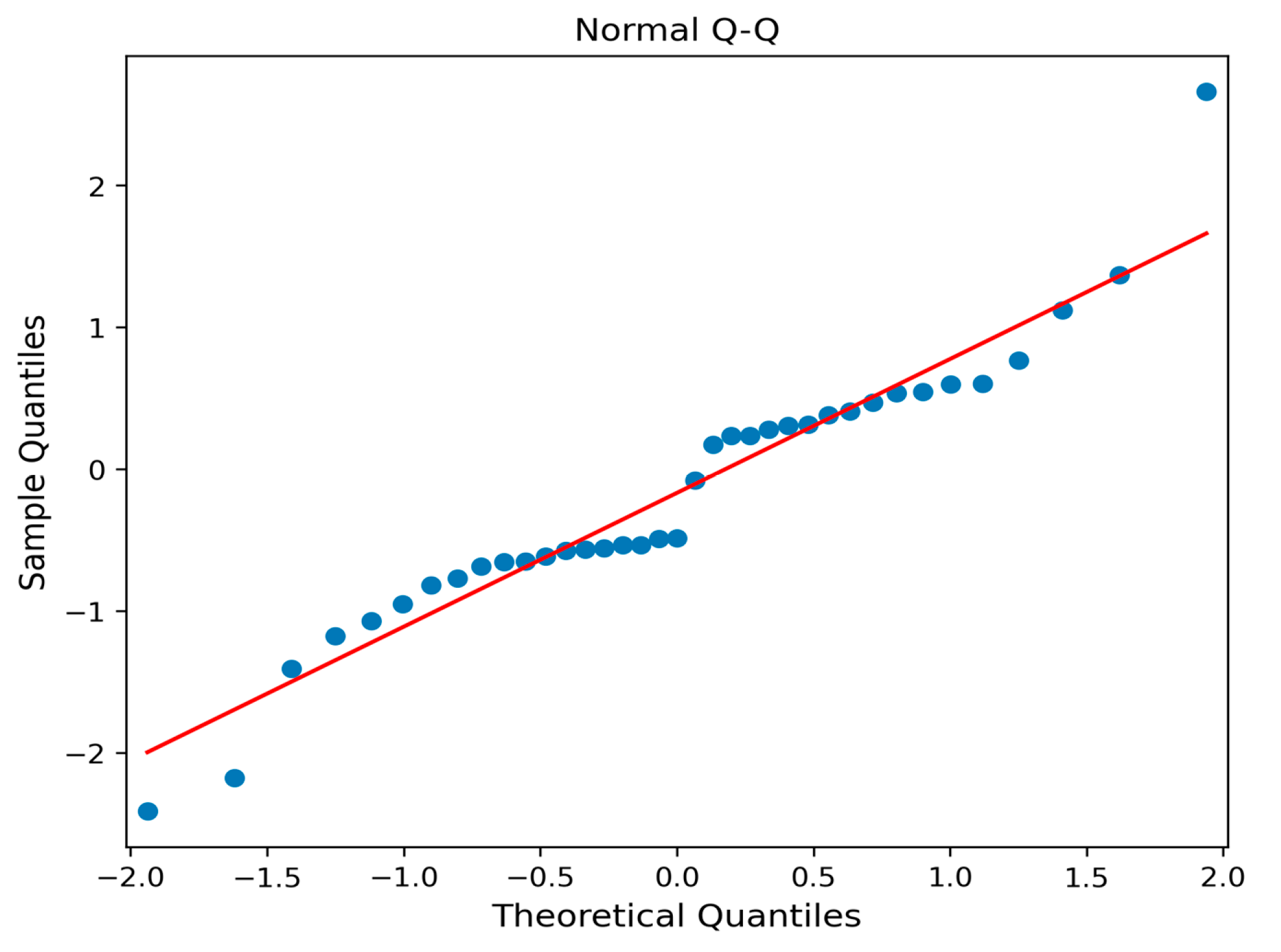

4.3.2. Model Diagnostics

5. Discussion

5.1. Interpretation of Findings

5.2. Strategic Implications and Contributions

5.3. Policy Implications and Societal Applications

- Public Health and Crisis Management: Government health agencies could deploy this framework to monitor public sentiment regarding health policies, vaccination campaigns, or public health emergencies. For example, by tracking an increase in conversations about ‘side effects’ (a ‘quality’ or ‘features’ aspect), policymakers could proactively address public concerns and counter misinformation before it erodes trust in public health initiatives. During a crisis, the tool could serve as an early warning system for rising public anxiety or dissatisfaction with official responses.

- Economic Monitoring and Consumer Protection: Central banks and financial regulators are increasingly interested in high-frequency data to gauge economic conditions. Forecasting consumer sentiment, particularly with respect to aspects like ‘price’ and ‘quality’, can provide a leading indicator of consumer confidence, inflation expectations, and potential shifts in spending behavior. Furthermore, consumer protection agencies could use the framework to detect emerging patterns of complaints about specific products or industries, enabling faster investigations and interventions.

- Improving Public Service Delivery: Government agencies at all levels can use this framework to gather real-time feedback on public services. By analyzing discussions related to aspects like ‘service’ (e.g., ‘long wait times at the DMV’) or ‘quality’ (e.g., ‘the new park is poorly maintained’), local governments can identify and address service delivery failures more efficiently than through traditional surveys, leading to more responsive and effective governance.

- Ethical Guardrails and Responsible Use: The deployment of such technology in a policy context is not without risks. There is a potential for this tool to be used for surveillance or to manipulate public opinion by identifying and targeting persuadable groups. Therefore, any government use of this framework must be bound by strong ethical guidelines, including full transparency, robust data privacy protections, and a commitment to using the insights to improve public welfare rather than to control public discourse.

5.4. Limitations of the Study

- Data Source Singularity and Dynamic Drifts: Our analysis relies exclusively on the Sentiment140 dataset, which consists of tweets from 2009. This introduces several key limitations related to dynamic changes over time. First, public sentiment on Twitter is a proxy for, not a direct measure of, overall consumer sentiment, and its users are not representative of the general population. Second, the platform itself has undergone significant changes. The language, conversational norms, and user behavior on social media have evolved dramatically since 2009. For example, the use of sarcasm, memes, and emojis to convey complex sentiment has become far more prevalent. This ‘concept drift’ means that a model trained on historical data may struggle to interpret contemporary language. Finally, social media platforms continuously update their content recommendations and moderation algorithms. Changes to how tweets are sorted, promoted, or suppressed can alter the visibility of certain types of content, potentially skewing the data stream. Together, these temporal drifts pose a significant challenge for the long-term stability of any social media forecasting model, necessitating periodic retraining and adaptation.

- Predefined Aspects: The set of aspects (price, service, etc.) was manually predefined. This approach may miss emergent or niche topics of discussion that could be valuable predictive signals.

- Model Linearity: The SARIMAX model, while powerful and interpretable, primarily captures linear relationships. The true dynamics of sentiment may involve more complex, non-linear interactions that the model may not fully capture.

- Scope of Data Modality and Language: The current MFSF framework is designed exclusively for English-language text. It does not account for the rich, non-textual data that often accompanies social media posts, such as images, videos, GIFs, or emojis, all of which can be powerful conveyors of sentiment. A comprehensive understanding of public sentiment would ultimately require a multi-modal and multilingual approach.

- Limited Generalizability and Stress Testing: The model’s performance was evaluated on a single, continuous time period from a historical dataset (2009). Its generalizability to other time periods, particularly during major external events or crises (e.g., a financial crisis or a public health emergency), has not been tested. A comprehensive evaluation would require testing the framework’s robustness across diverse and volatile time periods.

5.5. Ethical Considerations and Potential Biases

- Linguistic and Cultural Bias: Our analysis was conducted on an English-language dataset. NLP models trained on this data may not perform equally well on different dialects, slang, or cultural expressions of sentiment. Furthermore, what is considered ‘positive’ or ‘negative’ sentiment can be culturally dependent, and models may fail to capture these nuances, potentially misrepresenting the opinions of certain groups.

- Demographic and Representation Bias: As noted in our limitations, social media users are not a perfect representation of the general population. The opinions captured may over-represent certain age groups, geographic locations, or socioeconomic statuses while under-representing others. Relying solely on this data for major business or policy decisions could therefore perpetuate or even amplify existing inequalities.

- Data Privacy: Although the data used in this study was from a public corpus of tweets, the application of such models in a real-world setting raises significant privacy concerns. Organizations must ensure that any collection and analysis of user data comply with privacy regulations like GDPR and respect user consent. The potential for de-anonymizing individuals from aggregated data, however small, must be carefully managed through robust anonymization and data protection protocols.

- Potential for Manipulation: Forecasting public opinion also introduces the risk of its manipulation. Malicious actors could use such models to identify contentious topics and inject targeted misinformation to sway public discourse or to create artificial sentiment trends. The ethical deployment of these models requires robust safeguards and a commitment to transparency to mitigate the risk of such misuse.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ABSA | Aspect-Based Sentiment Analysis |
| ARIMA | Autoregressive Integrated Moving Average |
| BART | Bidirectional and Auto-Regressive Transformers |
| BERT | Bidirectional Encoder Representations from Transformers |
| LDA | Latent Dirichlet Allocation |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MFSF | Multi-Feature Sentiment-Driven Forecasting |
| NLP | Natural Language Processing |
| PCA | Principal Component Analysis |
| RMSE | Root Mean Squared Error |
| SARIMAX | Seasonal AutoRegressive Integrated Moving Average with eXogenous variables |

| Model | Description | RMSE | MAE |
|---|---|---|---|
| ARIMA (Baseline) | Univariate; uses only past sentiment data. | 0.3677 | 0.3239 |
| SARIMAX (Proposed) | Multivariate; includes exogenous features (e.g., tweet volume and topic embeddings). | 0.2697 | 0.2249 |
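To make the size of the difference between the two rows concrete, the sketch below computes the relative error reduction of the proposed model over the baseline. The RMSE and MAE values are copied from the table; the percentages are derived here for illustration and are not figures reported elsewhere in this text. The helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(baseline, proposed):
    """Fraction by which the proposed model's error is lower than the baseline's."""
    return (baseline - proposed) / baseline


# Error values taken from the comparison table above.
rmse_gain = relative_reduction(0.3677, 0.2697)
mae_gain = relative_reduction(0.3239, 0.2249)

print(f"RMSE reduction: {rmse_gain:.1%}")  # about 26.7%
print(f"MAE reduction:  {mae_gain:.1%}")   # about 30.6%
```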

| Feature | Coefficient | Std. Error | p-Value | 95% Confidence Interval | Significance |
|---|---|---|---|---|---|
| service | 0.2481 | 0.035 | 0.000 | [0.179, 0.317] | Significant (p < 0.05) |
| quality | 0.3109 | 0.029 | 0.000 | [0.254, 0.368] | Significant (p < 0.05) |
| Tweet_Count | 0.0001 | 0.000 | 0.315 | [−0.0001, 0.0003] | Not Significant |
| topic_x | −0.0154 | 0.031 | 0.620 | [−0.076, 0.045] | Not Significant |
| topic_y | 0.0089 | 0.025 | 0.721 | [−0.040, 0.058] | Not Significant |
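The intervals in the table are consistent with the usual normal-approximation (Wald) interval, coefficient ± 1.96 × standard error. The sketch below recomputes them as a consistency check, under the assumption that this is how they were produced. Tweet_Count is omitted because its standard error is reported only as the rounded value 0.000. The function name `wald_interval` is hypothetical.

```python
Z_95 = 1.96  # two-sided 95% critical value of the standard normal distribution


def wald_interval(coef, std_err, z=Z_95):
    """Normal-approximation confidence interval: coef +/- z * std_err."""
    half_width = z * std_err
    return coef - half_width, coef + half_width


# (coefficient, standard error, reported interval) copied from the table above.
reported = {
    "service": (0.2481, 0.035, (0.179, 0.317)),
    "quality": (0.3109, 0.029, (0.254, 0.368)),
    "topic_x": (-0.0154, 0.031, (-0.076, 0.045)),
    "topic_y": (0.0089, 0.025, (-0.040, 0.058)),
}

results = {}
for name, (coef, se, (rep_lo, rep_hi)) in reported.items():
    lo, hi = wald_interval(coef, se)
    # Allow for rounding of the tabulated values to three decimals.
    agrees = abs(lo - rep_lo) < 1e-3 and abs(hi - rep_hi) < 1e-3
    # An interval that excludes zero corresponds to significance at the 5% level.
    excludes_zero = lo > 0 or hi < 0
    results[name] = (agrees, excludes_zero)
    print(f"{name:8s} [{lo:.3f}, {hi:.3f}] agrees={agrees} excludes_zero={excludes_zero}")
```

Under this reading, the intervals for service and quality exclude zero while those for the two topic components include it, matching the Significance column.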

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sattar, M.U.; Hasan, R.; Palaniappan, S.; Mahmood, S.; Khan, H.W. Beyond Polarity: Forecasting Consumer Sentiment with Aspect- and Topic-Conditioned Time Series Models. Information 2025, 16, 670. https://doi.org/10.3390/info16080670