AI in Maritime Security: Applications, Challenges, Future Directions, and Key Data Sources

Abstract

1. Introduction

1.1. Maritime Security Threats and Illegal Activities

1.1.1. Illegal Migration and Border Crossings

1.1.2. Drug Trafficking and Human Smuggling

1.1.3. Illegal Fishing

1.1.4. Environmental Threats and Marine Pollution

1.2. Limitations of Traditional Surveillance Approaches

- Coverage Gaps: Traditional surveillance systems offer limited coverage, particularly in the deep sea where patrolling infrastructure is insufficient.

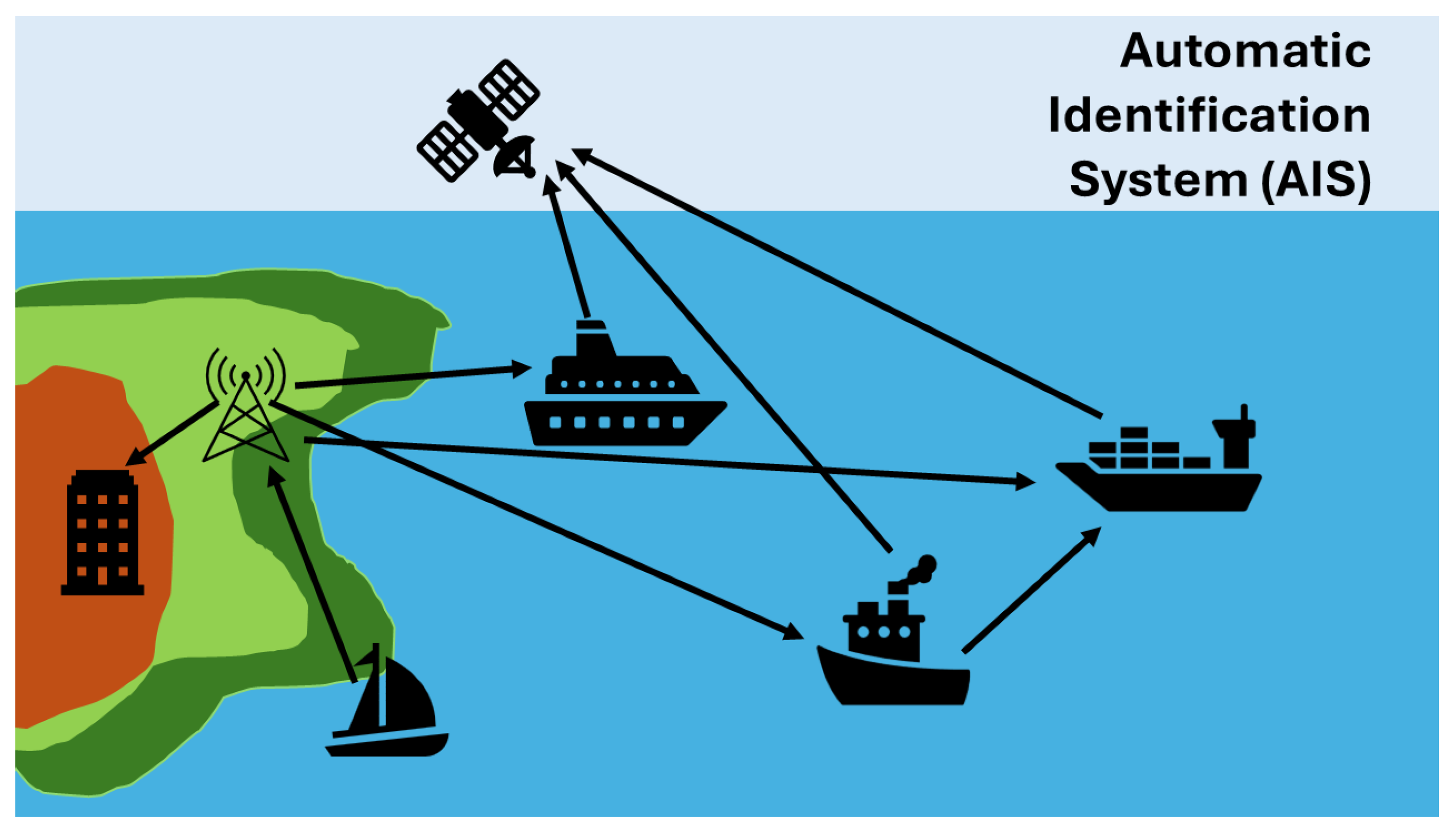

- Evasion Tactics: Organised criminal groups use technology to manipulate AIS data, operate without transponders, or exploit blind spots in satellite coverage.

- Data Overload and Latency: Sea-based sensors and satellite systems generate data at a volume and speed that human operators cannot process, so responses to threats are delayed or the threats are missed entirely.
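
One of the evasion tactics listed above, operating without a transponder, typically appears in AIS data as a reporting gap. The following is a minimal sketch of how such gaps might be flagged automatically. It assumes timestamps in seconds; the function name `find_ais_gaps` and the one-hour threshold are hypothetical choices for illustration, not values taken from the paper.

```python
from typing import List, Tuple


def find_ais_gaps(
    timestamps: List[float], max_gap_s: float = 3600.0
) -> List[Tuple[float, float]]:
    """Return (start, end) pairs where consecutive AIS messages
    are more than max_gap_s seconds apart.

    max_gap_s is an illustrative threshold (assumption), not a
    standard value.
    """
    ordered = sorted(timestamps)
    gaps = []
    # Compare each message time with the one that follows it.
    for prev, curr in zip(ordered, ordered[1:]):
        if curr - prev > max_gap_s:
            gaps.append((prev, curr))
    return gaps


# Hypothetical track: regular 10-minute reports with one long silence.
pings = [0, 600, 1200, 9000, 9600]
print(find_ais_gaps(pings))  # [(1200, 9000)]
```

A gap alone does not prove evasion, since coverage loss and equipment faults produce the same pattern, so a flag of this kind would normally feed a further check rather than trigger an alert directly.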

1.3. Scope and Objectives of the Paper

2. Deep Learning for Maritime Object Detection and Tracking

2.1. Vessel Detection

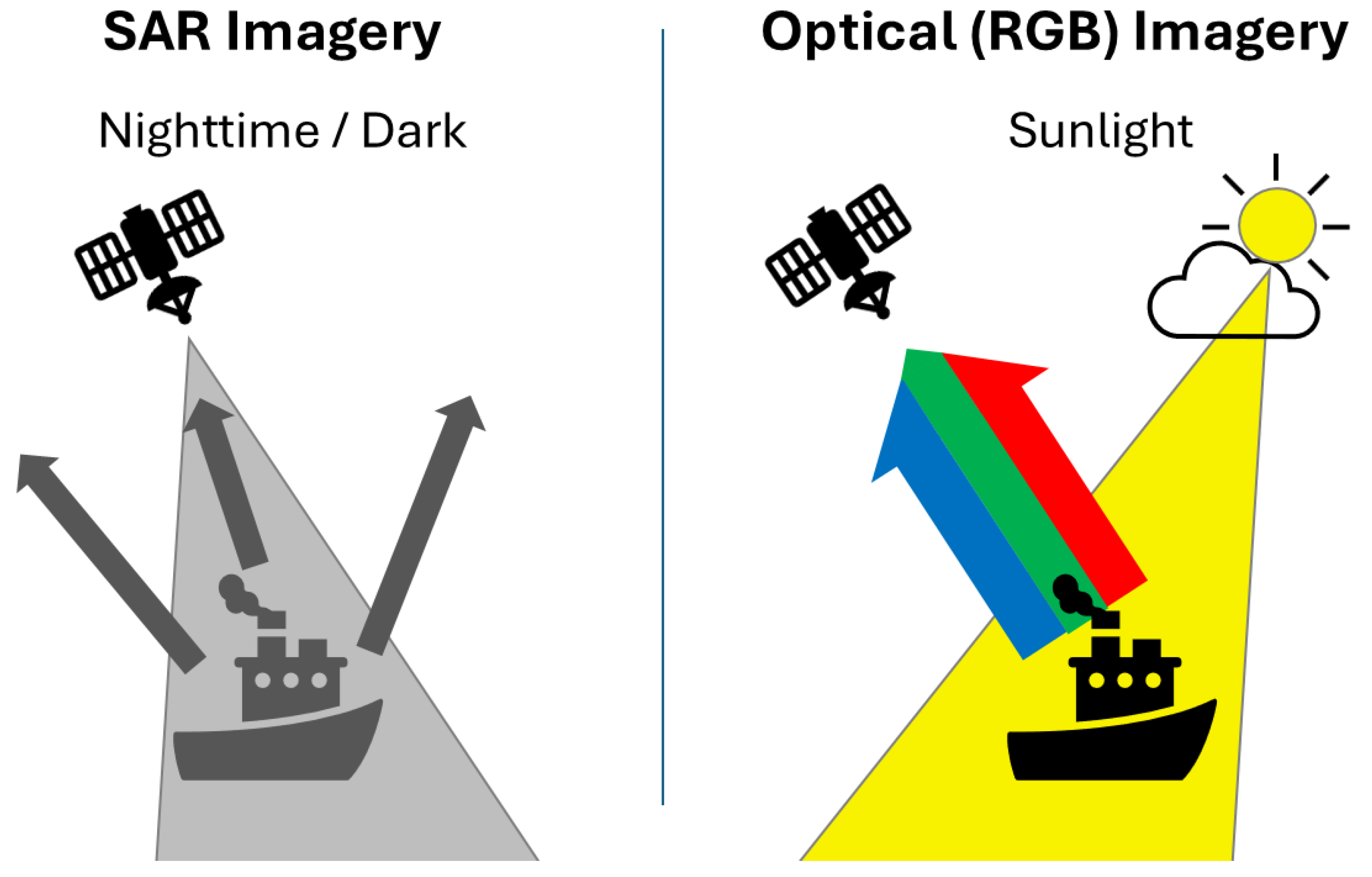

2.1.1. Satellite Imagery

2.1.2. Aerial Imagery

2.1.3. Surface Imagery

2.1.4. Radar Data

2.1.5. AIS Data

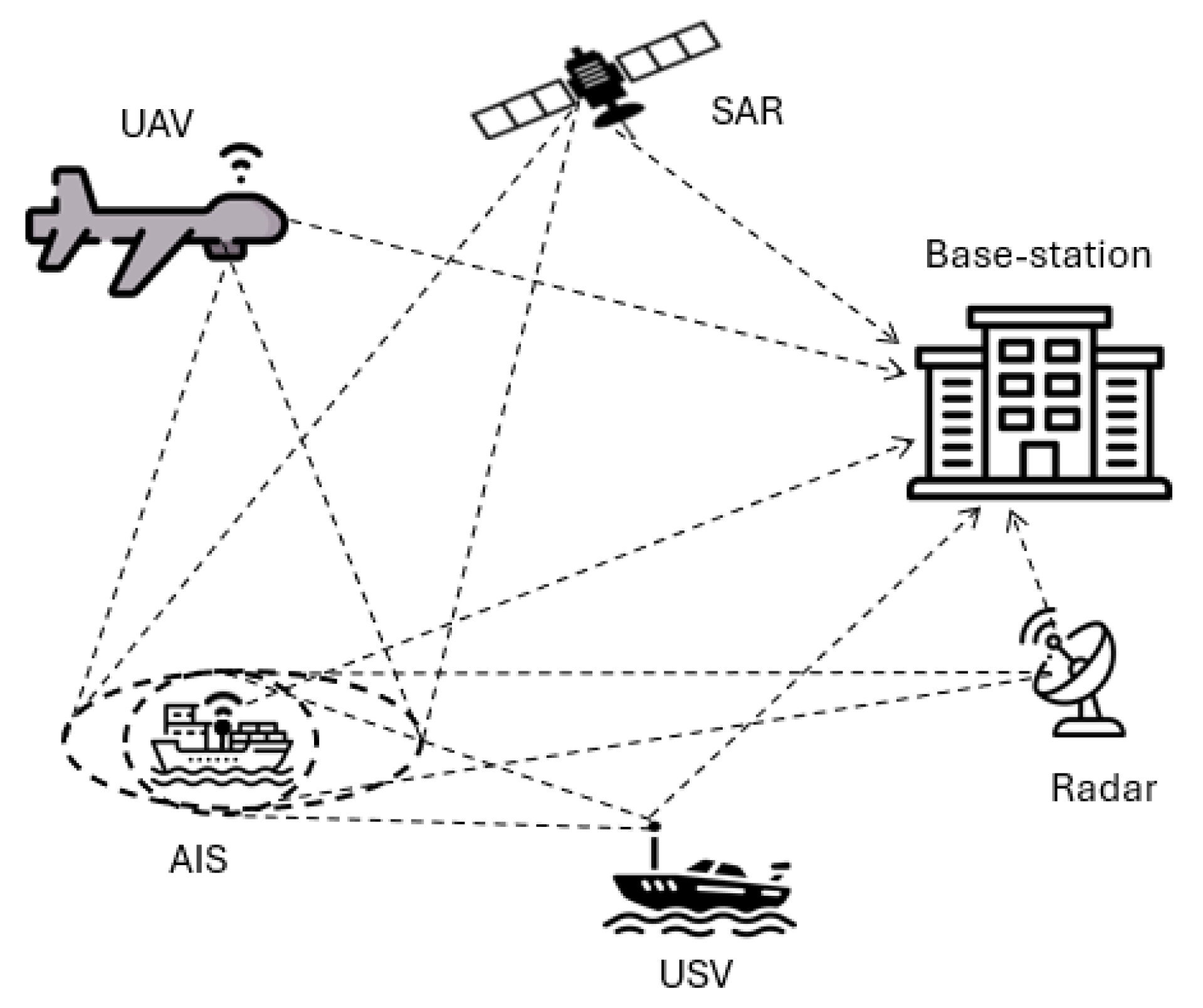

2.1.6. Integration of Data from Different Sources

2.1.7. Challenges in Vessel Detection

2.2. Anomaly Detection in Vessel Behaviour

2.2.1. Analysing AIS Data for Unusual Patterns

2.2.2. Combining AIS with Contextual Information

2.2.3. Using Sequence-Based Models

3. Deep Learning for Maritime Surveillance and Situational Awareness

3.1. Maritime Image and Video Analysis

3.1.1. Event and Activity Recognition

3.1.2. Scene Understanding and Context

3.1.3. Video Surveillance for Tracking and Anomaly

3.2. Fusion of Multi-Sensor Data

3.2.1. Fusion Architecture

3.2.2. Sensor-Specific Examples

3.3. Maritime Domain Awareness Systems Using Deep Learning

Decision Support and Visualisation

4. Deep Learning for Specific Maritime Security Applications

4.1. Illegal Fishing Detection

4.2. Piracy and Armed Robbery Prevention

4.3. Smuggling and Trafficking Detection

4.4. Maritime Environmental Monitoring

4.5. Safety, Search and Rescue Operations

5. Key Sources for Deep Learning in Maritime Domain

6. Challenges and Future Directions

7. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- European External Action Service. Maritime Security. 2025. Available online: https://www.eeas.europa.eu/marsec25-eu-maritime-security_en (accessed on 24 June 2025).

- Kowalski, M.; Pałka, N.; Młyńczak, J.; Karol, M.; Czerwińska, E.; Życzkowski, M.; Ciurapiński, W.; Zawadzki, Z.; Brawata, S. Detection of inflatable boats and people in thermal infrared with deep learning methods. Sensors 2021, 21, 5330.

- Galdelli, A.; Narang, G.; Pietrini, R.; Zazzarini, M.; Fiorani, A.; Tassetti, A.N. Multimodal AI-enhanced ship detection for mapping fishing vessels and informing on suspicious activities. Pattern Recognit. Lett. 2025, 191, 15–22.

- Guan, Y.; Zhang, X.; Chen, S.; Liu, G.; Jia, Y.; Zhang, Y.; Gao, G.; Zhang, J.; Li, Z.; Cao, C. Fishing vessel classification in SAR images using a novel deep learning model. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5215821.

- Ventikos, N.P.; Koimtzoglou, A.; Michelis, A.; Stouraiti, A.; Kopsacheilis, I.; Podimatas, V. A Bayesian network-based tool for crisis classification in piracy or armed robbery incidents on passenger ships. Proc. Inst. Mech. Eng. Part M J. Eng. Marit. Environ. 2024, 238, 251–261.

- Trujillo-Acatitla, R.; Tuxpan-Vargas, J.; Ovando-Vázquez, C.; Monterrubio-Martínez, E. Marine oil spill detection and segmentation in SAR data with two steps deep learning framework. Mar. Pollut. Bull. 2024, 204, 116549.

- Gamage, C.; Dinalankara, R.; Samarabandu, J.; Subasinghe, A. A comprehensive survey on the applications of machine learning techniques on maritime surveillance to detect abnormal maritime vessel behaviors. WMU J. Marit. Aff. 2023, 22, 447–477.

- Bentes, C.; Velotto, D.; Tings, B. Ship classification in TerraSAR-X images with convolutional neural networks. IEEE J. Ocean. Eng. 2017, 43, 258–266.

- Wang, S.; Kim, B. Scale-Sensitive Attention for Multi-Scale Maritime Vessel Detection Using EO/IR Cameras. Appl. Sci. 2024, 14, 11604.

- Jiang, X.; Liu, T.; Song, T.; Cen, Q. Optimized Marine Target Detection in Remote Sensing Images with Attention Mechanism and Multi-Scale Feature Fusion. Information 2025, 16, 332.

- Mujtaba, D.F.; Mahapatra, N.R. Deep Learning for Spatiotemporal Modeling of Illegal, Unreported, and Unregulated Fishing Events. In Proceedings of the 2022 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 14–16 December 2022; pp. 423–425.

- Yang, D.; Solihin, M.I.; Ardiyanto, I.; Zhao, Y.; Li, W.; Cai, B.; Chen, C. A streamlined approach for intelligent ship object detection using EL-YOLO algorithm. Sci. Rep. 2024, 14, 15254.

- Karst, J.; McGurrin, R.; Gavin, K.; Luttrell, J.; Rippy, W.; Coniglione, R.; McKenna, J.; Riedel, R. Enhancing Maritime Domain Awareness Through AI-Enabled Acoustic Buoys for Real-Time Detection and Tracking of Fast-Moving Vessels. Sensors 2025, 25, 1930.

- Yan, H.; Chen, C.; Jin, G.; Zhang, J.; Wang, X.; Zhu, D. Implementation of a Modified Faster R-CNN for Target Detection Technology of Coastal Defense Radar. Remote Sens. 2021, 13, 1703.

- Hu, H.; Zhou, W.; Jiang, B.; Zhang, J.; Cheng, T. Exploring deep learning techniques for the extraction of lit fishing vessels from Luojia1-01. Ecol. Indic. 2024, 159, 111682.

- Ding, J.; Li, W.; Pei, L.; Yang, M.; Ye, C.; Yuan, B. Sw-YoloX: An anchor-free detector based transformer for sea surface object detection. Expert Syst. Appl. 2023, 217, 119560.

- Walsh, P.W.; Cuibus, M.V. People Crossing the English Channel in Small Boats; Briefing, Migration Observatory, University of Oxford: Oxford, UK, 2025.

- Government of Canada. Illegal, Unreported and Unregulated (IUU) Fishing; Government of Canada: Ottawa, ON, Canada, 2019.

- Cheng, X.; Wang, J.; Chen, X.; Zhang, F. Attention-enhanced and integrated deep learning approach for fishing vessel classification based on multiple features. Sci. Rep. 2025, 15, 8642.

- Burgherr, P. In-depth analysis of accidental oil spills from tankers in the context of global spill trends from all sources. J. Hazard. Mater. 2007, 140, 245–256.

- Bui, N.A.; Oh, Y.; Lee, I. Oil spill detection and classification through deep learning and tailored data augmentation. Int. J. Appl. Earth Obs. Geoinf. 2024, 129, 103845.

- Qu, J.; Gao, Y.; Lu, Y.; Xu, W.; Liu, R.W. Deep learning-driven surveillance quality enhancement for maritime management promotion under low-visibility weathers. Ocean Coast. Manag. 2023, 235, 106478.

- Baswaid, M.H.; Darir, F.F.F.; Qin, C.Y.; Sofian, A.P.; Amin, N. Deep Learning-Based Ship Detection: Enhancing Maritime Surveillance with Convolutional Neural Networks. Preprints 2025.

- Dimitrov, T. Applying Artificial Intelligence for improving Situational awareness and Threat monitoring at sea as key factor for success in Naval operation. In Proceedings of the Environment, Technologies, Resources, Proceedings of the International Scientific and Practical Conference, Rezekne, Latvia, 27–28 June 2024; Volume 4, pp. 49–55.

- Kanjir, U.; Greidanus, H.; Oštir, K. Vessel detection and classification from spaceborne optical images: A literature survey. Remote Sens. Environ. 2018, 207, 1–26.

- Ahmed, M.; El-Sheimy, N.; Leung, H. Dual-Modal Approach for Ship Detection: Fusing Synthetic Aperture Radar and Optical Satellite Imagery. Sensors 2025, 25, 329.

- Jeon, I.; Ham, S.; Cheon, J.; Klimkowska, A.M.; Kim, H.; Choi, K.; Lee, I. A real-time drone mapping platform for marine surveillance. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 385–391.

- Park, J.J.; Park, K.A.; Kim, T.S.; Oh, S.; Lee, M. Aerial hyperspectral remote sensing detection for maritime search and surveillance of floating small objects. Adv. Space Res. 2023, 72, 2118–2136.

- Zhang, Z.; Lu, X.; Cao, G.; Yang, Y.; Jiao, L.; Liu, F. ViT-YOLO: Transformer-based YOLO for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2799–2808.

- PhiliP-Kpae, F.O.; Ogbondamati, L.E.; Ebri, K.F.E. Evaluating Marine Radar Object Detection System Using Yolo-Based Deep Learning Algorithm. Direct Res. J. Eng. Inf. Technol. 2025, 13, 7–15.

- International Maritime Organization. Automatic Identification Systems (AIS) Transponders. 2025. Available online: https://www.imo.org/en/OurWork/Safety/Pages/AIS.aspx (accessed on 9 June 2025).

- Murray, B.; Perera, L.P. An AIS-based deep learning framework for regional ship behavior prediction. Reliab. Eng. Syst. Saf. 2021, 215, 107819.

- V7 Labs. Multimodal Deep Learning: Definition, Examples, Applications. 2025. Available online: https://www.v7labs.com/blog/multimodal-deep-learning-guide (accessed on 9 June 2025).

- Zhang, Q.; Wang, L.; Meng, H.; Zhang, Z.; Yang, C. Ship Detection in Maritime Scenes under Adverse Weather Conditions. Remote Sens. 2024, 16, 1567.

- Chen, X.; Wei, C.; Xin, Z.; Zhao, J.; Xian, J. Ship Detection under Low-Visibility Weather Interference via an Ensemble Generative Adversarial Network. J. Mar. Sci. Eng. 2023, 11, 2065.

- Riveiro, M.J. Visual Analytics for Maritime Anomaly Detection. Ph.D. Thesis, Örebro Universitet, Örebro, Sweden, 2011.

- Riveiro, M.; Pallotta, G.; Vespe, M. Maritime anomaly detection: A review. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1266.

- Nguyen, D.; Vadaine, R.; Hajduch, G.; Garello, R.; Fablet, R. GeoTrackNet—A maritime anomaly detector using probabilistic neural network representation of AIS tracks and a contrario detection. IEEE Trans. Intell. Transp. Syst. 2021, 23, 5655–5667.

- Pradipta, G.A.; Wardoyo, R.; Musdholifah, A.; Sanjaya, I.N.H.; Ismail, M. SMOTE for Handling Imbalanced Data Problem: A Review. In Proceedings of the 2021 Sixth International Conference on Informatics and Computing (ICIC), Virtual Conference, 3–4 November 2021; pp. 1–8.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation Forest. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422.

- González-Muñiz, A.; Díaz, I.; Cuadrado, A.A.; García-Pérez, D.; Pérez, D. Two-step residual-error based approach for anomaly detection in engineering systems using variational autoencoders. Comput. Electr. Eng. 2022, 101, 108065.

- Wijaya, W.M.; Nakamura, Y. Loitering behavior detection by spatiotemporal characteristics quantification based on the dynamic features of Automatic Identification System (AIS) messages. PeerJ Comput. Sci. 2023, 9, e1572.

- Duan, H.; Ma, F.; Miao, L.; Zhang, C. A semi-supervised deep learning approach for vessel trajectory classification based on AIS data. Ocean Coast. Manag. 2022, 218, 106015.

- Maganaris, C.; Protopapadakis, E.; Doulamis, N. Outlier detection in maritime environments using AIS data and deep recurrent architectures. In Proceedings of the 17th International Conference on PErvasive Technologies Related to Assistive Environments, Crete, Greece, 26–28 June 2024; pp. 420–427.

- Rong, H.; Teixeira, A.; Guedes Soares, C. A framework for ship abnormal behaviour detection and classification using AIS data. Reliab. Eng. Syst. Saf. 2024, 247, 110105.

- Wolsing, K.; Roepert, L.; Bauer, J.; Wehrle, K. Anomaly Detection in Maritime AIS Tracks: A Review of Recent Approaches. J. Mar. Sci. Eng. 2022, 10, 112.

- Minßen, F.M.; Klemm, J.; Steidel, M.; Niemi, A. Predicting Vessel Tracks in Waterways for Maritime Anomaly Detection. Trans. Marit. Sci. 2024, 13.

- Martinčič, T.; Štepec, D.; Costa, J.P.; Čagran, K.; Chaldeakis, A. Vessel and Port Efficiency Metrics through Validated AIS data. In Proceedings of the Global Oceans 2020: Singapore–U.S. Gulf Coast, Virtual, 5–14 October 2020; pp. 1–6.

- Radon, A.N.; Wang, K.; Glässer, U.; Wehn, H.; Westwell-Roper, A. Contextual verification for false alarm reduction in maritime anomaly detection. In Proceedings of the 2015 IEEE International Conference on Big Data (Big Data), Santa Clara, CA, USA, 29 October–1 November 2015; pp. 1123–1133.

- Su, L.; Zuo, X.; Li, R.; Wang, X.; Zhao, H.; Huang, B. A systematic review for transformer-based long-term series forecasting. Artif. Intell. Rev. 2025, 58, 80.

- Capobianco, S.; Millefiori, L.M.; Forti, N.; Braca, P.; Willett, P. Deep learning methods for vessel trajectory prediction based on recurrent neural networks. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 4329–4346.

- Petković, M. Enhancing Maritime Video Surveillance Trough Deep Learning and Hybrid Distance Estimation. Ph.D. Thesis, University of Split, Split, Croatia, 2024.

- Seong, N.; Kim, J.; Lim, S. Graph-Based Anomaly Detection of Ship Movements Using CCTV Videos. J. Mar. Sci. Eng. 2023, 11, 1956.

- Xue, H.; Chen, X.; Zhang, R.; Wu, P.; Li, X.; Liu, Y. Deep learning-based maritime environment segmentation for unmanned surface vehicles using superpixel algorithms. J. Mar. Sci. Eng. 2021, 9, 1329.

- Bilous, N.; Malko, V.; Frohme, M.; Nechyporenko, A. Comparison of CNN-Based Architectures for Detection of Different Object Classes. AI 2024, 5, 2300–2320.

- Matasci, G.; Plante, J.; Kasa, K.; Mousavi, P.; Stewart, A.; Macdonald, A.; Webster, A.; Busler, J. Deep learning for vessel detection and identification from spaceborne optical imagery. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 3, 303–310.

- Huang, Y.; Han, D.; Han, B.; Wu, Z. ADV-YOLO: Improved SAR ship detection model based on YOLOv8. J. Supercomput. 2025, 81, 34.

- Li, X. Ship segmentation via combined attention mechanism and efficient channel attention high-resolution representation network. J. Mar. Sci. Eng. 2024, 12, 1411.

- Xing, Z.; Ren, J.; Fan, X.; Zhang, Y. S-DETR: A transformer model for real-time detection of marine ships. J. Mar. Sci. Eng. 2023, 11, 696.

- Guo, L.; Wang, Y.; Guo, M.; Zhou, X. YOLO-IRS: Infrared Ship Detection Algorithm Based on Self-Attention Mechanism and KAN in Complex Marine Background. Remote Sens. 2024, 17, 20.

- Farahnakian, F.; Heikkonen, J. Deep learning based multi-modal fusion architectures for maritime vessel detection. Remote Sens. 2020, 12, 2509.

- Kalliovaara, J.; Jokela, T.; Asadi, M.; Majd, A.; Hallio, J.; Auranen, J.; Seppänen, M.; Putkonen, A.; Koskinen, J.; Tuomola, T.; et al. Deep learning test platform for maritime applications: Development of the em/s salama unmanned surface vessel and its remote operations center for sensor data collection and algorithm development. Remote Sens. 2024, 16, 1545.

- Lu, Y.; Yang, K.; Yang, D.; Ding, H.; Weng, J.; Liu, R.W. Graph Learning-Driven Multi-Vessel Association: Fusing Multimodal Data for Maritime Intelligence. arXiv 2025, arXiv:2504.09197.

- Lu, Y.; Ma, H.; Smart, E.; Vuksanovic, B.; Chiverton, J.; Prabhu, S.R.; Glaister, M.; Dunston, E.; Hancock, C. Fusion of camera-based vessel detection and ais for maritime surveillance. In Proceedings of the 2021 26th International Conference on Automation and Computing (ICAC), Portsmouth, UK, 2–4 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

- MIT Sea Grant Autonomous Underwater Vehicles Lab. AUV Lab – Marine Perception Datasets (AUVLab). 2022. Available online: https://seagrant.mit.edu/auvlab-datasets-marine-perception-2-3/ (accessed on 9 June 2025).

- Protopapadakis, E.; Voulodimos, A.; Doulamis, A.; Doulamis, N.; Dres, D.; Bimpas, M. Stacked autoencoders for outlier detection in over-the-horizon radar signals. Comput. Intell. Neurosci. 2017, 2017, 5891417.

- Yao, S.; Guan, R.; Wu, Z.; Ni, Y.; Huang, Z.; Liu, R.W.; Yue, Y.; Ding, W.; Lim, E.G.; Seo, H.; et al. Waterscenes: A multi-task 4d radar-camera fusion dataset and benchmarks for autonomous driving on water surfaces. IEEE Trans. Intell. Transp. Syst. 2024, 25, 16584–16598.

- Varga, M.; Liggett, K.K.; Bivall, P.; Lavigne, V. Exploratory Visual Analytics (STO-TR-IST-141); Technical Report; NATO: Brussels, Belgium, 2023.

- FAO. The State of World Fisheries and Aquaculture 2002; Food and Agriculture Organization of the United Nations: Rome, Italy, 2020.

- Jin, M.; Shi, W.; Lin, K.C.; Li, K.X. Marine piracy prediction and prevention: Policy implications. Mar. Policy 2019, 108, 103528.

- Li, H.; Yang, Z. Towards safe navigation environment: The imminent role of spatio-temporal pattern mining in maritime piracy incidents analysis. Reliab. Eng. Syst. Saf. 2023, 238, 109422.

- Fahreza, M.I.; Hirata, E. Maritime piracy and armed robbery analysis in the Straits of Malacca and Singapore through the utilization of natural language processing. Marit. Policy Manag. 2024, 52, 709–722.

- Hu, Z.; Sun, Y.; Zhao, Y.; Wu, W.; Gu, Y.; Chen, K. Msif-Sstr: A Ship Smuggling Trajectory Recognition Method Based on Multi-Source Information Fusion. Appl. Ocean. Res. 2025.

- Sun, Z.; Yang, Q.; Yan, N.; Chen, S.; Zhu, J.; Zhao, J.; Sun, S. Utilizing deep learning algorithms for automated oil spill detection in medium resolution optical imagery. Mar. Pollut. Bull. 2024, 206, 116777.

- Deo, R.; John, C.M.; Zhang, C.; Whitton, K.; Salles, T.; Webster, J.M.; Chandra, R. Deepdive: Leveraging Pre-trained Deep Learning for Deep-Sea ROV Biota Identification in the Great Barrier Reef. Sci. Data 2024, 11, 957.

- Taipalmaa, J.; Raitoharju, J.; Queralta, J.P.; Westerlund, T.; Gabbouj, M. On automatic person-in-water detection for marine search and rescue operations. IEEE Access 2024, 12, 52428–52438.

- Wang, S.; Han, Y.; Chen, J.; Zhang, Z.; Wang, G.; Du, N. A deep-learning-based sea search and rescue algorithm by UAV remote sensing. In Proceedings of the 2018 IEEE CSAA Guidance, Navigation and Control Conference (CGNCC), Xiamen, China, 10–12 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5.

- Moosbauer, S.; Konig, D.; Jakel, J.; Teutsch, M. A benchmark for deep learning based object detection in maritime environments. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Kiefer, B.; Kristan, M.; Perš, J.; Žust, L.; Poiesi, F.; Andrade, F.; Bernardino, A.; Dawkins, M.; Raitoharju, J.; Quan, Y.; et al. 1st workshop on maritime computer vision (macvi) 2023: Challenge results. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 265–302.

- AISHub. AIS Data Sharing and Vessel Tracking by AISHub. 2025. Available online: https://www.aishub.net/ (accessed on 27 June 2025).

- Al-Saad, M.; Aburaed, N.; Panthakkan, A.; Al Mansoori, S.; Al Ahmad, H.; Marshall, S. Airbus ship detection from satellite imagery using frequency domain learning. In Proceedings of the Image and Signal Processing for Remote Sensing XXVII, Online, 13–18 September 2021; SPIE: Bellingham, WA, USA, 2021; Volume 11862, pp. 279–285.

- Shao, Z.; Wu, W.; Wang, Z.; Du, W.; Li, C. Seaships: A large-scale precisely annotated dataset for ship detection. IEEE Trans. Multimed. 2018, 20, 2593–2604.

- Huang, W.; Feng, H.; Xu, H.; Liu, X.; He, J.; Gan, L.; Wang, X.; Wang, S. Surface Vessels Detection and Tracking Method and Datasets with Multi-Source Data Fusion in Real-World Complex Scenarios. Sensors 2025, 25, 2179.

- Nanda, A.; Cho, S.W.; Lee, H.; Park, J.H. KOLOMVERSE: Korea Open Large-Scale Image Dataset for Object Detection in the Maritime Universe. IEEE Trans. Intell. Transp. Syst. 2024, 25, 20832–20840.

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 2020, 8, 120234–120254.

- Chen, S.Q.; Zhan, R.H.; Zhang, J. Robust single stage detector based on two-stage regression for SAR ship detection. In Proceedings of the 2nd International Conference on Innovation in Artificial Intelligence, London, UK, 26 July 2018; pp. 169–174.

- Watson, R.A. A database of global marine commercial, small-scale, illegal and unreported fisheries catch 1950–2014. Sci. Data 2017, 4, 170039.

- Blondeau-Patissier, D.; Schroeder, T.; Suresh, G.; Li, Z.; Diakogiannis, F.I.; Irving, P.; Witte, C.; Steven, A.D. Detection of marine oil-like features in Sentinel-1 SAR images by supplementary use of deep learning and empirical methods: Performance assessment for the Great Barrier Reef marine park. Mar. Pollut. Bull. 2023, 188, 114598.

- Lou, X.; Liu, Y.; Xiong, Z.; Wang, H. Generative knowledge transfer for ship detection in SAR images. Comput. Electr. Eng. 2022, 101, 108041.

- Martinez-Esteso, J.P.; Castellanos, F.J.; Rosello, A.; Calvo-Zaragoza, J.; Gallego, A.J. On the use of synthetic data for body detection in maritime search and rescue operations. Eng. Appl. Artif. Intell. 2025, 139, 109586.

- Zhao, T.; Wang, Y.; Li, Z.; Gao, Y.; Chen, C.; Feng, H.; Zhao, Z. Ship detection with deep learning in optical remote-sensing images: A survey of challenges and advances. Remote Sens. 2024, 16, 1145.

- Guo, Y.; Zhou, L. MEA-Net: A lightweight SAR ship detection model for imbalanced datasets. Remote Sens. 2022, 14, 4438.

- Giannopoulos, A.; Gkonis, P.; Bithas, P.; Nomikos, N.; Kalafatelis, A.; Trakadas, P. Federated learning for maritime environments: Use cases, experimental results, and open issues. J. Mar. Sci. Eng. 2024, 12, 1034.

| Model | Architecture Type | Application | Strengths | Weaknesses | Latency/Comp. Cost | Operational Context/Key Limitation | Reported Performance |
|---|---|---|---|---|---|---|---|
| YOLO (v4–v8) [55] | CNN (single-shot, anchor-based) | Ship/Object Detection | Very fast inference; high accuracy; real-time capable. | Anchor design requires tuning; may miss very small objects. | Low (e.g., 20–50 FPS on modern GPUs). Suitable for real-time edge deployment on USVs or drones. | Performance degrades with heavy sun glare or reflective water, which can obscure small vessel outlines. Not ideal for highly cluttered port scenes without fine-tuning. | mAP = ∼, F1 = ≈ (on general object benchmarks) |
| RetinaNet [56] | CNN (one-stage, FPN) | Ship/Object Detection | Handles class imbalance with focal loss; high detection accuracy. | Comparatively heavy; slower than YOLO; may struggle on tiny targets. | Medium. Slower than pure single-stage detectors; may not be suitable for high-frame-rate video surveillance without powerful hardware. | Focal loss is effective for the data imbalance of rare vessel types (e.g., specific illegal fishing boats), but is still challenged by severe weather or high sea states, which can obscure small targets. | F1 = ≈ on multiscale spaceborne dataset |
| CNN-MR [8] | CNN (multi-resolution input) | SAR Ship Classification | Utilizes multi-scale SAR inputs for richer features; excellent classification. | Requires multi-resolution SAR data; more complex input. | High. Processing and fusing multi-resolution SAR is computationally intensive and not suited for real-time edge applications. | Specialized for SAR imagery; invaluable for all-weather, night-time surveillance where optical sensors fail. Not applicable to standard optical/IR data streams. | F1 = 0.94 |
| EL-YOLO [12] | CNN (YOLOv8 variant) | Ship/Object Detection (RGB) | Lightweight YOLOv8 variant; improved bounding box regression (AWIoU, SMFN); better small object performance. | Still CNN-heavy; many components to tune. | Low to Medium. Optimized for a reduced footprint suitable for edge devices, but added components can be more demanding than the simplest YOLO variants. | Specifically tuned for maritime scenes with many small vessels. As an RGB model, its performance is entirely dependent on good visibility and lighting conditions. | = 0.672, = 0.348 on SeaShips (significant gain over YOLOv3-tiny) |
| ADV-YOLO [57] | CNN (YOLOv8 variant) | SAR Ship Detection | Enhanced for SAR: space-to-depth and dilation modules; uses WIoU loss. | May be heavyweight; specialised to SAR imagery. | High. The specialized modules add significant computational overhead compared to a baseline YOLOv8. | A highly specialized model designed to extract better features from SAR images. Excellent for overcoming adverse weather, but not general-purpose for other sensor types. | HRSID: ≈ (+4.5% vs. YOLOv8n); SSDD: +1.1%. |
| CA2HRNet [58] | CNN (HRNet with attention) | Ship Segmentation/Detection | High-resolution feature extraction with combined channel/spatial attention; achieves very high accuracy and IoU. | Computationally heavy (segmentation network); specialised. | Very High. The segmentation component adds significant overhead, making it unsuitable for real-time detection tasks. | Designed for high-precision segmentation, not just detection. Useful for tasks like precise spill area estimation or docking assistance, but too slow for general real-time tracking. | Accuracy = 99.77%, F1 = 97.0%, IoU = 96.97% |
| S-DETR [59] | Transformer (DETR-based) | Ship/Object Detection | End-to-end detection; built-in scale attention and dense queries for multi-scale ships; comparable speed to single-stage models. | Higher complexity; slow convergence; needs many epochs. | High (Training), Medium (Inference). Requires significantly more data and longer training than CNNs. Inference speed can approach real-time. | Built-in attention is theoretically more robust for scenes with vessels of vastly different sizes. However, it is less mature in operational maritime deployments than the well-established YOLO family. | Achieves state-of-the-art multi-scale detection in trials (real-time capable) |
| YOLO-IRS [60] | CNN+Transformer (Swin) | IR Ship Detection | YOLOv10-based IR model with Swin transformer backbone; better small/weak target detection, anti-interference. | Slightly higher complexity; still emerging research. | Medium. The Swin transformer backbone adds computational overhead compared to a pure CNN backbone, but is optimized for efficiency. | Specialized for Infrared (IR) data. Highly effective at night or for detecting vessels with thermal signatures (e.g., running engines) against a cooler water background. May struggle in daytime. | +1.3% precision, +0.5% , +1.7% vs. YOLOv10 |
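
The RetinaNet row above credits focal loss with handling class imbalance. As a minimal sketch of the idea, the binary focal loss for a single prediction can be written as FL(p_t) = -α_t (1 - p_t)^γ log(p_t), which shrinks the contribution of easy, well-classified examples. The defaults γ = 2 and α = 0.25 are the values commonly used with this loss; the function name and the example probabilities below are illustrative assumptions, not figures from the reviewed studies.

```python
import math


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Binary focal loss for one prediction.

    p: predicted probability of the positive class (e.g. "ship").
    y: ground-truth label, 1 for positive and 0 for negative.
    """
    # p_t is the probability the model assigned to the true class.
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # Clamp to avoid log(0) for extreme predictions.
    p_t = min(max(p_t, 1e-12), 1.0)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy positive (p = 0.9) contributes far less than a hard one (p = 0.1).
easy = focal_loss(0.9, 1)
hard = focal_loss(0.1, 1)
print(easy < hard)  # True
```

With γ = 0 the modulating factor disappears and the expression reduces to α-weighted cross-entropy, which is a convenient sanity check when implementing it.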

| Fusion Type | Sensors Combined | Techniques Used | Applications | Practical Challenges and Considerations | Performance Highlights |
|---|---|---|---|---|---|
| Early Fusion [61] | RGB (EO)+IR imagery | CNN (concatenate inputs) | Vessel detection in visible/thermal | Requires precise pixel-level alignment and calibration between sensors, which is very difficult to maintain on a moving, vibrating platform. Any misalignment can corrupt the input data and degrade model performance. | Fusing raw pixel data allows the CNN to learn combined features; robust in mixed lighting. |
| Mid Fusion [61] | RGB+IR imagery | CNN (feature-level fusion) | Vessel detection across modalities | Architecturally complex. Balancing and normalizing features from different modalities (e.g., visual texture vs. thermal intensity) before fusion is crucial to avoid one sensor's features dominating the other. Requires careful network design. | Multi-modal mid-fusion gave the highest accuracy: AP = ≈ (daytime) and 61.6% (night), outperforming uni-modal. |
| Late Fusion [61] | RGB+IR imagery | CNN (separate branches) | Ensemble detection/classification | Can be less efficient as it requires running multiple full models. The primary challenge lies in designing the decision-level logic to effectively associate or resolve conflicting detections from the different sensor streams. | Decision-level fusion improves robustness; effectively integrates complementary IR and RGB cues. |
| Mid Fusion [66] | AIS+Marine Radar | RNN, CNN | Vessel behaviour classification | The major challenge is robust data association between sparse, high-latency AIS signals and continuous radar tracks. Prone to failure if AIS signals are spoofed, delayed, or lost (e.g., 'dark vessels'), making it difficult to reliably link a radar blip to a vessel identity. | Learns spatiotemporal patterns from trajectories and radar; showed moderate precision (data-limited) in identifying vessel status. |
| Association (graph) [63] | AIS+EO Video (CCTV) | GNN with attention | Multi-target vessel association | Requires complex and continuous temporal and spatial alignment: matching sparse AIS pings to continuous video frames and co-registering world coordinates with pixel coordinates. High vessel density can lead to incorrect associations. | Graph-based fusion with spatiotemporal attention improved association accuracy and robustness. |
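
The late-fusion row above names decision-level logic for associating detections from separate sensor streams as the main difficulty. The following is a minimal sketch of one generic rule, assuming both detectors output axis-aligned boxes in a shared pixel frame: boxes that overlap strongly are treated as the same vessel and keep the higher score, while unmatched boxes from either sensor are retained. The function names and the 0.5 IoU threshold are illustrative assumptions; this is not the method of [61].

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def late_fuse(
    rgb: List[Detection], ir: List[Detection], iou_thr: float = 0.5
) -> List[Detection]:
    """Merge RGB and IR detections at decision level."""
    fused: List[Detection] = []
    used_ir = set()
    for box, score in rgb:
        best_j, best_iou = -1, iou_thr
        # Find the unused IR box that overlaps this RGB box the most.
        for j, (ir_box, _) in enumerate(ir):
            if j in used_ir:
                continue
            overlap = iou(box, ir_box)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            used_ir.add(best_j)
            fused.append((box, max(score, ir[best_j][1])))
        else:
            fused.append((box, score))
    # Keep IR-only detections, e.g. vessels visible only at night.
    fused.extend(d for j, d in enumerate(ir) if j not in used_ir)
    return fused


rgb_dets = [((0, 0, 10, 10), 0.6)]
ir_dets = [((1, 1, 10, 10), 0.8), ((50, 50, 60, 60), 0.7)]
print(late_fuse(rgb_dets, ir_dets))
# [((0, 0, 10, 10), 0.8), ((50, 50, 60, 60), 0.7)]
```

Keeping unmatched detections favours recall, which suits scenes where one sensor fails outright; a deployment that prioritises precision might instead require agreement between both streams.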

| Model | Architecture Type | Application | Strengths | Weaknesses | Reported Performance |
|---|---|---|---|---|---|
| BiLSTM-CNN-Attention [19] | BiLSTM, CNN and attention mechanism | Illegal fishing detection | High accuracy; real-time capable; captures both past and future context in the sequential data | Data bias problems; misclassifies stow-net vessels and gillnetters as illegal fishing trawlers | Accuracy ≈ 74%, Precision = 0.7562, Recall = 0.7410, F1 Score = 0.7408 |
| FishNet [4] | A combination of DenseNet, Feature Fusion (CNN-based module), and Multilevel Feature Aggregation | Fishing vessel classification | High accuracy | Longer training time | Accuracy ≈ 90%, Precision = 0.9017, Recall = 0.8981, F1 Score = 0.8971 |
| Stacked-YOLOv5 [15] | CNN (YOLOv5) | Lit fishing boat detection | Improved feature extraction and detection performance | Poor detection accuracy when lights from non-fishing vessels introduce noise | Precision = 0.966, Recall = 0.930, mAP@0.5 = 0.931, F1 Score = 0.948 |
| YOLOv10s [3] | CNN (YOLOv10 small) | Dark vessel detection | Able to detect small ships; reduced architecture with unnecessary Conv and C2f layers removed | The proposed pipeline demands high computational resources | Accuracy = 0.8588, = 0.6631, Precision = 0.9370, Recall = 0.9381, Specificity = 0.9869 |
| YOLOv8m [73] | CNN (YOLOv8m) | Ship-to-ship smuggling detection | High accuracy; fusion of radar trajectories and the corresponding meteorological data | Higher complexity | F1 = 0.97, Accuracy = 94% |
| Faster R-CNN with ResNet101 [2] | CNN, RNN (YOLOv2-v3, Faster R-CNN), feature extraction (GoogLeNet, ResNet18, ResNet50, and ResNet101) | Small inflatable smuggling boat detection | Faster R-CNN with ResNet101 achieves a high detection rate | Higher complexity; slow convergence; needs many epochs; reduced detection capability under varying environmental conditions | Accuracy = 95%, mIoU = 79% |
| Sw-YoloX [16] | CNN (Convolutional Block Attention Module, Atrous Spatial Pyramid Pooling) | Search and rescue operations | High accuracy | Requires pruning to reduce weights and memory overhead | F1 = 0.78, mAP = 54, Recall = 0.72 |
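
As a worked check on how the reported metrics relate, the F1 score of a single class is the harmonic mean of its precision and recall. The sketch below applies this to the Stacked-YOLOv5 row; the function name `f1_score` is our own and the check is illustrative only, not a re-evaluation of [15].

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported for Stacked-YOLOv5 in the table above.
f1 = f1_score(0.966, 0.930)
print(round(f1, 3))  # 0.948, matching the reported F1 score
```

The identity need not hold for rows whose precision, recall, and F1 are averaged over several classes, since an average of per-class F1 scores is generally not the harmonic mean of the averaged precision and recall. The table does not state which averaging each study used.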

| Dataset Name | Sensor/Modality | Data Type | Annotations | Size/Scale | Limitations |
|---|---|---|---|---|---|
| WaterScenes [67] | Camera (RGB), 4D Radar, GPS/IMU | Image sequences (video) | 2D bounding boxes (camera), 3D point clusters (radar) | 54,120 RGB frames + radar scans; ~200k object instances | Same locale (Singapore); weather range limited |
| SeaDronesSee [79] | UAV RGB video | Images and video | Bounding boxes (boats, people, flares); track IDs (multi/SOT) | 8930 train + 3750 test images (drones); includes full video clips for tracking | Mostly temperate marine conditions; daytime imagery |
| Airbus Ship Detection [81] | Satellite optical (SPOT) | Image chips | Pixel-wise ship masks (RLE) | 231,723 images, of which 81,723 contain at least one ship | Primarily daylight RGB; many empty frames; oriented masks |
| SeaShips [82] | Shore-based cameras (RGB) | Images | Bounding boxes + ship type (6 classes) | 31,455 images of coastal traffic | Fixed coastal perspectives; limited environmental diversity |
| SPSCD [83] | Port surveillance (RGB) | Images | Bounding boxes + ship class (12 types) | 19,337 images, 27,849 labeled ship instances | Focused on port environments; no AIS tracking |
| KOLOMVERSE [84] | UAV 4K images | Images | Bounding boxes (vessels) | 100,000+ 4K images of one class ("boat") | Single object class ("boat"); access upon request |
| HRSID [85] | SAR imagery | Images | Bounding boxes (ships) | 5604 high-resolution SAR images, 16,951 ship instances | SAR-only modality (requires specialised processing) |
| SSDD [86] | SAR imagery (Sentinel-1, TerraSAR-X) | Images | Bounding boxes (ships) | 2752 SAR image chips (ships/non-ships) | Limited to SAR; chip-based (small images) |
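
Run-length encoded (RLE) masks such as those listed for the Airbus Ship Detection dataset have to be decoded before training. The sketch below assumes the convention commonly used for that dataset's public release: space-separated pairs of a 1-indexed start pixel and a run length, with pixels numbered top to bottom and then left to right (column-major). The function name and signature are our own, and the convention should be confirmed against the dataset documentation before use.

```python
def rle_decode(rle, height, width):
    """Decode a 'start length start length ...' RLE string into a 2D mask.

    Assumes 1-indexed starts and column-major pixel numbering.
    Returns a list of rows, with 1 for ship pixels and 0 for background.
    """
    mask = [[0] * width for _ in range(height)]
    tokens = [int(t) for t in rle.split()]
    for start, length in zip(tokens[0::2], tokens[1::2]):
        for idx in range(start - 1, start - 1 + length):
            col, row = divmod(idx, height)  # column-major flat index
            mask[row][col] = 1
    return mask


# Toy 3x3 example: pixels 1-2 (first column, top two rows) and pixel 5.
toy_mask = rle_decode("1 2 5 1", height=3, width=3)
for mask_row in toy_mask:
    print(mask_row)
```

An empty string decodes to an all-zero mask, which is a convenient way to represent the many ship-free frames noted in the table.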

| Dataset Name | Application | Size/Scale | Limitations |
|---|---|---|---|
| Global Fisheries Catch 1950–2014 [87] | A database of global marine commercial, small-scale, illegal and unreported fisheries catch 1950–2014 | Nearly 868 million records with 12 descriptive fields, structured in 5-year blocks starting from 1950 | Data can be heavily skewed toward certain regions or time periods, undermining representativeness |
| FishingVesselSAR [4] | SAR images for fishing vessel classification | 369 high-resolution SAR images (116 gillnetters, 72 seiners, and 181 trawlers) | Data can be heavily skewed toward certain regions or time periods, undermining representativeness |
| [15] | Nighttime SAR images for fishing vessel classification | 1364 high-resolution SAR images of 1281 lit fishing vessels | The sample dataset is relatively small, and the presence of lights from non-fishing vessels may introduce noise. |
| Maritime Piracy Incidents [70] | Structured data of piracy incidents | 8369 records of piracy incidents from 1990–2021 | Dataset primarily focuses on high-risk areas, potentially overlooking other regions. |
| HS3-S2 [3] | SAR, Sentinel-2, and high-resolution optical images for detecting suspicious maritime activities | 69,331 images | Integrating multiple sources of satellite imagery increases the complexity of pre-processing and model training. Additionally, the varying resolutions of the images from different sources can pose challenges in standardising the input data for the detection model. |
| HN_BF [73] | Ship trajectories near Qiongzhou Strait in China from March to May 2024 | 5337 labeled trajectories, including 1473 labeled "Big flyer" and the rest "Normal" | Covers one particular region, which may limit model generalisation when deployed elsewhere. |
| CSIRO [88] | Oil spill detection dataset | 5630 image chips: 3725 chips of class 0 (no oil features) and 1905 chips of class 1 (containing oil features) | Look-alike features such as wind shadows, reef structures, or biogenic slicks may increase the false positive rate of oil-like feature detection. |
| Oil spill [21] | Oil spill segmentation and classification dataset | 19,544 RGB images: 8376 cropped images, 3168 resized images, and 8000 synthetic images | The dataset is imbalanced, with certain types of oil spills being underrepresented compared to others. The images come from various sources with different resolutions, which can affect the model's performance. |
| Deepdive [75] | Deep-sea biota images captured by a remotely operated vehicle (ROV) | 4158 images of deep-sea biota belonging to 62 different classes | The manual labeling process, despite rigorous quality control, may still introduce errors due to the complexity of deep-sea biota shapes and overlapping boundaries. |
| SeaDronesSee [79] | UAV videos for maritime surveillance, rescue operations, human detection in aquatic environments, and drone-based vision research | 54,000 images with 400,000 instances, with class labels such as boats, people, and buoys | It is a synthetic dataset; however, the effectiveness of computer vision algorithms relies heavily on real-world training data. |
| SAR-HumanDetection-FinlandProper [76] | UAV images for maritime surveillance, rescue operations, human detection in aquatic environments, and drone-based vision research | 72,000 images with instances of the positive class (swimming/floating person) | The dataset lacks complex scenarios and weather conditions, as the images were captured in daylight and clear summer weather. Models trained on it may be ineffective in real-world detection tasks. |
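
Several of the datasets above are class-imbalanced. One common mitigation is to weight the training loss by inverse class frequency. The sketch below computes such weights as N / (K · n_c) from the class counts listed for CSIRO [88] and HN_BF [73], where N is the total number of samples, K the number of classes, and n_c the count for class c. This heuristic is our illustrative assumption; the table does not indicate that the cited studies used it.

```python
def balanced_class_weights(counts):
    """Inverse-frequency weights: total / (num_classes * class_count)."""
    total = sum(counts.values())
    num_classes = len(counts)
    return {label: total / (num_classes * n) for label, n in counts.items()}


# Class counts taken from the table above; the label names are our own.
csiro_counts = {"no_oil": 3725, "oil": 1905}
hn_bf_counts = {"normal": 5337 - 1473, "big_flyer": 1473}

csiro_weights = balanced_class_weights(csiro_counts)
hn_bf_weights = balanced_class_weights(hn_bf_counts)

for name, weights in (("CSIRO", csiro_weights), ("HN_BF", hn_bf_weights)):
    print(name, {label: round(w, 3) for label, w in weights.items()})
```

The minority class receives a weight above 1 and the majority class a weight below 1, so each class contributes roughly equally to a weighted loss.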

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Talpur, K.; Hasan, R.; Gocer, I.; Ahmad, S.; Bhuiyan, Z. AI in Maritime Security: Applications, Challenges, Future Directions, and Key Data Sources. Information 2025, 16, 658. https://doi.org/10.3390/info16080658
