Abstract

The ongoing decline in global biodiversity constitutes a critical challenge for environmental science, necessitating the prompt development of effective monitoring frameworks and conservation protocols to safeguard the structure and function of natural ecosystems. Recent progress in ecoacoustic monitoring, supported by advances in artificial intelligence, might finally offer scalable tools for systematic biodiversity assessment. In this study, we evaluate the performance of BirdNET, a state-of-the-art deep learning model for avian sound recognition, in the context of selected bird species characteristic of the Italian Alpine region. To this end, we assemble a comprehensive, manually annotated audio dataset targeting key regional species, and we investigate a variety of strategies for model adaptation, including fine-tuning with data augmentation techniques to enhance recognition under challenging recording conditions. As a baseline, we also develop and evaluate a simple Convolutional Neural Network (CNN) trained exclusively on our domain-specific dataset. Our findings indicate that BirdNET performance can be greatly improved by fine-tuning the pre-trained network with data collected within the specific regional soundscape, outperforming both the original BirdNET and the baseline CNN by a significant margin. These findings underscore the importance of environmental adaptation and data variability for the development of automated ecoacoustic monitoring devices while highlighting the potential of deep learning methods in supporting conservation efforts and informing soundscape management in protected areas.

1. Introduction

The global decline in biodiversity is one of the most pressing challenges of our time [1], calling for the rapid development of improved conservation strategies to protect, preserve, and restore the health and integrity of natural ecosystems. However, efforts are hindered by significant gaps in understanding the status of and trends in biodiversity [2], making effective monitoring programs essential to advance ecological research and guide appropriate management approaches, especially considering the current climate crisis and habitat loss [3,4]. In Italy—as in many parts of the world—the network of protected areas encompasses various categories and levels, reflecting a commitment to conserving the country’s rich biodiversity. However, these areas often face challenges in meeting established conservation objectives [5,6]. A critical obstacle to the proper deployment of conservation protocols is the lack of effective monitoring frameworks that guarantee fast, accurate, and efficient methods to conduct systematic environmental surveys [7]. In fact, data are increasingly being used to inform decisions and evaluate conservation performance, reflecting a growing demand for more evidence-based approaches [8]. In this sense, birds represent excellent indicators of environmental status, as they are diverse, widespread, mobile, and quickly responsive to changes in their habitat; they are also high in the food chain and therefore sensitive to changes lower down, and their ecology is well studied [9,10].

A recent surge in the adoption of ecoacoustic technologies has significantly enhanced the monitoring of bird species’ presence, richness, and distribution [11]. In particular, passive acoustic monitoring reduces the disturbance caused by surveyors and provides standardized data that can be permanently archived while addressing issues related to surveying rare or elusive species, the inaccessibility of areas, or nighttime darkness [12,13]. However, the application of ecoacoustics is still mainly a human-supervised process due to the need to manually review recorded data, which often come in large amounts [14]. Thus, the development of low-cost recording systems [15] and automated data analysis pipelines [16] should be prioritized to implement more effective monitoring programs.

In recent years, significant progress has been made in the creation of automatic methods to analyze ecoacoustic data [17,18]. The key challenges in automated bird song recognition are that field recordings are very noisy, signal quality is strongly affected by weather and environmental conditions, bird vocalizations are highly variable, and signals from many different individuals or species can overlap [16]. Nevertheless, automatic recognition can be dramatically improved by modern artificial intelligence techniques based on deep learning, which can achieve remarkable performance in challenging signal detection and classification tasks [19]. Since the first appearance of deep learning models in the BirdCLEF challenge [20], there has been an explosion of neural network architectures specifically tailored to recognize bird sounds [21,22]. The most popular model is probably BirdNET [23], a freely available classifier based on a residual neural network architecture, trained on several thousand hours of annotated recordings to identify hundreds of North American and European bird species. However, despite its ease of use, which has enabled widespread adoption [24], accurate bird recognition in omnidirectional soundscape recordings remains challenging [25], especially at longer distances [26]. Additional challenges include class imbalance, distribution shifts, and the presence of background noise [27], all of which hinder the ability of automatic recognition models to generalize under a variety of environmental conditions.

The motivation behind the research presented in this article therefore stems from an urgent need to improve biodiversity monitoring frameworks in response to the global biodiversity crisis, identifying ecoacoustic-based methods as a promising approach to these challenges. A key objective is to assess whether a pre-trained model like BirdNET, when fine-tuned with local, manually annotated data and supported by data augmentation, can significantly outperform both its original version and a custom CNN trained from scratch. Specifically, our goal is to validate the performance of BirdNET on a selected list of target species that are common to Italian Alpine coniferous forests. Our approach aims to develop a system that supports the conservation and management of natural protected areas, which are frequently located in remote and high-altitude regions [28], conditions that complicate monitoring efforts and, consequently, hinder biodiversity data collection. We systematically evaluate the recognition performance using a variety of metrics, and we measure model generalization by exploiting a separate test set containing an independent set of recordings. As a baseline for comparison, we also implement a vanilla Convolutional Neural Network (CNN) specifically trained on our dataset of recordings. Indeed, it has been recently shown that customized CNN models trained on specialized datasets sometimes offer an advantage over pre-trained models such as BirdNET, since they are more closely aligned with the statistical properties of the environment under study [29].

This article is structured as follows: in Section 2, we describe the problem setting; the dataset collection and annotation process; the preprocessing pipeline; the modeling strategies, including the fine-tuning of BirdNET; the design of a baseline CNN; and the evaluation metrics used to assess performance. In Section 3, we present the quantitative results comparing the different models, provide a detailed error analysis, and explore qualitative examples of model predictions. In Section 4, we interpret our findings, highlight their implications for biodiversity monitoring, and discuss methodological limitations and possible future directions. Finally, Section 5 summarizes the contributions of this study and their relevance to conservation and ecological research.

2. Materials and Methods

2.1. Database of Audio Recordings

The process of fine-tuning a pre-trained deep learning model requires the further optimization of the model parameters based on an additional set of training data. In order to fine-tune BirdNET on the scenario of interest, we therefore collected and manually tagged a large dataset of audio recordings containing a variety of vocalizations of the target bird species. Bird vocalizations were extracted from audio files recorded during the 2019 bird breeding season and the 2020 bird wintering season in the Tovanella watershed forest, located in the northeastern Italian Alps (municipality of Ospitale di Cadore, Province of Belluno, Veneto; 46°18′ N, 12°18′ E). The watershed, with an altitudinal range spanning from 550 to 2500 m above sea level, has been designated as a nature reserve since 1971 [30], and it is included within two Natura 2000 protected sites: the Special Area of Conservation IT3230031 and the Special Protection Area for Birds IT3230089 [31]. To collect audio data, ten automatic recording units (AudioMoth, version 1.1; Open Acoustic Devices [32]) were installed in different parts of the forest stands to allow for simultaneous recording. In cases where vocalizations of certain species were too scarce or absent in local recordings, we supplemented our dataset with audio files from the online repository Xeno-canto [33,34].

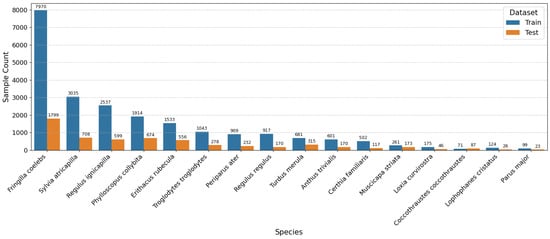

Bird vocalizations included songs, calls, alarm sounds, and, in the case of woodpeckers, drumming. Noise categories encompassed sounds classified as geophony or anthrophony [35], such as those produced by natural inanimate sources (e.g., raindrops, thunder, wind, and rustling vegetation) and human-related activities (e.g., passing airplanes and human voices). Additionally, vocalizations of non-avian animals (e.g., foxes and deer) were included in the noise categories. Tagging was performed by inspecting the spectrograms and listening to the recordings to mark the traces of the identified vocalizations, using ad hoc tagging software adapted from previous work [36]. In total, 282 audio files were annotated, corresponding to approximately 18 h of recordings. An independent subset of 20 tagged recordings was held out as a test set to evaluate the generalization capabilities of the models. Overall, the final dataset included 16 bird species (those with the highest number of tagged occurrences and thus suitable for model training) and 3 noise categories; the distribution of species vocalizations in the training and test sets is reported in Figure 1.

Figure 1.

Distribution of target species in our training and test sets.

2.2. Data Pre-Processing and Data Augmentation

The original audio files were windowed into 3 s segments with 50% overlap between consecutive segments. Each audio segment could include vocalizations from one or more species, silence (class “None”), or environmental sounds; segments containing signals shorter than 0.2 s were considered empty to avoid including insignificant data in the training set. To train the models, spectrograms of the audio segments were computed with the Short-Time Fourier Transform (STFT) at a sampling rate of 32 kHz, using a Hann window of 1024 samples and a hop length of 256 samples. To normalize the spectrograms, a logarithmic transformation was applied to compress their dynamic range, and the resulting images were resized to a fixed pixel resolution using bilinear interpolation.
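The windowing and spectrogram steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the 0.2 s rule is interpreted here as a minimum overlap between an annotated event and a window, the Hann window is the periodic variant, frames are neither padded nor centered, and `log1p` stands in for the unspecified logarithmic transform. The final bilinear resize is omitted because the target resolution is not given in the text. The function names `segment_labels` and `log_spectrogram` are hypothetical.

```python
import numpy as np

SR = 32_000          # sampling rate in Hz (Section 2.2)
SEGMENT_S = 3.0      # segment length in seconds
HOP_S = 1.5          # 50% overlap between consecutive segments
MIN_EVENT_S = 0.2    # assumed: shorter overlaps do not count as a vocalization
N_FFT, HOP = 1024, 256


def segment_labels(duration_s, annotations):
    """Split a recording into overlapping 3 s windows and label each one.

    annotations: list of (start_s, end_s, label) tuples from manual tagging.
    Assumption: a label is assigned to a window only if the annotated event
    overlaps it by at least MIN_EVENT_S; unlabeled windows become "None".
    """
    windows = []
    i = 0
    # Integer window index avoids accumulating floating-point error in the start time.
    while i * HOP_S + SEGMENT_S <= duration_s:
        start = i * HOP_S
        end = start + SEGMENT_S
        labels = {
            label
            for a_start, a_end, label in annotations
            if min(end, a_end) - max(start, a_start) >= MIN_EVENT_S
        }
        windows.append((start, end, labels or {"None"}))
        i += 1
    return windows


def log_spectrogram(segment):
    """Log-compressed STFT magnitude with a periodic Hann window (no padding)."""
    window = np.hanning(N_FFT + 1)[:-1]  # periodic Hann of length N_FFT
    n_frames = 1 + (len(segment) - N_FFT) // HOP
    frames = np.stack([segment[k * HOP:k * HOP + N_FFT] * window for k in range(n_frames)])
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T  # shape: (N_FFT // 2 + 1, n_frames)
    return np.log1p(magnitude)
```

For a 3 s window at 32 kHz (96,000 samples), this framing yields 513 frequency bins by 372 frames; libraries that center frames by default produce slightly more frames.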

To increase variability and improve generalization, four popular data augmentation techniques were implemented through the audiomentations library (https://iver56.github.io/audiomentations/, accessed on 21 July 2025): pitch shifting, time stretching, the addition of background noise, and signal gain. These transformations are consistent with StandardSGN, an effective augmentation protocol evaluated by Nanni et al. [37] on the BIRDZ dataset, which shares a similar domain and task. Each technique was applied probabilistically in order to introduce controlled variability while preserving the essential acoustic elements of the bird sounds. Pitch shifting, for example, was added to simulate the natural differences in vocal range between individuals of the same species; two types of pitch shift were implemented, a downward shift of 1 to 3 semitones and an upward shift of the same amount, each applied with a probability of 0.75. While Nanni et al. [37] used a pitch shift range of [−2, 2] semitones, we selected a slightly broader range to better capture the inter-individual differences observed in field recordings. Time stretching was used to emulate faster or slower sounds, which can be caused by external environmental factors or by recording artifacts: a rate of 0.9 to 1.1 was applied with a probability of 0.75. This range lies within the [0.8, 1.2] range used in StandardSGN and was narrowed to avoid excessive deformation of short vocalizations. Gain adjustment helps to simulate variations in recording volume, which can be due to factors such as the bird's distance from the microphone or differences in recording equipment. A gain of −5 to +5 dB was applied with a probability of 0.5, slightly broader than the [−3, 3] dB used in [37], to account for the higher dynamic variability observed in our dataset. Finally, background noise was added with a probability of 0.8 to improve recognition robustness. This technique mirrors the approach used in the training of BirdNET [23], where background noise is added using non-salient segments from the original dataset.
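The augmentation policy can be summarized as a parameter sampler. The sketch below uses plain NumPy rather than the audiomentations API, so it only approximates the library's behavior: it draws parameters with the probabilities stated above and implements the gain and background-noise steps explicitly, while pitch shifting and time stretching (which need a resampling or phase-vocoder routine) are represented only by their sampled parameters. The SNR range `NOISE_SNR_DB` is a hypothetical placeholder, since the text does not report one, and summing the two pitch shifts when both fire is an assumption about sequential application.

```python
import numpy as np

# Hypothetical SNR range for the background-noise step; the text does not state one.
NOISE_SNR_DB = (3.0, 30.0)


def sample_augmentation_params(rng: np.random.Generator) -> dict:
    """Sample one augmentation recipe using the probabilities stated in Section 2.2."""
    # Two pitch-shift transforms (down, then up), each applied with p = 0.75.
    # Assumption: when both fire, their shifts add up, as in a sequential pipeline.
    semitones = 0.0
    if rng.random() < 0.75:
        semitones -= rng.uniform(1.0, 3.0)
    if rng.random() < 0.75:
        semitones += rng.uniform(1.0, 3.0)
    return {
        "pitch_semitones": semitones,
        # Time stretch: rate in [0.9, 1.1] with p = 0.75 (1.0 means unchanged).
        "stretch_rate": rng.uniform(0.9, 1.1) if rng.random() < 0.75 else 1.0,
        # Gain: [-5, +5] dB with p = 0.5 (0 dB means unchanged).
        "gain_db": rng.uniform(-5.0, 5.0) if rng.random() < 0.5 else 0.0,
        # Background noise with p = 0.8; None means no noise is added.
        "noise_snr_db": rng.uniform(*NOISE_SNR_DB) if rng.random() < 0.8 else None,
    }


def apply_gain(audio: np.ndarray, gain_db: float) -> np.ndarray:
    """Scale the waveform amplitude by gain_db decibels."""
    return audio * 10.0 ** (gain_db / 20.0)


def mix_background(signal: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Add a noise clip (e.g., a non-salient segment) at the requested SNR."""
    if len(noise) < len(signal):
        raise ValueError("noise clip must be at least as long as the signal")
    noise = noise[: len(signal)]
    signal_power = np.mean(signal ** 2)
    noise_power = np.mean(noise ** 2)
    # Choose scale so that signal_power / (scale**2 * noise_power) == 10**(snr_db / 10).
    scale = np.sqrt(signal_power / (noise_power * 10.0 ** (snr_db / 10.0)))
    return signal + scale * noise
```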

2.3. Fine-Tuning the BirdNET Model

BirdNET consists of a sequence of residual stacks, which extract features through down-sampling and residual blocks, followed by a final classification layer [23]. We used “BirdNET_Analyzer v1.5.1”, available on GitHub (https://github.com/birdnet-team/BirdNET-Analyzer/, accessed on 21 July 2025), which is based on version 2.4 of the BirdNET model and features an EfficientNetB0-like backbone with Squeeze-and-Excitation blocks. For fine-tuning, we used the train.py script with a batch size of 64, 150 epochs, and the mixup option enabled. These hyperparameters were calibrated empirically to optimize the F1-score on the validation set. In particular, preliminary tests showed that variations in the batch size, learning rate, and number of epochs affected convergence speed but did not significantly improve classification performance, while mixup was kept enabled because of its consistent, albeit marginal, positive impact across our experimental runs. We obtained the models’ predictions using the analyze.py script, setting the sensitivity to 1 and the minimum confidence score to 0.05. A subset of the training data was held out as a validation set to calibrate a custom confidence threshold for each species. This was achieved with a linear grid search over 200 evenly spaced thresholds from 0.01 to 0.95, selecting for each species the threshold that maximized its F1-score.
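The per-species threshold calibration can be sketched as follows, assuming that validation labels and BirdNET confidence scores are available as `(n_segments, n_species)` arrays. This is an illustrative sketch rather than the authors' implementation; the helper names are hypothetical, and ties between thresholds with equal F1 are resolved here by taking the lowest threshold, a choice the text does not specify.

```python
import numpy as np

# 200 evenly spaced candidate thresholds between 0.01 and 0.95.
THRESHOLDS = np.linspace(0.01, 0.95, 200)


def f1_score_binary(y_true, y_pred):
    """F1 for one species from boolean ground truth and predictions."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = np.sum(y_true & y_pred)
    fp = np.sum(~y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom > 0 else 0.0


def calibrate_thresholds(y_true, scores, thresholds=THRESHOLDS):
    """Return, for each species (column), the grid threshold with the highest F1.

    y_true: (n_segments, n_species) boolean validation labels.
    scores: (n_segments, n_species) confidence scores in [0, 1].
    """
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    best = np.empty(y_true.shape[1])
    for k in range(y_true.shape[1]):
        f1s = [f1_score_binary(y_true[:, k], scores[:, k] >= t) for t in thresholds]
        # np.argmax returns the first maximum, i.e. the lowest threshold among ties.
        best[k] = thresholds[int(np.argmax(f1s))]
    return best
```

At inference time, a segment would then be assigned species `k` whenever its score reaches `best[k]`.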

2.4. Baseline CNN Model

As a baseline, we implemented a vanilla CNN trained from scratch on the same dataset used to fine-tune BirdNET. We chose a CNN as the comparison baseline because this architecture has been shown to achieve state-of-the-art performance in bird recognition tasks across a wide variety of datasets and bird species [38,39].

To better explore the design space of the CNN, we conducted a random search over 200 configurations, sampling the hyperparameters from the following search space: number of convolutional layers {1, 2, 3, 4}, kernel sizes (non-decreasing sequences drawn from {2, 3, 4, 5, 6}), number of channels {16, 32, 64, 128}, dropout rate {0.0, 0.5}, dense layer size {32, 64, 128}, and batch size {32, 64, 128}. To ensure fair model selection, performance metrics (micro-average, sample average, and weighted average F1-scores) were computed on a separate validation set, and the best configuration was selected based on the average of these three scores. The best architecture had four convolutional layers with kernel sizes [4, 5, 6, 6] and channel counts [16, 32, 64, 128], no dropout, a dense layer with 128 units, and a batch size of 128. The hyperparameter search was designed to favor compact, lightweight architectures by limiting the number of convolutional layers and the size of the final dense layer, whereas the search space for other parameters, such as batch and kernel sizes, was chosen to be broad enough to include a wide range of potentially effective configurations. Training was carried out using the Adam optimizer for a maximum of 200 epochs, with early stopping (patience of 15 epochs) to mitigate the risk of overfitting.
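A random search over the stated search space might look like the sketch below. `evaluate` is a hypothetical callback that trains a CNN with a given configuration and returns its micro-, sample-, and weighted-average F1-scores on the validation set; the model itself is not implemented here. Sampling channel counts as a non-decreasing sequence (like the kernel sizes) is an assumption suggested by the reported best configuration, and sorting independent draws is just one simple way to obtain non-decreasing sequences.

```python
import random

SEARCH_SPACE = {
    "n_layers": [1, 2, 3, 4],
    "kernel_sizes": [2, 3, 4, 5, 6],
    "channels": [16, 32, 64, 128],
    "dropout": [0.0, 0.5],
    "dense_units": [32, 64, 128],
    "batch_size": [32, 64, 128],
}


def sample_non_decreasing(rng, values, length):
    """Draw `length` values independently and sort them into a non-decreasing list."""
    return sorted(rng.choice(values) for _ in range(length))


def sample_config(rng):
    """Sample one CNN configuration (one kernel size and channel count per layer)."""
    n = rng.choice(SEARCH_SPACE["n_layers"])
    return {
        "n_layers": n,
        "kernel_sizes": sample_non_decreasing(rng, SEARCH_SPACE["kernel_sizes"], n),
        # Assumption: channel counts also grow (or stay equal) with depth.
        "channels": sample_non_decreasing(rng, SEARCH_SPACE["channels"], n),
        "dropout": rng.choice(SEARCH_SPACE["dropout"]),
        "dense_units": rng.choice(SEARCH_SPACE["dense_units"]),
        "batch_size": rng.choice(SEARCH_SPACE["batch_size"]),
    }


def selection_score(f1_micro, f1_samples, f1_weighted):
    """Model-selection criterion: the mean of the three validation F1-scores."""
    return (f1_micro + f1_samples + f1_weighted) / 3.0


def random_search(evaluate, n_trials=200, seed=0):
    """Keep the configuration with the highest selection score."""
    rng = random.Random(seed)
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_trials):
        cfg = sample_config(rng)
        score = selection_score(*evaluate(cfg))
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score
```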

2.5. Performance Assessment

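To assess model performance, we employed the following evaluation metrics:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$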

where TP stands for true positive, FP stands for false positive, TN stands for true negative, and FN stands for false negative. We aggregated these metrics across classes through micro, weighted, and sample averages. Micro-averaging sums the TP, FP, TN, and FN counts over all classes and then computes precision, recall, and F1 from these totals; because it pools all predictions rather than averaging per class, classes with more samples carry more weight. Weighted averaging computes each metric separately for every class and then averages the per-class values, weighting each class by its number of samples: every class is evaluated individually, but the most frequent classes still carry more weight. Sample averaging computes the metrics for each individual sample (i.e., by comparing the predicted and true label sets of each audio segment) and then averages them over the dataset: it reflects how well the model labels every single data point, independently of class.
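The three averaging schemes differ only in where the TP, FP, and FN counts are pooled. The NumPy sketch below, assuming multi-label indicator arrays of shape `(n_samples, n_classes)`, computes the micro-, weighted-, and sample-averaged F1-scores following the definitions above; it should agree with scikit-learn's `f1_score` with `average="micro"`, `"weighted"`, and `"samples"`, except possibly in zero-division edge cases, where this sketch assigns a score of 0 by an assumed convention.

```python
import numpy as np


def _f1(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN); defined as 0 when TP + FP + FN is 0 (assumed convention)."""
    tp, fp, fn = (np.asarray(x, dtype=float) for x in (tp, fp, fn))
    denom = 2 * tp + fp + fn
    return np.where(denom > 0, 2 * tp / np.maximum(denom, 1.0), 0.0)


def averaged_f1(y_true, y_pred):
    """Micro-, weighted-, and sample-averaged F1 for multi-label indicator arrays."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = y_true & y_pred  # shape: (n_samples, n_classes)
    fp = ~y_true & y_pred
    fn = y_true & ~y_pred

    # Micro: pool counts over all samples and classes, then compute one F1.
    micro = float(_f1(tp.sum(), fp.sum(), fn.sum()))

    # Weighted: per-class F1, weighted by each class's number of true instances.
    per_class = _f1(tp.sum(axis=0), fp.sum(axis=0), fn.sum(axis=0))
    support = y_true.sum(axis=0)
    weighted = float(np.average(per_class, weights=support)) if support.sum() > 0 else 0.0

    # Samples: F1 of each segment's predicted vs. true label set, averaged over segments.
    per_sample = _f1(tp.sum(axis=1), fp.sum(axis=1), fn.sum(axis=1))
    samples = float(per_sample.mean())

    return {"micro": micro, "weighted": weighted, "samples": samples}
```

For instance, with `y_true = [[1, 0], [1, 1], [0, 1]]` and `y_pred = [[1, 0], [1, 0], [1, 1]]`, the pooled counts are TP = 3, FP = 1, and FN = 1, giving a micro-average F1 of 0.75, whereas the weighted and sample averages are about 0.733 and 0.778.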

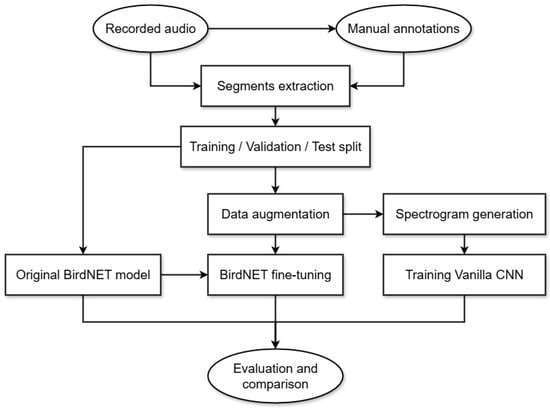

For the best-performing model, we also report a confusion matrix and Receiver Operating Characteristic (ROC) curves, along with the corresponding Area Under the Curve (AUC) values. A schematic representation of our methodological pipeline is shown in Figure 2.
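The per-species AUC values can be computed without tracing the ROC curve explicitly, using the fact that the ROC AUC equals the probability that a randomly chosen positive segment receives a higher score than a randomly chosen negative one (ties counted as one half). The sketch below assumes per-species binary labels and confidence scores; `roc_auc` and `per_species_auc` are hypothetical helpers, and the pairwise comparison uses memory proportional to the number of positive-negative pairs, so a rank-based formula would be preferable for very large datasets.

```python
import numpy as np


def roc_auc(labels, scores):
    """ROC AUC via pairwise comparison of positive and negative scores."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("AUC needs at least one positive and one negative sample")
    greater = np.sum(pos[:, None] > neg[None, :])  # correctly ordered pairs
    ties = np.sum(pos[:, None] == neg[None, :])    # tied pairs count one half
    return float((greater + 0.5 * ties) / (pos.size * neg.size))


def per_species_auc(y_true, scores, species):
    """Map each species name to the AUC of its score column."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    return {name: roc_auc(y_true[:, k], scores[:, k]) for k, name in enumerate(species)}
```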

Figure 2.

Workflow diagram of the proposed methodology, including data preprocessing, model training, and model evaluation.

3. Results

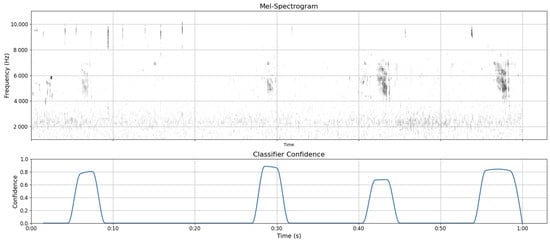

As shown in Table 1, the BirdNET model fine-tuned with data augmentation achieves the highest F1-score, reflecting a better balance between precision and recall. An example of the classification confidence score returned by this model for Fringilla coelebs is shown in Figure 3. The figure shows the spectrogram of a 1 min recording along with the corresponding output score, illustrating that in this example the classifier correctly detects all relevant calls in the acoustic input stream.

Table 1.

Performance metrics—micro-average.

Figure 3.

Mel-spectrogram and classifier confidence curve for the BirdNET model fine-tuned with data augmentation for the Fringilla coelebs species.

The original BirdNET model has the lowest F1-score, with higher precision but poorer recall than all other models. The CNN improves on the original BirdNET model but falls well short of the fine-tuned versions of BirdNET. Similar trends hold for the weighted average (Table 2) and sample average (Table 3) metrics, indicating that fine-tuning the pre-trained BirdNET classifier is the most promising of the tested approaches for obtaining accurate predictions and that data augmentation provides a small but consistent improvement during fine-tuning.

Table 2.

Performance metrics—weighted average.

Table 3.

Performance metrics—sample average.

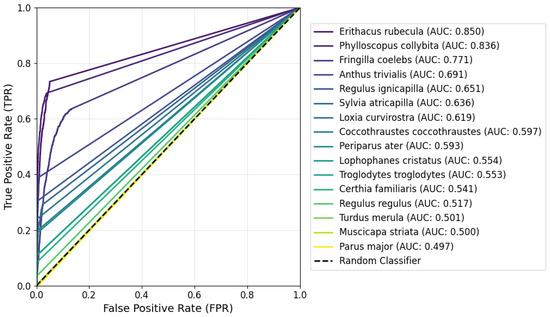

The ROC curves of the best-performing model (BirdNET fine-tuned with data augmentation) are shown in Figure 4. The plot shows that Erithacus rubecula is the most recognizable species, with an AUC of 0.849, closely followed by Phylloscopus collybita. Muscicapa striata is a problematic case, as none of the models could correctly recognize it. Interestingly, Turdus merula was adequately recognized by the original BirdNET model but not by any other model, suggesting that our training data for this species may have been unrepresentative or biased.

Figure 4.

ROC curve of the fine-tuned BirdNET model with data augmentation.

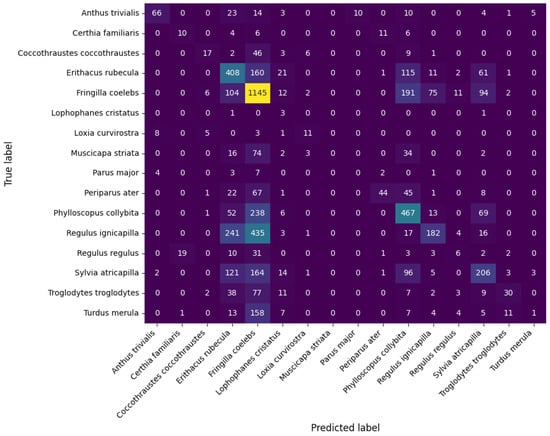

Figure 5 shows the confusion matrix for the BirdNET model fine-tuned with data augmentation, which helps to identify the most recognizable and most frequently confused species. Some species are often confused: in particular, Coccothraustes coccothraustes, Muscicapa striata, Parus major, Periparus ater, Regulus ignicapilla, Regulus regulus, Troglodytes troglodytes, and Turdus merula are classified more often as Fringilla coelebs than as the correct class. This issue likely reflects the overrepresentation of Fringilla coelebs vocalizations in the training corpus, where they appear much more frequently than those of the other species (see Figure 1). Nevertheless, the remaining species are correctly classified in most cases, although the model tends to produce false positives for a few key species, in particular Erithacus rubecula, Phylloscopus collybita, and Sylvia atricapilla, which are also much more frequent in the training samples (see Figure 1).

Figure 5.

Confusion matrix for BirdNET model fine-tuned with data augmentation.

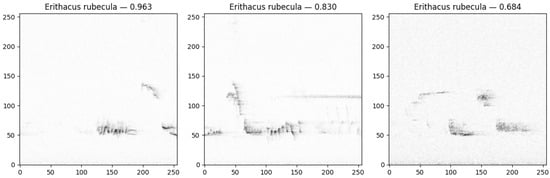

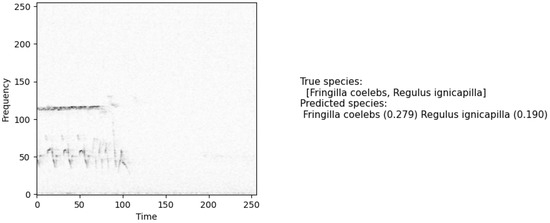

We also qualitatively analyzed the model’s predictions by inspecting a set of representative spectrograms highlighting different scenarios, such as correct predictions across diverse signals from the same species or in the presence of overlapping vocalizations. In Figure 6, we can observe three different signals from Erithacus rubecula, which the model correctly recognizes but with a decreasing confidence score as the signal becomes progressively noisier or more affected by other environmental sounds. This highlights the sensitivity of the model to background interference and its reduced ability to generalize when the vocalization pattern is partially obscured or distorted. Figure 7 instead shows the challenge associated with handling simultaneous vocalizations from multiple species: although the model correctly identifies both species, the confidence scores are fairly low. This may reflect uncertainty due to overlapping frequency patterns or a lack of training examples featuring co-occurring vocalizations of these species.

Figure 6.

Decrease in recognition confidence for different calls of Erithacus rubecula as the signal becomes weaker and increasingly disturbed by environmental background noises.

Figure 7.

Decrease in recognition confidence when the spectrogram contains overlapping vocalizations from multiple species.

4. Discussion

Our results demonstrate that fine-tuning the BirdNET model on region-specific data can substantially enhance its recognition performance in a complex Alpine coniferous forest soundscape. This confirms previous findings suggesting that pre-trained classifiers, although powerful, often struggle to generalize to novel acoustic environments characterized by overlapping vocalizations, unfamiliar background conditions, and habitat-specific sound propagation features [25,27]. Such conditions are typical of dense mountainous forests, where signal degradation, reverberation, and masking effects complicate automatic detection [40]. In our experiments, the original BirdNET model achieved high precision at the cost of low recall. This trade-off, while acceptable in some applications (e.g., presence-only species checklists), is unsuitable for biodiversity monitoring, which involves comprehensive detection tasks where recall is crucial for assessing species richness and community structure [41]. Our findings align with those of other studies showing that tuning BirdNET’s sensitivity improves recall but may increase false positives, thereby reducing precision [42], highlighting the need to balance these metrics according to monitoring goals.

Fine-tuning alone substantially boosts recall, while augmentation further improves precision without sacrificing recall. These findings highlight the limitations of applying standard models to unfamiliar soundscapes without adaptation and support the hypothesis that training on context-specific data, even when limited in duration, enables the model to learn habitat-specific patterns, background noise profiles, and intra-specific vocal variability. The misclassification or missed detection of species such as Parus major, Loxia curvirostra, Regulus regulus, and Muscicapa striata, which are relatively common in this Alpine area, suggests that they may be underrepresented in the original BirdNET training data or that BirdNET’s learned features align poorly with the ambiguous acoustic characteristics of these species in forest habitats. This reinforces the idea that general-purpose models struggle with regionally frequent but acoustically complex taxa [23]. In this respect, a promising way to improve species-specific precision could be to use adaptive thresholds, tuning the decision boundaries to the acoustic characteristics of each species [42].

Our results also reveal vulnerabilities in the presence of acoustic interference or simultaneous vocalizations, which lead to reduced confidence or ambiguous predictions. This limitation is particularly relevant in natural settings during dawn choruses or peak breeding periods, when multiple individuals vocalize simultaneously [43]. A possible mitigation would be to incorporate source separation methods to isolate individual vocalizations [44]. Still, at the vocalization level, BirdNET fine-tuned with data augmentation achieved a micro-average F1-score of 0.647, nearly double the maximum F1-score of 0.339 reported in a previous study [45], although the two evaluations were not performed on the same data. This improvement suggests that fine-tuning the model parameters yields a much better balance between precision and recall and improves the detection of complex and rare vocalizations, bridging the gap between model generalization and site-specific accuracy, especially in underrepresented or acoustically complex habitats.

Notably, the vanilla CNN, while predictably weaker than the fine-tuned BirdNET, still outperformed the original BirdNET, supporting previous findings that customized models can perform adequately when trained on a dataset that closely reflects the target environment [29]. However, the fine-tuned BirdNET outperformed the CNN, especially in handling vocal complexity and detecting rare species, likely owing to its deeper architecture and broader pre-training.

Finally, although our study focused primarily on accuracy improvements, future research should also address the operational scalability and deployment efficiency of these models. This includes testing performance on low-power hardware, understanding memory and energy requirements, and evaluating detection robustness across longer temporal spans.

5. Conclusions

A strength of our work is that it provides one of the first empirical validations of BirdNET fine-tuning in Alpine coniferous forests, addressing challenges related to real-world conditions (e.g., overlapping calls and acoustic interference). Our results confirm that deep learning is a very promising tool for the automatic monitoring of avian biodiversity and that pre-trained classifiers such as BirdNET can be effectively adapted to the environment of interest with minimal effort. At the same time, our results highlight the importance of contextual model adaptation to improve recognition accuracy and ensure consistent performance across diverse ecological conditions, thereby reinforcing the need for conservation practitioners to consider the local calibration of machine learning models, especially when monitoring protected areas where management decisions rely on accurate and timely biodiversity assessments.

From a conservation point of view, locally adapted models would offer a valuable tool to track target species, assess bird communities, and inform conservation actions, especially in remote or rugged areas such as the Alpine regions [18]. The ability to rapidly, automatically, and remotely assess avian species can also support early warning systems for changes in habitat quality and biodiversity loss, enhancing adaptive management. Although lightweight models remain promising where energy or computational efficiency is required, recent advances have shown that larger models such as BirdNET can also be effectively deployed on low-power recording devices [46], enabling real-time acoustic monitoring even in off-grid environments. This broadens the practical potential of AI for scalable and cost-effective biodiversity monitoring in protected and remote areas.

At the same time, our study has several limitations. First, the number of species analyzed was limited, and the dataset, although carefully curated, was narrow in duration and scope. Future research should therefore extend our approach to more species, habitats, and seasonal contexts to test the scalability and robustness of AI models under more variable environmental and acoustic conditions. Second, while we focused on improving model performance through fine-tuning and data augmentation, which directly modify the model weights to enhance generalization, we did not explore alternative strategies that optimize BirdNET’s performance without altering the underlying model, such as adjusting threshold parameters or segment durations, or applying source separation techniques, which could further enhance recognition in complex soundscapes [42]. Although such strategies are lightweight and effective for real-world deployment, they do not directly address the model’s capacity to recognize challenging or rare species, as they do not involve retraining or modifying the underlying neural network. Finally, we did not assess computational aspects or compare performance across devices and deployment environments, an important consideration for real-world applications, particularly in remote or resource-limited settings, which sets the stage for further applied studies.

In conclusion, we believe that our work underscores the potential of deep learning approaches to support biodiversity monitoring in protected and remote areas, offering valuable tools for managers and conservation practitioners tasked with tracking the presence of species and informing management actions. Indeed, the key innovation is the regional fine-tuning of a widely used AI model (BirdNET) for improved ecoacoustic performance in complex habitats. This study contributes to bridging the gap between state-of-the-art AI and on-the-ground conservation needs, highlighting the role of context-specific adaptation, and it provides a replicable framework for the local calibration of bioacoustic models, relevant for other ecosystems worldwide.

Author Contributions

Conceptualization, A.P. and A.T.; methodology, A.T.; software, G.S.; validation, G.S., A.P., and A.T.; resources, A.P. and A.T.; data curation, G.S. and A.P.; writing—original draft preparation, G.S., A.P., and A.T.; writing—review and editing, G.S., A.P., and A.T.; visualization, G.S.; supervision, A.T.; project administration, A.T.; funding acquisition, A.P. and A.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by European Union Next-Generation EU—Piano Nazionale di Ripresa e Resilienza (PNRR)—Missione 4 Componente 2, Investimento 1.1, Bando PRIN 2022 PNRR—DD 1409, for the project ECO-AID (P2022Z8BE2).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All source code used to reproduce the methodology described in the present study can be found at https://github.com/giacomoschiavo/finetuning-BirdNET, accessed on 21 July 2025.

Acknowledgments

The authors would like to thank Ilan Shachar and Roee Diamant for sharing their acoustic tagging software; Andrea Favaretto and Jacopo Barchiesi for their consultation on bird species tagging; and the Department of Land, Environment, Agriculture and Forestry of the University of Padova for the equipment used for the recordings.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Butchart, S.H.; Walpole, M.; Collen, B.; Van Strien, A.; Scharlemann, J.P.; Almond, R.E.; Baillie, J.E.; Bomhard, B.; Brown, C.; Bruno, J.; et al. Global biodiversity: Indicators of recent declines. Science 2010, 328, 1164–1168.

2. Scheffers, B.R.; Joppa, L.N.; Pimm, S.L.; Laurance, W.F. What we know and don’t know about Earth’s missing biodiversity. Trends Ecol. Evol. 2012, 27, 501–510.

3. Pocock, M.J.; Newson, S.E.; Henderson, I.G.; Peyton, J.; Sutherland, W.J.; Noble, D.G.; Ball, S.G.; Beckmann, B.C.; Biggs, J.; Brereton, T.; et al. Developing and enhancing biodiversity monitoring programmes: A collaborative assessment of priorities. J. Appl. Ecol. 2015, 52, 686–695.

4. Krause, B.; Farina, A. Using ecoacoustic methods to survey the impacts of climate change on biodiversity. Biol. Conserv. 2016, 195, 245–254.

5. Campedelli, T.; Florenzano, G.T.; Londi, G.; Cutini, S.; Fornasari, L. Effectiveness of the Italian national protected areas system in conservation of farmland birds: A gap analysis. Ardeola 2010, 57, 51–64.

6. Portaccio, A.; Basile, M.; Favaretto, A.; Campagnaro, T.; Pettenella, D.; Sitzia, T. The role of Natura 2000 in relation to breeding birds decline on multiple land cover types and policy implications. J. Nat. Conserv. 2021, 62, 126023.

7. European Environment Agency. State of Nature in the EU: Results from Reporting Under the Nature Directives 2013–2018; Publications Office: Luxembourg, 2020.

8. Segan, D.B.; Bottrill, M.C.; Baxter, P.W.; Possingham, H.P. Using conservation evidence to guide management. Conserv. Biol. 2011, 25, 200–202.

9. BirdLife International. Birds and Biodiversity Targets: What Do Birds Tell Us About Progress to the Aichi Targets and Requirements for the Post-2020 Biodiversity Framework? A State of the World’s Birds Report; BirdLife International: Cambridge, UK, 2020.

10. Gregory, R.D.; Noble, D.; Field, R.; Marchant, J.; Raven, M.; Gibbons, D. Using birds as indicators of biodiversity. Ornis Hung. 2003, 12, 11–24.

11. Darras, K.; Batáry, P.; Furnas, B.; Celis-Murillo, A.; Van Wilgenburg, S.L.; Mulyani, Y.A.; Tscharntke, T. Comparing the sampling performance of sound recorders versus point counts in bird surveys: A meta-analysis. J. Appl. Ecol. 2018, 55, 2575–2586.

12. Celis-Murillo, A.; Deppe, J.L.; Allen, M.F. Using soundscape recordings to estimate bird species abundance, richness, and composition. J. Field Ornithol. 2009, 80, 64–78.

13. Sugai, L.S.M.; Silva, T.S.F.; Ribeiro, J.W., Jr.; Llusia, D. Terrestrial passive acoustic monitoring: Review and perspectives. BioScience 2019, 69, 15–25.

14. Abrahams, C. Bird bioacoustic surveys-developing a standard protocol. Practice 2018, 102, 20–23.

15. Acevedo, M.A.; Villanueva-Rivera, L.J. From the field: Using automated digital recording systems as effective tools for the monitoring of birds and amphibians. Wildl. Soc. Bull. 2006, 34, 211–214.

16. Priyadarshani, N.; Marsland, S.; Castro, I. Automated birdsong recognition in complex acoustic environments: A review. J. Avian Biol. 2018, 49, jav-01447.

17. Zhao, Z.; Zhang, S.; Xu, Z.; Bellisario, K.; Dai, N.; Omrani, H.; Pijanowski, B.C. Automated bird acoustic event detection and robust species classification. Ecol. Inform. 2017, 39, 99–108.

18. Nieto-Mora, D.; Rodríguez-Buritica, S.; Rodríguez-Marín, P.; Martínez-Vargaz, J.; Isaza-Narváez, C. Systematic review of machine learning methods applied to ecoacoustics and soundscape monitoring. Heliyon 2023, 9, e20275.

19. Nur Korkmaz, B.; Diamant, R.; Danino, G.; Testolin, A. Automated detection of dolphin whistles with convolutional networks and transfer learning. Front. Artif. Intell. 2023, 6, 1099022.

20. Sprengel, E.; Jaggi, M.; Kilcher, Y.; Hofmann, T. Audio based bird species identification using deep learning techniques. In Proceedings of the Conference and Labs of the Evaluation Forum (CLEF) 2016, Evora, Portugal, 5–8 September 2016; pp. 547–559.

21. Maegawa, Y.; Ushigome, Y.; Suzuki, M.; Taguchi, K.; Kobayashi, K.; Haga, C.; Matsui, T. A new survey method using convolutional neural networks for automatic classification of bird calls. Ecol. Inform. 2021, 61, 101164.

22. Madhusudhana, S.; Shiu, Y.; Klinck, H.; Fleishman, E.; Liu, X.; Nosal, E.M.; Helble, T.; Cholewiak, D.; Gillespie, D.; Širović, A.; et al. Improve automatic detection of animal call sequences with temporal context. J. R. Soc. Interface 2021, 18, 20210297.

23. Kahl, S.; Wood, C.M.; Eibl, M.; Klinck, H. BirdNET: A deep learning solution for avian diversity monitoring. Ecol. Inform. 2021, 61, 101236.

24. Wood, C.M.; Kahl, S.; Rahaman, A.; Klinck, H. The machine learning–powered BirdNET App reduces barriers to global bird research by enabling citizen science participation. PLoS Biol. 2022, 20, e3001670.

25. Pérez-Granados, C. BirdNET: Applications, performance, pitfalls and future opportunities. Ibis 2023, 165, 1068–1075.

26. Pérez-Granados, C. A first assessment of BirdNET performance at varying distances: A playback experiment. Ardeola 2023, 70, 257–269.

27. Matsinos, Y.G.; Tsaligopoulos, A. Hot spots of ecoacoustics in Greece and the issue of background noise. J. Ecoacoustics 2018, 2, 14.

28. Joppa, L.N.; Pfaff, A. High and far: Biases in the location of protected areas. PLoS ONE 2009, 4, e8273.

29. Haley, S.M.; Madhusudhana, S.; Branch, C.L. Comparing detection accuracy of mountain chickadee (Poecile gambeli) song by two deep-learning algorithms. Front. Bird Sci. 2024, 3, 1425463.

30. Hardersen, S.; Mason, F.; Viola, F.; Campedel, D.; Lasen, C.; Cassol, M. Research on the natural heritage of the reserves Vincheto di Celarda and Val Tovanella (Belluno province, Italy). In Conservation of Two Protected Areas in the Context of a LIFE Project. Quaderni Conservazione Habitat Invertebrati; Arti Grafiche Fiorini: Verona, Italy, 2008; Volume 5.

31. Lasen, C.; Scariot, A.; Sitzia, T. Natura 2000 Habitats map, forest types and vegetation outline of Val Tovanella Nature Reserve. In Research on the Natural Heritage of the Reserves Vincheto di Celarda and Val Tovanella (Belluno Province, Italy). Conservation of Two Protected Areas in the Context of a LIFE Project; Arti Grafiche Fiorini: Verona, Italy, 2008; pp. 325–334.

32. Hill, A.P.; Prince, P.; Snaddon, J.L.; Doncaster, C.P.; Rogers, A. AudioMoth: A low-cost acoustic device for monitoring biodiversity and the environment. HardwareX 2019, 6, e00073.

33. Planqué, B.; Vellinga, W.P. Xeno-Canto: A 21st century way to appreciate neotropical bird song. Neotrop. Bird 2008, 3, 17–23.

34. Vellinga, W.P.; Planqué, R. The Xeno-canto Collection and its Relation to Sound Recognition and Classification. In Proceedings of the CLEF (Working Notes), Toulouse, France, 8–11 September 2015.

35. Krause, B. Bioacoustics, habitat ambience in ecological balance. Whole Earth Rev. 1987, 57, 14–18.

36. Diamant, R.; Testolin, A.; Shachar, I.; Galili, O.; Scheinin, A. Observational study on the non-linear response of dolphins to the presence of vessels. Sci. Rep. 2024, 14, 6062.

37. Nanni, L.; Maguolo, G.; Paci, M. Data augmentation approaches for improving animal audio classification. Ecol. Inform. 2020, 57, 101084.

38. Stowell, D.; Wood, M.D.; Pamuła, H.; Stylianou, Y.; Glotin, H. Automatic acoustic detection of birds through deep learning: The first bird audio detection challenge. Methods Ecol. Evol. 2019, 10, 368–380.

39. Florentin, J.; Dutoit, T.; Verlinden, O. Detection and identification of European woodpeckers with deep convolutional neural networks. Ecol. Inform. 2020, 55, 101023.

40. Slabbekoorn, H.; Yeh, P.; Hunt, K. Sound transmission and song divergence: A comparison of urban and forest acoustics. Condor 2007, 109, 67–78.

41. Hoefer, S.; McKnight, D.T.; Allen-Ankins, S.; Nordberg, E.J.; Schwarzkopf, L. Passive acoustic monitoring in terrestrial vertebrates: A review. Bioacoustics 2023, 32, 506–531.

42. Pérez-Granados, C.; Funosas, D.; Morant, J.; Acosta, M.; Tarifa, R.; Devenish, D.K.N.; Fléau, H.L.; Rocha, P.A.; Bělka, T.; Desmet, L.; et al. Optimisation of passive acoustic bird surveys: A global assessment of BirdNET settings. Res. Sq. 2025, preprint (Version 1).

43. Staicer, C.A.; Spector, D.A.; Horn, A.G. The dawn chorus and other diel patterns in acoustic signaling. In Ecology and Evolution of Acoustic Communication in Birds; Comstock Pub. Associates: Ithaca, NY, USA, 1996; pp. 426–453.

44. Luo, Y.; Mesgarani, N. Conv-TasNet: Surpassing ideal time-frequency magnitude masking for speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1256–1266.

45. Funosas, D.; Barbaro, L.; Schillé, L.; Elger, A.; Castagneyrol, B.; Cauchoix, M. Assessing the potential of BirdNET to infer European bird communities from large-scale ecoacoustic data. Ecol. Indic. 2024, 164, 112146.

46. Bota, G.; Manzano-Rubio, R.; Catalán, L.; Gómez-Catasús, J.; Pérez-Granados, C. Hearing to the unseen: AudioMoth and BirdNET as a cheap and easy method for monitoring cryptic bird species. Sensors 2023, 23, 7176.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).