1. Introduction

The global aviation industry is undergoing a rapid digital transformation, driven by increasing demands for safety, operational efficiency, and real-time decision-making. Amid this evolution, the role of aviation maintenance technicians has grown more complex, requiring proficiency not only in traditional mechanical procedures but also in data interpretation, system-level diagnostics, and adaptive learning. According to forecasts by leading aviation stakeholders, over 700,000 new maintenance professionals will be needed by 2043 to support fleet expansion and technological modernization [1]. Existing training models, which rely largely on static courseware and infrequent practical assessments, struggle to deliver the depth, adaptability, and individualization needed in today’s data-rich aviation environment.

Digital twin (DT) technology, originally developed in the manufacturing sector, refers to a dynamic digital representation of a physical system or process that enables real-time monitoring, simulation, and decision-making across its lifecycle. This concept emphasizes the value of virtual replicas in enhancing operational efficiency and system understanding [2]. Building upon this, the architecture of Digital Twin as a Service was formalized within Industry 4.0 frameworks to support scalable, cloud-integrated, and service-oriented applications across sectors [3]. These developments have led to the emergence of DT ecosystems that can replicate, predict, and adapt to complex system behaviors, making them particularly suitable for aviation training, where real-time diagnostics, predictive maintenance, and regulatory alignment are critical.

In response to these challenges, this study proposes a digital-twin-based training (DTBT) ecosystem specifically designed for aviation maintenance. The system builds on three foundational concepts: learner digital twins (LDTs) that dynamically reflect a trainee’s evolving competence profile; ideal competence twins (ICTs) that encode the regulatory and operational skill benchmarks derived from aviation normative documents; and a four-level learning ecosystem twin (LET) that curates training resources across a fidelity spectrum from traditional computer-based training (CBT) to high-fidelity operational twins.

The emergence of digital twin technology has opened new pathways for transforming educational methodology. Within digital twin educational environments, learners can operate physical entities and their digital counterparts concurrently, gaining hands-on experience and conducting investigations that mirror physical learning contexts [4]. This concurrent operation not only strengthens students’ practical skills but also deepens their comprehension and their ability to apply knowledge [5]. Nevertheless, most existing studies remain at the conceptual validation or initial deployment stage, and their practical impact and potential for widespread adoption still require confirmation [6]. In terms of interactivity, learners can use wearable devices to manipulate elements in digital twin spaces or explore digital twin learning environments immersively as virtual avatars [7]. This mode of engagement supports pervasive, geographically distributed learning activities and productive team collaboration [8].

However, integrating these technologies into practice in a way that secures students’ learning experience and tangible outcomes presents numerous obstacles [9]. As computing technology progresses, the use of digital twins in education has steadily broadened. Contemporary research mainly emphasizes the development of digital twin learning environments and instructional support frameworks [10]. Diverse digital twin learning contexts have been established, including intelligent digital twin learning spaces [11], digital twin systems [12], and immersive architectural prototype twin environments [13].

The real-time interaction, virtual–physical integration, and comprehensive insight delivered by digital twins provide learners with environments and materials that can be viewed, experienced, manipulated, tested, and evolved [14]. Digital twin technology has also enabled the creation of cognitive digital twins [15] and digital-twin-supported instructional systems [16]. These frameworks deliver customized learning paths and instructional designs through continuous data evaluation and feedback, illustrating the capacity of digital twin technology to improve instructional quality and effectiveness.

Digital twins are being actively researched in various areas of aviation. One paper [17] introduces a holistic framework for applying digital twins to aircraft lifecycle management, emphasizing the use of data-driven models to improve decision-making and operational performance. The research presented in [18] introduces a digital twin framework tailored to twin-spool turbofan engines that aims to improve accuracy by combining the strengths of mechanism-based models and data-driven approaches. In a broader, fleet-wide context, another study [19] proposes a comprehensive monitoring and diagnostics framework for aircraft health management.

The term DTBT ecosystem refers to a structured educational framework that integrates dynamic digital replicas of learners, competence models, and learning environments to enable real-time monitoring, personalized instruction, and regulatory alignment in technical education. This ecosystem relies on continuous feedback loops and fidelity-stratified content delivery to adapt instruction to evolving learner profiles. Several technology providers have already explored the application of digital twin technology to aviation training challenges. For instance, PTC has developed digital twin solutions integrated with its platforms that enable interactive procedural training via augmented reality and 3D system simulations [20]. Similarly, IBM has implemented digital-twin-based predictive analytics and procedural training modules through its Maximo platform, focusing on system diagnostics and asset performance in aviation maintenance contexts [21].

Major aviation companies have been actively developing platforms to predict component wear and optimize maintenance strategies. Some of the most significant advancements towards digital twins in the aviation sector include Aviatar (Lufthansa Technik, Hamburg, Germany) [22], Skywise (Airbus, Blagnac, France) [23], Predix (General Electric, San Ramon, CA, USA) [24], PROGNOS (Air France Industries and KLM Engineering & Maintenance, Paris, France) [25], AnalytX (Boeing, Crystal City, VA, USA) [26], and others.

Innovative approaches to aviation maintenance training include the use of virtual reality (VR) and adaptive game-based environments. One paper [27] investigates the effectiveness of virtual simulation-based training for aviation maintenance technicians and concludes that VR technology can enhance training outcomes when combined with traditional methods. Additionally, a study [28] discusses maintenance training in an adaptive game-based environment that uses a pedagogic interpretation engine to adapt training scenarios dynamically and maximize their effectiveness.

Virtual maintenance training offers interactive 3D simulations for effective skill development. One study [29] developed an aircraft maintenance virtual reality system for training students in the aviation industry and demonstrated its effectiveness in improving training outcomes. Issues related to the application and training of artificial intelligence in aviation are analyzed comprehensively in [30].

Despite significant progress in the application of digital twin technologies and immersive environments for training, existing studies in aviation maintenance education primarily remain at the conceptual or prototype stage. These approaches often lack robust orchestration mechanisms that adapt training content dynamically based on individual learner profiles, real-time performance data, and regulatory compliance metrics. Moreover, current systems do not fully integrate fidelity-stratified content assignment or closed-loop feedback for competence tracking, which limits their scalability, auditability, and instructional precision.

This article addresses these critical gaps by introducing a fully realized digital-twin-based ecosystem for aviation maintenance training that combines LDT, ICT, and LET within a modular, cloud–edge hybrid architecture. The main contribution lies in the design and experimental validation of an adaptive orchestration engine capable of real-time gap analysis, fidelity-matched content delivery, and comprehensive validation logging aligned with European Union Aviation Safety Agency (EASA) requirements.

The remainder of this paper is structured as follows:

Section 2 presents the conceptual framework, system architecture, and mathematical models underlying the ecosystem;

Section 3 describes the simulation setup and evaluates system behavior and learning outcomes;

Section 4 discusses scalability, regulatory readiness, and lessons from deployment, and

Section 5 concludes with insights into limitations and future research directions.

2. Materials and Methods

2.1. Conceptual Framework

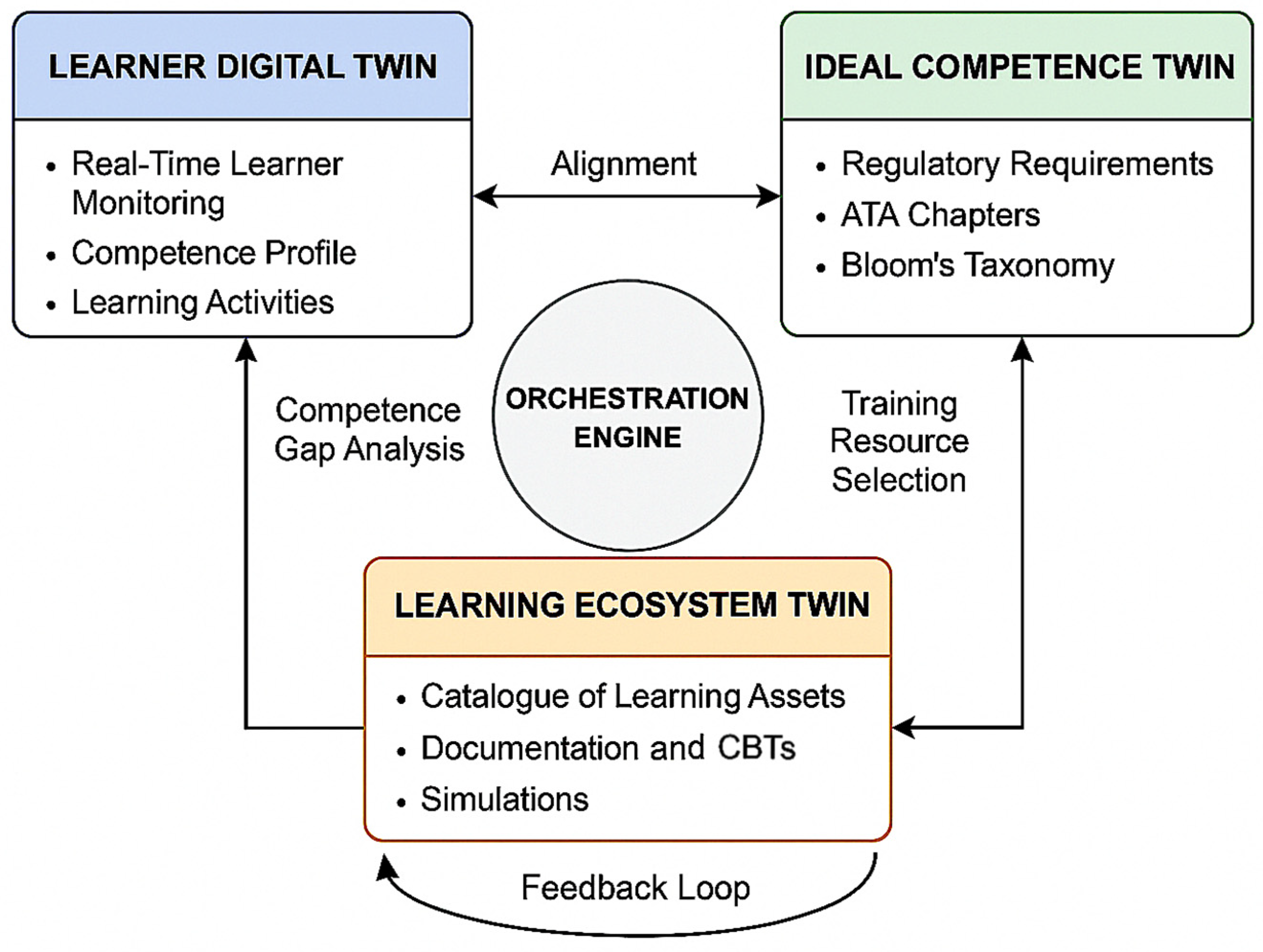

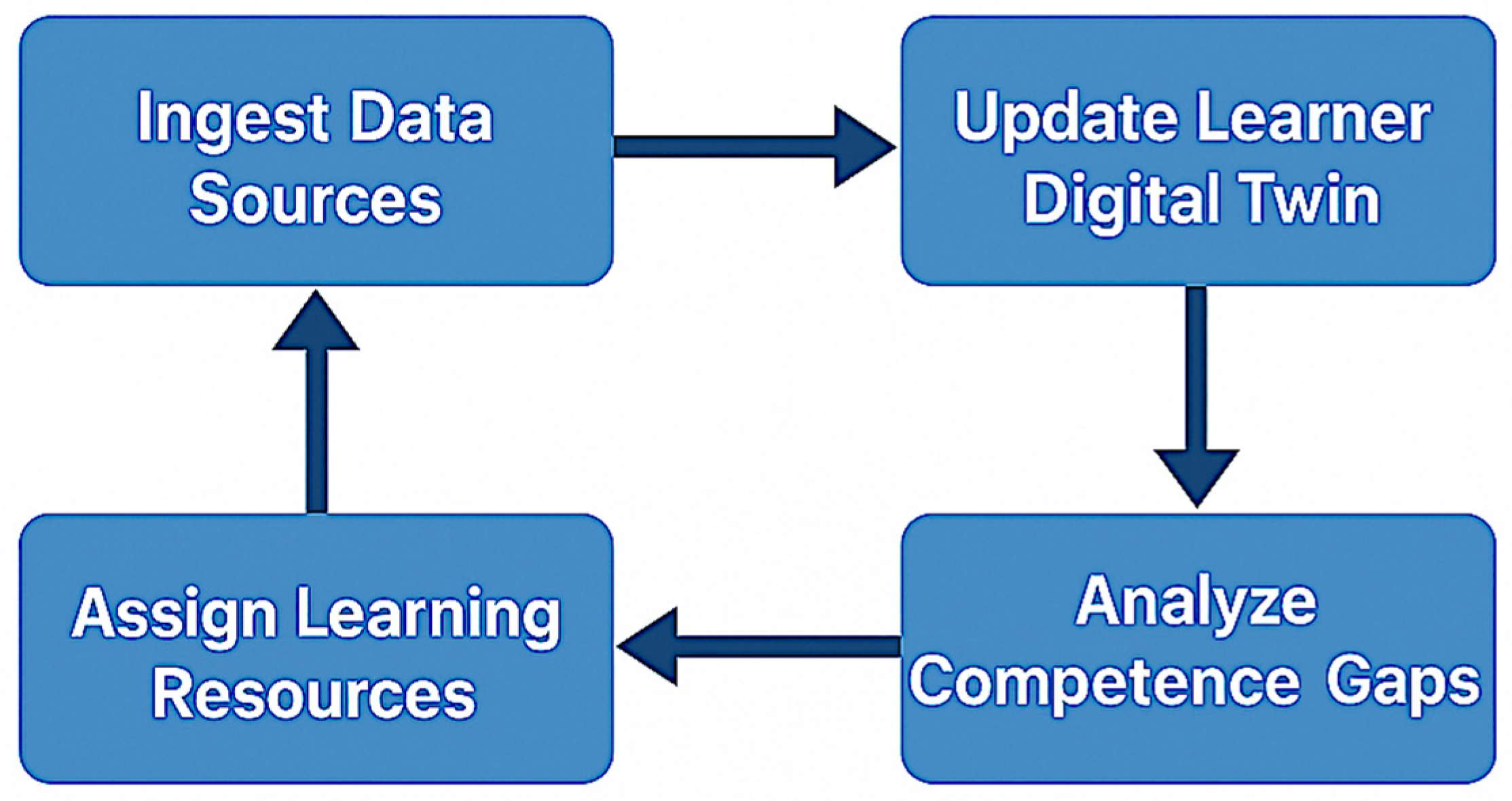

The proposed digital-twin-based training ecosystem is grounded in a multi-layered conceptual framework that integrates real-time learner monitoring, regulatory competence modeling, and adaptive content delivery. This framework orchestrates the interaction of three interdependent digital twin models (LDT, ICT, and LET) within a continuous feedback loop (Figure 1).

The learner digital twin serves as a real-time digital replica of the trainee’s evolving competence profile. It continuously captures individual learning activities, assessment outcomes, interaction patterns, and behavioral markers. The LDT reflects not only the current level of mastery across technical domains but also temporal patterns such as learning speed, error recurrence, and decision-making latency. This enables personalized tracking and adaptive interventions over the course of the training program.

The ideal competence twin functions as a normative reference model, encoding the required knowledge, skills, and performance standards derived from international aviation maintenance regulations and instructional frameworks. Specifically, it integrates the structure of EASA Part-66 training modules [31], organizes content by Air Transport Association (ATA) e-Business Program chapters [32], and applies Bloom’s Taxonomy [33] to define expected levels of cognitive, psychomotor, and affective learning. The ICT thus serves as the benchmark against which each LDT is periodically compared to identify competence gaps at a granular level.

The learning ecosystem twin is a structured repository of all instructional assets available within the training environment. These include static resources such as manuals and CBTs, interactive simulations, VR-based procedural walkthroughs, and sliced versions of full operational digital twins derived from actual aircraft telemetry and maintenance logs. The LET not only catalogues assets by topic and fidelity level but also annotates each resource with metadata such as learning objectives, fidelity rating, expected duration, and regulatory alignment.

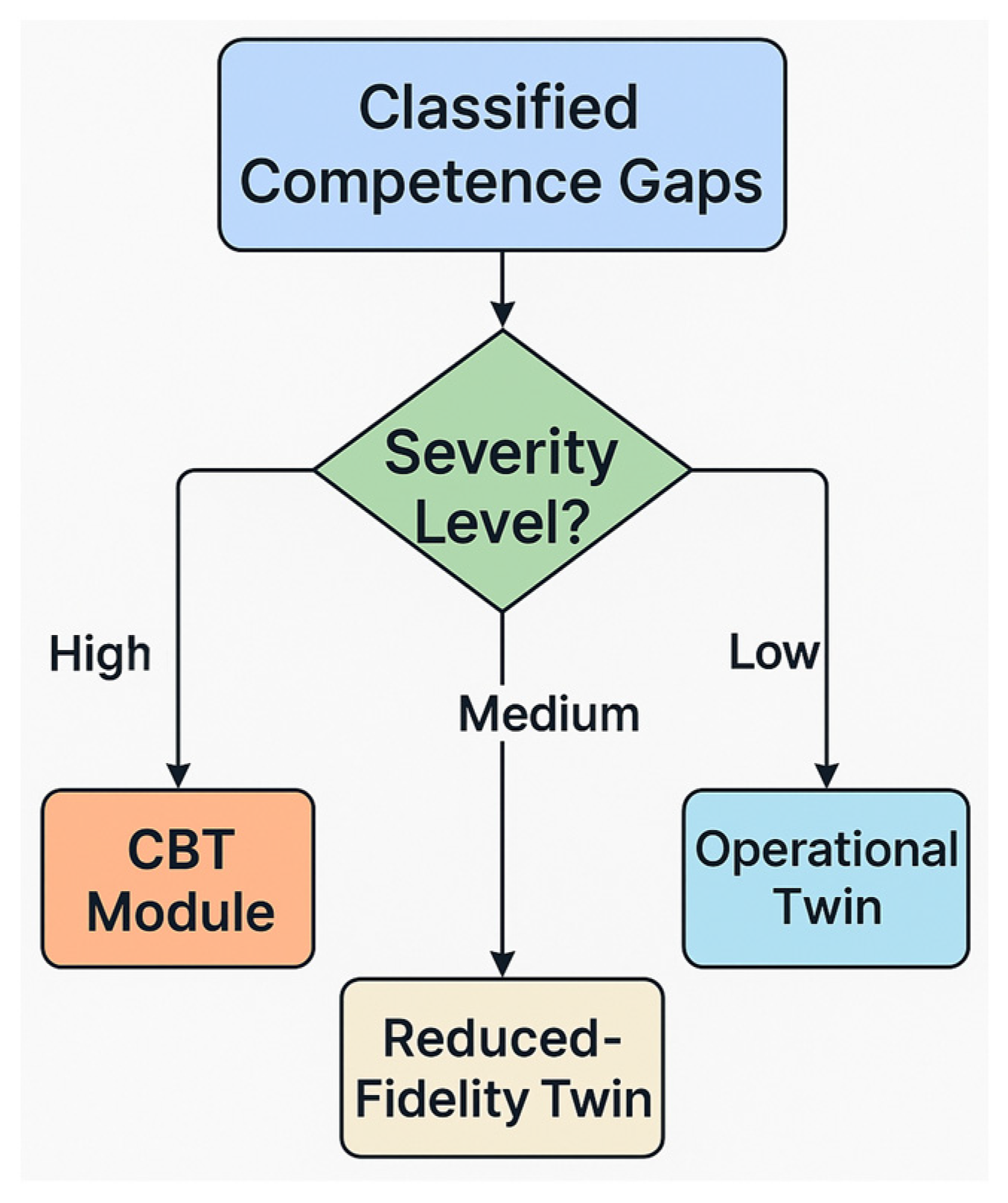

Together, these three twins are continuously aligned through an orchestration engine that identifies deviations between the LDT and ICT, ranks the magnitude of detected gaps, and selects an appropriate training resource from the LET. The orchestration logic is governed by predefined thresholds that classify gaps into high, medium, or low severity, each mapped to a corresponding content fidelity tier. Once a resource is deployed to the learner, all interactions are streamed back in real time to update the LDT and inform the next cycle of training decisions.
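The threshold-based mapping from gap severity to fidelity tier can be sketched as follows. This is a minimal illustration under stated assumptions: proficiency is normalized to [0, 1], the cut-offs `HIGH_THRESHOLD` and `MEDIUM_THRESHOLD` are hypothetical (the text gives no numeric thresholds), and the severity-to-tier mapping follows the stratification in Section 2.4, where broad gaps receive low-fidelity content and minimal gaps receive high-fidelity content.

```python
from typing import Optional

# Hypothetical cut-offs on a normalized gap in [0, 1]; not values from the study.
HIGH_THRESHOLD = 0.5
MEDIUM_THRESHOLD = 0.2

# Assumed mapping: broad gaps get foundational (low-fidelity) content,
# minimal gaps get high-fidelity operational twins.
SEVERITY_TO_FIDELITY = {"high": "low", "medium": "medium", "low": "high"}


def classify_gap(required: float, current: float) -> Optional[str]:
    """Classify the shortfall between the ICT target and the LDT proficiency."""
    gap = required - current
    if gap <= 0.0:
        return None  # competence already meets the benchmark
    if gap >= HIGH_THRESHOLD:
        return "high"
    if gap >= MEDIUM_THRESHOLD:
        return "medium"
    return "low"


def select_fidelity(required: float, current: float) -> Optional[str]:
    """Return the LET fidelity tier for a gap, or None if no remediation is needed."""
    severity = classify_gap(required, current)
    return SEVERITY_TO_FIDELITY[severity] if severity else None


print(select_fidelity(0.9, 0.2))   # gap 0.7: high severity
print(select_fidelity(0.9, 0.6))   # gap 0.3: medium severity
print(select_fidelity(0.9, 0.85))  # gap 0.05: low severity
```

Returning `None` for a closed gap keeps the orchestration loop from assigning content to competences that already meet the ICT benchmark.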

This conceptual framework transforms traditional maintenance training into a closed-loop, evidence-based learning system in which instructional content dynamically adapts to learner needs and competence progression is continuously benchmarked against formal regulatory expectations. By anchoring the learning process in validated digital twins, the ecosystem ensures both pedagogical relevance and regulatory compliance across all phases of technical education.

2.2. System Architecture and Data Flow

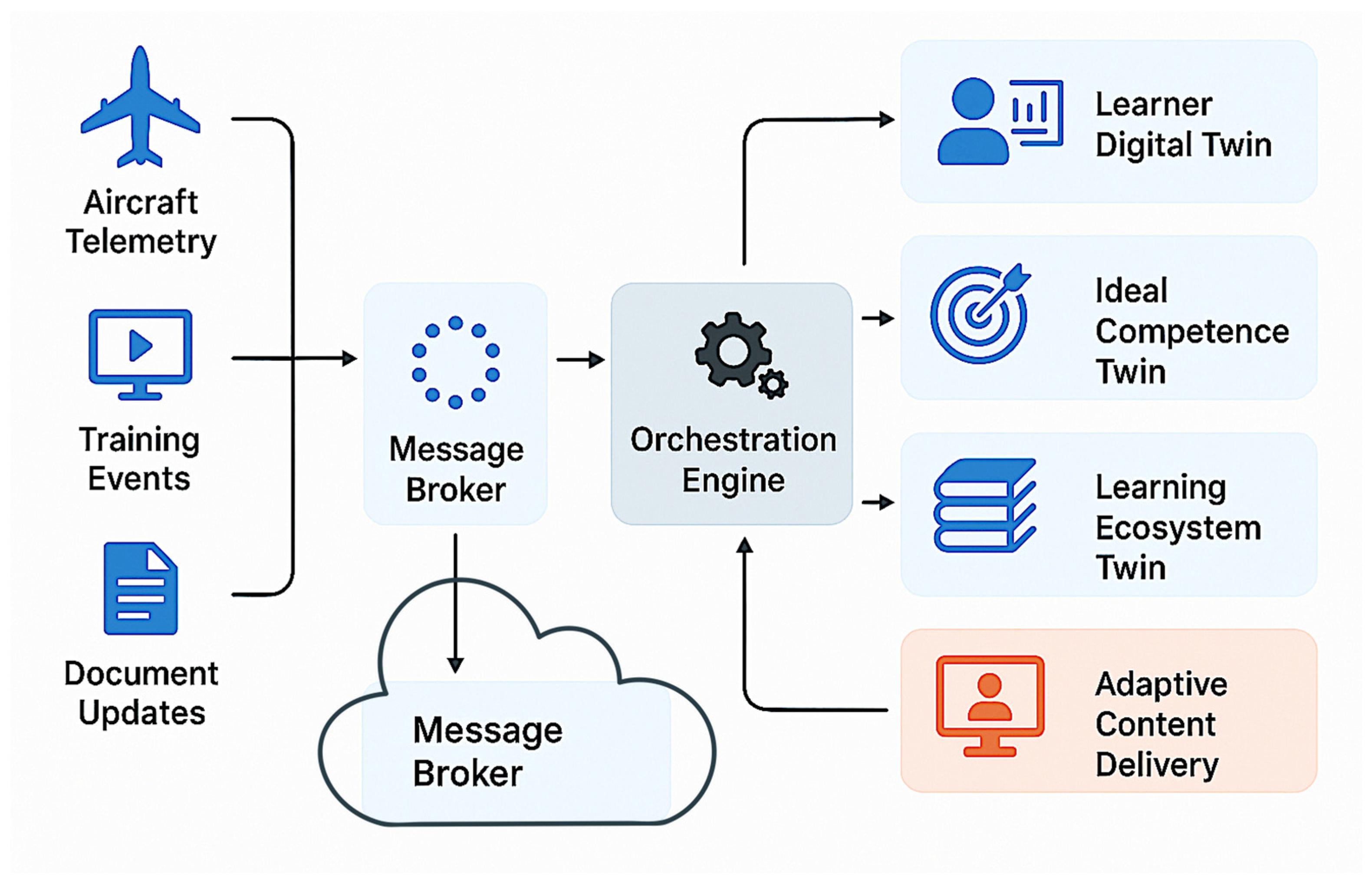

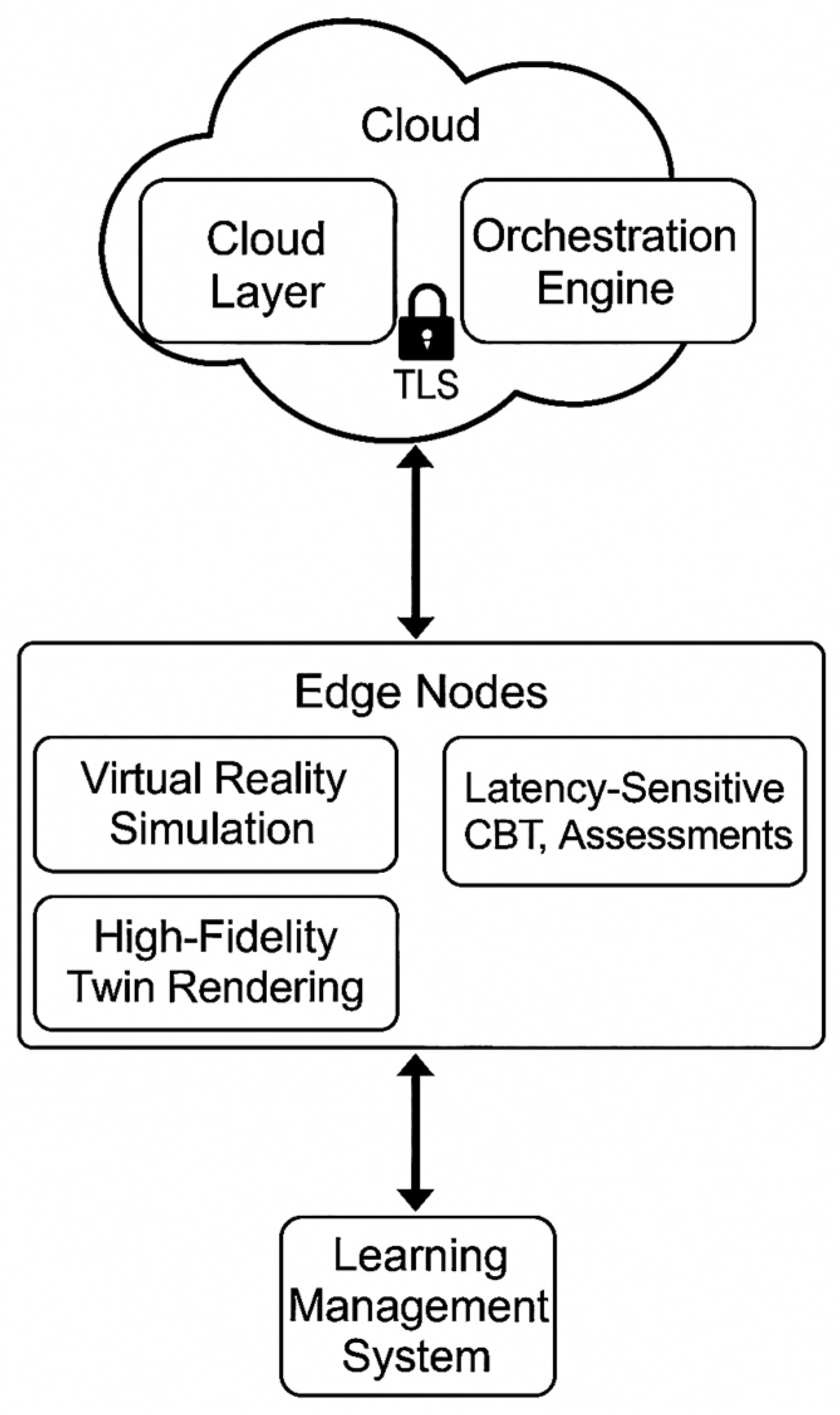

The architecture of the proposed aviation maintenance training ecosystem is designed to support dynamic learner modeling, personalized training orchestration, and traceable validation within a modular and scalable framework (Figure 2). The system integrates multiple interacting components within a cloud–edge hybrid infrastructure that facilitates both real-time responsiveness and resource-intensive simulation delivery.

At the core of the architecture is the adaptive orchestration engine, which serves as the decision-making hub. It processes data from three digital twin layers: the LDT, which maintains an up-to-date representation of the learner’s competence profile; the ICT, which encodes regulatory skill requirements and serves as the benchmark for gap analysis; and the LET, which categorizes all available training resources by fidelity, Bloom’s taxonomy level, domain relevance, and regulatory linkage.

Central to this architecture is a secure, event-driven infrastructure that connects the three core digital twin layers (LDT, ICT, and LET) via the centralized orchestration engine and a publish–subscribe message backbone built on Apache Kafka [34] or the Message Queuing Telemetry Transport (MQTT) protocol [35].

The system begins by ingesting heterogeneous data streams from multiple sources. These include operational telemetry from aircraft digital twins; xAPI-formatted learning activity logs (xAPI is an eLearning specification that makes it possible to collect data about the wide range of experiences a person has within online and offline training activities [36]); and document updates such as revised maintenance manuals or airworthiness directives. All inputs are encrypted and serialized before being published to their respective topics on the streaming backbone. A schema registry ensures that data structures remain consistent across training modules, learner groups, and content sources.
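The ingestion step can be illustrated with a minimal xAPI statement. The sketch below assumes a pseudonymous `account`-based actor and uses hypothetical activity IRIs and helper names (`make_statement`, `validate_and_serialize`); the required-property check is only a simplified stand-in for a real schema registry, and the resulting bytes are what a producer would publish to a Kafka or MQTT topic.

```python
import json
from datetime import datetime, timezone

REQUIRED_KEYS = ("actor", "verb", "object")  # mandatory xAPI statement properties


def make_statement(learner_id: str, activity_iri: str, scaled_score: float,
                   success: bool) -> dict:
    """Build a minimal xAPI 'completed' statement for one training activity."""
    return {
        "actor": {
            "objectType": "Agent",
            # Pseudonymous account; the homePage URL is a hypothetical placeholder.
            "account": {"homePage": "https://lms.example.org", "name": learner_id},
        },
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {"objectType": "Activity", "id": activity_iri},
        "result": {"score": {"scaled": scaled_score}, "success": success,
                   "completion": True},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def validate_and_serialize(statement: dict) -> bytes:
    """Simplified stand-in for a schema-registry check before publishing."""
    missing = [key for key in REQUIRED_KEYS if key not in statement]
    if missing:
        raise ValueError(f"missing xAPI properties: {missing}")
    scaled = statement.get("result", {}).get("score", {}).get("scaled")
    if scaled is not None and not -1.0 <= scaled <= 1.0:
        raise ValueError("result.score.scaled must lie in [-1, 1]")
    return json.dumps(statement, sort_keys=True).encode("utf-8")


payload = validate_and_serialize(make_statement(
    "trainee-042", "https://training.example.org/ata29/pump-fault-sim", 0.82, True))
print(json.loads(payload)["verb"]["display"]["en-US"])  # completed
```

Rejecting malformed statements before publication keeps downstream consumers, such as the LDT updater, from having to handle inconsistent structures.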

The presence of two “Message Broker” elements in Figure 2 reflects their dual operational context within the cloud–edge hybrid infrastructure. Although they share the same functional role (streaming and routing of data), they are deployed separately at the edge and cloud layers to manage data locality, latency, and security.

The upper message broker operates within the orchestration layer, routing incoming telemetry, training events, and document updates to the orchestration engine. In contrast, the lower broker, marked with the cloud symbol, represents the backend cloud-level message exchange layer that supports inter-institutional synchronization, long-term data storage, and asynchronous delivery of training records across distributed edge nodes.

This architectural split reflects the system’s design principle of decoupling latency-sensitive training orchestration from backend analytics and archival services. The directional flow between these brokers, shown as a one-way arrow, illustrates the push of raw data from the edge environment toward centralized orchestration logic while maintaining modular separation of real-time orchestration and long-term analytics. This dual-broker pattern ensures scalability, modularity, and robustness in multi-institutional deployments while supporting real-time responsiveness for training orchestration at the learner-facing edge.

At the heart of the system, the orchestration engine subscribes to these real-time data streams and performs dynamic alignment between each learner’s current competence profile (LDT) and the regulatory and operational expectations encoded in the ICT. By comparing multi-dimensional competence vectors, the engine identifies gaps, ranks their severity based on threshold deltas across cognitive depth, domain coverage, and operational importance, and selects appropriate instructional resources from the LET. This selection process considers multiple factors, including gap size, prior learner behavior, instructional metadata, and resource fidelity.
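A minimal sketch of the gap identification and ranking step, assuming each competence dimension is a scalar proficiency in [0, 1] and that severity is weighted by a single operational-importance factor per dimension. The dimension names, weights, and the multiplicative priority rule are hypothetical simplifications; the cognitive-depth and domain-coverage criteria mentioned above are collapsed into one score here.

```python
from typing import Dict, List, Tuple


def rank_gaps(ict: Dict[str, float], ldt: Dict[str, float],
              importance: Dict[str, float]) -> List[Tuple[str, float, float]]:
    """Return (dimension, gap, priority) tuples, highest priority first."""
    rows = []
    for dim, required in ict.items():
        gap = max(0.0, required - ldt.get(dim, 0.0))  # only shortfalls count
        priority = importance.get(dim, 1.0) * gap      # weight by operational importance
        if gap > 0.0:
            rows.append((dim, gap, priority))
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Hypothetical competence dimensions keyed by ATA chapter and task.
ict = {"ATA24-ac-distribution": 0.80, "ATA29-pump-diagnosis": 0.90,
       "ATA32-gear-retraction": 0.85, "ATA36-bleed-isolation": 0.90}
ldt = {"ATA24-ac-distribution": 0.75, "ATA29-pump-diagnosis": 0.40,
       "ATA32-gear-retraction": 0.90, "ATA36-bleed-isolation": 0.60}
importance = {"ATA24-ac-distribution": 0.6, "ATA29-pump-diagnosis": 1.0,
              "ATA32-gear-retraction": 0.9, "ATA36-bleed-isolation": 0.8}

for dim, gap, priority in rank_gaps(ict, ldt, importance):
    print(f"{dim:<24} gap={gap:.2f} priority={priority:.3f}")
```

Here the ATA 32 dimension drops out because the learner already exceeds its benchmark, while the large, highly weighted ATA 29 gap is ranked first.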

Once a resource is selected, it is deployed to the learner through a front-end delivery interface, which may include a traditional learning management system (LMS), virtual reality (VR) or augmented reality systems, or portable CBT platforms. To manage performance and accessibility, the ecosystem employs a hybrid deployment model: latency-sensitive and lightweight resources such as CBT modules are delivered via local edge nodes, while GPU-intensive simulations and VR training modules are rendered through elastic cloud environments. This model ensures scalability, responsiveness, and compatibility with diverse training scenarios and locations.

During each training session, all learner interactions, ranging from diagnostic decisions to simulation paths and time-based performance metrics, are captured and streamed back to the orchestration engine in real time. These interactions are used to update the LDT, refine the learner’s competence vector, and inform the next iteration of gap analysis. Simultaneously, all training events are logged into a secure validation matrix that includes metadata such as scenario identifiers, asset versions, session outcomes, and cryptographic hashes to guarantee audit integrity. This matrix can be queried by instructors, quality managers, or regulatory auditors to validate training relevance and compliance with EASA Part-66 standards.
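The audit-integrity property can be illustrated by chaining record hashes, so that altering any past session invalidates the chain from that point on. Chaining is an assumption made for this sketch (the text specifies cryptographic hashes but not how they are linked); the record fields mirror the metadata listed above, and the function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: dict) -> str:
    body = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_record(matrix: list, record: dict) -> dict:
    """Append a session record whose hash covers its content and the previous hash."""
    prev_hash = matrix[-1]["hash"] if matrix else GENESIS
    entry = {"record": record, "prev_hash": prev_hash,
             "hash": _digest(prev_hash, record)}
    matrix.append(entry)
    return entry


def verify(matrix: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in matrix:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


matrix = []
append_record(matrix, {"scenario_id": "ATA29-S07", "asset_version": "2.1",
                       "outcome": "fail"})
append_record(matrix, {"scenario_id": "ATA29-S07", "asset_version": "2.1",
                       "outcome": "pass"})
print(verify(matrix))  # True
matrix[0]["record"]["outcome"] = "pass"
print(verify(matrix))  # False: the first entry was altered
```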

Security and data governance are integral to the system. All communications are protected via TLS encryption, and role-based access control (RBAC) ensures that users only access the data relevant to their function—be it learner, instructor, auditor, or system administrator. To protect proprietary data, especially in operational twin slices used for training, digital rights management (DRM) is enforced. Furthermore, all sensitive component identifiers are scrubbed during the transformation of full operational twins into training-ready digital slices, thus preserving behavior-critical dynamics while safeguarding OEM intellectual property.
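The identifier-scrubbing step can be sketched as keyed aliasing: each sensitive identifier is replaced by a stable HMAC-derived alias, so the same component keeps the same alias across samples (preserving correlations in the telemetry) while the real identifier is hidden. The field names, key, and alias format are hypothetical, and keyed hashing is one possible technique rather than the method confirmed for the described system.

```python
import hashlib
import hmac

# Hypothetical field names treated as OEM-sensitive identifiers.
SENSITIVE_FIELDS = {"part_number", "serial_number", "supplier_code"}


def scrub_sample(sample: dict, key: bytes) -> dict:
    """Replace sensitive identifiers with stable aliases; keep signal values intact."""
    scrubbed = {}
    for field, value in sample.items():
        if field in SENSITIVE_FIELDS:
            tag = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
            scrubbed[field] = f"COMP-{tag[:12]}"
        else:
            scrubbed[field] = value  # behavior-critical telemetry passes through
    return scrubbed


key = b"training-slice-secret"  # placeholder; a real key would be managed securely
sample = {"t": 12.5, "duct_pressure_psi": 41.2, "part_number": "ABC-123-45"}
print(scrub_sample(sample, key))
```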

Through this architectural design, the training ecosystem achieves a high degree of automation, adaptability, and regulatory accountability. It enables continuous alignment of instructional resources with both individual learner needs and evolving operational contexts, thereby transforming maintenance training into a responsive and evidence-based process.

Table 1 summarizes the key components of the proposed DTBT ecosystem, along with their core functions, enabling technologies, and roles within the data flow pipeline.

2.3. Four-Level Learning Architecture of the Digital Twin Ecosystem

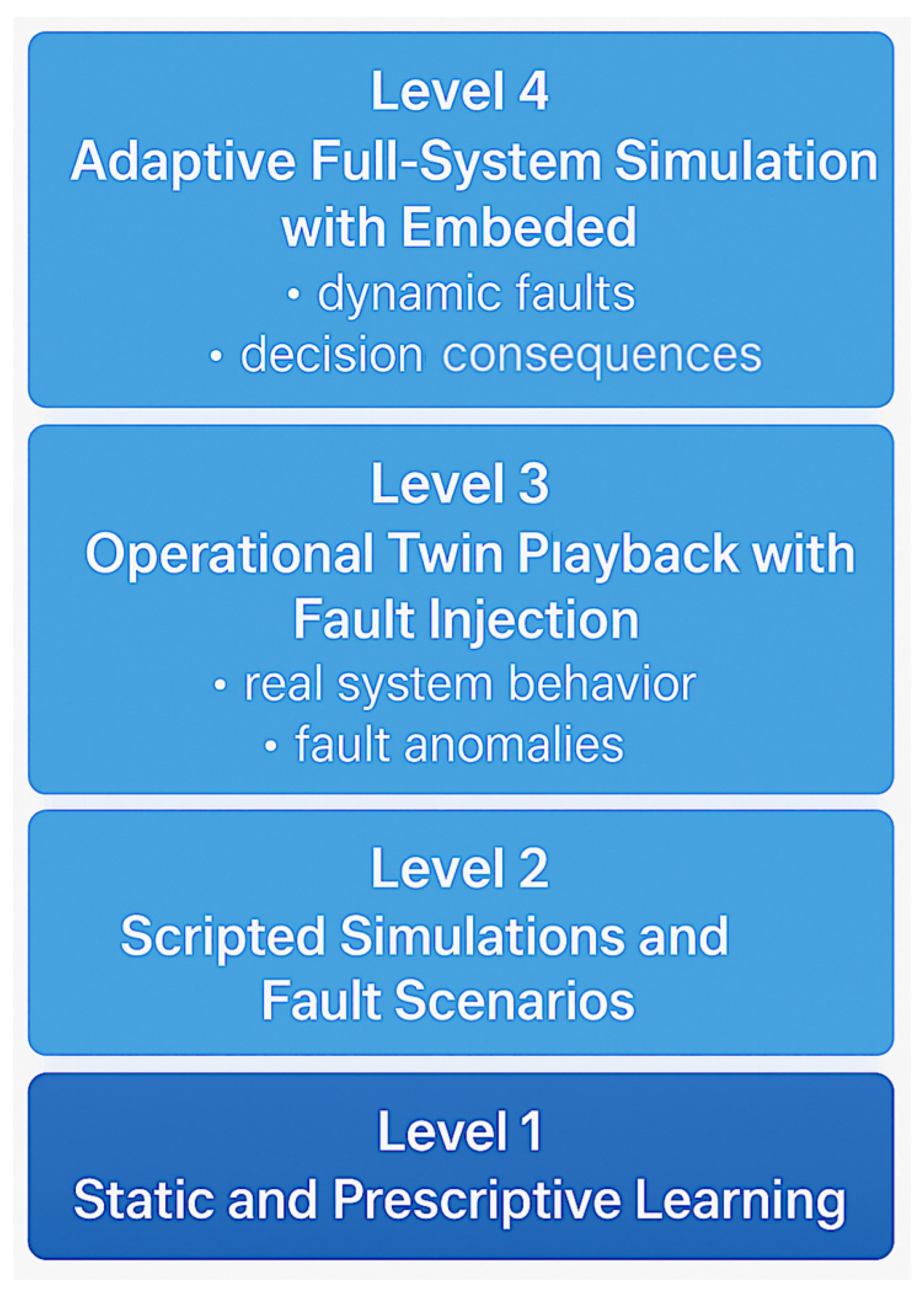

The training ecosystem is built on a four-level learning architecture that enables progressive, personalized, and regulation-aligned development of aviation maintenance competencies. This structure is embedded within the LET and allows the orchestration engine to deliver content dynamically, based on the severity of detected competence gaps and the learner’s evolving profile. The levels represent a fidelity gradient, from static theoretical instruction to full-system digital twin simulations, mapped to increasing Bloom’s taxonomy stages and operational complexity (Figure 3).

At the foundation is Level 1, comprising static and prescriptive learning resources. These include traditional CBT modules, digital manuals, procedural checklists, and multimedia tutorials. Content at this level is designed to support fundamental knowledge acquisition, particularly for learners exhibiting high-severity gaps in low-order cognitive domains such as remembering and understanding. Delivery typically occurs via learning management systems, mobile apps, or downloadable documents. Digital twins are absent or represented symbolically, serving primarily a referential function.

Level 2 introduces scripted simulations and fault scenarios with medium fidelity. Learners engage in guided diagnostic sequences or procedural walk-throughs using reduced-function digital twins. These assets simulate predictable faults, procedural errors, or system states, enabling learners to practice applying knowledge in controlled environments. The focus is on mid-level Bloom’s taxonomy objectives, such as applying and analyzing, and the delivery may take the form of interactive browser-based modules or tablet-based tools. The digital twin slices used at this level allow limited input manipulation and visual feedback.

In Level 3, the training experience incorporates operational digital twin playback combined with fault injection. These high-fidelity modules simulate real-world system behavior using synchronized telemetry from actual aircraft, augmented with realistic anomalies. Learners observe and interact with system states under semi-structured fault conditions, practicing higher-order competencies such as system evaluation and procedural validation. This level supports immersive learning through VR-enabled environments or advanced simulators and represents a transition to near-operational realism.

At the highest tier, Level 4, learners interact with adaptive, full-system digital twins that simulate complex operational environments in real time. These environments embed dynamic fault progression, decision consequences, timing tolerances, and behavioral scoring. The scenarios are designed to assess mastery-level performance, with learners required to synthesize and apply procedural knowledge in realistic, time-sensitive situations. The orchestration engine adapts the scenario parameters based on the learner’s inputs, enabling a closed-loop instructional cycle. Delivery takes place in virtual or augmented reality settings, often using headsets or high-performance simulation workstations.

Learners do not necessarily progress through these levels in a fixed, linear sequence. Instead, the orchestration engine selects the appropriate level for each identified gap based on gap severity, operational criticality, learner history, and regulatory alignment. For instance, a significant gap in understanding hydraulic actuation may begin with Level 1 theory-based content, proceed through Level 2 fault simulation, and culminate in a Level 4 VR-based full-system diagnostic scenario. This adaptive logic ensures that instructional resources are matched precisely to learner needs while optimizing cost, engagement, and outcome fidelity.
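The per-gap level selection can be sketched as a small rule: gap severity fixes the entry level, the target Bloom stage fixes the highest level needed, and levels without a matching asset in the LET are skipped. Both lookup tables and the function name are hypothetical; with no Level 3 asset available, the rule reproduces the hydraulic actuation path in the example above (Levels 1, 2, then 4).

```python
from typing import List, Set

# Assumed mapping from target Bloom stage to the highest level needed to reach it.
BLOOM_TO_TOP_LEVEL = {"remember": 1, "understand": 1, "apply": 2,
                      "analyze": 2, "evaluate": 3, "create": 4}
# Assumed entry level per gap severity.
SEVERITY_TO_START_LEVEL = {"high": 1, "medium": 2, "low": 3}


def remediation_path(severity: str, target_bloom: str,
                     available_levels: Set[int]) -> List[int]:
    """Ordered learning levels to traverse for one gap, skipping levels without assets."""
    top = BLOOM_TO_TOP_LEVEL[target_bloom]
    start = min(SEVERITY_TO_START_LEVEL[severity], top)
    return [level for level in range(start, top + 1) if level in available_levels]


# Hydraulic actuation gap: high severity, mastery target, no Level 3 asset catalogued.
print(remediation_path("high", "create", {1, 2, 4}))   # [1, 2, 4]
print(remediation_path("low", "apply", {1, 2, 3, 4}))  # [2]
```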

This four-level architecture enables modular deployment, targeted remediation, and measurable skill development. It supports scalability across institutions, traceability through the xAPI-powered validation matrix, and regulatory compliance via alignment with EASA Part-66 modules and Bloom’s-taxonomy-level competence standards. As such, it provides a pedagogically rigorous and technically robust framework for aviation maintenance training in digitally transformed learning environments.

2.4. Content Fidelity Stratification

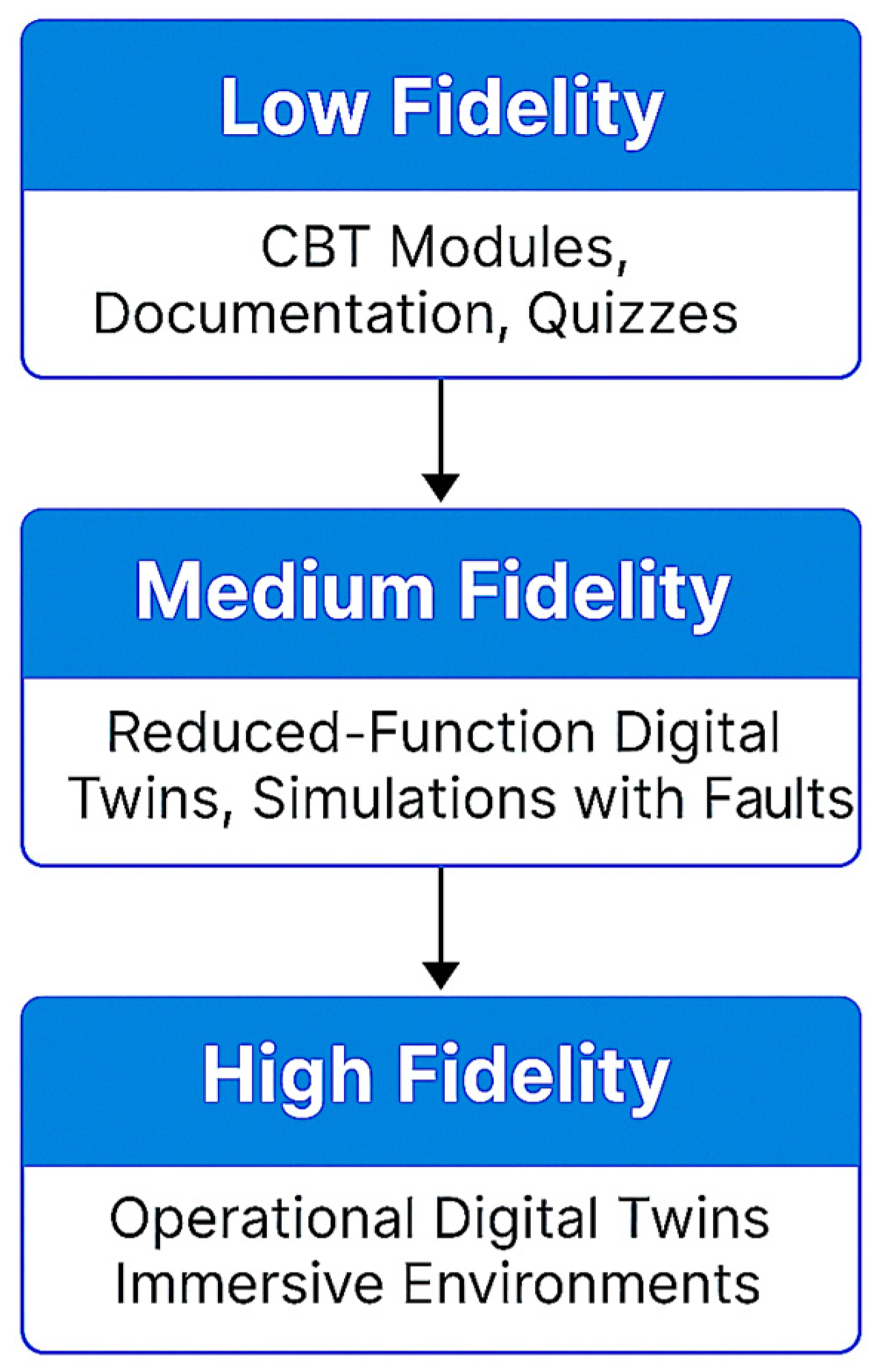

A critical feature of the proposed training ecosystem is its ability to tailor instructional delivery to the severity of identified competence gaps through a structured fidelity stratification model (Figure 4). This model categorizes learning assets within the LET into three tiers of increasing realism and complexity: low fidelity, medium fidelity, and high fidelity. Content selection is governed by the orchestration engine, which dynamically aligns training asset fidelity with the learner’s current proficiency level and the nature of the learning objective.

Low-fidelity content is designed to address broad or foundational skill gaps, typically aligned with lower-order cognitive objectives such as recall and comprehension. These assets include traditional CBT modules, digital documentation, instructional videos, and quizzes. For example, in ATA Chapter 24 (Electrical Power), a low-fidelity module may introduce the basic components of the AC power distribution system, common wiring standards, and safety procedures using annotated diagrams and narrated slides. This type of content is particularly effective for new trainees or those who require recertification on standard system knowledge before progressing to interactive tasks.

Medium-fidelity content is suitable for addressing moderate competence gaps, where the learner exhibits partial understanding or inconsistent procedural application. These resources include reduced-function digital twins and semi-interactive simulations that model selected system behaviors with scripted inputs and faults. For instance, in ATA Chapter 29 (Hydraulic Power), a medium-fidelity simulation may allow the learner to operate virtual hydraulic pumps, manipulate selector valves, and identify procedural faults (e.g., loss of pressure due to actuator leaks) within a predefined scenario. This level of fidelity helps reinforce applied diagnostic logic, procedural flow, and cause–effect relationships in moderately complex tasks.

High-fidelity content targets learners with minimal gaps who are preparing for system-level mastery, especially in operationally critical or safety-sensitive areas. These assets are derived from sliced operational digital twins that mirror the behavior of actual aircraft subsystems in real-world conditions, based on telemetry and maintenance records. For example, in ATA Chapter 36 (Pneumatic Systems), a high-fidelity training twin might simulate dynamic pressure changes across multiple bleed air zones during different phases of flight. The scenario would include real-time sensor data, cascading failures, and time-constrained decision points, requiring the learner to conduct a full diagnostic sweep using onboard indications and fault isolation procedures. Such content is typically delivered via immersive virtual or augmented reality interfaces and supports Bloom’s taxonomy’s highest levels—evaluation and synthesis.

Each content tier is associated with metadata tags that define its coverage, fidelity, scenario type, and regulatory mapping. The orchestration engine uses these attributes, along with the learner’s historical performance and current LDT profile, to assign content that is educationally appropriate and computationally efficient.
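Metadata-driven assignment can be sketched as a filter over LET records followed by a tie-break. In this illustration the record fields, identifiers, and the shortest-duration tie-break (a simple proxy for computational efficiency) are assumptions; previously completed assets stand in for the learner’s history.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class LetAsset:
    asset_id: str
    ata_chapter: int
    fidelity: str        # "low", "medium" or "high"
    scenario_type: str
    duration_min: int


def select_asset(catalogue: Iterable[LetAsset], ata_chapter: int, fidelity: str,
                 completed: Set[str]) -> Optional[LetAsset]:
    """Pick the shortest unseen asset matching the chapter and fidelity tier."""
    candidates = [a for a in catalogue
                  if a.ata_chapter == ata_chapter and a.fidelity == fidelity
                  and a.asset_id not in completed]
    return min(candidates, key=lambda a: a.duration_min, default=None)


catalogue = [
    LetAsset("cbt-24-01", 24, "low", "tutorial", 20),
    LetAsset("sim-29-03", 29, "medium", "scripted-fault", 35),
    LetAsset("sim-29-04", 29, "medium", "scripted-fault", 25),
    LetAsset("twin-36-01", 36, "high", "operational-playback", 60),
]
choice = select_asset(catalogue, 29, "medium", completed={"sim-29-04"})
print(choice.asset_id if choice else "no asset available")  # sim-29-03
```

Returning `None` when no candidate exists lets the orchestration engine fall back to another tier or flag a catalogue gap instead of failing.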

Learning assets are structured to align with gap severity and ATA-specific training objectives, ensuring targeted, scalable, and regulation-compliant instruction. This approach to fidelity stratification enhances both personalization and resource efficiency while reinforcing the pedagogical connection between simulated behavior and real-world aircraft operations.

2.5. Validation and Learning Record Management

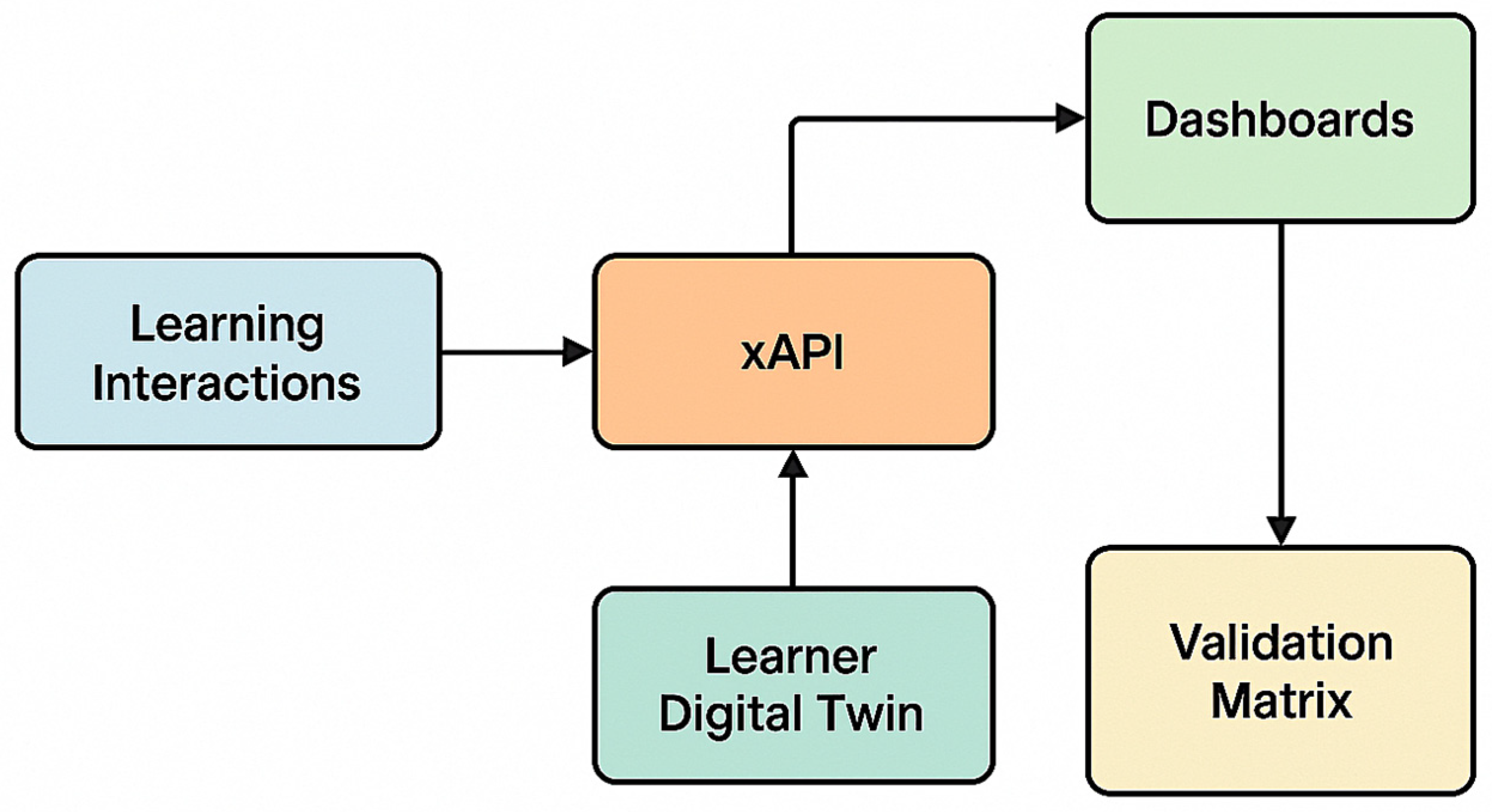

A foundational requirement of aviation maintenance training is the ability to demonstrate regulatory compliance, instructional validity, and traceable learner progression. The proposed training ecosystem addresses this requirement through a robust validation and learning record management framework designed to ensure that each instructional interaction is verifiable, auditable, and pedagogically aligned (Figure 5).

At the core of this framework is the validation matrix, a structured and immutable ledger that captures the complete metadata of every training session. For each learning event, whether initiated through a CBT module, a reduced-fidelity simulator, or a high-fidelity operational twin, the system records key attributes, including learner ID, scenario ID, gap classification, selected LET asset, resource version, interaction timestamps, performance metrics, and outcomes. Cryptographic hash values are also appended to ensure content integrity and support forensic-level audits.

All learner interactions are tracked using the xAPI standard, which enables granular logging of behaviors such as clicks, decisions, timing, resource transitions, and task completions. These records are continuously streamed into the LDT, updating the trainee’s competence profile in real time. Each completed session triggers an automated evaluation process that recalculates the competence vector, repositions the learner within the ideal skill space, and identifies new or unresolved gaps for the next orchestration cycle.
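The post-session recalculation can be illustrated with exponential smoothing, in which each new session score is blended into the stored proficiency with weight `alpha`, followed by a check for gaps that remain open. The update rule, the value of `alpha`, and the dimension names are assumptions for illustration only; this section does not state how the competence vector is recalculated.

```python
from typing import Dict, List


def update_competence(ldt: Dict[str, float], session_scores: Dict[str, float],
                      alpha: float = 0.3) -> Dict[str, float]:
    """Blend new session evidence into the competence vector (exponential smoothing)."""
    updated = dict(ldt)
    for dim, score in session_scores.items():
        updated[dim] = (1 - alpha) * updated.get(dim, 0.0) + alpha * score
    return updated


def open_gaps(ict: Dict[str, float], ldt: Dict[str, float]) -> List[str]:
    """Dimensions where the learner is still below the ICT benchmark."""
    return [dim for dim, req in ict.items() if ldt.get(dim, 0.0) < req]


ict = {"ATA29-pump-diagnosis": 0.8, "ATA32-gear-retraction": 0.7}
ldt = {"ATA29-pump-diagnosis": 0.4, "ATA32-gear-retraction": 0.75}
ldt = update_competence(ldt, {"ATA29-pump-diagnosis": 0.9})
print(round(ldt["ATA29-pump-diagnosis"], 2))  # 0.55
print(open_gaps(ict, ldt))                    # ['ATA29-pump-diagnosis']
```

Smoothing damps the effect of a single unusually good or bad session, so one run does not immediately close or reopen a gap.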

Instructors and administrators can access these records via role-based dashboards that visualize training progression, fidelity history, assessment scores, and readiness levels per ATA chapter or regulatory module. For quality assurance personnel and auditors, the system enables targeted queries across the validation matrix, for example, retrieving all training sessions tied to ATA Chapter 32 (Landing Gear) that used a specific simulation version or verifying that a cohort has completed all required EASA Part-66 modules at the specified Bloom’s taxonomy level.
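The two audit queries mentioned above can be sketched over an in-memory list of validation-matrix rows. The row fields, module codes, version strings, and the ordinal ranking of Bloom levels are hypothetical; a production system would run equivalent queries against its record store.

```python
from typing import Dict, List

# Assumed ordinal ranking of Bloom levels for "at or above" comparisons.
BLOOM_RANK = {"remember": 1, "understand": 2, "apply": 3,
              "analyze": 4, "evaluate": 5, "create": 6}


def sessions_for(matrix: List[dict], ata_chapter: int, asset_version: str) -> List[dict]:
    """All sessions for one ATA chapter that used a given simulation version."""
    return [r for r in matrix
            if r["ata_chapter"] == ata_chapter and r["asset_version"] == asset_version]


def cohort_status(matrix: List[dict], cohort: List[str],
                  required: Dict[str, str]) -> Dict[str, bool]:
    """True for learners who passed every required module at or above its Bloom level."""
    achieved = {}
    for r in matrix:
        if r["outcome"] == "pass":
            key = (r["learner_id"], r["module"])
            achieved[key] = max(achieved.get(key, 0), BLOOM_RANK[r["bloom_level"]])
    return {learner: all(achieved.get((learner, m), 0) >= BLOOM_RANK[level]
                         for m, level in required.items())
            for learner in cohort}


matrix = [
    {"learner_id": "L1", "module": "M11", "ata_chapter": 32, "asset_version": "sim-3.2",
     "bloom_level": "analyze", "outcome": "pass"},
    {"learner_id": "L2", "module": "M11", "ata_chapter": 32, "asset_version": "sim-3.1",
     "bloom_level": "apply", "outcome": "pass"},
]
print(len(sessions_for(matrix, 32, "sim-3.2")))                # 1
print(cohort_status(matrix, ["L1", "L2"], {"M11": "analyze"}))  # {'L1': True, 'L2': False}
```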

The ecosystem supports version control and traceability for all LET assets. Each learning resource carries a version identifier, instructional metadata, and an update history. When a resource is revised (for example, because of a regulatory update, an OEM change notice, or a pedagogical improvement), its new version is registered and tracked. Historical records preserve the link between learner activity and the exact content version used, ensuring that all competence assessments are contextualized and valid for their timeframe.
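The link between learner activity and content version can be sketched with an append-only registry: each revision is recorded with its reason, and each session record copies the version that was current at session time, so later revisions do not alter historical records. The class, identifiers, and version strings are hypothetical.

```python
from typing import Dict, List, Tuple


class AssetRegistry:
    """Hypothetical version registry for LET assets with an append-only history."""

    def __init__(self) -> None:
        self._history: Dict[str, List[Tuple[str, str]]] = {}

    def register(self, asset_id: str, version: str, reason: str) -> None:
        self._history.setdefault(asset_id, []).append((version, reason))

    def current(self, asset_id: str) -> str:
        return self._history[asset_id][-1][0]

    def history(self, asset_id: str) -> List[Tuple[str, str]]:
        return list(self._history[asset_id])


registry = AssetRegistry()
registry.register("sim-32-gear", "1.0", "initial release")
# A session record pins the version that was current when the session ran.
session = {"learner_id": "L1", "asset_id": "sim-32-gear",
           "asset_version": registry.current("sim-32-gear")}
registry.register("sim-32-gear", "1.1", "OEM change notice")
print(session["asset_version"], registry.current("sim-32-gear"))  # 1.0 1.1
```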

To safeguard data privacy and regulatory integrity, the system enforces strict security and governance protocols. All record streams are encrypted using TLS, stored in tamper-resistant databases, and governed by digital-rights management rules. Learner records are anonymized for research or cross-fleet analytics and are accessible only through role-based permissions compliant with GDPR and industry data standards.
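A role-checked research export can be sketched as dropping direct identifiers and replacing learner IDs with keyed (HMAC) pseudonyms, so records from the same learner remain linkable without exposing the original ID. The field names, permitted roles, and key are hypothetical. Because the key permits re-identification, keyed pseudonymization of this kind is weaker than the anonymization described in the text, and under the GDPR pseudonymized data still counts as personal data; the sketch only illustrates the mechanics.

```python
import hashlib
import hmac
from typing import List

DIRECT_IDENTIFIERS = {"name", "email"}              # hypothetical fields dropped on export
RESEARCH_ROLES = {"researcher", "quality_manager"}  # hypothetical permitted roles


def export_for_research(records: List[dict], key: bytes, role: str) -> List[dict]:
    """Role-checked export that drops direct identifiers and pseudonymizes learner IDs."""
    if role not in RESEARCH_ROLES:
        raise PermissionError(f"role '{role}' may not export learner records")
    exported = []
    for record in records:
        row = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
        row["learner_id"] = hmac.new(key, record["learner_id"].encode("utf-8"),
                                     hashlib.sha256).hexdigest()[:16]
        exported.append(row)
    return exported


records = [{"learner_id": "L1", "name": "A. Trainee", "module": "M11", "score": 0.82}]
print(export_for_research(records, b"research-key", "researcher"))
```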

This validation and learning record management architecture transforms the training process from a sequence of isolated events into a continuous, traceable learning trajectory. It empowers stakeholders to track progress, audit instructional quality, and document compliance with aviation safety standards, thereby reinforcing trust, accountability, and operational readiness across the training ecosystem.

Table 2 summarizes the core components of the validation and learning record management framework. It identifies each element’s primary function, enabling technologies, and contribution to the overall integrity and auditability of the training ecosystem.

2.6. Adoption Strategy and Experimental Scope

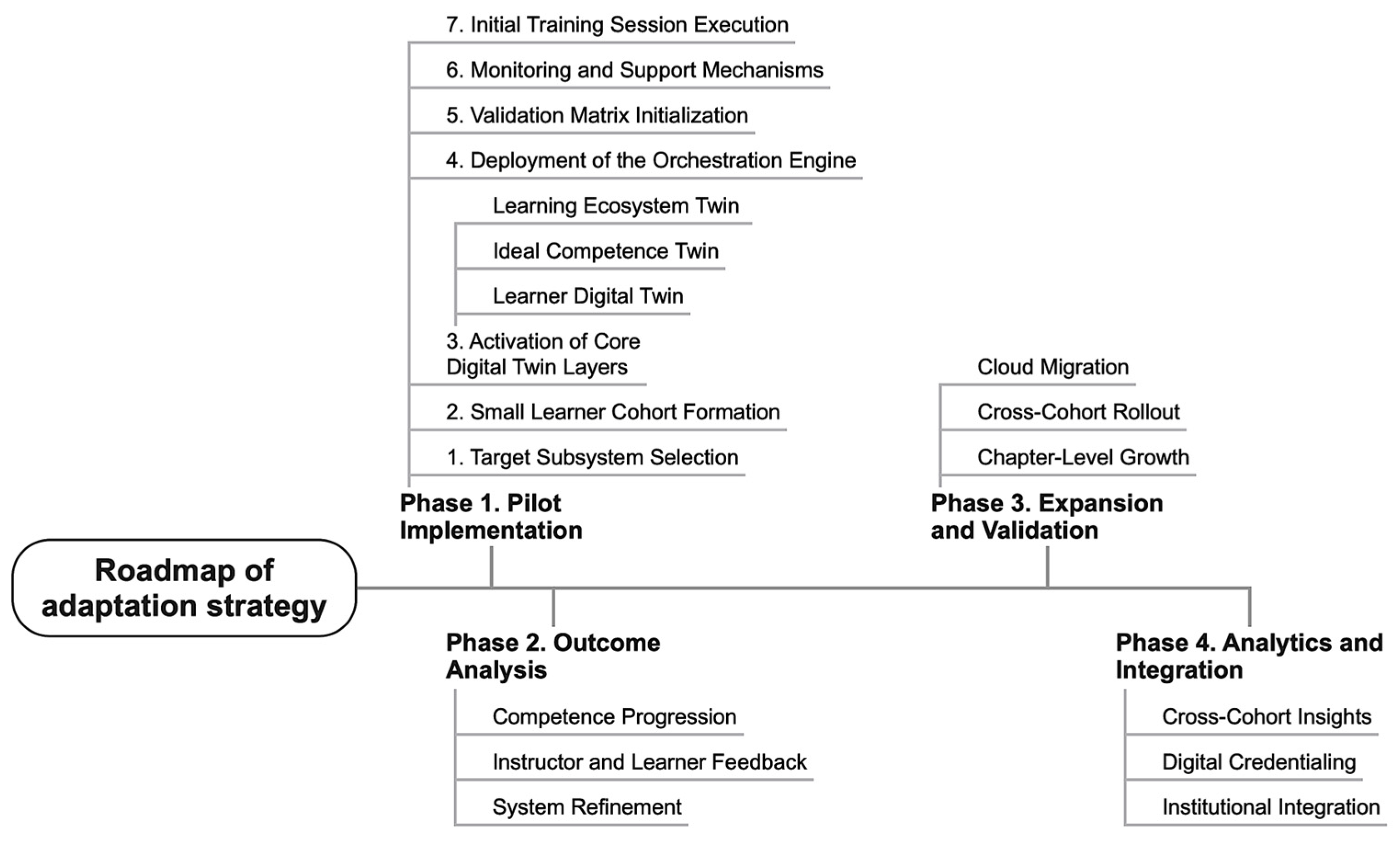

The adoption of a DTBT ecosystem in aviation maintenance requires a phased, evidence-driven strategy that supports institutional readiness, regulatory compliance, and instructional effectiveness. To ensure manageable deployment and measurable outcomes, the proposed approach emphasizes modular onboarding, iterative validation, and scalable expansion across learning cohorts and technical domains.

The initial stage focuses on pilot implementation within a single ATA chapter or system domain, such as ATA 32 (Landing Gear) or ATA 36 (Pneumatics), where learning assets and operational digital twin slices are already available or easily acquired. A small group of trainees, selected based on prior training history or cohort diversity, is enrolled in a controlled trial using the full orchestration pipeline, including LDT tracking, ICT comparison, adaptive LET content delivery, and validation matrix logging. This limited-scope deployment allows institutions to assess technical feasibility, user engagement, and gap closure rates before scaling to additional systems or learner populations.

Instructional outcomes from the pilot are analyzed across multiple dimensions, including learning efficiency, competence vector progression, resource utilization, and training impact measured against Bloom’s-taxonomy-level attainment and EASA Part-66 thresholds. Feedback is gathered from trainees, instructors, and quality managers through surveys, dashboard analytics, and regulatory audits. Any issues related to fidelity matching, orchestration logic, or platform usability are addressed prior to broader rollout.

In subsequent phases, the ecosystem is expanded across additional ATA chapters, with each new module validated through structured onboarding protocols. As the learning asset library grows, high-fidelity training twins are migrated to the cloud layer, enabling greater resource elasticity and centralized version control. Institutions may begin to adopt blockchain-secured digital credentials or badges to document learner progression and competence gap closure, making qualifications more portable and transparent.

Throughout the adoption cycle, cross-cohort and cross-system analytics are used to identify recurring skill deficits, bottlenecks in instructional delivery, or correlations between learner profiles and training outcomes. These insights inform decisions at both the curriculum level (e.g., which systems require additional fidelity) and the strategic level (e.g., workforce planning or OEM feedback loops).

This strategy allows organizations, whether training academies, airline MRO divisions, or regulatory authorities, to adopt the ecosystem in measured increments, minimizing disruption while maximizing visibility into its instructional and operational value. Rather than replacing legacy systems all at once, this model supports coexistence and gradual integration, ensuring that transformation proceeds at a pace aligned with institutional capacity, instructor acceptance, and regulatory approval cycles.

2.7. Mathematical Framework of Competence Gap Analysis and Content Matching

To operationalize the orchestration logic that underpins the adaptive training loop, this study formalizes a mathematical framework for real-time competence gap detection and content fidelity selection. The framework ensures that the alignment between the LDT and the ICT is not only rule-based, but also quantitatively transparent, scalable, and auditable.

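Let $\mathbf{L} = [L_1, L_2, \dots, L_n] \in \mathbb{R}^n$ represent the learner competence vector, where each element $L_i \in [0, 1]$ denotes the normalized mastery level of a specific skill, as derived from the LDT, and let $\mathbf{T} = [T_1, T_2, \dots, T_n] \in \mathbb{R}^n$ represent the target competence vector (ICT), where $T_i$ defines the regulatory or operational requirement for the $i$-th competence unit. The notation $\mathbb{R}^n$ denotes the $n$-dimensional real vector space, i.e., the set of vectors with $n$ real-valued components.

The competence gap vector is then defined as

$$\mathbf{G} = \mathbf{T} - \mathbf{L}, \qquad g_i = T_i - L_i, \quad i = 1, \dots, n.$$

Each component $g_i$ is classified into a gap severity level based on threshold parameters $0 < \theta_M < \theta_H$:

High severity if $g_i > \theta_H$

Medium severity if $\theta_M < g_i \le \theta_H$

Low severity if $0 < g_i \le \theta_M$

No gap if $g_i \le 0$

where $\theta_H$ and $\theta_M$ are derived empirically from Bloom’s-taxonomy-level mappings or prior training data (e.g., …).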
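The gap computation and four-level classification can be sketched as follows. This is a minimal illustration, assuming the threshold form $0 < \theta_M < \theta_H$ given above; the numeric values of `THETA_H` and `THETA_M` are hypothetical placeholders, not the empirically derived thresholds.

```python
from typing import List

# Hypothetical thresholds (0 < THETA_M < THETA_H); the document derives the
# real values empirically, and they are not reproduced here.
THETA_H = 0.30
THETA_M = 0.15


def gap_vector(target: List[float], learner: List[float]) -> List[float]:
    """G = T - L, computed component-wise on normalized competence vectors."""
    if len(target) != len(learner):
        raise ValueError("target and learner vectors must have the same length")
    return [t_i - l_i for t_i, l_i in zip(target, learner)]


def classify_gap(g_i: float, theta_h: float = THETA_H,
                 theta_m: float = THETA_M) -> str:
    """Map one gap component g_i to a severity level."""
    if g_i > theta_h:
        return "high"
    if g_i > theta_m:
        return "medium"
    if g_i > 0:
        return "low"
    return "none"  # learner already meets or exceeds the target


target = [0.8, 0.8, 0.7]
learner = [0.4, 0.7, 0.75]
severities = [classify_gap(g) for g in gap_vector(target, learner)]
print(severities)
```

Treating $g_i \le 0$ as "no gap" means a learner who exceeds the target in one skill is simply not assigned content for it; the surplus is not used to offset deficits elsewhere.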

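For each identified gap $g_i > 0$, the orchestration engine selects a content asset $r_j \in \mathcal{R}$ from the learning ecosystem twin (LET), where $\mathcal{R} = \{r_1, r_2, \dots, r_m\}$ represents a set of $m$ training resources designed to cover the $n$ skill dimensions. Each resource $r_j$ is tagged with the following metadata:

Fidelity level $f_j \in \{\text{low}, \text{medium}, \text{high}\}$

Skill target vector $\mathbf{s}_j \in \mathbb{R}^n$

Bloom’s taxonomy level $b_j$

Duration estimate $d_j$, which is important for time budgeting and scheduling

Regulatory mapping $\mathcal{M}_j$, the set of regulatory modules covered by the resource, which is important for ensuring that content satisfies regulatory constraints.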

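Only resources that satisfy the regulatory mapping are admissible:

$$m_i \in \mathcal{M}_j,$$

where $\mathcal{M}_j$ is the set of regulatory modules to which resource $r_j$ is mapped and $m_i$ is the regulatory module associated with skill $i$ (e.g., M09.02 for the emergency landing gear extension). Optionally, the duration constraint

$$d_j \le D_{\max}$$

is also applied, where $d_j$ is the estimated duration of $r_j$ and $D_{\max}$ is the available time budget, if the learner has time constraints (e.g., session limits, device availability).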
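A minimal sketch of the metadata tags and the admissibility filter follows. The `Resource` field names, the 1 to 6 Bloom scale, the minutes unit for duration, and the catalog entries are hypothetical illustrations. Only the two filtering rules ($m_i \in \mathcal{M}_j$ and the optional $d_j \le D_{\max}$) come from the text.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class Resource:
    """Metadata tags for one LET asset r_j (field names are hypothetical)."""
    resource_id: str
    fidelity: str                    # f_j: "low", "medium" or "high"
    skill_target: Tuple[float, ...]  # s_j: skill dimensions the asset covers
    bloom_level: int                 # b_j: Bloom's taxonomy level, 1-6
    duration_min: float              # d_j: estimated duration in minutes
    modules: FrozenSet[str]          # M_j: regulatory modules the asset maps to


def admissible(resources: List[Resource], module: str,
               max_duration: Optional[float] = None) -> List[Resource]:
    """Keep resources with m_i in M_j and, if a budget is given, d_j <= D_max."""
    return [
        r for r in resources
        if module in r.modules
        and (max_duration is None or r.duration_min <= max_duration)
    ]


# Hypothetical catalog entries for a landing-gear skill mapped to M09.02.
catalog = [
    Resource("CBT-32-01", "low", (1.0, 0.0, 0.0), 2, 20, frozenset({"M09.02"})),
    Resource("VR-32-07", "medium", (1.0, 1.0, 0.0), 3, 45, frozenset({"M09.02"})),
    Resource("ODT-32-03", "high", (1.0, 1.0, 1.0), 5, 90, frozenset({"M09.02"})),
]

in_budget = admissible(catalog, "M09.02", max_duration=60)
print([r.resource_id for r in in_budget])
```

The regulatory check acts as a hard filter before any cost-based ranking, so an asset that does not map to the required module cannot be selected regardless of its other merits.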

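The resource selection function $\sigma(g_i)$ chooses, among the admissible resources $\mathcal{R}_i \subseteq \mathcal{R}$, the one that minimizes a multi-criteria cost function:

$$\sigma(g_i) = r^* = \arg\min_{r_j \in \mathcal{R}_i} \left[ w_1 \left\lVert \mathbf{s}_j - \mathbf{e}_i \right\rVert_2 + w_2 \, c(f_j) + w_3 \, d_j \right],$$

where $\mathbf{e}_i$ is the unit vector for the targeted competence gap $g_i$, $\mathbf{s}_j$ is the skill target vector of resource $r_j$, $c(f_j)$ is the delivery cost associated with its fidelity level $f_j$, $d_j$ is its estimated duration, and the weights $w_1, w_2, w_3 \ge 0$ are assigned based on instructional policy (e.g., learning outcome priority vs. fidelity cost).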

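The selected resource $r^*$ is then streamed to the learner, and its effectiveness is measured by observing the post-intervention competence vector $\mathbf{L}'$, with

$$\Delta g_i = g_i - g_i' = (T_i - L_i) - (T_i - L_i') = L_i' - L_i,$$

where $g_i'$ is the remaining gap for skill $i$ after training.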

This framework enables quantitative tracking of training impact, automated resource matching, and regulatory justification for instructional pathways. It also lays the foundation for simulation-driven validation and model-based audit querying, as all interactions and gap resolutions are recorded within the validation matrix using scenario and vector metadata.
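To make the selection step concrete, the sketch below ranks admissible resources with a weighted cost of the form $w_1 \lVert \mathbf{s}_j - \mathbf{e}_i \rVert_2 + w_2\,c(f_j) + w_3\,d_j$ and computes the gap reduction $\Delta g_i$. The exact cost form, the weights, the `FIDELITY_COST` table, and the catalog tuples are all assumptions chosen for illustration, not values from the study.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical relative delivery cost c(f_j) for each fidelity tier.
FIDELITY_COST = {"low": 0.1, "medium": 0.4, "high": 1.0}

# Each entry: (resource_id, fidelity f_j, skill target s_j, duration d_j in hours)
Entry = Tuple[str, str, Sequence[float], float]


def unit_vector(i: int, n: int) -> List[float]:
    """e_i: 1 at the targeted competence index, 0 elsewhere."""
    return [1.0 if k == i else 0.0 for k in range(n)]


def cost(entry: Entry, e_i: Sequence[float],
         w: Tuple[float, float, float] = (1.0, 0.5, 0.2)) -> float:
    """w1*||s_j - e_i||_2 + w2*c(f_j) + w3*d_j with illustrative weights."""
    _, f_j, s_j, d_j = entry
    return w[0] * math.dist(s_j, e_i) + w[1] * FIDELITY_COST[f_j] + w[2] * d_j


def select_resource(admissible: List[Entry], i: int, n: int) -> str:
    """Return the ID of the admissible resource r* with minimal cost for gap i."""
    e_i = unit_vector(i, n)
    return min(admissible, key=lambda entry: cost(entry, e_i))[0]


def gap_reduction(t_i: float, l_before: float, l_after: float) -> float:
    """Delta g_i = g_i - g_i' (equivalently L_i' - L_i)."""
    return (t_i - l_before) - (t_i - l_after)


catalog = [
    ("CBT-A", "low", (1.0, 0.0, 0.0), 0.5),
    ("VR-B", "medium", (0.0, 1.0, 0.0), 0.75),
    ("ODT-C", "high", (0.0, 1.0, 0.0), 1.5),
]
print(select_resource(catalog, 0, 3), select_resource(catalog, 1, 3))
```

With these weights, skill alignment dominates the decision. Raising `w[1]` would push the engine toward cheaper, lower-fidelity assets, which is the kind of policy trade-off the weights are meant to express.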

3. Results

3.4. Simulation Setup and Process Flow

To evaluate the effectiveness of the digital-twin-based orchestration engine, a discrete-time simulation was developed to model learner progression over multiple training cycles. This simulation captures the iterative nature of the ecosystem’s feedback loop, where competence gaps are continuously assessed, content is assigned, and learning outcomes are recorded into the validation matrix.

The simulation setup is based on the normalized competence framework introduced in Section 2.7 and uses the learner vectors established in Section 3.1. The process is implemented as a modular structure of five key stages executed in a loop across multiple iterations.

Step 1. Initialization.

Each learner is assigned an initial competence vector $\mathbf{L}^{(0)} \in [0, 1]^6$, representing mastery in six skill areas within ATA Chapter 32 (Landing Gear). These vectors are compared against a predefined target vector $\mathbf{T}$, which encodes the ideal competence thresholds drawn from EASA Part-66 modules and Bloom’s-taxonomy-level mappings.

Step 2. Gap computation and severity classification.

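For each skill domain $i$, the competence gap at iteration $t$ is computed as

$$g_i^{(t)} = T_i - L_i^{(t)}.$$

Gap values are classified into four categories based on the severity thresholds $0 < \theta_M < \theta_H$ defined in Section 2.7: high ($g_i^{(t)} > \theta_H$), medium ($\theta_M < g_i^{(t)} \le \theta_H$), low ($0 < g_i^{(t)} \le \theta_M$), and no gap ($g_i^{(t)} \le 0$). This classification determines the fidelity level of the training content delivered in the next step.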

Step 3. Resource assignment via orchestration engine.

Based on gap severity, each learner is assigned content from the LET. Each training asset carries a fidelity multiplier $\phi \in \{\phi_{\text{low}}, \phi_{\text{medium}}, \phi_{\text{high}}\}$ for low-, medium-, and high-fidelity content, respectively. These values scale the magnitude of learning gains during competence updates.

Step 4. Competence update.

After simulated engagement with the assigned content, the learner’s competence in skill domain $i$ is updated using

$$L_i^{(t+1)} = L_i^{(t)} + \lambda \, \phi \, g_i^{(t)},$$

where $\lambda$ is a responsiveness coefficient specific to each learner, $\phi$ is the fidelity multiplier, and $g_i^{(t)}$ is the gap for skill $i$ at iteration $t$.
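A single update step can be sketched as below. This is a minimal sketch, assuming the multiplicative form $L_i^{(t+1)} = L_i^{(t)} + \lambda\,\phi\,g_i^{(t)}$ built from the three factors named in Step 4. Leaving skills with no gap unchanged is also an assumption, since no content is assigned to them.

```python
from typing import List


def update_competence(l_t: List[float], target: List[float],
                      lam: float, phi: List[float]) -> List[float]:
    """One cycle of L_i(t+1) = L_i(t) + lam * phi_i * g_i(t).

    lam: learner responsiveness coefficient; phi[i]: fidelity multiplier of the
    asset assigned to skill i. Skills without a gap (g_i <= 0) receive no
    content and are left unchanged, an assumption not stated in the source.
    """
    updated = []
    for l_i, t_i, phi_i in zip(l_t, target, phi):
        g_i = t_i - l_i
        updated.append(l_i + lam * phi_i * g_i if g_i > 0 else l_i)
    return updated


print(update_competence([0.4, 0.8], [0.8, 0.8], lam=0.5, phi=[1.0, 0.6]))
```

Under this form, substituting $g_i^{(t)} = T_i - L_i^{(t)}$ gives $g_i^{(t+1)} = (1 - \lambda\phi)\,g_i^{(t)}$. For $0 < \lambda\phi < 1$, each gap therefore shrinks geometrically and competence approaches the target without overshooting it.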

Step 5. Logging and validation.

Each iteration produces an xAPI-compliant training record, which is logged in the validation matrix. Logged metadata includes the following:

Scenario and learner IDs

Gap severity classification

Resource ID and fidelity tier

Pre- and post-training competence scores

Completion time and confidence interval (if simulated)

This information is used for downstream auditing, analytics, and performance forecasting.
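The logged metadata can be packaged as an xAPI-style statement with the standard `actor`, `verb`, `object`, `result`, and `context` properties. In the sketch below, the ADL "completed" verb IRI is a real published identifier. The `example.org` homepage, activity IRIs, and extension keys are hypothetical placeholders, and the field selection is illustrative rather than the system’s actual schema.

```python
import json
from datetime import datetime, timezone

# Extension IRIs under example.org are placeholders; a real deployment would
# define its own vocabulary for these fields.
EXT = "https://example.org/xapi/ext/"


def training_statement(learner_pid: str, scenario_id: str, resource_id: str,
                       fidelity: str, severity: str,
                       pre: float, post: float) -> dict:
    """Build an xAPI-style statement for one simulated training event."""
    return {
        "actor": {
            "objectType": "Agent",
            "account": {"homePage": "https://example.org/lms", "name": learner_pid},
        },
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {
            "objectType": "Activity",
            "id": f"https://example.org/activities/{resource_id}",
        },
        "result": {"completion": True, "score": {"scaled": round(post, 4)}},
        "context": {
            "extensions": {
                EXT + "scenario-id": scenario_id,
                EXT + "gap-severity": severity,
                EXT + "fidelity-tier": fidelity,
                EXT + "pre-score": round(pre, 4),
                EXT + "post-score": round(post, 4),
            }
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


stmt = training_statement("L-3f2a", "ATA32-S1", "VR-32-07", "medium", "high", 0.42, 0.61)
print(json.dumps(stmt, indent=2))
```

Placing the gap severity and fidelity tier in `context.extensions` keeps the core statement valid for any learning record store while still carrying the orchestration metadata.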

The simulation loop (Figure 8) runs for five iterations per learner, allowing observation of convergence trends, learning velocity, and system responsiveness. The following sections analyze these results numerically and visually, demonstrating how competence gaps are progressively reduced and how personalized instruction evolves dynamically.
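Putting the stages together, the loop can be sketched as follows. The run uses five cycles, matching the results reported in Section 3.5. The severity-to-fidelity mapping (larger gaps receive lower-fidelity, foundational content) follows the behavior described in the results. The thresholds, multipliers, responsiveness range, and initial vector are hypothetical placeholders, not the parameters behind the reported figures.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical parameters: placeholders, not the values behind the reported results.
THETA_H, THETA_M = 0.3, 0.15
FIDELITY_FOR_SEVERITY = {"high": "low", "medium": "medium", "low": "high"}
PHI = {"low": 0.4, "medium": 0.6, "high": 0.8}


def severity(g: float) -> str:
    """Classify one gap component with the same rule as in Step 2."""
    if g > THETA_H:
        return "high"
    if g > THETA_M:
        return "medium"
    return "low" if g > 0 else "none"


def simulate(l0: List[float], target: List[float], lam: float,
             n_iter: int = 5) -> Tuple[List[float], List[Dict]]:
    """Run gap -> classify -> assign -> update -> log for n_iter cycles."""
    l_t = list(l0)
    log: List[Dict] = []
    for t in range(n_iter):
        for i in range(len(l_t)):
            pre = l_t[i]
            g = target[i] - pre
            sev = severity(g)
            if sev == "none":
                continue  # nothing is assigned once the target is met
            tier = FIDELITY_FOR_SEVERITY[sev]
            l_t[i] = pre + lam * PHI[tier] * g
            log.append({"iter": t, "skill": i, "severity": sev,
                        "fidelity": tier, "pre": pre, "post": l_t[i]})
    return l_t, log


rng = random.Random(0)
lam = rng.uniform(0.4, 0.8)  # hypothetical responsiveness range
target = [0.8] * 6
final, log = simulate([0.3, 0.5, 0.6, 0.7, 0.45, 0.55], target, lam)
print([round(v, 3) for v in final])
```

Each entry in `log` corresponds to one row of the validation matrix, so the same loop that drives learning also produces the audit trail.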

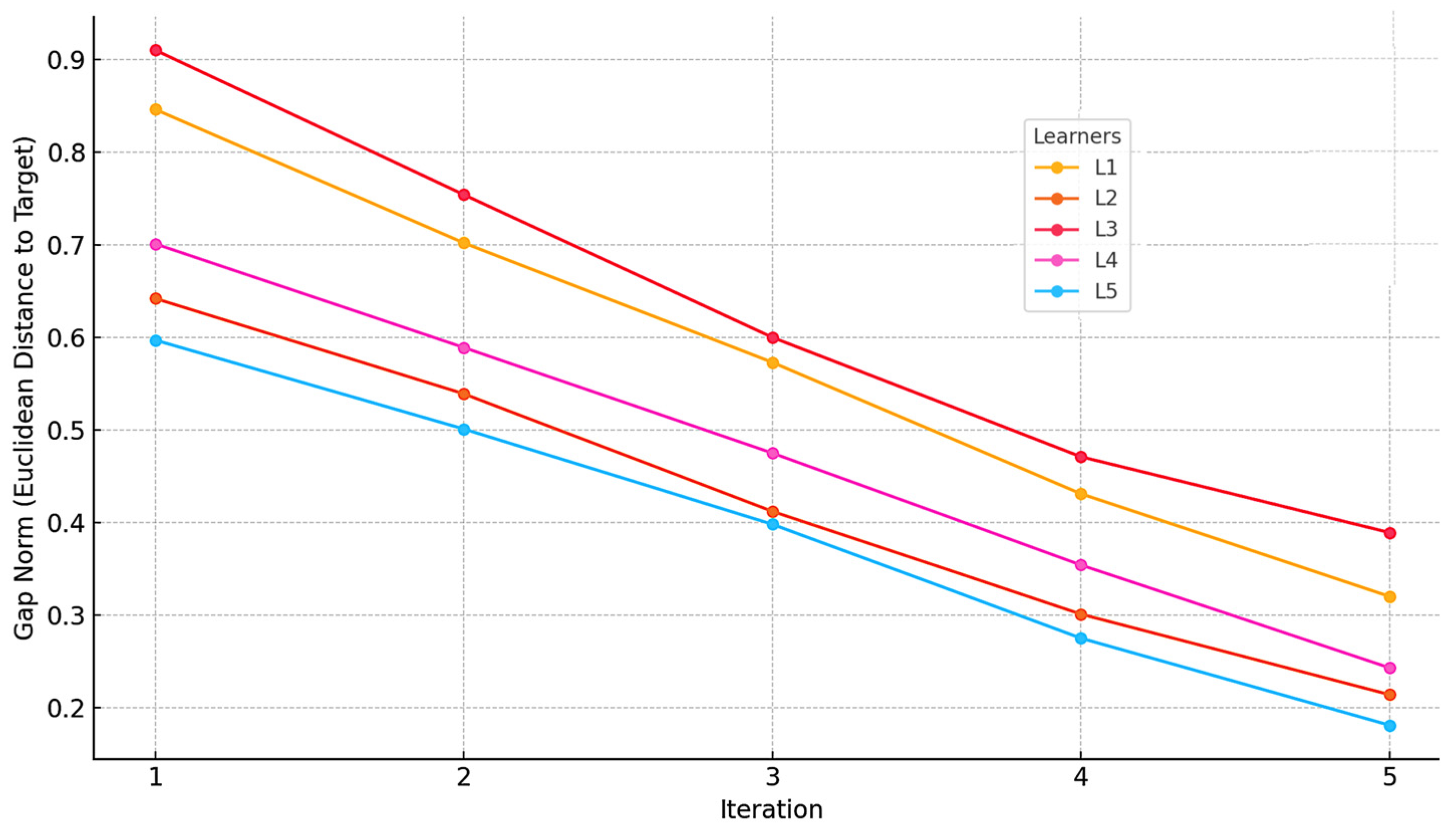

3.5. Learning Progression Results

The discrete-time simulation described in the previous section was executed over five orchestration cycles per learner, with content assignments dynamically adjusted at each step according to gap severity. This section presents the results of the simulation, highlighting how the learners’ competence vectors evolved over time under the influence of fidelity-matched instructional content.

Each learner’s competence vector was updated iteratively based on the assigned content’s fidelity multiplier $\phi$, the magnitude of the current gap $g_i^{(t)}$, and an individual responsiveness coefficient $\lambda$. The responsiveness coefficient $\lambda$ was drawn at random for each learner from a fixed interval to reflect individual variation in learning effectiveness.

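The primary performance metric was the competence gap norm, computed as the Euclidean distance between the learner’s evolving competence vector and the target vector:

$$\left\lVert \mathbf{G}^{(t)} \right\rVert_2 = \left\lVert \mathbf{T} - \mathbf{L}^{(t)} \right\rVert_2 = \sqrt{\sum_{i=1}^{n} \left( T_i - L_i^{(t)} \right)^2},$$

where $\mathbf{T}$ is the target competence vector, $\mathbf{L}^{(t)}$ is the learner’s current competence vector at iteration $t$, and $\lVert \cdot \rVert_2$ is the Euclidean ($L_2$) norm, which computes the distance between the two vectors.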

This metric captures overall proximity to the regulatory competence standard across all six skill domains.
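The gap norm and a monotonicity check on its trajectory can be computed as below. This is a short sketch in which the two competence vectors in `history` are hypothetical inputs, not data from the study.

```python
import math
from typing import List, Sequence


def gap_norm(target: Sequence[float], current: Sequence[float]) -> float:
    """||T - L(t)||_2, the Euclidean distance to the target competence vector."""
    return math.dist(target, current)


def is_non_increasing(values: List[float]) -> bool:
    """True when every iteration's gap norm is no larger than the previous one."""
    return all(b <= a for a, b in zip(values, values[1:]))


target = [0.8] * 6
history = [  # hypothetical competence vectors for one learner
    [0.30, 0.50, 0.60, 0.70, 0.45, 0.55],
    [0.50, 0.62, 0.68, 0.76, 0.59, 0.65],
]
norms = [gap_norm(target, l_t) for l_t in history]
print([round(v, 4) for v in norms], is_non_increasing(norms))
```

Because the norm aggregates all six skill domains, a learner can reduce it substantially while still having one unresolved high-severity component. The per-skill gaps remain the basis for content assignment.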

Figure 9 displays the trajectory of each learner’s gap norm over five iterations. All learners showed a consistent downward trend in their gap norms, indicating progressive closure of identified competence gaps.

The rate of learning varied among individuals, reflecting differences in initial competence, responsiveness coefficient $\lambda$, and assigned content fidelity. Learner 1, who started with the largest overall gaps, required multiple iterations with low- and medium-fidelity content before being assigned high-fidelity digital twin scenarios in iterations 4 and 5. In contrast, Learner 5, who began with relatively minor deficits, progressed rapidly and was transitioned to consolidation scenarios by the third cycle.

The simulation also logged the distribution of content fidelity levels used per learner. Learners with initially high-severity gaps received a greater share of low- and medium-fidelity interventions in early cycles, whereas learners with low-severity gaps engaged primarily with high-fidelity operational twins. This distribution indicates that the orchestration engine allocates resources as intended: efficiently and in a pedagogically appropriate sequence.

Moreover, all training events and associated outcomes were captured in the validation matrix, including content version hashes, learner interaction timestamps, and post-assessment scores. This ensures full auditability and enables downstream analysis of training pathway effectiveness, instructional efficiency, and regulatory traceability.

3.6. System Behavior and Validation Matrix Output

To complete the simulation cycle, the training ecosystem logs every instructional interaction, decision, and outcome into a structured validation matrix. This matrix serves as the central audit mechanism for regulatory compliance, system transparency, and instructional traceability. It captures the contextual and performance metadata associated with each orchestration decision, forming a verifiable training history that can be queried by instructors, auditors, and certification bodies.

The validation matrix is a structured, tamper-evident data store that captures detailed logs of every training interaction executed through the orchestration engine. It acts as the digital audit trail of the system, showing what was taught, to whom, using which resource, at what time, and with what outcome. The matrix plays a central role in verifying instructional alignment with regulatory standards (e.g., EASA Part-66), facilitating continuous improvement, and enabling third-party audits.
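The text describes the matrix as tamper-evident without naming a mechanism. One common way to obtain tamper evidence is hash chaining, where each row stores the SHA-256 hash of its own content combined with the previous row’s hash. The minimal sketch below assumes that approach; the class, method, and field names are hypothetical.

```python
import hashlib
import json
from typing import Dict, List


def _digest(prev_hash: str, event: Dict) -> str:
    """Hash the previous row's hash together with a canonical JSON encoding."""
    payload = prev_hash + json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ValidationMatrix:
    """Append-only, hash-chained event log (a sketch of tamper evidence)."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.rows: List[Dict] = []

    def append(self, event: Dict) -> str:
        prev = self.rows[-1]["hash"] if self.rows else self.GENESIS
        h = _digest(prev, event)
        self.rows.append({"event": event, "prev": prev, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute every link; any edited or reordered row breaks the chain."""
        prev = self.GENESIS
        for row in self.rows:
            if row["prev"] != prev or _digest(prev, row["event"]) != row["hash"]:
                return False
            prev = row["hash"]
        return True


vm = ValidationMatrix()
vm.append({"learner": "L-2", "resource": "CBT-32-01", "pre": 0.40, "post": 0.52})
vm.append({"learner": "L-2", "resource": "VR-32-07", "pre": 0.52, "post": 0.66})
print(vm.verify())
```

Hash chaining only detects tampering if the latest hash is also anchored somewhere an attacker cannot rewrite, such as a separately signed checkpoint. Otherwise, the whole chain could simply be recomputed.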

Each row in the matrix represents a single, discrete training transaction—a learner engaging with a content asset to address a specific competence gap. Columns (fields) in the matrix include the following elements:

Learner ID—Pseudonymized identifier.

Skill Area—ATA-coded domain (e.g., LG-3: Hydraulic Actuation).

Gap Severity—Classification at the time of content selection.

Resource ID—Assigned instructional asset with version hash.

Fidelity Level—Low, medium, or high.

Pre/Post Scores—Normalized competence values before and after training.

Compliance Flags—Tags for EASA Part-66 module mapping.

A sample output from the simulation is shown in Table 5, which records five selected events from Learner 2 across different skill domains and iterations. These entries illustrate how the orchestration engine dynamically adjusts training fidelity and records measurable gains aligned with the learner’s competence trajectory.

This matrix provides multiple benefits:

Every decision, asset, and learner outcome is verifiable with a version-controlled record.

Regulators can query training activity by module, scenario, or skill area to confirm compliance.

System designers and instructors can identify which resources yield the highest learning gains or where instructional strategies may need revision.

The matrix can be used for cohort-level skill gap heatmaps, training efficiency dashboards, or federated reporting across institutions.
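The compliance and analytics uses listed above reduce to simple queries over the matrix rows. The sketch below mirrors the column list with a hypothetical `MatrixRow` type. It shows a regulator-style filter by Part-66 module and a per-resource mean gain. The three rows are toy values, not entries from Table 5.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class MatrixRow:
    """One training event, mirroring the validation-matrix columns."""
    learner_id: str
    skill_area: str
    severity: str
    resource_id: str
    fidelity: str
    pre: float
    post: float
    modules: Tuple[str, ...]  # compliance flags, e.g. ("M09.02",)


def query_by_module(rows: Iterable[MatrixRow], module: str) -> List[MatrixRow]:
    """Regulator-style query: all events flagged with a given Part-66 module."""
    return [r for r in rows if module in r.modules]


def mean_gain_by_resource(rows: Iterable[MatrixRow]) -> Dict[str, float]:
    """Average post - pre gain per resource, to spot the most effective assets."""
    gains = defaultdict(list)
    for r in rows:
        gains[r.resource_id].append(r.post - r.pre)
    return {rid: mean(g) for rid, g in gains.items()}


rows = [  # toy values for illustration only
    MatrixRow("L-2", "LG-3", "high", "CBT-32-01", "low", 0.40, 0.52, ("M09.02",)),
    MatrixRow("L-2", "LG-3", "medium", "VR-32-07", "medium", 0.52, 0.66, ("M09.02",)),
    MatrixRow("L-2", "LG-1", "low", "ODT-32-03", "high", 0.70, 0.78, ()),
]
print(len(query_by_module(rows, "M09.02")), mean_gain_by_resource(rows))
```

Raw gains are not directly comparable across assets, because low-severity learners have less room to improve. A fairer comparison could normalize each gain by the pre-training gap.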

From a systems perspective, the simulation confirms that the orchestration engine adheres to the expected behavior:

Learners with larger gaps receive foundational resources.

Gains are progressively achieved in a personalized and trackable manner.

All training interactions are logged in a format suitable for automated review and continuous improvement.

With this validation mechanism in place, the DTBT ecosystem not only adapts dynamically to learner needs but also provides the infrastructure for data-driven certification, enabling aviation organizations to meet future regulatory expectations in a digitally transformed training environment.

To ensure that real-time orchestration and validation processes remain computationally efficient, the system architecture integrates both edge computing elements and latency-aware task scheduling. Low-latency operations such as competence vector updates and fidelity tier assignments are performed on-site or via local server clusters, while higher-complexity analytics (e.g., session trace audits, performance forecasting) are routed through cloud services during non-critical intervals. This hybrid cloud–edge topology minimizes bandwidth consumption and prevents bottlenecks during high-frequency user interaction.
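The latency-aware split between edge and cloud can be illustrated with a deadline-based router. This is a minimal sketch, assuming that each task carries a latency budget and that a single cut-off decides its tier. The `EDGE_BUDGET_MS` value and the task names are hypothetical, and the real scheduler is not described in this detail.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical cut-off: tasks whose results are needed within this budget
# stay on the edge tier; everything else is queued for the cloud tier.
EDGE_BUDGET_MS = 500.0


@dataclass
class Task:
    name: str
    deadline_ms: float  # latency budget for the result


def route(tasks: List[Task]) -> Tuple[List[str], List[str]]:
    """Split tasks into edge and cloud queues, each in earliest-deadline order."""
    edge: List[str] = []
    cloud: List[str] = []
    for task in sorted(tasks, key=lambda t: t.deadline_ms):
        (edge if task.deadline_ms <= EDGE_BUDGET_MS else cloud).append(task.name)
    return edge, cloud


tasks = [
    Task("session-trace-audit", 60_000),
    Task("competence-vector-update", 50),
    Task("fidelity-tier-assignment", 100),
    Task("performance-forecast", 3_600_000),
]
edge_queue, cloud_queue = route(tasks)
print(edge_queue, cloud_queue)
```

Ordering each queue by deadline means that, within a tier, the most time-sensitive work is served first.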

Furthermore, data transmissions use event-driven protocols (e.g., MQTT) and compressed xAPI log formats to reduce overhead without sacrificing granularity. The orchestration engine operates on pre-processed metadata rather than full simulation logs, further improving runtime responsiveness. Preliminary deployment benchmarks indicate that orchestration decisions are executed with sub-second latency even in multi-user sessions, supporting practical classroom use without perceptible delay.
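The compression step can be sketched with Python’s standard `gzip` and `json` modules. This assumes that batches of xAPI-style statements are serialized as compact JSON before transmission. The batch contents are hypothetical, and the actual log format used by the system is not specified.

```python
import gzip
import json
from typing import Dict, List


def pack_statements(statements: List[Dict]) -> bytes:
    """Serialize a batch of xAPI-style statements compactly, then gzip it."""
    raw = json.dumps(statements, separators=(",", ":")).encode("utf-8")
    return gzip.compress(raw)


def unpack_statements(blob: bytes) -> List[Dict]:
    """Inverse of pack_statements."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))


# Hypothetical, highly repetitive batch, typical of per-event training logs.
batch = [{"verb": "completed", "object": f"res-{i % 5}", "score": 0.5}
         for i in range(100)]
blob = pack_statements(batch)
raw_size = len(json.dumps(batch).encode("utf-8"))
print(raw_size, len(blob))
```

The resulting bytes could serve as the payload of an MQTT PUBLISH message. The MQTT client is not shown here because it requires a third-party library.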