Novel Matching Algorithm for Effective Drone Detection and Identification by Radio Feature Extraction

Abstract

1. Introduction

- (a) We propose a novel drone detection method that identifies the presence of unmanned aerial vehicle (UAV) signals through power detection, filters out non-drone signals using bandwidth analysis, and matches drone signals based on time-series characteristics, thereby achieving precise recognition of UAV signals.

- (b) We present a method to evaluate the effectiveness of UAV feature matching, establishing reliable metrics that improve identification accuracy.

- (c) We introduce DroneRF820 (https://pan.quark.cn/s/ae18fe3731da (accessed on 13 June 2025)), a new dataset collected through dual-band simultaneous monitoring. It contains RF signals from eight common UAVs and their flight controllers, offering high-signal-to-noise-ratio data for developing and validating detection methods.

- (d) We evaluate the recognition algorithm on the DroneRF820 and DroneRFa datasets. Compared with previous methods, our algorithm offers advantages in both accuracy and speed.
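The power-detection and bandwidth-filtering stages of contribution (a) can be illustrated with a minimal Python sketch. It assumes a power spectral density (in dB per frequency bin) has already been computed, for example by an FFT of the captured IQ samples. The median-based noise floor, the 10 dB detection margin and the 20% bandwidth tolerance are illustrative assumptions, not values from this paper; the allowed bandwidths of 10 MHz and 20 MHz follow the channel bandwidths listed in the data collection table. All function and class names are hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Detection:
    start_hz: float  # lower edge of an occupied band
    stop_hz: float   # upper edge of an occupied band

    @property
    def bandwidth_hz(self) -> float:
        return self.stop_hz - self.start_hz


def detect_occupied_bands(psd_db, f0_hz, bin_hz, margin_db=10.0):
    """Power detection: group contiguous bins whose power exceeds the noise floor by margin_db."""
    # Assumption: most bins contain only noise, so the median approximates the noise floor.
    threshold = median(psd_db) + margin_db
    bands, start = [], None
    for i, p in enumerate(psd_db):
        if p > threshold and start is None:
            start = i  # rising edge: a new occupied band begins
        elif p <= threshold and start is not None:
            bands.append(Detection(f0_hz + start * bin_hz, f0_hz + i * bin_hz))
            start = None  # falling edge: the band ends at this bin
    if start is not None:  # band still open at the top of the span
        bands.append(Detection(f0_hz + start * bin_hz, f0_hz + len(psd_db) * bin_hz))
    return bands


def filter_by_bandwidth(bands, allowed_hz=(10e6, 20e6), tol=0.2):
    """Bandwidth analysis: keep bands within a relative tolerance of an expected drone bandwidth."""
    return [b for b in bands if any(abs(b.bandwidth_hz - w) <= tol * w for w in allowed_hz)]


# Example: a 2.4-2.5 GHz span in 1 MHz bins with noise at -90 dB,
# one 10 MHz drone-like signal and one 2 MHz non-drone signal at -60 dB.
psd = [-90.0] * 100
for i in range(20, 30):
    psd[i] = -60.0
for i in range(60, 62):
    psd[i] = -60.0

detected = detect_occupied_bands(psd, f0_hz=2.4e9, bin_hz=1e6)
kept = filter_by_bandwidth(detected)
print([(b.start_hz, b.bandwidth_hz) for b in kept])
```

Both signals pass the power test, but only the 10 MHz one survives the bandwidth filter, which is the intended division of labour between the two stages.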

2. Related Works

2.1. Principles of UAV Communication

2.2. UAV Signal Transmission Based on OFDM Modulation

3. Data Acquisition and Analysis

3.1. Data Collection

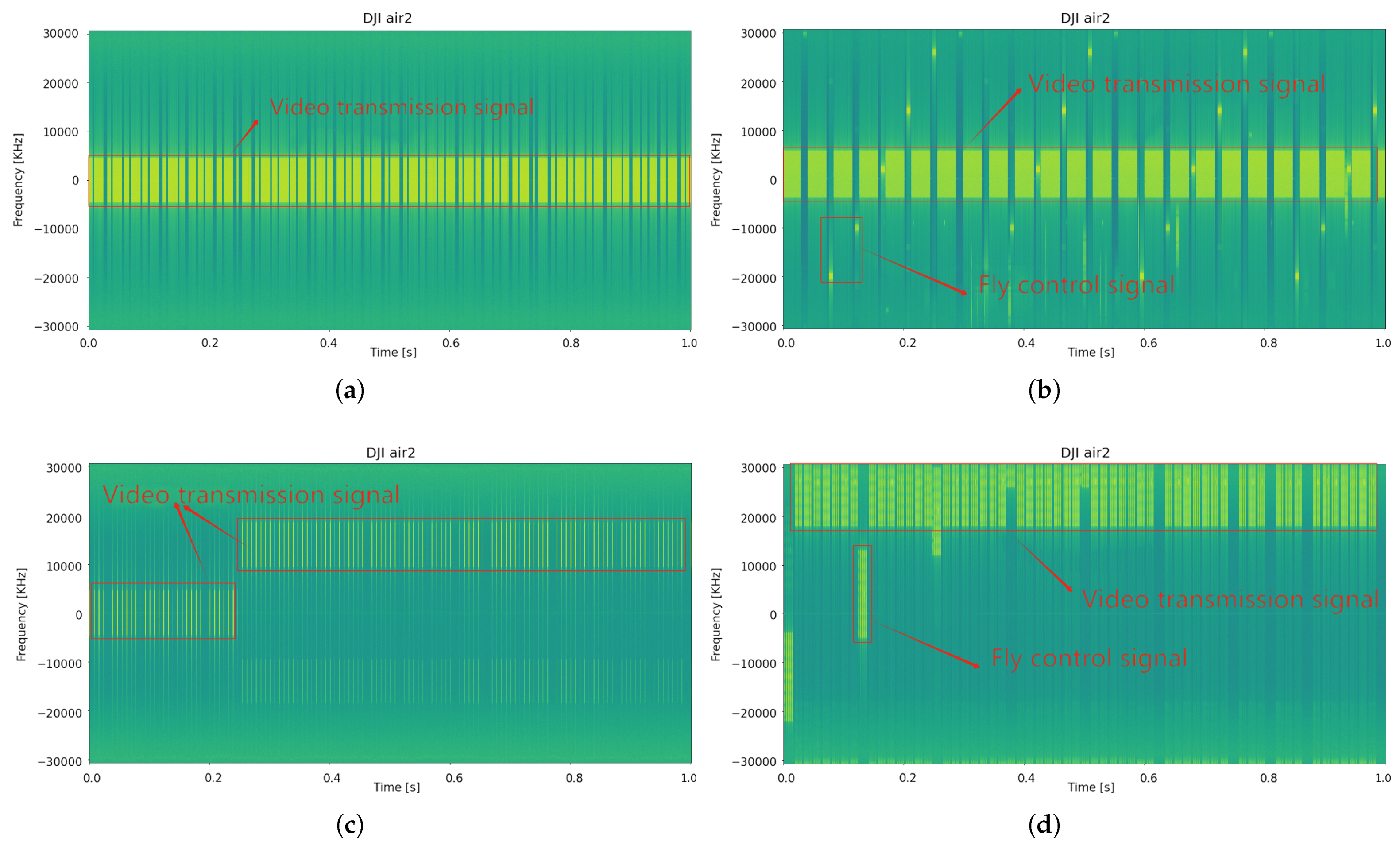

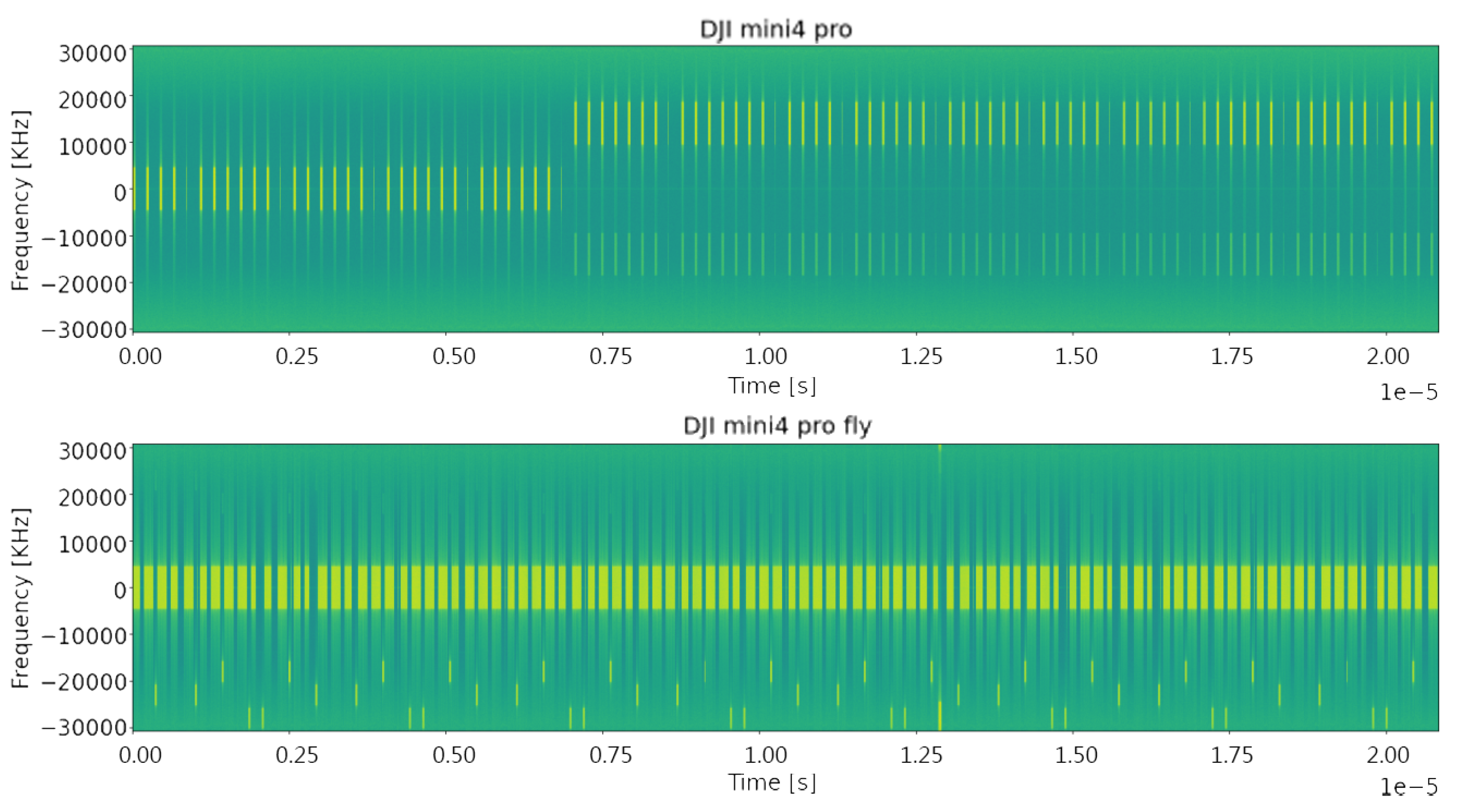

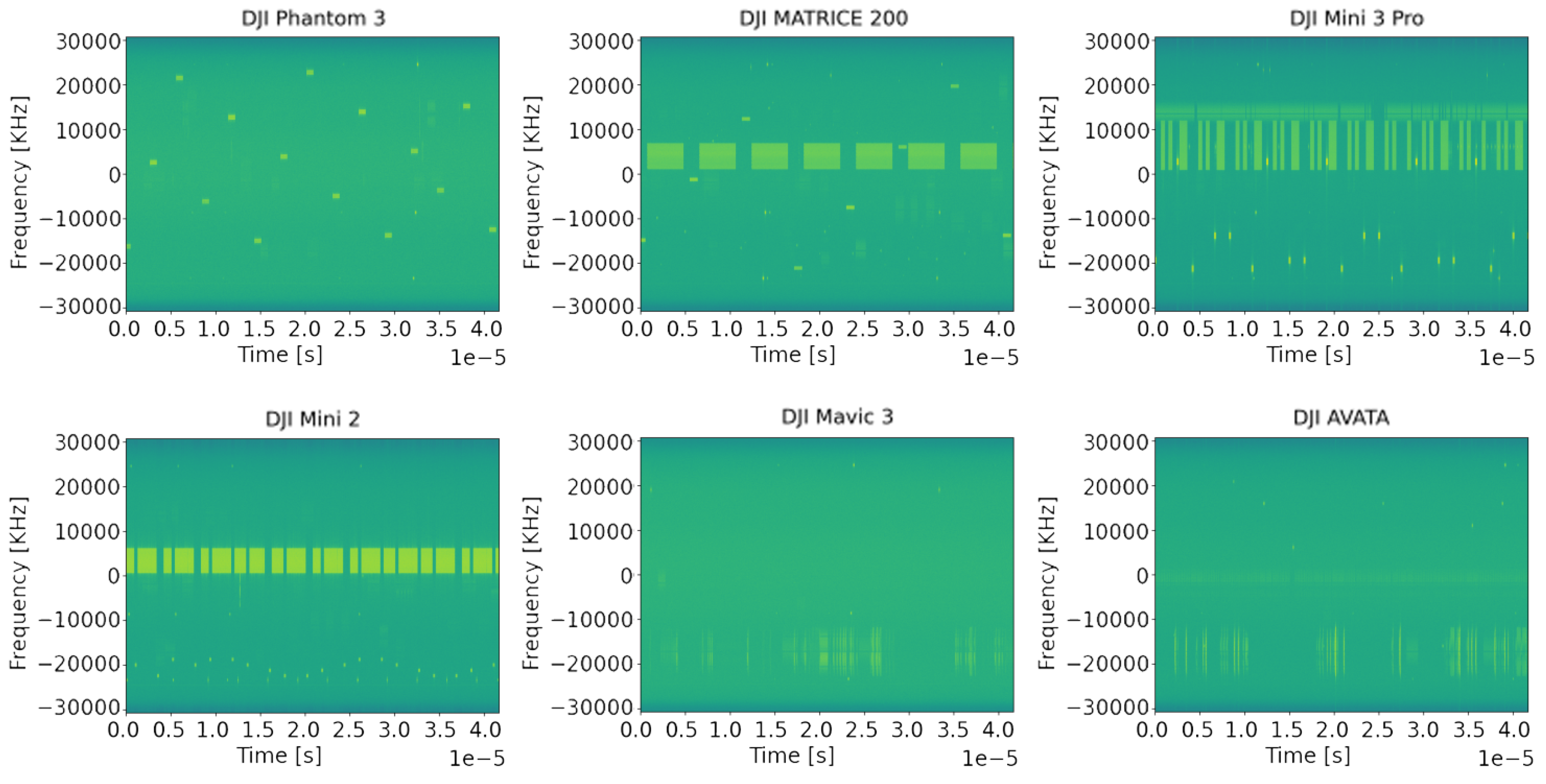

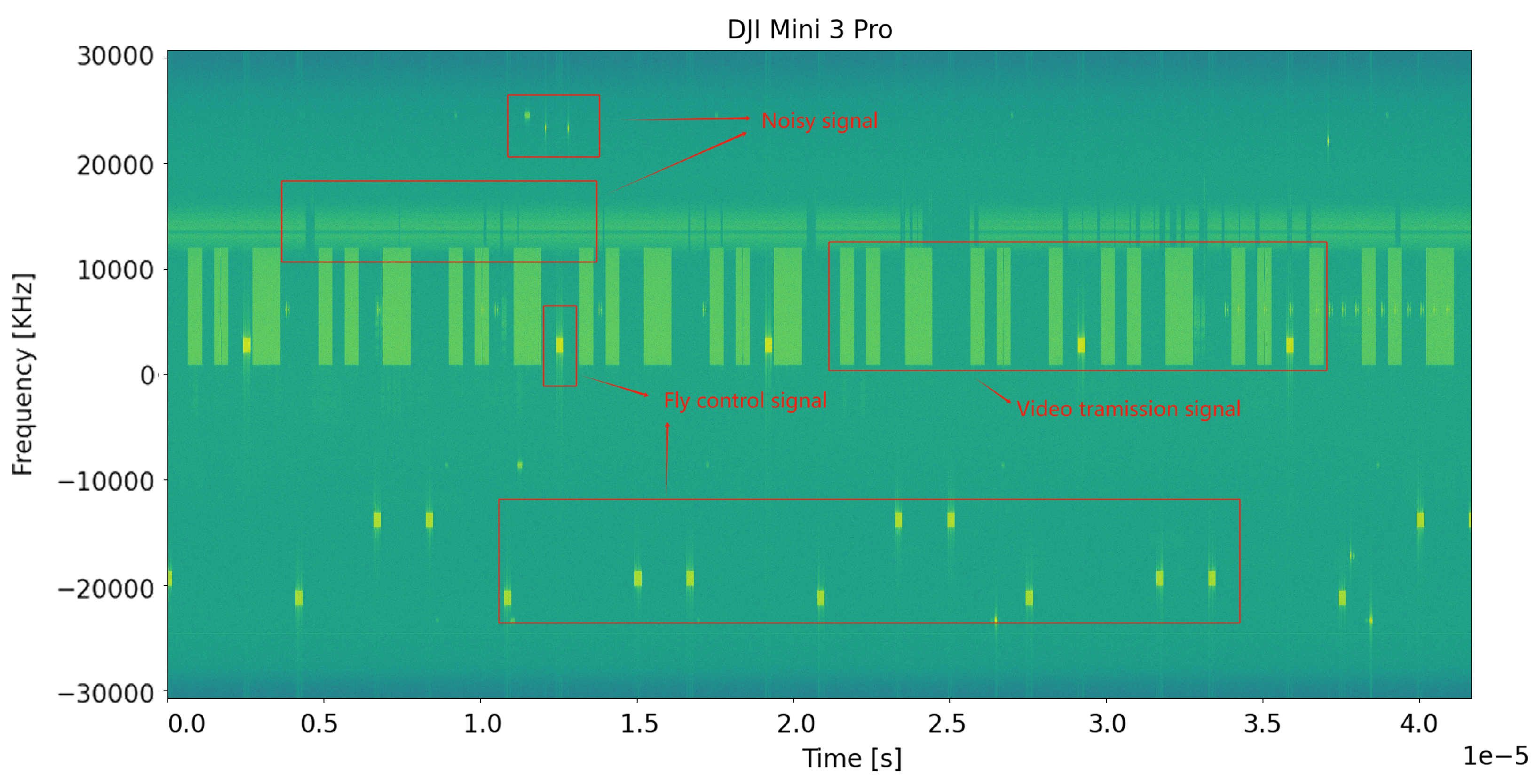

3.2. Time–Frequency Analysis of UAV Signal Measurements

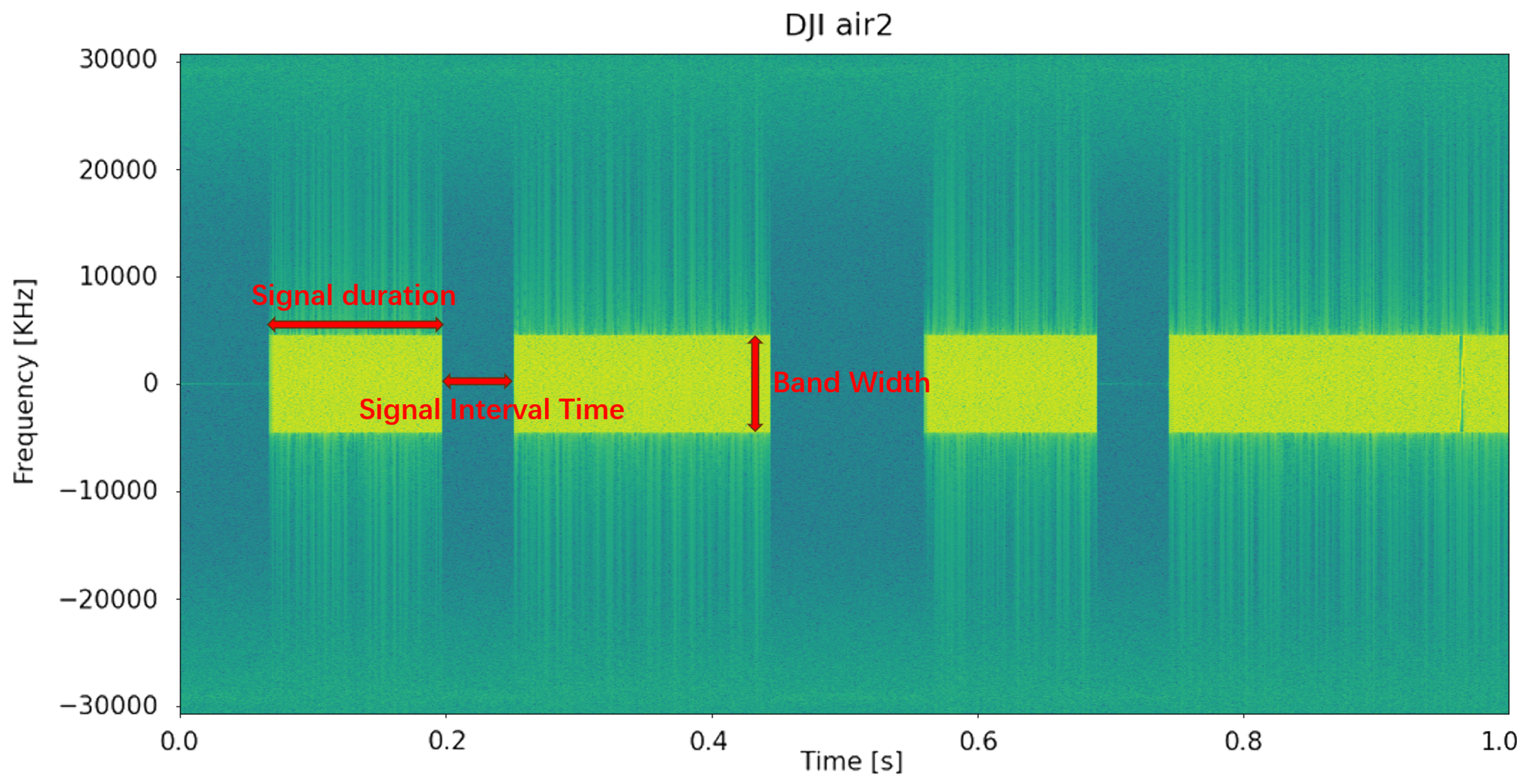

- Signal duration is the length of a data packet in time, that is, the interval over which a single signal pulse or data packet is transmitted.

- Signal interval time is the time gap between consecutive signals.

- Bandwidth is the frequency range occupied by the signal, which determines the data transmission rate and signal quality.
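Given a power envelope over time within one detected band, the signal duration and signal interval time defined above can be measured by thresholding the envelope into bursts. The following minimal sketch assumes a fixed detection threshold in dB and summarizes each feature by its mean; these choices and the helper names are hypothetical, not taken from the paper.

```python
from statistics import mean


def extract_bursts(envelope_db, dt_s, threshold_db):
    """Return (start_s, stop_s) for every complete run of samples above threshold_db."""
    bursts, start = [], None
    for i, p in enumerate(envelope_db):
        if p > threshold_db and start is None:
            start = i
        elif p <= threshold_db and start is not None:
            # A burst already in progress at sample 0 has an unknown start, so skip it.
            if start > 0:
                bursts.append((start * dt_s, i * dt_s))
            start = None
    # A burst still open at the end of the capture has an unknown length and is dropped.
    return bursts


def time_features(bursts):
    """Summarize signal duration and signal interval time (gap between consecutive bursts)."""
    if not bursts:
        raise ValueError("no complete bursts found")
    durations = [stop - start for start, stop in bursts]
    intervals = [nxt[0] - cur[1] for cur, nxt in zip(bursts, bursts[1:])]
    return {
        "duration_s": mean(durations),
        "interval_s": mean(intervals) if intervals else float("nan"),
    }


# Example: 0.1 ms sampling, bursts of 2 ms separated by 3 ms gaps.
envelope = [-90.0] * 10 + ([-50.0] * 20 + [-90.0] * 30) * 3
bursts = extract_bursts(envelope, dt_s=1e-4, threshold_db=-70.0)
features = time_features(bursts)
print(len(bursts), features)
```

Taking the gap from the end of one burst to the start of the next matches the definition of signal interval time given above; a start-to-start period would be an equally plausible alternative.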

4. Methods

4.1. Signal Preprocessing Module

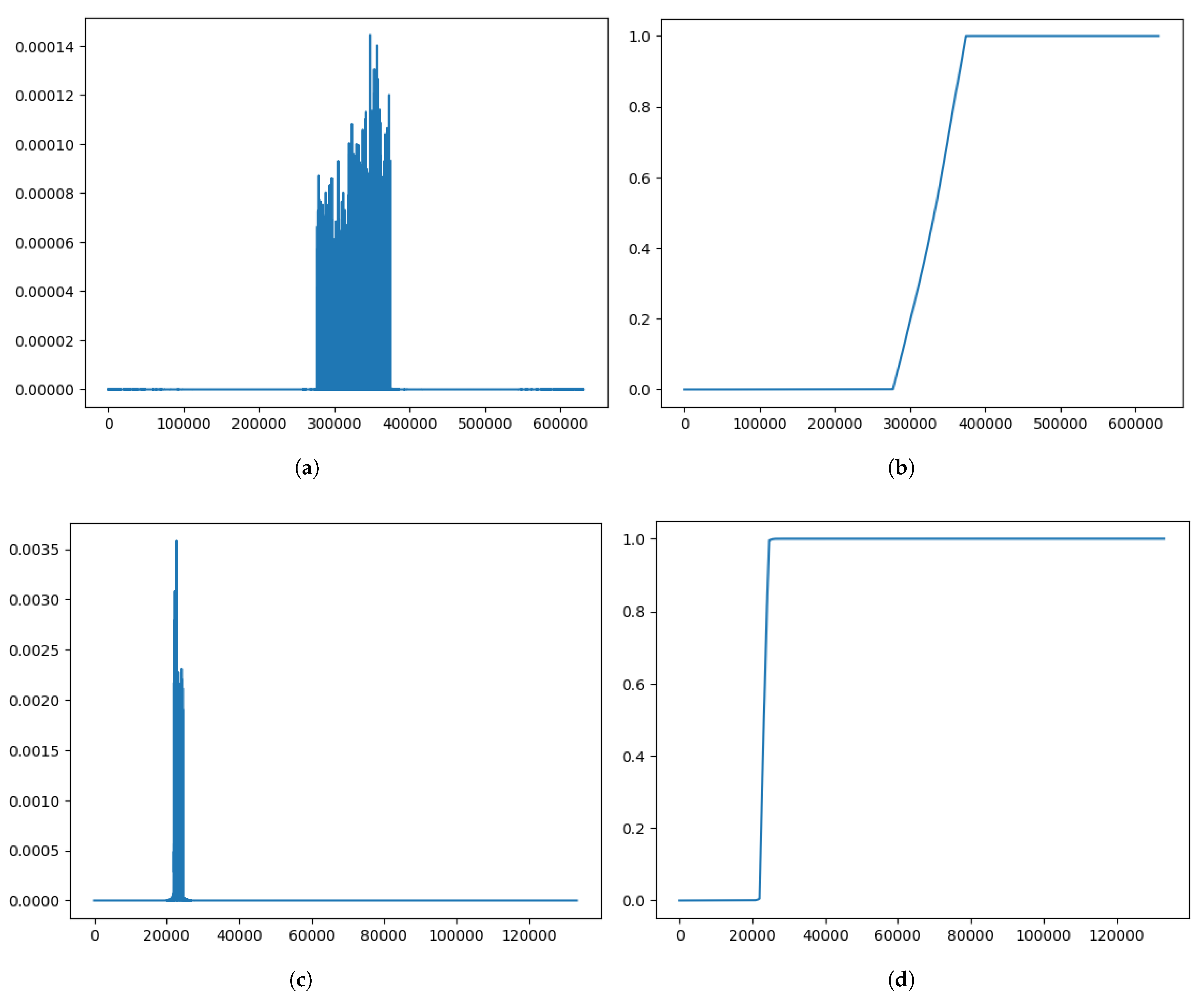

4.2. UAV Feature Extraction Module

4.3. UAV Feature Reconstruction Module

4.4. UAV Matching and Recognition Module

5. Experimental Design and Results Analysis

5.1. Controlled Experimental Design

- Dataset Partitioning: The signal data from the three brands and eight drone models were divided into training and testing sets in an 8:2 ratio.

- Feature Extraction: Our algorithm extracted signal features from the training set to construct a drone signal feature library, and the testing set was used to evaluate the accuracy of the detection algorithm.
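The protocol above (an 8:2 split, a feature library built from the training set, and accuracy measured on the testing set) can be sketched as follows. The paper's exact matching rule is not reproduced here; as a stand-in, this sketch stores one mean template per drone model and assigns a test sample to the template with the smallest mean relative error over duration, interval and bandwidth, rejecting it when that error exceeds a hypothetical 10% limit. The split is stratified per model (an assumption) so that every model appears in training; model names and feature values are made up for illustration.

```python
import random
from statistics import mean

FEATURES = ("duration_s", "interval_s", "bandwidth_hz")


def split_8_2(samples, seed=0):
    """Stratified 8:2 split of (label, features) pairs so every model appears in training."""
    by_label = {}
    for label, feats in samples:
        by_label.setdefault(label, []).append((label, feats))
    rng = random.Random(seed)
    train, test = [], []
    for rows in by_label.values():
        rng.shuffle(rows)
        cut = round(0.8 * len(rows))
        train += rows[:cut]
        test += rows[cut:]
    return train, test


def build_library(train):
    """Feature library: one template per model, the mean of its training feature vectors."""
    grouped = {}
    for label, feats in train:
        grouped.setdefault(label, []).append(feats)
    return {
        label: {k: mean(f[k] for f in rows) for k in FEATURES}
        for label, rows in grouped.items()
    }


def match(library, feats, max_rel_err=0.1):
    """Nearest template by mean relative error; None if no template is within max_rel_err."""
    best_label, best_err = None, float("inf")
    for label, tmpl in library.items():
        err = mean(abs(feats[k] - tmpl[k]) / tmpl[k] for k in FEATURES)
        if err < best_err:
            best_label, best_err = label, err
    return best_label if best_err <= max_rel_err else None


def accuracy(library, test):
    """Fraction of test samples whose matched label equals the true label."""
    return sum(match(library, feats) == label for label, feats in test) / len(test)


# Hypothetical feature values for two drone models, ten captures each with small jitter.
base = {
    "model_A": {"duration_s": 2e-3, "interval_s": 3e-3, "bandwidth_hz": 10e6},
    "model_B": {"duration_s": 1e-3, "interval_s": 14e-3, "bandwidth_hz": 20e6},
}
samples = [
    (label, {k: v * (1 + 0.002 * (i - 5)) for k, v in feats.items()})
    for label, feats in base.items()
    for i in range(10)
]

train, test = split_8_2(samples)
library = build_library(train)
print(len(train), len(test), accuracy(library, test))
```

Because the library in this sketch is just a dictionary of templates, registering a newly observed drone model only requires adding one entry, which is consistent with the real-time training argument made in Section 5.3.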

5.2. Experimental Results and Analysis

5.2.1. Experiment 1: Validation of the DroneRF820 Dataset

5.2.2. Experiment 2: Validation of the DroneRFa Dataset

5.3. Comparison with Other Methods

- Rapid training. Whereas other machine learning methods require extensive image data for training, our approach needs only a small number of extracted drone features to achieve recognition, which substantially shortens the training time of the recognition model.

- Real-time training capability. Because the model is built from a limited set of extracted features, training can begin as soon as a drone is detected. This improves recognition precision in complex and changing recognition scenarios.

- High recognition accuracy. In our experiments, the method achieved a recognition accuracy of 100%, considerably higher than that of the other mainstream recognition methods compared.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Collection No. | Status | Channel Bandwidth | Frequency Band |
|---|---|---|---|
| 1 | Standby | 10 MHz | 2.4 GHz–2.5 GHz |
| 2 | Standby | 10 MHz | 2.4 GHz–2.5 GHz |
| 3 | Standby | 10 MHz | 5.7 GHz–5.8 GHz |
| 4 | Standby | 10 MHz | 5.7 GHz–5.8 GHz |
| 5 | Standby | 10 MHz | 5.7 GHz–5.8 GHz |
| 6 | Standby | 20 MHz | 2.4 GHz–2.5 GHz |
| 7 | Standby | 20 MHz | 2.4 GHz–2.5 GHz |
| 8 | Standby | 20 MHz | 5.7 GHz–5.8 GHz |
| 9 | Standby | 20 MHz | 5.7 GHz–5.8 GHz |
| 10 | Standby | 20 MHz | 5.7 GHz–5.8 GHz |
| 11 | Flight | 10 MHz | 2.4 GHz–2.5 GHz |
| 12 | Flight | 10 MHz | 2.4 GHz–2.5 GHz |
| 13 | Flight | 10 MHz | 5.7 GHz–5.8 GHz |
| 14 | Flight | 10 MHz | 5.7 GHz–5.8 GHz |
| 15 | Flight | 10 MHz | 5.7 GHz–5.8 GHz |
| 16 | Flight | 20 MHz | 2.4 GHz–2.5 GHz |
| 17 | Flight | 20 MHz | 2.4 GHz–2.5 GHz |
| 18 | Flight | 20 MHz | 5.7 GHz–5.8 GHz |
| 19 | Flight | 20 MHz | 5.7 GHz–5.8 GHz |
| 20 | Flight | 20 MHz | 5.7 GHz–5.8 GHz |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, T.; Du, Y.; Mao, R.; Xie, H.; Wei, S.; Hu, C. Novel Matching Algorithm for Effective Drone Detection and Identification by Radio Feature Extraction. Information 2025, 16, 541. https://doi.org/10.3390/info16070541


Chicago/Turabian Style: Wu, Teng, Yan Du, Runze Mao, Hui Xie, Shengjun Wei, and Changzhen Hu. 2025. "Novel Matching Algorithm for Effective Drone Detection and Identification by Radio Feature Extraction" Information 16, no. 7: 541. https://doi.org/10.3390/info16070541
