Abstract

In recent years, deep learning techniques have become increasingly prominent in face recognition tasks, particularly through the extraction and classification of face vectors. These vectors enable the inference of demographic attributes such as gender, age, and ethnicity. This study introduces a gender classification approach based solely on face vectors, without the use of traditional machine learning classifiers. Face embeddings were generated using three popular models: dlib, ArcFace, and FaceNet512. For classification, the Average Neural Face Embeddings (ANFE) technique was applied, which assigns gender by calculating the distances between a face vector and gender-specific average vectors. To improve gender recognition performance for Asian individuals, a new dataset was created by scraping facial images and related metadata from AsianWiki. The experimental evaluations showed that ANFE models based on ArcFace achieved classification accuracies of 93.1% for Asian women and 90.2% for Asian men. In contrast, models using dlib embeddings performed notably worse, with accuracies of 76.4% for women and 74.3% for men. Among the tested models, FaceNet512 provided the best results, reaching 97.5% accuracy for female subjects and 94.2% for males. Furthermore, this study includes a comparative analysis between ANFE and other commonly used gender classification methods.

1. Introduction

1.1. Background

Determining gender from facial images has become a popular research topic in recent years. The primary aim of these studies is to automatically determine a person’s gender by analyzing facial features extracted from images. Researchers have proposed various techniques for this task, including traditional machine learning algorithms and deep learning models [1,2,3]. Early approaches relied on geometric features such as facial shape and the distances between the eyes, nose, and mouth. However, these methods are limited because they do not account for variations in facial features caused by factors such as age and ethnicity.

Later approaches use machine learning algorithms trained on labeled images to learn the relationship between facial features and gender. However, the accuracy of these methods is limited by the quality and size of the training dataset. With the rise of deep learning, convolutional neural networks (CNNs) have been increasingly used for image-based gender determination. These models have been highly effective because they learn features directly from raw image data, and they achieve state-of-the-art performance on various benchmark datasets.

Despite advances in image-based gender determination, several challenges persist. One of the main challenges is building comprehensive facial datasets for facial attribute recognition (FAR) systems; such datasets should cover a wide range of variables, including image diversity, subjects, age, gender, race, and environmental conditions. Although FAR systems typically perform well on constrained images, their performance can degrade in real-world scenarios. Factors such as resolution, sharpness, lighting, expression, occlusion, pose (profile versus frontal view), constrained versus unconstrained environments, longitudinal data, race, hair, and scale can significantly affect system performance. Datasets generally fall into controlled [4,5,6,7,8,9,10] and uncontrolled [11,12,13,14] categories: controlled datasets exhibit limited variability within specific environments, whereas uncontrolled datasets reflect the conditions encountered in natural settings. For example, FG-NET [4], UIUC-IFP-Y Internal Aging [6], and MORPH [7] were collected in controlled environments, whereas LFW [11] and IMDB-WIKI [12] contain data collected in natural settings. Together, these datasets offer broad diversity for evaluating facial attribute recognition and age estimation systems, as summarized in Table 1.

Table 1.

Datasets for facial gender and age detection.

In this study, a dedicated dataset was created to accurately determine the gender and age of individuals of East Asian descent, and a new deep learning-based approach with a fast, parallel computing architecture was developed for this purpose.

1.2. Related Work

This section reviews studies that estimate gender and age from facial images using the Single Task Layer (STL) approach. The studies are summarized in terms of the dataset used, the feature extraction method, the classification or regression method, and the reported performance.

Previous gender recognition studies based on facial features can be divided into two main groups according to the feature space and extraction approach: appearance-based (global feature) extraction and geometry-based (local feature) extraction. Appearance-based methods consider the entire facial feature space, whereas geometry-based methods extract features from specific facial components, such as the eyebrows, nose, and lips, and use this feature set for gender classification. The findings presented in Table 1 show that deep learning-based methods achieve higher gender recognition accuracy than traditional methods based on handcrafted features, even on uncontrolled facial images. In particular, deep learning-based approaches are better at recognizing gender in challenging real-life images with variations in scale, rotation, and lighting. However, a drawback of CNN-based approaches is their dependence on large datasets.

In the realm of machine learning (ML) methods, Cottrell [15] achieved an accuracy of 99.0% using an autoencoder trained with backpropagation on a private dataset. Gutta et al. [16] used raw pixels with a combination of radial basis function (RBF) and decision tree methods on the FERET dataset, obtaining an accuracy of 96.0%. Moghaddam [17], also on the FERET dataset, used raw pixels with an SVM with an RBF kernel (SVM-RBF) to reach 96.62%. Shakhnarovich [18] obtained 79.0% on a web dataset by combining Haar features with AdaBoost. Jain et al. [19] reported 99.30% on the FERET dataset using independent component analysis (ICA) and linear discriminant analysis (LDA). Khan et al. [20] achieved 88.70% with principal component analysis (PCA) and NN on a private dataset. Kim et al. [21] used raw pixels on the AR dataset and achieved 97.0% with the generalized portrait classifier (GPC). Baluja et al. [22] used raw pixels and AdaBoost on the FERET dataset, reaching 94.3%. Yang et al. [23] reported 96.3% on a private dataset with local binary patterns (LBP) and AdaBoost. Leng et al. [24] achieved 98.0% on the FERET dataset by combining Gabor and fuzzy features with support vector machines (SVM). Mäkinen [25] combined LBP and Haar features on the FERET dataset and achieved 92.86% using artificial neural networks (ANN) and SVM. Li et al. [26] used the discrete cosine transform (DCT) and spatial Gaussian mixture models (GMM) for feature extraction on the YGA dataset, reaching 92.5%. Lu et al. [27] reported 94.85% on the FERET dataset using 2D PCA and SVM-RBF.

Wang et al. [28] used scale-invariant feature transform (SIFT) and context features for extraction and AdaBoost for classification on the FERET dataset, achieving 95.0%. Alexandre et al. [29], also on FERET, used LBP features with a linear SVM (SVM-linear) and reported 99.07%. Guo et al. [30] combined LBP, histograms of oriented gradients (HOG), and biologically inspired features (BIF) with a nonlinear SVM on the YGA dataset, reaching 89.28%. Alamri et al. [31] combined LBP and the Weber local descriptor (WLD) with nearest-neighbor classification on the FERET dataset, achieving 98.82%. Bissoon [32] reported 85% on the FERET dataset using PCA for feature extraction and LDA for classification. Hassner [33] achieved 79.3% on the Adience dataset using LBP and Four-Patch LBP (FPLBP) features with an SVM. Yildirim [34] used HOG features on a private dataset and reported 92.3% and 85.6% with random forest and AdaBoost classifiers, respectively. Finally, Abbas et al. [35] achieved 87.3% on the ALSPAC dataset using geodesic path features and LDA.

On the other hand, among deep learning (DL) methods, Van et al. [36] achieved 97.30% on the FERET dataset using a convolutional neural network (CNN) with oversampling. Antipov [37] reported 97.31% on the Labeled Faces in the Wild (LFW) dataset using a CNN with a softmax classifier. Mansanet [38], also on LFW, achieved 94.48% with a deep convolutional neural network (DCNN) and class-posterior methods. Jia et al. [39] achieved 98.90% on LFW using a CNN with a softmax classifier. Cirne et al. [40] achieved 97.50% on the AR Face dataset by combining a geometric descriptor with a CNN. Aslam et al. [41] reported 98.90% on the FERET dataset using a VGG-16 CNN. Simanjuntak [42] attained 99.28% on LFW using COSFIRE and VGG-Face. Afifi et al. [43] achieved 90.43% on the Adience dataset, 99.28% on FERET, and 95.98% on LFW using isolated and holistic features with DCNN + AdaBoost and score fusion methods, respectively. D’Amelio [44] reported 95.13% on LFW using a DCNN with sparse dictionary learning. Moeini et al. [45] achieved 99.90% on FERET and 99.0% on LFW using separate dictionary learning on gray-level and LBP features and on pixel values, respectively. Bekhet et al. [46] introduced a deep learning technique for gender recognition from non-standard selfie images and demonstrated its effectiveness with an accuracy of 89%.

Kim et al. [47] proposed GRA-GAN, a generative adversarial network for gender, race, and age image transformation via image style transfer, based on a channel-wise, multiplication-based combination of encoder and decoder features. The method was evaluated on four open databases: MORPH, AAF, AFAD, and UTK. Raman et al. [48] generated facial images with a pre-trained Style-Generative Adversarial Network (StyleGAN) model and labeled them based on observed gender differences. They used a CNN architecture of their own design, along with VGG16 and VGG19, as classifiers, and reported accuracies of 94% and 95% on the UTKFace and Kaggle gender datasets, respectively. Guo et al. [49] proposed kernel partial least squares regression (K-PLSR) for age estimation from face images. Their model performs dimensionality reduction and age estimation simultaneously, which facilitates more effective learning of age-related changes in facial features. This work, presented at CVPR 2011, performed particularly well on high-dimensional face images. Janahiraman et al. [50] performed gender prediction on facial images of East Asians with deep learning methods such as CNN and VGG and compared the results obtained with the different architectures. Dey et al. [51] presented a new CNN-based approach for age and gender detection; their gender detection models trained on the Adience and UTKFace datasets achieved accuracies of 86.42% and 81.96%, respectively. Tahyudin et al. [52] presented a gender detection model based on the Vision Transformer, using the open-source AFAD and UTKFace datasets. They reported accuracies of 96.76% for 160 × 160 pixel images with eight patches, 98.43% for 224 × 224 pixel images with twenty-eight patches, and 81.74% for 224 × 224 pixel images with fourteen patches. Ramesha et al. [53] presented a feature extraction-based method for face recognition and gender and age classification, in which statistical and structural features of face images are extracted and then used for classification; their experimental results demonstrated the method’s efficacy for face recognition and for age and gender classification.

Taken together, these results illustrate the wide range of methods applied to gender recognition and the accuracies reported for them across both machine learning and deep learning approaches.

1.3. Motivation

This study is principally concerned with gender prediction for individuals of East Asian descent. Its primary motivation stems from the findings of a previous study titled “Average Neural Face Embeddings for Gender Recognition”. In that study, facial images of people of various ethnicities (Caucasian, Asian, African American, Latin, Indian, etc.) were collected from movie-related websites such as Listal and Filmweb and used for training, while the widely recognized benchmark dataset FaceScrub (Caucasian, Asian, African American, etc.) was used for testing.

In the initial experiments, gender-specific average face embedding models were evaluated to identify the most effective configuration. For this purpose, tests were conducted on the Listal and Filmweb datasets, with average gender representations generated from varying sample sizes. The model constructed from 10K face images (10,000 female and 10,000 male) yielded the highest accuracy: gender classification tests with this model on the Listal dataset gave an accuracy of 99.92% for females and 96.47% for males.

This selected 10K gender-specific average face embedding model was subsequently evaluated on the larger and independent FaceScrub dataset, which consists of 31,370 male and 26,631 female images, totaling 58,001 face images. In this second experiment, only 228 images (62 male and 166 female) were misclassified. Based on these results, the model achieved an accuracy of 99.802% for male individuals and 99.376% for female individuals.
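The per-class accuracies follow directly from the counts above: for each class, accuracy is the number of correctly classified images divided by the total number of images in that class. A quick check in Python, using only the figures quoted in the text (the function name is illustrative):

```python
def per_class_accuracy(total: int, misclassified: int) -> float:
    """Accuracy (in percent) for one class: correct / total * 100."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * (total - misclassified) / total


# FaceScrub counts quoted in the text
male_acc = per_class_accuracy(31_370, 62)
female_acc = per_class_accuracy(26_631, 166)
print(f"male: {male_acc:.3f}%, female: {female_acc:.3f}%")
```

This gives 99.802% for males and about 99.377% for females, which agrees with the reported 99.376% up to the last digit.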

However, a subsequent analysis of the misclassified samples showed that most errors occurred for individuals of African and East Asian descent. This underperformance was attributed to a lack of training data that adequately represented these groups, and the creation of new, more inclusive datasets was therefore recommended.

Another key motivation for the present study is that, despite numerous previous works on gender estimation among East Asian populations, several critical challenges remain. Chief among these is the scarcity of relevant data. Most open-access facial datasets are predominantly composed of individuals of European or Caucasian background, leading to model bias. Moreover, East Asian populations exhibit significant variation in facial features, further complicating gender classification based on appearance. A further challenge is the propensity of deep learning models to overfit when trained on limited and homogeneous data, which can result in inaccurate gender predictions. In light of these challenges, the present study aims to develop a new dataset and create more balanced models to improve gender prediction performance for East Asian individuals.

1.4. Contribution and Novelty

The proposed work uses the Python (version 3.6) libraries dlib, insightface, and DeepFace to extract face representations. These libraries provide implementations of the dlib, ArcFace, and FaceNet512 models. In this approach, the extracted face representations are used directly to predict a person’s gender without any trained classifier. The unique aspects of this study are:

- The study demonstrates that gender recognition can be achieved without any classification algorithm, using only average face representation vectors: 128-D for dlib and 512-D for ArcFace and FaceNet512.

- A Python-based web scraping method was developed to prepare the datasets.

- Face detection used the RetinaFace (RetinaFace-10GF) and CenterFace (centerface.onnx) models.

- Face representation vectors were extracted with dlib’s “dlib_face_recognition_resnet_model_v1”, ArcFace’s (ResNet50@WebFace600K), and FaceNet512’s (facenet512_weights.h5) deep neural network models.

- The face representation vectors were stored in JSON format.

- The novelty of the approach is that a classical vector distance measure serves as the classifier, so no deep learning-based model is used in the classification step.
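The distance-based classification in the last item can be sketched as a nearest-average rule: a face is assigned to the gender whose average embedding is closest. This is a minimal sketch under stated assumptions: the text does not name the distance measure, so both Euclidean and cosine distance are offered, and the function name `anfe_predict` is hypothetical.

```python
import numpy as np


def anfe_predict(embedding, avg_embeds, metric="euclidean"):
    """Return (label, distance) for the closest gender-average embedding.

    avg_embeds: mapping label -> average embedding (1-D array-like).
    metric: "euclidean" or "cosine" (assumed options; the paper only says
    that a classical vector distance is used).
    """
    x = np.asarray(embedding, dtype=float)
    best_label, best_dist = None, np.inf
    for label, avg in avg_embeds.items():
        a = np.asarray(avg, dtype=float)
        if metric == "euclidean":
            dist = np.linalg.norm(x - a)
        elif metric == "cosine":
            # cosine distance = 1 - cosine similarity
            dist = 1.0 - (x @ a) / (np.linalg.norm(x) * np.linalg.norm(a))
        else:
            raise ValueError(f"unknown metric: {metric}")
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label, float(best_dist)
```

For example, with averages `{"female": [1, 0, 0], "male": [0, 1, 0]}`, the vector `[0.9, 0.1, 0.0]` is labeled `"female"` under either metric. No training is involved: the only "model" is one stored average vector per gender.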

2. Materials and Method

Gender estimation is a complex problem, particularly for East Asian individuals, owing to the scarcity of available datasets. The task is further complicated by social norms and facial expressions that vary across Asian cultures, by the diversity of physical characteristics among East Asian individuals, and by the difficulty of accurately recognizing their facial structure. Furthermore, models trained on limited data are often susceptible to overfitting, which can degrade their overall performance.

To address these issues, a comprehensive and representative dataset consisting of only East Asian individuals was created. Additionally, a novel approach was developed to minimize the overfitting problem in deep learning models. This solution aims to provide more accurate and generalizable results in gender prediction tasks.

2.1. Creating the Dataset

For this study, a dedicated dataset was designed and built in several stages. The first stage was the collection of visual data, for which a Python-based web scraping script was developed. The website https://asianwiki.com (accessed on 18 June 2025) was selected as the primary source of images because it comprehensively documents Asian productions and actors, including detailed information and media content.

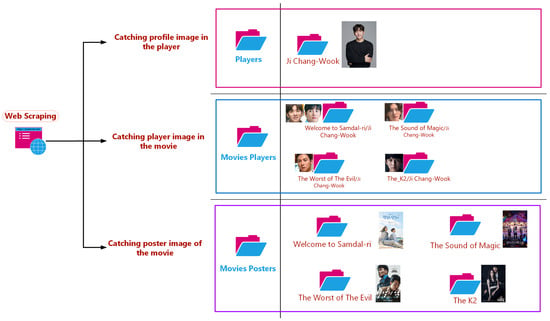

Following the data collection protocol, profile photographs of approximately 20,000 Asian celebrities, posters and advertisements for television series, and small 90 × 90 pixel images showing the faces of actors in the productions were collected. The images were then organized into three main folders. Figure 1 shows a sample folder structure for one actor.

Figure 1.

Data collection system with web scraping.
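The image-collection step of the web scraping script can be sketched with Python’s standard library. This is a minimal sketch, not the authors’ script: it assumes a page’s HTML has already been downloaded (for example with `urllib.request`) and simply collects absolute URLs of `<img>` elements with common image extensions. The class and function names are hypothetical, and AsianWiki’s actual page layout is not modeled.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageLinkParser(HTMLParser):
    """Collect absolute URLs of <img> tags whose src has an image extension."""

    IMAGE_EXTS = (".jpg", ".jpeg", ".png")

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        src = dict(attrs).get("src")
        # Ignore any query string when checking the file extension
        if src and src.lower().split("?")[0].endswith(self.IMAGE_EXTS):
            self.urls.append(urljoin(self.base_url, src))


def extract_image_urls(html: str, base_url: str) -> list[str]:
    """Return the absolute image URLs found in one HTML page."""
    parser = ImageLinkParser(base_url)
    parser.feed(html)
    return parser.urls
```

Relative paths are resolved against the page URL, so the returned list can be downloaded directly into the per-actor folders.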

The next stage was cleaning the collected profile photographs. First, images in the “players” folder that contained more than one face were automatically identified and removed from the dataset. Next, for gender labeling, the name lists at https://asianwiki.com/Category:Actors (accessed on 18 June 2025) (10,855 people) and https://asianwiki.com/Category:Actresses (accessed on 18 June 2025) (9063 people) were extracted by web scraping. Based on these lists, the collected images were automatically assigned to two gender classes: male and female. Where automatic labeling was inadequate or ambiguous, the images were examined manually and assigned to the appropriate class.
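The single-face filter and list-based gender labeling described above can be expressed as two small functions. The sketch is illustrative only and rests on assumptions not stated in the text: each person’s images live in a folder named after the person, the scraped category lists contain the same names, and a face detector has already produced a list of detected faces per image. All names are hypothetical. Names found in neither list, or in both, are flagged for the manual review step.

```python
from pathlib import Path


def label_by_name(person_dirs, actors, actresses):
    """Map each person folder name to 'male', 'female', or 'review'."""
    actors, actresses = set(actors), set(actresses)
    labels = {}
    for d in person_dirs:
        name = Path(d).name
        in_m, in_f = name in actors, name in actresses
        if in_m and not in_f:
            labels[name] = "male"
        elif in_f and not in_m:
            labels[name] = "female"
        else:
            # Missing from both lists or present in both: check manually
            labels[name] = "review"
    return labels


def keep_single_face(detections):
    """Keep only images whose detector output contains exactly one face.

    detections: mapping image path -> list of detected faces.
    """
    return [img for img, faces in detections.items() if len(faces) == 1]
```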

The images were then divided by gender, in a controlled manner, into training and test datasets of different sizes. Six datasets of 100, 200, 300, 400, 500, and 1K images were prepared for training, and two datasets of 500 and 1K images were prepared for testing. The images for each dataset were selected at random. Because the celebrity profiles on the website do not include gender information, the images could not be sorted into gender folders directly. Instead, the lists of people in the actor and actress categories were extracted first, and the datasets were separated according to these lists. The resulting datasets were then checked manually, which revealed two problems: some folders contained child actors, and some images were mislabeled. Child actors were moved to a separate folder, and mislabeled images were moved to the correct gender folder. Likewise, child actors were removed from the actor and actress lists, and incorrectly listed people were moved to the appropriate list.
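A minimal sketch of the random, size-controlled split for one gender follows. Two assumptions go beyond the text and are marked in the code: the training sets are nested prefixes of one shuffled list (matching the checkpoint-style averaging of Algorithm 1), and the test images are disjoint from all training images. The function name `make_splits` and the `seed` parameter are hypothetical.

```python
import random


def make_splits(images, train_sizes=(100, 200, 300, 400, 500, 1000),
                test_sizes=(500, 1000), seed=0):
    """Randomly split one gender's images into training and test sets.

    Assumptions: training sets are nested prefixes of a single shuffled
    list, and test images never appear in any training set.
    """
    pool = list(images)
    max_train, max_test = max(train_sizes), max(test_sizes)
    if len(pool) < max_train + max_test:
        raise ValueError("not enough images for the requested split sizes")
    random.Random(seed).shuffle(pool)
    train_pool = pool[:max_train]
    test_pool = pool[max_train:max_train + max_test]
    train = {n: train_pool[:n] for n in train_sizes}
    test = {n: test_pool[:n] for n in test_sizes}
    return train, test
```

Fixing the seed makes the split reproducible, and calling the function once per gender keeps the male and female subsets the same size.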

Randomly selected samples from the female and male training datasets are shown in Figure 2.

Figure 2.

Female and male training dataset.

2.2. Face Detecting and Face Embedding Methods

Embedding is the process of transforming an object, such as an image or other data, into a vector in a space of a given dimension. This transformation aims to produce a more meaningful representation while preserving the features of the data. Face embedding represents the features of a face image in a lower-dimensional vector space and is used in tasks such as face recognition, face similarity comparison, and face classification. Extracting a face representation vector usually involves five successive steps. The first step is to detect faces in a given image, usually with specialized face detection algorithms or deep learning models. Important face detection models include OpenCV [54], SSD [55], MtCNN [56], RetinaFace [57,58], Faster MtCNN [56], dlib [59], MediaPipe [60], YuNet [61], Yolo [62], and CenterFace [63]. Second, the positions of the detected faces are determined and, if necessary, corrected with geometric transformations to ensure accurate face alignment. Third, the key points or patterns that characterize the face are extracted, usually with convolutional neural network (CNN)-based deep learning models. Fourth, this information is passed to an embedding model, which maps the face image to a lower-dimensional vector that encodes its distinctive features. Finally, the resulting face representations are compared using similarity metrics or machine learning algorithms, which makes it possible to measure the similarity between faces and perform recognition or classification.
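The final comparison step is usually done with cosine similarity or Euclidean distance. A minimal NumPy sketch of both follows (function names are illustrative). For L2-normalized vectors the squared Euclidean distance equals 2 - 2·cos θ, so the two metrics rank pairs of faces identically.

```python
import numpy as np


def l2_normalize(v):
    """Scale a vector to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def cosine_similarity(a, b):
    """Cosine of the angle between two embeddings (1 = same direction)."""
    return float(np.dot(l2_normalize(a), l2_normalize(b)))


def euclidean_distance(a, b):
    """Straight-line distance between two embeddings (0 = identical)."""
    return float(np.linalg.norm(np.asarray(a, float) - np.asarray(b, float)))
```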

Various techniques exist for generating face representations, and many studies have used deep neural networks for this purpose. Prominent models include FaceNet [64] (both 128-D and 512-D), dlib [59], VGG-Face [65], OpenFace [66], DeepFace [67], DeepID [68], ArcFace [69], SFace [70], and GhostFaceNet [71].

In the context of this study, the face detection models RetinaFace and CenterFace were utilized, while dlib, ArcFace, and FaceNet512 served as face representation models.

RetinaFace [57] is a deep learning model for face detection and facial landmark localization. It builds on the RetinaNet architecture, which has proven successful in object detection, and adapts it to face detection. By leveraging a Feature Pyramid Network (FPN) and a context module, it detects both small and large faces with high accuracy. Beyond detecting faces, it also predicts additional information, such as five facial landmarks (the two eye centers, the nose tip, and the two mouth corners) and pixel-wise 3D face shape information. The model uses a multi-task learning strategy for precise localization of facial features. It was trained on large datasets such as WIDER FACE and optimized to detect faces at multiple scales and under varying conditions. Its ability to support real-time applications has made it a widely used reference model in face detection, with applications including face recognition, face tracking, and security systems.
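The eye landmarks predicted by RetinaFace are what make the alignment step possible. One common way to correct in-plane rotation, sketched below as an assumption rather than the exact procedure used inside RetinaFace or DeepFace, is to measure the angle of the line joining the two eye centers and rotate about their midpoint until the eyes are level. The helper names are hypothetical.

```python
import math


def roll_angle_deg(left_eye, right_eye):
    """Angle, in degrees, of the line from the left to the right eye center,
    measured in the landmarks' own coordinate system."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def rotate_point(point, center, angle_deg):
    """Rotate `point` about `center` by `angle_deg` using the 2D rotation matrix."""
    t = math.radians(angle_deg)
    x, y = point[0] - center[0], point[1] - center[1]
    return (center[0] + x * math.cos(t) - y * math.sin(t),
            center[1] + x * math.sin(t) + y * math.cos(t))


def level_eyes(left_eye, right_eye):
    """Rotate both eye landmarks about their midpoint so they end up on one row."""
    angle = roll_angle_deg(left_eye, right_eye)
    mid = ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)
    return rotate_point(left_eye, mid, -angle), rotate_point(right_eye, mid, -angle)
```

In practice the same rotation would be applied to the image itself (for example with an affine warp) before the face is cropped and passed to the embedding model.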

RetinaNet [58] is an object detection architecture with two main components: a Feature Pyramid Network (FPN), which combines feature maps of different resolutions to detect both small and large objects, and two separate task-specific subnetworks. The classification subnetwork estimates the class probabilities of each object, and the regression subnetwork estimates the object’s bounding-box coordinates. RetinaNet’s key innovation is the Focal Loss, which addresses the extreme imbalance between foreground and background examples by down-weighting well-classified examples so that training concentrates on hard, difficult-to-detect ones. This design provides both high accuracy and high speed, making RetinaNet a popular object detection model.
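The focal loss is FL(p_t) = -α_t (1 - p_t)^γ log(p_t), where p_t is the predicted probability of the true class. A minimal NumPy sketch of the binary case follows; α = 0.25 and γ = 2 are the defaults reported in the RetinaNet paper, and setting γ = 0 reduces the loss to α-weighted cross-entropy.

```python
import numpy as np


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Binary focal loss averaged over samples.

    p: predicted probability of the positive class; y: labels in {0, 1}.
    """
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    y = np.asarray(y, dtype=float)
    p_t = np.where(y == 1, p, 1.0 - p)              # probability of the true class
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)  # class-balancing weight
    # (1 - p_t)^gamma shrinks the loss of well-classified (easy) examples
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))
```

With p = 0.95 for a positive example, the modulating factor (1 - 0.95)² = 0.0025 cuts that example’s loss by a factor of 400, which is how easy background anchors stop dominating training.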

CenterFace [63] is a lightweight, efficient deep learning model for face detection and landmark detection. It uses an anchor-free method that directly estimates the center points and sizes of faces, eliminating the anchor design and tuning required by many other detectors. CenterFace uses a multi-task learning strategy that combines face detection and keypoint extraction, with keypoint heatmap regression and box regression mechanisms that predict face positions and landmarks with high accuracy. It is designed for real-time applications and runs quickly even on low-power devices. It has been trained on datasets such as WIDER FACE and AFLW, which gives it broad generalization capability. Owing to its speed and accuracy, it is widely used for face detection, tracking, and analysis.
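Because CenterFace is anchor-free, faces are read off as peaks of a predicted center heatmap rather than being matched to anchors. The sketch below shows only that generic peak-extraction step, a 3 × 3 local-maximum test in the style of CenterNet-like detectors, applied to an already computed heatmap. CenterFace’s actual output decoding, including its scale and offset maps, is not reproduced, and the function name and threshold are assumptions.

```python
import numpy as np


def heatmap_peaks(heatmap, threshold=0.5):
    """Return (row, col, score) for cells that exceed `threshold` and are the
    maximum of their 3x3 neighbourhood (a simple stand-in for max-pool NMS)."""
    h = np.asarray(heatmap, dtype=float)
    # Pad with -inf so border cells are compared only with real neighbours
    padded = np.pad(h, 1, mode="constant", constant_values=-np.inf)
    rows, cols = h.shape
    peaks = []
    for r in range(rows):
        for c in range(cols):
            v = h[r, c]
            if v >= threshold and v == padded[r:r + 3, c:c + 3].max():
                peaks.append((r, c, float(v)))
    return peaks
```

Each peak gives one face center; in a full detector, the box size and landmarks would then be read from the other output maps at the same location.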

Dlib [59] is a library that provides a lightweight, high-performance face recognition and face embedding model. The model is based on the ResNet-34 architecture but has been redesigned as a lighter network with 29 layers by reducing the number of layers and filters, which lowers the computational cost and speeds up processing. Dlib produces a 128-dimensional embedding vector for each face. Training on the VGGFace and FaceScrub datasets improves the model’s ability to generalize across different face attributes. The dlib face embedding model is widely used for face verification, face matching, and face clustering, and its availability in both C++ and Python further facilitates its adoption.

ArcFace [69] is a deep learning model for face recognition and verification. Its most prominent feature is the Additive Angular Margin Loss (AAM-Loss), which adds an angular margin between each embedding and its class center, increasing the angular separation between classes and making the face representations more discriminative. The model is typically implemented with ResNet or other modern CNN architectures. During training, it computes the angle between each face representation and the class centers, with the objective of grouping faces of the same class more compactly while separating different classes more widely. ArcFace produces a 128-D or 512-D face representation vector, which yields high accuracy in tasks such as authentication and recognition. The model is typically trained on large-scale face datasets, including MS-Celeb-1M and VGGFace2, which gives it high generalization capability. Combining a modified softmax-based classification loss during training with L2-normalization, which projects the features onto a unit hypersphere, has been shown to substantially improve accuracy. These methodological details contribute to the model’s effectiveness in contemporary face recognition applications.
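For a normalized feature x and normalized class-center weights W, ArcFace replaces the target-class logit s·cos θ_y with s·cos(θ_y + m) and leaves the other logits as s·cos θ_j; the logits then go into a standard softmax cross-entropy. A minimal NumPy sketch follows, using the commonly cited defaults s = 64 and m = 0.5. It omits the numerical safeguards (for example, for θ_y + m > π) found in production implementations, and the function name is illustrative.

```python
import numpy as np


def arcface_logits(features, weights, labels, s=64.0, m=0.5):
    """Additive angular margin logits (ArcFace-style).

    features: (N, d) embeddings; weights: (d, C) class centres;
    labels: (N,) integer class ids. Returns (N, C) scaled logits.
    """
    x = np.asarray(features, dtype=float)
    w = np.asarray(weights, dtype=float)
    labels = np.asarray(labels, dtype=int)
    x = x / np.linalg.norm(x, axis=1, keepdims=True)   # unit-length features
    w = w / np.linalg.norm(w, axis=0, keepdims=True)   # unit-length class centres
    cos = np.clip(x @ w, -1.0, 1.0)
    theta = np.arccos(cos)
    idx = np.arange(len(labels))
    target = cos.copy()
    # Add the angular margin only to the angle of the true class
    target[idx, labels] = np.cos(theta[idx, labels] + m)
    return s * target
```

Because the margin makes the true-class logit smaller, the network must pull each embedding closer (in angle) to its own class center to keep the loss low, which is what tightens same-class clusters.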

FaceNet [64] is a deep learning model used for face recognition, face verification, and face clustering. It maps high-dimensional face images to compact, meaningful vectors, typically 128-dimensional embeddings, although 512-dimensional variants have also been developed. FaceNet’s success rests mainly on the Triplet Loss, which pulls the representations of the same person closer together while pushing the representations of different people further apart. The model is generally based on the Inception architecture and applies L2-normalization to project face representation vectors onto a unit hypersphere. The similarity between faces is then computed with metrics such as Euclidean distance or cosine similarity. FaceNet models have been trained on extensive datasets, including CASIA-WebFace, VGGFace2, and MS-Celeb-1M, which gives them substantial generalization capability. A notable benefit of FaceNet is that it operates directly on distances between faces, enabling verification and recognition without a classification layer. Owing to its high accuracy and wide range of applications, FaceNet is regarded as a fundamental component of contemporary face recognition systems.
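For an anchor a, a positive p (same person), and a negative n (different person), the triplet loss is max(‖f(a) - f(p)‖² - ‖f(a) - f(n)‖² + α, 0), computed on L2-normalized embeddings. A minimal NumPy sketch follows; α = 0.2 is the margin used in the FaceNet paper, triplet selection (mining) is not shown, and the function name is illustrative.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=0.2):
    """FaceNet-style triplet loss on L2-normalised embeddings, batch-averaged."""
    def norm(v):
        v = np.asarray(v, dtype=float)
        return v / np.linalg.norm(v, axis=-1, keepdims=True)

    a, p, n = norm(anchor), norm(positive), norm(negative)
    d_ap = np.sum((a - p) ** 2, axis=-1)  # squared anchor-positive distance
    d_an = np.sum((a - n) ** 2, axis=-1)  # squared anchor-negative distance
    return float(np.mean(np.maximum(d_ap - d_an + margin, 0.0)))
```

A triplet contributes zero loss once the negative is at least α farther from the anchor than the positive, so training effort goes only to triplets that still violate the margin.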

2.3. Average Neural Face Embeddings (ANFE) Model

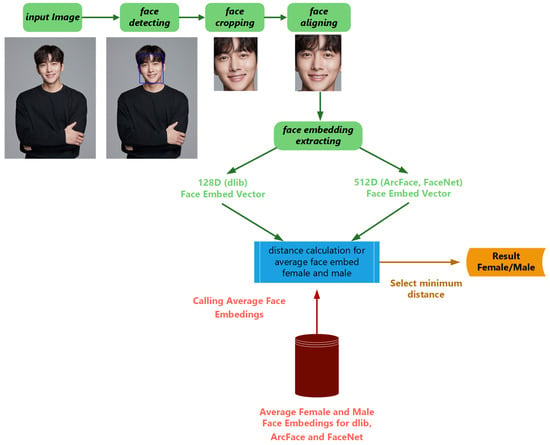

This paper proposes a novel methodology for gender detection that uses face representation vectors instead of conventional classifiers. The approach, called Average Neural Face Embeddings (ANFE), consists of the algorithmic steps outlined below:

- The training dataset is segmented into two distinct groups, designated as “male” and “female”.

- The faces in each image of the two groups in the training dataset are detected with the RetinaFace and CenterFace deep network models. (A series of experiments was conducted to improve detection accuracy, and the minimum size of the face region was set to 40 pixels.)

- Face representation vectors of the detected and aligned faces are extracted with the face recognition-based deep network models dlib, ArcFace, and FaceNet512: dlib produces 128-D vectors, while ArcFace and FaceNet512 produce 512-D vectors.

- Within each gender group, the extracted face representation vectors are summed and divided by the number of samples to obtain an average face representation vector for that group, which is saved as JSON data. The mathematical representation of this step is given in Equation (1) [72], where m is the number of samples (i.e., the number of people) and X_i is the face embedding (feature vector) extracted for the i-th person:

$$\bar{X} = \frac{1}{m}\sum_{i=1}^{m} X_i \qquad (1)$$
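Equation (1) is an element-wise mean over the m embeddings of one gender group. The minimal sketch below assumes the embeddings are plain Python lists of floats. The function name is illustrative, and the toy vectors are not data from the study.

```python
from typing import List, Sequence


def average_embedding(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Equation (1): element-wise mean of m equal-length face embeddings."""
    if not embeddings:
        raise ValueError("at least one embedding is required")
    dim = len(embeddings[0])
    if any(len(e) != dim for e in embeddings):
        raise ValueError("all embeddings must have the same dimension")
    m = len(embeddings)
    # Sum coordinate j over all m people, then divide by m.
    return [sum(e[j] for e in embeddings) / m for j in range(dim)]


# Toy 3-D vectors standing in for 128-D (dlib) or 512-D (ArcFace, FaceNet512) embeddings.
female_group = [[1.0, 2.0, 3.0], [3.0, 2.0, 1.0]]
print(average_embedding(female_group))  # [2.0, 2.0, 2.0]
```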

The following pseudocode describes how the average face embeddings used for gender classification are computed. A dataset of images grouped by gender was compiled, and face embeddings were extracted from each image with several recognition models (ArcFace, dlib, and FaceNet512). For each gender and model, the embeddings are accumulated, and average vectors are computed at fixed sample counts (after 100, 200, 300, 400, 500, and 1000 images). These averages are later used for model-based gender inference and are stored in JSON format for subsequent analysis. Algorithm 1 outlines the implementation used to generate the gender-specific average face embedding vectors.

Algorithm 1: Generation of gender-specific mean face embeddings

```
Input:  image dataset grouped by gender (female, male)
Output: averaged face embeddings for each gender and model

Initialize gender_embeds for each model in {ArcFace, Dlib, Facenet512}
For each gender in {female, male}:
    Load image list from dataset
    Shuffle image list randomly
    count ← 0
    For each image in image list:
        If image format is unsupported: continue
        For each model in {ArcFace, Dlib, Facenet512}:
            Detect face and extract embedding using DeepFace
            If one face is detected with confidence ≥ 0.8 and face_size ≥ 40:
                Add embedding to gender_embeds[model][gender]
                Update sum of embeddings
        count ← count + 1
        If count ∈ {100, 200, 300, 400, 500, 1000}:
            For each model:
                Compute average embedding: avg ← sum / count
                Store avg in gender_embeds[model][gender][count]
Convert all embeddings to list format
Save gender_embeds to JSON file
```
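Algorithm 1 can be sketched in Python as follows. Each recognition model is assumed to be wrapped in a hypothetical extractor callable that returns (embedding, confidence, face size) tuples for the faces found in an image. In the authors' pipeline this step goes through DeepFace (such a wrapper could, for example, call DeepFace's `represent` function), but that wiring is not shown. The accepted file extensions are also an assumption. The pseudocode keeps a single image counter; this sketch instead keeps one counter per model, so each average is divided by the number of embeddings actually accumulated for that model.

```python
import json
import random
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical detection record: (embedding, detector confidence, face size in pixels).
Detection = Tuple[List[float], float, int]
Extractor = Callable[[str], List[Detection]]

CHECKPOINTS = (100, 200, 300, 400, 500, 1000)     # ANFE model ids from Algorithm 1
SUPPORTED_EXTENSIONS = (".jpg", ".jpeg", ".png")  # assumption: accepted image formats
MIN_CONFIDENCE, MIN_FACE_SIZE = 0.8, 40


def accepted_embedding(detections: List[Detection]) -> Optional[List[float]]:
    """Return the embedding only if exactly one confident, large-enough face was found."""
    if len(detections) != 1:
        return None
    embedding, confidence, face_size = detections[0]
    if confidence >= MIN_CONFIDENCE and face_size >= MIN_FACE_SIZE:
        return embedding
    return None


def build_anfe(images_by_gender: Dict[str, List[str]],
               extractors: Dict[str, Extractor],
               checkpoints: Sequence[int] = CHECKPOINTS,
               seed: int = 0) -> Dict[str, Dict[str, Dict[int, List[float]]]]:
    """Accumulate embeddings per model and gender; snapshot the mean at each checkpoint."""
    rng = random.Random(seed)
    anfe = {model: {gender: {} for gender in images_by_gender} for model in extractors}
    for gender, images in images_by_gender.items():
        images = list(images)
        rng.shuffle(images)
        sums: Dict[str, Optional[List[float]]] = {model: None for model in extractors}
        counts = {model: 0 for model in extractors}
        for image in images:
            if not image.lower().endswith(SUPPORTED_EXTENSIONS):
                continue  # unsupported image format
            for model, extract in extractors.items():
                embedding = accepted_embedding(extract(image))
                if embedding is None:
                    continue
                running = sums[model] or [0.0] * len(embedding)
                sums[model] = [s + x for s, x in zip(running, embedding)]
                counts[model] += 1
                n = counts[model]
                if n in checkpoints:
                    anfe[model][gender][n] = [s / n for s in sums[model]]
    return anfe


def save_anfe(anfe: dict, path: str) -> None:
    """Write the averaged vectors to JSON (integer checkpoint keys become strings)."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(anfe, fh)
```

Keeping running sums rather than all embeddings means each checkpoint average costs one pass over a single vector, regardless of how many images have been processed.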

The mean face representation vectors obtained from Equation (1) serve as gender prototypes, and the gender of each subject in the test data is determined from them. The tests for assessing the effectiveness of the resulting ANFE vectors are prepared as follows:

- The test dataset is segmented into two distinct groups, designated as “male” and “female.”

- Faces in each image of both groups in the test dataset are detected with the RetinaFace and CenterFace deep network models.

- Face representation vectors of the detected and aligned faces are then extracted with the face recognition-based deep network models dlib, ArcFace, and FaceNet512: dlib produces 128-D vectors, while ArcFace and FaceNet512 produce 512-D vectors.

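- For each face, the extracted face representation vector and the average male and female vectors are L2-normalized, and the Euclidean distance from the face vector to each average vector is computed. The gender whose average vector lies at the minimum distance is taken as the predicted gender. The Euclidean distance is given in Equation (2) [72], where X is the test embedding, \bar{X}_g is the average vector of gender g ∈ {female, male}, and n is the vector dimension (128 for dlib, 512 for ArcFace and FaceNet512):

$$d\left(X, \bar{X}_g\right) = \sqrt{\sum_{j=1}^{n}\left(X_j - \bar{X}_{g,j}\right)^2} \qquad (2)$$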
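The nearest-average decision rule can be sketched in a few lines of Python. The names are illustrative. The distance follows what DeepFace calls the `euclidean_l2` metric: both vectors are normalized first, then the Euclidean distance is taken. The toy 2-D averages are not data from the study.

```python
import math
from typing import Dict, List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def euclidean_l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between the L2-normalized versions of a and b."""
    a_n, b_n = l2_normalize(a), l2_normalize(b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a_n, b_n)))


def predict_gender(embedding: Sequence[float],
                   anfe: Dict[str, Sequence[float]]) -> str:
    """Return the gender label whose average vector is nearest to the embedding."""
    return min(anfe, key=lambda label: euclidean_l2(embedding, anfe[label]))


# Toy 2-D averages; real ANFE vectors are 128-D or 512-D.
anfe = {"female": [1.0, 0.0], "male": [0.0, 1.0]}
print(predict_gender([0.8, 0.3], anfe))  # female
```

Because both vectors have unit length, the squared distance equals 2 − 2·cos θ. Ranking by this distance therefore yields the same prediction as ranking by cosine similarity.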

The pseudocode below describes gender classification with the precomputed average face embeddings for each gender and model. For each test image, face detection is first attempted with several detector backends, after which embeddings are extracted with the three recognition models. The gender is then predicted by comparing the image's embedding with the average male and female embeddings using the Euclidean-L2 distance. Each prediction is recorded together with whether it is correct, for use in performance evaluation. Algorithm 2 shows the implementation used to evaluate the gender-specific average face embedding model.

Algorithm 2: Gender-specific mean face representation vector model test

```
Initialize gender embedding results dictionary for each model: ArcFace, Dlib, Facenet512
Load average gender embeddings from previously computed data:
    For each model in [ArcFace, Dlib, Facenet512]:
        Load average embeddings for female and male at various sample sizes
        (e.g., 100, 200, 300, 400, 500, 1000)
For each gender in [female, male]:
    Retrieve image paths from test dataset
    Randomly shuffle image list
    For each image in the list (up to 500 samples):
        For each face detector in preferred list:
            Detect face in the image using the current detector
            If one face is detected with confidence ≥ 0.8 and face_size ≥ 40:
                Extract face embedding using the specified recognition model
                Store image-level info (embedding, bounding box, detection score, etc.)
                For each sample size (100–1000):
                    Calculate Euclidean-L2 distances to average female and male embeddings
                    Predict gender based on closer distance
                    Record prediction outcome and whether it matches ground truth
                Break face detection loop
Save the aggregated gender prediction results to a JSON file
```
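The evaluation loop of Algorithm 2 can be sketched as below. Each detector is a hypothetical callable that already returns the embedding from the chosen recognition model. How detection and embedding are connected to RetinaFace, CenterFace, and the recognition models is not shown. Scoring only images with an accepted face, and reporting accuracy per gender, are assumptions of this sketch.

```python
import math
import random
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical detector output: (embedding from the chosen recognition model,
# detection confidence, face size in pixels) for every face found in the image.
Detection = Tuple[List[float], float, int]
Detector = Callable[[str], List[Detection]]


def euclidean_l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between the L2-normalized versions of a and b."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return math.sqrt(sum((x / na - y / nb) ** 2 for x, y in zip(a, b)))


def first_valid_embedding(image: str, detectors: Sequence[Detector]) -> Optional[List[float]]:
    """Try detectors in order of preference and stop at the first acceptable face."""
    for detect in detectors:
        detections = detect(image)
        if len(detections) == 1:
            embedding, confidence, face_size = detections[0]
            if confidence >= 0.8 and face_size >= 40:
                return embedding  # the 'break face detection loop' step
    return None


def evaluate(test_images: Dict[str, List[str]],
             anfe_by_size: Dict[int, Dict[str, List[float]]],
             detectors: Sequence[Detector],
             max_per_gender: int = 500,
             seed: int = 0) -> Dict[int, Dict[str, float]]:
    """Per-gender accuracy of every ANFE model id (number of averaged samples)."""
    rng = random.Random(seed)
    correct = {size: {g: 0 for g in test_images} for size in anfe_by_size}
    evaluated = {g: 0 for g in test_images}
    for gender, images in test_images.items():
        images = list(images)
        rng.shuffle(images)
        for image in images[:max_per_gender]:
            embedding = first_valid_embedding(image, detectors)
            if embedding is None:
                continue  # no acceptable face: the image is not scored
            evaluated[gender] += 1
            for size, anfe in anfe_by_size.items():
                predicted = min(anfe, key=lambda g: euclidean_l2(embedding, anfe[g]))
                correct[size][gender] += int(predicted == gender)
    return {size: {g: (correct[size][g] / evaluated[g] if evaluated[g] else 0.0)
                   for g in test_images}
            for size in anfe_by_size}
```

Because every ANFE model id is scored against the same extracted embedding, detection and embedding run once per image no matter how many sample sizes are compared.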

In summary, the proposed work uses Python libraries and models to extract facial representations. The objective is to predict gender directly from face representation vectors rather than with machine learning classifiers. To this end, two separate datasets, male and female, were prepared. The average gender representations of the male and female datasets were then calculated for different sample sizes and stored as JSON data. The detailed processing steps of the model are described in the original ANFE study [72]. Unlike that study, the present work also builds ANFE models from the 512-D ArcFace and FaceNet512 face embeddings. The face embeddings of the subjects in the test dataset, which was prepared in a controlled manner, were obtained with the dlib, ArcFace, and FaceNet512 models, and their Euclidean distances to the ANFE vectors were calculated. The gender label with the minimum distance was taken as the predicted label. The working principle of the proposed system is shown in Figure 3.

Figure 3.

Gender estimation with average face representation vector.

3. Experimental Results

Testing was conducted on a Lenovo ThinkPad X1 Carbon (6th generation) laptop (Lenovo Group Limited, Beijing, China) running 64-bit Windows 10, with an Intel Core i7-8550U CPU @ 1.80 GHz (1.99 GHz) and 16 GB of RAM. RetinaFace and CenterFace were used for face detection, and dlib, FaceNet512, and ArcFace were used to obtain face representation vectors. Testing was performed in two phases, and the results were stored as JSON data. The first phase aimed to identify the most suitable ANFE (Average Neural Face Embeddings) gender vectors among those obtained with different numbers of samples. The ANFE vectors of each model were saved in a separate JSON file containing the mean vectors for both men and women.

Table 2 shows the results of the ANFE gender vectors obtained with dlib on the 500- and 1K-sample test sets.

Table 2.

Dlib ANFE test results.

Table 3 shows the results of the ANFE gender vectors obtained with ArcFace on the 500- and 1K-sample test sets.

Table 3.

ArcFace ANFE test results.

Table 4 shows the results of the ANFE gender vectors obtained with the FaceNet512 model on the 1K-sample test set.

Table 4.

FaceNet512 ANFE test results.

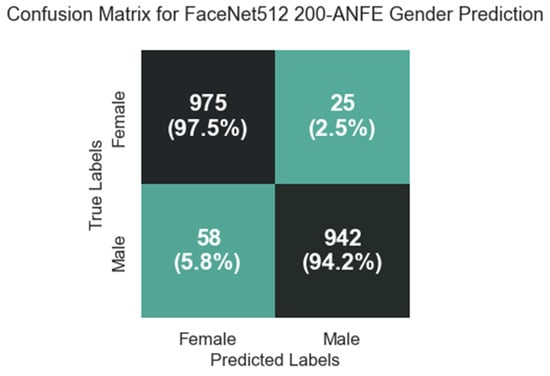

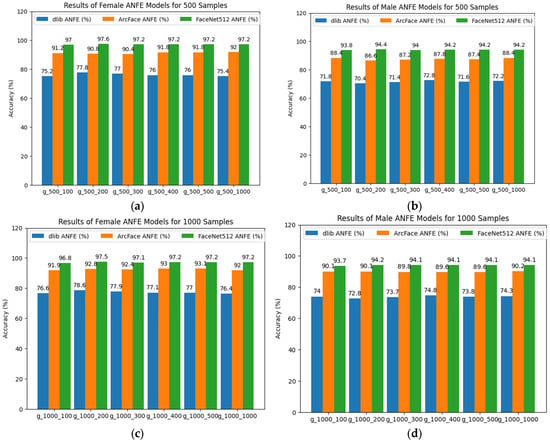

Tables 2 and 3 show that the accuracy of dlib ranged from 75% to 78% for women and from 70% to 74% for men, whereas ArcFace reached 90% to 93% for women and 86% to 90% for men. For the ANFE gender vectors built with ArcFace, the best-performing ANFE model ID (the number of averaged samples) is 500 for women and 1K for men; for dlib, it is 200 for women and 400 for men. All results of the ANFE gender models built with FaceNet512 are given in Table 4, and they are better than those of dlib and ArcFace. For FaceNet512, the ANFE gender vectors with model ID 200 performed best for both women and men. Figure 4 shows the confusion matrix of the FaceNet512 200-ANFE gender vector.

Figure 4.

Confusion matrix of FaceNet512 200-ANFE.

According to Figure 4, the FaceNet512 200-ANFE gender vector model yields 975 true positives (TP), 25 false negatives (FN), 58 false positives (FP), and 942 true negatives (TN). Taking female as the positive class, this corresponds to 97.5% accuracy for women and 94.2% for men. Because the tests indicated that no further ANFE gender vector models were needed, model generation stopped at the 1K ANFE gender vector.

ArcFace has become the prevailing architecture in contemporary face recognition competitions, and its training on extensive datasets gives it high accuracy across varied poses, age differences, and large numbers of identities. Nevertheless, FaceNet512 performed better in this study. A likely reason is that FaceNet512 is predominantly trained on balanced and diverse datasets such as VGGFace2, which consist largely of well-lit, frontal, posed faces of people of European descent. The subjects in this study's dataset are likewise typically frontal and well lit, which may explain why FaceNet512 outperformed the other two models here.

The results of the analysis of the ANFE gender vectors are summarized in Figure 5.

Figure 5.

Results of the ANFE gender model analysis. (a) Variation in the accuracy of the ANFE female vector on 500 samples; (b) variation in the accuracy of the ANFE male vector on 500 samples; (c) variation in the accuracy of the ANFE female vector on 1000 samples; (d) variation in the accuracy of the ANFE male vector on 1000 samples.

4. Discussion

A test dataset of 1000 women and 1000 men was prepared, and several gender models other than the ANFE (Average Neural Face Embeddings) gender vector model were evaluated on it. One of them is the gender model of DeepFace [73], which is built on VGG-Face using transfer learning; this pre-trained model was evaluated on the dataset. On the 1K female dataset, the DeepFace gender model classified 887 individuals correctly and 113 incorrectly, a success rate of 88.70%. On the 1K male dataset, it classified 968 correctly and 32 incorrectly, a success rate of 96.80%. In addition to the VGG-Face gender model, the Small-VGG-Face16 [74], opencv-caffemodel (Gender-Caffemodel) [75], and imdb-tensorflow [76] gender models were also evaluated. Table 5 presents the metric scores of the two ANFE gender vector models and the other gender detection models.

Table 5.

Accuracy metrics of the models.

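The study used precision, recall, F1 score, and accuracy to evaluate the performance of the models. These metrics are defined in Equations (3)–(6):

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (3)$$

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (4)$$

$$F1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (5)$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (6)$$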

- True Positive (TP): samples that the model predicts as positive and that are actually positive.

- True Negative (TN): samples that the model predicts as negative and that are actually negative.

- False Positive (FP): samples that the model predicts as positive but that are actually negative.

- False Negative (FN): samples that the model predicts as negative but that are actually positive.
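The metric definitions can be made concrete with the FaceNet512 200-ANFE confusion matrix counts reported in Figure 4 (TP = 975, FN = 25, FP = 58, TN = 942), treating female as the positive class. The class and method names below are illustrative. The printed values are computed here from those counts, not copied from Table 5.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Binary confusion matrix; here the positive class is 'female'."""
    tp: int
    fn: int
    fp: int
    tn: int

    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    def recall(self) -> float:
        return self.tp / (self.tp + self.fn)

    def f1(self) -> float:
        p, r = self.precision(), self.recall()
        return 2 * p * r / (p + r)

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.tn + self.fp + self.fn)

    def specificity(self) -> float:
        """Share of negatives (here: men) classified correctly."""
        return self.tn / (self.tn + self.fp)


# Counts reported for FaceNet512 200-ANFE in Figure 4.
cm = ConfusionMatrix(tp=975, fn=25, fp=58, tn=942)
print(f"precision={cm.precision():.4f} recall={cm.recall():.4f} "
      f"f1={cm.f1():.4f} accuracy={cm.accuracy():.4f}")
# precision=0.9439 recall=0.9750 f1=0.9592 accuracy=0.9585
```

Recall (0.975) and specificity (0.942) are the per-gender accuracies for women and men, which match the 97.5% and 94.2% reported for this model.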

A comparison of the deep learning-based models used in the tests is given in Table 6.

Table 6.

Comparison of gender recognition models.

Table 7 shows the average processing time of the DNN and ANFE models for predicting the gender of a single face image.

Table 7.

Average processing performance of the models for a single face image.

VGG-Face achieves high accuracy with its 16-layer CNN architecture and softmax-based classification, but it depends on large training datasets and is computationally intensive. In contrast, FaceNet512 200-ANFE needs no classifier because it relies on averaged 512-dimensional vectors. This allows it both to adapt to the diversity of the Asian dataset and to reduce processing time considerably (Table 7).

5. Conclusions

In this study, the ANFE (Average Neural Face Embeddings) method was used for the gender identification of East Asian individuals, as an alternative to the conventional classification methods typically used for gender recognition. A previous study had attributed its shortcomings to insufficient data; the present tests support that conclusion by showing that ANFE gender vectors are effective when sufficient data are available.

This study used deep learning models that generate face representation vectors, namely dlib, ArcFace, and FaceNet512, for gender detection. The experiments showed that FaceNet512 achieved the best performance.

The study also found that open-source datasets do not adequately represent individuals from East Asia. To address this limitation, a new dataset of well-known East Asian individuals was created, and existing gender detection models were evaluated on it.

Future work will analyze in detail the effectiveness of ANFE models for race detection.

Author Contributions

Conceptualization, S.M. and G.A.; methodology, S.M.; software, S.M.; validation, S.M. and G.A.; formal analysis, S.M.; investigation, S.M.; resources, S.M. and G.A.; data curation, S.M.; writing—original draft preparation, S.M.; writing—review and editing, S.M.; visualization, S.M.; supervision, G.A.; project administration, G.A.; funding acquisition, G.A. All authors have read and agreed to the published version of the manuscript.

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Institutional Review Board Statement

All data utilized in the study were obtained from “https://asianwiki.com”, an open-source movie-sharing system.

Informed Consent Statement

All data utilized in the study were obtained from “https://asianwiki.com”, an open-source movie-sharing system.

Data Availability Statement

The data will be shared via Google Drive for academic research.

Acknowledgments

This study was supported by the Presidency of the Turkish Defense Industry.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Gupta, S.K.; Nain, N. Review: Single attribute and multi attribute facial gender and age estimation. Multimed. Tools Appl. 2023, 82, 1289–1311.

2. Dubey, A.K.; Jain, V. A review of face recognition methods using deep learning network. J. Inf. Optim. Sci. 2019, 40, 547–558.

3. Pranoto, H.; Heryadi, Y.; Warnars, H.L.H.S.; Budiharto, W. Recent Generative Adversarial Approach in Face Aging and Dataset Review. IEEE Access 2022, 10, 28693–28716.

4. Fu, Y.; Hospedales, T.M.; Xiang, T.; Yao, Y.; Gong, S. Interestingness prediction by robust learning to rank. In Computer Vision—ECCV 2014; Springer: Cham, Switzerland, 2014.

5. Deb, D.; Nain, N.; Jain, A.K. Longitudinal study of child face recognition. In Proceedings of the 2018 International Conference on Biometrics (ICB), Gold Coast, QLD, Australia, 20–23 February 2018; pp. 225–232.

6. Fu, Y.; Huang, T.S. Human age estimation with regression on discriminative aging manifold. IEEE Trans. Multimed. 2008, 10, 578–584.

7. Ricanek, K.; Tesafaye, T. Morph: A longitudinal image database of normal adult age-progression. In Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition (FGR06), Southampton, UK, 10–12 April 2006; pp. 341–345.

8. Somanath, G.; Rohith, M.V.; Kambhamettu, C. Vadana: A dense dataset for facial image analysis. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 2175–2182.

9. Chen, B.-C.; Chen, C.-S.; Hsu, W.H. Face recognition and retrieval using cross-age reference coding with cross-age celebrity dataset. IEEE Trans. Multimed. 2015, 17, 804–815.

10. Phillips, P.J.; Moon, H.; Rizvi, S.A.; Rauss, P.J. The feret evaluation methodology for face-recognition algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1090–1104.

11. Huang, G.B.; Ramesh, M.; Berg, T.; Learned-Miller, E. Labeled Faces in the Wild: A Database for Studying Face Recognition in Unconstrained Environments; Tech. Rep. 07-49; University of Massachusetts: Amherst, MA, USA, 2007.

12. Rothe, R.; Timofte, R.; Van Gool, L. Deep expectation of real and apparent age from a single image without facial landmarks. Int. J. Comput. Vis. 2018, 126, 144–157.

13. Escalera, S.; Fabian, J.; Pardo, P.; Baró, X.; Gonzalez, J.; Escalante, H.J.; Misevic, D.; Steiner, U.; Guyon, I. Chalearn looking at people 2015: Apparent age and cultural event recognition datasets and results. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 1–9.

14. Eidinger, E.; Enbar, R.; Hassner, T. Age and gender estimation of unfiltered faces. IEEE Trans. Inf. Forensics Secur. 2014, 9, 2170–2179.

15. Cottrell, G.W.; Metcalfe, J. Empath: Face, emotion, and gender recognition using holons. In Advances in Neural Information Processing Systems; Morgan Kaufmann Publishers: San Mateo, CA, USA, 1991; pp. 564–571.

16. Gutta, S.; Huang, J.R.J.; Jonathon, P.; Wechsler, H. Mixture of experts for classification of gender, ethnic origin, and pose of human faces. IEEE Trans. Neural Netw. 2000, 11, 948–960.

17. Moghaddam, B.; Yang, M.-H. Learning gender with support faces. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 707–711.

18. Shakhnarovich, G.; Viola, P.A.; Moghaddam, B. A unified learning framework for real time face detection and classification. In Proceedings of the Fifth IEEE International Conference on Automatic Face Gesture Recognition, Washington, DC, USA, 21 May 2002; pp. 16–23.

19. Jain, A.; Huang, J. Integrating independent components and linear discriminant analysis for gender classification. In Proceedings of the Sixth IEEE International Conference on Automatic Face and Gesture Recognition, Seoul, Republic of Korea, 19 May 2004; pp. 159–163.

20. Khan, A.; Majid, A.; Mirza, A.M. Combination and optimization of classifiers in gender classification using genetic programming. Int. J. Knowl.-Based Intell. Eng. Syst. 2005, 9, 1–11.

21. Kim, H.-C.; Kim, D.; Ghahramani, Z.; Bang, S.Y. Appearance-based gender classification with gaussian processes. Pattern Recognit. Lett. 2006, 27, 618–626.

22. Baluja, S.; Rowley, H.A. Boosting sex identification performance. Int. J. Comput. Vis. 2007, 71, 111–119.

23. Yang, Z.; Ai, H. Demographic classification with local binary patterns. In Proceedings of the International Conference on Biometrics, Seoul, Republic of Korea, 27–29 August 2007; pp. 464–473.

24. Leng, X.; Wang, Y. Improving generalization for gender classification. In Proceedings of the 2008 15th IEEE International Conference on Image Processing, San Diego, CA, USA, 12–15 October 2008; pp. 1656–1659.

25. Mäkinen, E.; Raisamo, R. An experimental comparison of gender classification methods. Pattern Recognit. Lett. 2008, 29, 1544–1556.

26. Li, Z.; Zhou, X.; Huang, T.S. Spatial gaussian mixture model for gender recognition. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 45–48.

27. Lu, L.; Shi, P. A novel fusion-based method for expression-invariant gender classification. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 1065–1068.

28. Wang, J.-G.; Li, J.; Lee, C.Y.; Yau, W.-Y. Dense sift and gabor descriptors-based face representation with applications to gender recognition. In Proceedings of the 2010 11th International Conference on Control Automation Robotics & Vision, Singapore, 7–10 December 2010; pp. 1860–1864.

29. Alexandre, L.A. Gender recognition: A multiscale decision fusion approach. Pattern Recognit. Lett. 2010, 31, 1422–1427.

30. Guo, G. Human age estimation and sex classification. In Video Analytics for Business Intelligence; Springer: Berlin/Heidelberg, Germany, 2012; pp. 101–131.

31. Alamri, T.; Hussain, M.; Aboalsamh, H.; Muhammad, G.; Bebis, G.; Mirza, A.M. Category specific face recognition based on gender. In Proceedings of the 2013 International Conference on Information Science and Applications (ICISA), Pattaya, Thailand, 24–26 June 2013; pp. 1–4.

32. Bissoon, T.; Viriri, S. Gender classification using face recognition. In Proceedings of the 2013 International Conference on Adaptive Science and Technology, Pretoria, South Africa, 25–27 November 2013; pp. 1–4.

33. Hassner, T.; Harel, S.; Paz, E.; Enbar, R. Effective face frontalization in unconstrained images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4295–4304.

34. Yildirim, M.E.; Ince, O.F.; Salman, Y.B.; Song, J.K.; Park, J.S.; Yoon, B.W. Gender recognition using hog with maximized inter-class difference. In Proceedings of the 11th International Joint Conference, VISIGRAPP (3: VISAPP), Rome, Italy, 27–29 February 2016; pp. 108–111.

35. Abbas, H.; Hicks, Y.; Marshall, D.; Zhurov, A.I.; Richmond, S. A 3d morphometric perspective for facial gender analysis and classification using geodesic path curvature features. Comput. Vis. Media 2018, 4, 17–32.

36. van de Wolfshaar, J.; Karaaba, M.F.; Wiering, M.A. Deep convolutional neural networks and support vector machines for gender recognition. In Proceedings of the 2015 IEEE Symposium Series on Computational Intelligence, Cape Town, South Africa, 7–10 December 2015; pp. 188–195.

37. Antipov, G.; Berrani, S.-A.; Dugelay, J.-L. Minimalistic cnn-based ensemble model for gender prediction from face images. Pattern Recognit. Lett. 2016, 70, 59–65.

38. Mansanet, J.; Albiol, A.; Paredes, R. Local deep neural networks for gender recognition. Pattern Recognit. Lett. 2016, 70, 80–86.

39. Jia, S.; Lansdall-Welfare, T.; Cristianini, N. Gender classification by deep learning on millions of weakly labelled images. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW), Barcelona, Spain, 12–15 December 2016; pp. 462–467.

40. Cirne, M.V.M.; Pedrini, H. Gender recognition from face images using a geometric descriptor. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 2006–2011.

41. Aslam, A.; Hussain, B.; Cetin, A.E.; Umar, A.I.; Ansari, R. Gender classification based on isolated facial features and foggy faces using jointly trained deep convolutional neural network. J. Electron. Imaging 2018, 27, 053023.

42. Simanjuntak, F.; Azzopardi, G. Fusion of CNN-and COSFIRE-based features with application to gender recognition from face images. In Proceedings of the Science and Information Conference, Las Vegas, NV, USA, 2–3 May 2019; pp. 444–458.

43. Afifi, M.; Abdelhamed, A. AFIF4: Deep gender classification based on AdaBoost-based fusion of isolated facial features and foggy faces. J. Vis. Commun. Image Represent. 2019, 62, 77–86.

44. D’Amelio, A.; Cuculo, V.; Bursic, S. Gender recognition in the wild with small sample size—A dictionary learning approach. In Proceedings of the International Symposium on Formal Methods, Porto, Portugal, 7–11 October 2019; pp. 162–169.

45. Moeini, H.; Mozaffari, S. Gender dictionary learning for gender classification. J. Vis. Commun. Image Represent. 2017, 42, 1–13.

46. Bekhet, S.; Alghamdi, A.M.; Taj-Eddin, I. Gender recognition from unconstrained selfie images: A convolutional neural network approach. Int. J. Electr. Comput. Eng. 2022, 12, 2066–2078.

47. Kim, Y.H.; Nam, S.H.; Hong, S.B.; Park, K.R. GRA-GAN: Generative adversarial network for image style transfer of Gender, Race, and age. Expert Syst. Appl. 2022, 198, 116792.

48. Raman, V.; ELKarazle, K.; Then, P. Artificially Generated Facial Images for Gender Classification Using Deep Learning. Comput. Syst. Sci. Eng. 2023, 44, 1341–1355.

49. Guo, G.; Mu, G. Simultaneous dimensionality reduction and human age estimation via kernel partial least squares regression. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 657–664.

50. Janahiraman, T.V.; Subramaniam, P. Gender classification based on Asian faces using deep learning. In Proceedings of the 2019 IEEE 9th International Conference on System Engineering and Technology (ICSET), Shah Alam, Malaysia, 7 October 2019; pp. 84–89.

51. Dey, P.; Mahmud, T.; Chowdhury, M.S.; Hossain, M.S.; Andersson, K. Human age and gender prediction from facial images using deep learning methods. Procedia Comput. Sci. 2024, 238, 314–321.

52. Tahyudin, G.G.; Sulistiyo, M.D.; Arzaki, M.; Rachmawati, E. Classifying Gender Based on Face Images Using Vision Transformer. JOIV Int. J. Inform. Vis. 2024, 8, 18–25.

53. Ramesha, K.B.R.K.; Raja, K.B.; Venugopal, K.R.; Patnaik, L.M. Feature extraction based face recognition, gender and age classification. Int. J. Comput. Sci. Eng. 2010, 2, 14–23.

54. Bradski, G.; Kaehler, A. Learning OpenCV: Computer Vision with the OpenCV Library; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2008.

55. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

56. Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.

57. Deng, J.; Guo, J.; Zhou, Y.; Yu, J.; Kotsia, I.; Zafeiriou, S. RetinaFace: Single-stage dense face localisation in the wild. arXiv 2019, arXiv:1905.00641.

58. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

59. King, D.E. Dlib-ml: A machine learning toolkit. J. Mach. Learn. Res. 2009, 10, 1755–1758.

60. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Grundmann, M. MediaPipe: A framework for building perception pipelines. arXiv 2019, arXiv:1906.08172.

61. Wu, W.; Peng, H.; Yu, S. YuNet: A tiny millisecond-level face detector. Mach. Intell. Res. 2023, 20, 656–665.

62. YOLOv8 Face Detection. Available online: https://github.com/derronqi/yolov8-face/tree/main (accessed on 19 June 2025).

63. Xu, Y.; Yan, W.; Yang, G.; Luo, J.; Li, T.; He, J. CenterFace: Joint face detection and alignment using face as point. Sci. Program. 2020, 2020, 7845384.

64. Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

65. Parkhi, O.; Vedaldi, A.; Zisserman, A. Deep face recognition. In Proceedings of the British Machine Vision Conference (BMVC 2015), Swansea, UK, 7–10 September 2015; British Machine Vision Association: Durham, UK, 2015.

66. Amos, B.; Ludwiczuk, B.; Satyanarayanan, M. OpenFace: A general-purpose face recognition library with mobile applications. CMU Sch. Comput. Sci. 2016, 6, 20.

67. Taigman, Y.; Yang, M.; Ranzato, M.A.; Wolf, L. DeepFace: Closing the gap to human-level performance in face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1701–1708.

68. Sun, Y.; Liang, D.; Wang, X.; Tang, X. DeepID3: Face recognition with very deep neural networks. arXiv 2015, arXiv:1502.00873.

69. Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. ArcFace: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4690–4699.

70. Zhong, Y.; Deng, W.; Hu, J.; Zhao, D.; Li, X.; Wen, D. SFace: Sigmoid-constrained hypersphere loss for robust face recognition. IEEE Trans. Image Process. 2021, 30, 2587–2598.

71. Alansari, M.; Hay, O.A.; Javed, S.; Shoufan, A.; Zweiri, Y.; Werghi, N. GhostFaceNets: Lightweight face recognition model from cheap operations. IEEE Access 2023, 11, 35429–35446.

72. Makinist, S.; Ay, B.; Aydın, G. Average neural face embeddings for gender recognition. Avrupa Bilim Ve Teknol. Derg. 2020, 522–527.

73. Serengil, S.I. DeepFace. Available online: https://github.com/serengil/deepface (accessed on 19 June 2025).

74. Levi, G.; Hassner, T. Age and gender classification using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 34–42.

75. Available online: https://github.com/naheri/gender_detection/tree/main (accessed on 19 June 2025).

76. Available online: https://github.com/arunponnusamy/gender-detection-keras/tree/master (accessed on 19 June 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).