Abstract

To address existing problems in practical teaching in higher education, we construct an intelligent teaching recommendation model for a higher education practical discussion course based on naive Bayes machine learning and an improved k-NN data mining algorithm. First, we establish a naive Bayes machine learning algorithm to classify the students in the class accurately and group them on the basis of this classification. Then, relying on the student grouping, we use the matching features between the students’ interest vector and the practical topic vector to construct an intelligent teaching recommendation model based on an improved k-NN data mining algorithm, in which the optimal complete binary encoding tree for the discussion topic is modeled. Based on the encoding tree model, an improved k-NN recommendation model is established to match the student group interests and recommend discussion topics. The experimental results show that our proposed recommendation algorithm (PRA) can accurately recommend discussion topics for different student groups, match the interests of each group to the greatest extent, and improve the students’ enthusiasm for participating in practical discussions. Compared with the control algorithms, namely the user-based collaborative filtering recommendation algorithm (UCFA) and the item-based collaborative filtering recommendation algorithm (ICFA), the PRA achieves higher accuracy, recall rate, precision, and F1 value under both single-dataset and multiple-dataset experimental conditions and shows better recommendation performance and robustness.

1. Introduction

The goal of higher education is to cultivate innovative and applied talents [1]. It focuses on cultivating students’ interests and improving their practical abilities, encouraging students to transform their theoretical knowledge into practical skills [2]. Based on the training objectives of higher education, course design includes two parts: theoretical teaching and practical teaching [3]. Practical teaching focuses on cultivating and examining the students’ mastery of a certain skill [4]. Traditionally, the basic teaching mode of practical courses is “teacher transferring knowledge”, which means that the teachers design the teaching content while the students passively receive it [5]. The process of this teaching mode is relatively simple. The teachers firstly determine and analyze the theoretical course content, and then extract the skills and requirements involved in the course, determine the practical content based on the skills and requirements, and divide the practical content into several implementation steps, which will be completed by the student groups. At the end of the course, the entire practical teaching activity is summarized by the whole class [6]. This process mainly involves three steps: firstly, the design of the practical content; secondly, grouping students based on the practical topics; and thirdly, the implementation of the practical teaching [7]. Analyzing the design and implementation process of practical teaching in the traditional mode, it can be concluded that the teachers play a leading role in this process. The design of the practical topics, the student grouping, and the implementation and evaluation of practical teaching are all independently completed by the teachers. Students participate in the entire practical course, receive the practical content designed by the teachers, and complete the practical content [8]. This mode has the features of teacher control, prominent theme, and strong pertinence. 
It can effectively explore the core content of the theoretical course, transform the theoretical teaching content into practical content, guide students to complete the practice in order, and improve the students’ skills in a targeted manner [9].

In addition, there are some other commonly used teaching methods in practical courses, such as the scenario simulation method, the experimental operation method, the task-driven method, the game teaching method, the visiting teaching method, and so on. In the scenario simulation method, the teachers design the teaching scenarios, and the students are divided into groups to perform, identify, and solve problems [10]. In the experimental operation method, the teachers design the experimental content and process, and the students are divided into groups to complete the experiment. Students are required to perform hands-on operations to discover and solve problems during the experimental process [11]. In the task-driven method, the teachers design specific tasks, the students are divided into groups, and the teachers ask each group to complete different tasks to achieve the specified goals [12]. In the game teaching method, the teachers design games with simulated scenarios, in which the students are grouped together to participate and solve problems during the game process [13]. In the visiting teaching method, the teachers lead the students to visit the teaching site, in which the students are divided into groups to discuss and solve problems [14]. These practical teaching methods have their own features, but they all rely on three aspects: the teaching content design, the student grouping, and the practical teaching implementation. Based on the teaching objectives and contents, the three core elements of practical teaching are analyzed, and the following problems in the traditional practical teaching mode are summarized.

The design of practical content is based on the teachers’ teaching experience and their mastery of the teaching content. The practical topics are determined by their qualitative description. This approach lacks a quantitative method for practical topics, and there is no clear quantitative method to explore and visualize the core elements involved in the teaching content. Meanwhile, the design of the practical content lacks a quantitative standard and executable method to guide the teachers in exploring the practical elements, resulting in low accuracy in the design of practical topics.

Grouping students is based on the teachers’ experience and the students’ free matching, without strict grouping criteria or a scientifically quantified grouping method. The grouping process is highly random and casual, and the features and interests of group members vary greatly, resulting in low accuracy of grouping.

The implementation of the practical teaching lacks personalized interest mining or a method for matching and recommending practical content based on the interest mining results, resulting in a low degree of matching between the practical topics and the interests of each group’s members. This ultimately leads to a low quality of practical teaching and poor classroom teaching effectiveness.

In response to the above problems, the practical teaching mode needs to be optimized from three aspects: quantitative modeling of the core elements of the practical topics, a quantitative grouping algorithm model for the students, and a matching and recommendation algorithm model for the practical topics [15]. Firstly, it is necessary to determine the practical teaching topics based on the theoretical teaching content, explore the core element labels of the practical teaching topics, and construct a label quantification model. Secondly, based on the label quantification model, the student interest mining model and the algorithm model for the student grouping are constructed to classify the students accurately, so that the students within the same group have the closest interests. Thirdly, a practical topic recommendation algorithm is established on top of the student grouping model, with interest mining and matching as the core methods. It aims to accurately recommend the practical topics for each group; the teachers and students then make decisions based on the recommendation results [16]. According to the problem analysis and the modeling principle, we construct an intelligent teaching recommendation model for a higher education practical discussion course based on naive Bayes machine learning and an improved k-NN data mining algorithm. The main work includes the following aspects.

(1) We analyze the research background of “artificial intelligence plus education” and the current status and existing problems of practical teaching methods. In response to these problems, we present the advantages of the intelligent teaching recommendation model based on naive Bayes machine learning and an improved k-NN data mining algorithm.

(2) We construct the student grouping algorithm based on naive Bayes machine learning. Firstly, we establish the training set model for the naive Bayes machine learning algorithm and collect the training dataset from the previous classes. Secondly, we set up a naive Bayes machine learning model to group the students in a class, so that each group of students has the closest interests.

(3) We build a teaching recommendation model based on the improved k-NN data mining algorithm, building on the implemented class grouping. First, an optimal complete binary encoding tree for the discussion topic is constructed, and the feature attributes of the discussion topic are matched based on the interests of each group of students. Then, the binary encoding tree is established based on the spatial coordinate system of the discussion topic, and the recommendation model is established on the coordinate system.

(4) We design the experiments to validate the proposed algorithm, demonstrating its feasibility from three aspects as detailed in the following sections: “Results and Analysis on the Naive Bayes Grouping”, “Results and Analysis on the Proposed Teaching Recommendation Algorithm”, and “Results and Analysis of the Comparative Experiment”. Compared with the traditional collaborative filtering algorithms, the proposed algorithm has higher accuracy, recall rate, precision, and F1 value.

2. Related Works and the Advantages, Application Purpose, Formulated Requirements, and Constraints of the Proposed Model

2.1. Related Works

In the field of artificial intelligence development, the integration of intelligent algorithms into teaching practice is currently a hot research topic. The research on teaching recommendation algorithms mainly focuses on the combination of recommendation algorithms with teaching platform development, teaching design, teaching evaluation, and other aspects. Ren [17] constructed an e-learning-based recommendation model incorporating the user’s previous behaviors, which are used to mine the user’s interest data and recommend Chinese learning resources. The model has been experimentally tested and shows good performance. Fu et al. [18] designed a multimedia system based on Chinese language teaching by combining data mining techniques and recommendation algorithms. The research optimized and improved the information resource library of the system from the perspective of users and added system functions to meet the personalized needs of certain users. It enhances the efficiency of the teaching system. Yin [19] developed an improved collaborative filtering algorithm that combined users’ social relationships and behavioral characteristics to recommend relevant teaching content for music teaching platforms. The algorithm applies the users’ social relationships to construct the similarity calculation formula and uses the behavioral feature data as the basis for recommendation calculation, improving the accuracy of the recommendation algorithm. Liu [20] established a new mode of college English teaching based on the personalized recommendation of teaching resources and developed a teaching recommendation model based on a collaborative filtering algorithm to improve the accuracy of the recommendation algorithm. Zhang et al. [21] established a recommendation model for the online teaching of Chinese as a foreign language based on user interest similarity using a collaborative filtering recommendation algorithm. 
Three experiments were designed to prove that the constructed algorithm has good recommendation performance. Ying [22] developed an interactive AI virtual teaching resource recommendation algorithm based on similarity measurements. The core idea is to mine the users’ similarity and find similar user neighbors for the current users, thereby recommending the teaching resources to the neighboring users and improving the accuracy of the recommendation results. Liu [23] developed a collaborative filtering recommendation algorithm based on the user, content, and student profiles. It analyzed the users’ previous behaviors to construct their interest profiles and incorporated a recommendation algorithm to build a personalized classroom teaching model. Lu [24] used information retrieval technology to optimize a recommendation algorithm and combined two methods for recommendation. The main steps that affect the recommendation results in recommendation algorithms include similarity calculation, nearest neighbor selection method, rating prediction method calculation, etc., which can accurately retrieve the content that users are interested in and greatly improve the diversity of recommended content. Gavrilovic et al. [25] constructed two discrete-element heuristic algorithms to group students in e-learning. It groups students with different knowledge levels and recommends teaching content to each group of students, thus improving the overall efficiency of the online learning process. Baig et al. [26] proposed an efficient knowledge-graph-based recommendation framework that can provide personalized e-learning recommendations for existing or new target learners with sufficient previous data of the target learners. Bhaskaran et al. 
[27] developed an intelligent recommendation system by using clustering based on splitting and conquering strategies, which can automatically adapt to learners’ needs, interests, and knowledge levels; provide intelligent suggestions by evaluating the ratings of frequent sequences; and offer the optimal recommendations for the learners. Nachida et al. [28] designed a new educational recommendation model: EDU-CF-GT, which is based on the universal CF-GT model. The constructed model can adapt to the complexity of the education field and improve learning efficiency by simplifying resource acquisition. Bustos López et al. [29] developed an educational recommendation system that combines collaborative filtering with sentiment detection technology to recommend educational resources to users based on their preferences/interests and user emotions detected by facial recognition technology. Amin et al. [30] proposed a new personalized course recommendation model, which was implemented by an intelligent electronic learning platform. This model aims to collect data on the students’ academic performance, interests, and learning preferences and use the information to recommend the most beneficial courses for each student. Lin et al. [31] developed a deep learning recommendation system, which includes augmented reality (AR) technology and learning theory, for non-professional students with different learning backgrounds to learn. It can effectively improve the students’ academic performance and optimize their computational thinking ability. Chen et al. [32] designed a reliable personalized teaching resource recommendation system for online teaching under large-scale user access. It combines collaborative filtering and unit closure association rules, achieving the reliable recommendation of personalized teaching resources. Qu [33] constructed a personalized system and joint recommendation technology for English teaching resources. 
It improved the traditional joint recommendation algorithms and proposed a hybrid recommendation approach. The recommendation system showed good effectiveness and stability in both performance tests and practical applications. Wang et al. [34] designed a recommendation system based on a hybrid of multiple collaborative filtering algorithms and evaluated its performance through teaching practice. The experiment showed that this hybrid method has certain advantages in recommending Chinese learning resources and achieves high recommendation accuracy.

Based on the analysis of the existing research, it can be concluded that the current research on teaching recommendation systems mainly focuses on the combination of recommendation algorithms with teaching platform development, teaching design, teaching evaluation, and other aspects. In collaborative filtering algorithms, the users’ previous behaviors are mined, and teaching resources are recommended to users. The accuracy of the teaching resource recommendations is increased by improving the recommendation algorithms. However, there are several problems with these types of research methods. Firstly, most of the research only takes the users’ perspective, mining their interests and establishing the recommendation models without quantitatively modeling the teaching contents, teaching topics, or teaching resources. The teaching objects targeted by the models are not clearly quantified, and the matching relationship between the user interests and the teaching objects is vague. Secondly, some research explores the behaviors of previous users or analyzes their browsing behaviors to obtain interest tendencies. This method captures approximate user behaviors rather than precise interests, so the recommendation results cannot fully match the current users’ interests, resulting in low accuracy. Thirdly, some researchers design recommendation models based on collaborative filtering algorithms, which have inherent limitations. The user-based collaborative filtering recommendation algorithm (UCFA) searches for similar users, while the item-based collaborative filtering recommendation algorithm (ICFA) searches for similar items. Both are approximate searching algorithms, and their recommendation results are therefore based on approximate matching, resulting in low accuracy.

2.2. The Advantages, Application Purpose, Formulated Requirements, and Constraints of the Proposed Model

2.2.1. The Innovations and Advantages of the Proposed Model

Regarding the problems of the related works, our proposed teaching recommendation model based on naive Bayes machine learning and an improved k-NN data mining algorithm has the following innovations. Firstly, we quantitatively mine the teaching contents and topics and construct a quantitative matrix to label the core elements of practical teaching, so that the teaching objects have clear quantitative expressions, which is more in line with the data structure required for building the recommendation algorithm. Secondly, we construct a machine learning algorithm and use the naive Bayes classification model to classify the interests of students in the class, achieving the teaching grouping. The model can accurately group class students based on the teaching content labels, the student feature attributes, and the teaching topic categories, providing the grouping criteria and standards for the practical classroom teaching. Thirdly, the establishment of the recommendation model is based on an improved k-NN data mining algorithm, which achieves the optimal matching for group interests and practical teaching topics, providing a basis for student group matching, recommending the most suitable teaching topics for the students’ interests, and providing decision support for the teachers to implement the practical teaching. Fourthly, the recommendation model based on the improved k-NN data mining algorithm has higher accuracy than the collaborative filtering recommendation algorithms, and the recommendation results are more in line with the students’ interests and needs.

The constructed recommendation model has the following advantages. Firstly, in the existing research on teaching recommendation systems, most studies focus on recommending teaching resources, student courses, learning plans, etc. However, there is a lack of research specifically on recommendation systems for organizing the practical discussion courses in universities. This model can effectively solve this problem. Secondly, compared with the fuzzy recommendation implemented by traditional collaborative filtering algorithms, this model is based on accurate mining of the student interest labels and the teaching topic labels, achieving precise matching between the two labels. The recommended results are closer to the students’ interests and have higher accuracy. Thirdly, compared with the large-scale model and universal features of traditional teaching recommendation systems, this model can achieve personalized teaching in small classes, divide students into groups according to their interests, and enable the teachers to accurately quantify the interest tendencies of the group members. Based on the label matching, personalized practice content can be recommended and designed for the students, achieving personalized thematic discussions and effectively improving the students’ learning interests and the teaching quality.

2.2.2. The Application Purpose, Formulated Requirements, and Constraints of the Proposed Model

- 1. The Application Purpose and Formulated Requirements

The constructed teaching recommendation model has a completely different application purpose compared to the recommendation models discussed in the literature review for course selection and teaching planning. Firstly, the constructed model is not specific to which courses the students should choose to achieve their semester goals but is based on a course that has already been offered and intended to design the specific teaching method. In the model, the teaching process of the course must include practical discussion classes. The model is used for accurately recommending the theme of a certain discussion class in the course. The recommendation model is specifically designed for teaching activities such as student interest grouping, discussion topic classification, discussion topic matching, and recommendation in the practical discussion courses. Secondly, the constructed model is not designed for the students’ specific majors, to recommend the most suitable courses. Instead, based on the established course plan, it helps to design the discussion topics for the teachers and the students, determine the specific student groups, and recommend the specific discussion topics for each group in the practical teaching of the courses. Thirdly, the constructed model is not aimed at how to choose the specific learning issues but recommends the most suitable discussion topics for the different interest groups based on the determined learning contents and themes, in order to achieve problem-oriented discussion teaching. Thus, we summarize the application purpose and formulated requirements of the constructed teaching recommendation model as follows:

(1) It is used for a specific course.

(2) The course must include the teaching process of practical discussion.

(3) During the teaching process, it is specifically used for a practical discussion class.

(4) It is used for student interest mining, student interest grouping, discussion topic classification, and recommendation in the practical discussion class.

(5) It is suitable for a small class with a moderate number of students (such as small classes of 10–20 students). Additionally, the number of student groups should not be too large, and the number of students in each group should not be too large, either. When there are a large number of students in a class (such as 50 students), it is necessary to split the class or increase the class hours to complete the teaching task in batches and ensure the teaching quality.

(6) The number of discussion topics is limited to one, and the discussion topic can be divided into no more than five grouped topics.

- 2. The Model Features in Practical Teaching Cases

Based on the application purpose and the formulated requirements of the model, combined with the specific scenarios of practical teaching, the constructed teaching recommendation model has the following features and advantages in practical teaching cases compared to the traditional recommendation models:

(1) Accuracy. Traditional recommendation models commonly use collaborative filtering algorithms to implement recommendations, which are based on the previous users’ interests or current users’ behaviors to recommend objects that are close to the current users’ preferences. It is an approximate recommendation. The constructed teaching recommendation system matches the discussion topics based on the current interests of students and has built a strict mathematical model for matching interests, which has the feature of accuracy. In the teaching recommendation system, the recommended objects are the discussion topics, and the users are the students. The feature of accuracy is reflected in the modeling process of feature engineering in the recommendation system, which includes the following features:

- Primary feature labels of the recommended objects: the designed discussion topic for the course;

- Secondary feature labels of recommended objects: the grouped discussion topics determined by the discussion topic, with each group representing an interest tendency;

- Primary feature labels for students: based on the secondary feature labels of recommended objects, we design the labels that students are interested in, and let the students judge their degree of preference for the labels;

- Student classification labels: corresponding to the secondary feature labels of the recommended objects;

- Discussion contents and feature labels: we further subdivide the group discussion topics into several discussion contents, quantify the labels of the discussion contents, and use them to match the student interest labels.

(2) Personalization. The constructed recommendation model has personalized features, achieving precise matching and recommendation between the student interest labels and the discussion contents, meeting the personalized interests of each student. Its feature of personalization is manifested in the internal algorithmic logic of the recommendation system outputting the discussion contents that match the interests. It includes the following features:

- Collection and quantification of discussion content labels: we determine the discussion contents based on the discussion topic, then collect discussion content labels, and quantify the labels;

- Collection and quantification of student interest labels: for the discussion contents designed for each student classification, we collect the student interest labels and quantify them;

- Build the matching model: we construct the matching model between the discussion content labels and the student interest labels to achieve the personalized recommendation.
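The label matching described above can be illustrated with a minimal sketch. It assumes that both the student interest labels and the discussion content labels have already been quantified as numeric vectors of equal length, and it ranks the contents by plain Euclidean distance. The function names, the example vectors, and the choice of distance are illustrative assumptions; the sketch is an ordinary nearest-neighbor ranking and does not reproduce the improved k-NN algorithm or the binary encoding tree of the proposed model.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two quantified label vectors."""
    if len(a) != len(b):
        raise ValueError("label vectors must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def recommend(interest, contents, k=1):
    """Return the names of the k discussion contents closest to the interest vector.

    interest: quantified interest labels of a student or group (hypothetical data)
    contents: dict mapping a content name to its quantified label vector
    """
    ranked = sorted(contents.items(), key=lambda item: euclidean(interest, item[1]))
    return [name for name, _ in ranked[:k]]


# Hypothetical quantified labels, for illustration only.
example_contents = {
    "content_a": [3, 1, 1],
    "content_b": [1, 3, 2],
    "content_c": [2, 2, 3],
}
example_interest = [3, 1, 2]
top_two = recommend(example_interest, example_contents, k=2)
```

A smaller distance means a closer match between the interest labels and the content labels, so the first entry of the returned list is the strongest candidate for recommendation.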

Based on the analysis of the features of the recommendation model, we compare the features of the constructed recommendation model with those presented in the related works; the results are shown in Table 1. The comparison in Table 1 indicates that the constructed model has innovations and advantages over the related research: it can realize student interest grouping and recommend specific teaching contents based on the interest grouping.

Table 1. Comparison of the relevant features contained in each recommendation model.

- 3. The Constraints of the Model

Based on the analysis of the application purpose and the formulated requirements, it can be concluded that the constructed teaching recommendation model has certain constraints in its application. Firstly, it is applicable to courses that include practical discussion teaching, while for courses that do not include the practical discussion teaching (such as theoretical courses), this recommendation model is not applicable. Secondly, there are constraints on the specific objectives and discussion contents of the practical teaching. It is suitable for classes that include practical activities such as exploration, debate, and discussion in order to train the students’ oral expression ability, logical thinking ability, and teamwork ability. It is particularly suitable for subjects in the humanities and social sciences such as tourism, education, philosophy, history, and sociology, as well as group discussions on solutions in the subjects of the natural sciences. This method is not suitable for practical activities such as writing computer programs, building algorithms, executing engineering projects, conducting chemical and physical experiments, etc. Overall, the recommendation model is suitable for oral debates and discussions but not for practical applications that require hands-on experience. Thirdly, the algorithm design of the recommendation model is suitable for teaching small classes. For classes with a large number of students, in order to ensure the teaching quality, it is necessary to divide the classes or increase class hours to complete the teaching tasks in batches.

3. Methodology

3.1. Class Grouping Algorithm Based on Naive Bayes Machine Learning

The naive Bayes machine learning algorithm is a classification algorithm that is trained on a set of objects whose feature attributes are treated as mutually independent, with the goal of quantifying the posterior probability that an object to be classified belongs to a certain class [35]. For the practical teaching content of the same teaching course, the interest classification of the students in the previous classes provides the raw structured data for constructing the naive Bayes machine learning algorithm [36]. We first construct a training set model for the naive Bayes machine learning algorithm and then establish a class grouping algorithm based on it. The modeling principle of the algorithm and the way in which it realizes the student grouping are explained as follows:

(1) The first step: The naive Bayes machine learning algorithm is a supervised learning algorithm. Therefore, for a certain discussion course, we select an adequate number of students, together with their label data, from previous classes that held the same discussion course to construct a training set for the naive Bayes machine learning algorithm. The training set includes the recommendation system labels described in Section 2.2.2, namely the primary feature labels of the students and the classification labels of the students. We then quantify the labels [37].

(2) The second step: We collect the student data from the current class to be classified, including the primary feature labels of the students, and then quantify the student labels.

(3) The third step: We use the collected student data from the previous classes to construct the naive Bayes machine learning algorithm. Based on the algorithm, we input the student label data from the current class (a student who is to be classified) and calculate the posterior probability of each classification label for the student. The student’s assigned group is the group with the highest posterior probability [38].

- The first step describes the data collected from the previous classes, which are used to build the algorithm.

- The second step describes the data collected from the current class (the class for which a discussion course will be organized and whose students will be grouped).

- The third step describes how the student data from the current class are used in the naive Bayes machine learning algorithm.
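The three steps can be sketched as follows. This is a minimal illustration, assuming that every primary feature label is quantified as a small integer (for example, a degree of preference) and that the classification labels are group identifiers. The function names, the sample data, and the use of Laplace smoothing with parameter `alpha` are assumptions made for the sketch and are not taken from the proposed model.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(samples, labels):
    """Step 1: count class and per-feature value frequencies from previous classes."""
    class_counts = Counter(labels)
    # value_counts[c][j][v] = number of class-c students whose feature j equals v
    value_counts = defaultdict(lambda: defaultdict(Counter))
    feature_values = defaultdict(set)
    for x, c in zip(samples, labels):
        for j, v in enumerate(x):
            value_counts[c][j][v] += 1
            feature_values[j].add(v)
    return class_counts, value_counts, feature_values


def posterior(x, model, alpha=1.0):
    """Step 3: posterior probability of each group for one student of the current class."""
    class_counts, value_counts, feature_values = model
    total = sum(class_counts.values())
    log_scores = {}
    for c, n_c in class_counts.items():
        log_p = math.log(n_c / total)  # prior of group c
        for j, v in enumerate(x):
            k = len(feature_values[j])  # number of values observed for feature j
            # Laplace-smoothed conditional probability (an assumption of this sketch)
            log_p += math.log((value_counts[c][j][v] + alpha) / (n_c + alpha * k))
        log_scores[c] = log_p
    # Normalize in a numerically stable way so that the posteriors sum to one.
    top = max(log_scores.values())
    scores = {c: math.exp(s - top) for c, s in log_scores.items()}
    norm = sum(scores.values())
    return {c: s / norm for c, s in scores.items()}


def assign_group(x, model):
    """The assigned group is the one with the highest posterior probability."""
    post = posterior(x, model)
    return max(post, key=post.get)


# Hypothetical quantified labels of students from previous classes (step 1).
prev_features = [(3, 1, 1), (3, 2, 1), (1, 3, 1), (1, 3, 2), (1, 1, 3), (2, 1, 3)]
prev_groups = ["A", "A", "B", "B", "C", "C"]
model = train_naive_bayes(prev_features, prev_groups)

# Hypothetical quantified labels of one student in the current class (step 2).
current_student = (3, 1, 1)
group = assign_group(current_student, model)
```

Working in log space avoids numerical underflow when many feature labels are multiplied together, and the smoothing keeps a group from being ruled out only because one label value never occurred in its training samples.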

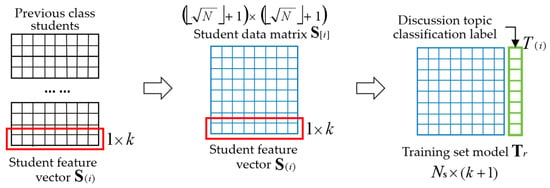

3.1.1. Training Set Model for Naive Bayes Machine Learning Algorithm

To establish the naive Bayes machine learning algorithm, it is necessary to select the individual students from the previous classes who meet the naive Bayes classification criteria and then construct the quantitative labels for the core elements of the practical teaching topics to determine the classified labels of the individual students. We construct the feature vector and the data matrix for the students in the previous classes, quantify the data matrix for the students in the previous class, select the individual students who meet the conditions of the naive Bayes machine learning algorithm, and then establish the training set model. We first construct the relevant definitions and then establish the naive Bayes machine learning training set model. Figure 1 shows the training set model of the constructed naive Bayes machine learning algorithm.

Figure 1. The constructed training set for the naive Bayes machine learning algorithm.

Definition 1.

The sample student and the student feature vector . Naive Bayes machine learning is a supervised learning algorithm that requires collecting data from the previous courses and establishing a training set. We define an arbitrary student selected from the previous teaching class, who participates in the practical teaching and has feature attributes and interests, as the sample student, denoted as . We construct a one-dimensional vector to store the teaching interest labels selected and quantified by the students. The dimension is denoted as , and its elements store the practical teaching topic elements. This vector is the student feature vector, denoted as .

Definition 2.

The classification label for the discussion topic. The labels used for classification in supervised learning are the key elements of naive Bayes machine learning. We define the student classification label determined by the topic content in practical teaching as the classification label for constructing the naive Bayes machine learning, denoted as . After determining the classification label, the student feature vector is expanded into dimension , and the last element of the vector stores this label.

Definition 3.

The student data matrix . We select sample students from all the previous students who could be used as the training set for constructing the naive Bayes machine learning algorithm, and store them in a matrix in the order of a certain algorithm. We define the matrix as the student data matrix, denoted as .

Definition 4.

The training set model for the naive Bayes machine learning. According to the storage rules for the feature labels and classification labels in the naive Bayes training set, the vector is topologically transformed to generate a training set for constructing the naive Bayes machine learning, denoted as . The training set stores the student labels and classifications that have undergone data preprocessing.

The algorithm for constructing the training set model for the naive Bayes machine learning based on the previous student teaching data is as follows (Appendix A.1):

Step 1: Randomly select number of students from the previous classes, each of whom is an independent individual with independent and different interests, which meet the conditions , and . We initialize the number of students as , , …, .

Step 2: Establish the feature vector for the students in the previous classes, , . A vector represents a student . Formula (1) is the constructed vector model .

Step 2.1: Determine the number of the core attributes for the practical teaching topics, , . Each core attribute is an independent feature that satisfies . Attribute has a quantifiable interval or discrete value.

Step 2.2: Determine number of discussion topics based on the practical content and note it as a classification label , , .

Step 2.3: Establish a dimensional vector with row rank and column rank . The composition of the vector elements is as follows:

(1) The first element of the no. element stores attributes , corresponding to the element , , in which represents the no. student element.

(2) The no. element corresponds to the element , , in which represents the no. student element.

Step 3: Establish the previous class student data matrix . According to the number of students , we set the row rank and column rank of the dimensional matrix, satisfying , . Formula (2) is the constructed matrix model .

Step 3.1: Store the number of marked students in the matrix in increasing order of rows and columns . The storage status meets the following conditions:

(1) If , then the remaining number of elements in the matrix are stored as 0;

(2) If , then the matrix is full rank.

Step 3.2: The elements in the matrix correspond to the students , and the vectors are quantified based on the previous data records. For the student , if any element in the vector satisfies , it indicates that the student has not participated in any classification or practical course. Then, there are:

(1) For in the matrix , if corresponding student is , then set ;

(2) For in the matrix , if corresponding student is , then set .

Step 3.3: Mark all the elements of in the matrix with a count of . According to the modeling process, there is .

Step 4: Build the training set model for the naive Bayes machine learning. Construct a dimensional matrix based on the vector dimension . Formula (3) is the constructed model .

Step 4.1: Extract the quantified matrix and extract number of elements .

Step 4.2: Define the dimensional empty vector with the row rank and the column rank .

Step 4.3: Initialize the row , , and expand the rows of the vector . If , continue to execute. Note that . If , complete the execution, and output .

Step 4.4: Store the quantified labels of the number of students into . The storage rule is:

(1) The matrix row corresponds to one student vector ;

(2) The element represents the no. attribute label of the no. student and satisfies the constraint condition , , ;

(3) The last column of the matrix stores the noted classification labels of students .
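
As a rough illustration of Steps 1 to 4, the following sketch assembles a training matrix from records of previous students. The record layout, the use of `None` to mark a label that was never recorded, the function name `build_training_set`, and the sample values are all assumptions made for this example; they are not taken from Appendix A.1.

```python
from typing import List, Optional, Sequence


def build_training_set(
    records: Sequence[Sequence[Optional[int]]],
) -> List[List[int]]:
    """Keep fully labeled students; each row is [attr_1, ..., attr_n, class_label]."""
    training_set = []
    for record in records:
        # Assumption: None marks a missing label, i.e. the student did not
        # take part in the classification or the practical course.
        if any(value is None for value in record):
            continue
        training_set.append(list(record))
    return training_set


# Hypothetical data: three quantified interest attributes plus a class label.
previous_students = [
    [2, 1, 3, 0],
    [1, None, 2, 1],  # incomplete record, excluded from the training set
    [3, 3, 1, 1],
]
training_set = build_training_set(previous_students)
```

Each surviving row corresponds to one student vector, and the last column holds the classification label, which mirrors the storage rule in Step 4.4.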

3.1.2. Class Grouping Algorithm

The establishment of the naive Bayes machine learning algorithm is based on the constructed training set , with the goal of achieving student classification in the teaching class and grouping the practical teaching based on the classification results. Based on the number of core attributes determined by the practical teaching topics, the interest labels and classification labels of students in the previous classes, we construct the naive Bayes machine learning model to calculate the Bayesian posterior probability of each student belonging to the classification label , achieving the classification of the students in the teaching class. According to the modeling principle of the naive Bayes machine learning algorithm, the constructed training set consists of two parts: one is the student interest label and the quantified module, namely the element , , , ; the second is the student classification label module, namely the last column of the matrix . The columns in the training set are independent from each other, which meets the modeling requirements of the naive Bayes machine learning algorithm [39].

Definition 5.

The naive Bayes prior probability model . For the student training set, it is one of the conditions used to construct the naive Bayes machine learning algorithm. It is defined as the probability of the classification appearing in the total number of samples in the training set.

Definition 6.

The naive Bayes conditional probability density . For the student training set, it is also one of the conditions used to construct the naive Bayes machine learning algorithm. It is defined as the probability of students and student feature labels appearing under the classification conditions.

Definition 7.

The naive Bayes posterior probability model . The probability of classifying student into the classification label . It is used to determine the final classification of the student.

Definition 8.

The feature vector of the student to be classified. Based on the dimensional feature vector of the previous class of students, we construct a dimensional vector with the same attribute labels for storing and quantifying the feature labels of students to be classified. We define this vector as the feature vector of the student to be classified.

For an arbitrary student to be classified in the teaching class, the classification objective is to match the student against the training set model and the classification labels selected from the previous classes, and then to assign the student to the classification with the highest Bayesian posterior probability. The interest tendency that the naive Bayes machine learning algorithm measures for the student is therefore consistent with the prior probability and with previous teaching experience. We suppose that is the hypothesis that the student belongs to a classification . The basic idea of constructing a classification model with Bayes’ theorem is to determine the Bayesian posterior probability of this hypothesis. Formula (4) is the constructed Bayesian posterior probability model for the hypothesis that the student belongs to a classification .

For the topic classification determined by the practical teaching, , , the naive Bayes machine learning algorithm predicts that a student belongs to the classification with the highest posterior probability by calculating the Bayesian posterior probability. For the number of classifications set in the sample space, the condition for the student belonging to a certain classification is: if and only if , in which and . The classification with the maximum Bayesian posterior probability is the maximum a posteriori hypothesis. Based on this modeling principle, the naive Bayes machine learning algorithm for the student classification is constructed as follows (Appendix A.2).

Step 1: Determine the quantified vector of the student to be classified, which will be used to construct the naive Bayes machine learning model.

Step 2: Perform the equivalent simplification on the Bayesian posterior probability model.

Step 2.1: The probability of the student’s feature vector does not depend on the classification, so it is identical for every classification. Define this probability as a constant for all classifications, i.e., .

Step 2.2: Simplify the Bayesian posterior probability model and convert it to calculate the value .

Step 2.3: Set . Calculating and comparing is equivalent to calculating .

Step 3: Retrieve the training set model and construct the prior probability model for the classification labels.

Step 3.1: Mark the students in the model who belong to the classification and record .

Step 3.2: Initialize , , set the number of students as , , and determine the rows of the matrix : (1) if , then ; (2) if , then .

Step 3.3: Iterate , determine whether row related to meets , and include .

Step 3.4: Determine the termination conditions: (1) if , the searching ends, output the current ; (2) if , continue searching.

Step 3.5: Build a prior probability model for student classification, as shown in Formula (5).

Step 3.6: Repeat steps 3.1 to 3.5, traverse , and calculate the prior probability for each .

Step 4: Introduce and quantify the labels to construct the conditional probability density model .

Step 4.1: If the attribute labels in the matrix satisfy , construct a conditional probability density function , as shown in Formula (6).

Step 4.2: Estimate the probability . Each label in the matrix satisfies the condition , which means that each label is a discrete feature. The probability estimate is constructed as Formula (7), in which is the number of students with attribute labels in the classification , and is the number of students in the classification .

Step 4.3: When counting the number of label as , in order to avoid calculating the conditional probability density value as 0, a perturbation factor is introduced as an adjustment when calculating the number of the label as . Then, a conditional probability density model is constructed as shown in Formula (8).

Step 5: Calculate the transformation value for the Bayesian posterior probability, traversing , and determine the classification with the as the classification that the student belongs to.
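
The classification steps above can be sketched as follows. This is a minimal illustration, not the implementation in Appendix A.2: the additive (Laplace-style) smoothing with parameter `alpha` stands in for the perturbation factor because Formulas (5) to (8) are not reproduced here, and the function names and sample data are hypothetical. The evidence term is dropped because it is the same constant for every classification, so only the prior multiplied by the conditional probabilities is compared.

```python
from collections import Counter
from math import prod


def train(rows, alpha=1.0):
    """Estimate priors and a smoothed likelihood from rows of [attr_1..attr_n, label]."""
    n_attrs = len(rows[0]) - 1
    class_counts = Counter(row[-1] for row in rows)
    # Prior: share of training students that carry each classification label.
    priors = {c: n / len(rows) for c, n in class_counts.items()}
    # Number of distinct values observed per attribute (smoothing denominator).
    n_values = [len({row[j] for row in rows}) for j in range(n_attrs)]
    # value_counts[(c, j)][v]: students of class c whose attribute j equals v.
    value_counts = {
        (c, j): Counter(row[j] for row in rows if row[-1] == c)
        for c in class_counts
        for j in range(n_attrs)
    }

    def likelihood(c, j, v):
        # Assumed smoothing: alpha keeps an unseen label from giving probability 0.
        return (value_counts[(c, j)][v] + alpha) / (
            class_counts[c] + alpha * n_values[j]
        )

    return priors, likelihood


def classify(student, priors, likelihood):
    """Return the label with the highest prior * likelihood score, plus all scores."""
    scores = {
        c: p * prod(likelihood(c, j, v) for j, v in enumerate(student))
        for c, p in priors.items()
    }
    return max(scores, key=scores.get), scores


# Hypothetical training set: two discrete attributes and a class label (0 or 1).
rows = [[1, 0, 0], [1, 1, 0], [0, 1, 1], [0, 0, 1], [0, 1, 1]]
priors, likelihood = train(rows)
label, scores = classify([1, 1], priors, likelihood)
```

In this toy example the student is assigned to classification 0, because its score exceeds that of classification 1 even though classification 1 has the larger prior.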

3.2. Teaching Recommendation Model Based on Improved k-NN Data Mining Algorithm

The naive Bayes machine learning algorithm determines the classification of the students in the teaching class, and based on the determined student classification, the teachers encode and group the students in the class. According to the inherent logic of the naive Bayes machine learning algorithm, the students in the same classification tend to have a high degree of closeness in their interest tendencies, while the students in the different classifications tend to have a low degree of closeness in their interest tendencies. According to the course design process, the teachers need to determine the specific practical topic content for each group based on the grouping. Scientific and quantitative recommendation and decision making is the best mode for accurately determining the practical topic content for each group, ensuring that the recommended practical topic content can accurately match the interests of each student in the group. Due to the inclusion of multiple students in each group , the construction of the practical topic recommendation algorithm must consider the precise interests of all students. From the perspective of recommendation algorithm design, it is necessary to construct a collective recommendation model [40]. Based on this fundamental principle, we construct a teaching recommendation model based on the improved k-NN data mining algorithm.

The basic idea of the modeling is as follows. We set the classification result as the number of groups . Each classification represents one interest, and the number of students in each group is . The teacher determines number of discussion topics for each group , , . We build a k-NN data mining algorithm between number of students and number of discussion topics in a group , output number of the most matching topics for each student, and then find the number of topics with the best intersection among the number of topics as the recommended topics for the group of students. The model outputs the recommended topics for other groups by using the same algorithm until the recommendations for the number of groups are completed [41].

3.2.1. The Modeling of the Complete Binary Encoding Tree for Discussion Topic

According to the modeling concept, we first give the definitions used by the algorithm. The algorithm is constructed to match the individual students in the group with the discussion topics , and the optimal complete binary encoding tree model for the discussion topic is established.

Definition 9.

The discussion topic feature vector . For the number of discussion topics designed for the group , the containing labels of each discussion topic have a matching relationship with the labels contained in the dimensional student interest feature vector . The dimensional vector composed of number of quantified labels used to express the characteristics of the discussion topic is defined as the discussion topic feature vector, denoted as . The vector elements are labels , , , and .

Definition 10.

The quantization vector and the quantization vector . We quantify and normalize the elements of the vector based on the interests of the individual students , and the resulting quantified vector is denoted as , in which the normalized elements are denoted as . For the feature vector of the discussion topic, the elements are quantified and normalized based on the topic characteristics, and the resulting quantified vector is denoted as , in which the normalized elements are denoted as .

Definition 11.

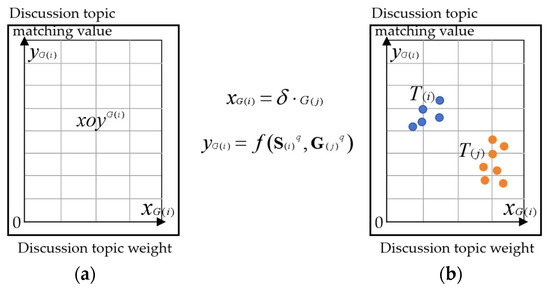

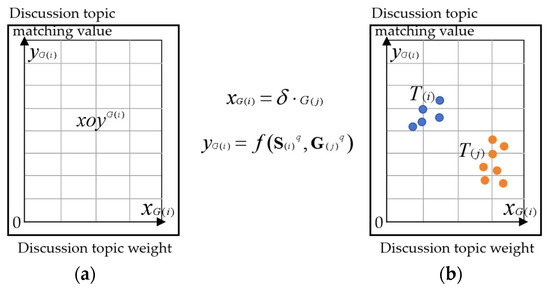

The discussion topic weight . The feature vector of the discussion topic describes the degree to which a certain discussion content covers the requirements of the practical teaching under the specific practical teaching conditions. The reciprocal of the modulus of the quantified vector of the discussion topic is defined as the discussion topic weight , quantified as . It is the measurement value that covers the requirements of the practical teaching. The higher the weight value is, the higher the coverage will be.

Definition 12.

The discussion topic matching model . Based on the matching feature between the student interest feature vector and the discussion topic feature vector , a matching model based on the quantization vector and is constructed to express the matching measurement value between the individual interest of no. student in the group and the no. discussion topic . Formula (9) is the constructed discussion topic matching model.

Definition 13.

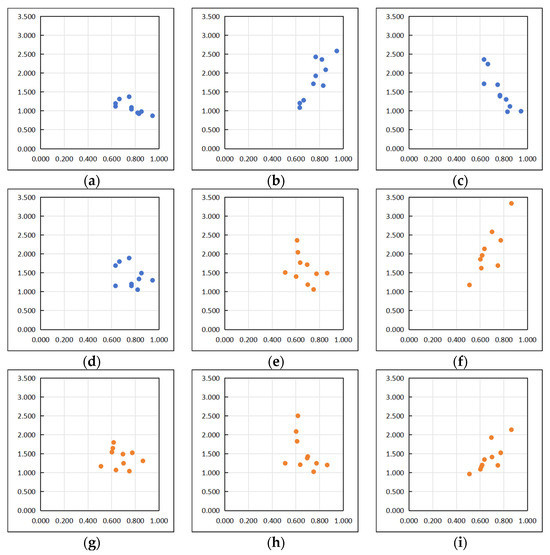

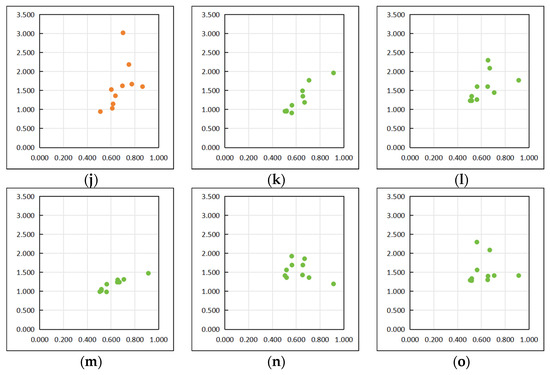

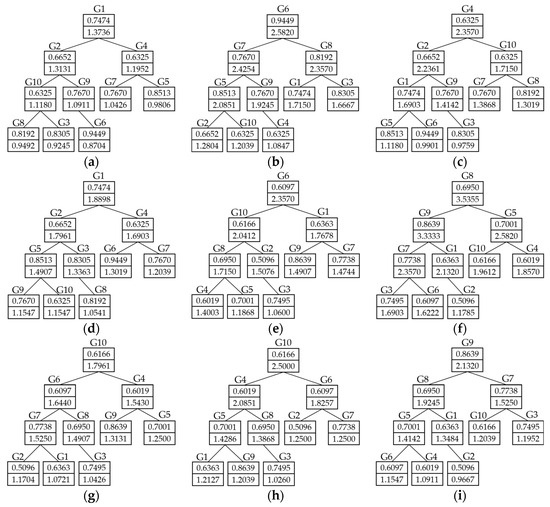

The discussion topic spatial coordinate system and the spatial coordinates . Based on the discussion topic weight and the discussion topic matching model , we quantitatively model the spatial distribution of the discussion topics included in student group . We construct a spatial coordinate system for the discussion topics with weights as the abscissa and function values as the ordinate, denoted as . The discussion topic is represented by a coordinate point, whose coordinates are denoted as , in which , . Figure 2 shows the constructed discussion topic spatial coordinate system: Figure 2a shows the constructed spatial coordinate system , and Figure 2b shows an example of the quantified coordinate system containing the discussion topic points.

Figure 2. The constructed discussion topic spatial coordinate system.
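
To make Definitions 11 to 13 concrete, the sketch below computes a coordinate pair (weight, matching value) for each topic. The weight follows the stated definition, the reciprocal of the modulus of the quantified topic vector. The matching value is computed here as a Minkowski distance of order `p` between the quantified student and topic vectors; this form is an assumption, since Formula (9) is not reproduced in this section. The function names and sample vectors are hypothetical.

```python
from math import sqrt
from typing import List, Sequence, Tuple


def topic_weight(topic: Sequence[float]) -> float:
    """Reciprocal of the Euclidean modulus of a non-zero quantified topic vector."""
    return 1.0 / sqrt(sum(x * x for x in topic))


def minkowski(student: Sequence[float], topic: Sequence[float], p: float = 2.0) -> float:
    """Minkowski distance of order p between two vectors of equal length."""
    return sum(abs(s - t) ** p for s, t in zip(student, topic)) ** (1.0 / p)


def topic_coordinates(
    student: Sequence[float], topics: Sequence[Sequence[float]], p: float = 2.0
) -> List[Tuple[float, float]]:
    """One (weight, matching value) point per topic for a given student."""
    return [(topic_weight(t), minkowski(student, t, p)) for t in topics]


# Hypothetical normalized vectors with two feature labels each.
student = [0.3, 0.4]
topics = [[0.3, 0.4], [0.0, 0.4]]
points = topic_coordinates(student, topics)
```

Under this assumed distance form, a matching value of 0 means the topic vector coincides with the student's interest vector.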

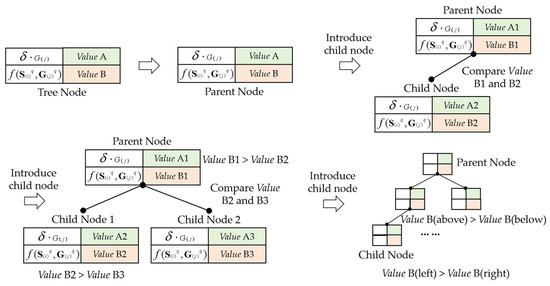

Definition 14.

The optimal complete binary encoding tree model for the discussion topic. The goal of the optimal complete binary tree model is to achieve the ordered storage of parent node and child nodes, output the optimal sorted tree structure, and extract the optimal nodes. We simulate the generation rules of the optimal complete binary tree. Based on the discussion topic spatial coordinate system and the spatial coordinates , we treat the spatial coordinates as the codes of the discussion topic in the coordinate system and construct the complete binary tree with the optimal parent node. This tree is defined as the optimal complete binary encoding tree model for the discussion topic, denoted as

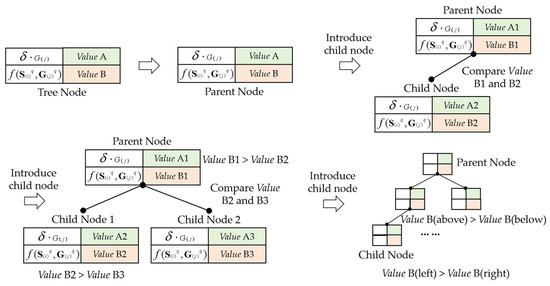

. Figure 3 shows the basic generation rules and the logical structure of the tree model .

Figure 3. The basic generation rules and logical structure of the tree model .

The algorithm steps for constructing the optimal complete binary encoding tree model for the discussion topic are as follows (Appendix A.3).

Step 1: Select the individual student from the group . Quantify and output . Quantify and output number of for number of the topics in the group and encode the discussion topics .

Step 1.1: Determine the basic structure of the discussion topic in the coordinate system , including the head-word and suffix-word . The storage structure is as follows:

{: discussion topic weight: } & {: discussion topic matching value: }

Step 1.2: Initialize the counter , which is the number of times the discussion topic has been traversed.

Step 1.3: Take , initialize the first topic , and generate the nodes for .

(1) Extract , calculate , and store to the related head-word for ;

(2) Extract , calculate , and store to the corresponding suffix-word for ;

(3) Complete the storage and generate the storage structure for node ;

(4) Note : (i) if , continue searching; (ii) if , the node searching ends, and the algorithm ends.

Step 1.4: For any , return to Step 1.3 to initialize the no. topic and generate node . Note : (i) if , continue searching; (ii) if , the node searching ends, and the algorithm ends.

Step 1.5: Iterate the index , and stop the iterating when . Output the node storage structures for number of discussion topics .

Step 1.6: Based on the node storage structures for number of discussion topics , establish the spatial coordinate system of the discussion topic under the condition of the individual student interest vector .

Step 2: Randomly select any topic and store it in the parent node of the model . Randomly select another topic and make the following comparison, in which represents , the number represents the no. topic , and each function value has the same .

(1) If , keep the topic storage unchanged and store in the left child node of the second layer;

(2) If , delete , store in the parent node of the model , and store in the left child node of the second layer.

Step 3: Introduce arbitrary and make the following comparison:

(1) If :

① If , store and to and , and store to the child node on the right side of the second row;

② If , store and to and , and store to the child node on the right side of the second row;

③ If , store and to and , and store to the child node on the right side of the second row;

(2) If :

① If , store and to and , and store to the child node on the right side of the second row;

② If , store and to and , and store to the child node on the right side of the second row;

③ If , store and to and , and store to the child node on the right side of the second row.

Step 4: Introduce any , . Calculate the current corresponding to , compare ~, and continue storing by the following storage rules.

(1) Arbitrary node can have a maximum of two child nodes and a minimum of zero child nodes;

(2) Regarding the binary tree storage rule, any row of the tree contains number of child nodes, and ~ must be stored in the first number of nodes in the tree , satisfying the following:

① The number of nodes that store meets the requirement . The represents the maximum row that is used to store ;

② For any node for , its left node must meet the criteria ;

③ For any node for , its right node must meet the following:

(i) If the last is currently stored in , the node on the right does not exist;

(ii) If the current node is not the last , the right node continues to store, satisfying the condition .

④ If the row contains the child node that stores , then all nodes in the previous rows of the tree satisfy the condition .

(3) Any node satisfies the following:

① The stored of corresponds to , the stored of child nodes and correspond to and , and there is ;

② The stored of corresponds to , the stored of left node corresponds to , the stored of right node corresponds to , and there is .

Step 5: Continue storing according to the algorithm from Step 1 to Step 4 until the searching stops at the end of traversal . The algorithm ends, producing output .
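
One way to read the storage rules above is that the finished tree holds the topics in level order, with the best-matching topic in the parent node and every earlier row ranking ahead of every later row. Under that reading, which is an assumption about the lost comparison conditions and not the procedure in Appendix A.3, the tree can be sketched as a sorted array in which the children of index `i` sit at `2i + 1` and `2i + 2`. The sketch also assumes that a smaller matching value is a better match and that ties are broken in favor of the larger weight.

```python
from typing import List, NamedTuple, Optional, Tuple


class TopicNode(NamedTuple):
    topic_id: int
    weight: float    # head-word: discussion topic weight
    matching: float  # suffix-word: discussion topic matching value


def build_encoding_tree(nodes: List[TopicNode]) -> List[TopicNode]:
    """Return the nodes in level order, best match first (assumed ordering)."""
    return sorted(nodes, key=lambda n: (n.matching, -n.weight))


def children(
    tree: List[TopicNode], index: int
) -> Tuple[Optional[TopicNode], Optional[TopicNode]]:
    """Left and right child of the node at `index`, or None where absent."""
    left, right = 2 * index + 1, 2 * index + 2
    return (
        tree[left] if left < len(tree) else None,
        tree[right] if right < len(tree) else None,
    )


# Hypothetical topics for one student.
topics = [
    TopicNode(1, 1.2, 0.40),
    TopicNode(2, 0.9, 0.10),
    TopicNode(3, 1.5, 0.40),
    TopicNode(4, 1.1, 0.25),
]
tree = build_encoding_tree(topics)
top_two = [node.topic_id for node in tree[:2]]
```

With this layout, selecting the best topics for a student reduces to taking a prefix of the array, which is what makes the value k correspond directly to the top nodes of the tree.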

3.2.2. Recommendation Model Based on the Improved k-NN Data Mining Algorithm

For any student group , the construction of the k-NN data mining algorithm is based on the optimal complete binary encoding tree of the discussion topic. Firstly, we establish the encoding trees for all the individual students in the group and search for the first number of optimal matching topics based on the encoding tree. Secondly, based on the determined number of optimal matching topics for number of students , the group is used as a team discussion group to find out the number of common optimal nearest neighbor topics for number of students and to recommend them as the optimal topics for the group . Finally, the model outputs the recommended topics for each group generated for the class, and then the teacher and the students jointly determine the practical discussion topic for the practical course [42]. Based on the modeling principle, we construct the related Definitions for the algorithm.

Definition 15.

The optimal topic vector for the student . Regarding the constructed student optimal encoding tree , the first number of nodes, starting from the parent node, are selected as the topics that best match the student . When the value reaches until the node , it satisfies the requirement . We construct a dimensional vector to store the topics corresponding to the selected number of nodes from the tree . We define this vector as the optimal topic vector for the student , denoted as . The in the vector represents the no. student, and the in the vector represents the no. topic.

Definition 16.

The student optimal topic matrix for the student . Each student corresponds to the number of the most matched topics , and if the group contains number of students , a dimensional matrix is constructed to store all the topics corresponding to number of students . The matrix satisfies the following conditions:

(1) The row rank is , and the column rank is ;

(2) The row corresponds to one student , and the column element represents the topic ;

(3) The rows and are not linearly related, and the columns and are not linearly related;

(4) Any element is a non-zero element;

(5) A matrix corresponds to a group .

Definition 17.

The discussion topic interest intensity . For any group and the related matrix , we construct an algorithm to iterate the frequency of each topic appearing in the matrix , and the frequency of is defined as the discussion topic interest intensity, denoted as . Normalize the intensity to obtain the interest intensity weight with a value range of .

Definition 18.

The discussion topic recommendation vector . Extract the number of topics with the highest intensity from the matrix and store them in a dimensional vector in order of decreasing intensity. We define this vector as the discussion topic recommendation vector, denoted as . The represents the no. group , and the represents the no. topic.

Based on the basic idea and the related Definitions of the algorithm model, we construct a recommendation model based on the improved k-NN data mining algorithm to recommend the optimal discussion topics for each student group . The constructed algorithm is as follows (Appendix A.4):

Step 1: Determine the group and the student , and quantify the student interest vector and the topic vector . Introduce the optimal complete binary encoding tree algorithm for the discussion topics and output the individual encoding trees for the students .

Step 1.1: Generate the encoding tree for the student , and mark the first number of nodes including the parent node , in which is the maximum row of the current tree with number of nodes, while is the maximum element number in the no. row in the tree.

Step 1.2: Using the same algorithm, generate the encoding trees , , …, for the students , , …, , and mark the first number of nodes in each tree separately.

Step 2: Output the optimal topic vector of the student . Based on each encoding tree of the student and labeled number of nodes, extract the corresponding topics for number of nodes and construct the vector .

Step 2.1: Take the number of optimal topics from the encoding tree of the student and store them in dimensional vector .

Step 2.2: Take the number of optimal topics of the corresponding encoding trees , , …, of the students , , …, , and store them in dimensional vector , in which .

Step 3: Initialize the matrix and initialize the counter . Generate the full rank matrix :

Step 3.1: Take the element and store ~ in the first row of the matrix in sequence, so that the first row is full rank. Note .

Step 3.2: Take the element and store ~ in the second row of the matrix in sequence, so that the second row is full rank. Note .

Step 3.3: In line with the same storage rules, take the element , and store the ~ in the no. row of the matrix in sequence, so that the no. row is full rank, in which traverses . Note until the traversal is completed when ; then, the storing process ends.

Step 4: Build a baseline vector containing number of the discussion topics . The dimension of the vector is , and the vector element corresponds to the stored . Introduce vector to scan the matrix .

Step 4.1: The row code of the matrix is , the column code is , and the matrix elements are . According to the matrix Definition , there are , , and .

Step 4.2: Initialize the counter . Take the first element of the vector and make the following judgment:

(1) For matrix , take and traverse :

(i) If , then ; if , then .

(ii) If , then ; if , then .

(iii) Using the same iterative method, if , then ; if , then . Until the traversal is complete, when , output and note .

(2) For matrix , take , and traverse . Iterate over the elements in the second row of the matrix, and output , denoted as .

(3) Follow steps (1)–(2) to traverse all rows of the matrix and output separately.

(4) Iterate to calculate , and denote as the interest intensity of the element .

Step 4.3: Initialize the counter . Take the second element of the vector , iterate in line with the same algorithm as in Step 4.2, and output as the interest intensity of the element .

Step 4.4: Using the same algorithm, output the interest intensity of the element . Traverse , complete the iteration, and output before the searching ends. Output the quantified vector .

Step 4.5: Calculate the normalized interest intensity weight . Formula (10) is the constructed interest intensity weight model .

Step 5: Build a complete binary tree based on the vector by the following algorithm:

Step 5.1: Take and store in the parent node of the tree. Take and make a judgment:

(1) If , store in the left child node of the second layer.

(2) If , delete , and store to the parent node and store to the left child node of the second layer.

Step 5.2: Take and make a judgment:

(1) If .

① If , keep and , and store to the right child node ;

② If , store to , and store to ;

③ If , delete and , and then store to and store and to and .

(2) If .

① If , keep and , and store to the right child node .

② If , store to , and store to .

③ If , delete and , and store to and and to and .

Step 5.3: Store to the first number of nodes in the binary tree in line with steps 5.1~5.2, meeting the following conditions:

(1) Any node can have a maximum of two child nodes and a minimum of zero child nodes.

(2) Any row of the tree contains number of child nodes, satisfying:

① The number of nodes storing meets the requirement , where the represents the maximum row that can be stored currently.

② For any node storing , its left node must satisfy .

③ For any node storing , the right node satisfies:

(i) If the current node stores the last , the right node does not exist.

(ii) If the current node does not store the last , the right node continues to store, satisfying the condition .

④ If the row contains the child node storing , then all nodes in the previous rows of the tree satisfy the condition .

(3) Any node satisfies:

① The in relates to . Its child nodes and of correspond to and . There must be .

② The stored in corresponds to . The stored in the left node corresponds to , while the stored in the right node corresponds to . There must be .

Step 6: Select the corresponding and of the number of nodes in the tree , and the topics of the number of nodes are the optimal discussion topics recommended to all the students in the group . Build the same recommendation model and binary trees for all number of groups in line with the same algorithm, and output the optimal discussion topics recommended to all number of groups . The algorithm ends.
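
The group-level part of the algorithm (Steps 3 to 6) can be sketched as a frequency count over the students' optimal topic vectors. This is an illustrative simplification: it replaces the second binary tree with a plain sort, and it normalizes the interest intensity by the number of students in the group, which is an assumption because Formula (10) is not reproduced here. The function name and the sample matrix are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def recommend_for_group(
    optimal_topics: Sequence[Sequence[int]], k: int
) -> Tuple[List[int], Dict[int, float]]:
    """Rank topics by how many students list them among their best matches.

    optimal_topics holds one row per student, each row being that student's
    best-matching topic ids without repeats.
    """
    n_students = len(optimal_topics)
    # Interest intensity: number of rows (students) in which a topic appears.
    intensity = Counter(topic for row in optimal_topics for topic in row)
    # Assumed normalization: share of students who list the topic, in [0, 1].
    weights = {topic: count / n_students for topic, count in intensity.items()}
    # Decreasing intensity; ties broken by topic id to keep the order stable.
    ranked = sorted(intensity, key=lambda topic: (-intensity[topic], topic))
    return ranked[:k], weights


# Hypothetical group of three students with three optimal topics each.
group_matrix = [
    [1, 2, 3],
    [2, 3, 4],
    [3, 2, 5],
]
recommended, weights = recommend_for_group(group_matrix, k=2)
```

Here topics 2 and 3 are recommended because every student in the group lists them, while topics 1, 4, and 5 each appear for only one student.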

3.2.3. Improvement of the Constructed k-NN Recommendation Algorithm

The basic modeling process of the traditional k-NN algorithm includes three steps: the first step is calculating the distance between the object to be classified and the other objects; the second step is selecting the objects closest to the object to be classified; the third step is determining the classification, in which the object to be classified is assigned to the class to which the majority of its closest objects belong. Compared with the traditional k-NN algorithm, the constructed k-NN algorithm has significant improvements, mainly reflected in the following aspects:

Firstly, the algorithm’s aim is not to achieve the general classification of objects but to calculate and output the common features of the group of objects based on the classification ideas. Using the grouping as the basic unit, it establishes a matching relationship between each student in the group and the overall discussion topics, and then obtains the common interest topic of the student group through cross statistics. This model is based on the necessary conditions for the student grouping in the discussion courses. The group’s interest matching is thus derived from the matching degrees of the individual students, which extends the logic of the k-NN algorithm from classifying single objects to matching a whole group.

Secondly, the matching degree between the students and the discussion topics is obtained by calculating the Minkowski distance between the student interest feature labels and the topic feature labels, rather than the spatial distance of the traditional k-NN algorithm. The dimensionality of the feature attribute is higher, containing more complex feature labels. Therefore, the objective function constructed by the Minkowski distance has a higher dimensionality.
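The distance calculation can be illustrated with a short sketch. The numeric encoding of the feature labels, the order `p`, and the topic vectors below are hypothetical; only the Minkowski distance formula itself is standard.

```python
def minkowski_distance(x, y, p=2):
    """Minkowski distance of order p between two equal-length vectors."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1.0 / p)

# Hypothetical encoding of a student's interest labels and two topics' labels.
student = [3, 1, 2, 3, 1]
topics = {
    "topic_a": [3, 1, 1, 3, 2],
    "topic_b": [1, 3, 3, 1, 2],
}

# A smaller distance means a closer match between student and topic.
ranked = sorted(topics, key=lambda t: minkowski_distance(student, topics[t]))
```

Setting `p=1` gives the Manhattan distance and `p=2` the Euclidean distance, so the same function covers both common special cases.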

Thirdly, to help teachers and students follow the recommendation process under this modified logic, we introduce the complete binary encoding tree algorithm into the k-NN algorithm. The goal is to output and visualize the ranking of how strongly each student in the group matches the discussion topics, so that the chosen value of k corresponds directly to the top k nodes. This is another innovation and improvement of the k-NN algorithm.

Fourthly, the complete binary encoding tree algorithm has significant improvements compared to the traditional tree structure, and its data structure is significantly different. Each node contains two storage units, one storing the weight of the discussion topic and the other storing the matching value of the discussion topic. The former describes the degree to which the discussion topic covers the practical teaching requirements, while the latter describes the degree to which the student interests match the discussion topic. The teachers and students use the former unit to understand the strength of the discussion topic in line with the teaching objectives, while the latter unit is used to construct the k-NN algorithm. Therefore, the constructed complete binary encoding tree has significant improvements in both structure and functionality.
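The node structure with two storage units can be sketched as follows. This is an illustration under stated assumptions: the class name `TopicNode`, the array-backed layout, the rule of placing nodes in level order from best to worst match, and the treatment of the matching value as a distance (smaller is better) are all choices made for the example and are not taken from the paper's tree construction steps.

```python
from dataclasses import dataclass

@dataclass
class TopicNode:
    topic: str
    weight: float       # degree to which the topic covers the teaching requirements
    match_value: float  # degree to which the student's interests match the topic

def build_level_order_tree(nodes):
    """Array-backed complete binary tree; index i has children 2i+1 and 2i+2.

    Assumption: nodes are stored in level order from best to worst match,
    where a smaller match_value (a distance) is the better match.
    """
    return sorted(nodes, key=lambda n: n.match_value)

def children(tree, i):
    """Return the existing child nodes of the node at index i."""
    return [tree[j] for j in (2 * i + 1, 2 * i + 2) if j < len(tree)]

def top_k_topics(tree, k):
    """The first k nodes in level order are the k best-matching topics."""
    return [n.topic for n in tree[:k]]

# Hypothetical topics with (weight, match_value) pairs.
nodes = [
    TopicNode("topic_a", 0.9, 1.4),
    TopicNode("topic_b", 0.6, 0.3),
    TopicNode("topic_c", 0.8, 2.1),
    TopicNode("topic_d", 0.7, 0.8),
]
tree = build_level_order_tree(nodes)
best_two = top_k_topics(tree, 2)
```

Keeping both values in each node lets the weight be shown to teachers and students while only the matching value drives the selection of the top k nodes.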

4. Experiment and Analysis

For the constructed model, we use a professional course as the research basis. The “Rural Tourism” practical course topic of the Smart Tourism Course is set as the experimental object. From the previous teaching classes, we randomly select 20 students who participated in the “Rural Tourism” practical course and showed high enthusiasm for and satisfaction with it, and use them as the training set for constructing the naive Bayes model. By constructing the naive Bayes machine learning model, the current teaching class is divided into several student groups, and then a number of discussion topics are designed for each group. By using the proposed k-NN data mining algorithm to output the optimal complete binary encoding trees for the student groups, we determine the quantified coordinates of the discussion topics, output the best-matched discussion topics for the students, and ultimately output the optimal discussion topic for each group. Finally, we design a comparative experiment to verify the advantages of our proposed recommendation algorithm over the traditional recommendation algorithms.

4.1. Data Preparation

We determine the classification labels of the naive Bayes machine learning training set model as T1: “Rural Preservation”, T2: “Rural Cuisine”, and T3: “Rural Farming”.

According to the application purpose and the formulated requirements for building the model in Section 2.2.2 of the Introduction, the application scope of the constructed naive Bayes machine learning model is restricted to small classes with 10–20 students. Therefore, the experiment needs to select small-scale sample data to build and verify the model. The data influence the effectiveness of the experiment in the following aspects:

(1) The raw training data for building the naive Bayes machine learning algorithm come from small classes of 10–20 students. We collect the interest data and grouping data of students in small classes, making the raw data highly targeted and capable of constructing an accurate machine learning model for small class grouping.

(2) The naive Bayes machine learning algorithm performs well on small-scale datasets. Compared with modeling on large-scale datasets, it has lower computational complexity and higher accuracy in the output results, which ensures the accuracy of the class grouping.

(3) In practical teaching applications, the naive Bayes machine learning algorithm needs to control the number of students in a class. When the number of students in a class is too large (such as 50 or more), the class needs to be split or the class hours need to be increased to complete the teaching tasks in batches and ensure the teaching quality.

Based on the above conditions, we select 20 students who have participated in the Rural Tourism practical course from the previous classes. We collect interest labels and vectors, determine each student’s group , and construct the training set model as shown in Table 2. In Table 2, represents the selected representative student sample, I-1 represents “the level of preference for cooking”, I-2 represents “the level of preference for reading”, I-3 represents “the level of preference for sports”, I-4 represents “the level of preference for planting”, and I-5 represents “the level of preference for music”. “MFL” represents “most favorite level”, “FL” represents “favorite level”, and “LL” represents “like level”. In the classification, “T1” represents “Rural Preservation”, “T2” represents “Rural Cuisine”, and “T3” represents “Rural Farming”.

Table 2.

Naive Bayes learning training set model constructed by the experiment.

We collect data from the students in the experimental class (Class E1). In the small-class teaching course, we select 15 students , and each student’s selection and evaluation of items I-1~I-5 are based on their own interests. We distribute collection forms with the designed questions to the 15 students, and make them select their preference levels for items I-1~I-5 to determine the feature vectors for each student. We input the student feature vectors into the constructed naive Bayes machine learning algorithm to output the student classification . Table 3 shows the collected feature vectors of the experimental class’s students to be classified. In the table, each row represents the feature vector of a student , in which represents the encoding of the student to be classified. I-1 represents “the level of preference for cooking”, I-2 represents “the level of preference for reading”, I-3 represents “the level of preference for sports”, I-4 represents “the level of preference for planting”, and I-5 represents “the level of preference for music”. In each item, “MFL” represents “most favorite level”, “FL” represents “favorite level”, and “LL” represents “like level”.

Table 3.

The collected feature vectors of students to be classified in the experimental class.
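The classification step described above, in which each student's preference levels for I-1 to I-5 are fed to the naive Bayes model, can be sketched as follows. This is a minimal illustration: the training rows are hypothetical and are not the data of Table 2 or Table 3, and the Laplace smoothing parameter `alpha` is an assumption that the text does not state.

```python
from collections import Counter, defaultdict

LEVELS = ("MFL", "FL", "LL")  # the three preference levels used in the tables

def train_naive_bayes(rows, labels):
    """Count class frequencies and per-class, per-feature level frequencies."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(lambda: defaultdict(Counter))
    for row, label in zip(rows, labels):
        for j, level in enumerate(row):
            feature_counts[label][j][level] += 1
    return class_counts, feature_counts

def posterior(row, class_counts, feature_counts, alpha=1.0):
    """Normalized posterior probability of each class for one feature vector."""
    total = sum(class_counts.values())
    scores = {}
    for label, n_c in class_counts.items():
        p = n_c / total  # prior probability of the class
        for j, level in enumerate(row):
            # Conditional probability with Laplace smoothing (assumed here).
            p *= (feature_counts[label][j][level] + alpha) / (n_c + alpha * len(LEVELS))
        scores[label] = p
    norm = sum(scores.values())
    return {label: s / norm for label, s in scores.items()}

# Hypothetical training rows: levels for I-1 .. I-5 and the assigned group.
rows = [
    ("LL", "MFL", "FL", "LL", "FL"), ("LL", "MFL", "LL", "FL", "MFL"),
    ("MFL", "LL", "LL", "FL", "LL"), ("MFL", "FL", "LL", "LL", "FL"),
    ("FL", "LL", "MFL", "MFL", "LL"), ("LL", "LL", "FL", "MFL", "FL"),
]
labels = ["T1", "T1", "T2", "T2", "T3", "T3"]

model = train_naive_bayes(rows, labels)
post = posterior(("MFL", "LL", "LL", "FL", "FL"), *model)
group = max(post, key=post.get)  # class with the maximum posterior probability
```

The student is assigned to the class with the largest posterior probability, which mirrors how the grouping is read from the posterior probability values.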

According to the teaching content of the “Rural Tourism” practical course, we design the discussion topics for each classification , take , that is, for each group of the experimental class: : “Rural Preservation”, : “Rural Cuisine”, and : “Rural Farming”. The 10 matched discussion topics are designed for as the raw data for constructing the topic recommendation algorithm. According to the topic recommendation algorithm, we design the quantitative labels for each group and construct the feature attribute vectors for the discussion topic based on the labels, and then output the quantitative vectors . The quantization vector represents the measurement value of the topic . Based on the results of student grouping, within each group, we determine the quantitative values of the topic feature labels for the classification on the students . For the Definition, there is , . Table 4 shows the designed feature labels for each classification . Based on Table 4, for each classification (: “Rural Preservation”, : “Rural Cuisine”, and : “Rural Farming”), we choose the tourism city Leshan in Sichuan Province, China, as the research scope to select the relevant scenic spots, then we design the discussion topic , shown in Table 5, , .

Table 4.

The designed feature labels for each classification.

Table 5.

The designed discussion topic for each classification .

4.2. Results and Analysis on the Naive Bayes Grouping

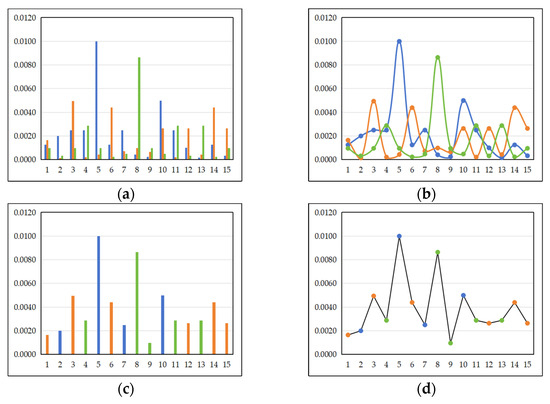

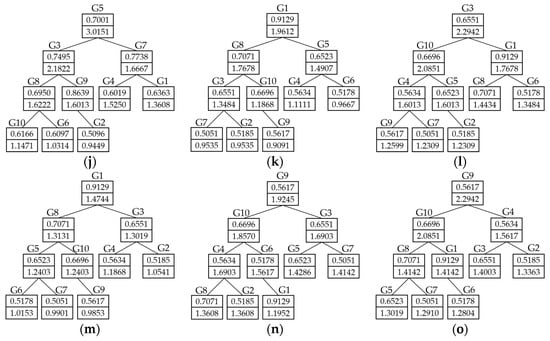

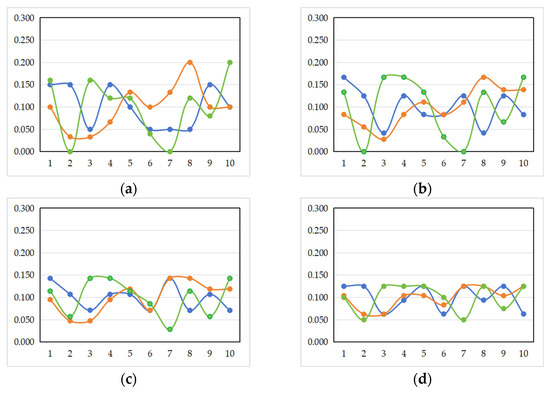

Based on the proposed naive Bayes machine learning algorithm, we input the related data on the collected feature vectors of the students to be classified in the experimental class and obtain the posterior probability values of each student in the class belonging to different classifications . The results are shown in Table 6. Based on the results in Table 6, we output the posterior probability bar chart and curve trend chart shown in Figure 4. The abscissa in Figure 4 represents the student number, and the ordinate represents the posterior probability value.

Table 6.

The posterior probability values of students belonging to different classifications output by naive Bayes machine learning algorithm.

Figure 4.

The bar chart and curve trend chart of posterior probability. The blue color represents the T(1); the orange color represents the T(2); the green color represents the T(3).

Figure 4a shows the naive Bayes posterior probability bar chart of each student for the three classifications, and Figure 4b shows the corresponding posterior probability trend curves. Figure 4c shows the bar chart of each student's maximum naive Bayes posterior probability, and Figure 4d shows the trend curve of the maximum naive Bayes posterior probability for all students. In all four panels, blue represents the classification T(1), orange represents the classification T(2), and green represents the classification T(3).

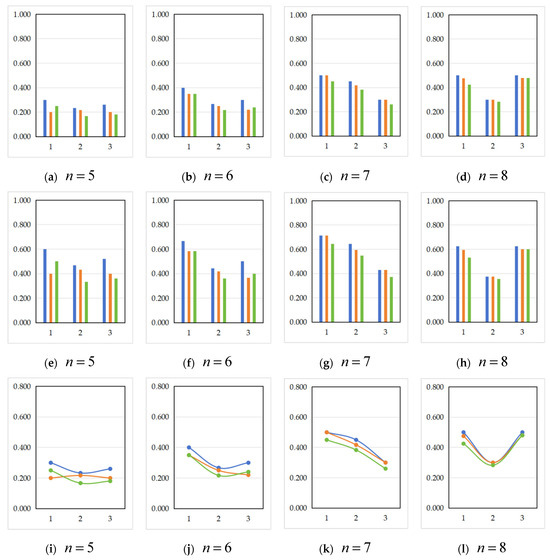

On the other hand, in teaching practice, we organize three experimental classes, E1, E2, and E3, each with 15 students and different class members. The teacher uses the naive Bayes machine learning algorithm to group class E1 and obtain the student grouping results, while the teacher subjective evaluation method is used to group classes E2 and E3. After a themed discussion course on “Rural Tourism”, the students evaluate the course on four indicators: “grouping satisfaction”, “interest matching satisfaction”, “team collaboration satisfaction”, and “discussion process satisfaction”. Each indicator is rated at one of three levels: “very satisfied”, “satisfied”, or “dissatisfied”. Based on the students’ evaluations, we calculate the percentage of each rating for each indicator in each class and compare them to obtain the results in Table 7.

Table 7.

Satisfaction evaluation results of experimental classes E1, E2, and E3 on the grouping results and discussion courses.

To test the accuracy of the methods, we use the measurement method of the accuracy indicator in the machine learning algorithm to measure and compare the accuracy of the naive Bayes machine learning algorithm and the teacher subjective evaluation method. The accuracy indicator is shown in Formula (11). In the formula, is the classification label that should be accurate, is the classification label that is predicted to be accurate, represents the total number of accurate labels, and represents the total number of label samples. In the experiment, we set the students who choose “very satisfied” as the accurately predicted classification labels, and the total students in the class as the classification labels that should be accurate. The experiment outputs the calculation results of accuracy, shown in Table 8.

Table 8.

Accuracy evaluation results of experimental classes E1, E2, and E3 on the grouping results and discussion courses.
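The accuracy measure described above, in which students who choose “very satisfied” count as accurately predicted labels and all students in the class count as the total label samples, can be sketched as follows. The function name `satisfaction_accuracy` and the ratings are hypothetical and are not the values reported in Table 7 or Table 8.

```python
def satisfaction_accuracy(ratings):
    """Share of students whose rating is "very satisfied"."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    # "Very satisfied" ratings play the role of accurately predicted labels.
    correct = sum(1 for r in ratings if r == "very satisfied")
    # All students in the class form the total number of label samples.
    return correct / len(ratings)

# Hypothetical ratings for one indicator in a class of 15 students.
ratings = ["very satisfied"] * 12 + ["satisfied"] * 2 + ["dissatisfied"]
acc = satisfaction_accuracy(ratings)
```

Computing this value per indicator and per class gives figures that can be compared directly between the algorithmic grouping and the teacher's subjective grouping.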

Based on the analysis of the data in Table 6, Table 7 and Table 8 and the results in Figure 4, we can draw the following conclusions:

(1) The grouping result of students in class E1 by the naive Bayes machine learning algorithm is:

: “Rural Preservation”: {, , , }.

: “Rural Cuisine”: {, , , , , }.

: “Rural Farming”: {, , , , }.

The grouping result of students in class E2 by the teacher’s subjective evaluation method is:

: “Rural Preservation”: {, , , , , }.

: “Rural Cuisine”: {, , , , }.

: “Rural Farming”: {, , , }.

The grouping result of students in class E3 by the teacher’s subjective evaluation method is:

: “Rural Preservation”: {, , , , , }.

: “Rural Cuisine”: {, , , , }.

: “Rural Farming”: {, , , }.

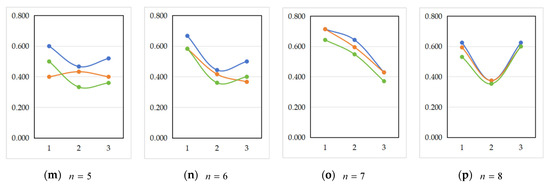

(2) The proposed naive Bayes machine learning model can effectively identify the independent feature labels representing the students’ preference levels and calculate the posterior probabilities of the students in the three classifications. The probability values are clearly mutually exclusive; that is, no student has equal posterior probabilities for two classifications. This shows that the proposed naive Bayes machine learning model computes reliably and can accurately calculate the posterior probability of the student samples in each classification.