A Graph Laplacian Regularizer from Deep Features for Depth Map Super-Resolution

Abstract

1. Introduction

2. Related Work

2.1. Depth Map Super-Resolution

2.2. Graph-Based Representations

3. Depth Map Super-Resolution Using the ADMM Algorithm and Graph-Based Regularization

3.1. Depth Map Super-Resolution as an Inverse Problem

Algorithm 1. ADMM algorithm.
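As a generic illustration of how ADMM handles the inverse problem of Section 3.1, the Python sketch below splits the objective 0.5‖Sx − y‖² + g(x) into a data-fidelity step and a proximal step for the regularizer g. It is not the authors' Algorithm 1. The block-averaging operator S (each LR pixel is the mean of an s × s HR block), the penalty ρ, the nearest-neighbour initialization, and the helper names `downsample`, `downsample_adjoint`, and `admm_sr` are all assumptions made for illustration.

```python
import numpy as np


def downsample(x, s):
    """Assumed forward operator S: average each non-overlapping s x s block."""
    h, w = x.shape
    return x.reshape(h // s, s, w // s, s).mean(axis=(1, 3))


def downsample_adjoint(y, s):
    """Adjoint S^T: copy each LR value over its s x s block, scaled by 1/s^2."""
    return np.kron(y, np.ones((s, s))) / (s * s)


def admm_sr(y, s, prox_g, rho=1.0, n_iter=100):
    """Scaled-form ADMM for  min_x 0.5*||S x - y||^2 + g(z)  subject to  x = z.

    prox_g(v, rho) must return argmin_z g(z) + (rho / 2) * ||z - v||^2.
    """
    z = np.kron(y, np.ones((s, s)))  # nearest-neighbour upsampling as initial guess
    x = z.copy()
    u = np.zeros_like(z)
    sty = downsample_adjoint(y, s)
    c = 1.0 / (s * s)  # block averaging satisfies S S^T = c I
    for _ in range(n_iter):
        # x-update: solve (S^T S + rho I) x = S^T y + rho (z - u) exactly,
        # via the Woodbury identity and S S^T = c I.
        b = sty + rho * (z - u)
        x = (b - downsample_adjoint(downsample(b, s), s) / (rho + c)) / rho
        # z-update: proximal step of the regularizer g.
        z = prox_g(x + u, rho)
        # Scaled dual update.
        u = u + x - z
    return x
```

Because S Sᵀ = I/s² for block averaging, the x-update has a closed form; a general blur-plus-decimation operator would instead need an iterative solver such as conjugate gradients. With the Tikhonov prox `lambda v, rho: rho * v / (rho + lam)`, the iterates converge to the solution of (SᵀS + λI)x = Sᵀy. Passing a denoiser in place of `prox_g` gives the plug-and-play pattern behind ADMM baselines such as DnCNN-ADMM.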

3.2. Graph-Based Regularization for Depth Map Super-Resolution
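A minimal sketch of a graph Laplacian regularizer, assuming the common combinatorial Laplacian L = D − W (the paper may normalize it differently). The quadratic form xᵀLx sums w_ij(x_i − x_j)² over the edges, so a depth discontinuity is cheap wherever the edge weight is small, and the regularizer's proximal operator reduces to a linear solve that could serve as the z-update of an ADMM loop. The toy path graph and the names `laplacian`, `glr`, and `glr_prox` are hypothetical.

```python
import numpy as np


def laplacian(W):
    """Combinatorial graph Laplacian L = D - W of a symmetric weight matrix W."""
    return np.diag(W.sum(axis=1)) - W


def glr(x, L):
    """Graph Laplacian regularizer x^T L x = sum over edges of w_ij (x_i - x_j)^2."""
    return float(x @ L @ x)


def glr_prox(v, L, lam, rho):
    """argmin_z lam * z^T L z + (rho / 2) * ||z - v||^2.

    Setting the gradient to zero gives the linear system (2 lam L + rho I) z = rho v.
    """
    n = v.shape[0]
    return np.linalg.solve(2.0 * lam * L + rho * np.eye(n), rho * v)


# Path graph 0-1-2-3 whose middle edge is weak (e.g. it crosses a depth edge).
W_edge = np.zeros((4, 4))
for i, j, w in [(0, 1, 1.0), (1, 2, 0.01), (2, 3, 1.0)]:
    W_edge[i, j] = W_edge[j, i] = w
W_flat = (W_edge > 0).astype(float)  # same graph with every weight set to 1

x = np.array([1.0, 1.0, 0.0, 0.0])  # piecewise-constant signal with one jump
print(glr(x, laplacian(W_edge)))  # ~0.01: the jump sits on the weak edge
print(glr(x, laplacian(W_flat)))  # 1.0: uniform weights penalise the jump fully

z_edge = glr_prox(x, laplacian(W_edge), lam=1.0, rho=1.0)
z_flat = glr_prox(x, laplacian(W_flat), lam=1.0, rho=1.0)
print(z_edge[1] - z_edge[2])  # ~0.98: the jump survives smoothing
print(z_flat[1] - z_flat[2])  # ~0.29: the jump is blurred away
```

Because L has the constant vector in its null space, the prox preserves the sum (and hence the mean depth) of its input; only the distribution of values across edges changes.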

3.3. Feature-Based Graph Laplacian Matrix
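One common way to build a Laplacian from deep features, sketched below under stated assumptions, is to connect each HR pixel to its four neighbours and set each edge weight with a Gaussian kernel on the distance between the two pixels' feature vectors. The 4-neighbourhood, the kernel, σ, and the function name `feature_laplacian` are assumptions rather than the paper's exact construction; the random array stands in for an fdim-channel feature map (the ablation varies fdim over 64, 128, and 256) produced by a network such as those compared in Section 4.2.

```python
import numpy as np
import scipy.sparse as sp


def feature_laplacian(F, sigma=1.0):
    """Sparse Laplacian of a 4-connected pixel grid whose edge weights come from features.

    F: (height, width, C) array with one C-dimensional feature vector per HR pixel.
    Edge weight between neighbours p and q: exp(-||F[p] - F[q]||^2 / (2 sigma^2)).
    """
    height, width, _ = F.shape
    idx = np.arange(height * width).reshape(height, width)
    rows, cols, vals = [], [], []
    # Horizontal pairs (i, j)-(i, j+1), then vertical pairs (i, j)-(i+1, j).
    pairs = [
        (idx[:, :-1], idx[:, 1:], F[:, :-1], F[:, 1:]),
        (idx[:-1, :], idx[1:, :], F[:-1, :], F[1:, :]),
    ]
    for a, b, fa, fb in pairs:
        d2 = np.sum((fa - fb) ** 2, axis=-1)  # squared feature distance per edge
        rows.append(a.ravel())
        cols.append(b.ravel())
        vals.append(np.exp(-d2 / (2.0 * sigma**2)).ravel())
    r, c, v = (np.concatenate(t) for t in (rows, cols, vals))
    n = height * width
    A = sp.coo_matrix((v, (r, c)), shape=(n, n))
    A = (A + A.T).tocsr()  # store each undirected edge in both directions
    deg = np.asarray(A.sum(axis=1)).ravel()
    return sp.diags(deg) - A


rng = np.random.default_rng(0)
F = rng.random((6, 8, 64))  # stand-in for a 64-channel feature map (fdim = 64)
L = feature_laplacian(F, sigma=2.0)
depth = rng.random(6 * 8)  # flattened HR depth estimate
penalty = depth @ (L @ depth)  # graph Laplacian regularizer x^T L x
```

Storing L in sparse form keeps memory linear in the number of pixels; a dense Laplacian for a 640 × 480 depth map would have about 9.4 × 10¹⁰ entries.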

4. Results and Evaluation

4.1. Experimental Setup

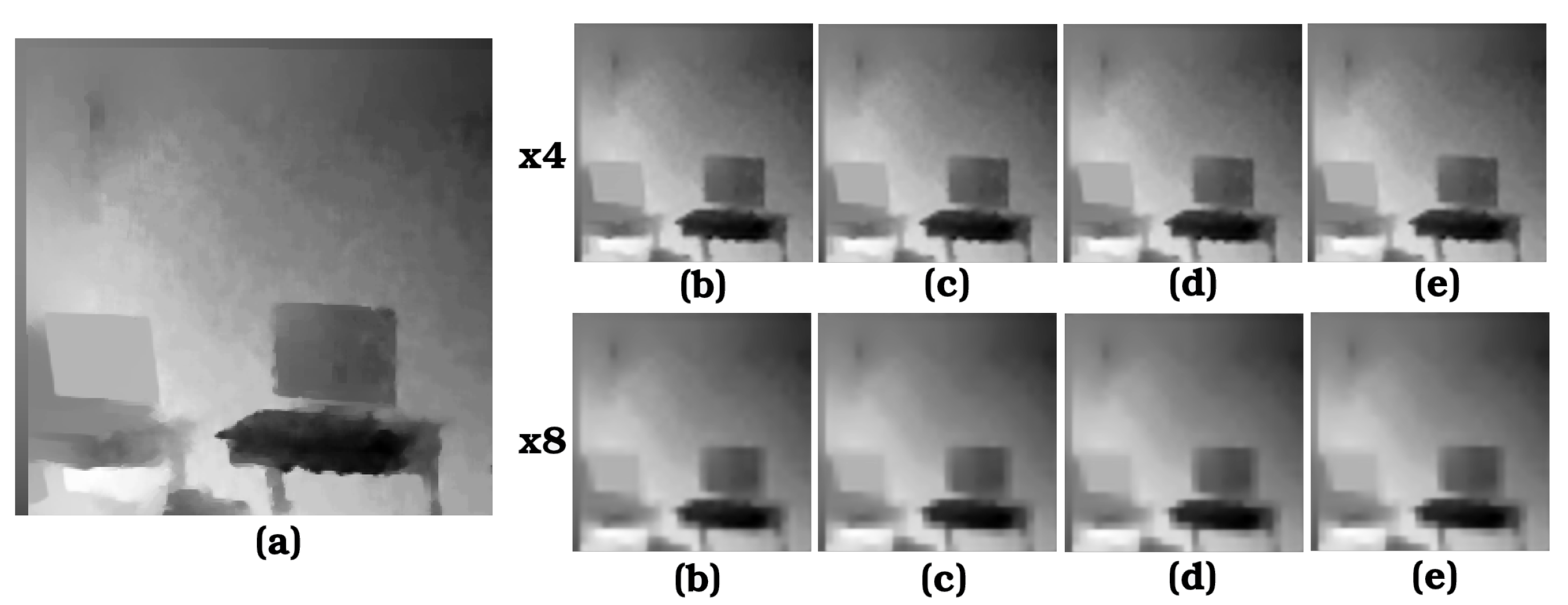

4.2. Performance Comparison Across Selected Models

4.3. Comparison with Other Methods

5. Ablation Study

Experimental Setup and Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADMM | Alternating Direction Method of Multipliers |
| HR | High Resolution |
| LR | Low Resolution |
| TV | Total Variation |
| NLM | Non-Local Means |
| AR | AutoRegressive |
| JBP | Joint Basis Pursuit |
| DL | Deep Learning |
| CNNs | Convolutional Neural Networks |
| SRCNNs | Super-Resolution Convolutional Neural Networks |
| ESRGANs | Enhanced Super-Resolution Generative Adversarial Networks |
| RESNET | Residual Neural Network |
| VGG | Visual Geometry Group |
| SRGATs | Super-Resolution Graph Attention Networks |
| GAT | Graph Attention Network |
| RMSE | Root Mean Squared Error |
| SCICO | Scientific Computational Imaging Code |
| PnP | Plug-and-Play |
| PAN | Pyramid Attention Network |
| DAGF | Deep Attentional Guided Image Filtering |

References

1. Song, Z.; Lu, J.; Yao, Y.; Zhang, J. Self-supervised depth completion from direct visual-LiDAR odometry in autonomous driving. IEEE Trans. Intell. Transp. Syst. 2021, 23, 11654–11665.

2. Li, J.; Gao, W.; Wu, Y. High-quality 3D reconstruction with depth super-resolution and completion. IEEE Access 2019, 7, 19370–19381.

3. Ndjiki-Nya, P.; Koppel, M.; Doshkov, D.; Lakshman, H.; Merkle, P.; Muller, K.; Wiegand, T. Depth image-based rendering with advanced texture synthesis for 3-D video. IEEE Trans. Multimed. 2011, 13, 453–465.

4. Wang, F.; Pan, J.; Xu, S.; Tang, J. Learning discriminative cross-modality features for RGB-D saliency detection. IEEE Trans. Image Process. 2022, 31, 1285–1297.

5. Shankar, K.; Tjersland, M.; Ma, J.; Stone, K.; Bajracharya, M. A learned stereo depth system for robotic manipulation in homes. IEEE Robot. Autom. Lett. 2022, 7, 2305–2312.

6. Lange, R.; Seitz, P. Solid-state time-of-flight range camera. IEEE J. Quantum Electron. 2001, 37, 390–397.

7. Herrera, D.; Kannala, J.; Heikkilä, J. Joint depth and color camera calibration with distortion correction. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2058–2064.

8. Xie, J.; Feris, R.S.; Sun, M.T. Edge-guided single depth image super resolution. IEEE Trans. Image Process. 2015, 25, 428–438.

9. Xu, W.; Zhu, Q.; Qi, N. Depth map super-resolution via joint local gradient and nonlocal structural regularizations. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 8297–8311.

10. Zhong, Z.; Liu, X.; Jiang, J.; Zhao, D.; Ji, X. Guided depth map super-resolution: A survey. ACM Comput. Surv. 2023, 55, 1–36.

11. Zhang, Y.; Feng, Y.; Liu, X.; Zhai, D.; Ji, X.; Wang, H.; Dai, Q. Color-guided depth image recovery with adaptive data fidelity and transferred graph Laplacian regularization. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 320–333.

12. Yan, C.; Li, Z.; Zhang, Y.; Liu, Y.; Ji, X.; Zhang, Y. Depth image denoising using nuclear norm and learning graph model. ACM Trans. Multimed. Comput. Commun. Appl. TOMM 2020, 16, 1–17.

13. Wang, J.; Sun, L.; Xiong, R.; Shi, Y.; Zhu, Q.; Yin, B. Depth map super-resolution based on dual normal-depth regularization and graph Laplacian prior. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 3304–3318.

14. Xu, B.; Yin, H. Graph convolutional networks in feature space for image deblurring and super-resolution. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Virtual, 18–22 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–8.

15. De Lutio, R.; Becker, A.; D’Aronco, S.; Russo, S.; Wegner, J.D.; Schindler, K. Learning graph regularisation for guided super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1979–1988.

16. Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the Sixth International Conference on Computer Vision (IEEE Cat. No. 98CH36271), Bombay, India, 4–7 January 1998; IEEE: Piscataway, NJ, USA, 1998; pp. 839–846.

17. Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 2, pp. 60–65.

18. He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1397–1409.

19. Wang, J.; Xu, W.; Cai, J.F.; Zhu, Q.; Shi, Y.; Yin, B. Multi-direction dictionary learning based depth map super-resolution with autoregressive modeling. IEEE Trans. Multimed. 2019, 22, 1470–1484.

20. Tosic, I.; Drewes, S. Learning joint intensity-depth sparse representations. IEEE Trans. Image Process. 2014, 23, 2122–2132.

21. Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Computer Vision—ECCV 2014, Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part IV; Springer: Berlin/Heidelberg, Germany, 2014; pp. 184–199.

22. Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Change Loy, C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

23. Marivani, I.; Tsiligianni, E.; Cornelis, B.; Deligiannis, N. Multimodal deep unfolding for guided image super-resolution. IEEE Trans. Image Process. 2020, 29, 8443–8456.

24. Tsiligianni, E.; Zerva, M.; Marivani, I.; Deligiannis, N.; Kondi, L. Interpretable deep learning for multimodal super-resolution of medical images. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 421–429.

25. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 29–30 June 2016; pp. 770–778.

26. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

27. Hui, T.W.; Loy, C.C.; Tang, X. Depth map super-resolution by deep multi-scale guidance. In Computer Vision—ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part III; Springer: Berlin/Heidelberg, Germany, 2016; pp. 353–369.

28. Zhong, Z.; Liu, X.; Jiang, J.; Zhao, D.; Chen, Z.; Ji, X. High-resolution depth maps imaging via attention-based hierarchical multi-modal fusion. IEEE Trans. Image Process. 2021, 31, 648–663.

29. He, L.; Zhu, H.; Li, F.; Bai, H.; Cong, R.; Zhang, C.; Lin, C.; Liu, M.; Zhao, Y. Towards fast and accurate real-world depth super-resolution: Benchmark dataset and baseline. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9229–9238.

30. Sun, B.; Ye, X.; Li, B.; Li, H.; Wang, Z.; Xu, R. Learning scene structure guidance via cross-task knowledge transfer for single depth super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7792–7801.

31. Yan, Y.; Ren, W.; Hu, X.; Li, K.; Shen, H.; Cao, X. SRGAT: Single image super-resolution with graph attention network. IEEE Trans. Image Process. 2021, 30, 4905–4918.

32. Rossi, M.; Frossard, P. Geometry-consistent light field super-resolution via graph-based regularization. IEEE Trans. Image Process. 2018, 27, 4207–4218.

33. Shuman, D.I.; Narang, S.K.; Frossard, P.; Ortega, A.; Vandergheynst, P. The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains. IEEE Signal Process. Mag. 2013, 30, 83–98.

34. Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D 1992, 60, 259–268.

35. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image restoration by sparse 3D transform-domain collaborative filtering. In Image Processing: Algorithms and Systems VI; SPIE: Bellingham, WA, USA, 2008; Volume 6812, pp. 62–73.

36. Parikh, N.; Boyd, S. Proximal algorithms. Found. Trends Optim. 2014, 1, 127–239.

37. Renaud, M.; Prost, J.; Leclaire, A.; Papadakis, N. Plug-and-play image restoration with stochastic denoising regularization. In Proceedings of the Forty-First International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

38. Zhu, Y.; Zhang, K.; Liang, J.; Cao, J.; Wen, B.; Timofte, R.; Van Gool, L. Denoising diffusion models for plug-and-play image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 1219–1229.

39. Wu, Y.; Zhang, Z.; Wang, G. Unsupervised deep feature transfer for low resolution image classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

40. Zhang, K.; Li, Y.; Zuo, W.; Zhang, L.; Van Gool, L.; Timofte, R. Plug-and-play image restoration with deep denoiser prior. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6360–6376.

41. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

42. Chaurasia, A.; Culurciello, E. LinkNet: Exploiting encoder representations for efficient semantic segmentation. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–4.

43. Li, H.; Xiong, P.; An, J.; Wang, L. Pyramid attention network for semantic segmentation. arXiv 2018, arXiv:1805.10180.

44. Balke, T.; Davis Rivera, F.; Garcia-Cardona, C.; Majee, S.; McCann, M.T.; Pfister, L.; Wohlberg, B.E. Scientific computational imaging code (SCICO). J. Open Source Softw. 2022, 7, 4722.

45. Kamilov, U.S.; Bouman, C.A.; Buzzard, G.T.; Wohlberg, B. Plug-and-play methods for integrating physical and learned models in computational imaging: Theory, algorithms, and applications. IEEE Signal Process. Mag. 2023, 40, 85–97.

46. Zhong, Z.; Liu, X.; Jiang, J.; Zhao, D.; Ji, X. Deep attentional guided image filtering. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 12236–12250.

47. Kim, B.; Ponce, J.; Ham, B. Deformable kernel networks for joint image filtering. Int. J. Comput. Vision 2021, 129, 579–600.

| Dataset | U-Net [26] | DeepLabV3 [41] | LinkNet [42] | PAN [43] |
|---|---|---|---|---|
| NYUv2 | 0.015409 | 0.015611 | 0.015600 | 0.015612 |
| NYUv2 | 0.026526 | 0.027544 | 0.027555 | 0.027546 |
| DIML | 0.015288 | 0.015473 | 0.015462 | 0.015473 |
| DIML | 0.025857 | 0.026851 | 0.026865 | 0.026852 |

Single depth map SR: Proposed, DnCNN-ADMM [45], TV-ADMM [34], BM3D-ADMM [35]. Guided depth map SR: DAGF [46], de Lutio et al. [15].

| Dataset | Proposed | DnCNN-ADMM [45] | TV-ADMM [34] | BM3D-ADMM [35] | DAGF [46] | de Lutio et al. [15] |
|---|---|---|---|---|---|---|
| NYUv2 | 0.0154 | 0.0188 | 0.0232 | 0.0273 | 0.0141 | 0.0203 |
| NYUv2 | 0.0265 | 0.0338 | 0.0413 | 0.0666 | 0.0292 | 0.0277 |
| DIML | 0.0152 | 0.0161 | 0.0212 | 0.0242 | 0.0201 | 0.0127 |
| DIML | 0.0258 | 0.0303 | 0.0393 | 0.0617 | 0.0309 | 0.0187 |

| Dataset | Proposed | DnCNN-ADMM [45] | TV-ADMM [34] | BM3D-ADMM [35] | DAGF [46] | de Lutio et al. [15] |
|---|---|---|---|---|---|---|
| NYUv2 | 8.15 | 1.75 | 1.21 | 8.84 | 0.05 | 0.11 |
| DIML | 8.30 | 1.3 | 1.99 | 9.5 | 0.09 | 0.08 |

| fdim | 64 | 128 | 256 |
|---|---|---|---|
| NYUv2 | 0.01539 | 0.01539 | 0.01539 |
| NYUv2 | 0.02652 | 0.02654 | 0.02656 |
| DIML | 0.01528 | 0.01528 | 0.01527 |
| DIML | 0.02585 | 0.02588 | 0.02590 |

| Side Info | Yes | No |
|---|---|---|
| NYUv2 | 0.01540 | 0.01540 |
| NYUv2 | 0.02665 | 0.02652 |
| DIML | 0.01530 | 0.01528 |
| DIML | 0.02598 | 0.02585 |

| Iterations | 5 | 10 | 15 | 20 |
|---|---|---|---|---|
| NYUv2 | 0.01551 | 0.01540 | 0.01540 | 0.01540 |
| NYUv2 | 0.02781 | 0.02678 | 0.02652 | 0.02654 |
| DIML | 0.01535 | 0.01529 | 0.01528 | 0.01529 |
| DIML | 0.02717 | 0.02615 | 0.02585 | 0.02583 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gartzonikas, G.; Tsiligianni, E.; Deligiannis, N.; Kondi, L.P. A Graph Laplacian Regularizer from Deep Features for Depth Map Super-Resolution. Information 2025, 16, 501. https://doi.org/10.3390/info16060501