Adaptive AR Navigation: Real-Time Mapping for Indoor Environment Using Node Placement and Marker Localization

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Methodology Overview

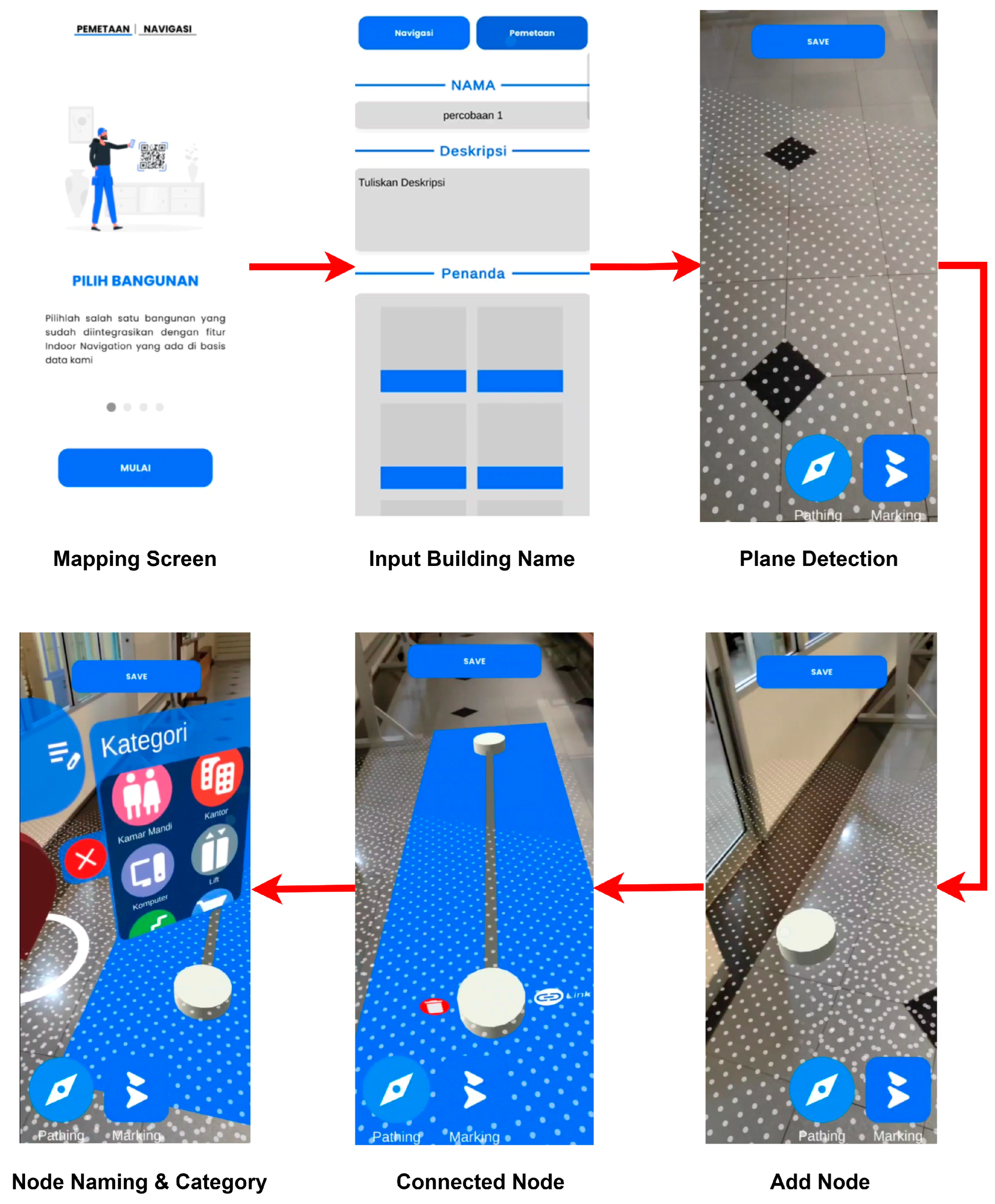

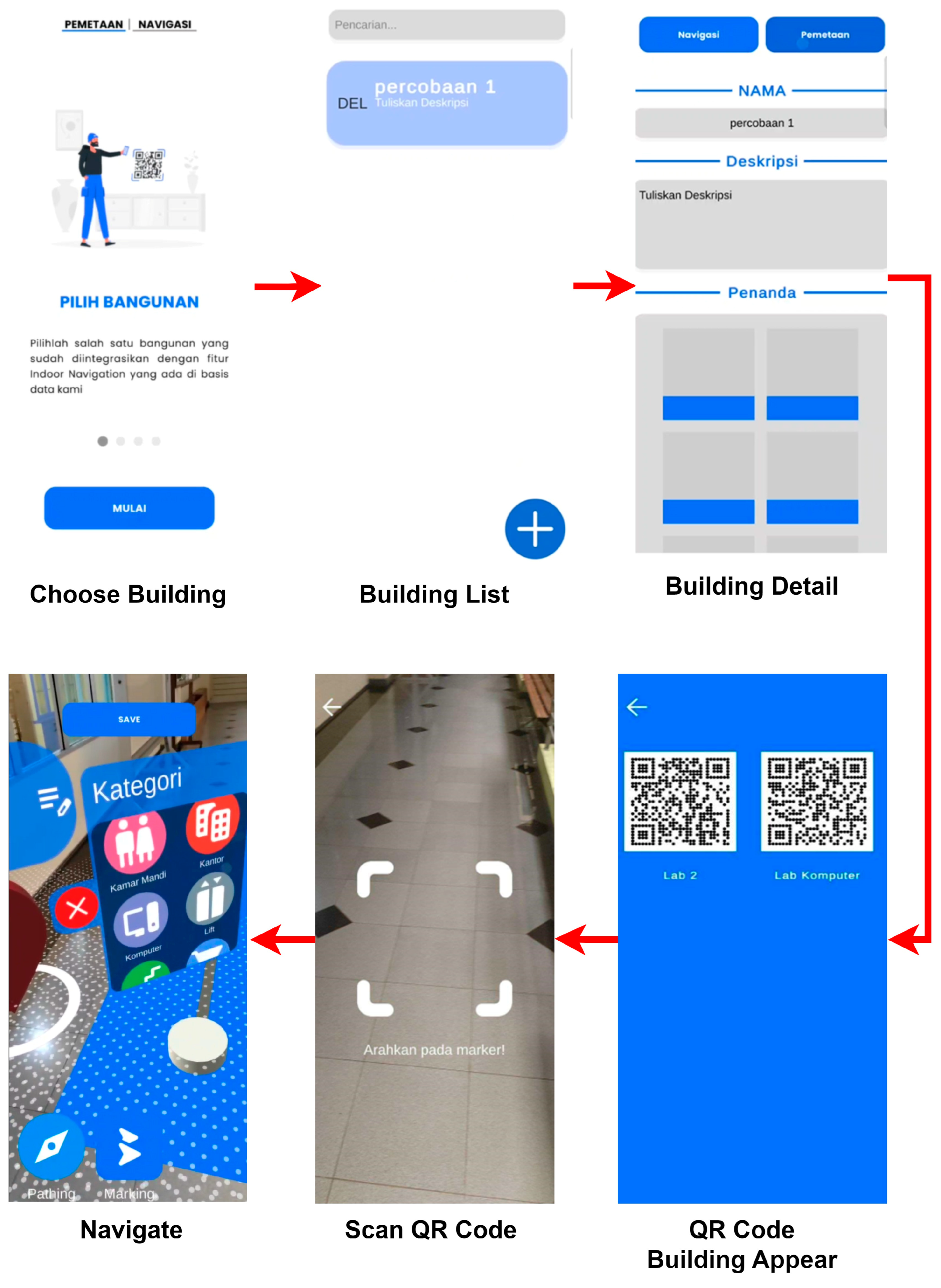

2.2. System Design and Development

- Augmented Reality (AR) Framework—The system uses Google ARCore for real-time plane detection, spatial anchoring, and camera-based motion tracking. Plane detection lets the system dynamically recognize floors and other surfaces on which navigation nodes can be placed. Motion tracking keeps the user's position accurate without external hardware such as LiDAR sensors or BLE beacons. The framework also supports Android and iOS, which widens adoption and deployment. ARCore is integrated into Unity3D through the AR Foundation package, which streamlines cross-platform AR development. The system continuously updates the user's position and surroundings, keeping the navigation experience responsive.

- Pathfinding Algorithm—Navigation paths are generated on a Navigation Mesh (NavMesh), and routes are optimized with the A* algorithm, which computes the shortest path between user-defined nodes. NavMesh provides a representation of the walkable area, which removes the need for manual 3D modeling of indoor spaces. It also supports multi-floor navigation by connecting staircase nodes and is optimized for real-time use in mobile applications, requiring less computational power than SLAM-based navigation. A* is widely used for fast, optimal route calculation in graph-based navigation. With an admissible heuristic it is guaranteed to return a shortest path, and its heuristic guidance usually lets it expand far fewer nodes than uninformed search. This matters in mobile AR applications, where efficiency is critical. Together, NavMesh and A* dynamically compute the best path through the user-defined nodes, keeping the approach scalable and adaptable to different indoor environments.

- Node-Based Mapping System—The node-based mapping system is built in Unity3D, a real-time 3D development platform with native support for ARCore and NavMesh navigation. Unity3D offers extensive optimizations for mobile AR, so the system performs efficiently even on mid-range smartphones. Node data are stored in JSON, a lightweight, human-readable format that makes navigation maps easy to import and export. Each map file stores node positions, connectivity data, and marker GUIDs, so a map can be reused without rebuilding or remapping. Combining Unity3D with JSON keeps storage overhead low and lets users load, edit, and share navigation maps across multiple devices.

- Marker-Based Position Initialization—Using QR codes for localization is not new, but it remains a practical and affordable solution. QR codes are hardware-independent. They require only the phone's camera, so users can find their starting position without additional Bluetooth- or RFID-based localization hardware, which suits a lightweight, efficient mobile AR system. The implementation uses ZXing.Net, an open-source .NET library for QR code generation and scanning that can be used from C# and Unity3D. Each Marker Node in the system generates a unique QR code associated with a GUID. When a user scans a QR code, their position is immediately initialized to the corresponding marker location, giving navigation an accurate starting point.
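The Pathfinding Algorithm item above describes A* computing the shortest route between user-defined nodes. The following is a minimal, hypothetical sketch of A* on such a node graph. It is written in Python for brevity rather than the system's C#/Unity code and does not reproduce Unity's NavMesh. It assumes each edge costs the straight-line distance between the linked nodes and uses a Euclidean heuristic. The node names, coordinates, and the staircase link joining two floors are invented for illustration.

```python
from __future__ import annotations

import heapq
import math

# Hypothetical map format: node id -> (x, y, z) in metres, with y as height.
Position = tuple[float, float, float]


def a_star(positions: dict[str, Position],
           edges: dict[str, list[str]],
           start: str, goal: str) -> list[str] | None:
    """Shortest node sequence from start to goal via A*, or None if unreachable.

    Edge cost is the straight-line distance between linked nodes, so the
    Euclidean heuristic never overestimates the remaining cost (admissible).
    """
    def h(node: str) -> float:
        return math.dist(positions[node], positions[goal])

    open_heap = [(h(start), 0.0, start)]   # entries are (f = g + h, g, node)
    best_g = {start: 0.0}                  # cheapest known cost to each node
    came_from: dict[str, str] = {}
    closed: set[str] = set()
    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if node == goal:                   # rebuild the path backwards
            path = [node]
            while node in came_from:
                node = came_from[node]
                path.append(node)
            return path[::-1]
        if node in closed:                 # stale heap entry, already expanded
            continue
        closed.add(node)
        for nxt in edges.get(node, []):
            g_new = g + math.dist(positions[node], positions[nxt])
            if g_new < best_g.get(nxt, math.inf):
                best_g[nxt] = g_new
                came_from[nxt] = node
                heapq.heappush(open_heap, (g_new + h(nxt), g_new, nxt))
    return None


def link(pairs: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Build an undirected adjacency list from node pairs."""
    edges: dict[str, list[str]] = {}
    for a, b in pairs:
        edges.setdefault(a, []).append(b)
        edges.setdefault(b, []).append(a)
    return edges


positions: dict[str, Position] = {
    "lobby": (0.0, 0.0, 0.0), "hall": (5.0, 0.0, 0.0), "corridor": (0.0, 0.0, 6.0),
    "stairs_1F": (5.0, 0.0, 5.0), "stairs_2F": (5.0, 3.0, 7.0), "lab": (0.0, 3.0, 7.0),
}
edges = link([("lobby", "hall"), ("lobby", "corridor"), ("hall", "stairs_1F"),
              ("corridor", "stairs_1F"), ("stairs_1F", "stairs_2F"),  # staircase link
              ("stairs_2F", "lab")])

print(a_star(positions, edges, "lobby", "lab"))
# ['lobby', 'hall', 'stairs_1F', 'stairs_2F', 'lab']
```

Both first-floor routes reach the staircase, but the route through `hall` (10 m) is shorter than the one through `corridor` (about 11.1 m), so A* returns it. A multi-floor route needs nothing special in this sketch: the staircase is just one more edge whose cost includes the height difference.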

2.3. Usability Testing Procedure

- Manipulability (Questions 1–8): assessing comfort, ease of interaction, and physical effort.

- Comprehensibility (Questions 9–16): assessing clarity, information design, and visual consistency.
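The two dimensions above split the 16 HARUS questions into Questions 1–8 and 9–16. The following is a minimal sketch of how such responses could be turned into 0–100 scores. It assumes a SUS-style conversion on a 7-point Likert scale. It also assumes the wording pattern of the original HARUS instrument, in which odd-numbered items are negatively worded (reverse-scored) and even-numbered items are positively worded. Both assumptions should be checked against the questionnaire actually administered. The function names are hypothetical.

```python
from typing import Dict, Sequence


def item_contribution(item: int, response: int) -> int:
    """Map a 1-7 Likert response to a 0-6 contribution.

    Assumption: odd-numbered items are negatively worded and reverse-scored,
    even-numbered items are positively worded.
    """
    if not 1 <= response <= 7:
        raise ValueError("HARUS responses must be on the 1-7 scale")
    return 7 - response if item % 2 == 1 else response - 1


def harus_scores(responses: Sequence[int]) -> Dict[str, float]:
    """Score one participant's 16 responses on a 0-100 scale."""
    if len(responses) != 16:
        raise ValueError("HARUS has 16 items")
    c = [item_contribution(i, r) for i, r in enumerate(responses, start=1)]
    return {
        "manipulability": sum(c[:8]) * 100 / 48,     # items 1-8, max 8 * 6 = 48
        "comprehensibility": sum(c[8:]) * 100 / 48,  # items 9-16
        "overall": sum(c) * 100 / 96,                # all 16 items, max 96
    }


# Best possible answer on every item: disagree with odd items, agree with even ones.
print(harus_scores([1, 7] * 8))
```

Under this scoring, per-participant scores would then be averaged across participants to obtain dimension averages like those reported in the Results.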

2.4. Statistical Analysis

3. Results

3.1. System Functionality

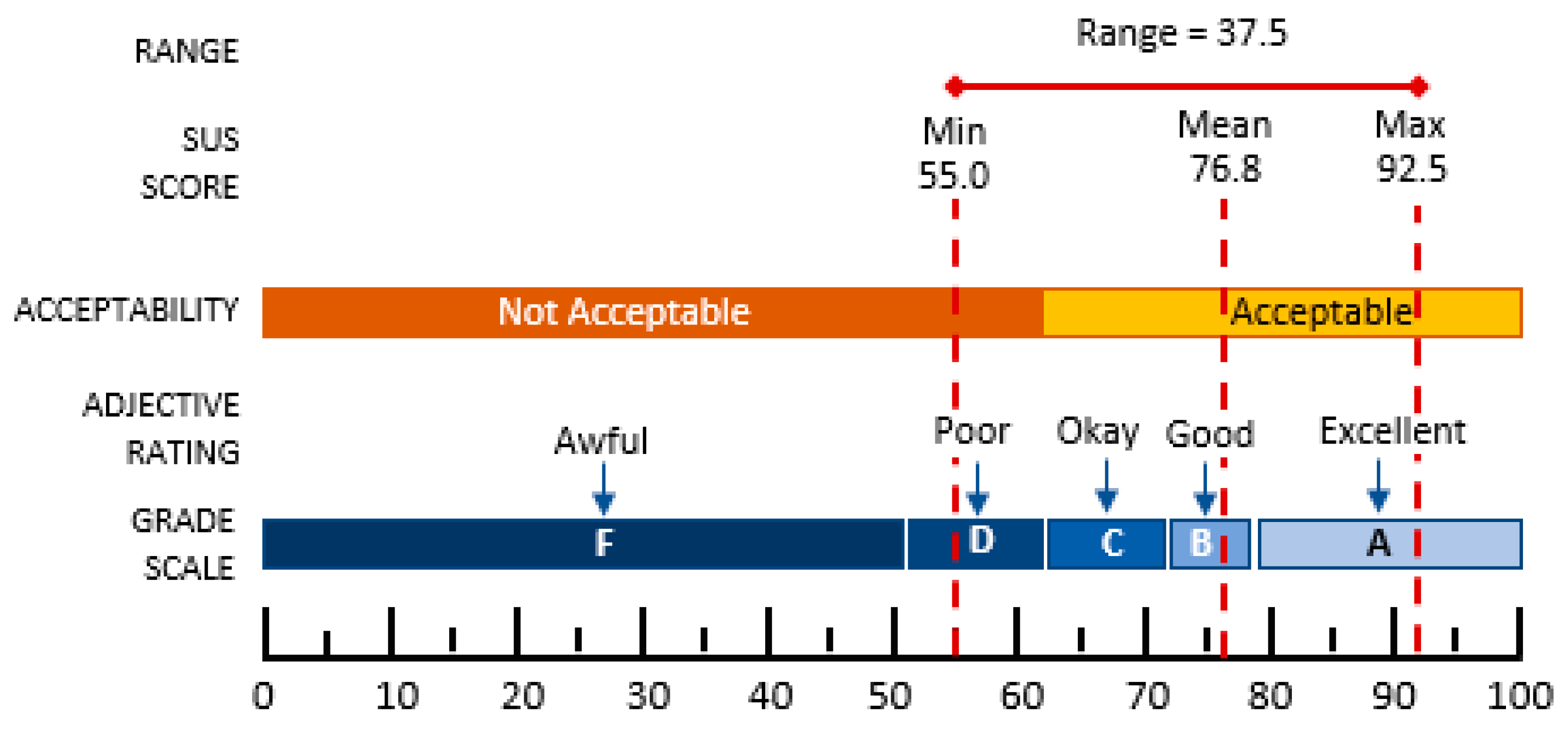

3.2. Usability Testing Using HARUS

4. Discussion

4.1. Node-Based Indoor Navigation Using AR Mobile Applications

4.2. User Response Regarding Indoor Navigation Using AR Mobile Applications

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Phase | Activities | Description |
|---|---|---|
| | | Identified challenges in current systems and defined essential features such as real-time node placement, map storage, and AR path visualization. |
| | | Prototypes were created using Unity, tested internally for AR tracking and NavMesh, and refined through feedback from pilot users. |
| | | Conducted structured usability tests using HARUS to assess manipulability and comprehensibility in actual indoor environments. |

| No | Stage | Description |
|---|---|---|
| 1 | Node Placement and Map Construction | |
| 1a | Mapping initialization | The user scans the floor using ARCore plane detection. |
| 1b | Path Node Placement | The user taps the screen to place nodes at important locations. |
| 1c | Node Linking | Path nodes are connected to each other to form the navigation graph. |
| 1d | Marker Node Placement | A Marker Node is placed and generates a QR code with a unique GUID. |
| 1e | Map Storage | The map is saved in JSON format for reuse. |
| 2 | Contents of the stored JSON file | |
| 2a | Node Position | X, Y, and Z coordinates of each node. |
| 2b | Node Type | Whether the node is a Path node or a Marker node. |
| 2c | Connectivity Data | An adjacency list representing the connections between nodes used for pathfinding. |
| 2d | Marker GUID | A unique ID for each Marker Node. |
| 3 | Navigation System | |
| 3a | Navigation initialization | The user scans a QR code to determine the starting position. |
| 3b | Navigation map loading | The system retrieves the map from storage. |
| 3c | Route calculation | The system calculates the optimal route using the A* (A-star) algorithm over the NavMesh graph. |
| 3d | AR navigation guide | The app displays virtual arrows in the AR view to direct the user to the destination. |
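Rows 2a–2d of the table above list what the stored JSON map holds. The following is a minimal sketch of such a file being written and read back, with a consistency check on load. The field names (`id`, `type`, `position`, `connections`, `marker_guid`) and the top-level `nodes` key are assumptions for illustration, not the system's actual schema. The sketch uses Python rather than the system's Unity/C# code.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    """One navigation node. Field names are illustrative, not the paper's schema."""
    id: str
    type: str                       # "Path" or "Marker" (row 2b)
    position: List[float]           # [x, y, z] in AR world coordinates (row 2a)
    connections: List[str] = field(default_factory=list)  # adjacency list (row 2c)
    marker_guid: Optional[str] = None                     # Marker nodes only (row 2d)


def map_to_json(nodes: List[Node]) -> str:
    """Serialize a navigation map so it can be written to a file and reused."""
    return json.dumps({"nodes": [asdict(n) for n in nodes]}, indent=2)


def map_from_json(text: str) -> Dict[str, Node]:
    """Load a navigation map and reject dangling links or GUID-less markers."""
    nodes = {d["id"]: Node(**d) for d in json.loads(text)["nodes"]}
    for node in nodes.values():
        for other in node.connections:
            if other not in nodes:
                raise ValueError(f"{node.id} links to unknown node {other}")
        if node.type == "Marker" and not node.marker_guid:
            raise ValueError(f"Marker node {node.id} has no GUID")
    return nodes


entrance = Node("entrance", "Marker", [0.0, 0.0, 0.0], ["n1"], str(uuid.uuid4()))
n1 = Node("n1", "Path", [0.0, 0.0, 4.0], ["entrance"])
saved = map_to_json([entrance, n1])   # this string is what would be stored on disk
loaded = map_from_json(saved)
print(loaded["entrance"] == entrance)  # True
```

Validating links and marker GUIDs at load time is a design choice of this sketch. It catches a hand-edited or partially shared map before pathfinding runs on it.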

| Step | Action | Description |
|---|---|---|
| 1 | Initialize AR Session | Start an AR session and scan the floor surface using ARCore’s plane detection capabilities. |
| 2 | Place Path Nodes | Tap the screen to place navigation nodes at key locations. |
| 3 | Add Marker Nodes | Insert and label marker nodes to represent target destinations. |
| 4 | Generate and Scan QR Codes | Generate QR codes for marker nodes and scan them to initialize the user’s starting position. |
| 5 | Follow AR Navigation | Use the AR interface with virtual arrows to follow the optimal route toward the selected destination. |
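Step 4 above ties each marker node to a GUID carried by its QR code and uses a scan to set the starting position. The following is a minimal sketch of that lookup in Python. Producing and decoding the QR image itself (done with ZXing.Net in the system) is out of scope, so the sketch starts from the decoded text. Anchoring the map to the marker's physical pose in the AR session is also not shown. The function names and the two-marker map are hypothetical.

```python
import uuid
from typing import Dict, Iterable, Optional, Tuple

Position = Tuple[float, float, float]


def assign_marker_guids(marker_ids: Iterable[str]) -> Dict[str, str]:
    """Give each Marker node a fresh GUID; this string is what its QR code would encode."""
    return {marker_id: str(uuid.uuid4()) for marker_id in marker_ids}


def initialize_start(scanned_text: str,
                     guid_to_marker: Dict[str, str],
                     positions: Dict[str, Position]) -> Optional[Tuple[str, Position]]:
    """Resolve a decoded QR payload to the marker node where navigation starts.

    Returns None when the payload is not a GUID or belongs to no marker in this map.
    """
    try:
        guid = str(uuid.UUID(scanned_text.strip()))  # canonical lowercase, hyphenated form
    except ValueError:
        return None
    marker_id = guid_to_marker.get(guid)
    if marker_id is None:
        return None
    return marker_id, positions[marker_id]


positions: Dict[str, Position] = {"entrance": (0.0, 0.0, 0.0), "lab": (0.0, 3.0, 7.0)}
guids = assign_marker_guids(positions)             # marker id -> GUID (printed as QR)
guid_to_marker = {g: m for m, g in guids.items()}  # reverse index used when scanning
print(initialize_start(guids["lab"], guid_to_marker, positions))  # ('lab', (0.0, 3.0, 7.0))
```

Normalizing the scanned text through `uuid.UUID` means a payload that differs only in letter case or surrounding whitespace still resolves. Text that is not a GUID, or a GUID from another map, is rejected instead of placing the user at a wrong position.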

| No | Gender | Familiar with AR Applications: Yes | Familiar with AR Applications: Not Yet | Percentage of Participants (%) |
|---|---|---|---|---|
| 1. | Male | 16 | 4 | 66.67 |
| 2. | Female | 8 | 2 | 33.33 |
| | Total Participants | 24 | 6 | 100 |

| No | Usability Dimension | Average Score |
|---|---|---|
| 1. | Manipulability | 80.90 |
| 2. | Comprehensibility | 83.06 |
| 3. | Overall Usability Score | 81.98 |
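The overall score in the table above equals the mean of the two dimension scores. This follows from SUS-style scoring, which is an assumption here: each of the 16 HARUS items contributes $c_i \in \{0, \dots, 6\}$, manipulability is $M = \frac{100}{48}\sum_{i=1}^{8} c_i$, comprehensibility is $C = \frac{100}{48}\sum_{i=9}^{16} c_i$, and the overall score $H$ uses all 16 items:

$$
H = \frac{100}{96}\sum_{i=1}^{16} c_i
  = \frac{1}{2}\left(\frac{100}{48}\sum_{i=1}^{8} c_i + \frac{100}{48}\sum_{i=9}^{16} c_i\right)
  = \frac{M + C}{2}
$$

Because averaging over participants is linear, the same relation holds for the averages: $(80.90 + 83.06)/2 = 81.98$, which matches the reported overall score.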


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Putra, B.S.C.; Senapartha, I.K.D.; Wang, J.-C.; Nendya, M.B.; Pandapotan, D.D.; Tjahjono, F.N.; Santoso, H.B. Adaptive AR Navigation: Real-Time Mapping for Indoor Environment Using Node Placement and Marker Localization. Information 2025, 16, 478. https://doi.org/10.3390/info16060478
