Systematic Review of Graph Neural Network for Malicious Attack Detection

Abstract

1. Introduction

2. Background and Previous Work

2.1. Machine Learning Algorithm

2.1.1. Supervised Learning Models

2.1.2. Unsupervised Learning Models

2.1.3. Reinforcement Learning Models

2.2. Deep Learning Algorithm

- In a classical ML model, feature selection and classification are separate tasks, and the two procedures cannot be integrated to improve performance [6]. DL addresses this by combining them into a single phase, which facilitates effective detection and classification of phishing attacks [7].

- ML still requires third-party services and manual feature engineering [8], whereas DL models can learn and extract features automatically without human guidance.

2.2.1. Multilayer Perceptrons (MLPs)

2.2.2. Radial Basis Function Networks (RBFNs)

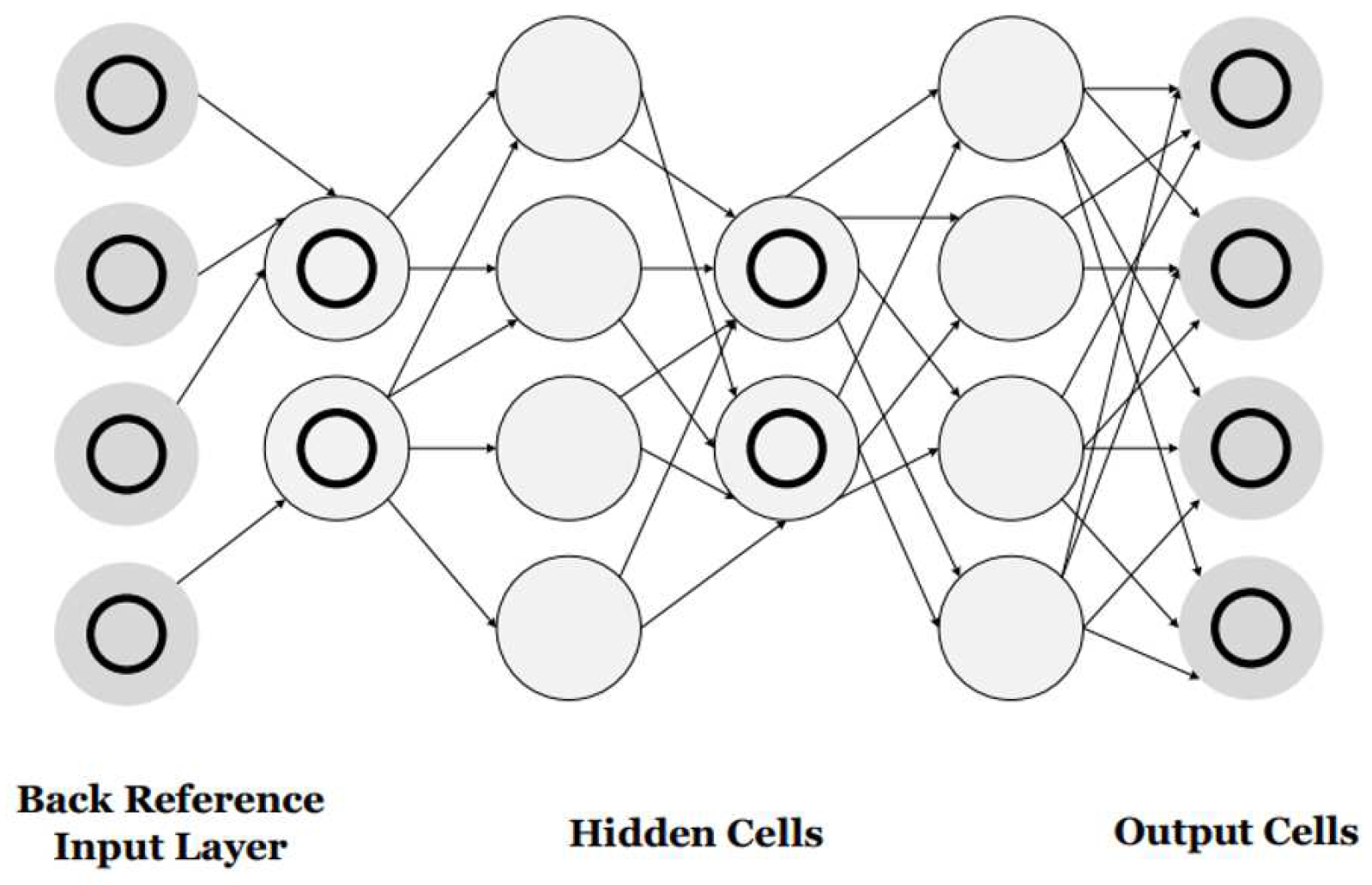

2.2.3. Recurrent Neural Networks (RNNs)

2.2.4. Self-Organizing Maps (SOMs)

2.2.5. Autoencoders

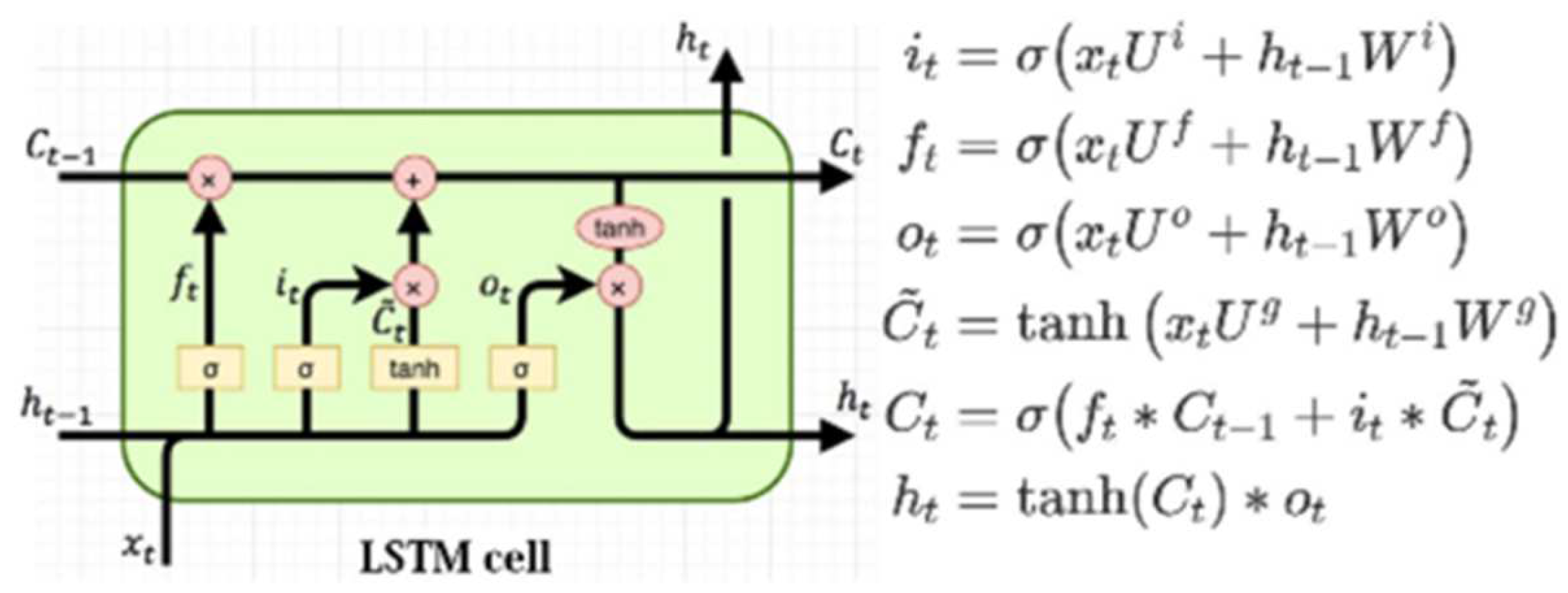

2.2.6. Long Short-Term Memory Networks (LSTMs)

2.2.7. Deep Belief Networks (DBNs)

2.2.8. Restricted Boltzmann Machines (RBMs)

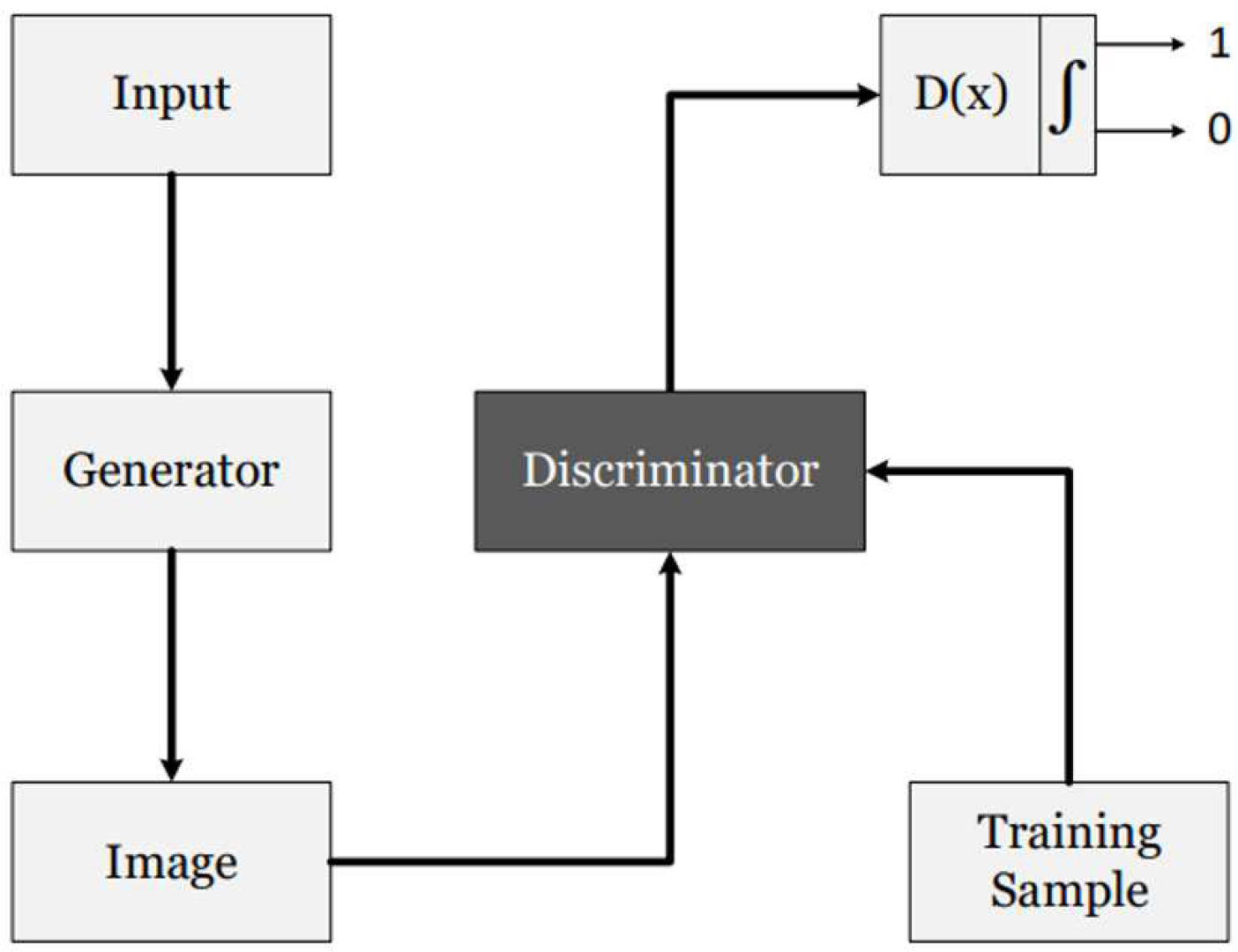

2.2.9. Generative Adversarial Networks (GANs)

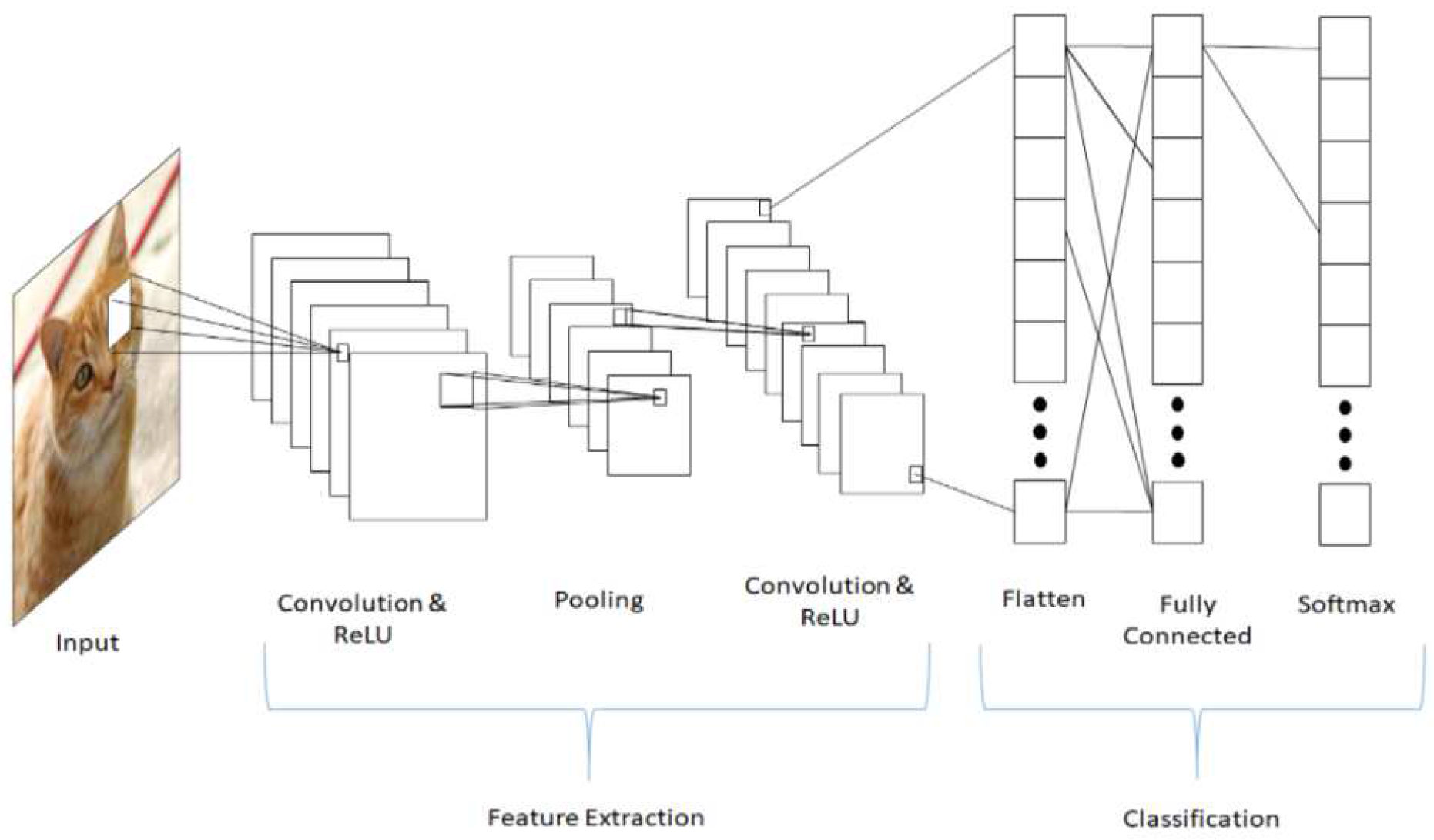

2.2.10. Convolutional Neural Networks (CNNs)

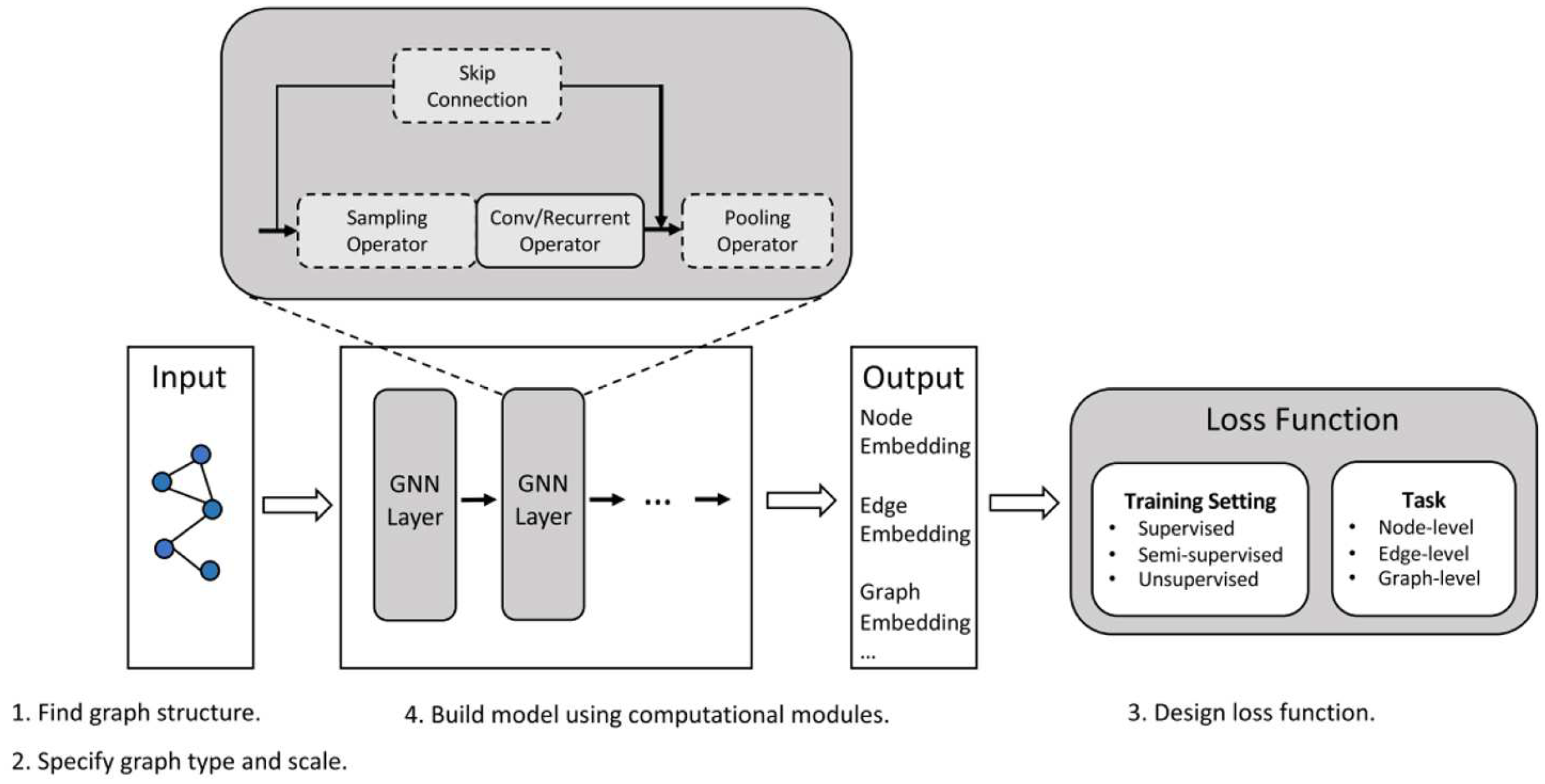

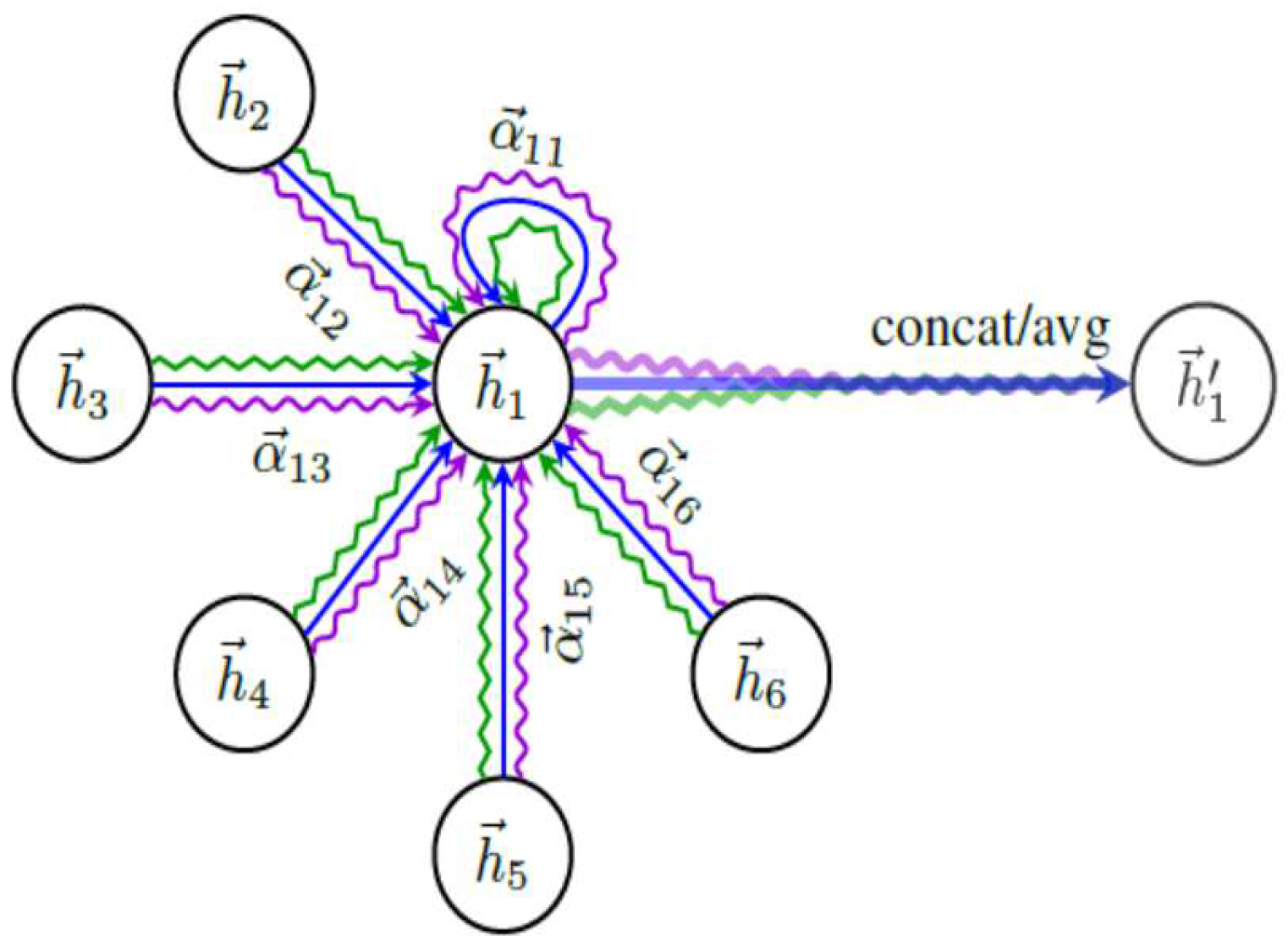

2.2.11. Graph Neural Networks (GNNs)

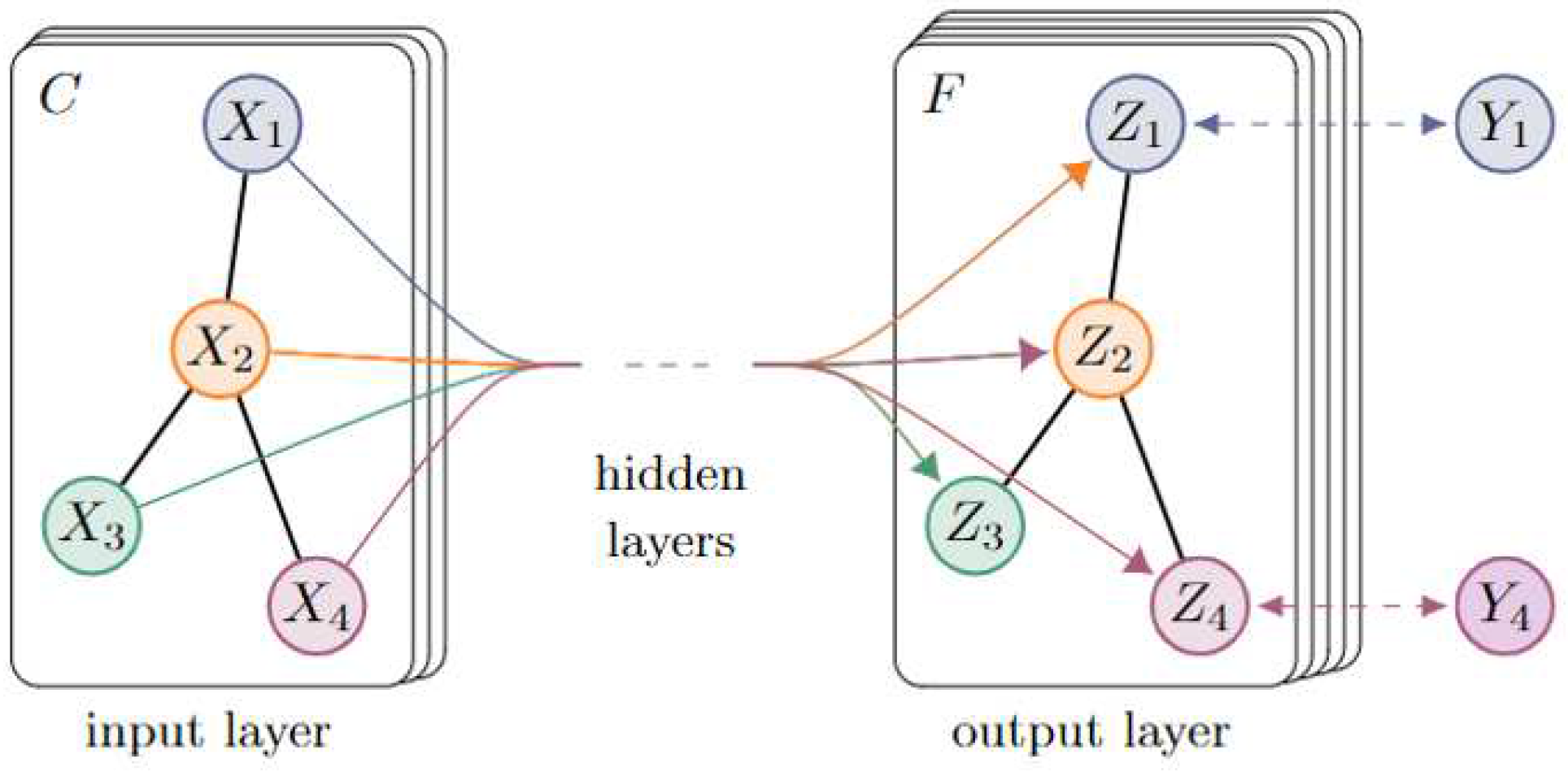

- The graph convolutional network (GCN) is a commonly used algorithm for classification purposes. Figure 7 shows that the information of each node’s neighborhood can be aggregated by applying convolutional operations on the signal. This model has demonstrated high accuracy in various classification tasks [41].
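The neighborhood aggregation described above can be sketched in a few lines. The block below is a minimal illustration, assuming the symmetric-normalization propagation rule of Kipf and Welling [41]; the function name `gcn_layer`, the toy three-node graph, and the all-ones weight matrix are illustrative assumptions, not taken from any reviewed study.

```python
import numpy as np

def gcn_layer(adj, features, weights):
    """One graph-convolution step: add self-loops, normalize, aggregate, transform."""
    a_hat = adj + np.eye(adj.shape[0])       # adjacency with self-loops
    deg = a_hat.sum(axis=1)                  # node degrees including the self-loop
    d_inv_sqrt = 1.0 / np.sqrt(deg)          # D^(-1/2) as a vector
    # Symmetric normalization: D^(-1/2) (A + I) D^(-1/2)
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    # Aggregate neighbor features, apply the weights, then ReLU
    return np.maximum(a_norm @ features @ weights, 0.0)

# Toy graph (assumption): a path 0 - 1 - 2 with one-hot node features
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=float)
features = np.eye(3)
weights = np.ones((3, 2))                    # placeholder weights, normally learned

hidden = gcn_layer(adj, features, weights)
print(hidden.shape)
```

Each row of `hidden` mixes a node's own features with those of its direct neighbors; stacking several such layers widens the neighborhood that contributes to each node representation.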

2.3. Related Work

3. Method

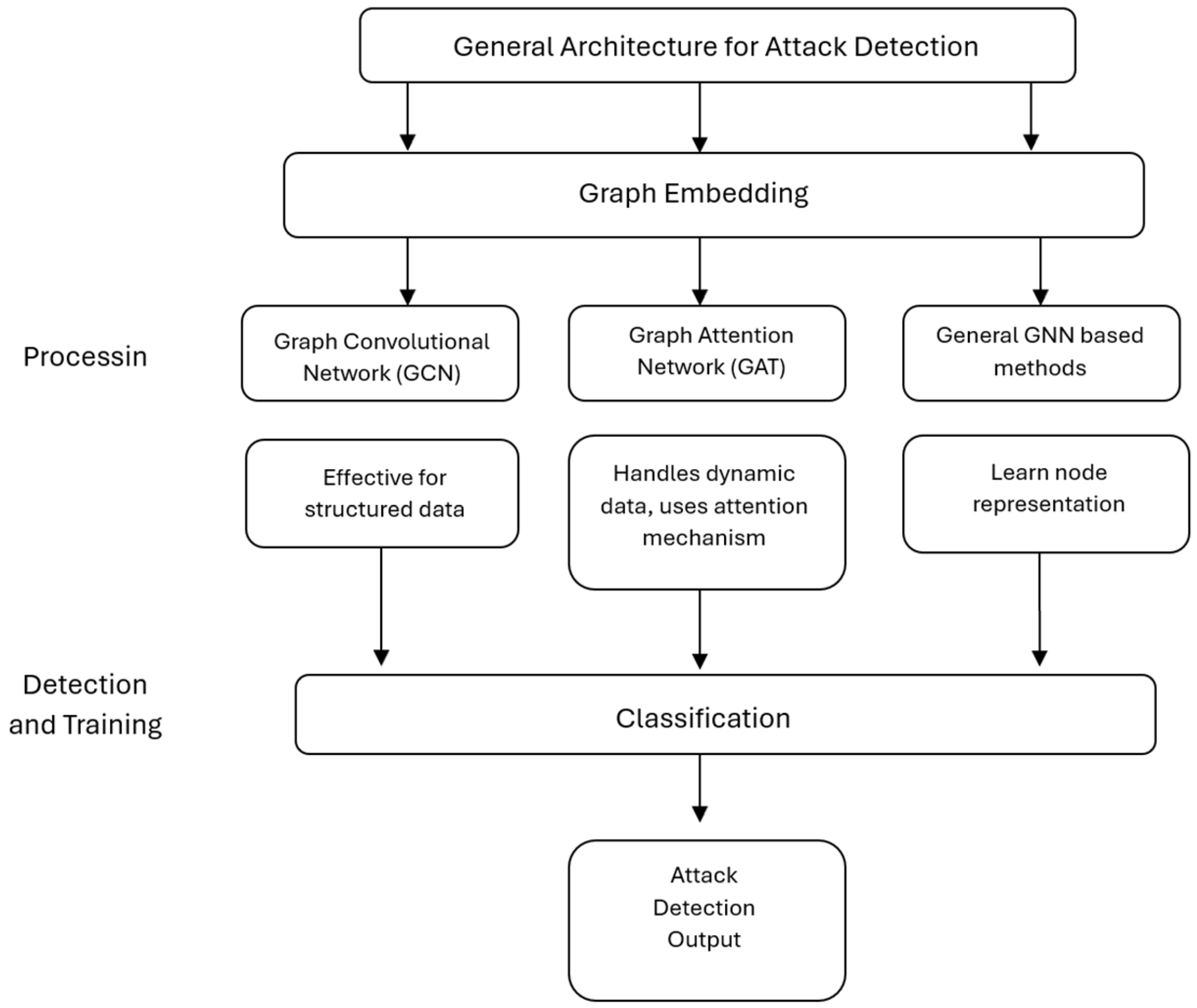

- What types of graph neural network (GNN) models are currently used in cyberattack detection research?

- How are GNN methods applied to detect and classify different types of cyberattacks?

- What public datasets have been used in the reviewed studies for model training and evaluation?

- What performance evaluation metrics are commonly used in GNN-based attack detection?

- Which GNN models demonstrated the best performance across different attack detection scenarios?

- What are the key challenges and research gaps identified in current GNN-based cyberattack detection studies?

- What future research directions have been proposed for advancing the use of GNNs in cybersecurity?

Study Strategy

4. Primary Studies

4.1. Study Selection

4.2. Dataset

5. Discussion

5.1. Limitations and Challenges

5.2. Performance Evaluation and Metric

5.3. Future Research Directions

5.4. Comparative Analysis of GNN Models

5.5. Tools and Dataset Diversity

5.6. Summary of Research Questions

6. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural networks |
| DBNs | Deep belief networks |
| DL | Deep learning |
| GANs | Generative adversarial networks |
| GAT | Graph attention network |
| GCN | Graph convolutional networks |
| GNN | Graph neural networks |
| LSTM | Long short-term memory networks |
| ML | Machine learning |
| RBMs | Restricted Boltzmann machines |
| RNN | Recurrent neural networks |
| SOMs | Self-organizing maps |

References

- Ongsulee, P. Artificial Intelligence, Machine Learning and Deep Learning. In Proceedings of the 2017 15th International Conference on ICT and Knowledge Engineering (ICT&KE), Bangkok, Thailand, 22–24 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

- Simeone, O. A Very Brief Introduction to Machine Learning with Applications to Communication Systems. IEEE Trans. Cogn. Commun. Netw. 2018, 4, 648–664.

- Varlamis, I.; Michail, D.; Glykou, F.; Tsantilas, P. A Survey on the Use of Graph Convolutional Networks for Combating Fake News. Future Internet 2022, 14, 70.

- Thapa, N.; Liu, Z.; Kc, D.B.; Gokaraju, B.; Roy, K. Comparison of Machine Learning and Deep Learning Models for Network Intrusion Detection Systems. Future Internet 2020, 12, 167.

- Dhingra, M.; Jain, M.; Jadon, R.S. Role of Artificial Intelligence in Enterprise Information Security: A Review. In Proceedings of the 2016 Fourth International Conference on Parallel, Distributed and Grid Computing (PDGC), Waknaghat, India, 22–24 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 188–191.

- Apruzzese, G.; Colajanni, M.; Ferretti, L.; Guido, A.; Marchetti, M. On the Effectiveness of Machine and Deep Learning for Cyber Security. In Proceedings of the 2018 10th International Conference on Cyber Conflict (CyCon), Tallinn, Estonia, 29 May–1 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 371–390.

- Ahmad, R.; Alsmadi, I. Machine Learning Approaches to IoT Security: A Systematic Literature Review. Internet Things 2021, 14, 100365.

- Aljofey, A.; Jiang, Q.; Qu, Q.; Huang, M.; Niyigena, J.-P. An Effective Phishing Detection Model Based on Character Level Convolutional Neural Network from URL. Electronics 2020, 9, 1514.

- Odeh, A.J.; Keshta, I.; Abdelfattah, E. Efficient Detection of Phishing Websites Using Multilayer Perceptron. Int. J. Interact. Mob. Technol. (iJIM) 2020, 14, 22–31.

- Teoh, T.T.; Chiew, G.; Franco, E.J.; Ng, P.C.; Benjamin, M.P.; Goh, Y.J. Anomaly Detection in Cyber Security Attacks on Networks Using MLP Deep Learning. In Proceedings of the 2018 International Conference on Smart Computing and Electronic Enterprise (ICSCEE), Kuala Lumpur, Malaysia, 11–12 July 2018; pp. 1–5.

- Hwang, Y.-S.; Bang, S.-Y. An Efficient Method to Construct a Radial Basis Function Neural Network Classifier. Neural Netw. 1997, 10, 1495–1503.

- Rapaka, A.; Novokhodko, A.; Wunsch, D. Intrusion Detection Using Radial Basis Function Network on Sequences of System Calls. In Proceedings of the International Joint Conference on Neural Networks, Portland, OR, USA, 20–24 July 2003; IEEE: Piscataway, NJ, USA, 2003; pp. 1820–1825.

- Jiang, J.; Zhang, C.; Kamel, M. RBF-Based Real-Time Hierarchical Intrusion Detection Systems. In Proceedings of the International Joint Conference on Neural Networks, Portland, OR, USA, 20–24 July 2003; IEEE: Piscataway, NJ, USA, 2003; Volume 2, pp. 1512–1516.

- Berman, D.S.; Buczak, A.L.; Chavis, J.S.; Corbett, C.L. A Survey of Deep Learning Methods for Cyber Security. Information 2019, 10, 122.

- Mahdavifar, S.; Ghorbani, A.A. Application of Deep Learning to Cybersecurity: A Survey. Neurocomputing 2019, 347, 149–176.

- Sarker, I.H. Deep Cybersecurity: A Comprehensive Overview from Neural Network and Deep Learning Perspective. SN Comput. Sci. 2021, 2, 154.

- Arivukarasi, M.; Antonidoss, A. Performance Analysis of Malicious URL Detection by Using RNN and LSTM. In Proceedings of the 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 11–13 March 2020; pp. 454–458.

- Alhoniemi, E.; Hollmén, J.; Simula, O.; Vesanto, J. Process Monitoring and Modeling Using the Self-Organizing Map. Integr. Comput. Aided Eng. 1999, 6, 3–14.

- Marković, V.S.; Marjanović Jakovljević, M.; Njeguš, A. Anomalies Detection in the Application Logs Using Kohonen SOM Machine Learning Algorithm. In Proceedings of the SINTEZA 2020—International Scientific Conference on Information Technology and Data Related Research, Belgrade, Serbia, 26 June 2020; pp. 275–282.

- Ramadas, M.; Ostermann, S.; Tjaden, B. Detecting Anomalous Network Traffic with Self-Organizing Maps; Springer: Berlin/Heidelberg, Germany, 2003; pp. 36–54.

- Mohammadi, M.; Al-Fuqaha, A.; Sorour, S.; Guizani, M. Deep Learning for IoT Big Data and Streaming Analytics: A Survey. IEEE Commun. Surv. Tutor. 2018, 20, 2923–2960.

- Yousefi-Azar, M.; Varadharajan, V.; Hamey, L.; Tupakula, U. Autoencoder-Based Feature Learning for Cyber Security Applications. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3854–3861.

- Wong, M.C.K. Deep Learning Models for Malicious Web Content Detection: An Enterprise Study; University of Toronto: Toronto, ON, Canada, 2019; ISBN 1392398290.

- Do, N.Q.; Selamat, A.; Krejcar, O.; Herrera-Viedma, E.; Fujita, H. Deep Learning for Phishing Detection: Taxonomy, Current Challenges and Future Directions. IEEE Access 2022, 10, 36429–36463.

- Ren, F.; Jiang, Z.; Liu, J. A Bi-Directional LSTM Model with Attention for Malicious URL Detection. In Proceedings of the 2019 IEEE 4th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chengdu, China, 20–22 December 2019; pp. 300–305.

- Wu, Y.; Wei, D.; Feng, J. Network Attacks Detection Methods Based on Deep Learning Techniques: A Survey. Secur. Commun. Netw. 2020, 2020, 8872923.

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554.

- Zhang, Q.; Yang, L.T.; Chen, Z.; Li, P. A Survey on Deep Learning for Big Data. Inf. Fusion 2018, 42, 146–157.

- Li, Y.; Ma, R.; Jiao, R. A Hybrid Malicious Code Detection Method Based on Deep Learning. Int. J. Secur. Its Appl. 2015, 9, 205–216.

- Asharf, J.; Moustafa, N.; Khurshid, H.; Debie, E.; Haider, W.; Wahab, A. A Review of Intrusion Detection Systems Using Machine and Deep Learning in Internet of Things: Challenges, Solutions and Future Directions. Electronics 2020, 9, 1177.

- Hinton, G.E. A Practical Guide to Training Restricted Boltzmann Machines; Springer: Berlin/Heidelberg, Germany, 2012; pp. 599–619.

- Elseaidy, A.; Munasinghe, K.S.; Sharma, D.; Jamalipour, A. Intrusion Detection in Smart Cities Using Restricted Boltzmann Machines. J. Netw. Comput. Appl. 2019, 135, 76–83.

- Fiore, U.; Palmieri, F.; Castiglione, A.; De Santis, A. Network Anomaly Detection with the Restricted Boltzmann Machine. Neurocomputing 2013, 122, 13–23.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Adv. Neural Inf. Process. Syst. 2014, 2, 2672–2680.

- Hiromoto, R.E.; Haney, M.; Vakanski, A. A Secure Architecture for IoT with Supply Chain Risk Management. In Proceedings of the 2017 9th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Bucharest, Romania, 21–23 September 2017; IEEE: Piscataway, NJ, USA, 2017; Volume 1, pp. 431–435.

- Zhao, X.; Fok, K.W.; Thing, V.L.L. Enhancing Network Intrusion Detection Performance Using Generative Adversarial Networks. Comput. Secur. 2024, 145, 104005.

- Geetha, R.; Thilagam, T. A Review on the Effectiveness of Machine Learning and Deep Learning Algorithms for Cyber Security. Arch. Comput. Methods Eng. 2021, 28, 2861–2879.

- Pooja, A.L.; Sridhar, M. Analysis of Phishing Website Detection Using CNN and Bidirectional LSTM. In Proceedings of the 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 5–7 November 2020; pp. 1620–1629.

- Zhou, J.; Cui, G.; Hu, S.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph Neural Networks: A Review of Methods and Applications. AI Open 2020, 1, 57–81.

- Glisic, S.G.; Lorenzo, B. Graph Neural Networks; Wiley: Hoboken, NJ, USA, 2022.

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. arXiv 2016, arXiv:1609.02907.

- Yao, L.; Mao, C.; Luo, Y. Graph Convolutional Networks for Text Classification. Proc. AAAI Conf. Artif. Intell. 2019, 33, 7370–7377.

- Hong, D.; Gao, L.; Yao, J.; Zhang, B.; Plaza, A.; Chanussot, J. Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5966–5978.

- Zhang, S.; Tong, H.; Xu, J.; Maciejewski, R. Graph Convolutional Networks: A Comprehensive Review. Comput. Soc. Netw. 2019, 6, 11.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph Attention Networks. arXiv 2017, arXiv:1710.10903.

- Ma, X.; Yin, Y.; Jin, Y.; He, M.; Zhu, M. Short-Term Prediction of Bike-Sharing Demand Using Multi-Source Data: A Spatial-Temporal Graph Attentional LSTM Approach. Appl. Sci. 2022, 12, 1161.

- Kim, H.; Lee, B.S.; Shin, W.Y.; Lim, S. Graph Anomaly Detection with Graph Neural Networks: Current Status and Challenges. IEEE Access 2022, 10, 111820–111829.

- Zhang, Y.; Zhao, Y.; Li, Z.; Cheng, X.; Wang, Y.; Kotevska, O.; Derr, T. A Survey on Privacy in Graph Neural Networks: Attacks, Preservation, and Applications. IEEE Trans. Knowl. Data Eng. 2024, 36, 7497–7515.

- Zhao, C.; Xin, Y.; Li, X.; Zhu, H.; Yang, Y.; Chen, Y. An Attention-Based Graph Neural Network for Spam Bot Detection in Social Networks. Appl. Sci. 2020, 10, 8160.

- Ren, Y.; Wang, B.; Zhang, J.; Chang, Y. Adversarial Active Learning Based Heterogeneous Graph Neural Network for Fake News Detection. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 452–461.

- Wang, Y.; Qian, S.; Hu, J.; Fang, Q.; Xu, C. Fake News Detection via Knowledge-Driven Multimodal Graph Convolutional Networks. In Proceedings of the 2020 International Conference on Multimedia Retrieval, Dublin, Ireland, 8–11 June 2020; ACM: New York, NY, USA, 2020; pp. 540–547.

- Guo, Z.; Tang, L.; Guo, T.; Yu, K.; Alazab, M.; Shalaginov, A. Deep Graph Neural Network-Based Spammer Detection under the Perspective of Heterogeneous Cyberspace. Future Gener. Comput. Syst. 2021, 117, 205–218.

- Ouyang, L.; Zhang, Y. Phishing Web Page Detection with HTML-Level Graph Neural Network. In Proceedings of the 2021 IEEE 20th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Shenyang, China, 20–22 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 952–958.

- Fang, Y.; Huang, C.; Zeng, M.; Zhao, Z.; Huang, C. JStrong: Malicious JavaScript Detection Based on Code Semantic Representation and Graph Neural Network. Comput. Secur. 2022, 118, 102715.

- Ariyadasa, S.; Fernando, S.; Fernando, S. Combining Long-Term Recurrent Convolutional and Graph Convolutional Networks to Detect Phishing Sites Using URL and HTML. IEEE Access 2022, 10, 82355–82375.

- Huang, Y.; Negrete, J.; Wagener, J.; Fralick, C.; Rodriguez, A.; Peterson, E.; Wosotowsky, A. Graph Neural Networks and Cross-Protocol Analysis for Detecting Malicious IP Addresses. Complex Intell. Syst. 2023, 9, 3857–3869.

- Zhang, B.; Li, J.; Chen, C.; Lee, K.; Lee, I. A Practical Botnet Traffic Detection System Using GNN; Springer: Cham, Switzerland, 2022; pp. 66–78.

- Pan, Y.; Cai, L.; Leng, T.; Zhao, L.; Ma, J.; Yu, A.; Meng, D. AttackMiner: A Graph Neural Network Based Approach for Attack Detection from Audit Logs; Springer: Cham, Switzerland, 2023; pp. 510–528.

- Yang, Z.; Pei, W.; Chen, M.; Yue, C. WTAGRAPH: Web Tracking and Advertising Detection Using Graph Neural Networks. In Proceedings of the 2022 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 22–26 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1540–1557.

- Zhou, X.; Liang, W.; Li, W.; Yan, K.; Shimizu, S.; Wang, K.I.-K. Hierarchical Adversarial Attacks Against Graph-Neural-Network-Based IoT Network Intrusion Detection System. IEEE Internet Things J. 2022, 9, 9310–9319.

- Li, Y.; Li, R.; Zhou, Z.; Guo, J.; Yang, W.; Du, M.; Liu, Q. GraphDDoS: Effective DDoS Attack Detection Using Graph Neural Networks. In Proceedings of the 2022 IEEE 25th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Hangzhou, China, 4–6 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1275–1280.

- Lo, W.W.; Kulatilleke, G.; Sarhan, M.; Layeghy, S.; Portmann, M. XG-BoT: An Explainable Deep Graph Neural Network for Botnet Detection and Forensics. Internet Things 2023, 22, 100747.

- Ren, W.; Song, X.; Hong, Y.; Lei, Y.; Yao, J.; Du, Y.; Li, W. APT Attack Detection Based on Graph Convolutional Neural Networks. Int. J. Comput. Intell. Syst. 2023, 16, 184.

- Gao, M.; Wu, L.; Li, Q.; Chen, W. Anomaly Traffic Detection in IoT Security Using Graph Neural Networks. J. Inf. Secur. Appl. 2023, 76, 103532.

- Bao, H.; Li, W.; Wang, X.; Tang, Z.; Wang, Q.; Wang, W.; Liu, F. Payload Level Graph Attention Network for Web Attack Traffic Detection; Springer: Cham, Switzerland, 2023; pp. 394–407.

- Maksimoski, A.; Woungang, I.; Traore, I.; Dhurandher, S.K. Bonet Detection Mechanism Using Graph Neural Network; Springer: Cham, Switzerland, 2023; pp. 247–257.

- Lin, H.-C.; Wang, P.; Lin, W.-H.; Lin, Y.-H.; Chen, J.-H. Malware Detection and Classification by Graph Neural Network. In Proceedings of the 2023 IEEE 5th Eurasia Conference on IOT, Communication and Engineering (ECICE), Yunlin, Taiwan, 27–29 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 623–625.

- Hosseini, D.; Jin, R. Graph Neural Network Based Approach for Rumor Detection on Social Networks. In Proceedings of the 2023 International Conference on Smart Applications, Communications and Networking (SmartNets), Istanbul, Turkey, 25–27 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Hussain, S.; Nadeem, M.; Baber, J.; Hamdi, M.; Rajab, A.; Al Reshan, M.S.; Shaikh, A. Vulnerability detection in Java source code using a quantum convolutional neural network with self-attentive pooling, deep sequence, and graph-based hybrid feature extraction. Sci. Rep. 2024, 14, 7406.

- Shi, S.; Chen, J.; Wang, Z.; Zhang, Y.; Zhang, Y.; Fu, C.; Qiao, K.; Yan, B. SStackGNN: Graph Data Augmentation Simplified Stacking Graph Neural Network for Twitter Bot Detection. Int. J. Comput. Intell. Syst. 2024, 17, 106.

- Zhang, G.; Zhang, S.; Yuan, G. Bayesian Graph Local Extrema Convolution with Long-tail Strategy for Misinformation Detection. ACM Trans. Knowl. Discov. Data 2024, 18, 89.

- Zhou, Y.; Pang, A.; Yu, G. Clip-GCN: An adaptive detection model for multimodal emergent fake news domains. Complex Intell. Syst. 2024, 10, 5153–5170.

- Altaf, T.; Wang, X.; Ni, W.; Yu, G.; Liu, R.P.; Braun, R. GNN-Based Network Traffic Analysis for the Detection of Sequential Attacks in IoT. Electronics 2024, 13, 2274.

- Pattanaik, B.; Mandal, S.; Tripathy, R.M.; Sekh, A.A. Rumor detection using dual embeddings and text-based graph convolutional network. Discov. Artif. Intell. 2024, 4, 86.

- Chukka, R.B.; Suneetha, M.; Ahmed, M.A.; Babu, P.R.; Ishak, M.K.; Alkahtani, H.K.; Mostafa, S.M. Hybridization of synergistic swarm and differential evolution with graph convolutional network for distributed denial of service detection and mitigation in IoT environment. Sci. Rep. 2024, 14, 30868.

- Guo, W.; Du, W.; Yang, X.; Xue, J.; Wang, Y.; Han, W.; Hu, J. MalHAPGNN: An Enhanced Call Graph-Based Malware Detection Framework Using Hierarchical Attention Pooling Graph Neural Network. Sensors 2025, 25, 374.

| No | Study | Year | Algorithm | Description | Limitations |
|---|---|---|---|---|---|
| 1 | [49] | 2020 | GAT | The paper builds a detection model by aggregating neighbor relationships and features. Because the model can learn complex patterns, it can integrate different neighbor relationships. | The method relies on an abundance of hidden features that may not always be available. In addition, the detection model is built on structural data, which may affect accuracy. |
| 2 | [50] | 2020 | GNN | The model applies a novel hierarchical attention method in a heterogeneous information network (HIN) to learn node representations. AA-HGNN then detects fake news by classifying new nodes during active learning. | Performance may be inadequate on large and complicated datasets. |
| 3 | [51] | 2020 | GCN | A unified detection framework combines visual and textual information. To provide richer semantic information, the model converts both into a graph and then derives external real-world knowledge, which is represented as nodes. | The high cost of the extraction process may restrict scalability. |
| 4 | [52] | 2021 | GNN | The model proposes a method to strengthen feature expressions and generate more spaces. | The model may not be equipped to handle spammers who use advanced programs to conceal their tracks and identities. |
| 5 | [53] | 2021 | GCN | The model uses the DOM trees in HTML source code to construct a graph. It employs an embedding method and recurrent neural networks (RNNs) to identify the important features within the nodes and represent their semantics. | The variant that uses URLs as input was found to be less accurate than methods that use HTML as input. |
| 6 | [54] | 2022 | GAT | The model handles flow information: it generates an abstract syntax tree that incorporates flow and embeds the nodes and edges of the graph. | The approach lacks up-to-date data and a comprehensive dataset covering a wide range of malicious code, including JavaScript. |
| 7 | [55] | 2022 | GCN | The model is made up of two distinct components, URLDET and HTMLDET, which process URLs and HTML using long-term recurrent convolutional networks (LRCN) and GCN, respectively. | At times, the model may not be saved correctly, which could adversely affect its performance. |
| 8 | [56] | 2022 | GCN | Malicious IPs are identified using both a novel approach and a random forest model. Web-protocol features and targeted email features are combined, and discriminant features are incorporated into the graph. | The model’s features were not enhanced enough to significantly impact its performance. |
| 9 | [57] | 2022 | GNN | The research proposes a novel approach that detects traffic by using graph neural network (GNN) models to classify nodes. The system uses three modules: a data classification module, a data processing module, and a results visualization module. Together they perform data encoding, data visualization, and feature extraction. | The network topology diagram was tested only with botnet traffic; other anomalous traffic, such as virus or worm traffic, was not tested. |
| 10 | [58] | 2022 | GAT | The model combines deep learning techniques and provenance graph causal analysis to build a graph model. It identifies whether an attack has occurred by building key patterns. The input audit logs are used to create provenance graphs. | N/A |
| 11 | [59] | 2022 | GNN | The model represents HTTP network traffic as a homogeneous multigraph and formulates advertising and web tracking detection as GNN-based edge representation learning. | The CDN server may experience performance issues when detecting new WTA requests. |
| 12 | [60] | 2022 | GCN | The research introduces a hierarchical adversarial attack (HAA), a level-aware black-box adversarial attack technique. It targets intrusion detection in resource-limited IoT systems that rely on graph neural networks (GNNs). The approach develops a shadow GNN model using an intelligent approach that includes a map to generate adversarial samples. The model accurately recognizes important features. | N/A |
| 13 | [61] | 2022 | GNN | The model creates an endpoint traffic graph that includes structural relationships. | The model may not have been tested effectively on DDoS datasets of different sizes or traffic volumes. |
| 14 | [62] | 2023 | GCN | XG-BoT uses a graph isomorphism network and reversible residual connections to capture important node representations from botnet communication networks. | The model is experiencing issues with its network communications. |
| 15 | [63] | 2023 | GCN | The paper presents a method for detecting advanced persistent threat (APT) attacks. The method employs graph convolutional networks (GCN) to identify vulnerabilities and establish relationships between existing APT threats. It analyzes the names of software security entities from CAPEC, CWE, and CVE and uses these data to form a graph representing APT attack practices. | Information may be lost when simplifying the heterogeneous APT attack graph. |
| 16 | [64] | 2023 | GCN | The Internet of Things (IoT) is represented by graphs whose nodes are implemented to optimize efficiency. To enhance the graph convolutional network, a meta-path-based aggregation strategy is used, which produces low-dimensional representations of the graph nodes. | Only two downloaded datasets have been used to test the model. |
| 17 | [65] | 2023 | GAT | An independent graph is generated for each pre-processed payload, and node representations are shared in a global feature matrix. The model then uses GAT to train graph classification models. | The model may not be capable enough to effectively tackle the problem of traffic encryption. |
| 18 | [66] | 2023 | GCN | The model employs a graph neural network (GNN) with supervised learning to detect malicious activities within botnets efficiently. It was evaluated using labeled datasets and five metrics. | The model may not be effective when compared to other benchmark models and datasets. |
| 19 | [67] | 2023 | GCN | Malware and its variants are classified using a graph convolutional network (GCN) that determines their potential features. Cuckoo Sandbox logs are also employed in this model. | The model may be less effective than comparable models. |
| 20 | [68] | 2023 | GAT | The model consists of three steps. First, it creates a graph. Second, it incorporates different features into this graph-based approach. Finally, it employs a GAT to integrate neighboring information with these features. | The results of the model are based on inadequate data. |
| 21 | [69] | 2024 | GCN | A hybrid GCN-RFEMLP model integrated with CodeBERT utilizes quantum convolutional neural networks along with self-attentive pooling to identify vulnerabilities in Java code by analyzing code patterns and structures. | N/A |
| 22 | [70] | 2024 | GNN | The stacked graph neural network employs streamlined graph stacking techniques to enhance data augmentation, with the goal of improving GNN performance in semi-supervised learning tasks. | N/A |
| 23 | [71] | 2024 | GNN | A Bayesian graph convolutional network incorporates local extrema convolution to tackle challenges in long-tail graph data, enhancing node representation. | N/A |
| 24 | [72] | 2024 | GCN | The Clip-GCN model comprises three modules: cross-modal feature extraction, domain detection, and news detection. The feature extraction module captures semantic relationships between modalities, the domain detection module extracts domain-invariant features, and the news detection module utilizes cross-domain knowledge for news authenticity detection. | N/A |
| 25 | [73] | 2024 | GCN | The model utilizes a novel GGCN architecture combined with sequential analysis to capture and analyze the temporal dynamics of network traffic, enhancing its ability to detect and respond to evolving cyber threats. | Multiclass classification increases complexity due to feature overlap and data imbalance, affecting generalization. The model struggled with diverse attacks in the Mirai dataset, leading to lower performance than on BoT-IoT. |
| 26 | [74] | 2024 | GCN | A novel rumor detection model integrates dual embeddings from BERT and GPT with a graph-based approach. The system constructs text-based graphs to capture contextual relationships between rumors and their propagation on social media. | The model performed well on PHEME but struggled on Twitter15, possibly due to data quality, hyperparameter tuning, or graph structure. Relying solely on BERT and GPT may be insufficient, and the reasons for performance issues lack experimental validation. |
| 27 | [75] | 2024 | GCN | The SSODE-GCNDM method begins with Z-score normalization to standardize input data. It employs the SSO-DE approach for feature selection, while the GCN technique is utilized to detect and mitigate attacks. Finally, the NGO method is applied to fine-tune the parameters of the GCN model, enhancing its effectiveness. | The model is sensitive to data quality, prone to overfitting with high-dimensional data, and limited by its reliance on specific optimization algorithms. |
| 28 | [76] | 2025 | GCN | The MalHAPGNN model utilizes enhanced call graphs and GNN to identify malware behaviors by examining call sequences and execution patterns in programs. | N/A |

| No | Detection Task | Dataset | Data Type | Description | Related Work |

|---|---|---|---|---|---|

| 1 | Bot Detection | Twitter 1KS-10KN | Twitter user activities | Uses Twitter user activity and interaction patterns such as retweets and followers to build graphs that help identify automated bots. | [49] |

| 2 | Fake Content Detection | PolitiFact, BuzzFeed | Social media posts, images | Models content relationships using social media posts, images, and links to detect fake or misleading information. | [50] |

| 3 | | PHEME | Tweets, News and Images | | [51] [71] [72] |

| 4 | Spam Detection | | Social media posts, accounts | Analyzes social platform posts and user accounts, using graph-based models to identify repetitive or coordinated spam behavior. | [52] |

| 5 | Web Content | OpenPhish, PhishTank | URLs and HTML | Focuses on detecting malicious or deceptive web content such as phishing pages and scripts. | [53] [54] |

| 6 | | | | | [55] |

| 7 | | McAfee | Emails, Web and DNS | | [56] |

| 8 | Malware | Tranco | Benign and Malicious JavaScript Code, DDoS | Involves datasets collected from scripts or APKs labeled as malicious or benign. | [54] [67] [69] [76] |

| 9 | Audit Log Detection | DARPA TC | Audit Log Activities | Uses audit logs from monitored systems to build temporal graphs, allowing detection of advanced persistent threats. | [58] |

| 10 | Advertising Detection | Chromium | HTTP requests, DOM, API Access for Webpages | Detects abnormal advertising behavior and tracking using user interaction data such as Chromium activity. | [59] |

| 11 | | UNSW-SOSR2019, Bot-IoT and Mirai | IoT Attack Traces | | [60] [73] |

| 12 | Traffic Detection | CIC-IDS2017, CIC-DoS2017 | DoS and DDoS Traffic | Utilizes network flow datasets to generate communication graphs between nodes, helping detect patterns of DDoS or other volumetric attacks. | [60] [61] |

| 13 | | CTU-13 | Network Flows, HTTPS, DDoS | | [62] [66] |

| 14 | |||||

| 15 | | CIDA | IP Traces | | [57] |

| 16 | | Bot-IoT, ISOT, CICDDoS2019 | Network Traffic | | [64] [75] |

| 17 | | CSIC2010 FWAF, TBWIDD BDCI2022 | HTTPS Traffic | | [65] |

| 18 | Web, Application and Service Content | CVE, CWE, CAPEC | CVE, CWE, CAPEC, and APT Reports | Analyzes source code and threat intelligence to detect structural software vulnerabilities by modeling functions or APIs. | [63] [70] |

| 19 | Rumour Source Detection | PHEME, Twitter15 | Tweets, Posts | Models tweet threads and reply interactions to trace back the source of misinformation using graph models. | [68] [74] |
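
Several rows above describe turning raw records (network flows, user interactions) into graphs before a GNN is applied. As a rough illustration of that step only, the sketch below builds a directed communication graph from a handful of flow records and counts how many distinct sources contact each destination. The records, field layout, and function names are hypothetical and are not taken from any of the listed datasets or reviewed studies.

```python
from collections import defaultdict

# Hypothetical flow records: (source IP, destination IP, bytes sent).
# Values are illustrative only, not drawn from any dataset in the table.
FLOWS = [
    ("10.0.0.1", "10.0.0.9", 1200),
    ("10.0.0.2", "10.0.0.9", 900),
    ("10.0.0.3", "10.0.0.9", 1500),
    ("10.0.0.1", "10.0.0.4", 300),
]


def build_flow_graph(flows):
    """Return a directed graph as {src: {dst: total_bytes}}."""
    graph = defaultdict(lambda: defaultdict(int))
    for src, dst, n_bytes in flows:
        # Repeated flows between the same pair are merged into one weighted edge.
        graph[src][dst] += n_bytes
    return {src: dict(dsts) for src, dsts in graph.items()}


def in_degree(graph):
    """Count the number of distinct sources that contact each destination."""
    counts = defaultdict(int)
    for dsts in graph.values():
        for dst in dsts:
            counts[dst] += 1
    return dict(counts)


graph = build_flow_graph(FLOWS)
degrees = in_degree(graph)
print(degrees)
```

A destination contacted by unusually many distinct sources is one simple structural signal that a graph representation exposes; the reviewed models learn far richer node and edge features than this.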

| Study | Precision (%) | Recall (%) | F1-Score (%) | Accuracy (%) |

|---|---|---|---|---|

| [49] | 93% | 88% | 91% | - |

| [68] | - | - | 80% | 82% |

| [54] | 99.93% | 99.96% | 99.96% | 99.95% |

| [58] | 100.00% | 99.12% | 99.56% | 97.72% |

| [50] | 72.11% | 69.09% | 70.57% | 73.51% |

| [52] | - | - | 93.46% | - |

| [61] | 95.05% | 94.07% | 94.56% | 97.51% |

| [51] | 87.62% | 87.65% | 87.64% | 87.56% |

| [56] | 88.90% | 58.03% | 90.22% | 85.28% |

| [53] | 93.45% | 80.25% | 86.34% | 95.50% |

| [55] | 96.40% | 96.44% | 96.42% | 96.42% |

| [57] | 99.4% | 98.4% | 98.91% | - |

| [59] | 98.38% | 96.25% | 97.18% | 97.90% |

| [62] | 99.63% | 99.42% | 99.52% | - |

| [63] | 95.8% | 95.8% | 95.8% | 95.9% |

| [64] | 93.2% | 99.7% | 96.3% | - |

| [66] | 100% | 77% | 87% | 78% |

| [67] | 96.86% | 96.72% | - | 97.78% |

| [69] | 98.00% | 96.00% | 97.00% | 99.00% |

| [70] | - | - | 97.59% | 97.59% |

| [71] | - | - | 88.39% | 86.81% |

| [72] | 87.56% | 87.01% | 87.06% | 87.79% |

| [73] | 98.95% | 98.68% | 98.88% | 98.86% |

| [74] | - | - | - | 88.64% |

| [75] | 99.72% | 99.62% | 99.67% | 99.62% |

| [76] | 98.96% | 98.78% | 98.87% | 98.90% |
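
For readers comparing the figures above, the sketch below shows how the four metrics are commonly derived from a binary confusion matrix. It assumes the standard binary definitions; individual studies may average over classes or folds differently, and the counts used here are invented for illustration.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute precision, recall, F1, and accuracy from confusion-matrix counts.

    tp/fp/fn/tn are true positives, false positives, false negatives,
    and true negatives. Ratios with a zero denominator are returned as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Invented counts for illustration: 120 attacks, 880 benign samples.
metrics = classification_metrics(tp=90, fp=10, fn=30, tn=870)
for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```

Because F1 is a harmonic mean, it always lies between precision and recall when both are computed on the same data, which is a quick sanity check when reading reported values. Accuracy, by contrast, can remain high on imbalanced data even when recall is low.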

| Study | Future Direction |

|---|---|

| [49] | The authors aim to improve the performance of spam bot detection in social networks. |

| [51] | The authors intend to adopt an improved method and extract visual information to improve the current model’s ability to identify and classify fake news. |

| [54] | Future research will focus on large-scale detection of harmful behavior and analysis of JavaScript code. |

| [56] | The authors intend to run experiments on larger datasets and to investigate approximation methods that cover other forms of malicious network traffic, such as worms or viruses. |

| [57] | The current model was not tested on other kinds of malicious traffic, such as worms or viruses. The authors also intend to expand the testing process. |

| [59] | The authors plan to study more efficient attacks to test the model’s effectiveness. |

| [63] | The authors intend to extract additional threat indicators from APT threat data to improve performance. |

| [64] | The authors aim to make the current model more efficient so that it can better address the trend toward traffic encryption. |

| [66] | The authors want to evaluate the model on many different datasets and to compare its performance with that of existing benchmark models. |

| [68] | Given the constraints of the current rumour analysis dataset, the authors intend to generate a more varied dataset. |

| [69] | The authors intend to extend the system to additional programming languages so that its effectiveness can be evaluated across various codebases, and to investigate its potential in NLP tasks for improving time efficiency, reducing costs, and optimizing memory usage. |

| [71] | The authors intend to extend the current neural network into hyperbolic space, which could improve the learning of propagation structures. |

| [73] | The authors intend to focus on improving the model’s performance in multiclass classification tasks. |

| [76] | The authors intend to offer more detailed insights into malware functions, thereby improving the interpretability of malware detection results. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alshehri, S.M.; Sharaf, S.A.; Molla, R.A. Systematic Review of Graph Neural Network for Malicious Attack Detection. Information 2025, 16, 470. https://doi.org/10.3390/info16060470
