Abstract

Due to training limitations, general LLMs often lack sufficient accuracy and practicality in specialized domains such as elderly health management. To help alleviate this issue, this paper introduces EHMQA-GPT, the first domain-specific LLM tailored for non-specialist users (caregivers, elderly individuals, family members, and community health workers) for low-risk, daily health consultations in real-world scenarios. EHMQA-GPT innovates in two aspects: (1) professional corpus construction: we established a multi-dimensional annotation system, integrating EHM-KB, EHM-SFT, and EHM-Eval, to achieve vector representation and hierarchical classification of domain knowledge; and (2) knowledge-enhanced large language model construction: based on ChatGLM3-6B, we integrated knowledge retrieval mechanisms and supervised fine-tuning strategies, enhanced generation through knowledge base retrieval, and achieved deep alignment with domain knowledge through mixed supervised fine-tuning. The objective evaluation covers six fields, on which EHMQA-GPT achieves an accuracy rate of 78.1%, which is 22.3% higher than ChatGLM3-6B. The subjective assessment uses a dual verification system (GPT-4 automatic scoring + gerontology expert blind review), under which EHMQA-GPT is significantly superior to the baseline model in three dimensions: knowledge accuracy (+38.9%), logical coherence (+39.4%), and practical guidance (+31.4%). The proposed framework and corpus provide a novel and scalable foundation for future research and deployment of LLMs in elderly health.

1. Introduction

Comprehensive implementation of the “Healthy China 2030” strategy marks a paradigm shift in China’s public health system from disease treatment to health management. This strategy clearly states that, by 2030, the national health literacy level of the entire population should be raised to 30%, among which the elderly group, as a high-risk group for chronic diseases, directly affects realization of the optimization goal of national health resource allocation [1]. Currently, the aging process in China presents significant characteristics of “large scale, rapid growth rate, and heavy burden of chronic diseases”: by the end of 2022, the population aged 60 and above reached 280 million, accounting for 19.8% of the total population, and the average duration of living with diseases for each individual extended to 8.3 years [2]. Against this backdrop, how to scientifically guide the health management of the elderly through artificial intelligence technology holds distinct significance for improving the health level of the elderly and is a necessity in the current aging society.

In recent years, natural language processing has significantly promoted the development of large language models [3]. LLMs such as ChatGPT [4] and ERNIE [5] have enabled the public to intuitively perceive great achievements in innovation and functional enhancement through the application of artificial intelligence technology. The notable performance of LLMs is closely related to the comprehensiveness of their training data [6]. The training datasets of LLMs are usually collected from various fields, covering knowledge from multiple domains, but there are still deficiencies in the systematic integration of specific disciplines. Especially in the field of elderly health management, the problem may be more prominent. The distribution of data resources among different disciplines shows an imbalance feature. Compared with the easy accessibility of general domain data, data acquisition in professional fields such as medicine, agriculture, and geography is more difficult. This structural imbalance may affect the knowledge representation ability of the model in professional fields.

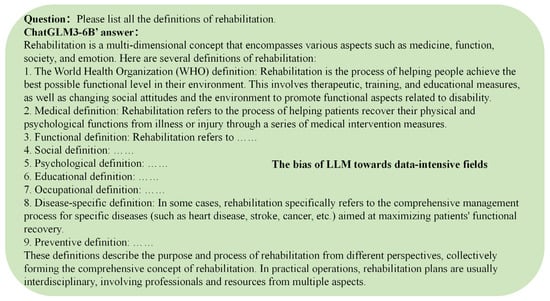

Taking the currently representative Chinese open-source large language model ChatGLM3-6B [7] as an example, when asked about the definition of the term “rehabilitation”, the model will prioritize outputting explanations in the context of general medical scenarios, such as postoperative recovery or basic physiotherapy suggestions (as shown in Figure 1). However, in the field of elderly health management, “rehabilitation” specifically refers to a multi-dimensional intervention system targeting geriatric syndromes, including motor function reconstruction, cognitive training, coordinated management of medications, etc. Professional processes such as these are rarely precisely defined in the model’s responses. This knowledge representation bias reveals the tendency of LLMs towards data-intensive domains. When users state that “a rehabilitation plan needs to be formulated”, the general context may point to a simple recuperation plan, but in the elderly health management scenario, this term requires integrating comprehensive intervention measures such as vital sign monitoring, fall risk assessment, and personalized nutritional support. This semantic gap between domains may lead to serious consequences: if routine rehabilitation suggestions are mistakenly applied to elderly patients with sarcopenia, it may exacerbate their risk of motor function deterioration.

Figure 1.

General LLMs are still insufficient in the systematic integration of specific disciplines, and there is a structural imbalance in professional and data-intensive fields.

Current research indicates that constructing massive multi-source datasets can effectively alleviate such technical bottlenecks. Taking industry-leading commercial models such as ChatGPT and GPT-4 as examples, they demonstrate significant advantages in knowledge processing efficiency in professional scenarios by integrating interdisciplinary training corpora [8,9]. However, the parameter scale of these models often reaches the hundreds of billions (such as GPT-3, with 175 billion parameters), and their core algorithms are usually only open through interfaces, objectively forming a double barrier for in-depth research and customized deployment in professional scenarios. The current mainstream solutions focus on building domain knowledge-driven dedicated language models [10]. This technical path has been verified in practice in professional fields such as law [11], transportation [12], agriculture [13], education [14], and medicine [15].

This paper takes elderly health management as its research object and aims to develop EHMQA-GPT, a large language model that bridges the gap between general LLMs and domain-specific knowledge in elderly health. The target users of the model include community-level healthcare workers, caregivers, family members, and elderly individuals, with the goal of providing non-diagnostic, low-risk support in daily health consultations. The intended application scenarios cover chronic disease self-management, health literacy improvement, rehabilitation guidance, policy interpretation, and elderly care consultation, all of which are distinct from high-risk clinical decision-making in fields such as cardiovascular care or surgery.

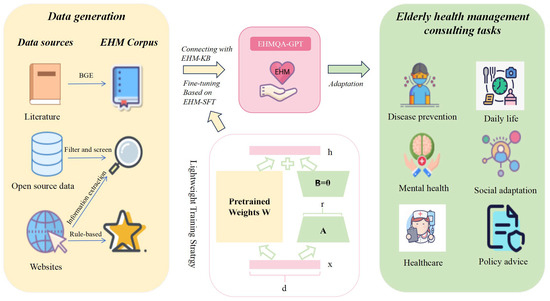

The proposed approach includes two key components: the construction of an elderly health management corpus and the design of a knowledge-enhanced LLM tailored for safe, context-aware responses in elderly health management. The overall framework diagram is illustrated in Figure 2.

Figure 2.

The framework for training a large language model for elderly health management, named EHMQA-GPT. In the lightweight training strategy, matrix A is initialized as a Gaussian distribution matrix, and matrix B is initialized as a zero matrix. W represents the weight matrix.
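The initialization described in this caption is consistent with a low-rank adaptation (LoRA)-style update, in which a frozen weight matrix W is augmented by a trainable product BA. The following is a minimal pure-Python sketch under that assumption; the dimensions, the standard deviation of 0.02, and the function names are illustrative choices, not values taken from this paper.

```python
import random

def init_lora(d_out, d_in, rank, std=0.02, seed=0):
    """Return (A, B): A is a rank x d_in Gaussian matrix, B is a d_out x rank zero matrix."""
    rng = random.Random(seed)
    A = [[rng.gauss(0.0, std) for _ in range(d_in)] for _ in range(rank)]
    B = [[0.0] * rank for _ in range(d_out)]
    return A, B

def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def lora_forward(W, A, B, x):
    """Compute W x + B (A x). W stays frozen; only A and B would be trained."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + d for b, d in zip(base, delta)]

# Hypothetical frozen weight matrix (2 x 3) and input vector.
W = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
A, B = init_lora(d_out=2, d_in=3, rank=2)
x = [1.0, 1.0, 1.0]
y = lora_forward(W, A, B, x)
```

Because B starts as a zero matrix, the product BA is zero at the first step, so the adapted model initially reproduces the output of the original weights and only drifts away from them as training updates A and B.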

A rich corpus is the foundation for the training of LLMs. Therefore, we first constructed a rich corpus for elderly health management, including Elderly Health Management Knowledge Base Data (EHM-KB), Supervised Fine-tuning Data (EHM-SFT), and Evaluation Data (EHM-Eval). Specifically, EHM-KB contains a large amount of literature related to elderly health management; EHM-SFT is the result of information extraction from professional data, integrated by the voting method and screening, and includes six aspects: disease prevention, daily life, mental health, social adaptation, healthcare, and policy advice; and EHM-Eval is collected from both subjective and objective perspectives, aiming to comprehensively evaluate the model’s ability to understand and apply knowledge in elderly health management.

Building on this corpus, we introduce EHMQA-GPT, a large language model for elderly health management. Specifically, EHMQA-GPT first adapts the general-domain Chinese LLM ChatGLM3 to integrate external knowledge bases and then applies supervised fine-tuning with instruction data. With rich knowledge of elderly health management, EHMQA-GPT outperforms the original model in understanding and answering health inquiries, benefiting both the elderly population and caregivers.

To sum up, our contributions can be summarized as follows:

- (1) We proposed EHMQA-GPT, a large language model for elderly health management. It can understand users’ intentions in asking questions and provide accurate responses. This model can offer practical assistance to the elderly population and their caregivers.

- (2) The data collection strategy and the constructed special corpus in this paper can serve as the research foundation for large language models in the field of elderly health management.

- (3) Through a large number of experiments on the evaluation dataset, the effectiveness of EHMQA-GPT and the corpus was verified. The experimental results confirmed the feasibility of the research ideas in this paper and provided a practical and feasible method for future researchers.

The structure of this paper is as follows: Section 2 describes the current related research work and progress; Section 3 introduces the methods for constructing the corpus; Section 4 presents the methods for building knowledge-enhanced large language models; Section 5 conducts model validation and effect evaluation; Section 6 provides a summary; and Section 7 concludes and presents future research directions.

2. Related Work

2.1. General LLMs

Generally, LLMs refer to transformer language models that are trained on large-scale text data and contain hundreds of billions (or more) of parameters [16], such as GPT-3 [17], PaLM [18], LLaMA [19], etc. LLMs demonstrate strong capabilities in understanding natural language and solving complex tasks through text generation.

In recent years, general-domain LLMs have advanced on multiple fronts. Continuous expansion of model parameters and data scale has become a key trend. Meta’s Llama 3.1 model, with 405 billion parameters, demonstrates strong performance in complex reasoning tasks [20]. Although the parameter count of OpenAI’s o1 model remains undisclosed, its performance has surpassed that of GPT-4o [21,22]. Studies have shown that expansion of model scale can trigger “emergent capabilities”, such as cross-task generalization and multi-step reasoning. Significant breakthroughs have also been achieved in aspects such as architecture optimization, training paradigm innovation, and multimodal fusion. Google’s Gemini 1.5 Pro supports a context length of up to a million tokens and can process various modalities, including video and audio [23]. Meta’s Llama 3.2 is the first multimodal release in the open Llama family, enabling joint processing of images and text. Moreover, efficient training techniques for LLMs continue to evolve, with distributed parallelism and memory optimization significantly reducing training costs. AWS, leveraging EC2 P4d instances equipped with NVIDIA A100 GPU clusters, enables developers to launch a distributed training environment with a 3.2 Tbps network bandwidth in just 15 minutes, with an efficiency improvement of over 400% compared to traditional local GPU cluster deployments. Additionally, knowledge distillation and model compression techniques are advancing lightweight deployment of models [24,25]. For instance, Baidu’s Wenxin 4.0 Turbo model supports highly efficient inference.

In terms of breakthroughs in core capabilities, the context learning and reasoning enhancement of LLMs have achieved significant results. Chain-of-Thought (CoT) technology explicitly generates reasoning paths, which has increased the accuracy rate of the model in mathematical reasoning and complex problem-solving by more than 30% [26]. On the GSM8K dataset, after applying CoT technology, the accuracy rate of the model has significantly improved [27]. Zero-shot CoT prompts generate more accurate answers by providing simple prompts to the LLMs, such as appending “Let’s think step by step” at the end of the question [28]. The Graph-of-Thought (GoT) method enhances the reasoning process by constructing a thought graph, enabling the aggregation, refinement, and transformation of ideas [29].

CoT techniques have significantly improved the performance of LLMs in tasks such as mathematical reasoning, logic puzzles, and code debugging. Models trained with rule-based reinforcement learning (Logic-RL) exhibit substantial performance gains across various tasks, including logic puzzles, mathematical problems, code debugging, and decision support [30]. Retrieval-augmented generation (RAG), which integrates external knowledge bases or search engines, effectively reduces the issue of model “hallucinations” [31]. Microsoft’s LLM2CLIP enhances the alignment ability of text and images through contrastive learning [32].

General LLMs are driving rapid transformations in the field of artificial intelligence. With advancements in efficient distributed training, model compression, and cross-modal knowledge alignment, LLMs are poised to play a crucial role in real-world applications, accelerating intelligent transformation across industries such as healthcare, finance, and education.

2.2. Med-LLMs

LLMs have created unprecedented opportunities for AI-driven advancements in the medical field. The exploration of medical LLMs spans a wide range of aspects, from assisting clinicians in making more accurate decisions to improving the quality and effectiveness of patient care. This includes enhancing medical knowledge comprehension, improving diagnostic accuracy, and providing personalized treatment recommendations. Transforming a general-domain LLM into a professional medical LLM is like training it to become a “doctor,” involving multiple steps: prompt engineering, specific fine-tuning, and retrieval-augmented generation (RAG) [33].

Existing LLMs in the medical field, such as Med-PaLM [34], contribute to advancements in healthcare through their unique design goals, architectures, and capabilities. These models jointly drive the development of medical large language models (Med-LLMs) and strengthen their applications in healthcare, providing strong support for future medical practice and research. BioBERT [35] is a variant of BERT specifically trained on biomedical texts, designed to understand and process medical and biological information more effectively than general models. PubMedBERT [36], trained on PubMed abstracts, can assist researchers in quickly filtering through vast volumes of scientific literature to find relevant information. Med-PaLM [34] is specifically designed for medical applications and is trained on extensive medical datasets. It can be used for tasks such as medical text generation and information extraction, generating detailed medical reports, and assisting in clinical decision-making. Med-PaLM2 [37], an upgraded version of Med-PaLM, is trained on a larger-scale medical dataset to further improve its performance in medical applications. ChatDoctor [38] is a conversational AI model designed to assist with medical consultations, capable of answering health-related questions and providing preliminary advice based on symptoms. “BenTsao” [39] is a language model tailored for medical applications. It can handle tasks such as medical text generation and information retrieval. The ShenNong Traditional Chinese Medicine LLM focuses on the TCM domain. It is trained on a comprehensive collection of TCM texts, covering ancient classics, modern research papers, clinical practice guidelines, etc. It supports TCM practitioners in diagnosing and treating patients based on TCM theories, assists in the formulation of herbal prescriptions, and contributes to the education of both practitioners and patients.
Med-Gemini [40] is trained based on a bilingual corpus of medical literature, clinical records, and patient medical records, capable of handling medical texts in both English and Chinese. It facilitates cross-cultural medical communication, supports bilingual healthcare environments, and aids in accurately translating medical documents. Health-LLM [41] integrates retrieval-augmented generation (RAG) with medical knowledge bases to significantly improve diagnostic accuracy. By combining health report analysis and applying the XGBoost prediction model, it can generate customized and personalized health recommendations.

The above-mentioned medical LLMs have demonstrated tremendous potential in enhancing medical information processing and clinical decision support. With the continuous advancement of cross-disciplinary cooperation and technological progress, medical LLMs are expected to provide solid support for building an intelligent, precise, and efficient medical service system and to drive the medical and health industry into a new stage of development.

2.3. Summary of LLMs in EHM

LLMs demonstrate multi-dimensional innovative potential in the field of elderly health management, driving a paradigm shift in smart elderly care. The Google Health team has validated the feasibility of LLMs in medical knowledge encoding and clinical reasoning through Med-PaLM [42]. Similarly, Clinical Camel, an open expert-level medical LLM fine-tuned on LLaMA-2 using dialogue-based knowledge encoding, has demonstrated superior performance to GPT-3.5 on multiple medical QA benchmarks, highlighting the growing feasibility of instruction-tuned models in clinical domains [43]. PMC-LLaMA adopts a data-centric strategy by integrating 4.8 million biomedical articles and 30,000 medical textbooks for instruction fine-tuning, producing a lightweight yet high-performing open-source medical model that surpasses ChatGPT on several benchmarks [44]. In the medical dialogue domain, knowledge-graph-assisted LLMs combine UMLS-based entity reasoning with large-scale generative models to produce clinically accurate and context-rich conversations, as shown in studies using the MedDialog and CovidDialog datasets [45]. Additionally, GatorTron, trained on over 90 billion words of de-identified clinical notes, demonstrates how scaling up model size significantly improves performance on core clinical NLP tasks such as NLI and medical QA [46]. These international studies highlight diverse strategies for optimizing LLMs in healthcare, yet most remain focused on English-language or clinical applications.

Breakthroughs in multimodal learning frameworks provide a technical path for integrating data from wearable devices, voice, and text, significantly improving monitoring accuracy in scenarios such as fall risk prediction [47]. The privacy protection framework based on federated learning [48] points out a technical optimization direction for enhancing the privacy and security of elderly-friendly LLM systems. In the field of chronic disease management, LLMs combined with the MIMIC-IV dataset are used to develop prescription prediction models, advancing electronic health record (EHR) analysis [49]. For mental health support, LLM-based conversational systems or voice assistants help reduce feelings of loneliness and social isolation among the elderly [50]. In daily monitoring scenarios, a real-time fall detection system, powered by smart home sensors and motion trajectory analysis, exemplifies the innovation and responsibility of contemporary technology in safeguarding the independent living and life safety of the elderly [51].

The current research trends indicate that LLMs are evolving from simple question-answering tools into multifunctional systems encompassing “data integration, decision support, and emotional interaction”. While several international studies have explored LLMs for healthcare tasks, most focus on English-language, hospital-centric applications. In contrast, this study addresses the underrepresented scenario of Chinese-language elderly health management in non-clinical public health contexts. This work extends the applicability of domain-specific LLMs to broader global health populations, especially in aging societies. This study covers the entire process of building a knowledge-enhanced large language model and a professional corpus. It applies comprehensive knowledge of elderly health management and innovatively integrates a knowledge retrieval mechanism with supervised fine-tuning strategies, enhancing generation through knowledge-based retrieval and employing hybrid supervised fine-tuning to achieve deep alignment with domain-specific knowledge. This approach benefits both elderly individuals and caregivers.

2.4. Comparison with Knowledge-Graph-Based Methods

Recent advancements in clinical artificial intelligence have highlighted the potential of knowledge graphs (KGs) for structured medical reasoning, particularly in diagnosis prediction and entity relationship modeling [52,53]. While KG-based frameworks and our proposed EHMQA-GPT both aim to enhance domain-specific knowledge utilization, they diverge significantly in methodology, scalability, and application focus. Below, we contextualize the novelty and effectiveness of our approach against KG-driven paradigms.

2.4.1. Methodological Contrast

- (1) Knowledge Representation

KGs rely on structured triples (e.g., ⟨Hypertension, causes, Stroke⟩) extracted from medical databases or through rule-based systems. This enables clear logical reasoning but requires a significant amount of manual alignment, and it makes unstructured or constantly changing knowledge difficult to handle. EHMQA-GPT, on the other hand, utilizes semantic vector retrieval (FAISS + LangChain) to dynamically integrate knowledge from unstructured text (such as journal papers and guidelines), without being limited by fixed patterns.

- (2) Reasoning Mechanisms

KGs use graph neural networks (GNNs) or rule engines to traverse pre-defined relationships, excelling in tasks such as differential diagnosis. EHMQA-GPT combines retrieval-augmented generation (RAG) with supervised fine-tuning (SFT) to achieve context-aware, open-ended question answering, suitable for applications in elderly health management.

- (3) Operational Flexibility

KGs are limited by static update cycles; integrating new discoveries (such as drug interactions) requires redesigning the graph structure. EHMQA-GPT, however, achieves real-time knowledge updates through incremental indexing technology for FAISS vector databases, ensuring access to the latest research results and guidelines.
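The incremental-update idea in item (3) can be illustrated with a toy in-memory vector index. This is a plain-Python stand-in, not FAISS or LangChain: the class `ToyVectorIndex`, the bag-of-words `embed` function, and the sample texts are all hypothetical, chosen only to show that newly added entries become searchable without rebuilding the existing ones.

```python
import math
from collections import Counter

def embed(text):
    """Hypothetical embedding: L2-normalized word counts (stand-in for a real model)."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {word: c / norm for word, c in counts.items()}

def cosine(u, v):
    """Dot product of two normalized sparse vectors, i.e., their cosine similarity."""
    return sum(w * v.get(word, 0.0) for word, w in u.items())

class ToyVectorIndex:
    def __init__(self):
        self.entries = []  # list of (vector, original text)

    def add(self, texts):
        """Append new entries; existing entries are left untouched."""
        for t in texts:
            self.entries.append((embed(t), t))

    def search(self, query, k=1):
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [t for _, t in ranked[:k]]

index = ToyVectorIndex()
index.add(["blood pressure monitoring at home", "fall prevention exercises"])

# A new finding arrives later and is appended incrementally.
index.add(["new drug interaction warning for statins"])
after = index.search("drug interaction warning")
```

In this sketch the update cost depends only on the number of new entries, which is the property that distinguishes retrieval-based knowledge updates from redesigning a graph schema.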

2.4.2. Novelty and Synergy

While KG-based methods excel in structured reasoning (e.g., differential diagnosis), EHMQA-GPT addresses complementary challenges.

- (1) Handling Unstructured Data

Clinical notes and guidelines often lack formal structure, making KG alignment error-prone. Our retrieval mechanism bypasses this bottleneck by directly leveraging raw text.

- (2) Cost-Efficiency

Manual KG curation is resource-intensive, especially for niche domains like elderly health. Our automated procedure reduces dependency on expert annotation.

- (3) Dynamic Adaptation

KGs struggle to incorporate temporal updates (e.g., new drug interactions). LangChain-enabled retrieval ensures access to the latest EHM-KB entries.

KG-based methods prioritize diagnosis prediction via graph neural networks (GNNs) or rule-based reasoning, often requiring pre-aligned entity embeddings. However, EHMQA-GPT is optimized for open-ended QA and personalized recommendations, rather than structured diagnosis prediction.

3. EHMQA-GPT Data Collection and Processing

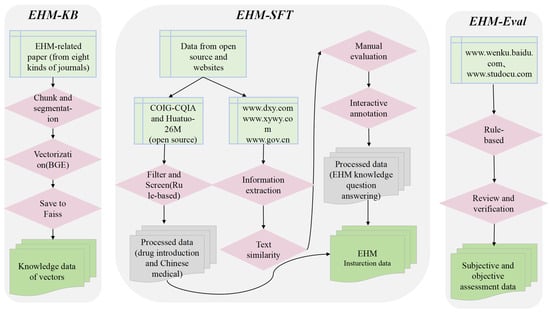

In this paper, we constructed three corpora for different training stages of EHMQA-GPT: EHM-KB, EHM-SFT, and EHM-Eval. EHM-KB consists of a large volume of unlabeled raw texts, aiming to inject elderly health management professional knowledge into the large language model. EHM-SFT is composed of high-quality instruction data, aiming at enhancing the understanding ability of the model in professional tasks. EHM-Eval is used to evaluate and compare the effectiveness of different large language models in professional tasks. All data used in the corpus were collected from publicly available and authorized sources, and we confirm that we have the legal and ethical rights to use them for research purposes. The corpus construction framework is shown in Figure 3.

Figure 3.

The framework for EHM corpus construction.

3.1. EHM Corpus: EHM-KB

According to statistics, chronic non-communicable diseases (i.e., chronic diseases) account for 86.6% of total deaths in China, and they are more prevalent among the elderly. Chronic diseases such as hypertension, diabetes, and cardiovascular and cerebrovascular diseases not only affect the quality of life of the elderly, but also impose a heavy burden on families and society. Therefore, we leveraged professional academic literature to enrich the large language model with comprehensive knowledge of chronic diseases in the elderly. Specifically, we collected 500 relevant papers from 10 journals related to elderly health management, stored them in PDF format, and compiled them into EHM-KB. The selection of these papers followed a structured process: we first chose authoritative journals in the field of geriatrics and elderly health management, then applied keyword filtering for relevance (e.g., “elderly care”, “chronic diseases”, “rehabilitation”, “health policy”), and focused on recent publications from 2022–2024. All selected papers were manually reviewed to ensure domain applicability and content quality. No private medical records or hospital-based clinical notes were used. The final dataset spans multiple subdomains, such as chronic disease management, mental health, daily living assistance, and elderly-friendly policy.

The collected data are not used for training. Instead, they are loaded, chunked, vectorized, and stored in the vector knowledge base, serving as the knowledge reserve for elderly health management consultations. The steps are as follows. The collected PDF files are loaded with UnstructuredFileLoader. The ChineseRecursiveTextSplitter then segments the loaded text into smaller chunks. We employ the BAAI General Embedding (BGE) semantic model to encode these chunks into high-dimensional semantic vectors [54], which are stored in the FAISS vector database with efficient indexing [55]. The Inverted File Index (IVF) algorithm is used to accelerate similarity searches, and metadata such as the original text and page numbers are associated with each vector to support result traceability [56].
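The inverted-file idea mentioned above can be sketched in a few lines: vectors are assigned to the cell of their nearest coarse centroid, and a query scans only the closest cells instead of the whole collection. The following pure-Python toy is an illustration under stated assumptions, not the FAISS implementation; the class `ToyIVF`, the hand-picked centroids (a real index would learn them by clustering), and the sample vectors and metadata are all hypothetical.

```python
def dist2(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

class ToyIVF:
    def __init__(self, centroids):
        self.centroids = centroids
        self.lists = [[] for _ in centroids]  # one inverted list per cell

    def _nearest_cells(self, vec, n):
        """Indices of the n centroids closest to vec."""
        order = sorted(range(len(self.centroids)),
                       key=lambda i: dist2(vec, self.centroids[i]))
        return order[:n]

    def add(self, vec, metadata):
        """Store the vector, with its metadata, in the cell of its nearest centroid."""
        cell = self._nearest_cells(vec, 1)[0]
        self.lists[cell].append((vec, metadata))

    def search(self, query, k=1, nprobe=1):
        """Scan only the nprobe nearest cells and return metadata of the k best hits."""
        candidates = []
        for cell in self._nearest_cells(query, nprobe):
            candidates.extend(self.lists[cell])
        candidates.sort(key=lambda item: dist2(query, item[0]))
        return [meta for _, meta in candidates[:k]]

index = ToyIVF(centroids=[[0.0, 0.0], [10.0, 10.0]])
index.add([0.5, 0.2], {"text": "chunk about hypertension", "page": 3})
index.add([9.8, 10.1], {"text": "chunk about fall risk", "page": 12})
index.add([1.0, 1.0], {"text": "chunk about diet", "page": 5})
hits = index.search([0.4, 0.3], k=1, nprobe=1)
```

Keeping the source text and page number next to each vector is what allows a retrieved chunk to be traced back to its original document. Raising `nprobe` trades speed for recall, since more cells are scanned per query.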

The UnstructuredFileLoader can load and read documents in various formats, such as pdf, json, and txt, and it can also track other metadata during usage. ChineseRecursiveTextSplitter inherits from RecursiveCharacterTextSplitter, modifies the keep_separator parameter passed in, and redefines the _split_text function. By default, it uses the separators ["\n\n", "\n", "。|！|？", "\.\s|\!\s|\?\s", "；|;\s", "，|,\s"].
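The recursive splitting strategy can be illustrated with a simplified sketch: the text is split on the coarsest separator first, and any piece that is still too long is split again with the next, finer separator. This is not the actual ChineseRecursiveTextSplitter code; the function `recursive_split`, the `max_len` parameter, and the sample text are hypothetical, only the first four separator patterns are used, and full-width Chinese punctuation is assumed in the third pattern.

```python
import re

# Coarse-to-fine separators (regular expressions), a subset of the default list.
SEPARATORS = ["\n\n", "\n", "。|！|？", r"\.\s|\!\s|\?\s"]

def recursive_split(text, separators=SEPARATORS, max_len=20):
    """Split text into chunks of at most max_len characters, keeping separators."""
    text = text.strip()
    if not text:
        return []
    if len(text) <= max_len:
        return [text]
    if not separators:
        # No separators left: fall back to fixed-width slices.
        return [text[i:i + max_len] for i in range(0, len(text), max_len)]
    sep, rest = separators[0], separators[1:]
    # With a capturing group, re.split alternates [piece, separator, piece, ...].
    parts = re.split(f"({sep})", text)
    pieces = [parts[i] + (parts[i + 1] if i + 1 < len(parts) else "")
              for i in range(0, len(parts), 2)]
    chunks = []
    for piece in pieces:
        # Pieces that are still too long are split with the finer separators.
        chunks.extend(recursive_split(piece, rest, max_len))
    return chunks

sample = "老年人应定期测量血压。跌倒风险需要评估！\nEat less salt. Walk daily."
chunks = recursive_split(sample, max_len=15)
```

Attaching each separator to the piece that precedes it keeps sentence-ending punctuation with its sentence, which is the role the keep_separator behavior plays in the description above. Unlike a production splitter, this sketch does not merge short neighboring pieces back together.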

3.2. Instruction Tuning Corpus: EHM-SFT

3.2.1. Dataset Overview

The instruction fine-tuning dataset consists of professional instruction data tailored to elderly health management. The dataset is constructed from a hybrid combination of the following three sources: (1) web-crawled QA pairs from authoritative Chinese medical websites; (2) LLM-assisted information extraction, where a base model was prompted to extract structured instruction–answer pairs from unstructured medical content; (3) open-source professional datasets, including COIG-CQIA and Huatuo-26M. This approach ensures domain relevance and originality, because most of the instruction data are custom-generated or have been transformed through multiple layers of screening, formatting, and verification to adapt to the elderly health context. Detailed information is provided in Table 1. The rightmost column of Table 1 indicates the number of final instruction QA pairs retained after preprocessing, filtering, and formatting.

Table 1.

Statistics of instruction tuning data.

3.2.2. Professional Instruction Tuning Data

In this section, the construction strategy of the professional instruction data will be elaborated in detail. Unlike general instruction data, expert instruction data are used to guide the model to align with domain experts. The data collection methods include two approaches: information extraction based on LLMs and collection from open-source datasets.

- (1)

- Construction of the dataset based on information extraction using LLMs

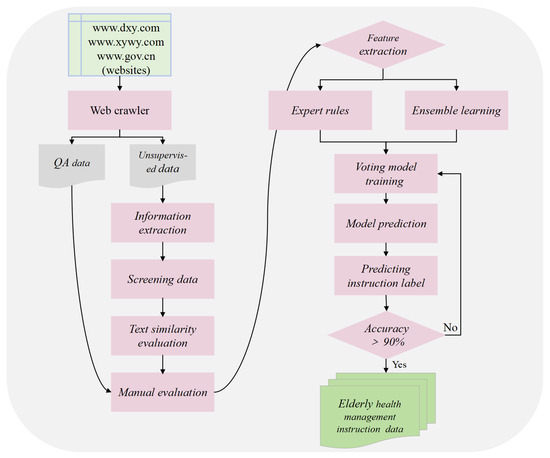

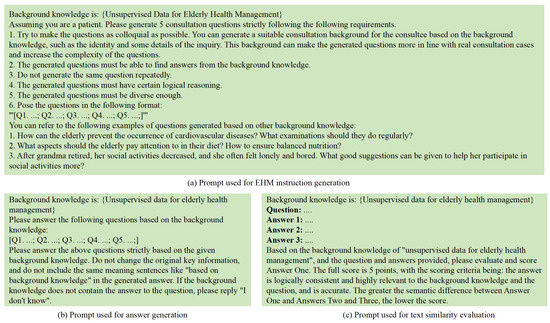

The quality of the dataset directly determines the performance of the fine-tuned model. To ensure data quality, we implemented a comprehensive quality control process across all stages, including data collection, information extraction, text similarity evaluation, manual evaluation, and human–computer interaction annotation. Data collection was conducted following a standardized procedure to ensure the authority, reliability, and diversity of data sources. The crawled data underwent multiple rounds of filtering to remove irrelevant content, formatting errors, and incomplete information, ensuring data integrity. Prompt words are carefully designed to guide the LLM to understand and summarize the collected information, and the data are extracted in accordance with the designed prompt word template. This process generated question–answer pairs highly relevant to elderly health management, with strong specialization and accuracy. Text similarity evaluation was used to ensure the relevance and accuracy of the generated content. Manual evaluation is used to conduct detailed verification of the data, and data pairs that do not meet the requirements are manually annotated to reduce interference factors and ensure the consistency of data labels. In the human–computer interaction annotation stage, automatic annotation is carried out based on expert rule models, and data pairs that do not meet the requirements are eliminated to maintain the dataset’s high quality and reliability. As a result, we obtained a total of 37,769 professional instructions. Figure 4 illustrates the dataset creation process through information extraction.

Figure 4.

The dataset creation process mainly consists of an information extraction stage, text similarity evaluation, a manual evaluation stage, and a human–computer interaction annotation stage.

The process of creating the dataset is divided into the following six main steps.

- Data crawling: This step used web crawlers to collect question–answer data and unsupervised data (i.e., unstructured text data) related to elderly health management from authoritative websites such as www.dxy.com, www.xywy.com, and www.gov.cn. The crawled data cover aspects including professional knowledge of elderly diseases, common health questions, health care and wellness, prevention and nursing, and medical insurance service policies. The question–answer data pairs are stored in JSON format, while the unsupervised data are split into individual records by the delimiter “@@@@@” and saved as txt files.

- Information extraction and data generation: Leveraging the powerful information extraction capabilities of LLMs, the acquired unsupervised data are used as background knowledge input. Prompt engineering is employed to design instruction prompts (see Figure 5a), guiding the model to generate instruction questions with strong domain characteristics that are closely related to the background knowledge. Additionally, answer generation prompts are designed (see Figure 5b) to instruct the model to generate corresponding answers based on the background knowledge and the previously generated instruction questions.

Figure 5. The process of generating instruction-tuning data based on unsupervised data for elderly health management.

- Data cleaning and screening: A combination of regular expressions and manual review was applied to clean and filter the instruction data. Specifically, the following criteria were used: (1) eliminating duplicate QA pairs based on semantic similarity (cosine similarity threshold > 0.95); (2) discarding incomplete or empty answers; (3) filtering out formatting inconsistencies, such as missing question headers or malformed delimiters; and (4) removing out-of-domain content, including non-health-related queries or generic chatbot responses. These steps ensured that the resulting instruction dataset was consistent, medically relevant, and of high quality.

- Text similarity evaluation: The model is prompted to generate different answers based on the background knowledge and instruction questions (see Figure 5c). Text similarity evaluation prompts are designed to guide the model in assessing the text similarity between these answers. Instruction data with significant semantic differences, low accuracy, or weak domain relevance are identified and removed.

- Manual evaluation and annotation: Manual annotation, combined with semantic similarity features, provides a basis for model training and accuracy evaluation. Instruction data pairs closely related to elderly health management are assigned a label value of 0, while those with low or no relevance are assigned a label value of 1.

- Human–computer interaction annotation: During the manual evaluation and annotation stage, 10,000 pieces of data were randomly selected and annotated to form the initial sample set. Subsequently, an expert rule model was defined based on the manual evaluation criteria, and a voting classification model was trained on the initial sample set so that it could classify data with high accuracy. In the human–computer interaction annotation stage, the remaining 27,769 pieces of data were annotated interactively. Finally, the instance labels of all data were obtained, and the dataset was completed.
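The record splitting and cleaning criteria described in the steps above can be illustrated with a minimal sketch. It is not the authors' pipeline: the field names `question` and `answer`, the function names, and the character-bigram `toy_embed` stand-in for a real sentence-embedding model are all assumptions made for illustration. Only the “@@@@@” delimiter and the 0.95 cosine threshold come from the text.

```python
import math
import re

DELIMITER = "@@@@@"  # record separator named in the data crawling step


def split_records(raw_text):
    """Split a crawled txt dump into non-empty records."""
    return [part.strip() for part in raw_text.split(DELIMITER) if part.strip()]


def toy_embed(text):
    """Hypothetical stand-in embedding: character-bigram counts.
    A real pipeline would use a sentence-embedding model instead."""
    text = re.sub(r"\s+", "", text.lower())
    vec = {}
    for i in range(len(text) - 1):
        gram = text[i:i + 2]
        vec[gram] = vec.get(gram, 0) + 1
    return vec


def cosine(u, v):
    """Cosine similarity between two sparse count vectors (dicts)."""
    dot = sum(w * v.get(g, 0) for g, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def clean_pairs(pairs, embed=toy_embed, threshold=0.95):
    """Drop pairs with an empty question or answer, then drop near-duplicates
    whose similarity to an already kept pair exceeds the threshold."""
    kept, kept_vecs = [], []
    for pair in pairs:
        question = pair.get("question", "").strip()
        answer = pair.get("answer", "").strip()
        if not question or not answer:
            continue  # criterion (2): incomplete or empty content
        vec = embed(question + " " + answer)
        if any(cosine(vec, other) > threshold for other in kept_vecs):
            continue  # criterion (1): duplicate by cosine similarity
        kept.append({"question": question, "answer": answer})
        kept_vecs.append(vec)
    return kept
```

The pairwise comparison is quadratic in the number of kept pairs, which is acceptable for a sketch; at the scale of tens of thousands of instructions an approximate nearest-neighbor index would be the more practical choice.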

(2) Open-Source Professional Datasets

COIG-CQIA is a high-quality Chinese instruction-tuning dataset jointly developed by researchers from multiple academic institutions, including the Shenzhen Institutes of Advanced Technology of the Chinese Academy of Sciences, the Institute of Automation of the Chinese Academy of Sciences, Peking University, and the University of Science and Technology of China. The dataset was obtained by carefully screening and processing manually written corpora from the Chinese internet, with the aim of better reflecting the interaction patterns of real-world Chinese users. Its effectiveness has been validated in a prior study, which demonstrated significant improvements not only in a model’s ability to understand and execute complex Chinese instructions but also in human evaluation and safety benchmark tests [57]. Therefore, we incorporated 6971 disease-related instructions from the COIG-CQIA dataset to enhance the diversity of our dataset and further align the language-understanding ability of the model with human preferences.

We also extracted professional data from the existing open-source dataset Huatuo-26M to enrich the diversity of our dataset. Huatuo-26M contains 26 million question–answer pairs covering a wide range of topics, including common diseases, chronic diseases, and complex illnesses, and its question–answer format is close to real-world medical diagnostic scenarios [58]. We used a rule-based method to extract relevant instructions from it: we collected a set of keywords related to elderly health management, such as “elderly”, “chronic diseases”, and “care”, and retrieved the data containing these keywords from the dataset. In this way, we obtained 49,899 instructions.
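A minimal sketch of such a keyword rule is shown below. The three keywords are the English renderings quoted above; the actual keyword list (and its Chinese wording) is not given in the text, and the record fields `question` and `answer` are assumed for illustration.

```python
# Example keywords quoted in the text; the full list used by the authors is not given.
KEYWORDS = ("elderly", "chronic diseases", "care")


def mentions_keyword(record, keywords=KEYWORDS):
    """Return True if the question or answer contains any keyword (case-insensitive)."""
    text = (record.get("question", "") + " " + record.get("answer", "")).lower()
    return any(keyword in text for keyword in keywords)


def extract_relevant(records, keywords=KEYWORDS):
    """Keep only the records that mention at least one keyword."""
    return [record for record in records if mentions_keyword(record, keywords)]
```

Plain substring matching is deliberately permissive: “care” also matches “healthcare” or “careful”, so a rule of this kind favors recall and leaves precision to later screening.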

3.3. Evaluation Corpus: EHM-Eval

To assess the validity and accuracy of our trained model in elderly health management consultations, as well as its proficiency in understanding and applying this knowledge, we constructed an evaluation benchmark called EHM-Eval. This benchmark provides a comprehensive assessment of the model’s capabilities from both objective and subjective dimensions.

To objectively and quantitatively evaluate the model’s knowledge comprehension and reasoning abilities in the field of elderly health management, we constructed an objective evaluation dataset. This dataset consists of multiple-choice questions, with each question having only one correct answer. The objective evaluation dataset is sourced from the Studocu website (www.studocu.com), where we obtained past health care exam papers. After collecting the dataset, we manually sorted and checked all questions and corrected any errors to ensure its validity in model evaluation.

Additionally, to thoroughly evaluate the model’s understanding and application of elderly health management knowledge, we constructed a subjective evaluation system based on a question-answering paradigm. This system simulates the assessment process of subjective exam questions, with the dataset sourced from authoritative resources such as the Baidu Document Library and the vocational qualification examination for elderly care workers. To ensure data quality, all questions underwent rigorous expert review and validation.

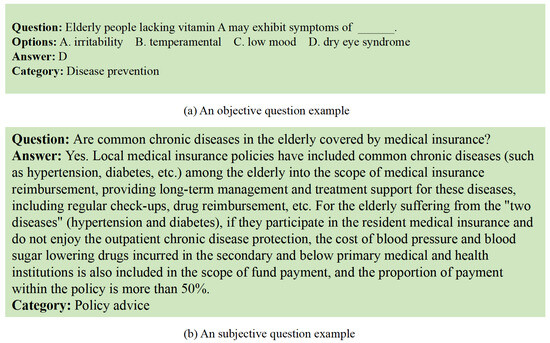

The evaluation dataset consists of 720 objective multiple-choice questions and 180 subjective questions, with detailed information provided in Table 2. To ensure the comprehensiveness and domain adaptability of model evaluation, we systematically divided the test questions into six categories: disease prevention, daily life, mental health, social adaptation, healthcare, and policy advice. Each category of questions underwent rigorous screening and structured processing, with standard reference answers provided by domain experts. Figure 6 presents representative examples of both objective and subjective question types.

Table 2.

Statistics of the evaluation data.

Figure 6.

Illustration of objective and subjective questions: (a) an objective question example and (b) a subjective question example.

4. The Training Process of EHMQA-GPT

This section provides a detailed description of the training process of EHMQA-GPT. Developing a professional large language model involves significant computational costs and extensive data requirements. In comparison, customizing a general-domain pre-trained LLM offers a more feasible solution. Therefore, we selected ChatGLM3-6B as the base model. ChatGLM3, jointly developed by Zhipu AI and Tsinghua University’s KEG Laboratory, improves upon ChatGLM2 in several aspects, including data diversity, training steps, and training strategy optimization. As a result, it demonstrates superior performance across multiple datasets in dimensions such as semantic understanding, mathematical calculation, logical reasoning, code generation, and knowledge acquisition. The model training process consists of two sub-processes: supervised fine-tuning and knowledge base connecting.

4.1. EHMQA-GPT Supervised Fine-Tuning

In the field of natural language processing, domain adaptation typically follows the “pre-training–fine-tuning” paradigm. This approach first builds a base language model using general-domain data and then optimizes it with domain-specific data. The paradigm avoids the resource cost of training an independent model for every task and significantly enhances the reusability of the technology. However, with the emergence of language models with very large parameter counts, traditional full-parameter fine-tuning has become computationally expensive. Against this backdrop, parameter-efficient fine-tuning (PEFT) techniques have emerged. By freezing the core parameters and optimizing only key submodules, PEFT can reduce computation and storage overhead by over 90% [59]. Mainstream PEFT methods include LoRA, soft prompt tuning, adapters, and prefix-tuning. Among these, low-rank adaptation (LoRA) is one of the most commonly used and is well established in fields such as medicine and law [60]. In our work, we also adopt LoRA for supervised fine-tuning of ChatGLM3-6B for domain adaptation.

LoRA adapts a model efficiently by optimizing task-specific low-rank matrices while freezing the original model parameters. Multiple task-specific adaptations can be composed or swapped without increasing inference latency. During LoRA training, the original weight matrix W0 is frozen: it participates in both forward and backward propagation, but no gradients are computed for it and it is not updated. The model therefore learns only the low-rank matrices A and B rather than updating W0 directly, which significantly reduces training resource consumption. At inference time, the product of B and A can be merged into W0, so the adapted model runs as efficiently as the original.
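The merge property can be checked on a toy example. The sketch below uses plain Python lists and made-up 2×3 weights; the helper names and the `scaling` argument (the usual alpha/r factor, set to 1 here) are illustrative assumptions and say nothing about the hyperparameters used for EHMQA-GPT.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def scale(a, s):
    """Multiply every entry of a matrix by the scalar s."""
    return [[s * x for x in row] for row in a]


def lora_forward(w0, a, b, x, scaling=1.0):
    """Training-time view: h = W0 x + scaling * B (A x), with W0 frozen."""
    return add(matmul(w0, x), scale(matmul(b, matmul(a, x)), scaling))


def merge(w0, a, b, scaling=1.0):
    """Inference-time view: fold the low-rank update into one matrix W0 + scaling * B A."""
    return add(w0, scale(matmul(b, a), scaling))


# Toy shapes: W0 is 2x3, B is 2x1, A is 1x3 (rank r = 1), x is a 3x1 column.
W0 = [[1, 0, 2], [0, 1, -1]]
B = [[0.5], [-1.0]]
A = [[1.0, 2.0, 0.0]]
x = [[1], [1], [1]]

h_adapter = lora_forward(W0, A, B, x)   # two paths: frozen weights plus adapter
h_merged = matmul(merge(W0, A, B), x)   # single merged matrix
```

Both paths yield the same output, which is why merging adds no inference latency. In this toy case the adapter holds r(d + k) = 3 trainable values against d·k = 6 in W0; the saving grows quickly with the matrix dimensions.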

To better align the model with knowledge in the field of elderly health management and enhance its instruction-following capabilities, LoRA is used for supervised fine-tuning on the EHM-SFT dataset. The fine-tuned model is intended to provide professional and accurate services for elderly people and their caregivers; its effectiveness is verified in the evaluation section.

4.2. Knowledge Base Connecting

LangChain, as an application development framework for large language models, offers a complete toolchain and component library through its modular design. Its core value lies in three aspects: firstly, it establishes a standardized LLM interaction pipeline, supporting unified API calls, context management, and dialogue state tracking for mainstream models; secondly, through the chain orchestration mechanism, it allows flexible combinations of components like retrieval-augmented generation, memory management, and tool invocation, enabling the structured construction of complex business logic; thirdly, it integrates heterogeneous data source interfaces, supporting seamless integration of external data such as PDFs, databases, and APIs via Document Loaders (such as UnstructuredFileLoader) and utilizes text splitters (such as ChineseRecursiveTextSplitter) for semantically-aware document chunking.

In the context of elderly health management, these capabilities are put to direct use. Supervised fine-tuning adapts ChatGLM3-6B to specialized scenarios such as chronic disease management, rehabilitation exercises, mental health, nutrition, and daily care. Through the LangChain architecture, the fine-tuned model is then integrated with the semantic vector knowledge base for elderly health management, which supplies the model with additional professional knowledge.
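The retrieval step behind this integration can be sketched without any framework. The code below is a simplified illustration, not the LangChain implementation used in the paper: the three-dimensional vectors, the entry fields `text` and `vector`, and the prompt wording are all hypothetical, and in practice an embedding model would produce both the stored vectors and the query vector.

```python
import math


def cosine(u, v):
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve(query_vec, knowledge_base, k=2):
    """Return the texts of the k entries most similar to the query vector."""
    ranked = sorted(
        knowledge_base,
        key=lambda entry: cosine(query_vec, entry["vector"]),
        reverse=True,
    )
    return [entry["text"] for entry in ranked[:k]]


def build_prompt(question, passages):
    """Prepend the retrieved passages to the question (hypothetical prompt layout)."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"Known information:\n{context}\n\nQuestion: {question}\nAnswer:"


# Toy knowledge base with made-up vectors.
knowledge_base = [
    {"text": "Hypertension: monitor blood pressure daily.", "vector": [0.9, 0.1, 0.0]},
    {"text": "Fall prevention: install grab bars.", "vector": [0.0, 1.0, 0.0]},
    {"text": "Medical insurance reimbursement steps.", "vector": [0.0, 0.1, 0.9]},
]

passages = retrieve([1.0, 0.2, 0.0], knowledge_base, k=2)
prompt = build_prompt("How should I manage high blood pressure?", passages)
```

The resulting prompt would then be passed to the fine-tuned model, so that the answer can draw on the retrieved passages rather than on model parameters alone.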

5. The Evaluation of EHMQA-GPT

This section describes the evaluation methods and presents the evaluation results of EHMQA-GPT on EHM-Eval, along with a comparison to relevant baselines. We focus on models that allow transparent, reproducible, and domain-level evaluation within the scope of elderly health management in Chinese. We therefore selected three open-source Chinese LLMs (ChatGLM3-6B, DeepSeek R1-7B, and DoctorGLM) as comparative baselines. These models are suitable for direct comparison with EHMQA-GPT in terms of language coverage, domain relevance, and accessibility for fine-tuning.

5.1. Objective Task

EHM-Eval contains 720 objective multiple-choice questions (see Table 3), each with only one correct answer. For all baselines, we therefore constructed a prompt to obtain the answer to each question: “Which option is the best answer to this question: {question}? Options: {options}”. Accuracy is computed by comparing the answers obtained with the reference answers. Since the objective questions are categorized into different domains, we also computed the score for each domain to provide a comprehensive evaluation. To quantify performance reliability, we computed 95% confidence intervals for each model using bootstrapped sampling (1000 iterations). The results are shown in Table 3. EHMQA-GPT achieved significantly higher accuracy than the original ChatGLM3-6B in all six domains. DeepSeek R1-7B scored highest in the daily life category, which we attribute to its strong general capabilities. DoctorGLM performed well in healthcare-related domains but showed weaker cross-domain generalization. These results validate the effectiveness of the collected data and the model training strategy.

Table 3.

Evaluation results based on the objective tasks.
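The objective scoring procedure can be sketched as follows. The prompt template is the one quoted in Section 5.1; everything else (function names, the way options are joined, the percentile bootstrap, and the fixed seed) is an assumption made for illustration, since the text only states that 95% confidence intervals were obtained from 1000 bootstrap iterations.

```python
import random

# Prompt template quoted in Section 5.1.
PROMPT_TEMPLATE = (
    "Which option is the best answer to this question: {question}? Options: {options}"
)


def build_prompt(question, options):
    """Fill the template; joining options with spaces is an assumption."""
    return PROMPT_TEMPLATE.format(question=question, options=" ".join(options))


def accuracy(predictions, references):
    """Fraction of predicted option letters that match the reference answers."""
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)


def bootstrap_ci(flags, iterations=1000, level=0.95, seed=0):
    """Percentile bootstrap interval for the mean of 0/1 correctness flags."""
    rng = random.Random(seed)
    n = len(flags)
    # Resample the flags with replacement and record the accuracy of each resample.
    means = sorted(sum(rng.choices(flags, k=n)) / n for _ in range(iterations))
    lower = means[int((1 - level) / 2 * iterations)]
    upper = means[int((1 + level) / 2 * iterations) - 1]
    return lower, upper
```

Per-domain scores follow from applying `accuracy` and `bootstrap_ci` to the subset of questions in each of the six categories.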

5.2. Subjective Task

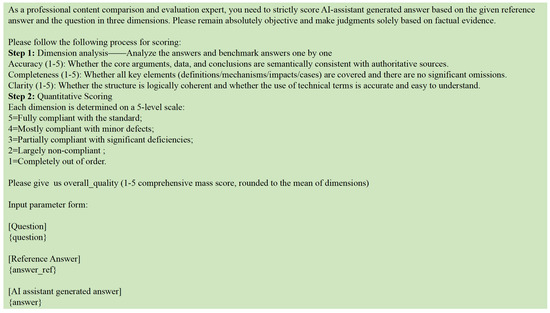

Unlike objective questions, evaluating subjective questions is often more complex. Each objective question has a single correct answer, whereas subjective questions typically have explanatory and open-ended answers. These answers require comprehensive evaluation considering multiple dimensions such as knowledge accuracy, logical coherence, and practical guidance. Evaluators must possess extensive domain expertise while minimizing potential biases. Therefore, in this paper, we provided answers from professionals as references for each subjective question. We used two evaluation methods to ensure objective and fair results. One method is to use GPT-4 as a judge to score the answers, while the other method is to invite multiple geriatric professionals to assess the answers.

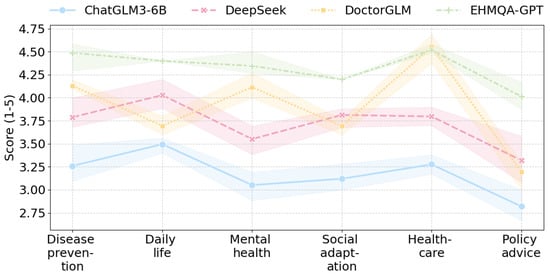

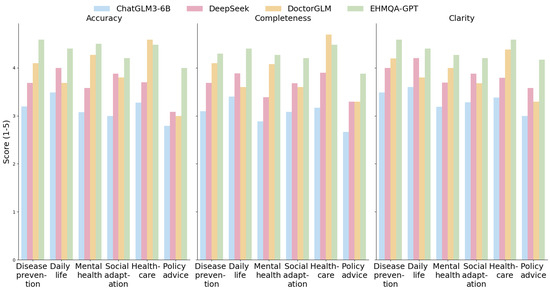

5.2.1. GPT-4 as a Judge

Among existing large language models, GPT-4, with its strong cross-domain cognition and reasoning capabilities, has become a common reference standard for evaluating subjective answers in professional fields. Studies have shown that its judgments are highly consistent with those of human experts in professional evaluation scenarios such as law [8]. On this basis, we established an evaluation system with three dimensions: accuracy (semantic consistency with the content and benchmark answers), completeness (coverage of key details), and clarity (logical rigor and coherence of expression). Structured prompts (shown in Figure 7) guide GPT-4 to evaluate each answer systematically on a scale of one to five points, where higher scores indicate better answer quality. As for the objective task, we computed 95% confidence intervals for each model using bootstrapped sampling (1000 iterations). The results are detailed in Table 4, Figure 8, and Figure 9.

Figure 7.

The prompt used for GPT-4 as a judge.

Table 4.

Evaluation results based on subjective tasks performed by GPT-4.

Figure 8.

The cross-domain performance trends of each model.

Figure 9.

Horizontal comparison of the three dimensions of accuracy, completeness, and clarity.

The experimental results show that EHMQA-GPT performs best across dimensions and categories, with significantly higher overall average scores (accuracy: 4.361, completeness: 4.253, clarity: 4.368), and it particularly excels in the policy advice category. DoctorGLM demonstrates a strong advantage in the healthcare vertical, with an average score of 4.586 in the healthcare category and an average completeness score of 4.096 in the disease prevention category. However, it shows limitations in cross-domain generalization (policy advice, clarity: 3.292). The general model, DeepSeek R1-7B, exhibits balanced capabilities: its average clarity score (3.858) approaches that of EHMQA-GPT, showing its strength in logical expression, but it covers specialized details less well (e.g., mental health, completeness: 3.385). Compared to ChatGLM3-6B, EHMQA-GPT improves on all evaluation metrics and demonstrates significant domain-specific advantages, supporting the feasibility and effectiveness of the experimental approach.

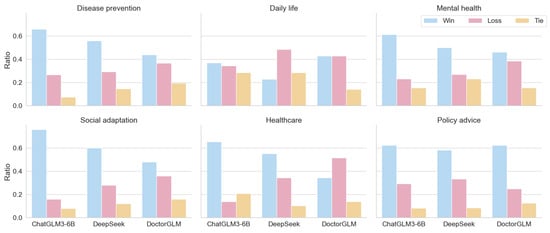

5.2.2. Assessment Based on Professionals

To strengthen the domain expertise behind the evaluation results, we invited 10 geriatric specialists to participate in the evaluation. A double-blind evaluation paradigm was employed: the answers generated by the comparison models and by EHMQA-GPT were randomly ordered and anonymized. For each pair, experts independently chose one of three options: A is better, B is better, or A and B are comparable. The detailed results can be found in Table 5 and Figure 10. The results show that, for subjective tasks, EHMQA-GPT provided more satisfactory answers, demonstrating the effectiveness of the specialized domain dataset construction and model fine-tuning.

Table 5.

Evaluation results based on the subjective tasks by professionals. Win represents the ratio by which EHMQA-GPT outperforms the corresponding model.

Figure 10.

The distribution of win, loss, and tie for three models in the classification of corresponding scenarios.
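Win, tie, and loss ratios of this kind can be tallied from the blinded judgements once the random A/B assignment is resolved. The sketch below is an illustrative assumption about the bookkeeping, not the authors' script: each record is taken to be a pair of the expert's choice ("A", "B", or "comparable") and the side on which the EHMQA-GPT answer was shown.

```python
from collections import Counter

OUTCOMES = ("win", "tie", "loss")


def to_outcome(judgement, ehmqa_side):
    """Map a blinded judgement to an outcome from EHMQA-GPT's perspective."""
    if judgement == "comparable":
        return "tie"
    return "win" if judgement == ehmqa_side else "loss"


def win_tie_loss(records):
    """Return the share of wins, ties, and losses over all judgements."""
    counts = Counter(to_outcome(judgement, side) for judgement, side in records)
    total = sum(counts[outcome] for outcome in OUTCOMES)
    if total == 0:
        raise ValueError("no judgements to aggregate")
    return {outcome: counts[outcome] / total for outcome in OUTCOMES}
```

Grouping the records by question category before calling `win_tie_loss` would give a per-scenario breakdown of the kind plotted in Figure 10.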

5.3. Error Analysis

To better understand the limitations of EHMQA-GPT, we conducted a qualitative error analysis on a random sample of incorrect predictions for the objective task and low-rated answers for the subjective task.

For the objective task, most errors were concentrated in the social adaptation and policy advice categories. Common issues included:

- (1) Ambiguous subject references in the instructions;

- (2) Lack of factual grounding, especially for recent policy updates not yet reflected in the knowledge base;

- (3) Lexical overlap-based confusion, where the model selected the distractor due to surface-level similarity.

For the subjective task, GPT-4 and human raters both downgraded responses that:

- (1) Rephrased but failed to complete key factual elements;

- (2) Overgeneralized advice without considering an elderly-specific context.

Linking these errors to actual elderly health management scenarios, the analysis indicates that excessive clinical intervention should be avoided and that the model must always be used only as a consultation tool rather than as a diagnostic or rehabilitation system. These insights suggest that future improvements should focus on question disambiguation, better integration of policy documents, and factual consistency training.

6. Discussion

6.1. Review of Methods and Analysis of Experimental Limitations

This paper constructs a professional corpus for elderly health management by integrating multi-source data; the corpus comprises EHM-KB, EHM-SFT, and EHM-Eval. EHM-KB integrates journal papers and authoritative website content, which are semantically segmented and vectorized for storage. EHM-SFT combines instructions generated through LLM-based information extraction with professional instructions drawn from open-source datasets. The EHM-Eval benchmark evaluates the model’s capabilities in both subjective and objective dimensions. Building on the ChatGLM3-6B base model, a knowledge retrieval mechanism is introduced to enhance domain knowledge representation, and LoRA is used for supervised fine-tuning. This parameter-efficient fine-tuning strategy aligns the model closely with elderly health management knowledge.

However, this study still has several limitations in its methods and experiments. (1) Construction of the corpus relies primarily on specific journal papers and data crawled from the internet, without incorporating multi-source information such as medical images or sensor data. The geographic and cultural coverage is also limited: non-Chinese elderly populations (e.g., international healthcare practices) are underrepresented in the current dataset. The timeliness of the data sources is somewhat limited, which may affect the breadth and depth of the professional knowledge covered and restrict the model’s potential. In addition, the current model is trained and evaluated only in Chinese and focuses specifically on elderly health scenarios, which limits its applicability to multilingual or broader clinical contexts. (2) While LoRA is used in supervised fine-tuning to reduce computational costs, the localized nature of its parameter adjustments may limit the model’s ability to align deeply with complex domain knowledge. Because the training corpus is predominantly in Chinese, generalization to cross-linguistic and cross-cultural scenarios has also not been validated. (3) Since we were involved in both the construction of the instruction data and the design of part of the evaluation set, there may be bias in the evaluation process. To address this, we ensured that objective test questions were collected from authoritative third-party sources and underwent manual validation. Subjective evaluations were conducted by domain experts under a double-blind review mechanism and further validated through GPT-4 automatic scoring. These multi-dimensional evaluation strategies were adopted to mitigate bias and enhance the fairness and credibility of the results. (4) Although expert blind evaluations and GPT-4 automatic scoring were introduced for subjective ratings, the consistency of scoring standards among experts needs further quantification. Moreover, the semantic matching evaluation framework of GPT-4 lacks an external knowledge verification mechanism and so may not fully reflect the substantive accuracy of the answers. For policy-sensitive questions in particular, relying solely on semantic similarity can lead to evaluation bias. (5) The evaluation dataset is limited in size and does not comprehensively cover all subscenarios in elderly health management, which may affect the generalizability of the conclusions.

6.2. Transferability of the Research Methods

The domain-adaptive large language model training paradigm has become a key approach to enhancing the intelligence of professional services. The intelligent question-answering framework for elderly health management proposed in this study achieves domain specialization through a dual-stage collaborative mechanism of knowledge enhancement and instruction fine-tuning. Although the research focuses on the elderly health management domain, the data construction methodology proposed here has cross-domain transfer potential. This framework innovatively integrates external knowledge injection with supervised parameter tuning, constructing a hierarchical data system to achieve progressive integration of domain-specific knowledge.

In the supervised learning phase, the construction of fine-tuning instruction samples directly impacts the model’s optimization in dialogue interaction and task-parsing capabilities. For cross-domain application scenarios, researchers can refer to the strategy framework presented in this study: using unsupervised corpus data within the domain and leveraging high-performance commercial models (such as GPT-4) or open-source large language models (such as DeepSeek) to automate the construction of instruction data. During the fine-tuning data generation process, systematic constraints should be applied to the output content’s format, terminology system, and logical structure to ensure that the generated content aligns with the professional expression paradigms of the target domain.

7. Conclusions and Future Directions

This paper presents EHMQA-GPT, a large language model that integrates knowledge related to elderly health management drawn from text data. The study first constructs a comprehensive elderly health management corpus, which includes EHM-KB, EHM-SFT, and EHM-Eval. It then introduces EHMQA-GPT, a large language model for elderly health management. Specifically, EHMQA-GPT begins by adapting a general-domain Chinese language model, ChatGLM3, and connecting it to an external knowledge base. The model is then further fine-tuned using instruction data for supervised learning.

The experimental results demonstrate that EHMQA-GPT shows more robust performance in addressing professional elderly health management consultation questions compared to other models, both objectively and subjectively, indirectly validating the effectiveness of the corpus construction and training strategies presented in this paper. The integration of LangChain with EHMQA-GPT has significantly improved the precision of domain-specific question answering. This setup provides a reliable technological foundation for building intelligent elderly care systems. It is important to note that, although the model demonstrates promising accuracy in controlled evaluations, its current performance is not yet adequate for high-stakes clinical use. The model is currently intended for low-risk health management and decision-support scenarios.

This study still has room for further exploration in multimodal data integration, model architecture optimization, and cross-scenario generalization. Firstly, the domain of elderly health management contains many other important data resources in different forms, such as medical images and audio data. Extracting and fusing these multimodal data, and updating knowledge dynamically so that the model can answer professional questions based on this information, would enhance the model’s reasoning and generalization ability and provide more comprehensive support for elderly users. Secondly, EHMQA-GPT is trained on a base model with only 6B parameters, which may limit answer completeness; models with more parameters generally tend to perform more robustly. Finally, half of the data in the constructed EHM-SFT were generated by guiding the large language model to perform information extraction, rather than relying entirely on manual annotation. As a result, the generated instruction data may not be as interpretable as fully manually annotated data. Collecting higher-quality instruction data will therefore be a key focus for future work.

Given that this study focuses on Chinese-language elderly health management, we acknowledge that the current model’s applicability is limited to a specific linguistic and domain context. In future work, we also plan to explore the adaptability of the EHMQA-GPT framework to broader health domains, such as pediatrics, mental health, and maternal care, by expanding the corpus to cover diverse subfields of medicine. Furthermore, we aim to investigate the cross-lingual scalability of our instruction-tuning and evaluation pipelines, leveraging multilingual instruction datasets and alignment strategies (e.g., multilingual LoRA, cross-lingual retrieval augmentation). This will allow us to assess the generalizability of our approach in multilingual, multicultural, and low-resource settings, where health information access remains a major challenge. Ultimately, such extensions will help bridge the digital health divide and support more inclusive, globally deployable language model systems for healthcare.

Author Contributions

Conceptualization, X.L., Y.D. and T.Z.; writing—original draft preparation, Y.D.; writing—review and editing, S.L., X.L., J.W. and Y.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China, grant number 2020YFB2104402, and the National Natural Science Foundation of China, grant number 72074209.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in Mendeley Data at https://data.mendeley.com/preview/v8kx55rb42?a=8ddd04b3-26d0-4581-bf47-168c80848961 (accessed on 27 May 2025), DOI: 10.17632/v8kx55rb42.1, reference number: Elderly_Health_Management.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Han, L.; Wu, F. COVID-19 Drives Medical Education Reform to Promote “Healthy China 2030” Action Plan. Front. Public Health 2024, 12, 1465781. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.; Ye, W.; Chen, X.; Li, Y.; Zhang, L.; Li, F.; Yao, N.; Gao, C.; Wang, P.; Yi, D.; et al. Spatio-Temporal Pattern, Matching Level and Prediction of Ageing and Medical Resources in China. BMC Public Health 2023, 23, 1155. [Google Scholar] [CrossRef]

- Min, B.; Ross, H.; Sulem, E.; Veyseh, A.P.B.; Nguyen, T.H.; Sainz, O.; Agirre, E.; Heintz, I.; Roth, D. Recent Advances in Natural Language Processing via Large Pre-Trained Language Models: A Survey. ACM Comput. Surv. 2023, 56, 1–40. [Google Scholar] [CrossRef]

- Zheng, J.; Ding, X.; Pu, J.J.; Chung, S.M.; Ai, Q.Y.H.; Hung, K.F.; Shan, Z. Unlocking the Potentials of Large Language Models in Orthodontics: A Scoping Review. Bioengineering 2024, 11, 1145. [Google Scholar] [CrossRef]

- Zhu, S.; He, C. Chinese News Classification Based on ERNIE and Attention Fusion Features. In Proceedings of the 2023 6th International Conference on Robot Systems and Applications, Wuhan, China, 22–24 September 2023; Association for Computing Machinery: New York, NY, USA, 2024; pp. 134–138. [Google Scholar]

- Che, T.-Y.; Mao, X.-L.; Lan, T.; Huang, H. A Hierarchical Context Augmentation Method to Improve Retrieval-Augmented LLMs on Scientific Papers. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 243–254. [Google Scholar]

- Liu, C.; Sun, K.; Zhou, Q.; Duan, Y.; Shu, J.; Kan, H.; Gu, Z.; Hu, J. CPMI-ChatGLM: Parameter-Efficient Fine-Tuning ChatGLM with Chinese Patent Medicine Instructions. Sci. Rep. 2024, 14, 6403. [Google Scholar] [CrossRef]

- Katz, D.M.; Bommarito, M.J.; Gao, S.; Arredondo, P. GPT-4 Passes the Bar Exam. Philos. Trans. R. Soc. Math. Phys. Eng. Sci. 2024, 382, 20230254. [Google Scholar] [CrossRef]

- Waisberg, E.; Ong, J.; Masalkhi, M.; Kamran, S.A.; Zaman, N.; Sarker, P.; Lee, A.G.; Tavakkoli, A. GPT-4: A New Era of Artificial Intelligence in Medicine. Ir. J. Med. Sci. 2023, 192, 3197–3200. [Google Scholar] [CrossRef] [PubMed]

- Chen, Q.; Zhou, W.; Cheng, J.; Yang, J. An Enhanced Retrieval Scheme for a Large Language Model with a Joint Strategy of Probabilistic Relevance and Semantic Association in the Vertical Domain. Appl. Sci. 2024, 14, 11529. [Google Scholar] [CrossRef]

- Yue, S.; Liu, S.; Zhou, Y.; Shen, C.; Wang, S.; Xiao, Y.; Li, B.; Song, Y.; Shen, X.; Chen, W.; et al. LawLLM: Intelligent Legal System with Legal Reasoning and Verifiable Retrieval. In Proceedings of the Database Systems for Advanced Applications, Gifu, Japan, 2–5 July 2024; Onizuka, M., Lee, J.-G., Tong, Y., Xiao, C., Ishikawa, Y., Amer-Yahia, S., Jagadish, H.V., Lu, K., Eds.; Springer Nature: Singapore, 2024; pp. 304–321. [Google Scholar]

- Wang, P.; Wei, X.; Hu, F.; Han, W. TransGPT: Multi-Modal Generative Pre-Trained Transformer for Transportation. In Proceedings of the 2024 International Conference on Computational Linguistics and Natural Language Processing (CLNLP), Yinchuan, China, 19–21 July 2024; pp. 96–100. [Google Scholar]

- Kuska, M.T.; Wahabzada, M.; Paulus, S. AI for Crop Production—Where Can Large Language Models (LLMs) Provide Substantial Value? Comput. Electron. Agric. 2024, 221, 108924.

- Wen, Q.; Liang, J.; Sierra, C.; Luckin, R.; Tong, R.; Liu, Z.; Cui, P.; Tang, J. AI for Education (AI4EDU): Advancing Personalized Education with LLM and Adaptive Learning. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 6743–6744.

- Thirunavukarasu, A.J.; Ting, D.S.J.; Elangovan, K.; Gutierrez, L.; Tan, T.F.; Ting, D.S.W. Large Language Models in Medicine. Nat. Med. 2023, 29, 1930–1940.

- Agarwal, S.; Acun, B.; Hosmer, B.; Elhoushi, M.; Lee, Y.; Venkataraman, S.; Papailiopoulos, D.; Wu, C.-J. CHAI: Clustered Head Attention for Efficient LLM Inference. arXiv 2024, arXiv:2403.08058.

- Balkus, S.V.; Yan, D. Improving Short Text Classification with Augmented Data Using GPT-3. Nat. Lang. Eng. 2024, 30, 943–972.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; et al. PaLM: Scaling Language Modeling with Pathways. J. Mach. Learn. Res. 2023, 24, 1–113.

- Wu, C.; Gan, Y.; Ge, Y.; Lu, Z.; Wang, J.; Feng, Y.; Shan, Y.; Luo, P. LLaMA Pro: Progressive LLaMA with Block Expansion. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; Ku, L.-W., Martins, A., Srikumar, V., Eds.; Association for Computational Linguistics: Bangkok, Thailand, 2024; pp. 6518–6537.

- Gabber, H.A.; Hemied, O.S. Domain-Specific Large Language Model for Renewable Energy and Hydrogen Deployment Strategies. Energies 2024, 17, 6063.

- Wu, S.; Peng, Z.; Du, X.; Zheng, T.; Liu, M.; Wu, J.; Ma, J.; Li, Y.; Yang, J.; Zhou, W.; et al. A Comparative Study on Reasoning Patterns of OpenAI’s O1 Model. arXiv 2024, arXiv:2410.13639.

- Hurst, A.; Lerer, A.; Goucher, A.P.; Perelman, A.; Ramesh, A.; Clark, A.; Ostrow, A.J.; Welihinda, A.; Hayes, A.; Radford, A.; et al. GPT-4o System Card. arXiv 2024, arXiv:2410.21276.

- Mondillo, G.; Frattolillo, V.; Colosimo, S.; Perrotta, A.; Di Sessa, A.; Guarino, S.; Miraglia Del Giudice, E.; Marzuillo, P. Basal Knowledge in the Field of Pediatric Nephrology and Its Enhancement Following Specific Training of ChatGPT-4 “Omni” and Gemini 1.5 Flash. Pediatr. Nephrol. 2025, 40, 151–157.

- Chen, G.; Fan, Z.; Zhu, Y.; Zhang, T. Multi-Time Knowledge Distillation. Neurocomputing 2025, 623, 129377.

- Huang, T.; Dong, W.; Wu, F.; Li, X.; Shi, G. Uncertainty-Driven Knowledge Distillation for Language Model Compression. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 2850–2858.

- Zhuang, R.; Wang, B.; Sun, S.; Wang, Y.; Ding, Z.; Liu, W. Unlocking Chain of Thought in Base Language Models by Heuristic Instruction. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–7.

- Cobbe, K.; Kosaraju, V.; Bavarian, M.; Chen, M.; Jun, H.; Kaiser, L.; Plappert, M.; Tworek, J.; Hilton, J.; Nakano, R.; et al. Training Verifiers to Solve Math Word Problems. arXiv 2021, arXiv:2110.14168.

- Liu, L.; Zhang, D.; Li, S.; Zhou, G.; Cambria, E. Two Heads Are Better than One: Zero-Shot Cognitive Reasoning via Multi-LLM Knowledge Fusion. In Proceedings of the 33rd ACM International Conference on Information and Knowledge Management, Boise, ID, USA, 21–25 October 2024; pp. 1462–1472.

- Besta, M.; Blach, N.; Kubicek, A.; Gerstenberger, R.; Podstawski, M.; Gianinazzi, L.; Gajda, J.; Lehmann, T.; Niewiadomski, H.; Nyczyk, P.; et al. Graph of Thoughts: Solving Elaborate Problems with Large Language Models. In Proceedings of the AAAI Conference on Artificial Intelligence 2024, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 17682–17690.

- Xie, T.; Gao, Z.; Ren, Q.; Luo, H.; Hong, Y.; Dai, B.; Zhou, J.; Qiu, K.; Wu, Z.; Luo, C. Logic-RL: Unleashing LLM Reasoning with Rule-Based Reinforcement Learning. arXiv 2025, arXiv:2502.14768.

- Cuconasu, F.; Trappolini, G.; Siciliano, F.; Filice, S.; Campagnano, C.; Maarek, Y.; Tonellotto, N.; Silvestri, F. The Power of Noise: Redefining Retrieval for RAG Systems. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, Washington, DC, USA, 14–18 July 2024; pp. 719–729.

- Huang, W.; Wu, A.; Yang, Y.; Luo, X.; Yang, Y.; Hu, L.; Dai, Q.; Dai, X.; Chen, D.; Luo, C.; et al. LLM2CLIP: Powerful Language Model Unlocks Richer Visual Representation. arXiv 2024, arXiv:2411.04997.

- Fan, W.; Ding, Y.; Ning, L.; Wang, S.; Li, H.; Yin, D.; Chua, T.-S.; Li, Q. A Survey on RAG Meeting LLMs: Towards Retrieval-Augmented Large Language Models. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 6491–6501.

- Karacan, E. Evaluating the Quality of Postpartum Hemorrhage Nursing Care Plans Generated by Artificial Intelligence Models. J. Nurs. Care Qual. 2024, 39, 206–211.

- Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: A Pre-Trained Biomedical Language Representation Model for Biomedical Text Mining. Bioinformatics 2020, 36, 1234–1240.

- Wang, S.; Bian, J.; Huang, X.; Zhou, H.; Zhu, S. PubLabeler: Enhancing Automatic Classification of Publications in UniProtKB Using Protein Textual Description and PubMedBERT. IEEE J. Biomed. Health Inform. 2024, 29, 3782–3791.

- Cervera, M.R.; Bermejo-Peláez, D.; Gómez-Álvarez, M.; Hidalgo Soto, M.; Mendoza-Martínez, A.; Oñós Clausell, A.; Darias, O.; García-Villena, J.; Benavente Cuesta, C.; Montoro, J.; et al. Assessment of Artificial Intelligence Language Models and Information Retrieval Strategies for QA in Hematology. Blood 2023, 142, 7175.

- Li, Y.; Li, Z.; Zhang, K.; Dan, R.; Jiang, S.; Zhang, Y. ChatDoctor: A Medical Chat Model Fine-Tuned on a Large Language Model Meta-AI (LLaMA) Using Medical Domain Knowledge. Cureus 2023, 15, e40895.

- Wang, H.; Zhao, S.; Qiang, Z.; Li, Z.; Liu, C.; Xi, N.; Du, Y.; Qin, B.; Liu, T. Knowledge-Tuning Large Language Models with Structured Medical Knowledge Bases for Trustworthy Response Generation in Chinese. ACM Trans. Knowl. Discov. Data 2025, 19, 1–17.

- Zhang, P.; Shi, J.; Kamel Boulos, M.N. Generative AI in Medicine and Healthcare: Moving Beyond the ‘Peak of Inflated Expectations’. Future Internet 2024, 16, 462.

- Kim, Y.; Xu, X.; McDuff, D.; Breazeal, C.; Park, H.W. Health-LLM: Large Language Models for Health Prediction via Wearable Sensor Data. arXiv 2024, arXiv:2401.06866.

- Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Wei, J.; Chung, H.W.; Scales, N.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; et al. Large Language Models Encode Clinical Knowledge. Nature 2023, 620, 172–180.

- Toma, A.; Lawler, P.R.; Ba, J.; Krishnan, R.G.; Rubin, B.B.; Wang, B. Clinical Camel: An Open Expert-Level Medical Language Model with Dialogue-Based Knowledge Encoding. arXiv 2023, arXiv:2305.12031.

- Wu, C.; Lin, W.; Zhang, X.; Zhang, Y.; Xie, W.; Wang, Y. PMC-LLaMA: Toward Building Open-Source Language Models for Medicine. J. Am. Med. Inform. Assoc. 2024, 31, 1833–1843.

- Varshney, D.; Zafar, A.; Behera, N.K.; Ekbal, A. Knowledge Graph Assisted End-to-End Medical Dialog Generation. Artif. Intell. Med. 2023, 139, 102535.

- Yang, X.; Chen, A.; PourNejatian, N.; Shin, H.C.; Smith, K.E.; Parisien, C.; Compas, C.; Martin, C.; Costa, A.B.; Flores, M.G.; et al. A Large Language Model for Electronic Health Records. NPJ Digit. Med. 2022, 5, 194.

- Gupta, R.; Bhongade, A.; Gandhi, T.K. Multimodal Wearable Sensors-Based Stress and Affective States Prediction Model. In Proceedings of the 2023 9th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 17–18 March 2023; Volume 1, pp. 30–35.

- Yin, X.; Zhu, Y.; Hu, J. A Comprehensive Survey of Privacy-Preserving Federated Learning: A Taxonomy, Review, and Future Directions. ACM Comput. Surv. 2021, 54, 1–36.

- Alghamdi, H.; Mostafa, A. Advancing EHR Analysis: Predictive Medication Modeling Using LLMs. Inf. Syst. 2025, 131, 102528.

- Marziali, R.A.; Franceschetti, C.; Dinculescu, A.; Nistorescu, A.; Kristály, D.M.; Moșoi, A.A.; Broekx, R.; Marin, M.; Vizitiu, C.; Moraru, S.-A.; et al. Reducing Loneliness and Social Isolation of Older Adults Through Voice Assistants: Literature Review and Bibliometric Analysis. J. Med. Internet Res. 2024, 26, e50534.

- Lv, X.; Gao, Z.; Yuan, C.; Li, M.; Chen, C. Hybrid Real-Time Fall Detection System Based on Deep Learning and Multi-Sensor Fusion. In Proceedings of the 2020 6th International Conference on Big Data and Information Analytics (BigDIA), Shenzhen, China, 4–6 December 2020; pp. 386–391.

- Wang, B.; Chang, J.; Qian, Y.; Chen, G.; Chen, J.; Jiang, Z.; Zhang, J.; Nakashima, Y.; Nagahara, H. DiReCT: Diagnostic Reasoning for Clinical Notes via Large Language Models. arXiv 2024, arXiv:2408.01933.

- Gao, Y.; Li, R.; Croxford, E.; Caskey, J.; Patterson, B.W.; Churpek, M.; Miller, T.; Dligach, D.; Afshar, M. Leveraging Medical Knowledge Graphs Into Large Language Models for Diagnosis Prediction: Design and Application Study. JMIR AI 2025, 4, e58670.

- Xiao, S.; Liu, Z.; Zhang, P.; Muennighoff, N.; Lian, D.; Nie, J.-Y. C-Pack: Packed Resources For General Chinese Embeddings. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, Washington, DC, USA, 14–18 July 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 641–649.

- Douze, M.; Guzhva, A.; Deng, C.; Johnson, J.; Szilvasy, G.; Mazaré, P.-E.; Lomeli, M.; Hosseini, L.; Jégou, H. The Faiss Library. arXiv 2025, arXiv:2401.08281.

- Song, Y.; Liu, C.; Zhang, R.; Zhu, D.; Wang, Z. An Efficient FPGA Implementation of Approximate Nearest Neighbor Search. IEEE Trans. Very Large Scale Integr. VLSI Syst. 2025, 33, 1705–1714.

- Bai, Y.; Du, X.; Liang, Y.; Jin, Y.; Zhou, J.; Liu, Z.; Fang, F.; Chang, M.; Zheng, T.; Zhang, X.; et al. COIG-CQIA: Quality Is All You Need for Chinese Instruction Fine-Tuning. arXiv 2024, arXiv:2403.18058.

- Wang, X.; Li, J.; Chen, S.; Zhu, Y.; Wu, X.; Zhang, Z.; Xu, X.; Chen, J.; Fu, J.; Wan, X.; et al. Huatuo-26M, a Large-Scale Chinese Medical QA Dataset. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2025, Albuquerque, NM, USA, 29 April–4 May 2025; Chiruzzo, L., Ritter, A., Wang, L., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2025; pp. 3828–3848.

- Han, Z.; Gao, C.; Liu, J.; Zhang, J.; Zhang, S.Q. Parameter-Efficient Fine-Tuning for Large Models: A Comprehensive Survey. arXiv 2024, arXiv:2403.14608.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 25–29 April 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).