Multi-View Intrusion Detection Framework Using Deep Learning and Knowledge Graphs

Abstract

1. Introduction

- (1)

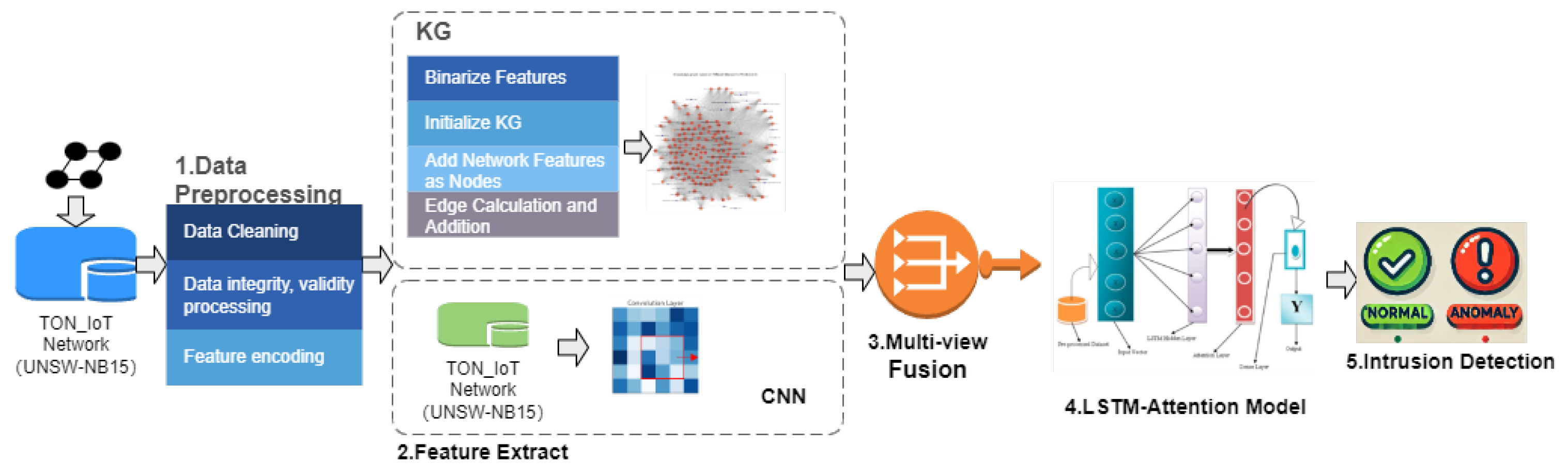

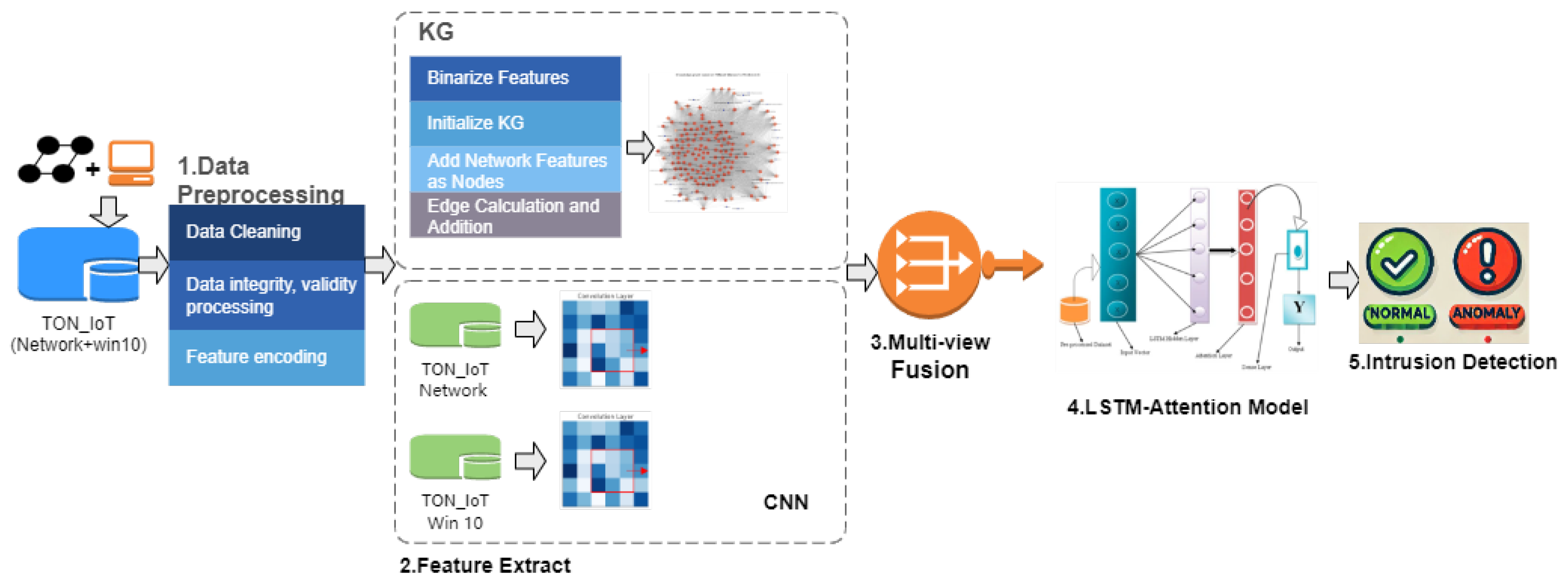

- Multi-view features fusion to enhance anomaly detection: This study presents a multi-view data fusion framework that integrates host data and network traffic data to improve anomaly detection performance. The robustness and generalizability of the proposed model were evaluated using the TON_IoT and UNSW-NB15 datasets.

- (2)

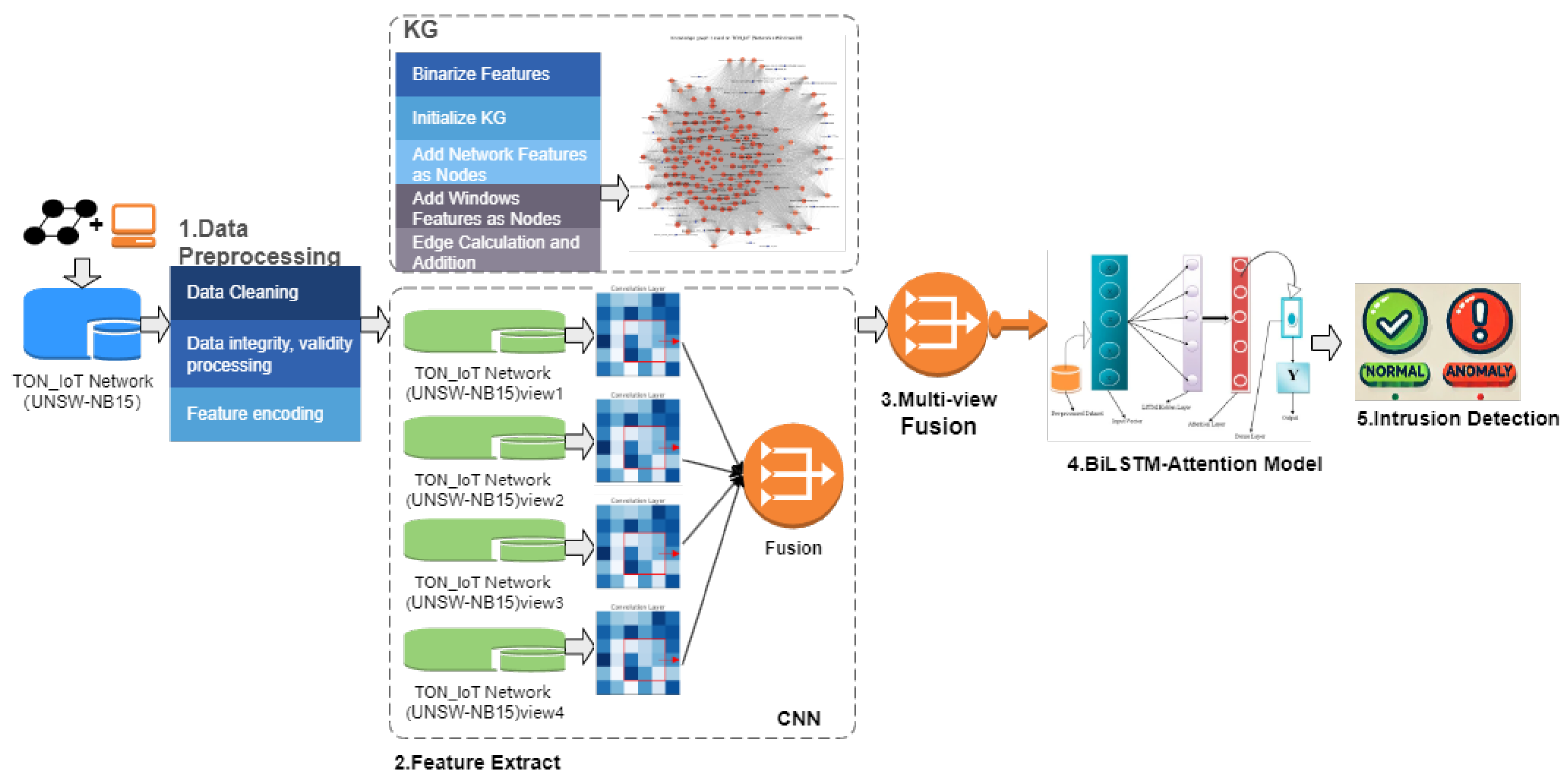

- Diversified model evaluation for intrusion detection: This study conducted a comprehensive evaluation of both single-view models (CNN, LSTM, CNN+attention LSTM) and multi-view fusion models ((KG+CNN)+attention LSTM, (KG+Multi-CNNs)+attention LSTM). The results highlight the advantages of multi-view fusion in enhancing detection accuracy and reliability over traditional single-view approaches.

- (3) Optimization of multi-view fusion strategies and the role of KG: This study assessed the impact of one-level and two-level fusion strategies on model performance under consistent experimental conditions. Through ablation studies, the contribution of KG to feature fusion and anomaly detection was validated, emphasizing the pivotal role of KG in boosting the effectiveness of multi-view models.

2. Related Work

3. Methodology

3.1. Overview

3.2. Model Design

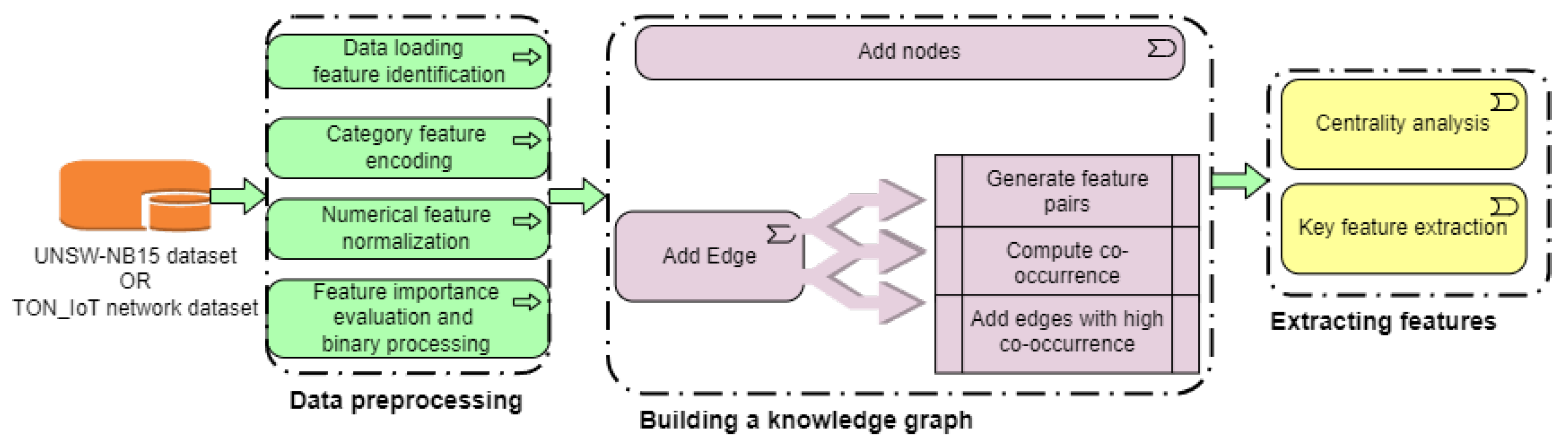

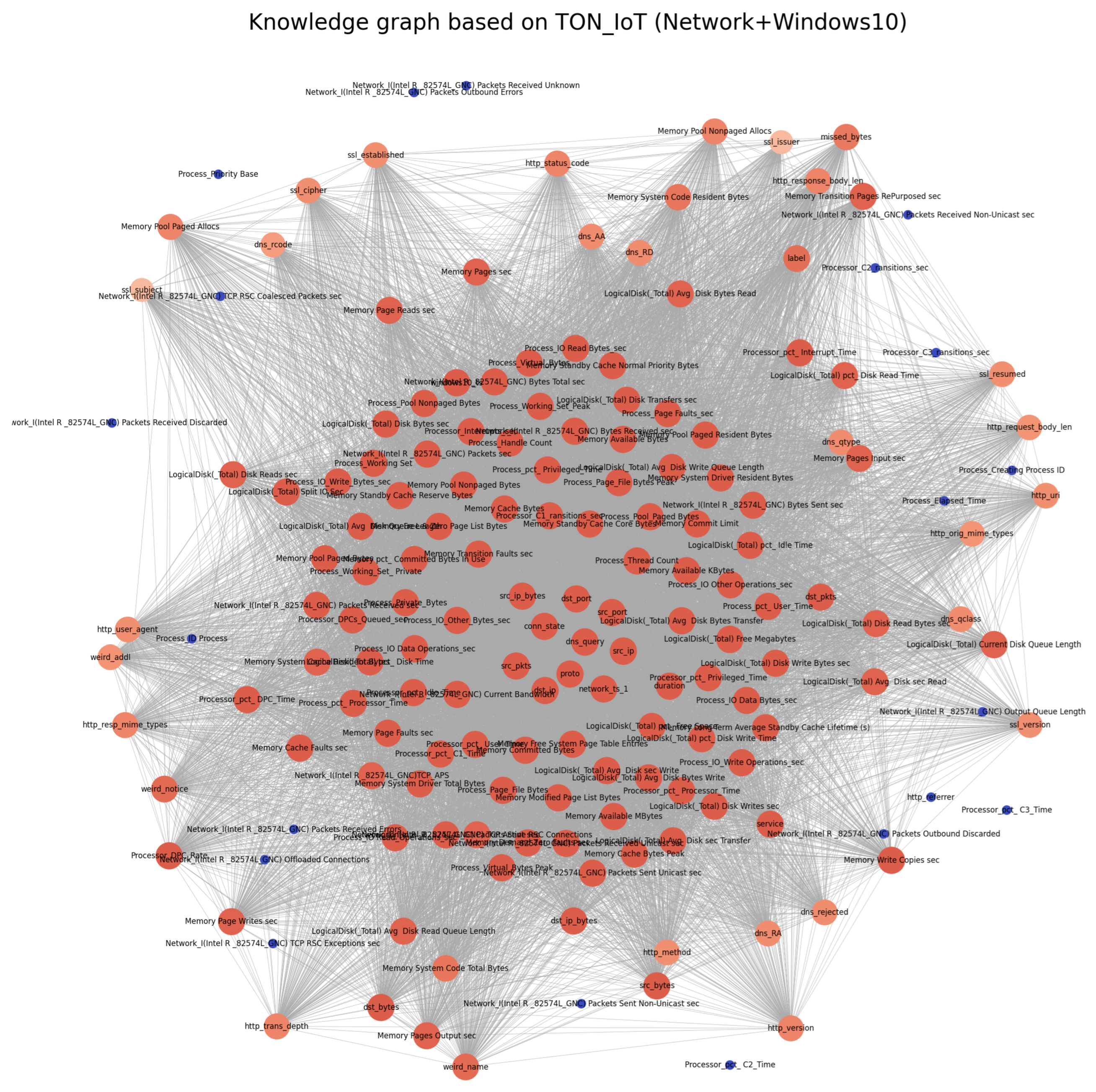

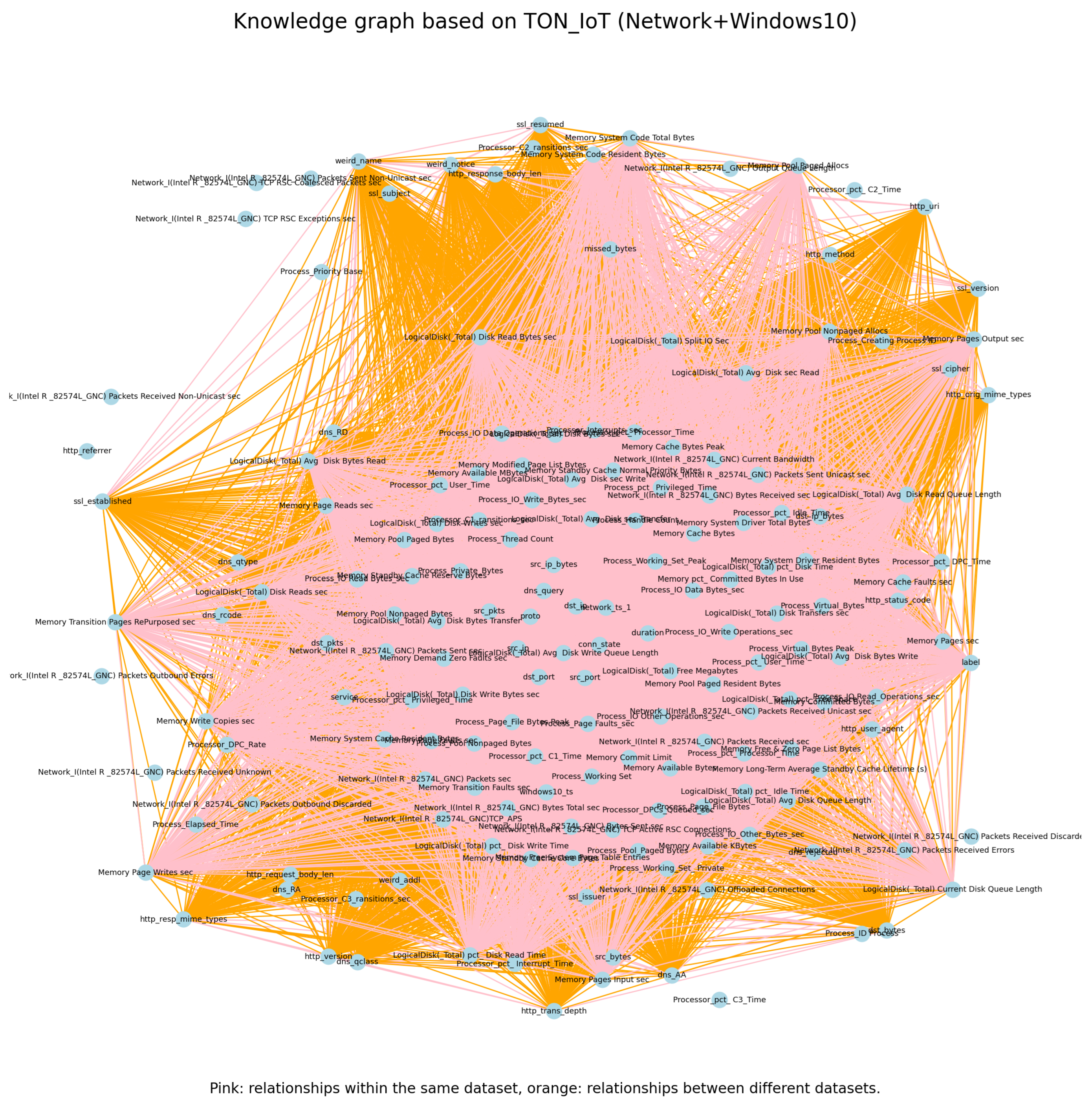

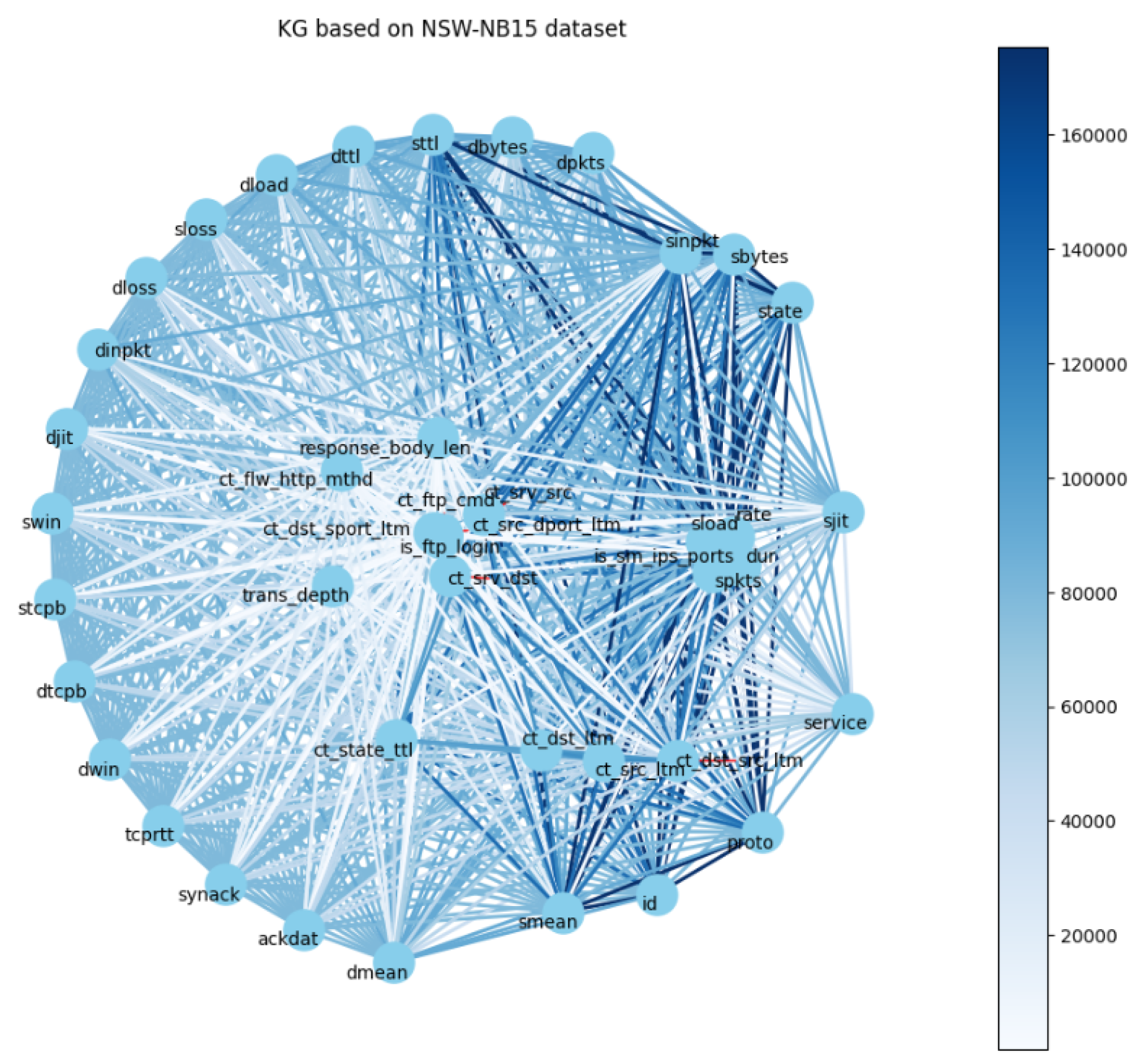

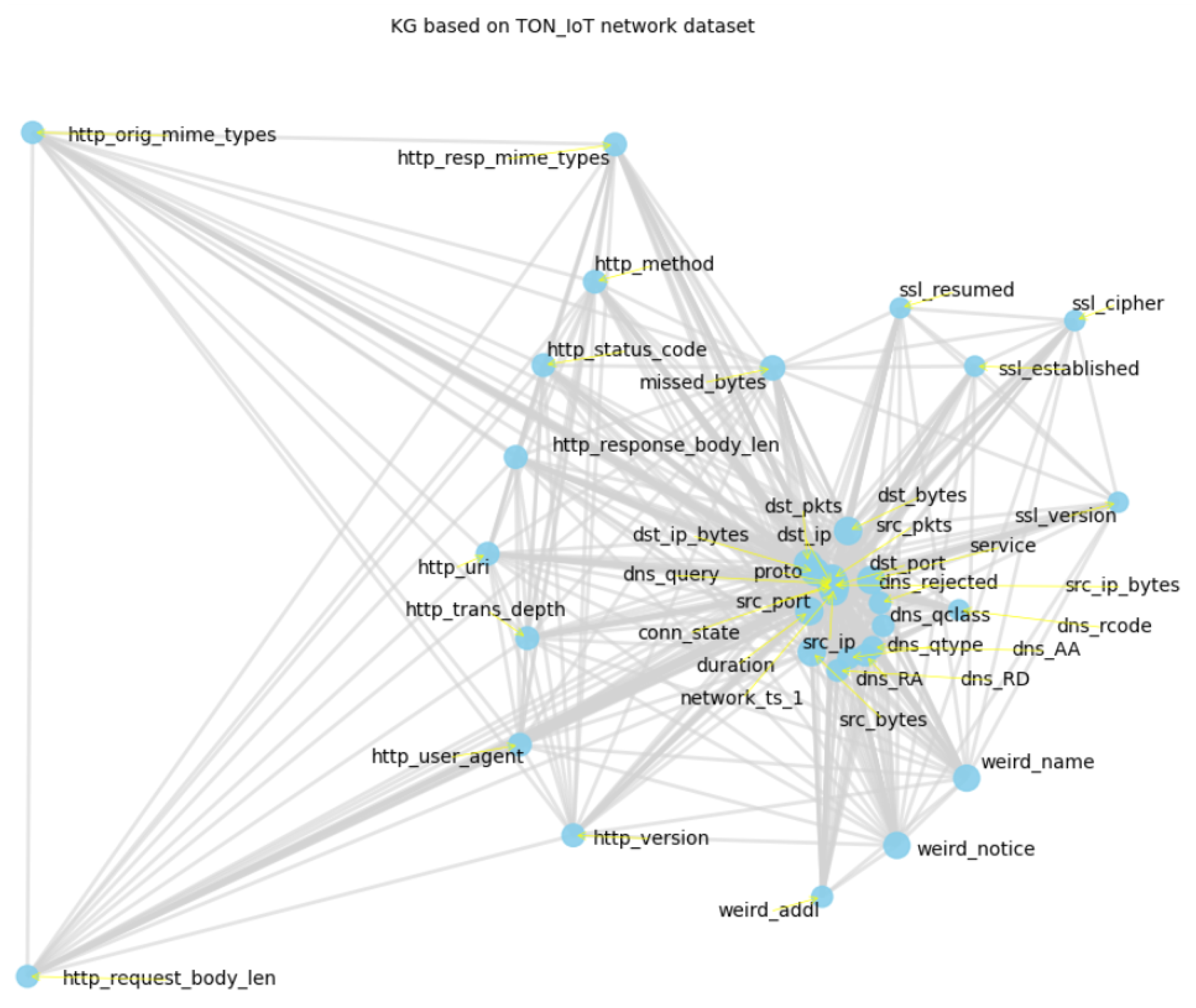

3.3. Construction of the KG

Algorithm 1. KG Construction Based on TON_IoT Network and Windows10 Dataset.

Require: Network dataset, Windows dataset

Ensure: KG G, critical feature tensors

1. Initialize an empty graph G using NetworkX.
2. Add feature columns from each dataset as nodes in G with corresponding dataset attributes.
3. For each dataset, add edges based on feature co-occurrence:
   - Function add_edges_from_cooccurrence():
     - Convert to boolean matrix using threshold.
     - Compute co-occurrence matrix via boolean dot product.
     - Add edges for feature pairs with non-zero co-occurrence.
   - End Function
   - Call add_edges_from_cooccurrence on both datasets.
4. Compute eigenvector centrality for all nodes.
5. Determine number of critical features to select from each dataset.
6. Sort nodes by centrality and select top features per dataset.
7. Convert selected feature names to tensor format:
   - Function string_to_tensor():
     - Convert strings to hashed tensor values.
   - End Function
   - Apply string_to_tensor on selected features.
8. Visualize graph G using spring layout with node styling by centrality.
9. Return graph G and critical feature tensors.
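The core of Algorithm 1 (steps 2 to 7) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses only the Python standard library, so a hand-written power iteration stands in for NetworkX's eigenvector centrality, a SHA-256 digest stands in for the unspecified string hash, and the helper names, toy rows, and threshold of 0 are all hypothetical. Visualization (step 8) is omitted.

```python
import hashlib
from itertools import combinations


def cooccurrence_edges(rows, names, threshold=0.0):
    """Step 3: binarize each value against `threshold`, then count, for every
    feature pair, the rows in which both are active (a boolean dot product)."""
    active = [[value > threshold for value in row] for row in rows]
    edges = {}
    for i, j in combinations(range(len(names)), 2):
        count = sum(1 for row in active if row[i] and row[j])
        if count > 0:  # keep only pairs with non-zero co-occurrence
            edges[(names[i], names[j])] = count
    return edges


def eigenvector_centrality(nodes, edges, iterations=500, tol=1e-10):
    """Step 4: power iteration on the unweighted, undirected graph."""
    neighbours = {node: set() for node in nodes}
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
    score = {node: 1.0 for node in nodes}
    for _ in range(iterations):
        # Iterating with A + I (own score plus neighbours) avoids oscillation.
        new = {n: score[n] + sum(score[m] for m in neighbours[n]) for n in nodes}
        norm = sum(v * v for v in new.values()) ** 0.5
        new = {n: v / norm for n, v in new.items()}
        if max(abs(new[n] - score[n]) for n in nodes) < tol:
            return new
        score = new
    return score


def top_features(centrality, dataset_of, k):
    """Steps 5-6: the k most central features of each dataset (ties by name)."""
    selected = {}
    for dataset in sorted(set(dataset_of.values())):
        members = [n for n in centrality if dataset_of[n] == dataset]
        members.sort(key=lambda n: (-centrality[n], n))
        selected[dataset] = members[:k]
    return selected


def string_to_id(name, modulus=2**31 - 1):
    """Step 7 (assumed form): a deterministic integer hash of a feature name."""
    digest = hashlib.sha256(name.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % modulus


# Toy data; the values are made up purely for illustration.
network_names = ["src_bytes", "dst_bytes", "duration"]
network_rows = [[10, 20, 0], [5, 0, 1], [7, 3, 0]]
windows_names = ["Processor_DPC_Rate", "Processor_pct_Idle_Time"]
windows_rows = [[2, 90], [0, 95], [1, 80]]

dataset_of = {name: "network" for name in network_names}
dataset_of.update({name: "windows" for name in windows_names})

edges = {}
edges.update(cooccurrence_edges(network_rows, network_names))
edges.update(cooccurrence_edges(windows_rows, windows_names))

centrality = eigenvector_centrality(list(dataset_of), edges)
critical = top_features(centrality, dataset_of, k=1)
critical_ids = {d: [string_to_id(n) for n in names] for d, names in critical.items()}
print(critical)
```

Because edges are added per dataset, the two views form separate components in this toy graph; in that situation the scores of the smaller component shrink toward zero, although the ranking within each dataset is still usable for a per-dataset top-k selection.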

Algorithm 2. KG Construction Based on TON_IoT Network Dataset (UNSW-NB15 Dataset).

Require: Training dataset, Testing dataset

Ensure: Preprocessed datasets, feature co-occurrence KG G

1. Load training and testing datasets.
2. Detect numeric and categorical columns.
3. Encode categorical features using label encoding on combined unique values.
4. Normalize numeric features using MinMaxScaler fitted on training data.
5. Extract the features and labels from the training dataset.
6. Compute mutual information between features and labels to select top k features.
7. Build co-occurrence matrix: binarize top features, compute co-occurrence counts for each feature pair.
8. Construct graph G: add feature nodes and edges for pairs with co-occurrence above threshold.
9. Visualize graph G with Kamada-Kawai layout, color/size based on edge weights.
10. Return preprocessed datasets and knowledge graph G.
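The preprocessing and feature-selection steps of Algorithm 2 (steps 3, 4, and 6) can be illustrated as follows. This is a hedged sketch rather than the authors' code: it reimplements label encoding, min-max scaling, and mutual information by hand with the standard library instead of calling scikit-learn, the mutual-information estimate shown is the plain discrete (plug-in) form, so continuous features would first need binning, and the function names and toy columns are hypothetical.

```python
import math
from collections import Counter


def fit_label_encoder(train_values, test_values):
    """Step 3: one integer code per category seen in either split."""
    categories = sorted(set(train_values) | set(test_values))
    return {category: code for code, category in enumerate(categories)}


def fit_min_max(train_column):
    """Step 4: a scaler whose range comes from the training data only."""
    low, high = min(train_column), max(train_column)
    span = (high - low) or 1.0  # guard against constant columns
    return lambda value: (value - low) / span


def mutual_information(feature, labels):
    """Step 6: plug-in mutual information (in nats) of two discrete sequences."""
    n = len(labels)
    joint = Counter(zip(feature, labels))
    px = Counter(feature)
    py = Counter(labels)
    return sum(
        (c / n) * math.log(c * n / (px[x] * py[y]))
        for (x, y), c in joint.items()
    )


# Toy columns; the values are made up purely for illustration.
train_proto = ["tcp", "udp", "tcp", "udp"]
test_proto = ["icmp", "tcp"]
train_noise = [0, 0, 1, 1]
train_dur = [0.0, 4.0, 10.0, 2.0]
train_labels = [1, 0, 1, 0]

encoder = fit_label_encoder(train_proto, test_proto)
encoded_proto = [encoder[v] for v in train_proto]

scale = fit_min_max(train_dur)
scaled_train = [scale(v) for v in train_dur]
scaled_test = [scale(v) for v in [15.0]]  # may fall outside [0, 1]

mi_scores = {
    "proto": mutual_information(encoded_proto, train_labels),
    "noise": mutual_information(train_noise, train_labels),
}
top_k = sorted(mi_scores, key=mi_scores.get, reverse=True)[:1]
print(encoder, scaled_train, top_k)
```

Fitting the encoder on the combined unique values prevents unseen-category errors at test time, while fitting the scaler on the training split alone means test values can land outside the unit interval, as the last scaled value shows.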

4. Preparation for the Experiment

4.1. Data Set

- (1) TON_IoT dataset: The dataset includes data on both normal operations and various intrusion events, aiming to closely simulate network behaviors observed in practical environments. In this study, we used two configurations: network traffic data alone, and a combined dataset of network traffic data and Windows data.

- (2) UNSW-NB15 dataset: The UNSW-NB15 dataset includes 2,540,044 records captured through an IXIA traffic generator. Following data cleaning and preprocessing, portions of the data were released as two distinct .csv files: the UNSW_NB15_training-set and the UNSW_NB15_test-set.

4.2. Multi-View Framework Integration

5. Experiment and Results

5.1. Experiment Outline

5.1.1. Comparative Analysis of Baseline (Single-View) and Multi-View Models

5.1.2. Comparison of Multi-View Fusion Strategies

5.1.3. Ablation Study on KG Contribution

5.1.4. Generalization Evaluation Across Different Datasets

5.2. Deployment of Single-View Models

5.2.1. Experimental Deployment Based on the TON_IoT Network+Win10 Dataset

5.2.2. Experimental Deployment Based on the TON_IoT Network and UNSW-NB15 Dataset

5.3. Deployment of Multi-View Models

5.3.1. Experimental Deployment Based on the TON_IoT Network+Win10 Dataset

5.3.2. Experimental Deployment Based on TON_IoT Network and UNSW-NB15 Dataset

5.4. Deployment of Ablation Experiments

6. Conclusions

6.1. Experimental Conclusion Analysis

6.1.1. F1 Score Analysis

1. Advantages of Multi-View Models

2. Comparison of Feature Fusion Strategies

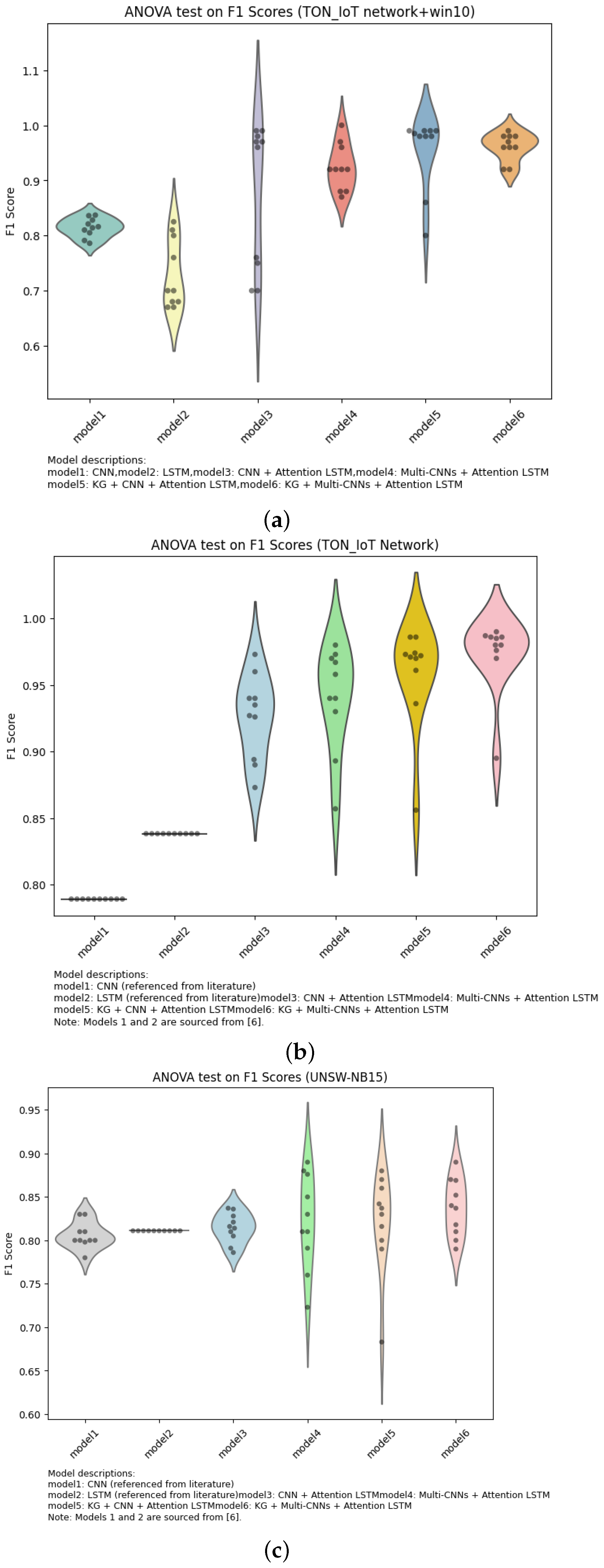

6.1.2. ANOVA Summary Analysis

1. Superiority of Multi-View Models

2. Comparison of Feature Fusion Strategies

3. Summary of Model Distribution Characteristics

6.2. Practical Application Value of the Model in Network Intrusion Detection

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, L.; Yu, Y.; Bai, S.; Hou, Y.; Chen, X. An effective two-step intrusion detection approach based on binary classification and k-NN. IEEE Access 2018, 6, 12060–12073.

2. Ali, M.H.; Al Mohammed, B.A.D.; Ismail, A.; Zolkipli, M.F. A new intrusion detection system based on fast learning network and particle swarm optimization. IEEE Access 2018, 6, 20255–20261.

3. Dainotti, A.; Gargiulo, F.; Kuncheva, L.I.; Pescapè, A.; Sansone, C. Identification of traffic flows hiding behind TCP Port 80. In Proceedings of the IEEE International Conference on Communications, Cape Town, South Africa, 23–27 May 2010; pp. 1–6.

4. Mishra, P.; Varadharajan, V.; Tupakula, U.; Pilli, E.S. A detailed investigation and analysis of using machine learning techniques for intrusion detection. IEEE Commun. Surv. Tutor. 2019, 21, 686–728.

5. Yin, C.; Zhu, Y.; Fei, J.; He, X. A deep learning approach for intrusion detection using recurrent neural networks. IEEE Access 2017, 5, 21954–21961.

6. Kim, J.; Kim, J.; Thu, H.L.T.; Kim, H. Long short term memory recurrent neural network classifier for intrusion detection. In Proceedings of the International Conference on Platform Technology and Service (PlatCon), Jeju, Republic of Korea, 15–17 February 2016; pp. 1–5.

7. Jia, Y.; Qi, Y.; Shang, H.; Jiang, R.; Li, A. A practical approach to constructing a knowledge graph for cybersecurity. Engineering 2018, 4, 53–60.

8. Undercoffer, J.; Pinkston, J.; Joshi, A.; Finin, T. A target-centric ontology for intrusion detection. In IJCAI-03 Workshop on Ontologies and Distributed Systems; Morgan Kaufmann Publishers: Burlington, MA, USA, 2004; pp. 47–58.

9. Teng, S.; Wu, N.; Zhu, H.; Teng, L.; Zhang, W. SVM-DT-based adaptive and collaborative intrusion detection. IEEE/CAA J. Autom. Sin. 2018, 5, 108–118.

10. Liu, J.; Xu, L. Improvement of SOM classification algorithm and application effect analysis in intrusion detection. In Recent Developments in Intelligent Computing, Communication and Devices; Springer: Berlin/Heidelberg, Germany, 2019; pp. 559–565.

11. Sun, P.; Liu, P.; Li, Q.; Liu, C.; Lu, X.; Hao, R.; Chen, J. DL-IDS: Extracting Features Using CNN-LSTM Hybrid Network for Intrusion Detection System. Secur. Commun. Netw. 2020, 2020, 8890306.

12. Hotelling, H. Relations between two sets of variates. Biometrika 1936, 28, 321–372.

13. Srivastava, N.; Salakhutdinov, R.R. Multimodal learning with deep Boltzmann machines. In Proceedings of the International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 2222–2230.

14. Ngiam, J.; Khosla, A.; Kim, M.; Nam, J.; Lee, H.; Ng, A.Y. Multimodal deep learning. In Proceedings of the International Conference on Machine Learning, Washington, DC, USA, 28 June–2 July 2011; pp. 689–696.

15. Mao, J.; Xu, W.; Yang, Y.; Wang, J.; Huang, Z.; Yuille, A. Deep captioning with multimodal recurrent neural networks (m-RNN). arXiv 2014, arXiv:1412.6632.

16. Peng, Z.; Luo, M.; Li, J.; Xue, L.; Zheng, Q. A deep multi-view framework for anomaly detection on attributed networks. IEEE Trans. Knowl. Data Eng. 2020, 34, 2539–2552.

17. Zhao, J.; Yan, Q.; Li, J.; Shao, M.; He, Z.; Li, B. TIMiner: Automatically extracting and analyzing categorized cyber threat intelligence from social data. Comput. Secur. 2020, 95, 101867.

18. Husari, G.; Al-Shaer, E.; Ahmed, M.; Chu, B.; Niu, X. TTPDrill: Automatic and accurate extraction of threat actions from unstructured text of CTI sources. In Proceedings of the Annual Computer Security Applications Conference, Orlando, FL, USA, 4–8 December 2017; pp. 103–115.

19. Bouarroudj, W.; Boufaida, Z.; Bellatreche, L. Named entity disambiguation in short texts over knowledge graphs. Knowl. Inf. Syst. 2022, 64, 325–351.

20. Liu, K.; Wang, F.; Ding, Z.; Liang, S.; Yu, Z.; Zhou, Y. A review of knowledge graph application scenarios in cyber security. arXiv 2022, arXiv:2204.04769.

21. Jian, S.; Lu, Z.; Du, D.; Jiang, B.; Liu, B.X. Overview of network intrusion detection technology. J. Cyber Secur. 2020, 5, 96–122.

22. Yang, X.; Peng, G.; Zhang, D.; Lv, Y. An enhanced intrusion detection system for IoT networks based on deep learning and knowledge graph. Secur. Commun. Netw. 2022, 2022, 4748528.

23. Garrido, J.S.; Dold, D.; Frank, J. Machine learning on knowledge graphs for context-aware security monitoring. In Proceedings of the IEEE International Conference on Cyber Security and Resilience (CSR), Rhodes, Greece, 26–28 July 2021; pp. 55–60.

24. Xiao, H.; Xing, Z.; Li, X.; Guo, H. Embedding and predicting software security entity relationships: A knowledge graph based approach. In Proceedings of the International Conference on Neural Information Processing, Sydney, Australia, 12–15 December 2019; pp. 50–63.

25. Liu, K.; Wang, F.; Ding, Z.; Liang, S.; Yu, Z.; Zhou, Y. Recent Progress of Using Knowledge Graph for Cybersecurity. Electronics 2022, 11, 2287.

26. Cao, Z.; Zhao, Z.; Shang, W.; Ai, S.; Shen, S. Using the ToN-IoT dataset to develop a new intrusion detection system for industrial IoT devices. In Multimedia Tools and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2024.

27. Rosy, J.V.; Britto, S.; Kumar, R.; Scholar, R. Intrusion Detection On The Unsw-Nb15 Dataset Using Feature Selection And Machine Learning Techniques. Webology 2021, 18, 6.

28. Hogan, A.; Blomqvist, E.; Cochez, M.; d’Amato, C.; Melo, G.D.; Gutierrez, C.; Kirrane, S.; Gayo, J.E.L.; Navigli, R.; Neumaier, S.; et al. Knowledge graphs. Synth. Lect. Data Semant. Knowl. 2021, 12, 1–257.

29. Hick, P.; Aben, E.; Claffy, K.; Polterock, J. The CAIDA DDoS Attack 2007 Data Set. 2012. Available online: http://www.caida.org (accessed on 10 July 2015).

30. Shiravi, A.; Shiravi, H.; Tavallaee, M.; Ghorbani, A. Toward Developing a Systematic Approach to Generate Benchmark Datasets for Intrusion Detection. Comput. Secur. 2012, 31, 357–374.

31. Sharafaldin, I.; Lashkari, A.; Ghorbani, A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization. In Proceedings of the ICISSP, Madeira, Portugal, 22–24 January 2018; pp. 108–116.

32. Disha, R.; Waheed, S. Performance Analysis of Machine Learning Models for Intrusion Detection System Using Gini Impurity-based Weighted Random Forest (GIWRF) Feature Selection Technique. Cybersecurity 2022, 5, 1.

33. Moustafa, N. ToN_IoT and UNSW15 Datasets. 2022. Available online: https://research.unsw.edu.au/projects/toniot-datasets (accessed on 3 April 2022).

34. Momand, A.; Jan, S.U.; Ramzan, N. ABCNN-IDS: Attention-based convolutional neural network for intrusion detection in IoT networks. Wirel. Pers. Commun. 2024, 136, 1981–2003.

| View Category | Features | Type |
|---|---|---|
| Processor Activity | Processor_DPC_Rate, Processor_pct_Idle_Time, etc. | Numerical |
| Network Activity | Network I/Current Bandwidth, Network I/Packets/sec, etc. | Numerical |
| Process Activity | Process_Pool_Paged Bytes, Process_IO Read Ops/sec, etc. | Numerical |
| Disk Activity | LogicalDisk(_Total)/Avg. Disk Bytes/Write, etc. | Numerical |
| Memory Activity | Memory/Pool Paged Bytes, Memory/Pool Nonpaged Bytes, etc. | Numerical |

| View Category | Features | Type |
|---|---|---|
| Network Traffic | num_spkts, num_dpkts, num_sbytes, etc. | Numerical |
| Temporal | num_dur, num_sinpkt, num_dinpkt, etc. | Numerical |
| Protocol and Service | cat_proto_3pc, cat_proto_a/n, etc. | Categorical |
| Security | num_sttl, num_dttl, num_sloss, etc. | Numerical |
| Connection | cat_state_CON, cat_state_ECO, etc. | Mixed (Mostly Numerical) |

| No. | Feature Category | Feature Name | No. | Feature Category | Feature Name |
|---|---|---|---|---|---|
| 1 | Intrinsic | src_ip | 22 | Content | ssl_cipher |
| 2 | Intrinsic | src_port | 23 | Content | ssl_resumed |
| 3 | Intrinsic | dst_ip | 24 | Content | ssl_established |
| 4 | Intrinsic | dst_port | 25 | Content | ssl_subject |
| 5 | Intrinsic | proto | 26 | Content | ssl_issuer |
| 6 | Intrinsic | src_bytes | 27 | Content | http_method |
| 7 | Intrinsic | dst_bytes | 28 | Content | http_uri |
| 8 | Intrinsic | src_pkts | 29 | Content | http_referrer |
| 9 | Intrinsic | dst_pkts | 30 | Content | http_version |
| 10 | Intrinsic | src_ip_bytes | 31 | Content | http_request_body_len |
| 11 | Intrinsic | dst_ip_bytes | 32 | Content | http_response_body_len |
| 12 | Content | service | 33 | Content | http_status_code |
| 13 | Content | dns_query | 34 | Content | http_user_agent |
| 14 | Content | dns_class | 35 | Content | http_orig_mime_types |
| 15 | Content | dns_type | 36 | Content | http_resp_mime_types |
| 16 | Content | dns_rcode | 37 | Time-based | network_ts |
| 17 | Content | dns_AA | 38 | Time-based | duration |
| 18 | Content | dns_RD | 39 | Host-based | conn_state |
| 19 | Content | dns_RA | 40 | Host-based | missed_bytes |
| 20 | Content | dns_rejected | 41 | Host-based | weird_name |
| 21 | Content | ssl_version | 42 | Host-based | weird_addl |
| | | | 43 | Host-based | weird_notice |

| Model Number | Model Type | Model Name | TON_IoT Network+Win10 (F1) |
|---|---|---|---|
| Model 1 | Single-view | CNN | 0.815 |
| Model 2 | Single-view | LSTM | 0.726 |
| Model 3 | Single-view | CNN+Attn-Based LSTM | 0.87 |
| Model 5 | Multi-view | (KG+CNN)+Attn-Based LSTM | 0.95 |
| Model 6 | Multi-view | (KG+multi-CNNs)+Attn-Based LSTM | 0.96 |

| Datasets | TON_IoT Network | TON_IoT Network+Win10 | UNSW-NB15 |
|---|---|---|---|
| Nodes | 43 | 170 | 43 |
| Edges | 696 | 10,894 | 874 |
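The node and edge counts above can be turned into a graph density, which gives a rough sense of how tightly connected each KG is. The short check below assumes each KG is a simple undirected graph, which the co-occurrence construction suggests but the table does not state; under that assumption, density is the edge count divided by N(N-1)/2.

```python
def density(nodes: int, edges: int) -> float:
    """Density of a simple undirected graph: edges / (nodes choose 2)."""
    possible = nodes * (nodes - 1) // 2
    return edges / possible


# Node and edge counts copied from the table above.
kg_sizes = {
    "TON_IoT Network": (43, 696),
    "TON_IoT Network+Win10": (170, 10894),
    "UNSW-NB15": (43, 874),
}

densities = {name: round(density(n, e), 3) for name, (n, e) in kg_sizes.items()}
for name, value in densities.items():
    print(f"{name}: {value}")
```

Under that assumption all three graphs are dense, with roughly 76% to 97% of possible feature pairs connected.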

| Model Type | Model Number | Model Name | TON_IoT Network (F1) |
|---|---|---|---|
| Single-view | Model 1 | CNN [26,34] | 0.7892/0.9111 |
| Single-view | Model 2 | LSTM [26,32] | 0.8383/0.922 |
| Single-view | Model 3 | CNN+Attn-Based LSTM | 0.93 |
| Multi-view | Model 5 | (KG+CNN)+Attn-Based LSTM | 0.96 |
| Multi-view | Model 6 | (KG+multi-CNNs)+Attn-Based LSTM | 0.963 |

| Model Number | Model Type | Model Name | UNSW-NB15 (F1) |
|---|---|---|---|
| Model 1 | Single-view | CNN [27] | 0.717 |
| Model 2 | Single-view | LSTM [27] | 0.811 |
| Model 3 | Single-view | CNN+Attn-Based LSTM | 0.814 |
| Model 5 | Multi-view | (KG+CNN)+Attn-Based LSTM | 0.821 |
| Model 6 | Multi-view | (KG+multi-CNNs)+Attn-Based LSTM | 0.837 |

| Model Name and Model Number | TON_IoT Network (F1) | TON_IoT Network+Win10 (F1) | UNSW-NB15 (F1) |
|---|---|---|---|
| CNN+Attn-Based LSTM (Model 3) | 0.93 | 0.87 | 0.814 |
| (KG+CNN)+Attn-Based LSTM (Model 5) | 0.96 | 0.95 | 0.821 |
| Multi-CNNs+Attn-Based LSTM (Model 4) | 0.94 | 0.924 | 0.82 |
| (KG+Multi-CNNs)+Attn-Based LSTM (Model 6) | 0.963 | 0.96 | 0.837 |
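To make the KG contribution in the table above easier to read, the F1 differences can be computed directly. The pairing used here is inferred from the model names and is an assumption: Model 5 is treated as Model 3 plus the KG, and Model 6 as Model 4 plus the KG. The variable and function names are illustrative only.

```python
# F1 scores copied from the table above, in column order:
# TON_IoT Network, TON_IoT Network+Win10, UNSW-NB15.
datasets = ["TON_IoT Network", "TON_IoT Network+Win10", "UNSW-NB15"]
f1 = {
    "Model 3": [0.93, 0.87, 0.814],
    "Model 4": [0.94, 0.924, 0.82],
    "Model 5": [0.96, 0.95, 0.821],
    "Model 6": [0.963, 0.96, 0.837],
}


def kg_gain(with_kg: str, without_kg: str) -> dict:
    """Absolute F1 difference per dataset between a KG model and its baseline."""
    return {
        d: round(a - b, 3)
        for d, a, b in zip(datasets, f1[with_kg], f1[without_kg])
    }


gain_single_cnn = kg_gain("Model 5", "Model 3")  # (KG+CNN) vs CNN
gain_multi_cnn = kg_gain("Model 6", "Model 4")   # (KG+Multi-CNNs) vs Multi-CNNs
print(gain_single_cnn)
print(gain_multi_cnn)
```

On these numbers the KG variant scores higher in every column, with the largest absolute difference on TON_IoT Network+Win10 and the smallest on UNSW-NB15 for the single-CNN pair.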

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, M.; Qiao, Y.; Lee, B. Multi-View Intrusion Detection Framework Using Deep Learning and Knowledge Graphs. Information 2025, 16, 377. https://doi.org/10.3390/info16050377
