Abstract

This paper presents a novel method for the super-resolution reconstruction and generation of synthetic aperture radar (SAR) images using an improved single-image generative adversarial network (ISinGAN). Unlike traditional machine learning methods, which typically require large datasets, SinGAN needs only a single input image to extract internal structural details and generate high-quality samples. To improve this framework further, we augment SinGAN with a self-attention module and incorporate noise specific to SAR images. These enhancements bring the generated images closer to real-world SAR scenarios while also improving the robustness of the SinGAN framework. Experimental results demonstrate that ISinGAN significantly enhances SAR image resolution and target recognition performance.

1. Introduction

Synthetic aperture radar (SAR) is a sophisticated high-resolution imaging radar technology that relies on Doppler frequency analysis. Unlike optical and infrared imaging techniques, SAR has a distinct advantage in its ability to capture images under challenging conditions, such as low-light environments and adverse weather. This characteristic makes SAR an indispensable tool across a wide range of applications, including national defense, security, and environmental monitoring [1,2,3]. Despite extensive prior research, SAR target recognition still faces many challenges, such as data scarcity and suboptimal image quality [4,5,6,7,8]. Conceptually, SAR target recognition can be viewed as a limited-sample recognition problem [9].

Current methods for SAR image generation can be roughly summarized into four categories:

(1) Geometric transformation techniques: This approach involves generating images through operations like panning, rotating, and adding noise to the original image [10].

(2) Virtual image generation: These techniques create virtual images, with the quality of the resulting image heavily dependent on the integrity of the original data [11,12,13,14].

(3) Local statistics or compensation algorithms: This category employs local statistical methods or compensation algorithms to generate artificial training data [15,16].

(4) Deep learning algorithms: Advanced deep learning techniques are increasingly used to address the challenges of SAR image generation and enhancement [17,18,19,20,21].

For methods 1, 2, and 3, the corresponding transformations must be designed by experts, so human factors greatly affect the accuracy and robustness of the output. For method 4, the commonly used deep learning models include convolutional neural networks (CNNs) [22,23,24] and generative adversarial networks (GANs) [25,26,27]. These models exhibit outstanding generalization capabilities and robustness, but they all require large-scale, high-quality datasets, which inevitably incur high additional costs. To address these problems, an image generation method based on the single-image generative adversarial network (SinGAN), a variant of the GAN, has been proposed [28,29].

SinGAN is an unconditional generative model that learns from a single image. It captures the internal compositional information of the image and generates diverse, high-quality samples that maintain the same overall structure. SinGAN employs a pyramid of fully convolutional GANs, with each level learning the patch composition of the image at a different scale. This architecture allows new samples to be generated at any size or aspect ratio. The generated samples differ visibly from the original image but retain the overall structure and fine-texture characteristics of the training image. Unlike earlier single-image GANs, SinGAN is not limited to texture images; it operates in an unconditional manner, enabling more flexible image generation.

The limitations of SinGAN are primarily twofold. First, it struggles when confronted with significant dissimilarity between image blocks, impeding the acquisition of a cohesive composition and often resulting in the generation of unrealistic pictures. Second, the generated image content is heavily constrained by the semantic information provided in the training image, limiting its creative potential. Despite the model learning the composition of individual images in detail, the outputs remain inherently restricted and lack the flexibility to generate highly diverse or novel content.

In the context of SAR target detection tasks, additional challenges arise due to environmental and equipment factors [30]. SAR images are highly sensitive to these external influences, and signal noise and clutter make a single image inadequate for capturing the diverse scenarios encountered in practical applications [31,32]. Consequently, SinGAN cannot be directly applied to SAR image processing, and further improvements are needed to address these challenges. We therefore added SAR-specific noise to the baseline noise to further enhance the robustness of SinGAN, making the resulting images more realistic and effective. This helps keep the difference between the generated image and the real image from becoming too large or too small, which mitigates the limitations of SinGAN. To counteract the potential destabilizing effects of the added noise, we incorporated an attention mechanism inspired by recent advancements in the field [33,34,35,36,37]. This mechanism starts with the full image, generates sub-regions iteratively, and then makes predictions for each sub-region. From these predictions, we obtain feature maps that constrain and mitigate the impact of noise on the subject, thus ensuring greater stability in the model.

The experimental results highlight the superiority of ISinGAN (improved SinGAN) over its predecessor. Remarkably, ISinGAN demonstrates enhanced performance without the need for extensive datasets, significantly reducing the costs associated with subsequent tasks such as target recognition.

This work contributes to the field in two key ways. First, it pioneers the use of a single SAR image to train a model for super-resolution and image generation tasks, eliminating the need for diverse datasets. Second, by modifying the noise generation model in SinGAN and integrating an attention mechanism specifically designed for SAR images, ISinGAN surpasses SinGAN in performance. It also demonstrates a greater ability to extract useful information from a single image, leading to the generation of more realistic images.

2. The Proposed Methods

2.1. The Related Theory of SinGAN

SinGAN is trained to capture the internal composition of image patches, enabling it to generate high-quality and diverse samples that retain the same visual content as the input image. It features a pyramid of fully convolutional GANs, with each level learning the composition of patches at a different scale. This structure allows SinGAN to generate new samples of any size or aspect ratio, exhibiting significant variability while preserving the overall structure and fine-texture details of the training image. To capture global attributes such as target shape and arrangement, as well as fine details and textures, SinGAN uses a hierarchy of patch-GANs, in which each discriminator captures the patch statistics at a different scale.

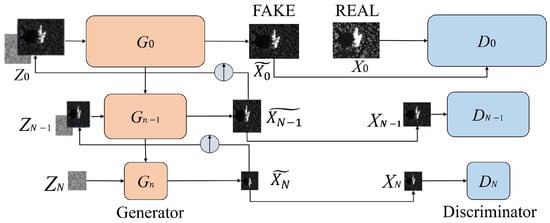

SinGAN consists of a pyramid of generators $\{G_0, \ldots, G_N\}$, and each generator $G_n$ is trained against the corresponding level of an image pyramid of $x$, $\{x_0, \ldots, x_N\}$, where $x_n$ is a version of $x$ downsampled by a factor $r^n$ for some $r > 1$. In adversarial training, the generator $G_n$ learns to fool an associated discriminator $D_n$, which tries to distinguish patches in the generated samples $\tilde{x}_n$ from patches in $x_n$. The generation of image samples starts from the coarsest scale and then passes through all generators in turn to reach the finest scale. At the coarsest scale, the image samples are generated purely from spatial Gaussian white noise $z_N$, i.e., $\tilde{x}_N = G_N(z_N)$. More details can be found in [28]. As shown in Figure 1, the framework consists of generators $\{G_n\}_{n=0}^{N}$, each of which takes a noise input $z_n$ and outputs fake samples, and discriminators $\{D_n\}_{n=0}^{N}$, which distinguish between real images $x_n$ and generated samples. Training progresses from coarse to fine ($N \to 0$), with an adversarial loss at each scale.
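To make the pyramid concrete, the following minimal sketch lists the image size at each scale for a 100 × 100 input (the training resolution reported in Section 3). The scale factor r = 4/3, the 25-pixel minimum size, and the stopping rule are assumed values chosen for illustration, and `pyramid_shapes` is a hypothetical helper rather than part of the authors' code.

```python
def pyramid_shapes(height, width, r=4 / 3, min_size=25):
    """Return [(h_0, w_0), ..., (h_N, w_N)], finest scale first.

    Scale n is the input downsampled by r**n. Scales are added until the
    shorter side would drop below min_size (an assumed stopping rule).
    """
    shapes = []
    n = 0
    while True:
        h = round(height / r ** n)
        w = round(width / r ** n)
        if min(h, w) < min_size:
            break
        shapes.append((h, w))
        n += 1
    return shapes


shapes = pyramid_shapes(100, 100)
N = len(shapes) - 1  # index of the coarsest scale
```

Under these assumptions the pyramid has five levels, from 100 × 100 at scale 0 down to 32 × 32 at scale N = 4.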

Figure 1. The generator–discriminator pyramid of ISinGAN.

Figure 1 shows the network structure of SinGAN.

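At every scale $n$ ($n < N$), the input of $G_n$ is the noise $z_n$ together with the image from the previous scale, $\tilde{x}_{n+1}$, upsampled to the current resolution, $(\tilde{x}_{n+1})\uparrow^r$. The convolutional block $\psi_n$ consists of 5 conv layers, and its output is added back to $(\tilde{x}_{n+1})\uparrow^r$.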

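All generators have a similar architecture, which can be written as

$$
\tilde{x}_n = G_n\left(z_n, (\tilde{x}_{n+1})\uparrow^r\right) = (\tilde{x}_{n+1})\uparrow^r + \psi_n\left(z_n + (\tilde{x}_{n+1})\uparrow^r\right),
$$

where $z_n$ is the spatial noise and $\psi_n$ is a fully convolutional network with 5 conv layers.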
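The coarse-to-fine residual update can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: `psi_placeholder` is a hypothetical stand-in for the learned 5-layer convolutional block, nearest-neighbour resizing replaces whatever interpolation the real model uses, and the coarsest scale is treated as the same update applied to a zero image.

```python
import numpy as np


def upsample_nearest(img, shape):
    """Nearest-neighbour resize of a 2-D array to `shape` (assumed interpolation)."""
    rows = np.arange(shape[0]) * img.shape[0] // shape[0]
    cols = np.arange(shape[1]) * img.shape[1] // shape[1]
    return img[np.ix_(rows, cols)]


def psi_placeholder(x):
    """Hypothetical stand-in for the learned 5-layer conv block psi_n."""
    return 0.1 * np.tanh(x)


def sample_pyramid(shapes, noise_std=1.0, rng=None):
    """shapes: list of (h, w), finest scale first. Returns the finest-scale sample."""
    rng = np.random.default_rng() if rng is None else rng
    # Coarsest scale N: the sample is generated from noise alone.
    x = psi_placeholder(noise_std * rng.standard_normal(shapes[-1]))
    # Remaining scales, coarse to fine: upsample, add noise, add the residual.
    for shape in reversed(shapes[:-1]):
        up = upsample_nearest(x, shape)
        z = noise_std * rng.standard_normal(shape)
        x = up + psi_placeholder(z + up)
    return x


sample = sample_pyramid([(100, 100), (75, 75), (56, 56)],
                        rng=np.random.default_rng(0))
```

Because each scale only adds a residual to the upsampled coarser image, the global layout is fixed early and the finer scales contribute detail.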

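The next step is to train the multiscale architecture. The training loss for the $n$th GAN includes an adversarial term and a reconstruction term:

$$
\min_{G_n} \max_{D_n} \; \mathcal{L}_{adv}(G_n, D_n) + \alpha \mathcal{L}_{rec}(G_n),
$$

where $\alpha$ weights the reconstruction term.

The adversarial loss takes the form of the WGAN-GP (Wasserstein GAN with gradient penalty) loss to increase training stability:

$$
\mathcal{L}_{adv}(G_n, D_n) = \mathbb{E}_{x \sim P_r}\left[D_n(x)\right] - \mathbb{E}_{\tilde{x} \sim P_g}\left[D_n(\tilde{x})\right] - \lambda \, \mathbb{E}_{\hat{x} \sim P_{\hat{x}}}\left[\left(\left\| \nabla_{\hat{x}} D_n(\hat{x}) \right\|_2 - 1\right)^2\right],
$$

where $P_r$ is the distribution of original images, $P_g$ is the distribution of generated images, and $\lambda$ is the gradient penalty weight. $P_{\hat{x}}$ is defined by sampling uniformly along straight lines between pairs of points drawn from $P_r$ and $P_g$.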
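As a rough illustration of the gradient penalty, the sketch below evaluates the usual WGAN-GP critic objective, E[D(fake)] − E[D(real)] + λ · penalty, which the discriminator minimizes. The critic here is a toy function D(x) = tanh(⟨w, x⟩) whose gradient is known in closed form, so no autodiff library is needed. The toy critic, the value λ = 10, and all function names are assumptions made for the example and are not taken from the paper.

```python
import numpy as np


def critic(x, w):
    """Toy critic D(x) = tanh(<w, x>) on flattened images (illustrative only)."""
    return np.tanh(x @ w)


def critic_grad(x, w):
    """Analytic gradient of the toy critic with respect to x, one row per sample."""
    return (1.0 - np.tanh(x @ w) ** 2)[:, None] * w[None, :]


def wgan_gp_critic_loss(real, fake, w, lam=10.0, rng=None):
    """Critic loss: E[D(fake)] - E[D(real)] + lam * gradient penalty."""
    rng = np.random.default_rng() if rng is None else rng
    # x_hat is sampled uniformly on the line between each real/fake pair.
    eps = rng.uniform(size=(real.shape[0], 1))
    x_hat = eps * real + (1.0 - eps) * fake
    grad_norm = np.linalg.norm(critic_grad(x_hat, w), axis=1)
    penalty = np.mean((grad_norm - 1.0) ** 2)
    return critic(fake, w).mean() - critic(real, w).mean() + lam * penalty
```

The penalty pushes the critic's gradient norm toward 1 on the interpolated samples, which is how WGAN-GP enforces the Lipschitz constraint without weight clipping.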

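The reconstruction loss ensures that there exists a specific set of noise maps that generates the original image $x$. The specific noise is set as $\{z_N^{rec}, z_{N-1}^{rec}, \ldots, z_0^{rec}\} = \{z^*, 0, \ldots, 0\}$, where $z^*$ is a noise map that is kept fixed during training. The image generated from these noise maps at the $n$th scale is denoted by $\tilde{x}_n^{rec}$. Then, $\mathcal{L}_{rec}$ can be expressed as follows:

$$
\mathcal{L}_{rec} =
\begin{cases}
\left\| G_n\left(0, (\tilde{x}_{n+1}^{rec})\uparrow^r\right) - x_n \right\|^2, & n < N, \\
\left\| G_N(z^*) - x_N \right\|^2, & n = N.
\end{cases}
$$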

2.2. Incorporating the SAR Noise Model

Lee filtering [32] is designed based on a fully developed multiplicative noise model. The filter based on the minimum mean square error can be expressed as follows:

$$
\hat{R}(t) = I(t)\,W(t) + \bar{I}(t)\left(1 - W(t)\right),
$$

where $\hat{R}(t)$ represents the image value after denoising, $\bar{I}(t)$ represents the mathematical expectation (local mean) of the noisy image $I(t)$, and $W(t)$ represents the weight coefficient, which is given by

$$
W(t) = 1 - \frac{C_u^2}{C_I^2(t)},
$$

where $C_u$ and $C_I(t)$ represent the standard deviation coefficients of the speckle noise $u$ and the image $I(t)$, respectively, and their expressions are as follows:

$$
C_u = \frac{\sigma_u}{\bar{u}}, \qquad C_I(t) = \frac{\sigma_I(t)}{\bar{I}(t)},
$$

where $\sigma_u$ and $\bar{u}$ represent the standard deviation and mean of the speckle noise $u$, respectively, and $\sigma_I(t)$ represents the standard deviation of the image.
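A compact NumPy sketch of the Lee filter described above is given below. It estimates the local mean and variance in a sliding window and blends each pixel with its local mean using the weight 1 − C_u²/C_I². The window size, the clipping of the weight to [0, 1], and the use of C_u = 1/√L for L-look intensity speckle are assumptions of this sketch, not settings reported in the paper.

```python
import numpy as np


def lee_filter(img, win=5, looks=1.0):
    """Lee MMSE speckle filter on a 2-D intensity image.

    win   : odd window size for the local statistics (assumed value)
    looks : equivalent number of looks L; for fully developed intensity
            speckle the noise variation coefficient is C_u = 1 / sqrt(L)
    """
    img = np.asarray(img, dtype=float)
    pad = win // 2
    padded = np.pad(img, pad, mode="reflect")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (win, win))
    local_mean = windows.mean(axis=(-2, -1))
    local_var = windows.var(axis=(-2, -1))

    cu2 = 1.0 / looks                                      # C_u^2
    ci2 = local_var / np.maximum(local_mean, 1e-12) ** 2   # C_I^2 per pixel
    # Weight near 0 in flat regions (output = local mean), near 1 at edges.
    weight = np.clip(1.0 - cu2 / np.maximum(ci2, 1e-12), 0.0, 1.0)
    return img * weight + local_mean * (1.0 - weight)
```

In homogeneous regions C_I is close to C_u, so the weight approaches zero and the output approaches the local mean; near strong edges or point targets C_I is large and the original pixel is largely preserved.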

The noise modeling and self-attention mechanism are two key innovative modules that distinguish ISinGAN from SinGAN and also make the most significant contributions to SAR image processing. Specifically, the noise modeling module adapts to the unique speckle noise distribution characteristics of SAR images to construct a noise generation model that more closely matches real imaging scenarios. The self-attention mechanism, by capturing the global contextual dependencies in SAR images, effectively improves the reconstruction accuracy of complex ground object structures.
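To illustrate how self-attention captures global context, the following sketch implements a simplified attention layer in the spirit of the self-attention GAN [33]. The 1 × 1 convolutions are written as plain matrices acting on the channel dimension. The matrix names, the channel sizes, and the omission of the final output projection are simplifications assumed for this example; the paper does not give the exact layer configuration.

```python
import numpy as np


def self_attention(x, wq, wk, wv, gamma=0.0):
    """Simplified self-attention over spatial positions.

    x      : (C, H, W) feature map
    wq, wk : (C_k, C) query/key projections (1x1 convs as matrices)
    wv     : (C, C) value projection
    gamma  : residual scale; 0 leaves the input unchanged
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)                    # (C, N) with N = H * W
    q, k, v = wq @ flat, wk @ flat, wv @ flat
    scores = q.T @ k                              # (N, N): position i vs. all j
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=1, keepdims=True)        # softmax over j
    out = v @ attn.T                              # out[:, i] = sum_j attn[i, j] v[:, j]
    return x + gamma * out.reshape(c, h, w), attn
```

Every output position is a weighted sum over all spatial positions, so distant parts of the scene (for example, a target and its shadow) can influence each other in a single layer, which a small convolution kernel cannot achieve.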

After such processing, the original training noise and the SAR image-specific noise are combined, making the model more suitable for SAR images. We incorporated the self-attention module into both the generator and the discriminator and trained them alternately by minimizing the hinge version of the adversarial loss.
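The text does not state the exact rule used to combine the two noise sources, so the following is only one plausible sketch. It draws unit-mean gamma speckle, which is the standard model for fully developed L-look intensity speckle, centres it, and adds it to a Gaussian noise map. The additive mixing, the `speckle_weight` parameter, the default number of looks, and the function name are all assumptions made for illustration.

```python
import numpy as np


def sar_noise_map(shape, looks=4.0, gauss_std=1.0, speckle_weight=0.5, rng=None):
    """Gaussian noise map plus a zero-mean speckle component (assumed mixing rule)."""
    rng = np.random.default_rng() if rng is None else rng
    gauss = gauss_std * rng.standard_normal(shape)
    # Unit-mean gamma speckle with variance 1 / looks.
    speckle = rng.gamma(shape=looks, scale=1.0 / looks, size=shape)
    # Centre the speckle so the combined map stays zero-mean.
    return gauss + speckle_weight * (speckle - 1.0)


z = sar_noise_map((256, 256), rng=np.random.default_rng(0))
```

With these defaults the combined map has variance gauss_std² + speckle_weight²/looks = 1.0625, so the speckle term perturbs the baseline noise without dominating it.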

3. Results

The experimental data are based on the SAR static ground target data from Mobile and Stationary Target Acquisition and Recognition (MSTAR), supported by the Defense Advanced Research Projects Agency (DARPA). This dataset is commonly used in research on SAR image target recognition. The sensor used to collect the data is a high-resolution SAR system with a resolution of 0.3 × 0.3 m, operating in the X-band with horizontal transmit and receive (HH) polarization mode.

The training set contains radar target image data acquired at an elevation angle of 17° and includes three target types: BTR70 (armored transport vehicle), BMP2 (infantry combat vehicle), and T72 (tank). We trained our network on an NVIDIA TITAN XP (manufactured by NVIDIA Corporation, Santa Clara, CA, USA) using TensorFlow and CUDA. The Adam optimizer was chosen for the model with parameters β1 = 0.9 and β2 = 0.99, and the learning rate was set to 0.0001. The batch size was set to 16, and the model was trained for 500 epochs. To speed up model training, we downsampled the image data to 100 × 100 pixels.

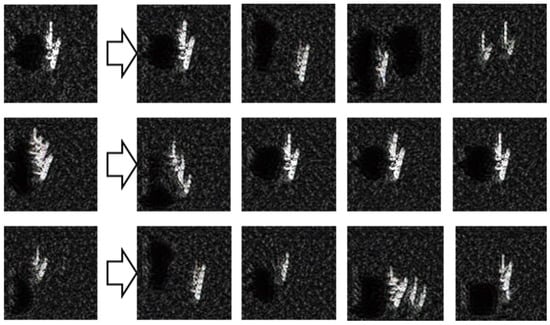

The images generated by ISinGAN are shown in Figures 2 and 3. The model has effectively captured the relationship between the primary object and its surrounding environment, demonstrating robustness in image generation. Figure 2 indicates that ISinGAN can generate new object samples while maintaining the original patch composition. Figure 3 illustrates the generated images from various angles and under different lighting conditions, providing additional details of the original object.

Figure 2. Generated images from a single SAR image. ISinGAN employs a special multiscale training strategy, which can be used to generate new realistic image samples while retaining the original object structure.

Figure 3. Image generation examples from different angles and under different lighting conditions. ISinGAN has learned the composition of the main body of the image and surrounding environment, even generating shadows for some images.

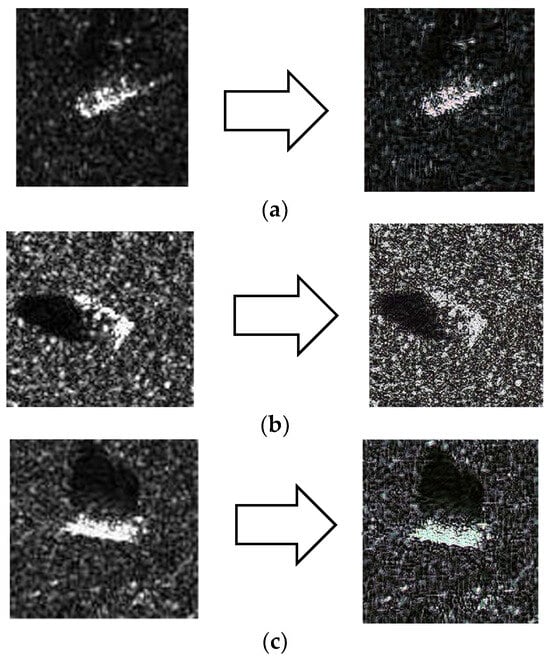

ISinGAN is employed for the super-resolution reconstruction of SAR images, as illustrated in Figure 4. It can be seen that the reconstructed images exhibit significantly improved visual clarity, particularly in the preservation of fine-grained details such as edge structures and textural patterns. The proposed model achieves remarkable performance in maintaining sharp edge delineation in background regions while simultaneously enhancing texture representation and shadow details across all reconstructed images.

Figure 4. Super-resolution reconstruction results of SAR images from the ISinGAN network. (a) Original low-resolution SAR image; (b) Super-resolved image generated by SinGAN; (c) Super-resolved image generated by ISinGAN (proposed method).

These images indicate that the model achieves effective super-resolution of SAR images, noticeably sharpening the essential features of key targets.

Our study involved comprehensive qualitative and quantitative evaluations using a diverse set of scene images, including armored personnel carriers, howitzers, and bulldozers, all sourced from the MSTAR dataset. The evaluation of ISinGAN encompasses two primary dimensions, image generation quality and super-resolution performance. For the image generation assessment, we established a comprehensive evaluation consisting of two quantitative aspects: (1) target recognition accuracy measured through a CNN and other methods; (2) single-image Fréchet inception distance (SIFID) assessment. Regarding super-resolution performance, the evaluation also included two metrics: (1) peak signal-to-noise ratio (PSNR) assessment and (2) structural similarity index measure (SSIM) evaluation.

3.1. Target Recognition

To provide a baseline for comparison with ISinGAN, we trained a CNN on the same set of SAR images. The CNN architecture consists of four convolutional layers with filter sizes of 6 × 6, 6 × 6, 5 × 5, and 6 × 6, each with a stride of 1. Additionally, there are three 3 × 2 maximum pooling layers, each with a stride of 2. The number of feature maps is set to 16, 32, 64, and 128 for the four convolutional layers, respectively, and the activation function used is ReLU (rectified linear unit). The learning rate is set to 0.0001.

Considering the significant impact of varying incidence angles on model performance, we constructed the dataset to include SAR images across a diverse range of incidence angles. The training dataset comprised 1800 selected images, ensuring comprehensive angular coverage. To ensure statistical reliability and robustness of evaluation, we conducted three independent experimental trials with randomized initialization, and the final performance metrics were derived from the results averaged across all trials. The experimental results of different methods are shown in Table 1. It can be seen that ISinGAN achieves state-of-the-art performance, exhibiting superior accuracy (98.16%) with minimal variance (σ² = 0.33) compared to other GANs. This optimal performance can be attributed to the model’s robust architecture and effective training strategy, as evidenced by the comprehensive evaluation metrics.

Table 1. Target recognition accuracy of different methods.

3.2. Single-Image Fréchet Inception Distance Assessment

The single-image Fréchet inception distance (SIFID) metric serves as a robust quantitative measure for evaluating the perceptual quality of generated images, particularly in assessing the performance of GANs [32,33]. SIFID is the Fréchet inception distance between the statistics of the features in the original image and generated samples, where a lower SIFID value indicates a higher degree of similarity between the two images. SIFID demonstrates superior capability over the conventional inception score (IS) in quantifying the similarity between generated and real images, primarily due to its utilization of deep feature representations. Table 2 shows the SIFID of ISinGAN. It can be seen that the mean SIFID values generated at scale N − 1 are significantly lower than those obtained at scale N.
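The Fréchet distance underlying SIFID compares Gaussian statistics (mean and covariance) of two feature sets. The sketch below assumes the deep features have already been extracted and are supplied as arrays with one row per sample; the feature extraction itself, the array layout, and the function name are assumptions of this example. The trace of the matrix square root is obtained from the eigenvalues of the covariance product, which avoids a dependency beyond NumPy.

```python
import numpy as np


def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two (n_samples, dim) feature sets."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((cov_a cov_b)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative for
    # positive semi-definite inputs (tiny negative values are clipped).
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)
```

Identical feature sets give a distance of zero, and shifting every feature by a constant changes only the mean term, which matches the interpretation that lower values indicate closer feature statistics.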

Table 2. SIFID of ISinGAN.

3.3. SSIM and PSNR Assessment

ISinGAN achieves excellent performance in SAR image super-resolution, remarkably enhancing edge sharpness and textural precision while maintaining background clarity and shadow details. To provide a more objective evaluation of the super-resolution performance, we present specific peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) values for various methods, as shown in Table 3. The results indicate that our model outperforms competing algorithms, achieving higher PSNR and SSIM values for SAR images. This reinforces the model’s effectiveness in enhancing noise suppression and structural reconstruction in SAR imagery.

Table 3. PSNR and SSIM of different methods.

To further validate the effectiveness of ISinGAN in SAR image super-resolution tasks, we quantitatively assess the results using two standard metrics: peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM). As presented in Table 3, ISinGAN achieves superior performance, significantly outperforming existing methods in terms of both noise suppression and structural detail preservation.
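For reference, both metrics can be computed in a few lines. The sketch below gives the standard PSNR definition and a simplified SSIM evaluated from whole-image statistics rather than the usual sliding window, so its values will differ from windowed SSIM implementations. The 8-bit data range and the constants 0.01 and 0.03 are the commonly used defaults and are assumed here; the paper does not state which implementation produced Table 3.

```python
import numpy as np


def psnr(reference, estimate, data_range=255.0):
    """Peak signal-to-noise ratio in dB."""
    mse = np.mean((reference.astype(float) - estimate.astype(float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def global_ssim(reference, estimate, data_range=255.0):
    """SSIM from whole-image statistics (no sliding window; a simplification)."""
    x = reference.astype(float)
    y = estimate.astype(float)
    c1 = (0.01 * data_range) ** 2   # stabilises the luminance term
    c2 = (0.03 * data_range) ** 2   # stabilises the contrast/structure term
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)
```

PSNR measures pixel-wise fidelity and grows as the mean squared error shrinks, while SSIM compares luminance, contrast, and structure and equals 1 only for identical images.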

In the preceding section, we compared the results of ISinGAN with those of six classic algorithms such as CNN- and GAN-based methods. However, constrained by time and workload, a comparative analysis between ISinGAN and recently prevalent algorithms such as vision transformers (ViTs) or diffusion models was not conducted. When conditions permit in the future, we intend to analyze the performance of a broader range of existing algorithms in the context of synthetic aperture radar (SAR) image generation and super-resolution reconstruction.

4. Conclusions

This paper presents an advanced SinGAN-based framework for single-image SAR image generation and super-resolution. The proposed architecture integrates a SAR-specific noise module and a self-attention mechanism, both tailored to SAR image characteristics. Unlike conventional approaches requiring extensive training datasets, this model achieves excellent performance using only a single input SAR image, significantly improving computational efficiency. Quantitative evaluations consistently verify the model’s superior capability compared to other models.

Future research directions will focus on three aspects: (1) developing adaptive noise modulation strategies to enhance model generalization across diverse SAR imaging conditions, (2) implementing dynamic noise component balancing through advanced optimization algorithms, and (3) establishing quantitative metrics for realistic SAR image evaluation. These advancements will enable the generation of more sophisticated and physically accurate SAR representations, particularly for complex scenarios involving multiple scattering mechanisms and varying terrain characteristics. The proposed enhancements are expected to significantly improve the model’s applicability in critical domains such as military surveillance, environmental monitoring, and disaster assessment.

Author Contributions

Conceptualization, X.Y.; software, L.N.; writing—original draft preparation, X.Y.; writing—review and editing, Y.Z.; visualization, L.Z.; project administration, X.Y.; funding acquisition, X.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant numbers 62461034 and 62061026), the Science and Technology Program of Gansu Province of China (grant number 24YFGM001), and the Science and Technology Program of Qingyang of China (grant number QY-STK-2023A-058).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Cerutti-Maori, D.; Sikaneta, I.; Gierull, C.H. Optimum SAR/GMTI Processing and Its Application to the Radar Satellite RADARSAT-2 for Traffic Monitoring. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3868–3881.

2. Zhang, M.; Lu, H.; Li, S.; Li, Z. High-resolution wide-swath SAR imaging with multifrequency pulse diversity mode in azimuth multichannel system. Int. J. Remote Sens. 2024, 45, 29.

3. Xu, W.; Hu, J.; Huang, P.; Tan, W.; Dong, Y. Azimuth phase center adaptive adjustment upon reception for high-resolution wide-swath imaging. Sensors 2019, 19, 4277.

4. Tang, T.; Cui, Y.; Feng, R.; Xiang, D. Vehicle Target Recognition in SAR Images with Complex Scenes Based on Mixed Attention Mechanism. Information 2024, 15, 159.

5. Sanderson, J.; Mao, H.; Abdullah, M.A.M.; Al-Nima, R.R.O.; Woo, W.L. Optimal Fusion of Multispectral Optical and SAR Images for Flood Inundation Mapping through Explainable Deep Learning. Information 2023, 14, 660.

6. Zeng, H.; Ma, P.; Shen, H.; Su, C.; Wang, H.; Wang, Y.; Yang, W.; Liu, W. A Coarse-to-Fine Scene Matching Method for High-Resolution Multiview SAR Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–14.

7. Arai, K.; Nakaoka, Y.; Okumura, H. Method for Landslide Area Detection Based on EfficientNetV2 with Optical Image Converted from SAR Image Using pix2pixHD with Spatial Attention Mechanism in Loss Function. Information 2024, 15, 524.

8. Zha, Y.; Pu, W.; Chen, G.; Huang, Y.; Yang, J. A Minimum-Entropy Based Residual Range Cell Migration Correction for Bistatic Forward-Looking SAR. Information 2016, 7, 8.

9. Franceschetti, G.; Migliaccio, M.; Riccio, D. On ocean SAR raw signal simulation. IEEE Trans. Geosci. Remote Sens. 1998, 36, 84–100.

10. Jia, Y.; Liu, S.; Liu, Y.; Zhai, L.; Gong, Y.; Zhang, X. Echo-Level SAR Imaging Simulation of Wakes Excited by a Submerged Body. Sensors 2024, 24, 1094.

11. Wu, M.; Zhang, L.; Niu, J.; Wu, Q.J. Target detection in clutter/interference regions based on deep feature fusion for HFSWR. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5581–5595.

12. Schmitt, A. Multiscale and multidirectional multilooking for SAR image enhancement. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5117–5134.

13. Gleich, D.; Datcu, M. Wavelet-based SAR image despeckling and information extraction, using particle filter. IEEE Trans. Image Process. 2009, 18, 2167–2184.

14. Yahya, N.; Kamel, N.S.; Malik, A.S. Subspace-based technique for speckle noise reduction in SAR images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6257–6271.

15. Zhang, Y.; Chen, H.; Ding, C.; Wang, H. A novel approach for shadow enhancement in high-resolution SAR images using the height-variant phase compensation algorithm. IEEE Geosci. Remote Sens. Lett. 2012, 10, 189–193.

16. Thayaparan, T.; Stankovic, L.; Wernik, C.; Dakovic, M. Real-time motion compensation, image formation and image enhancement of moving targets in ISAR and SAR using S-method-based approach. IET Signal Process. 2008, 2, 247–264.

17. Chen, S.; Wang, H.; Xu, F.; Jin, Y.-Q. Target classification using the deep convolutional networks for SAR images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4806–4817.

18. Liu, P.; Li, J.; Wang, L.; He, G. Remote sensing data fusion with generative adversarial networks: State-of-the-art methods and future research directions. IEEE Geosci. Remote Sens. Mag. 2022, 10, 295–328.

19. Yuan, C.; Deng, K.; Li, C.; Zhang, X.; Li, Y. Improving image super-resolution based on multiscale generative adversarial networks. Entropy 2022, 24, 1030.

20. Xie, J.; Fang, L.; Zhang, B.; Chanussot, J.; Li, S. Super resolution guided deep network for land cover classification from remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–12.

21. Karwowska, K.; Wierzbicki, D. MCWESRGAN: Improving Enhanced Super-Resolution Generative Adversarial Network for Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 9459–9479.

22. Vitale, S.; Ferraioli, G.; Pascazio, V. Multi-objective CNN-based algorithm for SAR despeckling. IEEE Trans. Geosci. Remote Sens. 2020, 59, 9336–9349.

23. Li, Y.; Du, L.; Wei, D. Multiscale CNN based on component analysis for SAR ATR. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–12.

24. Li, H.; Huang, H.; Chen, L.; Peng, J.; Huang, H.; Cui, Z.; Mei, X.; Wu, G. Adversarial examples for CNN-based SAR image classification: An experience study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1333–1347.

25. Zhu, J.; Lin, Z.; Wen, B. TM-GAN: A Transformer-Based Multi-Modal Generative Adversarial Network for Guided Depth Image Super-Resolution. IEEE J. Emerg. Sel. Top. Circuits Syst. 2024, 14, 261–274.

26. Hou, J.; Bian, Z.; Yao, G.; Lin, H.; Zhang, Y.; He, S.; Chen, H. Attribute Scattering Center Assisted SAR ATR Based on GNN-FiLM. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

27. Ye, T.; Kannan, R.; Prasanna, V.; Busart, C. Adversarial attack on GNN-based SAR image classifier. Artif. Intell. Mach. Learn. Multi-Domain Oper. Appl. V SPIE 2023, 12538, 291–295.

28. Shaham, T.R.; Dekel, T.; Michaeli, T. SinGAN: Learning a generative model from a single natural image. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4570–4580.

29. Cai, Z.; Xiong, Z.; Xu, H.; Wang, P.; Li, W.; Pan, Y. Generative Adversarial Networks: A Survey Toward Private and Secure Applications. ACM Comput. Surv. 2021, 54, 132.

30. Singh, P.; Diwakar, M.; Shankar, A.; Shree, R.; Kumar, M. A Review on SAR Image and its Despeckling. Arch. Comput. Methods Eng. 2021, 28, 4633–4653.

31. Tarchi, D.; Lukin, K.; Fortuny-Guasch, J.; Mogyla, A.; Vyplavin, P.; Sieber, A. SAR imaging with noise radar. IEEE Trans. Aerosp. Electron. Syst. 2010, 46, 1214–1225.

32. Zhang, W.P.; Tong, X. Noise modeling and analysis of SAR ADCs. IEEE Trans. VLSI Syst. 2014, 23, 2922–2930.

33. Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-attention generative adversarial networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 7354–7363.

34. Chaabane, F.; Réjichi, S.; Tupin, F. Self-attention generative adversarial networks for times series VHR multispectral image generation. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 4644–4647.

35. Zhao, H.; Jia, J.; Koltun, V. Exploring self-attention for image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10076–10085.

36. Zheng, C.; Jiang, X.; Zhang, Y.; Liu, X.; Yuan, B.; Li, Z. Self-normalizing generative adversarial network for super-resolution reconstruction of SAR images. In Proceedings of the IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1911–1914.

37. Lee, J.S.; Wen, J.H.; Ainsworth, T.L.; Chen, K.-S.; Chen, A.J. Improved sigma filter for speckle filtering of SAR imagery. IEEE Trans. Geosci. Remote Sens. 2008, 47, 202–213.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).