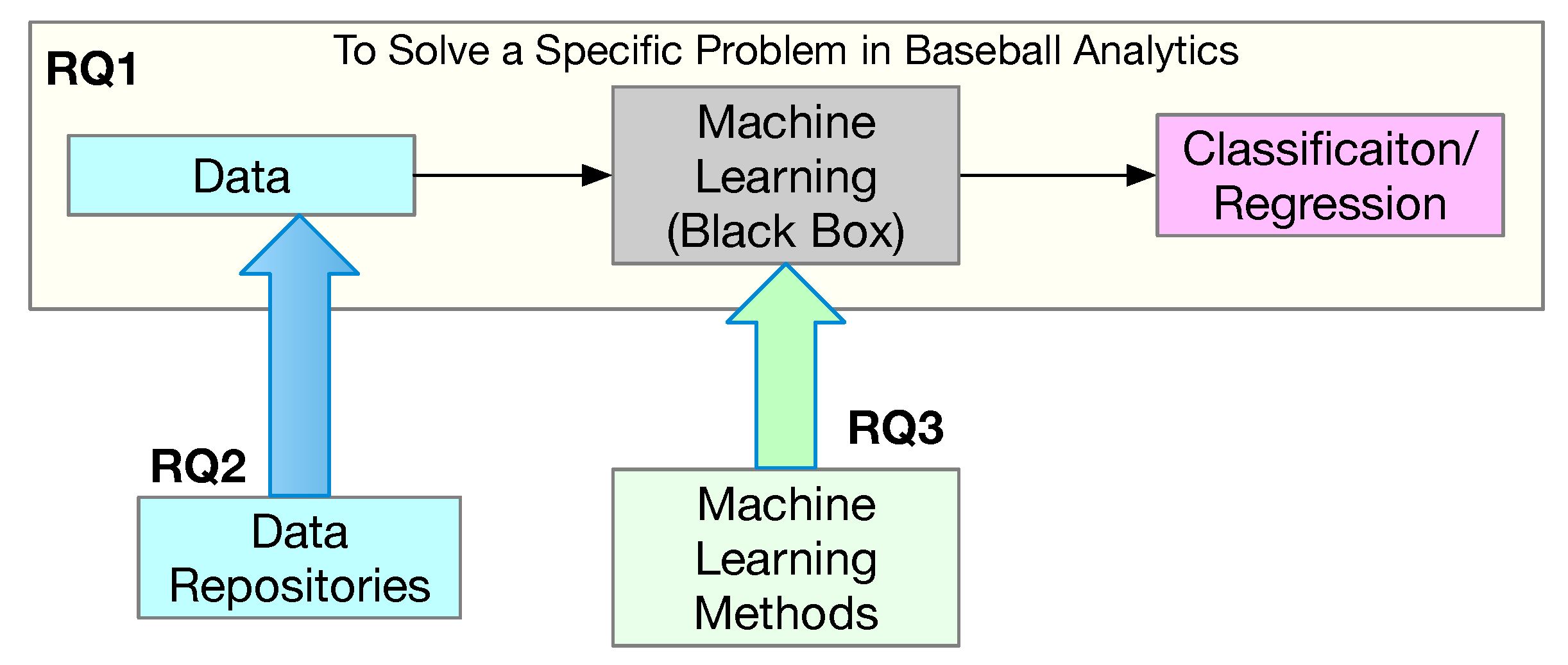

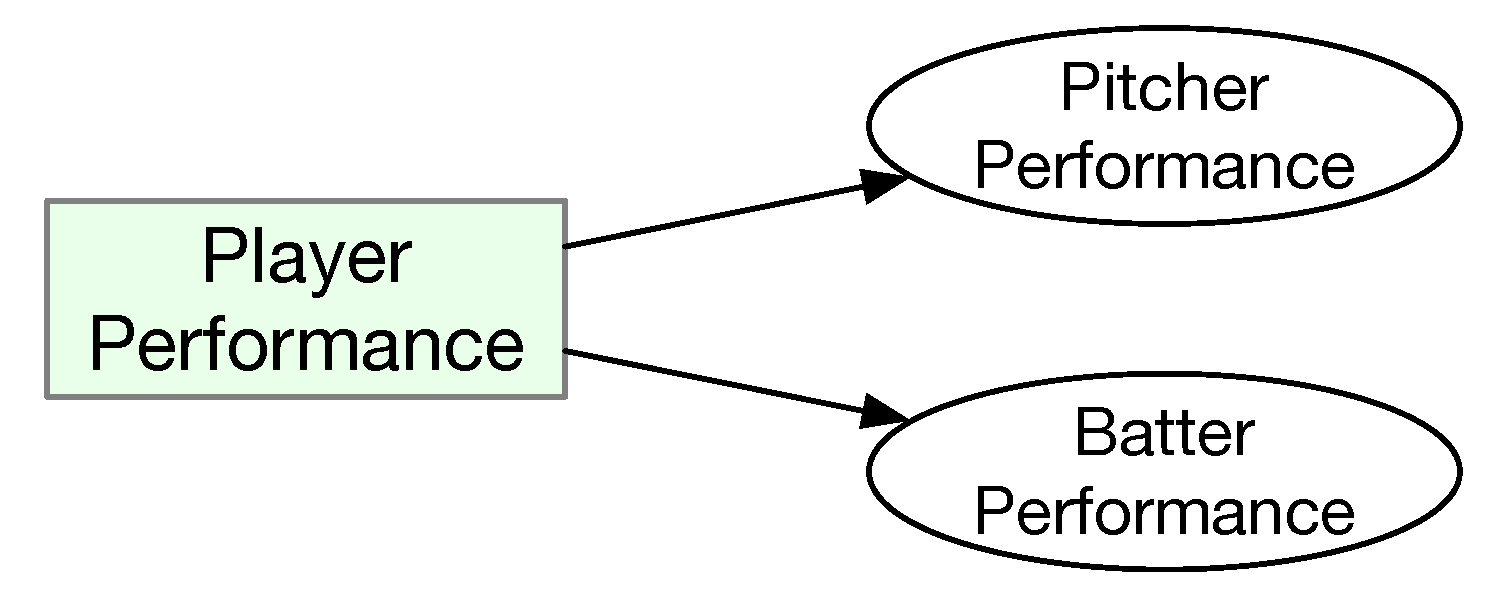

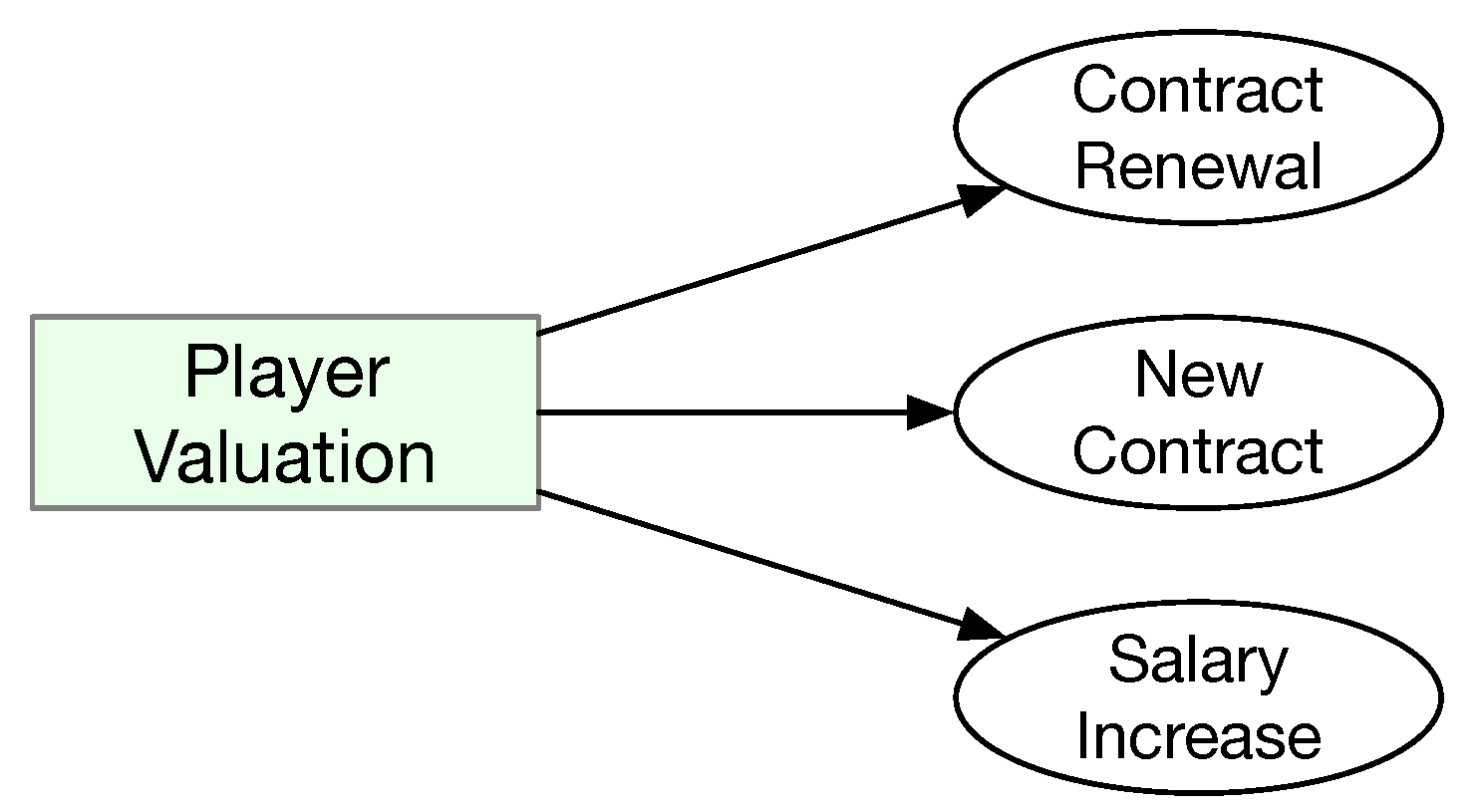

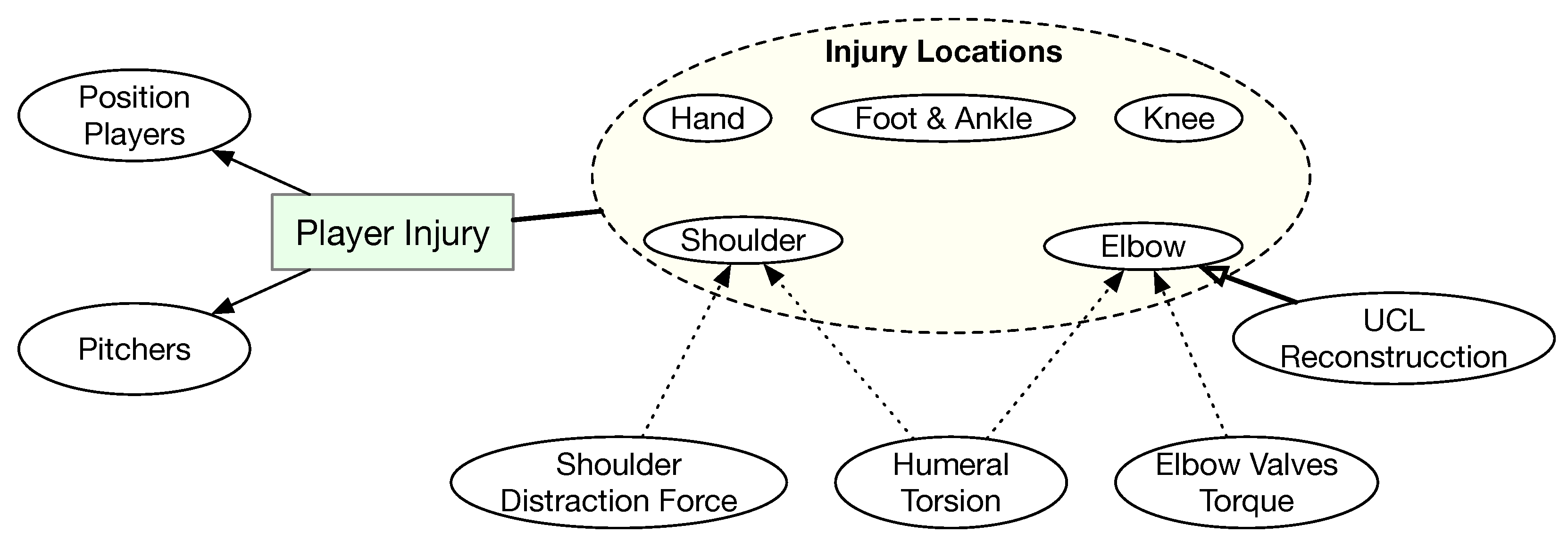

To organize the 34 studies, we propose a taxonomy that consists of the following five categories: (1) prediction of individual game play; (2) determination of player performance (in terms of improved sabermetrics); (3) estimation of player valuation (in terms of salary or contract); (4) prediction of player future injuries; and (5) projection of future game outcomes.

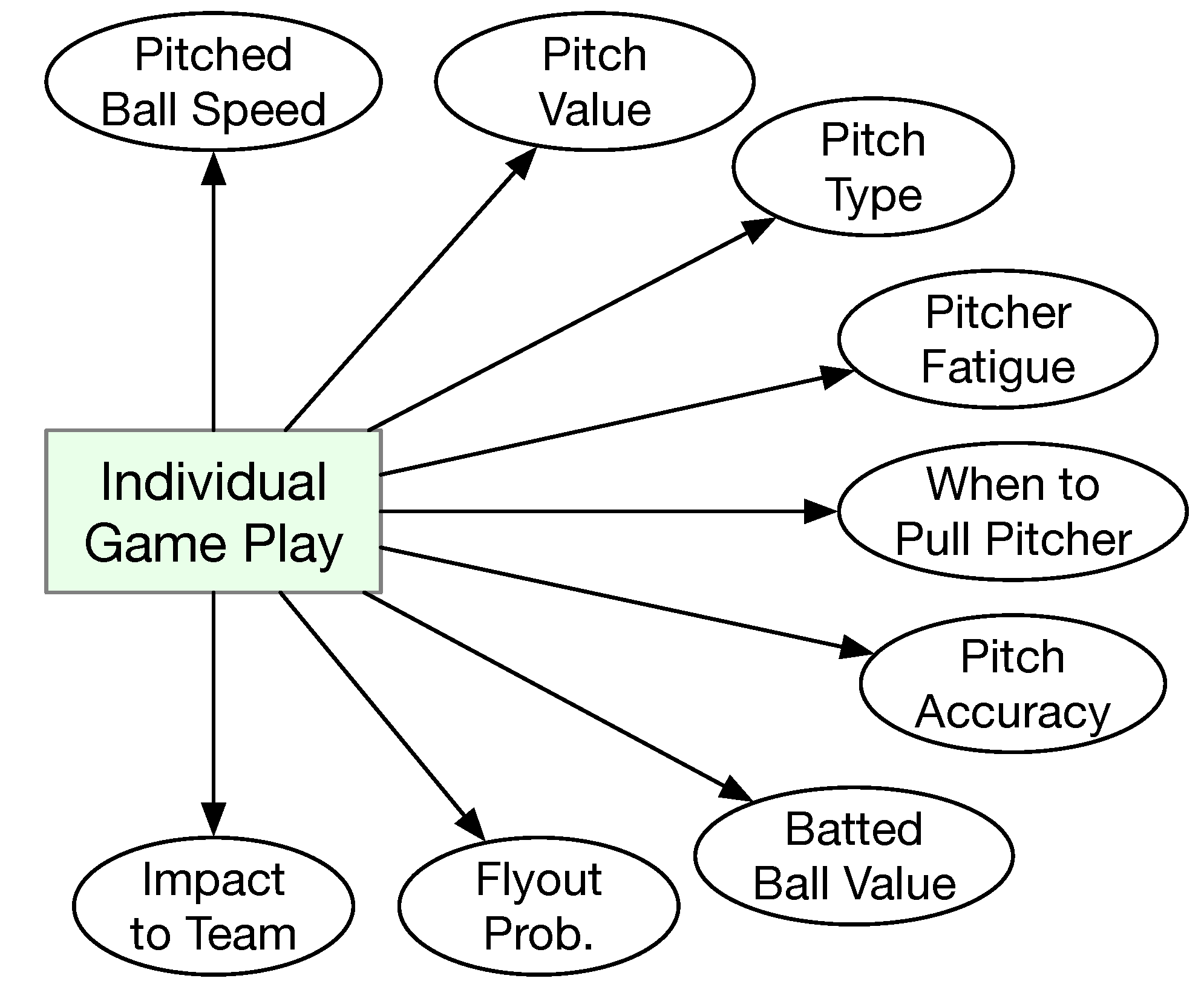

3.1. Individual Game Play

Various aspects of individual game play have been studied with machine learning. The types of individual game play are illustrated in Figure 3. These include predictions and evaluations such as pitch speed, type, accuracy in the strike zone, fatigue, and overall impact on the team. The individual studies are summarized in Table 1. This category has attracted 15 studies.

In Ref. [26], machine learning is used to predict whether the next pitch will be a fastball, which is formulated as a binary classification problem. Being able to correctly predict the next pitch could give the batter a significant advantage by allowing the batter to choose the right strategy at the plate for making a hit. The features used for making the prediction are described in detail. Furthermore, the context of the prediction is considered in terms of the pitcher and the game play situation referred to as the count (i.e., the number of balls and strikes that have been thrown, for a total of 12 scenarios). A total of 18 features (i.e., statistical metrics) are extracted from the data repository, and they are transformed into 59 features in 6 groups. The data are taken from the 2008 (used for training), 2009 (used for testing), 2010 (used for training), 2011 (used for training), and 2012 (used for testing) seasons in MLB. All pitchers who threw 750 or more pitches are considered. The accuracy of the prediction ranges between 76.27% and 77.97%.
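To make the notion of the count concrete, the following minimal sketch enumerates the 12 possible counts (0 to 3 balls combined with 0 to 2 strikes) and fits a naive per-count baseline that predicts a fastball whenever the pitcher has thrown fastballs at least half of the time in that count. This baseline is only an illustration and is not the method of Ref. [26]; the function name `fit_count_baseline` and the tiny `history` list are hypothetical.

```python
from collections import defaultdict
from itertools import product

# A count is (balls, strikes) before the pitch: 0-3 balls and 0-2 strikes.
COUNTS = list(product(range(4), range(3)))  # 12 scenarios


def fit_count_baseline(pitches):
    """Return {count: predict_fastball} from (balls, strikes, is_fastball) rows.

    Hypothetical baseline: predict a fastball in a count if at least half of
    the pitcher's past pitches in that count were fastballs.
    """
    totals = defaultdict(lambda: [0, 0])  # count -> [fastballs, pitches]
    for balls, strikes, is_fastball in pitches:
        totals[(balls, strikes)][0] += int(is_fastball)
        totals[(balls, strikes)][1] += 1
    return {count: fb / n >= 0.5 for count, (fb, n) in totals.items()}


# Made-up pitch history for a single pitcher (illustrative only).
history = [
    (0, 0, True), (0, 0, True), (0, 0, False),
    (0, 2, False), (0, 2, False),
    (3, 0, True),
]
model = fit_count_baseline(history)
```

A learned classifier is only useful to the extent that it beats this kind of simple per-pitcher, per-count baseline.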

In Ref. [27], the same problem as that of Ref. [26] (i.e., whether or not the next pitch is a fastball) is addressed with a different machine learning method. The data used consist of about 85,000 observations, which are pitch-by-pitch statistics for all the pitchers of four teams (Cincinnati Reds, New York Yankees, New York Mets, and Toronto Blue Jays) in two seasons (2016 and 2017). The 2016 season data are used as training data, and the 2017 season data are used to test the model performance. The paper reported only the overall accuracy of the prediction, which is 71.36%. Although Ref. [27] was published four years later, its authors were not aware of the study in Ref. [26].

The next pitch prediction problem is studied again three years later in Ref. [28] with yet another machine learning model. Eight features are used for prediction, including the previous pitch type, the location of the previous pitch, the number of pitches thrown in the game by the pitcher, the current inning in the game, the number of runners currently on base, the current score difference, the number of balls and strikes so far, and the current number of outs. The data used are taken from 201 pitchers in MLB in the seasons of 2015, 2016, 2017, 2018, and 2021. The average accuracy is 76.7%.

Pitch prediction is studied in Ref. [29] from a totally different perspective. Instead of using game-related data and player preference/performance statistics, the study focused on determining the pitch type based on player movement data acquired via two inertial measurement units (IMUs) [39]. The motion data collected in this study, in conjunction with the pitch type prediction outcomes, could enhance pitcher training. From the pitcher’s perspective, it is advantageous to keep the same form of motion when throwing different types of pitches because this would prevent the batter from anticipating a specific type of pitch. On the other hand, different types of pitches inevitably require somewhat distinctive motions, which is the foundation for making predictions based on motion data. Furthermore, having the right pitching mechanics is also instrumental in minimizing injuries. Due to the nature of the study, the authors acquired data via a human subject trial with 19 elite youth academic pitchers in Europe. Each pitcher wore two IMUs, one on the front (in the chest area) and the other on the back (in the lower spine area). The prediction performance is evaluated in terms of a set of metrics, including accuracy, sensitivity, precision, and F1 score. Both binary and multiclass classification are carried out. The average accuracy for binary classification is 71.0%. This study addressed the class imbalance issue with under- or over-sampling.
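The evaluation metrics and the over-sampling idea mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not the pipeline of Ref. [29]: the helper names `binary_metrics` and `random_oversample` are hypothetical, labels are assumed to be 0/1, and the toy labels are made up.

```python
import random


def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity (recall), precision, and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + sensitivity
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": sensitivity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / denom if denom else 0.0,
    }


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority-class samples until classes balance."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())
    out_samples, out_labels = [], []
    for label, items in by_class.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        out_samples.extend(items + extra)
        out_labels.extend([label] * target)
    return out_samples, out_labels


# Toy example: 3 positive and 5 negative pitches (made-up labels).
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
metrics = binary_metrics(y_true, y_pred)
balanced_x, balanced_y = random_oversample(list(range(8)), y_true)
```

Resampling should be applied to the training split only, so that the test set keeps the class distribution the model will actually face.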

Pitch type prediction is also studied in Ref. [30] from yet another perspective. The objective of the study is to determine the pitch type correctly and correlate the impact of pitch types to batter performance. The training data are obtained from the Nippon Professional Baseball (NPB) 2017 and 2018 seasons, and the pitch types are labeled by experts. The classification accuracy is reported graphically. Due to the two-step classification approach, the mean accuracy for pitch type prediction is higher than 95%.

In Ref. [32], the velocity of the pitched fastball is predicted using machine learning based on pitcher kinematics data. Similar to Refs. [29,33], the data are acquired from a human subject trial, in this case with pitchers at the high school and college levels. The primary research objective of the study is to establish a relationship between biomechanical variables and the velocity of the pitch. Given this goal, machine learning-based regression (instead of classification) is used to predict pitch velocity. The study focused on only a single type of pitch, the fastball, which is the most popular type. Motion data are acquired using a 12-camera motion analysis system, as well as three multicomponent force plates. The performance of the prediction is reported in terms of the root mean square error (RMSE). The best machine learning model produces a rather small RMSE of less than 0.001. Several kinematic variables that are significant with respect to the pitch velocity have been identified.
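For reference, the RMSE used to evaluate such a regression model can be computed as in the minimal sketch below. The function name `rmse` and the velocity values are illustrative assumptions and are not taken from Ref. [32].

```python
import math


def rmse(actual, predicted):
    """Root mean square error between two equally long sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Made-up pitch velocities (same unit for both lists).
actual_velocity = [80.0, 85.0, 90.0]
predicted_velocity = [81.0, 85.0, 88.0]
error = rmse(actual_velocity, predicted_velocity)
```

Because RMSE is expressed in the units of the target variable, a reported value can only be interpreted once it is known whether and how the velocity was scaled or normalized.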

In Ref. [33], the association between pitching kinematics and the pitch outcome, in terms of whether or not the ball hits the strike zone (instead of the conventional pitch type), is studied. Similar to the study in Ref. [29], the data are collected via a human subject trial, here with professional-level baseball pitchers. The pitching kinematics are captured via an 8-camera motion analysis system. Each pitcher wears 42 reflective markers placed at specific locations on the body. The participating pitchers are required to pitch towards the center of the strike zone. Because no one has perfect control over the pitch location, it is inevitable that the pitched ball would sometimes go outside the strike zone, which is called a ball. In this study, machine learning is used to predict the pitched ball location (within the strike zone or outside) based on the measured pitcher body kinematics. The prediction accuracy is 70.0%.

In Ref. [19], the intrinsic value of an individual pitch is investigated. The value of a pitch is determined based on a set of five variables that are captured by the PITCHf/x system or derived from its data, including one variable for the ball speed, two variables for the pitch location relative to the strike zone, and two other variables that capture the effect of ball spin on the trajectory of the pitched ball, expressed as the displacement of the ball. The value of the pitch is measured in terms of how successfully the batter performs against this pitch. The value is expressed in the unit of a modern sabermetric called weighted on-base average (wOBA). The study then calculated the aggregated pitch values for each pitcher as a more accurate intrinsic measure of performance. The performance of the proposed regression method is evaluated in terms of its predictive power for pitchers in MLB in the 2015 season based on the 2014 season data.

In Ref. [18], a machine learning method is proposed to determine the intrinsic value of individual batted balls based on the HITf/x data. A simple three-dimensional feature vector is used to estimate the intrinsic value of the batted ball. The three features are the initial speed of the batted ball, the vertical launch angle, and the horizontal spray angle. The intrinsic value of the batted ball is also expressed in terms of wOBA. The proposed method is validated using Cronbach’s alpha, which shows that statistics derived from the proposed intrinsic values for batted balls have a higher reliability than existing sabermetrics based on the MLB 2014 season data.
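Cronbach’s alpha, the reliability measure mentioned above, follows the standard formula $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i \sigma_i^2}{\sigma_T^2}\right)$, where $k$ is the number of items, $\sigma_i^2$ is the variance of item $i$, and $\sigma_T^2$ is the variance of the per-subject totals. The sketch below is a generic implementation of this formula. How Ref. [18] forms the items (for example, splitting each player’s batted balls into subsets) is not specified here, so the layout of `items` and the toy numbers are assumptions.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    items[i][j] is the score of subject j (e.g., a player) on item i
    (e.g., one subset of that player's observations). Sample variances
    are used throughout.
    """
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    item_variance_sum = sum(variance(item) for item in items)
    totals = [sum(scores) for scores in zip(*items)]  # per-subject totals
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Toy data: 3 items measured on 4 subjects (made-up numbers).
toy_items = [
    [1, 2, 3, 4],
    [2, 3, 4, 5],
    [1, 3, 3, 5],
]
alpha = cronbach_alpha(toy_items)
```

Values closer to 1 indicate that the items vary together across subjects, which is the sense in which a statistic is said to be more reliable.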

In Ref. [31], the intrinsic value of an individual pitch is also studied. The basic idea is rather similar to that of Ref. [19], where the value of a pitch is determined by the following three components: (1) the context of the pitch in terms of the count; (2) the pitch descriptor (i.e., the characteristics of the pitcher and the batter, and the characteristics of the pitched ball); and (3) the outcome of the pitch. The treatment of the three components in Ref. [31] is slightly different from that in Ref. [19]. The handedness of the pitcher and the batter is considered as part of the pitch descriptor in Ref. [31], while such information is considered as part of the context in Ref. [19]. The two approaches are summarized in Table 2. The study used the PITCHf/x data from the MLB 2013, 2014, and 2015 seasons.

In Ref. [34], the focus is also on individual play actions, here pertaining to both batters and fielders, regarding what types of fly balls are harder to catch in terms of the probability of a flyout. Although there are abundant data in baseball, they are not enough for some specific problems such as the one investigated in this study. To resolve this issue, a machine learning model is trained using actual game data, and then the trained model is used to generate as much synthetic data as needed to answer the research questions, as shown in Figure 4. The findings confirmed the following hypotheses of the authors: (1) the motion of fly balls hit to the pull side differs from that of fly balls hit to the opposite side due to aerodynamic effects, and (2) if the first hypothesis holds, then the probability of the outcome of a batted ball (flyout or base hit) would be different for balls batted to the pull side and to the opposite side.

In Ref. [35], the pitching decision is modeled as a Markov decision process where the pitcher’s decision is assumed to depend only on the current count. The main hypothesis of this study is that a batter could exploit knowledge of the pitcher’s decision for better outcomes. Simulated games are used to validate the hypothesis. Furthermore, reinforcement learning is applied to the data from the MLB 2010 season, and the batting performance is tested using the data from the MLB 2009 season. A spatial component is introduced in the model, and it is essential for calculating how a batter could exploit knowledge of the anticipated pitch. The study showed that normal batters would see improved performance if knowledge of the next pitch can be gained with reinforcement learning, while elite batters would not see any improvement.

In Ref. [36], the issue of pitcher fatigue detection is studied. The fatigue detection is based on the following three factors: (1) elbow valgus angle, (2) trunk flexion angle, and (3) time between pitches. A machine learning model is used to predict the fatigue point. The data used for the study are obtained from the games played by a particular pitcher of the Futon Titans baseball team during the 2018 season in the Chinese Professional Baseball League (CPBL in Taiwan).

In Ref. [37], the authors attempted to tackle a very challenging in-game decision-making problem, i.e., when to pull the starting pitcher. The problem is formulated as a regression problem, and the decision of whether or not to pull the starting pitcher is translated into an observable event as follows: if the pitcher is not pulled in the current inning, the pitcher would give up at least one run in the next inning. Due to the complexity of the problem, traditional evaluation metrics are redefined. Data from the MLB 2006–2010 seasons are used for the study. The study obtained the data from a website that is no longer available (but the data are now available in many repositories).

In Ref. [38], a method of evaluating the impact of a player is proposed. The method is based on a transformer-based large language model [40]. It aims to describe the impact of a player on the game using a small set of game activities, which would demonstrate how a player performs instead of what the player’s statistics are. A key idea is to first define the state of the game and then determine the state change as the result of the play made by a particular player. The game state is defined by the following four variables: (1) pitch count; (2) base occupancy; (3) number of outs; and (4) the game score. To measure the impact of a player, a sequence of activities, which is referred to as a window, is considered. The window of activities consists of two views. This study used the pitch-by-pitch data from the MLB 2015–2018 seasons, and the season-by-season data of the MLB 1995–2018 seasons. The output of the proposed model is shown visually without any quantitative analysis.

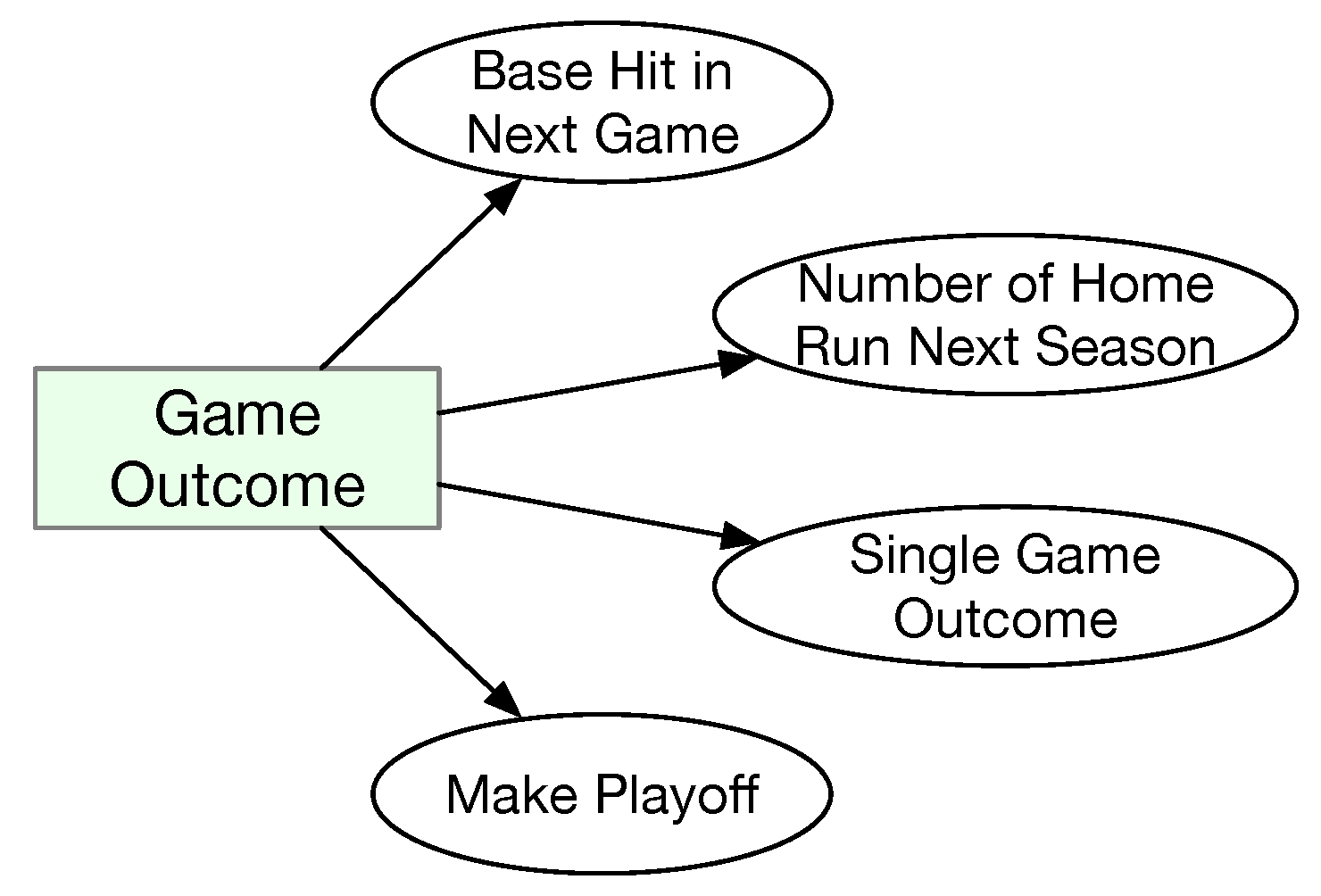

3.5. Game Outcome Prediction

In the world of sports, betting is an important component. Sports analytics has been heavily used to improve the betting odds [54]. There are a few studies that focused on using machine learning to make predictions related to baseball betting. These studies attempted to project the likelihood of a batter making a hit, which team would win a particular game, which teams would make the playoffs, or even which team would win the championship in MLB. This type of prediction is referred to as projection. Various projection algorithms have been proposed. The projection results are published online. However, the exact algorithms are usually not publicly available. In Ref. [54], several such algorithms are mentioned, including Marcel, PECOTA (short for Player Empirical Comparison and Optimization Test Algorithm), Steamer, and ZiPS. Seven studies are included in this category, and they have focused on various aspects of the game outcomes, as shown in Figure 8 and Table 6.

In Ref. [54], several machine learning models are used to predict which batter would most likely make a base hit in a game. The challenge is to make the right choice in picking batters that could make a base hit on consecutive days. MLB offers an online game called “Beat the Streak” for MLB fans to make the predictions, offering cash prizes for those who could make the right picks 5 times in a row or more. To make the prediction, data from four MLB seasons (2015–2018) are retrieved from three sources (Baseball Reference, Baseball Savant, and ESPN). A total of 155,521 samples are obtained. The prediction performance is evaluated with several metrics. The most prominent ones are the precision for the 100 and 250 batters with the highest probability of making a base hit as predicted by the machine learning models. For the top 100, the highest precision obtained is 85%.
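The top-100 and top-250 precision metrics described above are instances of precision at k. A minimal sketch follows, assuming each sample has a predicted hit probability and a binary outcome; the function name `precision_at_k` and the toy numbers are hypothetical.

```python
def precision_at_k(probabilities, outcomes, k):
    """Fraction of actual hits among the k samples with the highest
    predicted probability of a base hit."""
    if not 0 < k <= len(probabilities):
        raise ValueError("k must be between 1 and the number of samples")
    ranked = sorted(zip(probabilities, outcomes),
                    key=lambda pair: pair[0], reverse=True)
    top_k = ranked[:k]
    return sum(outcome for _, outcome in top_k) / k


# Made-up predictions: 1 means the batter got a base hit in that game.
predicted = [0.9, 0.8, 0.7, 0.6, 0.5]
got_hit = [1, 1, 0, 1, 0]
p_at_3 = precision_at_k(predicted, got_hit, 3)
```

This metric suits the streak setting because only the highest-confidence picks are ever acted on, so errors among low-probability samples do not matter.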

In Ref. [55], the number of home runs that a batter will hit in the next season is predicted based on historical data using a deep learning model. Batter data from the MLB 1961–2019 seasons are retrieved for the study, where the data from the 2018 and 2019 seasons are used for testing, and data from the previous years are used for training the model. Because it is unreasonable to expect a highly accurate prediction of the exact number of home runs for a batter in a future season, the authors introduced a series of ranges for the predicted number, centered around the ground truth. The accuracy is defined as the fraction of predictions that fall within each range, and it can exceed 80% for one of these ranges.
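The range-based accuracy described above can be sketched as the fraction of predictions that land within a tolerance of the true value. The function name `within_range_accuracy`, the tolerance values, and the home run numbers below are illustrative assumptions and are not the ranges or data used in Ref. [55].

```python
def within_range_accuracy(actual, predicted, tolerance):
    """Fraction of predictions within +/- tolerance of the ground truth."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(1 for a, p in zip(actual, predicted) if abs(a - p) <= tolerance)
    return hits / len(actual)


# Made-up home run totals for four batters.
actual_hr = [30, 12, 45, 20]
predicted_hr = [27, 20, 44, 31]

# Hypothetical tolerances; wider ranges can only keep or raise the accuracy.
accuracy_by_tolerance = {
    tol: within_range_accuracy(actual_hr, predicted_hr, tol)
    for tol in (5, 10)
}
```

Since the accuracy is non-decreasing in the tolerance, a reported figure is meaningful only together with the width of the range it refers to.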

In Ref. [56], the single-game outcomes of the 2019 regular season games are predicted using three machine learning models. Although the stated prediction accuracy is significantly higher than that of other similar studies (94.18% vs. 73.7% or lower), we have serious concerns over the soundness of the study. The study claimed that there are 4858 games in the 2019 season (two teams played 161 games instead of 162). In fact, the actual number of games is half that (i.e., 2429), because each game involves two teams. The study mentioned a number of times that the outcome of some games is a win and that of others is a loss. In fact, every game has a single outcome: one team wins and the other team loses. Hence, it is quite likely that the dataset contains duplicate games. Compounding the issue is the fact that the same dataset is used for both training the model parameters and testing. Normally, the testing dataset should not be used to train the model parameters.
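The arithmetic behind this concern, together with one way to collapse per-team records into unique games, can be sketched as follows. The row layout `(date, game_number, team, opponent, team_won)` and the function name `unique_games` are assumptions made for illustration; the actual dataset format of Ref. [56] is not known.

```python
TEAMS = 30               # number of MLB teams
SCHEDULED_GAMES = 162    # regular season games per team

# Two teams played 161 games, so there are two fewer team-level records.
team_level_records = TEAMS * SCHEDULED_GAMES - 2   # 4858
actual_games = team_level_records // 2             # each game has two teams


def unique_games(rows):
    """Keep one record per game from per-team rows.

    Hypothetical row layout: (date, game_number, team, opponent, team_won).
    The key ignores which of the two teams the row is written for.
    """
    seen = {}
    for row in rows:
        date, game_number, team, opponent, _ = row
        key = (date, game_number, frozenset((team, opponent)))
        seen.setdefault(key, row)  # keep the first perspective encountered
    return list(seen.values())


# Made-up rows: the first two describe the same game from both sides.
rows = [
    ("2019-04-01", 1, "NYY", "BOS", True),
    ("2019-04-01", 1, "BOS", "NYY", False),
    ("2019-04-02", 1, "NYM", "CIN", False),
]
games = unique_games(rows)
```

If both perspectives of a game are kept, any train/test split should at least be made at the game level, so that the two mirrored rows never end up on opposite sides of the split.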

A follow-up study of Ref. [56] is reported in Ref. [57]. The game outcome prediction accuracy reported in Ref. [57] is much more realistic (around 65%). The prediction of single-game outcomes is structured on a per-team basis, and there is no clear issue of double-counting games as in Ref. [56]. The data used also expanded from a single 2019 MLB season to all games in the 2015–2019 MLB seasons.

In Ref. [58], the outcomes of games in KBO (2012–2021 seasons) are predicted with a deep learning model based on player statistics for the home team and the away team, as well as team features (in terms of the winning percentage). The proposed deep learning model is also compared with three baseline machine learning models. In addition to binary classification, the study also experimented with prediction based on regression. The prediction accuracy of the outcomes is around 60%, which is significantly below that of Ref. [56] but much more realistic.

Another study on KBO game outcome prediction is reported in Ref. [59]. A deep learning model is used to make predictions based on the team, stadium, and player features. The paper is rather short, with few details provided on the features used, how the model is trained, and how testing is performed. Nevertheless, the reported prediction accuracy can exceed 70%.

In Ref. [60], machine learning models are used to predict which teams could make the playoffs in MLB. Records from the MLB seasons (1998–2018) are used to train the models, and the 2019 season is used to test the performance of the models. Team-based batting and pitching data are used as the features. The probability of each team making the playoffs is predicted based on the features. The overall average accuracy is about 83%, but only six out of the ten playoff teams are correctly predicted. The discrepancy arises because far more teams miss the playoffs than make them, so correct predictions for non-playoff teams dominate the accuracy.
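The gap between overall accuracy and the number of correctly identified playoff teams can be illustrated with a small confusion-matrix calculation. The counts below are hypothetical: they are chosen only to be consistent with 30 teams, ten playoff teams, and six correct playoff picks, and are not reported in Ref. [60]. The function name `summarize` is likewise an assumption.

```python
def summarize(tp, fp, fn, tn):
    """Accuracy, recall, and precision from confusion-matrix counts."""
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }


# Hypothetical split of 30 teams: 6 playoff teams found, 4 missed,
# 1 non-playoff team wrongly picked, 19 non-playoff teams correct.
hypothetical = summarize(tp=6, fp=1, fn=4, tn=19)

# Trivial baseline that predicts "no playoffs" for every team.
always_no = summarize(tp=0, fp=0, fn=10, tn=20)
```

Under these assumed counts, the accuracy is about 83% while the recall on playoff teams is only 60%, and even the trivial baseline reaches about 67% accuracy, which is why recall or precision on the playoff class is the more informative measure here.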