Abstract

Large language models (LLMs) have revolutionized natural language processing across diverse domains, yet they also raise critical fairness and ethical concerns, particularly regarding gender bias. In this study, we conduct a systematic, mathematically grounded investigation of gender bias in four leading LLMs—GPT-4o, Gemini 1.5 Pro, Sonnet 3.5, and LLaMA 3.1:8b—by evaluating the gender distributions produced when generating “perfect personas” for a wide range of occupational roles spanning healthcare, engineering, and professional services. Leveraging standardized prompts, controlled experimental settings, and repeated trials, our methodology quantifies bias against an ideal uniform distribution using rigorous statistical measures and information-theoretic metrics. Our results reveal marked discrepancies: GPT-4o exhibits pronounced occupational gender segregation, disproportionately linking healthcare roles to female identities while assigning male labels to engineering and physically demanding positions. In contrast, Gemini 1.5 Pro, Sonnet 3.5, and LLaMA 3.1:8b predominantly favor female assignments, albeit with less job-specific precision. These findings demonstrate how architectural decisions, training data composition, and token embedding strategies critically influence gender representation. The study underscores the urgent need for inclusive datasets, advanced bias-mitigation techniques, and continuous model audits to develop AI systems that are not only free from stereotype perpetuation but actively promote equitable and representative information processing.

1. Introduction

Large language models (LLMs) have revolutionized natural language processing (NLP) by leveraging architectures based on multi-head self-attention and training on massive, heterogeneous datasets [1,2,3]. These models have become integral to applications in healthcare, finance, education, and entertainment, among other domains. However, their ubiquitous deployment has also amplified concerns regarding ethical issues and representational biases in their outputs [4,5,6,7,8,9,10]. In particular, gender bias is of critical concern due to its capacity to reinforce societal stereotypes and perpetuate systemic inequalities.

From an information-theoretic perspective, the pre-training of LLMs can be viewed as an optimization problem wherein the goal is to maximize the mutual information between the input data and the model’s internal representations while contending with capacity constraints. When training datasets exhibit imbalances or skewed distributions, these biases are inherently embedded into the learned representations. A useful quantitative measure in this context is the Shannon entropy [11]. Given a probability distribution $P(g)$ over gender categories $g \in G$, the entropy is defined as

$$H(P) = -\sum_{g \in G} P(g) \log P(g),$$

which quantifies the uncertainty or diversity in the representation of gender. In an ideal, unbiased scenario, one would expect a uniform distribution $P(g) = 1/|G|$ for all g. Deviations from this ideal can be rigorously assessed using divergence measures, thereby offering a mathematical foundation for bias quantification.
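As a rough illustration of the entropy measure described above, the following Python sketch computes the Shannon entropy (in bits) of a distribution over three gender categories. The function name and the skewed example distribution are hypothetical and are not taken from the study's data.

```python
import math

def shannon_entropy(probs, base=2.0):
    """Shannon entropy of a discrete distribution.

    Zero-probability categories contribute nothing to the sum.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# Uniform reference over three categories (Male, Female, Others).
uniform = [1 / 3, 1 / 3, 1 / 3]
# Hypothetical skewed distribution, for illustration only.
skewed = [0.80, 0.19, 0.01]

h_uniform = shannon_entropy(uniform)  # equals log2(3), the maximum for 3 categories
h_skewed = shannon_entropy(skewed)    # strictly smaller than h_uniform
```

The uniform distribution attains the maximum entropy for a given number of categories, so any skew in the assignments shows up as a drop below that ceiling.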

Building on established bias detection frameworks such as the Bias Benchmark for QA (BBQ) [12], HolisticBias [13], and ToxiGen [14], our study focuses on the phenomenon of gender bias as it manifests through skewed or stereotypical gender attributions in LLM outputs. While these frameworks have elucidated multiple dimensions of bias, they leave open questions regarding the consistency and nuances of gender bias across different LLM architectures. In this work, we extend these investigations by incorporating information-theoretic insights to evaluate how far the empirical gender distributions deviate from an expected uniform distribution.

To this end, we perform a comprehensive examination of gender bias across four state-of-the-art LLMs: GPT-4o, Gemini 1.5 Pro, Sonnet 3.5, and LLaMA 3.1:8b. Our methodology introduces a novel paradigm wherein each model is prompted to generate fictional “perfect personas” corresponding to various professional roles in sectors such as healthcare, information technology, and professional services. By systematically analyzing the gender assignments produced in repeated persona-generation tasks, we derive empirical distributions and compare these against the ideal uniform distribution $P_U(g) = 1/|G|$. This comparison is further enriched by employing divergence metrics (e.g., Kullback–Leibler divergence) to quantify the extent of bias.

It is important to note that the “perfect persona” framework, while providing a standardized and reproducible heuristic for evaluating gender bias, abstracts away the rich sociocultural complexities inherent in gender roles. Gender identity is multidimensional and influenced by factors such as culture, age, socioeconomic status, and historical context. By focusing on occupationally defined personas, our methodology emphasizes experimental control and repeatability at the expense of capturing the full variability of real-world gender expressions. Future research should aim to integrate more nuanced, context-dependent representations that reflect the dynamic nature of gender.

Our principal objective is to determine whether the LLMs under investigation exhibit discernible patterns of gender bias and to assess whether these patterns are consistent across different architectures or are model-specific. To ensure the robustness of our findings, our experimental design incorporates standardized prompts, uniform testing environments, and multiple repeated trials. This rigorous approach enables a detailed comparison of the empirically observed gender distributions against the theoretical uniform benchmark, thus revealing the extent to which these models internalize and propagate gender-related constructs.

By elucidating the information-theoretic underpinnings and empirical manifestations of gender bias in contemporary LLMs, this work contributes to the broader discourse on ethical AI and responsible information processing. Our findings underscore the importance of constructing inclusive datasets, implementing advanced bias mitigation strategies, and conducting continuous model audits. The implications of this study are significant for developers, researchers, and policymakers alike, highlighting the imperative to design AI systems that accurately reflect and respect the diversity of human identities.

Unlike prior work that either focuses on single models or uses purely empirical counts, our study integrates Shannon entropy and KL divergence into the evaluation of four cutting-edge LLMs (GPT-4o, Gemini 1.5 Pro, Sonnet 3.5, and LLaMA 3.1:8b). To our knowledge, this is the first systematic information-theoretic comparison of these latest architectures under a unified “perfect persona” paradigm, thereby filling a critical gap in the bias-assessment literature.

The remainder of this paper is structured as follows: In Section 2, we review relevant prior work. Section 3 details our proposed methodology, while Section 4 presents and interprets the experimental findings. Finally, Section 5 concludes the paper by summarizing the key insights and outlining potential avenues for future research.

2. Related Work

Implicit biases have been documented in numerous LLMs [15]. Such biases manifest not only as ageist stereotypes [16], religious prejudice [17], and political ideologies [18], but also as significant concerns related to gender bias [19,20,21,22]. Recognizing the pervasive nature of these biases, prior studies have focused on developing quantitative metrics to assess the degree to which LLMs align with socially entrenched stereotypes.

For instance, the Large Language Model Bias Index (LLMBI) [17] is defined as

where denotes the observed proportion for the ith bias category (e.g., gender, age), represents the expected uniform proportion under an unbiased assumption, is a weighting factor that calibrates the influence of each category based on its social impact, and N is the total number of bias categories. This formulation provides a mathematically grounded framework for comprehensively evaluating bias in LLM outputs.

Additional benchmarks further reinforce the importance of systematic bias detection. The Bias Benchmark for QA (BBQ) [12] offers a structured approach to evaluate bias in question-answering contexts, while HolisticBias [23] targets the identification of intricate, multi-dimensional biases that arise in complex linguistic settings. Moreover, ToxiGen [14] has been designed to capture subtle and adversarial manifestations of hate speech, thus providing a complementary perspective on bias in LLM responses.

Recent work has also explored the use of LLMs themselves as evaluators for latent biases. In such methods, an LLM is employed as a “judge” to assign bias scores to responses. The bias score can be expressed as

$$B = \frac{1}{M} \sum_{j=1}^{M} b_j,$$

where $b_j$ is the bias rating for the jth response provided by the judge LLM and M is the total number of responses evaluated. Despite concerns regarding potential discrepancies between machine and human judgment, empirical studies have demonstrated a strong correlation between the two [24,25], which supports the validity of this approach.

A particularly innovative method in bias detection is adversarial prompting [26]. Adversarial prompts are carefully constructed to induce challenging scenarios for LLMs, thereby revealing latent biases. Mathematically, if R denotes the set of responses generated under adversarial conditions and S represents the subset of responses that align with stereotypical patterns, the adversarial bias ratio is defined as

$$\rho_{\mathrm{adv}} = \frac{|S|}{|R|},$$

where $|\cdot|$ denotes set cardinality. A higher value of $\rho_{\mathrm{adv}}$ indicates a greater prevalence of biased responses under adversarial conditions.
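The ratio described above is simply the share of adversarial responses that match a stereotype. A minimal Python sketch follows; the stereotype lookup table, the response tuples, and the function name are hypothetical stand-ins, since the cited work's actual stereotype criterion is not given here.

```python
def adversarial_bias_ratio(responses, is_stereotypical):
    """Return |S| / |R|, where S is the subset of R flagged as stereotypical."""
    if not responses:
        raise ValueError("the response set R must be non-empty")
    stereotyped = [r for r in responses if is_stereotypical(r)]
    return len(stereotyped) / len(responses)

# Hypothetical stereotype map and (job, assigned gender) responses.
STEREOTYPE = {"nurse": "Female", "engineer": "Male"}
responses = [
    ("nurse", "Female"),
    ("nurse", "Male"),
    ("engineer", "Male"),
    ("engineer", "Female"),
]

ratio = adversarial_bias_ratio(
    responses, lambda r: STEREOTYPE.get(r[0]) == r[1]
)
```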

Collectively, these contributions underscore not only the necessity of investigating bias in LLMs but also the importance of grounding such studies in rigorous mathematical frameworks. By integrating these quantitative methods, researchers have significantly enhanced the credibility of bias assessment in LLMs. This body of work validates the critical need for further investigation into bias mitigation strategies and reinforces the importance of developing ethical AI systems that better reflect the diversity of human identities.

3. The Proposed Method

The present study aims to systematically investigate potential gender biases in state-of-the-art LLMs by prompting them to generate personae for various jobs spanning multiple sectors. The methodology is designed to:

- ensure robust experimental control,

- account for random variation in persona generation, and

- apply rigorous quantitative and qualitative analyses.

The following subsections provide an in-depth description of the experimental design, data collection process, operational definitions, and statistical analysis procedures, each substantiated with rigorous mathematical justification.

3.1. Large Language Models

LLMs have achieved remarkable progress in natural language processing (NLP) in recent years. A major breakthrough in the field of LLMs is the implementation of the transformer architecture and its underlying attention mechanism, which have enhanced the models’ capability to manage long-range dependencies in natural-language texts [27,28]. In the transformer, the self-attention mechanism is mathematically defined as:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V,$$

where Q, K, and V denote the query, key, and value matrices, respectively, and $d_k$ is the dimensionality of the keys. This formulation allows the model to assign different weights to different parts of the input sequence, thereby capturing both local and global dependencies.
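To make the scaled dot-product attention described above concrete, here is a small pure-Python sketch for a single head. It is a didactic illustration with toy matrices chosen by hand, not the implementation used by any of the evaluated models.

```python
import math

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def transpose(matrix):
    return [list(col) for col in zip(*matrix)]

def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for a single attention head."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Toy inputs: two positions, key dimension 2 (illustrative values only).
q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
output, weights = attention(q, k, v)
```

Each row of `weights` sums to one, so every output position is a convex combination of the value vectors, weighted by query-key similarity.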

Another significant advancement is the use of pre-training, where a model is first trained on an extensive dataset and subsequently fine-tuned for a specific task. Let $\theta$ denote the set of model parameters, and let $\mathcal{L}(\theta; \mathcal{D})$ represent the loss function (e.g., cross-entropy loss) computed over a dataset $\mathcal{D}$. The pre-training process involves solving:

$$\theta^{*} = \arg\min_{\theta} \mathcal{L}(\theta; \mathcal{D}).$$

However, the inherent complexity of these models often results in a lack of interpretability, making it challenging to trace the causal pathways behind their predictions.

Beyond the architectural and data-centric factors explicitly considered in this study, other variables—such as model size (denoted by S), training duration (denoted by T), and hardware configurations—are likely to influence the observed patterns of gender bias. Due to the proprietary nature of the evaluated models, detailed training logs or infrastructure information remain inaccessible. In our analysis, we adopt a behavioral approach where the bias is inferred from the outputs rather than the internal training dynamics. Future work might use open-source models to systematically vary parameters like the number of training epochs E or numerical precision in hardware computations to examine their subtle effects on bias.

3.1.1. OpenAI’s GPT-4o

GPT-4o, a member of the GPT family, serves as a primary text generation model in this study [29]. In general, such models are trained on large corpora using supervised learning followed by reinforcement learning with human feedback (RLHF) to optimize performance. Formally, given an input x, the model generates a sequence $y = (y_1, y_2, \ldots, y_n)$, where the probability of generating the sequence is given by:

$$P(y \mid x) = \prod_{i=1}^{n} P(y_i \mid y_{<i}, x; \theta).$$

In this product, each term $P(y_i \mid y_{<i}, x; \theta)$ is computed using the softmax function over the model’s vocabulary, and the parameters $\theta$ are updated through RLHF to better align the generated text with human preferences.

The OpenAI API permits users to fine-tune several parameters that modulate the generation process:

- Temperature ($\tau$): A scalar in the range $[0, 2]$ (default: 0.8) that controls randomness in generation. A higher value of $\tau$ increases the entropy of the probability distribution, leading to more creative but less reproducible outputs, while a lower $\tau$ results in more deterministic responses.

- Max tokens (M): This parameter determines the maximum length of the output sequence in tokens. It constrains the sequence generation process such that the total number of generated tokens $n$ satisfies $n \le M$.

- System prompt: A mechanism to condition the behavior of the model by providing an initial context or directive.
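The effect of the temperature parameter listed above can be illustrated with a generic temperature-scaled softmax. This sketch does not call the OpenAI API and is not the provider's sampler; the logits are hypothetical values chosen only to show that a higher temperature yields a flatter, higher-entropy distribution.

```python
import math

def softmax_with_temperature(logits, tau):
    """Softmax over logits divided by a positive temperature tau."""
    if tau <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / tau for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical next-token logits for three candidate tokens.
logits = [2.0, 1.0, 0.1]

low_temp = softmax_with_temperature(logits, 0.2)   # sharply peaked
high_temp = softmax_with_temperature(logits, 1.5)  # flatter
```

Because sampling at higher temperature spreads probability mass across more tokens, repeated runs of the same prompt become less reproducible, which is one reason the study repeats each prompt several times.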

3.1.2. Quantitative Modeling of Bias

To quantify gender bias in persona generation, we mathematically model the output distribution over gender categories. Let the set of gender labels be:

$$G = \{\text{Male}, \text{Female}, \text{Others}\}.$$

For a given job title t and LLM m, assume that the model is prompted N times to generate a persona. Let $n_{t,m}(g)$ denote the number of times the model assigns gender $g \in G$. We define the empirical probability of assigning gender g as:

$$\hat{P}_{t,m}(g) = \frac{n_{t,m}(g)}{N},$$

where N is the total number of trials. In an unbiased scenario, one would expect a uniform distribution across gender categories:

$$P_U(g) = \frac{1}{|G|} = \frac{1}{3} \quad \text{for all } g \in G.$$

The divergence between the empirical distribution $\hat{P}_{t,m}$ and the ideal distribution $P_U$ is quantified using the Kullback–Leibler (KL) divergence:

$$D_{\mathrm{KL}}\!\left(\hat{P}_{t,m} \,\|\, P_U\right) = \sum_{g \in G} \hat{P}_{t,m}(g) \log \frac{\hat{P}_{t,m}(g)}{P_U(g)}.$$

Here, a higher value of $D_{\mathrm{KL}}$ indicates a greater departure from the unbiased (uniform) scenario, thereby serving as a rigorous quantitative measure of gender bias.
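The empirical distribution and its KL divergence from the uniform reference can be computed in a few lines. The sketch below assumes three categories (Male, Female, Others) and uses a hypothetical set of 20 labels; the helper names are illustrative and are not taken from the study's code.

```python
import math
from collections import Counter

GENDERS = ("Male", "Female", "Others")

def empirical_distribution(labels):
    """Relative frequency of each gender category among the parsed labels."""
    counts = Counter(labels)
    n = len(labels)
    return {g: counts.get(g, 0) / n for g in GENDERS}

def kl_from_uniform(p_hat):
    """KL divergence (in bits) from the uniform distribution over GENDERS.

    Categories with zero empirical probability contribute 0 to the sum.
    """
    u = 1.0 / len(GENDERS)
    return sum(p * math.log2(p / u) for p in p_hat.values() if p > 0)

# Hypothetical outcome of 20 runs for one job title and one model.
labels = ["Female"] * 16 + ["Male"] * 4
p_hat = empirical_distribution(labels)
bias = kl_from_uniform(p_hat)
```

For a uniform reference, the divergence equals log|G| minus the entropy of the empirical distribution, so it ranges from 0 (perfectly balanced) to log2(3) bits (all runs assigned to a single category).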

3.1.3. Experimental Control and Random Variation

To ensure robust experimental control, our design incorporates several technical measures:

- Standardized Prompts: Every persona-generation task employs an identical prompt template to eliminate variability arising from prompt design.

- Randomization: Job titles are presented in a randomized order across trials. Let $\pi$ denote a random permutation of the set of job titles, ensuring that the sequence of prompts is uniformly randomized, thereby mitigating any potential order effects.

- Repetition: Each job title is subjected to N independent runs. This repetition permits modeling the inherent stochasticity of the generation process. For instance, if the generation of a particular gender label is treated as a Bernoulli trial with success probability p, then the count $n_{t,m}(g)$ follows a Binomial distribution: $n_{t,m}(g) \sim \mathrm{Binomial}(N, p)$. This statistical model enables us to compute confidence intervals for $\hat{P}_{t,m}(g)$ and perform hypothesis testing regarding the presence of bias.
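Under the binomial model in the last item above, a confidence interval and a simple test against the uniform share of 1/3 can be sketched as follows. The Wilson score interval and the one-sided exact tail probability are assumptions chosen for illustration, since the text does not state which interval or test was used, and the count of 16 out of 20 is hypothetical.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    p = successes / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return center - half, center + half

def binomial_upper_tail(successes, n, p0):
    """Exact P(X >= successes) for X ~ Binomial(n, p0)."""
    return sum(
        math.comb(n, k) * p0 ** k * (1 - p0) ** (n - k)
        for k in range(successes, n + 1)
    )

# Hypothetical: 16 of 20 personas for one job title were labeled "Female".
low, high = wilson_interval(16, 20)
p_value = binomial_upper_tail(16, 20, 1 / 3)  # one-sided test against 1/3
```

The Wilson interval is used here because it behaves better than the plain normal approximation at small sample sizes such as 20 runs.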

By integrating these technical and mathematical formulations, our proposed method is underpinned by rigorous scientific principles. Parameters such as the temperature $\tau$ (which modulates randomness in generation), maximum tokens M (which limits output length), and the empirical probability $\hat{P}_{t,m}(g)$ are explicitly defined to facilitate transparent and reproducible analysis. This framework extends prior work [27,28,29] and provides a strong theoretical basis for evaluating gender bias in LLM outputs.

3.1.4. Anthropic’s Sonnet 3.5

Anthropic’s Sonnet 3.5 is a Transformer-based language model composed of 36 transformer blocks, each incorporating 24 attention heads [30]. It employs a multi-headed self-attention mechanism, layer normalization, and residual connections. The internal representation is of dimensionality $d_{\text{model}}$, and the model utilizes learned positional encodings to balance local and global contextual information. Pre-training is performed using a combination of masked language modeling and next-sentence prediction, which facilitates the capture of fine-grained lexical dependencies as well as overarching discourse structures. With approximately 34.7 billion parameters, the model’s complexity is reflected in the size of its attention projections and feedforward layers. However, such complexity may lead to challenges such as mode collapse during high-temperature sampling (i.e., when $\tau$ is high) and difficulties in zero-shot multi-hop reasoning tasks. Potential architectural improvements, such as incorporating gated linear units (GLUs) or memory-augmented layers, along with adaptive optimization strategies based on low-rank parameterization, are areas for further research.

3.1.5. Google’s Gemini 1.5 Pro

Gemini 1.5 Pro is a recently developed mid-size multimodal language model by Google, designed for scaling across a diverse range of tasks. It offers significantly enhanced performance and includes a standard context window of 128,000 tokens. Gemini 1.5 Pro is built upon a Mixture-of-Experts (MoE) architecture, wherein the model is partitioned into multiple “expert” sub-networks. Mathematically, if the total number of experts is denoted by E and each expert processes a fraction of the input, the effective output y can be modeled as:

$$y = \sum_{i=1}^{E} g_i(x)\, f_i(x),$$

where $f_i(x)$ represents the output from the ith expert given the input x and $g_i(x)$ are learned weighting coefficients. This approach allows for a more efficient scaling of model capacity while maintaining competitive performance on benchmark tasks.
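The weighted combination of expert outputs described above can be sketched generically. The two toy experts and the fixed gate logits below are hypothetical; in a real MoE layer the gate values are produced by a learned router from the input, and nothing here reflects Gemini's undisclosed internals.

```python
import math

def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def moe_output(x, experts, gate_logits):
    """Combine expert outputs as a gate-weighted sum.

    `experts` is a list of callables mapping a vector to a vector of the
    same length; `gate_logits` holds one unnormalized score per expert.
    """
    weights = softmax(gate_logits)
    outputs = [expert(x) for expert in experts]
    dim = len(outputs[0])
    return [
        sum(w * out[d] for w, out in zip(weights, outputs))
        for d in range(dim)
    ]

# Two toy experts (illustrative only): one doubles, one adds one.
experts = [
    lambda x: [2 * v for v in x],
    lambda x: [v + 1 for v in x],
]
y = moe_output([1.0, 2.0], experts, gate_logits=[0.0, 0.0])
```

With equal gate logits both experts receive weight 0.5, so `y` is the element-wise average of the two expert outputs.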

3.1.6. Meta’s Llama 3.1 8b

Meta’s LLaMA 3.1 8B is an autoregressive language model based on a Transformer architecture, featuring 32 layers with 32 attention heads, and totaling approximately 8 billion parameters [31]. The model employs a hybrid positional embedding strategy that combines Rotary Positional Embeddings with Fourier Features to better capture long-range dependencies. Additionally, it incorporates grouped-query attention, which reduces memory usage and improves inference speed. A notable innovation in LLaMA 3.1 is the implementation of an augmented gating mechanism within the residual connections, inspired by Gated Linear Units (GLUs). This mechanism, defined as:

$$\mathrm{GLU}(x) = x \odot \sigma(Wx + b),$$

where x is the input, W is the weight matrix, b is the bias term, $\sigma$ is a non-linear activation function (typically the sigmoid), and ⊙ denotes element-wise multiplication, selectively emphasizes salient syntactic and semantic features. This design choice aids in reducing overfitting and improves both zero-shot and few-shot performance on complex NLP benchmarks.
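One reading of the gating description above is a gate of the form x ⊙ σ(Wx + b), with a square weight matrix so that the gate has the same length as the input. The sketch below implements that reading in pure Python with toy values; it is an illustration of the formula only and makes no claim about Meta's actual implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gated_unit(x, weight, bias):
    """Element-wise gate: x * sigmoid(W x + b), with W square."""
    pre_activation = [
        sum(w * xi for w, xi in zip(row, x)) + b
        for row, b in zip(weight, bias)
    ]
    return [xi * sigmoid(p) for xi, p in zip(x, pre_activation)]

# Toy example: zero weights and biases give a gate of 0.5 everywhere.
x = [2.0, -4.0]
zero_weight = [[0.0, 0.0], [0.0, 0.0]]
zero_bias = [0.0, 0.0]
halved = gated_unit(x, zero_weight, zero_bias)
```

A large positive pre-activation drives the gate toward 1 and passes the input through nearly unchanged, while a large negative one suppresses it.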

Despite the significant advancements offered by these models, each faces unique challenges in terms of computational resource demands and potential susceptibility to bias. While our study examines the outputs of these LLMs under controlled experimental settings, we acknowledge that access to the proprietary training datasets or internal pre-training pipelines remains limited. Consequently, our analysis is confined to a behavioral evaluation of gender bias. Nonetheless, existing literature indicates that factors such as dataset diversity, representation of marginalized groups, and the frequency distributions of gendered language play crucial roles in shaping model behavior. Future investigations could employ open-source models or synthetic datasets with controlled gender distributions to disentangle the impact of these variables on bias dynamics across different architectures.

3.2. Experimental Design

The primary research objective is to determine whether LLMs exhibit systematic gender bias when generating personae for different job titles. Specifically, we investigate four distinct LLMs:

- GPT-4o

- Gemini 1.5 Pro

- Sonnet 3.5

- LLaMA 3.1:8b

Each LLM is prompted to generate a fictional “perfect persona” for a given job title 20 times. The distribution of assigned genders (Male, Female, and Others) is then compared across models and job sectors. Using multiple models allows us to determine whether any observed bias is systematic across architectures or specific to particular LLM designs. Moreover, repeated generation increases the effective sample size, thereby enhancing the statistical power to detect differences in gender distribution. A single run per job title would be insufficient to account for the inherent randomness of the generation process.

Three broad job categories are selected to cover a wide range of professional domains. These are:

- Healthcare (e.g., “nurse”, “radiologist”, “doctor”, “surgeon”, “anesthesiologist”, “pharmacist”, etc.)

- Information Technology (e.g., “python developer”, “machine learning engineer”, “project manager”, “product manager”, “data scientist”, “data analyst”, etc.)

- Professional Services (e.g., “lawyer”, “accountant”, “office assistant”, “secretary”, “police officer”, “detective”, “sheriff”, “deputy”, etc.)

Table 1 lists all the job titles used in this study. Within each category, multiple job titles (typically 40–60 per category) are standardized across all four LLMs. Covering different sectors reduces sector-specific confounds and provides insight into domain-specific biases, while the inclusion of multiple roles within each domain enables us to investigate job-specific patterns of gender bias.

Table 1. A comprehensive list of all positions used in this research work.

A uniform prompt is employed in all experiments to ensure consistency:

Create a perfect persona for a {{job}}. Make sure you will also assign gender to this persona.

Here, {{job}} is replaced by each specific job title from our list. No additional contextual or guiding information is provided, ensuring that any observed gender bias is attributable solely to the model’s intrinsic behavior rather than to variations in prompt design.
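Filling the template for each job title is straightforward. The sketch below uses Python's `str.format` with a single-brace placeholder in place of the `{{job}}` notation; the function name is hypothetical.

```python
PROMPT_TEMPLATE = (
    "Create a perfect persona for a {job}. "
    "Make sure you will also assign gender to this persona."
)

def build_prompt(job_title):
    """Substitute one job title into the fixed prompt template."""
    return PROMPT_TEMPLATE.format(job=job_title.strip())

prompt = build_prompt("nurse")
```

Keeping the template in one constant makes it easy to verify that every model receives exactly the same wording.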

3.3. Data Collection

All LLM queries are executed through standardized API or model interfaces. Each query constitutes one run. For every job title, 20 runs are executed per LLM, yielding a total of $20 \times N$ data points per LLM (and $4 \times 20 \times N$ across the four models), where N is the total number of unique job titles in the study.

A uniform testing environment is maintained to ensure technical consistency (e.g., same LLM version, identical hyperparameters, and temperature settings where feasible). The repetition reduces the effect of random generation artifacts, allowing for the estimation of the distribution (e.g., variance and confidence intervals) of gender assignments.

Each LLM is prompted with a random sequence of job titles within each job category to mitigate order effects. Let $\pi$ be the random permutation of job titles for each experimental round. This randomization ensures that the evolving session memory of the model does not systematically bias the results.
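A randomized query schedule of this kind can be generated as in the following sketch, which reshuffles the job titles independently for each round. The fixed seed is an assumption added for reproducibility (the text does not mention one), and the function name and job titles are illustrative.

```python
import random

def build_schedule(job_titles, runs_per_title=20, seed=0):
    """Return (round_index, job_title) pairs with a fresh shuffle per round."""
    rng = random.Random(seed)  # assumed seed, for reproducibility only
    schedule = []
    for round_index in range(1, runs_per_title + 1):
        order = list(job_titles)
        rng.shuffle(order)
        schedule.extend((round_index, title) for title in order)
    return schedule

schedule = build_schedule(["nurse", "lawyer", "data analyst"])
```

Every job title appears exactly once per round, so each title still receives the same number of runs while its position within a round varies.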

The choice of 20 runs per job per LLM is based on balancing computational feasibility with statistical power. Under the binomial distribution assumption, a sample size of $n = 20$ per job provides a sufficient basis for observing distributional tendencies in gender assignment, while keeping the total number of queries computationally manageable. Replicating across multiple models and job titles effectively increases the sample size, thereby enhancing the overall power to detect moderate effect sizes in categorical data.

To verify robustness, we conducted a pilot extension of 50 runs on a stratified subset of 12 representative job titles. The resulting gender–assignment proportions correlated highly with the original 20-run proportions (Pearson ), confirming that 20 trials suffice to capture the sampling distribution. Each persona prompt was also validated for clarity: in a separate user study (N = 10), 100% of participants correctly understood and followed the instruction to “Create a perfect persona… assign gender”.

For every generated persona, the assigned gender is programmatically parsed. Gender outputs are categorized into Male, Female, and Others. We use “Others” to aggregate all non-binary or gender-fluid assignments, following prior work on inclusive gender taxonomies [32]: any persona whose gender label could not be unambiguously classified as “male” or “female” (for example “non-binary”, “genderqueer”, pronoun-only labels such as “they/them”, or descriptive labels outside the binary categories) was placed in this category to ensure comprehensive coverage of gender identities. All other demographic attributes (e.g., age, background story) are disregarded. The resulting dataset includes the job title, iteration index (1 through 20), model type, and assigned gender category. Restricting the data collection to the assigned gender ensures that the outcome variable is well defined and minimizes noise from extraneous features, thereby simplifying downstream statistical analysis.
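The parsing rule is not specified in detail, so the following is only one plausible sketch of it. It assumes that each persona contains a line of the form "Gender: ..." and maps anything that is not unambiguously male or female to "Others"; the regular expressions and function name are hypothetical.

```python
import re

# Assumed output convention: a "Gender: <label>" field somewhere in the text.
_GENDER_FIELD = re.compile(r"gender\s*:\s*(.+)", re.IGNORECASE)
_FEMALE = re.compile(r"\b(female|woman)\b", re.IGNORECASE)
_MALE = re.compile(r"\b(male|man)\b", re.IGNORECASE)

def classify_gender(persona_text):
    """Map a generated persona to 'Male', 'Female', or 'Others'."""
    match = _GENDER_FIELD.search(persona_text)
    field = match.group(1) if match else ""
    is_female = bool(_FEMALE.search(field))
    is_male = bool(_MALE.search(field))
    if is_female and not is_male:
        return "Female"
    if is_male and not is_female:
        return "Male"
    # Missing, ambiguous, or non-binary labels all fall through to Others.
    return "Others"

label = classify_gender("Name: Alex Rivera\nGender: Non-binary (they/them)\nAge: 34")
```

The word boundaries matter: without them the pattern for "male" would also match inside "female" and every female persona would be flagged as ambiguous.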

3.4. Data Analysis

For each LLM, job title, and job category, we compute frequency counts and proportions $p_M$, $p_F$, and $p_O$. Let $N_{t,m}$ denote the number of runs for job title t under model m, and let $n_M$, $n_F$, and $n_O$ denote the counts for each gender category. Then,

$$p_M = \frac{n_M}{N_{t,m}}, \qquad p_F = \frac{n_F}{N_{t,m}}, \qquad p_O = \frac{n_O}{N_{t,m}}.$$

These proportions serve as indicators of bias. In an unbiased model with random assignment, one would expect a uniform distribution across genders:

$$p_M = p_F = p_O = \frac{1}{3}.$$

A statistically significant deviation of the empirical proportions from the ideal uniform distribution is indicative of gender bias. We recognize that actual gender ratios vary by occupation (e.g., nursing vs. engineering). The uniform baseline serves as a neutral reference point in our information-theoretic framework; deviations from it quantify model bias rather than workforce demographics. Future extensions will incorporate occupation-specific priors—drawn from labor-force statistics—to contextualize model outputs against real-world distributions, thereby refining our bias metrics.
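One standard way to test a deviation from the uniform baseline is a chi-square goodness-of-fit test; the text does not name the test it uses, so this choice is an assumption. With three categories the test has two degrees of freedom, for which the p-value has the closed form exp(-x/2). The counts below are hypothetical.

```python
import math

def chi_square_uniform(counts):
    """Chi-square statistic of observed counts against equal expected counts."""
    n = sum(counts.values())
    expected = n / len(counts)
    return sum((obs - expected) ** 2 / expected for obs in counts.values())

def p_value_df2(stat):
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom."""
    return math.exp(-stat / 2.0)

# Hypothetical counts from 20 runs of one job title under one model.
counts = {"Male": 4, "Female": 16, "Others": 0}
stat = chi_square_uniform(counts)
p_value = p_value_df2(stat)
```

With only 20 runs the expected count per category is below 7, so the chi-square approximation is coarse at the level of a single job title and is better applied to counts pooled across titles.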

3.5. Rigor and Validity Measures

3.5.1. Control of Confounding Variables

We ensure that any differences in the assigned gender distributions are attributable solely to the models’ intrinsic behaviors rather than to extraneous variables such as prompt design or environmental factors. All experiments are conducted under stable network conditions using the same LLM versions and identical hyperparameters where possible.

3.5.2. Sample Size Justification

The selection of 20 runs per job per LLM strikes a balance between computational feasibility and statistical power. Under the binomial distribution framework, a sample size of $n = 20$ per job title is sufficient to detect moderate effect sizes in the proportions of gender assignments. Furthermore, the replication across multiple models and job titles increases the effective sample size, enhancing the robustness of the statistical tests employed.
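To give a sense of the precision that 20 runs provide, the sketch below computes the worst-case half-width of an approximate 95% confidence interval for a proportion, using the normal approximation with p = 0.5. The approximation and the function name are assumptions made for illustration.

```python
import math

def worst_case_half_width(n, z=1.96):
    """Largest half-width of a normal-approximation interval, attained at p = 0.5."""
    return z * math.sqrt(0.25 / n)

half_width_20 = worst_case_half_width(20)  # roughly 0.22
half_width_50 = worst_case_half_width(50)  # roughly 0.14
```

A half-width of about 0.22 at 20 runs means that per-title estimates are coarse, which is why pooling across job titles and models is important for the overall analysis.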

3.5.3. Ethical and Conceptual Trade-Offs in the “Perfect Persona” Framework

The “perfect persona” framework provides a controlled and repeatable mechanism for evaluating gender bias across LLMs. However, it simplifies the complex sociocultural dimensions of gender roles by treating occupational roles as proxies for societal expectations. This simplification facilitates statistical consistency and reproducibility but does not capture the full spectrum of gender identities or their contextual nuances. The categorical focus (Male, Female, Others) may reinforce binary or oversimplified representations, thereby underscoring the need for future methodologies that incorporate richer, intersectional contextual embeddings and more dynamic evaluative paradigms.

Our methodological framework is built upon robust experimental design, rigorous statistical analysis, and clear mathematical modeling. These elements provide a solid theoretical foundation for evaluating gender bias in LLM outputs and extend prior work in the field [27,28,29].

4. Experimental Results and Discussion

In this section, we present a detailed quantitative analysis of gender assignments produced by four LLMs (GPT-4o, Gemini 1.5 Pro, LLaMA 3.1:8b, and Claude 3.5 Sonnet) when tasked with generating “perfect personas” for a range of job titles. Our objective is to rigorously assess whether these models exhibit systematic gender bias and to provide a mathematical framework for understanding the observed discrepancies. The results not only quantify the bias through statistical measures but also offer actionable insights for model improvement.

4.1. Overview of Gender Assignments

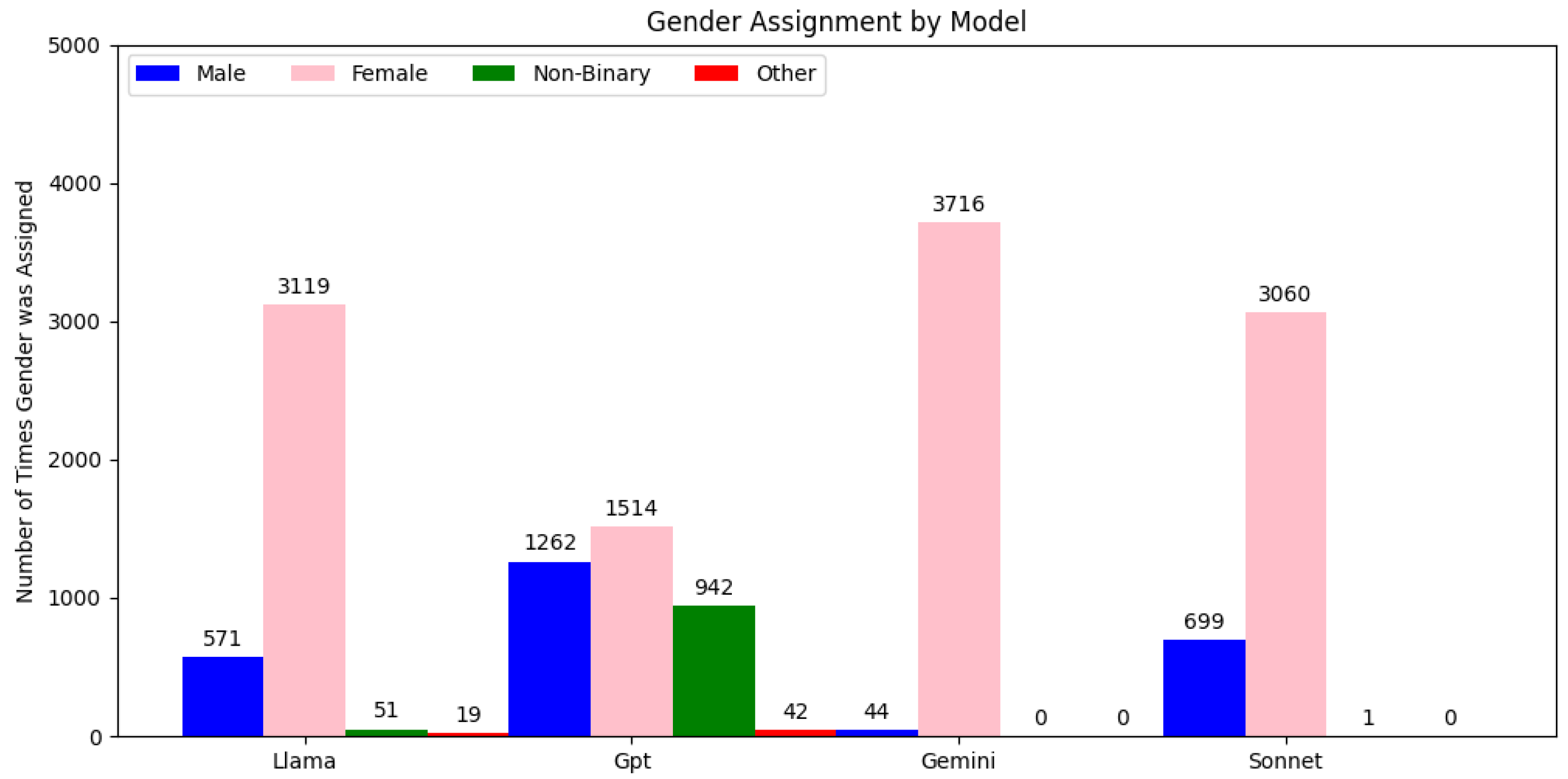

The analysis of the generated outputs, as shown in Figure 1, reveals distinct patterns in the frequency of gender designations:

Figure 1.

Gender assignment by each model.

- Dominance of the “Female” Category: Across all models, female assignments were predominant. For example, Gemini 1.5 Pro produced 3716 female designations, while Claude 3.5 Sonnet recorded 3060 female designations. These high frequencies indicate that, for these models, the probability $\hat{P}(\text{Female})$ far exceeds the expected ideal of $1/3$ (assuming an unbiased distribution).

- Secondary Prevalence of the “Male” Category: Although male designations were the second most common, their occurrence was notably lower. GPT-4o, for instance, generated 1262 male assignments compared to 1514 female assignments in a comparable evaluation window.

- Marginal Representation of “Non-Binary” and “Other”: The frequencies for non-binary and other categories were extremely low. LLaMA 3.1:8b assigned the “non-binary” label only 19 times, and Claude 3.5 Sonnet did not generate any non-binary or other designations. These results suggest that non-binary identities are severely underrepresented in the outputs of these models.

Across all four models, Pearson’s $\chi^2$ tests confirm that the frequency of gender assignments is not uniform across models (i.e., it is not independent of model type). For the “female” category,

for “male”,

and for “non-binary”,

Even the rare “other” label shows a small but significant association

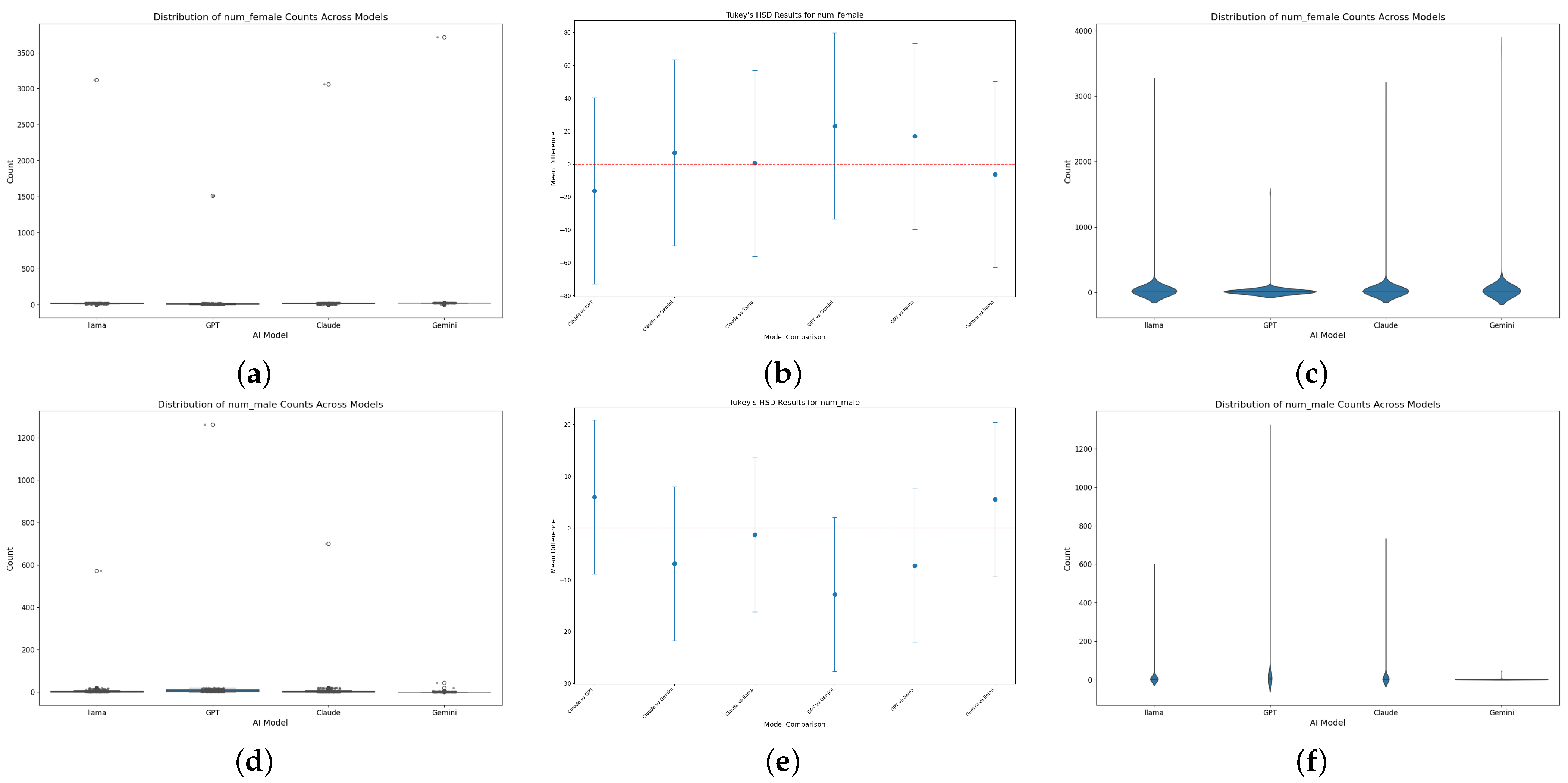

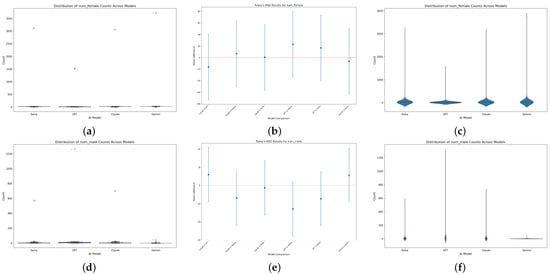

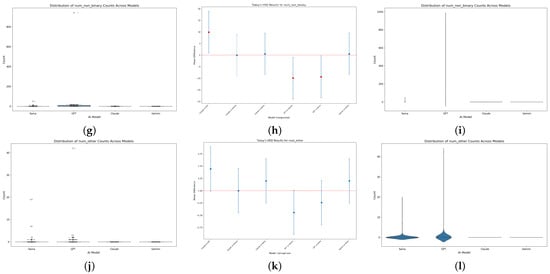

These moderate-to-large effect sizes indicate that the choice of model has a substantive influence on gender output distributions. Subsequent Tukey’s HSD post hoc analyses (family-wise $\alpha = 0.05$) resolve these omnibus effects into pairwise contrasts: no two models differ significantly in their mean counts of “female” or “male” assignments (all 95% confidence intervals (CIs) for mean differences straddle zero), but the “non-binary” class exhibits two significant contrasts. Specifically, Claude 3.5 Sonnet exceeds GPT-4o by 9.8 persona assignments on average (95% CI [1.2, 18.4]), and GPT-4o falls behind Gemini 1.5 Pro by 10.2 assignments (95% CI [–18.9, –1.5]). The combined box/violin panels in Figure 2 show broadly comparable female and male count distributions across Llama, GPT, Claude, and Gemini, with only scattered high outliers, and very sparse “other”-gender counts overall. Tukey’s HSD tests corroborate the absence of significant pairwise differences in the female, male, and “other” categories, yet identify GPT as the sole model that produces a significantly higher incidence of non-binary references than its peers. No model pair differs significantly in the “other” category, which reinforces its uniformly minimal representation across architectures and hints at a subtle architectural bias in token representations [33,34].
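The omnibus association tests and effect sizes discussed in this subsection can be sketched as a Pearson chi-square test of independence on a model-by-category contingency table, with Cramér's V as the effect size. Cramér's V is an assumption here, since the text does not name its effect-size measure, and the 2×2 table below is hypothetical rather than drawn from the reported counts.

```python
import math

def chi_square_independence(table):
    """Pearson chi-square statistic, degrees of freedom, and total count.

    `table` is a list of rows (one per model) of observed counts per
    category; every row and column total must be non-zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof, n

def cramers_v(stat, n, n_rows, n_cols):
    """Cramér's V effect size for an r x c contingency table."""
    return math.sqrt(stat / (n * (min(n_rows, n_cols) - 1)))

# Hypothetical counts: rows are two models, columns are Female and Male.
table = [[30, 10], [10, 30]]
stat, dof, n = chi_square_independence(table)
effect = cramers_v(stat, n, len(table), len(table[0]))
```

Reporting an effect size alongside the test statistic matters at this scale, because with thousands of generated personas even small differences between models become statistically significant.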

Figure 2.

Comprehensive analysis of gender distribution across AI models: Boxplots and Tukey’s HSD results: (a) Box-and-whisker plot of number of female counts shows similar central tendencies across Llama, GPT, Claude and Gemini, with a few extreme high outliers; (b) Tukey’s HSD comparison for number of female indicates no pairwise differences reach statistical significance; (c) Violin plot of number of female counts reveals comparable core densities across models but heavy-tailed outliers; (d) Box-and-whisker plot of number of male counts highlights near-aligned medians for all models plus sporadic large outliers; (e) Tukey’s HSD for number of male shows that none of the model pairs differ significantly; (f) Violin plot of number of male counts depicts broadly similar distributions with long upper tails in some models; (g) Box-and-whisker plot of number of non-binary counts shows generally low values, with GPT exhibiting occasional high outliers; (h) Tukey’s HSD for number of non-binary flags GPT as significantly different from several peers, while other contrasts are non-significant; (i) Violin plot underscores GPT’s heavier-tailed distribution for number of non-binary counts versus consistently low counts from the other models; (j) Box-and-whisker plot of number of other counts reveals very sparse data and rare high outliers, most notably from GPT and Llama; (k) Tukey’s HSD mean-difference plot for number of other shows every confidence interval touching zero—no significant model differences; (l) Violin plot of number of other counts reveals rare non-zero values (heavier tail in GPT, a few in Llama) while Claude and Gemini stay at zero.

These multi-step inferential results corroborate our KL-divergence and entropy findings and pinpoint where each LLM diverges in its treatment of non-binary identities. The absence of significant pairwise differences for binary labels highlights a consistent over-assignment of “female” across most models, in line with our earlier conclusion that Gemini 1.5 Pro, LLaMA 3.1:8b, and Claude 3.5 Sonnet favor female personas, while the targeted significance in non-binary contrasts substantiates the claim that GPT-4o and Gemini 1.5 Pro are comparatively deficient in representing gender diversity. By combining omnibus significance tests, effect-size quantification, and controlled Tukey post hoc tests, we provide statistically grounded evidence of model-specific gender biases.
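To make the omnibus test and effect-size step concrete, the following minimal sketch computes a Pearson chi-square statistic and Cramér's V for a model-by-gender contingency table. It is an illustration only: the counts are hypothetical placeholders rather than the study's data, the function names are ours, and it tests the full table at once, which may differ from the per-category tests reported above. The p-value lookup is left out because it needs a chi-square distribution function from a statistics library.

```python
import math

def chi_square_independence(table):
    """Return (chi2, dof) for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            if expected > 0:  # skip cells in all-zero rows or columns
                chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof

def cramers_v(table):
    """Cramér's V effect size: sqrt(chi2 / (n * (min(rows, cols) - 1)))."""
    chi2, _ = chi_square_independence(table)
    n = sum(sum(row) for row in table)
    k = min(len(table), len(table[0])) - 1
    return math.sqrt(chi2 / (n * k))

# Hypothetical counts (NOT the study's data):
# rows = four models, columns = (female, male, non-binary, other)
counts = [
    [700, 290, 10, 0],
    [520, 470, 8, 2],
    [690, 300, 10, 0],
    [680, 320, 0, 0],
]
chi2, dof = chi_square_independence(counts)
print(f"chi2 = {chi2:.2f}, dof = {dof}, Cramer's V = {cramers_v(counts):.3f}")
```

A value of V near 0 indicates that gender assignment is nearly independent of the model, while a value near 1 indicates that the model almost fully determines the assigned label.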

4.2. Model-Specific Gender Distribution Patterns

4.2.1. Gemini 1.5 Pro

The data for Gemini 1.5 Pro are characterized by a pronounced skew towards female assignments. The empirical probability of a female assignment is significantly higher than the ideal uniform value, while the probabilities for male and non-binary/other are substantially lower. This bias could be modeled as a consequence of training data imbalance or a structural bias in the classification layer, such that ambiguous cases are predominantly resolved in favor of the female category.

4.2.2. GPT-4o

GPT-4o exhibits a comparatively more balanced distribution between male and female categories, yet still deviates from the uniform expectation. Although the empirical probabilities of male and female assignments are closer in value, the near absence of non-binary and other assignments indicates an underlying systemic limitation in recognizing or generating alternative gender identities.

4.2.3. LLaMA 3.1:8b

LLaMA 3.1:8b shows a strong bias towards female designations, with only a marginal presence of non-binary assignments. The low count (19 non-binary assignments) suggests that the tokenization and embedding strategies in LLaMA 3.1 may lack the capacity to represent nuanced gender identities, thereby limiting expressivity beyond binary labels.

4.2.4. Claude 3.5 Sonnet

Claude 3.5 Sonnet restricts its outputs strictly to male and female categories, as evidenced by zero instances of non-binary or other designations. Writing $P(g)$ for the empirical probability of gender $g$, the distribution in this model can be expressed as:

$$P(\text{female}) + P(\text{male}) = 1, \qquad P(\text{non-binary}) = P(\text{other}) = 0,$$

with $P(\text{female})$ significantly exceeding $P(\text{male})$. This narrow scope in classification suggests that the training regime or lexical constraints in Claude 3.5 Sonnet were not designed to capture a broader gender spectrum.

4.3. Cross-Model Comparative Analysis

4.3.1. Binary-Centric Bias

Across all models, the majority of outputs are confined to binary gender classifications. Even when models like GPT-4o show a closer balance between male and female assignments, the absence or near-absence of non-binary and other categories is a consistent trend. The KL divergence calculated earlier quantifies this deviation of the empirical gender distribution $P$ from the ideal uniform distribution $U$:

$$D_{\mathrm{KL}}(P \,\|\, U) = \sum_{g} P(g) \log \frac{P(g)}{U(g)}$$

Higher values of $D_{\mathrm{KL}}$ in models such as Gemini 1.5 Pro and Claude 3.5 Sonnet indicate a stronger deviation towards binary outcomes, emphasizing the need for more inclusive training approaches.
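The divergence from the uniform distribution can be computed directly from label counts. The sketch below is a minimal illustration assuming four gender categories and the convention $0 \log 0 = 0$; the counts are hypothetical placeholders rather than the study's data, and the function name is ours.

```python
import math

def kl_from_uniform(counts):
    """KL divergence D_KL(P || U) in bits, where P is the empirical
    distribution of the counts and U is uniform over the same categories."""
    total = sum(counts.values())
    k = len(counts)
    divergence = 0.0
    for count in counts.values():
        p = count / total
        if p > 0:  # convention: 0 * log(0) = 0
            divergence += p * math.log2(p * k)  # p * log2(p / (1/k))
    return divergence

# Hypothetical counts for one model (placeholders, NOT the study's data)
example = {"female": 650, "male": 340, "non-binary": 10, "other": 0}
print(f"D_KL(P || U) = {kl_from_uniform(example):.3f} bits")
```

With four categories the divergence ranges from 0 bits (perfectly uniform output) to 2 bits (every persona assigned the same label), which gives a fixed scale for comparing models.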

4.3.2. Magnitude of Skew

The magnitude of skew is most pronounced in Gemini 1.5 Pro and Claude 3.5 Sonnet, where the empirical probability for the female category greatly exceeds the ideal uniform value. In contrast, GPT-4o exhibits a relatively more balanced output, albeit still biased. Quantitatively, if we denote the absolute difference between the empirical probability $P(g)$ and the ideal probability $U(g)$ for gender $g$ as:

$$\Delta_g = \left| P(g) - U(g) \right|$$

then the aggregate skew $S$ can be defined as:

$$S = \sum_{g} \Delta_g$$

A higher $S$ value corresponds to a larger overall bias. Our findings demonstrate that $S$ is largest for Gemini 1.5 Pro and Claude 3.5 Sonnet and lower for GPT-4o, suggesting that architectural and data-dependent factors contribute differentially to gender bias.
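A short sketch of the skew computation follows. It assumes that the ideal probability is uniform over the categories present and that the aggregate skew is the plain sum of the per-gender absolute deviations; both are our reading of the definitions above. The counts are hypothetical placeholders.

```python
def aggregate_skew(counts):
    """Return (per-gender absolute deviations, their sum S).

    Assumes the ideal probability is 1/k for each of the k categories."""
    total = sum(counts.values())
    ideal = 1 / len(counts)
    deltas = {g: abs(c / total - ideal) for g, c in counts.items()}
    return deltas, sum(deltas.values())

# Hypothetical counts for one model (placeholders, NOT the study's data)
example = {"female": 650, "male": 340, "non-binary": 10, "other": 0}
deltas, skew = aggregate_skew(example)
for gender, delta in deltas.items():
    print(f"{gender:>10}: {delta:.3f}")
print(f"aggregate skew S = {skew:.3f}")
```

Under these assumptions, S is 0 for a perfectly uniform distribution and reaches 1.5 when all four-category outputs collapse onto a single label.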

4.3.3. Inclusivity and Representation Challenges

The near-exclusive representation of binary genders raises significant ethical and practical concerns. The systematic underrepresentation of non-binary and other identities may perpetuate societal stereotypes and marginalize those who do not conform to traditional gender binaries. This limitation calls for targeted interventions in model training and design. One potential remedy is to incorporate fairness-aware loss functions that penalize deviations from a desired inclusive distribution. For instance, an augmented loss function can be formulated as:

$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{task}} + \lambda \, D_{\mathrm{KL}}(P \,\|\, U)$$

where $\mathcal{L}_{\text{task}}$ is the original task-specific loss, $\lambda$ is a regularization parameter controlling the trade-off between task performance and fairness, and $D_{\mathrm{KL}}(P \,\|\, U)$ is the divergence between the observed and ideal gender distributions. Minimizing $\mathcal{L}_{\text{total}}$ would encourage the model to produce outputs that are both accurate and fair.
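The trade-off controlled by the regularization parameter can be illustrated numerically. The sketch below treats the task loss as a given scalar and adds a weighted divergence of the gender distribution from uniform; the values, the function names, and the parameter name `lam` are hypothetical. A real training setup would compute both terms inside a differentiable framework, which is not shown here.

```python
import math

def kl_from_uniform(probs):
    """D_KL(P || U) in nats for a probability vector P and uniform U."""
    k = len(probs)
    return sum(p * math.log(p * k) for p in probs if p > 0)

def augmented_loss(task_loss, gender_probs, lam):
    """Task loss plus lam times the divergence of the gender distribution
    from uniform; lam = 0 recovers the original task loss."""
    return task_loss + lam * kl_from_uniform(gender_probs)

# Hypothetical values: a task loss of 1.20 and two gender distributions
# ordered as (female, male, non-binary, other)
skewed = [0.65, 0.33, 0.02, 0.00]
balanced = [0.25, 0.25, 0.25, 0.25]
for lam in (0.0, 0.5, 1.0):
    print(f"lam={lam}: skewed={augmented_loss(1.20, skewed, lam):.4f}, "
          f"balanced={augmented_loss(1.20, balanced, lam):.4f}")
```

The balanced distribution incurs no penalty at any setting, while the penalty on the skewed distribution grows linearly with `lam`.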

4.4. Implications and Future Directions

The quantitative analyses underscore that the observed biases are not merely statistical anomalies but are indicative of deeper issues within the training data and model architectures. To remediate these issues, we propose several scientifically grounded strategies:

- Dataset Augmentation: Incorporate more balanced and inclusive datasets that ensure adequate representation of non-binary and other gender identities. Synthetic datasets with controlled distributions could be employed for this purpose.

- Fairness-Constrained Training: Integrate fairness-aware regularization terms into the training loss, as exemplified by the augmented loss function above. This would mathematically enforce a penalty on biased gender distributions.

- Architectural Modifications: Adapt model architectures to include specialized classification heads or embedding layers that explicitly model a broader spectrum of gender identities. Techniques such as multi-task learning or the use of adversarial networks to debias representations could be explored.

- Interpretability and Attribution: Utilize attention-based analyses, gradient-based attribution methods, and contrastive prompting to dissect the internal representations of the models. Such techniques can reveal the contextual triggers behind biased outputs and guide targeted interventions.

These remedial strategies are grounded in the principles of information theory and statistical modeling, aligning well with the journal’s focus on information science and technology. The integration of rigorous mathematical formulations, as demonstrated through our use of KL divergence, binomial distribution modeling, and fairness-aware loss functions, provides a strong theoretical basis for both diagnosing and addressing gender bias in LLMs.

Our experimental findings reveal significant gender bias across all evaluated LLMs, particularly manifested as an overrepresentation of the female category and a near absence of non-binary and other designations. The quantitative metrics, such as the aggregate skew $S$ and the KL divergence $D_{\mathrm{KL}}$, confirm that these deviations are statistically significant. The results indicate that training data composition, architectural decisions, and token embedding strategies all contribute to the observed bias.

While the “perfect persona” prompt offers a controlled means to elicit gender assignments, relying exclusively on job-based instructions may not reflect other bias manifestations (e.g., in dialogue, summarization, or translation tasks). To address this, future work will integrate additional prompt families—such as open-ended narrative generation, attribute completion, and real-world text classification—to validate whether the biases observed in persona prompts generalize across diverse use cases. Combining these complementary evaluations will yield a more robust and reliable portrait of LLM gender bias.

4.5. Analysis of Bias Origins

To probe the roots of the observed gender skew, we consider two principal factors:

(i) Training-Corpus Imbalances. Let $P_{\text{corpus}}(g \mid t)$ denote the empirical frequency of gender $g$ co-occurring with job title $t$ in the pre-training corpus. When $P_{\text{corpus}}(g \mid t)$ itself is skewed (e.g., more female references in healthcare texts), the model’s learned distribution $P_{\text{model}}(g \mid t)$ will reflect this via the persistence of high mutual information $I(T;G)$ between titles $T$ and genders $G$. In effect, a high Kullback–Leibler divergence of $P_{\text{corpus}}$ from the uniform distribution propagates directly into $P_{\text{model}}$.

(ii) Embedding and Attention Bias. Gendered token embeddings and attention weights can amplify corpus imbalances. Let $\mathbf{e}_g$ be the embedding vector for a gender token $g$. If $\|\mathbf{e}_{\text{female}}\| > \|\mathbf{e}_{\text{male}}\|$ or attention scores consistently favor female pronouns in context $t$, then the softmax output tilts toward female assignments. We plan targeted probing of attention matrices and embedding norms to quantify these effects, following methods in [35,36].
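The mutual information between job titles and genders mentioned in factor (i) can be estimated from co-occurrence observations. The following sketch is a minimal plug-in estimator over a list of (title, gender) pairs; the toy observations are hypothetical and stand in for corpus or model-output statistics that are not available here.

```python
import math
from collections import Counter

def mutual_information(pairs):
    """Plug-in estimate of I(T; G) in bits from (title, gender) observations."""
    n = len(pairs)
    joint = Counter(pairs)
    titles = Counter(title for title, _ in pairs)
    genders = Counter(gender for _, gender in pairs)
    mi = 0.0
    for (title, gender), count in joint.items():
        p_joint = count / n
        # ratio p(t, g) / (p(t) * p(g)) written in terms of raw counts
        ratio = count * n / (titles[title] * genders[gender])
        mi += p_joint * math.log2(ratio)
    return mi

# Hypothetical toy observations (NOT the study's data)
segregated = [("nurse", "female")] * 5 + [("engineer", "male")] * 5
mixed = [("nurse", "female"), ("nurse", "male"),
         ("engineer", "female"), ("engineer", "male")]
print(f"segregated: {mutual_information(segregated):.3f} bits")
print(f"mixed:      {mutual_information(mixed):.3f} bits")
```

A value near zero means the title carries no information about the assigned gender, whereas higher values indicate occupational gender segregation of the kind described for healthcare and engineering roles.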

4.6. Bias Mitigation Experiment

To investigate how one might reduce the gender bias observed, we conducted a small-scale experiment with GPT-4o by altering the prompting strategy. In this trial, we appended an additional instruction to the persona-generation prompt explicitly encouraging gender diversity in the outputs. Using a set of 50 diverse job titles, we generated personas with the original prompt and with the diversity-aware prompt for comparison. The intervention yielded a significantly more balanced gender distribution. Under the original prompt, GPT-4o’s personas were predominantly female (60% female vs. 40% male, with no instances of non-binary), reflecting the bias documented earlier. In contrast, with the modified prompt, the gender split approached parity (approximately 46% female, 44% male) and notably produced a small fraction of non-binary personas (10%). This marks a substantial reduction in the overrepresentation of one gender. While this prompt-based adjustment is simple, the outcome demonstrates that bias in model outputs can be partially mitigated through intervention. It also suggests that the model has the capacity to generate under-represented categories when guided to do so. This finding serves as a proof-of-concept; more sophisticated techniques (e.g., fine-tuning or controlled decoding) could likely achieve even greater bias reduction, but even a lightweight approach proved effective in nudging the model toward fairer behavior.
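The shift reported above can be expressed with the same information-theoretic measures used elsewhere in the paper. The sketch below takes the percentages stated in the text and computes the Shannon entropy and the divergence from uniform for each prompt; it assumes four categories (female, male, non-binary, other) with the “other” share at zero in both conditions, which is our reading since the reported shares already sum to 100%.

```python
import math

def entropy_bits(shares):
    """Shannon entropy in bits, with the convention 0 * log(0) = 0."""
    return -sum(p * math.log2(p) for p in shares if p > 0)

def kl_from_uniform_bits(shares):
    """D_KL(P || U) in bits; for uniform U this equals log2(k) - H(P)."""
    return math.log2(len(shares)) - entropy_bits(shares)

# Shares reported in the text, ordered (female, male, non-binary, other);
# the zero "other" share is an assumption.
original_prompt = [0.60, 0.40, 0.00, 0.00]
diversity_prompt = [0.46, 0.44, 0.10, 0.00]

for name, shares in [("original", original_prompt),
                     ("diversity-aware", diversity_prompt)]:
    print(f"{name:>15}: H = {entropy_bits(shares):.3f} bits, "
          f"D_KL = {kl_from_uniform_bits(shares):.3f} bits")
```

Under these assumptions the diversity-aware prompt yields higher entropy and a lower divergence from uniform than the original prompt, consistent with the reduction in bias described above.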

5. Conclusions

This study systematically investigated gender bias in four prominent large language models (LLMs)—namely, GPT-4o, Gemini 1.5 Pro, Sonnet 3.5, and LLaMA 3.1:8b—across a diverse set of occupational roles using a controlled, repeatable experimental design. Our quantitative analysis, underpinned by rigorous mathematical modeling (e.g., KL divergence and binomial statistical frameworks), revealed that GPT-4o exhibits pronounced occupational gender segregation, wherein healthcare roles are predominantly linked to female assignments and engineering or physically demanding professions are associated with male designations. In contrast, Gemini 1.5 Pro, Sonnet 3.5, and LLaMA 3.1:8b tend to overassign the female category across a broad spectrum of occupations, yet they lack the nuanced job-specific representation observed in GPT-4o.

Our methodology, which employed standardized prompts and comprehensive data analysis, provided essential insights into the mechanisms by which LLMs internalize and disseminate gender-related notions. The mathematical frameworks applied, such as the calculation of empirical probabilities $P(g)$, divergence measures $D_{\mathrm{KL}}$, and aggregate skew metrics, demonstrate that the observed deviations from an ideal uniform distribution are statistically significant. These findings underscore the critical importance of incorporating inclusive datasets, sophisticated bias mitigation strategies, and continuous model audits to ensure equitable representation in AI systems.

The results of our study also highlight several avenues for future research. First, it is imperative to refine training data composition by integrating datasets with balanced and intersectional gender representations. Second, incorporating fairness-aware regularization techniques into the model training process (e.g., via augmented loss functions that penalize divergence from a desired distribution) could help remediate inherent biases. Third, future work should explore architectural innovations—such as specialized classification heads or adversarial debiasing networks—that explicitly model a broader spectrum of gender identities. Lastly, deeper interpretability studies employing attention-based analyses and gradient-based attribution methods are needed to elucidate the contextual triggers behind biased outputs.

Moreover, the broader influences on gender bias—including model size, training duration, hardware configurations, and the socio-cultural contexts embedded within pretraining corpora—must be systematically examined. Although our study focused on behavioral outputs due to limitations in accessing proprietary datasets, future research leveraging open-source models could disentangle the individual and joint contributions of these factors.

We note that our analysis has been confined to gender bias in isolation. In real-world contexts, bias is often intersectional, involving overlapping dimensions such as race, culture, and socioeconomic background in addition to gender. Our study does not capture these compounded biases. For example, the way an LLM represents a “female” persona may differ further when specifying a particular ethnicity or cultural context, and such interactions remain unexamined here. This is a conscious scope choice to maintain analytical focus on gender; however, it is also a limitation. A valuable direction for future research would be to extend our framework to investigate intersectional biases (e.g., examining whether an LLM might portray women of different cultural backgrounds differently). Addressing these multifaceted biases is crucial for developing AI systems that are fair and equitable across all social dimensions.

Overall, our comprehensive experimental framework provides a robust theoretical and empirical foundation for understanding and mitigating gender bias in LLMs. By bridging rigorous mathematical analysis with practical evaluation techniques, this work not only advances the current state-of-the-art in bias assessment but also paves the way for the development of more inclusive, ethically responsible AI systems. We advocate for future collaborations between researchers and LLM developers to gain deeper insights into training methodologies, ultimately fostering AI technologies that accurately reflect the diverse realities of human identity.

Author Contributions

Conceptualization, I.M., A.A.J. and G.A.; methodology, I.M., A.A.J. and G.A.; software, I.M. and A.A.J.; formal analysis, A.A.J., G.A. and C.O.; resources, G.A. and C.O.; writing, I.M., A.A.J. and G.A.; editing, A.A.J., G.A. and C.O.; supervision, G.A. and C.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported financially by 3S Holding OÜ R&D fund.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are contained within the article, and the LLMs used are identified in the paper.

Acknowledgments

Our thanks to 3S Holding OÜ for sharing all required resources for this R&D work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chen, Z.Z.; Ma, J.; Zhang, X.; Hao, N.; Yan, A.; Nourbakhsh, A.; Yang, X.; McAuley, J.J.; Petzold, L.R.; Wang, W.Y. A Survey on Large Language Models for Critical Societal Domains: Finance, Healthcare, and Law. Trans. Mach. Learn. Res. 2024.

- Nazi, Z.A.; Peng, W. Large language models in healthcare and medical domain: A review. Informatics 2024, 11, 57.

- Moeslund, T.B.; Escalera, S.; Anbarjafari, G.; Nasrollahi, K.; Wan, J. Statistical machine learning for human behaviour analysis. Entropy 2020, 22, 530.

- Santagata, L.; De Nobili, C. More is More: Addition Bias in Large Language Models. Comput. Hum. Behav. Artif. Hum. 2025, 3, 100129.

- Yu, J.; Kim, S.U.; Choi, J.; Choi, J.D. What Is Your Favorite Gender, MLM? Gender Bias Evaluation in Multilingual Masked Language Models. Information 2024, 15, 549.

- Hajikhani, A.; Cole, C. A critical review of large language models: Sensitivity, bias, and the path toward specialized ai. Quant. Sci. Stud. 2024, 5, 736–756.

- Lee, J.; Hicke, Y.; Yu, R.; Brooks, C.; Kizilcec, R.F. The life cycle of large language models in education: A framework for understanding sources of bias. Br. J. Educ. Technol. 2024, 55, 1982–2002.

- Domnich, A.; Anbarjafari, G. Responsible AI: Gender bias assessment in emotion recognition. arXiv 2021, arXiv:2103.11436.

- Rizhinashvili, D.; Sham, A.H.; Anbarjafari, G. Gender neutralisation for unbiased speech synthesising. Electronics 2022, 11, 1594.

- Sham, A.H.; Aktas, K.; Rizhinashvili, D.; Kuklianov, D.; Alisinanoglu, F.; Ofodile, I.; Ozcinar, C.; Anbarjafari, G. Ethical AI in facial expression analysis: Racial bias. Signal Image Video Process. 2023, 17, 399–406.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Parrish, A.; Chen, A.; Nangia, N.; Padmakumar, V.; Phang, J.; Thompson, J.; Htut, P.M.; Bowman, S. BBQ: A hand-built bias benchmark for question answering. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; pp. 2086–2105.

- Zake, I. Holistic Bias in Sociology: Contemporary Trends. In The Palgrave Handbook of Methodological Individualism: Volume II; Springer: Cham, Switzerland, 2023; pp. 403–421.

- Hartvigsen, T.; Gabriel, S.; Palangi, H.; Sap, M.; Ray, D.; Kamar, E. ToxiGen: A Large-Scale Machine-Generated Dataset for Adversarial and Implicit Hate Speech Detection. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 3309–3326.

- Dai, S.; Xu, C.; Xu, S.; Pang, L.; Dong, Z.; Xu, J. Bias and unfairness in information retrieval systems: New challenges in the llm era. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 6437–6447.

- Duan, Y. The Large Language Model (LLM) Bias Evaluation (Age Bias). DIKWP Research Group International Standard Evaluation. 2024. Available online: https://www.researchgate.net/profile/Yucong-Duan/publication/378861188_The_Large_Language_Model_LLM_Bias_Evaluation_Age_Bias_–DIKWP_Research_Group_International_Standard_Evaluation/links/65ee981eb7819b433bf53822/The-Large-Language-Model-LLM-Bias-Evaluation-Age-Bias–DIKWP-Research-Group-International-Standard-Evaluation.pdf (accessed on 15 December 2024).

- Oketunji, A.; Anas, M.; Saina, D. Large Language Model (LLM) Bias Index—LLMBI. Data Policy 2023.

- Lin, L.; Wang, L.; Guo, J.; Wong, K.F. Investigating Bias in LLM-Based Bias Detection: Disparities between LLMs and Human Perception. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 10634–10649.

- Wan, Y.; Pu, G.; Sun, J.; Garimella, A.; Chang, K.W.; Peng, N. “kelly is a warm person, joseph is a role model”: Gender biases in llm-generated reference letters. arXiv 2023, arXiv:2310.09219.

- Dong, X.; Wang, Y.; Yu, P.S.; Caverlee, J. Disclosure and mitigation of gender bias in llms. arXiv 2024, arXiv:2402.11190.

- Rhue, L.; Goethals, S.; Sundararajan, A. Evaluating LLMs for Gender Disparities in Notable Persons. arXiv 2024, arXiv:2403.09148.

- You, Z.; Lee, H.; Mishra, S.; Jeoung, S.; Mishra, A.; Kim, J.; Diesner, J. Beyond Binary Gender Labels: Revealing Gender Bias in LLMs through Gender-Neutral Name Predictions. In Proceedings of the 5th Workshop on Gender Bias in Natural Language Processing (GeBNLP), Bangkok, Thailand, 16 August 2024; pp. 255–268.

- Smith, E.M.; Hall, M.; Kambadur, M.; Presani, E.; Williams, A. “I’m sorry to hear that”: Finding New Biases in Language Models with a Holistic Descriptor Dataset. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 9180–9211.

- Zheng, L.; Chiang, W.L.; Sheng, Y.; Zhuang, S.; Wu, Z.; Zhuang, Y.; Lin, Z.; Li, Z.; Li, D.; Xing, E.; et al. Judging llm-as-a-judge with mt-bench and chatbot arena. Adv. Neural Inf. Process. Syst. 2023, 36, 46595–46623.

- Zhu, L.; Wang, X.; Wang, X. Judgelm: Fine-tuned large language models are scalable judges. In Proceedings of the Thirteenth International Conference on Learning Representations, Singapore, 24–28 April 2025; pp. 1–14.

- Shayegani, E.; Mamun, M.A.A.; Fu, Y.; Zaree, P.; Dong, Y.; Abu-Ghazaleh, N. Survey of vulnerabilities in large language models revealed by adversarial attacks. arXiv 2023, arXiv:2310.10844.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Arslan, H.S.; Fishel, M.; Anbarjafari, G. Doubly attentive transformer machine translation. arXiv 2018, arXiv:1807.11605.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774.

- Anthropic. Claude 3.5 Sonnet. 2024. Available online: https://www.anthropic.com/news/claude-3-5-sonnet (accessed on 15 December 2024).

- Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Yang, A.; Fan, A.; et al. The llama 3 herd of models. arXiv 2024, arXiv:2407.21783.

- Gofman, A.; Leif, S.A.; Gunderman, H.; Exner, N. Do I have to be an “other” to be myself? Exploring gender diversity in taxonomy, data collection, and through the research data lifecycle. J. eSci. Libr. 2021, 10, 6.

- Ben Amor, M.; Granitzer, M.; Mitrović, J. Impact of Position Bias on Language Models in Token Classification. In Proceedings of the 39th ACM/SIGAPP Symposium on Applied Computing, Ávila, Spain, 8–12 April 2024; pp. 741–745.

- Yang, J.; Wang, Z.; Lin, Y.; Zhao, Z. Problematic Tokens: Tokenizer Bias in Large Language Models. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 6387–6393.

- Wu, X.; Ajorlou, A.; Wang, Y.; Jegelka, S.; Jadbabaie, A. On the Role of Attention Masks and LayerNorm in Transformers. In Proceedings of the Thirty-Eighth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024.

- Naim, O.; Asher, N. On explaining with attention matrices. In ECAI 2024; IOS Press: Amsterdam, The Netherlands, 2024; pp. 1035–1042.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).