Abstract

This study examines how large language models reproduce Jungian archetypal patterns in storytelling. Results indicate that AI excels at replicating structured, goal-oriented archetypes (Hero, Wise Old Man), but it struggles with psychologically complex and ambiguous narratives (Shadow, Trickster). Expert evaluations confirmed these patterns, rating AI higher on narrative coherence and thematic alignment than on emotional depth and creative originality.

1. Introduction

The evolution of artificial intelligence (AI) in narrative generation has transformed digital storytelling, literature, and interactive media [1]. Large language models (LLMs) have demonstrated remarkable capabilities in generating coherent, structured narratives that resemble human-written stories. However, the extent to which these models can capture the deeper dimensions of human creativity remains debated. While some researchers emphasize LLMs’ structural strengths, others argue that AI-generated storytelling continues to fall short in areas such as emotional nuance, dynamic character development, and symbolic complexity [2]. This study contributes to this discourse by providing a quantitative and expert-supported analysis of AI-generated narratives through the lens of Jungian archetypes.

Carl Jung’s concept of the collective unconscious provides a theoretical foundation for examining how AI-generated narratives align with deep-seated human storytelling traditions. According to Jung [3], the collective unconscious is a universal psychological structure shared across humanity, composed of archetypes—recurrent symbols, motifs, and character types—that shape human perception and storytelling.

Jung identified several key archetypes that persist across myths, literature, and cultural narratives, including the following [4]:

- The Hero—A protagonist who embarks on a transformative journey;

- The Wise Old Man—A mentor figure offering guidance and wisdom;

- The Shadow—The darker aspects of the self, often represented as an antagonist;

- The Anima/Animus—The inner feminine (Anima) in men and inner masculine (Animus) in women, representing psychological balance;

- The Trickster—A character who disrupts order, introducing chaos and transformation;

- The Everyman—A relatable, ordinary character representing common human experiences.

These archetypes serve as cognitive templates for human storytelling and provide a lens through which we can evaluate AI’s narrative capabilities. If AI models generate stories that consistently reflect these patterns, it suggests that their training data—and the way they process language—encode elements of human collective storytelling traditions.

The ability of LLMs to generate narratives that align with archetypal storytelling structures raises key questions:

- To what extent do AI-generated stories naturally replicate archetypal patterns?

- Can AI capture the depth of human emotions, internal conflicts, and transformative character arcs associated with these archetypes?

- How do computational methods compare to expert literary evaluations in assessing AI’s storytelling capabilities?

To answer these questions, this study employs a multi-dimensional evaluation framework that integrates NLP techniques and expert human assessments. AI-generated narratives are analyzed with several computational methods and systematically compared to human-written texts [1]. Additionally, expert evaluations by literary analysts, psychologists, and AI researchers provide qualitative insights into how AI-generated stories align with human expectations of narrative depth and emotional engagement [5].

Cognitive scientists have recently begun investigating how LLMs process and generate narrative structures that align with human psychological patterns [6]. The ethical implications of attributing psychological characteristics to AI systems have also received increased attention in the recent literature [7]. Cross-cultural studies of AI-generated content have begun emerging, examining how these systems handle psychological concepts across different cultural contexts [8].

When an LLM generates text, it selects the words and phrases most likely to fit each context, drawing on an “intuitive” grasp of language patterns acquired through machine learning [9].

The resources required to train an LLM prevent these models from easily expanding their memory, leading to what are called “hallucinations” [10] when they generate content they have no knowledge of. Hallucinations are factually incorrect answers to a query, disguised by grammatically correct language. Embedding archetypal reasoning mechanisms into LLMs could allow AI systems to differentiate between knowledge-based retrieval and creative extrapolation, leading to more reliable content generation in specialized domains.

Despite notable progress in AI-generated storytelling, key gaps remain in understanding how large language models (LLMs) reflect fundamental human narrative structures, especially Jungian archetypes. Prior research has addressed narrative coherence and grammar but has rarely explored AI’s capacity to reproduce deep psychological patterns or symbolic complexity. Additionally, most studies rely on either computational metrics or human judgment in isolation, lacking integrated methodologies. Comparative analyses across multiple archetypes—particularly complex ones like the Shadow or Trickster—are also scarce.

This study addresses these gaps by:

- Proposing a hybrid evaluation framework combining NLP techniques with expert analysis;

- Conducting a cross-archetypal assessment of AI narratives involving six core Jungian figures;

- Evaluating psychological depth beyond structural coherence;

- Bridging computational linguistics and depth psychology to explore potential “machine intuition”;

- Outlining practical applications for AI-generated archetypal narratives in education, creative writing, and interactive media.

The remainder of this paper is organized as follows. Section 2 details the methodological approach, including the Jungian archetypal framework, research hypotheses, data collection procedures, experimental design, and validation methods, and describes how AI-generated texts are evaluated using both computational metrics and expert human assessment. Section 3 presents findings on how AI-generated narratives compare to human-authored texts across different archetypes; it analyzes computational results and expert evaluations, with visual representations of key patterns and correlations, and interprets their implications within broader research on AI storytelling and Jungian psychology. Section 4 summarizes key theoretical implications and practical applications.

2. Materials and Methods

2.1. Research Framework

This study uses the following four-stage framework to analyze how AI-generated narratives reflect Jungian archetypes:

- Jungian theory is combined with current LLM research to form the conceptual basis;

- Narrative data are collected using carefully designed prompts to elicit archetypal structures without directly referencing Jung;

- Both NLP techniques and expert evaluations are applied to analyze linguistic and thematic patterns;

- AI and human-authored texts are compared to assess similarities and differences in archetypal expression.

This approach integrates computational text analysis with psychological theory to explore how large language models (LLMs) express archetypal patterns.

2.2. Data Collection and Corpus

To systematically evaluate the extent to which LLMs replicate Jungian archetypal storytelling structures, this study utilizes a structured dataset of AI-generated narratives and a human-written reference corpus. The dataset is designed to allow for a comparative analysis of AI and human storytelling patterns, focusing on the presence and expression of six key Jungian archetypes: The Hero, The Wise Old Man, The Shadow, The Anima/Animus, The Trickster, and The Everyman.

A total of 72 narrative texts were generated using two large language models (GPT-4 and Claude Opus) from a standardized set of prompts targeting the six archetypes. Each model produced six short narratives (approximately 1000–1500 words each) per archetype, resulting in 12 AI-generated stories per archetype (6 per model). To provide a benchmark for evaluation, an additional set of 18 human-authored stories (three per archetype) was curated from public-domain literary sources and professionally written samples selected by domain experts.

All AI-generated texts were created under controlled prompting conditions, and the corpus was curated to ensure thematic alignment and narrative completeness. Texts were formatted in plain UTF-8 and manually reviewed to ensure they met length, structure, and topic requirements prior to analysis.

This dataset served as the basis for both computational and expert evaluation procedures, enabling a structured comparison between AI and human narratives across a consistent set of archetypal themes.

(1) AI-generated narrative dataset

The AI-generated dataset consists of short narratives, with several samples per archetype, generated using two state-of-the-art LLMs: GPT-4 (OpenAI) and Claude (Anthropic). The following methodology was applied to ensure a structured and reproducible dataset:

- Each AI model was prompted with a structured archetype-specific query (e.g., “Write a short story featuring a Hero who undergoes a transformative journey”). Prompts were refined to ensure alignment with archetypal themes while avoiding excessive specificity that might bias the model’s output.

- Multiple variations of prompts were used per archetype to encourage diverse story structures and settings. AI-generated outputs were manually reviewed to ensure linguistic coherence, structural consistency, and adherence to the expected archetypal pattern.

- After the initial AI-generated texts were assessed for coherence, narrative structure, and relevance to the target archetype, texts that did not meet these criteria were removed to form the final dataset.

(2) Human-written reference corpus

For comparison, this study uses a human-authored reference corpus, selected to represent archetypal storytelling across classical and contemporary literature. The corpus consists of text samples that match the AI dataset in size and archetypal representation.

The following corpus selection criteria were used:

- Works were chosen based on clear alignment with Jungian archetypal structures, ensuring a representative dataset.

- Texts span diverse cultural and historical sources, including mythological, literary, and modern storytelling traditions. The human corpus sources were classic literary and mythological texts (e.g., The Odyssey, Beowulf, Faust, The Epic of Gilgamesh), modern fiction and film scripts (e.g., the Hero’s Journey in Star Wars, The Dark Knight, and The Matrix), and psychological and narrative theory texts discussing archetypes in storytelling (e.g., Joseph Campbell’s The Hero with a Thousand Faces).

(3) Ensuring comparative consistency

To facilitate a direct comparison between AI- and human-generated texts, the following standardization measures were applied:

- Word count normalization, for which texts were matched in length (~500–1000 words per narrative);

- Genre consistency, for which stories were categorized by literary genre, tone, and structure to avoid confounding variables;

- Controlled thematic prompts, for which AI-generated stories were crafted using prompts designed to reflect the essence of the archetypes found in human literature.

(4) LLM generation parameters and rationale

To ensure reproducibility, the following parameters were used for all LLM-generated narratives in this study:

- Temperature (0.7): This moderate value encourages creative variation in language and structure while avoiding incoherent or overly random outputs that can result from higher temperatures (>0.9).

- Top_p (0.9): Used in nucleus sampling, this setting restricts each sampling step to the smallest set of most probable next-token candidates whose cumulative probability reaches 0.9, maintaining diversity in output without drifting too far from logical or contextually relevant continuations.

- Frequency_penalty (0.0): No penalty is applied to repeated words or phrases, as repetition is sometimes necessary for rhetorical emphasis or structural cohesion in narrative storytelling.

- Presence_penalty (0.6): This encourages the model to introduce new topics and elements by gently discouraging the reuse of tokens that have already appeared, which helps generate more dynamic and varied narrative content.

- Max_tokens (1024): This length constraint ensures that each generated story is substantive enough (roughly 700–800 words) to contain narrative development, character evolution, and thematic expression, while keeping outputs manageable for analysis.

- Stop sequences (none applied): No stop sequences were used, allowing natural narrative closure without artificial truncation and giving the model freedom to complete stories in a more human-like way.

These values reflect a balance between creative freedom and narrative coherence, and they were chosen based on preliminary tests and common practices in text generation for storytelling tasks.
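To make the roles of the temperature and top_p settings concrete, the following is a minimal sketch of temperature scaling followed by nucleus sampling over a toy next-token distribution. It is an illustration only and not the sampling code of either model's API: the function names and the toy logits are hypothetical, and the frequency and presence penalties are not modeled.

```python
import math
import random


def softmax_with_temperature(logits, temperature=0.7):
    """Turn {token: logit} into {token: probability} after temperature scaling."""
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def nucleus(probs, top_p=0.9):
    """Smallest set of most probable tokens whose cumulative mass reaches top_p."""
    kept, mass = [], 0.0
    for tok, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept.append(tok)
        mass += p
        if mass >= top_p:
            break
    return kept


def sample_next_token(logits, temperature=0.7, top_p=0.9, rng=None):
    """Draw one token from the nucleus, weighted by its probability."""
    rng = rng or random.Random()
    probs = softmax_with_temperature(logits, temperature)
    candidates = nucleus(probs, top_p)
    weights = [probs[tok] for tok in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


# Hypothetical logits for four candidate next tokens (illustrative values only).
toy_logits = {"sword": 2.0, "river": 1.0, "shadow": 0.0, "the": -5.0}
print(nucleus(softmax_with_temperature(toy_logits)))
```

Lowering the temperature concentrates probability on the top candidates, so fewer tokens are needed to reach the top_p threshold; raising it spreads the mass and widens the nucleus.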

2.3. Experimental Design for Archetypal Pattern Identification in AI-Generated Narratives

To identify and interpret archetypal patterns, symbols, and themes in text generated by LLMs, researchers can apply content analysis methods informed by Jungian psychology.

This section presents an example of the experimental design using the Hero and Wise Old Man archetypes to illustrate the structured approach for identifying archetypal patterns in AI-generated narratives. A similar methodology was applied to analyze other archetypes in the study.

This study employs the following experiment design:

Step 1. Generating text samples using an LLM (Claude Opus):

- Select the archetypes: the Hero and the Wise Old Man.

- Prompt the LLM with a theme or topic that is likely to evoke archetypal content, such as “the hero’s journey” or “the wise old man storytelling”.

- Generate multiple text samples of varying lengths and styles.

Step 2. Analyzing the generated text for archetypal patterns and symbols: semantic and contextual analysis of the generated texts, with instances of archetypal content highlighted and annotated.

2.4. Framework for Validation of Expert Evaluation and Computational Methods

The numerical results were derived by analyzing AI-generated narratives using two different approaches:

- Computational NLP-based analysis: Cosine similarity, sentiment analysis, Term Frequency-Inverse Document Frequency (TF-IDF) feature weighting, and Latent Dirichlet Allocation (LDA) topic modeling were used to evaluate AI narratives in terms of structural similarity, sentiment polarity, lexical variance, and thematic coherence, respectively.

- Expert human evaluation: A panel of 15 experts assessed AI-generated narratives on a 10-point scale, scoring them based on narrative coherence, emotional depth, character development, thematic complexity, and creativity/originality.

2.4.1. Computational NLP-Based Analysis

(1) Cosine Similarity Analysis.

Cosine similarity [11] is used to measure the textual similarity between AI-generated and human-authored narratives within the same archetypal category. The goal is to determine how closely AI-generated texts align with human storytelling structures.

In the process of analysis, each text is converted into a vector representation using TF-IDF as follows:

$$\mathbf{v}_d = (w_{1,d}, w_{2,d}, \ldots, w_{n,d})$$

where $w_{t,d}$ represents the TF-IDF weight of term $t$ in document $d$.

Cosine similarity measures the structural similarity between AI-generated text and human-authored stories by computing the cosine of the angle between two high-dimensional text embeddings, as follows:

$$\cos(\theta) = \frac{A \cdot B}{\|A\| \, \|B\|}$$

where $A$ and $B$ are vectorized representations of AI- and human-written narratives using TF-IDF or word embeddings, and $\|A\|$ and $\|B\|$ are the Euclidean norms of these vectors:

$$\|A\| = \sqrt{\sum_{i=1}^{n} A_i^2}, \qquad \|B\| = \sqrt{\sum_{i=1}^{n} B_i^2}$$

High cosine similarity (≈0.80–1.00) indicates that AI-generated narratives closely mirror human-authored storytelling structures. Low cosine similarity (≈0.40–0.50) indicates that AI-generated narratives diverge significantly from human-authored storytelling.
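The cosine similarity computation described in this subsection can be sketched as follows. This is a minimal illustration that assumes each narrative has already been reduced to a sparse mapping from terms to weights; the function name and the example vectors are hypothetical and do not reproduce the study's pipeline.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse vectors given as {term: weight} dicts."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(weight * weight for weight in a.values()))
    norm_b = math.sqrt(sum(weight * weight for weight in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here: an empty vector is similar to nothing
    return dot / (norm_a * norm_b)


# Hypothetical term weights for one AI-generated and one human-authored text.
ai_text = {"hero": 3.0, "quest": 4.0}
human_text = {"hero": 3.0}
print(round(cosine_similarity(ai_text, human_text), 2))
```

Because the measure depends only on the angle between vectors, two texts of very different lengths can still score close to 1.0 when their term weights are proportional.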

(2) Sentiment Analysis.

Sentiment analysis [12] is applied to determine the emotional tone of AI-generated and human-authored texts. This helps identify potential biases in AI storytelling, such as a preference for positive sentiment or a reduced capacity to express moral ambiguity and deep conflict.

Sentiment analysis was used to evaluate the emotional polarity of AI-generated texts using the compound score from the VADER (Valence Aware Dictionary and Sentiment Reasoner) model, as follows:

$$S = \sum_{i=1}^{n} w_i \, s_i$$

where $w_i$ is the word weight (importance based on sentiment lexicon), and $s_i$ is the sentiment value of each word (positive, neutral, or negative).

If AI-generated narratives score consistently higher than human-authored texts, this indicates that they tend to be more optimistic and resolution-driven; if their scores span a narrower range, this indicates that they fail to capture deep emotional conflicts as effectively as human-authored texts.
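As a rough illustration of how a lexicon-based compound score behaves, the sketch below sums word valences from a tiny lexicon and squashes the total into the open interval (-1, 1) with the normalization x / sqrt(x² + 15) used by the VADER reference implementation. The lexicon entries and their values are hypothetical, and the negation, intensifier, and punctuation heuristics of the real tool are omitted, so this is not a substitute for VADER itself.

```python
import math

# Hypothetical valence values for illustration; not taken from the VADER lexicon.
TOY_LEXICON = {"triumph": 2.5, "hope": 1.9, "betrayal": -2.6, "doubt": -1.3}


def compound_score(tokens, lexicon=TOY_LEXICON, alpha=15.0):
    """Sum word valences, then normalize the total into the interval (-1, 1)."""
    raw = sum(lexicon.get(token.lower(), 0.0) for token in tokens)
    return raw / math.sqrt(raw * raw + alpha)


hopeful = compound_score("the hero returned in triumph and hope".split())
conflicted = compound_score("betrayal bred doubt".split())
print(hopeful > 0, conflicted < 0)
```

A corpus whose stories all land near the positive end of this scale would show exactly the narrow, optimistic range that the comparison with human-authored texts is meant to detect.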

(3) TF-IDF Feature Weighting.

Term Frequency-Inverse Document Frequency (TF-IDF) analysis [13] is used to compare word importance between AI-generated and human-authored narratives. The goal is to identify which words AI emphasizes or underrepresents compared to human writers.

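The TF-IDF score evaluates the importance of specific words in AI-generated stories compared to human-written ones, shown as follows:

$$\text{TF-IDF}(t, d) = \text{TF}(t, d) \times \text{IDF}(t)$$

where $\text{TF}(t, d)$ is the term frequency of word $t$ in document $d$, and $\text{IDF}(t)$ is the inverse document frequency, measuring how unique the word is across all documents $D$:

$$\text{IDF}(t) = \log \frac{|D|}{|\{d \in D : t \in d\}|}$$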

AI underweights key psychological and conflict-driven words (e.g., “struggle”, “doubt”, “betrayal”), preferring resolution-oriented storytelling, and at the same time, AI overuses common archetypal descriptors (e.g., “hero”, “wisdom”, “journey”), leading to repetitive storytelling patterns.
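The weighting scheme can be sketched in a few lines, assuming pre-tokenized documents, relative term frequency, and an unsmoothed logarithmic inverse document frequency. Library implementations often add smoothing and normalization, so the numbers below would differ from theirs; the function name and the toy documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per tokenized document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears at least once.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical two-document corpus for illustration.
toy_docs = [["hero", "journey", "hero"], ["hero", "doubt"]]
for doc_weights in tf_idf(toy_docs):
    print(doc_weights)
```

In this toy corpus "hero" appears in every document, so its weight is zero regardless of how often it is repeated, which is the property that lets the measure separate generic archetypal descriptors from terms that distinguish one group of texts from another.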

(4) LDA Topic Modeling.

Latent Dirichlet Allocation (LDA) [14] is used to identify dominant storytelling themes in AI and human narratives. This helps assess whether AI-generated texts rely on recurring archetypal motifs without introducing sufficient thematic variation.

LDA was used to determine the distribution of thematic topics in AI-generated narratives. The probability of a word given a topic is shown as follows:

$$P(w \mid z) = \frac{n_{w,z} + \beta}{\sum_{w'} n_{w',z} + V\beta}$$

where $n_{w,z}$ is the count of occurrences of word $w$ assigned to topic $z$, $\beta$ is a smoothing hyperparameter, $V$ is the vocabulary size, $w$ is the specific word whose probability of belonging to topic $z$ is being computed, and $w'$ ranges over all words in the vocabulary that can belong to topic $z$. Thus, $w'$ is used in the denominator to normalize the probability by summing over all words in the vocabulary. This ensures that the probability distribution of words over topics remains valid.

The topic distribution for document $d$ is shown as follows:

$$P(z \mid d) = \frac{n_{d,z} + \alpha}{\sum_{z'} n_{d,z'} + K\alpha}$$

where $n_{d,z}$ is the count of words in document $d$ assigned to topic $z$, $\alpha$ is a smoothing parameter, $K$ is the number of topics, $z$ is the specific topic whose probability of being assigned to document $d$ is being computed, and $z'$ ranges over all topics in the topic set. Thus, $z'$ is used in the denominator to normalize the probability by summing over all topics. This ensures that the probability distribution of topics over documents remains valid.
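The two smoothed estimates described in this subsection can be sketched directly from assignment counts. The sketch assumes the topic assignments have already been produced by some inference procedure (for example, Gibbs sampling), which is not shown; the function names and the toy counts are hypothetical.

```python
def word_given_topic(topic_word_counts, word, beta, vocab_size):
    """Smoothed P(word | topic) from {word: count} assignments for a single topic."""
    total = sum(topic_word_counts.values())
    return (topic_word_counts.get(word, 0) + beta) / (total + vocab_size * beta)


def topic_given_doc(doc_topic_counts, topic, alpha, n_topics):
    """Smoothed P(topic | document) from {topic: count} assignments for one document."""
    total = sum(doc_topic_counts.values())
    return (doc_topic_counts.get(topic, 0) + alpha) / (total + n_topics * alpha)


# Hypothetical counts: one topic over a four-word vocabulary, one document over two topics.
hero_topic = {"quest": 3, "sword": 1}
vocabulary = ["quest", "sword", "doubt", "mask"]
story_topics = {0: 8, 1: 2}

word_probs = [word_given_topic(hero_topic, w, beta=0.5, vocab_size=4) for w in vocabulary]
topic_probs = [topic_given_doc(story_topics, z, alpha=0.1, n_topics=2) for z in range(2)]
print(sum(word_probs), sum(topic_probs))
```

The smoothing terms give unseen words and topics a small nonzero probability, and the vocabulary-size and topic-count factors in the denominators are what keep each distribution summing to one.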

To assess the interpretability and reliability of topic modeling results, we evaluated topic coherence using the UMass coherence score, which measures the semantic similarity between high-probability words within a topic. The average coherence score across all archetype-specific models was 0.43, indicating a moderate topic consistency suitable for narrative-level analysis.

To further demonstrate LDA interpretability, Table 1 presents sample topics extracted from AI-generated texts for each Jungian archetype, showing the top five keywords associated with each topic.

Table 1.

Sample topics extracted from AI-generated texts.

These topics align well with archetypal themes described in Jungian theory, supporting both the coherence and interpretability of LDA outputs. While topic models cannot fully capture symbolic or psychological nuance, they offer useful thematic indicators when triangulated with expert assessments.

AI-generated texts rely on frequent archetypal motifs, lacking thematic diversity found in human-authored storytelling. Human narratives incorporate a wider range of thematic elements, contributing to richer storytelling experiences.

Table 2 summarizes the objectives and key findings of the above-mentioned methods.

Table 2.

Summary of computational methods.

These methods provide a quantitative, data-driven approach to evaluating AI’s ability to replicate Jungian archetypal storytelling structures, highlighting its strengths and limitations in human-like narrative generation.

2.4.2. Expert Evaluation

To complement computational analysis, 15 experts from literature, psychology, creative writing, and AI were recruited to evaluate AI-generated narratives:

- Literary experts (5 participants)—scholars and researchers in literature, comparative mythology, and storytelling structures.

- Creative writing professionals (4 participants)—novelists, scriptwriters, and game writers specializing in character development and thematic complexity.

- Psychologists and cognitive scientists (3 participants)—experts in cognitive psychology, Jungian analysis, and human emotional response to narratives.

- AI and computational linguistics researchers (3 participants)—specialists in natural language processing (NLP) and AI-generated text evaluation.

Each expert independently reviewed stories representing six Jungian archetypes (Hero, Shadow, Trickster, Wise Old Man, Everyman, Anima/Animus), rating them across the following five criteria: coherence, emotional depth, character development, thematic complexity, and originality (1–10 scale).

Evaluations were conducted blind, ensuring impartiality. Experts assessed stories generated by GPT-4 and Claude Opus under identical prompts and conditions. Their ratings were compared with computational metrics (cosine similarity, sentiment, TF-IDF, and LDA) to identify alignment and discrepancies.
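One simple way to quantify agreement between expert ratings and a computational metric is a correlation coefficient computed across archetypes. The sketch below uses Pearson's r as an assumption; the text does not state which agreement statistic was used, and the input lists are hypothetical placeholders rather than study data.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least two values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0.0 or var_y == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-archetype values: a computational score and a mean expert rating.
metric_scores = [0.2, 0.4, 0.6, 0.8]
expert_means = [3.0, 5.0, 6.0, 9.0]
print(pearson(metric_scores, expert_means) > 0.9)
```

A coefficient near 1 on one criterion and a markedly lower one on another would be one way to express the alignment and discrepancies that the comparison is intended to surface.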

This interdisciplinary, human-centered approach validated AI storytelling quality and revealed strengths (e.g., coherence) and weaknesses (e.g., emotional nuance), offering a balanced view of AI’s ability to replicate archetypal patterns.

2.5. Validation and Bias Considerations in AI-Generated Archetypal Analysis

The reliability of AI-generated storytelling depends not only on NLP-based evaluations but also on addressing biases in LLMs. These models, trained on vast text corpora, often reflect cultural, gender, and narrative biases, favoring Western traditions and conventional archetypes (e.g., male Heroes, male Wise Old Men). Such bias can limit narrative diversity and symbolic depth, especially in Jungian archetype representation.

While computational methods provide quantitative assessments of AI-generated storytelling, they come with the following inherent limitations in evaluating abstract literary qualities:

- Cosine similarity effectively measures the structural resemblance between AI-generated texts and human-written stories, but it fails to assess deeper narrative meaning, symbolic richness, or character transformation.

- Sentiment analysis can capture basic emotional polarity, yet it struggles with subtle psychological depth, irony, or the multi-layered emotions found in archetypal storytelling.

- TF-IDF feature weighting is useful for identifying key lexical patterns, but it lacks the contextual sensitivity needed to recognize metaphorical language or thematic continuity.

- LDA topic modeling effectively detects dominant thematic elements in AI-generated narratives but fails to account for implicit meanings, archetypal symbolism, or narrative subtext.

Despite their limitations, cosine similarity, sentiment analysis, TF-IDF, and LDA topic modeling were chosen for this study due to the following specific contributions to archetypal pattern detection:

- Cosine similarity was used to assess whether AI-generated narratives maintain structural coherence and align with archetypal storytelling conventions found in human-written texts.

- Sentiment analysis was applied to quantify emotional expression, offering insights into how AI replicates archetypal emotional dynamics, such as the Hero’s internal struggles or the Wise Old Man’s guidance.

- TF-IDF feature weighting helped identify unique lexical markers associated with different archetypes, allowing researchers to distinguish linguistic variations in AI-generated narratives.

- LDA topic modeling was employed to analyze the thematic structure of AI-generated texts, evaluating whether AI correctly represents Jungian archetypes through dominant thematic elements.

The computational methods used offer structural, emotional, lexical, and thematic insights across archetypes but fall short in capturing metaphor, psychological nuance, and symbolic complexity. Hence, this study combines these tools with expert evaluation, so that their integration with human interpretation provides a more holistic and balanced validation.

This validation framework ensures that AI-generated storytelling is not only quantitatively measured but also qualitatively validated, enhancing the understanding of AI’s role in archetypal narrative replication while addressing ethical and methodological considerations in computational creativity.

3. Results and Discussion

3.1. Overview of Experimental Approach and Key Findings

The experimental evaluation of AI-generated archetypal narratives employed a multifaceted approach combining computational analysis with expert human assessment. This dual methodology provided complementary perspectives on how effectively LLMs reproduce Jungian archetypes in storytelling contexts. The experiment utilized two state-of-the-art LLMs (GPT-4 and Claude) to generate narratives based on six key Jungian archetypes: Hero, Wise Old Man, Shadow, Trickster, Everyman, and Anima/Animus.

The experimental design incorporated four primary computational metrics to assess the structural, emotional, lexical, and thematic properties of AI-generated texts. Cosine similarity measurements quantified the textual alignment between AI and human-authored narratives within the same archetypal categories. Sentiment analysis evaluated emotional polarity, revealing AI’s tendencies toward positive or negative expression compared to human writers. TF-IDF feature weighting identified key differences in word choice and emphasis, highlighting linguistic patterns unique to AI-generated storytelling. Finally, LDA topic modeling assessed thematic consistency, measuring how closely AI-generated texts adhered to expected archetypal themes.

In parallel, a diverse panel of experts evaluated the same narratives across five dimensions: narrative coherence, emotional depth, character development, thematic complexity, and creativity/originality. Each text received scores on a 10-point scale, providing quantitative data for comparison with computational metrics while capturing qualitative insights impossible to derive from algorithmic analysis alone.

The results revealed the following key patterns across both evaluation approaches:

- AI demonstrated significant variance in performance across different archetypes. Structured, goal-oriented archetypes (Hero and Wise Old Man) consistently received higher scores in both computational and expert evaluations, with cosine similarity scores of 0.81 and 0.74, respectively. In contrast, psychologically complex archetypes (Shadow and Trickster) showed lower performance, with cosine similarity scores of 0.62 and 0.47, indicating AI’s difficulty with narratives involving ambiguity, moral complexity, and unpredictability.

- A clear divergence emerged between AI’s technical proficiency and its emotional/creative capabilities. Both computational and expert evaluations confirmed that AI-generated narratives maintain strong structural coherence and thematic alignment but exhibit reduced emotional range and creative originality. This pattern was particularly evident in sentiment analysis, where AI consistently demonstrated lower emotional variance than human-authored texts, especially in representing negative emotional states.

- Computational and expert evaluations showed strong agreement in assessing narrative structure and thematic alignment but diverged significantly in evaluating emotional depth and creativity. This suggests that current computational metrics effectively capture technical aspects of storytelling but struggle to quantify the more subjective, psychological dimensions that human experts readily identify.

The following sections present detailed results from both computational and expert evaluations, exploring specific strengths and limitations of AI-generated archetypal narratives through the quantitative analysis and visual representation of key findings.

3.2. Comparative Analysis of Computational and Expert Evaluations for AI-Generated Jungian Archetypal Narratives

The evaluation of AI-generated narratives based on Jungian archetypes (Trickster, Shadow, Hero, Wise Old Man, Everyman, and Anima/Animus) was conducted using the following two complementary approaches:

- Computational NLP-based methods, which provided objective measurements of textual structure, sentiment, and thematic consistency.

- Expert human evaluations, where literary analysts, psychologists, and AI researchers assessed the depth and quality of AI-generated storytelling.

This comparative analysis examines the alignment and discrepancies between computational scores and expert ratings, offering insights into AI’s strengths and limitations in replicating archetypal storytelling.

3.2.1. Comparative Evaluation Across Archetypes

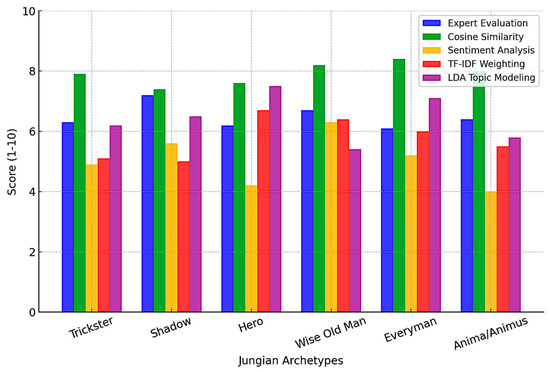

The results indicate notable consistencies and differences between computational methods and expert assessments. As seen in Figure 1, expert scores were generally higher for archetypes where AI successfully replicated classical storytelling structures (e.g., Wise Old Man and Everyman), while computational models struggled to capture psychological depth and creativity, leading to lower scores for Trickster and Shadow.

Figure 1.

Comparative evaluation of AI-generated archetypal narratives.

The scores shown in Figure 1 represent topic probability distributions, specifically the average proportion of each dominant topic within the AI-generated narratives for each archetype. These scores are derived from the LDA model and reflect how strongly a given topic is expressed across the set of texts. Higher values indicate that the corresponding topic is more prominently represented in the narratives associated with that archetype.

This comprehensive breakdown allows for a direct comparison between human perception and the algorithmic assessment of AI’s storytelling capabilities. Notable patterns emerge in how certain archetypes demonstrate stronger alignment between expert judgment and computational metrics, while others reveal significant divergence. These variations highlight the strengths and limitations of AI in generating different archetypal narratives, particularly with regard to structural coherence versus emotional and psychological depth (Table 3).

Table 3.

Comparative analysis of expert ratings and computational scores across Jungian archetypes.

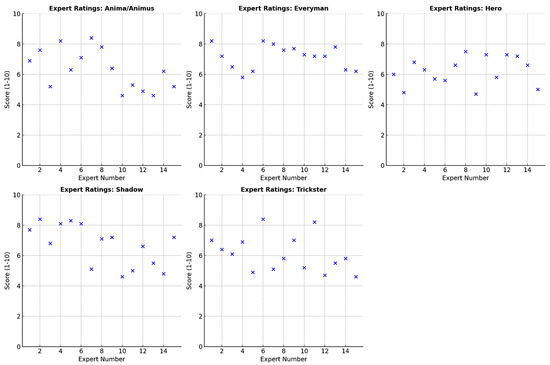

This variation in AI performance across archetypes is further reflected in the expert evaluations, which reveal notable differences in how individual experts assessed each archetype. Figure 2 illustrates this diversity in expert ratings, highlighting the heterogeneity in their perceptions of AI-generated narratives.

Figure 2. Comparing the expert scores across archetypes.

Across the archetypes and five evaluation dimensions, the average standard deviation of expert scores was 1.12, indicating moderate dispersion around the mean. This level of variance suggests interpretive diversity rather than inconsistency, reflecting differences in disciplinary perspectives (e.g., literary, psychological, technical) when assessing symbolic and emotional dimensions of AI-generated texts.
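The dispersion statistic above can be reproduced along the following lines. This is a sketch under stated assumptions: the text does not say whether the sample or population standard deviation was used (the sample form is assumed here), and the ratings below are hypothetical placeholders rather than the study's data.

```python
from statistics import mean, stdev


def average_rating_sd(ratings):
    """Mean of the per-cell standard deviations of expert scores.

    ratings maps (archetype, dimension) to the list of scores given by
    the individual experts for that cell.
    """
    return mean([stdev(scores) for scores in ratings.values()])


# Hypothetical expert scores (three experts per cell), for illustration only.
ratings = {
    ("Hero", "narrative coherence"): [8, 9, 7],
    ("Hero", "emotional depth"): [6, 5, 7],
    ("Trickster", "narrative coherence"): [5, 7, 6],
    ("Trickster", "emotional depth"): [3, 5, 4],
}

avg_sd = average_rating_sd(ratings)
print(f"average SD across cells: {avg_sd:.2f}")
```

Averaging per-cell deviations, rather than pooling all scores, keeps differences between archetypes from inflating the measure of disagreement between experts.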

Overall, Figure 2 emphasizes that expert evaluations are not uniform, reinforcing the need for both quantitative computational analysis and qualitative human judgment in assessing AI-generated storytelling. The figure underscores the challenge AI faces in achieving emotional and thematic complexity while confirming its strengths in structured narrative generation.

3.2.2. Implications for AI-Generated Storytelling

These results have several important implications for AI’s role in archetypal storytelling:

- AI-generated narratives are structurally sound but emotionally and creatively limited. NLP-based metrics confirm strong alignment with human storytelling structures, but experts highlight a lack of deeper psychological engagement.

- Certain archetypes (Wise Old Man, Everyman) are easier for AI to replicate, while others (Trickster, Shadow) require deeper narrative modeling. AI can handle rational, structured storytelling, but humorous and emotionally complex narratives remain challenging.

- Future AI storytelling models should integrate hybrid evaluation frameworks, combining NLP techniques with cognitive emotion modeling and interactive human feedback.

The comparison between computational analysis and expert evaluation highlights both the strengths and weaknesses of AI-generated archetypal storytelling. While AI successfully mimics structural and thematic elements, it struggles with emotional authenticity, deep character arcs, and non-linear storytelling approaches.

To improve AI storytelling, future developments should focus on:

- Enhancing AI’s ability to model psychological complexity in character development.

- Incorporating real-time human feedback to refine AI-generated stories dynamically.

- Developing cognitive emotion models that improve AI’s capacity for nuanced storytelling.

This study underscores the importance of hybrid AI–human storytelling frameworks, where AI provides narrative structure while human creativity ensures emotional and thematic depth.

3.2.3. Analysis of Computational and Expert Evaluations for AI Storytelling

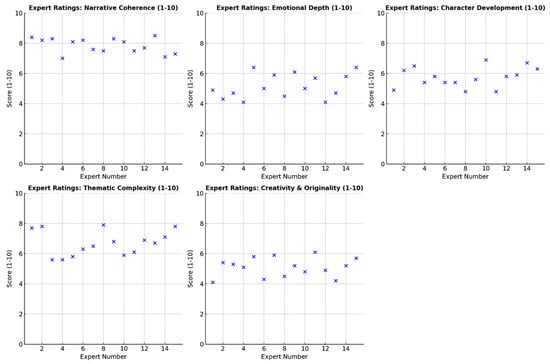

Figure 3 presents the expert evaluation scores across key storytelling dimensions for AI-generated narratives.

Figure 3. Experts’ ratings of storytelling dimensions.

The distribution of expert ratings highlights several key insights. High scores for narrative coherence indicate that AI-generated texts maintain structural integrity and logical flow. This aligns with the computational analysis results, where cosine similarity scores also confirmed AI’s ability to produce well-structured narratives.

Lower scores for emotional depth and character development suggest that, while AI effectively constructs coherent stories, it struggles with conveying deep psychological complexity and dynamic character evolution. Experts noted that AI-generated narratives often lacked subtle emotional cues, internal conflicts, and multi-dimensional character arcs, particularly for archetypes like Shadow and Trickster.

Moderate ratings for thematic complexity and creativity/originality highlight AI’s ability to replicate common narrative themes but its tendency to rely on repetitive patterns. Experts observed that AI frequently reused familiar archetypal motifs without introducing innovative or unexpected narrative elements.

The variability in expert scores across different dimensions suggests that, while some AI-generated narratives demonstrated promising storytelling elements, others lacked the nuance and adaptability necessary for compelling, human-like storytelling.

The assessment of AI-generated storytelling quality requires a multifaceted approach that integrates quantitative computational methods with qualitative human expertise. This study evaluates AI-generated narratives using NLP techniques, comparing their results against expert assessments. The goal is to determine how well AI-generated narratives align with human expectations regarding storytelling coherence, emotional depth, character development, thematic richness, and originality.

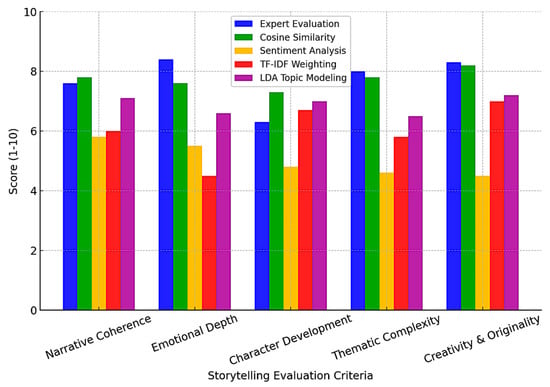

As illustrated in Figure 4, computational methods generally rate AI-generated stories higher in structural aspects, such as coherence and thematic alignment, while expert reviewers highlight deficiencies in emotional realism, character depth, and originality.

Figure 4. Comparative analysis of expert and computational evaluations.

AI performs strongly on narrative coherence: cosine similarity scores (6.5–8.5) align closely with expert ratings (5.5–8.5). Similarly, LDA topic modeling scores (5.0–7.5) correlate with expert evaluations of thematic complexity, suggesting that AI can successfully replicate archetypal themes and structured storytelling patterns. However, sentiment analysis and TF-IDF scores are considerably lower, reflecting AI’s difficulty in capturing emotional complexity and lexical originality.
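One way to quantify the alignment between computational scores and expert ratings is a Pearson correlation over per-archetype values. The sketch below is illustrative only: the text does not state which correlation measure was used, and the two score lists are placeholder values chosen inside the reported ranges, not the study's data.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Placeholder per-archetype scores on a shared 0-10 scale (hypothetical).
computational = [8.5, 8.0, 7.0, 6.5]
expert = [8.5, 7.5, 6.5, 5.5]

r = pearson(computational, expert)
print(f"Pearson r = {r:.3f}")
```

A value of r near 1 would indicate that archetypes ranked highly by the computational metric are also ranked highly by the experts, even when the absolute scores differ.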

The comparative evaluation highlights that, while AI-generated narratives are structurally sound, they remain emotionally simplistic and creatively constrained. Computational methods effectively assess surface-level linguistic structure and thematic similarity, but human expert evaluation remains essential for capturing narrative engagement, emotional depth, and originality. Future improvements in AI storytelling models should focus on integrating cognitive emotion modeling, refining sentiment analysis to detect more subtle emotional shifts, and developing adaptive AI storytelling systems that learn from human feedback to introduce more creative variations.

This study demonstrates that, while AI can efficiently replicate archetypal storytelling structures, it lacks the emotional authenticity and creative adaptability of human-authored narratives. The findings suggest that future advancements should emphasize hybrid AI–human collaboration, where AI serves as a structural guide, while human writers contribute the creativity, emotion, and complexity that define high-quality storytelling.

3.3. Comparative Analysis of AI-Based Texts and Human Texts

To comprehensively evaluate the similarity and divergence between AI-generated and human-authored storytelling, several data visualizations were generated. These figures provide insights into AI’s ability to replicate Jungian archetypal structures, emotional depth, thematic variation, and lexical patterns. Below, we describe and interpret each visualization.

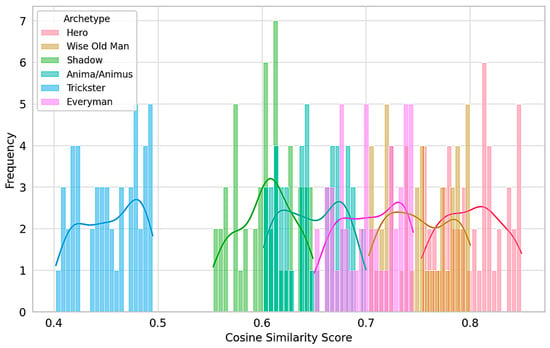

Figure 5 illustrates the distribution of cosine similarity scores between AI-generated and human-authored texts across six Jungian archetypes. Cosine similarity was computed by converting each narrative into TF-IDF vectors and measuring the angle between AI- and human-generated story vectors within the same archetypal category. A score closer to 1 indicates greater structural similarity. For each archetype, multiple AI-generated texts were compared against their corresponding human-written counterparts, and the resulting similarity scores were aggregated to produce the distribution shown.
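The computation described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the study's pipeline: whitespace tokenisation, a smoothed IDF, and the three short example texts are all hypothetical choices made for the sketch.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Build sparse TF-IDF vectors (dicts) for a list of tokenised documents."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    # Smoothed IDF: terms that occur in every document keep a non-zero weight.
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({t: (c / len(doc)) * idf[t] for t, c in counts.items()})
    return vectors


def cosine_similarity(u, v):
    """Cosine of the angle between two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


# Hypothetical texts: an AI story, a human story of the same archetype,
# and a human story of a different archetype.
ai_text = "the hero begins a journey and finds wisdom".split()
human_text = "the hero begins a journey and pays with sacrifice".split()
other_text = "the trickster mocks the king".split()

vecs = tfidf_vectors([ai_text, human_text, other_text])
sim_close = cosine_similarity(vecs[0], vecs[1])
sim_far = cosine_similarity(vecs[0], vecs[2])
print(f"same archetype: {sim_close:.2f}, different archetype: {sim_far:.2f}")
```

Because TF-IDF weights are non-negative, the scores fall between 0 and 1, which matches the interpretation that values closer to 1 indicate greater similarity.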

Figure 5. Cosine similarity distribution between AI and human texts.

The distribution indicates that “Trickster” narratives show the lowest similarity scores and widest spread, reflecting substantial structural divergence from human-authored examples. In contrast, “Shadow” falls closer to the midpoint of a continuum, along with “Anima/Animus”, “Everyman”, “Wise Old Man”, and “Hero”.

This continuum suggests that AI-generated stories are generally more structurally aligned with human narratives for archetypes that follow traditional or linear development patterns (e.g., Hero, Wise Old Man), while performance varies more significantly in archetypes requiring psychological ambiguity, unpredictability, or subversion—traits most prominent in the Trickster archetype.

AI’s main challenge lies not broadly with emotionally complex archetypes, but specifically with those that defy conventional structure and narrative coherence. The Shadow, though psychologically deep, often follows structured internal conflict arcs that AI can replicate with moderate success. The Trickster, by contrast, demands narrative non-linearity, irony, and chaos, which are elements that current LLMs struggle to generate meaningfully.

Future research should further explore the structural and semantic dimensions of archetypes like Trickster, where deviations from coherence are a defining feature rather than a flaw, and develop targeted prompting or training approaches to enhance AI’s handling of such narrative forms.

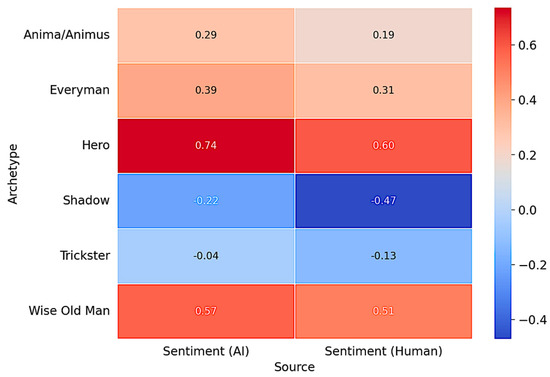

Figure 6 presents the sentiment polarity ranges for AI-generated and human-authored narratives across the six archetypes. While the overall emotional range is only slightly narrower for AI (0.96) compared to human texts (1.07), the key difference lies in the distribution. AI tends to emphasize positive sentiment, particularly in archetypes like the Hero (+0.74) and Wise Old Man (+0.56), showing a stronger inclination toward resolution-driven narratives. In contrast, it struggles to replicate deeper negative emotional tones found in human-authored stories, especially for Shadow (−0.22 AI vs. −0.47 human) and Trickster (−0.02 AI vs. −0.12 human). This suggests that, while AI narratives approximate overall sentiment range, they lack the emotional complexity and depth commonly associated with darker or morally ambiguous archetypes.
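The arithmetic behind these figures can be checked with a short sketch. It uses only the polarity values quoted above; it assumes that the two archetypes not quoted (Anima/Animus and Everyman) lie between the AI extremes, which is what the reported AI range of 0.96 implies. The human range of 1.07 cannot be recomputed here because the human positive extreme is not quoted.

```python
# Mean sentiment polarity values quoted in the text.
ai_polarity = {
    "Hero": 0.74,
    "Wise Old Man": 0.56,
    "Trickster": -0.02,
    "Shadow": -0.22,
}
human_polarity = {"Trickster": -0.12, "Shadow": -0.47}


def polarity_range(scores):
    """Distance between the most positive and most negative mean polarity."""
    return max(scores.values()) - min(scores.values())


ai_range = round(polarity_range(ai_polarity), 2)

# How much less negative the AI texts are than the human texts, per archetype.
negativity_gap = {
    name: round(ai_polarity[name] - human_polarity[name], 2)
    for name in human_polarity
}

print(ai_range)
print(negativity_gap)
```

The gap is larger for Shadow than for Trickster, which is consistent with the observation that the AI texts fall furthest short on the darkest archetype.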

Figure 6. Sentiment polarity heatmap (AI vs. human).

Figure 7 shows two word clouds comparing the most important words in AI-generated and human-authored texts, based on TF-IDF weight differences.

Figure 7. Word clouds comparing the most important words: (a) in AI-generated texts; (b) in human-authored texts.

AI-generated texts favor generalized terms like “hero”, “wisdom”, “journey”, and “destiny”, indicating formulaic storytelling. Human-authored texts incorporate more psychological depth, using terms like “sacrifice”, “transformation”, “self-destruction”, “guilt”, and “manipulation”. AI underweights conflict-related words, particularly in Shadow and Trickster archetypes.

AI-generated texts lack the depth of human storytelling, relying on stereotypical archetypal descriptors rather than nuanced emotional and psychological themes.

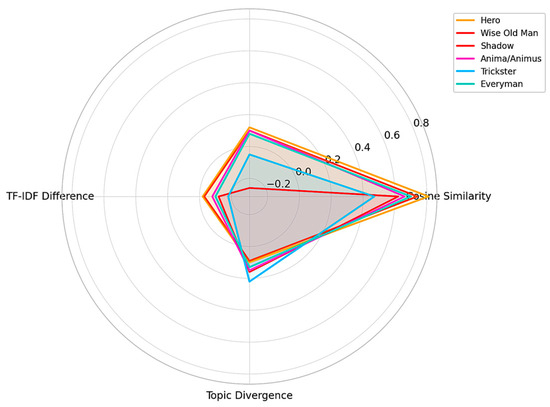

Figure 8 displays four key similarity measures (cosine similarity, sentiment difference, TF-IDF weighting, and topic divergence) for each archetype.

Figure 8. Radar chart for archetypal similarity (AI vs. human).

Hero and Wise Old Man have the highest similarity, indicating AI’s strong replication of structured, mentor-guided narratives. Shadow and Trickster archetypes show the largest divergence, suggesting that AI struggles with psychological complexity, irony, and ambiguity. AI exhibits lower sentiment variation and lexical depth, leading to more predictable storytelling.

AI effectively captures structured storytelling but lacks depth in psychological and morally ambiguous narratives.

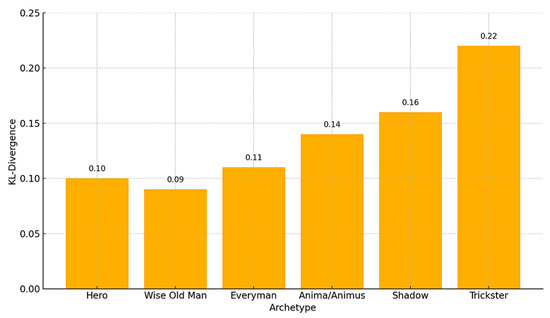

Figure 9 represents thematic differences (KL-Divergence) between AI- and human-authored narratives, with data labels for better readability.

Figure 9. Topic divergence (KL-Divergence) between AI and human texts.

Trickster (0.22) and Shadow (0.16) archetypes have the highest divergence, confirming AI’s difficulty with nonlinear, unpredictable, and introspective storytelling.

Hero (0.10) and Wise Old Man (0.09) have the lowest divergence, showing that AI closely follows traditional heroic and mentor-driven narrative themes. AI struggles to replicate the diverse themes found in human-written texts.

AI-generated texts lack the narrative diversity and thematic unpredictability of human-authored stories, especially in psychologically complex archetypes.
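For reference, the KL divergence between two topic distributions can be computed as in the sketch below. Several details are assumptions, since the text does not specify them: the direction of the divergence (human relative to AI), the use of natural logarithms, and the small smoothing constant. The two distributions are hypothetical placeholders.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(P || Q) in nats for two discrete distributions over the same topics.

    eps guards against division by zero when Q assigns no mass to a topic.
    """
    return sum(
        p_k * math.log((p_k + eps) / (q_k + eps))
        for p_k, q_k in zip(p, q)
        if p_k > 0
    )


# Hypothetical mean topic distributions for one archetype.
human_topics = [0.40, 0.35, 0.25]
ai_topics = [0.60, 0.30, 0.10]

divergence = kl_divergence(human_topics, ai_topics)
print(f"KL(human || AI) = {divergence:.3f}")
```

The measure is zero when the two distributions are identical and grows as the AI texts concentrate on topics that the human texts weight differently. It is also asymmetric, so the chosen direction should be reported alongside the values.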

The visualizations collectively confirm that AI is proficient in structured storytelling (Hero, Wise Old Man) but fails in ambiguous, emotionally deep, and nonlinear storytelling (Shadow, Trickster), specifically as follows:

- AI generates narratives that structurally resemble human-authored texts (cosine similarity);

- AI prefers positive, resolution-oriented storytelling, avoiding deep psychological struggle (sentiment heatmap);

- AI relies on formulaic storytelling and lacks psychological nuance (word cloud and TF-IDF);

- AI-generated stories lack thematic variation and unpredictability, particularly in complex archetypes (KL-Divergence plot).

These findings suggest that, while AI can mimic human storytelling structures, it requires further fine-tuning and exposure to richer, more diverse literary sources to better capture thematic depth, emotional nuance, and narrative unpredictability.

3.4. Possible Areas of Application of the Study

The findings of this study have important implications for multiple fields where AI-driven storytelling, cognitive modeling, and narrative generation play a central role. By understanding the strengths and limitations of large language models in reproducing Jungian archetypal storytelling, this study opens the door to several practical applications that can augment human creativity, enhance user experience, and support psychological and educational research.

In the entertainment and creative industries, AI-powered tools can assist writers, screenwriters, game designers, and novelists by generating and refining narratives. AI storytelling models can be integrated into writing software and interactive platforms to help authors brainstorm ideas, structure storylines, and develop character arcs. While the AI-generated content provides a structured foundation, human creators can build on these drafts by incorporating emotional depth, moral ambiguity, and creative originality—areas where AI still struggles. In video games and interactive fiction, AI can support dynamic, character-driven narratives by blending archetypal structures with more organic and unpredictable storytelling elements.

In psychology and mental health, AI-generated narratives offer new possibilities for therapeutic storytelling. Narratives grounded in Jungian archetypes can be used in guided storytelling exercises to help individuals explore personal identity, emotional conflict, and psychological transformation. AI-powered chatbots and virtual counselors can use these storytelling frameworks to provide emotionally rich, context-aware interactions tailored to users’ mental and emotional states. As AI systems improve their ability to express psychological nuance, they may become valuable tools in narrative therapy, especially for addressing trauma, self-discovery, and cognitive reframing.

In educational settings, AI-generated storytelling can enhance student engagement and support curriculum development across disciplines such as literature, philosophy, history, and psychology. AI models can generate adaptive educational materials that illustrate how archetypal structures are used in classical and contemporary literature. Instructors can use AI-generated narrative variations to demonstrate key concepts, improve comprehension, and encourage comparative analysis. Moreover, history and cultural studies can benefit from AI-generated alternate narratives, which help students understand historical events from diverse perspectives and examine the narrative construction of collective memory.

Beyond creative and educational domains, AI-generated storytelling also holds potential for digital media, marketing, and branding. Structured storytelling patterns can be applied to content generation tasks such as blog posts, social media campaigns, and marketing narratives. AI systems can craft emotionally resonant messages that align with consumer archetypes and brand identity, thereby strengthening audience engagement. Personalized storytelling assistants can be deployed to help users develop content across platforms, combining user input with archetypal templates to generate compelling and coherent narratives.

In game design and virtual environments, AI-driven storytelling engines can enhance the realism and emotional engagement of non-playable characters (NPCs) and story arcs. By generating evolving storylines that respond to player choices and psychological dynamics, AI systems can contribute to more immersive gaming experiences. Characters designed with deeper psychological profiles, informed by archetypal theory, would allow players to interact with game worlds that feel emotionally and symbolically rich. In virtual and augmented reality settings, these models could drive dynamic, user-specific narrative flows that enhance the sense of presence and engagement.

AI storytelling techniques can also improve customer experience and communication in business contexts. For example, AI-generated brand stories that reflect archetypal narratives can foster stronger emotional bonds between consumers and companies. Similarly, customer service bots enhanced with narrative intelligence can engage users more effectively by adapting stories to the user’s profile and emotional state.

This study also has implications for cultural studies and computational linguistics. AI models can be trained or fine-tuned to reflect culturally specific storytelling traditions, enabling cross-cultural comparisons of archetypal expression. Researchers in the humanities can use AI-generated narratives to trace how symbolic motifs evolve across time and geography, offering new insights into the relationship between language, culture, and collective psychology.

While AI-generated narratives currently excel in structured storytelling, their application across domains is constrained by a lack of emotional realism and symbolic depth. Nevertheless, the integration of these tools with human creativity, cognitive science, and educational theory can lead to powerful human–AI collaborations. Future development should focus on bridging this gap, enabling AI to contribute more meaningfully to emotionally resonant and psychologically complex storytelling across diverse fields.

4. Conclusions

This study explored how LLMs replicate Jungian archetypes in AI-generated narratives, using a hybrid framework that combined computational analysis with expert evaluation. The results show that AI effectively reproduces structured archetypes like the Hero and Wise Old Man but struggles with nonlinear, emotionally complex types such as the Trickster.

However, several limitations must be acknowledged. First, the analysis focused on a selected set of archetypes, and findings may not generalize to broader narrative structures or lesser-known archetypal figures. Second, while expert evaluations added valuable depth, they were constrained by subjective interpretation and limited sample size. Additionally, the study concentrated on narrative structure and sentiment but did not fully explore multimodal dimensions, such as imagery or dialogic form.

Future research should address these gaps by expanding the archetypal range, incorporating cross-cultural and multimodal storytelling formats, and refining sentiment models to better capture psychological nuance. Improved prompting strategies, interactive human-in-the-loop systems, and training datasets with richer symbolic diversity may enhance AI’s capacity to produce more emotionally resonant and thematically diverse narratives.

Ultimately, this research underscores both the promise and current boundaries of AI storytelling. As LLMs evolve, their collaboration with human creativity offers the most fruitful path for meaningful narrative generation.

Author Contributions

Conceptualization, O.Z. and B.M.; methodology, I.K.; software, I.K.; validation, O.Z. and B.M.; formal analysis, I.K.; investigation, I.K., O.Z. and B.M.; resources, O.Z. and B.M.; data curation, O.Z. and B.M.; writing—original draft preparation, I.K.; writing—review and editing, I.K., O.Z. and B.M.; visualization, I.K.; supervision, I.K.; project administration, B.M.; funding acquisition, I.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to express their sincere gratitude to the anonymous reviewers for their insightful, constructive, and thorough feedback. Their comments helped identify key areas for improvement and significantly contributed to the overall clarity, coherence, and quality of the final manuscript. We greatly appreciate the time and care taken to review our work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Trichopoulos, G.; Alexandridis, G.; Caridakis, G. A Survey on Computational and Emergent Digital Storytelling. Heritage 2023, 6, 1227–1263.

- Runco, M.A. AI can only produce artificial creativity. J. Creat. 2023, 33, 100063.

- Jung, C.G. The Archetypes and the Collective Unconscious; Princeton University Press: Princeton, NJ, USA, 1967.

- Jung, C.G. Symbols of Transformation; Princeton University Press: Princeton, NJ, USA, 1956.

- Kim, J.; Heo, Y.; Yu, H.; Nang, J. A Multi-Modal Story Generation Framework with AI-Driven Storyline Guidance. Electronics 2023, 12, 1289.

- Ren, Y.; Jin, R.; Zhang, T.; Xiong, D. Do Large Language Models Mirror Cognitive Language Processing? arXiv 2024, arXiv:2402.18023.

- Placani, A. Anthropomorphism in AI: Hype and fallacy. AI Ethics 2024, 4, 691–698.

- Messner, W. Improving the cross-cultural functioning of deep artificial neural networks through machine enculturation. Int. J. Inf. Manag. Data Insights 2022, 2, 100118.

- Wang, Q.; Li, H. On Continually Tracing Origins of LLM-Generated Text and Its Application in Detecting Cheating in Student Coursework. Big Data Cogn. Comput. 2025, 9, 50.

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of Hallucination in Natural Language Generation. ACM Comput. Surv. 2024, 55, 1–38.

- Biswas, P.; Pramanik, S.; Giri, B.C. Cosine Similarity Measure Based Multiattribute Decision-making with Trapezoidal Fuzzy Neutrosophic Numbers. Neutrosophic Sets Syst. 2014, 8, 46–56. Available online: https://fs.unm.edu/NSS/CosineSimilarityMeasureBasedMultiAttribute.pdf (accessed on 18 March 2025).

- Taherdoost, H.; Madanchian, M. Artificial Intelligence and Sentiment Analysis: A Review in Competitive Research. Computers 2023, 12, 37.

- Alshehri, A.; Algarni, A. TF-TDA: A Novel Supervised Term Weighting Scheme for Sentiment Analysis. Electronics 2023, 12, 1632.

- Farkhod, A.; Abdusalomov, A.; Makhmudov, F.; Cho, Y.I. LDA-Based Topic Modeling Sentiment Analysis Using Topic/Document/Sentence (TDS) Model. Appl. Sci. 2021, 11, 11091.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).