Temporal Dynamics in Short Text Classification: Enhancing Semantic Understanding Through Time-Aware Model

Abstract

1. Introduction

2. Related Work

2.1. Traditional Approaches to Sentence Classification

2.2. Contextualized Embeddings: BERT, GPT, and XLNet

2.3. Time-Aware Models: Capturing Language Evolution

3. Preliminaries and Technical Details

3.1. Static Sentence Classification

3.2. Problem Formalism: Time-Aware Sentence Classification

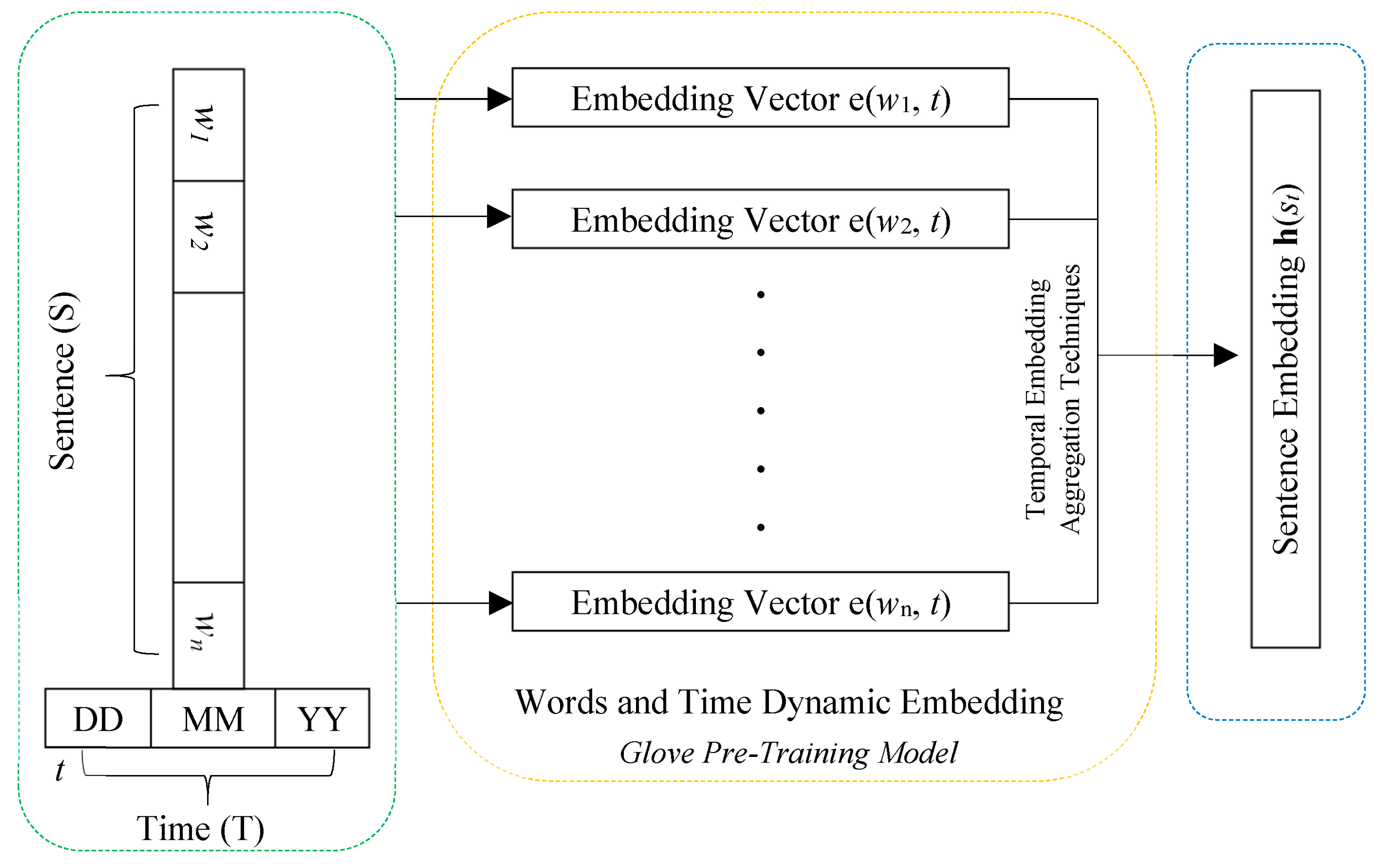

3.3. Temporal-Aware Word Embeddings

3.4. Time-Aware Sentence Classification with Hybrid Architecture

3.5. Loss Function

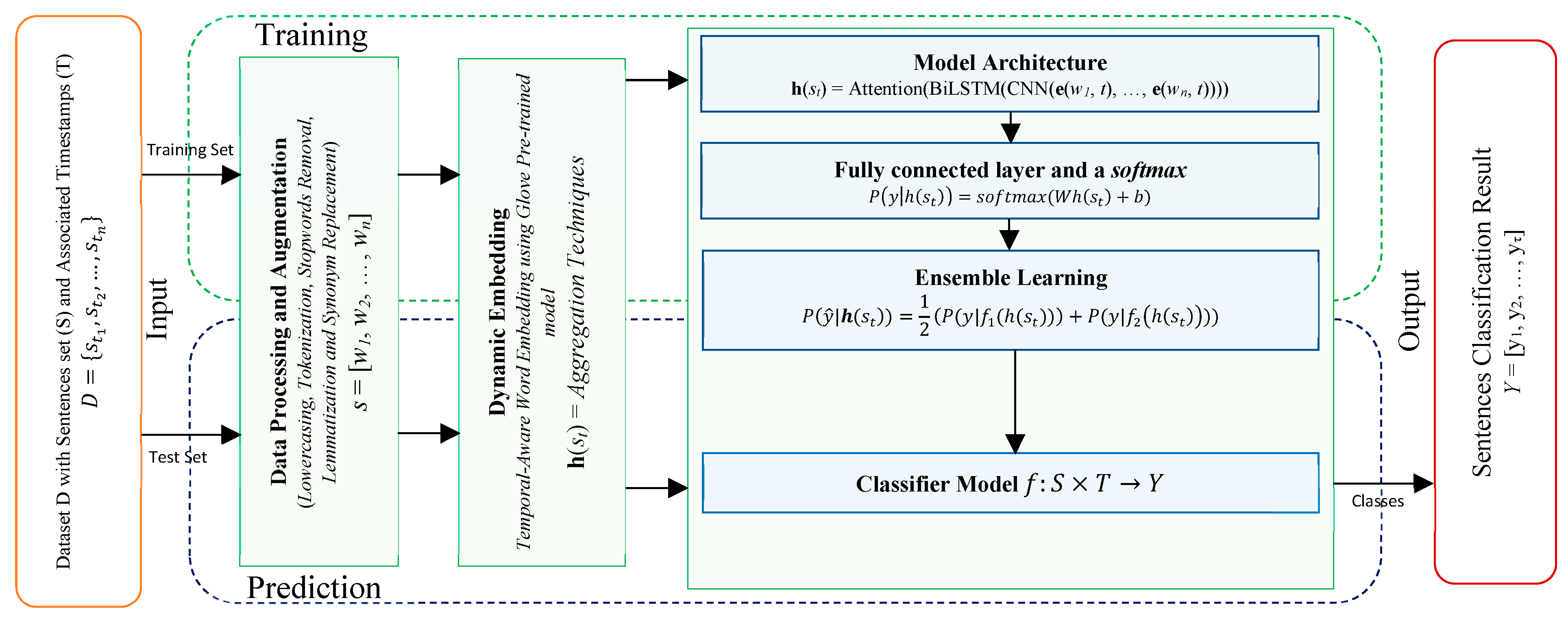

4. Proposed Approach

4.1. Data Processing and Augmentation

- Lowercasing: All words are converted to lowercase.

- Tokenization: Sentences are split into tokens w_i ∈ V, where V is the vocabulary.

- Stopwords Removal: Words from a predefined stopword list are removed.

- Lemmatization: Words are reduced to their base forms using a lemmatizer, ensuring that inflected forms are handled correctly.
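The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' pipeline: the stopword set `STOPWORDS` and the lookup table `LEMMA_TABLE` are tiny hypothetical stand-ins for a full stopword list and a real lemmatizer, and the regular-expression tokenizer is an assumption.

```python
import re

# Hypothetical stand-ins: a real pipeline would use a full stopword list
# and a proper lemmatizer instead of these toy resources.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "in", "and"}
LEMMA_TABLE = {"announces": "announce", "policies": "policy", "leaders": "leader"}


def preprocess(sentence):
    """Lowercase, tokenize, remove stopwords, then lemmatize one sentence."""
    lowered = sentence.lower()                           # lowercasing
    tokens = re.findall(r"[a-z0-9]+", lowered)           # tokenization (assumed regex)
    kept = [t for t in tokens if t not in STOPWORDS]     # stopword removal
    return [LEMMA_TABLE.get(t, t) for t in kept]         # lemmatization via lookup


tokens = preprocess("Government announces economic stimulus package.")
print(tokens)
```

Stopword removal runs before lemmatization here simply because the list above gives the steps in that order.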

4.2. Dynamic Embedding

4.3. Model Architecture

4.4. Attention Mechanism

4.5. Ensemble Learning

4.6. Computation Complexity Analysis

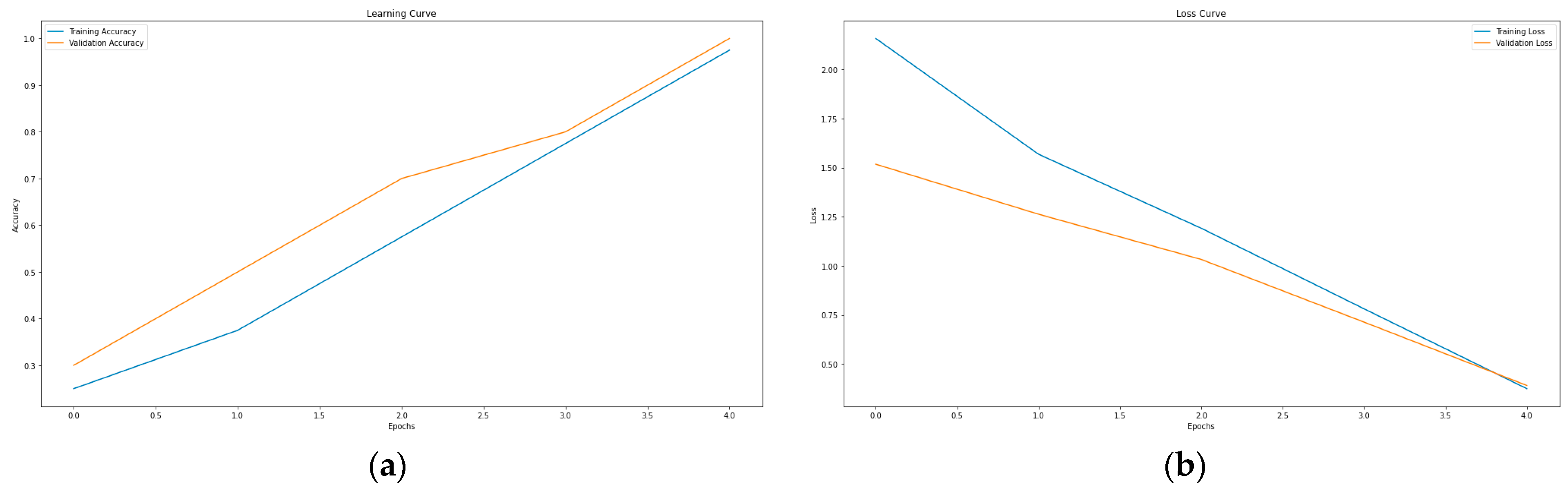

5. Experiments and Results

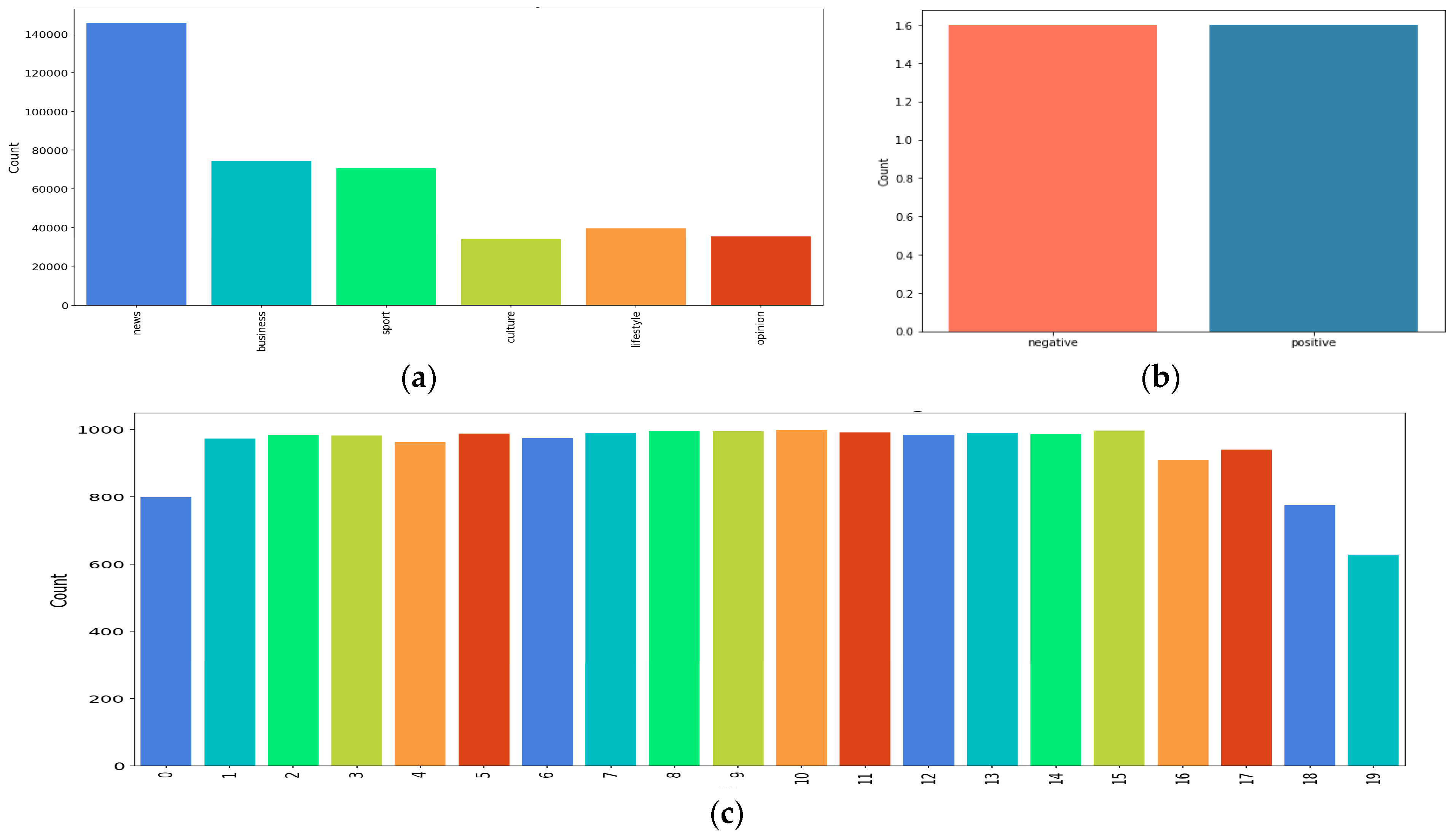

5.1. Benchmark Datasets

5.2. Experiment Setup

- Embedding dimension: 100.

- Maximum sequence length: 100 tokens.

- BiLSTM units: 128.

- Attention dropout: 0.3.

- Conv1D filters: 128 with kernel size 5.

- Dense layers: Two layers with 256 and 128 units, respectively, followed by dropout layers.

- Learning rate: 0.00005 with early stopping and learning rate reduction strategies.
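For reproducibility, the settings listed above can be collected in one configuration object. The sketch below is illustrative only: the class name `ExperimentConfig`, the helper `pad_or_truncate`, the padding id `0`, and padding at the end of the sequence are assumptions. The dropout rates of the dense layers are not stated in the list, so they are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentConfig:
    """Hyperparameters copied from the list above; field names are hypothetical."""
    embedding_dim: int = 100
    max_seq_len: int = 100          # maximum sequence length in tokens
    bilstm_units: int = 128
    attention_dropout: float = 0.3
    conv_filters: int = 128
    conv_kernel_size: int = 5
    dense_units: tuple = (256, 128)
    learning_rate: float = 5e-5     # 0.00005


def pad_or_truncate(token_ids, max_len, pad_id=0):
    """Clip a sequence to max_len and right-pad it with pad_id (assumed convention)."""
    clipped = list(token_ids[:max_len])
    return clipped + [pad_id] * (max_len - len(clipped))


cfg = ExperimentConfig()
padded = pad_or_truncate([7, 3, 9], cfg.max_seq_len)
print(len(padded), padded[:5])
```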

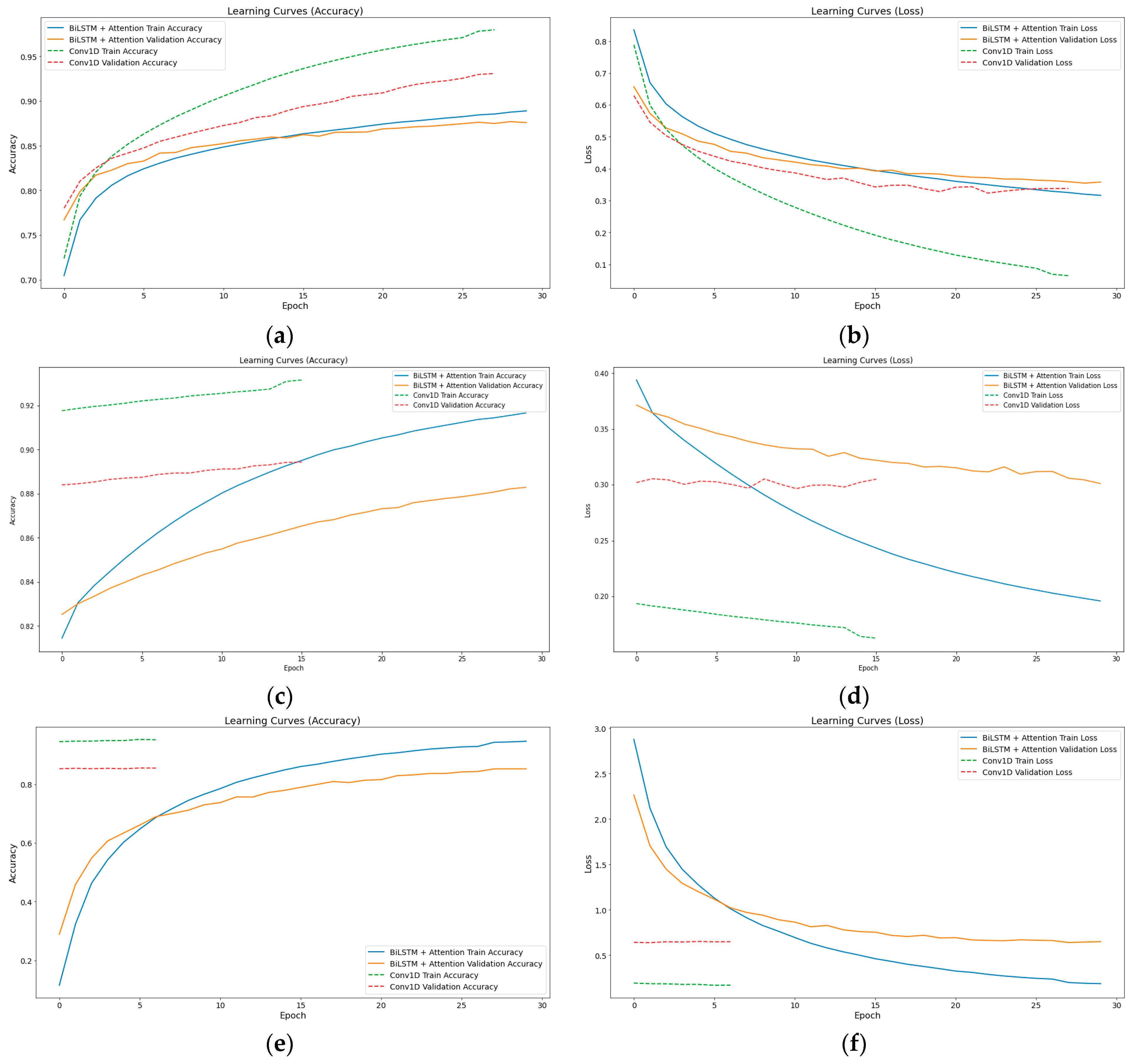

5.3. Evaluation Criteria

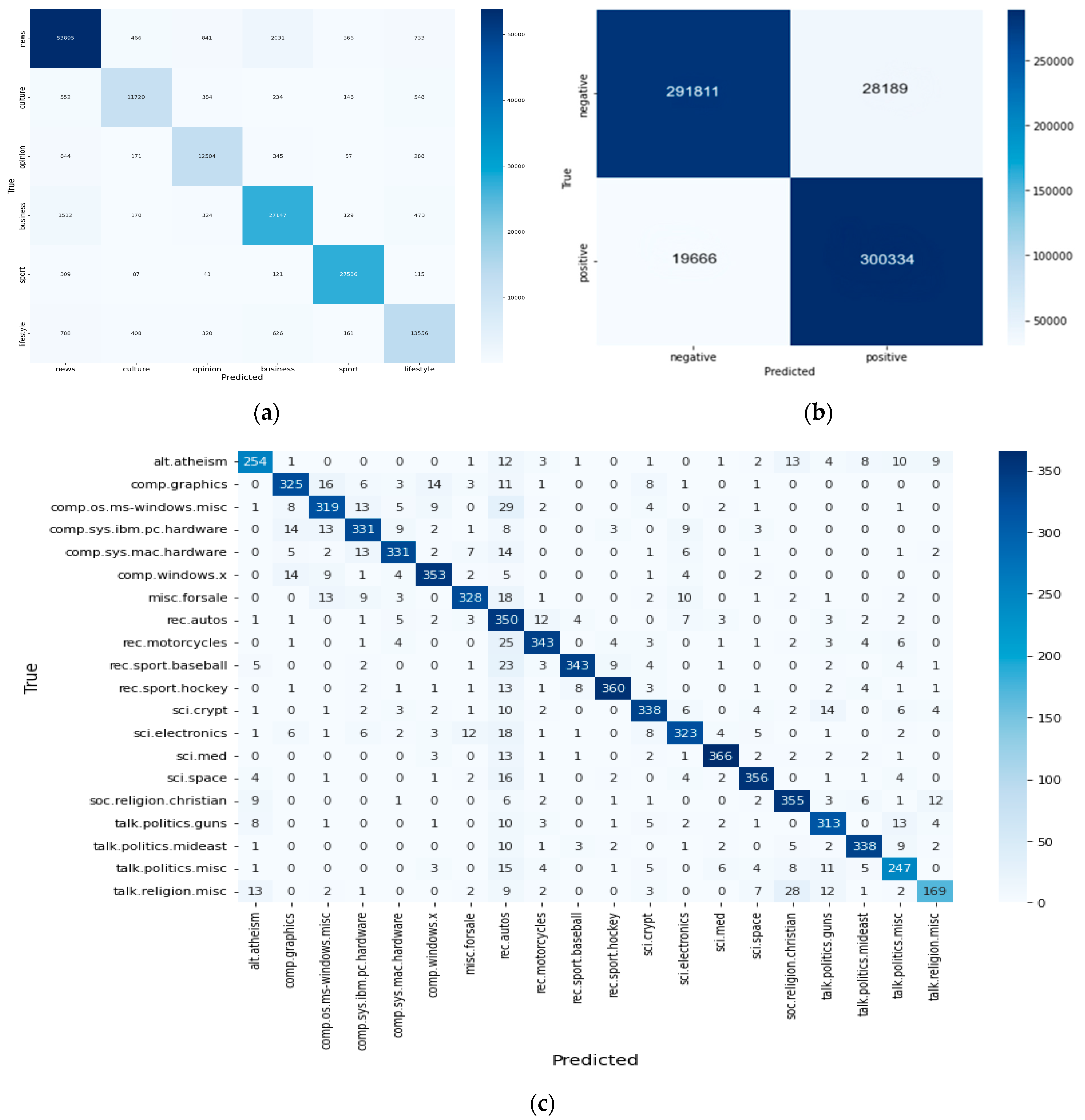

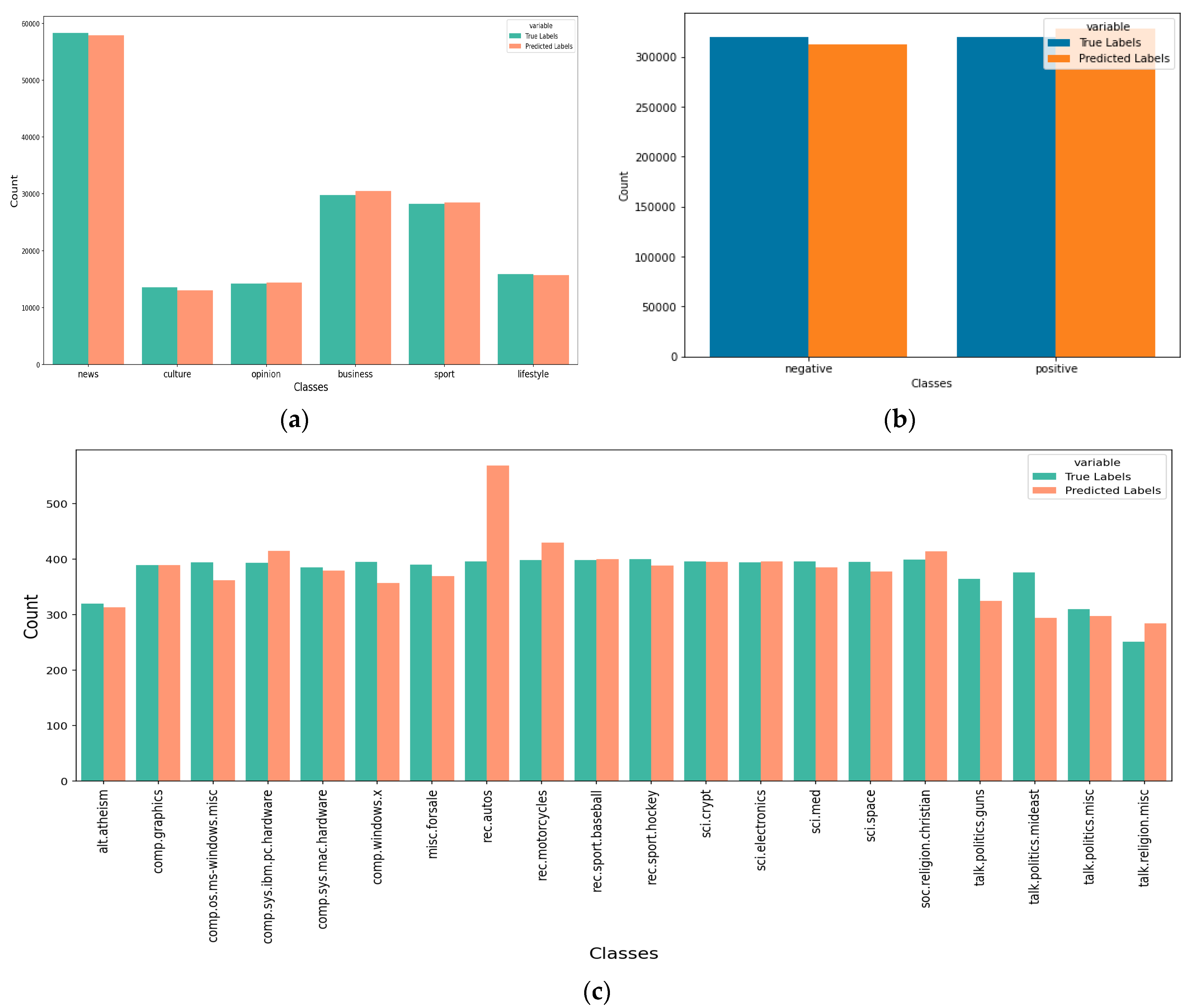
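The result tables report accuracy (Acc), Cohen's kappa (CK), macro and micro F1, the Matthews correlation coefficient (MCC), MSE, and MAE. The following pure-Python sketch shows one standard way to compute these for single-label multi-class predictions. It assumes that MSE and MAE are taken over integer-encoded class labels, which the text here does not state; the function names are illustrative.

```python
import math
from collections import Counter


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def cohen_kappa(y_true, y_pred):
    """Agreement corrected for the agreement expected by chance."""
    n = len(y_true)
    t_counts, p_counts = Counter(y_true), Counter(y_pred)
    p_o = accuracy(y_true, y_pred)
    p_e = sum(t_counts[c] * p_counts[c] for c in t_counts) / (n * n)
    return (p_o - p_e) / (1 - p_e) if p_e != 1 else 0.0


def f1_macro_micro(y_true, y_pred):
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1
            fn[t] += 1
    labels = sorted(set(y_true) | set(y_pred))
    per_class = []
    for c in labels:
        denom = 2 * tp[c] + fp[c] + fn[c]
        per_class.append(2 * tp[c] / denom if denom else 0.0)
    macro = sum(per_class) / len(per_class)
    total_tp = sum(tp.values())
    micro = 2 * total_tp / (2 * total_tp + sum(fp.values()) + sum(fn.values()))
    return macro, micro


def matthews_corrcoef(y_true, y_pred):
    """Multi-class MCC computed from label counts."""
    n = len(y_true)
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    t_counts, p_counts = Counter(y_true), Counter(y_pred)
    labels = set(t_counts) | set(p_counts)
    cov_tp = correct * n - sum(t_counts[k] * p_counts[k] for k in labels)
    cov_tt = n * n - sum(v * v for v in t_counts.values())
    cov_pp = n * n - sum(v * v for v in p_counts.values())
    denom = math.sqrt(cov_tt * cov_pp)
    return cov_tp / denom if denom else 0.0


def mse_mae(y_true, y_pred):
    """Errors over integer class ids (an assumed reading of MSE/MAE here)."""
    diffs = [t - p for t, p in zip(y_true, y_pred)]
    n = len(diffs)
    return sum(d * d for d in diffs) / n, sum(abs(d) for d in diffs) / n


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
macro_f1, micro_f1 = f1_macro_micro(y_true, y_pred)
mse, mae = mse_mae(y_true, y_pred)
print(accuracy(y_true, y_pred), cohen_kappa(y_true, y_pred), macro_f1, micro_f1)
print(matthews_corrcoef(y_true, y_pred), mse, mae)
```

For single-label classification, micro F1 equals accuracy, which matches the identical Acc and F1 Micro columns in the result tables.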

5.4. Results on Benchmark Datasets

5.5. Results on Larger Temporal Gap

5.6. Comparison with Well-Known Temporal Models

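- Temporal Awareness Significantly Improves Classification: RoBERTa without date reaches 82.35% on Ireland-news-headlines and 89.27% on Sentiment140, whereas RoBERTa with date as text reaches 87.84% and 91.13% on the same datasets [25]. This gap emphasizes the necessity of incorporating temporal information into text classification.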

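- Dynamic Word Embeddings Enhance Performance: With dynamic embeddings, our model's accuracy rises from 85.6% to 92.00% on Ireland-news-headlines, from 82.1% to 92.00% on Sentiment140, and from 77.00% to 85.00% on 20 News Group, which supports the view that capturing semantic drift over time is essential for effective classification.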

- Generalizability Across Datasets: Unlike certain models, such as Hybrid Stacked Ensemble, which perform well on Sentiment140 but lack evaluations on multiple datasets, our approach maintains high accuracy across diverse text domains.

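- Comparison with Well-Known Work: Our model outperforms the RoBERTa-based temporal approaches [25] on both Ireland-news-headlines and Sentiment140, demonstrating the effectiveness of direct time-aware embedding integration rather than treating time as an external input feature. On 20 News Group, Text GloVe with Triples [80] reports 86.9% against 85.00% for our model.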
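To make the idea of time-aware embedding integration concrete, the sketch below fuses one word's per-period vectors into a single vector for a target period. It is an assumption-laden illustration rather than the authors' method: the weighting scheme of the time-weighted embedding fusion is not given in this text, so exponential decay with a hypothetical `decay` parameter is used here, and the function name is invented for the example.

```python
import math


def time_weighted_fusion(period_vectors, target_period, decay=0.5):
    """Fuse per-period vectors of one word, weighting periods near target_period more.

    period_vectors maps a period (for example a year) to a list of floats.
    The exponential-decay weighting is an assumption, not taken from the paper.
    """
    weights = {
        period: math.exp(-decay * abs(period - target_period))
        for period in period_vectors
    }
    total = sum(weights.values())
    dim = len(next(iter(period_vectors.values())))
    fused = [0.0] * dim
    for period, vector in period_vectors.items():
        share = weights[period] / total          # normalized weight of this period
        for i, value in enumerate(vector):
            fused[i] += share * value
    return fused


# Toy two-dimensional vectors for the same word in two years.
vectors = {2019: [1.0, 0.0], 2020: [0.0, 1.0]}
fused_2020 = time_weighted_fusion(vectors, target_period=2020)
fused_flat = time_weighted_fusion(vectors, target_period=2020, decay=0.0)
print(fused_2020, fused_flat)
```

With `decay=0.0` every period receives the same weight, so the fusion reduces to a plain average; larger values pull the fused vector toward the period closest to the sentence's timestamp.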

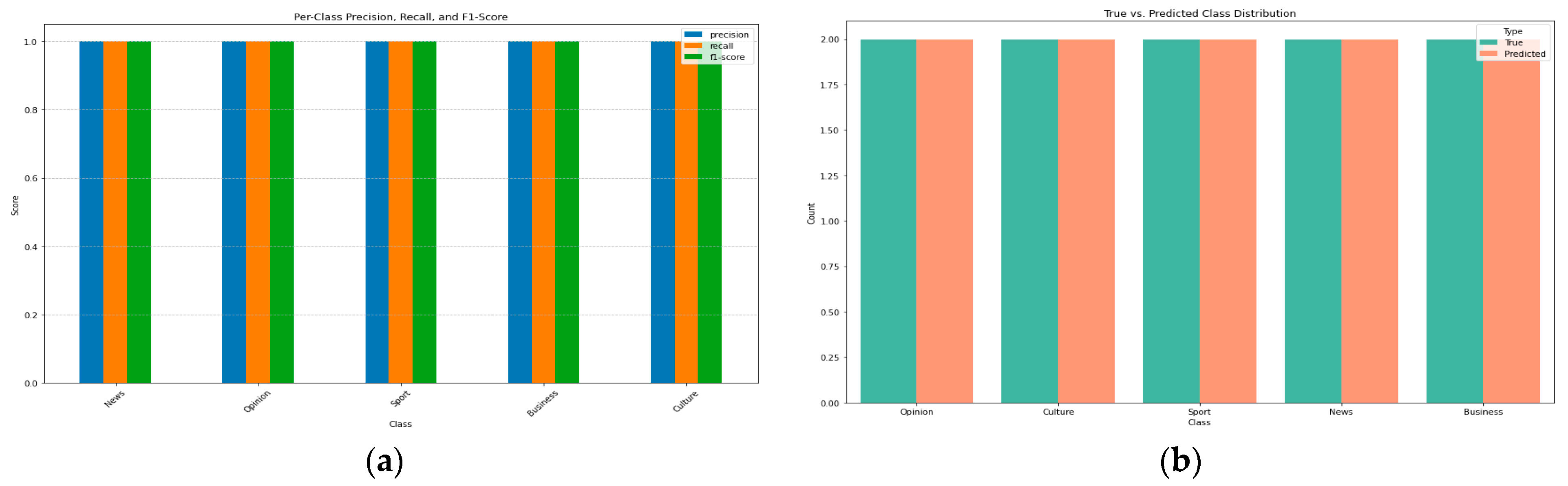

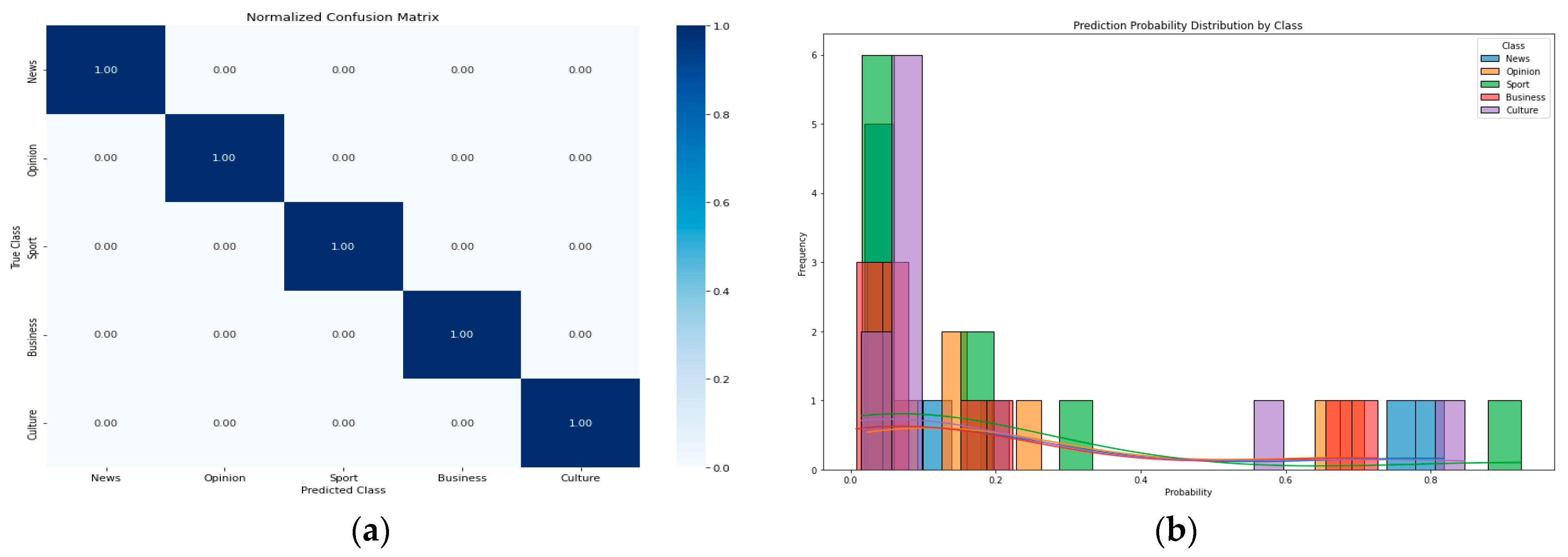

5.7. Application to News Categorization and Trend Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Liu, B. Sentiment Analysis and Opinion Mining; Springer Nature: Berlin/Heidelberg, Germany, 2022. [Google Scholar]

- Mayur, W.; Annavarapu, C.S.R.; Chaitanya, K. A survey on sentiment analysis methods, applications, and challenges. Artif. Intell. Rev. 2022, 55, 5731–5780. [Google Scholar]

- Khaled, A.; Aysha, A.S. Experimental results on customer reviews using lexicon-based word polarity identification method. IEEE Access 2020, 8, 179955–179969. [Google Scholar]

- Bogery, R.; Nora, A.B.; Nida, A.; Nada, A.; Yara, A.H.; Irfan, U.K. Automatic Semantic Categorization of News Headlines using Ensemble Machine Learning: A Comparative Study. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 689–696. [Google Scholar] [CrossRef]

- Agarwal, J.; Sharon, C.; Aditya, P.; Anand, K.M.; Guru, P. Machine Learning Application for News Text Classification. In Proceedings of the 13th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 19–20 January 2023; pp. 463–466. [Google Scholar]

- Sukhramani, K.; Harshika, K.; Akhtar, R.; Abhishek, J. Binary Classification of News Articles using Deep Learning. In Proceedings of the IEEE International Students’ Conference on Electrical, Electronics and Computer Science (SCEECS), Bhopal, India, 24–25 February 2024; pp. 1–9. [Google Scholar]

- Hiremath, B.N.; Malini, M.P. Enhancing Optimized Personalized Therapy in Clinical Decision Support System using Natural Language Processing. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 2840–2848. [Google Scholar] [CrossRef]

- Eguia, H.; Carlos, L.S.-B.; Franco, V.; Fernando, A.-L.; Francesc, S.-R. Clinical Decision Support and Natural Language Processing in Medicine: Systematic Literature Review. J. Med. Internet Res. 2024, 26, 39348889. [Google Scholar] [CrossRef]

- Palanivinayagam, A.; Claude, Z.E.-B.; Robertas, D. Twenty Years of Machine-Learning-Based Text Classification: A Systematic Review. Algorithms 2023, 16, 236. [Google Scholar] [CrossRef]

- Sun, X.; Xiaoya, L.; Jiwei, L.; Fei, W.; Shangwei, G.; Tianwei, Z.; Guoyin, W. Text classification via large language models. arXiv 2023, arXiv:2305.08377. [Google Scholar]

- Duarte, J.M.; Berton, L. A review of semi-supervised learning for text classification. Artif. Intell. Rev. 2023, 56, 9401–9469. [Google Scholar] [CrossRef]

- Zhao, H.; Haihua, C.; Thomas, A.R.; Yunhe, F.; Debjani, S.; Hong-Jun, Y. Improving Text Classification with Large Language Model-Based Data Augmentation. Electronics 2024, 13, 2535. [Google Scholar] [CrossRef]

- Kamal, T.; Paul, D.Y.; Chan, Y.; Dirar, H.; Aya, T. A comprehensive survey of text classification techniques and their research applications: Observational and experimental insights. Comput. Sci. Rev. 2024, 54, 100664. [Google Scholar]

- Wu, Y.; Jun, W. A survey of text classification based on pre-trained language model. Neurocomputing 2025, 616, 128921. [Google Scholar] [CrossRef]

- Hyojung, K.; Minjung, P. Discovering fashion industry trends in the online news by applying text mining and time series regression analysis. Heliyon 2023, 9, 2405–8440. [Google Scholar]

- Hardin, G. Disinformation, Misinformation, and Fake News: The Latest Trends and Issues in Research. In Encyclopedia of Libraries, Librarianship, and Information Science, 1st ed.; Academic Press: Cambridge, MA, USA, 2025; pp. 519–530. [Google Scholar]

- Hu, T.; Siqin, W.; Wei, L.; Mengxi, Z.; Xiao, H.; Yingwei, Y.; Regina, L. Revealing public opinion towards COVID-19 vaccines with Twitter data in the United States: Spatiotemporal perspective. J. Med. Internet Res. 2021, 23, e30854. [Google Scholar] [CrossRef] [PubMed]

- Sun, G.; Cheng, Y.; Dong, F.; Wang, L.; Zhao, D.; Zhang, Z.; Tong, X. Multi-Label Text Classification model integrating Label Attention and Historical Attention. Knowl.-Based Syst. 2024, 296, 111878. [Google Scholar] [CrossRef]

- Ren, H.; Wang, H.; Zhao, Y.; Ren, Y. Time-Aware Language Modeling for Historical Text Dating. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP-2023), Singapore, 6–10 December 2023. [Google Scholar]

- Khan, S.U.R.; Muhammd, A.I.; Muhammad, A.; Muhammad, A.I. Temporal specificity-based text classification for information retrieval. Turk. J. Electr. Eng. Comput. Sci. 2018, 26, 2916–2927. [Google Scholar] [CrossRef]

- He, Y.; Li, J.; Song, Y.; He, M.; Peng, H. Time-evolving Text Classification with Deep Neural Networks. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18), Stockholm, Sweden, 13–19 July 2018. [Google Scholar]

- Rabab, A.; Elena, K.; Arkaitz, Z. Building for tomorrow: Assessing the temporal persistence of text classifiers. Inf. Process. Manag. 2023, 60, 103200. [Google Scholar]

- Salles, T.; Leonardo, R.; Gisele, L.P.; Fernando, M.; Wagner, M.J.; Marcos, G. Temporally-aware algorithms for document classification. In Proceedings of the 33rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Geneva, Switzerland, 19–23 July 2010. [Google Scholar]

- Raneen, Y.; Abdul, H.; Zahra, A. MTS2Graph: Interpretable multivariate time series classification with temporal evolving graphs. Pattern Recognit. 2025, 152, 110486. [Google Scholar]

- Pokrywka, J.; Filip, G. Temporal language modeling for short text document classification with transformers. In Proceedings of the 2022 17th Conference on Computer Science and Intelligence Systems (FedCSIS), Sofia, Bulgaria, 4–7 September 2022; pp. 121–128. [Google Scholar]

- Santosh, T.; Vuong, T.; Grabmair, M. Time-aware Incremental Training for Temporal Generalization of Legal Classification Tasks. arXiv 2024, arXiv:2405.14211. [Google Scholar]

- Chen, X.; Qiu, P.; Zhu, W.; Li, H.; Wang, H.; Sotiras, A.; Wang, Y.; Razi, A. TimeMIL: Advancing Multivariate Time Series Classification via a Time-aware Multiple Instance Learning. arXiv 2024, arXiv:2405.03140. [Google Scholar]

- Frank, E.; Bouckaert, R. Naive Bayes for Text Classification with Unbalanced Classes. In Proceedings of the 10th European conference on Principle and Practice of Knowledge Discovery in Databases, Berlin, Germany, 18–22 September 2006; Volume 4213. [Google Scholar]

- Jiang, L.; Li, C.; Wang, S.; Zhang, L. Deep feature weighting for naive Bayes and its application to text classification. Eng. Appl. Artif. Intell. 2016, 52, 26–39. [Google Scholar] [CrossRef]

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Ramesh, B.; Sathiaseelan, J.G.R. An Advanced Multi Class Instance Selection based Support Vector Machine for Text Classification. Procedia Comput. Sci. 2015, 57, 1124–1130. [Google Scholar] [CrossRef][Green Version]

- Parashjyoti, B.; Deepak, G.; Barenya, B.H. ConCave-Convex procedure for support vector machines with Huber loss for text classification. Comput. Electr. Eng. 2025, 122, 109925. [Google Scholar]

- Wu, J. Introduction to Convolutional Neural Networks; National Key Lab for Novel Software Technology, Nanjing University: Nanjing, China, 2017; Volume 5, p. 495. [Google Scholar]

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017. [Google Scholar]

- Zhao, Z.; Wu, Y. Attention-Based Convolutional Neural Networks for Sentence Classification. In Proceedings of the Interspeech, San Francisco, CA, USA, 8–12 September 2016; Volume 8, pp. 705–709. [Google Scholar]

- Vieira, J.; Paulo, A.; Raimundo, S.M. An analysis of convolutional neural networks for sentence classification. In Proceedings of the 2017 XLIII Latin American Computer Conference (CLEI), Córdoba, Argentina, 4–8 September 2017. [Google Scholar]

- Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent convolutional neural networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 29. [Google Scholar]

- Tarwani, K.M.; Swathi, E. Survey on recurrent neural network in natural language processing. Int. J. Eng. Trends Technol. 2016, 48, 301–304. [Google Scholar] [CrossRef]

- Tomas, M.; Kai, C.; Greg, C.; Jeffrey, D. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781. [Google Scholar]

- Pennington, J.; Richard, S.; Christopher, D.M. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543. [Google Scholar]

- Giulianelli, M.; Marco, D.T.; Raquel, F. Analysing lexical semantic change with contextualised word representations. arXiv 2020, arXiv:2004.14118. [Google Scholar]

- Bhuwan, D.; Jeremy, R.C.; Julian, M. Time-Aware Language Models as Temporal Knowledge Bases. Trans. Assoc. Comput. Linguist. 2022, 10, 257–273. [Google Scholar]

- Rosin, G.D.; Ido, G.; Kira, R. Time masking for temporal language models. In Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining, Tempe, AZ, USA, 21–25 February 2022; pp. 833–841. [Google Scholar]

- Rosin, G.D.; Kira, R. Temporal attention for language models. arXiv 2022, arXiv:2202.02093. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL 2019, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. [Google Scholar]

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://openai.com/research/language-unsupervised (accessed on 10 February 2025).

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.R.; Le, Q.V. XLNet: Generalized Autoregressive Pretraining for Language Understanding. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019; Volume 32, pp. 5754–5764. [Google Scholar]

- Schuster, M.; Kuldip, K.P. Bidirectional Recurrent Neural Networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681. [Google Scholar] [CrossRef]

- Grisoni, F.; Michael, M.; Robin, L.; Gisbert, S. Bidirectional molecule generation with recurrent neural networks. J. Chem. Inf. Model. 2020, 60, 1175–1183. [Google Scholar] [CrossRef]

- Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061. [Google Scholar]

- Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46. [Google Scholar] [CrossRef]

- Matthews, B.W. Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochim. Biophys. Acta (BBA)-Protein Struct. 1975, 405, 442–451. [Google Scholar] [CrossRef]

- Willmott, C.J.; Kenji, M. Advantages of the Mean Absolute Error (MAE) Over the Root Mean Square Error (RMSE) in Assessing Average Model Performance. Clim. Res. 2005, 30, 79–82. [Google Scholar] [CrossRef]

- Sheng, L.; Lizhen, X. Topic Classification Based on Improved Word Embedding. In Proceedings of the 14th Web Information Systems and Applications Conference (WISA), Liuzhou, China, 11–12 November 2017; pp. 117–121. [Google Scholar]

- Luo, W. Research and Implementation of Text Topic Classification Based on Text CNN. In Proceedings of the 2022 3rd International Conference on Computer Vision, Image and Deep Learning & International Conference on Computer Engineering and Applications (CVIDL & ICCEA), Changchun, China, 20–22 May 2022; pp. 1152–1155. [Google Scholar]

- Ding, H.; Yang, J.; Deng, Y.; Zhang, H.; Roth, D. Towards Open-Domain Topic Classification. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: System Demonstrations, Seattle, WA, USA, 10–15 July 2022; pp. 90–98. [Google Scholar]

- Ghazala, N.; Muhammad, M.K.; Muhammad, Y.; Bushra, Z.; Muhammad, K.H. Email spam detection by deep learning models using novel feature selection technique and BERT. Egypt. Inform. J. 2024, 26, 100473. [Google Scholar]

- Maged, N.; Faisal, S.; Aminu, D.; Abdulaziz, A.; Mohammed, A.-S. Topic-aware neural attention network for malicious social media spam detection. Alex. Eng. J. 2025, 111, 540–554. [Google Scholar]

- Mariano, M.; Fernando, D.; Fernando, T.; Ana, M.; Evangelos, M. Detecting ongoing events using contextual word and sentence embeddings. Expert Syst. Appl. 2022, 209, 118257. [Google Scholar]

- Elena, M.; Eva, W. Temporal construal in sentence comprehension depends on linguistically encoded event structure. Cognition 2025, 254, 105975. [Google Scholar]

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the EMNLP, Doha, Qatar, 25–29 October 2014; pp. 1746–1751. [Google Scholar]

- Nguyen, A.; Srijeet, C.; Sven, W.; Leo, S.; Martin, M.; Bjoern, E. Time matters: Time-aware lstms for predictive business process monitoring. In Proceedings of the Process Mining Workshops: ICPM 2020 International Workshops, Padua, Italy, 5–8 October 2020; pp. 112–123. [Google Scholar]

- Graves, A.; Navdeep, J.; Abdel-rahman, M. Hybrid speech recognition with deep bidirectional LSTM. In Proceedings of the 2013 IEEE Workshop on Automatic Speech Recognition and Understanding, Olomouc, Czech Republic, 8–12 December 2013; pp. 273–278. [Google Scholar]

- Bahdanau, D. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473. [Google Scholar]

- Raffel, C.; Noam, S.; Adam, R.; Katherine, L.; Sharan, N.; Michael, M.; Yanqi, Z.; Wei, L.; Peter, J.L. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67. [Google Scholar]

- Luo, M.; Xue, B.; Niu, B. A comprehensive survey for automatic text summarization: Techniques, approaches and perspectives. Neurocomputing 2024, 603, 128280. [Google Scholar] [CrossRef]

- Huang, Y.; Bai, X.; Liu, Q.; Peng, H.; Yang, Q.; Wang, J. Sentence-level sentiment classification based on multi-attention bidirectional gated spiking neural P systems. Appl. Soft Comput. 2024, 152, 111231. [Google Scholar] [CrossRef]

- Liu, Y. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692. [Google Scholar]

- Röttger, P.; Janet, B.P. Temporal adaptation of BERT and performance on downstream document classification: Insights from social media. arXiv 2021, arXiv:2104.08116. [Google Scholar]

- Yao, Z.; Yifan, S.; Weicong, D.; Nikhil, R.; Hui, X. Dynamic word embeddings for evolving semantic discovery. In Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, Los Angeles, CA, USA, 5–9 February 2018; pp. 673–681. [Google Scholar]

- Bamler, R.; Stephan, M. Dynamic word embeddings. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 380–389. [Google Scholar]

- Turner, R.E. An introduction to transformers. arXiv 2023, arXiv:2304.10557. [Google Scholar]

- Reimers, N. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. arXiv 2019, arXiv:1908.10084. [Google Scholar]

- Gururangan, S.; Ana, M.; Swabha, S.; Kyle, L.; Iz, B.; Doug, D.; Noah, A.S. Don’t stop pretraining: Adapt language models to domains and tasks. arXiv 2020, arXiv:2004.10964. [Google Scholar]

- Wang, J.; Jatowt, A.; Yoshikawa, M.; Cai, Y. BiTimeBERT: Extending Pre-Trained Language Representations with Bi-Temporal Information. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’23), Taipei, Taiwan, 23–27 July 2023. [Google Scholar]

- Gong, H.; Suma, B.; Pramod, V. Enriching word embeddings with temporal and spatial information. arXiv 2020, arXiv:2010.00761. [Google Scholar]

- Tang, X.; Yi, Z.; Danushka, B. Learning dynamic contextualised word embeddings via template-based temporal adaptation. arXiv 2023, arXiv:2208.10734. [Google Scholar]

- Go, A.; Richa, B.; Lei, H. Twitter Sentiment Classification Using Distant Supervision; S224N Project Report; Stanford University: Stanford, CA, USA, 2009; Volume 1. [Google Scholar]

- Diederik, P.K.; Jimmy, B. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

- Nagumothu, D.; Peter, W.E.; Bahadorreza, O.; Reda, B.M. Linked Data Triples Enhance Document Relevance Classification. Appl. Sci. 2021, 11, 6636. [Google Scholar] [CrossRef]

| Dataset | Sentences | Time Span | Avg. Sentence Length (Tokens/Words) | Labels |
|---|---|---|---|---|
| Ireland-news-headlines | 1,610,000 | 1996–2021 | 15 | news, culture, opinion, business, sport, lifestyle |
| Sentiment140 | 1,600,000 | 2009–2020 | 13 | Positive, Negative |
| 20 News Groups | 18,846 | 1995–2000 | 150 | 20 domain-agnostic topic categories |

| Dataset | Dynamic Embedding Technique | Acc | CK | F1 Macro | F1 Micro | MCC | MSE | MAE |
|---|---|---|---|---|---|---|---|---|
| Ireland-news-headlines | Aggregate-based Embedding Fusion | 0.92 | 0.89 | 0.90 | 0.92 | 0.89 | 0.75 | 0.23 |
| Ireland-news-headlines | Time-weighted Embedding Fusion | 0.91 | 0.88 | 0.89 | 0.91 | 0.88 | 0.83 | 0.25 |
| Ireland-news-headlines | Self-Attention with Temporal Positional Encoding | 0.92 | 0.89 | 0.90 | 0.92 | 0.89 | 0.75 | 0.23 |
| Sentiment140 | Aggregate-based Embedding Fusion | 0.87 | 0.74 | 0.87 | 0.87 | 0.74 | 0.13 | 0.13 |
| Sentiment140 | Time-weighted Embedding Fusion | 0.92 | 0.81 | 0.91 | 0.92 | 0.80 | 0.09 | 0.09 |
| Sentiment140 | Self-Attention with Temporal Positional Encoding | 0.89 | 0.78 | 0.87 | 0.89 | 0.78 | 0.11 | 0.11 |
| 20 News Group | Aggregate-based Embedding Fusion | 0.73 | 0.71 | 0.71 | 0.73 | 0.72 | 11.5 | 1.30 |
| 20 News Group | Time-weighted Embedding Fusion | 0.85 | 0.85 | 0.85 | 0.85 | 0.85 | 3.2 | 0.80 |
| 20 News Group | Self-Attention with Temporal Positional Encoding | 0.82 | 0.81 | 0.82 | 0.82 | 0.82 | 6.3 | 0.97 |

| Dataset | Dynamic Embedding Technique | Acc | CK | F1 Macro | F1 Micro | MCC | MSE | MAE |
|---|---|---|---|---|---|---|---|---|
| Ireland-news-headlines | Time-weighted Embedding Fusion | 0.91 | 0.87 | 0.89 | 0.91 | 0.87 | 0.92 | 0.27 |

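| Approaches | System | Accuracy (%) | Datasets |
|---|---|---|---|
| Time-Aware Classification | RoBERTa with date as text [25] | 87.84 | Ireland-news-headlines |
| Time-Aware Classification | RoBERTa with date as text [25] | 91.13 | Sentiment140 |
| Time-Aware Classification | RoBERTa with added embeddings [25] | 86.82 | Ireland-news-headlines |
| Time-Aware Classification | RoBERTa with added embeddings [25] | 91.04 | Sentiment140 |
| Time-Aware Classification | RoBERTa with stacked embeddings [25] | 87.65 | Ireland-news-headlines |
| Time-Aware Classification | RoBERTa with stacked embeddings [25] | 91.1 | Sentiment140 |
| Time-Aware Classification | Text GloVe with Triples [80] | 86.9 | 20 News Group |
| Time-Aware Classification | Text BERT with Triples [80] | 77.7 | 20 News Group |
| Traditional/Static Classification | RoBERTa without date [25] | 82.35 | Ireland-news-headlines |
| Traditional/Static Classification | RoBERTa without date [25] | 89.27 | Sentiment140 |
| Traditional/Static Classification | Most frequent class baseline [25] | 51.10 | Ireland-news-headlines |
| Traditional/Static Classification | Most frequent class baseline [25] | 49.88 | Sentiment140 |
| Traditional/Static Classification | Naive Bayes Classifier [25] | 85.00 | Sentiment140 |
| Traditional/Static Classification | Hybrid Stacked Ensemble Model [25] | 99.00 | Sentiment140 |
| Proposed Classification Model | Time-Aware Sentence Classification Model with traditional/static word embeddings | 85.6 | Ireland-news-headlines |
| Proposed Classification Model | Time-Aware Sentence Classification Model with traditional/static word embeddings | 82.1 | Sentiment140 |
| Proposed Classification Model | Time-Aware Sentence Classification Model with traditional/static word embeddings | 77.00 | 20 News Group |
| Proposed Classification Model | Time-Aware Sentence Classification Model with dynamic word embeddings | 92.00 | Ireland-news-headlines |
| Proposed Classification Model | Time-Aware Sentence Classification Model with dynamic word embeddings | 92.00 | Sentiment140 |
| Proposed Classification Model | Time-Aware Sentence Classification Model with dynamic word embeddings | 85.00 | 20 News Group |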

| Timestamp | News Headlines | Category |
|---|---|---|
| 1 January 2020 | Government announces economic stimulus package. | News |
| 8 January 2020 | World leaders meet to discuss global climate goals. | News |
| 15 January 2020 | New policies aim to stabilize economic growth. | News |
| 22 January 2020 | Climate crisis takes center stage at global summit. | News |
| 29 January 2020 | Global literacy programs gain global traction. | News |
| 1 June 2020 | Remote work should remain post-pandemic. | Opinion |
| 10 June 2020 | Is technology making us more disconnected from reality? | Opinion |
| 17 June 2020 | The future of work: Balancing remote and in-office setups. | Opinion |
| 24 June 2020 | Ethical concerns in AI dominate opinion columns. | Opinion |
| 1 July 2020 | Healthcare debates spark discussions on innovation. | Opinion |
| 5 February 2020 | Football team wins championship after dramatic final. | Sport |
| 12 February 2020 | Olympics postponed amid global uncertainty. | Sport |
| 19 February 2020 | Local soccer team secures thrilling playoff victory. | Sport |
| 26 February 2020 | World championships highlight resilience amid challenges. | Sport |
| 4 March 2020 | Global sports events thrive with new safety measures. | Sport |
| 11 March 2020 | Tech industry sees surge in remote collaboration tools. | Business |
| 18 March 2020 | Stock market rallies as companies report strong earnings. | Business |
| 25 March 2020 | E-commerce giants report record profits in Q2 earnings. | Business |
| 1 April 2020 | Tech startups lead innovation in green energy solutions. | Business |
| 8 April 2020 | Small businesses adopt AI for competitive edge. | Business |
| 22 April 2020 | Art exhibition explores human connection in isolation. | Culture |
| 29 April 2020 | Digital concerts gain popularity among younger audiences. | Culture |
| 6 May 2020 | Local artists adapt to virtual mediums during pandemic. | Culture |
| 13 May 2020 | Virtual reality performances redefine cultural experiences. | Culture |
| 20 May 2020 | Museum curates digital exhibitions for global audiences. | Culture |
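Timestamped, categorized headlines like those in the table above lend themselves to simple trend analysis. The sketch below counts headlines per category and month. It is an illustration under assumptions: the function name is hypothetical, only a few rows of the table are reproduced, and the categories are taken as given, whereas in an application they would come from the classifier's predictions.

```python
from collections import Counter, defaultdict
from datetime import datetime


def monthly_category_counts(rows):
    """Count headlines per category for each (year, month) bucket."""
    counts = defaultdict(Counter)
    for timestamp, _headline, category in rows:
        day = datetime.strptime(timestamp, "%d %B %Y")   # e.g. "8 January 2020"
        counts[(day.year, day.month)][category] += 1
    return counts


# A few rows copied from the table above.
rows = [
    ("1 January 2020", "Government announces economic stimulus package.", "News"),
    ("8 January 2020", "World leaders meet to discuss global climate goals.", "News"),
    ("5 February 2020", "Football team wins championship after dramatic final.", "Sport"),
    ("12 February 2020", "Olympics postponed amid global uncertainty.", "Sport"),
    ("1 June 2020", "Remote work should remain post-pandemic.", "Opinion"),
]

counts = monthly_category_counts(rows)
for bucket in sorted(counts):
    print(bucket, dict(counts[bucket]))
```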

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abdalgader, K.; Matroud, A.A.; Al-Doboni, G. Temporal Dynamics in Short Text Classification: Enhancing Semantic Understanding Through Time-Aware Model. Information 2025, 16, 214. https://doi.org/10.3390/info16030214
