Translation in the Wild

Abstract

1. Introduction

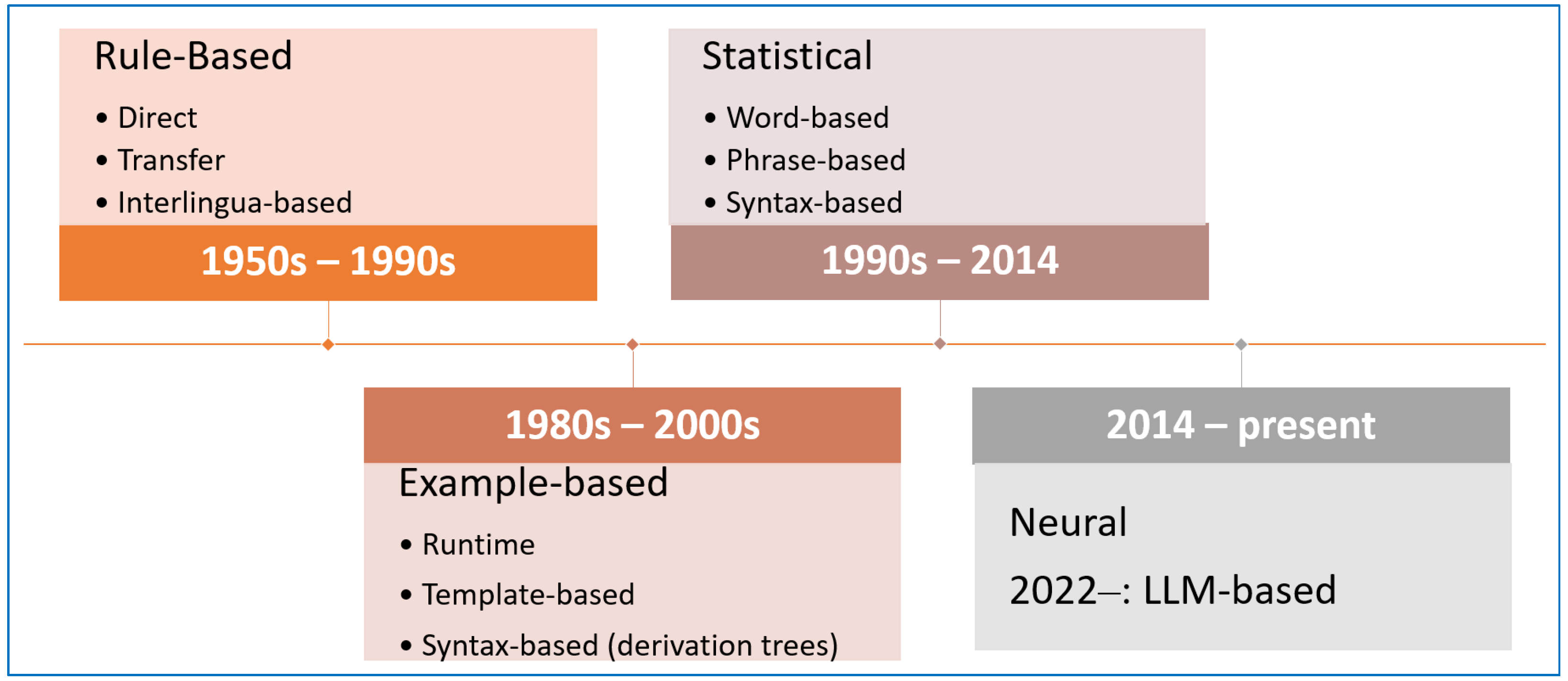

2. Translation Technologies: Brief History and Current State

3. NMT vs. LLMs: A Comparison

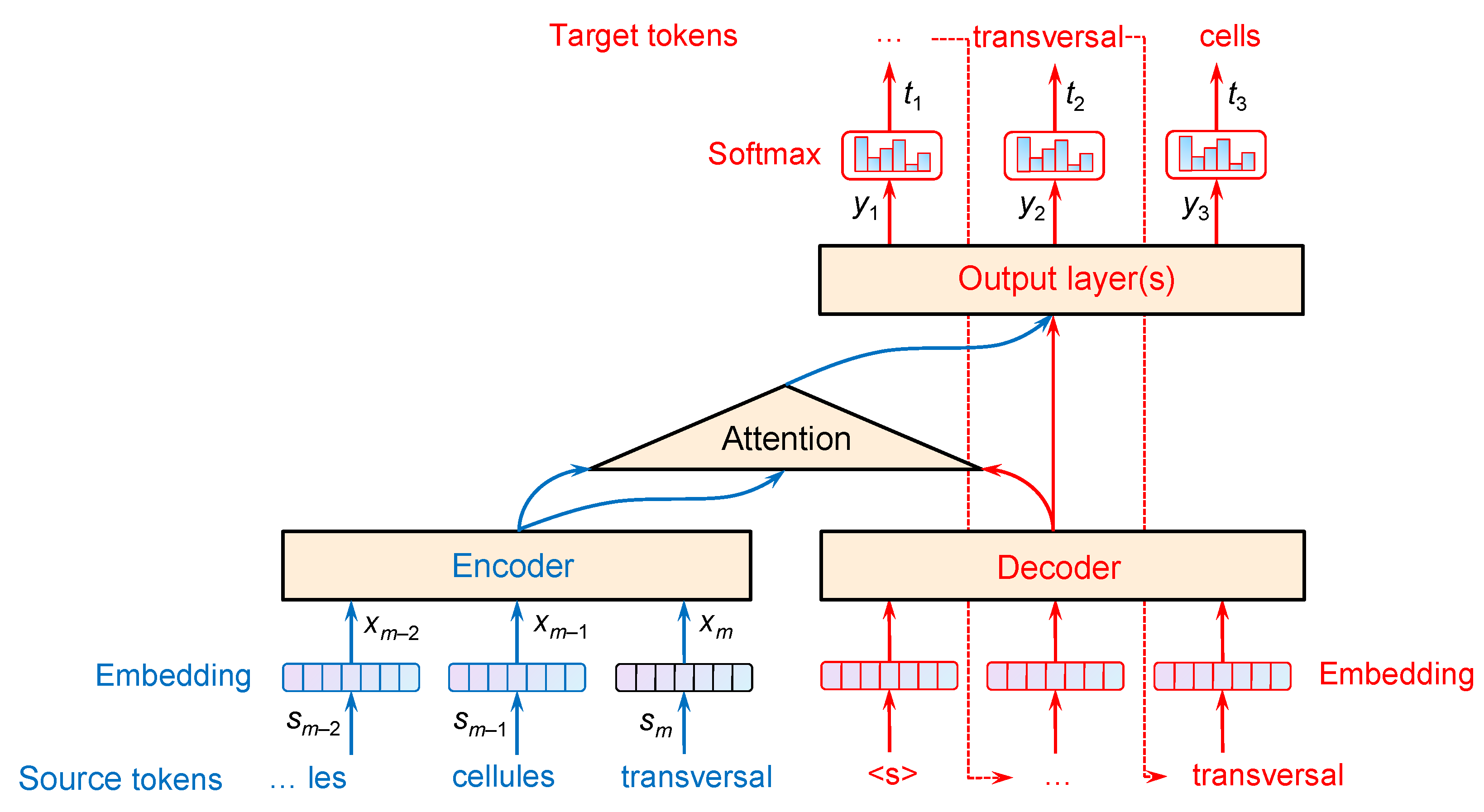

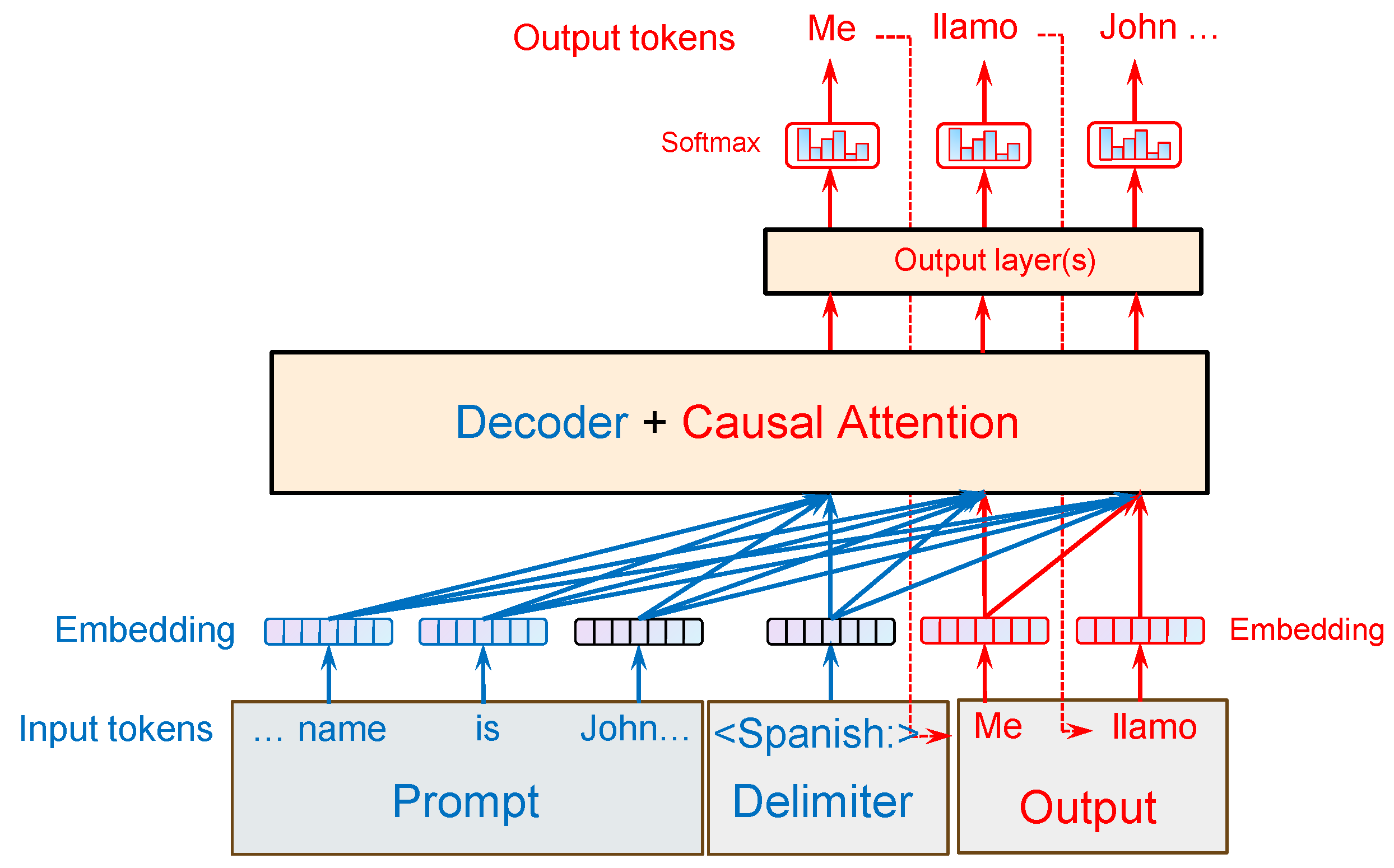

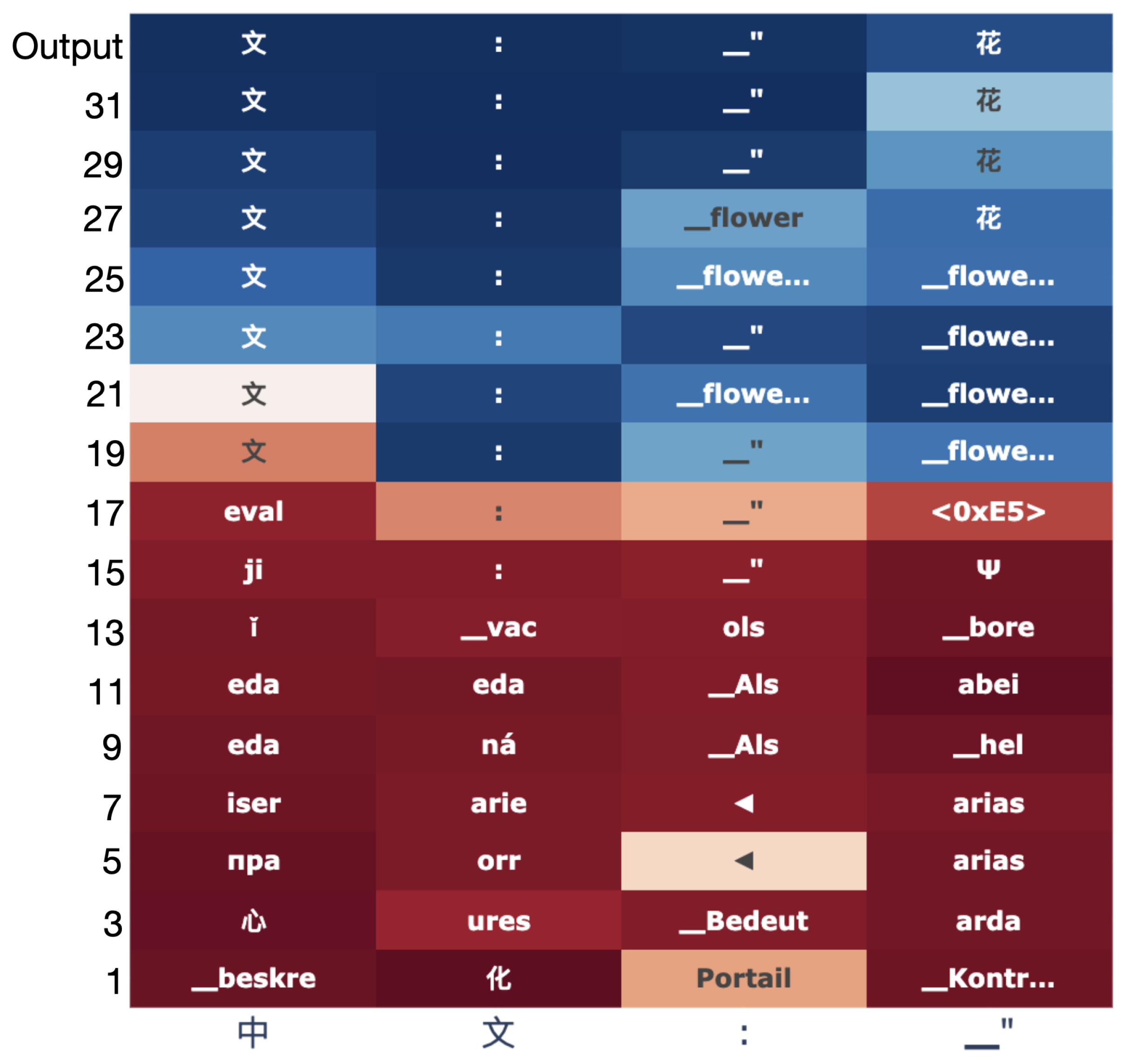

3.1. Model Architecture and Size

3.2. Training Data

3.3. Training Objective

3.4. Inference and Use

3.5. Quality and Evaluation

3.6. Multilingualism

3.7. Behavior and Errors

3.8. Specialization Versus Generalization

4. Recent Uses of LLMs in Translation Tasks

4.1. Zero- and Few-Shot Translation with LLMs
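Zero-shot and few-shot translation differ only in how many solved examples come before the sentence to be translated. The sketch below assembles a k-shot prompt and assumes a simple "Language: text" template. The template, the function name `build_translation_prompt` and the `Example` type are all hypothetical. They are not the format used by any particular study or model. With an empty example list, the function returns a zero-shot prompt.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    """One demonstration pair shown to the model before the test input."""
    source: str
    target: str


def build_translation_prompt(
    source_text: str,
    examples: List[Example],
    src_lang: str = "English",
    tgt_lang: str = "French",
) -> str:
    """Assemble a k-shot translation prompt (k = len(examples); k = 0 is zero-shot).

    The template is a hypothetical illustration; real studies vary the
    instruction wording, separators, and choice of demonstrations.
    """
    lines = [f"Translate the following {src_lang} text into {tgt_lang}.", ""]
    for ex in examples:
        lines.append(f"{src_lang}: {ex.source}")
        lines.append(f"{tgt_lang}: {ex.target}")
        lines.append("")  # blank line separates demonstrations
    # The test input ends with an open target slot for the model to complete.
    lines.append(f"{src_lang}: {source_text}")
    lines.append(f"{tgt_lang}:")
    return "\n".join(lines)


if __name__ == "__main__":
    shots = [Example("Good morning.", "Bonjour."), Example("Thank you.", "Merci.")]
    print(build_translation_prompt("See you tomorrow.", shots))
```

In use, the model's continuation after the final `French:` would be read as the translation, usually cut off at the first newline.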

4.2. Low-Resource Languages

4.3. Pivoting and Intermediate Languages

4.4. Chain-of-Thought Prompting for Translation
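Chain-of-thought prompting for translation breaks the task into explicit intermediate steps instead of asking for the target text in a single pass. Among the cited works, iterative refinement (Chen et al., 2024) and step-by-step decomposition (Briakou et al., 2024) are variants of this idea.

The sketch below assumes a hypothetical `generate` function that sends a prompt to some LLM and returns its text. The three stages (notes, draft, refinement) and all prompt wording are illustrative assumptions. They are not the protocol of any cited study.

```python
from typing import Callable, List, Tuple


def translate_step_by_step(
    source: str,
    src_lang: str,
    tgt_lang: str,
    generate: Callable[[str], str],
    refine_rounds: int = 1,
) -> Tuple[str, List[str]]:
    """Run a hypothetical notes -> draft -> refine prompting pipeline.

    `generate` is a placeholder for any text-in, text-out LLM call.
    Returns the final translation and the output of every stage.
    """
    trace: List[str] = []
    # Stage 1: elicit intermediate reasoning about difficult source material.
    notes = generate(
        f"List terms, idioms, and ambiguities in this {src_lang} text "
        f"that need care when translating into {tgt_lang}:\n{source}"
    )
    trace.append(notes)
    # Stage 2: draft a translation conditioned on those notes.
    draft = generate(
        f"Notes:\n{notes}\n\nTranslate this {src_lang} text into {tgt_lang}:\n{source}"
    )
    trace.append(draft)
    # Stage 3: revise the draft against the source, possibly several times.
    for _ in range(refine_rounds):
        draft = generate(
            f"Source ({src_lang}):\n{source}\n\nDraft ({tgt_lang}):\n{draft}\n\n"
            "Correct any errors and improve fluency. Return only the revised translation."
        )
        trace.append(draft)
    return draft, trace
```

Passing a stub for `generate` lets the control flow be checked without a model. The returned `trace` keeps every intermediate output available for inspection.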

4.5. Multilingual Latent Representations and Cross-Lingual Tasks

5. How Do LLMs Translate? Where Does Translation Happen in Them?

5.1. In-Context Translation

5.2. Cross-Lingual Representation Alignment

5.3. Do LLMs Pivot Through English on Their Own?
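Work on whether LLMs pivot through English, such as Wendler et al. (2024), uses the logit lens (Nostalgebraist, 2020). Each intermediate layer's hidden state is passed through the model's final normalization and unembedding matrix. The top-scoring token then shows what that layer would predict if decoding stopped there.

The sketch below reproduces only that decoding step, on a hand-built toy. The vectors, the three-word vocabulary and the gain-free RMS normalization are assumptions for illustration, not values from any real model. In the toy, an intermediate layer decodes to the English word before the last layer decodes to the French target, which is the kind of pattern such studies probe for.

```python
import math
from typing import List, Sequence


def rms_norm(v: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Scale a vector to unit root-mean-square (no learned gain, unlike real models)."""
    scale = math.sqrt(sum(x * x for x in v) / len(v) + eps)
    return [x / scale for x in v]


def logit_lens(
    hidden_states: Sequence[Sequence[float]],
    unembed: Sequence[Sequence[float]],
    vocab: Sequence[str],
) -> List[str]:
    """Decode each layer's hidden state with the final norm and unembedding.

    hidden_states: one residual-stream vector per layer (toy values here).
    unembed: one row per vocabulary item, same width as the hidden states.
    Returns the top-1 token each layer would predict if decoding stopped there.
    """
    decoded = []
    for h in hidden_states:
        normed = rms_norm(h)
        logits = [sum(w * x for w, x in zip(row, normed)) for row in unembed]
        best = max(range(len(vocab)), key=lambda i: logits[i])
        decoded.append(vocab[best])
    return decoded


if __name__ == "__main__":
    # Hypothetical 2-d toy: rows of `unembed` act as token directions.
    vocab = ["fleur", "flower", "the"]
    unembed = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    layers = [[-0.5, -0.4], [0.2, 0.9], [0.9, 0.3]]
    print(logit_lens(layers, unembed, vocab))  # ['the', 'flower', 'fleur']
```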

5.4. Language-Agnostic Representation?

6. What Explains the Origin of LLMs’ Translation Abilities?

6.1. The Minimal Role of Instruction Tuning

6.2. Incidental Bilingualism in Training Data

6.3. Global and Local Learning

- Local learning: acquisition of translation ability from bilingual signals that co-occur within a single training context window (e.g., an English sentence followed shortly by its translation).

- Global learning: alignment of semantically related monolingual content distributed across the training corpus, not necessarily co-occurring in a single window.

- The two processes interact iteratively over successive training batches.
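One way to make the distinction concrete is to ask which training windows could carry a local signal at all. The sketch below makes two simplifying assumptions. Segments arrive already tagged with a language ID, and they are packed into non-overlapping windows of fixed size. The function name is hypothetical.

Under these assumptions, windows that mix languages are where a local bilingual signal can appear. Single-language windows can contribute to cross-lingual alignment only through the global route.

```python
from collections import Counter
from itertools import combinations
from typing import Sequence, Tuple


def window_language_stats(
    segment_langs: Sequence[str], window: int
) -> Tuple[Counter, int]:
    """Split a stream of language-tagged segments into fixed-size context windows.

    segment_langs: language tag of each consecutive segment (e.g. sentence)
        in a packed training stream; the tags are assumed to be given.
    window: number of segments per context window (non-overlapping).

    Returns (pair_counts, monolingual_windows): how many windows contain each
    unordered language pair, and how many windows contain only one language.
    """
    pair_counts: Counter = Counter()
    monolingual = 0
    for start in range(0, len(segment_langs), window):
        langs = sorted(set(segment_langs[start:start + window]))
        if len(langs) == 1:
            monolingual += 1
        # Every language pair sharing this window is a potential local signal.
        for pair in combinations(langs, 2):
            pair_counts[pair] += 1
    return pair_counts, monolingual
```

Co-occurrence is only a necessary condition, because a mixed window need not contain an actual translation pair. The window size also matters. The same stream `["en", "en", "fr", "de", "de", "de"]` yields an English-French window when split into windows of three segments, but a German-French window when split into windows of two.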

6.4. Empirical Implications of Global and Local Learning

6.4.1. Style of Translation Outputs

6.4.2. Dependence on Model Scale and Data

6.4.3. Generalization to Unseen Language Pairs and Domains

7. Prospects for Empirical Testing

7.1. Selective Ablation of Fine-Tuning Data

7.2. Synthetic Tasks and Controlled Probes

7.3. Human Evaluation and Error Analysis

7.4. Feasible Settings and Metrics
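chrF (Popović, 2015) compares character n-grams rather than word n-grams. This makes it less dependent on tokenization and morphology than word-based BLEU (Papineni et al., 2002).

The sketch below computes the sentence-level score under commonly used defaults: n-gram orders 1 to 6 and beta = 2. Whitespace is removed before n-gram extraction. It skips n-gram orders longer than either string, so scores on very short segments may differ from maintained implementations such as sacreBLEU. Those implementations are preferable for reported results.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Character n-gram counts, with whitespace removed before extraction."""
    chars = "".join(text.split())
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def chrf(hypothesis: str, reference: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Sentence-level chrF on a 0-100 scale.

    Precision and recall are averaged over n-gram orders 1..max_n, then
    combined into an F-beta score, where recall counts beta times as much
    as precision. Orders longer than either string are skipped.
    """
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        hyp_total, ref_total = sum(hyp.values()), sum(ref.values())
        if hyp_total == 0 or ref_total == 0:
            continue
        matched = sum((hyp & ref).values())  # clipped matches: min count per n-gram
        precisions.append(matched / hyp_total)
        recalls.append(matched / ref_total)
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p == 0.0 and r == 0.0:
        return 0.0
    b2 = beta ** 2
    return 100.0 * (1 + b2) * p * r / (b2 * p + r)
```

Identical strings score 100 and strings with no shared characters score 0. Because beta = 2, a hypothesis that omits part of the reference is penalized more than one that adds extra material.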

8. Reconceptualizing Translation in the Age of Deep Learning

8.1. Pluralism and Duality in Translation

8.2. Emergent Translation Competence?

8.3. Opacity and Interpretability: Translation as a Black Box

8.4. Continuities with Translation Studies and Distributional Semantics

9. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. In Proceedings of the 27th International Conference on Neural Information Processing Systems NIPS’14—Volume 2, Cambridge, MA, USA, 8–13 December 2014; pp. 3104–3112.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Luong, T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1412–1421.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems 30; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 5998–6008.

- Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 1715–1725.

- Kudo, T.; Richardson, J. SentencePiece: A simple and language independent subword tokenizer and detokenizer for Neural Text Processing. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Brussels, Belgium, 31 October–4 November 2018; pp. 66–71.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics—ACL ’02, Philadelphia, PA, USA, 7–12 July 2002; p. 311.

- Ataman, D.; Birch, A.; Habash, N.; Federico, M.; Koehn, P.; Cho, K. Machine Translation in the Era of Large Language Models: A Survey of Historical and Emerging Problems. Information 2025, 16, 723.

- Blevins, T.; Zettlemoyer, L. Language Contamination Helps Explains the Cross-lingual Capabilities of English Pretrained Models. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 3563–3574.

- Lin, X.V.; Mihaylov, T.; Artetxe, M.; Wang, T.; Chen, S.; Simig, D.; Ott, M.; Goyal, N.; Bhosale, S.; Du, J.; et al. Few-shot Learning with Multilingual Generative Language Models. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 9019–9052.

- Wei, J.; Tay, Y.; Bommasani, R.; Raffel, C.; Zoph, B.; Borgeaud, S.; Yogatama, D.; Bosma, M.; Zhou, D.; Metzler, D.; et al. Emergent Abilities of Large Language Models. arXiv 2022, arXiv:2206.07682.

- Morris, J.X.; Sitawarin, C.; Guo, C.; Kokhlikyan, N.; Suh, G.E.; Rush, A.M.; Chaudhuri, K.; Mahloujifar, S. How much do language models memorize? arXiv 2025, arXiv:2505.24832.

- Briakou, E.; Cherry, C.; Foster, G. Searching for Needles in a Haystack: On the Role of Incidental Bilingualism in PaLM’s Translation Capability. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 9432–9452.

- Chua, L.; Ghazi, B.; Huang, Y.; Kamath, P.; Kumar, R.; Manurangsi, P.; Sinha, A.; Xie, C.; Zhang, C. Crosslingual Capabilities and Knowledge Barriers in Multilingual Large Language Models. arXiv 2024, arXiv:2406.16135.

- Gao, C.; Lin, H.; Huang, X.; Han, X.; Feng, J.; Deng, C.; Chen, J.; Huang, S. Understanding LLMs’ Cross-Lingual Context Retrieval: How Good It Is And Where It Comes From. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing, Suzhou, China, 4–9 November 2025; pp. 22808–22837.

- Snell-Hornby, M. The Turns of Translation Studies; Benjamins Translation Library, John Benjamins Publishing Company: Amsterdam, The Netherlands; Philadelphia, PA, USA, 2006.

- Venuti, L. Translation Changes Everything: Theory and Practice; Routledge: London, UK; New York, NY, USA, 2013.

- Munday, J.; Pinto, S.R.; Blakesley, J. Introducing Translation Studies: Theories and Applications, 5th ed.; Routledge: Abingdon, UK; New York, NY, USA, 2022.

- Rothwell, A.; Moorkens, J.; Fernández-Parra, M.; Drugan, J.; Austermühl, F. Translation Tools and Technologies, 1st ed.; Routledge Introductions to Translation and Interpreting, Routledge: Abingdon, UK; New York, NY, USA, 2023.

- Moorkens, J.; Way, A.; Lankford, S. Automating Translation; Routledge Introductions to Translation and Interpreting, Routledge: Abingdon, UK; New York, NY, USA, 2025.

- Balashov, Y. The Translator’s Extended Mind. Minds Mach. 2020, 30, 349–383.

- Hutchins, W.J. Machine Translation: Past, Present, Future; Ellis Horwood Series in Computers and Their Applications; Ellis Horwood: Chichester, UK; Halsted Press: New York, NY, USA, 1986.

- Jurafsky, D.; Martin, J.H. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition, 2nd ed.; Prentice Hall Series in Artificial Intelligence; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2009.

- Chan, S.-W. The Routledge Encyclopedia of Translation Technology; Routledge: London, UK; New York, NY, USA, 2015.

- Koehn, P. Neural Machine Translation, 1st ed.; Cambridge University Press: New York, NY, USA, 2020.

- Mercan, H.; Akgün, Y.; Odacıoğlu, M. The Evolution of Machine Translation: A Review Study. Int. J. Lang. Transl. Stud. 2024, 4, 104–116.

- Makarenko, Y. 5 Best Machine Translation Software. Crowdin Blog, 8 August 2025. Available online: https://crowdin.com/blog/best-machine-translation-software (accessed on 27 November 2025).

- Big Language Solutions. Why NMT Still Leads: Smarter, Faster, and Safer Translation in the Age of AI. BIG Language Solutions, 16 June 2025. Available online: https://biglanguage.com/insights/blog/why-nmt-still-leads-smarter-faster-and-safer-translation-in-the-age-of-ai/ (accessed on 27 November 2025).

- Kocmi, T.; Federmann, C. Large Language Models Are State-of-the-Art Evaluators of Translation Quality. In Proceedings of the 24th Annual Conference of the European Association for Machine Translation, Tampere, Finland, 12–15 June 2023; pp. 193–203.

- Fernandes, P.; Deutsch, D.; Finkelstein, M.; Riley, P.; Martins, A.; Neubig, G.; Garg, A.; Clark, J.; Freitag, M.; Firat, O. The Devil Is in the Errors: Leveraging Large Language Models for Fine-grained Machine Translation Evaluation. In Proceedings of the Eighth Conference on Machine Translation, Singapore, 6–7 December 2023; pp. 1066–1083.

- Lu, Q.; Qiu, B.; Ding, L.; Zhang, K.; Kocmi, T.; Tao, D. Error Analysis Prompting Enables Human-Like Translation Evaluation in Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 8801–8816.

- Berger, N.; Riezler, S.; Exel, M.; Huck, M. Prompting Large Language Models with Human Error Markings for Self-Correcting Machine Translation. In Proceedings of the 25th Annual Conference of the European Association for Machine Translation (Volume 1), Sheffield, UK, 24–27 June 2024; pp. 636–646.

- Feng, Z.; Zhang, Y.; Li, H.; Wu, B.; Liao, J.; Liu, W.; Lang, J.; Feng, Y.; Wu, J.; Liu, Z. TEaR: Improving LLM-based Machine Translation with Systematic Self-Refinement. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2025, Albuquerque, NM, USA, 29 April–4 May 2025; pp. 3922–3938.

- Raunak, V.; Sharaf, A.; Wang, Y.; Awadalla, H.; Menezes, A. Leveraging GPT-4 for Automatic Translation Post-Editing. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 12009–12024.

- Ki, D.; Carpuat, M. Guiding Large Language Models to Post-Edit Machine Translation with Error Annotations. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2024, Mexico City, Mexico, 16–21 June 2024; pp. 4253–4273.

- Alves, D.M.; Pombal, J.; Guerreiro, N.M.; Martins, P.H.; Alves, J.; Farajian, A.; Peters, B.; Rei, R.; Fernandes, P.; Agrawal, S.; et al. Tower: An Open Multilingual Large Language Model for Translation-Related Tasks. arXiv 2024, arXiv:2402.17733.

- Ghazvininejad, M.; Gonen, H.; Zettlemoyer, L. Dictionary-based Phrase-level Prompting of Large Language Models for Machine Translation. arXiv 2023, arXiv:2302.07856.

- Rios, M. Instruction-tuned Large Language Models for Machine Translation in the Medical Domain. In Proceedings of the Machine Translation Summit XX: Volume 1, Geneva, Switzerland, 23–27 June 2025; pp. 162–172.

- Sia, S.; Duh, K. In-context Learning as Maintaining Coherency: A Study of On-the-fly Machine Translation Using Large Language Models. In Proceedings of the Machine Translation Summit XIX, Volume 1: Research Track, Macau, China, 4–8 September 2023; pp. 173–185.

- Zheng, J.; Hong, H.; Liu, F.; Wang, X.; Su, J.; Liang, Y.; Wu, S. Fine-tuning Large Language Models for Domain-specific Machine Translation. arXiv 2024, arXiv:2402.15061.

- Moslem, Y.; Haque, R.; Kelleher, J.D.; Way, A. Adaptive Machine Translation with Large Language Models. In Proceedings of the 24th Annual Conference of the European Association for Machine Translation, Tampere, Finland, 12–15 June 2023; pp. 227–237.

- Moslem, Y. Language Modelling Approaches to Adaptive Machine Translation. arXiv 2024, arXiv:2401.14559.

- Vieira, I.; Allred, W.; Lankford, S.; Castilho, S.; Way, A. How Much Data is Enough Data? Fine-Tuning Large Language Models for In-House Translation: Performance Evaluation Across Multiple Dataset Sizes. In Proceedings of the 16th Conference of the Association for Machine Translation in the Americas (Volume 1: Research Track), Chicago, IL, USA, 28 September–2 October 2024; pp. 236–249.

- Ding, Q.; Cao, H.; Feng, Z.; Yang, M.; Zhao, T. Enhancing bilingual lexicon induction via harnessing polysemous words. Neurocomputing 2025, 611, 128682.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.H.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. In Proceedings of the 36th International Conference on Neural Information Processing Systems, NIPS ’22, New Orleans, LA, USA, 28 November–9 December 2022.

- Chen, P.; Guo, Z.; Haddow, B.; Heafield, K. Iterative Translation Refinement with Large Language Models. In Proceedings of the 25th Annual Conference of the European Association for Machine Translation (Volume 1), Sheffield, UK, 24–27 June 2024; pp. 181–190.

- He, Z.; Liang, T.; Jiao, W.; Zhang, Z.; Yang, Y.; Wang, R.; Tu, Z.; Shi, S.; Wang, X. Exploring Human-Like Translation Strategy with Large Language Models. Trans. Assoc. Comput. Linguist. 2024, 12, 229–246.

- Briakou, E.; Luo, J.; Cherry, C.; Freitag, M. Translating Step-by-Step: Decomposing the Translation Process for Improved Translation Quality of Long-Form Texts. In Proceedings of the Ninth Conference on Machine Translation, Miami, FL, USA, 15–16 November 2024; pp. 1301–1317.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; et al. PaLM: Scaling Language Modeling with Pathways. J. Mach. Learn. Res. 2023, 24, 11324–11436.

- Jiao, W.; Wang, W.; Huang, J.t.; Wang, X.; Tu, Z. Is ChatGPT A Good Translator? Yes With GPT-4 As The Engine. arXiv 2023, arXiv:2301.08745.

- Vilar, D.; Freitag, M.; Cherry, C.; Luo, J.; Ratnakar, V.; Foster, G. Prompting PaLM for Translation: Assessing Strategies and Performance. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 15406–15427.

- Peng, K.; Ding, L.; Zhong, Q.; Shen, L.; Liu, X.; Zhang, M.; Ouyang, Y.; Tao, D. Towards Making the Most of ChatGPT for Machine Translation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 5622–5633.

- Zhang, B.; Haddow, B.; Birch, A. Prompting Large Language Model for Machine Translation: A Case Study. arXiv 2023, arXiv:2301.07069.

- Lyu, C.; Xu, J.; Wang, L. New Trends in Machine Translation using Large Language Models: Case Examples with ChatGPT. arXiv 2023, arXiv:2305.01181.

- Balashov, Y.; Balashov, A.; Koski, S.F. Translation Analytics for Freelancers: I. Introduction, Data Preparation, Baseline Evaluations. In Proceedings of the Machine Translation Summit XX, Geneva, Switzerland, 23–27 June 2025; Bouillon, P., Gerlach, J., Girletti, S., Volkart, L., Rubino, R., Sennrich, R., Farinha, A.C., Gaido, M., Daems, J., Kenny, D., et al., Eds.; European Association for Machine Translation: Geneva, Switzerland, 2025; Volume 1, pp. 538–565.

- Zhang, X.; Rajabi, N.; Duh, K.; Koehn, P. Machine Translation with Large Language Models: Prompting, Few-shot Learning, and Fine-tuning with QLoRA. In Proceedings of the Eighth Conference on Machine Translation, Singapore, 6–7 December 2023; pp. 468–481.

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient Finetuning of Quantized LLMs. In Proceedings of the 37th International Conference on Neural Information Processing Systems, NIPS ’23, Red Hook, NY, USA, 10–16 December 2023.

- NLLB Team; Costa-jussà, M.R.; Cross, J.; Çelebi, O.; Elbayad, M.; Heafield, K.; Heffernan, K.; Kalbassi, E.; Lam, J.; Licht, D.; et al. No Language Left Behind: Scaling Human-Centered Machine Translation. arXiv 2022, arXiv:2207.04672.

- Zhu, W.; Liu, H.; Dong, Q.; Xu, J.; Huang, S.; Kong, L.; Chen, J.; Li, L. Multilingual Machine Translation with Large Language Models: Empirical Results and Analysis. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2024, Mexico City, Mexico, 16–21 June 2024; pp. 2765–2781.

- Hendy, A.; Abdelrehim, M.; Sharaf, A.; Raunak, V.; Gabr, M.; Matsushita, H.; Kim, Y.J.; Afify, M.; Awadalla, H.H. How Good Are GPT Models at Machine Translation? A Comprehensive Evaluation. arXiv 2023, arXiv:2302.09210.

- Sinitsyna, D. Generative AI for Translation in 2025. Intento, 30 March 2025. Available online: https://inten.to/blog/generative-ai-for-translation-in-2025/ (accessed on 27 November 2025).

- Sizov, F.; España-Bonet, C.; Van Genabith, J.; Xie, R.; Dutta Chowdhury, K. Analysing Translation Artifacts: A Comparative Study of LLMs, NMTs, and Human Translations. In Proceedings of the Ninth Conference on Machine Translation, Miami, FL, USA, 15–16 November 2024; pp. 1183–1199.

- Kong, M.; Fernandez, A.; Bains, J.; Milisavljevic, A.; Brooks, K.C.; Shanmugam, A.; Avilez, L.; Li, J.; Honcharov, V.; Yang, A.; et al. Evaluation of the accuracy and safety of machine translation of patient-specific discharge instructions: A comparative analysis. BMJ Qual. Saf. 2025.

- Meta. The Llama 4 Herd: The Beginning of a New Era of Natively Multimodal AI Innovation. 2025. Available online: https://ai.meta.com/blog/llama-4-multimodal-intelligence/ (accessed on 27 November 2025).

- Cui, M.; Gao, P.; Liu, W.; Luan, J.; Wang, B. Multilingual Machine Translation with Open Large Language Models at Practical Scale: An Empirical Study. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Albuquerque, NM, USA, 30 April–1 May 2025; pp. 5420–5443.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Castilho, S.; Mallon, C.Q.; Meister, R.; Yue, S. Do online Machine Translation Systems Care for Context? What About a GPT Model? In Proceedings of the 24th Annual Conference of the European Association for Machine Translation, Tampere, Finland, 12–15 June 2023; pp. 393–417.

- Savenkov, K. GPT-3 Translation Capabilities. 2023. Available online: https://inten.to/blog/gpt-3-translation-capabilities/ (accessed on 27 November 2025).

- Garcia, X.; Bansal, Y.; Cherry, C.; Foster, G.; Krikun, M.; Feng, F.; Johnson, M.; Firat, O. The unreasonable effectiveness of few-shot learning for machine translation. arXiv 2023, arXiv:2302.01398.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

- Jiao, W.; Huang, J.t.; Wang, W.; He, Z.; Liang, T.; Wang, X.; Shi, S.; Tu, Z. ParroT: Translating during Chat using Large Language Models tuned with Human Translation and Feedback. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 15009–15020.

- Lu, Q.; Ding, L.; Zhang, K.; Zhang, J.; Tao, D. MQM-APE: Toward High-Quality Error Annotation Predictors with Automatic Post-Editing in LLM Translation Evaluators. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 5570–5587.

- Treviso, M.V.; Guerreiro, N.M.; Agrawal, S.; Rei, R.; Pombal, J.; Vaz, T.; Wu, H.; Silva, B.; Stigt, D.V.; Martins, A. xTower: A Multilingual LLM for Explaining and Correcting Translation Errors. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; pp. 15222–15239.

- Zebaze, A.R.; Sagot, B.; Bawden, R. Compositional Translation: A Novel LLM-based Approach for Low-resource Machine Translation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2025, Suzhou, China, 4–9 November 2025; pp. 22328–22357.

- Blum, C.; Filippova, K.; Yuan, A.; Ghandeharioun, A.; Zimmert, J.; Zhang, F.; Hoffmann, J.; Linzen, T.; Wattenberg, M.; Dixon, L.; et al. Beyond the Rosetta Stone: Unification Forces in Generalization Dynamics. arXiv 2025, arXiv:2508.11017.

- Ravisankar, K.; Han, H.; Carpuat, M. Can you map it to English? The Role of Cross-Lingual Alignment in Multilingual Performance of LLMs. arXiv 2025, arXiv:2504.09378.

- Dong, Q.; Li, L.; Dai, D.; Zheng, C.; Ma, J.; Li, R.; Xia, H.; Xu, J.; Wu, Z.; Chang, B.; et al. A Survey on In-context Learning. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; pp. 1107–1128.

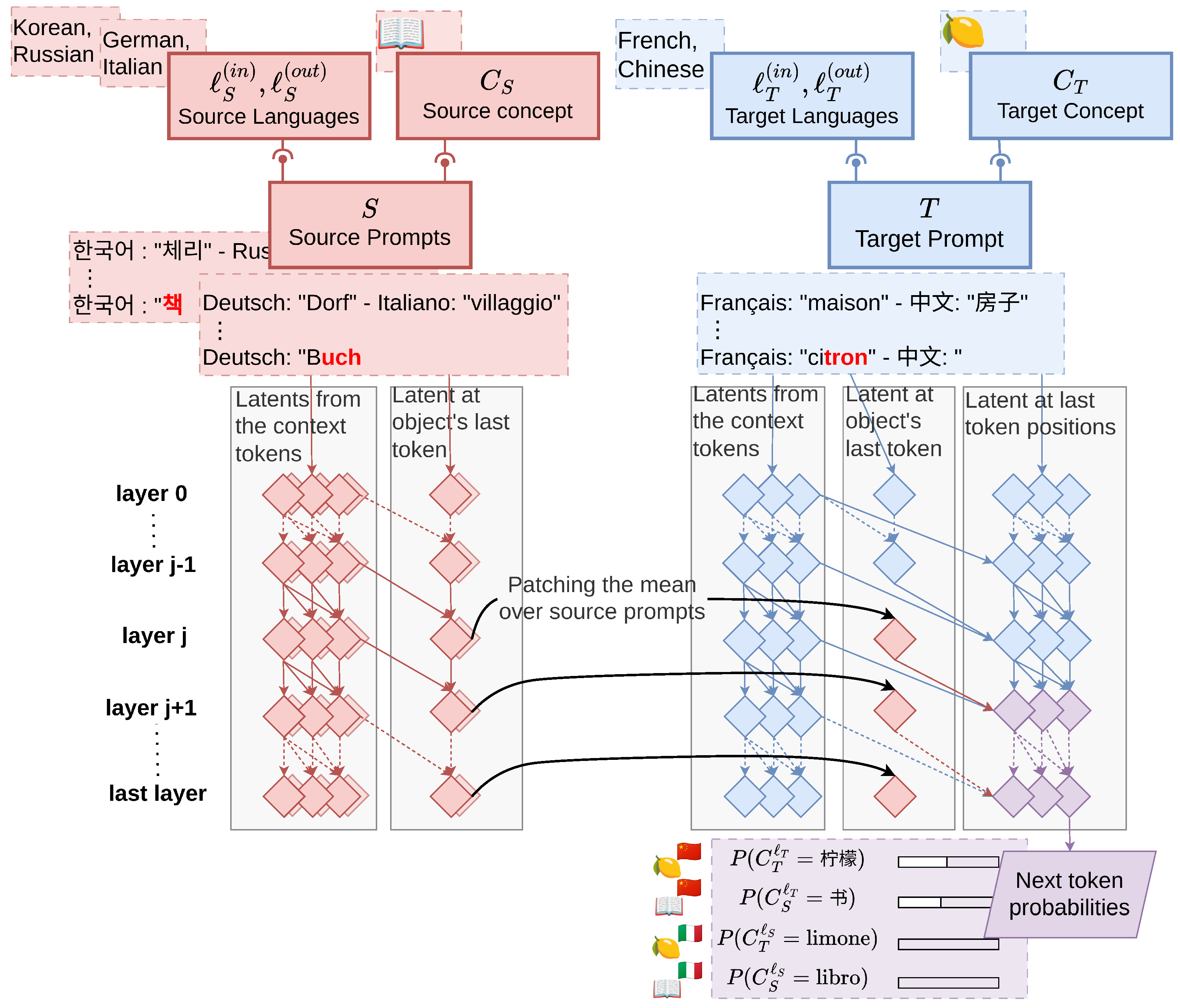

- Sia, S.; Mueller, D.; Duh, K. Where does In-context Translation Happen in Large Language Models. arXiv 2024, arXiv:2403.04510.

- Wen-Yi, A.W.; Mimno, D. Hyperpolyglot LLMs: Cross-Lingual Interpretability in Token Embeddings. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 1124–1131.

- Xue, L.; Constant, N.; Roberts, A.; Kale, M.; Al-Rfou, R.; Siddhant, A.; Barua, A.; Raffel, C. mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 483–498.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Hua, T.; Yun, T.; Pavlick, E. mOthello: When Do Cross-Lingual Representation Alignment and Cross-Lingual Transfer Emerge in Multilingual Models? In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2024, Mexico City, Mexico, 16–21 June 2024; pp. 1585–1598.

- Li, K.; Hopkins, A.K.; Bau, D.; Viégas, F.; Pfister, H.; Wattenberg, M. Emergent World Representations: Exploring a Sequence Model Trained on a Synthetic Task. arXiv 2024, arXiv:2210.13382.

- Artetxe, M.; Labaka, G.; Agirre, E. Unsupervised Statistical Machine Translation. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3632–3642.

- Søgaard, A.; Ruder, S.; Vulić, I. On the Limitations of Unsupervised Bilingual Dictionary Induction. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 June 2018; pp. 778–788.

- Conneau, A.; Khandelwal, K.; Goyal, N.; Chaudhary, V.; Wenzek, G.; Guzmán, F.; Grave, E.; Ott, M.; Zettlemoyer, L.; Stoyanov, V. Unsupervised Cross-lingual Representation Learning at Scale. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 8440–8451.

- Wendler, C.; Veselovsky, V.; Monea, G.; West, R. Do Llamas Work in English? On the Latent Language of Multilingual Transformers. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 15366–15394.

- Nostalgebraist. Interpreting GPT: The Logit Lens. 2020. Available online: https://www.lesswrong.com/posts/AcKRB8wDpdaN6v6ru/interpreting-gpt-the-logit-lens (accessed on 27 November 2025).

- Liu, S.; Lyu, C.; Wu, M.; Wang, L.; Luo, W.; Zhang, K.; Shang, Z. New Trends for Modern Machine Translation with Large Reasoning Models. arXiv 2025, arXiv:2503.10351.

- Chen, A.; Song, Y.; Zhu, W.; Chen, K.; Yang, M.; Zhao, T.; Zhang, M. Evaluating o1-Like LLMs: Unlocking Reasoning for Translation through Comprehensive Analysis. arXiv 2025, arXiv:2502.11544.

- Schut, L.; Gal, Y.; Farquhar, S. Do Multilingual LLMs Think In English? arXiv 2025, arXiv:2502.15603.

- Wang, M.; Adel, H.; Lange, L.; Liu, Y.; Nie, E.; Strötgen, J.; Schuetze, H. Lost in Multilinguality: Dissecting Cross-lingual Factual Inconsistency in Transformer Language Models. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vienna, Austria, 27 July–1 August 2025; pp. 5075–5094.

- Lim, Z.W.; Aji, A.F.; Cohn, T. Language-Specific Latent Process Hinders Cross-Lingual Performance. arXiv 2025, arXiv:2505.13141.

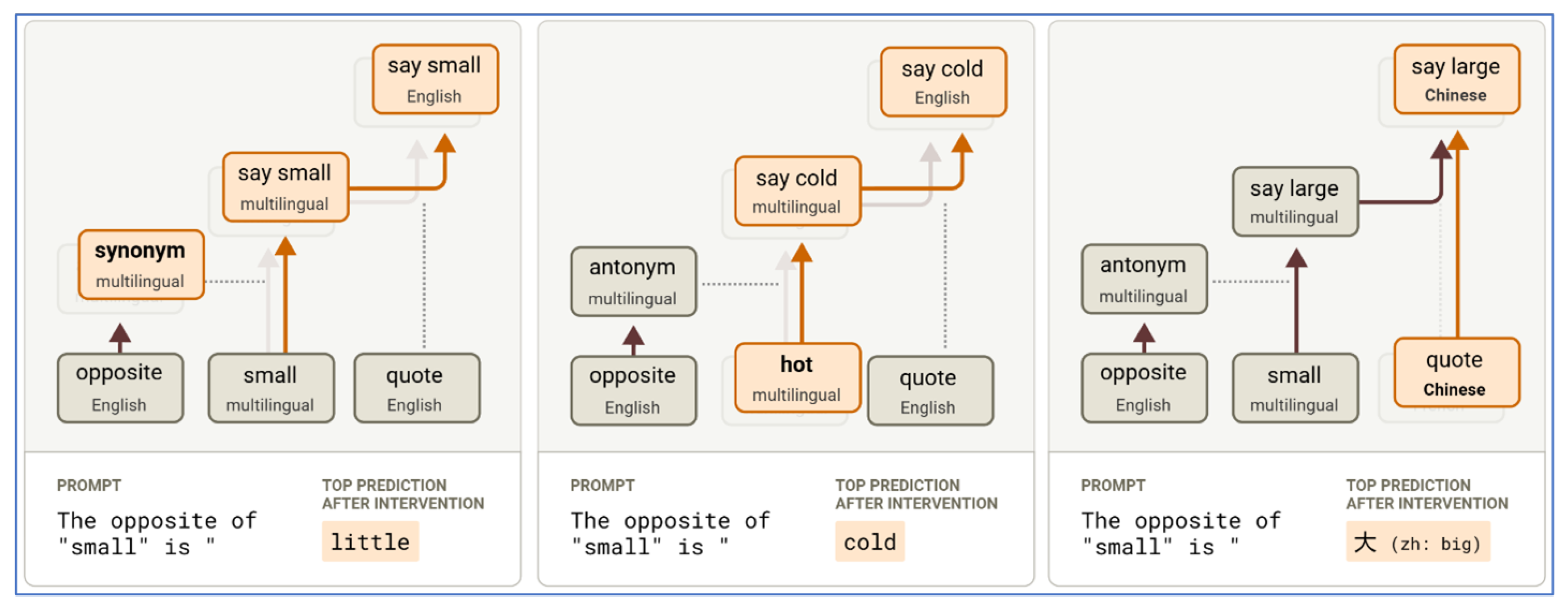

- Dumas, C.; Wendler, C.; Veselovsky, V.; Monea, G.; West, R. Separating Tongue from Thought: Activation Patching Reveals Language-Agnostic Concept Representations in Transformers. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vienna, Austria, 27 July–1 August 2025; pp. 31822–31841.

- Lindsey, J.; Gurnee, W.; Ameisen, E.; Chen, B.; Pearce, A.; Turner, N.L.; Citro, C.; Abrahams, D.; Carter, S.; Hosmer, B.; et al. On the Biology of a Large Language Model. Transform. Circuits Thread. 2025. Available online: https://transformer-circuits.pub/2025/attribution-graphs/biology.html (accessed on 27 November 2025).

- Partee, B. Compositionality. In Varieties of Formal Semantics: Proceedings of the Fourth Amsterdam Colloquium; Foris: Dordrecht, The Netherlands, 1984; pp. 281–311.

- Fodor, J. The Language of Thought; Harvard University Press: Cambridge, MA, USA, 1975.

- Zhang, R.; Yu, Q.; Zang, M.; Eickhoff, C.; Pavlick, E. The Same But Different: Structural Similarities and Differences in Multilingual Language Modeling. arXiv 2024, arXiv:2410.09223.

- Singh, S.; Vargus, F.; D’souza, D.; Karlsson, B.; Mahendiran, A.; Ko, W.Y.; Shandilya, H.; Patel, J.; Mataciunas, D.; O’Mahony, L.; et al. Aya Dataset: An Open-Access Collection for Multilingual Instruction Tuning. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 11521–11567.

- Reynolds, L.; McDonell, K. Prompt Programming for Large Language Models: Beyond the Few-Shot Paradigm. arXiv 2021, arXiv:2102.07350.

- Popović, M. chrF: Character n-gram F-score for automatic MT evaluation. In Proceedings of the Tenth Workshop on Statistical Machine Translation, Lisbon, Portugal, 17–18 September 2015; pp. 392–395.

- Kreutzer, J.; Caswell, I.; Wang, L.; Wahab, A.; van Esch, D.; Ulzii-Orshikh, N.; Tapo, A.; Subramani, N.; Sokolov, A.; Sikasote, C.; et al. Quality at a Glance: An Audit of Web-Crawled Multilingual Datasets. Trans. Assoc. Comput. Linguist. 2022, 10, 50–72.

- Thompson, B.; Dhaliwal, M.; Frisch, P.; Domhan, T.; Federico, M. A Shocking Amount of the Web is Machine Translated: Insights from Multi-Way Parallelism. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 1763–1775.

- Kocyigit, M.Y.; Briakou, E.; Deutsch, D.; Luo, J.; Cherry, C.; Freitag, M. Overestimation in LLM Evaluation: A Controlled Large-Scale Study on Data Contamination’s Impact on Machine Translation. arXiv 2025, arXiv:2501.18771.

- Liu, Y.; Gu, J.; Goyal, N.; Li, X.; Edunov, S.; Ghazvininejad, M.; Lewis, M.; Zettlemoyer, L. Multilingual Denoising Pre-training for Neural Machine Translation. Trans. Assoc. Comput. Linguist. 2020, 8, 726–742.

- Mikolov, T.; Le, Q.V.; Sutskever, I. Exploiting Similarities among Languages for Machine Translation. arXiv 2013, arXiv:1309.4168.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum Likelihood from Incomplete Data Via the EM Algorithm. J. R. Stat. Soc. Ser. B Stat. Methodol. 1977, 39, 1–22.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet Allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Brown, P.F.; Della Pietra, S.A.; Della Pietra, V.J.; Mercer, R.L. The Mathematics of Statistical Machine Translation: Parameter Estimation. Comput. Linguist. 1993, 19, 263–311.

- Bureau Works. We Tested Chat GPT for Translation—Here’s the Data. Available online: https://www.bureauworks.com/blog/chatgpt-for-translation (accessed on 27 November 2025).

- Tang, K.; Song, P.; Qin, Y.; Yan, X. Creative and Context-Aware Translation of East Asian Idioms with GPT-4. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 11–16 November 2024; pp. 9285–9305.

- Yan, J.; Yan, P.; Chen, Y.; Li, J.; Zhu, X.; Zhang, Y. Benchmarking GPT-4 against Human Translators: A Comprehensive Evaluation Across Languages, Domains, and Expertise Levels. arXiv 2024, arXiv:2411.13775.

- Johnson, M.; Schuster, M.; Le, Q.V.; Krikun, M.; Wu, Y.; Chen, Z.; Thorat, N.; Viégas, F.; Wattenberg, M.; Corrado, G.; et al. Google’s Multilingual Neural Machine Translation System: Enabling Zero-Shot Translation. Trans. Assoc. Comput. Linguist. 2017, 5, 339–351.

- Enis, M.; Hopkins, M. From LLM to NMT: Advancing Low-Resource Machine Translation with Claude. arXiv 2024, arXiv:2404.13813.

- Conneau, A.; Wu, S.; Li, H.; Zettlemoyer, L.; Stoyanov, V. Emerging Cross-lingual Structure in Pretrained Language Models. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6022–6034.

- Aldosari, L.A.; Altuwairesh, N. Assessing the effects of translation prompts on the translation quality of GPT-4 Turbo using automated and human evaluation metrics: A case study. Perspectives 2025, 1–25.

- Zhang, H.; Chen, K.; Bai, X.; Li, X.; Xiang, Y.; Zhang, M. Exploring Translation Mechanism of Large Language Models. arXiv 2025, arXiv:2502.11806.

- Heimersheim, S.; Nanda, N. How to use and interpret activation patching. arXiv 2024, arXiv:2404.15255.

- Wang, K.R.; Variengien, A.; Conmy, A.; Shlegeris, B.; Steinhardt, J. Interpretability in the Wild: A Circuit for Indirect Object Identification in GPT-2 Small. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

- Lommel, A.; Gladkoff, S.; Melby, A.; Wright, S.E.; Strandvik, I.; Gasova, K.; Vaasa, A.; Benzo, A.; Marazzato Sparano, R.; Foresi, M.; et al. The Multi-Range Theory of Translation Quality Measurement: MQM scoring models and Statistical Quality Control. In Proceedings of the 16th Conference of the Association for Machine Translation in the Americas (Volume 2: Presentations), Chicago, IL, USA, 30 September–2 October 2024; pp. 75–94.

- Castilho, S.; Knowles, R. A survey of context in neural machine translation and its evaluation. Nat. Lang. Process. 2025, 31, 986–1016.

- Team OLMo; Walsh, P.; Soldaini, L.; Groeneveld, D.; Lo, K.; Arora, S.; Bhagia, A.; Gu, Y.; Huang, S.; Jordan, M.; et al. 2 OLMo 2 Furious. arXiv 2025, arXiv:2501.00656.

- OpenAI. Introducing GPT-OSS. 2025. Available online: https://openai.com/index/introducing-gpt-oss/ (accessed on 27 November 2025).

- Wang, Y.; Wu, A.; Neubig, G. English Contrastive Learning Can Learn Universal Cross-lingual Sentence Embeddings. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 9122–9133. [Google Scholar] [CrossRef]

- Elmakias, I.; Vilenchik, D. An Oblivious Approach to Machine Translation Quality Estimation. Mathematics 2021, 9, 2090. [Google Scholar] [CrossRef]

- Quine, W.V.O. Word and Object; MIT Press: Cambridge, MA, USA, 1960. [Google Scholar]

- Davidson, D. Radical Interpretation. Dialectica 1973, 27, 313–328. [Google Scholar] [CrossRef]

- Rawling, P.; Wilson, P. (Eds.) The Routledge Handbook of Translation and Philosophy; Routledge Handbooks in Translation and Interpreting Studies; Routledge: Abingdon, UK; New York, NY, USA, 2019.

- Brinkmann, J.; Wendler, C.; Bartelt, C.; Mueller, A. Large Language Models Share Representations of Latent Grammatical Concepts Across Typologically Diverse Languages. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Albuquerque, NM, USA, 29 April–4 May 2025; pp. 6131–6150.

- Nida, E. Toward a Science of Translating: With Special Reference to Principles and Procedures Involved in Bible Translating; E.J. Brill: Leiden, The Netherlands, 1964.

- Jakobson, R. On Linguistic Aspects of Translation. In On Translation; Brower, R., Ed.; MIT Press: Cambridge, MA, USA, 1959; pp. 232–239.

- Bernardini, S. Think-aloud protocols in translation research: Achievements, limits, future prospects. Target. Int. J. Transl. Stud. 2001, 13, 241–263.

- Xu, Y.; Hu, L.; Zhao, J.; Qiu, Z.; Xu, K.; Ye, Y.; Gu, H. A survey on multilingual large language models: Corpora, alignment, and bias. Front. Comput. Sci. 2025, 19, 1911362.

| | LoRA/QLoRA Fine-Tuning | Chain-of-Thought Prompting for Translation Tasks | Explicit Pivot Translation |
|---|---|---|---|
| Best used | When a stable, recurring translation task exists (e.g., for a specific client or domain with terminology constraints), modest compute is available, and privacy constraints permit retaining small parallel datasets. | When inputs are long or ambiguous and require disambiguation, terminology planning, or document-level coherence, and when a few extra tokens are affordable. | When the direct pair is low-resource but the source ↔ pivot and pivot ↔ target directions are strong (typically via English), and errors are mostly lexical rather than cultural or stylistic. |
| Strengths | Durable gains; controllable style and terminology. | Improves factual grounding, consistency, and error visibility; can explicitly guide pivoting or term choices. | Leverages high-resource links; simple to implement; useful as a fallback. |
| Costs/risks | Setup time, evaluation and monitoring, and maintenance of separate adapters per domain or language. | Higher latency and longer outputs; diminishing returns on simple sentences. | Compounding errors, loss of nuance, and exposure to pivot-language biases, which must be mitigated with targeted post-editing or terminology constraints. |
| Avoid | When the task is one-off, data are extremely scarce, or latency and storage constraints preclude adapters. | For very short, formulaic content or when token budgets are strict. | When the direct pair performs adequately, or when the target rendering cannot tolerate ambiguity introduced by the pivot language. |
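The "loss of nuance" and "pivot-language bias" risks listed for explicit pivot translation can be made concrete with a deliberately tiny sketch. Everything below is hypothetical: the word-by-word lexicons and the helpers `make_lexicon_translator`, `pivot_translate`, and `chained_accuracy` are illustrative stand-ins for real MT systems, not tools described in this article. French distinguishes informal *tu* from formal *vous*, but English collapses both into *you*, so a French → English → German chain cannot recover German *du* versus *Sie*, whereas a direct French → German mapping can. The `chained_accuracy` helper adds a further simplifying assumption, namely that the two hops fail independently.

```python
from typing import Callable, Dict

# A "translator" here is any function from source text to target text.
Translator = Callable[[str], str]


def make_lexicon_translator(lexicon: Dict[str, str]) -> Translator:
    """Return a toy word-by-word translator backed by a lookup table (hypothetical)."""

    def translate(text: str) -> str:
        # Map each whitespace-separated token; unknown tokens pass through unchanged.
        return " ".join(lexicon.get(token, token) for token in text.split())

    return translate


def pivot_translate(text: str, src_to_pivot: Translator, pivot_to_tgt: Translator) -> str:
    """Explicit pivot translation: source -> pivot (here English) -> target."""
    return pivot_to_tgt(src_to_pivot(text))


def chained_accuracy(p_src_pivot: float, p_pivot_tgt: float) -> float:
    """Rough success rate of a two-hop chain, ASSUMING the hops fail independently."""
    return p_src_pivot * p_pivot_tgt


# Toy lexicons (hypothetical). "vous" is read here as the formal singular "you".
fr_en = make_lexicon_translator({"tu": "you", "vous": "you", "es": "are", "êtes": "are", "gentil": "kind"})
en_de = make_lexicon_translator({"you": "du", "are": "bist", "kind": "nett"})
fr_de = make_lexicon_translator({"tu": "du", "vous": "Sie", "es": "bist", "êtes": "sind", "gentil": "nett"})

if __name__ == "__main__":
    for sentence in ["tu es gentil", "vous êtes gentil"]:
        direct = fr_de(sentence)
        pivoted = pivot_translate(sentence, fr_en, en_de)
        print(f"{sentence} | direct: {direct} | via English: {pivoted}")
    # Two hops that are each right 90% of the time succeed together only about 81% of the time.
    print(round(chained_accuracy(0.9, 0.9), 2))
```

Running it, the informal sentence survives the pivot, but the formal *vous êtes gentil* comes out as *du bist nett* via English instead of *Sie sind nett*. Keeping each hop as a plain callable means a real system could replace the toy lexicons without changing `pivot_translate`; the independence assumption behind `chained_accuracy` is a simplification, not an empirical estimate.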

| Method/Approach | Prediction/Evaluation |
|---|---|
| P1: Data manipulation | Fine-tuning on data with no parallel segments versus data containing only parallel segments should differentially affect translation style and generalization. Systematic double dissociations in performance would support the existence of dual pathways. Evaluation: standard automatic metrics (BLEU/chrF/COMET) plus targeted manual checks. |
| P2: Synthetic probes | In in-context learning settings, injecting a few parallel exemplars into the prompt should push the model into a more “Local/TM-like” mode, while shuffled cross-lingual contexts should help only if the model performs Global alignment “on the fly.” |
| P3: Mechanistic signatures | Mid-layer “task/meaning” circuits should separate from language-context circuits. Intervening on each (via techniques such as activation patching or related tools) should selectively degrade either “Global semantics” or “Local lexicon/memorization.” |
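Prediction P2 contrasts two prompt conditions, and the stimuli for such a probe are easy to generate. The sketch below assumes a simple line-based `Language: sentence` template and hypothetical helper names (`format_prompt`, `aligned_prompt`, `shuffled_prompt`); it is one possible setup, not a protocol prescribed by this article. The aligned condition presents exemplars as translation pairs. The shuffled control keeps exactly the same sentences but rotates the target side by a random non-zero offset, so no source sentence is followed by its own translation and any benefit would have to come from cross-lingual alignment performed on the fly.

```python
import random
from typing import List, Sequence, Tuple

Pair = Tuple[str, str]  # (source sentence, target sentence)


def format_prompt(pairs: Sequence[Pair], query: str, src_lang: str, tgt_lang: str) -> str:
    """Render exemplar pairs plus the query in a line-based template (hypothetical format)."""
    lines: List[str] = []
    for src, tgt in pairs:
        lines.append(f"{src_lang}: {src}")
        lines.append(f"{tgt_lang}: {tgt}")
    lines.append(f"{src_lang}: {query}")
    lines.append(f"{tgt_lang}:")  # the model would continue generating from here
    return "\n".join(lines)


def aligned_prompt(exemplars: Sequence[Pair], query: str, src_lang: str, tgt_lang: str) -> str:
    """'Local' condition: each source sentence is immediately followed by its translation."""
    return format_prompt(exemplars, query, src_lang, tgt_lang)


def shuffled_prompt(
    exemplars: Sequence[Pair], query: str, src_lang: str, tgt_lang: str, seed: int = 0
) -> str:
    """Control condition: same sentences, but no source is paired with its own translation.

    Rotating the target list by an offset in [1, n-1] moves every target to a
    different position, so every original pairing is broken.
    """
    if len(exemplars) < 2:
        raise ValueError("need at least two exemplars to break every pairing")
    rng = random.Random(seed)  # seeded for reproducible stimuli
    offset = rng.randrange(1, len(exemplars))
    sources = [src for src, _ in exemplars]
    targets = [tgt for _, tgt in exemplars]
    rotated = targets[offset:] + targets[:offset]
    return format_prompt(list(zip(sources, rotated)), query, src_lang, tgt_lang)


if __name__ == "__main__":
    demo = [
        ("The cat sleeps.", "Le chat dort."),
        ("It is raining.", "Il pleut."),
        ("I am hungry.", "J'ai faim."),
    ]
    print(aligned_prompt(demo, "The dog barks.", "English", "French"))
    print("---")
    print(shuffled_prompt(demo, "The dog barks.", "English", "French", seed=1))
```

Because the sentence inventory is identical across the two conditions, a difference in output quality can be attributed to the pairing itself rather than to lexical exposure. In practice one would vary `seed` and the number of exemplars and score the outputs with the metrics listed under P1.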

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Balashov, Y. Translation in the Wild. Information 2025, 16, 1077. https://doi.org/10.3390/info16121077
