Intelligent Sustainability: Evaluating Transformers for Cryptocurrency Environmental Claims

Abstract

1. Introduction

2. Literature Review

2.1. Cryptocurrency Sustainability Assessment

2.2. Sentiment Analysis in Financial Text Analysis

2.3. Automated Sustainability Claims Classification

2.4. Computational Efficiency in Sustainability Assessment

2.5. Research Gap and Theoretical Framework

3. Materials and Methods

4. Results and Findings

4.1. Problem Identification and Objective Definition

- DO1. Develop an efficient framework for automated classification of cryptocurrency sustainability news using minimal computational resources and smaller datasets, aligning with sustainable AI principles.

- DO2. Leverage transfer learning capabilities of transformer architectures to improve classification accuracy while reducing training requirements.

- DO3. Implement robust mechanisms to minimize hallucination risks in LLMs when processing cryptocurrency news.

- DO4. Create a deployable solution that maintains high accuracy while ensuring computational efficiency and practical applicability.

4.2. Artifact Design and Development

4.3. Demonstration and Evaluation

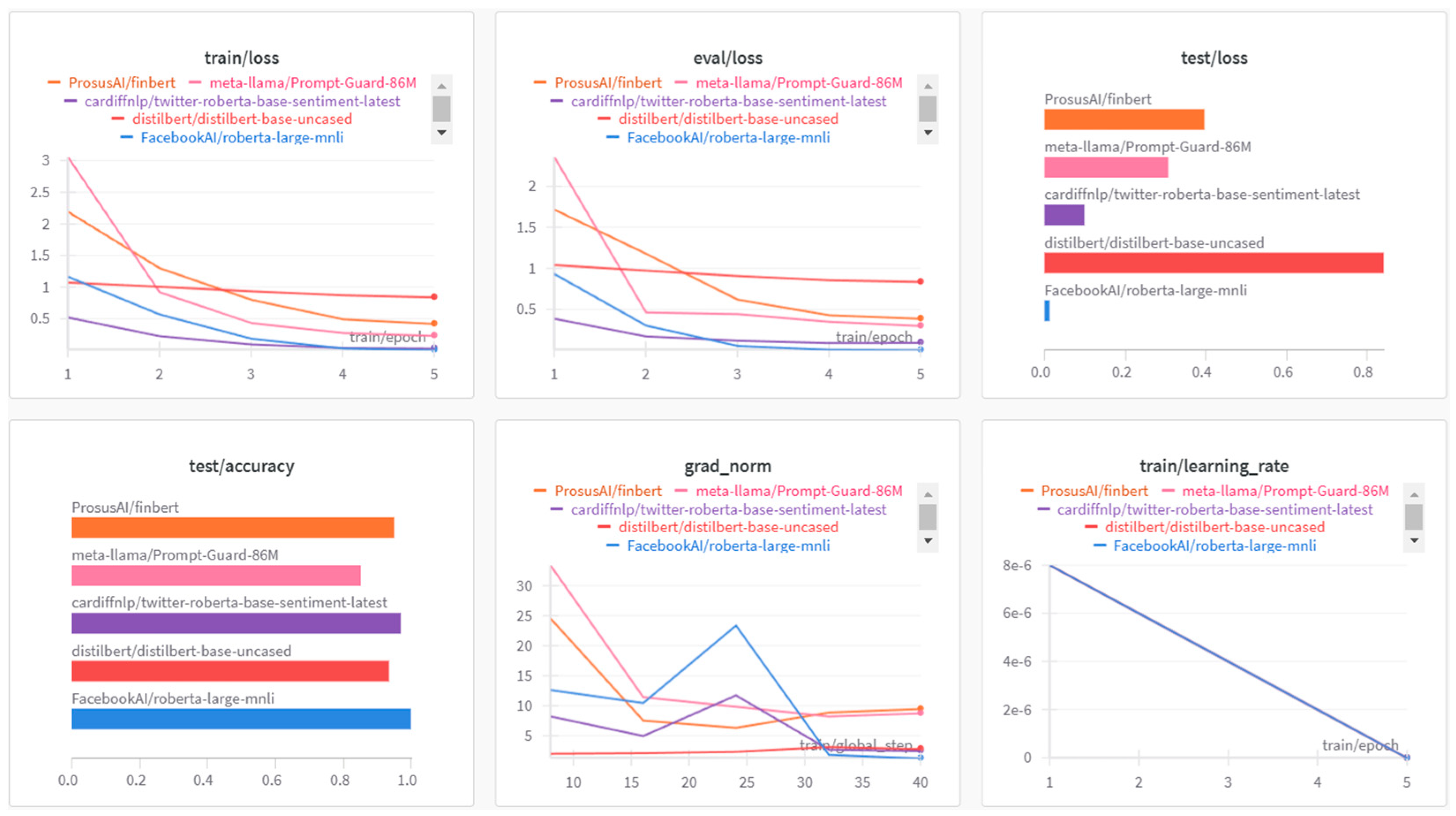

- Efficiency Spectrum: Learning efficiency varies across models, from RoBERTa-large-MNLI, which converges with small final updates (gradient norm 1.33 and accuracy 1.00 at epoch 5), to FinBERT, which reaches high accuracy (0.95) through comparatively large updates (gradient norm 9.56 at epoch 5).

- Stability Patterns: Lower final gradient norms (RoBERTa-large-MNLI, Twitter-RoBERTa) generally coincide with accuracy that stabilizes by epoch 3 or 4, whereas higher norms (Prompt-Guard-86M, FinBERT) accompany larger learning adjustments, with accuracy still rising at epoch 5.

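- Architectural Implications: The magnitude of the gradient norm appears related to architectural characteristics: the lighter models (DistilBERT, Twitter-RoBERTa) mostly show conservative updates (apart from Twitter-RoBERTa's epoch-3 spike to 11.81), whereas the specialized models (Prompt-Guard-86M, FinBERT) retain higher norms. Model size alone does not explain the pattern, since the largest model, RoBERTa-large-MNLI, ends with the lowest norm.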
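The paper does not define how the gradient norm was computed. In most training loops it is the global L2 norm over all parameter gradients, the same quantity used for gradient clipping. The following minimal sketch assumes that definition (the helper names are hypothetical) and ranks the epoch-5 values copied from the training table, which reproduces the low-norm and high-norm groupings named in the bullets above.

```python
import math

def global_grad_norm(grads):
    """Global L2 norm: sqrt of the sum of squared entries over all gradients.

    `grads` is a list of flattened per-parameter gradients (plain lists here,
    standing in for framework tensors; an assumption for illustration).
    """
    return math.sqrt(sum(g * g for tensor in grads for g in tensor))

# Epoch-5 (gradient norm, accuracy) pairs copied from the training table.
FINAL_EPOCH = {
    "DistilBERT-base-uncased": (2.89, 0.93),
    "Twitter-RoBERTa-base-sentiment-latest": (2.57, 0.97),
    "Prompt-Guard-86M": (8.84, 0.85),
    "RoBERTa-large-MNLI": (1.33, 1.00),
    "FinBERT": (9.56, 0.95),
}

def rank_by_grad_norm(table):
    """Model names sorted from lowest to highest final gradient norm."""
    return sorted(table, key=lambda name: table[name][0])

if __name__ == "__main__":
    print(global_grad_norm([[3.0, 0.0], [4.0]]))  # 5.0
    for name in rank_by_grad_norm(FINAL_EPOCH):
        norm, acc = FINAL_EPOCH[name]
        print(f"{name}: grad norm {norm:.2f}, accuracy {acc:.2f}")
```

Ranking only the final epoch hides transient spikes such as RoBERTa-large-MNLI's 23.41 at epoch 3, so the per-epoch rows remain the better basis for stability claims.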

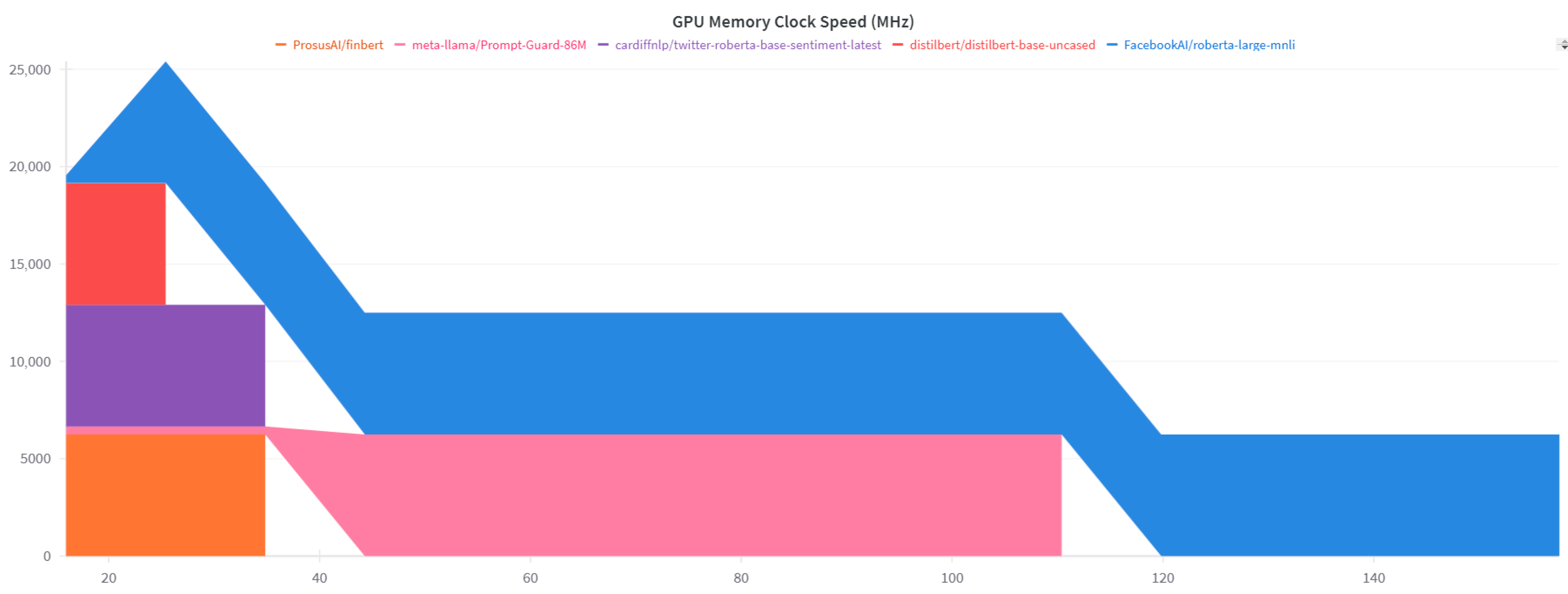

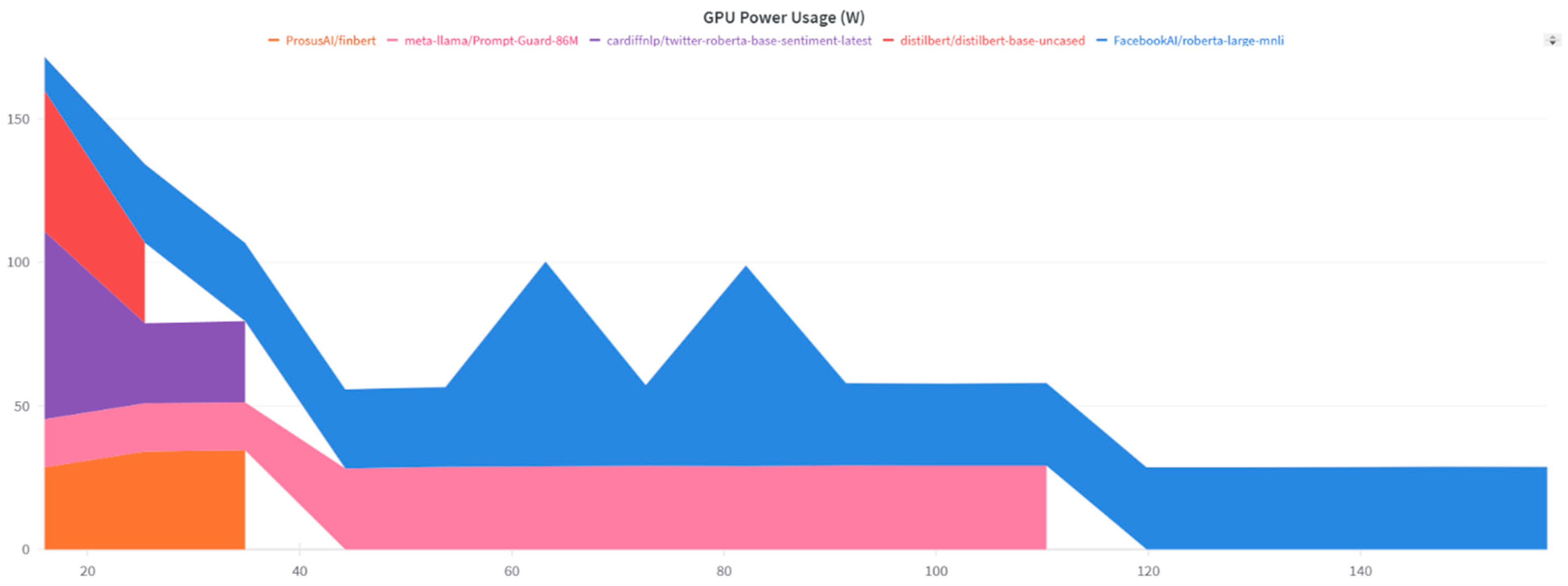

4.3.1. GPU Processing Characteristics During Model Training

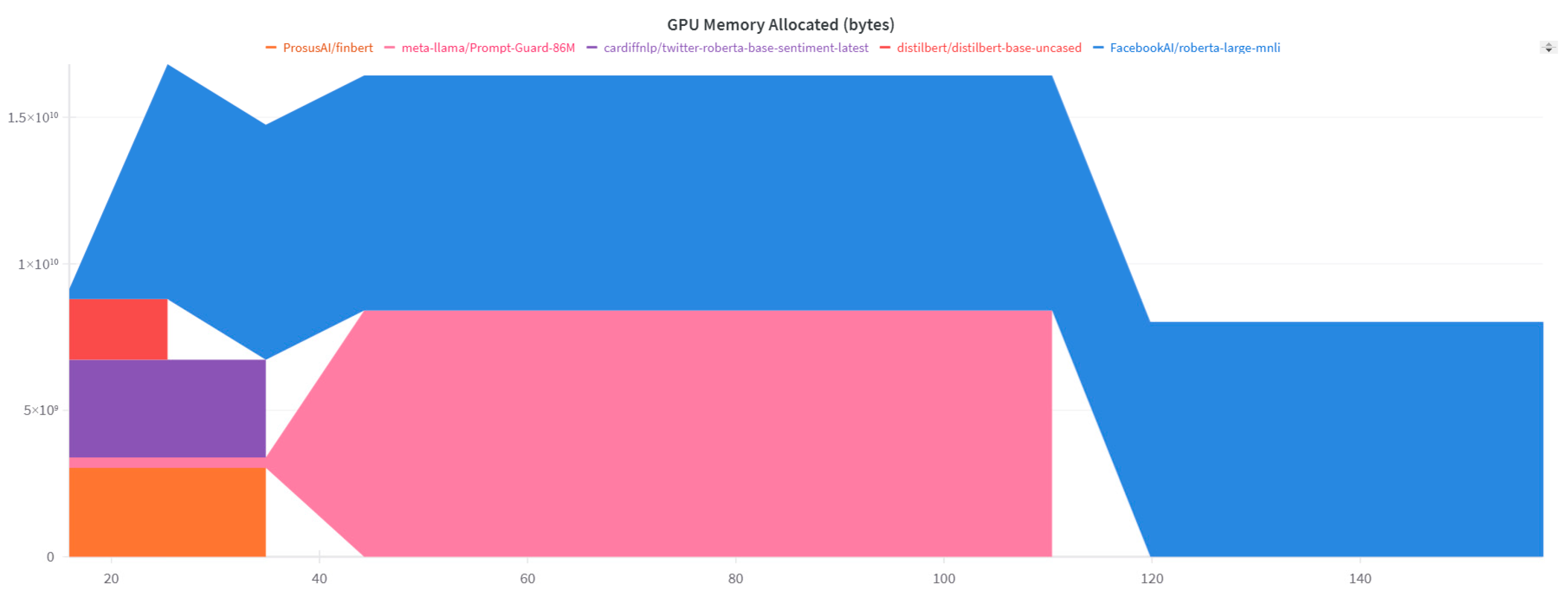

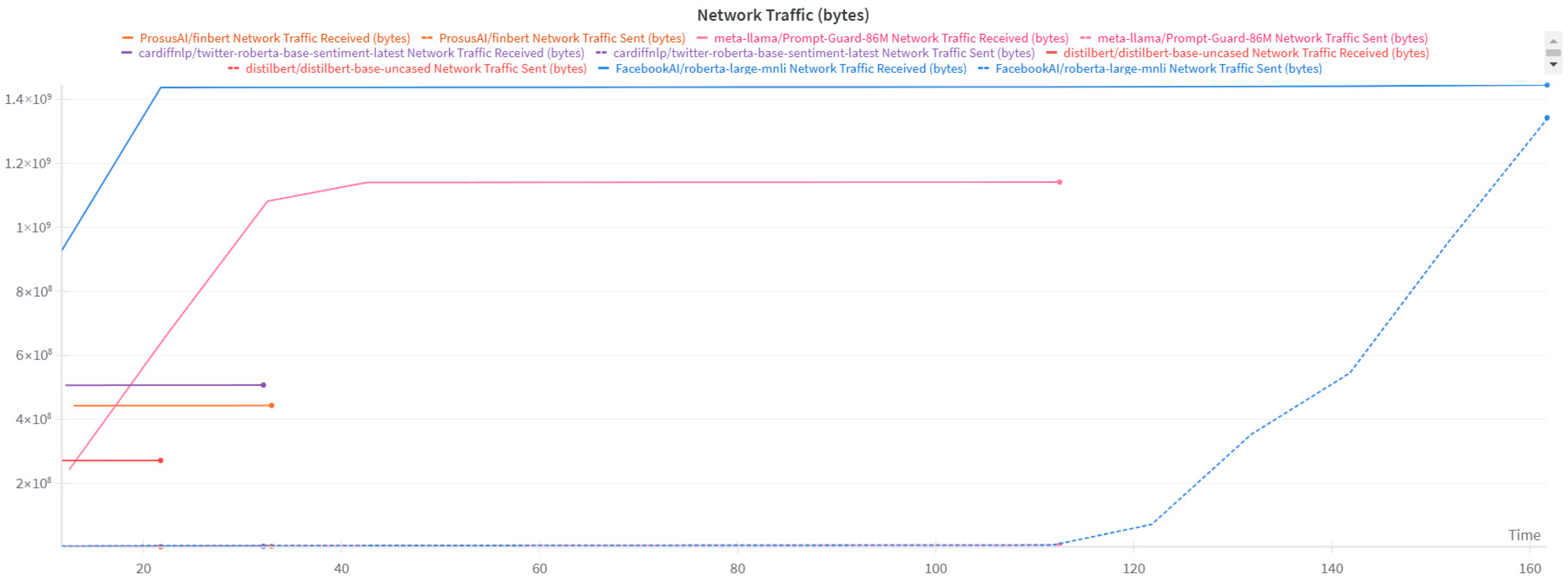

4.3.2. Resource Utilization Patterns During Model Training

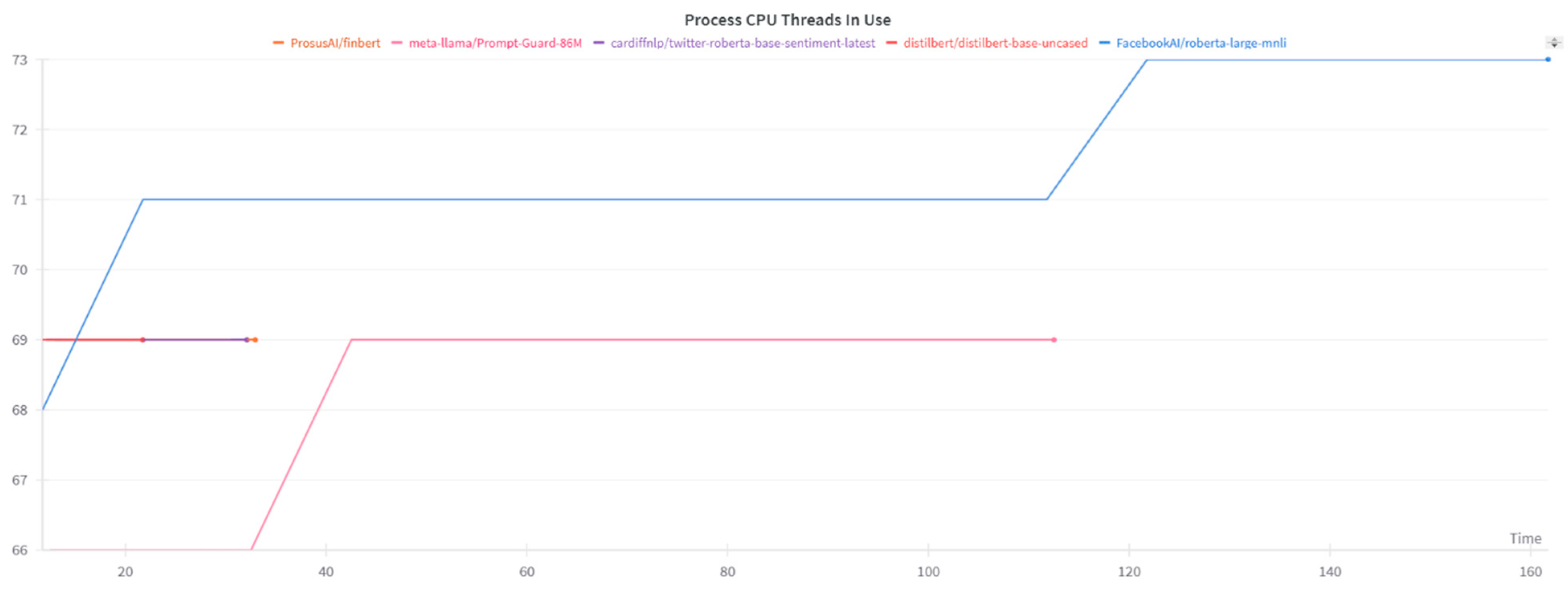

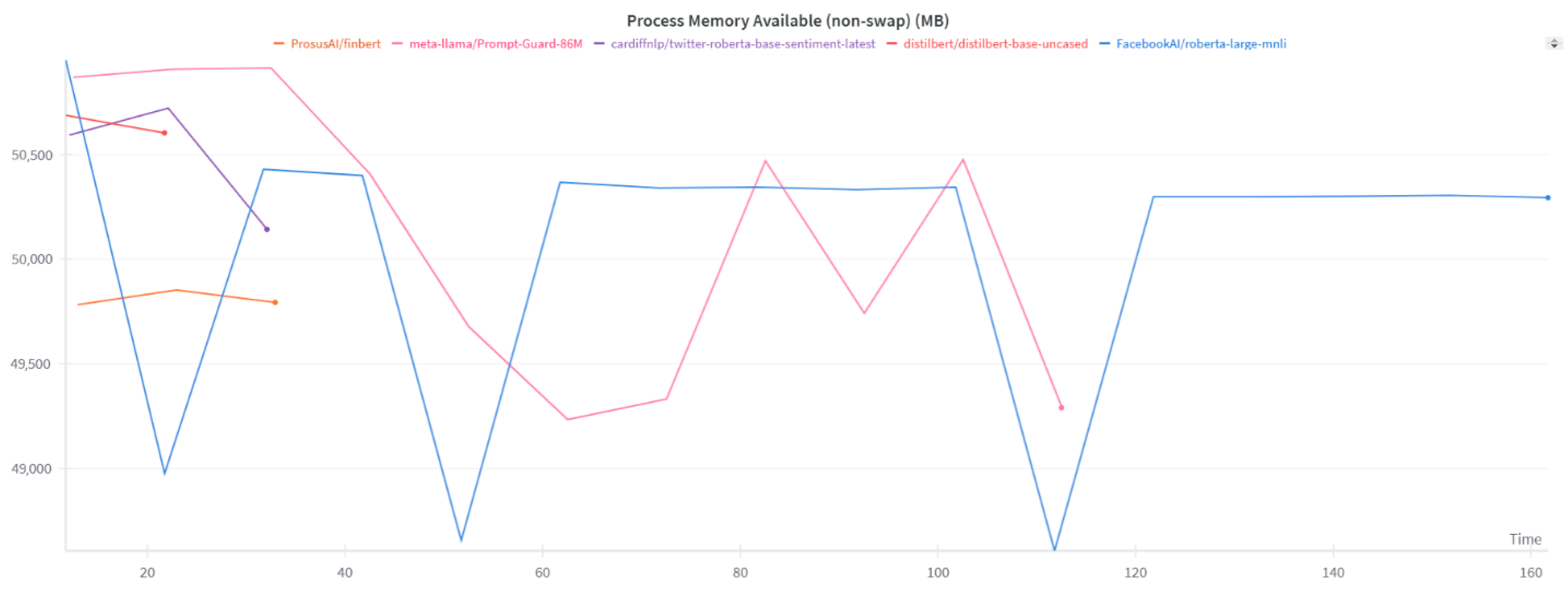

4.3.3. System Resource Management and Scalability Metrics

4.3.4. Memory Management and Stability Analysis

4.4. Communication

5. Discussion

6. Conclusions

6.1. Theoretical Implications

6.2. Practical Implications

6.3. Limitations

6.4. Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DSR | Design Science Research |
| NLP | natural language processing |
| PoW | Proof-of-Work |
| PoS | Proof-of-Stake |
| LGOs | local governments |
| EOSL | Energy-Optimized Semantic Loss |
| DOs | design objectives |
| SM | Streaming Multiprocessor |


| Model | Epoch | Training Loss | Validation Loss | Accuracy | Gradient Norm |
|---|---|---|---|---|---|
| DistilBERT-base-uncased (test loss at epoch 5: 0.84) | 1 | 1.08 | 1.05 | 0.72 | 2.12 |
| | 2 | 1.02 | 0.98 | 0.92 | 2.22 |
| | 3 | 0.94 | 0.91 | 0.93 | 2.45 |
| | 4 | 0.88 | 0.86 | 0.93 | 3.24 |
| | 5 | 0.85 | 0.84 | 0.93 | 2.89 |
| Twitter-RoBERTa-base-sentiment-latest (test loss at epoch 5: 0.1) | 1 | 0.53 | 0.40 | 0.83 | 8.33 |
| | 2 | 0.24 | 0.18 | 0.95 | 5.07 |
| | 3 | 0.11 | 0.13 | 0.97 | 11.81 |
| | 4 | 0.05 | 0.10 | 0.97 | 2.81 |
| | 5 | 0.04 | 0.10 | 0.97 | 2.57 |
| Prompt-Guard-86M (test loss at epoch 5: 0.30) | 1 | 3.06 | 2.36 | 0.37 | 33.43 |
| | 2 | 0.93 | 0.47 | 0.77 | 11.53 |
| | 3 | 0.44 | 0.45 | 0.78 | 9.93 |
| | 4 | 0.29 | 0.36 | 0.83 | 8.32 |
| | 5 | 0.24 | 0.31 | 0.85 | 8.84 |
| RoBERTa-large-MNLI (test loss at epoch 5: 0.01) | 1 | 1.17 | 0.94 | 0.58 | 12.71 |
| | 2 | 0.58 | 0.31 | 0.95 | 10.55 |
| | 3 | 0.20 | 0.07 | 0.98 | 23.41 |
| | 4 | 0.05 | 0.02 | 1.00 | 1.95 |
| | 5 | 0.02 | 0.01 | 1.00 | 1.33 |
| FinBERT (test loss at epoch 5: 0.39) | 1 | 2.20 | 1.72 | 0.53 | 24.61 |
| | 2 | 1.31 | 1.18 | 0.60 | 7.64 |
| | 3 | 0.81 | 0.63 | 0.70 | 6.43 |
| | 4 | 0.50 | 0.44 | 0.90 | 8.97 |
| | 5 | 0.43 | 0.40 | 0.95 | 9.56 |
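The Gradient Norm column is not defined in this section. A common convention, assumed here and not confirmed by the source, is the global L2 norm taken over the gradients of all trainable parameters at an optimizer step (the same quantity used for norm-based gradient clipping). The minimal pure-Python sketch below computes that quantity; the input format (one flattened list of floats per parameter tensor) is hypothetical.

```python
import math
from typing import Sequence


def global_grad_norm(grads: Sequence[Sequence[float]]) -> float:
    """Global L2 norm over all parameter gradients.

    Each inner sequence stands in for the flattened gradient of one
    parameter tensor (hypothetical input format, for illustration only).
    """
    squared_sum = 0.0
    for g in grads:
        # Accumulate the squared entries of this parameter's gradient
        squared_sum += sum(x * x for x in g)
    # The global norm is the square root of the summed squares
    return math.sqrt(squared_sum)


# Two "parameters" with gradients [3.0] and [4.0] give a global norm of 5.0
print(global_grad_norm([[3.0], [4.0]]))
```

How per-step norms were aggregated into one value per epoch (mean over steps, last step, or otherwise) is not stated in this section.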

| Model | Inference Time (ms/Sample) | GPU Memory (MB) | Training Runtime (s) | Train Samples/s | FLOPS (×10¹²) |
|---|---|---|---|---|---|
| DistilBERT-base-uncased | 0.00064 | 0.0151 | 19.68 | 60.99 | 9.62 |
| Twitter-RoBERTa-base-sentiment-latest | 0.00132 | 0.0132 | 25.35 | 47.34 | 17.88 |
| Prompt-Guard-86M | 0.00325 | 0.0225 | 72.15 | 16.63 | 20.35 |
| RoBERTa-large-MNLI | 0.00343 | 0.0132 | 90.57 | 13.25 | 63.34 |
| FinBERT | 0.00123 | 0.0225 | 26.49 | 45.30 | 19.12 |
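Multiplying Training Runtime by Train Samples/s gives the number of samples processed during training, which is a quick consistency check on the table. The short script below hard-codes the five rows above; it assumes nothing beyond those values.

```python
# Training runtime (s) and throughput (samples/s), copied from the table above
RESULTS = {
    "DistilBERT-base-uncased": (19.68, 60.99),
    "Twitter-RoBERTa-base-sentiment-latest": (25.35, 47.34),
    "Prompt-Guard-86M": (72.15, 16.63),
    "RoBERTa-large-MNLI": (90.57, 13.25),
    "FinBERT": (26.49, 45.30),
}


def implied_samples(runtime_s: float, samples_per_s: float) -> float:
    """Total samples processed = runtime x throughput."""
    return runtime_s * samples_per_s


for model, (runtime, rate) in RESULTS.items():
    print(f"{model}: ~{implied_samples(runtime, rate):.0f} samples")
```

Every model comes out at roughly 1,200 processed samples, which suggests all five saw the same amount of training data, so the runtime column compares per-sample cost directly: RoBERTa-large-MNLI needed about 4.6 times the runtime of DistilBERT-base-uncased (90.57 s vs. 19.68 s). How those samples split across the five epochs is not stated in this section.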

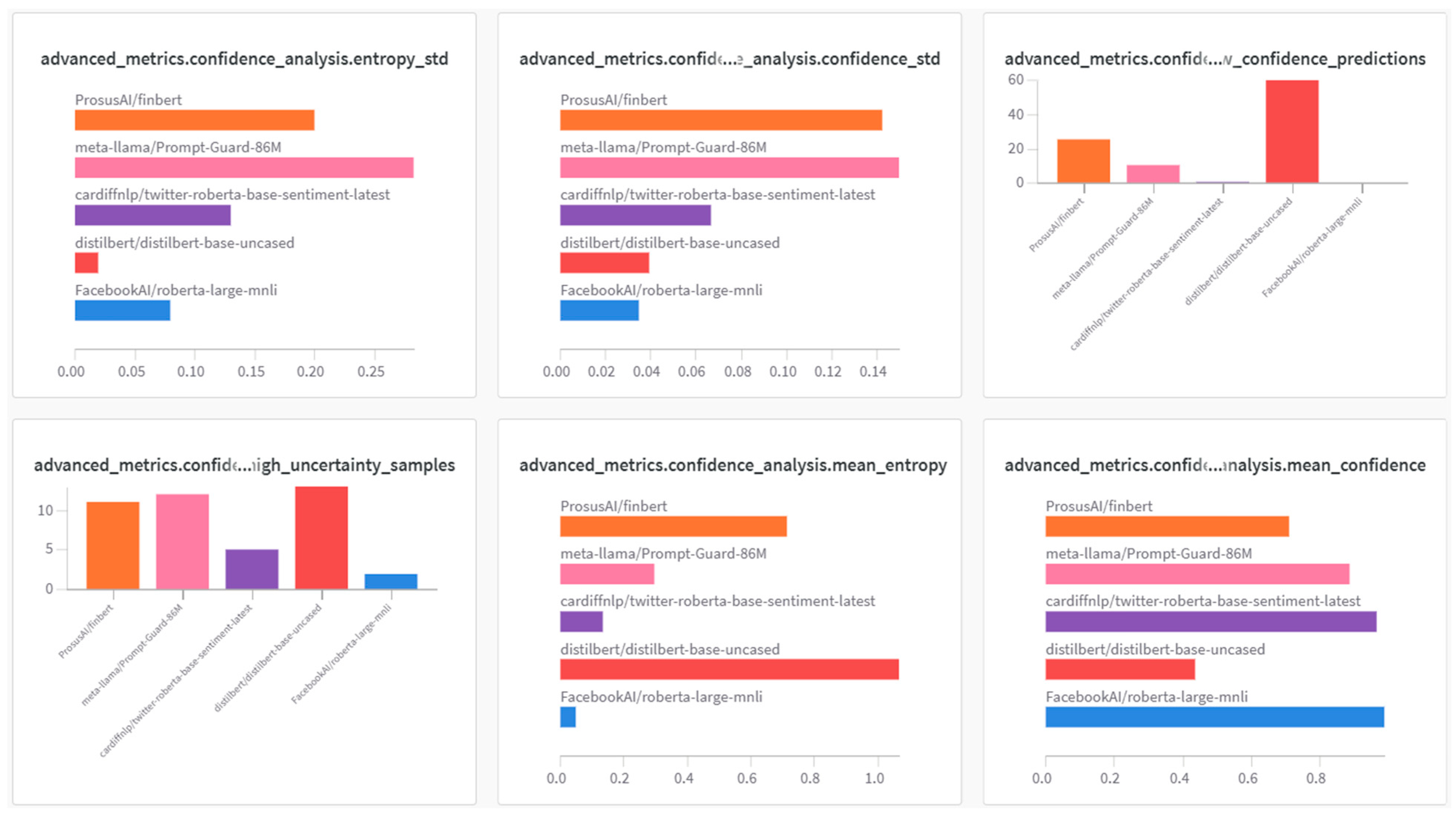

| Model | Mean Confidence | Mean Entropy | High Uncertainty Samples | Low Confidence Predictions | Confidence STD | Entropy STD |
|---|---|---|---|---|---|---|
| DistilBERT-base-uncased | 0.44 | 1.07 | 13 | 60 | 0.04 | 0.02 |
| Twitter-RoBERTa-base-sentiment-latest | 0.96 | 0.13 | 5 | 1 | 0.07 | 0.13 |
| Prompt-Guard-86M | 0.88 | 0.29 | 12 | 11 | 0.15 | 0.28 |
| RoBERTa-large-MNLI | 0.99 | 0.05 | 2 | 0 | 0.03 | 0.08 |
| FinBERT | 0.71 | 0.71 | 11 | 26 | 0.14 | 0.20 |
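These columns are not defined in this section. A common reading, assumed here, is that confidence is the top-class softmax probability and entropy is the Shannon entropy (natural log) of the predicted class distribution. The two count columns depend on cut-offs the source does not give, so `low_conf_threshold` and `high_entropy_threshold` below are hypothetical parameters, and the use of the population standard deviation is likewise an assumption.

```python
import math
import statistics
from typing import Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in nats; zero-probability classes contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def uncertainty_summary(
    batch: Sequence[Sequence[float]],
    low_conf_threshold: float = 0.5,      # hypothetical cut-off
    high_entropy_threshold: float = 0.8,  # hypothetical cut-off
) -> dict:
    """Summarize per-sample class probabilities (each row sums to 1)."""
    confidences = [max(p) for p in batch]  # top-class probability
    entropies = [entropy(p) for p in batch]
    return {
        "mean_confidence": statistics.fmean(confidences),
        "mean_entropy": statistics.fmean(entropies),
        "high_uncertainty_samples": sum(e > high_entropy_threshold for e in entropies),
        "low_confidence_predictions": sum(c < low_conf_threshold for c in confidences),
        "confidence_std": statistics.pstdev(confidences),
        "entropy_std": statistics.pstdev(entropies),
    }


# One near-uniform prediction and one confident prediction over three classes
summary = uncertainty_summary([[1 / 3, 1 / 3, 1 / 3], [0.98, 0.01, 0.01]])
print(summary["low_confidence_predictions"], summary["high_uncertainty_samples"])
```

Under these assumptions, entropy over three classes (Negative, Neutral, Positive) peaks at ln 3 ≈ 1.10 nats, so DistilBERT-base-uncased's mean entropy of 1.07 with a mean confidence of 0.44 points to near-uniform outputs, whereas RoBERTa-large-MNLI (0.99 confidence, 0.05 entropy) is almost always certain.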

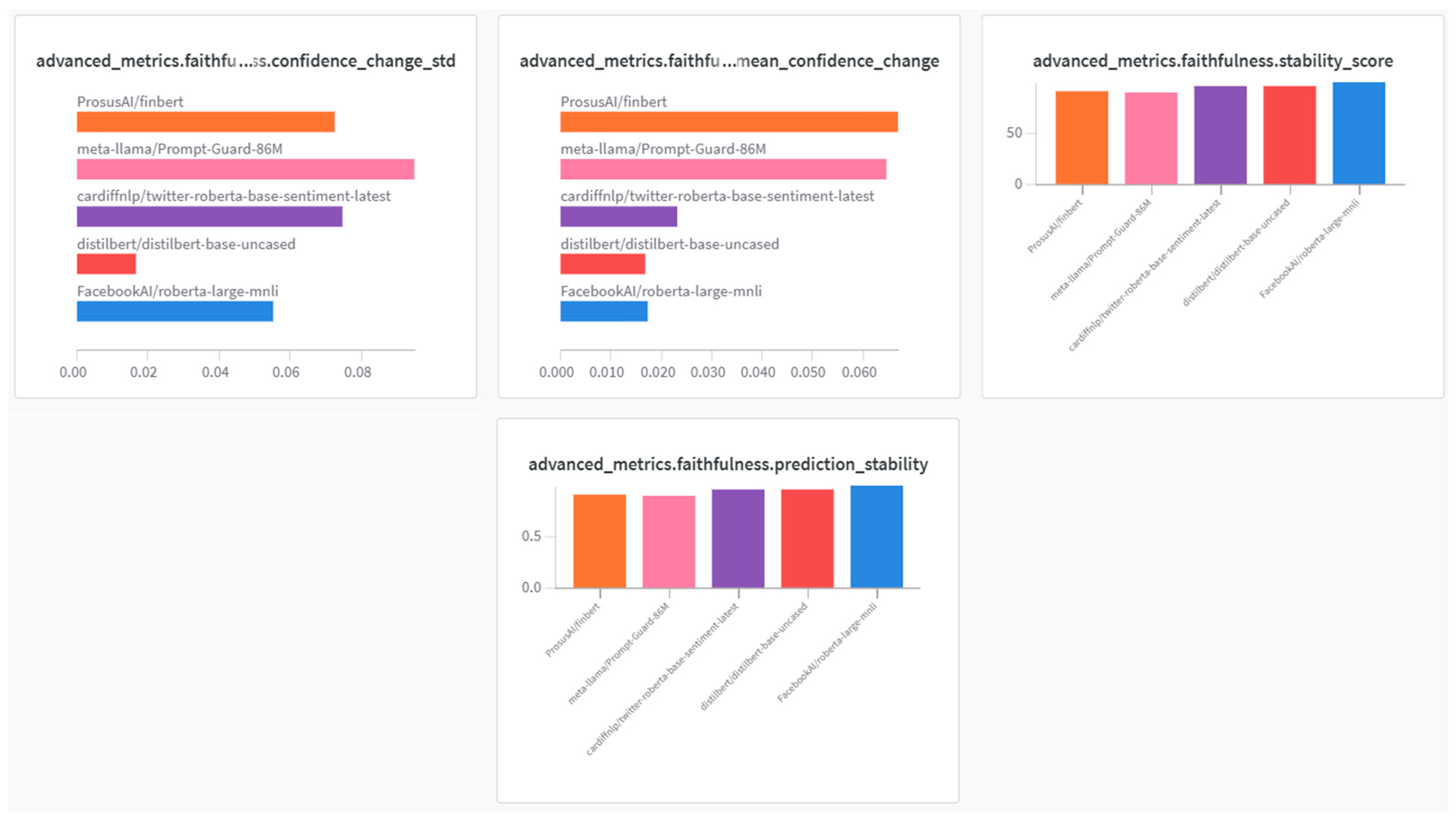

| Model | Prediction Stability | Stability Score (%) | Mean Confidence Change | Confidence Change STD |
|---|---|---|---|---|
| DistilBERT-base-uncased | 0.95 | 95.00 | 0.02 | 0.02 |
| Twitter-RoBERTa-base-sentiment-latest | 0.95 | 95.00 | 0.02 | 0.07 |
| Prompt-Guard-86M | 0.88 | 88.33 | 0.06 | 0.10 |
| RoBERTa-large-MNLI | 0.98 | 98.33 | 0.02 | 0.06 |
| FinBERT | 0.90 | 90.00 | 0.07 | 0.07 |
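Stability Score is Prediction Stability expressed as a percentage. The section does not say how inputs were perturbed, so the sketch below simply assumes each test text is scored twice (once as-is, once after some perturbation such as a paraphrase) and compares the two runs; the function name and input format are hypothetical. Scores such as 88.33 and 98.33 are consistent with a 60-sample evaluation set (53/60 and 59/60), but that count is an inference, not something the source states.

```python
import statistics
from typing import Sequence, Tuple

Prediction = Tuple[str, float]  # (predicted label, top-class confidence)


def stability_report(
    original: Sequence[Prediction], perturbed: Sequence[Prediction]
) -> dict:
    """Compare predictions on original and perturbed versions of the same inputs."""
    if len(original) != len(perturbed) or not original:
        raise ValueError("need two non-empty prediction lists of equal length")
    # Fraction of samples whose predicted label did not change
    unchanged = sum(o[0] == p[0] for o, p in zip(original, perturbed))
    stability = unchanged / len(original)
    # Absolute shift in top-class confidence per sample
    changes = [abs(o[1] - p[1]) for o, p in zip(original, perturbed)]
    return {
        "prediction_stability": stability,
        "stability_score": 100 * stability,
        "mean_confidence_change": statistics.fmean(changes),
        "confidence_change_std": statistics.pstdev(changes),
    }


# 60 hypothetical samples, one of which flips label under perturbation
orig = [("Positive", 0.9)] * 60
pert = [("Positive", 0.9)] * 59 + [("Negative", 0.6)]
print(round(stability_report(orig, pert)["stability_score"], 2))
```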

| Model | Class | Precision | Recall | F1-Score | Weighted F1 |
|---|---|---|---|---|---|
| DistilBERT-base-uncased | Negative | 0.80 | 0.92 | 0.86 | 0.93 |
| | Neutral | 0.96 | 0.96 | 0.96 | |
| | Positive | 1.00 | 0.91 | 0.95 | |
| Twitter-RoBERTa-base-sentiment-latest | Negative | 0.87 | 1.00 | 0.93 | 0.97 |
| | Neutral | 1.00 | 0.92 | 0.96 | |
| | Positive | 1.00 | 1.00 | 1.00 | |
| Prompt-Guard-86M | Negative | 0.65 | 1.00 | 0.79 | 0.85 |
| | Neutral | 0.90 | 0.75 | 0.82 | |
| | Positive | 1.00 | 0.87 | 0.93 | |
| RoBERTa-large-MNLI | Negative | 1.00 | 1.00 | 1.00 | 1.00 |
| | Neutral | 1.00 | 1.00 | 1.00 | |
| | Positive | 1.00 | 1.00 | 1.00 | |
| FinBERT | Negative | 0.81 | 1.00 | 0.90 | 0.95 |
| | Neutral | 1.00 | 0.88 | 0.93 | |
| | Positive | 1.00 | 1.00 | 1.00 | |
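F1-Score is the harmonic mean of precision and recall, and Weighted F1 averages the per-class F1 scores weighted by class support (the convention behind, for example, scikit-learn's `f1_score` with `average="weighted"`). Per-class supports are not reported in this section, so the weighted example uses made-up supports purely for illustration.

```python
from typing import Sequence


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def weighted_f1(f1_scores: Sequence[float], supports: Sequence[int]) -> float:
    """Average per-class F1, weighting each class by its number of true samples."""
    total = sum(supports)
    return sum(f * n for f, n in zip(f1_scores, supports)) / total


# DistilBERT-base-uncased, Negative class: P = 0.80, R = 0.92
print(round(f1(0.80, 0.92), 2))
# Hypothetical supports of 3 and 1 for two classes with F1 1.0 and 0.5
print(weighted_f1([1.0, 0.5], [3, 1]))
```

Recomputing F1 from the rounded table values can differ in the last digit (FinBERT Neutral gives 0.94 against the reported 0.93) because precision and recall are themselves rounded.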

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bouzari, P.; Fekete-Farkas, M.; Szalay, Z.G. Intelligent Sustainability: Evaluating Transformers for Cryptocurrency Environmental Claims. Information 2025, 16, 1022. https://doi.org/10.3390/info16121022

Bouzari P, Fekete-Farkas M, Szalay ZG. Intelligent Sustainability: Evaluating Transformers for Cryptocurrency Environmental Claims. Information. 2025; 16(12):1022. https://doi.org/10.3390/info16121022

Chicago/Turabian Style: Bouzari, Parisa, Maria Fekete-Farkas, and Zsigmond Gábor Szalay. 2025. "Intelligent Sustainability: Evaluating Transformers for Cryptocurrency Environmental Claims" Information 16, no. 12: 1022. https://doi.org/10.3390/info16121022

APA Style: Bouzari, P., Fekete-Farkas, M., & Szalay, Z. G. (2025). Intelligent Sustainability: Evaluating Transformers for Cryptocurrency Environmental Claims. Information, 16(12), 1022. https://doi.org/10.3390/info16121022