YOLO11-FGA: Express Package Quality Detection Based on Improved YOLO11

Abstract

1. Introduction

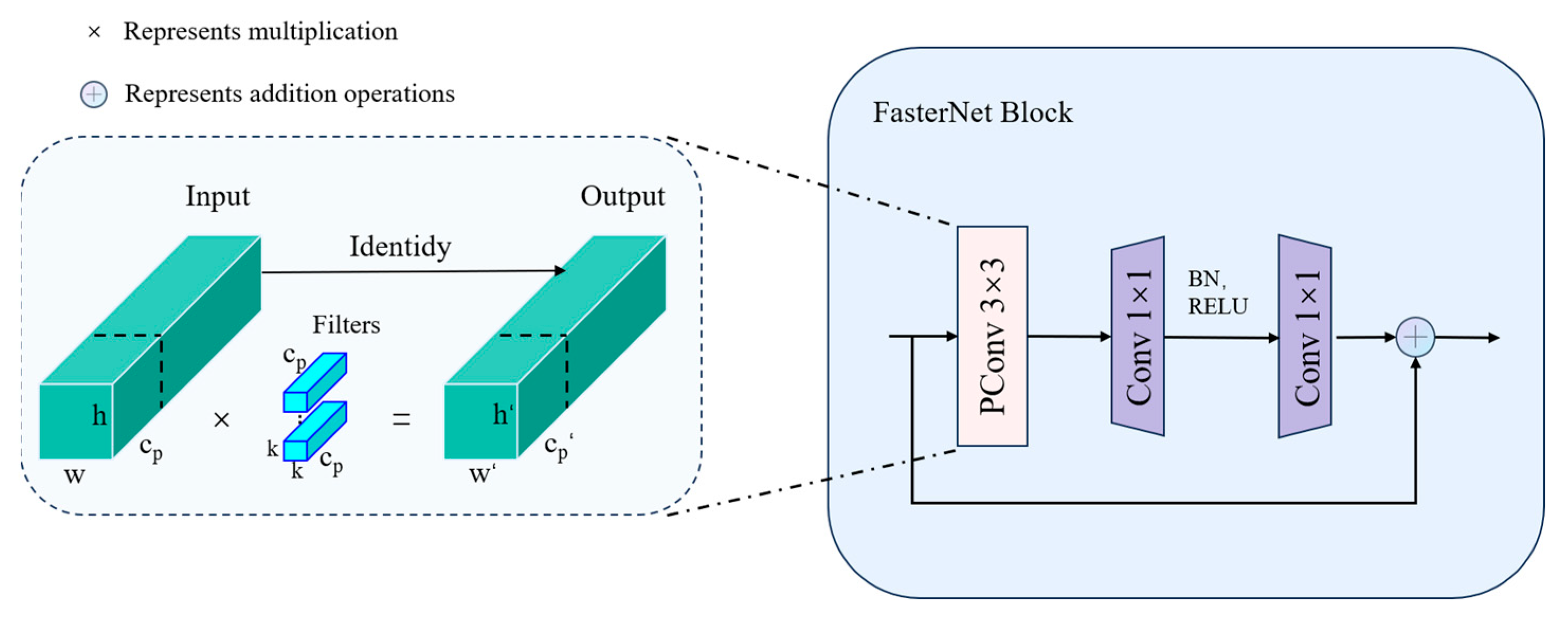

- To address the variation in package-quality features across logistics images, the YOLO11 backbone is replaced with the FasterNet backbone network, which improves feature extraction while keeping the design lightweight.

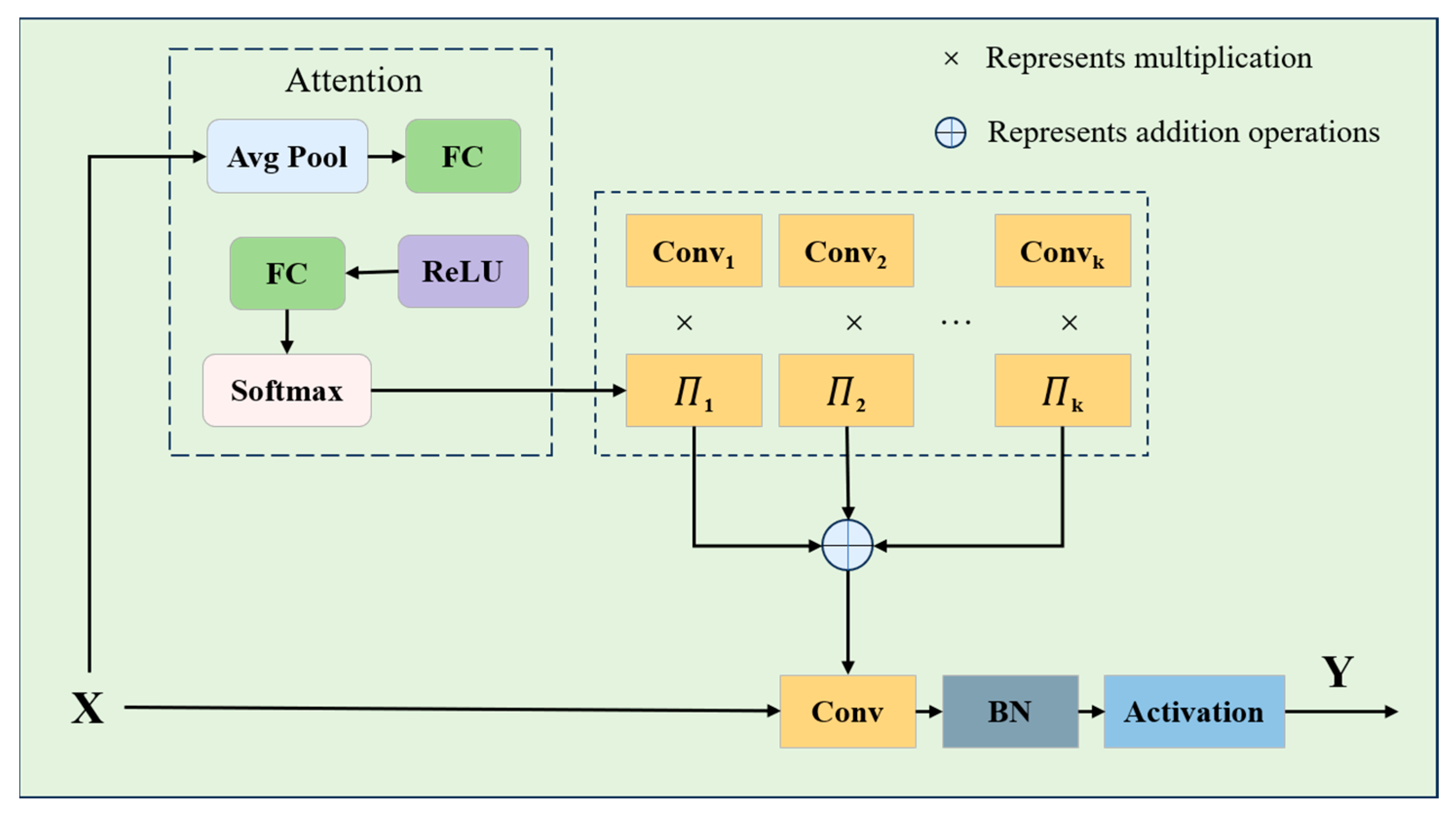

- To perceive global information while accurately capturing the local features of small targets, the C3k2_GhostDynamicConv module is proposed. It integrates GhostBottleneck and DynamicConv into C3k2 to strengthen feature extraction and, in turn, package quality detection.

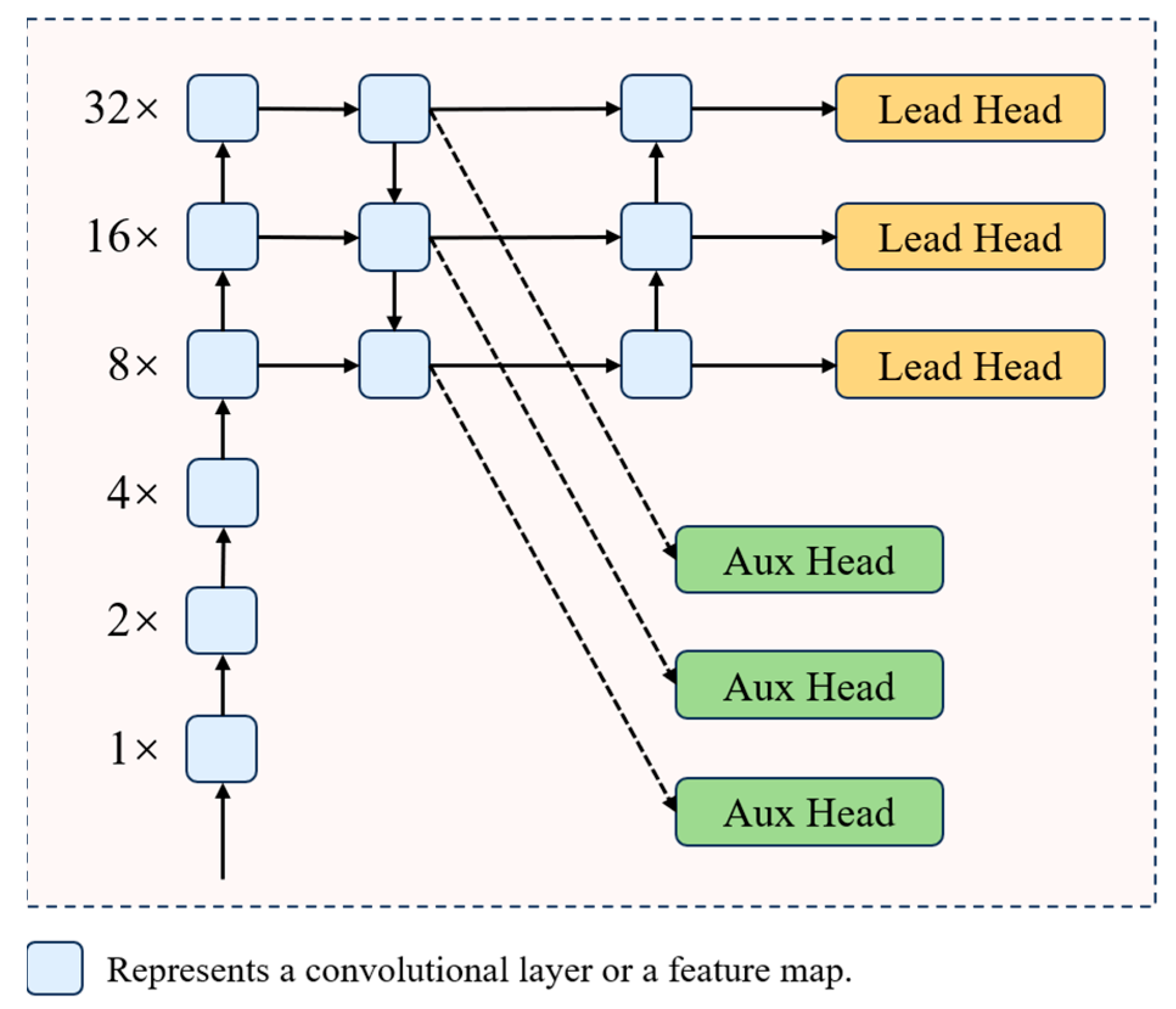

- An auxiliary training head (Aux) is added alongside the original YOLO11 detection head to enhance multi-scale feature fusion, thereby improving the accuracy of small-target detection.

2. Related Works

3. Materials and Methods

3.1. Model Improvement

3.1.1. FasterNet Backbone Network

3.1.2. C3k2_GhostDynamicConv

3.1.3. Auxiliary Detection Head DetectAux

- Multi-scale feature enhancement for fine-grained defect detection: Express packages captured in real-world logistics environments exhibit substantial variations in size, surface texture, and viewing angle due to packaging diversity and illumination changes. Traditional detection networks with a limited number of primary detection heads tend to overlook small or subtle defects such as fine wrinkles, scratches, or partial tears. To address this issue, six DetectAux modules are introduced and connected to feature maps at different scales of the backbone network. These additional detection branches enable the model to aggregate multi-scale spatial information, thereby improving the perception of small and irregular defects. By complementing the primary detection head, DetectAux strengthens fine-grained feature representation and ensures more complete multi-scale defect detection.

- Robustness improvement under complex inspection environments: In practical logistics scenarios, external factors such as overlapping packages, reflective materials, or uneven lighting conditions often interfere with visual perception. To enhance detection stability, the DetectAux module establishes multiple parallel feature pathways that extract discriminative cues from different receptive field perspectives. This redundant supervision mechanism allows the model to maintain high accuracy even under partial occlusion or background clutter, significantly improving the overall robustness and adaptability of the system.

- Convergence acceleration and supervised reinforcement during training: During the training stage, DetectAux acts as an auxiliary supervision mechanism, providing additional gradient propagation paths that alleviate vanishing gradients and improve parameter update efficiency. Each auxiliary head independently computes a local detection loss, which guides the backbone to learn discriminative features more rapidly in the early stages of training. This multi-path supervision both accelerates convergence and improves the quality of the learned features, allowing the model to reach its best detection performance in fewer training epochs.

- Resource control and computational efficiency optimization: Although six DetectAux branches are introduced, the design remains lightweight and deployment-friendly. Each auxiliary detection head employs small convolutional kernels and reduced channel dimensions, ensuring that the additional parameters contribute minimal computational overhead. The auxiliary branches are active only during training to enrich feature learning, while in the inference phase, all DetectAux modules are removed, retaining only the main detection head. This design ensures real-time inference and efficient deployment on resource-limited platforms such as industrial embedded GPUs. Furthermore, the modular nature of DetectAux supports flexible activation and deactivation, allowing easy adaptation across different hardware environments. Techniques such as mixed-precision training and gradient checkpointing can further reduce memory consumption and improve computational efficiency in large-scale industrial inspection tasks.
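
The training-only role of the auxiliary heads described above can be illustrated with a minimal, framework-free sketch. All names here (`DetectorWithAux`, `combined_loss`, `strip_aux`) and the auxiliary loss weight of 0.25 are hypothetical choices for illustration, not the authors' implementation or values reported in the paper; the heads are stand-in callables rather than real convolutional layers.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

# Assumption: auxiliary losses are down-weighted relative to the main loss.
# The value 0.25 is illustrative only and is not taken from the paper.
AUX_LOSS_WEIGHT = 0.25

Head = Callable[[Sequence[float]], float]


def combined_loss(main_loss: float, aux_losses: Sequence[float],
                  aux_weight: float = AUX_LOSS_WEIGHT) -> float:
    """Main detection loss plus a weighted sum of the auxiliary-head losses."""
    return main_loss + aux_weight * sum(aux_losses)


@dataclass
class DetectorWithAux:
    """Toy detector: one main head plus optional auxiliary heads."""
    main_head: Head
    aux_heads: List[Head] = field(default_factory=list)
    training: bool = True

    def forward(self, features: Sequence[float]) -> Tuple[float, List[float]]:
        main_out = self.main_head(features)
        if not self.training:
            # Inference: only the main head runs, so the aux heads add no cost.
            return main_out, []
        # Training: every auxiliary head also produces an output to supervise.
        return main_out, [head(features) for head in self.aux_heads]

    def strip_aux(self) -> None:
        """Remove the auxiliary heads before deployment."""
        self.aux_heads = []
        self.training = False


# Six auxiliary heads, mirroring the six DetectAux branches in the text.
model = DetectorWithAux(main_head=sum, aux_heads=[max] * 6)
train_main, train_aux = model.forward([1.0, 2.0])
loss = combined_loss(main_loss=1.0, aux_losses=[0.5] * 6)

model.strip_aux()
infer_main, infer_aux = model.forward([1.0, 2.0])
```

In this sketch the auxiliary outputs exist only while `training` is true, so removing the heads leaves the main head's output unchanged, which is the property the text relies on for deployment.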

4. Data Source and Processing

5. Results and Analysis

5.1. Experimental Environment and Parameter Settings

5.2. Evaluation Indicators

5.3. Optimizing the Module Ablation Experiment

5.4. Comparative Experiment

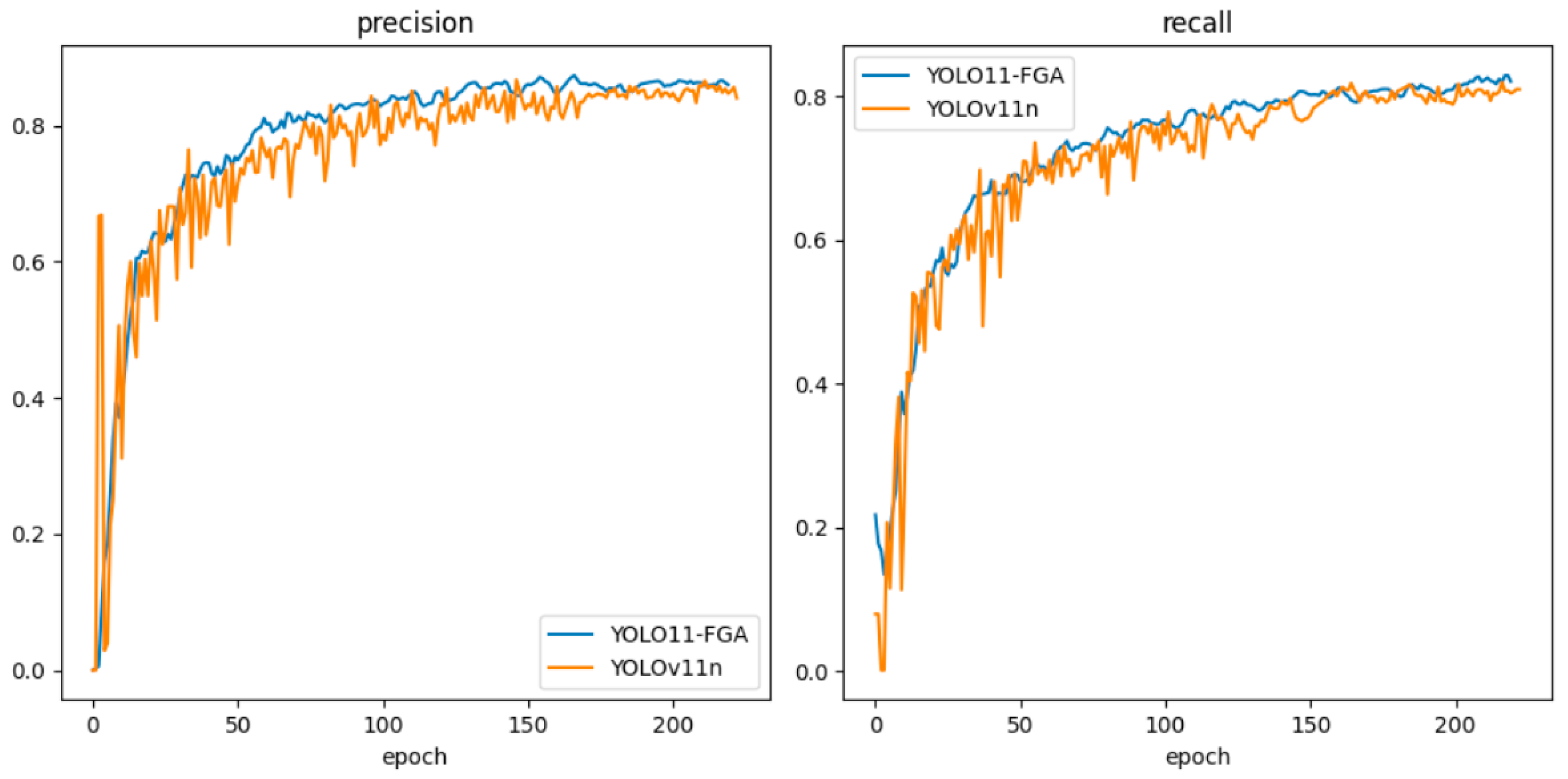

5.5. Comparison of Model Performance Before and After Improvement

5.6. Analysis of Test Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Jazairy, A.; Persson, E.; Brho, M.; von Haartman, R.; Hilletofth, P. Drones in last-mile delivery: A systematic literature review from a logistics management perspective. Int. J. Logist. Manag. 2025, 36, 1–62.

- Chen, L.; Dong, T.; Li, X.; Xu, X. Logistics engineering management in the platform supply chain: An overview from logistics service strategy selection perspective. Engineering 2025, 47, 236–249.

- Jazairy, A.; Pohjosenperä, T.; Prataviera, L.B.; Juntunen, J. Innovators and transformers: Revisiting the gap between academia and practice: Insights from the green logistics phenomenon. Int. J. Phys. Distrib. Logist. Manag. 2025, 55, 341–360.

- Malhotra, G.; Kharub, M. Elevating logistics performance: Harnessing the power of artificial intelligence in e-commerce. Int. J. Logist. Manag. 2025, 36, 290–321.

- Li, C.; Wang, Y.; Li, Z.; Wang, L. Study on calculation and optimization path of energy utilization efficiency of provincial logistics industry in China. Renew. Energy 2025, 243, 122594.

- Lee, H.; Koo, B.; Chattopadhyay, A.; Neerukatti, R.K.; Liu, K.C. Damage detection technique using ultrasonic guided waves and outlier detection: Application to interface delamination diagnosis of integrated circuit package. Mech. Syst. Signal Process. 2021, 160, 107884.

- Liu, K.; Zhang, H.; Hu, Z.; Wang, F.; Yu, P.S. Data augmentation for supervised graph outlier detection with latent diffusion models. arXiv 2023, arXiv:2312.17679.

- Arnaudon, A.; Schindler, D.J.; Peach, R.L.; Gosztolai, A.; Hodges, M.; Schaub, M.T.; Barahona, M. PyGenStability: Multiscale community detection with generalized Markov Stability. arXiv 2023, arXiv:2303.05385.

- Almeida-Silva, F.; Van de Peer, Y. Assessing the quality of comparative genomics data and results with the cogeqc R/Bioconductor package. Methods Ecol. Evol. 2023, 14, 2942–2952.

- Cao, Y.; Ni, Y.; Zhou, Y.; Li, H.; Huang, Z.; Yao, E. An auto chip package surface defect detection based on deep learning. IEEE Trans. Instrum. Meas. 2023, 73, 3507115.

- Arnaudon, A.; Schindler, D.J.; Peach, R.L.; Gosztolai, A.; Hodges, M.; Schaub, M.T.; Barahona, M. Algorithm 1044: PyGenStability, a multiscale community detection framework with generalized markov stability. ACM Trans. Math. Softw. 2024, 50, 1–8.

- Mao, W.-L.; Wang, C.-C.; Chou, P.-H.; Liu, Y.-T. Automated defect detection for mass-produced electronic components based on YOLO object detection models. IEEE Sens. J. 2024, 24, 26877–26888.

- Pham, D.L.; Chang, T.W. A YOLO-based real-time packaging defect detection system. Procedia Comput. Sci. 2023, 217, 886–894.

- Chomklin, A.; Jaiyen, S.; Wattanakitrungroj, N.; Mongkolnam, P.; Chaikhan, S. Packaging defect detection in lean manufacturing: A comparative study of yolov8, yolov9, and yolov10. In Proceedings of the 2024 28th International Computer Science and Engineering Conference (ICSEC), Khon Kaen, Thailand, 6–8 November 2024; pp. 1–6.

- Dong, P.; Wang, Y.; Yu, Q.; Feng, W.; Zong, G. AMC-YOLO: Improved YOLOv8-based defect detection for cigarette packs. IET Image Process. 2024, 18, 4873–4886.

- Chen, Y.W.; Shiu, J.M. An implementation of YOLO-family algorithms in classifying the product quality for the acrylonitrile butadiene styrene metallization. Int. J. Adv. Manuf. Technol. 2022, 119, 8257–8269.

- Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Chen, J.; Kao, S.H.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11030–11039.

- Razmand, M. Development of a Tile Module for Ghost and Its Application; The University of Iowa: Iowa City, IA, USA, 2020.

- Gao, H.; Yang, Y.; Li, C.; Gao, L.; Zhang, B. Multiscale residual network with mixed depthwise convolution for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 3396–3408.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Ye, C.; Guo, Z.; Gao, Y.; Yuan, F.; Wu, M.; Xiao, K. Deep Learning-Driven Body-Sensing Game Action Recognition: A Research on Human Detection Methods Based on MediaPipe and YOLO. In Proceedings of the 2025 6th International Conference on Computer Engineering and Application (ICCEA), Hangzhou, China, 25–27 April 2025; pp. 2087–2092.

- Zhang, K.; Yuan, F.; Jiang, Y.; Mao, Z.; Zuo, Z.; Peng, Y. A Particle Swarm Optimization-Guided Ivy Algorithm for Global Optimization Problems. Biomimetics 2025, 10, 342.

- Zhao, Z.; Chen, S.; Ge, Y.; Yang, P.; Wang, Y.; Song, Y. Rt-detr-tomato: Tomato target detection algorithm based on improved rt-detr for agricultural safety production. Appl. Sci. 2024, 14, 6287.

- Qu, Y.; Wan, B.; Wang, C.; Ju, H.; Yu, J.; Kong, Y.; Chen, X. Optimization algorithm for steel surface defect detection based on PP-YOLOE. Electronics 2023, 12, 4161.

- Feng, Y.; Huang, J.; Du, S.; Ying, S.; Yong, J.-H.; Li, Y.; Ding, G.; Ji, R.; Gao, Y. Hyper-yolo: When visual object detection meets hypergraph computation. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 2388–2401.

| Authors | Year | Improvement Method | Core Mechanism | Limitations |
|---|---|---|---|---|
| Lee H [6] | 2021 | Ultrasonic guided waves and outlier detection | Uses ultrasonic guided waves (UGW) for non-destructive evaluation (NDE) of sealant delamination in integrated circuit (IC) packages, combined with DBSCAN for damage classification. | Limited to delamination detection. |
| Liu K [7] | 2023 | Data augmentation for supervised graph outlier detection | GODM uses latent diffusion models to generate synthetic outliers, addressing class imbalance in supervised graph outlier detection. | Limited to graph data; does not address other types of data imbalance. |
| Arnaudon A [8] | 2023 | PyGenStability for multiscale community detection | Integrates generalized Markov stability to optimize graph partitions at different resolutions. | Requires heavy computational resources for large-scale graphs. |
| Almeida-Silva F [9] | 2023 | R/Bioconductor package for assessing comparative genomics data quality | The cogeqc package provides tools for evaluating genome assembly and annotation quality, orthogroup inference, and synteny detection, using comparative statistics and graph-based analysis. | Focused on comparative genomics; may not generalize to other biological datasets. |
| Cao Y [10] | 2023 | Real-time chip package surface defect detection based on YOLOv7 | Proposes a real-time defect detection method using YOLOv7, incorporating k-means++ for anchor frame clustering, CBAM, RFB, and a new confidence propagation cluster (CP-Cluster) to improve accuracy and efficiency. | Limited to chip package defects; not applicable to other domains. |
| Arnaudon A [11] | 2024 | PyGenStability for multiscale community detection | Introduces PyGenStability, a Python package for unsupervised multiscale community detection in graphs, using the generalized Markov Stability quality function with the Louvain or Leiden algorithms. | Limited to graph-based applications; may require tuning for specific graphs. |
| Mao W [12] | 2024 | Automated defect detection for DIP using YOLO and ConSinGAN | Proposes an automated defect detection system for dual in-line package (DIP) components using YOLO object detection models (v3, v4, v7, v9) combined with ConSinGAN for data augmentation. | Limited to DIP defects; the model’s performance may not generalize well to other components. |
| Pham D L [13] | 2023 | YOLO-based real-time packaging defect detection system | Proposes a real-time defect detection system based on the YOLO algorithm to automatically classify defective packaged products in industrial quality control. | Limited to packaging defects; may not generalize to all types of defects. |
| Chomklin A [14] | 2024 | Comparative study of YOLOv8, YOLOv9, and YOLOv10 for packaging defect detection | Compares various YOLO models for detecting packaging defects in lean manufacturing, utilizing a dataset with seven classes. | Limited to packaging defects; dataset may not cover all defect types. |
| Dong P [15] | 2024 | AMC-YOLO: Improved YOLOv8-based defect detection for cigarette packs | Proposes AMC-YOLO, a YOLOv8-based model designed for cigarette pack defect detection, incorporating Adaptive Spatial Weight Perception (ASWP), MARFPN, and Cross-Layer Collaborative Detection Head (CLCDH) for improved feature learning and accuracy. | Focused specifically on cigarette packaging defects; may not generalize to other industries. |
| Chen Y W [16] | 2022 | YOLO-family algorithms for product quality classification in ABS metallization | Applies YOLO-family algorithms (v2 to v5) to develop an automatic optical inspection (AOI) system for defect detection on reflective surfaces of electroplated Acrylonitrile Butadiene Styrene (ABS) products. | Focused on ABS electroplating defects; may not generalize to other materials or industries. |

| Model | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | FPS |
|---|---|---|---|---|---|
| ConvNeXtV2 | 85.9 | 81.6 | 86.7 | 53.6 | 60.7 |
| LskNet | 85.5 | 80 | 87 | 53.7 | 57.7 |
| StarNet | 85.7 | 81.1 | 86.7 | 54.2 | 107.8 |
| MobileNetV4 | 87 | 80.2 | 86.8 | 53.8 | 99.8 |
| FasterNet | 86.4 | 84 | 89.1 | 56.6 | 125.3 |

| Category | Number of Pictures | Number of Annotations |
|---|---|---|
| scratch | 1053 | 2858 |
| hole | 1060 | 1727 |
| wet | 1715 | 2355 |
| all | 3828 | 6940 |
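
As a quick consistency check on the dataset table above, the short sketch below recomputes the totals and derives the average number of annotations per picture and each category's share of all annotations. The variable names are illustrative, and the derived ratios are simple arithmetic on the table values rather than figures reported by the authors.

```python
# (pictures, annotations) per defect category, copied from the dataset table.
dataset = {
    "scratch": (1053, 2858),
    "hole": (1060, 1727),
    "wet": (1715, 2355),
}

total_pictures = sum(pictures for pictures, _ in dataset.values())
total_annotations = sum(annotations for _, annotations in dataset.values())

# Average number of annotated defects per picture, by category.
annotations_per_picture = {
    name: round(annotations / pictures, 2)
    for name, (pictures, annotations) in dataset.items()
}

# Each category's share of all annotations, in percent.
annotation_share = {
    name: round(100 * annotations / total_annotations, 1)
    for name, (_, annotations) in dataset.items()
}

for name in dataset:
    print(f"{name:8s} {annotations_per_picture[name]:5.2f} per picture, "
          f"{annotation_share[name]:5.1f}% of annotations")
```

The recomputed totals match the "all" row (3828 pictures, 6940 annotations). The scratch category carries the most annotations per picture, which is consistent with scratches tending to occur several at a time on one package.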

| Name | Environment Configuration |
|---|---|
| Operating system | Ubuntu 22.04 |
| CPU | Intel(R) Xeon(R) E5-2680 v4 @ 2.40 GHz |
| GPU | RTX 4090 |
| GPU graphics memory | 24 GB |
| Programming language | Python 3.10 |
| Deep learning framework | PyTorch 2.2.2 and CUDA 12.1 |

| FasterNet | C3k2_GhostDynamicConv | Aux | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | GFLOPs | FPS | Params (M) |
|---|---|---|---|---|---|---|---|---|---|
| ✕ | ✕ | ✕ | 85.8 | 81.4 | 87.7 | 55.4 | 6.3 | 142.7 | 2.58 |
| √ | ✕ | ✕ | 86.4 | 84 | 89.1 | 56.6 | 9.2 | 125.3 | 3.90 |
| ✕ | √ | ✕ | 83.6 | 83.2 | 88.3 | 55.2 | 5.4 | 61.7 | 2.22 |
| ✕ | ✕ | √ | 86.8 | 80.6 | 88.6 | 56.4 | 6.3 | 141 | 2.58 |
| √ | √ | ✕ | 87.3 | 81.5 | 89.1 | 57.3 | 8.7 | 80.7 | 3.71 |
| √ | ✕ | √ | 86.4 | 82.9 | 89.4 | 58.1 | 9.2 | 122.5 | 3.90 |
| ✕ | √ | √ | 84.4 | 84.8 | 89.4 | 56.5 | 5.8 | 89.1 | 2.39 |
| √ | √ | √ | 86.9 | 82.7 | 90.0 | 56.8 | 8.7 | 82.7 | 3.71 |
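
To make the trade-off in the ablation table above explicit, the sketch below compares the first row (no modules enabled) with the last row (all three modules enabled). The helper names `deltas` and `relative_change` are illustrative; the numbers are copied from the table, and the differences are plain arithmetic rather than values stated by the authors.

```python
METRICS = ("P", "R", "mAP50", "mAP50_95", "GFLOPs", "FPS", "Params_M")

# First ablation row: no FasterNet, no C3k2_GhostDynamicConv, no Aux.
baseline = dict(zip(METRICS, (85.8, 81.4, 87.7, 55.4, 6.3, 142.7, 2.58)))
# Last ablation row: all three modules enabled (YOLO11-FGA).
full = dict(zip(METRICS, (86.9, 82.7, 90.0, 56.8, 8.7, 82.7, 3.71)))


def deltas(model, reference):
    """Absolute change per metric (percentage points for P, R, and mAP)."""
    return {k: round(model[k] - reference[k], 2) for k in reference}


def relative_change(model, reference):
    """Change per metric as a percentage of the reference value."""
    return {
        k: round(100 * (model[k] - reference[k]) / reference[k], 1)
        for k in reference
    }


change = deltas(full, baseline)
rel = relative_change(full, baseline)

for metric in METRICS:
    print(f"{metric:9s} {change[metric]:+7.2f}  ({rel[metric]:+.1f}%)")
```

Read this way, the full model gains 2.3 points of mAP@0.5 and 1.4 points of mAP@0.5:0.95 over the baseline, while FPS falls from 142.7 to 82.7 and the parameter count rises from 2.58 M to 3.71 M.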

| Model | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | GFLOPs |
|---|---|---|---|---|---|
| YOLOv5n | 85.7 | 82.1 | 87.9 | 52.5 | 4.1 |
| YOLOv8n | 85.7 | 81.5 | 88.6 | 56.4 | 8.1 |
| YOLOv10n | 84.5 | 76.9 | 85.9 | 54.7 | 8.2 |
| YOLOv11n | 85.8 | 81.4 | 87.7 | 55.4 | 6.3 |
| YOLOv11s | 84.8 | 81.7 | 87.3 | 57.2 | 21.3 |
| RT-DETRr18 [26] | 83.4 | 77.7 | 83.8 | 52.0 | 56.95 |
| PP-YOLOEs [27] | 85.4 | 83.2 | 87.6 | 55.9 | 18.96 |
| Hyper-YOLOt [28] | 86.4 | 82.1 | 88.2 | 56.7 | 8.91 |
| YOLO11-FGA | 86.9 | 82.7 | 90.0 | 56.8 | 8.7 |
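
The comparison table above can also be read column by column to see which model leads on each metric. The sketch below is one illustrative way to do that; the `best` helper and the column keys are hypothetical names, and the values are copied from the table.

```python
COLUMNS = ("P", "R", "mAP50", "mAP50_95", "GFLOPs")

# Rows copied from the comparison table (citation markers omitted from names).
rows = {
    "YOLOv5n": (85.7, 82.1, 87.9, 52.5, 4.1),
    "YOLOv8n": (85.7, 81.5, 88.6, 56.4, 8.1),
    "YOLOv10n": (84.5, 76.9, 85.9, 54.7, 8.2),
    "YOLOv11n": (85.8, 81.4, 87.7, 55.4, 6.3),
    "YOLOv11s": (84.8, 81.7, 87.3, 57.2, 21.3),
    "RT-DETRr18": (83.4, 77.7, 83.8, 52.0, 56.95),
    "PP-YOLOEs": (85.4, 83.2, 87.6, 55.9, 18.96),
    "Hyper-YOLOt": (86.4, 82.1, 88.2, 56.7, 8.91),
    "YOLO11-FGA": (86.9, 82.7, 90.0, 56.8, 8.7),
}


def best(column, lowest=False):
    """Name of the model with the highest (or lowest) value in a column."""
    index = COLUMNS.index(column)
    pick = min if lowest else max
    return pick(rows, key=lambda name: rows[name][index])


leaders = {column: best(column) for column in COLUMNS[:-1]}
leaders["GFLOPs"] = best("GFLOPs", lowest=True)  # lower cost is better

for column, name in leaders.items():
    print(f"{column:9s} {name}")
```

On these numbers YOLO11-FGA leads on precision and mAP@0.5, while PP-YOLOEs has the highest recall, YOLOv11s the highest mAP@0.5:0.95, and YOLOv5n the lowest GFLOPs.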

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, P.; Qiang, G.; Fan, Y.; Du, Y.; Yang, J.; Tian, Z. YOLO11-FGA: Express Package Quality Detection Based on Improved YOLO11. Information 2025, 16, 1021. https://doi.org/10.3390/info16121021
