Digital Surface Model and Fractal-Guided Multi-Directional Network for Remote Sensing Image Super-Resolution

Abstract

1. Introduction

2. Related Work

2.1. Single-Image Super-Resolution (SISR)

2.2. Remote Sensing Image Super-Resolution (RSISR)

2.3. Fractal Theory

3. Proposed Method

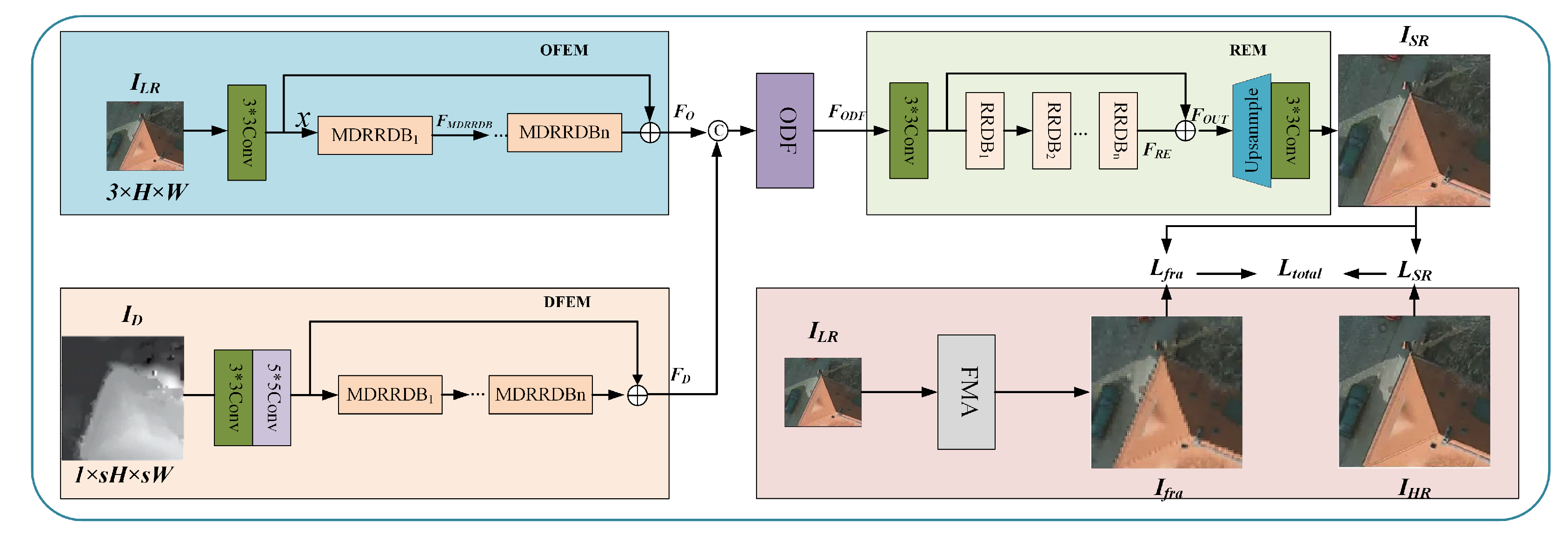

3.1. Network Architecture

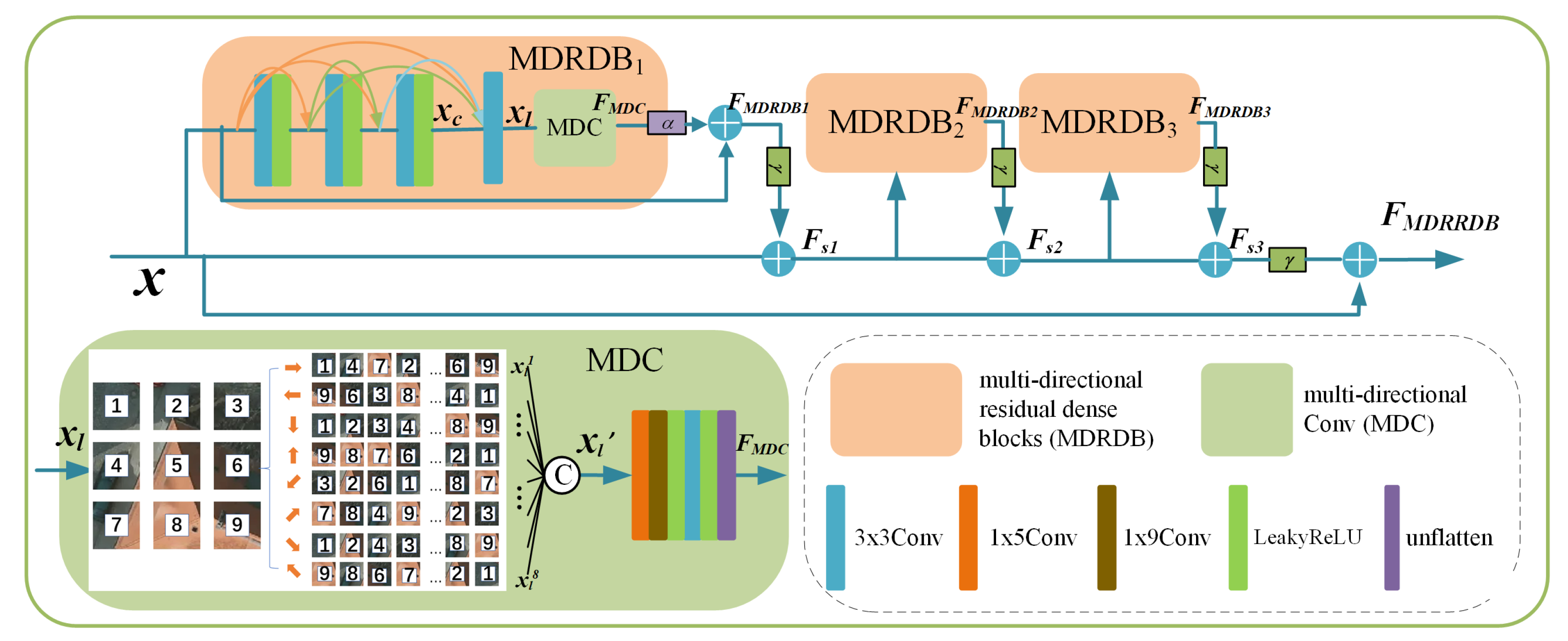

3.2. Multi-Directional Residual-in-Residual Dense Block (MDRRDB)

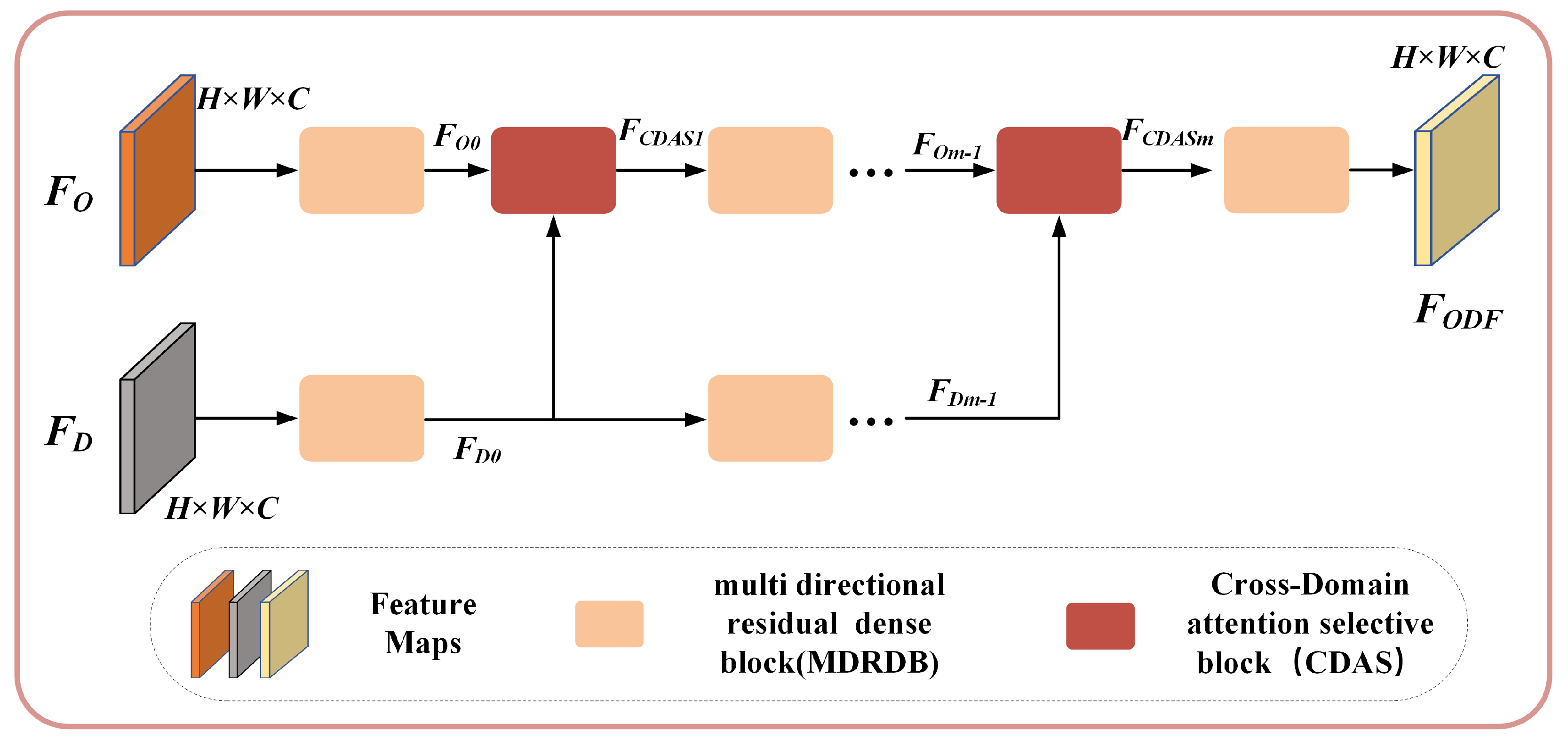

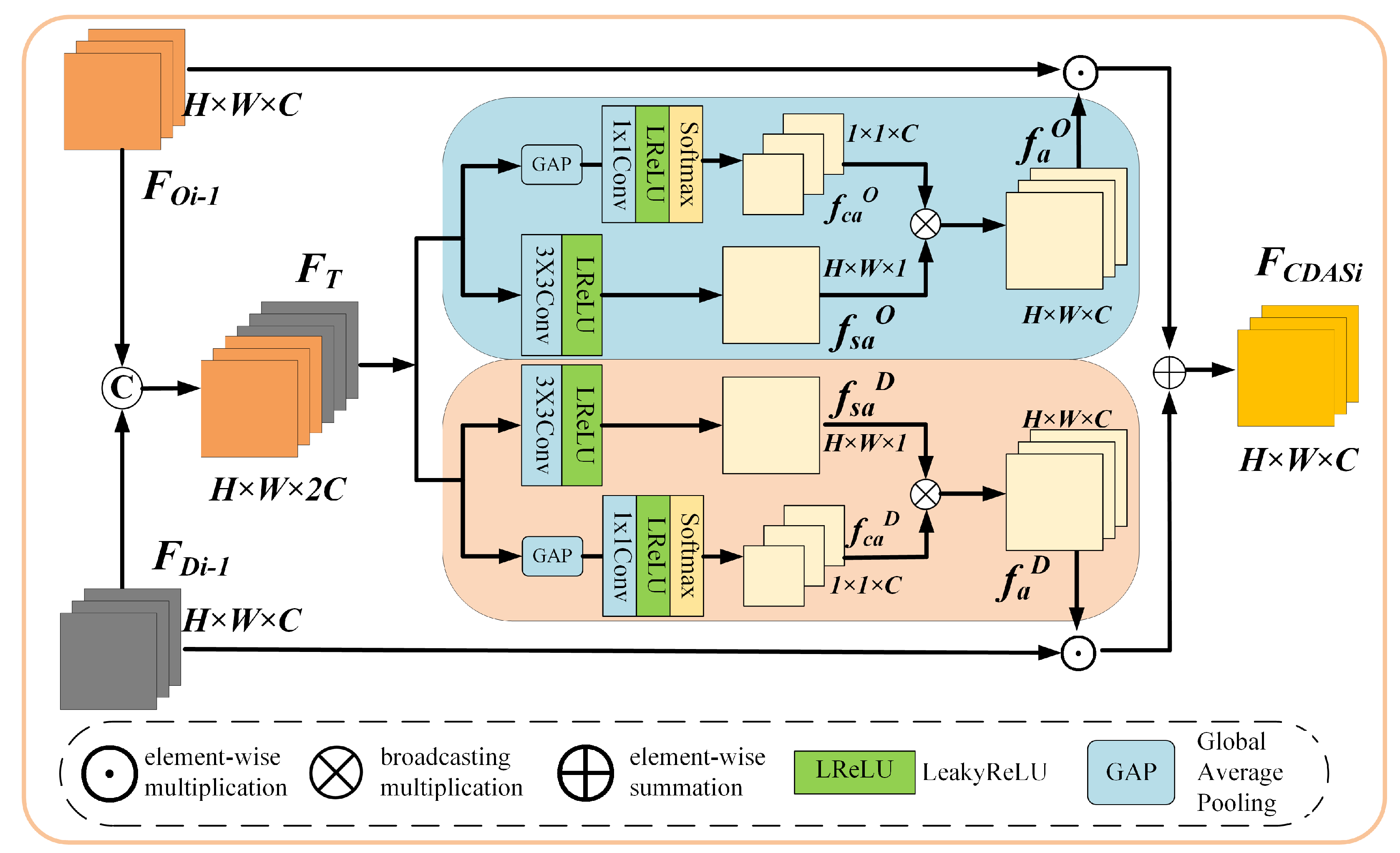

3.3. Optical and DSM Fusion Module (ODF)

3.4. Fractal Mapping Super-Resolution Algorithm (FMA) and Fractal Loss Function

| Algorithm 1 Fractal Mapping Super-Resolution Algorithm |
|---|
| Input: optical image, scaling factor s |
| Output: fractal-based SR image |
| Initialize: |
| Generate fractal coordinates: |
| for … do |
| for … do |
| if … then |
| end if |
| Update: |
| if … then |
| end if |
| end for |
| end for |
| return … |

4. Experimental Results and Analyses

4.1. Dataset

4.2. Evaluation Metrics and Implementation Details
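The comparison and ablation tables report PSNR (in dB) and SSIM. For reference, a minimal PSNR sketch follows. It assumes single-channel 8-bit images (peak value 255) compared over every pixel. The paper's exact protocol (color space, border cropping, channel averaging) is not stated in this text, so those choices are assumptions rather than the authors' evaluation code.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized images.

    Images are nested sequences: a list of rows of pixel values (one channel).
    Returns math.inf when the two images are identical.
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("images must be non-empty and have the same number of rows")
    squared_error, count = 0.0, 0
    for ref_row, est_row in zip(reference, estimate):
        if len(ref_row) != len(est_row):
            raise ValueError("rows must have the same length")
        for r, e in zip(ref_row, est_row):
            squared_error += (float(r) - float(e)) ** 2
            count += 1
    if count == 0:
        raise ValueError("images contain no pixels")
    mse = squared_error / count  # mean squared error over all pixels
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)
```

For example, an all-zero 2×2 reference against an all-one estimate has an MSE of 1, so `psnr` returns 20·log10(255), about 48.13 dB. For multi-band images, a common choice is to average the squared error over all bands before taking the logarithm.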

4.3. Comparison with State-of-the-Art Methods

4.3.1. Quantitative Comparison

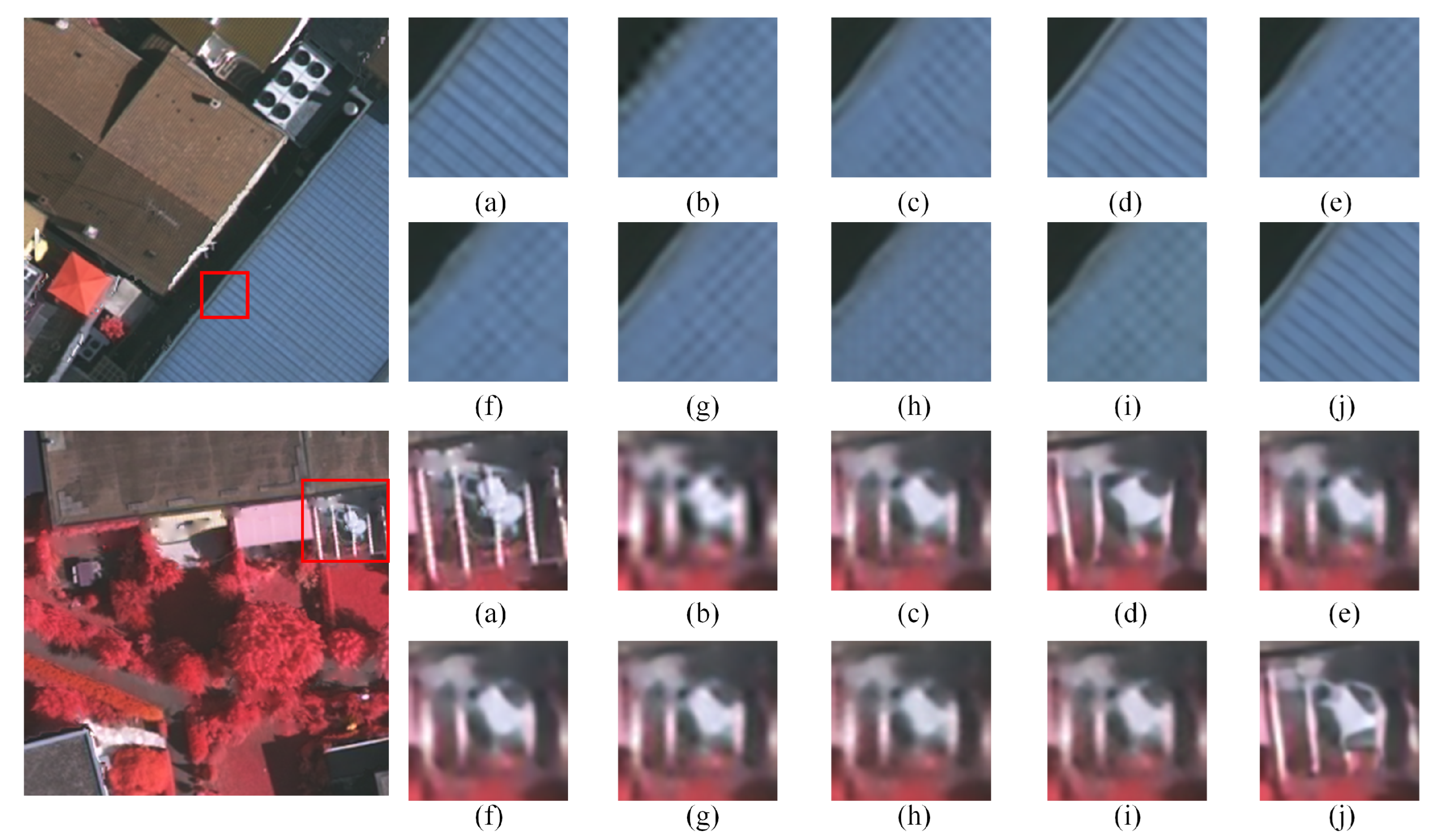

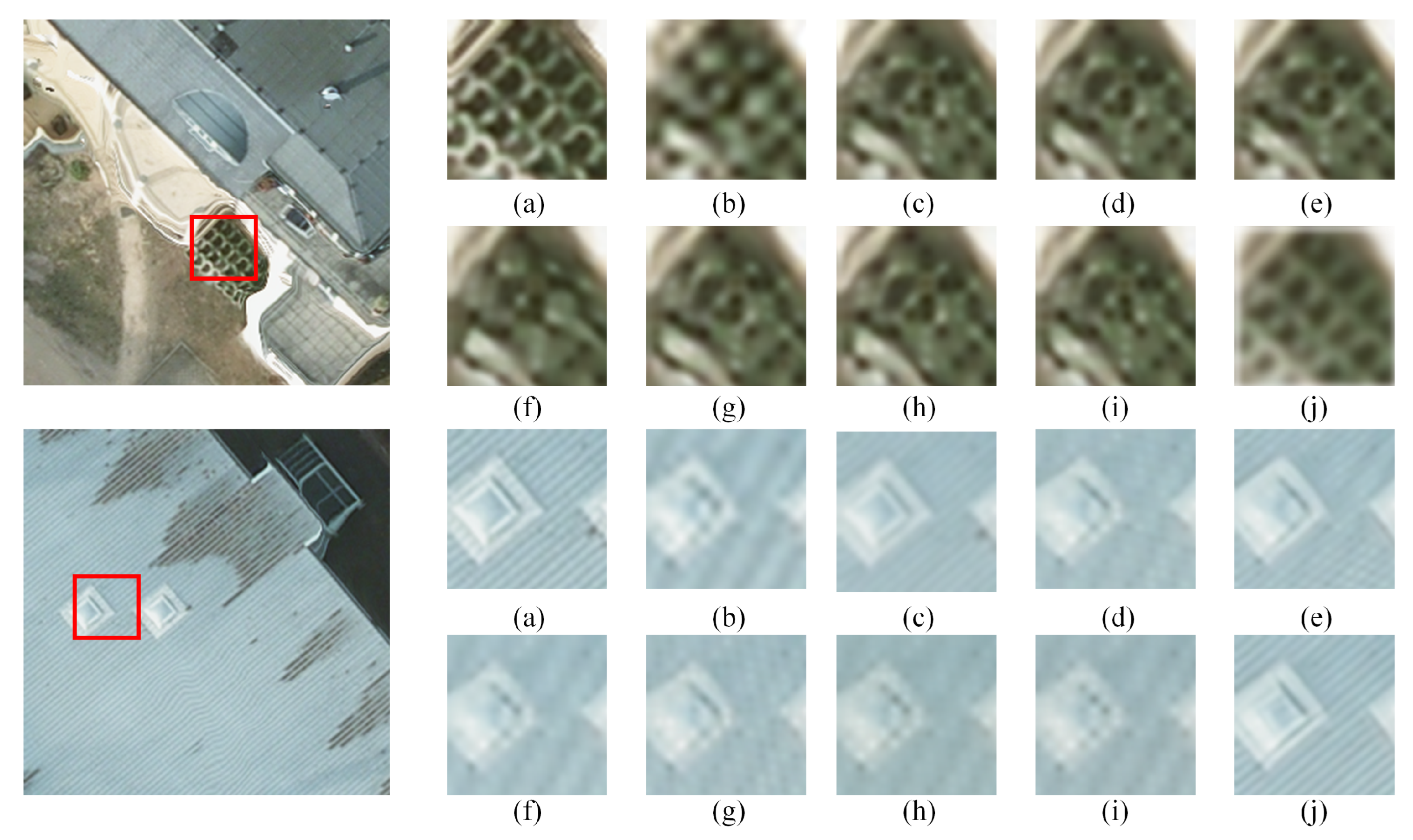

4.3.2. Qualitative Comparison

4.4. Complexity Analysis

4.5. Statistical Significance Analysis

4.6. Ablation Experiments

4.6.1. Study of

4.6.2. Effectiveness of the DFEM, ODF, and MDRRDB

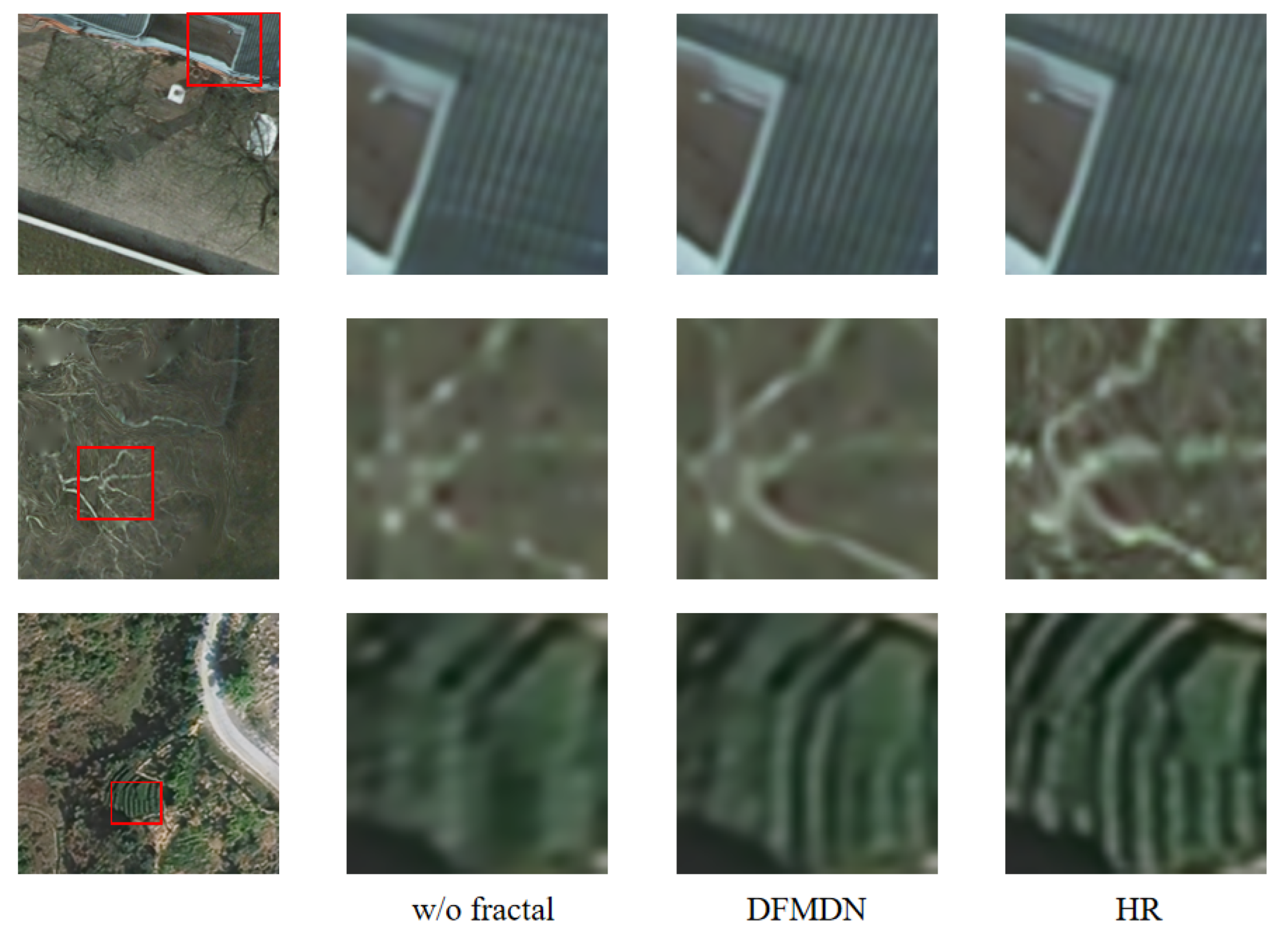

4.6.3. Effectiveness of the FMA

4.6.4. Study of the ODF

4.6.5. Study of the Number of Directions in MDRRDB

4.6.6. Study of the Number of CDAS in ODF

4.7. Experiments on a County Dataset

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Pruitt, E.L. The office of naval research and geography. Ann. Assoc. Am. Geogr. 1979, 69, 103–108.

- Lillesand, T.; Kiefer, R.W.; Chipman, J. Remote Sensing and Image Interpretation; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- Tucker, C.J.; Townshend, J.R.; Goff, T.E. African land-cover classification using satellite data. Science 1985, 227, 369–375.

- Tralli, D.M.; Blom, R.G.; Zlotnicki, V.; Donnellan, A.; Evans, D.L. Satellite remote sensing of earthquake, volcano, flood, landslide and coastal inundation hazards. ISPRS J. Photogramm. Remote Sens. 2005, 59, 185–198.

- Williams, D.L.; Goward, S.; Arvidson, T. Landsat. Photogramm. Eng. Remote Sens. 2006, 72, 1171–1178.

- Schowengerdt, R.A. Remote Sensing: Models and Methods for Image Processing; Elsevier: Amsterdam, The Netherlands, 2006.

- Nakazawa, S.; Iwasaki, A. Super-resolution imaging using remote sensing platform. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1987–1990.

- Vishnukumar, S.; Wilscy, M. Super-resolution for remote sensing images using content adaptive detail enhanced self examples. In Proceedings of the 2016 International Conference on Circuit, Power and Computing Technologies (ICCPCT), Nagercoil, India, 18–19 March 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–5.

- Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS-like image fusion methods. Inf. Fusion 2001, 2, 177–186.

- Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2565–2586.

- González-Audícana, M.; Saleta, J.L.; Catalán, R.G.; García, R. Fusion of multispectral and panchromatic images using improved IHS and PCA mergers based on wavelet decomposition. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1291–1299.

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 2016, 8, 594.

- Liebel, L.; Körner, M. Single-image super resolution for multispectral remote sensing data using convolutional neural networks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 883–890.

- Haut, J.M.; Fernandez-Beltran, R.; Paoletti, M.E.; Plaza, J.; Plaza, A.; Pla, F. A new deep generative network for unsupervised remote sensing single-image super-resolution. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6792–6810.

- Haut, J.M.; Paoletti, M.E.; Fernández-Beltran, R.; Plaza, J.; Plaza, A.; Li, J. Remote sensing single-image superresolution based on a deep compendium model. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1432–1436.

- Wang, Y.; Shao, Z.; Lu, T.; Wu, C.; Wang, J. Remote sensing image super-resolution via multiscale enhancement network. IEEE Geosci. Remote Sens. Lett. 2023, 20, 5000905.

- Zhang, D.; Shao, J.; Li, X.; Shen, H.T. Remote sensing image super-resolution via mixed high-order attention network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5183–5196.

- He, D.; Zhong, Y. Deep hierarchical pyramid network with high-frequency-aware differential architecture for super-resolution mapping. IEEE Trans. Geosci. Remote Sens. 2023, 61.

- Huan, H.; Li, P.; Zou, N.; Wang, C.; Xu, D. End-to-End Super-Resolution for Remote-Sensing Images Using an Improved Multi-Scale Residual Network. Remote Sens. 2021, 13, 666.

- Jiang, K.; Wang, Z.; Yi, P.; Wang, G.; Lu, T.; Jiang, J. Edge-enhanced GAN for remote sensing image super resolution. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5799–5812.

- Li, H.; Deng, W.; Zhu, Q.; Guan, Q.; Luo, J. Local-Global Context-Aware Generative Dual-Region Adversarial Networks for Remote Sensing Scene Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5402114.

- Zhao, S.; Chen, H.; Zhang, X.; Xiao, P.; Bai, L.; Ouyang, W. RS-Mamba for Large Remote Sensing Image Dense Prediction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5633314.

- Lei, S.; Shi, Z. Hybrid-scale self-similarity exploitation for remote sensing image super-resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5401410.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1646–1654.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Change Loy, C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision Workshops, Munich, Germany, 20–22 September 2019; pp. 63–79.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 286–301.

- Dai, T.; Cai, J.; Zhang, Y.; Xia, S.T.; Zhang, L. Second-order attention network for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 11065–11074.

- Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using Swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Virtual, 11–17 October 2021; pp. 1833–1844.

- Liu, C.; Yang, H.; Fu, J.; Qian, X. Learning trajectory-aware transformer for video super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5687–5696.

- Chen, X.; Wang, X.; Zhou, J.; Dong, C. Activating more pixels in image super-resolution transformer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 22367–22377.

- Yekeben, Y.; Cheng, S.; Du, A. CGFTNet: Content-Guided Frequency Domain Transform Network for Face Super-Resolution. Information 2024, 15, 765.

- Yao, X.; Pan, Y.; Wang, J. An omnidirectional image super-resolution method based on enhanced SwinIR. Information 2024, 15, 248.

- Lei, S.; Shi, Z.; Zou, Z. Super-resolution for RSIs via local–global combined network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1243–1247.

- Lu, T.; Wang, J.; Zhang, Y.; Wang, Z.; Jiang, J. Satellite image super-resolution via multi-scale residual deep neural network. Remote Sens. 2019, 11, 1588.

- Ma, C.; Rao, Y.; Cheng, Y.; Chen, C.; Lu, J.; Zhou, J. Structure-preserving super resolution with gradient guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 7769–7778.

- Zhao, K.; Lu, T.; Wang, J.; Zhang, Y.; Jiang, J.; Xiong, Z. Hyper-Laplacian Prior for Remote Sensing Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5634514.

- Jia, S.; Wang, Z.; Li, Q.; Jia, X.; Xu, M. Multiattention Generative Adversarial Network for Remote Sensing Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5624715.

- Dong, R.; Zhang, L.; Fu, H. RRSGAN: Reference-Based Super-Resolution for Remote Sensing Image. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5601117.

- Tu, Z.; Yang, X.; He, X.; Yan, J.; Xu, T. RGTGAN: Reference-Based Gradient-Assisted Texture-Enhancement GAN for Remote Sensing Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5607221.

- Meng, F.; Wu, S.; Li, Y.; Zhang, Z.; Feng, T.; Liu, R.; Du, Z. Single remote sensing image super-resolution via a generative adversarial network with stratified dense sampling and chain training. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5400822.

- Cannon, J.W.; Floyd, W.J.; Parry, W.R. Crystal growth, biological cell growth, and geometry. In Pattern Formation in Biology: Vision, and Dynamics; World Scientific: Singapore, 2000; pp. 65–82.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; IEEE: Piscataway, NJ, USA, 1999; Volume 2, pp. 1150–1157.

- Ghazel, M.; Freeman, G.H.; Vrscay, E.R. Fractal image denoising. IEEE Trans. Image Process. 2003, 12, 1560–1578.

- Zhang, Y.; Fan, Q.; Bao, F.; Liu, Y.; Zhang, C. Single-Image Super-Resolution Based on Rational Fractal Interpolation. IEEE Trans. Image Process. 2018, 27, 3782–3797.

- Hua, Z.; Zhang, H.; Li, J. Image Super Resolution Using Fractal Coding and Residual Network. Complexity 2019, 2019.

- Song, X.; Liu, W.; Liang, L.; Shi, W.; Xie, G.; Lu, X.; Hei, X. Image super-resolution with multi-scale fractal residual attention network. Comput. Graph. 2023, 113, 21–31.

- Rottensteiner, F.; Sohn, G.; Jung, J.; Gerke, M.; Baillard, C.; Benitez, S.; Breitkopf, U. The ISPRS benchmark on urban object classification and 3D building reconstruction. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, I-3, 293–298.

- Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Wang, S.; Zhou, T.; Lu, Y.; Di, H. Contextual transformation network for lightweight remote-sensing image super-resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Xiao, Y.; Yuan, Q.; Jiang, K.; He, J.; Lin, C.W.; Zhang, L. TTST: A top-k token selective transformer for remote sensing image super-resolution. IEEE Trans. Image Process. 2024, 33, 738–752.

| Method | Scale | Vaihingen PSNR (dB) | Vaihingen SSIM | Potsdam PSNR (dB) | Potsdam SSIM |
|---|---|---|---|---|---|
| Bicubic | | 34.9100 | 0.9433 | 37.9500 | 0.9573 |
| EDSR | | 37.4575 | 0.9655 | 40.6685 | 0.9679 |
| RCAN | | 37.8421 | 0.9679 | 40.7080 | 0.9685 |
| RRDBNet | | 37.1978 | 0.9636 | 40.3393 | 0.9663 |
| CTNet | | 36.9865 | 0.9626 | 40.3567 | 0.9668 |
| HSENet | | 37.4813 | 0.9658 | 40.6847 | 0.9681 |
| MHAN | | 37.5879 | 0.9663 | 40.7405 | 0.9683 |
| TTST | | 37.8165 | 0.9678 | 40.7555 | 0.9688 |
| DFMDN (ours) | | 37.8622 | 0.9682 | 40.9591 | 0.9696 |
| Bicubic | | 27.9300 | 0.8086 | 30.0600 | 0.8051 |
| EDSR | | 29.8381 | 0.8540 | 32.7318 | 0.8631 |
| RCAN | | 29.6331 | 0.8564 | 33.0310 | 0.8646 |
| RRDBNet | | 29.7839 | 0.8538 | 33.0146 | 0.8641 |
| CTNet | | 29.4021 | 0.8425 | 32.3686 | 0.8533 |
| HSENet | | 29.7186 | 0.8534 | 32.8000 | 0.8604 |
| MHAN | | 29.6125 | 0.8503 | 32.9895 | 0.8636 |
| TTST | | 29.8667 | 0.8564 | 32.9834 | 0.8635 |
| DFMDN (ours) | | 30.0023 | 0.8578 | 33.0796 | 0.8644 |
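The margins in the comparison table are small, so it can help to compute them explicitly. The sketch below copies the learned-method rows from the table (Bicubic is omitted because it is never the strongest competitor) and, for each column, reports the best competing method and DFMDN's margin over it. The scale column is empty in the table, so the two row blocks are keyed here by the hypothetical labels `block_1` (first nine rows) and `block_2` (last nine rows).

```python
# Values copied from the comparison table.
# Column order: Vaihingen PSNR, Vaihingen SSIM, Potsdam PSNR, Potsdam SSIM.
# "block_1" / "block_2" are hypothetical labels for the two scale blocks.
COLUMNS = ("Vaihingen PSNR", "Vaihingen SSIM", "Potsdam PSNR", "Potsdam SSIM")
TABLE = {
    "block_1": {
        "EDSR": (37.4575, 0.9655, 40.6685, 0.9679),
        "RCAN": (37.8421, 0.9679, 40.7080, 0.9685),
        "RRDBNet": (37.1978, 0.9636, 40.3393, 0.9663),
        "CTNet": (36.9865, 0.9626, 40.3567, 0.9668),
        "HSENet": (37.4813, 0.9658, 40.6847, 0.9681),
        "MHAN": (37.5879, 0.9663, 40.7405, 0.9683),
        "TTST": (37.8165, 0.9678, 40.7555, 0.9688),
        "DFMDN": (37.8622, 0.9682, 40.9591, 0.9696),
    },
    "block_2": {
        "EDSR": (29.8381, 0.8540, 32.7318, 0.8631),
        "RCAN": (29.6331, 0.8564, 33.0310, 0.8646),
        "RRDBNet": (29.7839, 0.8538, 33.0146, 0.8641),
        "CTNet": (29.4021, 0.8425, 32.3686, 0.8533),
        "HSENet": (29.7186, 0.8534, 32.8000, 0.8604),
        "MHAN": (29.6125, 0.8503, 32.9895, 0.8636),
        "TTST": (29.8667, 0.8564, 32.9834, 0.8635),
        "DFMDN": (30.0023, 0.8578, 33.0796, 0.8644),
    },
}


def margins(rows, ours="DFMDN"):
    """Map each column to (best competitor, ours minus that competitor's score)."""
    out = {}
    for i, col in enumerate(COLUMNS):
        competitors = {name: vals[i] for name, vals in rows.items() if name != ours}
        best = max(competitors, key=competitors.get)  # the first-listed method wins ties
        out[col] = (best, round(rows[ours][i] - competitors[best], 4))
    return out


if __name__ == "__main__":
    for block, rows in TABLE.items():
        for col, (best, delta) in margins(rows).items():
            print(f"{block} {col}: {delta:+.4f} vs {best}")
```

A negative margin means a competitor scores higher in that column. In the second block, RCAN's Potsdam SSIM (0.8646) is 0.0002 above DFMDN's (0.8644), while DFMDN leads in the other seven columns.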

| Model | Params (M) | FLOPs (G) | Time (s) |
|---|---|---|---|
| EDSR | 1.52 | 8.12 | 0.32 |
| RCAN | 12.61 | 53.16 | 0.54 |
| RRDBNet | 16.70 | 73.43 | 0.38 |
| CTNet | 0.35 | 1.04 | 0.39 |
| HSENet | 5.29 | 16.70 | 0.56 |
| MHAN | 11.20 | 46.31 | 0.36 |
| TTST | 18.30 | 76.84 | 0.42 |
| DFMDN (ours) | 13.89 | 62.03 | 0.39 |
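The Params and FLOPs columns above are totals over all layers of each network. For a single convolution, both numbers follow directly from its shape. The sketch below counts them for a square-kernel, stride-1, "same"-padded, ungrouped convolution. It is illustrative only: the input resolution behind the FLOPs column is not stated here, the three-layer stack in the example is hypothetical, and profiling tools differ on whether one multiply-accumulate (MAC) is counted as one FLOP or two.

```python
def conv2d_cost(c_in, c_out, kernel_size, height, width, bias=True):
    """Parameter count and multiply-accumulate count (MACs) of one 2D convolution.

    Assumes a square kernel, stride 1, 'same' padding (output is height x width)
    and no channel grouping.
    """
    weights = c_out * c_in * kernel_size * kernel_size
    params = weights + (c_out if bias else 0)
    macs = weights * height * width  # every weight is used once per output pixel
    return params, macs


def total_cost(layers, height, width):
    """Sum conv2d_cost over (c_in, c_out, kernel_size) tuples at one resolution."""
    params = macs = 0
    for c_in, c_out, k in layers:
        p, m = conv2d_cost(c_in, c_out, k, height, width)
        params += p
        macs += m
    return params, macs


if __name__ == "__main__":
    # Hypothetical stack: a 3->64 head followed by two 64->64 convolutions on a 48x48 input.
    params, macs = total_cost([(3, 64, 3), (64, 64, 3), (64, 64, 3)], 48, 48)
    print(f"Params: {params / 1e6:.3f} M, MACs: {macs / 1e9:.3f} G")
```

Because MACs scale with height × width while parameters do not, FLOPs figures are only comparable across models when they are measured at the same input size.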

| Metric | TTST | Proposed (DFMDN) | Wilcoxon p-Value |
|---|---|---|---|
| PSNR | * | | |
| SSIM | * | | |
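The table above reports a Wilcoxon p-value for the paired comparison of TTST and DFMDN. To illustrate how such a p-value can be obtained from per-image scores, here is a minimal sketch of the two-sided Wilcoxon signed-rank test using the large-sample normal approximation with a tie-corrected variance. It assumes paired per-image PSNR (or SSIM) values, which are not given in this text, and it is not necessarily the authors' exact procedure: library implementations such as SciPy's `scipy.stats.wilcoxon` may use an exact distribution for small samples or a continuity correction, which can shift the p-value slightly.

```python
import math


def wilcoxon_signed_rank(baseline, proposed):
    """Two-sided Wilcoxon signed-rank test on paired scores.

    Uses the normal approximation with tie-corrected variance and no continuity
    correction; zero differences are discarded. Returns (W_plus, z, p_value),
    where W_plus is the rank sum of pairs in which `proposed` scores higher.
    """
    if len(baseline) != len(proposed):
        raise ValueError("paired samples must have equal length")
    diffs = [p - b for b, p in zip(baseline, proposed) if p != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank |d| in ascending order, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over tie groups, used in the variance
    start = 0
    while start < n:
        end = start
        while end + 1 < n and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        average_rank = (start + end) / 2 + 1  # ranks are 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = average_rank
        t = end - start + 1
        tie_term += t ** 3 - t
        start = end + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, z, p_value
```

For example, `wilcoxon_signed_rank(ttst_psnr, dfmdn_psnr)`, with two equally long lists of per-image PSNR values (hypothetical names), returns the signed-rank statistic, its z-score, and the two-sided p-value. For small test sets, an exact test is preferable to this approximation.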

| Model Setting | PSNR | SSIM |
|---|---|---|
| = 0.5 | 32.7749 | 0.8597 |
| = 0.4 | 32.8557 | 0.8619 |
| = 0.3 | 32.9754 | 0.8631 |
| = 0.2 | 33.0157 | 0.8636 |
| = 0.1 (the final) | 33.0796 | 0.8644 |

| Model Setting | PSNR | SSIM |
|---|---|---|
| w/o DFEM | 32.9085 | 0.8613 |
| w/o ODF | 32.9706 | 0.8621 |
| w/o MDRRDB | 32.9913 | 0.8632 |
| DFMDN (the final) | 33.0796 | 0.8644 |

| Model Setting | PSNR | SSIM |
|---|---|---|
| Sum | 32.9036 | 0.8623 |
| Concat | 32.9703 | 0.8621 |
| Channel attention | 32.9687 | 0.8635 |
| CDAS (the final) | 33.0796 | 0.8644 |

| Number of Directions | PSNR | SSIM |
|---|---|---|
| 1 | 32.9913 | 0.8632 |
| 2 | 33.0439 | 0.8640 |
| 4 | 33.0714 | 0.8642 |
| 8 (the final) | 33.0796 | 0.8644 |

| Number of CDAS | PSNR | SSIM |
|---|---|---|
| 1 | 33.0388 | 0.8637 |
| 2 (the final) | 33.0796 | 0.8644 |
| 3 | 33.0837 | 0.8644 |
| 4 | 33.0839 | 0.8645 |

| Model Setting | PSNR | SSIM |
|---|---|---|
| w/o DFEM | 25.9457 | 0.7291 |
| w/o ODF | 25.8749 | 0.7277 |
| w/o MDRRDB | 25.8997 | 0.7296 |
| DFMDN (the final) | 26.0039 | 0.7300 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, S.; He, J.; Zhao, B. Digital Surface Model and Fractal-Guided Multi-Directional Network for Remote Sensing Image Super-Resolution. Information 2025, 16, 1020. https://doi.org/10.3390/info16121020
