1. Introduction

Temporal multimodality provides avenues for heterogeneous information processing. Efficient handling of such phenomena significantly enhances the ability to account for a wide range of diverse data types in unified platforms and environments. Recent artificial intelligence (AI) models [1, 2, 3] process multimodality in unprecedented ways, often quite natively using deep neural architectures [4, 5]. From real-time language translation to reasoning over images and video processing and captioning, the breakthroughs in recent models are expected to drive a new era of products and innovations that leverage these advances (e.g., [6, 7, 8]).

Handling such diversity requires a foundation that accounts for differences in both form and latency. Especially in time-sensitive domains, the synchronization of different, time-varying forms must be continually assessed to ensure a seamless flow with minimal discrepancies. The computational complexity of new AI-driven solutions must be examined and thoroughly tested to select candidates that offer potential improvements and novelty over traditional methods.

Data in many applications often rely on timely transmission to remain valid [9, 10, 11]. When data are inherently asynchronous, delays and drops must be handled without undermining the fidelity of the flow and communication. Multimodality introduces new challenges, particularly when operating under hard real-time constraints, as in online streams. The time base on which such a flow depends may significantly contribute to the interpretability of the transmitted data. While strict constraints are necessary in many domains, handling errors and missing values is also important. Diffusion algorithms and models [2] have recently achieved a significant milestone in generative performance. In some domains, conditional diffusion models [12] have shown 40–65% improvements when applied to imputing missing values in time series. The goal of this work is to equip traditional simulators with learnability to reduce staleness caused by drops or delays in multimodal streams while adhering to the formal temporal properties of the underlying modeling framework.

Thus, this paper establishes a computational foundation for simulating information flow that inherently accommodates temporal multimodality (e.g., neural encoding and diffusion) and offers significant solutions to regular timing and delays. Combining methods across layers—from symbolic reasoning to signal processing—can be challenging due to interdisciplinary barriers. However, having a framework that enables such multilayered assessment by leveraging recent AI models stands to offer significant resolutions for widely known challenging issues.

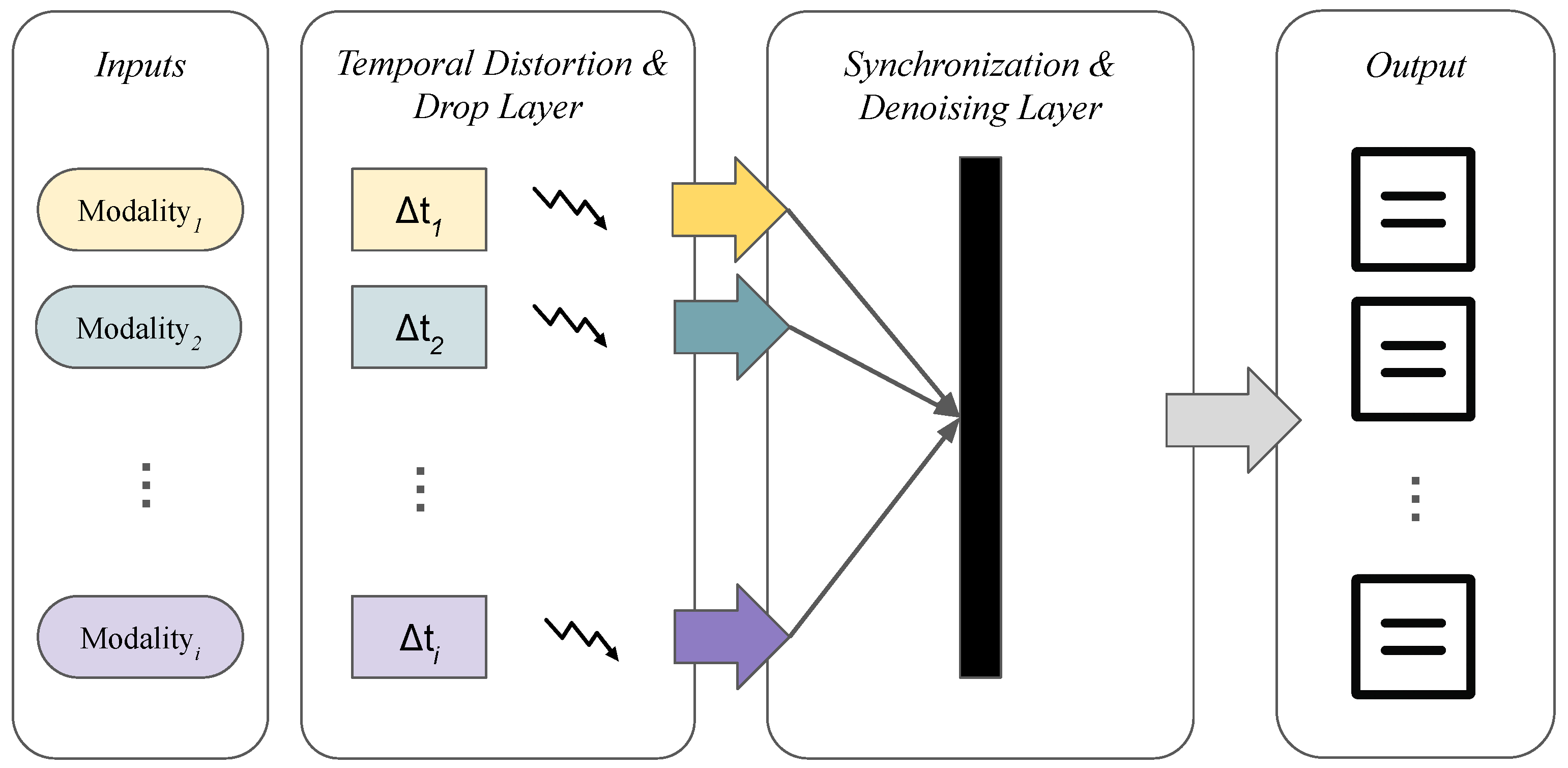

Figure 1 illustrates how modern communications may take place given temporal multimodality. Various forms of inputs enter the pipeline through different ports and channels. Each form is subject to a different latency. Additionally, jitter during transmission further increases flow variability due to differences in the modalities’ latencies. The synchronization layer must therefore accommodate such variations. To effectively reconstruct the flow, the layer uses a suitable AI model tailored to each modality for denoising. The outcome is a correctly constructed stream according to a defined quality threshold.

Thus, a simulation modeling framework is proposed in this work to precisely account for the variability and jitter in multimodal flows. The framework models each modality as an incoming input to the system, characterized by distinct latency and jitter. Each input encounters variable delays derived from a predetermined distribution. It simulates the varying latencies each modality encounters, as well as the jitter each latency may undergo. Specific dropping semantics can also be introduced at this stage. At the synchronization layer, the implemented model expects incoming inputs with various latencies and combines them to form the corresponding flow. At this layer, the model can be tuned to minimize waiting and optimize overall performance within a well-defined experimental framework.
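The path of a single modality described above can be sketched as follows. This is a minimal illustration, not the framework's implementation: the function name `simulate_path`, the distribution parameters, and the drop probability are all hypothetical, and queuing inside the path is ignored, so deliveries may arrive out of order.

```python
import random

def simulate_path(arrival_times, latency, jitter, drop_prob=0.0, seed=0):
    """Return (arrival_time, delivery_time) pairs for inputs that survive one path.

    latency and jitter are callables that take a random.Random instance and
    return a non-negative delay. All names and parameters are illustrative.
    """
    rng = random.Random(seed)
    delivered = []
    for t in arrival_times:
        if rng.random() < drop_prob:
            continue  # a dropped input never reaches the synchronization layer
        delay = latency(rng) + jitter(rng)
        delivered.append((t, t + delay))
    return delivered

# Hypothetical configuration: one input every four time units,
# uniform latency, exponential jitter, and a 10% drop probability.
arrivals = [4.0 * k for k in range(1000)]
out = simulate_path(
    arrivals,
    latency=lambda rng: rng.uniform(0.5, 1.5),
    jitter=lambda rng: rng.expovariate(2.0),
    drop_prob=0.1,
)
```

Passing the distributions as callables keeps the path independent of any particular latency model, so a deterministic delay or a different distribution can be swapped in without changing the loop.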

Background information on the underlying formulations is provided in the next section. Section 3 then explains in detail the methodology for creating the modeling and simulation (M&S) framework in light of that background. The approach is demonstrated with example simulations and experiments in Section 4, and the results are discussed in Section 5.

3. Methods

First, the model is specified at a high level using an activity flow diagram. Each modality is presented as an input parameter. Upon arrival, different modalities follow parallel paths with varying latency and jitter specifications. Each path consists of atomic steps (actions), each with distinct timing and state characterizations. Each action may optionally have a queue to manage multiple arrivals and input pressure; the queue can also be used to define dropping semantics. Because the queue is optional, the flow may instead drop inputs that arrive while an action is in a processing state. After completing these steps, each input modality ultimately reaches the synchronization node. There, the underlying model allows different combining mechanisms based on timing, order, and type. For example, inputs received through different channels can be combined arbitrarily or according to a predefined mechanism. The model can also be extended to accommodate a live stream and time windows.

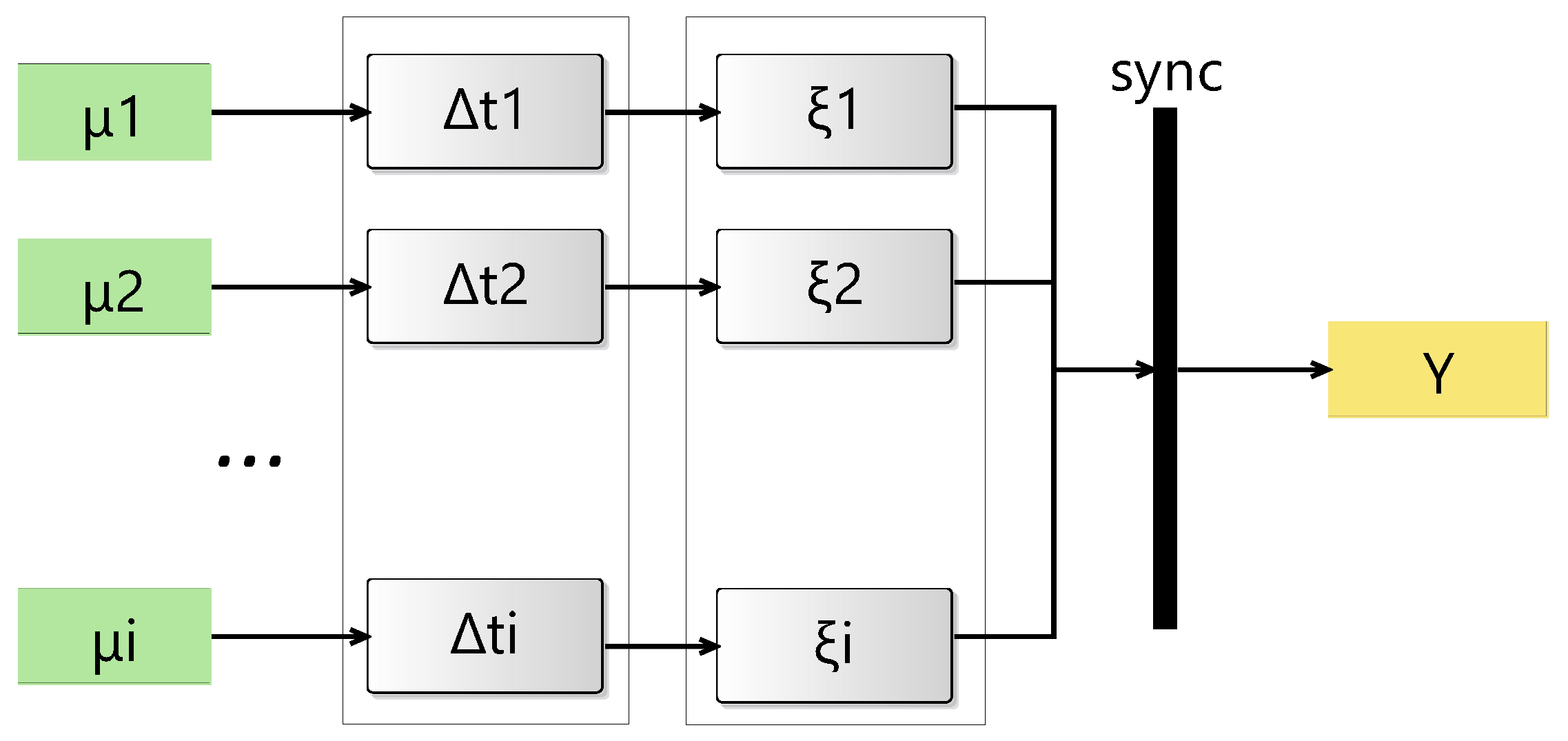

The underlying formulation specifies the overall structure of the system with models, layers, hierarchical levels, and couplings. It also specifies the state transition and time-advance functions for each atomic model. A generic overall structure of the model is depicted as an activity flow diagram in Figure 2. Horizontal layers highlight the stages through which the stream undergoes state changes, where hierarchical levels determine depth. Note that the diagram can be readily simulated by following the stages as described below.

The diagram is transformed into a fully specified coupled model. The coupled model consists of multiple atomic models. It is initially a one-level model but it will later be expanded to cover more detailed cases with a multi-level hierarchy and to examine its effect on the observed analytics. Each modality is represented in the coupled model as an external input to the root model. Each modality stream undergoes a distinct latency at the beginning, specified by an atomic model with a deterministic or probabilistic timing assignment and state changes. At the second stage of this layer, a new set of atomic models introduces timing variability into the stream by using various distributions within a modular DEVS Markov model. It can be a single atomic model but it can also consist of multiple models in various structural and hierarchical arrangements.
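To make the role of the state transition and time-advance functions concrete, the following is a minimal sketch of a latency stage written in the general style of a DEVS atomic model. It is an illustration under stated assumptions rather than the paper's model: the class `DelayAtomic`, the driver `run`, and the no-queue dropping rule are hypothetical choices, and the delay is deterministic for readability.

```python
import math

class DelayAtomic:
    """Holds one input for a sampled delay, then emits it (illustrative only)."""

    def __init__(self, sample_delay):
        self.sample_delay = sample_delay  # callable returning a delay >= 0
        self.phase = "idle"
        self.job = None
        self.sigma = math.inf             # time left until the next internal event

    def time_advance(self):
        return self.sigma

    def external_transition(self, elapsed, job):
        if self.phase == "idle":
            self.phase, self.job = "busy", job
            self.sigma = self.sample_delay()
        else:
            # No queue in this sketch: an input arriving while busy is dropped,
            # and the remaining processing time is reduced by the elapsed time.
            self.sigma -= elapsed

    def output(self):
        return self.job

    def internal_transition(self):
        self.phase, self.job, self.sigma = "idle", None, math.inf


def run(model, arrivals):
    """Drive one atomic model with a sorted list of (time, job) arrivals."""
    outputs, last, i = [], 0.0, 0
    while True:
        t_int = last + model.time_advance()
        t_ext = arrivals[i][0] if i < len(arrivals) else math.inf
        if t_int == math.inf and t_ext == math.inf:
            return outputs
        if t_int <= t_ext:
            outputs.append((t_int, model.output()))  # output precedes the transition
            model.internal_transition()
            last = t_int
        else:
            model.external_transition(t_ext - last, arrivals[i][1])
            last = t_ext
            i += 1


outputs = run(DelayAtomic(lambda: 2.0), [(0.0, "a"), (1.0, "b"), (5.0, "c")])
print(outputs)  # [(2.0, 'a'), (7.0, 'c')]
```

Input "b" arrives while the model is busy and is dropped, which corresponds to the queue-less dropping option described for the activity flow; adding a queue would change only `external_transition` and `internal_transition`.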

4. Experiments

An exemplary model illustrates the key aspects of the framework.

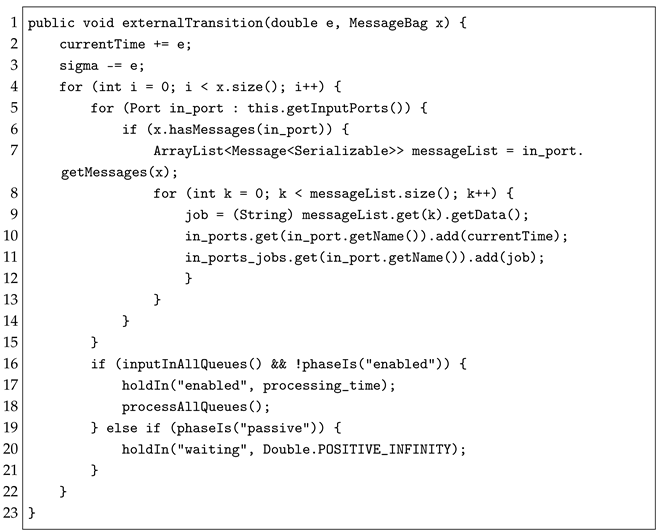

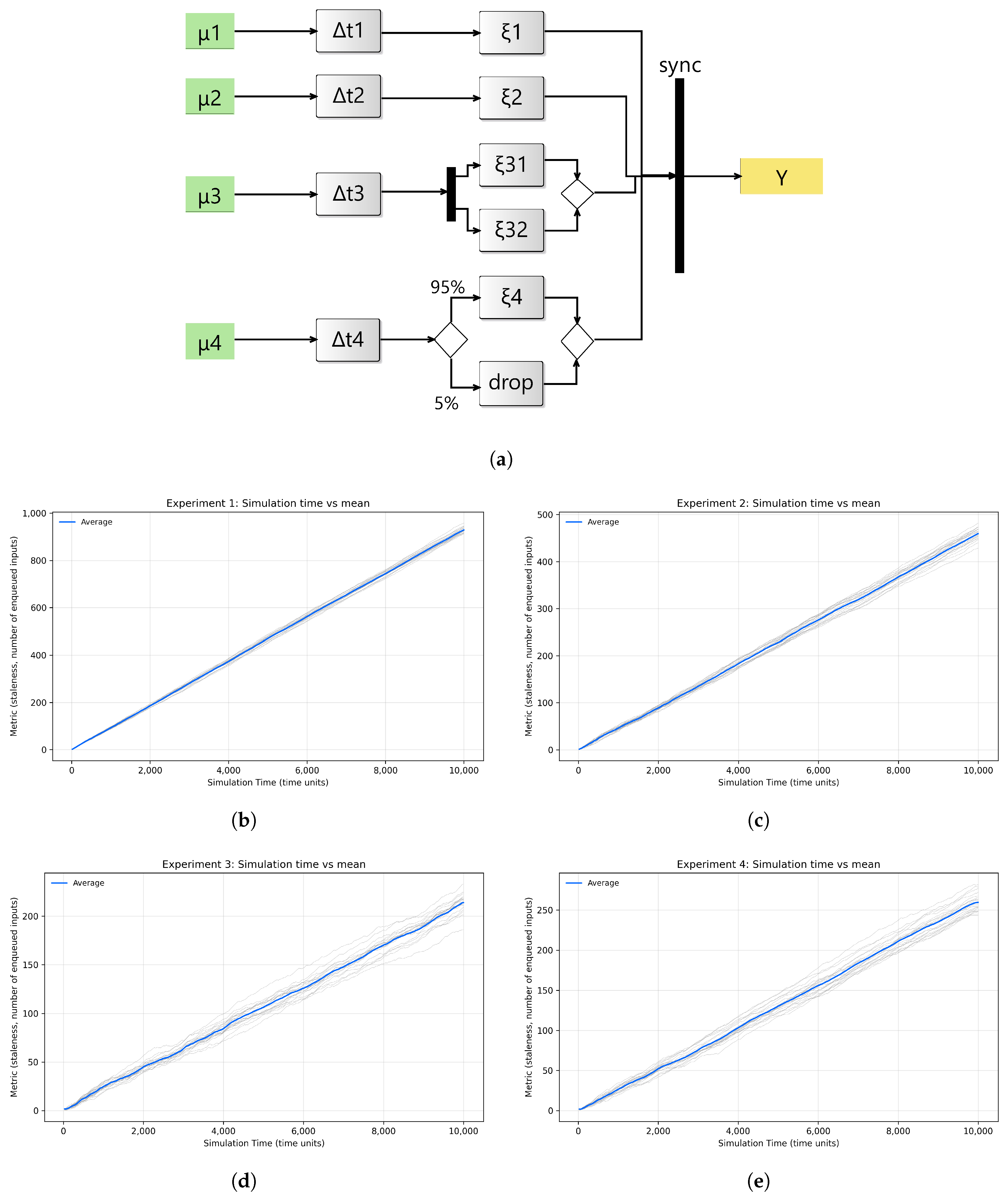

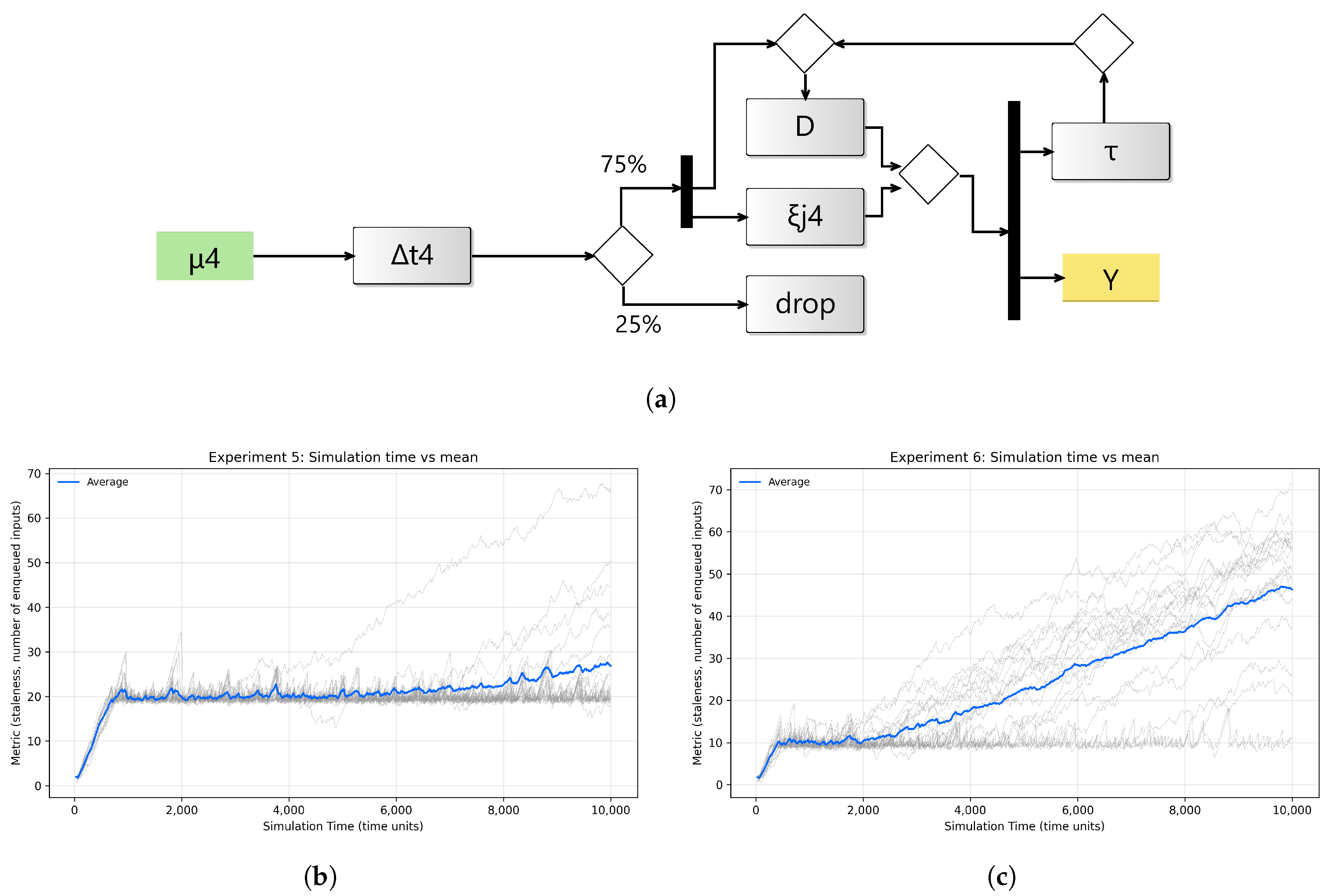

Figure 3 shows the devised activity along with some sample simulation results. In this experiment, staleness is observed at the synchronizer via average queue size. The larger the average size, the greater the staleness. Since each stream feeds into the synchronization layer via a channel queue, the average queue size across the four incoming flows indicates the waiting time for inputs arriving through each flow. The focus on staleness and average queue size stems from their relevance to synchronization and input freshness in this context. While throughput can be measured, the model actively synthesizes and reconstructs missing or delayed inputs rather than processing a complete flow. The relationship between staleness and throughput is generally asymmetric. In conventional queuing systems, larger queues increase waiting times and reduce throughput. In the proposed model, as queues grow, the diffusion process synthesizes the missing inputs, thereby maintaining effective throughput despite delays. The resulting trade-off is that lower staleness is achieved at the potential cost of reduced stream quality. A simple baseline of the experiment corresponds to conservative interarrival rates, where all modalities exhibit varying timing behavior and no synthesis, under which the model self-manages staleness with a simple synchronization control.
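As an illustration of how an average queue size can serve as a staleness indicator, the sketch below computes a time-weighted average from a log of enqueue and dequeue events. The averaging convention and the function name `time_average_queue_size` are assumptions made for this example; the reported experiments may average differently.

```python
def time_average_queue_size(events, horizon):
    """Time-weighted mean queue length over [0, horizon].

    events: iterable of (time, delta), with delta = +1 for an enqueue
    and -1 for a dequeue. The queue is assumed empty at time 0.
    """
    area, size, last = 0.0, 0, 0.0
    for t, delta in sorted(events):
        if t > horizon:
            break
        area += size * (t - last)  # queue held `size` items since the last event
        size += delta
        last = t
    area += size * (horizon - last)  # tail segment up to the horizon
    return area / horizon


# Two inputs wait in one synchronizer queue during a run of 10 time units.
log = [(0, +1), (2, +1), (3, -1), (6, -1)]
print(time_average_queue_size(log, 10))  # 0.7
```

Averaging this quantity over the incoming channel queues gives a single figure that grows when inputs wait longer for slower streams.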

Table 1 shows the simulation results for timestamp matching with different interarrival rates without dropping.

The activity in Figure 3a illustrates four modalities, each undergoing a latency stage followed by stochastic jitter before entering the synchronizer. The first modality encounters a delay specified by a uniform distribution, representing a lightweight latency. The second modality encounters a delay drawn from its own specified distribution. The third modality encounters a delay specified by an exponential distribution, followed by two parallel jitter models whose outputs are merged afterward. The fourth modality goes through a delay specified by a Gaussian distribution, representing higher latency. After this step, the flow undergoes probabilistic branching: the input is forwarded to the jitter model with a given probability and is otherwise dropped.

All jitter outputs are routed into the synchronizer block, which produces the combined output stream Y. The time-advance function of every jitter model is specified by an exponential distribution.
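One way to picture the synchronizer block is as a set of per-timestamp slots that release a combined output once every channel has contributed. The sketch below assumes a strict timestamp-matching rule; the class `TimestampSynchronizer` and the channel names are hypothetical and are not taken from the paper's implementation.

```python
from collections import defaultdict

class TimestampSynchronizer:
    """Combine inputs that share a timestamp across all channels (illustrative)."""

    def __init__(self, channels):
        self.channels = tuple(channels)
        self.pending = defaultdict(dict)  # timestamp -> {channel: value}

    def push(self, channel, timestamp, value):
        """Store one input; return the combined tuple when the slot is complete."""
        if channel not in self.channels:
            raise ValueError(f"unknown channel: {channel}")
        slot = self.pending[timestamp]
        slot[channel] = value
        if len(slot) == len(self.channels):
            del self.pending[timestamp]
            return tuple(slot[c] for c in self.channels)
        return None

    def waiting(self):
        """Number of inputs currently held while their partners are missing."""
        return sum(len(slot) for slot in self.pending.values())


sync = TimestampSynchronizer(["m1", "m2"])
sync.push("m1", 0, "a")
sync.push("m1", 1, "b")
combined = sync.push("m2", 0, "x")
print(combined, sync.waiting())  # ('a', 'x') 1
```

In this sketch, an input whose partner is dropped upstream waits indefinitely, which is the kind of buildup that a synthesis step or a timeout policy would have to resolve.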

The delay distributions are arbitrarily selected to mimic diversity in latency behaviors observed in multimodal streams. The uniform distributions represent a lightweight delay with near-constant time. The exponential delay reflects a memoryless arrival typical of asynchronous event-driven streams, while the Gaussian delay introduces a high-latency, tightly clustered modality analogous to slow, periodic sensors. The probabilistic drop and the additional exponential jitter further add sporadic interruptions and noise. Additionally, a sensitivity analysis was conducted in which the distributions’ parameters were perturbed to different values. Lower staleness was observed with consistent modalities, and similar staleness was observed when the diffusion model was introduced across all modalities, confirming that the synchronization behavior is qualitatively invariant to moderate variations in the underlying latency distributions. This indicates that the synchronizer primarily adapts to the temporal order and frequency of missing data rather than to the exact form of the delay distribution.

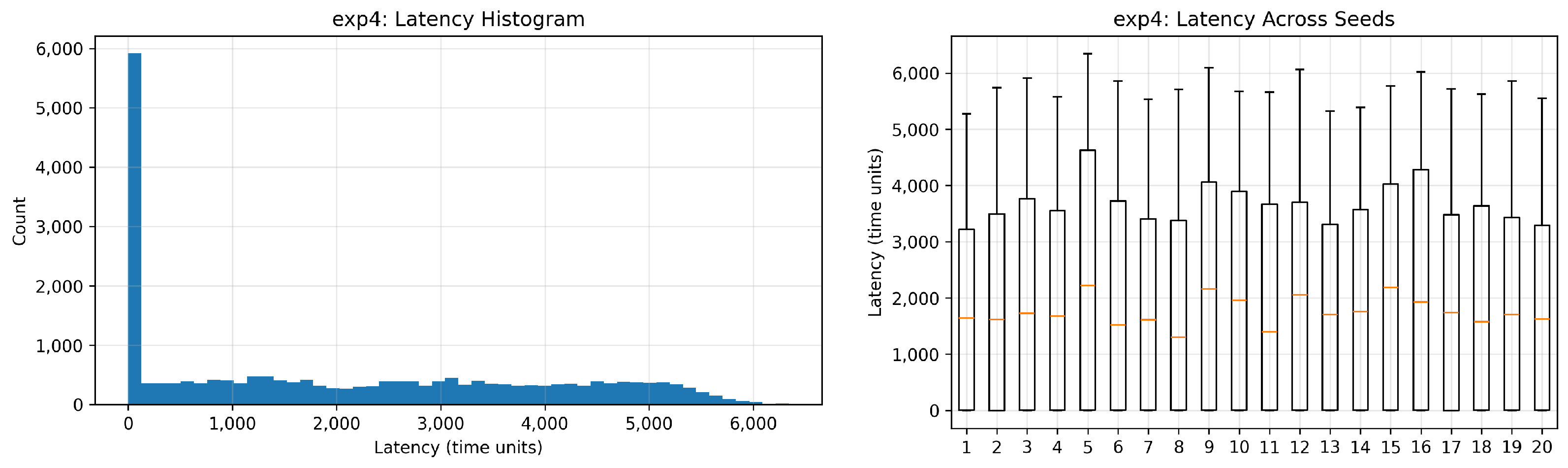

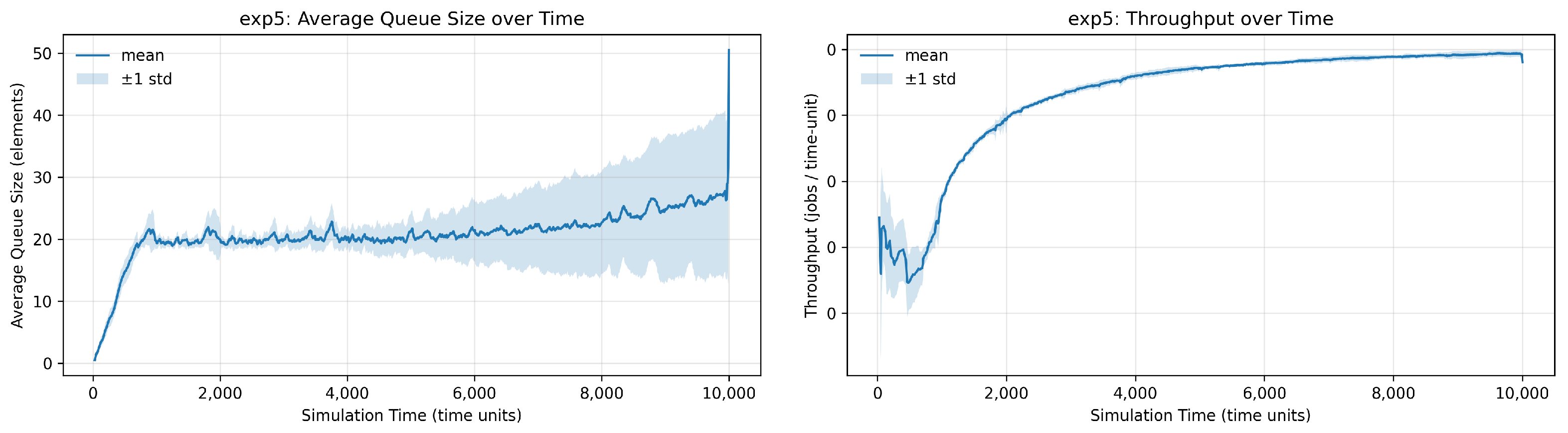

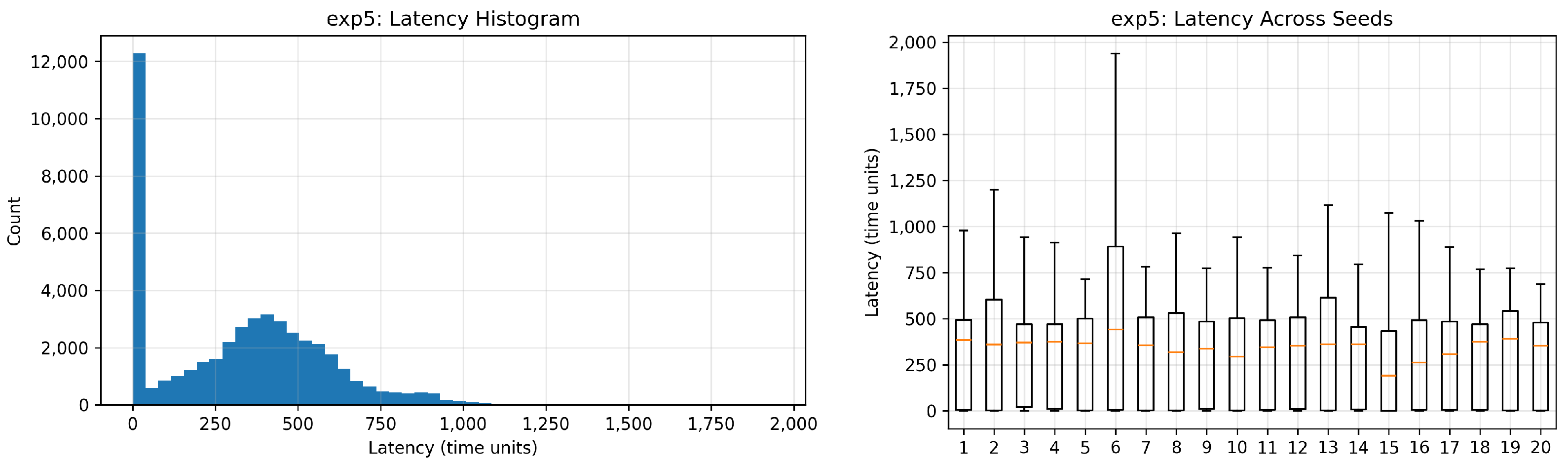

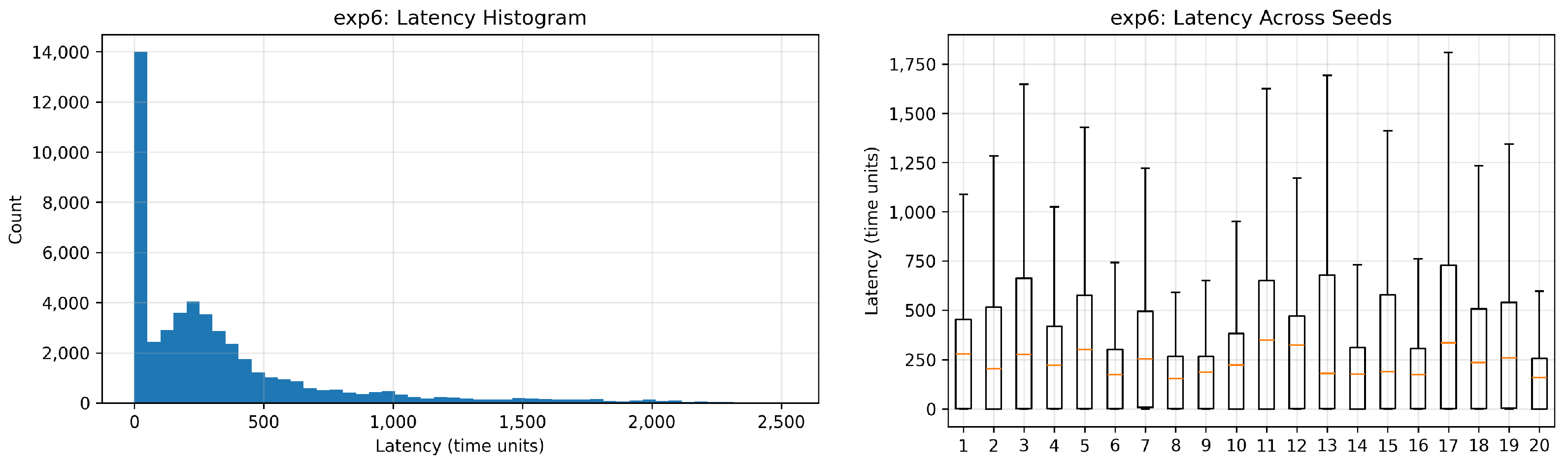

Four experimental setups are devised to evaluate the effect of interarrival rate and dropping probability on the average waiting time within the synchronizer queues, thereby quantifying staleness by averaging through queue sizes. Each experiment is run over 10,000 time units. In the first setup, a new input is generated at every time unit and fed consecutively into one of the streams. In other words, an input is generated for each stream every four time units (

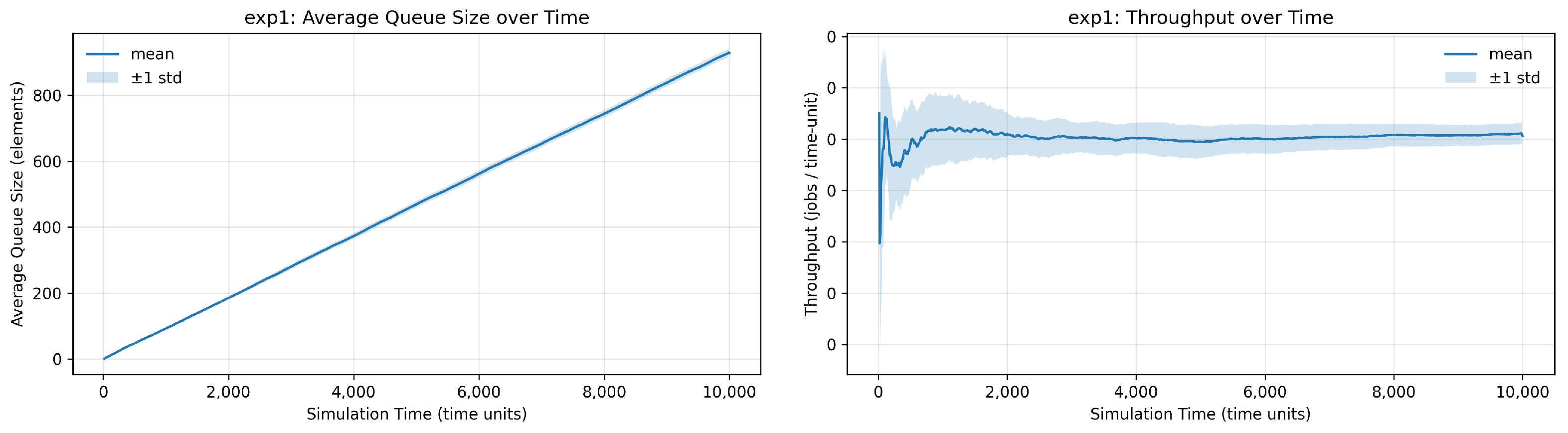

i). This produces the largest buildup of inputs in the synchronizer queues, since arrivals are frequent and often wait for higher-latency streams. The result is shown in

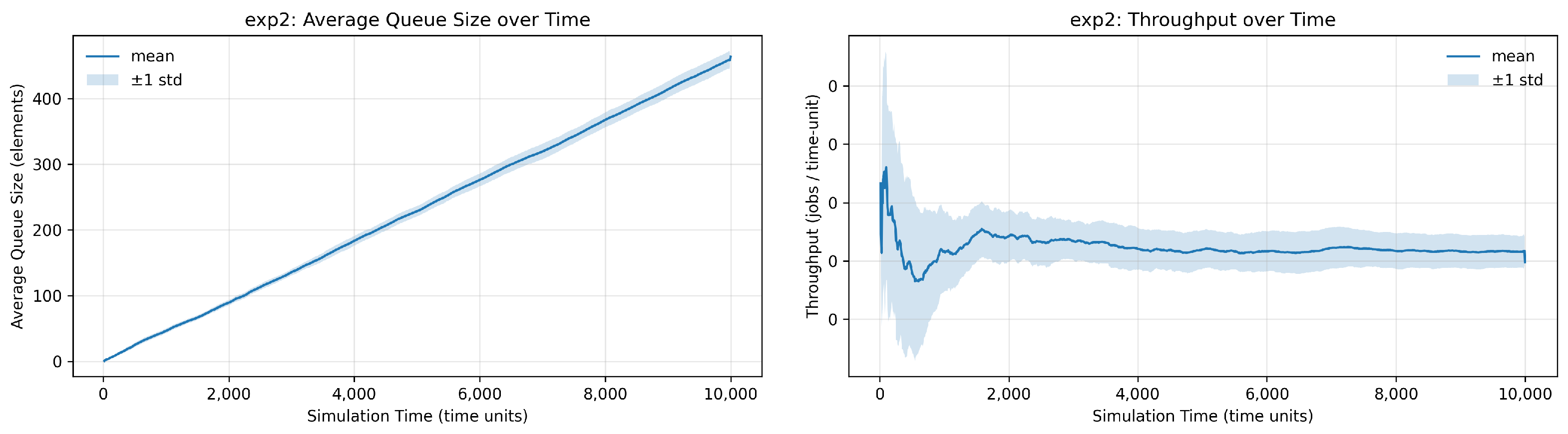

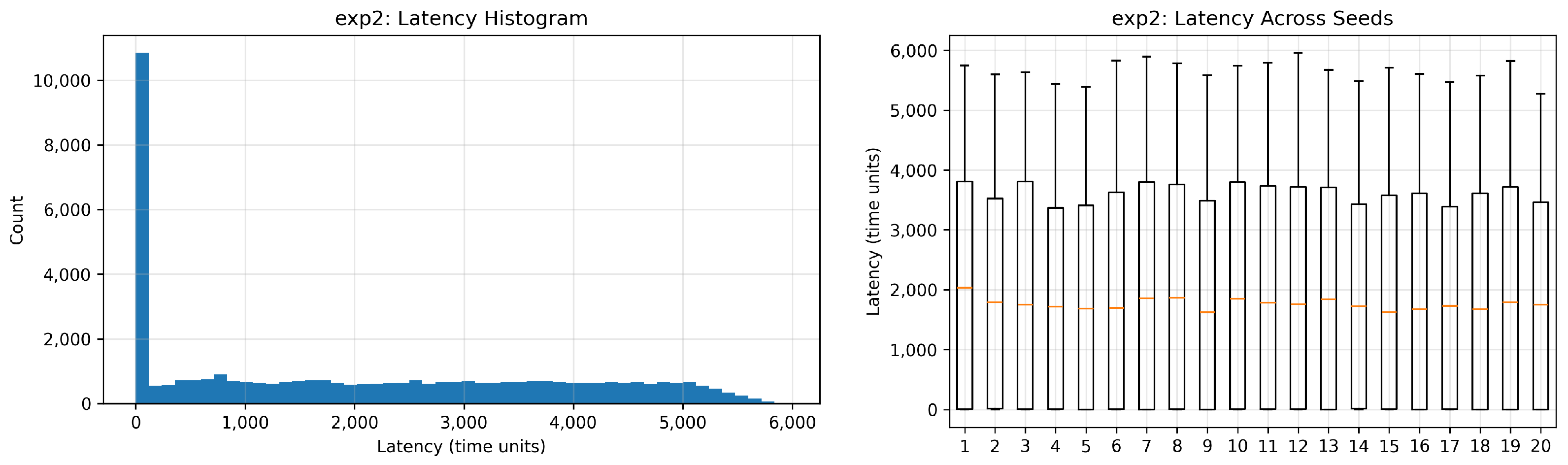

Figure 3b, where staleness increases steadily. In the second setup, a new input is generated every 3 time units. As expected, the staleness is significantly smaller in

Figure 3c. In the third setup, with inputs generated only every five time units, queue pressure is further reduced while showing some staleness fluctuations, highlighting a typical dissipation behavior in queuing systems (

Figure 3d).

In the fourth setup, the input rate is kept at every five time units, but the drop probability at the probabilistic branch of the Gaussian-delay modality is increased to 25%. The goal is to introduce additional pressure by increasing the drop rate and then observing staleness at the synchronizer. As expected, the results in Figure 3e show an increase in staleness due to the increased wait time of other, more frequent arrivals in faster streams.

Overall, the results of these four experiments validate the simulation in two respects. First, they confirm that higher input rates directly increase synchronizer queue occupancy. Second, they show that increasing the dropping probability results in more waiting time for the incoming flow from other streams. These results highlight the trade-off between aspects of throughput and input pressure in synchronizing temporal multimodal streams.

4.2. Empirical Evaluation on Multivariate Sensor Data

The empirical evaluation of synthesis is conducted using publicly available sensor data from Beach Weather Stations operated by the Chicago Park District [25]. The proposed diffusion synthesis is evaluated on multivariate, timestamped weather streams. Training is conducted at each location (single-station) and on the combined dataset. The combined dataset is constructed by concatenating all available station records and shuffling temporally. While evaluation is performed at each station, it is also performed at both stations to test the model’s robustness.

Nine channels form the feature set, in a fixed order. For readability, features are referred to by index, corresponding respectively to: Air Temperature, Wet Bulb Temperature, Humidity, Rain Intensity, Interval Rain, Wind Speed, Maximum Wind Speed, Barometric Pressure, Battery Life. All features are z-score normalized using training statistics. Reconstruction and metrics are reported in original units.
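The normalization step can be illustrated with a short sketch: statistics are fitted on training rows only, applied to any split, and inverted before metrics are computed in original units. The helper names and the two-feature sample rows are hypothetical, and missing values are assumed to have been handled beforehand.

```python
import statistics

def fit_zscore(train_rows):
    """Per-feature mean and population standard deviation from training rows."""
    columns = list(zip(*train_rows))
    means = [statistics.fmean(c) for c in columns]
    # Guard against constant features, whose standard deviation is zero.
    stds = [statistics.pstdev(c) or 1.0 for c in columns]
    return means, stds

def normalize(rows, means, stds):
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]

def denormalize(rows, means, stds):
    """Map normalized values back to original units before computing metrics."""
    return [[x * s + m for x, m, s in zip(row, means, stds)] for row in rows]

# Hypothetical rows with two features (for example, air temperature and humidity).
train = [[20.0, 50.0], [22.0, 70.0], [24.0, 60.0]]
means, stds = fit_zscore(train)
z = normalize(train, means, stds)
restored = denormalize(z, means, stds)
```

Reusing the training statistics at evaluation time avoids leaking information from the evaluation split into the model inputs.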

Training. The model is trained on a single station (Oak Street), comprising timestamped rows. It is also trained at another station (Foster), comprising rows, as well as the combined dataset.

Evaluation (eight runs). Results are reported under the different training conditions and two inference regimes (non-causal/causal), and on two stations:

Note that “Wet Bulb Temperature” and “Rain Intensity” are not available at station 2. In this procedure, these are treated as missing throughout the evaluation. Consequently, metrics for those features are undefined and appear as in the tables (no valid ground-truth positions contribute to the average).

During evaluation, dropping is simulated with a binary drop mask applied to available values. Metrics are computed exclusively over positions that (i) have ground-truth and (ii) were dropped by the simulation.

Overall, MAE/MSE and MAE-by-feature are all reported. Non-causal inference uses the full context, while causal inference reconstructs sequentially using only past inputs.
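The evaluation rule, in which errors are averaged only over positions that have ground truth and were dropped by the simulation, can be sketched as follows. This is an illustrative reading of the procedure, not the evaluation code: the function names are hypothetical, `None` stands for an unavailable value, and a feature with no qualifying positions yields an undefined (NaN) metric.

```python
import random

def bernoulli_drop_mask(values, p, seed=0):
    """Mark each available value as dropped with probability p."""
    rng = random.Random(seed)
    return [v is not None and rng.random() < p for v in values]

def masked_errors(truth, reconstructed, dropped):
    """MAE and MSE over positions that were dropped and have ground truth."""
    pairs = [
        (t, r)
        for t, r, d in zip(truth, reconstructed, dropped)
        if d and t is not None
    ]
    if not pairs:
        return float("nan"), float("nan")  # no valid positions contribute
    mae = sum(abs(t - r) for t, r in pairs) / len(pairs)
    mse = sum((t - r) ** 2 for t, r in pairs) / len(pairs)
    return mae, mse

truth = [1.0, 2.0, None, 4.0]
reconstructed = [1.5, 2.0, 3.0, 3.0]
dropped = [True, False, True, True]
print(masked_errors(truth, reconstructed, dropped))  # (0.75, 0.625)
```

Only the first and last positions contribute here: the second was not dropped, and the third has no ground truth.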

Table 2 summarizes overall MAE/MSE across the four runs where the training is performed per station. Table 3 reports the same results when the training is performed on the combined dataset. Partial input dropping is emulated by randomly masking approximately 25% of the values using a Bernoulli process (bernoulli_p = 0.25). Table 4 reports the MAE per feature with the training conducted per station. Table 5 reports the same results for evaluation while conducting the training on the combined datasets. Table 6 shows the results with fully synthetic inputs drawn from the training on the combined dataset. The results highlight the degradation of MAE for some features.

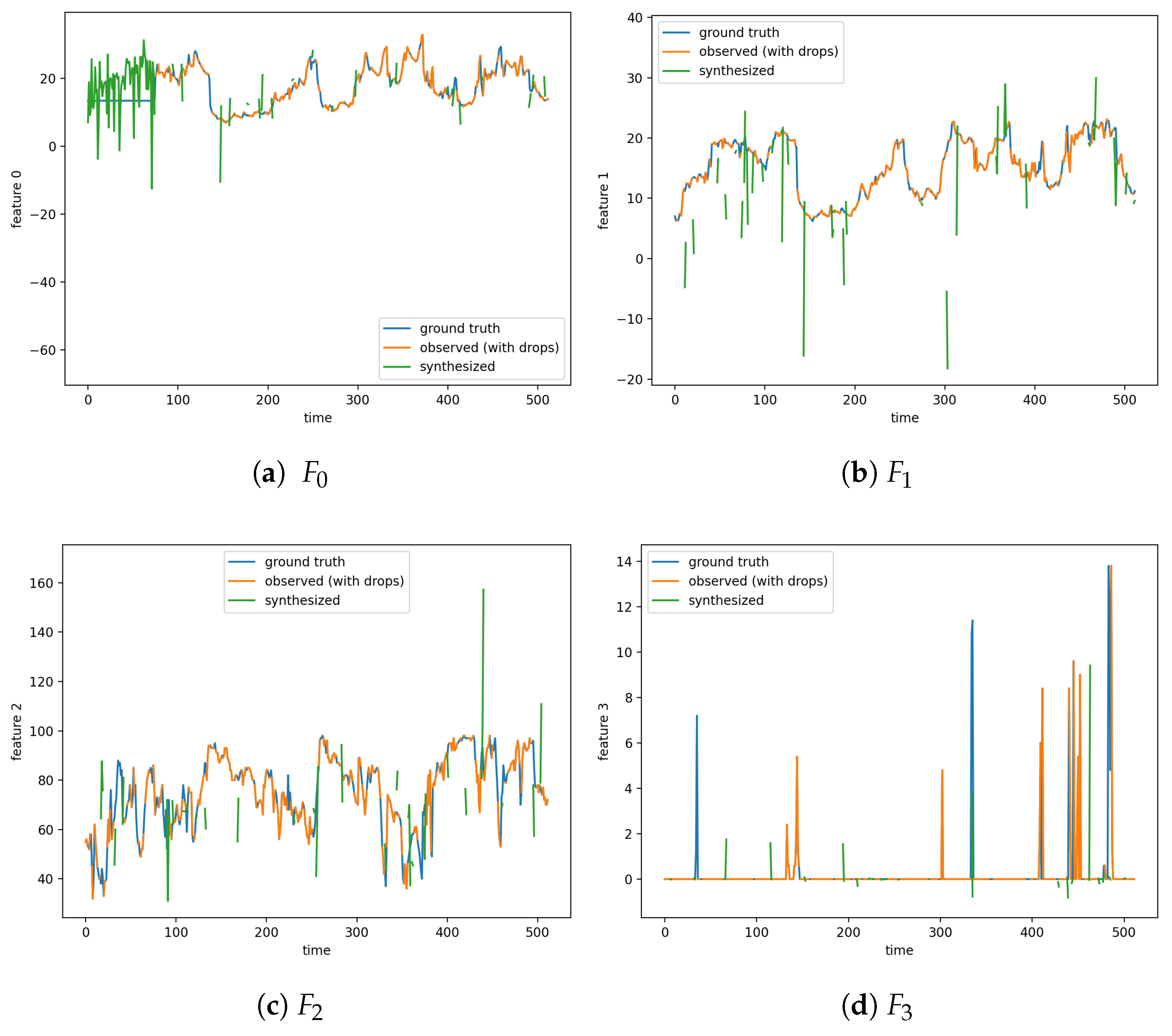

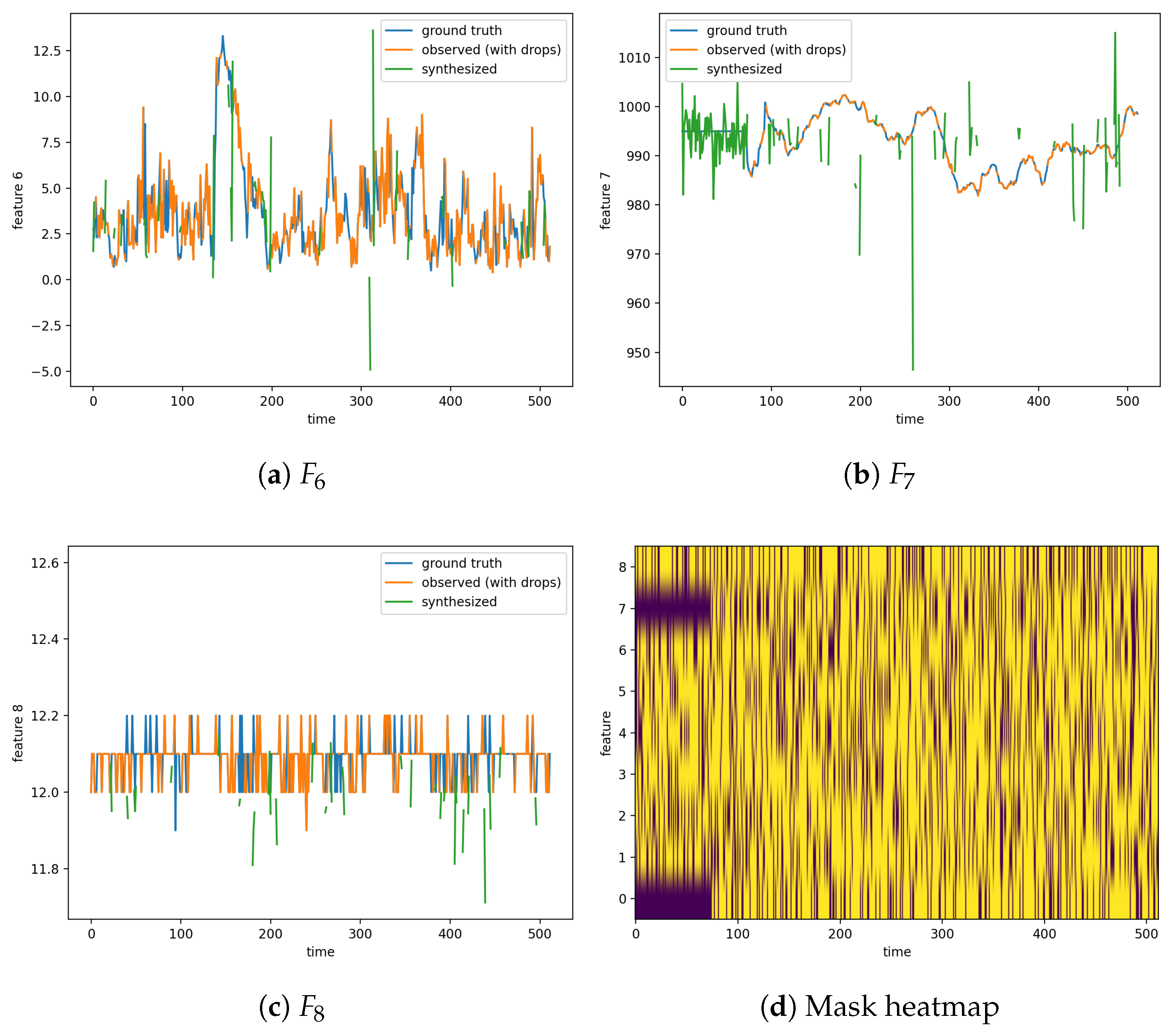

For each run and feature, ground-truth visualizations are provided, along with the observed values and the inputs synthesized where values are dropped during the simulation. Figure 5 and Figure 6 show the results of the simulation with station 1 while dropping 25% of the inputs. The results for the other 13 experiments are available in the code repository.

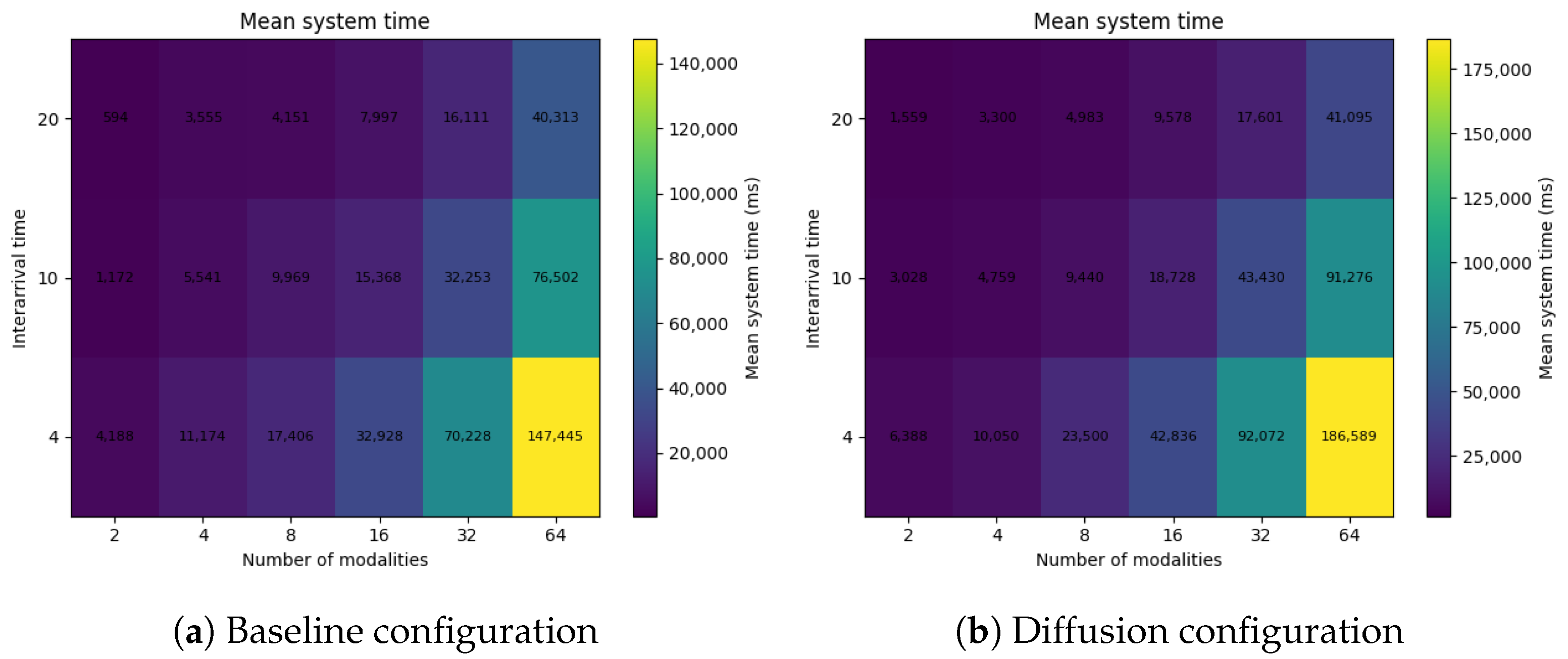

Computational Overheads

The simulations were run on a standard workstation equipped with an NVIDIA GeForce RTX 3070 GPU (Nvidia Corporation, Santa Clara, CA, USA) and an Intel Core i9-12900H CPU with 32 GB of RAM (Intel Corporation, Santa Clara, CA, USA). Each diffusion training run required around 5 s per epoch, and full convergence was typically reached within 10 epochs. In the DEVS-based simulation with four modalities, the overall runtime for 10,000 time units was about 4337–11,039 ms per seed, with both the viewer and logging disabled. These overheads remain manageable in modest cases and provide a basis for assessing the practical feasibility of integrating learnable imputation into a formal simulation framework, while acknowledging the computational trade-off.

Figure 7 compares the mean system time across configurations defined by interarrival interval and number of modalities, with the drop rate fixed at 25%. Each cell shows the average of 20 runs. The basic configuration (Figure 7a) demonstrates a gradual increase in processing time as the system load grows, whereas the diffusion configuration (Figure 7b) exhibits a steeper rise at higher modality counts.

The performance of the proposed framework is influenced by several external factors, including data characteristics and computational constraints. Variations in inter-arrival times and the simulated drop probability directly affect the quality of the stream and staleness behavior. Overfitting can occur when the model is trained with limited data. This can be mitigated within the modular framework and through accurate system decomposition. In the sensor data example, this can be achieved via cross-station evaluation with dedicated modules.