HyEWCos: A Comparative Study of Hybrid Embedding and Weighting Techniques for Text Similarity in Short Subjective Educational Text

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Preprocessing

- a. Case Folding.

- b. Punctuation Removal.

- c. Number Removal.

- d. Tokenization.

- e. Stop Word Removal.

- f. Lemmatization.
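
The six preprocessing steps listed above can be illustrated with a minimal Python sketch that uses only the standard library. This section does not say which tools were used, so several parts are assumptions:

- The stop-word set and the lemma dictionary are hypothetical placeholders. A real Indonesian pipeline would use a full stop-word list and a proper lemmatizer.
- Tokenization is plain whitespace splitting.

```python
import re
import string

# Hypothetical, deliberately tiny Indonesian stop-word list (illustration only).
STOP_WORDS = {"yang", "dengan", "dan", "di", "ke", "dari", "tentang", "ada", "telah"}

# Hypothetical lemma dictionary standing in for a real Indonesian lemmatizer.
LEMMAS = {"mengungkapkan": "ungkap"}

# Translation table that deletes every ASCII punctuation character.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def preprocess(text: str) -> list[str]:
    """Apply steps a-f (case folding through lemmatization) to one short answer."""
    text = text.lower()                                  # a. case folding
    text = text.translate(_PUNCT_TABLE)                  # b. punctuation removal
    text = re.sub(r"\d+", " ", text)                     # c. number removal
    tokens = text.split()                                # d. whitespace tokenization
    tokens = [t for t in tokens if t not in STOP_WORDS]  # e. stop-word removal
    return [LEMMAS.get(t, t) for t in tokens]            # f. dictionary lemmatization (unknown words pass through)


print(preprocess("UPPS telah mengungkapkan isu yang terkait dengan kondisi lingkungan."))
```

The steps run in the order of the list, so punctuation is removed before numbers. A hyphenated identifier such as "D20-2" therefore becomes "d202" before its digits are stripped.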

3.2. Corpus Construction

3.3. Data Transformation

3.4. Building an Embedding and Word Weighting Model

- a. Cosine Similarity

- b. Proposed Method
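
The HyEWCos abbreviation names three parts: an embedding, a word-weighting scheme, and cosine similarity. A minimal sketch combines them as weighted mean pooling of word vectors followed by cosine similarity. Note the following assumptions:

- Mean pooling is chosen for illustration only. This section does not state how word vectors are combined into a sentence vector.
- The two-dimensional vectors and the weights are hypothetical.
- The sketch returns a raw cosine value in [-1, 1]. How that value maps onto the similarity scores in the tables (roughly 1 to 4) is not described here.

```python
import math


def sentence_vector(tokens, embeddings, weights=None):
    """Weighted mean of the word vectors of `tokens`.

    weights=None treats every known token with weight 1 (no weighting).
    Tokens missing from `embeddings` are skipped; tokens missing from `weights` get 0.
    """
    dim = len(next(iter(embeddings.values())))
    total = [0.0] * dim
    weight_sum = 0.0
    for tok in tokens:
        vec = embeddings.get(tok)
        if vec is None:
            continue  # out-of-vocabulary token
        w = 1.0 if weights is None else weights.get(tok, 0.0)
        for i, x in enumerate(vec):
            total[i] += w * x
        weight_sum += w
    return [x / weight_sum for x in total] if weight_sum > 0 else total


def cosine_similarity(u, v):
    """cos(u, v) = (u . v) / (|u| |v|); returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


# Toy 2-d vectors (hypothetical); real W2V, FastText, BERT, or GPT vectors are far longer.
EMB = {"kebijakan": [1.0, 0.0], "pendidikan": [0.0, 1.0], "sk": [1.0, 1.0]}
a = sentence_vector(["kebijakan", "pendidikan"], EMB)  # no weighting
b = sentence_vector(["sk"], EMB)
a_w = sentence_vector(["kebijakan", "pendidikan"], EMB, {"kebijakan": 3.0, "pendidikan": 1.0})
print(round(cosine_similarity(a, b), 4))    # 1.0
print(round(cosine_similarity(a_w, b), 4))  # 0.8944
```

Passing `weights=None` corresponds to the "N" (no weighting) setting. Giving "kebijakan" three times the weight of "pendidikan" pulls the sentence vector away from that of "sk", and the similarity drops from 1.0 to about 0.894.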

3.5. Model Evaluation

3.6. Evaluation Result Analysis

4. Results

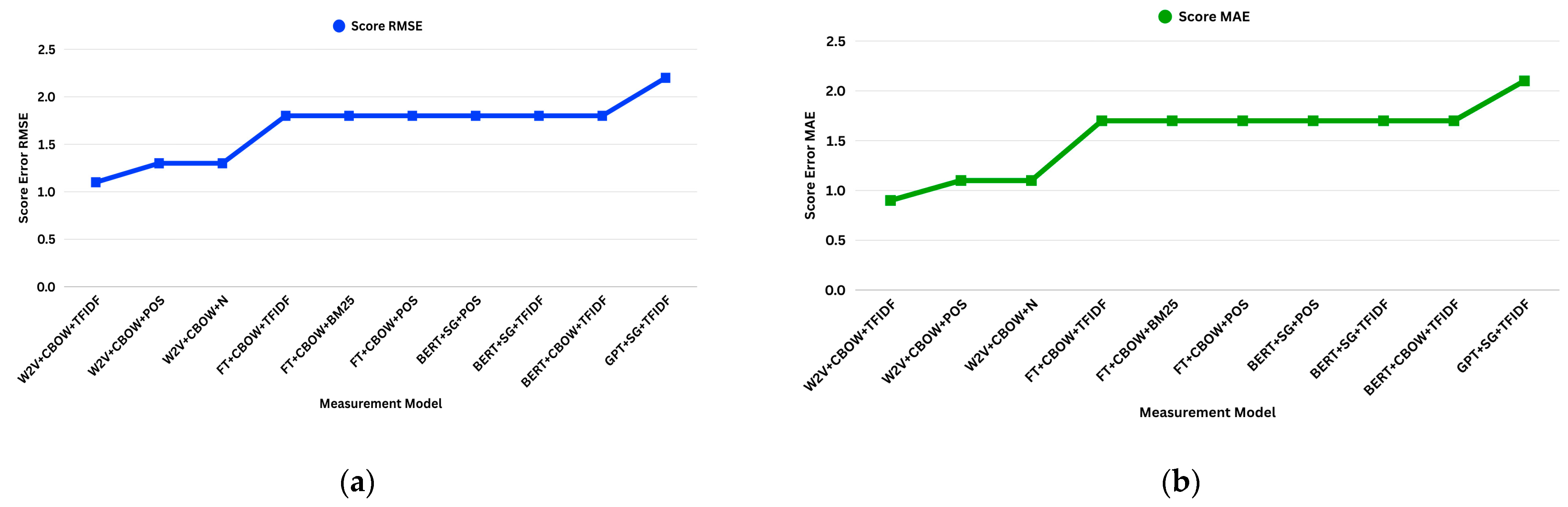

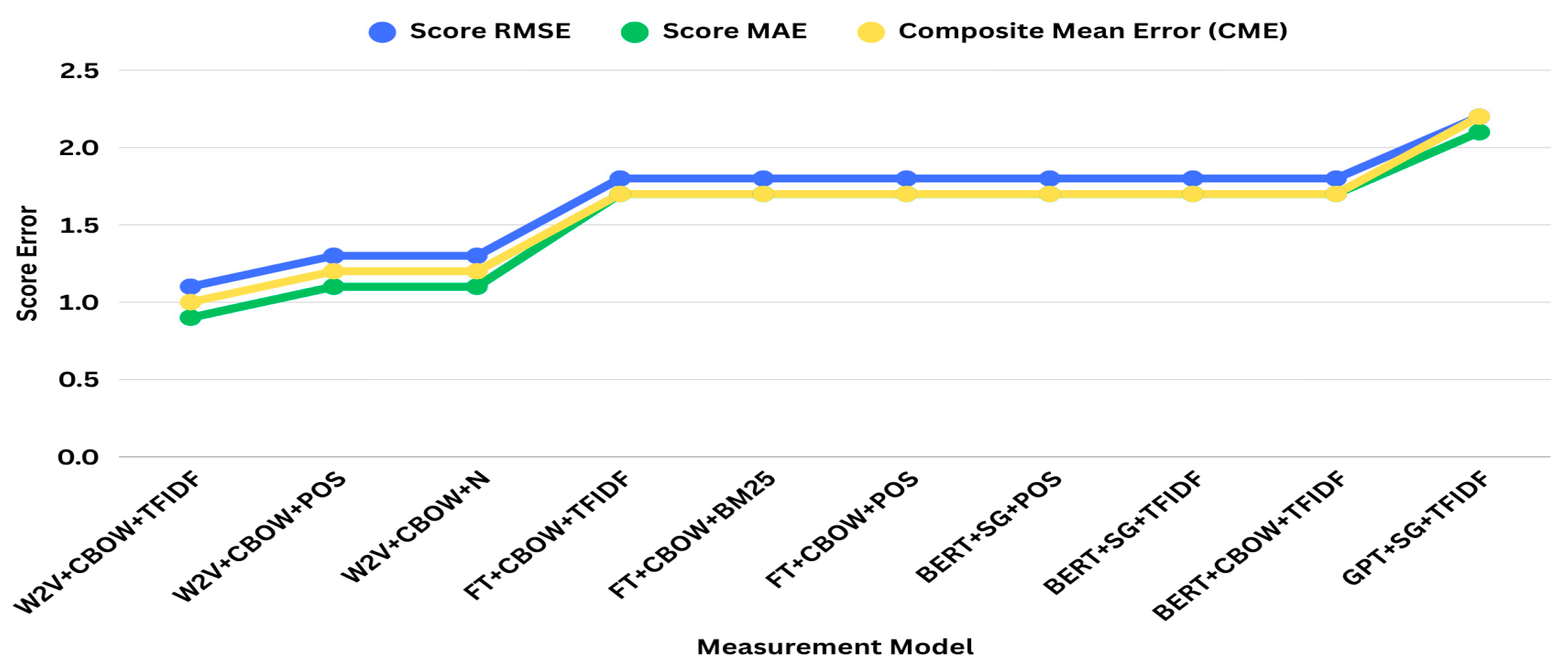

4.1. Model Performance and Consistency Evaluation Based

4.2. Evaluation Based on Combined Correlation and Standard Deviation Scores

4.3. Evaluation of Models Using RMSE, MAE, and Correlation Metrics

4.4. Comparison of Assessment Text Similarity Scores

4.5. Evaluation Results of Method Combinations in Text Similarity Measurement

5. Discussion

5.1. Proposed Model Performance in Text Similarity Measurement

5.2. Analysis of Hybrid Embedding and Weighting Model Performance

5.3. Correlation Relationship with Error Values (RMSE and MAE)

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| HyEWCos | Hybrid Embedding with Weighting and Cosine Similarity |

| W2V | Word2vec |

| FT | FastText |

| BERT | Bidirectional Encoder Representations from Transformers |

| GPT | Generative Pre-Trained Transformer |

| TFIDF-w | Term Frequency-Inverse Document Frequency-Weighting |

| POS-w | Part-of-Speech—Weighting |

| N-w | No Weighting |

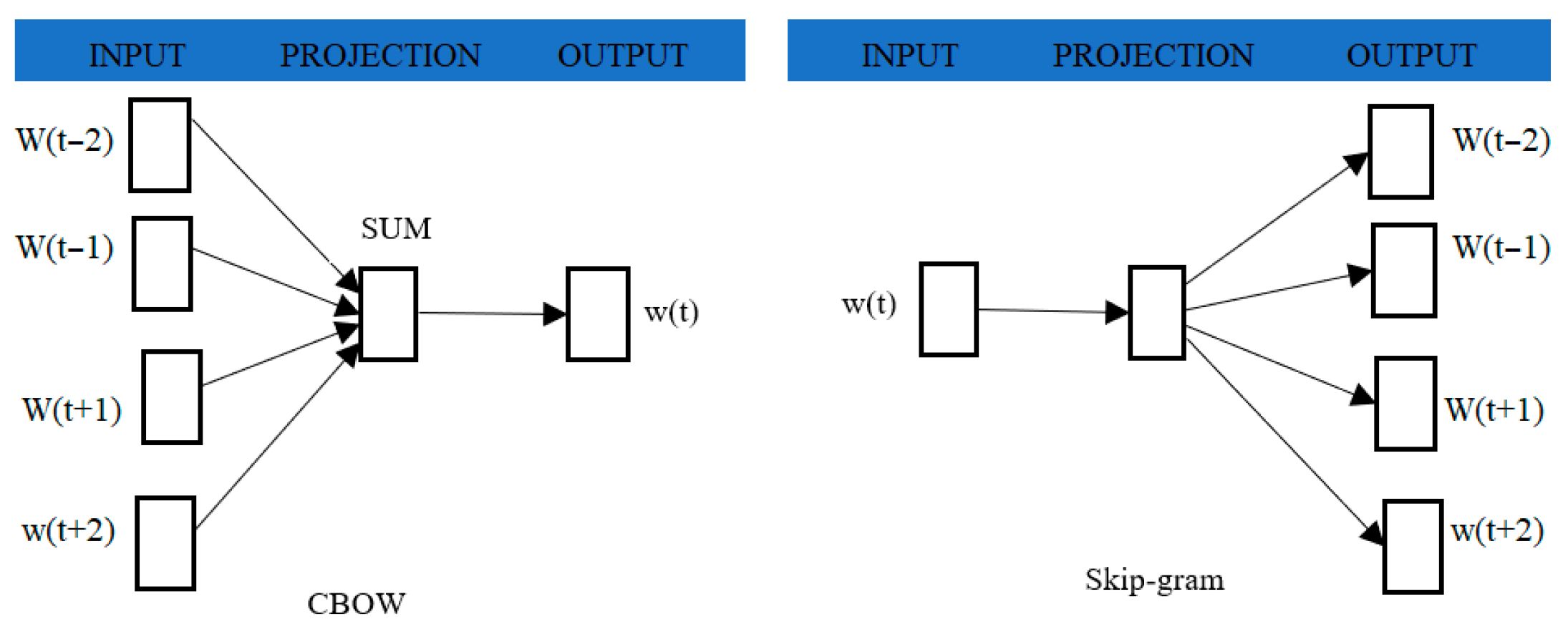

| CBOW | Continuous Bag of Words |

| SG | Skip-Gram |

| RMSE | Root Mean Square Error |

| MAE | Mean Absolute Error |

| STD | Standard Deviation |

| P1 | Text Pair D1 with Text D2 |

| P2 | Text Pair D1 with Text D3 |

| P3 | Text Pair D1 with Text D4 |

| ST | Text Similarity Score |

| ED | Embedding |

| WT | Weighting |

| TA_STD | Total Average Standard Deviation |

| TA_SM | Total Average Similarity |


| Embedding | TFIDF-Weighting | BM25-Weighting | POS-Weighting | N-Weighting |
|---|---|---|---|---|
| W2V + CBOW | W2V + CBOW + TFIDF | W2V + CBOW + BM25 | W2V + CBOW + POS | W2V + CBOW + N |
| W2V + Skip-gram | W2V + SG + TFIDF | W2V + SG + BM25 | W2V + SG + POS | W2V + SG + N |
| FastText + CBOW | FT + CBOW + TFIDF | FT + CBOW + BM25 | FT + CBOW + POS | FT + CBOW + N |
| FastText + Skip-gram | FT + SG + TFIDF | FT + SG + BM25 | FT + SG + POS | FT + SG + N |
| BERT + CBOW | BERT + CBOW + TFIDF | BERT + CBOW + BM25 | BERT + CBOW + POS | BERT + CBOW + N |
| BERT + Skip-gram | BERT + SG + TFIDF | BERT + SG + BM25 | BERT + SG + POS | BERT + SG + N |
| GPT + CBOW | GPT + CBOW + TFIDF | GPT + CBOW + BM25 | GPT + CBOW + POS | GPT + CBOW + N |
| GPT + Skip-gram | GPT + SG + TFIDF | GPT + SG + BM25 | GPT + SG + POS | GPT + SG + N |
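
The table above crosses each embedding configuration with four weighting options: TF-IDF, BM25, POS, and no weighting (N). The exact weighting formulas are not given in this section, so the sketch below uses common textbook variants as assumptions:

- TF-IDF uses a smoothed IDF of the form ln((1 + N) / (1 + df)) + 1.
- BM25 is Okapi BM25 with k1 = 1.2 and b = 0.75.
- The POS weight table is purely hypothetical.

Each function returns a token-to-weight dictionary that a weighted pooling step could consume.

```python
import math
from collections import Counter


def tfidf_weights(doc, corpus):
    """Term weight = (count / doc length) * (ln((1 + N) / (1 + df)) + 1)."""
    n_docs = len(corpus)
    counts = Counter(doc)
    weights = {}
    for term, count in counts.items():
        df = sum(1 for d in corpus if term in d)
        idf = math.log((1 + n_docs) / (1 + df)) + 1
        weights[term] = (count / len(doc)) * idf
    return weights


def bm25_weights(doc, corpus, k1=1.2, b=0.75):
    """Okapi BM25 term weights with the non-negative idf ln(1 + (N - df + 0.5) / (df + 0.5))."""
    n_docs = len(corpus)
    avgdl = sum(len(d) for d in corpus) / n_docs
    counts = Counter(doc)
    weights = {}
    for term, tf in counts.items():
        df = sum(1 for d in corpus if term in d)
        idf = math.log(1 + (n_docs - df + 0.5) / (df + 0.5))
        norm = tf + k1 * (1 - b + b * len(doc) / avgdl)  # document-length normalization
        weights[term] = idf * tf * (k1 + 1) / norm
    return weights


# Hypothetical POS weights: content words count more than function words.
POS_TABLE = {"NOUN": 1.0, "VERB": 0.8, "ADJ": 0.6}


def pos_weights(tagged_doc, table=POS_TABLE, default=0.2):
    """Map (token, tag) pairs to a weight; tags missing from `table` get `default`."""
    return {tok: table.get(tag, default) for tok, tag in tagged_doc}


corpus = [["kebijakan", "pendidikan"], ["kebijakan", "sk"], ["sk", "rektor"]]
print(tfidf_weights(corpus[0], corpus))
print(bm25_weights(corpus[0], corpus))
print(pos_weights([("kebijakan", "NOUN"), ("tentang", "ADP")]))
```

In both TF-IDF and BM25, "pendidikan" (found in one document) outweighs "kebijakan" (found in two). This is the rarity effect these schemes are designed to capture.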

| Models | Avg. ST P1 | Avg. ST P2 | Avg. ST P3 | Avg. STD P1 | Avg. STD P2 | Avg. STD P3 | TA_SM | TA_STD |
|---|---|---|---|---|---|---|---|---|
| W2V + SG + N | 3.079776 | 3.132397 | 3.038225 | 0.840000 | 0.780000 | 0.760000 | 3.083466 | 0.793333 |
| W2V + SG + POS | 3.049827 | 3.107077 | 3.047112 | 0.830000 | 0.770000 | 0.770000 | 3.068006 | 0.790000 |
| W2V + SG + TFIDF | 2.854184 | 2.866958 | 2.802054 | 0.830000 | 0.960000 | 0.830000 | 2.841065 | 0.873333 |
| FT + SG + N | 3.532000 | 3.485506 | 3.502811 | 0.590000 | 0.670000 | 0.620000 | 3.520506 | 0.626667 |
| FT + SG + POS | 3.529990 | 3.478788 | 3.449800 | 0.630000 | 0.720000 | 0.680000 | 3.486193 | 0.676667 |
| FT + SG + TFIDF | 3.297830 | 3.315046 | 3.293138 | 0.770000 | 0.900000 | 0.860000 | 3.302005 | 0.843333 |
| BERT + CBOW + TFIDF | 3.619748 | 3.591358 | 3.596959 | 0.396800 | 0.449300 | 0.846100 | 3.602688 | 0.564067 |
| BERT + SG + TFIDF | 3.495678 | 3.522178 | 3.524813 | 0.360000 | 0.300000 | 0.260000 | 3.514223 | 0.306667 |
| BERT + SG + N | 3.495734 | 3.522248 | 3.498465 | 0.360000 | 0.300000 | 0.260000 | 3.505482 | 0.306667 |
| GPT + CBOW + TFIDF | 3.986171 | 3.974885 | 3.983351 | 0.010000 | 0.020000 | 0.010000 | 3.981469 | 0.013333 |
| GPT + SG + TFIDF | 3.986703 | 3.973653 | 3.983351 | 0.010000 | 0.020000 | 0.010000 | 3.981236 | 0.013333 |
| GPT + CBOW + BM25 | 3.990000 | 3.970000 | 3.980000 | 0.010000 | 0.020000 | 0.010000 | 3.980000 | 0.013333 |
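
The abbreviations define TA_SM and TA_STD as the total average similarity and the total average standard deviation. For the rows checked, both equal the plain mean of the three per-pair columns (P1 to P3). The following sketch recomputes them from values copied out of the table above.

```python
from statistics import mean

# (ST for P1, P2, P3) and (STD for P1, P2, P3), copied from three rows of the table above.
ROWS = {
    "W2V + SG + N": ((3.079776, 3.132397, 3.038225), (0.84, 0.78, 0.76)),
    "FT + SG + POS": ((3.529990, 3.478788, 3.449800), (0.63, 0.72, 0.68)),
    "GPT + CBOW + TFIDF": ((3.986171, 3.974885, 3.983351), (0.01, 0.02, 0.01)),
}


def total_averages(st_scores, std_scores):
    """Return (TA_SM, TA_STD): plain means over text pairs P1-P3, rounded to 6 places."""
    return round(mean(st_scores), 6), round(mean(std_scores), 6)


for model, (st, std) in ROWS.items():
    ta_sm, ta_std = total_averages(st, std)
    print(f"{model}: TA_SM={ta_sm:.6f}  TA_STD={ta_std:.6f}")
```

For GPT + CBOW + TFIDF, the P1 value is taken as 3.986171, which is the value that reproduces the tabulated TA_SM of 3.981469. The same check does not hold for FT + SG + N: the mean of its three listed scores is 3.506772, not the tabulated 3.520506.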

| Embedding | Weighting | Vector Size | Average Pearson Correlation | Average Spearman Correlation | Average Standard Deviation | Final Score |
|---|---|---|---|---|---|---|
| W2V + SG | TFIDF | 128 | 0.594 | 0.540 | 0.847 | 0.484 |
| | POS | 128 | 0.469 | 0.586 | 0.873 | 0.447 |
| W2V + CBOW | BM25 | 128 | 0.510 | 0.527 | 0.933 | 0.428 |
| | POS | 150 | 0.375 | 0.468 | 1.947 | 0.148 |
| FT + SG | POS | 128 | 0.592 | 0.573 | 0.817 | 0.502 |
| | N | 128 | 0.365 | 0.607 | 0.843 | 0.420 |
| | BM25 | 128 | 0.749 | 0.622 | 0.903 | 0.568 |
| FT + CBOW | BM25 | 150 | 0.524 | 0.547 | 0.777 | 0.473 |
| | TFIDF | 150 | 0.334 | 0.597 | 0.677 | 0.437 |
| BERT + SG | POS | 128 | 0.397 | 0.466 | 0.303 | 0.478 |
| | TFIDF | 128 | 0.152 | 0.475 | 1.447 | 0.145 |
| BERT + CBOW | POS | 128 | 0.397 | 0.466 | 0.307 | 0.479 |
| | TFIDF | 150 | 0.397 | 0.346 | 0.077 | 0.524 |
| GPT + CBOW | TFIDF | 128 | 0.354 | 0.466 | 0.013 | 0.526 |
| | N | 128 | 0.354 | 0.474 | 0.013 | 0.529 |
| GPT + SG | TFIDF | 128 | 0.354 | 0.474 | 0.013 | 0.529 |
| | POS | 150 | 0.293 | 0.346 | 0.033 | 0.449 |
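
This section does not define the Final Score column. For most rows, the tabulated value is reproduced to within rounding by 0.4 × Pearson + 0.4 × Spearman + 0.2 × (1 - STD). This combination rewards higher correlations and penalizes larger standard deviations.

The weights below are therefore an inference from the tabulated numbers, not the authors' stated definition. They do not reproduce the BERT rows; for example, BERT + CBOW + TFIDF gives about 0.482 against the tabulated 0.524.

```python
# Weights inferred by matching the table above (an assumption, not a stated definition).
W_PEARSON = 0.4
W_SPEARMAN = 0.4
W_STABILITY = 0.2


def final_score(pearson, spearman, std):
    """Higher correlations raise the score; a larger standard deviation lowers it."""
    return W_PEARSON * pearson + W_SPEARMAN * spearman + W_STABILITY * (1.0 - std)


# (configuration, Pearson, Spearman, STD, tabulated Final Score)
CHECKS = [
    ("W2V + SG + TFIDF", 0.594, 0.540, 0.847, 0.484),
    ("W2V + CBOW + POS (150)", 0.375, 0.468, 1.947, 0.148),
    ("FT + SG + BM25", 0.749, 0.622, 0.903, 0.568),
    ("GPT + CBOW + TFIDF", 0.354, 0.466, 0.013, 0.526),
]

for name, p, s, sd, reported in CHECKS:
    print(f"{name}: computed={final_score(p, s, sd):.3f} reported={reported:.3f}")
```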

| Model | RMSE | MAE | Composite Mean Error (CME) | Pearson Corr. | Spearman Corr. |
|---|---|---|---|---|---|
| W2V + CBOW + TFIDF | 1.0827056 | 0.8909355 | 0.9868206 | 0.5244000 | 0.5430000 |
| FT + CBOW + TFIDF | 1.7811809 | 1.6800599 | 1.7306204 | 0.3344000 | 0.5972000 |
| FT + CBOW + BM25 | 1.7812067 | 1.6800867 | 1.7306467 | 0.5235000 | 0.5468000 |
| W2V + CBOW + POS | 1.2822023 | 1.0808654 | 1.1815338 | 0.3750000 | 0.4684000 |
| FT + CBOW + POS | 1.7812112 | 1.6800940 | 1.7306526 | 0.3329000 | 0.5886000 |
| W2V + CBOW + N | 1.3214898 | 1.1219350 | 1.2217124 | 0.4365000 | 0.7800000 |
| BERT + SG + TFIDF | 1.7812067 | 1.6800867 | 1.7306467 | 0.1516000 | 0.4750000 |
| BERT + SG + POS | 1.7811809 | 1.6800599 | 1.7306204 | 0.3968000 | 0.4662000 |
| BERT + SG + N | 2.1807533 | 2.1169872 | 2.1488703 | 0.4515000 | 0.3460000 |
| GPT + SG + TFIDF | 2.2071628 | 2.1427744 | 2.1749686 | 0.3544000 | 0.4662000 |
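
In the rows checked, the CME column equals the plain mean of RMSE and MAE. For W2V + CBOW + TFIDF, (1.0827056 + 0.8909355) / 2 ≈ 0.9868206. The sketch below writes out the standard definitions of the metrics in this table using only the standard library. It is an illustration, not the authors' implementation; `scipy.stats.pearsonr` and `scipy.stats.spearmanr` are library equivalents for the two correlations.

```python
import math


def rmse(pred, gold):
    """Root mean square error between model scores and expert scores."""
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gold)) / len(gold))


def mae(pred, gold):
    """Mean absolute error between model scores and expert scores."""
    return sum(abs(p - g) for p, g in zip(pred, gold)) / len(gold)


def composite_mean_error(rmse_value, mae_value):
    """CME as the plain mean of RMSE and MAE."""
    return (rmse_value + mae_value) / 2


def pearson(x, y):
    """Pearson product-moment correlation coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# CME check against the W2V + CBOW + TFIDF row of the table above.
print(composite_mean_error(1.0827056, 0.8909355))
```

Because RMSE squares each difference before averaging, it is never smaller than MAE. Averaging the two gives a single error figure that still reflects occasional large misses.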

| Text-1 | Text-2 | ED | ST Expert | ST TFIDF | ST POS | ST N |
|---|---|---|---|---|---|---|
| D1-1. aspek politik uts sendiri memiliki kedekatan yang cukup erat dengan dunia | D1-3. upps telah mengungkapkan isu yang terkait dengan kondisi lingkungan | W2V + SG | 3 | 2.9586 | 3.455 | 3.394 |
| D1-1. The political aspect of uta itself has a fairly close relationship with the world of | D1-3. The study program has expressed issues related to environmental conditions | | | | | |
| D9-1. Surat keputusan yayasan pendidikan anak kukang tentang statuta | D9-2. kebijakan terkait pengembangan kerjasama tidak ada penjelasan | FT + SG | 1 | 1.3602 | 1.318 | 1.366 |
| D9-1. The decree of the Anak Kukang Educational Foundation regarding the statute | D9-2. There is no explanation regarding policies related to partnership development | | | | | |
| D20-1. dokumen formal kebijakan standar pendidikan meliputi | D20-2. ada 15 sk tentang pelaksanaan pendidikan yang tidak dapat diases karena | BERT + CBOW | 2 | 3.1808 | 3.181 | 3.181 |
| D20-1. The formal document on education standard policy includes decrees | D20-2. There are 15 decrees on the implementation of education that cannot be assessed because | | | | | |
| D20-1. dokumen formal kebijakan standar pendidikan meliputi | D20-4. ada sk rektor tentang pembantukan tim penyusun rencana induk | GPT + SG | 3 | 3.9497 | 3.963 | 3.950 |
| D20-1. The formal document on education standard policy includes decrees | D20-4. There is a rector’s decree regarding the formation of a master plan drafting team | | | | | |
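
The table above pairs each example with an expert score and the scores produced under three weightings. The absolute differences can be computed directly from the listed values, and the sketch below does that arithmetic.

```python
# Rows copied from the table above: (embedding, expert score, {weighting: model score}).
EXAMPLES = [
    ("W2V + SG", 3, {"TFIDF": 2.9586, "POS": 3.455, "N": 3.394}),
    ("FT + SG", 1, {"TFIDF": 1.3602, "POS": 1.318, "N": 1.366}),
    ("BERT + CBOW", 2, {"TFIDF": 3.1808, "POS": 3.181, "N": 3.181}),
    ("GPT + SG", 3, {"TFIDF": 3.9497, "POS": 3.963, "N": 3.950}),
]


def absolute_errors(expert, scores):
    """|model score - expert score| for each weighting, rounded to 4 decimals."""
    return {w: round(abs(s - expert), 4) for w, s in scores.items()}


def closest_weighting(expert, scores):
    """Weighting whose score lies nearest the expert score."""
    errors = absolute_errors(expert, scores)
    return min(errors, key=errors.get)


for embedding, expert, scores in EXAMPLES:
    print(embedding, absolute_errors(expert, scores), "closest:", closest_weighting(expert, scores))
```

On these four examples, the choice of weighting matters much less than the choice of embedding:

- W2V + SG with TFIDF lands within 0.05 of the expert score.
- BERT + CBOW overshoots the expert score by about 1.18 under all three weightings.
- GPT + SG overshoots by about 0.95 to 0.96 under all three weightings.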

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hendry, H.; Tukino, T.; Sediyono, E.; Fauzi, A.; Huda, B. HyEWCos: A Comparative Study of Hybrid Embedding and Weighting Techniques for Text Similarity in Short Subjective Educational Text. Information 2025, 16, 995. https://doi.org/10.3390/info16110995