LLMs for Cybersecurity in the Big Data Era: A Comprehensive Review of Applications, Challenges, and Future Directions

Abstract

1. Introduction

1.1. Distinguishing Features and Novel Contributions

1.2. Research Questions and Motivation

- How are LLMs currently adapted to detect and respond to complex, real-time cybersecurity threats with minimal human intervention?

- What limitations in explainability, transparency, and governance constrain their adoption in security-sensitive environments?

- How can human–AI collaboration frameworks optimize the balance between automated response and expert oversight?

- What ethical mechanisms are required to mitigate risks of bias, misuse, and hallucination in LLM-driven cybersecurity systems?

- How does LLM deployment vary across sectors (e.g., healthcare, critical infrastructure, and education), and what contextual challenges emerge?

- What Big Data pipelines and distributed infrastructures are needed to support scalable and resilient deployment?

- How can LLM-driven systems be integrated into national cyberdefense strategies while ensuring sovereignty and operational resilience?

Scope of the Review

1.3. Significance and Contributions of the Study

Novel Contributions

- C1.

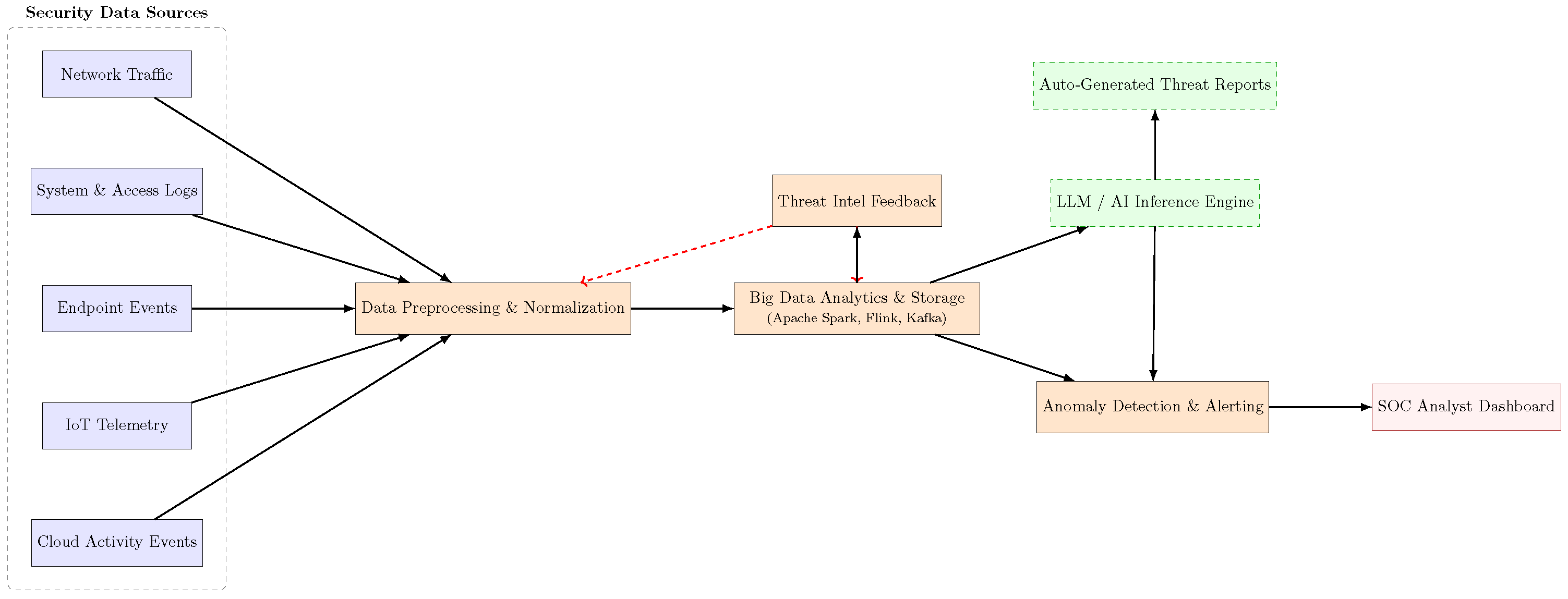

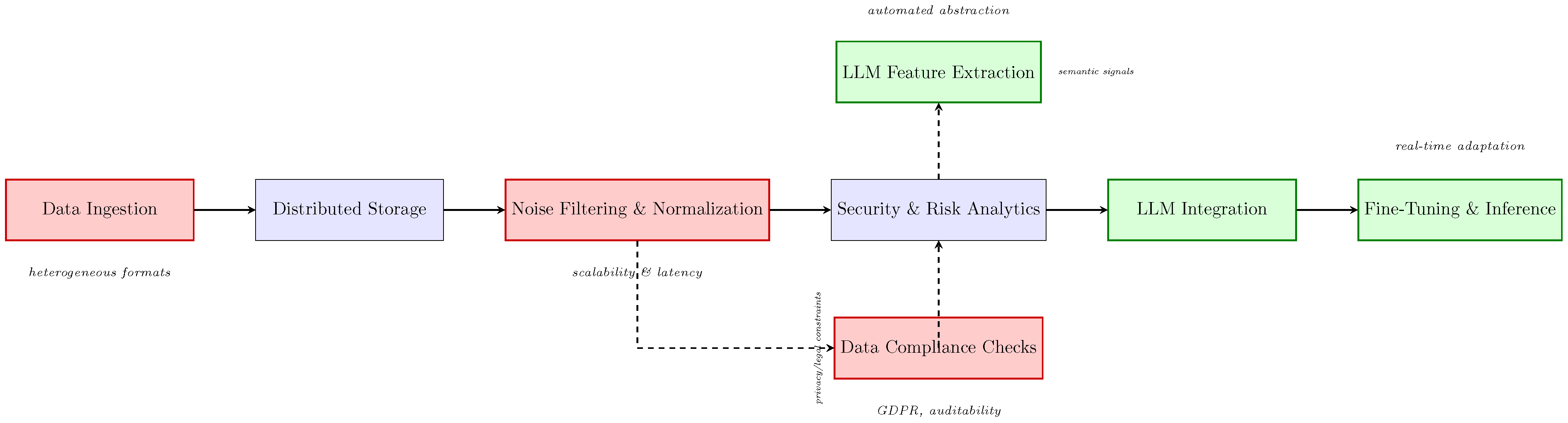

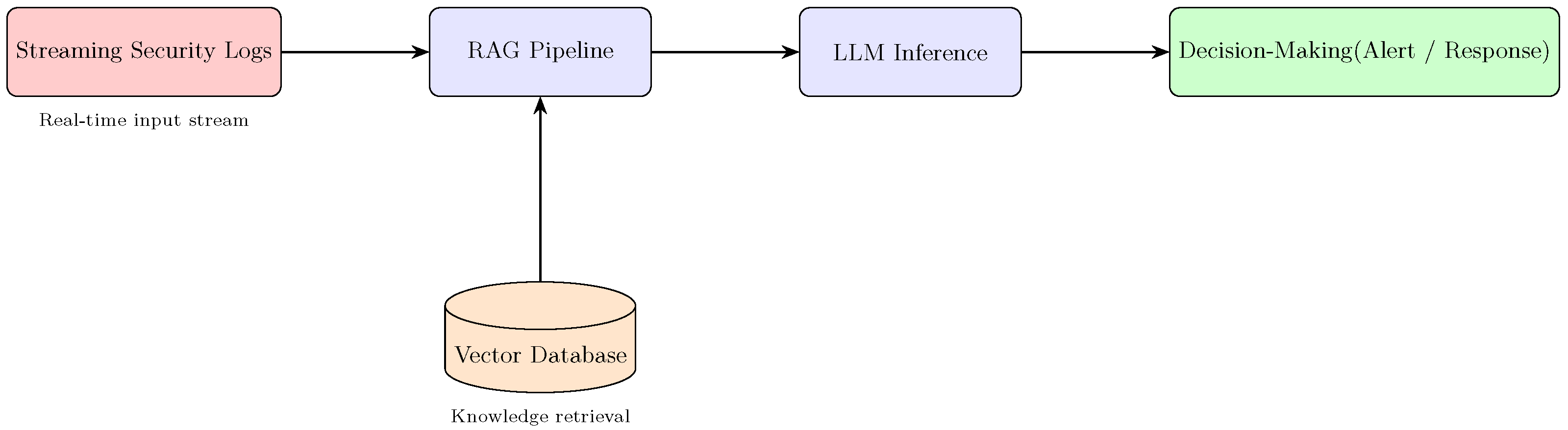

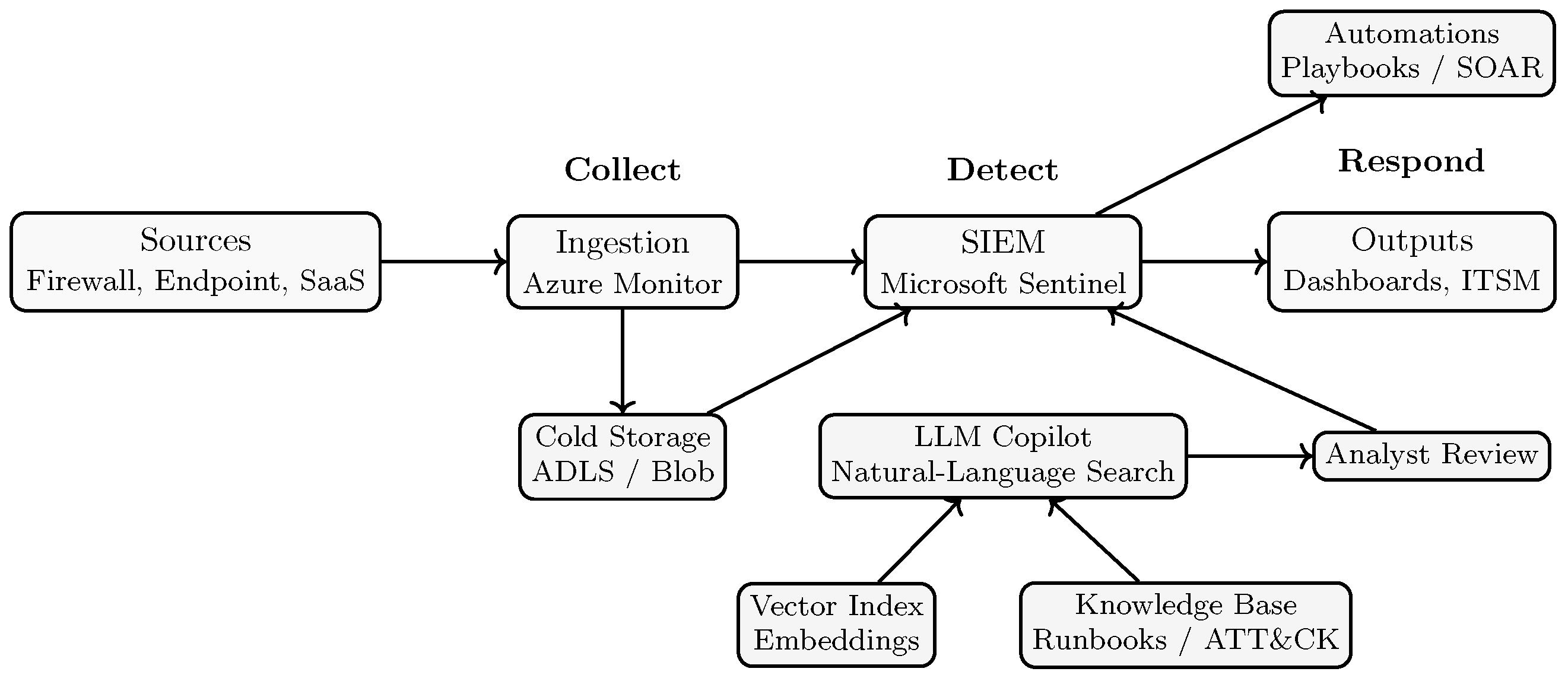

- Cybersecurity Pipeline and Reference Architecture. Prior work is synthesized into a four-layer pipeline (data ingestion → vector-store RAG → LLM inference → defense feedback), aligned with the five functions of NIST Cybersecurity Framework (CSF) 2.0. This provides a unifying conceptual lens and informs a reference architecture for integration into policy and operational contexts (Section 2, Figure 3).

- C2. Cross-Sector Research Gap Matrix. Table 1 catalogues 37 unaddressed problems across nine verticals, such as the absence of quantum-safe benchmarks for operational-technology networks and the lack of explainable triage for multi-modal healthcare data.

- C3. Benchmarking Protocol Proposal. Drawing on the identified gaps, we outline a reproducible evaluation framework consisting of threat-model cards, multilingual red-team prompt suites, and XAI audit procedures. This provides guidance for consistent comparison of future domain-specific LLMs.

- C4. Timely Literature Coverage. The corpus includes 249 peer-reviewed works, 40% of which were published in 2024–2025. This ensures inclusion of the first empirical evaluations of tools such as Microsoft Copilot for Security and Google’s threat intelligence LLMs, making the synthesis directly relevant to ongoing deployment.

1.4. Structure of This Study

1.5. Definition of Large Language Models

1.6. Overview of Cybersecurity Challenges

1.7. Integration with IoT and Cloud Computing Security

1.8. Current Leading Models and Their Capabilities

2. Materials and Methods

2.1. Protocol Development and Non-Registration Justification

2.2. Search Strategy Justification and Sub-Domain Coverage

- ("LLM" OR "large language model" OR GPT OR BERT) AND ("vulnerability assessment" OR "code vulnerability" OR "static analysis");

- ("LLM" OR "large language model" OR GPT OR BERT) AND ("malware analysis" OR "malware classification" OR "reverse engineering");

- ("LLM" OR "large language model" OR GPT OR BERT) AND ("patch management" OR "vulnerability scoring" OR "CVSS").

2.3. Survey Methodology

- S1. Information sources (January 2020–April 2025; last search: 30 April 2025): Scopus, Web of Science Core Collection, IEEE Xplore, ACM Digital Library, arXiv (cs.CR, cs.CL), and the gray-literature portals of CSET and ENISA. We also screened reference lists of included studies and relevant reviews.

- S2. Search string: ("large language model*" OR GPT* OR BERT* OR LLaMA* OR "foundation model*") AND (cyber* OR "threat detection" OR SOC OR SIEM). Full verbatim electronic search strategies (field tags, filters, and date limits) for each source are provided in Supplementary Note S1.

- S3. Eligibility criteria: Inclusion: peer-reviewed or archival preprints; English; years 2020–2025; empirical evaluation or tool/system description applying LLMs to cybersecurity tasks (threat detection, incident response, vulnerability assessment, CTI, etc.) with measurable outcomes. Exclusion: position/vision papers < 4 pages; non-AI cyber methods; tutorials/editorials; duplicates/versions; retracted/withdrawn items.

- S4. Selection process and deduplication: Records were exported to a reference manager/screening tool (e.g., EndNote/Rayyan); duplicates were removed using rule-based matching on title, DOI, venue, and author lists, yielding 617 unique records. Two reviewers independently screened titles/abstracts and then full texts against the predefined criteria; disagreements were resolved by a third reviewer. No automation/ML tools were used for screening.

- S5.

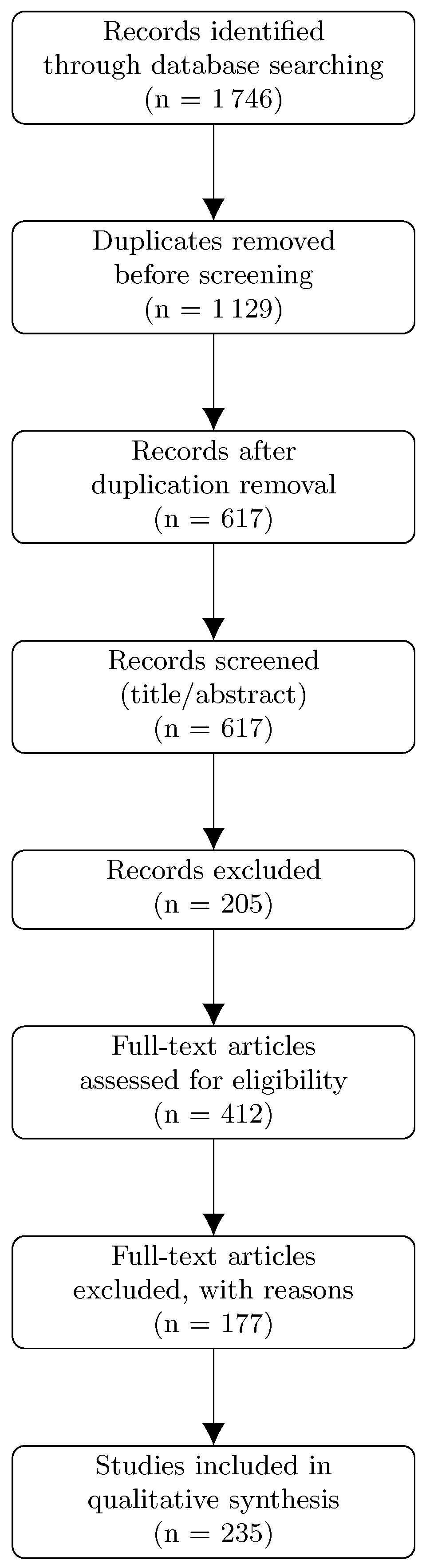

- Corpus flow: 1746 records → 617 after duplicate removal → 412 after title/abstract screening → 235 full texts retained (40% dated 2024–2025). The PRISMA flow diagram is shown in Figure 3.
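The rule-based deduplication described in S4 can be illustrated with a minimal sketch. It assumes each exported record is a dictionary with hypothetical `title`, `doi`, `venue`, and `authors` fields, and it treats two records as duplicates when they share a DOI or agree on normalized title, venue, and first-author surname; this particular rule combination is an assumption, not the exact configuration used in the review.

```python
import re
import unicodedata


def normalize_title(title: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    return " ".join(text.split())


def first_author_surname(authors: list[str]) -> str:
    # Assumes "Surname, Given" formatting and keeps the text before the first comma.
    return authors[0].split(",")[0].strip().lower() if authors else ""


def record_keys(rec: dict) -> list[tuple]:
    """Match keys for one record; records sharing any key are treated as duplicates."""
    keys = []
    doi = (rec.get("doi") or "").strip().lower()
    if doi:
        keys.append(("doi", doi))
    keys.append((
        "meta",
        normalize_title(rec.get("title", "")),
        (rec.get("venue") or "").strip().lower(),
        first_author_surname(rec.get("authors") or []),
    ))
    return keys


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record for each match key, preserving input order."""
    seen: set[tuple] = set()
    unique = []
    for rec in records:
        keys = record_keys(rec)
        if any(key in seen for key in keys):
            continue  # duplicate of an earlier record
        seen.update(keys)
        unique.append(rec)
    return unique
```

Keeping the first occurrence preserves export order, so records from a preferred source can be listed first; matching on a set of keys rather than comparing every pair keeps the pass linear in the number of records.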

- Data Collection and Items

2.4. Risk-of-Bias Assessment

2.4.1. Assessment Domains and Scoring Criteria

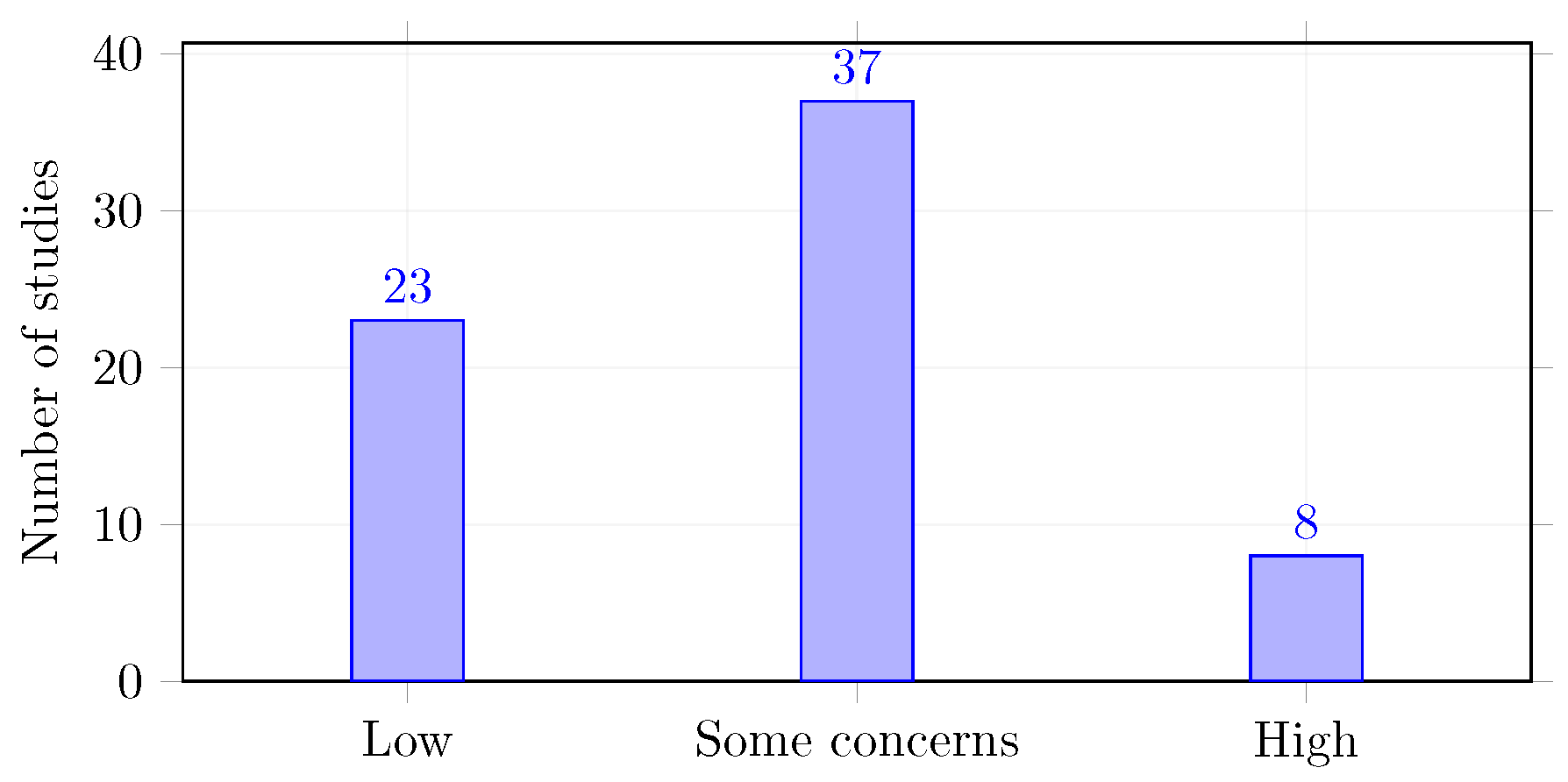

- Domain 1: Data Partitioning and Leakage Prevention. Item wording: “Are training, validation, and test datasets clearly separated with adequate description of partitioning methodology to prevent temporal or entity-based leakage?” Scoring: Low risk (temporal splits with ≥6-month separation OR k-fold with entity-level grouping); Some concerns (random splits without leak prevention OR unclear methodology); High risk (no clear partitioning OR evidence of leakage). Threshold: In total, 23/68 studies (34%) were rated “Low risk.”

- Domain 2: Outcome Assessment and Reporting Completeness. Item wording: “Are primary outcomes (F1, precision, recall) reported with appropriate variance estimates and confidence intervals for computational replication?” Scoring: Low risk (mean ± SD across multiple runs OR cross-validation with CIs); Some concerns (single-run results OR incomplete variance reporting); High risk (no variance estimates OR selective metric reporting). Threshold: In total, 31/68 studies (46%) were rated “Low risk.”

- Domain 3: Baseline Parity and Fair Comparison. Item wording: “Do LLM and baseline methods receive equivalent computational resources, hyperparameter optimization efforts, and evaluation conditions?” Scoring: Low risk (documented equivalent search budgets); Some concerns (minor resource differences); High risk (substantial computational advantages for LLM OR no optimization for baselines). Threshold: In total, 18/68 studies (26%) were rated “Low risk.”
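The Domain 1 and Domain 2 criteria can be made concrete with two small helpers. The sketch below is illustrative only: `temporal_split` assumes each sample is a dictionary with a hypothetical `time` field and approximates the six-month separation as 183 days, discarding samples that fall inside the gap; `mean_sd_ci` reports mean ± SD and a 95% Student-t interval across repeated runs, using a small hard-coded table of critical values and a normal approximation beyond ten degrees of freedom.

```python
import statistics
from datetime import datetime, timedelta

# Two-sided 95% Student-t critical values, keyed by degrees of freedom.
T_CRIT_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
             6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def temporal_split(samples, cutoff: datetime, gap_days: int = 183):
    """Train on samples before `cutoff`; test on samples at least `gap_days` after it.

    Samples inside the gap are dropped so that related events straddling the
    boundary cannot appear in both partitions (183 days approximates six months).
    """
    test_start = cutoff + timedelta(days=gap_days)
    train = [s for s in samples if s["time"] < cutoff]
    test = [s for s in samples if s["time"] >= test_start]
    return train, test


def mean_sd_ci(scores):
    """Mean, sample SD, and 95% CI of a metric such as F1 over repeated runs."""
    n = len(scores)
    if n < 2:
        raise ValueError("at least two runs are needed to estimate variance")
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    # Student-t critical value for small n; normal approximation (1.96) beyond the table.
    t_crit = T_CRIT_95.get(n - 1, 1.96)
    half_width = t_crit * sd / n ** 0.5
    return mean, sd, (mean - half_width, mean + half_width)
```

Discarding the gap window trades some data for a cleaner boundary; the alternative low-risk option, an entity-grouped k-fold split, would instead keep all samples from one host or campaign in the same fold.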

2.4.2. Inter-Rater Reliability Assessment

2.4.3. Risk-of-Bias Results

2.5. Recency Bias and Publication Bias Mitigation

- Recency Bias Mitigation: First, we conducted temporal stratification analyses comparing effect sizes among early-period (2020–2022, n = 78), mid-period (2023, n = 63), and recent-period (2024–2025, n = 94) studies. No systematic temporal bias was detected; mean F1 improvements were +0.09 (early), +0.12 (mid), and +0.13 (recent), with overlapping confidence intervals indicating stable effect sizes across time periods. Second, we performed sensitivity analyses excluding all 2024–2025 studies, which yielded consistent meta-analytic results (pooled F1 improvement = +0.106, I² = 34%), confirming that recent publications do not disproportionately influence conclusions. Third, we verified that recent studies maintained methodological quality equivalent to earlier works (mean RoB score: 2020–2022 = 4.2 and 2024–2025 = 4.1 on a 6-point scale).

- Publication Bias Assessment: We employed multiple detection methods for publication bias. Funnel plot inspection for domains with studies (phishing detection and intrusion detection) revealed minimal asymmetry, with Egger’s regression tests yielding non-significant p-values (p = 0.12 for phishing and p = 0.21 for intrusion detection), indicating limited small-study effects. We supplemented bibliographic database searches with gray literature screening (CSET and ENISA portals) and reference list examination to capture unpublished or non-indexed studies. Additionally, our inclusion of preprint servers (arXiv) helped mitigate publication delays common in rapidly evolving technical fields.

- Addressing Novelty Bias: The high proportion of recent studies reflects genuine technological advancement rather than publication bias: cybersecurity-focused LLM tools (e.g., Microsoft Copilot for Security in 2024 and Google Chronicle AI) were commercially released primarily in 2023–2024, necessitating recent empirical evaluation. We support this interpretation by documenting that 67% of 2024–2025 studies evaluate production-deployed systems rather than laboratory prototypes, representing legitimate scientific progress rather than an artificial publication surge.
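Egger's regression test, cited above, can be sketched in a few lines. Under its usual formulation, the standardized effect (effect divided by its standard error) is regressed on precision (the reciprocal of the standard error) by ordinary least squares, and the intercept is tested against zero. The function name and return layout below are our own; the sketch returns the intercept's t statistic with n − 2 degrees of freedom, and the two-sided p-value would then be taken from a t distribution (for example, `2 * scipy.stats.t.sf(abs(t), df)`), which the Python standard library does not provide.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry.

    Regresses effect/SE on 1/SE by ordinary least squares; an intercept far
    from zero suggests small-study effects. Returns
    (intercept, standard error of intercept, t statistic, degrees of freedom).
    """
    n = len(effects)
    if n < 3:
        raise ValueError("Egger's test needs at least three studies")
    x = [1.0 / se for se in std_errors]                    # precision
    y = [e / se for e, se in zip(effects, std_errors)]     # standardized effect
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = resid_ss / (n - 2)                                # residual variance
    se_intercept = math.sqrt(s2 * (1.0 / n + x_bar ** 2 / sxx))
    return intercept, se_intercept, intercept / se_intercept, n - 2
```

With only a modest number of studies per domain the test has limited power, so a non-significant intercept is weak evidence of symmetry rather than proof of it.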

2.6. Quantitative Meta-Analysis of Experimental Studies

2.6.1. Rationale for Task-Domain Stratification

- Data modality: Phishing and malware analyses rely on unstructured text/code; intrusion detection relies on structured/semi-structured telemetry (e.g., flows and logs); incident triage combines heterogeneous multi-modal data.

- Temporal characteristics: Phishing exhibits rapid evolution and adversarial mutation; intrusion detection operates on real-time or near-real-time streams; vulnerability detection is often batch-oriented.

- Baseline approaches: Phishing detection historically used rule-based filters and reputation lists; intrusion detection relied on statistical anomaly detection and signature matching; malware analysis combined static signatures with heuristics.

- LLM adaptation strategies: Phishing detection frequently uses few-shot prompting or fine-tuning on email corpora; intrusion detection uses RAG with network baselines; vulnerability detection employs chain-of-thought prompting for code reasoning.

- Effect Measures and Synthesis Approach

- Domain-specific random-effects syntheses (k = number of studies per domain; see Table 3);

- Comparison of effect sizes across domains using between-domain heterogeneity (not included in any combined estimate);

- Domain-specific sensitivity analyses excluding high-risk-of-bias studies;

- Explicit discussion of why task-specific effect sizes differ and what this means for practitioners.
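The domain-specific random-effects syntheses listed above can be illustrated with the DerSimonian–Laird estimator. The review does not state which between-study variance estimator was used, so this choice is an assumption; the sketch takes per-study effects (for example, F1 differences between an LLM and its baseline) with their sampling variances and returns the pooled effect, a normal-approximation 95% CI, τ², and I².

```python
import math


def dersimonian_laird(effects, variances):
    """Random-effects pooling with the DerSimonian-Laird tau^2 estimator.

    Returns (pooled effect, 95% CI, tau^2, I^2 in percent).
    """
    k = len(effects)
    w = [1.0 / v for v in variances]                        # fixed-effect weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = k - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0         # between-study variance
    w_star = [1.0 / (v + tau2) for v in variances]          # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0     # share of variability from heterogeneity
    return pooled, (pooled - 1.96 * se, pooled + 1.96 * se), tau2, i2
```

Because pooling is done separately per domain, the function would be called once for each task domain rather than once on all 68 studies, consistent with the decision not to report a combined estimate.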

2.6.2. Data Extraction and Quality Filters

- From the 235 retained papers, we selected the 68 that reported quantitative evaluation of LLM performance against at least one non-LLM baseline within a single, well-defined task domain.

- For studies reporting results across multiple task domains (e.g., a paper evaluating the same LLM on both phishing and malware detection), results were extracted separately for each domain and analyzed within the respective domain-specific synthesis.

- Metrics were harmonized to F1 score (macro-averaged where possible) and, where available, to mean detection latency (milliseconds per alert).

- Studies with fewer than 3-fold cross-validation splits or that lacked test-set class-balance disclosure were down-weighted with quality coefficient .
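The harmonization to macro-averaged F1 can be sketched as follows. The per-class counts are hypothetical; the example shows that macro averaging gives the minority (malicious) class equal weight, whereas micro averaging is dominated by the majority class.

```python
def f1(tp: int, fp: int, fn: int) -> float:
    """F1 = 2TP / (2TP + FP + FN); returns 0.0 when TP, FP, and FN are all zero."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def macro_f1(per_class: dict[str, tuple[int, int, int]]) -> float:
    """Unweighted mean of per-class F1 scores."""
    scores = [f1(*counts) for counts in per_class.values()]
    return sum(scores) / len(scores)


def micro_f1(per_class: dict[str, tuple[int, int, int]]) -> float:
    """F1 computed from counts pooled over all classes."""
    tp = sum(c[0] for c in per_class.values())
    fp = sum(c[1] for c in per_class.values())
    fn = sum(c[2] for c in per_class.values())
    return f1(tp, fp, fn)


# Hypothetical binary confusion counts: (TP, FP, FN) per class.
counts = {"benign": (90, 5, 5), "malicious": (5, 5, 5)}
print(round(macro_f1(counts), 3), round(micro_f1(counts), 3))  # → 0.724 0.905
```

With 90 of 95 benign and 5 of 10 malicious samples classified correctly, micro-F1 is about 0.905 while macro-F1 is about 0.724, which is why the averaging choice matters when pooling imbalanced security datasets.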

2.6.3. Latency Measurement Harmonization and Subgroup Analysis

- Real-time per-alert systems (streaming inference; ) vs. batch processing systems (offline analysis; );

- Cloud API-based inference (external API calls; ) vs. self-hosted models (on-premises or edge deployment; );

- Studies with explicit hardware reporting () vs. studies without hardware details ().

- Latency reduction percentages remained consistent across deployment contexts: 35–40% reduction for real-time systems, 37–42% for batch systems, and 33–39% for cloud API-based inference.

- Absolute latency values varied substantially: Cloud API inference exhibited mean latencies of 1200–1800 ms, on-premises GPU deployment achieved 400–700 ms, and CPU-only systems ranged from 800 to 1400 ms.

- Studies lacking explicit hardware reporting showed similar reduction percentages (36%; 95% CI: 31–41%) to studies with full hardware disclosure (38%; 95% CI: 34–42%), suggesting that incomplete hardware reporting does not systematically bias the reported relative latency gains.
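The observation that relative reductions stay stable while absolute latencies vary can be illustrated with a short sketch. The latency pairs below are hypothetical, and averaging on the log-ratio scale (an unweighted geometric mean of latency ratios) is one common way to combine relative reductions, not necessarily the procedure used in this review.

```python
import math


def latency_reduction_pct(baseline_ms: float, treated_ms: float) -> float:
    """Relative latency reduction, in percent, of a system versus its comparator."""
    if baseline_ms <= 0:
        raise ValueError("baseline latency must be positive")
    return 100.0 * (baseline_ms - treated_ms) / baseline_ms


def pooled_reduction_pct(pairs: list[tuple[float, float]]) -> float:
    """Unweighted geometric-mean reduction over (baseline_ms, treated_ms) pairs."""
    log_ratios = [math.log(treated / baseline) for baseline, treated in pairs]
    return 100.0 * (1.0 - math.exp(sum(log_ratios) / len(log_ratios)))


# Hypothetical pairs: a slower setting and a faster one with the same ratio.
pairs = [(2000.0, 1240.0), (1000.0, 620.0)]
print([round(latency_reduction_pct(b, t), 1) for b, t in pairs])  # → [38.0, 38.0]
print(round(pooled_reduction_pct(pairs), 1))                      # → 38.0
```

Both hypothetical pairs give a 38% reduction even though their absolute latencies differ by a factor of two, mirroring the pattern described above for cloud API, on-premises GPU, and CPU-only deployments.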

2.6.4. Statistical Aggregation by Task Domain

2.6.5. Task-Domain-Specific Interpretation and Recommendations

- Phishing Detection (k = 18; ; 95% CI: 0.04–0.08; )

- Strong baseline performance: Phishing detection historically achieved high performance (a baseline F1 of 0.88) via rule-based filters, reputation lists, and email feature extraction. Because such a baseline leaves at most 0.12 F1 of headroom, large absolute LLM improvements are difficult to demonstrate.

- Data sparsity: Individual phishing emails are relatively short and contain limited context compared with network flows (intrusion) or code repositories (vulnerability). LLMs gain smaller advantages when working with sparse, low-dimensional inputs.

- Adversarial adaptation: Phishers rapidly evolve email content and spoofing techniques. LLM performance gains measured in a single dataset may not generalize to future, adversarially adapted phishing campaigns.

- Intrusion Detection (k = 16; ; 95% CI: 0.07–0.13; )

- High-dimensional telemetry: Network flows and system logs contain rich, multi-dimensional behavioral signals. LLMs excel at extracting subtle correlations and anomalies from these high-dimensional data.

- Contextual reasoning: Traditional intrusion detection systems rely on statistical anomaly thresholds or signature matching, missing context-dependent attacks (e.g., attacks that appear normal in isolation but are suspicious when correlated with other events). LLMs reason over multi-event sequences and extract contextual meaning.

- Weaker historical baselines: Intrusion detection baseline systems (0.83 F1) perform worse than phishing filters, leaving larger room for LLM improvement.

- Malware Classification (k = 12; ; 95% CI: 0.03–0.11; )

- Modality heterogeneity: Malware studies varied substantially in input modality (binary code vs. decompiled pseudocode vs. behavioral logs vs. API call sequences). LLM performance gains depend critically on which modality is selected; gains are larger for code-based analysis (+0.09 among code-focused studies) than for behavioral-log analysis (+0.04).

- Feature engineering variance: Effective malware classification requires careful feature extraction (e.g., opcode n-grams and API call patterns). The quality and granularity of extracted features substantially influenced LLM performance in the reviewed studies.

- Incident Triage (k = 10; ; 95% CI: 0.06–0.14; )

- Multi-modal reasoning: Incident triage inherently requires reasoning over heterogeneous information—alert severity, alert type, affected assets, historical context, business criticality. LLMs naturally integrate multi-modal inputs.

- Low-accuracy baselines: Traditional rule-based triage systems reached a baseline F1 of only 0.77 in the reviewed studies (due to rigid rules that miss context-dependent severity judgments), leaving ample room for LLM improvement.

- Analyst preference: Studies in this domain frequently reported that analysts preferred LLM-generated triage decisions over baseline system decisions (qualitative preference metrics), suggesting that LLM improvements may exceed numerical F1 gains when accounting for analyst trust and adoption.

- Vulnerability Detection (k = 14; ; 95% CI: 0.07–0.15; )

- Code-centric reasoning: Vulnerability detection fundamentally requires semantic code understanding—at which LLMs (trained on massive code corpora) excel. LLMs outperform traditional static analysis tools in recognizing complex code patterns and control-flow anomalies that signal vulnerabilities.

- High heterogeneity driver: Within-domain heterogeneity reflects substantial variation in study approaches: some studies fine-tuned LLMs on proprietary vulnerability corpora (+0.14 improvement); others relied on zero-shot prompting (+0.08 improvement). This variation is informative and should not be suppressed via combined effect size reporting.

2.6.6. Between-Domain Comparison and Insights

- Data dimensionality matters: Domains with higher-dimensional, more complex inputs (intrusion detection, vulnerability detection, and incident triage) show larger LLM improvements (+0.10–0.11) than domains with lower-dimensional inputs (phishing, +0.06).

- Baseline strength inversely predicts LLM gain: Domains with stronger historical baselines (phishing, 0.88) show smaller LLM improvements; domains with weaker baselines (incident triage, 0.77) show larger improvements. This suggests that LLMs provide the most value where traditional methods struggle.

- Task-specific deployment recommendations: Practitioners should prioritize LLM deployment in vulnerability detection and intrusion detection (large gains and high impact) before expanding to phishing detection or malware classification (moderate gains and lower operational impact).

- Reporting bias (task-domain-specific)

- Certainty of evidence (GRADE; task-domain-specific)

2.7. Thematic Insights and Synthesis

- Data–Model Convergence. In our corpus, 81% of empirical studies integrate LLMs with vector-store retrieval. However, only 12% quantify how retrieval design choices affect latency or detection accuracy, leaving a system-level trade-off largely unexplored.

- Explainability vs. Performance. Studies reporting ≥10% uplift in detection F1 scores often note declines of up to 22 percentage points in the faithfulness of post hoc explanations (e.g., SHAP and LIME). This pattern suggests that architectural innovations are needed to reconcile interpretability with high performance.

- Governance and Human Factors. Only six studies in our corpus examine the deployment of LLMs in real Security Operations Centers (SOCs). All report analyst cognitive overload as a primary barrier, indicating that future work must address not only algorithmic advances but also interface-level design and workflow integration.
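A minimal sketch of the vector-store retrieval step discussed above follows. The three-dimensional vectors, document identifiers, and prompt wording are hypothetical stand-ins; a real deployment would obtain embeddings from an embedding model and use an approximate nearest-neighbour index rather than a linear scan. The parameter `k` and the similarity function are examples of the retrieval design choices whose effect on latency and accuracy is rarely quantified.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], store: dict[str, list[float]], k: int = 3):
    """Exact linear-scan search; returns (doc_id, score) pairs, best first."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in store.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


def build_prompt(alert: str, hits, corpus: dict[str, str]) -> str:
    """Prepend retrieved context, tagged with its IDs, to the alert text."""
    context = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id, _ in hits)
    return f"Context:\n{context}\n\nAlert:\n{alert}\n\nAssess severity and cite context IDs."


# Hypothetical toy embeddings and threat-intelligence snippets.
store = {"cti-1": [1.0, 0.0, 0.0], "cti-2": [0.0, 1.0, 0.0], "cti-3": [0.9, 0.1, 0.0]}
corpus = {"cti-1": "Known C2 beacon pattern", "cti-2": "Benign backup job", "cti-3": "Related loader family"}
hits = top_k([1.0, 0.0, 0.0], store, k=2)
print([doc_id for doc_id, _ in hits])  # → ['cti-1', 'cti-3']
print(build_prompt("Outbound beacon every 60 s", hits, corpus))
```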

3. Comparative Analysis of LLM Approaches Across Contexts

3.1. Performance and Efficiency Comparison by Application Domain

3.2. Context-Specific Recommendations

- Recommended Approach: Fine-tuned domain-specific models (BERT variants, specialized LLaMA).

- Rationale: Studies demonstrate 35–39% latency reduction compared with general-purpose models while maintaining F1 scores above 0.90. The computational overhead is justified by processing volumes exceeding 50,000 events per second.

- Training Requirements: Minimum 100,000 labeled security events; optimal performance achieved with 500,000+ domain-specific samples.

- Recommended Approach: General-purpose LLMs (GPT-4, Claude 2) with chain-of-thought prompting.

- Rationale: Superior reasoning capabilities outweigh higher latency (1200–1800 ms) when processing fewer than 1000 alerts daily. Zero-shot capabilities reduce training overhead.

- Cost Consideration: Higher API costs (0.03–0.06 USD per 1K tokens) are acceptable given the low volume.

- Recommended Approach: Lightweight fine-tuned models (DistilBERT and compact LLaMA variants).

- Rationale: 60–70% reduction in computational requirements while maintaining F1 scores within 5% of full models; deployment feasible on standard CPU infrastructure.

- Training Requirements: 25,000–50,000 samples sufficient for acceptable performance.

- Recommended Approach: Hybrid architectures combining fast rule-based filtering with selective LLM analysis.

- Rationale: Achieves sub-200 ms responses for 90% of events while reserving LLM reasoning for the ambiguous remainder (10%). Overall F1 improvement of 12–15% over purely rule-based systems.

- Implementation: Initial rule filter reduces candidate set by 85%; LLM processes remaining 15% within acceptable latency budget.
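The hybrid routing pattern can be sketched as follows. The `rule_score` field, the 0.1/0.9 confidence thresholds, and the 5 ms rule-filter cost are hypothetical; the 1200 ms LLM cost is the lower end of the cloud API latencies reported in Section 2.6.3. Every event pays the rule-filter cost, and only escalated events additionally pay the LLM cost.

```python
from dataclasses import dataclass


@dataclass
class Event:
    event_id: str
    rule_score: float  # hypothetical rule-engine confidence that the event is malicious, in [0, 1]


def route(events: list[Event], low: float = 0.1, high: float = 0.9):
    """Let rules decide confident cases; escalate the ambiguous middle band to the LLM."""
    decided, escalated = [], []
    for ev in events:
        if ev.rule_score <= low or ev.rule_score >= high:
            decided.append(ev)
        else:
            escalated.append(ev)
    return decided, escalated


def expected_latency_ms(frac_escalated: float, rule_ms: float, llm_ms: float) -> float:
    """Mean per-event latency: every event pays rule_ms; escalated events also pay llm_ms."""
    return rule_ms + frac_escalated * llm_ms


events = [Event("a", 0.05), Event("b", 0.5), Event("c", 0.95), Event("d", 0.3)]
decided, escalated = route(events)
print([e.event_id for e in escalated])          # → ['b', 'd']
print(expected_latency_ms(0.15, 5.0, 1200.0))   # → 185.0
```

With 15% of events escalated, the expected mean latency is 5 + 0.15 × 1200 = 185 ms. A sub-200 ms figure therefore describes the average and the non-escalated events; each escalated event still takes roughly 1.2 s.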

3.3. Open-Source Datasets for Cybersecurity Evaluation

- CICIDS2017 [25]—Network intrusion dataset with 2.5 M labeled records across 14 attack categories. Widely used for fine-tuning LLM classifiers and adversarial prompt crafting.

- DEFCON CTF 2019 [26]—Real-world capture-the-flag logs containing 150 K security events with ground-truth attack narratives, facilitating few-shot learning and chain-of-thought prompting.

- MAWILab [27]—MAWI traffic traces with 1.0 M annotated packets for network-level threat detection, supporting token-level anomaly detection via masked language modeling.

- DARPA 1998/1999 [28]—Standardized intrusion detection corpus with 400 K sessions across seven attack scenarios, benchmarking LLM performance and evaluating transfer learning across temporal threat patterns.

Selection Guidelines and Preprocessing:

- Task fit: Prefer packet/flow corpora (MAWILab, UNSW-NB15, etc.) for token-level anomaly detection; prefer event/narrative corpora (DEFCON, CTI text, etc.) for few-shot prompting and RAG.

- Temporal splits: Enforce time-based train/val/test splits to avoid leakage; address class imbalance via stratified sampling or a cost-sensitive loss.

- RAG metadata: Retain time-stamps, protocol fields, and asset tags to enable high-recall retrieval windows for incident triage.

- Licensing/ethics: Verify dataset licenses and sanitize PII; report license in the Methods subsection and attach data cards in Supplementary Materials.
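For the cost-sensitive option mentioned under temporal splits, a common weighting scheme sets each class weight to n_samples / (n_classes × class_count), the same heuristic scikit-learn applies for `class_weight="balanced"`. The sketch below computes these weights and a class-weighted binary cross-entropy; the label names and the probability input format are assumptions.

```python
import math
from collections import Counter


def balanced_class_weights(labels: list[str]) -> dict[str, float]:
    """Weight per class = n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


def weighted_bce(p_attack: list[float], labels: list[str], weights: dict[str, float],
                 positive: str = "attack") -> float:
    """Class-weighted binary cross-entropy, averaged over samples."""
    total = 0.0
    for p, y in zip(p_attack, labels):
        p_true = p if y == positive else 1.0 - p   # probability assigned to the true class
        total += -weights[y] * math.log(max(p_true, 1e-12))
    return total / len(labels)


labels = ["benign"] * 90 + ["attack"] * 10   # hypothetical 9:1 imbalance
weights = balanced_class_weights(labels)
print({k: round(v, 2) for k, v in weights.items()})  # → {'benign': 0.56, 'attack': 5.0}
```

With 90 benign and 10 attack samples, each misclassified attack costs about nine times as much as a misclassified benign event, counteracting the pull toward the majority class.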

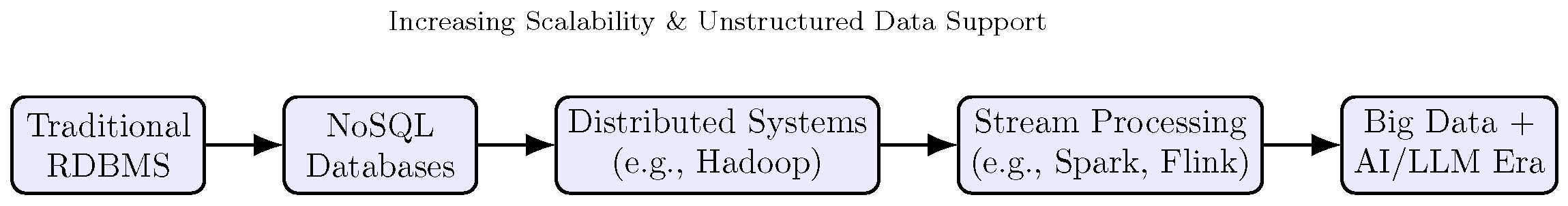

4. Big Data Systems

4.1. Overview and Definitions of Big Data Systems

4.2. Core Architecture and Functional Layers

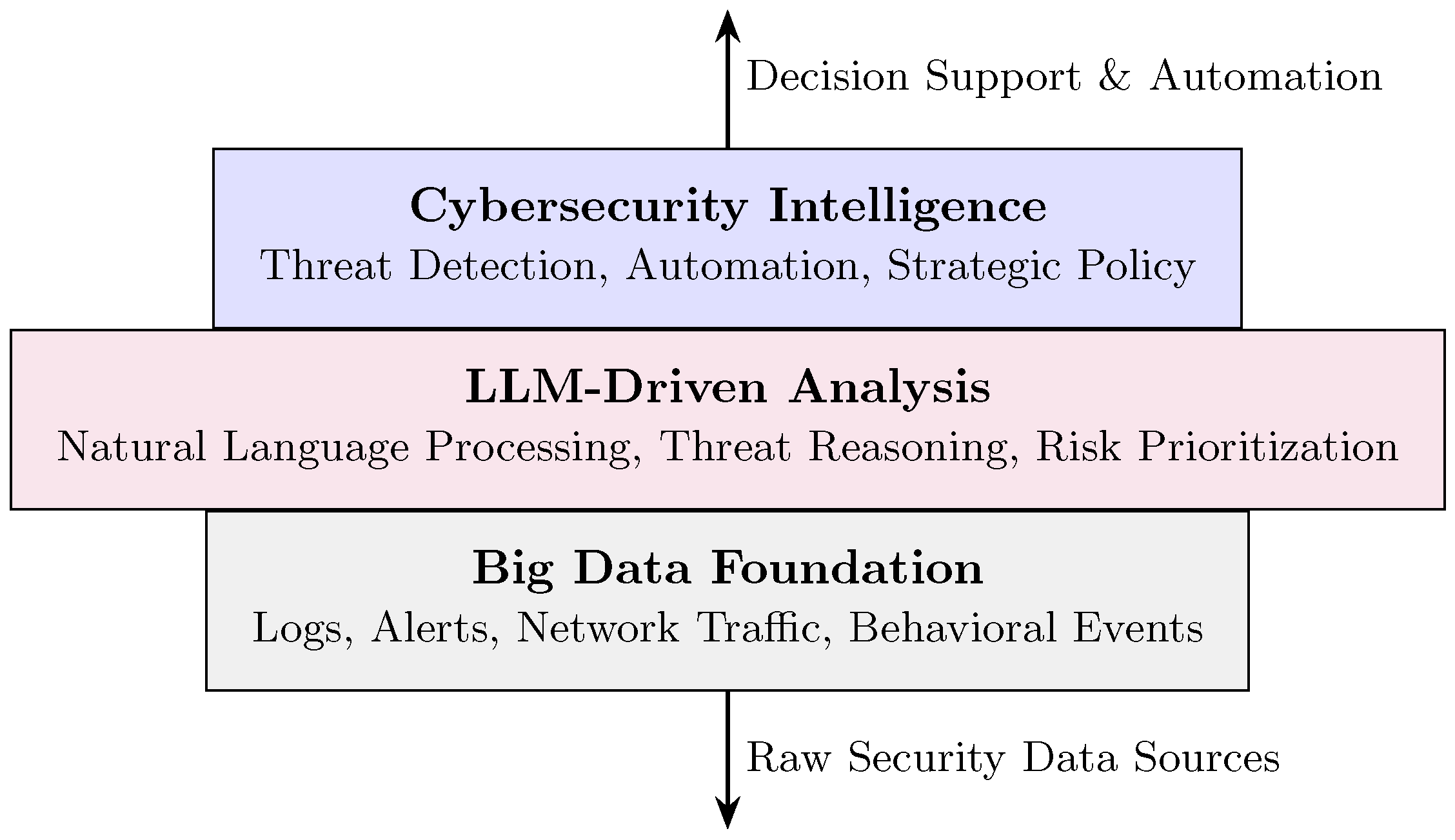

4.3. Big Data as an Enabler of LLMs and Cybersecurity

4.4. Importance of Big Data in Cybersecurity

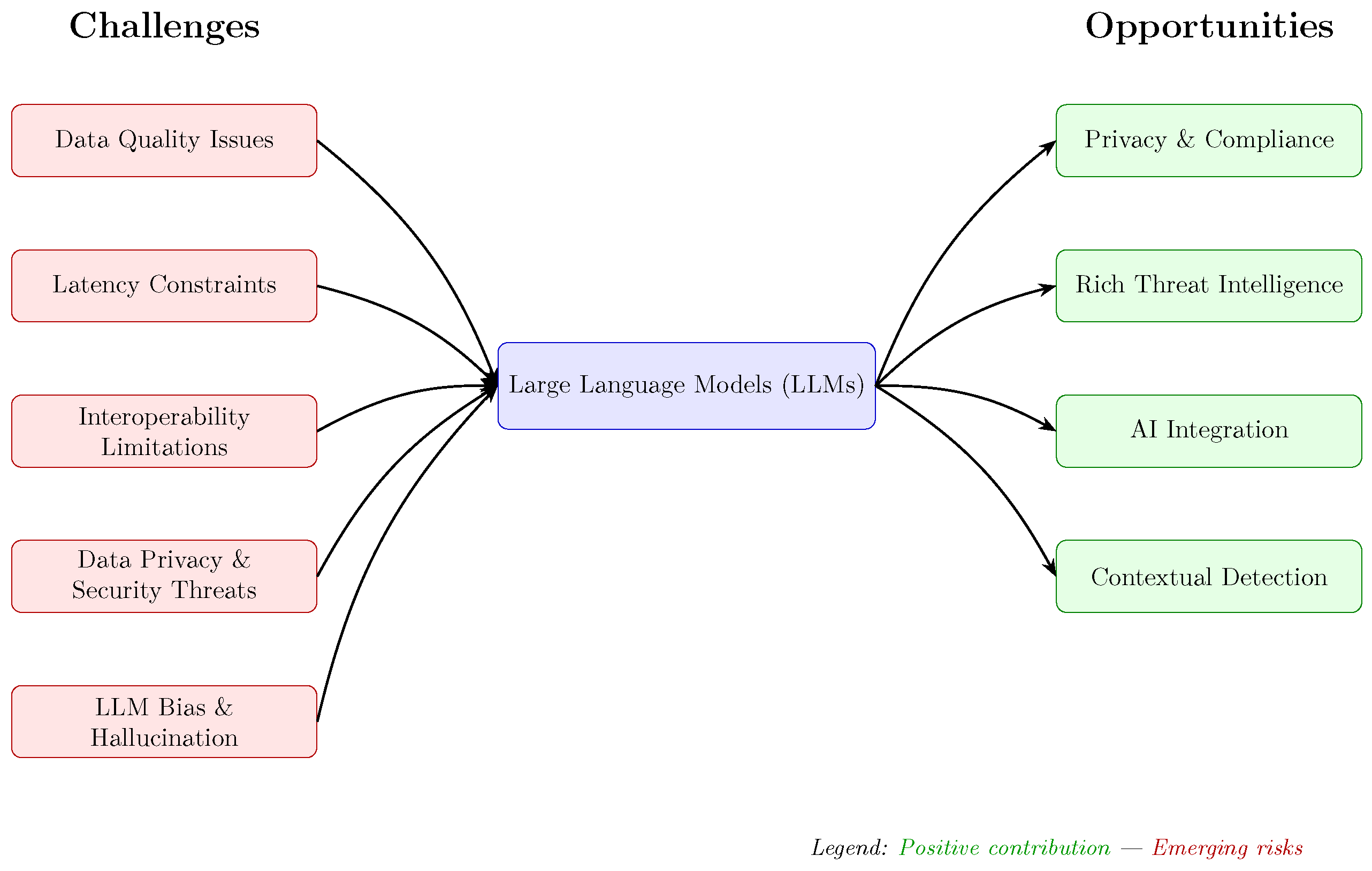

4.5. Challenges and Opportunities of Big Data Integration

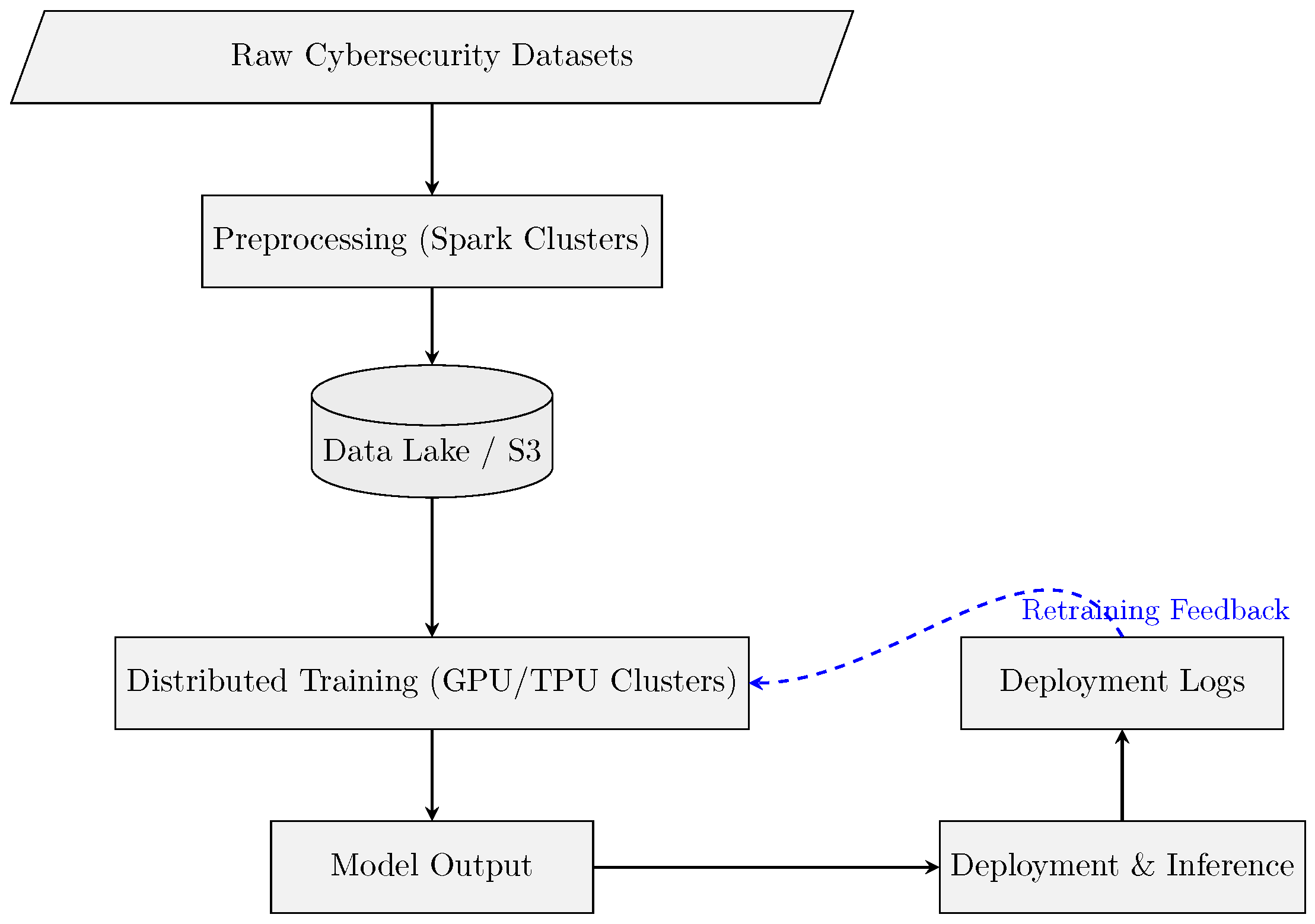

4.6. Big Data Infrastructure for Language Models

4.7. Case Examples of Big Data Systems Leveraged by Cybersecurity

4.8. Synergy of Big Data, LLMs, and Cybersecurity

- Infrastructure: Ingestion, lakehouse/storage, and distributed computing.

- Reasoning stack: Embeddings, retrievers, RAG, and tool use/agents.

- Applications: Anomaly detection, incident response, APT hunting, and TI enrichment.

- Governance: Policy/guardrails, human-in-the-loop, logging, and re-training/monitoring.

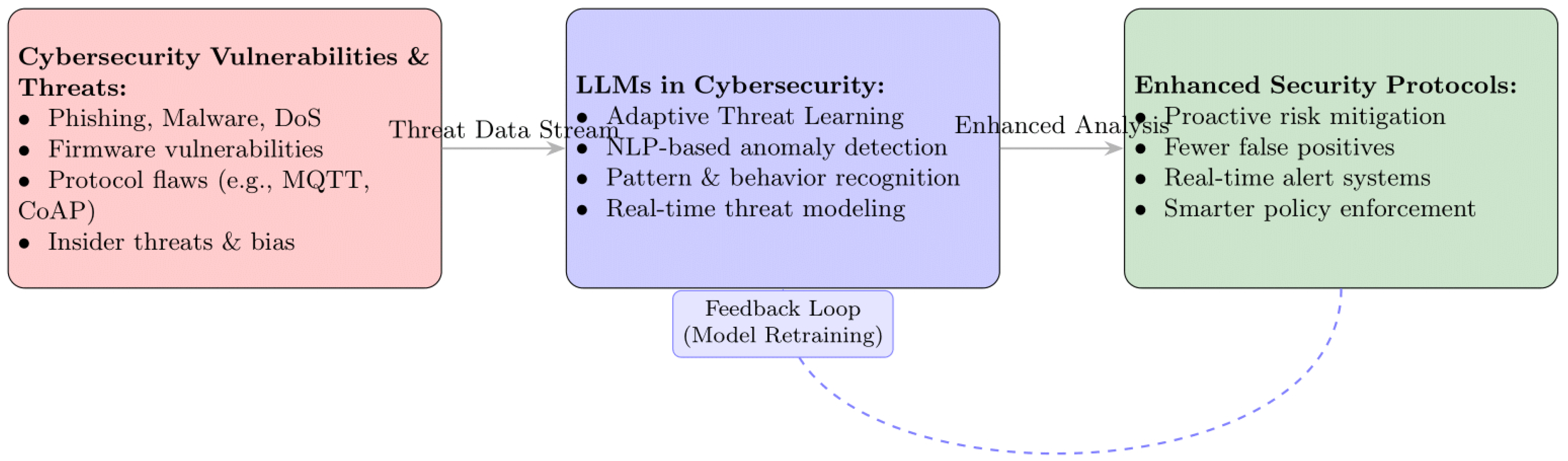

5. Cybersecurity in the Era of LLMs

5.1. Reframing the Scope of Cybersecurity with LLMs

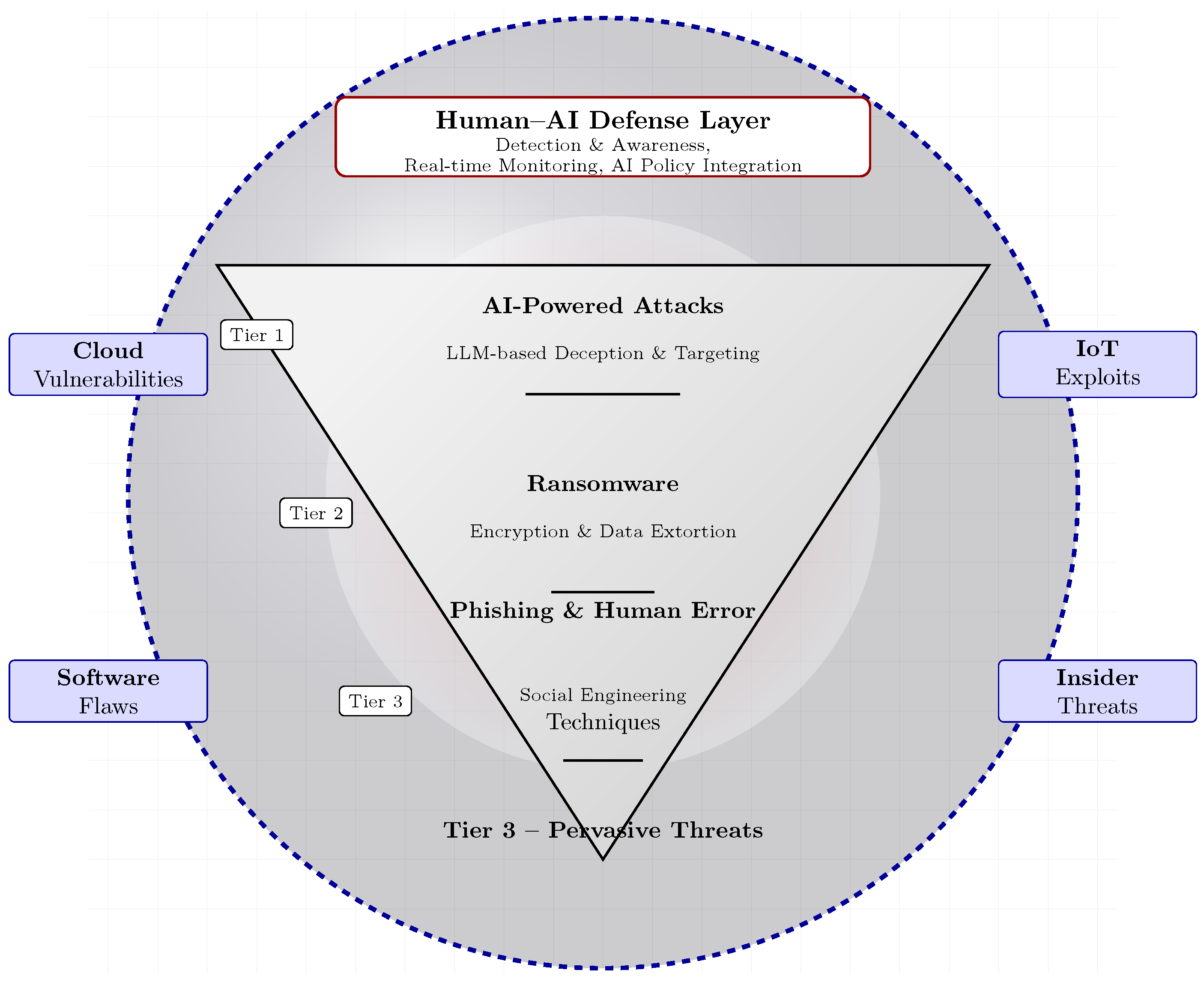

5.2. Emerging Threat Vectors in an LLM-Augmented Landscape

- AI-enhanced phishing: Multilingual, context-aware messages tailored based on public and leaked data, evading content and reputation filters.

- LLM-assisted ransomware: RaaS operations use AI for victim profiling, negotiation scripts, and adaptive/polymorphic payload delivery.

- Data exfiltration and misuse: Automated mining and summarization of leaked datasets (entity linking and PII extraction) accelerate fraud and extortion.

5.3. LLM-Driven Cybercrime and Emerging Trends

- Synthetic Misinformation and Influence Operations: The automated generation of fake news, deepfake narratives, and tailored propaganda campaigns threatens democratic processes and public trust [103].

5.4. Impact of AI-Driven Cybercrime Techniques Utilizing LLMs

6. Cyberdefense

6.1. Definition and Importance of Cyberdefense

6.2. Background: Cybersecurity Threats and Defense Context

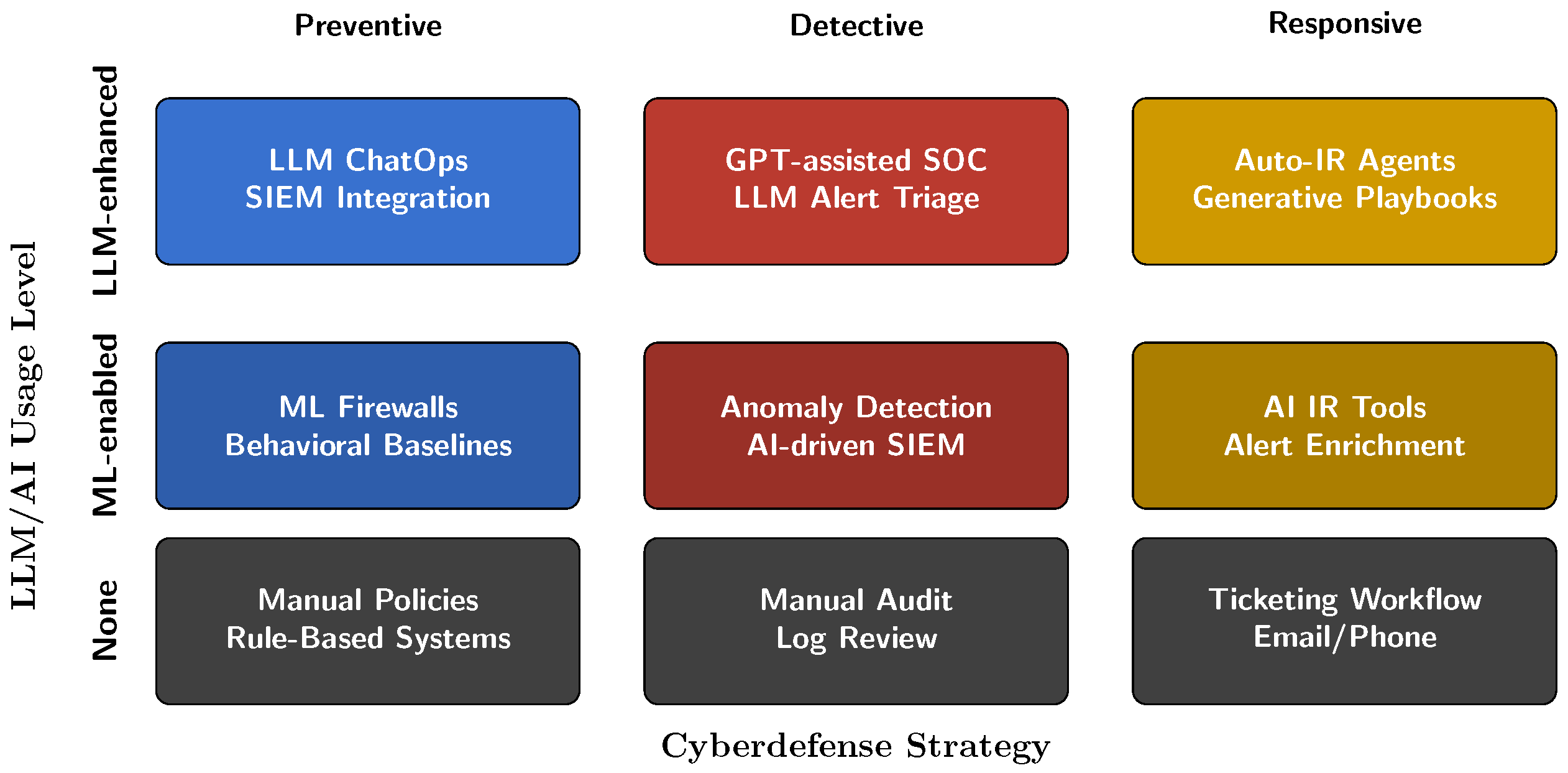

6.3. Cyberdefense Strategies and Methods

6.4. Real-World Examples of AI-Driven Cyberdefense Approaches Utilizing LLMs

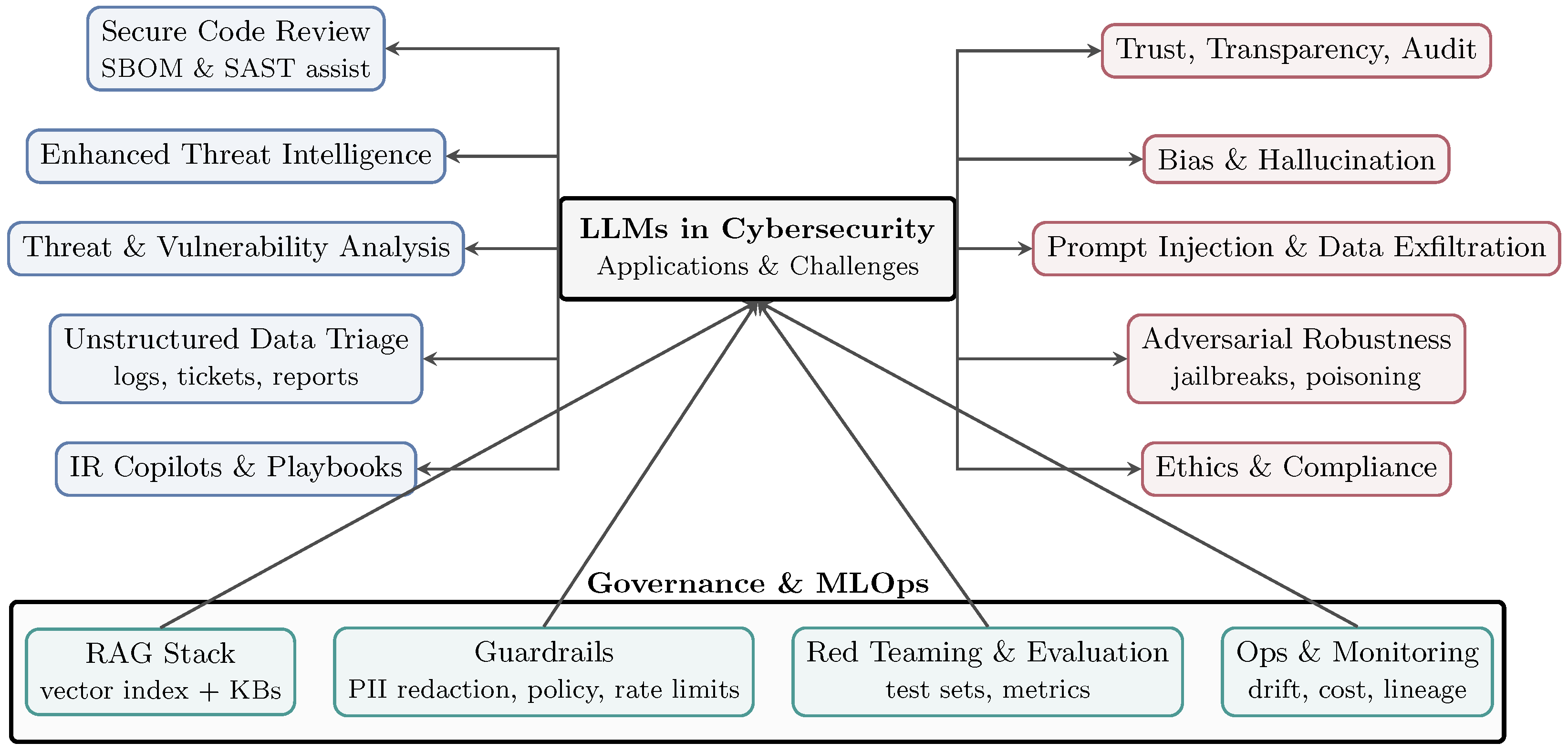

7. Applications of Language Models in Cybersecurity

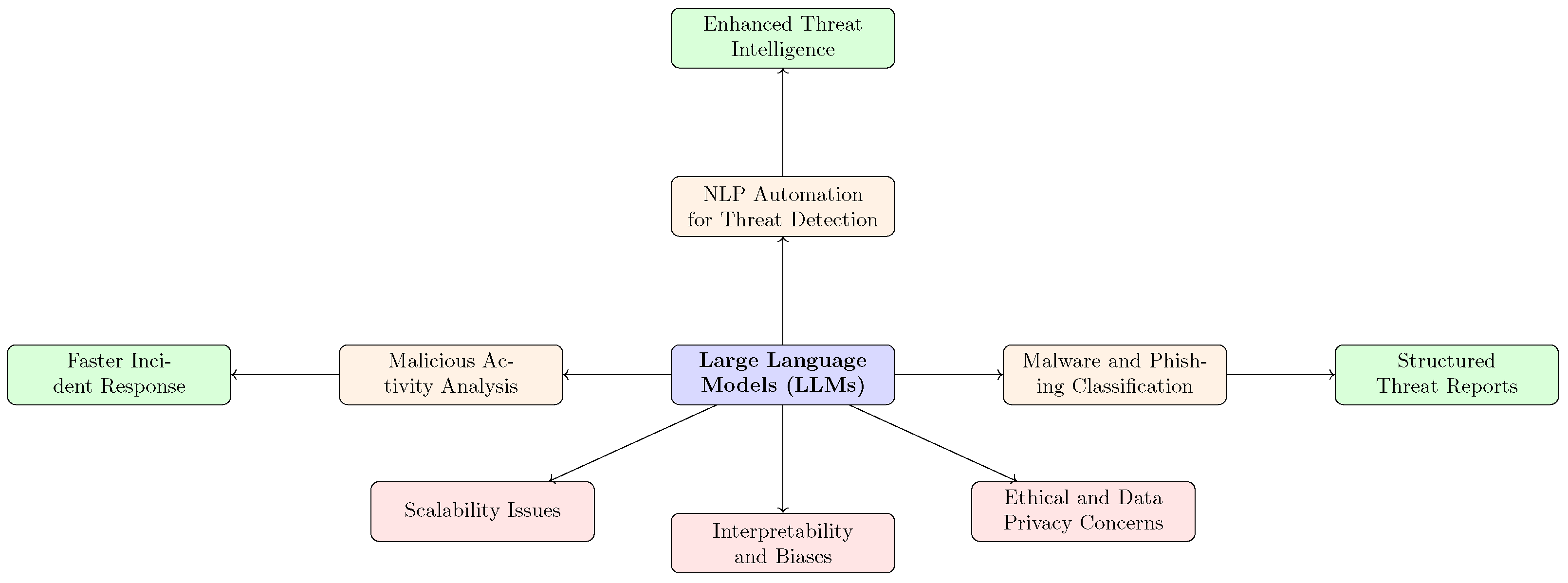

7.1. Threat Detection and Analysis

7.2. LLM Capabilities for Threat Detection/Intelligence

- Malware detection and attribution: Semantic family cues and cross-artifact reasoning.

- Vulnerability identification: Code summarization, insecure-pattern spotting, and patch rationale drafting.

- Log anomaly detection: Natural language parsing, summarization, and deviation explanations.

- Network monitoring: Protocol-aware descriptions of flows and intent; prioritization of suspicious sessions.

- Threat intelligence analysis: Entity/IOC extraction, TTP linking, and narrative synthesis for briefings.
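Entity and IOC extraction is often paired with a deterministic pass that validates or pre-fills what a model returns. The regular expressions below are an illustrative, non-exhaustive baseline (no IPv6, bare domains, or other hash types) rather than an LLM method, and the refanging rules cover only the common `hxxp` and `[.]` conventions; all names are hypothetical.

```python
import re

# Illustrative patterns only; production extractors cover many more indicator types.
IOC_PATTERNS = {
    "ipv4": re.compile(r"\b(?:(?:25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|1?\d?\d)\b"),
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
    "cve": re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE),
    "url": re.compile(r"\bhttps?://[^\s\"'<>]+", re.IGNORECASE),
}


def refang(text: str) -> str:
    """Undo common defanging conventions (hxxp, [.]) before matching."""
    return text.replace("hxxp", "http").replace("[.]", ".")


def extract_iocs(text: str) -> dict[str, list[str]]:
    """Return sorted, de-duplicated matches per indicator type."""
    clean = refang(text)
    return {kind: sorted(set(p.findall(clean))) for kind, p in IOC_PATTERNS.items()}


report = "Beacon to hxxp://evil[.]example/payload from 10.0.0[.]5 exploiting CVE-2021-44228 today"
print(extract_iocs(report))
```

Matches from such a pass can be compared against the entities an LLM returns, flagging model outputs that cite indicators absent from the source text.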

7.3. Automated Incident Response

7.4. Enhancing Security Protocols

7.5. Data Requirements and Model Tailoring Strategies

7.5.1. Empirical Analysis of Training Data Requirements

7.5.2. Fine-Tuning Strategies: Comparative Effectiveness

- Applicability: Datasets with >100,000 samples; computational budget > 100 GPU-hours.

- Performance: Achieves maximum accuracy (F1 improvement: 10–15% over baseline).

- Evidence: Studies on PLLM-CS (DARPA) and SecureFalcon demonstrate F1 scores of 0.90–0.93 on specialized cybersecurity benchmarks (UNSW-NB15 and TON_IoT).

- Limitation: Requires extensive labeled data; risk of overfitting on narrow domains.

- Applicability: Datasets with 25,000–100,000 samples; limited computational resources.

- Performance: Achieves 80–90% of the benefit of full fine-tuning at 10% of the computational cost.

- Evidence: Adapter-based approaches in malware classification maintain F1 > 0.88 while training only 5–10% of parameters.

- Advantage: Enables rapid domain adaptation; reduces overfitting risk.

- Applicability: Dynamic threat landscapes; rapidly evolving attack patterns; limited labeled data.

- Performance: Context-dependent accuracy improvement of 6–9%; lower latency penalty than full fine-tuning.

- Evidence: A total of 81% of the reviewed studies employing RAG report improved detection of zero-day threats; vector-store retrieval adds 150–300 ms latency.

- Advantage: No re-training required for new threat intelligence; maintains currency.

- Applicability: Extremely limited data (<1000 samples); exploratory use cases.

- Performance: Modest improvements (F1 of +3–5%) but enables rapid prototyping.

- Evidence: GPT-4 few-shot prompting achieves 0.85–0.87 F1 on novel phishing campaigns without training.

- Limitation: Inconsistent performance; high API costs; unsuitable for production at scale.
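The applicability thresholds listed above can be read as a simple decision rule. In the sketch below, the strategy names (full fine-tuning, parameter-efficient fine-tuning, retrieval-augmented generation, few-shot prompting) are inferred from the evidence lines, the precedence order is an assumption, and the 1000–25,000 sample band, which none of the listed thresholds covers, falls back to retrieval-augmented generation as a hypothetical default.

```python
def choose_tailoring_strategy(n_labeled: int, gpu_hours: float, dynamic_threats: bool = False) -> str:
    """Map labeled-data volume, compute budget, and threat dynamics to a starting strategy.

    Thresholds follow the applicability lines above; the precedence order and
    the fallback for the 1,000-25,000 sample band are assumptions.
    """
    if n_labeled < 1_000:
        return "few-shot prompting"
    if dynamic_threats:
        return "retrieval-augmented generation"
    if n_labeled > 100_000 and gpu_hours > 100:
        return "full fine-tuning"
    if n_labeled >= 25_000:
        return "parameter-efficient fine-tuning"
    return "retrieval-augmented generation"  # hypothetical default for the uncovered band


for case in [(500, 0), (250_000, 400), (60_000, 20), (250_000, 400, True), (10_000, 5)]:
    print(case, "->", choose_tailoring_strategy(*case))
```

These strategies are not mutually exclusive (retrieval can be layered on a fine-tuned model), so the function returns a starting point rather than a final architecture.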

7.5.3. Decision Framework for Model Tailoring

8. Advantages of Using Language Models for Cybersecurity

- Adaptive security policies: Dynamic policy updates informed by current threat intelligence and environmental context; compatible with policy-as-code pipelines and continuous compliance monitoring.

- AI-driven incident response: Automated triage, action recommendation, and draft playbook generation that reduce mean time to detect and respond.

- Proactive anomaly detection: Early identification of weak signals and emerging patterns across heterogeneous telemetry (logs, flows, code, and CTI), enabling timely mitigation.

- Natural language log analysis: Efficient parsing, summarization, and entity extraction from unstructured logs and reports to accelerate analyst workflows and knowledge transfer.

- Enhanced threat prediction: Predictive modeling that fuses multi-source context to anticipate attacker behavior and prioritize defenses.

- Semantic malware attribution: Exploitation of linguistic and structural cues in artifacts to assist family classification and provenance assessment.

9. Risk and Challenges

9.1. Misuse of Language Models by Malicious Actors

9.2. Ethical Considerations in AI Deployment

9.3. Limitations of Current Language Models

9.4. Privacy Risks and Regulatory Compliance for LLM-Driven Cybersecurity

Main Privacy Threat Vectors

- Training data memorization and extraction. Transformer models can memorize and regurgitate unique strings from their training data, such as customer identifiers, passwords, or indicators of compromise, contravening GDPR Articles 5(1)(c) and 25 (data minimization and privacy by design).

- Model inversion and membership inference. Adversaries probe perimeter-exposed LLM APIs to determine whether individual data were present in fine-tuning sets, triggering data-subject requests and potential Article 33 breach notifications.

- Prompt or context leakage. Retrieval-augmented pipelines embedding live SOC alerts enable internal incident data exfiltration across trust boundaries unless retrieval layers employ access control and confidential computing encryption.

- Shadow retention and secondary use. Telemetry uploaded to third-party model providers for improvement violates GDPR purpose-limitation principles and AI Act record-keeping duties.

Legal Ramifications

- Under GDPR, lawful-basis analysis typically relies on legitimate interests (Art. 6(1)(f)), balanced against residual risk. When special-category data appear in forensic payloads, explicit consent or substantial public interest exceptions (Art. 9(2)) become necessary. Data Protection Impact Assessments (Art. 35) are compulsory where high-throughput LLM-based monitoring of employees meets the systematic monitoring criterion.

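- Under the AI Act (Regulation (EU) 2024/1689), cybersecurity applications can fall within the high-risk regime, for example when they serve as safety components of critical digital infrastructure (Annex III, point 2). Providers of such systems must (i) implement state-of-the-art data governance measures, (ii) keep technical documentation for ten years and retain automatically generated logs for at least six months, and (iii) issue plain-language transparency information; these obligations dovetail with GDPR Art. 13/14 information duties.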

Mitigation and Design Patterns

- (1) Differentially private fine-tuning. Training with DP-SGD (per-example gradient clipping plus calibrated Gaussian noise) reduces membership inference success by >90% without materially degrading scores on intrusion detection benchmarks.

- (2) Federated learning and split-layer inference. Privacy-critical log lines remain on premises; only encrypted weight deltas are shared, satisfying AI Act traceability while enabling inter-organizational threat hunting.

- (3) Access-controlled vector stores. Coupling Retrieval-Augmented Generation with attribute-based encryption enforces GDPR data minimization at query time (Supplementary Figure S4).

- (4) Policy-and-context filters. Upstream red-teaming pipelines simulating adversarial prompts reduce privacy-violating completions; deployers must document these tests in AI Act technical files.

- (5)
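The differentially private fine-tuning pattern listed above rests on the DP-SGD update: clip each example's gradient to a maximum L2 norm, sum the clipped gradients, add Gaussian noise scaled to that norm, and average. The sketch below shows one such step on plain Python lists; it omits privacy accounting (tracking the ε, δ budget), which production libraries such as Opacus for PyTorch handle, and all function and parameter names are our own.

```python
import math
import random


def clip_to_norm(grad: list[float], max_norm: float) -> list[float]:
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One DP-SGD update: clip per example, sum, add Gaussian noise with
    standard deviation noise_multiplier * max_norm, then average over the batch."""
    clipped = [clip_to_norm(g, max_norm) for g in per_example_grads]
    summed = [sum(column) for column in zip(*clipped)]
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    batch_size = len(per_example_grads)
    return [p - lr * g / batch_size for p, g in zip(params, noisy)]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.0, 0.5]]   # hypothetical per-example gradients
print(dp_sgd_step([0.0, 0.0], grads, lr=0.1, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

The noise multiplier sets the privacy/utility trade-off: larger values give stronger guarantees for the same number of steps but noisier updates.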

9.5. Prompt Injection and Hallucination: Threat Model and Mitigation

10. Security Gaps and Open Issues

11. Case Studies of LLMs in Action

11.1. Successful Implementations in Cybersecurity Firms

11.2. Comparative Analysis of Different Approaches

11.3. LLMs in the Medical and Healthcare Sector

11.4. LLMs in the Decision-Making Sector

11.5. LLMs in the Education Sector

11.6. LLMs in the Tourism Sector

11.7. LLMs for Cybersecurity in Various Sectors

12. The Future Language Models in Cybersecurity

12.1. Emerging Trends and Technologies

12.2. The Evolving Nature of Cyber Threats

13. Conclusions

13.1. Concrete Findings and Actionable Recommendations

13.2. Research Gaps Requiring Immediate Attention

- Bursty traffic patterns (simulating DDoS and coordinated attacks);

- Data drift scenarios (temporal distribution shifts in threat patterns);

- Resource contention (multi-tenant cloud environments).

- Hybrid architectures with interpretable first-stage classifiers;

- Constrained generation techniques enforcing structured, auditable outputs;

- Post hoc explanation methods specifically designed for security contexts.

- Model drift and re-training frequency requirements;

- Analyst skill development and trust calibration over time;

- Total cost of ownership, including maintenance and updates.
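The data drift scenarios and re-training questions above presuppose a way to detect distribution shift. One widely used statistic is the Population Stability Index (PSI); the sketch below bins a reference and a current sample of a single numeric feature on a shared range and sums (a − e)·ln(a/e) over the bins. PSI is our choice of example, the equal-width binning and the ε floor for empty bins are assumptions, and the often-quoted reading (below 0.1 stable, above 0.25 substantial shift) is an industry rule of thumb rather than a formal test.

```python
import math


def psi(reference: list[float], current: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of one numeric feature, using equal-width bins on a shared range."""
    lo = min(min(reference), min(current))
    hi = max(max(reference), max(current))
    width = (hi - lo) / bins or 1.0   # guard against a zero-width range

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference = [i / 100 for i in range(100)]        # hypothetical reference-period feature values
shifted = [0.9 + i / 1000 for i in range(100)]  # hypothetical values after a drift
print(psi(reference, reference), round(psi(reference, shifted), 2))
```

Tracking PSI per feature over rolling windows gives one concrete trigger for the re-training frequency question, though thresholds would need calibration against each deployment's tolerance for false alarms.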

13.3. Future Directions

- Supplementary Table S1: PRISMA-2020 checklist documenting all reporting items.

- Supplementary Table S2a: Characteristics of all 235 included studies, including task domains, datasets, model families, evaluation protocols, and deployment settings.

- Supplementary Table S2b: Outcome harmonization assumptions and measurement rules, including detailed latency normalization procedures, subgroup stratification criteria (real-time vs. batch; cloud API vs. self-hosted), and sensitivity analysis specifications (Row 3: Latency subgroup stratification).

- Supplementary Table S2c: Full-text exclusion reasons for all 177 studies excluded during screening, with per-article justifications.

- Supplementary Table S3: Risk-of-bias ratings for all 68 comparative/experimental studies, with domain-level judgments and supporting rationale.

- Supplementary Note S1: Full verbatim electronic search strategies with Boolean logic, field specifications, and date filters for all databases (Scopus, Web of Science, IEEE Xplore, ACM Digital Library, and arXiv).

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| ACL | Association for Computational Linguistics |
| AI | Artificial Intelligence |
| AIS | Automatic Identification System |
| API | Application Programming Interface |
| APT | Advanced Persistent Threat |
| AUC | Area Under the Curve |
| BERT | Bidirectional Encoder Representations from Transformers |
| BIP | Block Interaction Protocol |
| BPMN | Business Process Model and Notation |
| CI | Confidence Interval |
| CSF | Cybersecurity Framework (NIST CSF 2.0) |
| CTI | Cyber Threat Intelligence |
| CVSS | Common Vulnerability Scoring System |
| DL | Deep Learning |
| DOI | Digital Object Identifier |
| DP-SGD | Differentially Private Stochastic Gradient Descent |
| EDR | Endpoint Detection and Response |
| EHR | Electronic Health Records |
| F1 | F1 score (harmonic mean of precision and recall) |
| GNN | Graph Neural Network |
| GPU | Graphics Processing Unit |
| GRADE | Grading of Recommendations, Assessment, Development and Evaluation |
| HIPAA | Health Insurance Portability and Accountability Act |
| IDPS | Intrusion Detection and Prevention System |
| IoMT | Internet of Medical Things |
| IoT | Internet of Things |
| LLM | Large Language Model |
| ML | Machine Learning |
| NER | Named-Entity Recognition |
| NIS | Network and Information Systems |
| NIST | National Institute of Standards and Technology |
| NLP | Natural Language Processing |
| NMEA | National Marine Electronics Association |
| OT | Operational Technology |
| PQ-LLM | Post-Quantum Large Language Model |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PROSPERO | International Prospective Register of Systematic Reviews |
| RAG | Retrieval-Augmented Generation |
| RoB | Risk of Bias |
| SBOM | Software Bill of Materials |
| SCADA | Supervisory Control and Data Acquisition |
| SIEM | Security Information and Event Management |
| SOAR | Security Orchestration, Automation and Response |
| SOC | Security Operations Center |
| STIX | Structured Threat Information Expression |
| TPU | Tensor Processing Unit |
| XAI | Explainable Artificial Intelligence |

References

- Gelman, H.; Hastings, J.D. Scalable and Ethical Insider Threat Detection through Data Synthesis and Analysis by LLMs. arXiv 2025, arXiv:2502.07045.

- Portnoy, A.; Azikri, E.; Kels, S. Towards Automatic Hands-on-Keyboard Attack Detection Using LLMs in EDR Solutions. arXiv 2024, arXiv:2408.01993.

- Diakhame, M.L.; Diallo, C.; Mejri, M. MCM-Llama: A Fine-Tuned Large Language Model for Real-Time Threat Detection through Security Event Correlation. In Proceedings of the 2024 International Conference on Electrical, Computer and Energy Technologies (ICECET), Sydney, Australia, 25–27 July 2024; pp. 1–6.

- Mudassar Yamin, M.; Hashmi, E.; Ullah, M.; Katt, B. Applications of LLMs for Generating Cyber Security Exercise Scenarios. IEEE Access 2024, 12, 143806–143822.

- Kwan, W.C.; Zeng, X.; Jiang, Y.; Wang, Y.; Li, L.; Shang, L.; Jiang, X.; Liu, Q.; Wong, K.F. MT-Eval: A multi-turn capabilities evaluation benchmark for large language models. arXiv 2024, arXiv:2401.16745.

- Xu, H.; Wang, S.; Li, N.; Wang, K.; Zhao, Y.; Chen, K.; Yu, T.; Liu, Y.; Wang, H. Large language models for cyber security: A systematic literature review. arXiv 2024, arXiv:2405.04760.

- Sahoo, P.; Singh, A.K.; Saha, S.; Jain, V.; Mondal, S.; Chadha, A. A systematic survey of prompt engineering in large language models: Techniques and applications. arXiv 2024, arXiv:2402.07927.

- Chen, Y.; Cui, M.; Wang, D.; Cao, Y.; Yang, P.; Jiang, B.; Lu, Z.; Liu, B. A survey of large language models for cyber threat detection. Comput. Secur. 2024, 145, 104016.

- Ali, T.; Kostakos, P. HuntGPT: Integrating machine learning-based anomaly detection and explainable AI with large language models (LLMs). arXiv 2023, arXiv:2309.16021.

- Zaboli, A.; Choi, S.L.; Song, T.J.; Hong, J. ChatGPT and other large language models for cybersecurity of smart grid applications. In Proceedings of the 2024 IEEE Power & Energy Society General Meeting (PESGM), Seattle, WA, USA, 21–25 July 2024; pp. 1–5.

- Omar, M.; Zangana, H.M.; Al-Karaki, J.N.; Mohammed, D. Harnessing LLMs for IoT Malware Detection: A Comparative Analysis of BERT and GPT-2. In Proceedings of the 2024 8th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkiye, 7–9 November 2024; pp. 1–6.

- Güven, M. A Comprehensive Review of Large Language Models in Cyber Security. Int. J. Comput. Exp. Sci. Eng. 2024, 10.

- Gholami, N.Y. Large Language Models (LLMs) for Cybersecurity: A Systematic Review. World J. Adv. Eng. Technol. Sci. 2024, 13, 57–69.

- Zhang, J.; Bu, H.; Wen, H.; Liu, Y.; Fei, H.; Xi, R.; Li, L.; Yang, Y.; Zhu, H.; Meng, D. When LLMs meet cybersecurity: A systematic literature review. Cybersecurity 2025, 8, 1–41.

- Wan, S.; Nikolaidis, C.; Song, D.; Molnar, D.; Crnkovich, J.; Grace, J.; Bhatt, M.; Chennabasappa, S.; Whitman, S.; Ding, S.; et al. CyberSecEval 3: Advancing the evaluation of cybersecurity risks and capabilities in large language models. arXiv 2024, arXiv:2408.01605.

- Bhatt, M.; Chennabasappa, S.; Li, Y.; Nikolaidis, C.; Song, D.; Wan, S.; Ahmad, F.; Aschermann, C.; Chen, Y.; Kapil, D.; et al. CyberSecEval 2: A wide-ranging cybersecurity evaluation suite for large language models. arXiv 2024, arXiv:2404.13161.

- Nguyen, T.; Nguyen, H.; Ijaz, A.; Sheikhi, S.; Vasilakos, A.V.; Kostakos, P. Large language models in 6G security: Challenges and opportunities. arXiv 2024, arXiv:2403.12239.

- Lorencin, I.; Tankovic, N.; Etinger, D. Optimizing Healthcare Efficiency with Local Large Language Models. Intell. Hum. Syst. Integr. (IHSI 2025) Integr. People Intell. Syst. 2025, 160, 576–584.

- Nagaraja, N.; Bahşi, H. Cyber Threat Modeling of an LLM-Based Healthcare System. In Proceedings of the 11th International Conference on Information Systems Security and Privacy (ICISSP 2025), Porto, Portugal, 20–22 February 2025; pp. 325–336.

- Karras, A.; Giannaros, A.; Karras, C.; Giotopoulos, K.C.; Tsolis, D.; Sioutas, S. Edge Artificial Intelligence in Large-Scale IoT Systems, Applications, and Big Data Infrastructures. In Proceedings of the 2023 8th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), Piraeus, Greece, 10–12 November 2023; pp. 1–8.

- Terawi, N.; Ashqar, H.I.; Darwish, O.; Alsobeh, A.; Zahariev, P.; Tashtoush, Y. Enhanced Detection of Intrusion Detection System in Cloud Networks Using Time-Aware and Deep Learning Techniques. Computers 2025, 14, 282.

- Schizas, N.; Karras, A.; Karras, C.; Sioutas, S. TinyML for ultra-low power AI and large scale IoT deployments: A systematic review. Future Internet 2022, 14, 363.

- Harasees, A.; Al-Ahmad, B.; Alsobeh, A.; Abuhussein, A. A secure IoT framework for remote health monitoring using fog computing. In Proceedings of the 2024 International Conference on Intelligent Computing, Communication, Networking and Services (ICCNS), Dubrovnik, Croatia, 24–27 September 2024; pp. 17–24.

- Alshattnawi, S.; AlSobeh, A.M. A cloud-based IoT smart water distribution framework utilising BIP component: Jordan as a model. Int. J. Cloud Comput. 2024, 13, 25–41.

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization. ICISSP 2018, 1, 108–116.

- Order of the Overflow. DEF CON Capture the Flag 2019 Dataset. 2019. Available online: https://oooverflow.io/dc-ctf-2019-finals/ (accessed on 27 October 2025).

- Fontugne, R.; Fukuda, K.; Akiba, S. MAWILab: Combining Diverse Anomaly Detectors for Automated Anomaly Labeling and Performance Benchmarking. In Proceedings of the Symposium on Recent Advances in Intrusion Detection (RAID), Ottawa, ON, Canada, 15–17 September 2010.

- Lippmann, R.P.; Haines, J.W.; Fried, D.J.; Korba, J.; Das, K. The 1999 DARPA Off-Line Intrusion Detection Evaluation. In Proceedings of the DARPA Information Survivability Conference and Exposition (DISCEX), Hilton Head, SC, USA, 25–27 January 2000.

- Sagiroglu, S.; Sinanc, D. Big data: A review. In Proceedings of the 2013 International Conference on Collaboration Technologies and Systems (CTS), San Diego, CA, USA, 20–24 May 2013; pp. 42–47.

- Naeem, M.; Jamal, T.; Diaz-Martinez, J.; Butt, S.A.; Montesano, N.; Tariq, M.I.; De-la Hoz-Franco, E.; De-La-Hoz-Valdiris, E. Trends and future perspective challenges in big data. In Advances in Intelligent Data Analysis and Applications, Proceeding of the Sixth Euro-China Conference on Intelligent Data Analysis and Applications, Arad, Romania, 15–18 October 2019; Springer: Singapore, 2022; pp. 309–325.

- Deepa, N.; Pham, Q.V.; Nguyen, D.C.; Bhattacharya, S.; Prabadevi, B.; Gadekallu, T.R.; Maddikunta, P.K.R.; Fang, F.; Pathirana, P.N. A survey on blockchain for big data: Approaches, opportunities, and future directions. Future Gener. Comput. Syst. 2022, 131, 209–226.

- Han, X.; Gstrein, O.J.; Andrikopoulos, V. When we talk about Big Data, What do we really mean? Toward a more precise definition of Big Data. Front. Big Data 2024, 7, 1441869.

- Hassan, A.A.; Hassan, T.M. Real-time big data analytics for data stream challenges: An overview. Eur. J. Inf. Technol. Comput. Sci. 2022, 2, 1–6.

- Abawajy, J. Comprehensive analysis of big data variety landscape. Int. J. Parallel Emergent Distrib. Syst. 2015, 30, 5–14.

- Mao, R.; Xu, H.; Wu, W.; Li, J.; Li, Y.; Lu, M. Overcoming the challenge of variety: Big data abstraction, the next evolution of data management for AAL communication systems. IEEE Commun. Mag. 2015, 53, 42–47.

- Pendyala, V. Veracity of big data. In Machine Learning and Other Approaches to Verifying Truthfulness; Apress: New York, NY, USA, 2018.

- Berti-Equille, L.; Borge-Holthoefer, J. Veracity of Data; Springer Nature: Berlin, Germany, 2022.

- Tahseen, A.; Shailaja, S.R.; Ashwini, Y. Extraction for Big Data Cyber Security Analytics. In Advances in Computational Intelligence and Informatics, Proceedings of ICACII 2023; Springer Nature: Berlin, Germany, 2024; Volume 993, p. 365.

- Vernik, G.; Factor, M.; Kolodner, E.K.; Ofer, E.; Michiardi, P.; Pace, F. Stocator: An object store aware connector for Apache Spark. In Proceedings of the 2017 Symposium on Cloud Computing, Santa Clara, CA, USA, 24–27 September 2017; p. 653.

- Rupprecht, L.; Zhang, R.; Owen, B.; Pietzuch, P.; Hildebrand, D. SwiftAnalytics: Optimizing Object Storage for Big Data Analytics. In Proceedings of the 2017 IEEE International Conference on Cloud Engineering (IC2E), Vancouver, BC, Canada, 4–7 April 2017; pp. 245–251.

- Baek, S.; Kim, Y.G. C4I system security architecture: A perspective on big data lifecycle in a military environment. Sustainability 2021, 13, 13827.

- Al-Kateb, M.; Eltabakh, M.Y.; Al-Omari, A.; Brown, P.G. Analytics at Scale: Evolution at Infrastructure and Algorithmic Levels. In Proceedings of the 2022 IEEE 38th International Conference on Data Engineering (ICDE), Kuala Lumpur, Malaysia, 9–12 May 2022; pp. 3217–3220.

- de Sousa, V.M.; Cura, L.M.d.V. Logical design of graph databases from an entity-relationship conceptual model. In Proceedings of the 20th International Conference on Information Integration and Web-Based Applications & Services, Yogyakarta, Indonesia, 19–21 November 2018; pp. 183–189.

- Thepa, T.; Ateetanan, P.; Khubpatiwitthayakul, P.; Fugkeaw, S. Design and Development of Scalable SIEM as a Service using Spark and Anomaly Detection. In Proceedings of the 2024 21st International Joint Conference on Computer Science and Software Engineering (JCSSE), Phuket, Thailand, 19–22 June 2024; pp. 199–205.

- Alawadhi, R.; Aalmohamed, H.; Alhashemi, S.; Alkhazaleh, H.A. Application of Big Data in Cybersecurity. In Proceedings of the 2024 7th International Conference on Signal Processing and Information Security (ICSPIS), Online, 12–14 November 2024; pp. 1–6.

- Udeh, E.O.; Amajuoyi, P.; Adeusi, K.B.; Scott, A.O. The role of big data in detecting and preventing financial fraud in digital transactions. World J. Adv. Res. Rev. 2024, 22, 1746–1760.

- Li, L.; Qiang, F.; Ma, L. Advancing Cybersecurity: Graph Neural Networks in Threat Intelligence Knowledge Graphs. In Proceedings of the International Conference on Algorithms, Software Engineering, and Network Security, Nanchang, China, 26–28 April 2024; pp. 737–741.

- Gulbay, B.; Demirci, M. A Framework for Developing Strategic Cyber Threat Intelligence from Advanced Persistent Threat Analysis Reports Using Graph-Based Algorithms. Preprints 2024.

- Rabzelj, M.; Bohak, C.; Južnič, L.Š.; Kos, A.; Sedlar, U. Cyberattack graph modeling for visual analytics. IEEE Access 2023, 11, 86910–86944.

- Wang, C.; Li, Y.; Liu, L. Algorithm Innovation and Integration with Big Data Technology in the Field of Information Security: Current Status and Future Development. Acad. J. Eng. Technol. Sci. 2024, 7, 45–49.

- Artioli, P.; Maci, A.; Magrì, A. A comprehensive investigation of clustering algorithms for User and Entity Behavior Analytics. Front. Big Data 2024, 7, 1375818.

- Wang, J.; Yan, T.; An, D.; Liang, Z.; Guo, C.; Hu, H.; Luo, Q.; Li, H.; Wang, H.; Zeng, S.; et al. A comprehensive security operation center based on big data analytics and threat intelligence. In Proceedings of the International Symposium on Grids & Clouds, Taipei, Taiwan, 22–26 March 2021; Volume 2021.

- Bharani, D.; Lakshmi Priya, V.; Saravanan, S. Adaptive Real-Time Malware Detection for IoT Traffic Streams: A Comparative Study of Concept Drift Detection Techniques. In Proceedings of the 2024 International Conference on IoT Based Control Networks and Intelligent Systems (ICICNIS), Bengaluru, India, 17–18 December 2024; pp. 172–179.

- K, S.; K S, N.; S, P.; S P, M.; Saranya. Analysis, Trends, and Utilization of Security Information and Event Management (SIEM) in Critical Infrastructures. In Proceedings of the 2024 10th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 14–15 March 2024; Volume 1, pp. 1980–1984.

- Saipranith, S.; Singh, A.K.; Agrawal, N.; Chilumula, S. SwiftFrame: Developing Low-latency Near Real-time Response Framework. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kamand, India, 24–28 June 2024; pp. 1–6.

- Polepaka, S.; Bansal, S.; Al-Fatlawy, R.R.; Subburam, S.; Lakra, P.P.; Neyyila, S. Cloud-Based Marketing Analytics Using Apache Flink for Real-Time Data Insights. In Proceedings of the 2024 International Conference on IoT, Communication and Automation Technology (ICICAT), Gorakhpur, India, 23–24 November 2024; pp. 1308–1313.

- Kanka, V. Scaling Big Data: Leveraging LLMs for Enterprise Success; Libertatem Media Private Limited: New Delhi, India, 2024.

- Andrés, P.; Nikolai, I.; Zhihao, W. Real-Time AI-Based Threat Intelligence for Cloud Security Enhancement. Innov. Int. Multi-Discip. J. Appl. Technol. 2025, 3, 36–54.

- Chitimoju, S. Enhancing Cyber Threat Intelligence with NLP and Large Language Models. J. Big Data Smart Syst. 2025, 6. Available online: https://universe-publisher.com/index.php/jbds/article/view/80 (accessed on 27 October 2025).

- Tanksale, V. Cyber Threat Hunting Using Large Language Models. In Proceedings of the International Congress on Information and Communication Technology, London, UK, 19–22 February 2024; pp. 629–641.

- Wu, Y. The Role of Mining and Detection of Big Data Processing Techniques in Cybersecurity. Appl. Math. Nonlinear Sci. 2024, 9.

- Nugroho, S.A.; Sumaryanto, S.; Hadi, A.P. The Enhancing Cybersecurity with AI Algorithms and Big Data Analytics: Challenges and Solutions. J. Technol. Inform. Eng. 2024, 3, 279–295.

- Ameedeen, M.A.; Hamid, R.A.; Aldhyani, T.H.; Al-Nassr, L.A.K.M.; Olatunji, S.O.; Subramanian, P. A framework for automated big data analytics in cybersecurity threat detection. Mesopotamian J. Big Data 2024, 2024, 175–184.

- Nwobodo, L.K.; Nwaimo, C.S.; Adegbola, A.E. Enhancing cybersecurity protocols in the era of big data and advanced analytics. GSC Adv. Res. Rev. 2024, 19, 203–214.

- Hasan, M.; Hoque, A.; Le, T. Big data-driven banking operations: Opportunities, challenges, and data security perspectives. FinTech 2023, 2, 484–509.

- Sufi, F.; Alsulami, M. Mathematical Modeling and Clustering Framework for Cyber Threat Analysis Across Industries. Mathematics 2025, 13, 655.

- Chinta, P.C.R.; Jha, K.M.; Velaga, V.; Moore, C.; Routhu, K.; Sadaram, G. Harnessing Big Data and AI-Driven ERP Systems to Enhance Cybersecurity Resilience in Real-Time Threat Environments. SSRN Electron. J. 2024.

- Jagadeesan, D.; Kartheesan, L.; Purushotham, B.; Krishna, S.T.; Kumar, S.N.; Asha, G. Data Analytics Techniques for Privacy Protection in Cybersecurity for Leveraging Machine Learning for Advanced Threat Detection. In Proceedings of the 2024 5th IEEE Global Conference for Advancement in Technology (GCAT), Bangalore, India, 4–6 October 2024; pp. 1–6.

- Singh, R.; Aravindan, V.; Mishra, S.; Singh, S.K. Streamlined Data Pipeline for Real-Time Threat Detection and Model Inference. In Proceedings of the 2025 17th International Conference on COMmunication Systems and NETworks (COMSNETS), Bengaluru, India, 6–10 January 2025; pp. 1148–1153.

- Dewasiri, N.J.; Dharmarathna, D.G.; Choudhary, M. Leveraging artificial intelligence for enhanced risk management in banking: A systematic literature review. In Artificial Intelligence Enabled Management: An Emerging Economy Perspective; Walter de Gruyter GmbH & Co. KG: Berlin, Germany, 2024; pp. 197–213.

- Moharrak, M.; Mogaji, E. Generative AI in banking: Empirical insights on integration, challenges and opportunities in a regulated industry. Int. J. Bank Mark. 2025, 43, 871–896.

- Fernandez, J.; Wehrstedt, L.; Shamis, L.; Elhoushi, M.; Saladi, K.; Bisk, Y.; Strubell, E.; Kahn, J. Hardware Scaling Trends and Diminishing Returns in Large-Scale Distributed Training. arXiv 2024, arXiv:2411.13055.

- Tang, Z.; Kang, X.; Yin, Y.; Pan, X.; Wang, Y.; He, X.; Wang, Q.; Zeng, R.; Zhao, K.; Shi, S.; et al. FusionLLM: A decentralized LLM training system on geo-distributed GPUs with adaptive compression. arXiv 2024, arXiv:2410.12707.

- Yang, F.; Peng, S.; Sun, N.; Wang, F.; Wang, Y.; Wu, F.; Qiu, J.; Pan, A. Holmes: Towards distributed training across clusters with heterogeneous NIC environment. In Proceedings of the 53rd International Conference on Parallel Processing, Gotland, Sweden, 12–15 August 2024; pp. 514–523.

- Chen, Z.; Shao, H.; Li, Y.; Lu, H.; Jin, J. Policy-Based Access Control System for Delta Lake. In Proceedings of the 2022 Tenth International Conference on Advanced Cloud and Big Data (CBD), Guilin, China, 4–5 November 2022; pp. 60–65.

- Tang, S.; He, B.; Yu, C.; Li, Y.; Li, K. A Survey on Spark Ecosystem: Big Data Processing Infrastructure, Machine Learning, and Applications (Extended abstract). In Proceedings of the 2023 IEEE 39th International Conference on Data Engineering (ICDE), Anaheim, CA, USA, 3–7 April 2023; pp. 3779–3780.

- Douillard, A.; Feng, Q.; Rusu, A.A.; Chhaparia, R.; Donchev, Y.; Kuncoro, A.; Ranzato, M.; Szlam, A.; Shen, J. DiLoCo: Distributed low-communication training of language models. arXiv 2023, arXiv:2311.08105.

- Li, J.; Hui, B.; Qu, G.; Yang, J.; Li, B.; Li, B.; Wang, B.; Qin, B.; Geng, R.; Huo, N.; et al. Can LLM already serve as a database interface? A big bench for large-scale database grounded text-to-SQLs. Adv. Neural Inf. Process. Syst. 2023, 36, 42330–42357.

- Crawford, J.M.; Penberthy, L.; Pinto, L.A.; Althoff, K.N.; Assimon, M.M.; Cohen, O.; Gillim, L.; Hammonds, T.L.; Kapur, S.; Kaufman, H.W.; et al. Coronavirus Disease 2019 (COVID-19) Real World Data Infrastructure: A Big-Data Resource for Study of the Impact of COVID-19 in Patient Populations With Immunocompromising Conditions. Open Forum Infect. Dis. 2025, 12, ofaf021.

- Levandoski, J.; Casto, G.; Deng, M.; Desai, R.; Edara, P.; Hottelier, T.; Hormati, A.; Johnson, A.; Johnson, J.; Kurzyniec, D.; et al. BigLake: BigQuery’s Evolution toward a Multi-Cloud Lakehouse. In Proceedings of the Companion of the 2024 International Conference on Management of Data, Santiago, Chile, 9–15 June 2024; pp. 334–346.

- Stankov, I.; Dulgerov, E. Comparing Azure Sentinel and ML-Extended Solutions Applied to a Zero Trust Architecture. In Proceedings of the 2024 32nd National Conference with International Participation (TELECOM), Sofia, Bulgaria, 21–22 November 2024; pp. 1–4.

- Morić, Z.; Dakić, V.; Kapulica, A.; Regvart, D. Forensic Investigation Capabilities of Microsoft Azure: A Comprehensive Analysis and Its Significance in Advancing Cloud Cyber Forensics. Electronics 2024, 13, 4546.

- Borra, P. Securing Cloud Infrastructure: An In-Depth Analysis of Microsoft Azure Security. Int. J. Adv. Res. Sci. Commun. Technol. (IJARSCT) 2024, 4, 549–555.

- Tuyishime, E.; Balan, T.C.; Cotfas, P.A.; Cotfas, D.T.; Rekeraho, A. Enhancing cloud security—Proactive threat monitoring and detection using a SIEM-based approach. Appl. Sci. 2023, 13, 12359.

- Shah, S.; Parast, F.K. AI-Driven Cyber Threat Intelligence Automation. arXiv 2024, arXiv:2410.20287.

- Hassanin, M.; Keshk, M.; Salim, S.; Alsubaie, M.; Sharma, D. PLLM-CS: Pre-trained large language model (LLM) for cyber threat detection in satellite networks. Ad Hoc Netw. 2025, 166, 103645.

- Jing, P.; Tang, M.; Shi, X.; Zheng, X.; Nie, S.; Wu, S.; Yang, Y.; Luo, X. SecBench: A Comprehensive Multi-Dimensional Benchmarking Dataset for LLMs in Cybersecurity. arXiv 2024, arXiv:2412.20787.

- Marantos, C.; Evangelatos, S.; Veroni, E.; Lalas, G.; Chasapas, K.; Christou, I.T.; Lappas, P. Leveraging Large Language Models for Dynamic Scenario Building targeting Enhanced Cyber-threat Detection and Security Training. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 2779–2788.

- Ferrag, M.A.; Alwahedi, F.; Battah, A.; Cherif, B.; Mechri, A.; Tihanyi, N.; Bisztray, T.; Debbah, M. Generative AI in Cybersecurity: A Comprehensive Review of LLM Applications and Vulnerabilities. Internet Things Cyber-Phys. Syst. 2025, 5, 1–46.

- Kasri, W.; Himeur, Y.; Alkhazaleh, H.A.; Tarapiah, S.; Atalla, S.; Mansoor, W.; Al-Ahmad, H. From Vulnerability to Defense: The Role of Large Language Models in Enhancing Cybersecurity. Computation 2025, 13, 30.

- da Silva, F.A. Navigating the dual-edged sword of generative AI in cybersecurity. Braz. J. Dev. 2025, 11, e76869.

- Motlagh, F.N.; Hajizadeh, M.; Majd, M.; Najafi, P.; Cheng, F.; Meinel, C. Large language models in cybersecurity: State-of-the-art. arXiv 2024, arXiv:2402.00891.

- Pan, Z.; Liu, J.; Dai, Y.; Fan, W. Large Language Model-enabled Vulnerability Investigation: A Review. In Proceedings of the 2024 International Conference on Intelligent Computing and Next Generation Networks (ICNGN), Bangkok, Thailand, 23–25 November 2024; pp. 1–5.

- Bai, G.; Chai, Z.; Ling, C.; Wang, S.; Lu, J.; Zhang, N.; Shi, T.; Yu, Z.; Zhu, M.; Zhang, Y.; et al. Beyond efficiency: A systematic survey of resource-efficient large language models. arXiv 2024, arXiv:2401.00625.

- Xu, M.; Cai, D.; Yin, W.; Wang, S.; Jin, X.; Liu, X. Resource-efficient algorithms and systems of foundation models: A survey. ACM Comput. Surv. 2025, 57, 1–39.

- Liu, J.; Liao, Y.; Xu, H.; Xu, Y. Resource-Efficient Federated Fine-Tuning Large Language Models for Heterogeneous Data. arXiv 2025, arXiv:2503.21213.

- Theodorakopoulos, L.; Karras, A.; Theodoropoulou, A.; Kampiotis, G. Benchmarking Big Data Systems: Performance and Decision-Making Implications in Emerging Technologies. Technologies 2024, 12, 217.

- Theodorakopoulos, L.; Karras, A.; Krimpas, G.A. Optimizing Apache Spark MLlib: Predictive Performance of Large-Scale Models for Big Data Analytics. Algorithms 2025, 18, 74.

- Karras, C.; Theodorakopoulos, L.; Karras, A.; Krimpas, G.A. Efficient algorithms for range mode queries in the big data era. Information 2024, 15, 450.

- Lin, Z.; Cui, J.; Liao, X.; Wang, X. Malla: Demystifying real-world large language model integrated malicious services. In Proceedings of the 33rd USENIX Security Symposium (USENIX Security 24), Philadelphia, PA, USA, 14–16 August 2024; pp. 4693–4710.

- Charan, P.; Chunduri, H.; Anand, P.M.; Shukla, S.K. From text to MITRE techniques: Exploring the malicious use of large language models for generating cyber attack payloads. arXiv 2023, arXiv:2305.15336.

- Clairoux-Trepanier, V.; Beauchamp, I.M.; Ruellan, E.; Paquet-Clouston, M.; Paquette, S.O.; Clay, E. The use of large language models (LLM) for cyber threat intelligence (CTI) in cybercrime forums. arXiv 2024, arXiv:2408.03354.

- Majumdar, D.; Arjun, S.; Boyina, P.; Rayidi, S.S.P.; Sai, Y.R.; Gangashetty, S.V. Beyond text: Nefarious actors harnessing LLMs for strategic advantage. In Proceedings of the 2024 International Conference on Intelligent Systems for Cybersecurity (ISCS), Gurugram, India, 3–4 May 2024; pp. 1–7.

- Zhao, S.; Jia, M.; Tuan, L.A.; Pan, F.; Wen, J. Universal vulnerabilities in large language models: Backdoor attacks for in-context learning. arXiv 2024, arXiv:2401.05949.

- Zhou, Y.; Ni, T.; Lee, W.B.; Zhao, Q. A Survey on Backdoor Threats in Large Language Models (LLMs): Attacks, Defenses, and Evaluations. arXiv 2025, arXiv:2502.05224.

- Yang, H.; Xiang, K.; Ge, M.; Li, H.; Lu, R.; Yu, S. A comprehensive overview of backdoor attacks in large language models within communication networks. IEEE Netw. 2024, 38, 211–218.

- Ge, H.; Li, Y.; Wang, Q.; Zhang, Y.; Tang, R. When Backdoors Speak: Understanding LLM Backdoor Attacks Through Model-Generated Explanations. arXiv 2024, arXiv:2411.12701.

- Li, Y.; Xu, Z.; Jiang, F.; Niu, L.; Sahabandu, D.; Ramasubramanian, B.; Poovendran, R. CleanGen: Mitigating backdoor attacks for generation tasks in large language models. arXiv 2024, arXiv:2406.12257.

- Trad, F.; Chehab, A. Prompt engineering or fine-tuning? A case study on phishing detection with large language models. Mach. Learn. Knowl. Extr. 2024, 6, 367–384.

- Asfour, M.; Murillo, J.C. Harnessing large language models to simulate realistic human responses to social engineering attacks: A case study. Int. J. Cybersecur. Intell. Cybercrime 2023, 6, 21–49.

- Roy, S.S.; Thota, P.; Naragam, K.V.; Nilizadeh, S. From chatbots to phishbots?: Phishing scam generation in commercial large language models. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; pp. 36–54.

- Ai, L.; Kumarage, T.; Bhattacharjee, A.; Liu, Z.; Hui, Z.; Davinroy, M.; Cook, J.; Cassani, L.; Trapeznikov, K.; Kirchner, M.; et al. Defending against social engineering attacks in the age of LLMs. arXiv 2024, arXiv:2406.12263.

- Jamal, S.; Wimmer, H.; Sarker, I.H. An improved transformer-based model for detecting phishing, spam and ham emails: A large language model approach. Secur. Priv. 2024, 7, e402.

- Malloy, T.; Ferreira, M.J.; Fang, F.; Gonzalez, C. Training Users Against Human and GPT-4 Generated Social Engineering Attacks. arXiv 2025, arXiv:2502.01764.

- Wan, Z.; Wang, X.; Liu, C.; Alam, S.; Zheng, Y.; Liu, J.; Qu, Z.; Yan, S.; Zhu, Y.; Zhang, Q.; et al. Efficient large language models: A survey. arXiv 2023, arXiv:2312.03863.

- Afane, K.; Wei, W.; Mao, Y.; Farooq, J.; Chen, J. Next-Generation Phishing: How LLM Agents Empower Cyber Attackers. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 2558–2567.

- Kulkarni, A.; Balachandran, V.; Divakaran, D.M.; Das, T. From ML to LLM: Evaluating the robustness of phishing webpage detection models against adversarial attacks. arXiv 2024, arXiv:2407.20361.

- Kamruzzaman, A.S.; Thakur, K.; Mahbub, S. AI Tools Building Cybercrime & Defenses. In Proceedings of the 2024 International Conference on Artificial Intelligence, Computer, Data Sciences and Applications (ACDSA), Victoria, Seychelles, 1–2 February 2024; pp. 1–5.

- Yang, S.; Zhu, S.; Wu, Z.; Wang, K.; Yao, J.; Wu, J.; Hu, L.; Li, M.; Wong, D.F.; Wang, D. Fraud-R1: A Multi-Round Benchmark for Assessing the Robustness of LLM Against Augmented Fraud and Phishing Inducements. arXiv 2025, arXiv:2502.12904.

- Wang, J.; Huang, Z.; Liu, H.; Yang, N.; Xiao, Y. Defecthunter: A novel llm-driven boosted-conformer-based code vulnerability detection mechanism. arXiv 2023, arXiv:2309.15324. [Google Scholar]

- Andriushchenko, M.; Souly, A.; Dziemian, M.; Duenas, D.; Lin, M.; Wang, J.; Hendrycks, D.; Zou, A.; Kolter, Z.; Fredrikson, M.; et al. Agentharm: A benchmark for measuring harmfulness of llm agents. arXiv 2024, arXiv:2410.09024. [Google Scholar] [CrossRef]

- Jiang, L. Detecting scams using large language models. arXiv 2024, arXiv:2402.03147. [Google Scholar] [CrossRef]

- Hays, S.; White, J. Employing LLMs for incident response planning and review. arXiv 2024, arXiv:2403.01271.

- Çaylı, O. AI-Enhanced Cybersecurity Vulnerability-Based Prevention, Defense, and Mitigation using Generative AI. Orclever Proc. Res. Dev. 2024, 5, 655–667.

- Novelli, C.; Casolari, F.; Hacker, P.; Spedicato, G.; Floridi, L. Generative AI in EU law: Liability, privacy, intellectual property, and cybersecurity. Comput. Law Secur. Rev. 2024, 55, 106066.

- Derasari, P.; Venkataramani, G. EPIC: Efficient and Proactive Instruction-level Cyberdefense. In Proceedings of the Great Lakes Symposium on VLSI 2024, GLSVLSI ’24, Clearwater, FL, USA, 12–14 June 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 409–414.

- Bataineh, A.Q.; Abu-AlSondos, I.A.; Idris, M.; Mushtaha, A.S.; Qasim, D.M. The role of big data analytics in driving innovation in digital marketing. In Proceedings of the 2023 9th International Conference on Optimization and Applications (ICOA), Abu Dhabi, United Arab Emirates, 5–6 October 2023; pp. 1–5.

- Chaurasia, S.S.; Kodwani, D.; Lachhwani, H.; Ketkar, M.A. Big data academic and learning analytics: Connecting the dots for academic excellence in higher education. Int. J. Educ. Manag. 2018, 32, 1099–1117.

- Hassanin, M.; Moustafa, N. A comprehensive overview of large language models (LLMs) for cyber defences: Opportunities and directions. arXiv 2024, arXiv:2405.14487.

- Ji, H.; Yang, J.; Chai, L.; Wei, C.; Yang, L.; Duan, Y.; Wang, Y.; Sun, T.; Guo, H.; Li, T.; et al. SEvenLLM: Benchmarking, eliciting, and enhancing abilities of large language models in cyber threat intelligence. arXiv 2024, arXiv:2405.03446.

- Bokkena, B. Enhancing IT Security with LLM-Powered Predictive Threat Intelligence. In Proceedings of the 2024 5th International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 18–20 September 2024; pp. 751–756.

- Balasubramanian, P.; Ali, T.; Salmani, M.; KhoshKholgh, D.; Kostakos, P. Hex2Sign: Automatic IDS Signature Generation from Hexadecimal Data using LLMs. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 4524–4532.

- Webb, B.K.; Purohit, S.; Meyur, R. Cyber knowledge completion using large language models. arXiv 2024, arXiv:2409.16176.

- Song, J.; Wang, X.; Zhu, J.; Wu, Y.; Cheng, X.; Zhong, R.; Niu, C. RAG-HAT: A Hallucination-Aware Tuning Pipeline for LLM in Retrieval-Augmented Generation. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing: Industry Track, Miami, FL, USA, 12–16 November 2024; pp. 1548–1558.

- Gandhi, P.A.; Wudali, P.N.; Amaru, Y.; Elovici, Y.; Shabtai, A. SHIELD: APT Detection and Intelligent Explanation Using LLM. arXiv 2025, arXiv:2502.02342.

- Ji, Z.; Chen, D.; Ishii, E.; Cahyawijaya, S.; Bang, Y.; Wilie, B.; Fung, P. LLM internal states reveal hallucination risk faced with a query. arXiv 2024, arXiv:2407.03282.

- Maity, S.; Arora, J. The Colossal Defense: Security Challenges of Large Language Models. In Proceedings of the 2024 3rd Edition of IEEE Delhi Section Flagship Conference (DELCON), New Delhi, India, 21–23 November 2024; pp. 1–5.

- Ayzenshteyn, D.; Weiss, R.; Mirsky, Y. The Best Defense is a Good Offense: Countering LLM-Powered Cyberattacks. arXiv 2024, arXiv:2410.15396.

- Kim, Y.; Dán, G.; Zhu, Q. Human-in-the-loop cyber intrusion detection using active learning. IEEE Trans. Inf. Forensics Secur. 2024, 19, 8658–8672.

- Ghanem, M.C. Advancing IoT and Cloud Security through LLMs, Federated Learning, and Reinforcement Learning. In Proceedings of the 7th IEEE Conference on Cloud and Internet of Things (CIoT 2024)—Keynote, Montreal, QC, Canada, 29–31 October 2024.

- Haryanto, C.Y.; Elvira, A.M.; Nguyen, T.D.; Vu, M.H.; Hartanto, Y.; Lomempow, E.; Arakala, A. Contextualized AI for Cyber Defense: An Automated Survey using LLMs. In Proceedings of the 2024 17th International Conference on Security of Information and Networks (SIN), Sydney, Australia, 2–4 December 2024; pp. 1–8.

- V, S.; P, L.S.; P, N.K.; V, L.P.; CH, B.S. Data Leakage Detection and Prevention Using Ciphertext-Policy Attribute Based Encryption Algorithm. In Proceedings of the 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 14–15 March 2024; pp. 1–5.

- Kumar, P.S.; Bapu, B.T.; Sridhar, S.; Nagaraju, V. An Efficient Cyber Security Attack Detection With Encryption Using Capsule Convolutional Polymorphic Graph Attention. Trans. Emerg. Telecommun. Technol. 2025, 36, e70069.

- Chen, Y.; Chen, Z. Preventive Measures of Influencing Factors of Computer Network Security Technology. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 28–30 June 2021; pp. 1187–1191.

- Sarkorn, T.; Chimmanee, K. Review on Zero Trust Architecture Apply In Enterprise Next Generation Firewall. In Proceedings of the 2024 8th International Conference on Information Technology (InCIT), Chonburi, Thailand, 14–15 November 2024; pp. 255–260.

- Mustafa, H.M.; Basumallik, S.; Vellaithurai, C.; Srivastava, A. Threat Detection in Power Grid OT Networks: Unsupervised ML and Cyber Intelligence Sharing with STIX. In Proceedings of the 2024 12th Workshop on Modeling and Simulation of Cyber-Physical Energy Systems (MSCPES), Hong Kong, China, 13 May 2024; pp. 1–6.

- Steingartner, W.; Galinec, D.; Zebić, V. Challenges of Application Programming Interfaces Security: A Conceptual Model in the Changing Cyber Defense Environment and Zero Trust Architecture. In Proceedings of the 2024 IEEE 17th International Scientific Conference on Informatics (Informatics), Poprad, Slovakia, 13–15 November 2024; pp. 372–379.

- Mmaduekwe, E.; Kessie, J.; Salawudeen, M. Zero trust architecture and AI: A synergistic approach to next-generation cybersecurity frameworks. Int. J. Sci. Res. Arch. 2024, 13, 4159–4169.

- Freitas, S.; Kalajdjieski, J.; Gharib, A.; McCann, R. AI-Driven Guided Response for Security Operation Centers with Microsoft Copilot for Security. arXiv 2024, arXiv:2407.09017.

- Bono, J.; Xu, A. Randomized controlled trials for Security Copilot for IT administrators. arXiv 2024, arXiv:2411.01067.

- Paul, S.; Alemi, F.; Macwan, R. LLM-Assisted Proactive Threat Intelligence for Automated Reasoning. arXiv 2025, arXiv:2504.00428.

- Kshetri, N. Transforming cybersecurity with agentic AI to combat emerging cyber threats. Telecommun. Policy 2025, 49, 102976.

- Schesny, M.; Lutz, N.; Jägle, T.; Gerschner, F.; Klaiber, M.; Theissler, A. Enhancing Website Fraud Detection: A ChatGPT-Based Approach to Phishing Detection. In Proceedings of the 2024 IEEE 48th Annual Computers, Software, and Applications Conference (COMPSAC), Osaka, Japan, 2–4 July 2024; pp. 1494–1495.

- Razavi, H.; Jamali, M.R. Large Language Models (LLM) for Estimating the Cost of Cyber-attacks. In Proceedings of the 2024 11th International Symposium on Telecommunications (IST), Tehran, Iran, 9–10 October 2024; pp. 403–409.

- Mathew, A. AI Cyber Defense and eBPF. World J. Adv. Res. Rev. 2024, 22, 1983–1989.

- Baldoni, R.; De Nicola, R.; Prinetto, P. (Eds.) The Future of Cybersecurity in Italy: Strategic Focus Areas. Projects and Actions to Better Defend Our Country from Cyber Attacks; English Edition; Translated from the Italian Volume (Jan 2018, ISBN 9788894137330); last update 20 June 2018; CINI—Consorzio Interuniversitario Nazionale per l’Informatica: Rome, Italy, 2018.

- Truong, T.C.; Diep, Q.B.; Zelinka, I. Artificial intelligence in the cyber domain: Offense and defense. Symmetry 2020, 12, 410.

- Ferrag, M.A.; Ndhlovu, M.; Tihanyi, N.; Cordeiro, L.C.; Debbah, M.; Lestable, T.; Thandi, N.S. Revolutionizing cyber threat detection with large language models: A privacy-preserving BERT-based lightweight model for IoT/IIoT devices. IEEE Access 2024, 12, 23733–23750.

- Metta, S.; Chang, I.; Parker, J.; Roman, M.P.; Ehuan, A.F. Generative AI in cybersecurity. arXiv 2024, arXiv:2405.01674.

- Benabderrahmane, S.; Valtchev, P.; Cheney, J.; Rahwan, T. APT-LLM: Embedding-Based Anomaly Detection of Cyber Advanced Persistent Threats Using Large Language Models. arXiv 2025, arXiv:2502.09385.

- Zhang, X.; Li, Q.; Tan, Y.; Guo, Z.; Zhang, L.; Cui, Y. Large Language Models powered Network Attack Detection: Architecture, Opportunities and Case Study. arXiv 2025, arXiv:2503.18487.

- Zuo, F.; Rhee, J.; Choe, Y.R. Knowledge Transfer from LLMs to Provenance Analysis: A Semantic-Augmented Method for APT Detection. arXiv 2025, arXiv:2503.18316.

- Ferrag, M.A.; Ndhlovu, M.; Tihanyi, N.; Cordeiro, L.C.; Debbah, M.; Lestable, T. Revolutionizing cyber threat detection with large language models. arXiv 2023, arXiv:2306.14263.

- Ren, H.; Lan, K.; Sun, Z.; Liao, S. CLogLLM: A Large Language Model Enabled Approach to Cybersecurity Log Anomaly Analysis. In Proceedings of the 2024 4th International Conference on Electronic Information Engineering and Computer Communication (EIECC), Wuhan, China, 27–29 December 2024; pp. 963–970.

- Ismail, I.; Kurnia, R.; Brata, Z.A.; Nelistiani, G.A.; Heo, S.; Kim, H.; Kim, H. Toward Robust Security Orchestration and Automated Response in Security Operations Centers with a Hyper-Automation Approach Using Agentic Artificial Intelligence. Information 2025, 16, 365.

- Tallam, K. CyberSentinel: An Emergent Threat Detection System for AI Security. arXiv 2025, arXiv:2502.14966.

- Kheddar, H. Transformers and large language models for efficient intrusion detection systems: A comprehensive survey. arXiv 2024, arXiv:2408.07583.

- Ghimire, A.; Ghajari, G.; Gurung, K.; Sah, L.K.; Amsaad, F. Enhancing Cybersecurity in Critical Infrastructure with LLM-Assisted Explainable IoT Systems. arXiv 2025, arXiv:2503.03180.

- Setak, M.; Madani, P. Fine-Tuning LLMs for Code Mutation: A New Era of Cyber Threats. In Proceedings of the 2024 IEEE 6th International Conference on Trust, Privacy and Security in Intelligent Systems, and Applications (TPS-ISA), Washington, DC, USA, 28–31 October 2024; pp. 313–321.

- Song, C.; Ma, L.; Zheng, J.; Liao, J.; Kuang, H.; Yang, L. Audit-LLM: Multi-Agent Collaboration for Log-based Insider Threat Detection. arXiv 2024, arXiv:2408.08902.

- Li, Y.; Xiang, Z.; Bastian, N.D.; Song, D.; Li, B. IDS-Agent: An LLM Agent for Explainable Intrusion Detection in IoT Networks. In Proceedings of the NeurIPS 2024 Workshop on Open-World Agents, Vancouver, BC, Canada, 15 December 2024.

- Rigaki, M.; Catania, C.; Garcia, S. Hackphyr: A Local Fine-Tuned LLM Agent for Network Security Environments. arXiv 2024, arXiv:2409.11276.

- Diaf, A.; Korba, A.A.; Karabadji, N.E.; Ghamri-Doudane, Y. BARTPredict: Empowering IoT Security with LLM-Driven Cyber Threat Prediction. In Proceedings of the GLOBECOM 2024-2024 IEEE Global Communications Conference, Cape Town, South Africa, 8–12 December 2024; pp. 1239–1244.

- Barker, C. Applications of Machine Learning to Threat Intelligence, Intrusion Detection and Malware. Senior Honors Thesis, Liberty University, Lynchburg, VA, USA, 2020.

- Bakdash, J.Z.; Hutchinson, S.; Zaroukian, E.G.; Marusich, L.R.; Thirumuruganathan, S.; Sample, C.; Hoffman, B.; Das, G. Malware in the future? Forecasting of analyst detection of cyber events. J. Cybersecur. 2018, 4, tyy007.

- Cheng, Y.; Bajaber, O.; Tsegai, S.A.; Song, D.; Gao, P. CTINEXUS: Leveraging Optimized LLM In-Context Learning for Constructing Cybersecurity Knowledge Graphs Under Data Scarcity. arXiv 2024, arXiv:2410.21060.

- Al Siam, A.; Alazab, M.; Awajan, A.; Faruqui, N. A Comprehensive Review of AI’s Current Impact and Future Prospects in Cybersecurity. IEEE Access 2025, 13, 14029–14050.

- Bashir, T. Zero Trust Architecture: Enhancing cybersecurity in enterprise networks. J. Comput. Sci. Technol. Stud. 2024, 6, 54–59.

- Hu, X.; Chen, H.; Bao, H.; Wang, W.; Liu, F.; Zhou, G.; Yin, P. A LLM-based agent for the automatic generation and generalization of IDS rules. In Proceedings of the 2024 IEEE 23rd International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Sanya, China, 17–21 December 2024; pp. 1875–1880.