Long-Term Preservation of Emotion Analyses with OAIS: A Software Prototype Design Approach for Information Package Conversion in KM-EP

Abstract

1. Introduction and Motivation

How can we provide data transformations in the KM-EP that prepare Emotion-Data Packages for long-term preservation in accordance with the OAIS Reference Model Framework, while enabling sustainable reimports of archived packages into KM-EP and ensuring the integrity and completeness of the archived data? (Research Question 1)

How can we provide Data Compression and Data Decompression within the KM-EP to support reliable long-term archiving of Emotion-Data Packages, ideally reducing storage requirements while keeping the emotion data reusable within KM-EP? (Research Question 2)

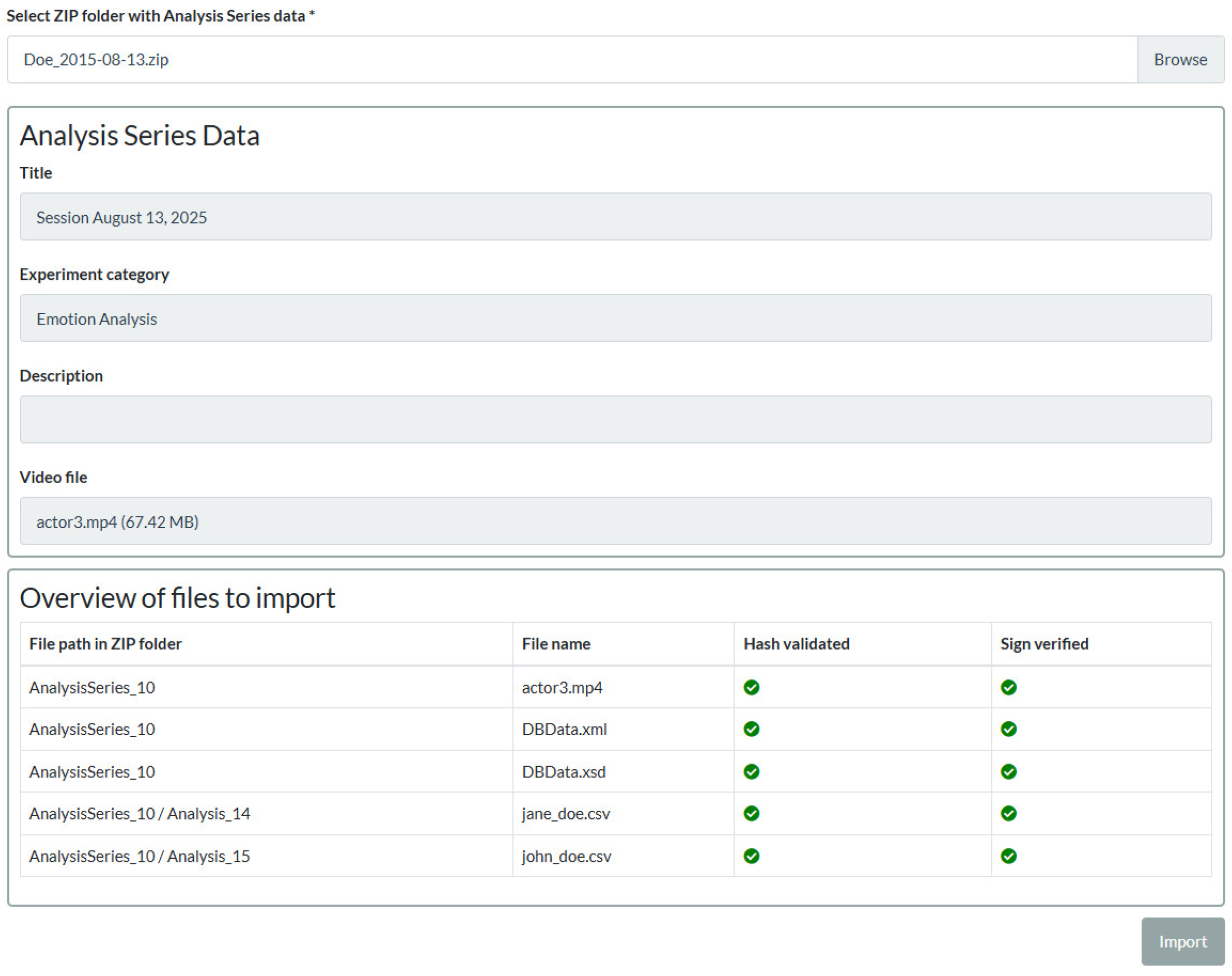

How can we provide export and import of Emotion-Data Packages in the KM-EP in compliance with the OAIS Reference Model Framework, using step-by-step KM-EP user-interface dialogs while ensuring data integrity and accessibility? (Research Question 3)

2. State of the Art in Science and Technology

2.1. OAIS and Digital Preservation

- Content Information: the set of information that is the original target of preservation, consisting of a content data object and its representational information.

- Preservation Description Information (PDI): contains the information necessary to adequately preserve the Content Information it is associated with.

- Packaging Information: describes the components of the information package, how they are connected through logical or physical conditions, and the methods to identify and extract them.

- Descriptive Information: the set of information that supports consumers in finding, ordering, and retrieving information.

- How does representational information need to be modeled and designed to meet the requirements of the OAIS Reference Model Framework? (RC1.1)

- How does PDI need to be modeled and designed to meet the requirements of the OAIS Reference Model Framework? (RC1.2)

- How do Emotion-Data Packages need to be modeled and designed to meet the requirements of the OAIS Reference Model Framework? (RC1.3)

- How does data need to be transformed to enable efficient preservation and accessibility in the long-term according to the OAIS Reference Model Framework? (RC1.4)

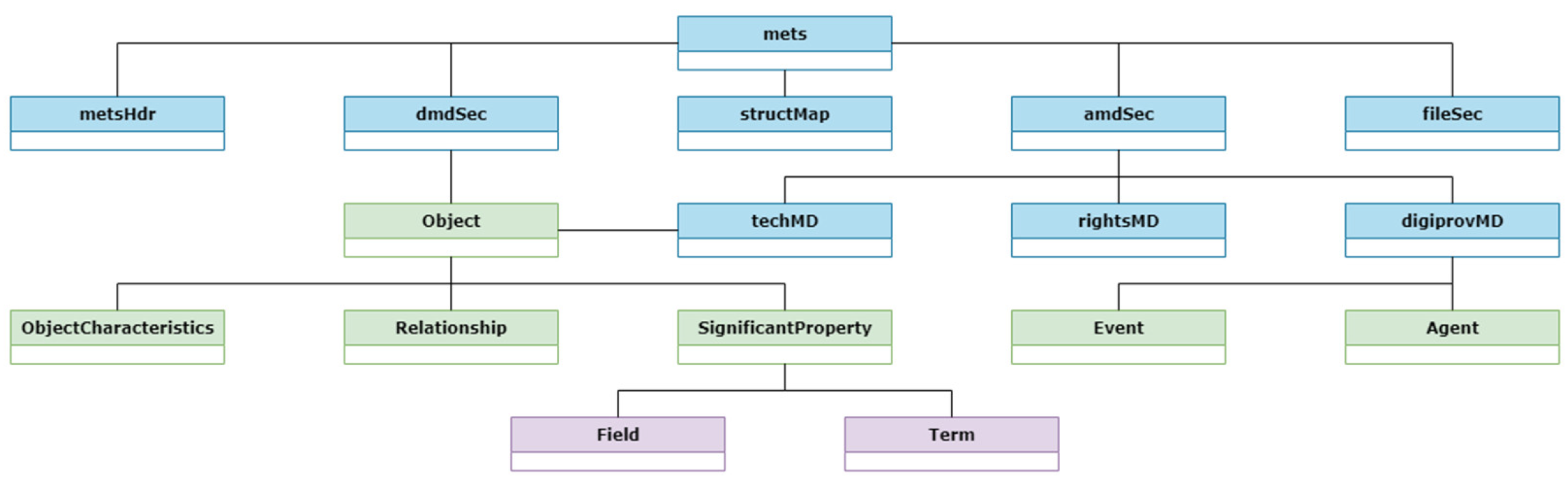

- How do metadata need to be modeled and designed to meet the requirements of the OAIS Reference Model Framework as well as METS and PREMIS standards? (RC1.5)

- How does content information need to be modeled and designed to meet the requirements of the OAIS Reference Model Framework? (RC1.6)

2.2. Data Compression and Data Decompression

- How can video recordings be converted between MP4 and MKV for long-term preservation? (RC2.1)

- How can video recordings be converted to the H.264 codec to reach a compromise between minimal loss of quality and optimized file size and to meet the requirements for conditional long-term suitability? (RC2.2)

- How can video recordings be converted to grayscale to reduce file size? (RC2.3)

- How can the audio track of video recordings be removed to reduce file size? (RC2.4)

- How does varying the video compression in terms of format selection, migration to grayscale, and elimination of the audio track affect the file size? (RC2.5)
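The conversions named in RC2.1 to RC2.4 can be sketched as ffmpeg invocations. The following Python helper only assembles the argument list and does not run anything. It assumes the `ffmpeg` command-line tool is installed; the function name `build_ffmpeg_args`, the CRF value 23, the `medium` preset, the `hue=s=0` filter for grayscale, and the optional lossless FFV1 codec are illustrative assumptions, not the settings of the prototype.

```python
def build_ffmpeg_args(src, dst, video_codec="libx264", grayscale=False, keep_audio=True):
    """Assemble an ffmpeg command as a list of arguments.

    The target container (MP4 or MKV) follows from the extension of dst.
    All parameter defaults are assumptions chosen for illustration.
    """
    args = ["ffmpeg", "-y", "-i", str(src)]

    if grayscale:
        # Setting the saturation to zero removes all color information (RC2.3).
        args += ["-vf", "hue=s=0"]

    args += ["-c:v", video_codec]
    if video_codec == "libx264":
        # Lossy H.264: CRF trades quality against file size (RC2.2).
        args += ["-crf", "23", "-preset", "medium"]
    elif video_codec == "ffv1":
        # Lossless FFV1 version 3, usually stored in an MKV container.
        args += ["-level", "3"]

    if keep_audio:
        # Copy the audio stream unchanged; assumes the source audio codec
        # is permitted in the target container.
        args += ["-c:a", "copy"]
    else:
        # Drop the audio track entirely (RC2.4).
        args += ["-an"]

    args.append(str(dst))
    return args


if __name__ == "__main__":
    # Print the grayscale, audio-free H.264 variant as a shell-like line.
    print(" ".join(build_ffmpeg_args("talk.mp4", "talk.mkv",
                                     grayscale=True, keep_audio=False)))
```

The returned list can be handed to `subprocess.run(args, check=True)` to perform the conversion; keeping command construction separate from execution makes each variant easy to inspect and test.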

2.3. Data Integrity and Accessibility

- How does the generation of hash values need to be modeled and designed to enable data integrity? (RC3.1)

- How can metadata be extended to ensure the integrity of Emotion-Data Packages? (RC3.2)

- How can digital signatures be modeled and designed to ensure data authenticity? (RC3.3)

- How can metadata be extended to ensure data authenticity? (RC3.4)

- How can the user be enabled to import and export Emotion-Data Packages using a step-by-step KM-EP user-interface dialog? (RC3.5)
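The hash generation asked for in RC3.1 can be illustrated with a small manifest of file hashes that is created at export time and compared again at reimport. This is a minimal sketch that assumes SHA-256 as the hash algorithm; the function names `sha256_of_file`, `build_manifest`, and `changed_files` are hypothetical and not taken from the prototype.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map every file below root (as a relative POSIX path) to its SHA-256 digest."""
    root = Path(root)
    return {
        path.relative_to(root).as_posix(): sha256_of_file(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def changed_files(root, manifest):
    """Return the sorted names of files that were added, removed, or modified."""
    current = build_manifest(root)
    return sorted(
        name
        for name in manifest.keys() | current.keys()
        if manifest.get(name) != current.get(name)
    )
```

An empty result from `changed_files` indicates that the package content still matches the stored hash values. A hash alone does not prove who created the package; that is the role of the digital signatures addressed in RC3.3.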

3. Conceptual Modeling and Design

3.1. Data Transformation and Metadata Generation

- Metadata XML file containing the representational data and PDI in METS/PREMIS format;

- XML file containing the database entities in the context of the emotion analysis;

- XSD schema file for the database entities XML file;

- Additional files of the emotion analysis like video recordings and emotion sequence data.
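The package contents listed above could be bundled into a single ZIP archive and checked for completeness on reimport. The sketch below works in memory and uses Python's standard `zipfile` module; the member names `mets.xml`, `entities.xml`, and `entities.xsd` are hypothetical placeholders, since the actual file names used in KM-EP are not specified here.

```python
import io
import zipfile

# Hypothetical names for the three mandatory package members.
REQUIRED_MEMBERS = ("mets.xml", "entities.xml", "entities.xsd")


def build_package(files):
    """Write a mapping of member name -> bytes into a ZIP archive and return its bytes."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for name in sorted(files):
            archive.writestr(name, files[name])
    return buffer.getvalue()


def missing_members(package, required=REQUIRED_MEMBERS):
    """Return the required members that are absent from the ZIP package.

    Raises ValueError if a member fails the CRC check built into the ZIP format.
    """
    with zipfile.ZipFile(io.BytesIO(package)) as archive:
        corrupt = archive.testzip()  # name of the first bad member, or None
        present = set(archive.namelist())
    if corrupt is not None:
        raise ValueError(f"corrupt member: {corrupt}")
    return [name for name in required if name not in present]
```

A reimport dialog could call `missing_members` first and refuse to proceed when the returned list is not empty.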

The content information (RC1.6) is represented by files, such as video recordings or emotion sequence data, and by exports of database entities that provide context for the emotion analyses, for example, details of the conversation participants. The following files are expected to be part of the content information:

- Database entities and their relations (XML);

- Video recordings (MP4/MKV);

- Emotion sequence data or further analysis data, such as the configuration of the Emotion Recognition Algorithms (Text, CSV, and JSON).

- Reference information identifies the information object and makes it searchable [5].

- Context information describes the context of the information object and relevant events or linked objects [5].

- Provenance information records the origin and change history of the information and the agent responsible for it [5].

- Fixity information allows the integrity of the information object to be verified by storing hash values [5].

- Access rights information specifies access and usage rights [5].
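The fixity information described above can be recorded directly in the METS file section, where each `file` element carries `CHECKSUM` and `CHECKSUMTYPE` attributes. The following sketch builds only that fragment and is not a complete, schema-valid METS document (for example, it omits the structural map and any PREMIS sections). SHA-256, the `FILE-0001` style identifiers, the `USE="content"` group label, and the function names are assumptions made for illustration.

```python
import hashlib
import xml.etree.ElementTree as ET

METS_NS = "http://www.loc.gov/METS/"
XLINK_NS = "http://www.w3.org/1999/xlink"
ET.register_namespace("mets", METS_NS)
ET.register_namespace("xlink", XLINK_NS)


def build_file_section(files):
    """Build a METS fragment listing each file (path -> bytes) with its checksum."""
    root = ET.Element(f"{{{METS_NS}}}mets")
    file_sec = ET.SubElement(root, f"{{{METS_NS}}}fileSec")
    group = ET.SubElement(file_sec, f"{{{METS_NS}}}fileGrp", {"USE": "content"})
    for index, (path, payload) in enumerate(sorted(files.items()), start=1):
        file_el = ET.SubElement(group, f"{{{METS_NS}}}file", {
            "ID": f"FILE-{index:04d}",
            "CHECKSUM": hashlib.sha256(payload).hexdigest(),
            "CHECKSUMTYPE": "SHA-256",
        })
        ET.SubElement(file_el, f"{{{METS_NS}}}FLocat", {
            "LOCTYPE": "URL",
            f"{{{XLINK_NS}}}href": path,
        })
    return root


def fixity_mismatches(root, files):
    """Return the paths whose current content is missing or no longer matches."""
    mismatches = []
    for file_el in root.iter(f"{{{METS_NS}}}file"):
        path = file_el.find(f"{{{METS_NS}}}FLocat").get(f"{{{XLINK_NS}}}href")
        payload = files.get(path)
        if payload is None or hashlib.sha256(payload).hexdigest() != file_el.get("CHECKSUM"):
            mismatches.append(path)
    return mismatches
```

Calling `ET.tostring(root, encoding="unicode")` serializes the fragment; on reimport, `fixity_mismatches` reports every file whose content differs from the archived checksum.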

3.2. Data Compression and Data Decompression

3.3. Hash Values and Digital Signatures

4. Implementing a Software Prototype for Long-Term Preservation

5. Evaluation

5.1. Evaluation Using Functional Tests

5.2. Evaluation Using Cognitive Walkthrough

- Will the user attempt to perform the correct action?

- Will the user recognize that the correct action is available?

- Does the user associate the correct action with the desired effect?

- Does the user recognize that progress has been made after the correct action?

6. Discussion and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| AIP | Archival Information Package |
| DIP | Dissemination Information Package |
| EDP | Emotion-Data Package |
| KM-EP | Knowledge Management Ecosystem Portal |
| MKV | Matroska Container |
| OAIS | Open Archival Information System |
| PDI | Preservation Description Information |
| RC | Remaining Challenge |
| RQ | Research Question |
| SIP | Submission Information Package |
| UCD | User-Centered Design |

References

1. Der Wissenschaftliche Beirat Beim Bundesministerium für Wirtschaft und Energie. Digitalisierung in Deutschland – Lehren aus der Corona-Krise. Bundesministerium für Wirtschaft und Energie (BMWi) Öffentlichkeitsarbeit, March 2021. Available online: https://www.bmwk.de/Redaktion/DE/Publikationen/Ministerium/Veroeffentlichung-Wissenschaftlicher-Beirat/gutachten-digitalisierung-in-deutschland.pdf (accessed on 26 October 2025).

2. Bitkom eingetragener Verein (e.V.). Corona Beschleunigt die Digitalisierung der Medizin – Mit Unterschiedlichem Tempo. Available online: https://www.bitkom.org/Presse/Presseinformation/Corona-beschleunigt-die-Digitalisierung-der-Medizin-mit-unterschiedlichem-Tempo (accessed on 26 October 2025).

3. Maier, D.; Hemmje, M.; Kikic, Z.; Wefers, F. Real-Time Emotion Recognition in Online Video Conferences for Medical Consultations. In International Conference on Machine Learning, Optimization, and Data Science; Springer Nature: Cham, Switzerland, 2024; pp. 479–487.

4. ISO 14721:2025; Space Data System Practices – Reference model for an open archival information system (OAIS). 2025. Available online: https://www.iso.org/standard/87471.html (accessed on 30 October 2025).

5. The Consultative Committee for Space Data Systems. Reference Model for an Open Archival Information System (OAIS); MAGENTA BOOK; CCSDS Secretariat National Aeronautics and Space Administration: Washington, DC, USA, 2024; Volume CCSDS 650.0-M-3.

6. Schreyer, V.; Bornschlegl, M.X.; Hemmje, M. Toward Annotation, Visualization, and Reproducible Archiving of Human–Human Dialog Video Recording Applications. Information 2025, 16, 349.

7. Salomon, D.; Motta, G. Handbook of Data Compression; Springer: London, UK, 2010.

8. Sayood, K. Introduction to Data Compression (The Morgan Kaufmann Series in Multimedia Information and Systems), 3rd ed.; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2006.

9. Nunamaker, J.F.; Chen, M.; Purdin, T.D.M. Systems Development in Information Systems Research. J. Manag. Inf. Syst. 1990, 7, 89–106.

10. Dappert, A.; Guenther, R.S.; Peyrard, S. Digital Preservation Metadata for Practitioners; Springer International Publishing: Cham, Switzerland, 2016.

11. Gartner, R.; Lavoie, B. Preservation Metadata, 2nd ed.; Charles Beagrie Ltd.: Salisbury, UK, 2013.

12. The Library of Congress. METS: An Overview & Tutorial. Available online: https://www.loc.gov/standards/mets/METSOverview.html (accessed on 26 October 2025).

13. Manz, O. Gut Gepackt – Kein Bit zu Viel; Springer Fachmedien: Wiesbaden, Germany, 2020.

14. Li, Z.-N.; Drew, M.S.; Liu, J. Fundamentals of Multimedia; Springer International Publishing: Cham, Switzerland, 2021.

15. Bunkus, M. What Is Matroska? Available online: https://www.matroska.org/what_is_matroska.html (accessed on 21 August 2025).

16. Trac. FFV1 Encoding Cheatsheet. Available online: https://trac.ffmpeg.org/wiki/Encode/FFV1 (accessed on 21 August 2025).

17. Jones, E. Data Accuracy vs. Data Integrity: Similarities and Differences. Available online: https://www.ibm.com/think/topics/data-accuracy-vs-data-integrity (accessed on 21 August 2025).

18. Mittelbach, A.; Fischlin, M. The Theory of Hash Functions and Random Oracles; Springer International Publishing: Cham, Switzerland, 2021.

19. Falcetta, F.S.; de Almeida, F.K.; Lemos, J.C.S.; Goldim, J.R.; da Costa, C.A. Automatic documentation of professional health interactions: A systematic review. Artif. Intell. Med. 2023, 137, 102487.

20. Clemen, J.M.B.; Teleron, J.I. Advancements in Encryption Techniques for Secure Data Communication. Int. J. Adv. Res. Sci. Commun. Technol. 2023, 3, 444–451.

21. Easttom, W. Modern Cryptography; Springer International Publishing: Cham, Switzerland, 2022.

22. PREMIS Editorial Committee. PREMIS Data Dictionary for Preservation Metadata, Vol. 3.0. 2015. Available online: https://www.loc.gov/standards/premis/v3/premis-3-0-final.pdf (accessed on 21 August 2025).

23. Ffmpeg. ffmpeg Documentation. Available online: https://ffmpeg.org/ffmpeg.html (accessed on 21 August 2025).

24. The PHP Documentation Group. OpenSSL. Available online: https://www.php.net/manual/de/book.openssl.php (accessed on 21 August 2025).

25. Leloudas, P. Introduction to Software Testing; Apress: Berkeley, CA, USA, 2023.

26. Wilson, C. Cognitive Walkthrough. In User Interface Inspection Methods; Elsevier: Amsterdam, The Netherlands, 2014; pp. 65–79.

| Format | With Audio Track | Grayscale | File Size |
|---|---|---|---|
| MP4 | ✓ | ✗ | 31.4 MiB (32,970,323 bytes) |
| MP4 | ✓ | ✓ | 29.2 MiB (30,662,751 bytes) |
| MP4 | ✗ | ✗ | 27.8 MiB (29,228,090 bytes) |
| MP4 | ✗ | ✓ | 25.6 MiB (26,920,474 bytes) |
| MKV | ✓ | ✗ | 1.41 GiB (1,522,372,157 bytes) |
| MKV | ✓ | ✓ | 1.13 GiB (1,222,986,897 bytes) |
| MKV | ✗ | ✗ | 1.34 GiB (1,441,165,178 bytes) |
| MKV | ✗ | ✓ | 1.06 GiB (1,141,779,917 bytes) |
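To make the effect of each variation in the table above easier to compare (RC2.5), the byte counts can be expressed as relative savings against the color recording with audio in the same format. The short calculation below uses only the byte values from the table; the names `SIZES_BYTES` and `reduction_percent` are introduced here for illustration. For MP4 it yields savings of approximately 7% for grayscale, 11% for removing the audio track, and 18% for both; for MKV the corresponding values are approximately 20%, 5%, and 25%.

```python
# Byte counts from the table, keyed by (format, with_audio, grayscale).
SIZES_BYTES = {
    ("MP4", True, False): 32_970_323,
    ("MP4", True, True): 30_662_751,
    ("MP4", False, False): 29_228_090,
    ("MP4", False, True): 26_920_474,
    ("MKV", True, False): 1_522_372_157,
    ("MKV", True, True): 1_222_986_897,
    ("MKV", False, False): 1_441_165_178,
    ("MKV", False, True): 1_141_779_917,
}


def reduction_percent(fmt, with_audio, grayscale):
    """Relative size saving in percent versus the color-with-audio file of the same format."""
    baseline = SIZES_BYTES[(fmt, True, False)]
    variant = SIZES_BYTES[(fmt, with_audio, grayscale)]
    return 100.0 * (baseline - variant) / baseline


if __name__ == "__main__":
    for (fmt, with_audio, grayscale) in SIZES_BYTES:
        saving = reduction_percent(fmt, with_audio, grayscale)
        print(f"{fmt} audio={with_audio} grayscale={grayscale}: {saving:.1f}% smaller")
```

The same data show that the MKV baseline is roughly 46 times larger than the MP4 baseline, so the choice of format dominates the other two variations by a wide margin.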

| Task | Required Steps | Successful Steps | Problem Rate |
|---|---|---|---|
| Export the ZIP folder | 8 | 8 | 0% |
| Reimport the ZIP folder | 4 | 4 | 0% |
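The problem rates in the table above are consistent with the share of required steps that were not completed successfully. The sketch below assumes that definition, which is not stated explicitly here; the function name `problem_rate` is hypothetical.

```python
def problem_rate(required_steps, successful_steps):
    """Percentage of required steps that were not completed successfully.

    Assumed definition: (required - successful) / required * 100.
    """
    if required_steps <= 0:
        raise ValueError("required_steps must be positive")
    if not 0 <= successful_steps <= required_steps:
        raise ValueError("successful_steps must lie between 0 and required_steps")
    return 100.0 * (required_steps - successful_steps) / required_steps
```

With the values from the table, `problem_rate(8, 8)` and `problem_rate(4, 4)` both evaluate to 0.0.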


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Schreyer, V.; Pfalzgraf, M.; Bornschlegl, M.X.; Hemmje, M. Long-Term Preservation of Emotion Analyses with OAIS: A Software Prototype Design Approach for Information Package Conversion in KM-EP. Information 2025, 16, 951. https://doi.org/10.3390/info16110951
