Are We Ready for Synchronous Conceptual Modeling in Augmented Reality? A Usability Study on Causal Maps with HoloLens 2

Abstract

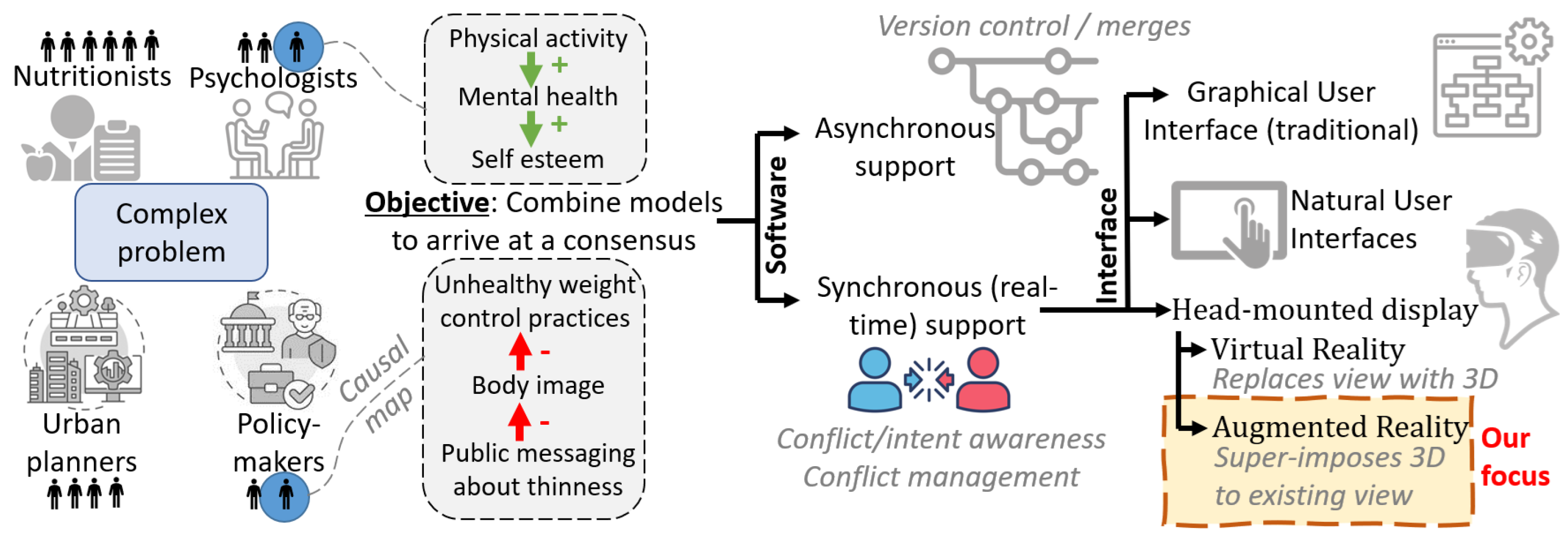

1. Introduction

2. Background

2.1. From Asynchronous to Real-Time Collaborative Modeling

2.2. Modeling in Virtual and Augmented Reality: Prevalence, Prototypes, and Usability

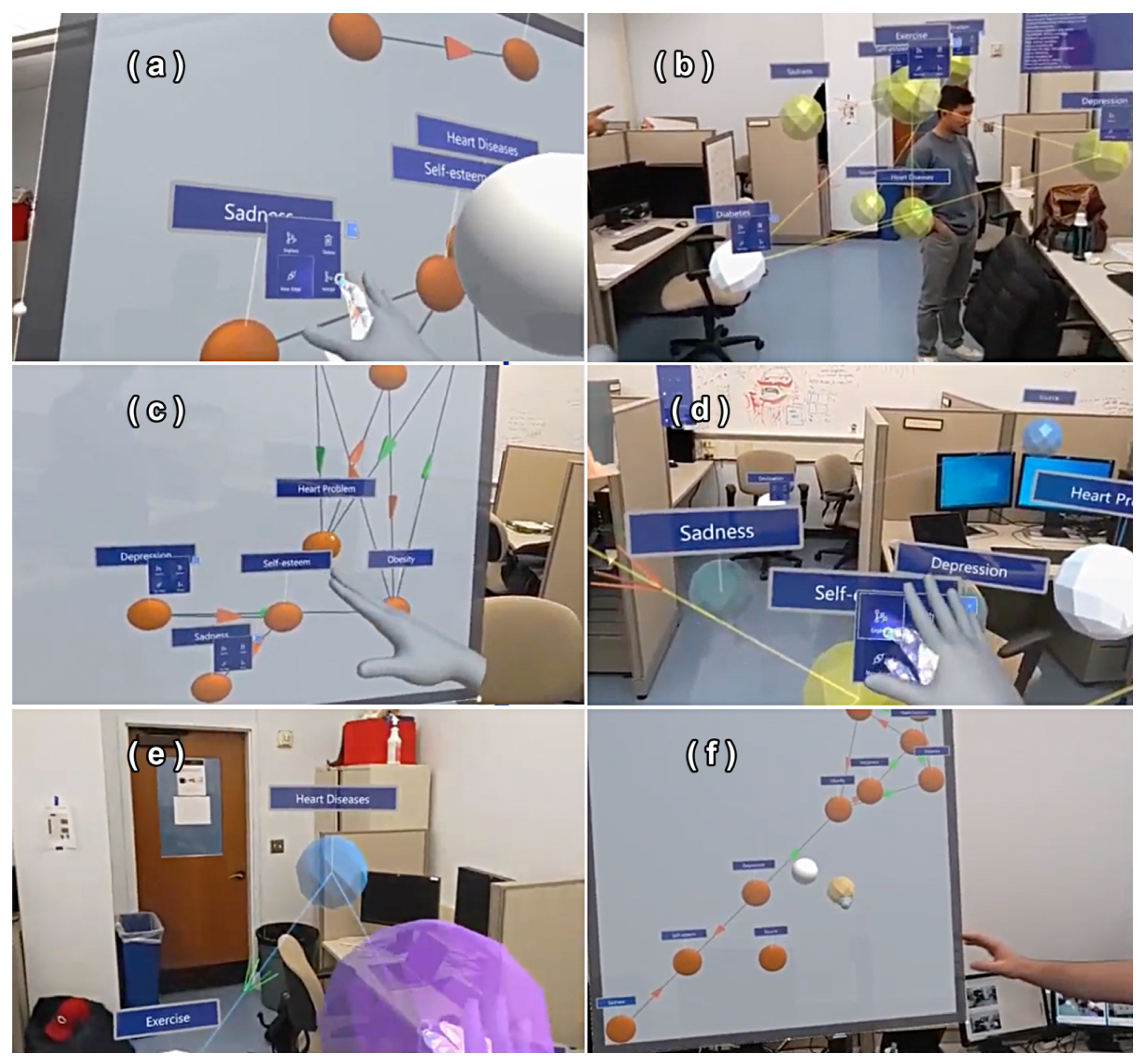

2.3. A Microsoft HoloLens 2 Open-Source Application to Resolve Conflicts in Causal Maps

2.4. Usability in Collaborative Settings via Augmented and Virtual Reality

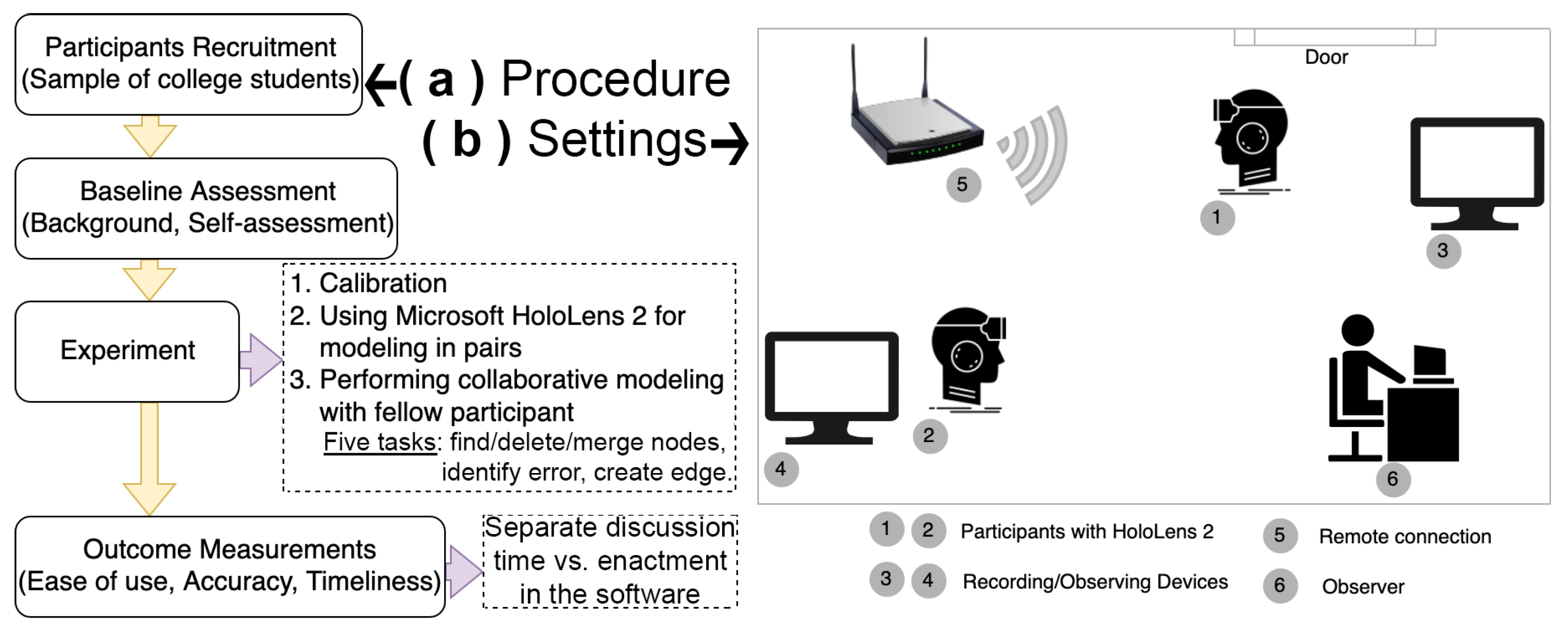

3. Methods

3.1. Overview, Goals, and Participants

- Assess the correctness, confidence, and time spent to complete routine actions required for causal map modeling in a novel augmented reality environment.

- Evaluate how the visual environment (2D vs. 3D projection of a map) acts as a mediating factor in the ability of users to interact with the causal map.
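One possible way to tabulate the measurements named in these two goals is sketched below, assuming each attempt is logged as one record. The `TaskRecord` fields, the `summarize` function, and the choice of the median (rather than the mean) for completion time are illustrative assumptions, not the study's actual instrument or analysis plan.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class TaskRecord:
    """One participant's attempt at one task (hypothetical field names)."""
    participant: str
    task: str         # e.g. "Delete Node" or "Merge Concepts"
    view: str         # visual environment: "2D" or "3D" projection of the map
    correct: bool     # whether the task was completed correctly
    confidence: int   # self-reported confidence, e.g. on a Likert scale
    seconds: float    # time spent on the task


def summarize(records: list[TaskRecord]) -> dict[tuple[str, str], dict[str, float]]:
    """Group attempts by (task, view); report accuracy, mean confidence, median time."""
    groups: dict[tuple[str, str], list[TaskRecord]] = {}
    for r in records:
        groups.setdefault((r.task, r.view), []).append(r)
    summary = {}
    for key in sorted(groups):  # deterministic order: task first, then view
        rs = groups[key]
        summary[key] = {
            "n": len(rs),
            "accuracy": sum(r.correct for r in rs) / len(rs),
            "mean_confidence": mean([r.confidence for r in rs]),
            "median_seconds": median([r.seconds for r in rs]),
        }
    return summary
```

Calling `summarize(records)` on the logged attempts returns one row per (task, view) pair, so the 2D and 3D results for the same task sit side by side. The median is used for time because completion times tend to be skewed by a few long attempts.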

3.2. Questionnaires and Tasks

3.2.1. Pre-Study Questionnaire

3.2.2. Usability

- Deleting an unnecessary concept. In real-world scenarios, participants may include tangential concepts and realize through discussion that the model could be reduced. We thus included irrelevant concepts in the map. This is a common activity in conceptual modeling, as maps with a large diameter may signal that a participant went beyond the boundaries of the problem space (i.e., on a tangent) [49]. Among several approaches, deleting peripheral concepts (i.e., ‘exogenous variables’) [50] reduces model complexity by omission; alternatives include aggregation and substitution [51].

- Creating a new edge to causally link one concept to another. We ensured that several concepts in the maps had plausible yet missing connections.

- Finding one error in the graph (i.e., an incorrect causal relationship).

- Merging semantically related concepts. This is an important task for negotiating a shared meaning, as participants must find a pair of concepts that should be merged. The individual graphs contained a pair of closely related nodes (‘heart diseases’, ‘heart problem’) as well as a distractor pair (‘depression’, ‘happiness’). We expected this task to trigger a discussion and several software actions to select a concept and move it onto its merging target. Merging related concepts is a well-known, time-consuming modeling task: it is performed manually for small maps, whereas identifying candidate concepts to merge in large maps increasingly benefits from AI solutions [15,52].
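The merging task hinges on spotting candidate pairs. The sketch below ranks pairs of concept labels by word overlap (Jaccard similarity on lowercase tokens). It is a deliberately simple lexical stand-in, not the semantic or AI-based methods cited above [15,52]; the function names and the 0.3 threshold are illustrative assumptions.

```python
from itertools import combinations


def tokens(label: str) -> set[str]:
    """Lowercase a concept label and split it into a set of words."""
    return set(label.lower().split())


def jaccard(a: str, b: str) -> float:
    """Shared words divided by all distinct words across both labels (0.0 to 1.0)."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def merge_candidates(concepts: list[str], threshold: float = 0.3) -> list[tuple[str, str, float]]:
    """Return concept pairs scoring at least `threshold`, most similar first."""
    scored = [(a, b, jaccard(a, b)) for a, b in combinations(concepts, 2)]
    return sorted((p for p in scored if p[2] >= threshold), key=lambda p: p[2], reverse=True)


concepts = ["heart diseases", "heart problem", "depression", "happiness"]
for a, b, score in merge_candidates(concepts):
    print(f"{a!r} ~ {b!r}: {score:.2f}")  # 'heart diseases' ~ 'heart problem': 0.33
```

With the labels used in the study maps, only the intended pair passes the threshold, while the distractor pair (‘depression’, ‘happiness’) shares no words and scores 0. A purely lexical measure would, however, miss synonyms that share no words, which is why semantic similarity approaches such as [52] go beyond word overlap.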

3.2.3. Post-Usability Questionnaire

4. Results

4.1. Pre-Study Questionnaire

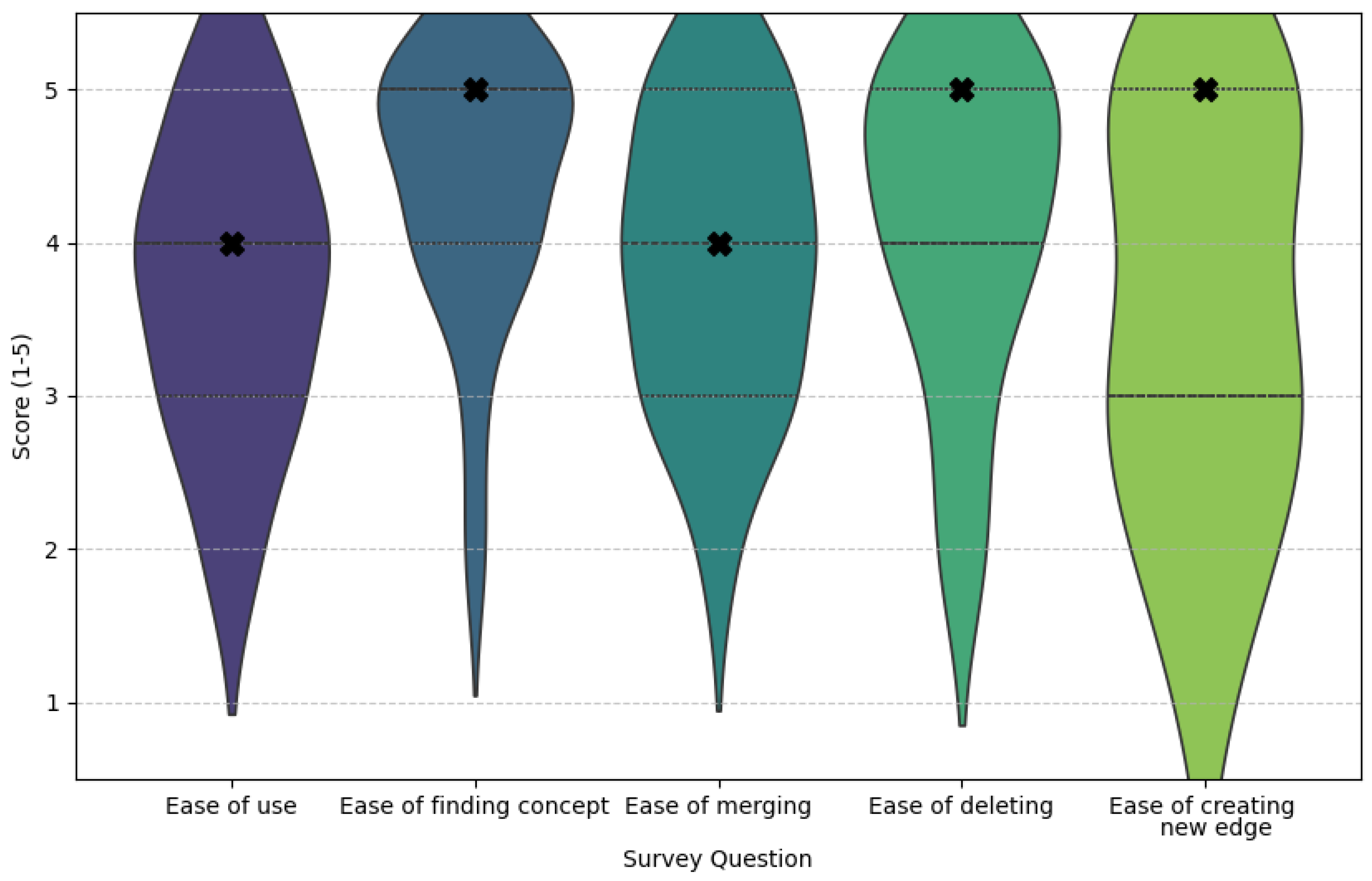

4.2. Usability Results

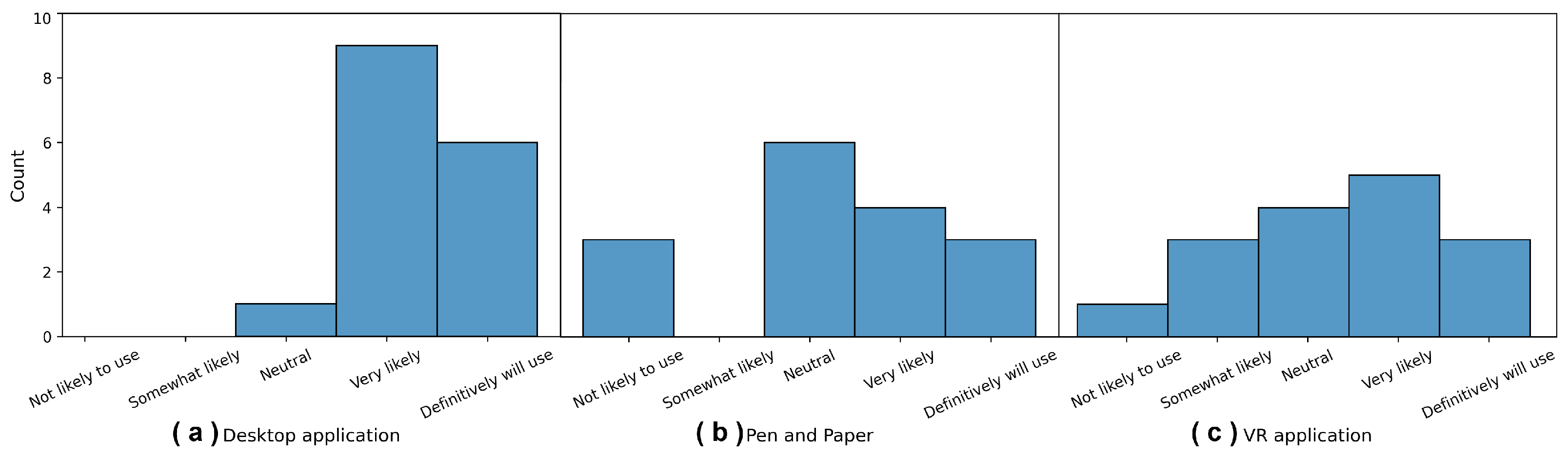

4.3. Post-Usability Questionnaire

5. Discussion

5.1. Key Findings

5.2. Limitations

5.3. Future Works

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AR | Augmented Reality |
| BPMN | Business Process Model and Notation |
| ER | Entity-Relationship Models |
| IRB | Institutional Review Board |
| MDSE | Model-Driven Software Engineering |
| NUI | Natural User Interfaces |
| SUS | System Usability Scale |
| UML | Unified Modeling Language |
| VR | Virtual Reality |

Appendix A

References

- [1] Robinson, S.; Arbez, G.; Birta, L.G.; Tolk, A.; Wagner, G. Conceptual modeling: Definition, purpose and benefits. In Proceedings of the 2015 Winter Simulation Conference (WSC), Huntington Beach, CA, USA, 6–9 December 2015; IEEE: New York, NY, USA, 2015; pp. 2812–2826.

- [2] Thalheim, B. The enhanced entity-relationship model. In Handbook of Conceptual Modeling: Theory, Practice, and Research Challenges; Springer: Berlin/Heidelberg, Germany, 2011; pp. 165–206.

- [3] Farshidi, S.; Kwantes, I.B.; Jansen, S. Business process modeling language selection for research modelers. Softw. Syst. Model. 2024, 23, 137–162.

- [4] Strutzenberger, D.; Mangler, J.; Rinderle-Ma, S. Evaluating BPMN Extensions for Continuous Processes Based on Use Cases and Expert Interviews. Bus. Inf. Syst. Eng. 2024, 66, 709–735.

- [5] Gogolla, M. UML and OCL in Conceptual Modeling. In Handbook of Conceptual Modeling: Theory, Practice, and Research Challenges; Springer: Berlin/Heidelberg, Germany, 2011; pp. 85–122.

- [6] Vallecillo, A.; Gogolla, M. Modeling behavioral deontic constraints using UML and OCL. In Proceedings of the International Conference on Conceptual Modeling, Vienna, Austria, 3–6 November 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 134–148.

- [7] Keet, C.M. The What and How of Modelling Information and Knowledge: From Mind Maps to Ontologies; Springer Nature: Cham, Switzerland, 2023.

- [8] Voinov, A.; Jenni, K.; Gray, S.; Kolagani, N.; Glynn, P.D.; Bommel, P.; Prell, C.; Zellner, M.; Paolisso, M.; Jordan, R.; et al. Tools and methods in participatory modeling: Selecting the right tool for the job. Environ. Model. Softw. 2018, 109, 232–255.

- [9] Karagiannis, D.; Lee, M.; Hinkelmann, K.; Utz, W. Domain-Specific Conceptual Modeling: Concepts, Methods and ADOxx Tools; Springer Nature: Cham, Switzerland, 2022.

- [10] Delcambre, L.M.; Liddle, S.W.; Pastor, O.; Storey, V.C. Articulating conceptual modeling research contributions. In Proceedings of the Advances in Conceptual Modeling: ER 2021 Workshops CoMoNoS, EmpER, CMLS, St. John’s, NL, Canada, 18–21 October 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 45–60.

- [11] Argent, R.M.; Sojda, R.S.; Giupponi, C.; McIntosh, B.; Voinov, A.A.; Maier, H.R. Best practices for conceptual modelling in environmental planning and management. Environ. Model. Softw. 2016, 80, 113–121.

- [12] Nafis, F.A.; Rose, A.; Su, S.; Chen, S.; Han, B. Are We There Yet? Unravelling Usability Challenges and Opportunities in Collaborative Immersive Analytics for Domain Experts. In Proceedings of the International Conference on Human-Computer Interaction, Gothenburg, Sweden, 22–27 June 2025; Springer: Berlin/Heidelberg, Germany, 2025; pp. 159–181.

- [13] Stancek, M.; Polasek, I.; Zalabai, T.; Vincur, J.; Jolak, R.; Chaudron, M. Collaborative software design and modeling in virtual reality. Inf. Softw. Technol. 2024, 166, 107369.

- [14] Yigitbas, E.; Gorissen, S.; Weidmann, N.; Engels, G. Design and evaluation of a collaborative UML modeling environment in virtual reality. Softw. Syst. Model. 2023, 22, 1397–1425.

- [15] Freund, A.J.; Giabbanelli, P.J. Automatically combining conceptual models using semantic and structural information. In Proceedings of the 2021 Annual Modeling and Simulation Conference (ANNSIM), Fairfax, VA, USA, 19–22 July 2021; IEEE: New York, NY, USA, 2021; pp. 1–12.

- [16] Reddy, T.; Giabbanelli, P.J.; Mago, V.K. The artificial facilitator: Guiding participants in developing causal maps using voice-activated technologies. In Proceedings of the Augmented Cognition: 13th International Conference, AC 2019, Held as Part of the 21st HCI International Conference, HCII 2019, Orlando, FL, USA, 26–31 July 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 111–129.

- [17] Bork, D.; De Carlo, G. An extended taxonomy of advanced information visualization and interaction in conceptual modeling. Data Knowl. Eng. 2023, 147, 102209.

- [18] Poppe, E.; Brown, R.; Johnson, D.; Recker, J. Preliminary evaluation of an augmented reality collaborative process modelling system. In Proceedings of the 2012 International Conference on Cyberworlds, Darmstadt, Germany, 25–27 September 2012; IEEE: New York, NY, USA, 2012; pp. 77–84.

- [19] Mikkelsen, A.; Honningsøy, S.; Grønli, T.M.; Ghinea, G. Exploring Microsoft HoloLens for interactive visualization of UML diagrams. In Proceedings of the 9th International Conference on Management of Digital EcoSystems, Bangkok, Thailand, 7–10 November 2017; pp. 121–127.

- [20] Lutfi, M.; Valerdi, R. Integration of SysML and Virtual Reality Environment: A Ground Based Telescope System Example. Systems 2023, 11, 189.

- [21] Oberhauser, R.; Baehre, M.; Sousa, P. VR-EvoEA+ BP: Using Virtual Reality to Visualize Enterprise Context Dynamics Related to Enterprise Evolution and Business Processes. In Proceedings of the International Symposium on Business Modeling and Software Design, Utrecht, The Netherlands, 3–5 July 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 110–128.

- [22] Giabbanelli, P.; Shrestha, A.; Demay, L. Design and Development of a Collaborative Augmented Reality Environment for Systems Science. In Proceedings of the 57th Hawaii International Conference on System Sciences, Honolulu, HI, USA, 3–6 January 2024; pp. 589–598.

- [23] Storey, V.C.; Lukyanenko, R.; Castellanos, A. Conceptual modeling: Topics, themes, and technology trends. ACM Comput. Surv. 2023, 55, 1–38.

- [24] Riemer, K.; Holler, J.; Indulska, M. Collaborative process modelling – tool analysis and design implications. In Proceedings of the European Conference on Information Systems (ECIS), Helsinki, Finland, 9–11 June 2011.

- [25] Kelly, S.; Tolvanen, J.P. Collaborative modelling and metamodelling with MetaEdit+. In Proceedings of the 2021 ACM/IEEE International Conference on Model Driven Engineering Languages and Systems Companion (MODELS-C), Fukuoka, Japan, 10–15 October 2021; IEEE: New York, NY, USA, 2021; pp. 27–34.

- [26] Saini, R.; Mussbacher, G. Towards conflict-free collaborative modelling using VS code extensions. In Proceedings of the 2021 ACM/IEEE International Conference on Model Driven Engineering Languages and Systems Companion (MODELS-C), Fukuoka, Japan, 10–15 October 2021; IEEE: New York, NY, USA, 2021; pp. 35–44.

- [27] Gois, M.I.F. Collaborative Modelling Interaction Mechanisms. Master’s Thesis, NOVA University, Lisbon, Portugal, 2023.

- [28] David, I.; Aslam, K.; Malavolta, I.; Lago, P. Collaborative Model-Driven Software Engineering – A systematic survey of practices and needs in industry. J. Syst. Softw. 2023, 199, 111626.

- [29] Robinson, S. Conceptual modelling for simulation: Progress and grand challenges. J. Simul. 2019, 14, 1–20.

- [30] Tolk, A. Conceptual alignment for simulation interoperability: Lessons learned from 30 years of interoperability research. Simulation 2023, 100, 709–726.

- [31] Muff, F. State-of-the-Art and Related Work. In Metamodeling for Extended Reality; Springer Nature: Cham, Switzerland, 2025; pp. 17–60.

- [32] Ternes, B.; Rosenthal, K.; Strecker, S. User interface design research for modeling tools: A literature study. Enterp. Model. Inf. Syst. Archit. (EMISAJ) 2021, 16, 1–30.

- [33] Fellmann, M.; Metzger, D.; Jannaber, S.; Zarvic, N.; Thomas, O. Process modeling recommender systems: A generic data model and its application to a smart glasses-based modeling environment. Bus. Inf. Syst. Eng. 2018, 60, 21–38.

- [34] Muff, F.; Fill, H.G. Initial Concepts for Augmented and Virtual Reality-based Enterprise Modeling. In Proceedings of the ER Demos/Posters, St. John’s, NL, Canada, 18–21 October 2021; pp. 49–54.

- [35] Brunschwig, L.; Campos-López, R.; Guerra, E.; de Lara, J. Towards domain-specific modelling environments based on augmented reality. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering: New Ideas and Emerging Results (ICSE-NIER), Madrid, Spain, 25–28 May 2021; IEEE: New York, NY, USA, 2021; pp. 56–60.

- [36] Cardenas-Robledo, L.A.; Hernández-Uribe, Ó.; Reta, C.; Cantoral-Ceballos, J.A. Extended reality applications in industry 4.0 – A systematic literature review. Telemat. Inform. 2022, 73, 101863.

- [37] Schäfer, A.; Reis, G.; Stricker, D. A Survey on Synchronous Augmented, Virtual, and Mixed Reality Remote Collaboration Systems. ACM Comput. Surv. 2022, 55, 1–27.

- [38] Riihiaho, S. Usability testing. In The Wiley Handbook of Human Computer Interaction; Wiley: Hoboken, NJ, USA, 2018; Volume 1, pp. 255–275.

- [39] Giabbanelli, P.J.; Vesuvala, C.X. Human factors in leveraging systems science to shape public policy for obesity: A usability study. Information 2023, 14, 196.

- [40] Vidal-Balea, A.; Blanco-Novoa, O.; Fraga-Lamas, P.; Vilar-Montesinos, M.; Fernández-Caramés, T.M. Creating collaborative augmented reality experiences for industry 4.0 training and assistance applications: Performance evaluation in the shipyard of the future. Appl. Sci. 2020, 10, 9073.

- [41] Knopp, S.; Klimant, P.; Allmacher, C. Industrial use case – AR guidance using HoloLens for assembly and disassembly of a modular mold, with live streaming for collaborative support. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Beijing, China, 10–18 October 2019; IEEE: New York, NY, USA, 2019; pp. 134–135.

- [42] O’Keeffe, V.; Jang, R.; Manning, K.; Trott, R.; Howard, S.; Hordacre, A.L.; Spoehr, J. Forming a view: A human factors case study of augmented reality collaboration in assembly. Ergonomics 2024, 67, 1828–1844.

- [43] Wang, J.; Hu, Y.; Yang, X. Multi-person collaborative augmented reality assembly process evaluation system based on HoloLens. In Proceedings of the International Conference on Human-Computer Interaction, Online, 26 June–1 July 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 369–380.

- [44] Wang, P.; Yang, H.; Billinghurst, M.; Zhao, S.; Wang, Y.; Liu, Z.; Zhang, Y. A survey on XR remote collaboration in industry. J. Manuf. Syst. 2025, 81, 49–74.

- [45] Hidalgo, R.; Kang, J. Navigating Usability Challenges in Collaborative Learning with Augmented Reality. In Proceedings of the International Conference on Quantitative Ethnography, Philadelphia, PA, USA, 3–7 November 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 70–78.

- [46] Upadhyay, B.; Brady, C.; Madathil, K.C.; Bertrand, J.; Gramopadhye, A. Collaborative augmented reality in higher education settings – strategies, learning outcomes and challenges. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Washington, DC, USA, 23–27 October 2023; SAGE Publications: Los Angeles, CA, USA, 2023; Volume 67, pp. 1090–1096.

- [47] Pintani, D.; Caputo, A.; Mendes, D.; Giachetti, A. Cider: Collaborative interior design in extended reality. In Proceedings of the 15th Biannual Conference of the Italian SIGCHI Chapter, Torino, Italy, 20–22 September 2023; pp. 1–11.

- [48] Vovk, A.; Wild, F.; Guest, W.; Kuula, T. Simulator sickness in augmented reality training using the Microsoft HoloLens. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 23–26 April 2018; pp. 1–9.

- [49] Giabbanelli, P.J.; Tawfik, A.A. How perspectives of a system change based on exposure to positive or negative evidence. Systems 2021, 9, 23.

- [50] Asif, M.; Inam, A.; Adamowski, J.; Shoaib, M.; Tariq, H.; Ahmad, S.; Alizadeh, M.R.; Nazeer, A. Development of methods for the simplification of complex group built causal loop diagrams: A case study of the Rechna doab. Ecol. Model. 2023, 476, 110192.

- [51] van der Zee, D.J. Approaches for simulation model simplification. In Proceedings of the 2017 Winter Simulation Conference (WSC), Las Vegas, NV, USA, 3–6 December 2017; IEEE: New York, NY, USA, 2017; pp. 4197–4208.

- [52] Valdivia Cabrera, M.; Johnstone, M.; Hayward, J.; Bolton, K.A.; Creighton, D. Integration of large-scale community-developed causal loop diagrams: A Natural Language Processing approach to merging factors based on semantic similarity. BMC Public Health 2025, 25, 923.

- [53] Friedl-Knirsch, J.; Pointecker, F.; Pfistermüller, S.; Stach, C.; Anthes, C.; Roth, D. A Systematic Literature Review of User Evaluation in Immersive Analytics. In Proceedings of the Computer Graphics Forum, Savannah, GA, USA, 23–25 April 2024; Wiley Online Library: Hoboken, NJ, USA, 2024; Volume 43, p. e15111.

- [54] Vigderman, A. Virtual Reality Awareness and Adoption Report. 2024. Available online: https://www.security.org/digital-security/virtual-reality-annual-report/ (accessed on 30 May 2025).

- [55] Hu, M.; Shealy, T. Methods for measuring systems thinking: Differences between student self-assessment, concept map scores, and cortical activation during tasks about sustainability. In Proceedings of the 2018 ASEE Annual Conference & Exposition, Salt Lake City, UT, USA, 24–27 June 2018.

- [56] Vona, F.; Stern, M.; Ashrafi, N.; Kojić, T.; Hinzmann, S.; Grieshammer, D.; Voigt-Antons, J.N. Investigating the impact of virtual element misalignment in collaborative Augmented Reality experiences. In Proceedings of the 2024 16th International Conference on Quality of Multimedia Experience (QoMEX), Karlshamn, Sweden, 18–20 June 2024; IEEE: New York, NY, USA, 2024; pp. 293–299.

- [57] Numan, N.; Brostow, G.; Park, S.; Julier, S.; Steed, A.; Van Brummelen, J. CoCreatAR: Enhancing authoring of outdoor augmented reality experiences through asymmetric collaboration. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 26 April–1 May 2025; pp. 1–22.

- [58] Chan, W.P.; Hanks, G.; Sakr, M.; Zhang, H.; Zuo, T.; Van der Loos, H.M.; Croft, E. Design and evaluation of an augmented reality head-mounted display interface for human robot teams collaborating in physically shared manufacturing tasks. ACM Trans. Human-Robot Interact. (THRI) 2022, 11, 1–19.

- [59] Hoogendoorn, E.M.; Geerse, D.J.; Helsloot, J.; Coolen, B.; Stins, J.F.; Roerdink, M. A larger augmented-reality field of view improves interaction with nearby holographic objects. PLoS ONE 2024, 19, e0311804.

- [60] Murphy, R.O.; Ackermann, K.A.; Handgraaf, M.J. Measuring social value orientation. Judgm. Decis. Mak. 2011, 6, 771–781.

- [61] Soto, C.J.; John, O.P. Short and extra-short forms of the Big Five Inventory–2: The BFI-2-S and BFI-2-XS. J. Res. Personal. 2017, 68, 69–81.

- [62] Wiepke, A.; Heinemann, B. A systematic literature review on user factors to support the sense of presence in virtual reality learning environments. Comput. Educ. X Real. 2024, 4, 100064.

- [63] Katifori, A.; Lougiakis, C.; Roussou, M. Exploring the effect of personality traits in VR interaction: The emergent role of perspective-taking in task performance. Front. Virtual Real. 2022, 3, 860916.

- [64] Malin, J.; Winkler, S.; Brade, J.; Lorenz, M. Personality Traits and Presence Barely Influence User Experience Evaluation in VR Manufacturing Applications – Insights and Future Research Directions (Between-Subject Study). Int. J. Hum.-Comput. Interact. 2025, 1–22.

- [65] Knox, C.; Furman, K.; Jetter, A.; Gray, S.; Giabbanelli, P.J. Creating an FCM with Participants in an Interview or Workshop Setting. In Fuzzy Cognitive Maps: Best Practices and Modern Methods; Springer: Berlin/Heidelberg, Germany, 2024; pp. 19–44.

| Study | AR/VR | Modeling Formalism | Collaborative | Usability Assessed |
|---|---|---|---|---|
| [18] | AR | Business processes | – | Prototype only |
| [19] | AR | UML diagrams | – | Prototype only |
| [14] | VR | UML class diagrams | ✓ | ✓ |
| [13] | VR | UML class diagrams | ✓ | ✓ |
| [34] | AR | BPMN diagrams | – | Prototype only |
| [22] | AR | Causal maps | ✓ | Stage 1 only |
| Our work | AR | Causal maps | ✓ | ✓ (with users) |

| Task | Description | Rationale in Collaborative Causal Mapping |
|---|---|---|
| Find Node | Locate a specific node within the causal map. | Ensures participants can navigate the shared model and refer to common elements during discussion. |
| Delete Node | Remove an unnecessary or irrelevant concept. | Models often contain tangential or redundant concepts; deleting nodes is essential for simplifying and refining shared maps. |
| Create Edge | Add a directed causal link between two nodes. | Captures new consensus knowledge by explicitly encoding causal relationships identified during negotiation. |
| Identify Error | Detect and correct an incorrect causal relation. | Promotes critical review and correction of the shared model, maintaining accuracy and consistency across participants. |
| Merge Concepts | Combine two semantically related nodes into one. | Resolves differences in terminology or perspective, supporting conceptual alignment and a unified representation. |
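To make the five tasks concrete, here is a minimal in-memory sketch of a signed, directed causal map with one method per task, plus the two structural checks mentioned in the discussion of deletion (concepts with no incoming link, and map diameter). Everything here is hypothetical: the `CausalMap` class, treating a "wrong causality" as a reversed direction, computing the diameter on the undirected graph, and every example concept other than ‘heart diseases’, ‘heart problem’, ‘depression’, and ‘happiness’. It is not the data model of the HoloLens 2 application.

```python
from collections import deque
from typing import Optional


class CausalMap:
    """Hypothetical directed causal map: concept labels joined by signed edges (+1 or -1)."""

    def __init__(self) -> None:
        self.nodes: set[str] = set()
        self.edges: dict[tuple[str, str], int] = {}

    def find(self, label: str) -> Optional[str]:
        """Find Node: return the stored label matching `label`, ignoring case."""
        return next((n for n in self.nodes if n.lower() == label.lower()), None)

    def add_edge(self, cause: str, effect: str, sign: int = 1) -> None:
        """Create Edge: add a directed causal link, adding any missing concept."""
        self.nodes.update((cause, effect))
        self.edges[(cause, effect)] = sign

    def delete(self, label: str) -> None:
        """Delete Node: remove a concept together with every edge touching it."""
        self.nodes.discard(label)
        self.edges = {e: w for e, w in self.edges.items() if label not in e}

    def reverse(self, cause: str, effect: str) -> None:
        """Identify Error (assumed here to be a backwards link): redraw it the other way."""
        self.edges[(effect, cause)] = self.edges.pop((cause, effect))

    def merge(self, source: str, target: str) -> None:
        """Merge Concepts: move all of source's edges onto target, then drop source."""
        for (s, t), w in list(self.edges.items()):
            if source not in (s, t):
                continue
            del self.edges[(s, t)]
            new_s = target if s == source else s
            new_t = target if t == source else t
            if new_s != new_t:  # a source-target link would become a self-loop; drop it
                self.edges.setdefault((new_s, new_t), w)  # keep target's link on conflict
        self.nodes.discard(source)

    def exogenous(self) -> set[str]:
        """Concepts with no incoming edge (candidate peripheral variables)."""
        return self.nodes - {t for _, t in self.edges}

    def diameter(self) -> int:
        """Longest shortest path between reachable concepts, ignoring edge direction."""
        adj: dict[str, set[str]] = {n: set() for n in self.nodes}
        for s, t in self.edges:
            adj[s].add(t)
            adj[t].add(s)
        longest = 0
        for start in self.nodes:  # breadth-first search from every concept
            dist = {start: 0}
            queue = deque([start])
            while queue:
                u = queue.popleft()
                for v in adj[u]:
                    if v not in dist:
                        dist[v] = dist[u] + 1
                        queue.append(v)
            longest = max(longest, max(dist.values()))
        return longest


# Usage with a hypothetical map (only the heart/depression/happiness labels come from the study).
m = CausalMap()
m.add_edge("physical activity", "heart diseases", -1)
m.add_edge("heart problem", "depression")
m.add_edge("heart diseases", "depression")
m.add_edge("happiness", "depression", -1)
m.add_edge("heart diseases", "smoking")  # drawn backwards on purpose
m.add_edge("weather", "happiness")       # tangential concept
print(sorted(m.exogenous()), m.diameter())  # ['heart problem', 'physical activity', 'weather'] 4

print(m.find("Heart Problem"))                # heart problem
m.reverse("heart diseases", "smoking")        # fix the wrong causality
m.merge("heart problem", "heart diseases")    # unify the near-synonyms
m.delete("weather")                           # drop the tangent
m.add_edge("physical activity", "happiness")  # add a missing link
print(sorted(m.exogenous()), m.diameter())  # ['physical activity', 'smoking'] 3
```

When a merge would duplicate a link the target already has, the sketch keeps the target's existing link and its sign; in a live session, a sign conflict of that kind would be a cue for discussion rather than something to resolve silently.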

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shrestha, A.; Giabbanelli, P.J. Are We Ready for Synchronous Conceptual Modeling in Augmented Reality? A Usability Study on Causal Maps with HoloLens 2. Information 2025, 16, 952. https://doi.org/10.3390/info16110952

Shrestha A, Giabbanelli PJ. Are We Ready for Synchronous Conceptual Modeling in Augmented Reality? A Usability Study on Causal Maps with HoloLens 2. Information. 2025; 16(11):952. https://doi.org/10.3390/info16110952

Chicago/Turabian Style: Shrestha, Anish, and Philippe J. Giabbanelli. 2025. "Are We Ready for Synchronous Conceptual Modeling in Augmented Reality? A Usability Study on Causal Maps with HoloLens 2" Information 16, no. 11: 952. https://doi.org/10.3390/info16110952

APA Style: Shrestha, A., & Giabbanelli, P. J. (2025). Are We Ready for Synchronous Conceptual Modeling in Augmented Reality? A Usability Study on Causal Maps with HoloLens 2. Information, 16(11), 952. https://doi.org/10.3390/info16110952