1. Introduction

The intelligent transformation of education has emerged as a pivotal trend for future development [1]. Artificial intelligence makes it possible to automatically identify and analyze student classroom behavior. This improves understanding of students’ learning states and classroom performance, and it gives teachers objective, real-time data support. Together, these factors help optimize pedagogical practice and increase instructional efficiency and classroom management efficacy [2,3]. Conventional approaches rely mostly on human observation and documentation. These methods are subjective, prone to observer fatigue, and time-consuming, so they are hard to apply to large-scale, long-term classroom monitoring [4,5]. There is therefore a pressing need for a more effective and sophisticated technological approach to support classroom behavior analysis.

Driven by the rapid progress of big data and deep learning, artificial intelligence has achieved notable results in areas such as computer vision, medical imaging, and security surveillance, and is increasingly being applied in the education sector [6]. Li et al. [7] used convolutional neural networks (CNNs) to recognize and assess classroom teaching behaviors, significantly improving the precision of student behavior detection in support of classroom interaction analysis. Several researchers have integrated temporal or spatio–temporal modeling to identify students’ learning states. The IMRMB-Net model introduced by Feng et al. [8] balances occlusion handling, small object behavior identification, and computational efficiency in complex classroom environments. Other studies have examined lightweight detection networks tailored to resource-limited smart classroom settings [8]. For instance, Han et al. [9] strengthened multi-scale and fine-grained modeling with CA-C2f and 2DPE-MHA modules, complemented by dynamic sampling strategies. Meanwhile, Chen et al. [10] improved YOLOv8, which markedly increased robustness in occlusion and small-scale behavior detection.

These studies have laid a foundation for behavior identification in educational contexts; nonetheless, several problems remain in real classrooms. The large number of students, the considerable scale differences between front and rear rows, and frequent occlusion significantly reduce detection accuracy. At the same time, student behaviors are concentrated in a narrow range of static postures or visual cues such as “listening attentively,” “looking down,” or “standing up,” which requires models to discern subtle distinctions. Although current research has improved accuracy, most methods depend on complex network structures or incur substantial computational cost, making them difficult to deploy in everyday teaching.

This work presents an enhanced object identification model derived from YOLOv11, with primary contributions including the following:

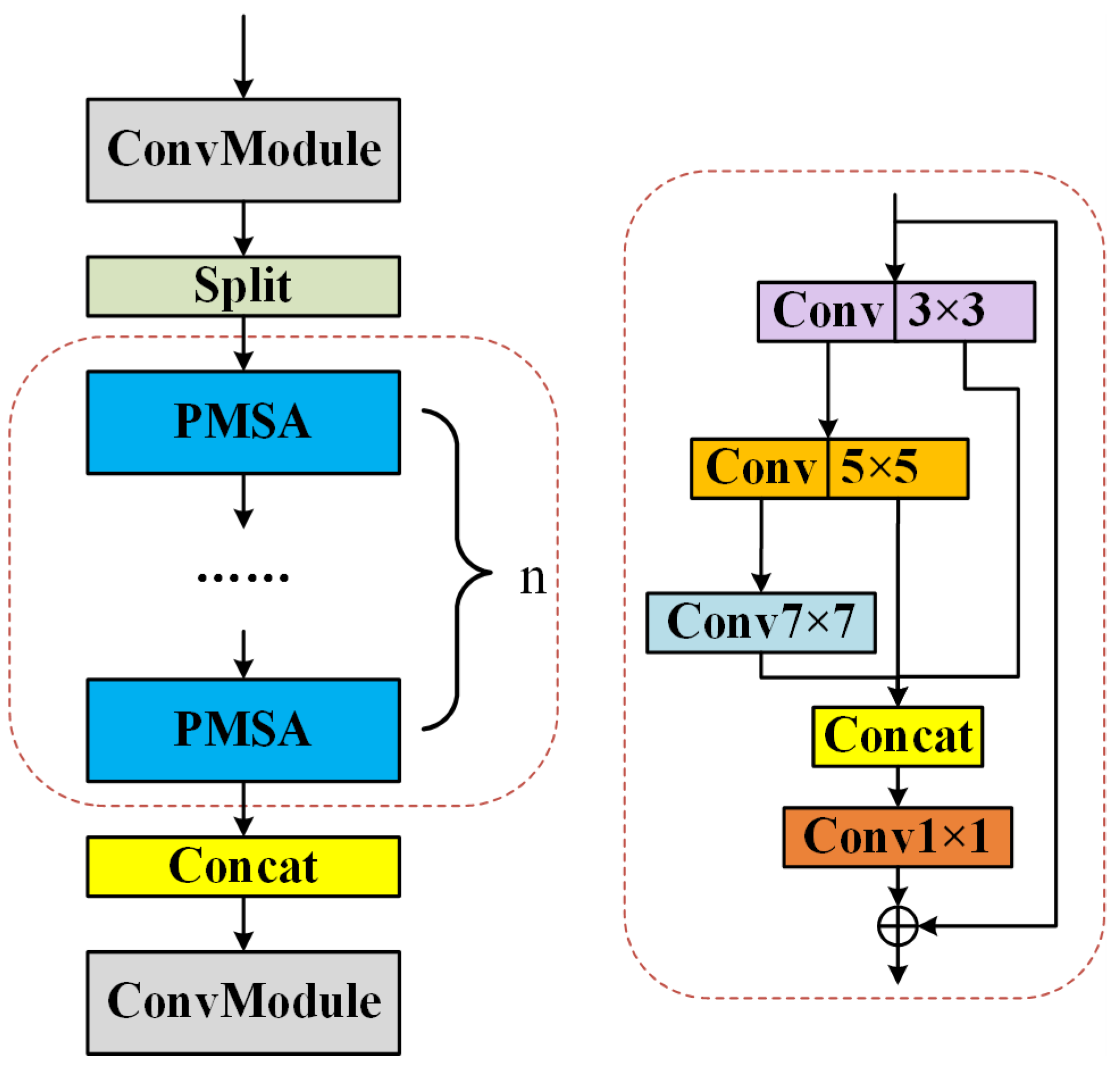

(1) Developed the CSP-PMSA module, which integrates Cross Stage Partial connections with partial multi-scale convolutions to adaptively strengthen the representation of important information, thereby enhancing contextual modeling capability in complex backgrounds;

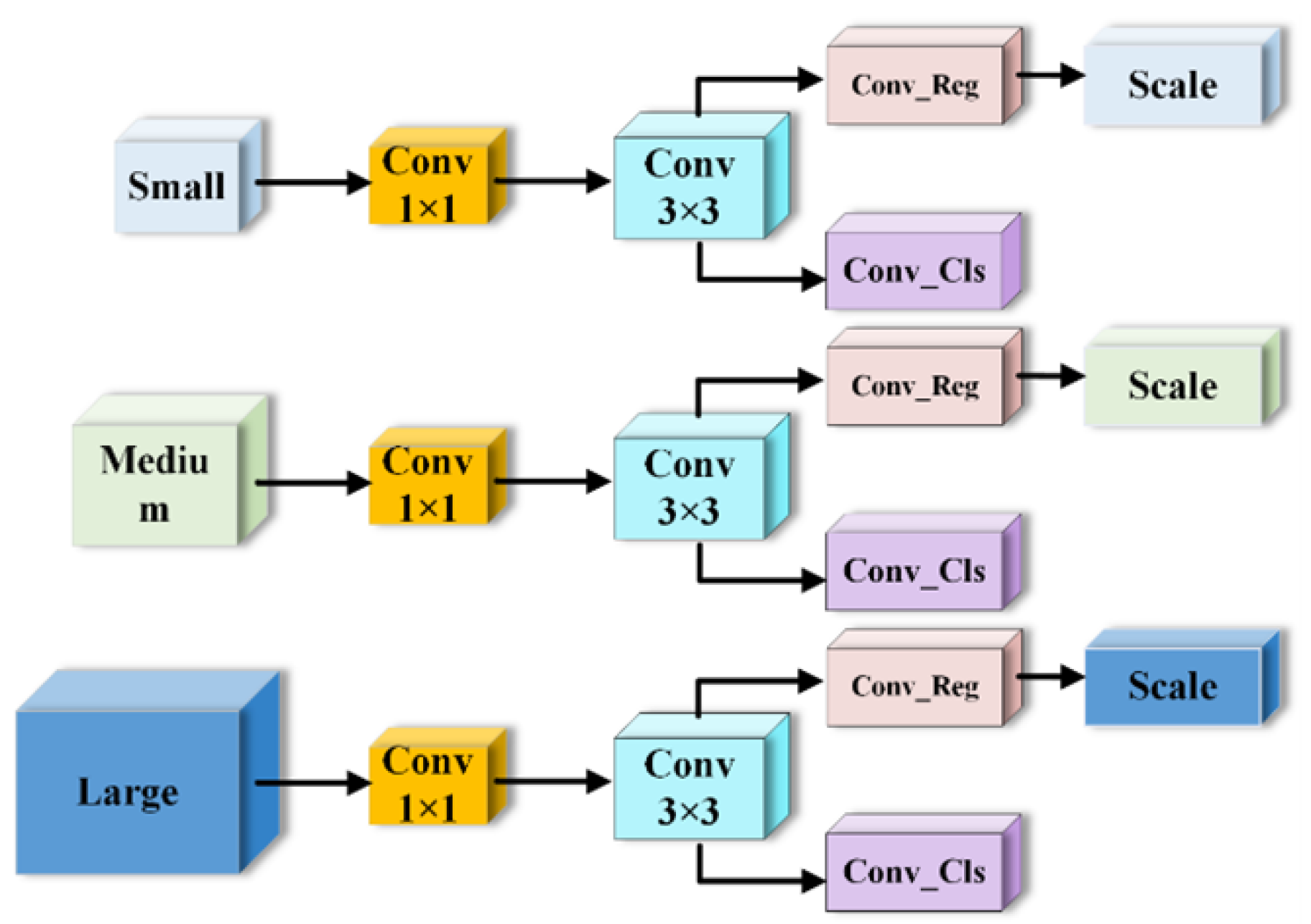

(2) Developed the Small Object Aware Head (SAH), which markedly improves the detection of small objects and subtle behaviors via a unified channel- and scale-adaptive mechanism;

(3) Introduced the Multi-Head Self-Attention (MHSA) mechanism before the detection head to capture global dependencies across multiple subspaces, thereby improving the model’s ability to differentiate visually similar behavior categories and its overall robustness.
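
As a rough illustration of contribution (3), the following sketch shows how multi-head self-attention lets every spatial position of a feature map attend to every other position, one channel subspace (head) at a time. It is a minimal sketch rather than the module used in this work: the function name `multi_head_self_attention` is hypothetical, the learned query, key, and value projections and any positional bias are omitted (identity projections are assumed), and plain Python lists stand in for tensors.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_self_attention(tokens: List[Vector], num_heads: int) -> List[Vector]:
    """Apply self-attention independently in each channel subspace (head).

    `tokens` holds one feature vector per spatial position, i.e. a
    flattened H*W grid. Assumption: identity projections, so the query,
    key, and value of a head are all the same channel slice.
    """
    dim = len(tokens[0])
    if dim % num_heads != 0:
        raise ValueError("channel dimension must be divisible by num_heads")
    head_dim = dim // num_heads
    outputs: List[Vector] = [[] for _ in tokens]
    for h in range(num_heads):
        lo, hi = h * head_dim, (h + 1) * head_dim
        heads = [t[lo:hi] for t in tokens]  # this head's subspace
        for i, query in enumerate(heads):
            # Scaled dot-product similarity to every position.
            scores = [
                sum(q * k for q, k in zip(query, key)) / math.sqrt(head_dim)
                for key in heads
            ]
            weights = softmax(scores)
            # Weighted sum of values: global context for position i.
            mixed = [
                sum(w * v[c] for w, v in zip(weights, heads))
                for c in range(head_dim)
            ]
            outputs[i].extend(mixed)  # concatenate heads channel-wise
    return outputs


# Hypothetical 3-position, 4-channel feature map.
SAMPLE_TOKENS = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 0.0, 3.0], [1.0, 1.0, 1.0, 1.0]]

if __name__ == "__main__":
    for row in multi_head_self_attention(SAMPLE_TOKENS, num_heads=2):
        print([round(x, 3) for x in row])
```

Because each output is a softmax-weighted average over all positions, the result keeps the input shape while mixing in context from the whole feature map, which is the property the contribution relies on for separating visually similar behaviors.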

The remainder of this paper is organized as follows: Section 2 gives a brief overview of related research; Section 3 describes the proposed model in detail; Section 4 introduces the dataset and evaluation metrics and presents the experimental setup, results, and analysis; and Section 5 concludes the paper and suggests future research directions.

4. Experimental Results and Analysis

4.5. Performance Comparison

Student behavior recognition in the complex practical setting of smart classrooms requires not only high detection accuracy but also careful attention to parameter count and computational complexity, so that real-time deployment remains feasible in resource-constrained teaching environments. To this end, the proposed approach is systematically compared with other popular detection models under the same experimental setup and dataset.

Table 5 displays the outcomes of the experiment.

Overall, conventional detectors such as SSD and Faster R-CNN meet detection requirements for specific static scenarios, with mAP@50 scores of 81.5% and 84.2%, respectively. However, their large parameter counts (24.5 M and 41.3 M) and high computational complexity (31.5 GFLOPs and 189 GFLOPs) make them unsuitable for real-time use in smart classrooms. The YOLO series, by contrast, strikes a better balance between computational efficiency and detection accuracy owing to its lightweight design. YOLOv11, the most recent baseline, reaches 88.9% mAP@50 and 73.1% mAP@50–95, the best results among the compared baselines, but its consistency in complex action recognition is still lacking. With its self-attention mechanism, DETR exhibits powerful global modeling capabilities. However, its computational demand of 58.6 GFLOPs and its 20.1 M parameters lead to deployment costs much greater than those of the YOLO series, which hampers large-scale adoption in classroom teaching.

By contrast, the proposed model shows advantages across all accuracy metrics, with an mAP@50 of 91.6%, an mAP@50–95 of 75.7%, a precision (P) of 88.2%, and a recall (R) of 85.6%. Compared with the YOLOv11 baseline, it gains 2.7 percentage points in mAP@50 (about 3.0% in relative terms) and 2.6 percentage points in mAP@50–95, while the parameter count rises from 2.6 M to 2.7 M and the computational cost from 6.4 to 9.2 GFLOPs. Compared with DETR, it reduces the parameter count by about 86.6% and the computational complexity by about 84.3% while improving mAP@50 and mAP@50–95 by 4.6 and 3.6 percentage points, respectively. It thus preserves high accuracy while drastically reducing resource use. These results indicate that the method is well suited to large-scale, low-latency intelligent classroom behavior detection, combining higher accuracy with low model complexity.
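
The comparison figures quoted above can be reproduced directly from Table 5. The short sketch below recomputes the accuracy gains (in percentage points) and the relative reductions in parameters and GFLOPs; the dictionary layout and the function names are illustrative assumptions, and the transformer-based detector is keyed by the label used in Table 5 (RT-DETR).

```python
# Values copied from Table 5:
# (mAP@50 %, mAP@50-95 %, parameters in M, GFLOPs).
TABLE5 = {
    "YOLOv11": (88.9, 73.1, 2.6, 6.4),
    "RT-DETR": (87.0, 72.1, 20.1, 58.6),
    "Ours": (91.6, 75.7, 2.7, 9.2),
}


def point_gain(ours: float, other: float) -> float:
    """Absolute gain in percentage points, rounded to one decimal."""
    return round(ours - other, 1)


def relative_reduction(ours: float, other: float) -> float:
    """Relative reduction of a cost metric in percent (negative = increase)."""
    return round(100.0 * (other - ours) / other, 1)


def compare(reference: str) -> dict:
    """Compare the proposed model against one reference row of Table 5."""
    o50, o5095, oparam, oflops = TABLE5["Ours"]
    r50, r5095, rparam, rflops = TABLE5[reference]
    return {
        "mAP@50 gain (pts)": point_gain(o50, r50),
        "mAP@50-95 gain (pts)": point_gain(o5095, r5095),
        "param reduction (%)": relative_reduction(oparam, rparam),
        "GFLOPs reduction (%)": relative_reduction(oflops, rflops),
    }


if __name__ == "__main__":
    for name in ("YOLOv11", "RT-DETR"):
        print(name, compare(name))
```

Against the YOLOv11 row, the two reduction entries come out negative, which reflects the modest increase in parameters and the larger increase in GFLOPs that the proposed model trades for its accuracy gains.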

These performance improvements can be attributed to the joint contribution of the three modules integrated into the network. CSP-PMSA enhances the representation of salient features and contextual cues in cluttered classroom scenes, mitigating background interference and occlusion effects. SAH unifies multi-scale channels and adaptively adjusts their weights, improving the detection of small and fine-grained behaviors in the rear seating areas. MHSA, introduced before each detection head, captures long-range dependencies and positional bias across spatial subspaces, enhancing global perception and improving the distinction between visually similar categories. As shown in Table 6, the proposed method improves AP@50 for “reading” and “listening” by 4.5 and 5.5 percentage points, respectively, relative to YOLOv11, and achieves gains of 3.5 and 4.0 percentage points for “raising hands” and “discussing”. These improvements are consistent with MHSA capturing subtle differences in posture, gestures, and orientation, thereby reducing confusion between similar categories and boosting fine-grained behavior recognition.
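
The per-class figures in Table 6 and the overall mAP@50 in Table 5 are linked by a simple average: mAP@50 is the mean of the per-class AP@50 values. The sketch below, which uses hypothetical helper names and values copied from the YOLOv11 and Ours rows of Table 6, checks that relationship and lists the per-class differences.

```python
CLASSES = [
    "writing", "reading", "listening", "turning around",
    "raising hand", "standing", "discussing", "guiding",
]

# Per-class AP@50 (%) copied from Table 6.
YOLOV11 = [90.5, 89.5, 91.0, 82.5, 84.5, 90.0, 89.0, 94.2]
OURS = [96.0, 94.0, 96.5, 86.0, 88.0, 95.0, 93.0, 84.3]


def mean_ap(per_class_ap: list) -> float:
    """mAP@50 as the unweighted mean of per-class AP@50, one decimal."""
    return round(sum(per_class_ap) / len(per_class_ap), 1)


def per_class_gain(ours: list, base: list) -> dict:
    """Difference in AP@50 (percentage points) for every class."""
    return {
        name: round(o - b, 1)
        for name, o, b in zip(CLASSES, ours, base)
    }


if __name__ == "__main__":
    print("mAP@50 baseline:", mean_ap(YOLOV11))
    print("mAP@50 ours:", mean_ap(OURS))
    for name, gain in per_class_gain(OURS, YOLOV11).items():
        print(f"{name:>15}: {gain:+.1f}")
```

The two means agree with the mAP@50 values reported for the same models in Table 5. With the Table 6 values as printed, “guiding” is the only class for which the difference is negative.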

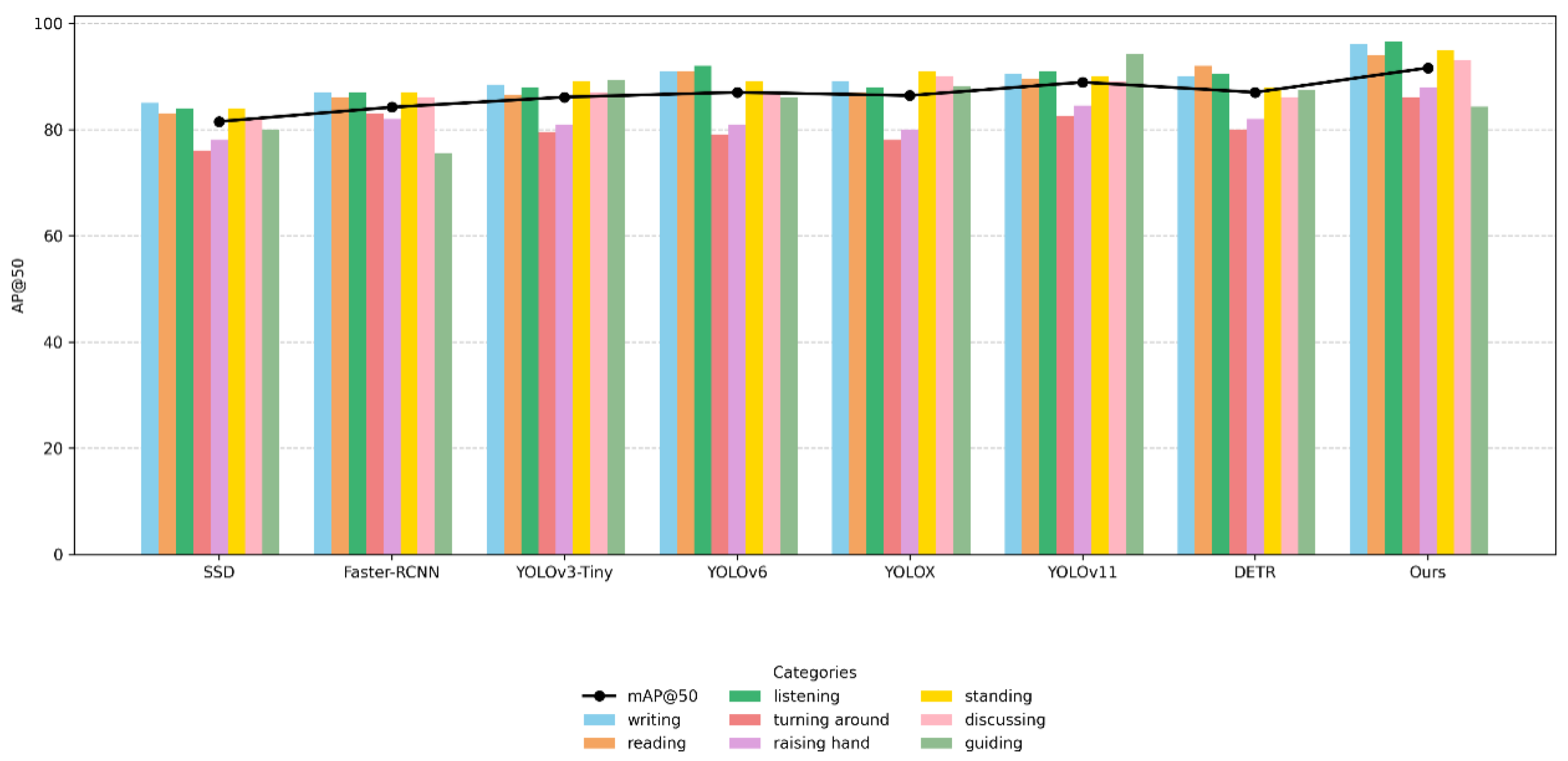

Detection performance varies noticeably among the eight student behavior classes at the category level (see Table 6 and Figure 8). For static behaviors such as “writing” and “reading,” SSD and Faster R-CNN achieve respectable accuracy; however, for small-object or dynamic categories such as “turning around” and “guiding,” their accuracy drops significantly. YOLOv3-Tiny, YOLOv6, and YOLOX show overall gains, but they still struggle to separate similar categories such as “raising hand” and “discussing.” DETR excels in large-motion categories such as “standing” but varies considerably in small object identification. YOLOv11 performs in a fairly balanced way but lacks stability for several fine-grained actions. By contrast, the proposed model demonstrates good accuracy across the eight behavioral categories, particularly for fine-grained actions such as “raising hands” and “discussing.” In challenging classroom contexts, its mAP@50 curve stays above those of the other models, indicating greater flexibility and discriminative capability.

Notably, the suggested model shows better consistency and generalization capacities across behavioral categories in addition to striking a balance between detection performance and efficiency. This makes it possible to better adapt to a variety of classroom environments, better identify intricate interactive behaviors, and enable dynamic evaluation of student involvement, attentiveness, and classroom interaction patterns in educational settings. In conclusion, the suggested approach shows great application potential and usefulness by achieving high accuracy, low computational complexity, and strong robustness in smart classroom behavior detection tasks.

4.6. Model Performance Analysis of Deep Learning for Student Behavior Detection in Smart Classroom Environments

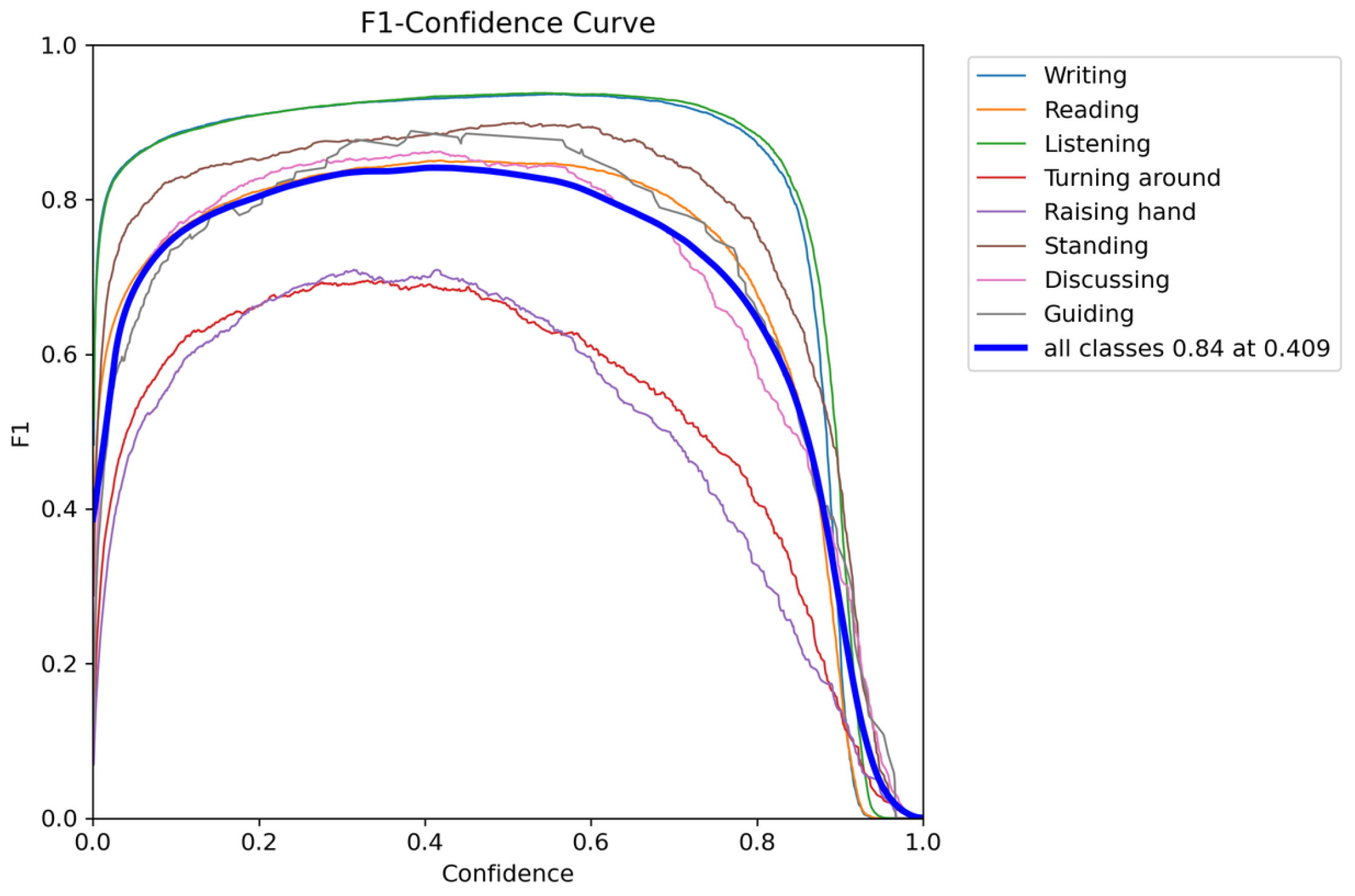

Figure 9 shows the F1 confidence curve of the YOLOv11n model. The model performs consistently across the majority of behavioral categories, but it still has difficulty with small targets and fine-grained behaviors. For example, YOLOv11n’s low F1 scores for the “turning around” and “guiding” actions show that it is not sensitive enough to local features and occlusions in complex classroom environments. Moreover, although it achieves adequate recognition accuracy for typical static behaviors (such as “writing” and “reading”), its high rates of false positives and false negatives for dynamic actions and visually similar categories limit its practical applicability.

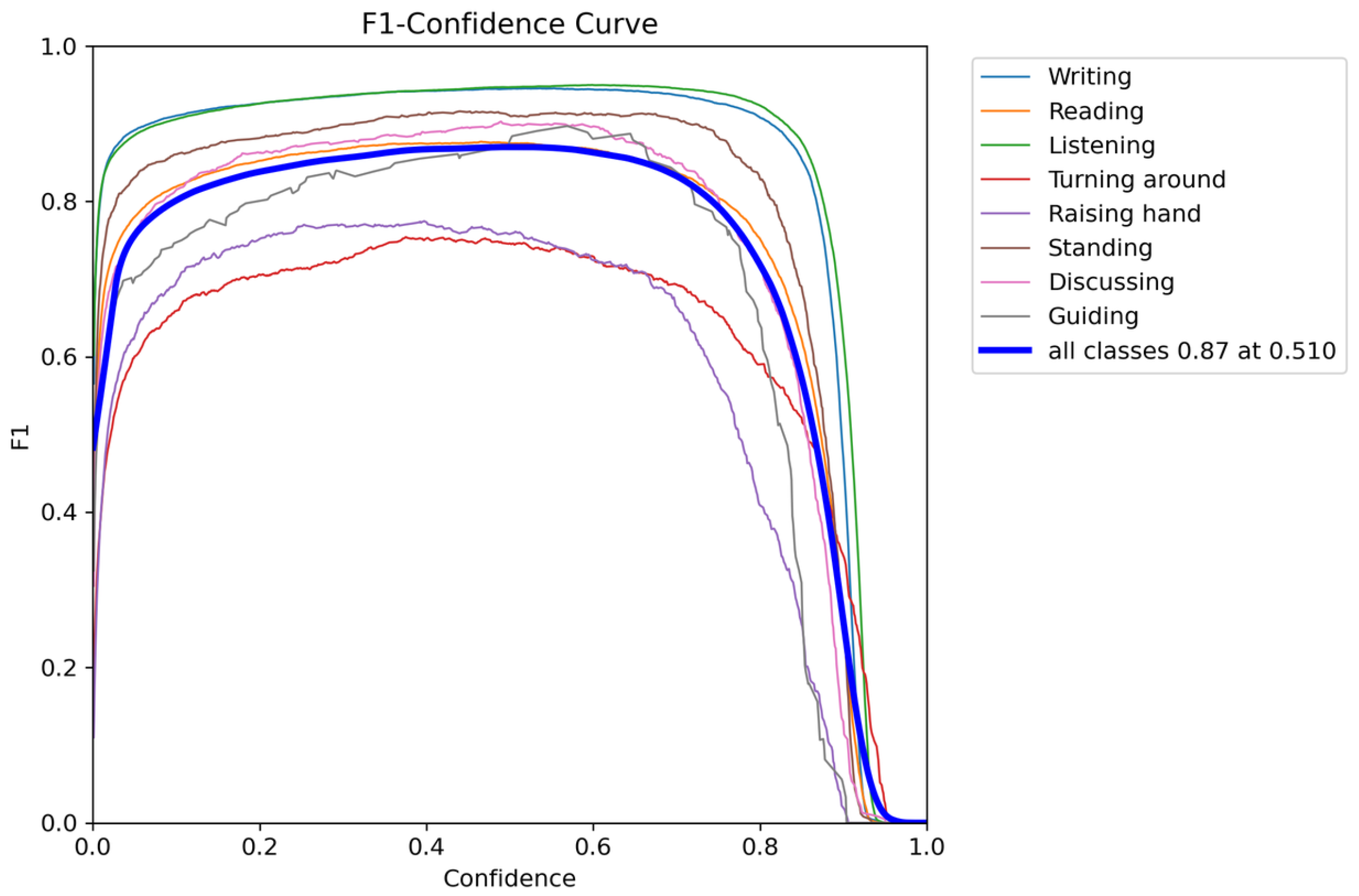

By contrast, Figure 10 shows the F1 confidence curve of the proposed model. The curve is smoother and more stable overall, showing that the model retains good precision and recall across a range of confidence thresholds. Notably, the improved model achieves noticeably higher F1 scores for categories such as “writing” and “raising hand,” reflecting better recognition of small objects and fine-grained behaviors. More significantly, it discriminates easily confused categories (e.g., “raising hand” versus “discussing”) better than YOLOv11n. This result suggests that the CSP-PMSA, SAH, and MHSA modules have complementary benefits when modeling features at different hierarchical levels.
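
For readers unfamiliar with F1 confidence curves, the sketch below shows how one point of such a curve can be obtained: detections below a confidence threshold are discarded, and precision, recall, and F1 are computed from what remains. It is a simplified illustration under stated assumptions: the function name `f1_curve` and the sample detections are hypothetical, each detection is assumed to have already been matched to the ground truth (for example at an IoU of 0.5), and the smoothing that plotting tools typically apply is omitted.

```python
from typing import List, Tuple

# One detection = (confidence score, is it a true positive?).
Detection = Tuple[float, bool]


def f1_curve(
    detections: List[Detection], num_gt: int, thresholds: List[float]
) -> List[Tuple[float, float]]:
    """Return (threshold, F1) pairs for one behavior class."""
    curve = []
    for t in thresholds:
        kept = [is_tp for conf, is_tp in detections if conf >= t]
        tp = sum(kept)            # correct detections that survive
        fp = len(kept) - tp       # wrong detections that survive
        precision = tp / (tp + fp) if kept else 0.0
        recall = tp / num_gt if num_gt else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom > 0 else 0.0
        curve.append((t, f1))
    return curve


# Hypothetical detections for one class with 4 ground-truth instances.
SAMPLE_DETECTIONS = [(0.9, True), (0.8, True), (0.7, False), (0.6, True), (0.3, False)]
SAMPLE_NUM_GT = 4

if __name__ == "__main__":
    for t, f1 in f1_curve(SAMPLE_DETECTIONS, SAMPLE_NUM_GT, [0.0, 0.5, 0.85, 0.95]):
        print(f"confidence >= {t:.2f}: F1 = {f1:.3f}")
```

A low threshold keeps false positives and depresses precision, while a high threshold discards true positives and depresses recall, so a curve that stays high and flat over a wide threshold range indicates a detector whose confidence scores separate correct from incorrect detections well.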

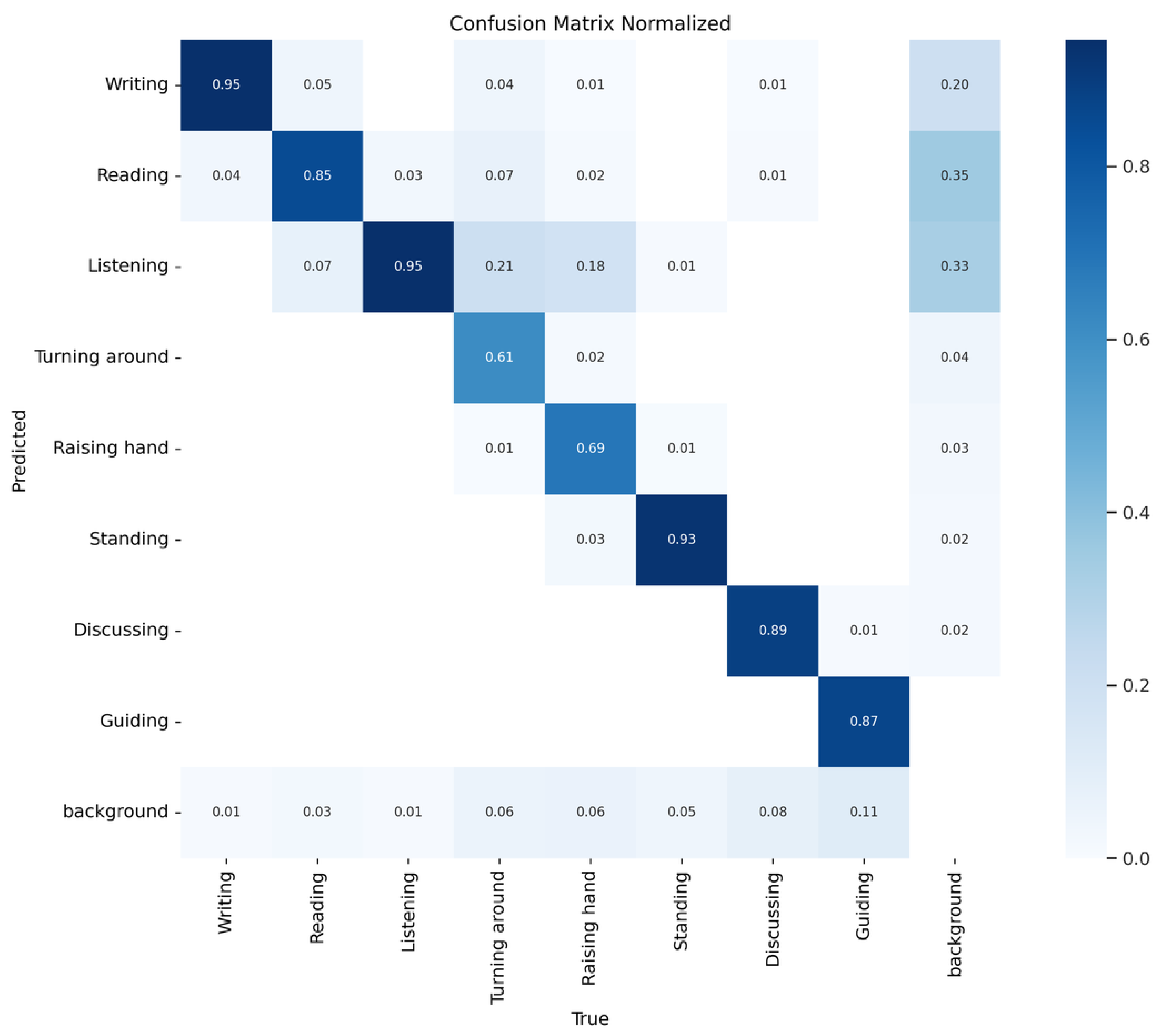

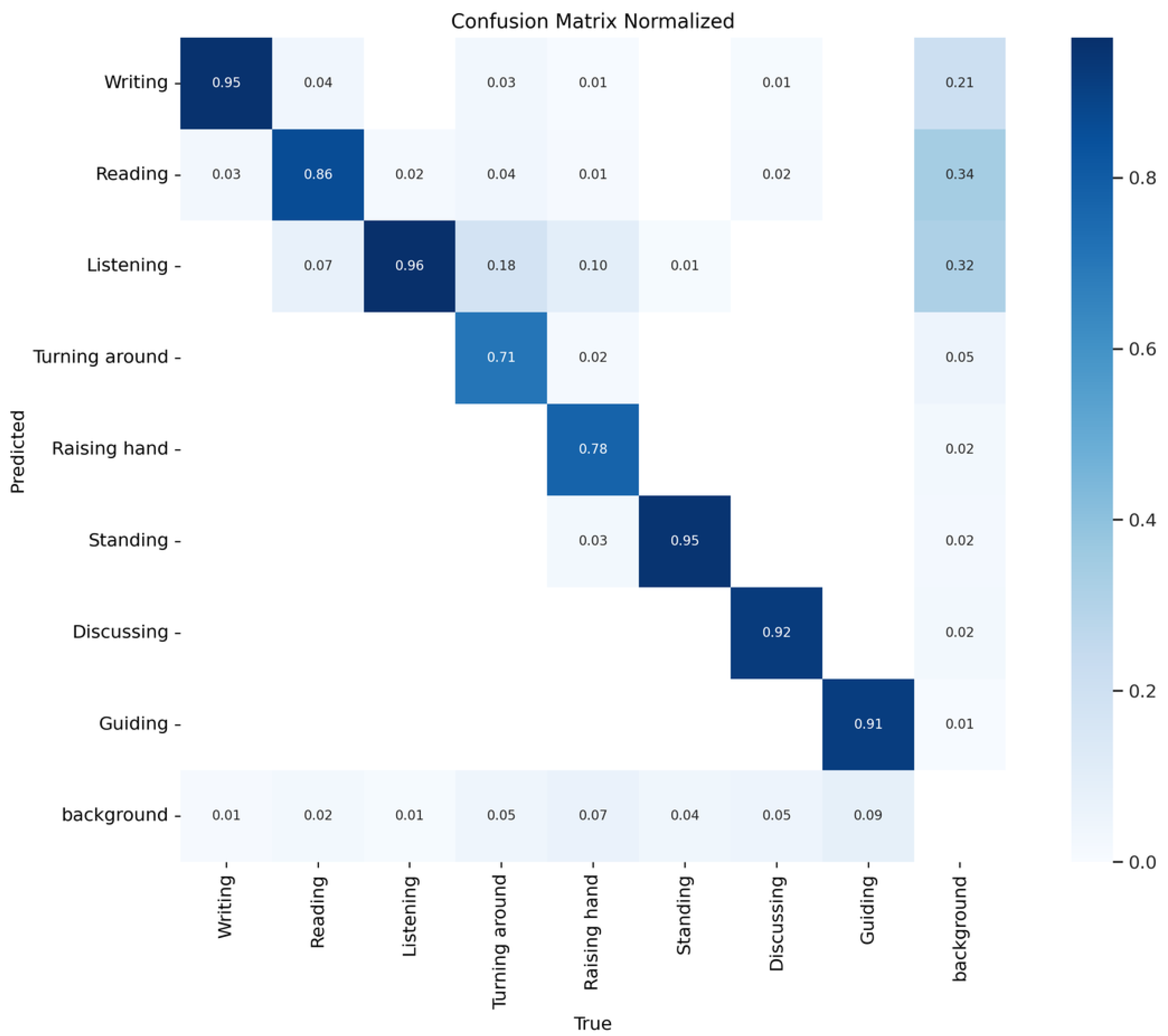

Figure 11 and Figure 12 use normalized confusion matrices to show the differences before and after the improvement. The diagonal entries of the improved model increase across behavioral categories, indicating an overall gain in classification accuracy. The most notable improvements occur for actions that are prone to occlusion or small in scale: turning around rose from 0.61 to 0.71 (+0.10), raising hand from 0.69 to 0.78 (+0.09), standing from 0.93 to 0.95 (+0.02), discussing from 0.89 to 0.92 (+0.03), and guiding from 0.87 to 0.91 (+0.04). Visually stable or static categories held steady or improved slightly: reading rose from 0.85 to 0.86 (+0.01), writing remained at 0.95, and listening rose from 0.95 to 0.96 (+0.01). This pattern of improvement matches the functions of the three modules: by modeling global dependencies and positional bias across multiple subspaces, MHSA improves discrimination between similar categories (e.g., discussing and standing); PMSA suppresses background interference in context modeling, ensuring high consistency for categories such as listening and standing in complex scenes; and SAH strengthens small-scale and local features, greatly improving detection of turning around and raising hand.
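
The diagonal values discussed above come from normalized confusion matrices. As a minimal sketch of how such values arise, the code below normalizes a small matrix of raw counts so that each column sums to one. The layout (rows are predicted classes, columns are true classes, with an extra “background” entry) is an assumption about how the matrices in Figures 11 and 12 are organized, and the counts are hypothetical values chosen only for illustration.

```python
from typing import List

Matrix = List[List[float]]


def normalize_columns(counts: Matrix) -> Matrix:
    """Divide every entry by its column sum (empty columns stay zero)."""
    n_rows, n_cols = len(counts), len(counts[0])
    col_sums = [sum(counts[r][c] for r in range(n_rows)) for c in range(n_cols)]
    return [
        [counts[r][c] / col_sums[c] if col_sums[c] else 0.0 for c in range(n_cols)]
        for r in range(n_rows)
    ]


def diagonal(matrix: Matrix) -> List[float]:
    """Fraction of each true class that is predicted as itself."""
    return [matrix[i][i] for i in range(min(len(matrix), len(matrix[0])))]


# Assumed layout: rows = predicted, columns = true.
LABELS = ["turning around", "raising hand", "background"]
# Hypothetical raw counts, not taken from the paper.
SAMPLE_COUNTS = [
    [71, 4, 6],    # predicted "turning around"
    [9, 78, 4],    # predicted "raising hand"
    [20, 18, 0],   # predicted "background" (missed instances)
]

if __name__ == "__main__":
    normalized = normalize_columns(SAMPLE_COUNTS)
    for label, value in zip(LABELS, diagonal(normalized)):
        print(f"{label:>15}: {value:.2f}")
```

Under this layout a diagonal entry reads as the share of a true class that the detector labels correctly, so an increase such as 0.61 to 0.71 means ten more instances in every hundred are assigned to the right behavior.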

The improvements are further supported by lower confusion between specific class pairs. The misclassification rate for reading→guiding dropped from 0.35 to 0.34 (−0.01), listening→turning around from 0.21 to 0.18 (−0.03), and listening→guiding from 0.33 to 0.32 (−0.01). False positives caused by the background were also reduced, with the bottom “background” row showing lower interference across categories. For example, the guiding column fell from 0.11 to 0.09 (−0.02), the turning around column from 0.06 to 0.05 (−0.01), the reading column from 0.03 to 0.02 (−0.01), and the standing column from 0.05 to 0.04 (−0.01). These changes reflect SAH’s role in scale alignment (reducing misclassification of small targets as adjacent categories), PMSA’s advantage in context filtering (reducing false triggers in complex backgrounds), and MHSA’s global consistency modeling (reducing confusion between semantically similar categories).

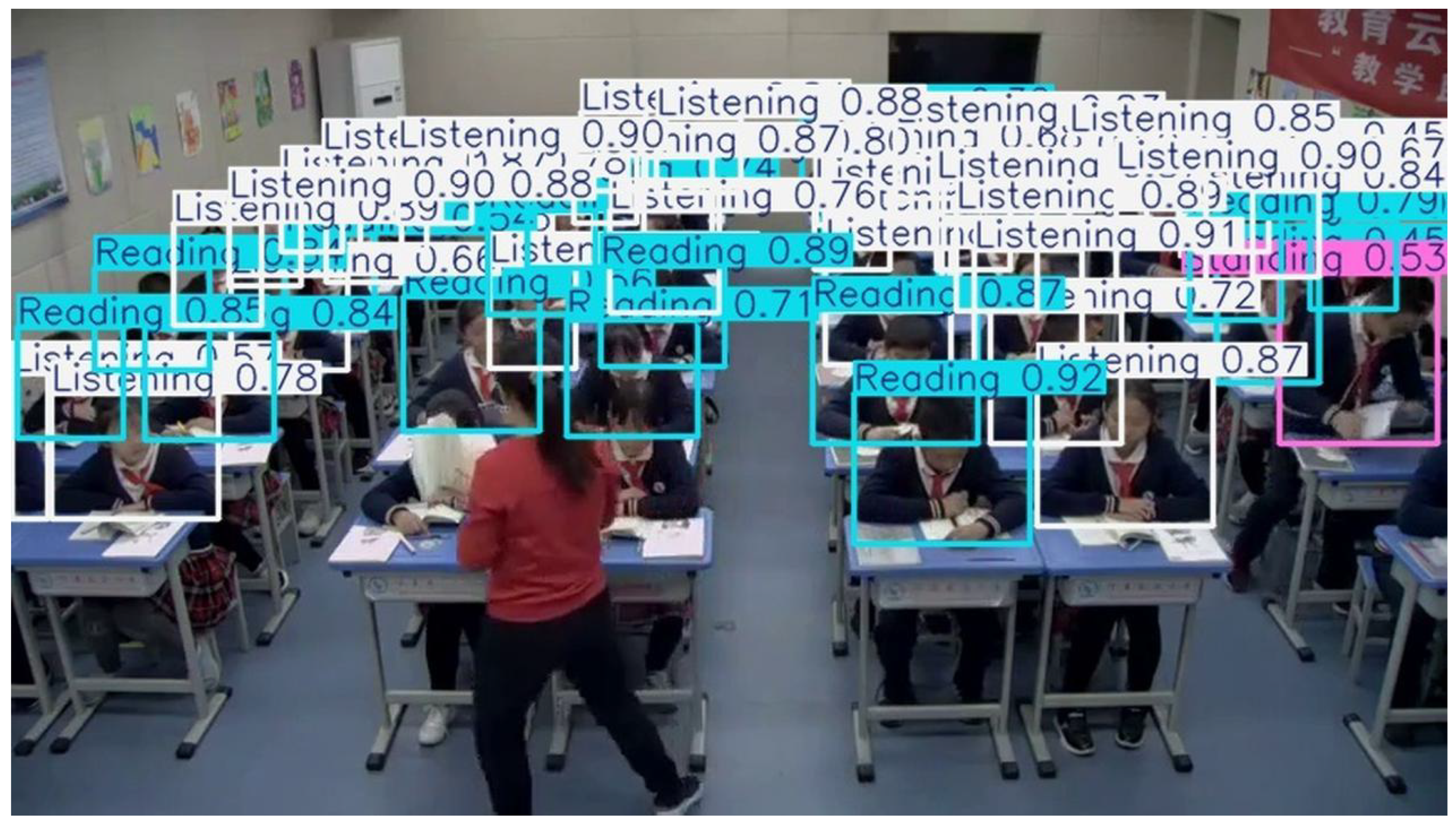

Figure 13 shows the classroom detection results of the YOLOv11 baseline model. For common static behaviors such as “reading” and “listening,” the model reliably generates stable bounding boxes. However, it has trouble with small actions and occlusions, which can result in missed detections or inconsistent labeling. For example, a “writing” activity is not detected in Figure 13, demonstrating the model’s inability to reliably identify fine-grained behaviors in busy classroom settings.

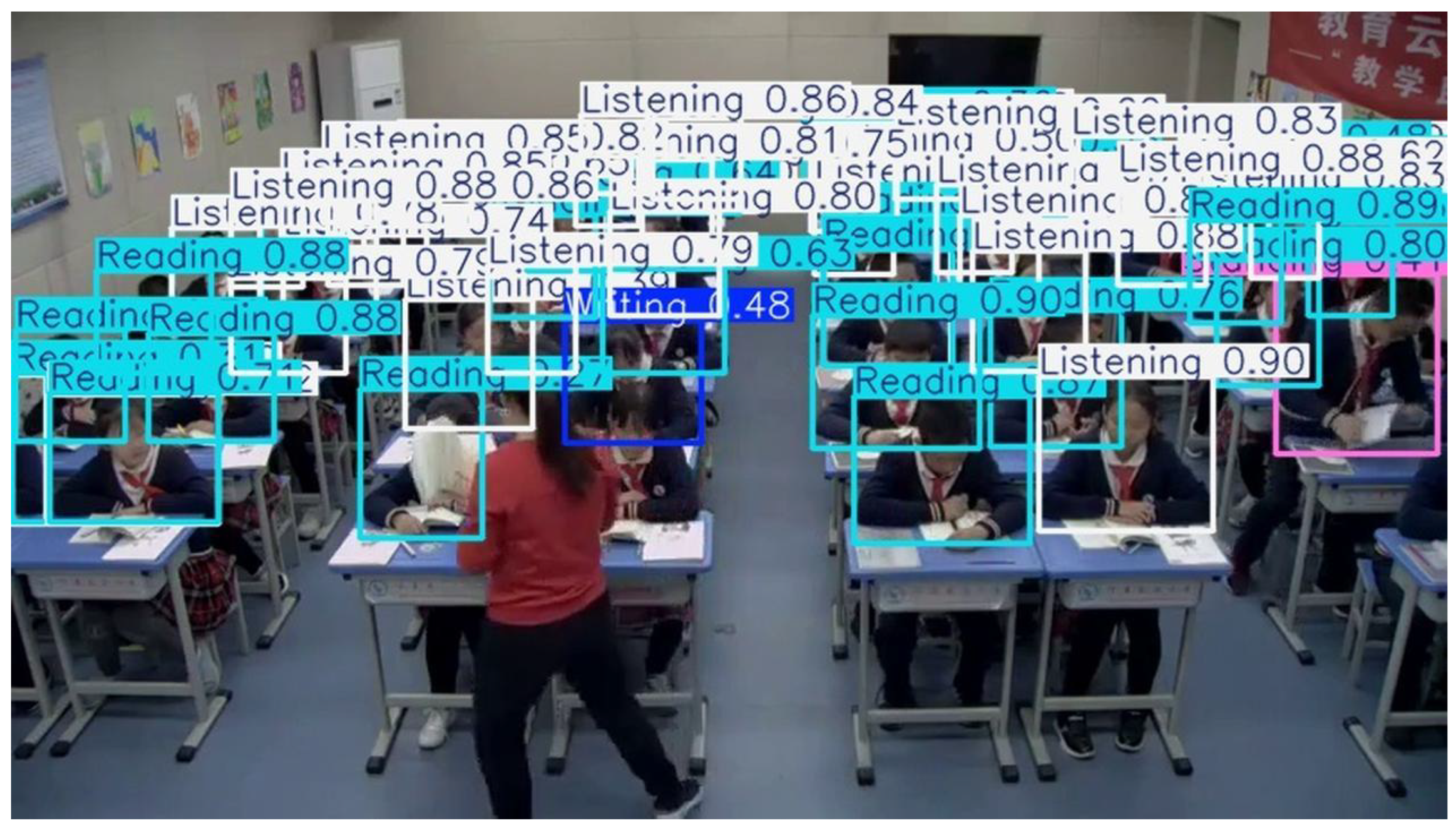

By contrast, Figure 14 shows the detection results of the proposed model. With more accurate bounding boxes and higher confidence scores, the improved model performs noticeably better. It handles partial occlusions well and correctly detects subtle actions such as “writing.” Figure 14 also shows fewer duplicate boxes and misclassifications, so the output is cleaner and more dependable than that of the baseline. In summary, the improved model outperforms the YOLOv11 baseline in real-world classroom scenarios, particularly in handling occlusions and recognizing complex behaviors.

Author Contributions

Conceptualization, J.W.; Methodology, J.W. and S.T.; Software, S.T.; Validation, J.W. and Y.S.; Formal Analysis, S.T.; Investigation, J.W.; Resources, J.W.; Data Curation, S.T.; Writing—Original Draft Preparation, J.W. and S.T.; Writing—Review and Editing, J.W., S.T. and Y.S.; Visualization, J.W., S.T. and Y.S.; Supervision, J.W.; Project Administration, J.W.; Funding Acquisition, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Scientific Research Program of the Hubei Provincial Department of Education (grant number B2023417) and the Hubei Province First-Class Undergraduate Course Construction Project.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of Wuhan Qingchuan University (24 October 2025).

Informed Consent Statement

This study involves the analysis of anonymized or de-identified classroom behavioral data and does not include any medical intervention or activities that may infringe on participants’ rights or privacy. All procedures comply with the principles outlined in the Declaration of Helsinki (1975, revised 2013) and the relevant institutional and national ethical standards.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Yang, Y.; Chen, L.; He, W.; Sun, D.; Salas-Pilco, S.Z. Artificial intelligence for enhancing special education for K-12: A decade of trends, themes, and global insights (2013–2023). Int. J. Artif. Intell. Educ. 2024, 35, 1129–1177.

2. Hu, J.; Huang, Z.; Li, J.; Xu, L.; Zou, Y. Real-time classroom behavior analysis for enhanced engineering education: An AI-assisted approach. Int. J. Comput. Intell. Syst. 2024, 17, 167.

3. Liu, Q.; Jiang, X.; Jiang, R. Classroom behavior recognition using computer vision: A systematic review. Sensors 2025, 25, 373.

4. Zhao, X.-M.; Yusop, F.D.B.; Liu, H.-C.; Prilanita, Y.-N.; Chang, Y.-X. Classroom student behavior recognition using an intelligent sensing framework. IEEE Access 2025, 13, 49767–49776.

5. Liu, S.; Zhang, J.; Su, W. An improved method of identifying learner’s behaviors based on deep learning. J. Supercomput. 2022, 78, 12861–12872.

6. Zheng, L.; Wang, C.; Chen, X.; Song, Y.; Meng, Z.; Zhang, R. Evolutionary machine learning builds smart education big data platform: Data-driven higher education. Appl. Soft Comput. 2023, 136, 110114.

7. Li, G.; Liu, F.; Wang, Y.; Guo, Y.; Xiao, L.; Zhu, L. A convolutional neural network (CNN) based approach for the recognition and evaluation of classroom teaching behavior. Sci. Program. 2021, 2021, 6336773.

8. Feng, C.; Luo, Z.; Kong, D.; Ding, Y.; Liu, J. IMRMB-Net: A lightweight student behavior recognition model for complex classroom scenarios. PLoS ONE 2025, 20, e0318817.

9. Han, L.; Ma, X.; Dai, M.; Bai, L. A WAD-YOLOv8-based method for classroom student behavior detection. Sci. Rep. 2025, 15, 9655.

10. Chen, H.; Zhou, G.; Jiang, H. Student behavior detection in the classroom based on improved YOLOv8. Sensors 2023, 23, 8385.

11. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

12. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

13. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28.

14. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

15. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

16. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

17. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

18. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

19. Amatari, V.O. The instructional process: A review of Flanders’ interaction analysis in a classroom setting. Int. J. Second. Educ. 2015, 3, 43–49.

20. Wang, D.; Han, H.; Liu, H. Analysis of instructional interaction behaviors based on OOTIAS in smart learning environment. In Proceedings of the 2019 Eighth International Conference on Educational Innovation through Technology (EITT), Biloxi, MS, USA, 6–8 December 2019; pp. 147–152.

21. Sarabu, A.; Santra, A.K. Human action recognition in videos using convolution long short-term memory network with spatio-temporal networks. Emerg. Sci. J. 2021, 5, 25–33.

22. Pang, C.; Lu, X.; Lyu, L. Skeleton-based action recognition through contrasting two-stream spatial-temporal networks. IEEE Trans. Multimed. 2023, 25, 8699–8711.

23. Varshney, N.; Bakariya, B. Deep convolutional neural model for human activities recognition in a sequence of video by combining multiple CNN streams. Multimed. Tools Appl. 2022, 81, 42117–42129.

24. Wang, Z.; Yao, J.; Zeng, C.; Li, L.; Tan, C. Students’ classroom behavior detection system incorporating deformable DETR with Swin transformer and light-weight feature pyramid network. Systems 2023, 11, 372.

25. Peng, S.; Zhang, X.; Zhou, L.; Wang, P. YOLO-CBD: Classroom behavior detection method based on behavior feature extraction and aggregation. Sensors 2025, 25, 3073.

26. Zhu, W.; Yang, Z. CSB-YOLO: A rapid and efficient real-time algorithm for classroom student behavior detection. J. Real-Time Image Process. 2024, 21, 140.

27. Chen, H.; Guan, J. Teacher–student behavior recognition in classroom teaching based on improved YOLO-v4 and Internet of Things technology. Electronics 2022, 11, 3998.

28. Zhang, Y.; Wu, Z.; Chen, X.; Dai, L.; Li, Z.; Zong, X.; Liu, T. Classroom behavior recognition based on improved YOLOv3. In Proceedings of the 2020 International Conference on Artificial Intelligence and Education (ICAIE), Hangzhou, China, 26–28 June 2020; pp. 93–97.

29. Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient multi-scale attention module with cross-spatial learning. In Proceedings of the ICASSP 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

30. Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (ICML), Virtual Event, 18–24 July 2021; pp. 11863–11874.

31. Lau, K.W.; Po, L.-M.; Rehman, Y.A.U. Large separable kernel attention: Rethinking the large kernel attention design in CNN. Expert Syst. Appl. 2024, 236, 121352.

32. Cai, X.; Lai, Q.; Wang, Y.; Wang, W.; Sun, Z.; Yao, Y. Poly kernel inception network for remote sensing detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 27706–27716.

33. Srinivas, A.; Lin, T.-Y.; Parmar, N.; Shlens, J.; Abbeel, P.; Vaswani, A. Bottleneck transformers for visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 16519–16529.

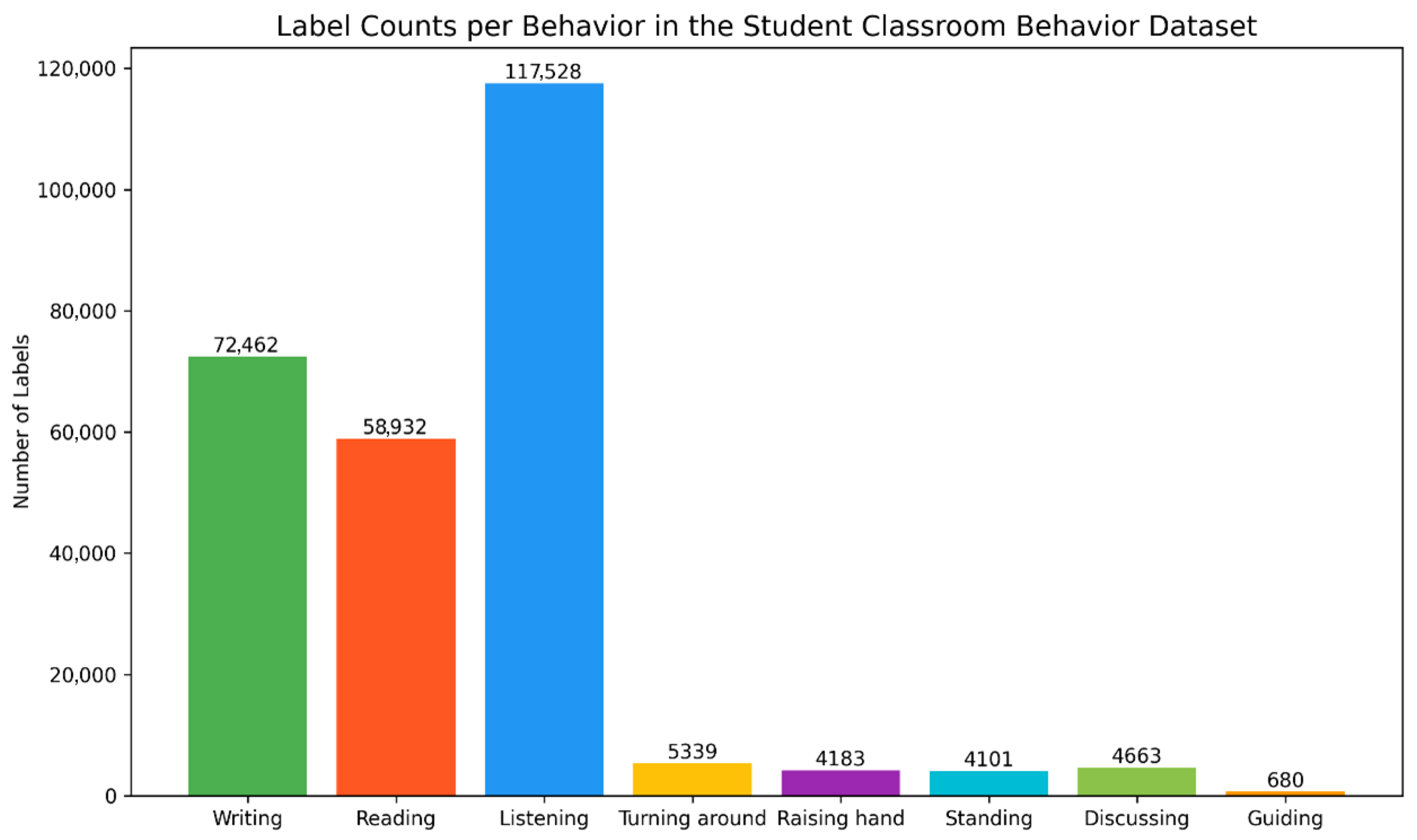

Figure 1. Distribution of labels across rows in the dataset.

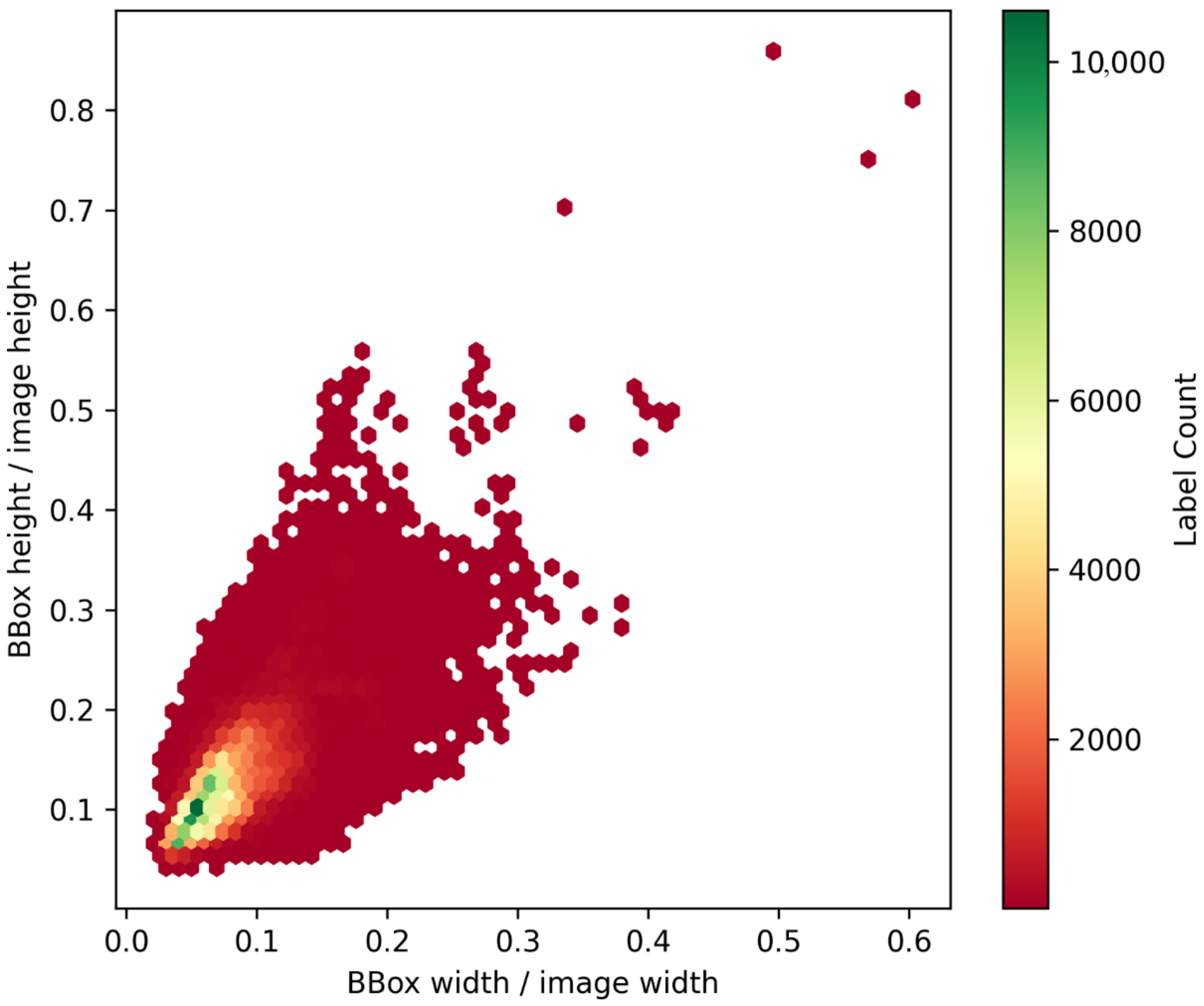

Figure 2. Visualization of entire dataset.

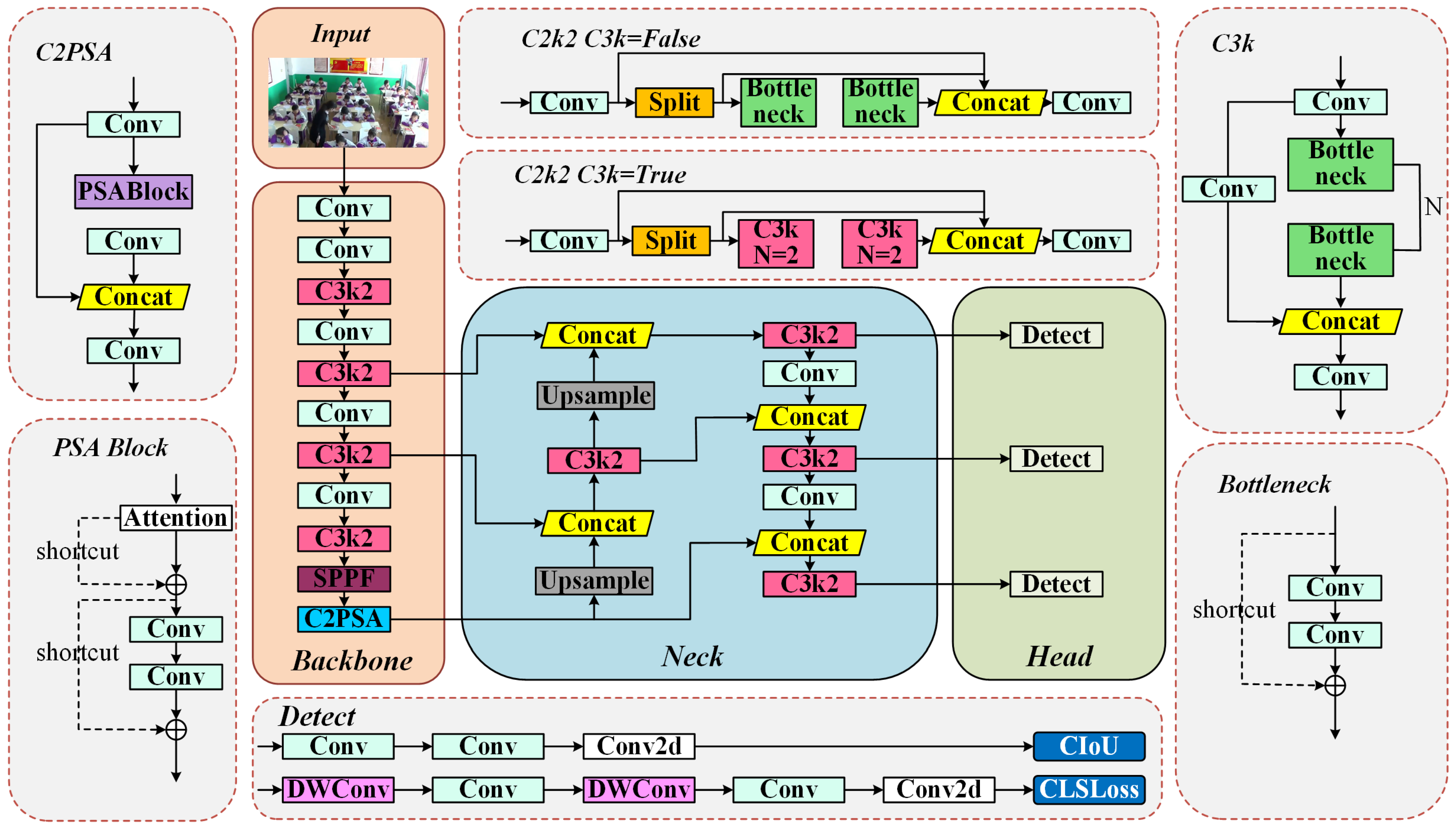

Figure 3. Network architecture of YOLOv11 baseline model.

Figure 4. Network architecture of proposed algorithm.

Figure 5. CSP-PMSA module architecture.

Figure 6. Schematic diagram of SAH module structure.

Figure 7. Schematic diagram of MHSA module structure.

Figure 8. Detection comparison results across eight student behavior categories for each model.

Figure 9. F1 confidence curve of YOLOv11n baseline model.

Figure 10. F1 confidence curve of the improved model.

Figure 11. Normalized confusion matrix of YOLOv11n baseline model.

Figure 12. Normalized confusion matrix of improved model.

Figure 13. Classroom detection results with YOLOv11n baseline model.

Figure 14. Classroom detection results with improved model.

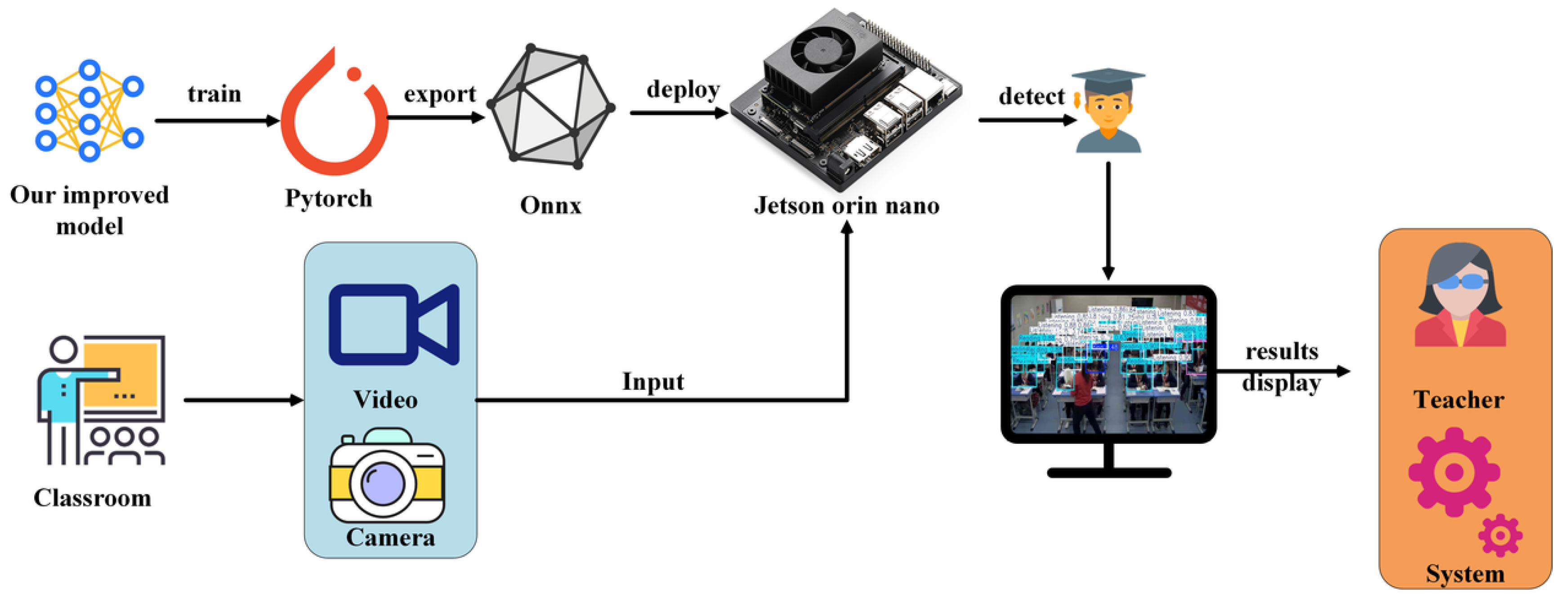

Figure 15. Comprehensive procedure of smart classroom student behavior detection system.

Table 1. Experimental environment configuration.

| Configurations | Parameters |
|---|---|
| CPU | x86_64 |
| GPU | NVIDIA GeForce RTX 3090 (24 GB) |
| RAM | 48 GB |
| Operating System | Ubuntu 22.04.3 LTS |
| Python | 3.10.12 |
| CUDA | 12.8 |
| Torch | 2.3.1 |

Table 2. Model training hyperparameter settings.

| Parameters | Setup |
|---|---|
| Epochs | 50 |
| Image Size | 640 |
| Batch Size | 16 |
| Optimizer | SGD |
| Learning Rate | 0.01 |
| Weight Decay | 0.0005 |
| Warmup Epochs | 3 |
| Momentum | 0.937 |
| Scheduler | Cosine |
| Loss Function | CIoU + BCE |
| Regularization | L2 + Label Smoothing |
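
To make the interaction of the learning rate, warm-up, and cosine scheduler entries in Table 2 concrete, the following sketch computes a per-epoch learning rate. Only the initial rate (0.01), the epoch count (50), and the warm-up length (3) come from Table 2. The linear warm-up from zero, the final learning-rate fraction `final_fraction`, and the function name `learning_rate` are assumptions made for illustration, since the table does not specify them.

```python
import math


def learning_rate(
    epoch: float,
    lr0: float = 0.01,            # Table 2: Learning Rate
    epochs: int = 50,             # Table 2: Epochs
    warmup_epochs: int = 3,       # Table 2: Warmup Epochs
    final_fraction: float = 0.01, # assumption: final lr = lr0 * 0.01
) -> float:
    """Learning rate at a given epoch: linear warm-up, then cosine decay."""
    if epoch < warmup_epochs:
        # Assumed linear ramp from 0 up to lr0.
        return lr0 * epoch / warmup_epochs
    lr_min = lr0 * final_fraction
    progress = (epoch - warmup_epochs) / (epochs - warmup_epochs)
    # Cosine decay from lr0 (progress = 0) to lr_min (progress = 1).
    return lr_min + 0.5 * (lr0 - lr_min) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for e in (0, 1, 3, 10, 25, 40, 50):
        print(f"epoch {e:>2}: lr = {learning_rate(e):.6f}")
```

The warm-up keeps early updates small while SGD momentum statistics settle, and the cosine phase lowers the rate smoothly toward the end of training instead of in abrupt steps.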

Table 3. Comparison of attention mechanisms.

| Method | mAP@50 (%) | mAP@50–95 (%) | Parameters (M) | GFLOPs (G) |
|---|---|---|---|---|
| YOLOv11n | 88.9 | 73.1 | 2.6 | 6.4 |
| +EMA | 88.8 | 72.9 | 2.6 | 6.6 |
| +SimAM | 88.5 | 71.7 | 2.6 | 6.4 |
| +LSKA | 89.5 | 73.9 | 2.6 | 6.6 |
| +CAA | 88.6 | 72.1 | 2.7 | 6.8 |
| +MHSA | 90.3 | 74.6 | 2.8 | 8.0 |

Table 4. Comparison of ablation experiment results.

| Model | P (%) | R (%) | mAP@50 (%) | mAP@50–95 (%) | Param (M) | GFLOPs (G) |
|---|---|---|---|---|---|---|
| YOLOv11n | 85.6 | 84.0 | 88.9 | 73.1 | 2.6 | 6.4 |
| YOLOv11n + PMSA | 87.5 | 85.0 | 90.1 | 74.3 | 2.6 | 7.8 |
| YOLOv11n + SAH | 86.1 | 84.4 | 89.9 | 73.6 | 2.4 | 6.3 |
| YOLOv11n + MHSA | 86.0 | 84.4 | 90.3 | 74.6 | 2.8 | 8.0 |
| Ours | 88.2 | 85.6 | 91.5 | 75.5 | 2.7 | 9.2 |

Table 5. Comparison of detection results across different models.

| Model | P (%) | R (%) | mAP@50 (%) | mAP@50–95 (%) | Param (M) | GFLOPs (G) |
|---|---|---|---|---|---|---|
| SSD | 79.5 | 69.5 | 81.5 | 68.1 | 24.5 | 31.5 |
| Faster-RCNN | 81.8 | 71.5 | 84.2 | 70.3 | 41.3 | 189 |
| YOLOv3-Tiny | 82.5 | 70.5 | 86.1 | 71.9 | 11.6 | 18.9 |
| YOLOv6 | 84.0 | 72.5 | 87.0 | 71.6 | 4.0 | 11.8 |
| YOLOX | 83.8 | 72.8 | 86.4 | 71.5 | 9.0 | 26.8 |
| YOLOv11 | 85.6 | 84.0 | 88.9 | 73.1 | 2.6 | 6.4 |
| RT-DETR | 84.5 | 72.5 | 87.0 | 72.1 | 20.1 | 58.6 |
| Ours | 88.2 | 85.6 | 91.6 | 75.7 | 2.7 | 9.2 |

Table 6. Per-class AP@50 (%) across eight student behavior categories for different models. Writ.: writing; Read.: reading; List.: listening; TA: turning around; Raise.: raising hand; Stand.: standing; Disc.: discussing; Guid.: guiding.

| Model | Writ. | Read. | List. | TA | Raise. | Stand. | Disc. | Guid. |
|---|---|---|---|---|---|---|---|---|
| SSD | 85.0 | 83.0 | 84.0 | 76.0 | 78.0 | 84.0 | 82.0 | 80.0 |
| Faster-RCNN | 87.0 | 86.0 | 87.0 | 83.0 | 82.0 | 87.0 | 86.0 | 75.6 |
| YOLOv3-Tiny | 88.5 | 86.5 | 88.0 | 79.5 | 81.0 | 89.0 | 87.0 | 89.3 |
| YOLOv6 | 91.0 | 91.0 | 92.0 | 79.0 | 81.0 | 89.0 | 87.0 | 86.0 |
| YOLOX | 89.0 | 87.0 | 88.0 | 78.0 | 80.0 | 91.0 | 90.0 | 88.2 |
| YOLOv11 | 90.5 | 89.5 | 91.0 | 82.5 | 84.5 | 90.0 | 89.0 | 94.2 |
| DETR | 90.0 | 92.0 | 90.5 | 80.0 | 82.0 | 88.0 | 86.0 | 87.5 |
| Ours | 96.0 | 94.0 | 96.5 | 86.0 | 88.0 | 95.0 | 93.0 | 84.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).