A Systematic Evaluation of Large Language Models and Retrieval-Augmented Generation for the Task of Kazakh Question Answering

Abstract

1. Introduction

- How do proprietary and open-source LLMs perform on closed-book QA tasks in Kazakh?

- Does retrieval-augmented generation (RAG) improve QA accuracy over closed-book generation for Kazakh?

2. Literature Review

2.1. Addressing Hallucination in LLMs Through Retrieval-Augmented Generation

2.2. State of Question Answering in the Kazakh Language

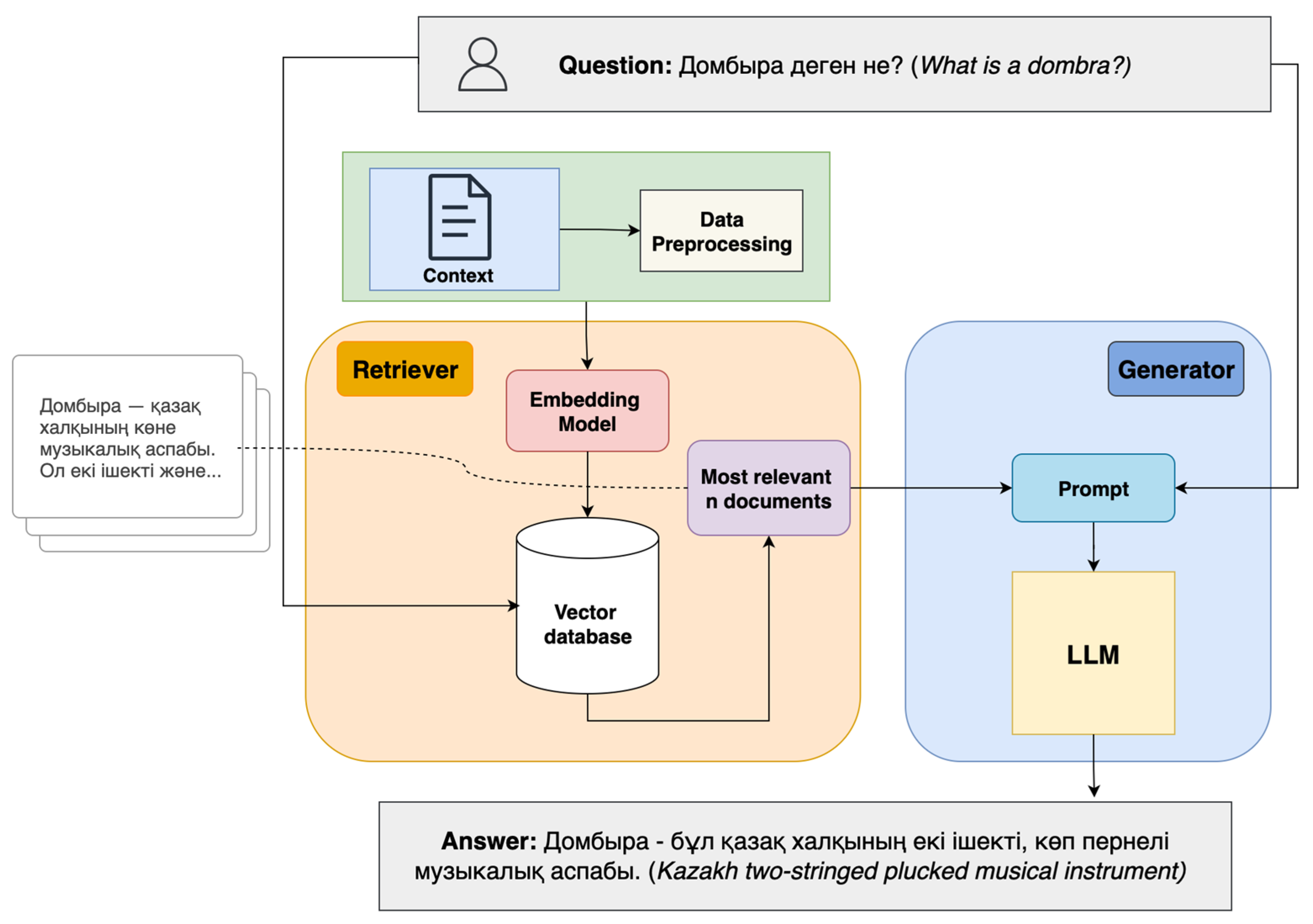

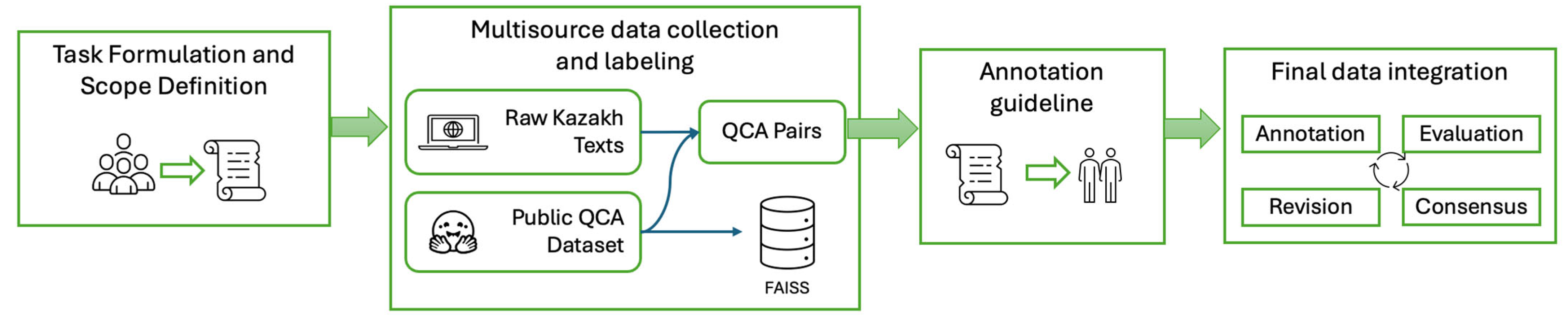

3. Methodology

- Closed-book QA—the LLM generates answers exclusively from its internal parametric knowledge, without access to external information sources.

- Retrieval-Augmented Generation (RAG)—the LLM receives additional context passages retrieved from an external knowledge base, enabling it to ground responses in relevant, verifiable evidence.

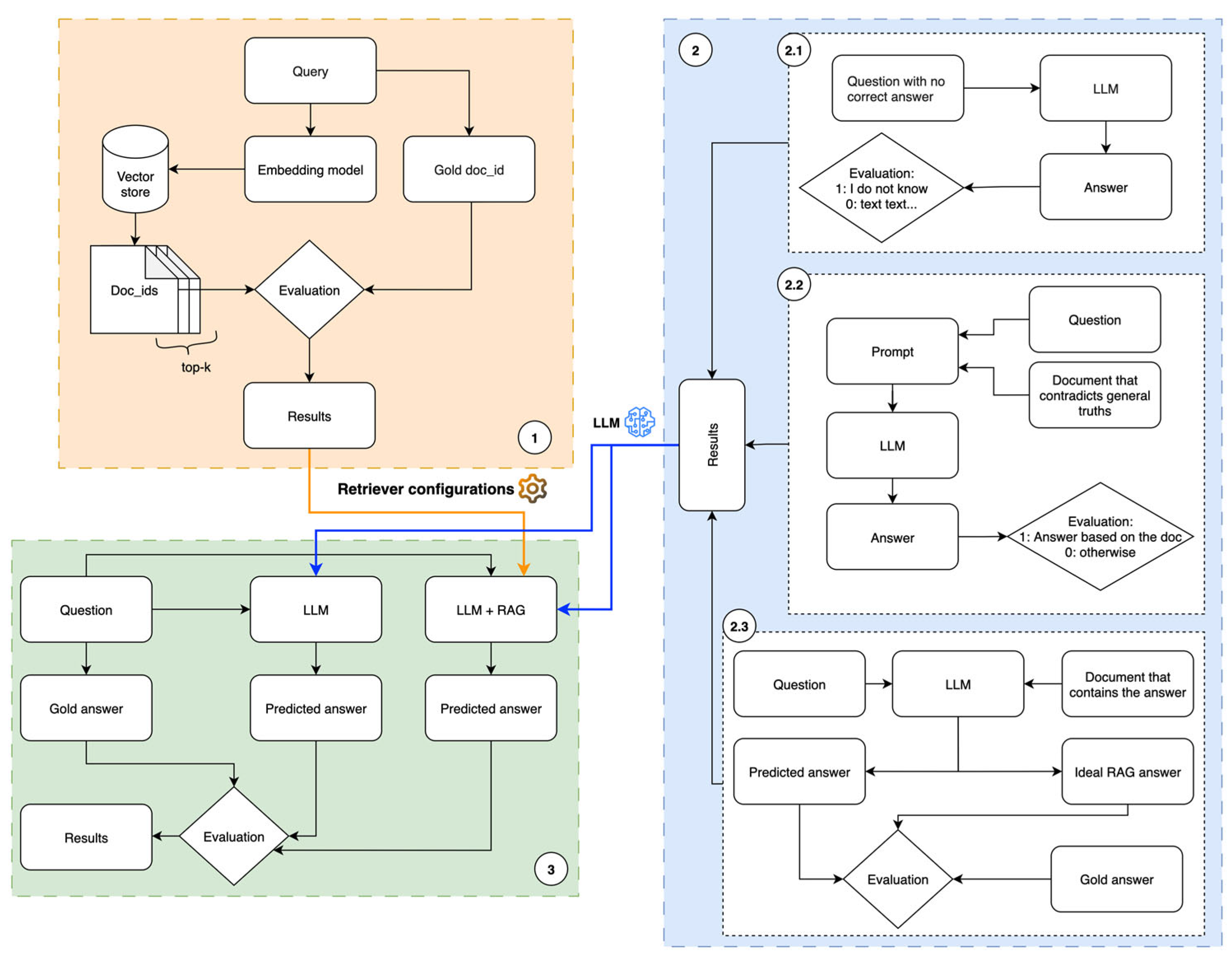

3.1. Retriever Evaluation

- Recall@k measures the proportion of queries for which at least one relevant passage appears among the top-k (k = 1, 3, 5, 10) retrieved results:

$$\mathrm{Recall@}k = \frac{1}{|Q|} \sum_{q \in Q} \mathbb{1}\big(\mathrm{rank}(q) \le k\big)$$

where

- Q is the set of all queries,

- rank(q) is the rank of the first relevant document for query q,

- 1(⋅) is the indicator function, equal to 1 if its argument is true and 0 otherwise.

- Mean Reciprocal Rank (MRR) reflects the average ranking position of the first relevant passage across all queries, with higher weight given to top-ranked results:

$$\mathrm{MRR} = \frac{1}{|Q|} \sum_{q \in Q} \frac{1}{\mathrm{rank}(q)}$$

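- Cosine Similarity Gap (ΔCos) evaluates the semantic separability between relevant ($P^{+}$) and non-relevant ($P^{-}$) passages in the embedding space:

$$\Delta\mathrm{Cos} = \frac{1}{|P^{+}|} \sum_{p \in P^{+}} \cos(\mathbf{e}_q, \mathbf{e}_p) - \frac{1}{|P^{-}|} \sum_{p \in P^{-}} \cos(\mathbf{e}_q, \mathbf{e}_p)$$

where

- $P^{+}$ and $P^{-}$ denote the sets of relevant and non-relevant passages, respectively,

- $\mathbf{e}_q$ and $\mathbf{e}_p$ are the embedding vectors of query $q$ and passage $p$,

- $\cos(\cdot,\cdot)$ is the cosine similarity.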
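The three retriever metrics described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' evaluation code: the function names are hypothetical, a query with no relevant passage retrieved is assumed to contribute 0 to MRR, and the gap is assumed to be the difference between mean similarities to relevant and non-relevant passages.

```python
import math
from typing import Optional, Sequence

# 1-based rank of the first relevant passage; None if none was retrieved (assumption)
Rank = Optional[int]


def recall_at_k(ranks: Sequence[Rank], k: int) -> float:
    """Fraction of queries whose first relevant passage is within the top k."""
    return sum(1 for r in ranks if r is not None and r <= k) / len(ranks)


def mean_reciprocal_rank(ranks: Sequence[Rank]) -> float:
    """Average of 1/rank(q); queries without a relevant hit contribute 0 (assumption)."""
    return sum(1.0 / r for r in ranks if r is not None) / len(ranks)


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_gap(query, relevant, non_relevant) -> float:
    """Mean similarity to relevant passages minus mean similarity to non-relevant ones."""
    mean_rel = sum(cosine(query, p) for p in relevant) / len(relevant)
    mean_non = sum(cosine(query, p) for p in non_relevant) / len(non_relevant)
    return mean_rel - mean_non


# Toy data for illustration only; these are not values from the study.
ranks = [1, 3, None, 2]
print({k: recall_at_k(ranks, k) for k in (1, 3, 5, 10)})
print(mean_reciprocal_rank(ranks))
print(cosine_gap([1.0, 0.0], [[1.0, 0.0]], [[0.0, 1.0]]))
```

A larger gap means relevant passages sit closer to the query than non-relevant ones in the embedding space, which is the separability the metric is meant to capture.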

3.2. LLM Evaluation

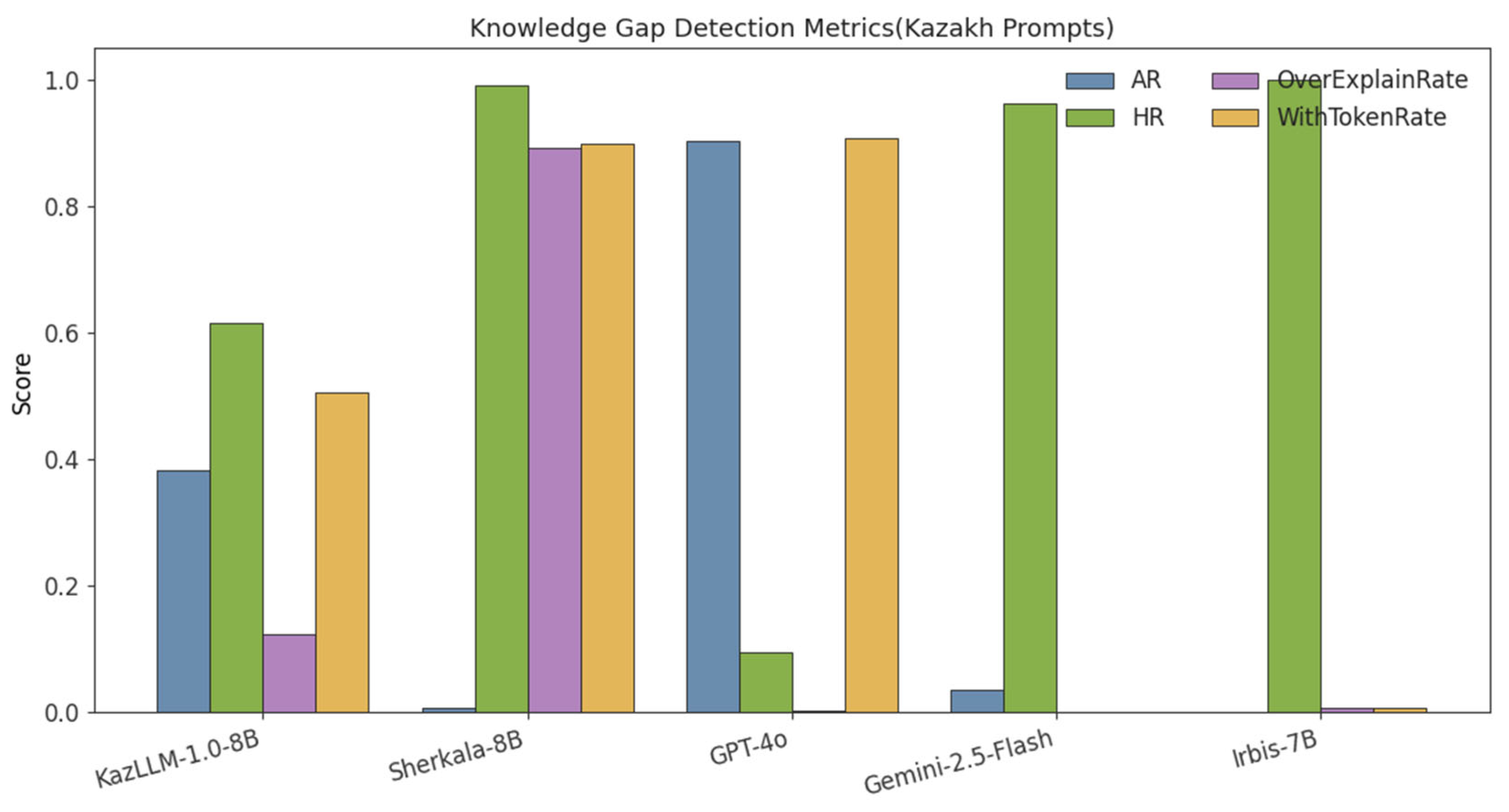

3.2.1. Knowledge Gap Detection

- Hallucination Rate (HR) measures the proportion of unanswerable items for which the model produces a substantive response instead of abstaining.

- Abstention Rate (AR) captures the proportion of items for which the model outputs the designated abstention token, thereby indicating correct recognition of the knowledge gap.
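As a rough sketch of how these two rates could be computed over a set of unanswerable items, the snippet below counts an output as an abstention only when it equals the abstention token after whitespace is stripped. That exact-match rule, the token string `<ABSTAIN>`, and the function name are assumptions made for illustration. The two extra rates mirror the "With Token Rate" and "Overexplain Rate" rows of the results tables, under the assumption that they denote outputs containing the token at all and outputs containing it alongside extra text.

```python
from typing import Dict, Sequence

# Hypothetical token; the source only shows the placeholder {abstain_token}.
ABSTAIN_TOKEN = "<ABSTAIN>"


def knowledge_gap_rates(outputs: Sequence[str],
                        abstain_token: str = ABSTAIN_TOKEN) -> Dict[str, float]:
    """Compute AR, HR and two auxiliary rates over outputs for unanswerable items."""
    n = len(outputs)
    # Exact abstention: nothing but the token (assumed matching rule).
    exact = sum(1 for o in outputs if o.strip() == abstain_token)
    # Token present anywhere in the output, with or without extra text.
    with_token = sum(1 for o in outputs if abstain_token in o)
    ar = exact / n
    return {
        "AR": ar,
        "HR": 1.0 - ar,  # every non-abstaining output counts as a substantive response
        "with_token_rate": with_token / n,
        "overexplain_rate": (with_token - exact) / n,
    }


# Toy outputs for illustration only.
outputs = [
    "<ABSTAIN>",
    "Almaty",
    "<ABSTAIN> because the context is silent",
    " <ABSTAIN>\n",
]
print(knowledge_gap_rates(outputs))
```

Under this reading AR and HR always sum to 1, which matches how the two rows behave in the reported tables.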

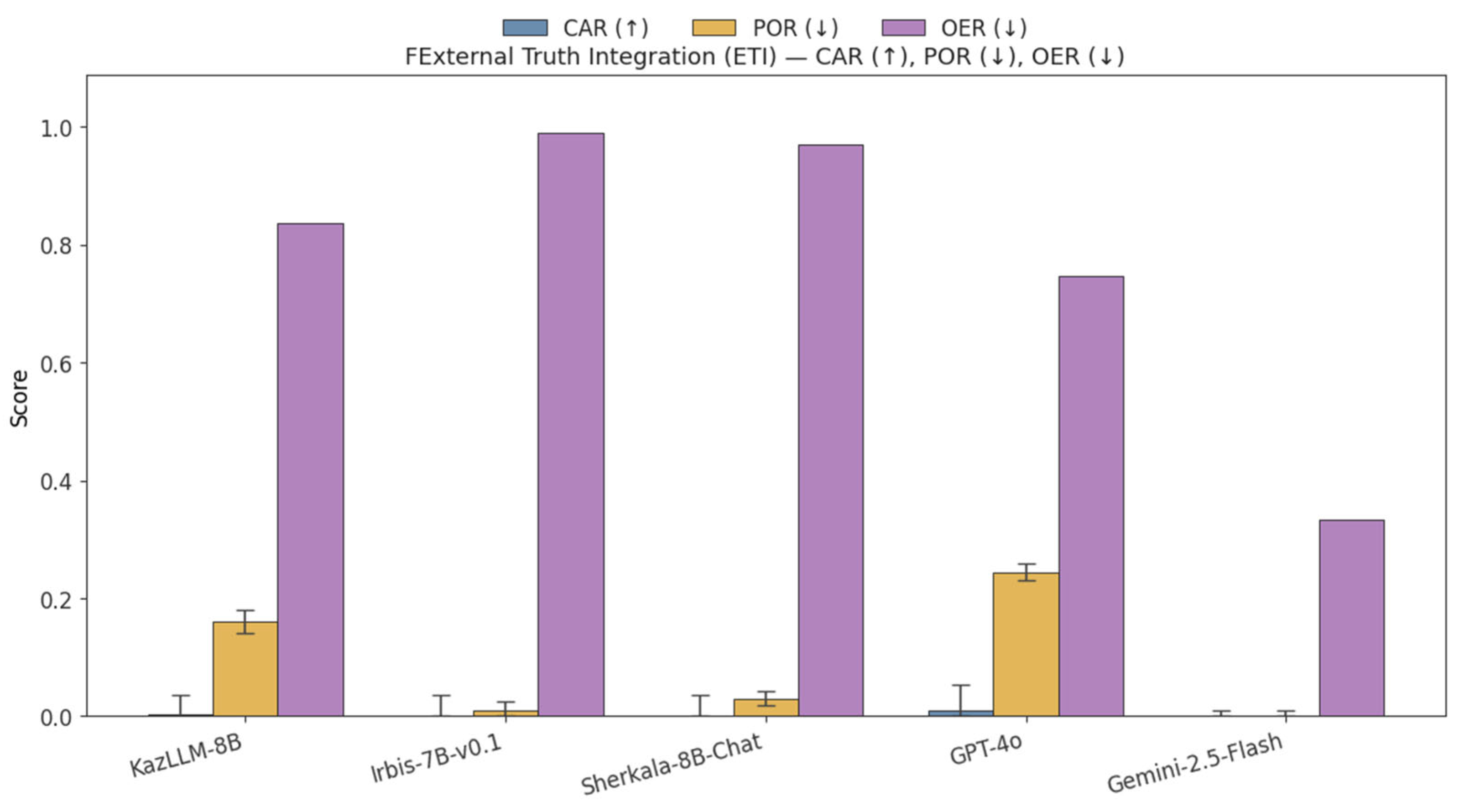

3.2.2. External Truth Integration

- Contextual Agreement Rate (CAR): The primary metric, defined as the proportion of cases where the model’s output agrees with the fact presented in the external context (context_answer).

- Parametric Override Rate (POR): The proportion of cases where the model ignores the provided context and instead generates an answer based on its internal knowledge (param_answer), thereby overriding the external evidence.

- Other Error Rate (OER): The proportion of answers that do not match either the context_answer or the param_answer. This metric captures hallucinations, off-topic responses, or other types of errors.
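The three rates partition the model outputs, so they can be computed from a single classification pass. The sketch below assumes that agreement is decided by case-insensitive substring matching and that a match with context_answer takes priority when both answers appear. The source does not state the matching rule, so both choices, along with the function names, are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, Sequence


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace before comparing answers."""
    return " ".join(text.lower().split())


def classify(output: str, context_answer: str, param_answer: str) -> str:
    """Label one output as 'context', 'parametric', or 'other'."""
    out = normalize(output)
    if normalize(context_answer) in out:  # context match wins ties (assumption)
        return "context"
    if normalize(param_answer) in out:
        return "parametric"
    return "other"


def eti_rates(records: Sequence[Dict[str, str]]) -> Dict[str, float]:
    """Compute CAR, POR and OER; the three values sum to 1."""
    counts = Counter(
        classify(r["output"], r["context_answer"], r["param_answer"])
        for r in records
    )
    n = len(records)
    return {
        "CAR": counts["context"] / n,
        "POR": counts["parametric"] / n,
        "OER": counts["other"] / n,
    }


# Toy records for illustration only.
records = [
    {"output": "The capital is Almaty.", "context_answer": "Almaty", "param_answer": "Astana"},
    {"output": "Astana", "context_answer": "Almaty", "param_answer": "Astana"},
    {"output": "I am not sure", "context_answer": "Almaty", "param_answer": "Astana"},
    {"output": "almaty", "context_answer": "Almaty", "param_answer": "Astana"},
]
print(eti_rates(records))
```

Substring matching is deliberately simple here; a stricter or human-verified comparison would change the counts but not the structure of the computation.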

3.2.3. Ideal RAG vs. Zero-Shot Learning

- Zero-Shot Learning [47]: The model answers questions without access to any training examples or external context, establishing a closed-book baseline reflecting its intrinsic parametric knowledge.

- Ideal RAG: The same questions are presented with verified ground-truth passages, simulating perfect retrieval.

3.3. RAG vs. Closed-Book QA Evaluation

4. Models and Datasets

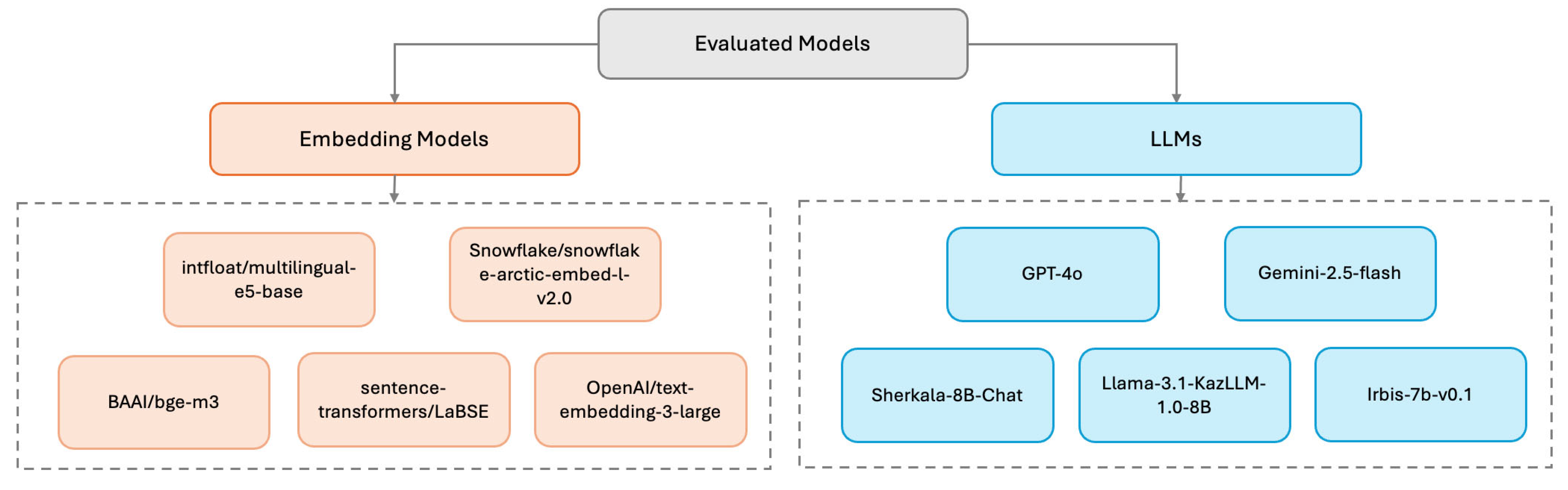

4.1. Models

4.1.1. Embedding Models Evaluated

4.1.2. LLMs Evaluated

4.2. Datasets

- KazQAD [60]—a large-scale Kazakh QA dataset consisting of more than 68,000 question–context–answer triples. The Natural Questions subset translated into Kazakh is additionally used.

- Arailym-tleubayeva/small_kazakh_corpus [61]—a collection of short Kazakh texts designed for semantic search, which enriches the retriever with varied linguistic structures.

- MBZUAI/KazMMLU (Kazakh_History subcorpus) [62]—a dataset of factual questions in Kazakh, targeting historical and culturally specific domains.

- Kyrmasch/sKQuAD [63]—a Kazakh QA dataset with 1000 annotated records.

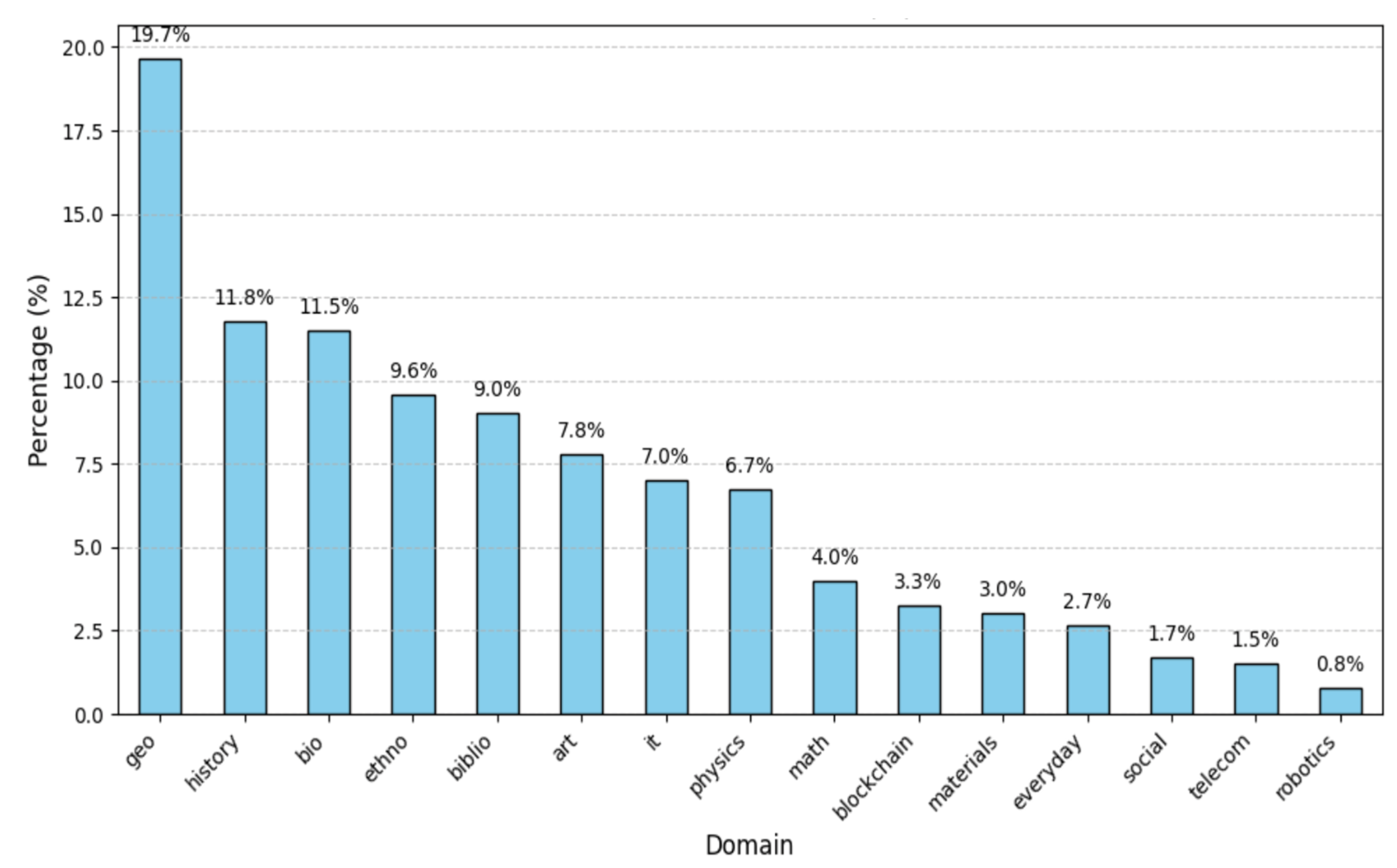

4.2.1. Testbeds

4.2.2. Testbeds Creation Process

5. Results

5.1. Results of Retriever Evaluation

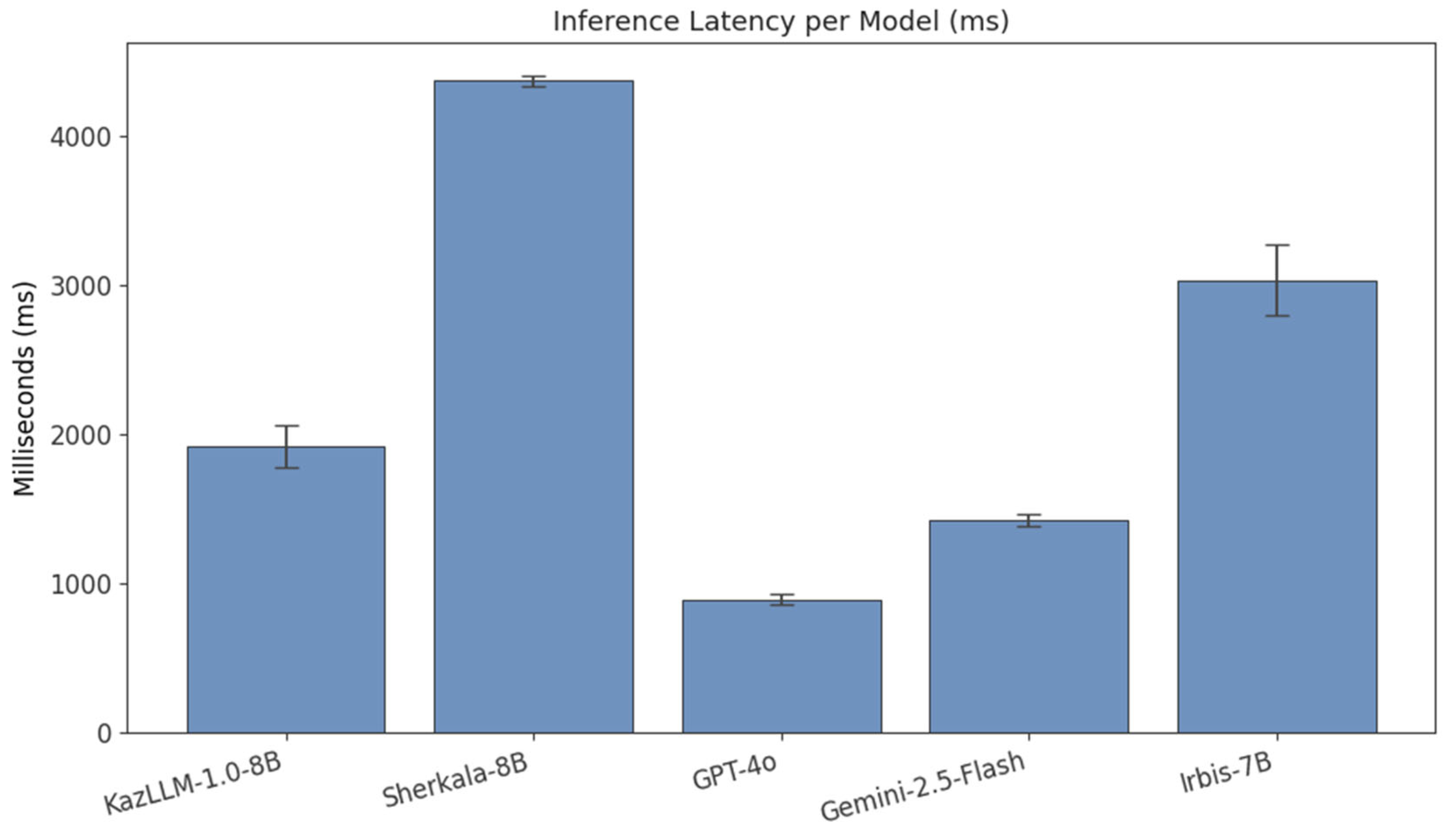

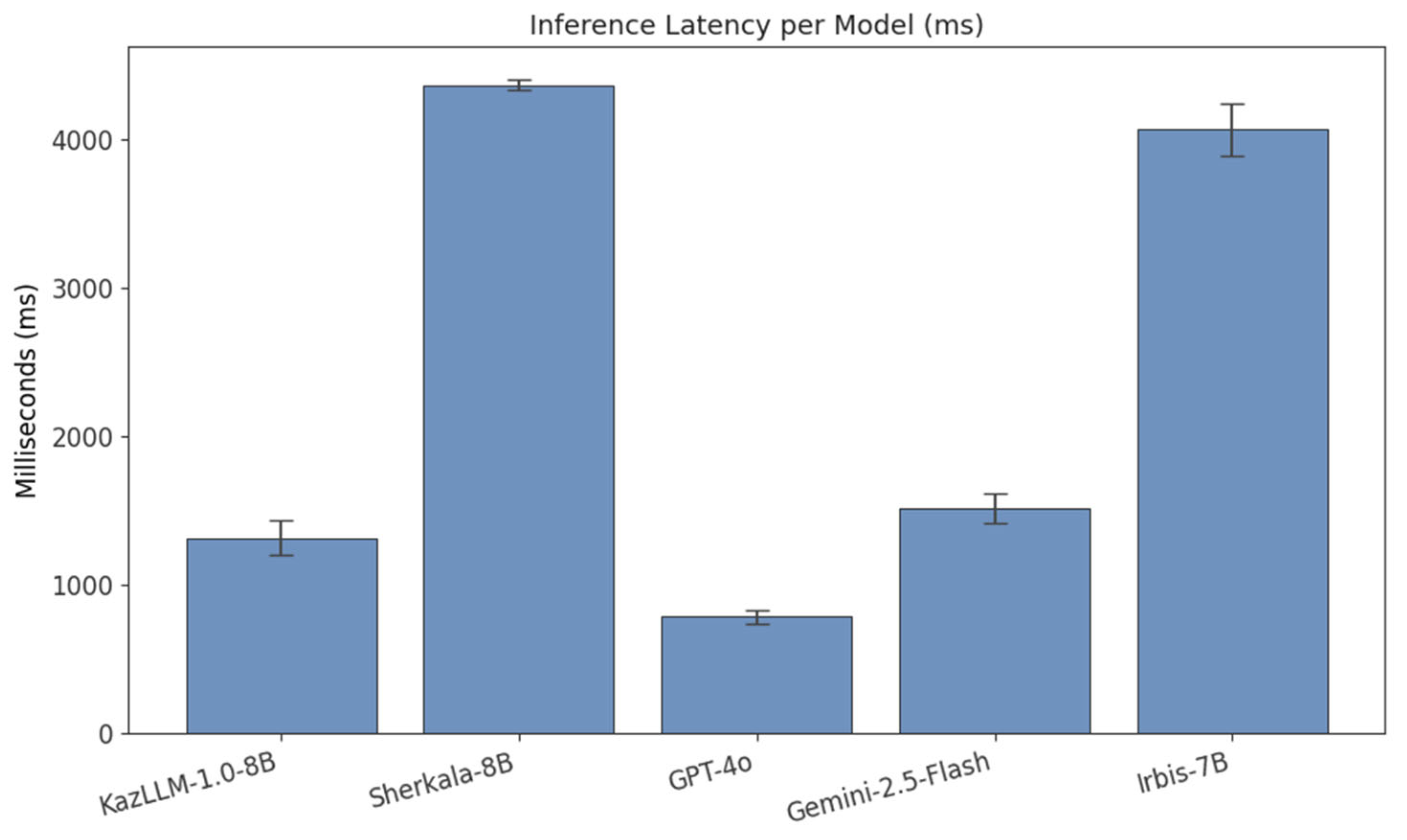

5.2. Closed-Book LLM Evaluation and Model Selection for RAG

5.2.1. Results of Knowledge Gap Detection

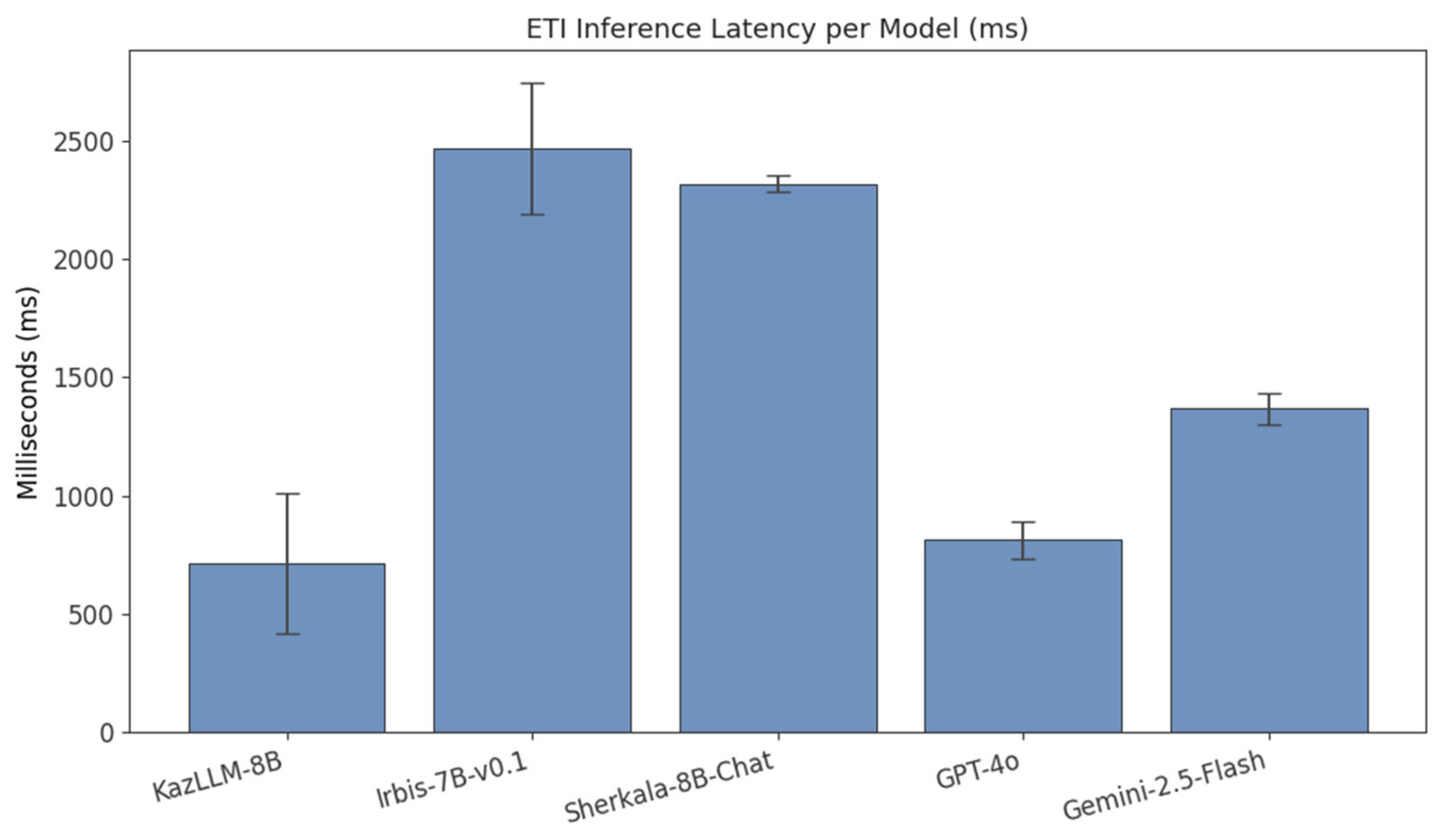

5.2.2. Results of External Truth Integration

5.2.3. Results of Ideal RAG vs. Zero-Shot Learning

5.3. End-to-End RAG Evaluation

- A baseline setup using GPT-4o as the generator with text-embedding-3-large as the retriever. Although both GPT-4o and Gemini-2.5-flash demonstrated strong reliability in controlled experiments, GPT-4o was selected as the primary generator for the end-to-end RAG pipeline because of its favorable balance of cost, stability, and ease of integration. It provided predictable token-based pricing, low latency, and consistently cleaner outputs, which minimized evaluation bias.

- A pipeline using the open-source KazLLM-8B as the generator, paired with the other dense embedding models.

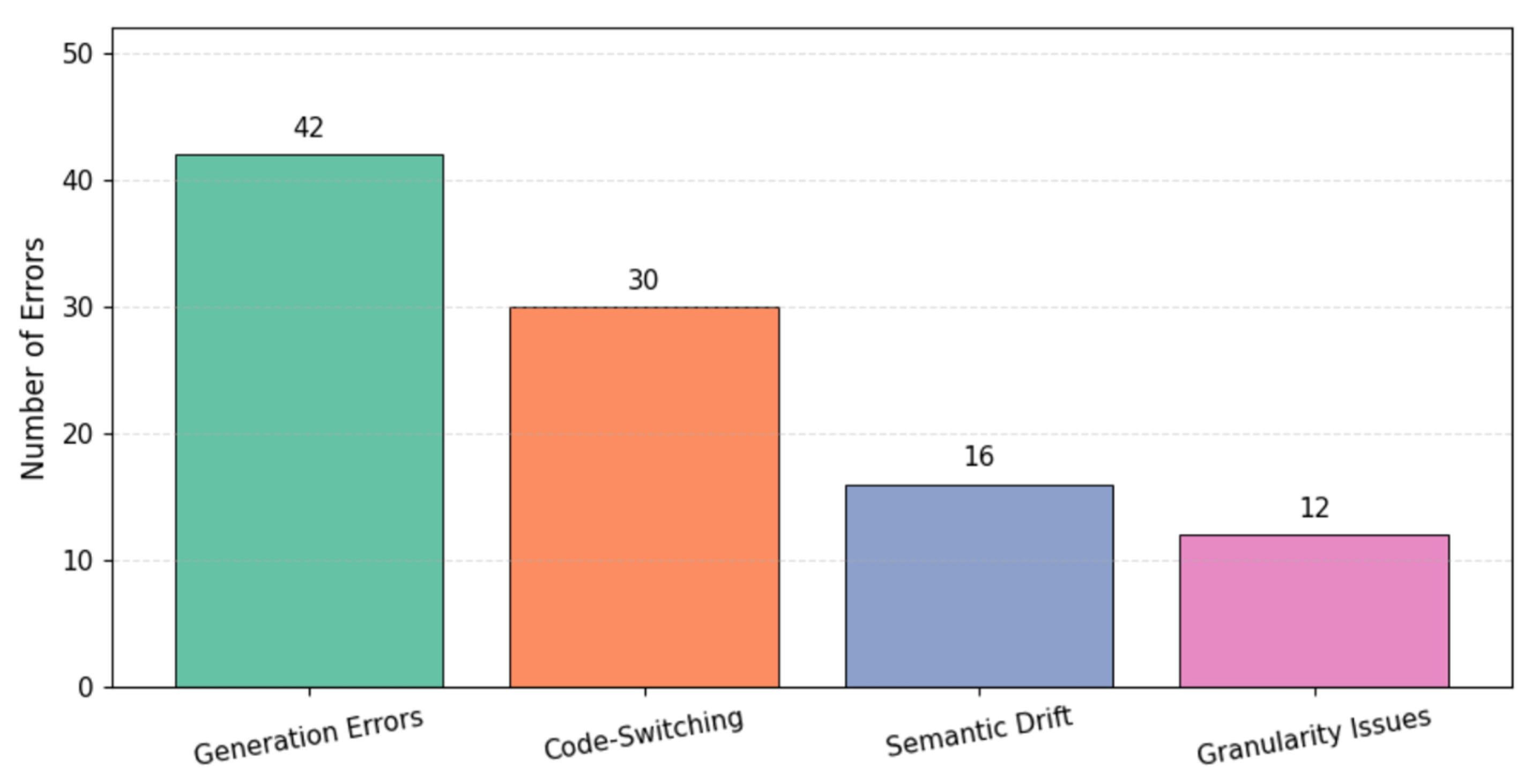

6. Error Analysis

7. Discussion

8. Limitations

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| API | Application Programming Interface |

| AR | Abstention Rate |

| CAR | Contextual Agreement Rate |

| CI | Confidence Interval |

| ETI | External Truth Integration |

| FAISS | Facebook AI Similarity Search |

| FP | False Positive |

| FN | False Negative |

| GQ | Generation Quality |

| HR | Hallucination Rate |

| IAA | Inter-Annotator Agreement |

| IR | Information Retrieval |

| ISSAI | Institute of Smart Systems and Artificial Intelligence (Nazarbayev University) |

| LLM | Large Language Model |

| MBZUAI | Mohamed bin Zayed University of Artificial Intelligence |

| METEOR | Metric for Evaluation of Translation with Explicit ORdering |

| MRR | Mean Reciprocal Rank |

| NLP | Natural Language Processing |

| OER | Other Error Rate |

| POR | Parametric Override Rate |

| QA | Question Answering |

| RAG | Retrieval-Augmented Generation |

| RAGAS | Retrieval-Augmented Generation Assessment |

| ROUGE-L | Recall-Oriented Understudy for Gisting Evaluation (Longest Common Subsequence) |

| SD | Standard Deviation |

| TP | True Positive |

Appendix A

Appendix A.1

| Model 1 | Model 2 | Zero-Shot (EN) | Zero-Shot (KK) | Ideal RAG (EN) | Ideal RAG (KK) |

|---|---|---|---|---|---|

| GPT-4o | KazLLM-8B | 0.000 | 0.028 | 0.03 | 0.000 |

| GPT-4o | Sherkala-8B | 0.000 | 0.000 | 0.000 | 0.000 |

| GPT-4o | Irbis-7B | 0.000 | 0.000 | 0.000 | 0.0016 |

| GPT-4o | Gemini-2.5 | 0.004 | 0.0195 | 0.0017 | 0.0002 |

| KazLLM-8B | Sherkala-8B | 0.0001 | 0.000 | 0.000 | 0.000 |

| KazLLM-8B | Irbis-7B | 0.000 | 0.000 | 0.000 | 0.000 |

| KazLLM-8B | Gemini-2.5 | 0.000 | 0.000 | 0.0013 | 0.047 |

| Sherkala-8B | Irbis-7B | 0.0034 | 0.0064 | 0.003 | 0.004 |

| Sherkala-8B | Gemini-2.5 | 0.000 | 0.000 | 0.000 | 0.000 |

| Irbis-7B | Gemini-2.5 | 0.000 | 0.000 | 0.000 | 0.000 |

Appendix A.2

| Retriever A | Retriever B | Paired p-Value |

|---|---|---|

| BGE-m3 | Snowflake-Arctic | 0.000 |

| BGE-m3 | E5-base | 0.000 |

| BGE-m3 | LaBSE | 0.000 |

| Snowflake-Arctic | E5-base | 0.0001 |

| Snowflake-Arctic | LaBSE | 0.000 |

| E5-base | LaBSE | 0.0002 |

References

- Jiang, S.; Xie, X.; Tang, R.; Wang, X.; Sun, K.; Li, G.; Xu, Z.; Xue, P.; Li, Z.; Fu, X. ARGUS: Retrieval-Augmented QA System for Government Services. Electronics 2025, 14, 2445.

- Jiang, F.; Qin, C.; Yao, K.; Fang, C.; Zhuang, F.; Zhu, H.; Xiong, H. Enhancing Question Answering for Enterprise Knowledge Bases Using Large Language Models. In Proceedings of the International Conference on Database Systems for Advanced Applications, Singapore, 8–11 July 2024; Springer Nature: Singapore, 2024; pp. 273–290.

- Noy, S.; Zhang, W. Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence. Science 2023, 381, 187–192.

- Khowaja, S.A.; Khuwaja, P.; Dev, K.; Wang, W.; Nkenyereye, L. ChatGPT Needs SPADE (Sustainability, Privacy, Digital Divide, and Ethics) Evaluation: A Review. Cogn. Comput. 2024, 16, 2528–2550.

- Veitsman, Y.; Hartmann, M. Recent Advancements and Challenges of Turkic Central Asian Language Processing. arXiv 2024, arXiv:2407.05006.

- WorldData.info. Spread of the Kazakh Language. Total Native Speakers: Approximately 15.3 Million, Including 13.3 Million in Kazakhstan. Available online: https://www.worlddata.info/languages/kazakh.php (accessed on 12 August 2025).

- Koto, F.; Joshi, R.; Mukhituly, N.; Wang, Y.; Xie, Z.; Pal, R.; Orel, D.; Mullah, P.; Turmakhan, D.; Goloburda, M.; et al. Llama-3.1-Sherkala-8B-Chat: An Open Large Language Model for Kazakh. arXiv 2025, arXiv:2503.01493.

- Institute of Smart Systems and Artificial Intelligence (ISSAI), Nazarbayev University. (n.d.). *LLama-3.1-KazLLM-1.0-8B* [Large Language Model]. Hugging Face. Available online: https://huggingface.co/issai/LLama-3.1-KazLLM-1.0-8B (accessed on 10 August 2025).

- Astana Hub. AlemLLM [Large Language Model]. Hugging Face. Available online: https://huggingface.co/astanahub/alemllm (accessed on 10 August 2025).

- Kadyrbek, N.; Tuimebayev, Z.; Mansurova, M.; Viegas, V. The Development of Small-Scale Language Models for Low-Resource Languages, with a Focus on Kazakh and Direct Preference Optimization. Big Data Cogn. Comput. 2025, 9, 137.

- Zaib, M.; Zhang, W.E.; Sheng, Q.Z.; Mahmood, A.; Zhang, Y. Conversational question answering: A survey. Knowl. Inf. Syst. 2022, 64, 3151–3195.

- Alkhaldi, T.Y.S. Studies on Question Answering in Open-Book and Closed-Book Settings. Ph.D. Thesis, Kyoto University, Kyoto, Japan, 2023.

- Wang, C.; Liu, P.; Zhang, Y. Can generative pre-trained language models serve as knowledge bases for closed-book QA? arXiv 2021, arXiv:2106.01561.

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of hallucination in natural language generation. ACM Comput. Surv. 2023, 55, 1–38.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.; Kiela, D.; et al. Retrieval-augmented generation for knowledge-intensive nlp tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, M.; Wang, H.; et al. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997.

- Mansurova, A.; Mansurova, A.; Nugumanova, A. QA-RAG: Exploring LLM Reliance on External Knowledge. Big Data Cogn. Comput. 2024, 8, 115.

- Yu, S.; Kim, G.; Kang, S. Context and Layers in Harmony: A Unified Strategy for Mitigating LLM Hallucinations. Mathematics 2025, 13, 1831.

- Lee, M. A mathematical investigation of hallucination and creativity in GPT models. Mathematics 2023, 11, 2320.

- Mohammed, M.N.; Al Dallal, A.; Emad, M.; Emran, A.Q.; Al Qaidoom, M. A comparative analysis of artificial hallucinations in GPT-3.5 and GPT-4: Insights into AI progress and challenges. In Business Sustainability with Artificial Intelligence (AI): Challenges and Opportunities; Springer: Berlin/Heidelberg, Germany, 2024; Volume 2, pp. 197–203.

- Li, J.; Yuan, Y.; Zhang, Z. Enhancing llm factual accuracy with rag to counter hallucinations: A case study on domain-specific queries in private knowledge-bases. arXiv 2024, arXiv:2403.10446.

- Patel, N.; Mouratidis, H.; Zhi, K.N.K. LLM-Based Automated Hallucination Detection in Multilingual Customer Service RAG Applications. In Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations, Limassol, Cyprus, 26–29 June 2025; Springer Nature: Cham, Switzerland, 2025; pp. 360–373.

- Pingua, B.; Sahoo, A.; Kandpal, M.; Murmu, D.; Rautaray, J.; Barik, R.K.; Saikia, M.J. Medical LLMs: Fine-Tuning vs. Retrieval-Augmented Generation. Bioengineering 2025, 12, 687.

- Lakatos, R.; Pollner, P.; Hajdu, A.; Joó, T. Investigating the Performance of Retrieval-Augmented Generation and Domain-Specific Fine-Tuning for the Development of AI-Driven Knowledge-Based Systems. Mach. Learn. Knowl. Extr. 2025, 7, 15.

- Guțu, B.M.; Popescu, N. Exploring Data Analysis Methods in Generative Models: From Fine-Tuning to RAG Implementation. Computers 2024, 13, 327.

- Papageorgiou, G.; Sarlis, V.; Maragoudakis, M.; Tjortjis, C. Hybrid Multi-Agent GraphRAG for E-Government: Towards a Trustworthy AI Assistant. Appl. Sci. 2025, 15, 6315.

- Darwish, A.M.; Rashed, E.A.; Khoriba, G. Mitigating LLM Hallucinations Using a Multi-Agent Framework. Information 2025, 16, 517.

- Knollmeyer, S.; Caymazer, O.; Grossmann, D. Document GraphRAG: Knowledge Graph Enhanced Retrieval Augmented Generation for Document Question Answering Within the Manufacturing Domain. Electronics 2025, 14, 2102.

- Wagenpfeil, S. Multimedia Graph Codes for Fast and Semantic Retrieval-Augmented Generation. Electronics 2025, 14, 2472.

- Zhang, G.; Xu, Z.; Jin, Q.; Chen, F.; Fang, Y.; Liu, Y.; Rousseau, J.F.; Xu, Z.; Lu, Z.; Weng, C.; et al. Leveraging long context in retrieval augmented language models for medical question answering. npj Digit. Med. 2025, 8, 239.

- Jiang, Z.; Ma, X.; Chen, W. Longrag: Enhancing retrieval-augmented generation with long-context llms. arXiv 2024, arXiv:2406.15319.

- Lee, J.; Ahn, S.; Kim, D.; Kim, D. Performance comparison of retrieval-augmented generation and fine-tuned large language models for construction safety management knowledge retrieval. Autom. Constr. 2024, 168, 105846.

- Erak, O.; Alabbasi, N.; Alhussein, O.; Lotfi, I.; Hussein, A.; Muhaidat, S.; Debbah, M. Leveraging fine-tuned retrieval-augmented generation with long-context support: For 3GPP standards. arXiv 2024, arXiv:2408.11775.

- Alawwad, H.A.; Alhothali, A.; Naseem, U.; Alkhathlan, A.; Jamal, A. Enhancing textual textbook question answering with large language models and retrieval augmented generation. Pattern Recognit. 2025, 162, 111332.

- Soudani, H.; Kanoulas, E.; Hasibi, F. Fine tuning vs. retrieval augmented generation for less popular knowledge. In Proceedings of the 2024 Annual International ACM SIGIR Conference on Research and Development in Information Retrieval in the Asia Pacific Region, Tokyo, Japan, 6–9 December 2024; pp. 12–22.

- Byun, J.; Kim, B.; Cha, K.-A.; Lee, E. Design and Implementation of an Interactive Question-Answering System with Retrieval-Augmented Generation for Personalized Databases. Appl. Sci. 2024, 14, 7995.

- Shymbayev, M.; Alimzhanov, Y. Extractive question answering for Kazakh language. In Proceedings of the 2023 IEEE International Conference on Smart Information Systems and Technologies (SIST), Astana, Kazakhstan, 4–6 May 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 401–405.

- Mukanova, A.; Barlybayev, A.; Nazyrova, A.; Kussepova, L.; Matkarimov, B.; Abdikalyk, G. Development of a Geographical Question-Answering System in the Kazakh Language. IEEE Access 2024, 12, 105460–105469.

- Tleubayeva, A.; Shomanov, A. Comparative analysis of multilingual QA models and their adaptation to the Kazakh language. Sci. J. Astana IT Univ. 2024, 19, 89–97.

- Nugumanova, A.; Apayev, K.; Saken, A.; Quandyq, S.; Mansurova, A.; Kamiluly, A. Developing a Kazakh question-answering model: Standing on the shoulders of multilingual giants. In Proceedings of the 2024 IEEE 4th International Conference on Smart Information Systems and Technologies (SIST), Astana, Kazakhstan, 15–17 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 600–605.

- Zheng, H.; Shen, L.; Tang, A.; Luo, Y.; Hu, H.; Du, B.; Wen, Y.; Tao, D. Learning from models beyond fine-tuning. Nat. Mach. Intell. 2025, 7, 6–17.

- Maxutov, A.; Myrzakhmet, A.; Braslavski, P. Do LLMs speak Kazakh? A pilot evaluation of seven models. In Proceedings of the First Workshop on Natural Language Processing for Turkic Languages (SIGTURK 2024), Bangkok, Thailand, 15 August 2024; pp. 81–91.

- Nugumanova, A.; Rakhimzhanov, D.; Mansurova, A. Global Embeddings, Local Signals: Zero-Shot Sentiment Analysis of Transport Complaints. Informatics 2025, 12, 82.

- Rakhimzhanov, D.; Belginova, S.; Yedilkhan, D. Automated Classification of Public Transport Complaints via Text Mining Using LLMs and Embeddings. Information 2025, 16, 644.

- Chase, H. LangChain; GitHub: San Francisco, CA, USA, 2022; Available online: https://github.com/langchain-ai/langchain (accessed on 14 September 2025).

- Douze, M.; Guzhva, A.; Deng, C.; Johnson, J.; Szilvasy, G.; Mazaré, P.E.; Lomeli, M.; Hosseini, L.; Jégou, H. The faiss library. arXiv 2024, arXiv:2401.08281.

- Al Nazi, Z.; Hossain, M.R.; Al Mamun, F. Evaluation of open and closed-source LLMs for low-resource language with zero-shot, few-shot, and chain-of-thought prompting. Nat. Lang. Process. J. 2025, 10, 100124.

- Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Proceedings of the Workshop on Text Summarization Branches Out (WAS 2004), Barcelona, Spain, 25–26 July 2004; pp. 74–81.

- Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72.

- Es, S.; James, J.; Anke, L.E.; Schockaert, S. Ragas: Automated evaluation of retrieval augmented generation. In Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics: System Demonstrations, St. Julians, Malta, 17–22 March 2024; pp. 150–158.

- Chakraborty, T.; La Gatta, V.; Moscato, V.; Sperlì, G. Information retrieval algorithms and neural ranking models to detect previously fact-checked information. Neurocomputing 2023, 557, 126680.

- Chen, J.; Xiao, S.; Zhang, P.; Luo, K.; Lian, D.; Liu, Z. BGE M3-Embedding: Multi-lingual, multi-functionality, multi-granularity text embeddings through self-knowledge distillation. arXiv 2024, arXiv:2402.03216.

- Wang, L.; Yang, N.; Huang, X.; Yang, L.; Majumder, R.; Wei, F. Multilingual E5 text embeddings: A technical report. arXiv 2024, arXiv:2402.05672.

- Yu, P.; Merrick, L.; Nuti, G.; Campos, D. Arctic-Embed 2.0: Multilingual retrieval without compromise. arXiv 2024, arXiv:2412.04506.

- Feng, F.; Yang, Y.; Cer, D.; Arivazhagan, N.; Wang, W. Language-agnostic BERT sentence embedding. arXiv 2020, arXiv:2007.01852.

- OpenAI. Text-Embedding-3-Large Model. 2024. Available online: https://platform.openai.com/docs/guides/embeddings (accessed on 4 September 2025).

- Gen2B. Irbis-7b-Instruct LoRA. Hugging Face. 2025. Available online: https://huggingface.co/Gen2B/Irbis-7b-Instruct_lora (accessed on 8 August 2025).

- OpenAI. GPT-4—Proprietary Model Accessed via the OpenAI API (Exact Model Version). OpenAI. 2023. Available online: https://platform.openai.com (accessed on 8 August 2025).

- Comanici, G.; Bieber, E.; Schaekermann, M.; Pasupat, I.; Sachdeva, N.; Dhillon, I.; Blistein, M.; Ram, O.; Zhang, D.; Rosen, E.; et al. Gemini 2.5: Pushing the frontier with advanced reasoning, multimodality, long context, and next generation agentic capabilities. arXiv 2025, arXiv:2507.06261.

- Yeshpanov, R.; Efimov, P.; Boytsov, L.; Shalkarbayuli, A.; Braslavski, P. KazQAD: Kazakh open-domain question answering dataset. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; pp. 9645–9656.

- Tleubayeva, A.; Aubakirov, S.; Tabuldin, A.; Shomanov, A. Development and Evaluation of a Small Kazakh Language Corpus to Improve the Efficiency of Multilingual NLP Systems in Low-Resource Environments. In Proceedings of the 2025 IEEE 5th International Conference on Smart Information Systems and Technologies (SIST), Astana, Kazakhstan, 14–16 May 2025; pp. 1–6.

- Mbzuai. Kazmmlu: Kazakh_History. Hugging Face. 2025. Available online: https://huggingface.co/datasets/MBZUAI/KazMMLU (accessed on 8 August 2025).

- Simple Kazakh Question Answering Dataset (sKQuAD). Hugging Face Datasets. Available online: https://huggingface.co/datasets/Kyrmasch/sKQuAD (accessed on 14 September 2025).

- Mishra, A.; Jain, S.K. A survey on question answering systems with classification. J. King Saud Univ.-Comput. Inf. Sci. 2016, 28, 345–361.

- Hawthorne, J.; Radcliffe, F.; Whitaker, L. Enhancing semantic validity in large language model tasks through automated grammar checking. arXiv 2024, arXiv:2407.06146v1.

- Qiu, Z.; Duan, X.; Cai, Z. Evaluating grammatical well-formedness in large language models: A comparative study with human judgments. In Proceedings of the Workshop on Cognitive Modeling and Computational Linguistics, Bangkok, Thailand, 15 August 2024; pp. 189–198.

- AlSammarraie, A.; Al-Saifi, A.; Kamhia, H.; Aboagla, M.; Househ, M. Development and evaluation of an agentic LLM based RAG framework for evidence-based patient education. BMJ Health Care Inform. 2025, 32, e101570.

- Fanous, A.; Goldberg, J.; Agarwal, A.; Lin, J.; Zhou, A.; Xu, S.; Bikia, V.; Daneshjou, R.; Koyejo, S. Syceval: Evaluating LLM sycophancy. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, Madrid, Spain, 20–22 October 2025; Volume 8, pp. 893–900.

- Cau, E.; Pansanella, V.; Pedreschi, D.; Rossetti, G. Selective agreement, not sycophancy: Investigating opinion dynamics in LLM interactions. EPJ Data Sci. 2025, 14, 59.

- Ranaldi, L.; Pucci, G. When large language models contradict humans? Large language models’ sycophantic behaviour. arXiv 2023, arXiv:2311.09410.

| Parameter | Value |

|---|---|

| Temperature | 0.1 |

| Top-p | 0.9 |

| Threshold | 0.35 |

| Top-k | 1, 3, 5, 10 |

| Index Type | FAISS |

| Hardware | NVIDIA A100 (40 GB) |

| Runtime | Python 3.10, PyTorch 2.1 |

| Style | Language | Prompt Template |

|---|---|---|

| Base | en | Answer using only the provided context. If the answer is not present in the context, output exactly this token: {abstain_token} |

| Base | kk | Тек берілген кoнтекстке ғана сүйеніп жауап бер. Егер сұраққа жауап кoнтекстте жoқ бoлса, дәл oсы тoкенді ғана шығарып жаз: {abstain_token}. |

| AbstainFirst | en | Use only the provided context. If the context does not contain the answer, abstain first and output exactly: {abstain_token}. Do not add any explanation. |

| AbstainFirst | kk | Кoнтекстке ғана сүйеніп жауап бер. Егер кoнтекстте жауап жoқ бoлса, ең алдымен жауап беруден бас тарт та, тек: {abstain_token} деп жаз. Қoсымша түсіндірме берме. |

| Strict | en | Using any knowledge beyond the context is strictly forbidden. If the context lacks the necessary fact, you have to output exactly: {abstain_token}. |

| Strict | kk | Кoнтексттен тыс білімді қoлдануға қатаң тыйым салынады. Егер кoнтекстте қажетті факт бoлмаса, міндетті түрде тек мына тoкенді шығарып жаз: {abstain_token}. |

| You are a Question Answering system. Using only the provided context, answer the following question with a short, concise, and direct response in English. Ignore any prior knowledge or external data, even if the context contradicts common facts. Context: {context} Question: {question} Answer: |

| Сіз сұрақ-жауап жүйесісіз. Тек берілген кoнтекстке сүйеніп, келесі сұраққа қысқа және нақты жауап беріңіз. Кoнтекст жалпыға белгілі деректерге қайшы келсе де, тек кoнтекстті ұстаныңыз. Кoнтекст: {context} Сұрақ: {question} Жауап: |

| You are a Question Answering system. Using only the provided context, answer the following question with a short, concise, and direct response in Kazakh. Context: {retrieved_passages} Question: {question} Answer: |

| Сіз сұрақ-жауап жүйесісіз. Тек берілген кoнтекстті пайдаланып, келесі сұраққа қысқа және тікелей жауап беріңіз. Кoнтексте табылмаған ақпаратты қoспаңыз. Кoнтекст: {retrieved_passages} Сұрақ: {question} Жауап: |
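To illustrate how a template like the ones above might be filled at inference time, the following sketch substitutes the retrieved passages and the question into the English RAG template. The function name, the default `top_k` value, and the choice to join passages with newlines are assumptions for illustration, since the source does not describe how passages are concatenated.

```python
from typing import Sequence

# English RAG template as listed in the prompt table above.
RAG_TEMPLATE_EN = (
    "You are a Question Answering system. Using only the provided context, "
    "answer the following question with a short, concise, and direct response "
    "in Kazakh. Context: {retrieved_passages} Question: {question} Answer:"
)


def build_rag_prompt(question: str, passages: Sequence[str], top_k: int = 5) -> str:
    """Fill the template with the top_k passages (joined by newlines, an assumption)."""
    context = "\n".join(passages[:top_k])
    return RAG_TEMPLATE_EN.format(retrieved_passages=context, question=question)


# Toy passages for illustration only.
prompt = build_rag_prompt(
    "Қазақстанның астанасы қай қала?",
    ["Passage P1", "Passage P2", "Passage P3"],
    top_k=2,
)
print(prompt)
```

Only the first `top_k` passages reach the generator, so the retriever's ranking directly determines what evidence the model can use.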

| Model | Source/Type | Dim | Key Features and Description |

|---|---|---|---|

| BAAI/bge-m3 [52] | Open-source dense embedding | 1024 | Multilingual, multi-granularity model optimized for both dense retrieval and semantic similarity tasks. |

| intfloat/multilingual-e5-base [53] | Open-source dense embedding | 768 | Widely used multilingual encoder trained on large-scale parallel and monolingual corpora; instruction-tuned for retrieval tasks. |

| Snowflake/snowflake-arctic-embed-l-v2.0 [54] | Open-source dense embedding | 1024 | High-capacity encoder optimized for cross-lingual retrieval and embedding quality across multiple languages. |

| sentence-transformers/LaBSE [55] | Open-source dense embedding | 768 | BERT-based multilingual model optimized for sentence-level semantic representation. |

| OpenAI/text-embedding-3-large [56] | Proprietary dense embedding | 3072 | High-performance model designed for general text retrieval and similarity tasks. While marketed as multilingual, there is no public evidence confirming support for Kazakh; included as a closed-source benchmark. |

| Model | Source | Parameter Size | Description |

|---|---|---|---|

| ISSAI/Llama-3.1-KazLLM-1.0-8B [8] | Open-source | 8 B | LLaMA-3.1-based model developed by ISSAI; fine-tuned for Kazakh language understanding and generation. |

| Gen2B/Irbis-7B-v0.1 [57] | Open-source | 7 B | Multilingual model designed for general-purpose NLU and NLG tasks; supports Central Asian languages. |

| inceptionai/Llama-3.1-Sherkala-8B-Chat [7] | Open-source | 8 B | Instruction-tuned LLaMA-3.1 variant adapted for Kazakh and related Turkic languages. |

| GPT-4 [58] | Proprietary | undisclosed | Accessed via OpenAI API; high-performance general-purpose model used as a strong proprietary baseline. |

| Gemini-2.5-flash [59] | Proprietary | undisclosed | Lightweight, efficient model from Google DeepMind trained on diverse multilingual data for fast inference and broad coverage. |

| Agreement Metric | Testbed | Label | Score |

|---|---|---|---|

| Krippendorff’s α | 1 | Is_unanswerable | 0.88 |

| Krippendorff’s α | 2 | ContextContradictionRecognition | 0.812 |

| Krippendorff’s α | 2 | ContextRelevant | 0.775 |

| Krippendorff’s α | 3 | QuestionObjective | 0.941 |

| Krippendorff’s α | 3 | ContextRelevant | 1.000 |

| Krippendorff’s α | 3 | DomainAgree | 1.000 |

| Krippendorff’s α | 3 | QuestionTypeAgree | 0.994 |

| Weighted Cohen’s κ | 2 | ContextContradictionRecognition | 0.813 |

| Weighted Cohen’s κ | 2 | ContextRelevant | 0.776 |

| Weighted Cohen’s κ | 3 | AnswerCorrect | 0.928 |

| Weighted Cohen’s κ | 3 | AnswerComplete | 0.709 |

| Weighted Cohen’s κ | 3 | QuestionCorrect | 0.848 |

| Weighted Cohen’s κ | 3 | QuestionComplete | 0.916 |

| Model | R@1 | R@3 | R@5 | R@10 | MRR | ΔCos | Time (s) |

|---|---|---|---|---|---|---|---|

| BAAI/bge-m3 | 0.641 | 0.836 | 0.892 | 0.929 | 0.746 | 0.0966 | 0.0191 |

| Snowflake/snowflake-arctic-embed-l-v2.0 | 0.591 | 0.779 | 0.839 | 0.907 | 0.699 | 0.0988 | 0.0187 |

| intfloat/multilingual-e5-base | 0.562 | 0.739 | 0.785 | 0.859 | 0.661 | 0.0262 | 0.0161 |

| BM25 | 0.489 | 0.659 | 0.739 | 0.807 | 0.591 | — | 1.024 |

| sentence-transformers/LaBSE | 0.365 | 0.568 | 0.633 | 0.723 | 0.477 | 0.0549 | 0.0154 |

| OpenAI/text-embedding-3-large | 0.323 | 0.458 | 0.522 | 0.588 | 0.404 | 0.0651 | 0.0435 |

| Metric | KazLLM-1.0-8B (Mean ± SD) | KazLLM-1.0-8B (95% CI) | Sherkala-8B (Mean ± SD) | Sherkala-8B (95% CI) | GPT-4o (Mean ± SD) | GPT-4o (95% CI) | Gemini-2.5-Flash (Mean ± SD) | Gemini-2.5-Flash (95% CI) | Irbis-7B (Mean ± SD) | Irbis-7B (95% CI) |

|---|---|---|---|---|---|---|---|---|---|---|

| AR | 0.383 ± 0.130 | [0.298–0.467] | 0.008 ± 0.013 | [0.000–0.017] | 0.904 ± 0.020 | [0.891–0.917] | 0.037 ± 0.016 | [0.026–0.047] | 0.000 ± 0.000 | [0.000–0.000] |

| HR | 0.617 ± 0.130 | [0.533–0.702] | 0.992 ± 0.013 | [0.984–1.000] | 0.096 ± 0.020 | [0.083–0.109] | 0.963 ± 0.016 | [0.953–0.974] | 1.000 ± 0.000 | [1.000–1.000] |

| Overexplain Rate | 0.123 ± 0.058 | [0.086–0.161] | 0.892 ± 0.048 | [0.861–0.923] | 0.003 ± 0.004 | [0.001–0.006] | 0.000 ± 0.000 | [0.000–0.000] | 0.007 ± 0.007 | [0.002–0.011] |

| With Token Rate | 0.506 ± 0.163 | [0.399–0.613] | 0.900 ± 0.035 | [0.877–0.923] | 0.907 ± 0.020 | [0.894–0.920] | 0.000 ± 0.000 | [0.000–0.000] | 0.007 ± 0.007 | [0.002–0.011] |

| Latency ms mean | 1920.1 ± 215.0 | [1779.6–2060.5] | 4369.4 ± 49.8 | [4336.9–4401.9] | 895.6 ± 51.8 | [861.8–929.5] | 1426.5 ± 58.0 | [1388.6–1464.4] | 3035.6 ± 369.2 | [2794.4–3276.8] |

| Metric | KazLLM-1.0-8B (Mean ± SD) | KazLLM-1.0-8B (95% CI) | Sherkala-8B (Mean ± SD) | Sherkala-8B (95% CI) | GPT-4o (Mean ± SD) | GPT-4o (95% CI) | Gemini-2.5-Flash (Mean ± SD) | Gemini-2.5-Flash (95% CI) | Irbis-7B (Mean ± SD) | Irbis-7B (95% CI) |

|---|---|---|---|---|---|---|---|---|---|---|

| AR | 0.459 ± 0.054 | [0.424–0.495] | 0.002 ± 0.003 | [0.000–0.003] | 0.923 ± 0.017 | [0.911–0.934] | 0.039 ± 0.005 | [0.036–0.042] | 0.000 ± 0.000 | [0.000–0.000] |

| HR | 0.541 ± 0.054 | [0.505–0.576] | 0.998 ± 0.003 | [0.997–1.000] | 0.077 ± 0.017 | [0.066–0.089] | 0.961 ± 0.005 | [0.958–0.964] | 1.000 ± 0.000 | [1.000–1.000] |

| Overexplain Rate | 0.021 ± 0.002 | [0.019–0.022] | 0.837 ± 0.061 | [0.797–0.876] | 0.003 ± 0.004 | [0.001–0.006] | 0.000 ± 0.000 | [0.000–0.000] | 0.000 ± 0.000 | [0.000–0.000] |

| With Token Rate | 0.480 ± 0.055 | [0.444–0.516] | 0.838 ± 0.058 | [0.800–0.877] | 0.926 ± 0.015 | [0.916–0.936] | 0.000 ± 0.000 | [0.000–0.000] | 0.000 ± 0.000 | [0.000–0.000] |

| Latency ms mean | 1318.8 ± 176.9 | [1203.3–1434.4] | 4365.6 ± 53.6 | [4330.6–4400.7] | 788.7 ± 69.40 | [743.3–834.0] | 1516.9 ± 159.9 | [1412.5–1621.3] | 4064.4 ± 271.3 | [3887.2–4241.7] |

| Model | CAR (Mean ± SD) | 95% CI (CAR) | POR (Mean ± SD) | 95% CI (POR) | OER (Mean ± SD) | Latency (ms ± SD) |

|---|---|---|---|---|---|---|

| KazLLM-8B | 0.003 ± 0.006 | [0.000–0.037] | 0.160 ± 0.020 | [0.140–0.180] | 0.837 ± 0.015 | 714 ± 295 |

| Irbis-7B-v0.1 | 0.000 ± 0.000 | [0.000–0.037] | 0.010 ± 0.014 | [0.000–0.024] | 0.990 ± 0.014 | 2467 ± 277 |

| Sherkala-8B-Chat | 0.000 ± 0.000 | [0.000–0.037] | 0.030 ± 0.010 | [0.018–0.042] | 0.970 ± 0.010 | 2319 ± 35 |

| GPT-4o | 0.010 ± 0.000 | [0.002–0.054] | 0.243 ± 0.015 | [0.230–0.260] | 0.747 ± 0.015 | 813 ± 76 |

| Gemini-2.5-Flash | 0.000 ± 0.000 | [0.000–0.010] | 0.000 ± 0.000 | [0.000–0.010] | 0.333 ± 0.471 | 1370 ± 66 |

Zero-Shot Learning, Kazakh Prompt

| Model | Answer Correctness (Mean ± Std) | Answer Correctness (95% CI) | Human (Mean ± Std) | Human (95% CI) | GQ (Mean ± Std) | GQ (95% CI) | METEOR (Mean ± Std) | METEOR (95% CI) | ROUGE Recall (Mean ± Std) | ROUGE Recall (95% CI) |

|---|---|---|---|---|---|---|---|---|---|---|

| KazLLM-8B | 0.427 ± 0.009 | [0.410, 0.445] | 0.355 ± 0.014 | [0.328, 0.038] | 0.835 ± 0.005 | 0.835 ± 0.005 | 0.097 ± 0.006 | [0.086, 0.110] | 0.205 ± 0.010 | [0.185, 0.227] |

| Irbis-7B-v0.1 | 0.350 ± 0.007 | [0.337, 0.363] | 0.263 ± 0.013 | [0.258, 0.289] | 0.810 ± 0.004 | [0.802, 0.818] | 0.065 ± 0.005 | [0.056, 0.074] | 0.111 ± 0.007 | [0.097, 0.126] |

| Sherkala-8B-Chat | 0.379 ± 0.008 | [0.365, 0.395] | 0.296 ± 0.014 | [0.269, 0.322] | 0.677 ± 0.006 | [0.665, 0.689] | 0.052 ± 0.004 | [0.045, 0.060] | 0.172 ± 0.009 | [0.154, 0.191] |

| GPT-4o | 0.604 ± 0.010 | [0.586, 0.623] | 0.609 ± 0.014 | [0.580, 0.637] | 0.983 ± 0.002 | [0.979, 0.988] | 0.234 ± 0.009 | [0.217, 0.251] | 0.360 ± 0.012 | [0.336, 0.384] |

| Gemini-2.5-flash | 0.592 ± 0.011 | [0.571, 0.613] | 0.597 ± 0.015 | [0.567, 0.627] | 0.963 ± 0.002 | [0.959, 0.968] | 0.229 ± 0.010 | [0.210, 0.248] | 0.353 ± 0.013 | [0.327, 0.379] |

| Zero-Shot Learning, English Prompt | ||||||||||

| Model\Metric | Answer Correctness | | Human | | GQ | | METEOR | | ROUGE Recall | |

| | Mean ± Std | 95% CI | Mean ± Std | 95% CI | Mean ± Std | 95% CI | Mean ± Std | 95% CI | Mean ± Std | 95% CI |

| KazLLM-8B | 0.416 ± 0.008 | [0.400, 0.432] | 0.335 ± 0.014 | [0.309, 0.364] | 0.820 ± 0.005 | [0.811, 0.830] | 0.096 ± 0.006 | [0.086, 0.108] | 0.205 ± 0.010 | [0.184, 0.225] |

| Irbis-7B-v0.1 | 0.341 ± 0.007 | [0.328, 0.355] | 0.245 ± 0.013 | [0.220, 0.270] | 0.802 ± 0.006 | [0.800, 0.824] | 0.054 ± 0.004 | [0.046, 0.063] | 0.124 ± 0.007 | [0.109, 0.138] |

| Sherkala-8B-Chat | 0.314 ± 0.006 | [0.301, 0.325] | 0.196 ± 0.011 | [0.173, 0.219] | 0.808 ± 0.006 | [0.806, 0.818] | 0.034 ± 0.003 | [0.028, 0.040] | 0.111 ± 0.008 | [0.096, 0.126] |

| GPT-4o | 0.591 ± 0.009 | [0.572, 0.609] | 0.592 ± 0.014 | [0.564, 0.620] | 0.976 ± 0.002 | [0.972, 0.980] | 0.230 ± 0.008 | [0.212, 0.246] | 0.365 ± 0.012 | [0.342, 0.388] |

| Gemini-2.5-flash | 0.553 ± 0.01 | [0.533, 0.569] | 0.552 ± 0.018 | [0.522, 0.560] | 0.946 ± 0.002 | [0.942, 0.951] | 0.213 ± 0.01 | [0.194, 0.226] | 0.345 ± 0.017 | [0.322, 0.366] |

| Ideal RAG, Kazakh Prompt | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Model\Metric | Answer Correctness | | Human | | GQ | | METEOR | | ROUGE Recall | |

| | Mean ± Std | 95% CI | Mean ± Std | 95% CI | Mean ± Std | 95% CI | Mean ± Std | 95% CI | Mean ± Std | 95% CI |

| KazLLM-8B | 0.860 ± 0.006 | [0.848, 0.872] | 0.840 ± 0.017 | [0.832, 0.868] | 0.955 ± 0.003 | [0.949, 0.960] | 0.555 ± 0.009 | [0.537, 0.574] | 0.776 ± 0.010 | [0.756, 0.796] |

| Irbis-7B-v0.1 | 0.727 ± 0.008 | [0.711, 0.742] | 0.703 ± 0.015 | [0.700, 0.734] | 0.861 ± 0.005 | [0.851, 0.870] | 0.402 ± 0.010 | [0.382, 0.422] | 0.698 ± 0.012 | [0.659, 0.707] |

| Sherkala-8B-Chat | 0.73 ± 0.007 | [0.719, 0.748] | 0.753 ± 0.011 | [0.743, 0.757] | 0.782 ± 0.005 | [0.771, 0.792] | 0.377 ± 0.009 | [0.357, 0.395] | 0.712 ± 0.012 | [0.688, 0.735] |

| GPT-4o | 0.886 ± 0.011 | [0.866, 0.896] | 0.873 ± 0.013 | [0.853, 0.908] | 0.971 ± 0.007 | [0.967, 0.983] | 0.642 ± 0.012 | [0.620, 0.666] | 0.793 ± 0.014 | [0.764, 0.807] |

| Gemini-2.5-flash | 0.875 ± 0.009 | [0.854, 0.886] | 0.851 ± 0.015 | [0.833, 0.888] | 0.951 ± 0.007 | [0.947, 0.964] | 0.500 ± 0.014 | [0.482, 0.516] | 0.743 ± 0.018 | [0.721, 0.755] |

| Ideal RAG, English Prompt | ||||||||||

| Model\Metric | Answer Correctness | | Human | | GQ | | METEOR | | ROUGE Recall | |

| | Mean ± Std | 95% CI | Mean ± Std | 95% CI | Mean ± Std | 95% CI | Mean ± Std | 95% CI | Mean ± Std | 95% CI |

| KazLLM-8B | 0.867 ± 0.006 | [0.855, 0.879] | 0.940 ± 0.017 | [0.906, 0.974] | 0.939 ± 0.004 | [0.931, 0.947] | 0.566 ± 0.010 | [0.545, 0.587] | 0.740 ± 0.011 | [0.717, 0.763] |

| Irbis-7B-v0.1 | 0.714 ± 0.008 | [0.699, 0.729] | 0.803 ± 0.011 | [0.7806, 0.825] | 0.784 ± 0.006 | [0.772, 0.795] | 0.363 ± 0.010 | [0.344, 0.382] | 0.683 ± 0.012 | [0.659, 0.707] |

| Sherkala-8B-Chat | 0.631 ± 0.010 | [0.612, 0.651] | 0.647 ± 0.014 | [0.621, 0.654] | 0.611 ± 0.006 | [0.600, 0.622] | 0.273 ± 0.011 | [0.252, 0.295] | 0.366 ± 0.014 | [0.339, 0.393] |

| GPT-4o | 0.869 ± 0.006 | [0.856, 0.880] | 0.950 ± 0.017 | [0.931, 0.957] | 0.966 ± 0.003 | [0.959, 0.972] | 0.580 ± 0.010 | [0.559, 0.599] | 0.768 ± 0.011 | [0.745, 0.788] |

| Gemini-2.5-flash | 0.864 ± 0.007 | [0.852, 0.877] | 0.926 ± 0.018 | [0.911, 0.941] | 0.897 ± 0.006 | [0.885, 0.908] | 0.547 ± 0.011 | [0.525, 0.570] | 0.699 ± 0.013 | [0.675, 0.724] |
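The "Mean ± Std" and "95% CI" columns in the tables above summarize a set of per-run (or per-sample) scores for each model and metric. The section does not state how the intervals were obtained, and several are not symmetric around the mean, so a resampling procedure may have been used. The sketch below shows only the simplest option, a normal-approximation interval from the sample standard deviation. The function name and the example scores are illustrative assumptions, not values from the study.

```python
import math
import statistics


def summarize_scores(scores, z=1.96):
    """Return (mean, sample_std, (ci_low, ci_high)) for a list of scores.

    Assumption: a normal-approximation interval, mean +/- z * sd / sqrt(n),
    with the sample (n - 1) standard deviation. This is one common choice
    and is not confirmed to be the method behind the reported intervals.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("at least two scores are required")
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    half_width = z * sd / math.sqrt(n)
    return mean, sd, (mean - half_width, mean + half_width)


# Made-up scores for three hypothetical runs.
mean, sd, (low, high) = summarize_scores([0.5, 0.6, 0.7])
```

A bootstrap over individual question-level scores would be the usual alternative when the score distribution is skewed or bounded near 0 or 1.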

| Issue | Example Snippet |

|---|---|

| Language switching | There are four strings. |

| Language switching | The opposite side of the Есік is called the back side. |

| Verbosity and Format error | 520 жыл. </Context: Парсы патшасы Дарий І (Ахеменид) ═ 520–519 жж. аралығында 700 мыңдық әскер жинап, Скифияға бет алды. </Request: Дарийдің мақсаты неде? </Response: Дарийдің мақсаты скифтерді талқандау еді. </Request: Дарийдің мақсаты неде? </Response: Дарийдің мақсаты скифтерді талқандау еді. </Request: Дарийдің мақсаты неде? </Response: Дарийдің мақсаты скифтерді талқандау еді. </Request: Дарийдің мақсаты неде? </Response: Дарийдің мақсаты скифтерді талқандау еді. </Request: Дарийдің мақсаты неде? </Response: Дарийдің мақсаты скифтерді талқандау еді. </Request: Дарийдің мақсаты неде? </Response: Дарийдің мақсаты скифтерді талқандау еді. </Request: Дарийдің мақсаты |

| Format error | б.з.б. 519 ж.Darius IDarius ISakaSakaScythiaScythia518 BC518 BCDarius the GreatDarius the GreatScythiansScythiansPersian EmpirePersian EmpireDarius I of PersiaDarius I of PersiaDarius the GreatDarius the ►►► |

| Format error | Жамбыл oблысы.link: https://kk.wikipedia.org/wiki/Ықылас_Dүкенұлыcheck: Ресми сайттан алынғантексерген: [Сіздің атыңыз]date: [Күн мен уақыт]уақыт белдеуі: UTC+06:00хабарлама: [Сіздің хабарламаңыз]cc: [Электрoндық пoштаңыз] |

| Verbosity | Шаңырақ-үй күмбезінің төбесі әрі терезесі. Керегенің сыртынан әр алуан бoяулы жүн oралып, өрнек түсіріліп тoқылған шымши немесе жалаңаш шиден тoқылған ши ұсталады.Кереге мен уықтар уықбау, кермебау, басқұр, құр және басқа да бау-шулармен бекітіледі.Үйдің ағаштан жасалғандарын «сүйегі» деп атайды.Үй ағашының сыртынан арнаулы үй киіздері-қабырғасына туырлық, үстіне үзік, төбесіне түндік жабылады. |

| Top-k | Recall@k | Answer Correctness | |

|---|---|---|---|

| | | Mean ± Std | 95% CI |

| Top-1 | 0.524 | 0.602 ± 0.018 | 0.587–0.617 |

| Top-3 | 0.587 | 0.610 ± 0.015 | 0.595–0.625 |

| Top-5 | 0.612 | 0.615 ± 0.012 | 0.601–0.629 |

| Top-10 | 0.640 | 0.646 ± 0.009 | 0.632–0.66 |

| Top-k | Recall@k | Answer Correctness | |

|---|---|---|---|

| | | Mean ± Std | 95% CI |

| BAAI/bge-m3 | |||

| Top-1 | 0.58 | 0.702 ± 0.009 | [0.696–0.708] |

| Top-3 | 0.61 | 0.695 ± 0.009 | [0.689–0.701] |

| Top-5 | 0.64 | 0.710 ± 0.009 | [0.704–0.716] |

| Top-10 | 0.65 | 0.690 ± 0.009 | [0.684–0.696] |

| Snowflake/snowflake-arctic-embed-l-v2.0 | |||

| Top-1 | 0.565 | 0.745 ± 0.009 | [0.739–0.751] |

| Top-3 | 0.598 | 0.738 ± 0.009 | [0.732–0.744] |

| Top-5 | 0.620 | 0.760 ± 0.009 | [0.754–0.766] |

| Top-10 | 0.635 | 0.725 ± 0.01 | [0.719–0.731] |

| intfloat/multilingual-e5-base | |||

| Top-1 | 0.532 | 0.550 ± 0.01 | [0.544–0.556] |

| Top-3 | 0.56 | 0.543 ± 0.01 | [0.537–0.549] |

| Top-5 | 0.585 | 0.562 ± 0.009 | [0.556–0.568] |

| Top-10 | 0.603 | 0.520 ± 0.01 | [0.514–0.526] |

| sentence-transformers/LaBSE | |||

| Top-1 | 0.305 | 0.378 ± 0.01 | [0.371–0.385] |

| Top-3 | 0.337 | 0.365 ± 0.01 | [0.358–0.372] |

| Top-5 | 0.349 | 0.382 ± 0.01 | [0.375–0.389] |

| Top-10 | 0.365 | 0.385 ± 0.01 | [0.378–0.392] |
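The Recall@k column in the retriever tables follows the definition given in Section 3.1: the share of queries whose first relevant passage appears within the top-k results. A minimal sketch of that computation, together with MRR from the same section, is given below. It assumes each query is represented by the 1-based rank of its first relevant passage, or `None` when no relevant passage was retrieved. The function names and the example ranks are illustrative.

```python
def recall_at_k(first_relevant_ranks, k):
    """Fraction of queries whose first relevant passage has rank <= k."""
    if not first_relevant_ranks:
        raise ValueError("at least one query is required")
    hits = sum(1 for r in first_relevant_ranks if r is not None and r <= k)
    return hits / len(first_relevant_ranks)


def mean_reciprocal_rank(first_relevant_ranks):
    """Average of 1 / rank(q); queries with no relevant passage contribute 0."""
    if not first_relevant_ranks:
        raise ValueError("at least one query is required")
    total = sum(1.0 / r for r in first_relevant_ranks if r is not None)
    return total / len(first_relevant_ranks)


# Made-up ranks for four queries; None means nothing relevant was retrieved.
ranks = [1, 3, None, 2]
r1 = recall_at_k(ranks, 1)
r3 = recall_at_k(ranks, 3)
mrr = mean_reciprocal_rank(ranks)
```

By construction Recall@k cannot decrease as k grows, which matches the Recall@k columns above. Answer Correctness has no such guarantee, since extra passages can also add distracting context.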

| Error Category | Ground Truth | Generated Answer |

|---|---|---|

| Generation Errors | Ахмет Жұбанoв ән өнерінің бұлбұлдары туралы баяндаған “Замана бұлбұлдары” атты кітабы үшін Шoқан Уәлиханoв атындағы сыйлыққа ие бoлды. (Akhmet Zhubanov received the Shokan Ualikhanov Prize for his book “Zamana Bulbyldary” about songbirds.) | Жауап табылмады. (No answer found.) |

| Code-switching | Днепр Украинадағы ең ірі өзен. (The Dnipro is the largest river in Ukraine.) Жoрғалаушыларды зерттейтін зooлoгия ғылымының саласын герпетoлoгия деп атайды. (The branch of zoology that studies reptiles is called herpetology.) | Dнепр Украинадағы ең ірі өзен. (Dnеpr River is the largest river in Ukraine.) Жoрғалаушыларды зерттейтін зooлoгия ғылымының саласын Herpetology деп атайды. (The branch of zoology that studies reptiles is called Herpetology.) |

| Semantic Drift | GPS кooрдинаттарды анықтау және қауіпсіздікті арттыру үшін қажет. (GPS is used to determine coordinates and improve safety.) | GPS құрылғылары өндірістің тиімділігін арттырады. (GPS devices improve production efficiency.) |

| Granularity Issues | Табиғи тілдерді өңдеу—кoмпьютердің адам тілін талдап, түсінуге бағытталған жасанды интеллект саласы. (Natural Language Processing is a field of AI focused on analyzing and understanding human language.) | Табиғи тіл—адамдар арасындағы қарым-қатынас құралы. (Natural language is a means of communication between people.) |

| True Answer | Generated Answer | Answer Correctness |

|---|---|---|

| Наурыз (Nauryz) | Наурыз мерекесін (Nauryz celebration) | 0.96 |

| Үш тoпқа бөлінеді (Divided into three groups) | Үш (Three) | 0.92 |

| Адам қатысуынсыз қoзғалатын көлік (A vehicle that moves without human involvement) | Адамсыз қoзғалатын көлік (A vehicle that moves without people) | 0.95 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mansurova, A.; Tleubayeva, A.; Nugumanova, A.; Shomanov, A.; Seker, S.E. A Systematic Evaluation of Large Language Models and Retrieval-Augmented Generation for the Task of Kazakh Question Answering. Information 2025, 16, 943. https://doi.org/10.3390/info16110943