1. Introduction

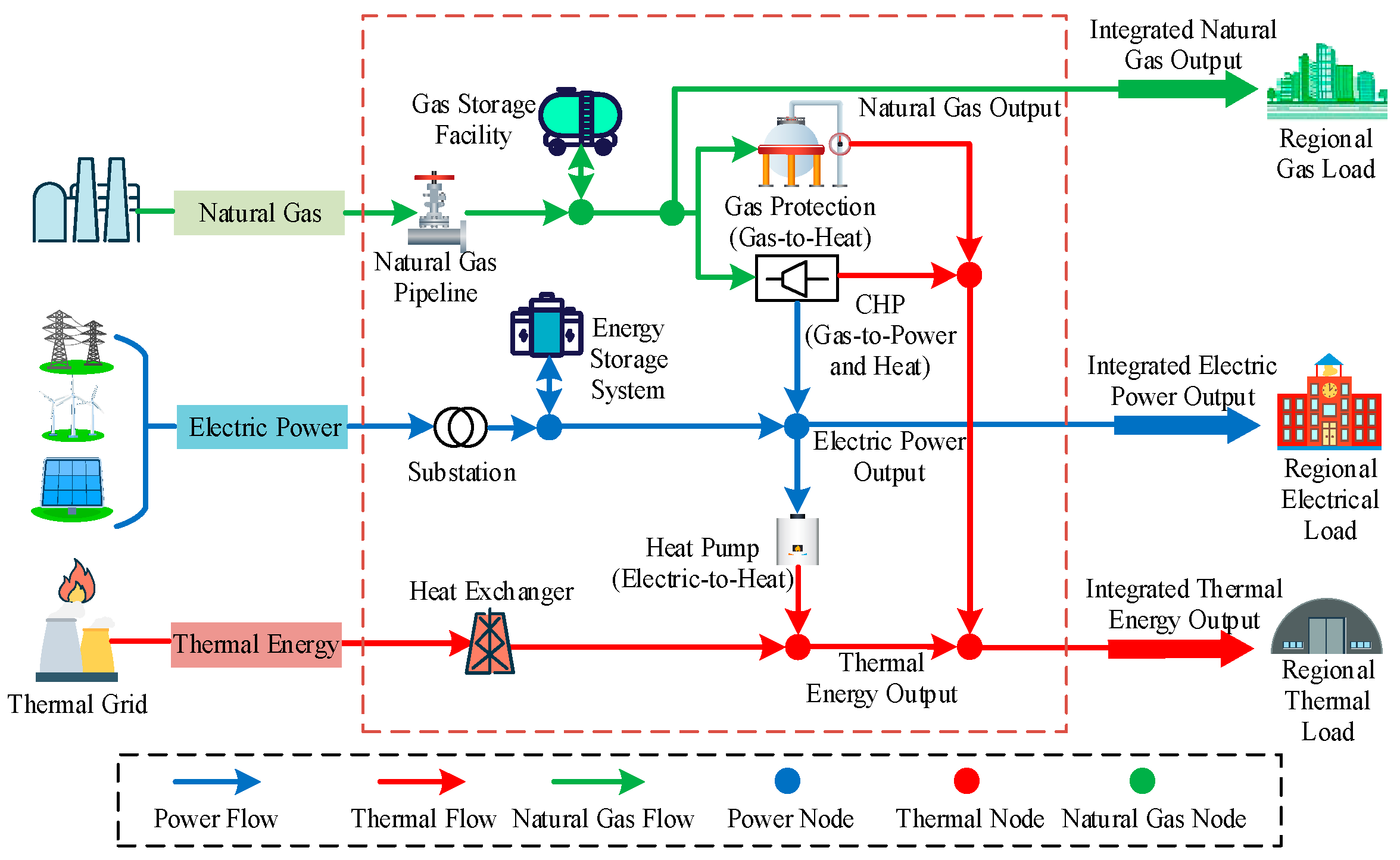

The tight coupling of multiple energy sources across production, distribution, storage, and consumption endows integrated energy systems with operational characteristics fundamentally distinct from those of standalone systems. These systems are characterized by multi-physics coupling, dynamic interrelationships across multiple timescales, strong nonlinearity, and inherent uncertainty [1,2]. Consequently, integrated energy systems have attracted considerable global research interest, encompassing critical areas such as planning and design [3], load forecasting [4], energy management [5], and monitoring and maintenance [6].

Among these, load forecasting serves as the foundation for operational optimization and economic dispatch in integrated energy systems and has been an active research topic in recent years [7,8]. Current mainstream approaches fall into two categories: those that consider only temporal variation patterns and those that also account for the coupling among multiple energy sources. The former can be further divided according to whether the temporal characteristics of the data are decomposed. For example, in references [9,10], the historical load data are not decomposed; instead, traditional fuzzy theory is employed to produce long-term forecasts for transmission network planning and various scenarios.

With the continuous development of signal processing techniques and neural networks, researchers have sought to extract state information in different frequency bands by analyzing the fluctuation characteristics of historical load data [11,12,13,14,15]. In reference [11], wavelet packet decomposition is applied to daily maximum load values and seasonal data to separate high-frequency and low-frequency features, and neural networks are used to predict each decomposed frequency band. In reference [12], a household-level short-term load forecasting model is proposed to capture residents’ daily routines. This method applies Long Short-Term Memory (LSTM) networks and wavelet packet decomposition to load nodes and neighboring nodes to capture highly correlated historical and future information, improving the prediction accuracy of the model. However, the use of fixed wavelet packet basis functions limits the adaptability of this forecasting model. References [13,14] address the non-periodic, nonlinear, volatile, and abrupt stochastic nature of load data by employing empirical mode decomposition to obtain regular and stationary intrinsic mode components. However, this method lacks a rigorous theoretical derivation and does not solve the endpoint effect problem. Reference [15] incorporates a vector feature reconstruction process, which effectively reduces the impact of outliers on overall model training. However, this causes the model accuracy to reach extreme values, so the prediction error cannot continue to decrease.

However, research on how the coupling characteristics among multiple energy sources affect load forecasting accuracy in integrated energy systems remains relatively limited. References [16,17,18] apply Convolutional Neural Networks (CNNs) to load forecasting and extract the spatial characteristics of the data. However, directly using a CNN for feature extraction can disrupt the original temporal features [16], leading to a decrease in prediction accuracy. Reference [17] employs multi-task learning to predict the load in integrated energy systems, considering both the coupling characteristics among multiple energy sources and the temporal features. Although a temporal model is incorporated after feature extraction, it does not effectively address the disruption of temporal features. Reference [18] utilizes ensemble learning to weight the predictions of different models. However, because the models are trained on different data and lack mutual constraints, their prediction accuracy is easily influenced by the features of the training set.

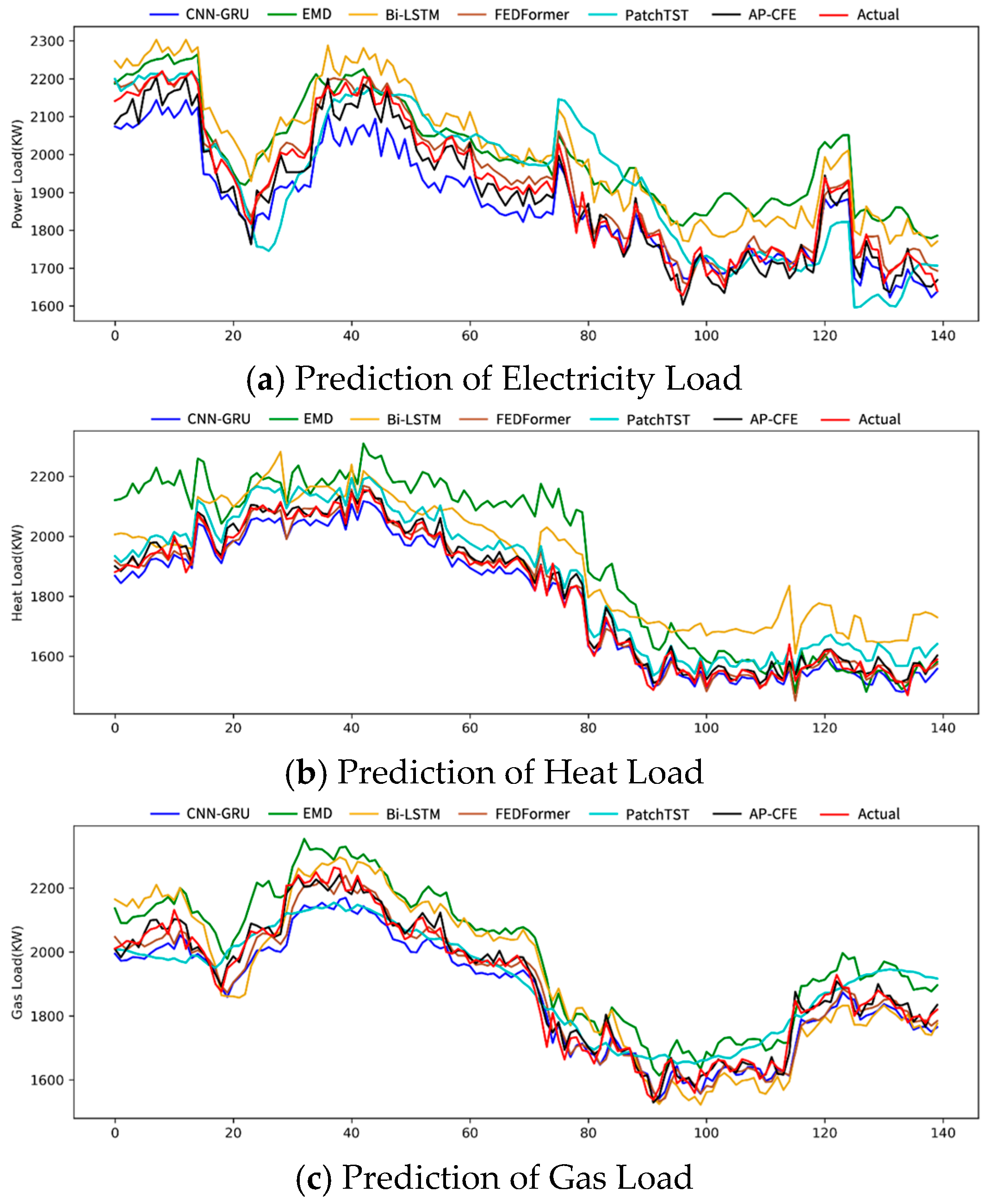

A common limitation of prior load forecasting studies for integrated energy systems is that they often fail to capture the inherent long-term oscillatory behavior of the data. Accurate extraction of periodic information, however, not only reveals the patterns in the current data but also enables the learning of future data trends. Therefore, this paper proposes a short-term adaptive load forecasting method for integrated energy systems based on coupled feature extraction. This method considers both the coupling and periodicity of the data, analyzing the intrinsic correlations among different energy sources while preserving the temporal dependence of the data. Additionally, an adaptive algorithm is proposed to improve the network’s generalization ability and to address overfitting when training on small datasets.
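To make the notion of periodicity concrete, the following minimal sketch estimates a dominant period from a load series by autocorrelation and then folds the series into rows of one cycle each. It is an illustration under stated assumptions, not the model proposed in this paper: the function names `autocorr`, `dominant_period`, and `fold`, the autocorrelation criterion, and the toy series are all hypothetical choices made for this example.

```python
def autocorr(x, lag):
    """Normalized sample autocorrelation of x at the given lag (assumed criterion)."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x)
    if var == 0:
        return 0.0
    return sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag)) / var


def dominant_period(x, min_lag=2, max_lag=None):
    """Return the lag in [min_lag, max_lag] with the highest autocorrelation."""
    if max_lag is None:
        max_lag = len(x) // 2
    return max(range(min_lag, max_lag + 1), key=lambda k: autocorr(x, k))


def fold(x, period):
    """Reshape a 1-D series into rows of one full cycle each (incomplete tail dropped)."""
    rows = len(x) // period
    return [x[r * period:(r + 1) * period] for r in range(rows)]


# Hypothetical toy load series: a 4-step pattern repeated 6 times.
load = [1, 3, 2, 0] * 6
period = dominant_period(load)
grid = fold(load, period)  # rows index the cycle, columns index the position within a cycle
```

In the folded layout, values at the same position in successive cycles sit in the same column, so variation within a cycle and variation across cycles can be examined separately.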

Contribution

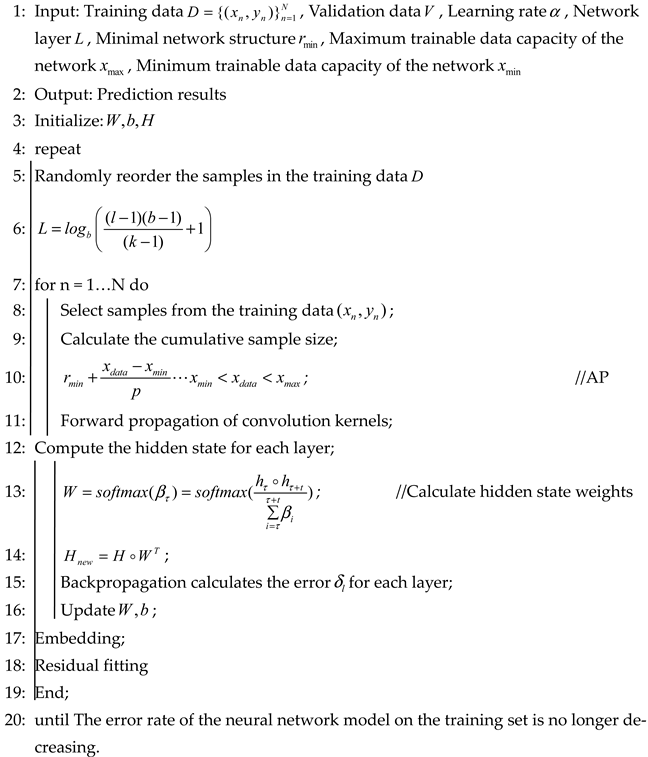

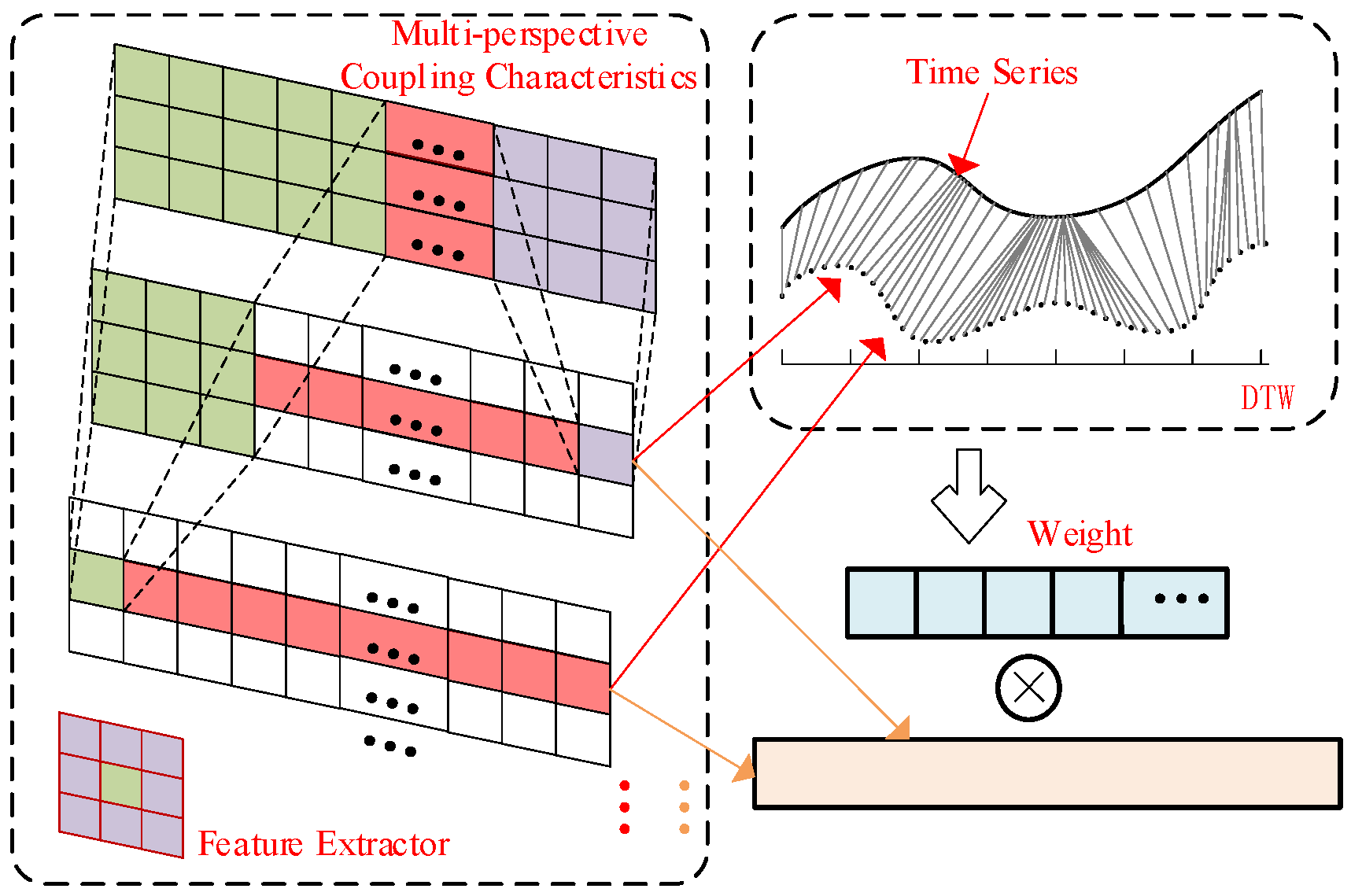

This architecture is designed to enable precise load forecasting for integrated energy systems. Within it, the two-dimensional feature extraction model extracts temporal and coupled features, thereby reducing feature loss; AP enable the adaptive adjustment of neural network parameters; and the residuals are mitigated by a sparse self-attention mechanism. Our main contributions are summarized as follows:

- (1)

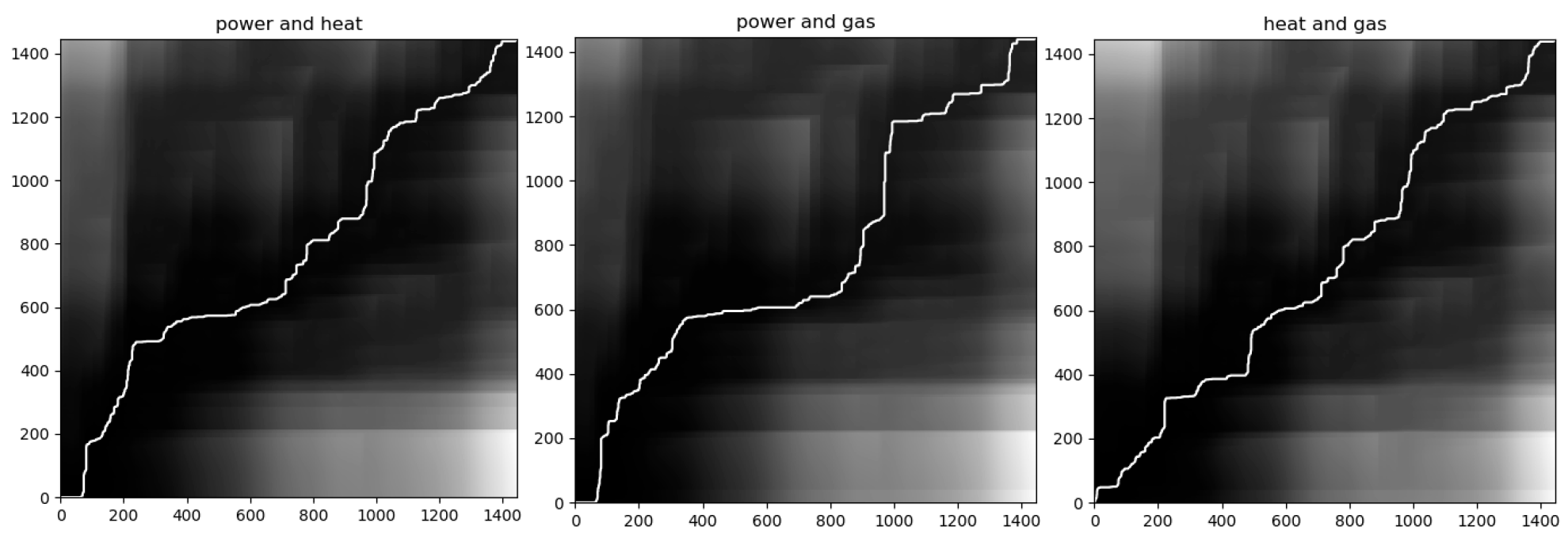

In order to investigate the coupling characteristics of multi-energy systems, Dynamic Time Warping (DTW) is employed to analyze temporal delays and oscillatory behaviors within load data.

- (2)

Periodic characteristics are systematically quantified through multi-timescale information fusion. A two-dimensional feature extraction model is then employed to enhance the correlation of the coupled features within their respective cycles.

- (3)

AP accommodate dynamic network structures, thereby mitigating issues of overfitting and underfitting.
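As an illustration of how DTW can expose a temporal delay between two load series, the following is a minimal sketch of the classic dynamic-programming formulation with an absolute-difference cost. The function name `dtw` and the toy electric and heat series are assumptions made for this example; the configuration used in this paper (cost function, window constraints, normalization) is not specified here and may differ.

```python
import math


def dtw(a, b):
    """Classic DTW: return (cumulative distance, warping path) for sequences a and b."""
    n, m = len(a), len(b)
    # cost[i][j] is the minimal cumulative cost of aligning a[:i] with b[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    # Backtrack from the end to recover the aligned index pairs.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min(
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        )
    path.reverse()
    return cost[n][m], path


# Hypothetical toy data: the heat load repeats the electric load two steps later.
electric = [0, 0, 1, 2, 1, 0, 0, 0]
heat = [0, 0, 0, 0, 1, 2, 1, 0]
distance, path = dtw(electric, heat)
pointwise = sum(abs(e - h) for e, h in zip(electric, heat))
```

In this toy case the pointwise difference between the two series is nonzero, while the DTW distance is zero because the warping path absorbs the delay; the offset between paired indices along the path (for example, the two peaks) indicates the lag between the series.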