2. Materials and Methods

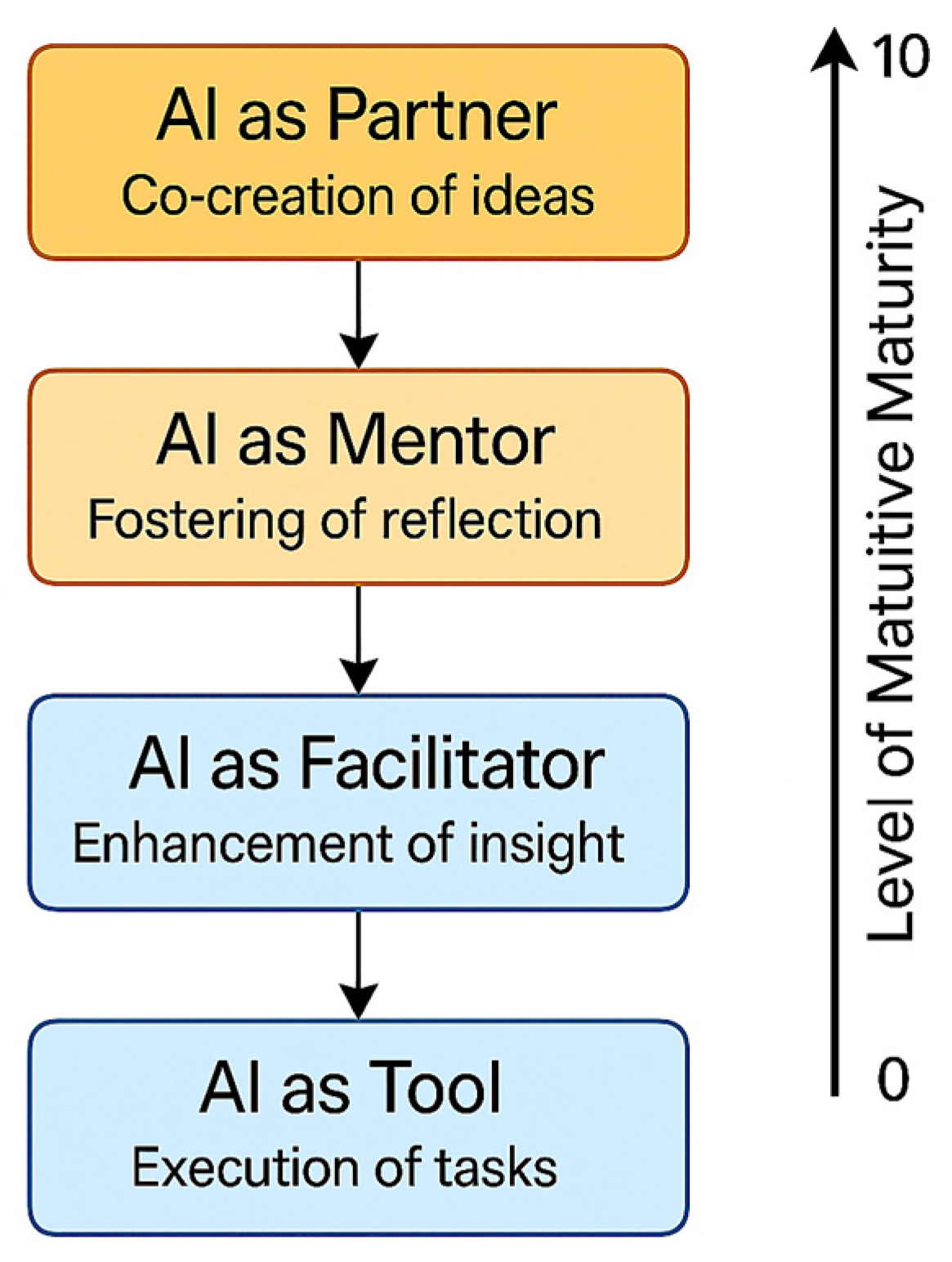

The cognitive co-evolution model builds upon the duality between two processes that coexist in human–AI interaction: cognitive growth and cognitive atrophy.

These processes are not opposites but mutually entangled trajectories of human adaptation to intelligent systems. Growth occurs through stimulation, reflection, and creative co-thinking with AI, while atrophy arises from excessive reliance, automation complacency, and the erosion of self-generated reasoning.

This dual dynamic reflects a dialectical principle of technological cognition: every cognitive extension carries the risk of internal contraction if not consciously regulated.

The model synthesizes two conceptual strands:

- Cognitive growth model (CGM), representing the reinforcement of metacognitive skills through interactive AI use, feedback loops, and reflective questioning.
- Cognitive atrophy paradox (CAP), representing the progressive reduction in analytical effort and epistemic responsibility as AI becomes more autonomous and confident in its outputs.

Although the CGM and the CAP are both presented as four-stage progressions, they represent two opposite trajectories of human–AI cognitive adaptation. CGM explains how reflective, metacognitive engagement leads to increasing autonomy and conceptual reinforcement, whereas CAP describes the erosion pathway driven by over-reliance and automation bias. Their structural similarity reflects the fact that both are evolutionary processes, but they operate in opposite directions and produce different cognitive consequences. To avoid conceptual redundancy, later sections integrate these dual trajectories into a unified co-evolution framework (Section 2.3), where their interaction defines cognitive balance.

2.2. Cognitive Atrophy Paradox

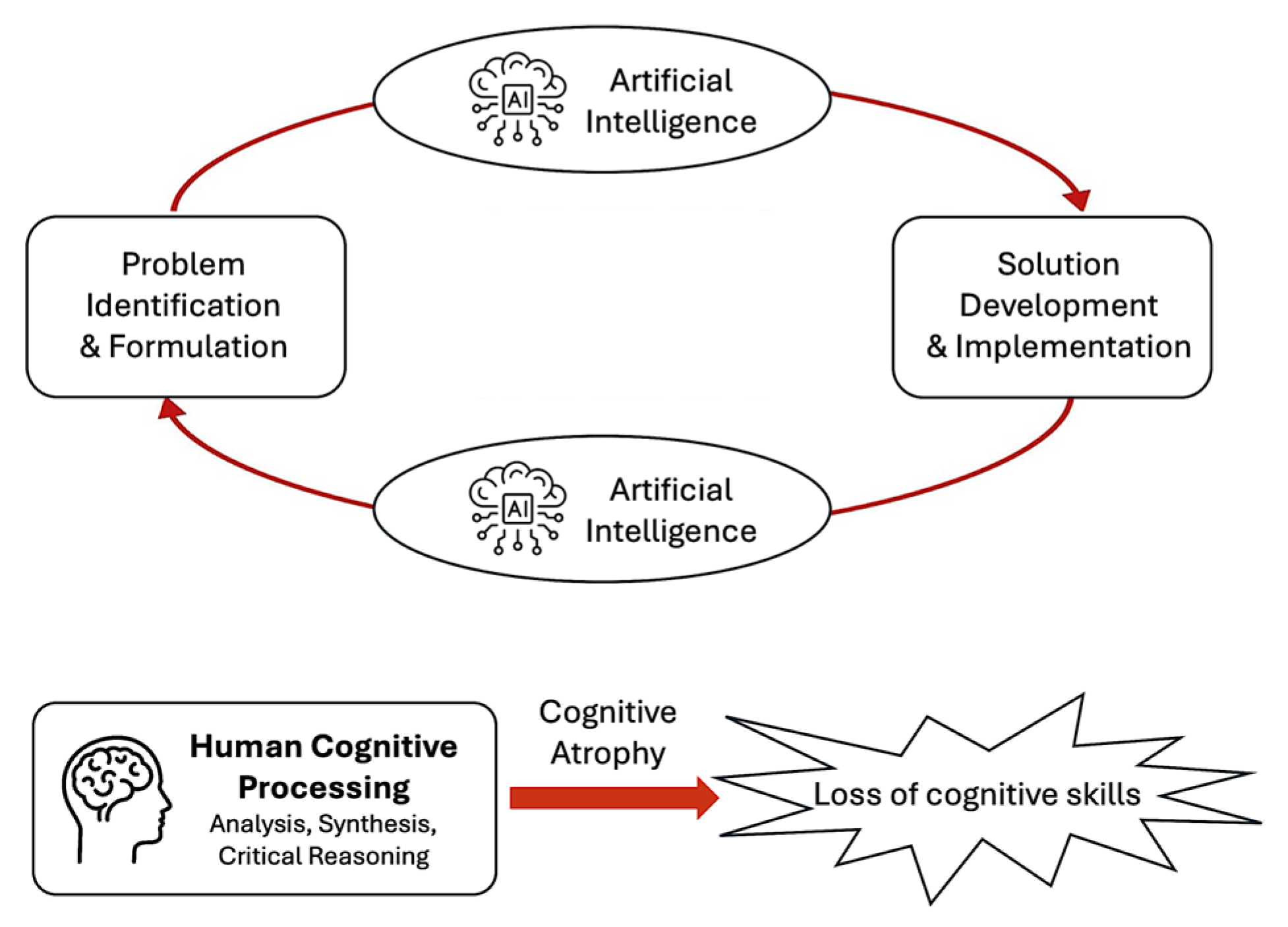

The cognitive atrophy paradox describes a counterintuitive phenomenon in which the same technological systems that enhance cognitive efficiency also erode the very mental functions they are meant to support. As AI systems become increasingly capable, transparent, and autonomous, individuals gradually shift from using these systems to thinking through them, substituting machine inference for human understanding.

This paradox captures the essential fragility of human cognition in the era of intelligent automation: the more fluently technology assists us, the less effort we invest in maintaining the cognitive processes that underlie reasoning, creativity, and judgment. The CAP unfolds through four sequential yet overlapping phases, representing a gradual reconfiguration of human mental effort.

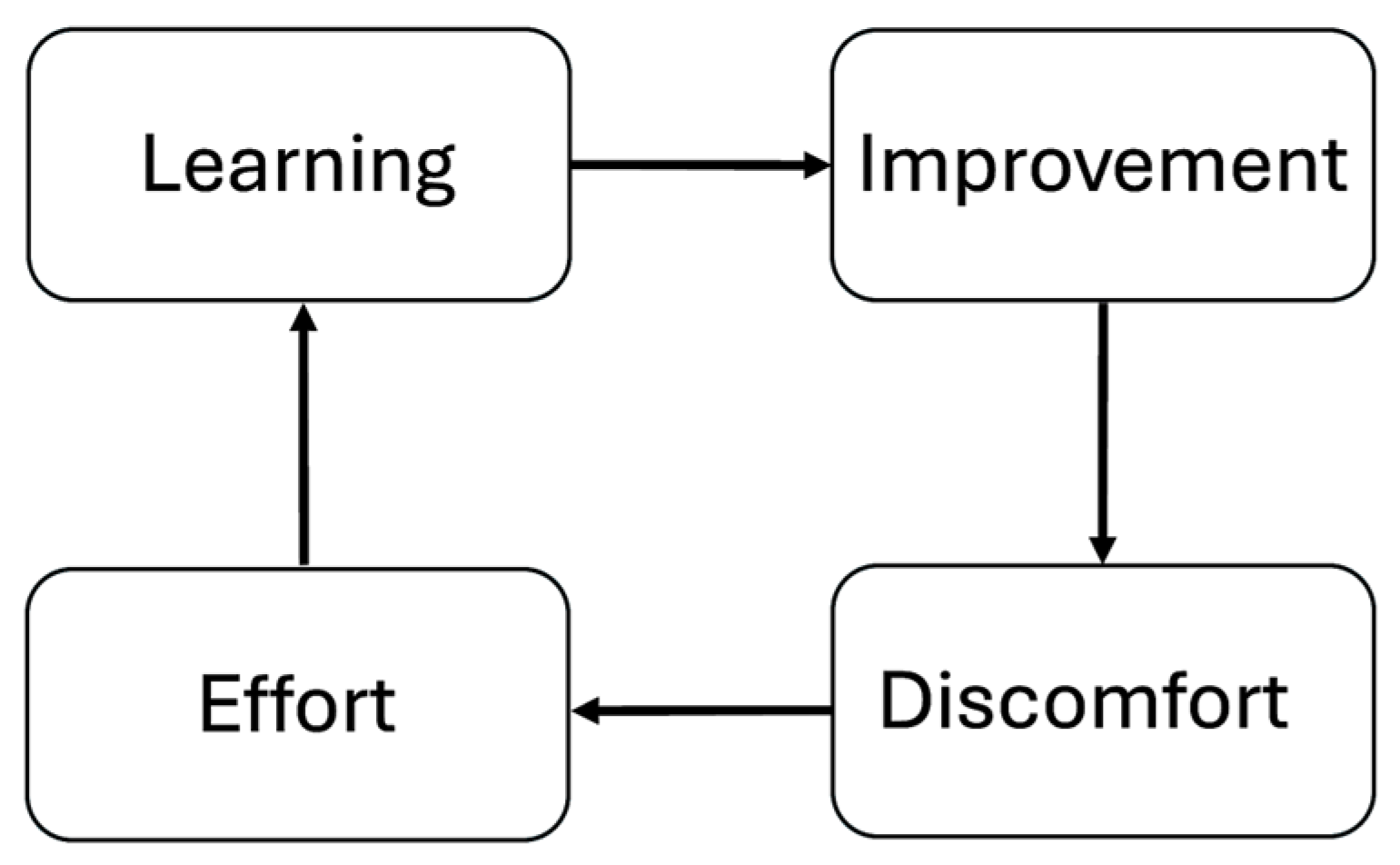

Phase 1. Traditional cognition. Before AI mediation, cognitive activity was fully endogenous. Humans independently formulated problems, synthesized information, and validated results. Each cycle of learning acted as a form of cognitive training, reinforcing neural pathways associated with understanding and recall (Figure 2).

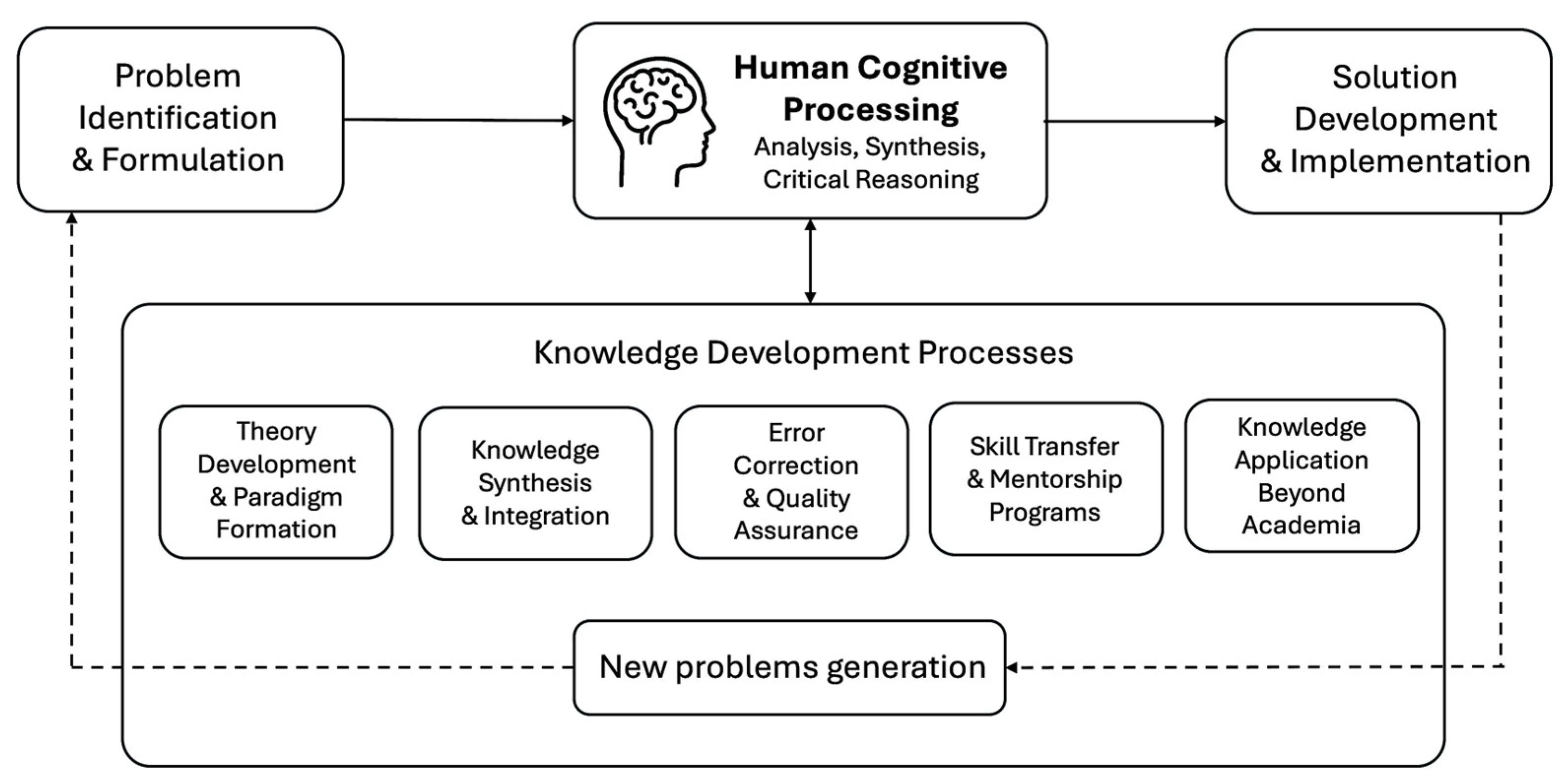

Phase 2. Augmentation phase. The introduction of AI-based tools (such as search engines, analytical assistants, and generative models) provides cognitive amplification. AI acts as a supportive extension of the user’s intellect, offloading routine computations while preserving conceptual control. At this stage, cognitive capacity expands through collaboration (Figure 3).

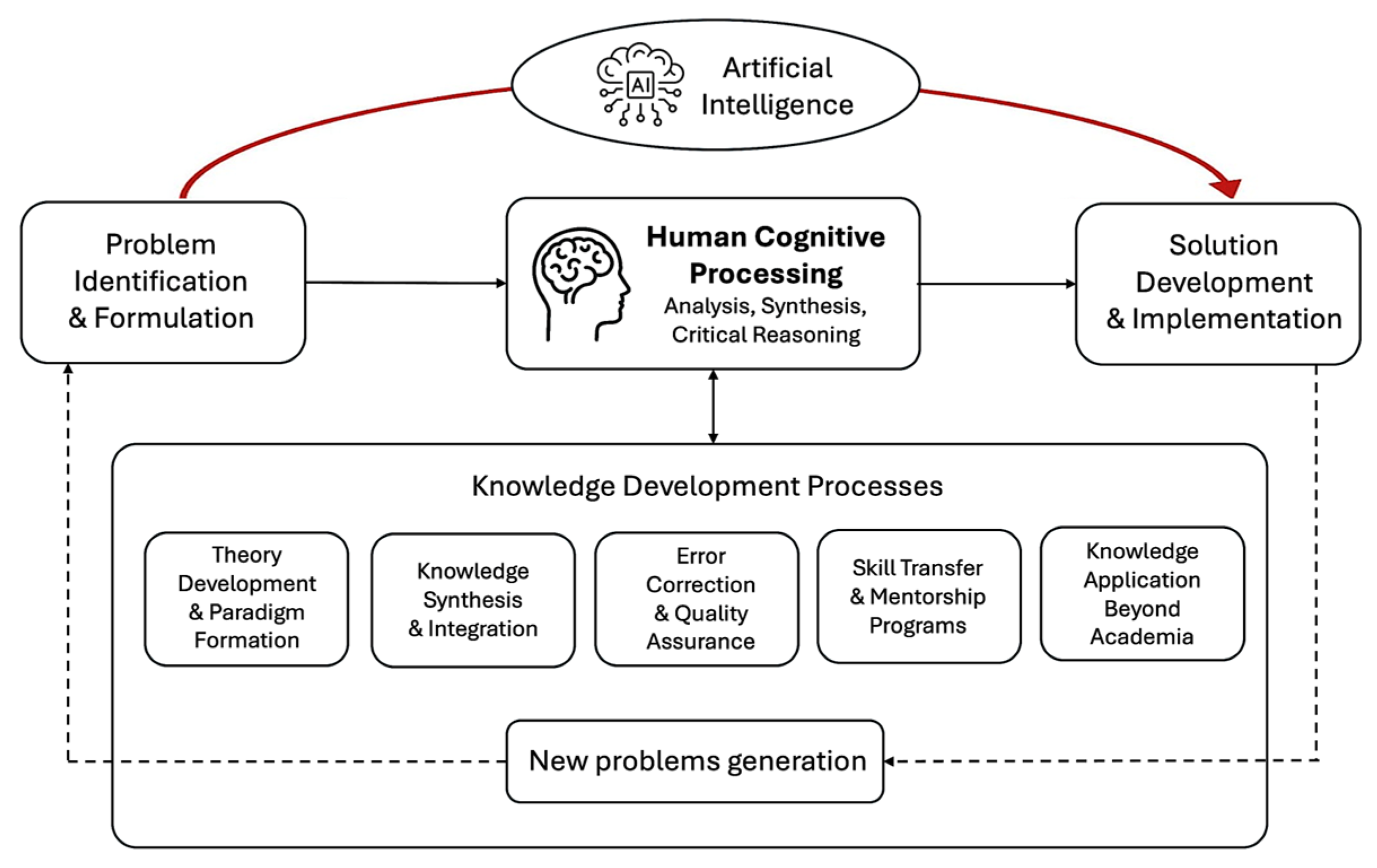

Phase 3. Bypass phase. As AI systems demonstrate growing accuracy and fluency, users begin to bypass internal reasoning. Instead of constructing knowledge, they retrieve it. Understanding becomes secondary to obtaining the correct or efficient output. Reflection declines, and cognitive offloading turns into cognitive avoidance (Figure 4).

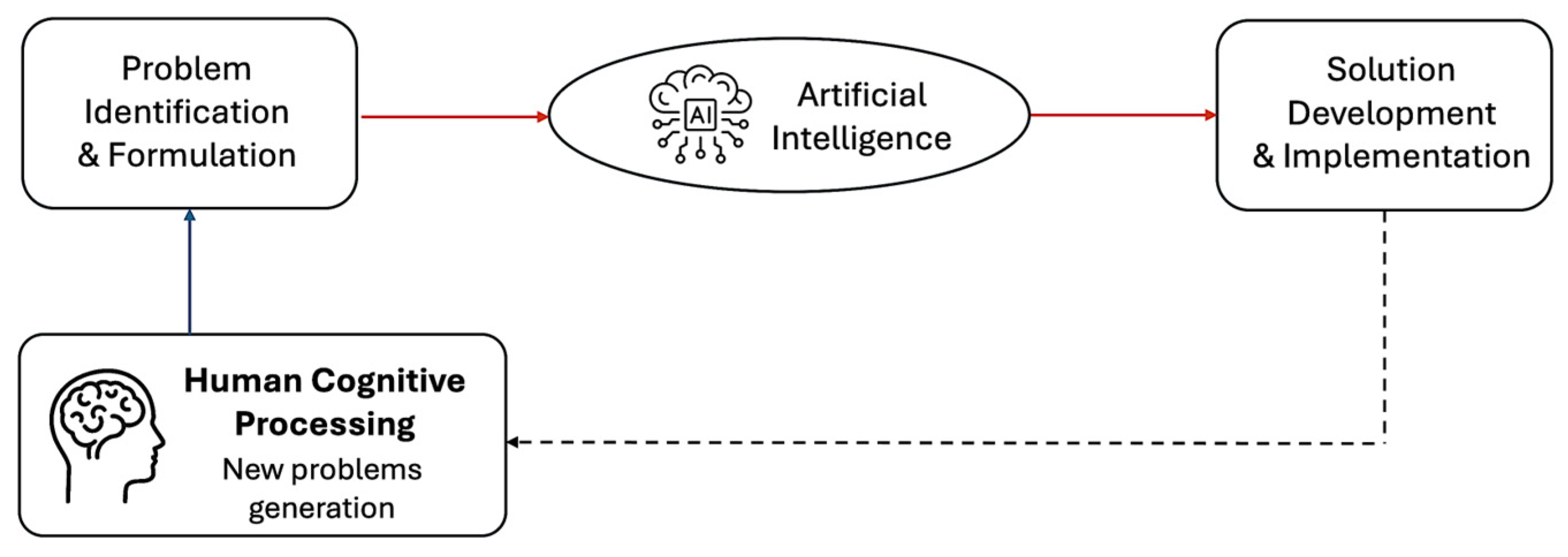

Phase 4. Dependency phase. Prolonged reliance culminates in cognitive passivity. Users cease to verify, interpret, or reconstruct information generated by AI, accepting algorithmic outputs as epistemic authority. The mind no longer rehearses analytical processes and gradually loses the ability to regenerate them without external support (Figure 5).

These phases do not represent discrete categories but a continuum of decreasing cognitive autonomy. Over time, the bypass and dependency phases can lead to structural cognitive weakening, analogous to muscular atrophy in physiology: neural and metacognitive circuits that are no longer exercised deteriorate through disuse.

Cognitive atrophy emerges gradually as individuals delegate increasing portions of analytical and creative effort to intelligent systems. What begins as a convenience evolves into delegation drift: a slow reallocation of cognitive responsibility from comprehension and synthesis to prompting and verification.

This shift produces several manifestations:

- Automation bias, where AI outputs are trusted over personal reasoning.
- Comprehension erosion, as users rely on summaries rather than underlying principles.
- Motivational narrowing, in which intellectual curiosity declines when effort is consistently mediated by algorithms.

The dynamics are subtle: cognitive effort is not lost but redistributed toward managing and interpreting machine outputs. Over time, this reconfiguration weakens deep learning and adaptive problem-solving skills, reinforcing passive consumption behaviors. Mitigation requires balancing automation with deliberate cognitive engagement. Cultivating awareness of these mechanisms allows human cognition to evolve alongside technology rather than erode beneath it.

2.3. Cognitive Co-Evolution Model

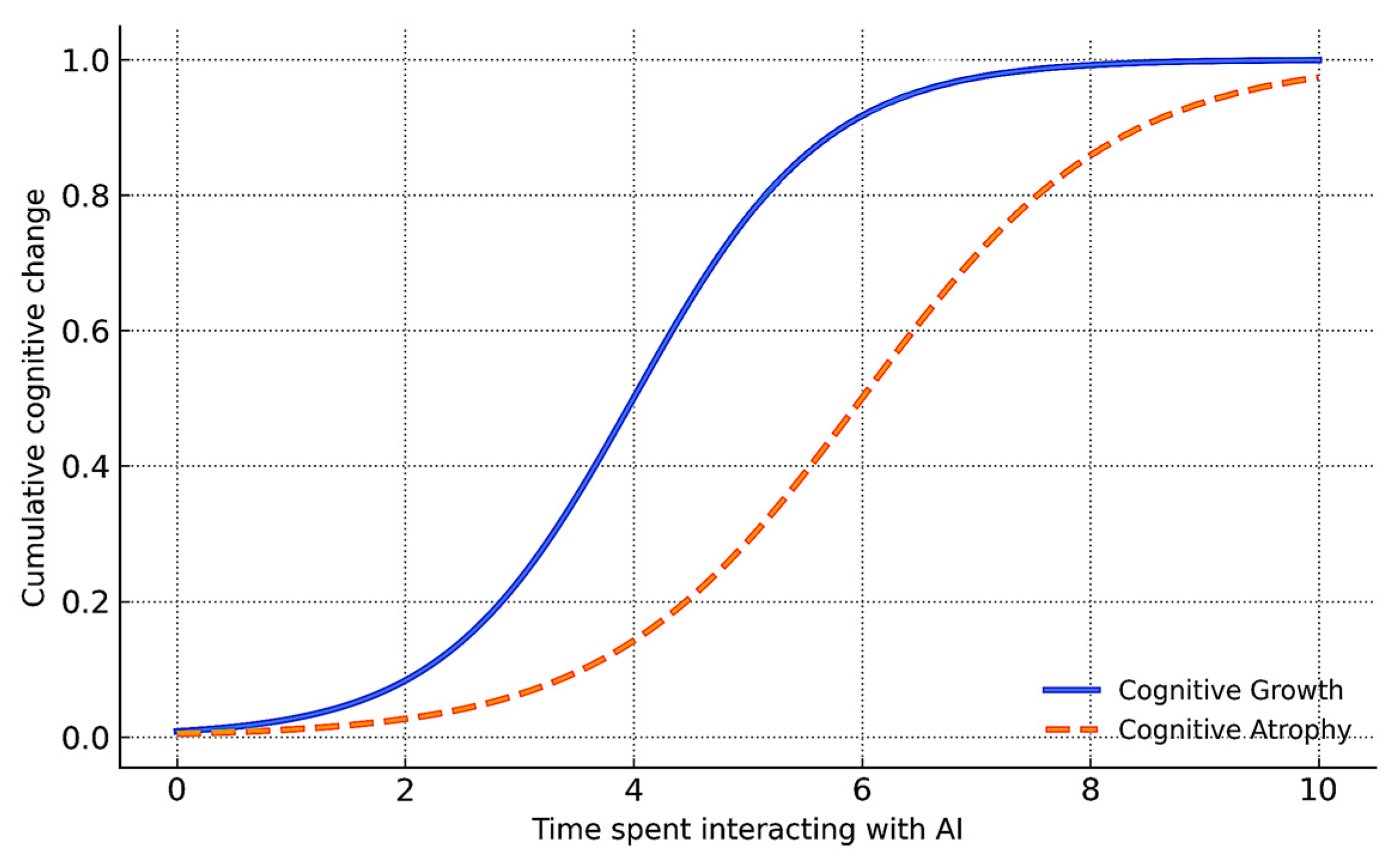

The dynamics of human–AI interaction are best understood as a continuous and reciprocal process in which both cognitive growth and cognitive atrophy evolve over time. Rather than representing mutually exclusive outcomes, these trajectories coexist as dual accumulative processes whose interaction defines the overall direction of cognitive development.

The cognitive co-evolution model conceptualizes this relationship as a system of two interdependent, monotonically increasing functions (Figure 6): one captures the reinforcement of metacognitive and creative capabilities (cognitive growth), and the other represents the gradual accumulation of dependency and automation bias (cognitive atrophy). Both cognitive growth and cognitive atrophy intensify during prolonged interaction with AI systems, though they follow distinct temporal patterns.

To provide a more intuitive interpretation of the dynamics shown in Figure 6, it is useful to view the two curves as parallel but opposing tendencies that unfold during prolonged interaction with AI systems. The cognitive growth curve G(t) begins with a steep incline because early engagement with AI typically stimulates reflection, exploration, and rapid acquisition of new conceptual structures. As the user internalizes these benefits, the rate of improvement naturally slows, producing a plateau that reflects diminishing marginal returns to learning. In contrast, the atrophy curve A(t) starts slowly because the initial use of AI still requires active verification and human oversight. Over time, as reliance on automated outputs increases and habits of independent reasoning weaken, the rate of atrophy accelerates, generating the characteristic upward bend of the second curve.

The intersection of the derivatives of these curves, rather than of the curves themselves, marks the moment of cognitive balance: the point at which the incremental benefits of AI-assisted learning are matched by the incremental risks of over-delegation. Before this point, growth dominates, and human–AI interaction strengthens cognitive autonomy. Beyond it, atrophy becomes increasingly likely as automated reasoning begins to displace internal cognitive effort. The curves therefore do not depict fixed stages or predetermined outcomes but rather illustrate how cognitive trajectories evolve dynamically in response to user behavior, interaction patterns, and the intensity of AI reliance. This interpretation helps clarify the qualitative meaning of the model and highlights the importance of maintaining reflective engagement to avoid entering the atrophy-dominant region.

The critical transition between these two dynamics occurs at the cognitive balance point (CBP), defined mathematically as the moment when the rates of change in both processes are equal:

$$\frac{dG(t)}{dt} = \frac{dA(t)}{dt}$$

At this equilibrium, the benefits of reflective augmentation are exactly counterbalanced by the onset of automation-induced decline.

Although both cumulative functions G(t) and A(t) continue to increase beyond this point, their relative slopes determine whether the system evolves toward sustainable co-adaptation or cognitive dependency. The region preceding the CBP corresponds to the cognitive growth zone, characterized by metacognitive reinforcement and creative exploration, while the region beyond it defines the cognitive inversion zone, where apparent efficiency masks the erosion of analytical depth and epistemic independence.
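The balance-point condition can be checked numerically. The following Python sketch is a hypothetical illustration rather than the model's own parameterization: the exponential forms chosen for G(t) and A(t) and the values of `g_max`, `k_g`, `a0`, and `k_a` are assumptions that only reproduce the qualitative shapes described above (a saturating growth curve and an accelerating atrophy curve). It locates the CBP by bisection on G'(t) - A'(t), using finite-difference derivatives, and labels times before and after it.

```python
import math

# Hypothetical curve shapes (assumptions, not the paper's parameterization):
# G(t) rises steeply and then plateaus; A(t) starts slowly and then accelerates.
def growth(t, g_max=10.0, k_g=0.8):
    return g_max * (1.0 - math.exp(-k_g * t))


def atrophy(t, a0=0.2, k_a=0.3):
    return a0 * (math.exp(k_a * t) - 1.0)


def derivative(f, t, h=1e-5):
    # Central finite-difference approximation of df/dt
    return (f(t + h) - f(t - h)) / (2.0 * h)


def balance_point(lo=0.0, hi=20.0, tol=1e-8):
    """Find t where G'(t) = A'(t) by bisection on G'(t) - A'(t)."""
    gap = lambda t: derivative(growth, t) - derivative(atrophy, t)
    if gap(lo) <= 0 or gap(hi) >= 0:
        raise ValueError("G' - A' does not change sign on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if gap(mid) > 0:  # growth still outpaces atrophy
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def zone(t, t_star):
    # Before the CBP: growth zone; after it: inversion zone
    return "cognitive growth zone" if t < t_star else "cognitive inversion zone"


t_star = balance_point()
print(f"CBP at t = {t_star:.3f}")
print(zone(1.0, t_star), "|", zone(10.0, t_star))
```

For these assumed forms the CBP also has a closed form, t* = ln(g_max·k_g / (a0·k_a)) / (k_g + k_a), about 4.45 cycles with the values above, which provides a check on the numerical result.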

The cognitive co-evolution model thus provides a unifying theoretical foundation for subsequent quantitative analysis. In this sense, the model links theory and measurement: it captures not only what changes as humans engage with AI but also how the pace of those changes defines long-term cognitive sustainability. Through this framework, cognitive health can be continuously monitored and regulated via applied cognitive management (ACM) mechanisms, ensuring that technological augmentation remains reflective, adaptive, and intellectually regenerative.

2.5. Applied Cognitive Management Framework

The applied cognitive management framework translates the theoretical constructs of cognitive growth, atrophy, and sustainability into a practical governance system for maintaining cognitive integrity within human–AI ecosystems (Figure 8).

While the cognitive co-evolution model captures the dynamic interplay between growth and decline, and the cognitive sustainability index quantifies their balance, ACM establishes the feedback and control architecture that transforms analytical insight into adaptive action.

At its core, ACM functions as a closed-loop regulatory system, continuously monitoring, interpreting, and adjusting cognitive dynamics to preserve equilibrium between human autonomy and algorithmic assistance. The framework follows a three-stage operational cycle:

1. Measurement: continuous tracking of cognitive indicators, including CSI values and behavioral proxies, to assess cognitive engagement and automation reliance.
2. Interpretation: analytical evaluation of deviations from the optimal cognitive balance zone, identifying early signs of drift toward over-delegation or cognitive fatigue.
3. Intervention: application of targeted educational, managerial, or behavioral adjustments that restore balance, ensuring sustainable co-adaptation of human and artificial cognition.

This cyclical mechanism mirrors classical cybernetic control systems, but it is specifically adapted for cognitive–organizational regulation.
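The measurement, interpretation, and intervention stages can be sketched as a minimal Python loop. Everything here is hypothetical: the CBZ bounds (`CBZ_LOW`, `CBZ_HIGH`), the three-reading moving average used as the measurement step, the state labels, and the mapping from states to actions are illustrative assumptions, not values or rules given by the framework.

```python
from statistics import mean

# Hypothetical balance-zone bounds, chosen only for illustration.
CBZ_LOW, CBZ_HIGH = 1.5, 2.5


def measure(csi_readings, window=3):
    """Measurement: smooth the latest CSI readings with a simple moving average."""
    return mean(csi_readings[-window:])


def interpret(csi):
    """Interpretation: locate the smoothed CSI relative to the balance zone."""
    if csi < CBZ_LOW:
        return "over-delegation"
    if csi > CBZ_HIGH:
        return "human-dominant"
    return "balanced"


def intervene(state):
    """Intervention: map the interpreted state to an illustrative action."""
    actions = {
        "over-delegation": "insert human-in-the-loop checkpoints and human-only task cycles",
        "human-dominant": "no correction required; continue monitoring",
        "balanced": "no correction required; continue monitoring",
    }
    return actions[state]


def acm_cycle(csi_readings):
    # One pass of the measure -> interpret -> intervene loop
    csi = measure(csi_readings)
    state = interpret(csi)
    return csi, state, intervene(state)


print(acm_cycle([2.4, 1.8, 1.2, 1.0]))
```

Treating the above-zone state as human-dominant rather than as a fault follows the Results, where a profile above the CBZ is described as sustainable but relying mainly on human cognition.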

ACM operates across four interconnected levels of control:

- At the individual level, users employ reflective self-assessment tools, dashboards, and feedback loops to monitor their own CSI and cognitive engagement.
- At the educational level, instructors and learning platforms analyze aggregated cognitive metrics to adapt course design, assessment methods, and metacognitive scaffolding.
- At the professional level, organizations monitor workforce interaction patterns with AI tools, adjusting automation intensity and promoting analytical independence.
- At the institutional level, policymakers and leadership bodies incorporate cognitive sustainability metrics into digital transformation strategies, innovation management, and ethical governance frameworks.

At every level, ACM prioritizes preventive rather than corrective regulation, focusing on maintaining awareness and cognitive resilience before dependency or skill erosion occurs. The goal of ACM is not to minimize automation, but to manage its cognitive consequences. Preventive strategies may include:

- Scheduled reflective pauses or “human-in-the-loop” checkpoints in automated decision chains.
- Alternating cycles of human-only and AI-assisted task execution.
- “Explain-your-choice” mechanisms to sustain reasoning transparency and epistemic accountability.

These interventions stabilize the cognitive homeostasis zone, preventing cumulative cognitive drift and fostering a balanced distribution of effort between comprehension and automation.

Formally, the ACM process can be described as a dynamic feedback function:

where

denotes the instantaneous cognitive sustainability level,

represents the deviation from the cognitive balance zone and

reflects the magnitude or intensity of corrective interventions.

When

exceeds a critical threshold, ACM triggers adaptive responses that recalibrate the human–AI interaction ratio, reinforcing reflective engagement and cognitive autonomy.
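The threshold behavior described above can be illustrated with a dead-band proportional rule, a common control-engineering pattern, used here as a hypothetical stand-in rather than the ACM feedback function itself. In this sketch the deviation is the signed distance of the CSI from the balance zone, and the intervention intensity stays at zero until the absolute deviation exceeds a critical threshold, then grows linearly. The zone bounds, `THRESHOLD`, and `GAIN` are assumed values.

```python
# Hypothetical parameters for illustration only.
CBZ_LOW, CBZ_HIGH = 1.5, 2.5  # assumed balance-zone bounds
THRESHOLD = 0.3               # assumed critical deviation that triggers a response
GAIN = 2.0                    # assumed proportional gain


def deviation(csi, low=CBZ_LOW, high=CBZ_HIGH):
    """Signed distance from the balance zone: negative below, positive above, 0 inside."""
    if csi < low:
        return csi - low
    if csi > high:
        return csi - high
    return 0.0


def intervention_intensity(csi, threshold=THRESHOLD, gain=GAIN):
    """Dead-band proportional rule: zero inside the tolerance, linear beyond it."""
    excess = abs(deviation(csi)) - threshold
    return gain * excess if excess > 0 else 0.0


for reading in (2.0, 1.3, 1.0, 3.2):
    print(reading, round(deviation(reading), 3), round(intervention_intensity(reading), 3))
```

The dead band keeps small, noisy fluctuations of the CSI from triggering interventions, while the linear term scales the response with the size of the imbalance.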

Practically, ACM acts as the operational interface between cognitive analytics and digital governance. Integrated into organizational dashboards, it enables real-time visualization of team-level cognitive health and early detection of automation-induced dependency.

For executives, educators, and policy leaders, ACM provides a strategic mechanism to reconcile immediate efficiency gains with long-term preservation of intellectual capital, ensuring that digital transformation enhances, rather than erodes, human cognitive capacity.

Ultimately, the ACM framework transforms the abstract notion of cognitive sustainability into a continuous process of measurement, reflection, and adaptation. It represents a new paradigm of cognitive governance, in which human and artificial intelligence co-evolve under deliberate supervision that keeps technological progress both efficient and intellectually regenerative.

3. Results

3.1. Derived Cognitive Parameters and Functional Relationships

To examine the quantitative behavior of the CSI and validate the dynamics of the cognitive co-evolution model, a simplified simulation framework was developed. The model quantifies the balance between human cognitive engagement and AI dependency using normalized behavioral parameters and adaptive weighting. Although the data are illustrative, the simulation reflects realistic interaction scenarios observed in AI-supported educational and professional environments.

To empirically illustrate the operational behavior of the CSI, two representative user profiles were modeled:

- Researcher, exemplifying high autonomy and reflective balance.
- Student, exhibiting partial cognitive delegation and dependency.

The purpose of this simulation was not to describe actual participants but to demonstrate the interpretability and sensitivity of the index under realistic cognitive configurations.

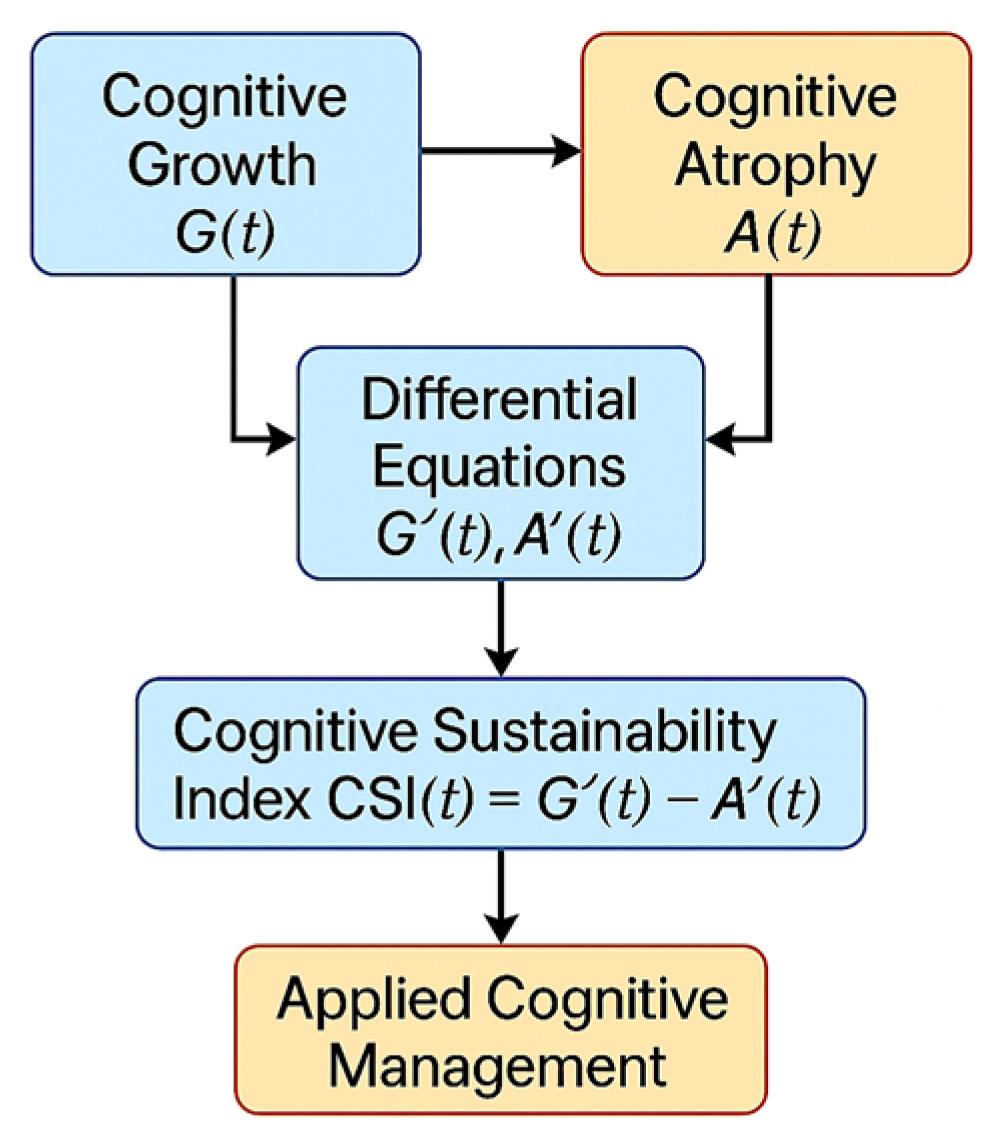

Cognitive performance over time is represented by two interacting functions:

- Cognitive growth G(t): reinforcement of autonomy, reflection, and creativity.
- Cognitive atrophy A(t): decline of analytical depth caused by over-delegation and automation bias.

Both are parameterized through five behavioral indicators.

The resulting cognitive sustainability index is expressed as:

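where H, R, and C (autonomy, reflection, and creativity) denote human-side parameters, D and L (delegation and reliance) capture automation influence, and $W_H$ and $W_{AI}$ are weight coefficients reflecting contextual emphasis.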

The parameter notation is given in Table 3. All parameters were normalized to a 0–10 ordinal scale, enabling comparative assessment between users or time periods. For this study, steady-state (static) values were used to isolate the equilibrium characteristics of the index.

Each variable in the CSI can be evaluated on a 0–10 scale with behavioral anchors (Table 4), ensuring consistency across self-assessment and expert assessment.

Both self-assessment and external (instructor or supervisor) assessment forms are used. The self-assessment captures perceived cognitive engagement, while the external evaluation provides an objective counterpoint based on observed behaviors (e.g., originality, reasoning depth, frequency of independent analysis).

Each parameter set was evaluated through a two-stage computation:

1. Raw CSI values were first obtained under a neutral configuration ($W_H = W_{AI} = 1$), providing an unweighted reference ratio between human and AI factors.
2. Contextual recalibration. The results were then adjusted using the context-specific weighting scheme:
   - For human-dominant conditions (Researcher), $W_H$ was increased to 1.4.
   - For automation-dominant conditions (Student), $W_{AI}$ was increased to 1.5.

This recalibration allows the CSI to reflect not only the parameter magnitudes but also the sensitivity of the cognitive environment, emphasizing reflection in growth contexts and delegation pressure in dependency contexts. The context-adjusted results are reported in Table 5.
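The two-stage computation can be sketched in Python. The sketch assumes, purely for illustration, that the CSI is a weighted ratio of the summed human-side scores (H, R, C) to the summed automation-side scores (D, L); this functional form and the profile scores are hypothetical, and only the recalibrated weights (1.4 and 1.5) are taken from the text.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    H: float  # human autonomy, 0-10
    R: float  # reflection, 0-10
    C: float  # creativity, 0-10
    D: float  # delegation, 0-10
    L: float  # reliance, 0-10


def csi(p: Profile, w_h: float = 1.0, w_ai: float = 1.0) -> float:
    """ASSUMED form: weighted human-side sum divided by weighted automation-side sum."""
    automation = w_ai * (p.D + p.L)
    if automation <= 0:
        raise ValueError("automation-side score must be positive")
    return w_h * (p.H + p.R + p.C) / automation


# Hypothetical scores, not the values reported in Table 5.
researcher = Profile(H=8, R=8, C=7, D=4, L=4)
student = Profile(H=4, R=3, C=4, D=7, L=7)

# Stage 1: neutral configuration, W_H = W_AI = 1.
raw = {"Researcher": csi(researcher), "Student": csi(student)}

# Stage 2: contextual recalibration with the weights stated in the text.
adjusted = {
    "Researcher": csi(researcher, w_h=1.4),
    "Student": csi(student, w_ai=1.5),
}

for name in raw:
    print(f"{name}: raw={raw[name]:.2f}, adjusted={adjusted[name]:.2f}")
```

Under this assumed form, raising W_H scales the Researcher index up and raising W_AI scales the Student index down, which mirrors the stated intent of emphasizing reflection in growth contexts and delegation pressure in dependency contexts.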

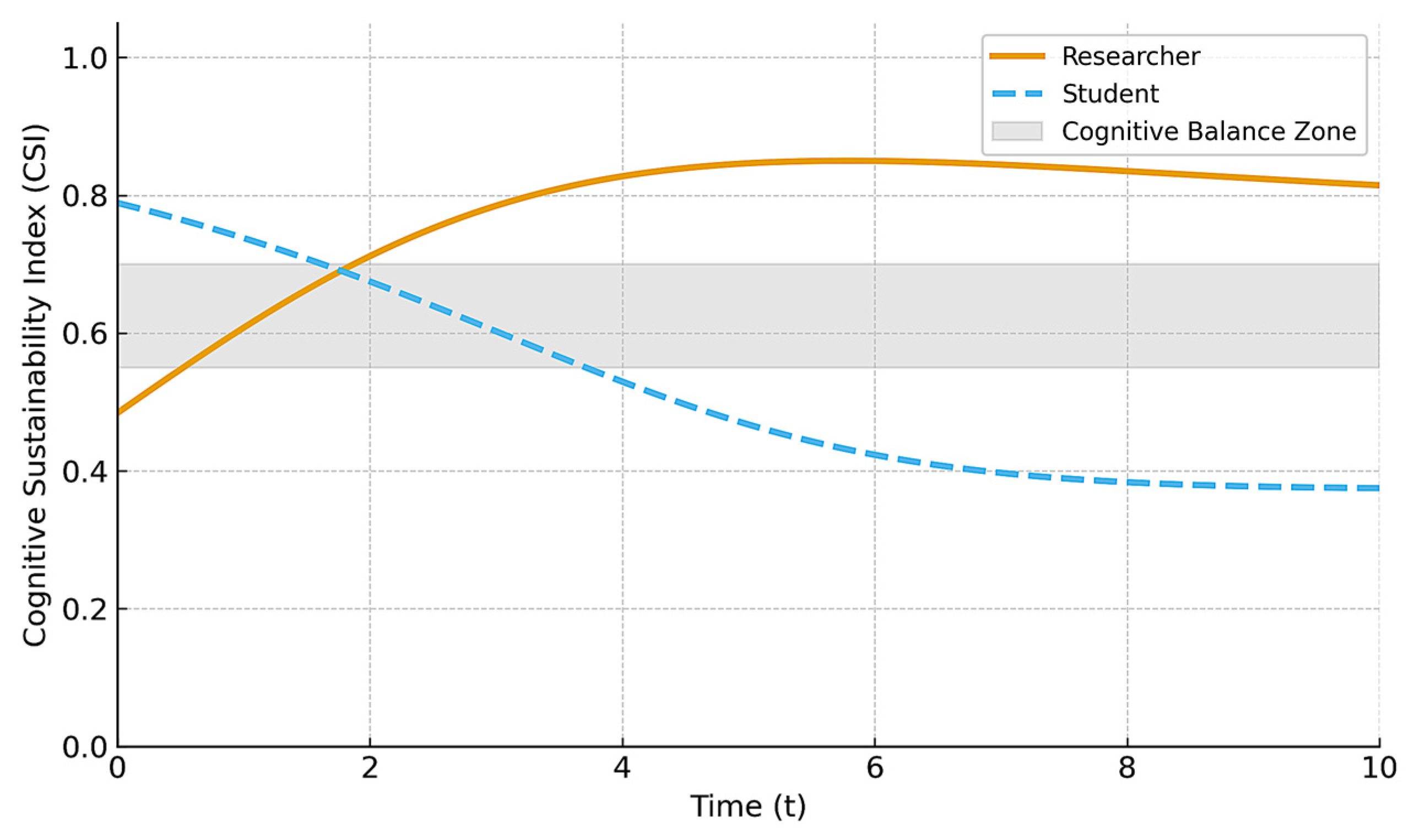

For each profile, the CSI trajectory over time is computed. The researcher profile assumes high metacognitive regulation and low automation reliance, with rapid growth and slow atrophy. The student profile assumes lower metacognitive regulation and higher automation reliance, with slower growth and faster atrophy. Time is modeled in normalized learning cycles. The shaded band denotes the cognitive balance zone, where human and AI contributions remain cognitively sustainable.

Figure 10 illustrates the temporal evolution of the CSI for the Researcher and Student representative user profiles, modeled using differential growth and atrophy parameters. The CSI quantifies the dynamic balance between metacognitive engagement and automation reliance across time.

The time variable t is expressed in normalized units corresponding to progressive learning or operational cycles rather than absolute time. Each increment represents a discrete stage in cognitive adaptation or automation exposure.

The Researcher curve (solid line) demonstrates a consistently high level of cognitive sustainability, remaining above the cognitive balance zone (CBZ), the shaded CSI interval in the figure. This profile reflects a strong dominance of metacognitive regulation over automation reliance, ensuring sustained conceptual understanding and reflective control.

In contrast, the Student curve (dashed line) initially rises as learning progresses but soon declines below the CBZ, entering the cognitive atrophy zone.

This indicates a transition from active synthesis to dependency on automation, where comprehension and reasoning are increasingly replaced by verification-oriented behavior.

The shaded area corresponding to the CBZ represents the optimal equilibrium between human and artificial cognition, where automation enhances performance without undermining understanding. The divergence of the two curves emphasizes how differences in metacognitive regulation and automation reliance can determine the long-term sustainability of cognitive performance.

Overall, the figure demonstrates that keeping the CSI at or above the lower boundary of the CBZ is essential for preserving cognitive integrity within AI-assisted learning or professional environments, and that continuous monitoring of the CSI can serve as an early diagnostic indicator of cognitive imbalance or dependency.
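Using the CSI as an early diagnostic indicator can be sketched as a simple persistence rule: flag the first point at which the CSI stays below the lower CBZ bound for several consecutive cycles, so that a single noisy reading does not raise an alarm. The bound value, the persistence length, the function name, and the trajectory below are all hypothetical.

```python
from typing import Optional, Sequence


def first_sustained_drop(
    csi_series: Sequence[float], lower_bound: float, persistence: int = 3
) -> Optional[int]:
    """Return the start index of the first run of `persistence` consecutive
    readings below `lower_bound`, or None if no such run occurs."""
    run = 0
    for i, value in enumerate(csi_series):
        run = run + 1 if value < lower_bound else 0
        if run == persistence:
            return i - persistence + 1
    return None


# Hypothetical Student-like trajectory over normalized cycles
student = [1.2, 1.8, 2.1, 1.9, 1.6, 1.4, 1.3, 1.1, 1.0]
print(first_sustained_drop(student, lower_bound=1.5))
```

With these values the isolated low reading at cycle 0 is ignored, and the alert points to cycle 5, where the sustained decline begins.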

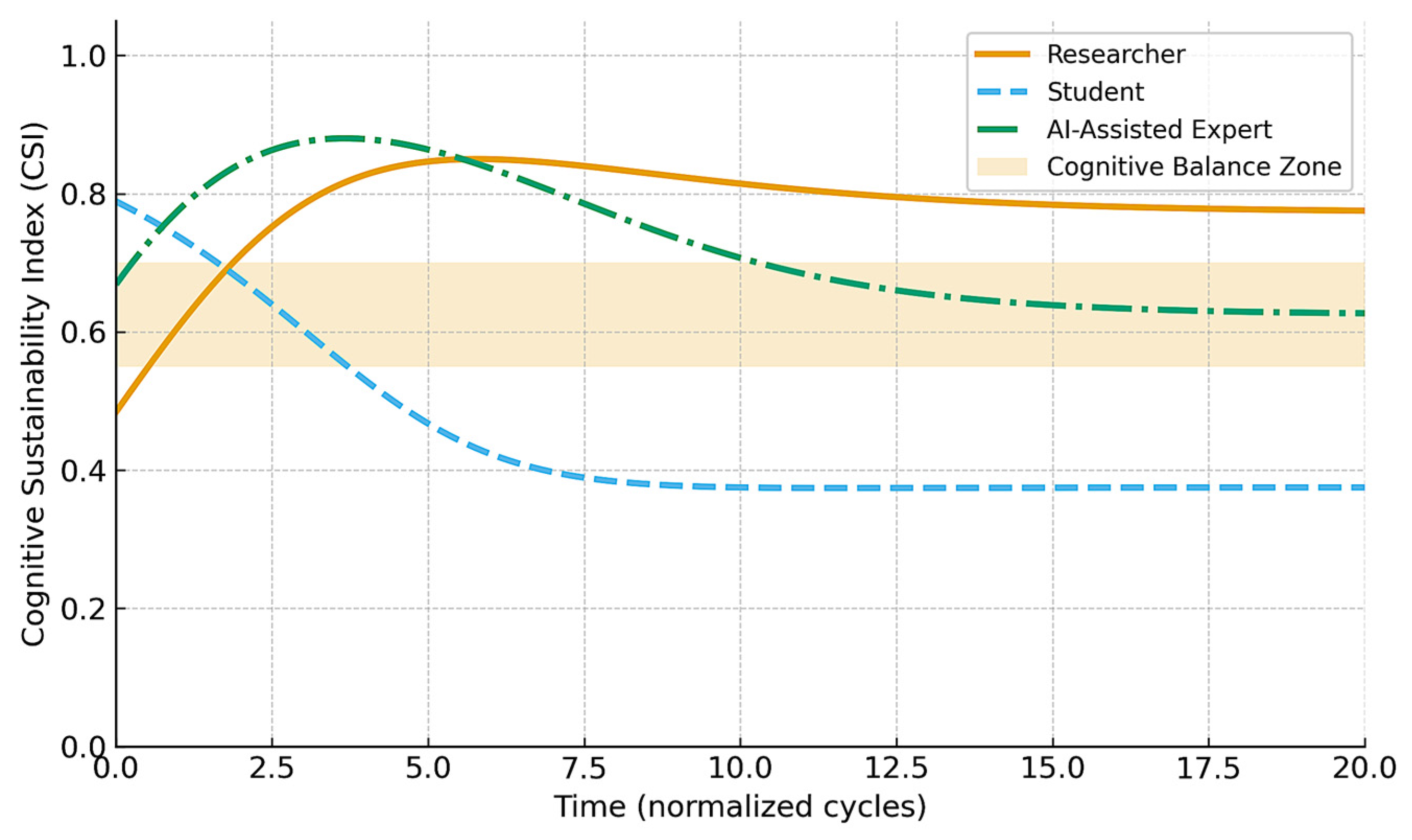

Figure 11 shows the modeled evolution of CSI for three profiles: Student (high automation reliance, low metacognitive regulation), Researcher (high metacognitive regulation, low automation reliance), and AI-Assisted Expert (high metacognitive regulation with disciplined, audited AI use). Time is expressed in normalized cognitive adaptation cycles. The shaded band represents the CBZ, where human and AI contributions are synergistic and cognitively sustainable.

The Student profile (dashed line) rapidly falls below the CBZ and stabilizes at a low CSI, indicating dependency and conceptual atrophy. The Researcher profile (solid line) maintains a high CSI, reflecting durable autonomy and reflective control, but drifts above the CBZ, meaning performance is sustainable but depends primarily on human cognition rather than balanced co-cognition. The AI-Assisted Expert profile (dash-dotted line) initially accelerates toward very high CSI (rapid learning amplification through AI), then gradually stabilizes inside the CBZ. This indicates an equilibrium in which AI augmentation is strong but remains continuously audited and cognitively internalized by the human actor. This behavior represents the target operational regime for cognitively resilient human–AI teaming.

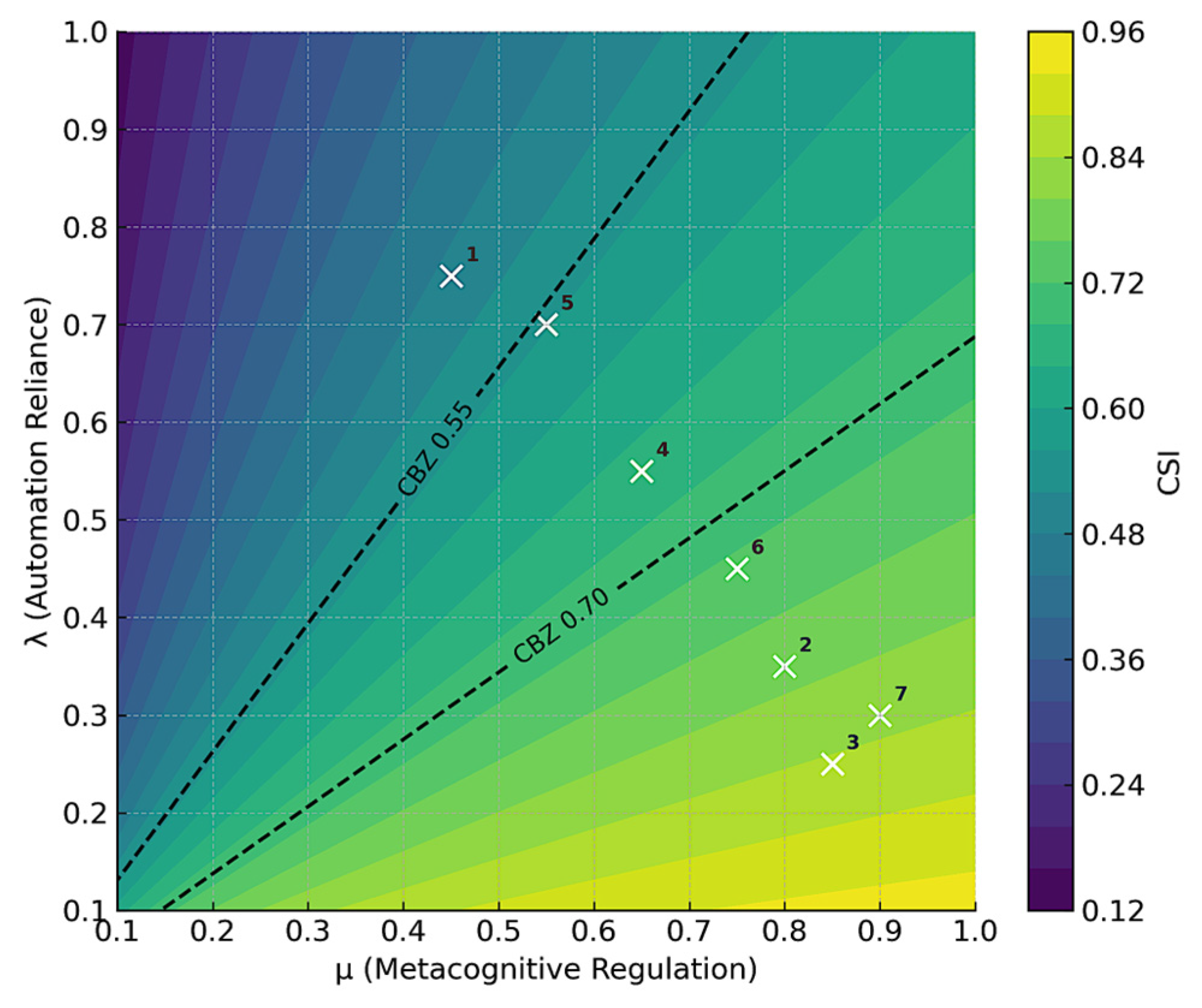

Figure 12 presents a contour map of CSI as a function of metacognitive regulation and automation reliance, evaluated at a representative adaptation stage. Warmer regions correspond to higher CSI (more sustainable cognition), and cooler regions correspond to lower CSI (greater risk of cognitive atrophy). The dashed contour lines mark the upper and lower boundaries of the CBZ. Points above these lines reflect cognitively resilient regimes in which reflective control dominates over blind automation. Regions below the lower CBZ contour correspond to high automation reliance with insufficient metacognitive regulation, indicating unstable cognitive dependence on AI systems.

3.2. Reference Evaluation Tables for Cognitive Sustainability Assessment

To operationalize the CSI, a detailed evaluation toolkit was developed. It defines five foundational parameters which together characterize the user’s cognitive engagement within AI-supported activities: Human Autonomy (H), Reflection (R), Creativity (C), Delegation (D), and Reliance (L). Each parameter is rated on a 0–10 scale, enabling both self- and expert-based evaluation.

The assessment of the five fundamental cognitive dimensions provides a nuanced understanding of user interaction patterns within AI-supported environments.

Each parameter contributes distinct insights into the mechanisms underlying CSI and reveals characteristic profiles associated with different phases of cognitive co-evolution.

Human autonomy (H) exhibited a wide variance across users, indicating that autonomy is the most sensitive indicator of cognitive balance. Participants or test groups maintaining autonomy scores between 5 and 7 demonstrated stable equilibrium between independent reasoning and algorithmic assistance, whereas those below level 3 showed a clear tendency toward cognitive delegation and automation dependence. These findings confirm that sustained cognitive autonomy is a primary prerequisite for long-term cognitive sustainability.

Reflection (R) emerged as a central mediator of metacognitive regulation. Moderate reflection scores (4–6) corresponded with consistent verification and interpretation of AI-generated reasoning, while higher levels (7–8) indicated the formation of meta-reflective cycles in which users actively anticipated AI limitations. Low reflection values were systematically associated with mechanical acceptance of outputs and correlated negatively with both autonomy and creativity.

Creativity (C) revealed a strong nonlinear dependence on autonomy and reflection. Participants with balanced cognitive engagement (CSI ≈ 2.0) demonstrated generative creativity, blending AI suggestions with human conceptual input. When reflection or autonomy dropped below threshold levels, creativity rapidly decreased, producing purely reproductive outputs. Conversely, high reflection combined with active autonomy (scores above 7) corresponded to transformative and original ideation, aligning with the cognitive growth zone.

Delegation (D) showed an inverse relationship to autonomy, functioning as a compensatory variable within the cognitive balance model. Balanced delegation values (5–6) were associated with effective task-sharing between human and AI components, while excessive delegation (>7) indicated over-reliance and reduced epistemic responsibility. Controlled delegation therefore acts as a stabilizing factor that prevents both underutilization and cognitive overload.

Reliance (L), representing the user’s trust calibration toward AI, demonstrated the narrowest optimal range. Scores around 5–6 corresponded to informed reliance—confidence grounded in understanding of algorithmic logic and contextual validation. Low reliance values (<3) reflected systematic distrust leading to cognitive inefficiency, whereas high values (>8) signaled overconfidence in automation. This confirms that trust must be dynamically modulated rather than maximized to preserve reflective engagement.

Overall, the distribution of parameter values supports the theoretical expectation that cognitive sustainability emerges only within a balanced configuration of autonomy, reflection, and creativity, modulated by adaptive levels of delegation and reliance.

This equilibrium represents the CBZ, which functions as the empirical foundation for computing the composite CSI.
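The score ranges reported above can be collected into a simple screening helper. This Python sketch encodes only the ranges stated in the text; creativity is omitted because its thresholds are given only jointly with autonomy and reflection, and scores outside the stated ranges are reported as "not characterized" rather than assigned guessed thresholds. The function names are hypothetical.

```python
def read_autonomy(score: float) -> str:
    if 5 <= score <= 7:
        return "stable balance of independent reasoning and AI assistance"
    if score < 3:
        return "tendency toward delegation and automation dependence"
    return "not characterized"


def read_reflection(score: float) -> str:
    if 4 <= score <= 6:
        return "consistent verification of AI-generated reasoning"
    if 7 <= score <= 8:
        return "meta-reflective cycles"
    return "not characterized"


def read_delegation(score: float) -> str:
    if 5 <= score <= 6:
        return "effective human-AI task-sharing"
    if score > 7:
        return "over-reliance"
    return "not characterized"


def read_reliance(score: float) -> str:
    if 5 <= score <= 6:
        return "informed reliance"
    if score < 3:
        return "systematic distrust"
    if score > 8:
        return "overconfidence in automation"
    return "not characterized"


def screen(H: float, R: float, D: float, L: float) -> dict:
    """Apply the stated ranges to one user's 0-10 scores."""
    return {
        "H": read_autonomy(H),
        "R": read_reflection(R),
        "D": read_delegation(D),
        "L": read_reliance(L),
    }


print(screen(H=6, R=7, D=8, L=9))
```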

To support the practical application of the CSI and its interpretation through the CIS, a set of standardized evaluation tables was developed. These tables provide reference criteria for both self-assessment (subjective user reflection) and external expert assessment (educator, supervisor, or analyst evaluation). They enable consistent qualitative interpretation of quantitative CSI values and can be used in training, educational analytics, or professional development programs to monitor cognitive maturity in human–AI collaboration. The scales follow a five-level structure (0–10), where each range corresponds to a distinct cognitive interaction zone and includes characteristic behavioral indicators.

Table 11 presents a self-assessment reference, while Table 12 provides guidelines for external evaluation.

The two scales can be used together as complementary instruments within the applied cognitive management framework.

In educational or professional settings, individuals perform a self-assessment using Table 11, followed by an expert or supervisor evaluation using Table 12. In this way, the reference tables operationalize the theoretical models introduced in previous sections, offering a transparent and standardized approach to evaluating cognitive balance, autonomy, and reflective engagement in AI-assisted environments.

3.3. Case Study: Cognitive Scale of AI Utilization in Software Development

To validate the applicability of the cognitive co-evolution model and the CSI beyond educational contexts, the model was extended to the software engineering professional domain. This sector provides a clear and data-rich example of human–AI co-adaptation: programmers increasingly use generative and assistive AI tools (e.g., code completion, debugging agents, documentation assistants), which directly affect autonomy, reflection, and creativity, the same cognitive dimensions used in the CSI model.

The data were obtained through a combined analytical and observational approach conducted during the first half of 2025.

Three complementary data sources were used:

Expert evaluation. Structured interviews were conducted with ten senior developers and technical leads from European software companies. Each participant assessed the extent of AI use within their teams using five-point Likert scales corresponding to the CSI dimensions (Autonomy, Reflection, Creativity, Delegation, and Reliance).

Behavioral observation. Public developer repositories were analyzed qualitatively to identify behavioral markers of cognitive engagement—frequency of independent problem formulation, use of AI-generated code without modification, and documentation style.

Self-reported surveys. Developers at different career stages completed short questionnaires estimating the proportion of their daily tasks assisted by AI (measured in percentages) and their perceived dependence on automated tools.

All responses were normalized to a 0–10 cognitive interaction scale consistent with the CSI model, where higher values represent higher levels of cognitive maturity and balanced collaboration with AI. Average scores were aggregated by professional level (Junior, Middle, Senior, and R&D/AI Engineers) and cross-validated through expert consensus.
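The normalization and aggregation steps can be sketched as follows. The linear min-max mapping, the option to reverse items where a higher raw value indicates lower cognitive maturity (for example, a higher share of AI-assisted tasks), the function names, and the sample records are assumptions for illustration; the exact transformation used in the study is not described in the text.

```python
from collections import defaultdict
from statistics import mean


def likert_to_10(score, low=1, high=5, reverse=False):
    """Linear min-max rescaling of a Likert response to 0-10 (assumed mapping)."""
    if not low <= score <= high:
        raise ValueError(f"score {score} outside [{low}, {high}]")
    scaled = 10.0 * (score - low) / (high - low)
    return 10.0 - scaled if reverse else scaled


def percent_to_10(pct, reverse=False):
    """Rescale a 0-100 percentage to 0-10; reverse=True if a higher % means lower maturity."""
    if not 0 <= pct <= 100:
        raise ValueError(f"percentage {pct} outside [0, 100]")
    scaled = pct / 10.0
    return 10.0 - scaled if reverse else scaled


def aggregate_by_level(records):
    """Average normalized scores per professional level."""
    groups = defaultdict(list)
    for level, value in records:
        groups[level].append(value)
    return {level: mean(values) for level, values in groups.items()}


# Hypothetical responses: (professional level, normalized score)
records = [
    ("Junior", likert_to_10(2)),
    ("Junior", percent_to_10(70, reverse=True)),
    ("Senior", likert_to_10(4)),
    ("Senior", percent_to_10(30, reverse=True)),
]
print(aggregate_by_level(records))
```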

The aggregated data (Table 13) indicate that the average cognitive utilization level of AI in software development in 2025 lies between 5 and 7, corresponding to collaborative interaction rather than full cognitive synergy. Junior developers tend to operate within the assisted range, often using AI as a direct problem-solving substitute. Senior and R&D engineers demonstrate higher integration and reflective control, aligning more closely with the cognitive growth zone.

The results suggest that programming professions exhibit the entire cognitive spectrum predicted by the model, from mechanistic use to reflective synergy.

By mapping the behavioral data from Table 13 onto the CSI formula, approximate index values can be inferred:

- Junior ≈ 1.0–1.5
- Middle ≈ 2.0–2.5
- Senior ≈ 3.0–4.0
- R&D ≈ 4.5–5.0

The software development domain thus provides an initial empirical illustration of the CSI’s interpretive range and demonstrates the potential for industry-level cognitive monitoring.

Figure 13 visualizes the cognitive positioning of different roles in an AI-assisted software development team. The background heatmap shows the CSI as a function of metacognitive regulation (x-axis) and automation reliance (y-axis), evaluated at a representative adaptation stage. Higher CSI (lighter regions) indicates more sustainable cognition: reflective control, internalized understanding, and reversible use of automation. Lower CSI (darker regions) indicates elevated cognitive atrophy risk.

Dashed contour lines mark the lower and upper boundaries of the CBZ. Points inside or near this band represent roles where AI support is strong but remains cognitively accountable and auditable.

Each white marker corresponds to a software development role or task context, labeled with a numeric ID for clarity:

1. Junior Developer (AI-assisted coding)
2. Senior Developer (architectural reasoning)
3. Code Reviewer (human-led QA + AI linting)
4. DevOps Engineer (pipeline automation)
5. QA Tester (AI-generated test cases)
6. Prompt Engineer/Integrator
7. AI Tool Maintainer/Governance Lead

Roles 3 and 7 cluster in the high-CSI region: they combine high metacognitive oversight (strong review, governance, or architectural reasoning) with relatively low blind reliance on automation. Roles 1 and 5 sit closer to or just below the CBZ, reflecting heavy use of AI-generated output with limited epistemic verification. These locations reveal which tasks are cognitively self-sustaining and which tasks are drifting toward dependency.

While the current case study provides an initial empirical illustration of CSI applicability in software development, its interpretive scope is constrained by the small and non-random sample, absence of control groups, and descriptive rather than inferential analytical design. Therefore, the results should be viewed as indicative rather than conclusive. A rigorous validation program will require large-scale data collection across educational and industrial settings, longitudinal tracking of cognitive engagement patterns, and statistical testing of CSI’s discriminant, predictive, and convergent validity. Such efforts will support the transition from a conceptual demonstration to evidence-based assessment of cognitive sustainability in AI-assisted work.

4. Discussion

4.4. Challenges, Limitations, and Future Research Directions

Although the proposed cognitive co-evolution model and cognitive sustainability index establish a unified conceptual and quantitative framework for analyzing human–AI interaction, the study inevitably faces several theoretical, methodological, and practical challenges. These limitations do not undermine its scientific contribution but instead define a trajectory for future research aimed at refining, validating, and operationalizing the model.

At the theoretical level, the model simplifies complex and multidimensional aspects of human cognition, reflection, and creativity into parameterized constructs. While this abstraction enables quantification and comparison, it inevitably omits emergent socio-cognitive phenomena such as collective learning, contextual intuition, and ethical deliberation. The assumption that the balance between cognitive growth and atrophy can be expressed through deterministic relationships serves as a useful approximation but cannot yet capture the full richness of adaptive reasoning in real-world human–AI collaboration. Overcoming these conceptual boundaries will require interdisciplinary synthesis that includes insights from cognitive neuroscience, psychology, and systems theory.

From a methodological perspective, the study relies primarily on simulated and expert-derived data rather than extensive empirical datasets. The parameter values and weighting coefficients were defined heuristically, reflecting plausible behavioral tendencies rather than statistically validated relationships. Similarly, the assumption that human and AI contributions can be represented on a common normalized scale introduces an interpretive simplification, as human reasoning and algorithmic processing differ qualitatively in transparency, adaptability, and accountability. Future work should therefore involve longitudinal empirical studies integrating psychometric evaluation, behavioral observation, and digital trace analysis to calibrate the CSI model with higher precision. Dynamic weighting mechanisms should also be developed so that coefficients evolve adaptively in response to user behavior, task context, and domain-specific constraints. Advanced statistical and machine learning methods may further enable automatic inference of cognitive states from large-scale interaction data, allowing the CSI to transition from a conceptual construct to an applied analytics tool.
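One simple way to realize the dynamic weighting idea is to blend the current weights toward a context-derived target at a fixed rate and then renormalize. In this minimal sketch, the function `update_weights`, the input `context_signal` (non-negative relevance scores per CSI component, assumed to be inferred from behavior or task context), and the default `rate` are all hypothetical; the paper does not specify an update rule.

```python
def update_weights(
    weights: dict[str, float],
    context_signal: dict[str, float],
    rate: float = 0.1,
) -> dict[str, float]:
    """Blend current CSI weights toward a context-derived target (hypothetical rule).

    weights:        current non-negative weights per component, summing to 1
    context_signal: non-negative relevance scores per component (assumed input)
    rate:           fraction of the gap to the target closed per update
    """
    if not 0.0 < rate <= 1.0:
        raise ValueError("rate must be in (0, 1]")
    if weights.keys() != context_signal.keys():
        raise ValueError("weights and context_signal must cover the same components")
    if any(v < 0 for v in context_signal.values()):
        raise ValueError("context_signal values must be non-negative")

    total = sum(context_signal.values())
    if total == 0:
        return dict(weights)  # no usable evidence: keep the current weights

    target = {k: v / total for k, v in context_signal.items()}
    blended = {k: (1 - rate) * weights[k] + rate * target[k] for k in weights}
    norm = sum(blended.values())  # renormalize to guard against numerical drift
    return {k: v / norm for k, v in blended.items()}
```

With `rate=0.5`, equal weights of 0.5 and a signal that favors reflection entirely, the reflection weight moves to 0.75. A small rate keeps the weights stable against noisy single observations, at the cost of slower adaptation.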

The implementation of applied cognitive management in educational and professional environments also poses significant organizational and ethical challenges. Reliable cognitive monitoring requires the collection of behavioral and performance data, which raises questions of privacy, trust, and user acceptance. If cognitive indicators are perceived as instruments of control or surveillance, they may provoke resistance rather than engagement. Successful adoption of ACM frameworks therefore depends on transparent governance, voluntary participation, and a shift in institutional culture in which cognitive sustainability is recognized as a strategic investment in workforce intelligence rather than a managerial constraint.

From a managerial perspective, a central challenge is balancing short-term automation gains with long-term preservation of professional competence. Without active intervention, organizations risk accumulating cognitive debt, in which immediate efficiency conceals a gradual erosion of analytical capacity. To mitigate this, leaders must implement cognitive monitoring systems that identify early signs of over-delegation and enable targeted interventions such as reflective training, manual practice cycles, or adaptive task rebalancing.

The pathway forward is defined by several complementary directions for research and practice. Future studies should focus on empirical validation through large-scale cross-professional assessments that identify benchmark CSI values and measure how cognitive balance evolves across industries. Efforts should also be directed toward dynamic cognitive modeling, where user interaction data, reflection time, and corrective behavior are used to update CSI metrics in real time. The integration of CSI with digital twin architectures offers particularly promising potential, allowing simulation of cognitive trajectories and predictive modeling of human–AI co-adaptation. At the systemic level, cross-sector application of the model can lead to the development of an atlas of cognitive sustainability, mapping domains such as aviation, healthcare, education, and engineering according to their maturity of cognitive co-evolution. In parallel, research should explore ethical and governance frameworks for cognitive data usage, including guidelines for cognitive integrity auditing and responsible AI literacy. The design of interactive cognitive feedback dashboards could turn monitoring from a passive diagnostic function into an active learning tool, enabling users and organizations to self-regulate their cognitive trajectories.
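Real-time updating of CSI could be approximated with an exponentially weighted moving average, so that each new session estimate shifts the running value without discarding history. The class `StreamingCSI` and its smoothing factor `alpha` are hypothetical; how a per-session estimate would be derived from interaction data, reflection time, and corrective behavior depends on the model definition and is not reproduced in this sketch.

```python
from typing import Optional


class StreamingCSI:
    """Running CSI estimate using an exponentially weighted moving average.

    Hypothetical design: each call to `update` receives one per-session CSI
    estimate; `alpha` sets how strongly the newest session counts.
    """

    def __init__(self, alpha: float = 0.2, initial: Optional[float] = None) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value = initial

    def update(self, observation: float) -> float:
        """Fold one new session estimate into the running value and return it."""
        if self.value is None:
            self.value = observation  # first observation seeds the estimate
        else:
            self.value = self.alpha * observation + (1.0 - self.alpha) * self.value
        return self.value
```

A larger `alpha` reacts faster to recent behavior but is noisier; a smaller one favors stability, which may suit dashboards meant to show long-run cognitive trajectories rather than day-to-day fluctuation.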

The long-term vision of this research is to establish a quantitative methodology of cognitive sustainability, linking individual metacognition, organizational learning, and AI system design into a unified adaptive ecosystem. The current study represents a step toward this goal, providing the conceptual foundations and mathematical formalism necessary for further empirical expansion. As the model evolves, its objective is not merely to describe cognitive change but to ensure that AI-driven transformation remains intellectually regenerative rather than cognitively depleting, reinforcing human expertise, creativity, and ethical responsibility in the era of intelligent automation.

Future work may integrate insights from management science, where knowledge creation and cognitive sustainability are understood as socially distributed processes shaped by collective interaction. Recent studies highlight that AI systems increasingly mediate collaboration and knowledge co-construction within organizations [45]. This suggests that cognitive sustainability should be examined not only at the individual level but also across teams and knowledge networks.

Integrating the notion of learning loops, both single-loop and double-loop learning [46], could further clarify how reflection and feedback support the maintenance of cognitive balance over time. Future research may explore how these learning mechanisms interact with ACM and CSI across individual and organizational contexts.

Incorporating insights from cognitive bias research could further strengthen the discussion, as biases significantly influence how users rely on AI systems and how cognitive regulation mechanisms function [47]. Future research may examine how specific biases interact with ACM and CSI and how AI-supported bias-mitigation strategies could help maintain cognitive balance.

5. Conclusions

The study developed a unified conceptual and methodological framework for understanding, quantifying, and managing the evolving relationship between human cognition and artificial intelligence. Through the integration of the cognitive co-evolution model, the cognitive sustainability index and the applied cognitive management framework, it establishes a holistic foundation for analyzing the dynamic interplay of cognitive growth, atrophy, and balance within AI-supported environments.

The cognitive co-evolution model conceptualizes AI–human interaction as a dialectical process in which reflective engagement stimulates metacognitive development, while excessive automation fosters cognitive atrophy. This dynamic equilibrium defines the cognitive balance zone as a state of sustainable co-adaptation where automation enhances rather than replaces human reasoning. The cognitive sustainability index translates this conceptual balance into a measurable construct, integrating behavioral and contextual parameters such as autonomy, reflection, creativity, delegation, and reliance.
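To make the idea of a single index concrete, the sketch below assumes a normalized weighted-difference form: autonomy, reflection, and creativity contribute to a growth term, delegation and reliance to an atrophy term, and the difference is rescaled to [0, 1]. This functional form, the function name `csi_sketch`, the equal default weights, and the [0, 1] input scale are assumptions for illustration and should not be read as the paper's own CSI formula.

```python
from typing import Optional

POSITIVE = ("autonomy", "reflection", "creativity")  # growth-related inputs
NEGATIVE = ("delegation", "reliance")                 # atrophy-related inputs


def _weighted_mean(scores: dict[str, float], names: tuple, weights: dict[str, float]) -> float:
    """Weighted mean of the named components (weights must be non-negative)."""
    if any(weights[n] < 0 for n in names):
        raise ValueError("weights must be non-negative")
    total_w = sum(weights[n] for n in names)
    if total_w <= 0:
        raise ValueError("weights must have a positive sum")
    return sum(weights[n] * scores[n] for n in names) / total_w


def csi_sketch(scores: dict[str, float], weights: Optional[dict[str, float]] = None) -> float:
    """Hypothetical CSI: (growth - atrophy + 1) / 2, so the result lies in [0, 1]."""
    for name in POSITIVE + NEGATIVE:
        if not 0.0 <= scores[name] <= 1.0:
            raise ValueError(f"{name} must be normalized to [0, 1]")
    if weights is None:
        weights = {name: 1.0 for name in POSITIVE + NEGATIVE}  # equal weights
    growth = _weighted_mean(scores, POSITIVE, weights)
    atrophy = _weighted_mean(scores, NEGATIVE, weights)
    return (growth - atrophy + 1.0) / 2.0
```

Under these assumptions, a profile with high autonomy, reflection, and creativity and low delegation and reliance approaches 1, the reverse approaches 0, and balanced inputs give 0.5.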

Simulation experiments and professional case studies, including the example of software developers, indicated that the CSI can distinguish between cognitive zones, ranging from mechanical dependency to reflective synergy, and can serve as a diagnostic tool for assessing cognitive sustainability in diverse domains.

Building on this analytical foundation, the paper introduced applied cognitive management as the operational mechanism that transforms measurement into action. ACM provides a closed-loop architecture linking continuous cognitive monitoring with adaptive interventions at four interdependent levels: individual, educational, professional, and institutional. This framework allows organizations, educators, and policymakers to maintain cognitive equilibrium through targeted reflection practices, feedback mechanisms, and adaptive task design. By embedding cognitive monitoring into decision-making and governance processes, ACM establishes a path toward cognitive sustainability governance, in which the balance between automation efficiency and human autonomy becomes a measurable, controllable, and ethically guided objective.
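A single pass of the closed loop (monitor, evaluate against a threshold, select an intervention) can be sketched as follows. The four levels come from the framework; the threshold, the gap bands, the mapping from gap size to intervention type (using the interventions named earlier in the paper: reflective prompts and feedback, manual practice cycles, and task rebalancing), and the function names are hypothetical choices. In practice, the loop closes when post-intervention readings are fed into the next pass.

```python
from enum import Enum
from typing import Optional


class Level(Enum):
    """The four ACM intervention levels named in the framework."""
    INDIVIDUAL = "individual"
    EDUCATIONAL = "educational"
    PROFESSIONAL = "professional"
    INSTITUTIONAL = "institutional"


def select_intervention(csi: float, threshold: float = 0.5) -> Optional[str]:
    """Choose an intervention from the size of the shortfall (hypothetical bands)."""
    gap = threshold - csi
    if gap <= 0:
        return None  # at or above threshold: continue monitoring only
    if gap < 0.05:
        return "reflective prompts and feedback"
    if gap < 0.15:
        return "manual practice cycle"
    return "adaptive task rebalancing"


def acm_step(readings: dict[Level, float], threshold: float = 0.5) -> dict[Level, str]:
    """One monitor -> evaluate -> act pass; returns actions only where needed."""
    plan: dict[Level, str] = {}
    for level, csi in readings.items():
        action = select_intervention(csi, threshold)
        if action is not None:
            plan[level] = action
    return plan
```

Escalating the intervention with the size of the gap keeps light-touch measures for small deviations and reserves structural changes, such as task rebalancing, for larger ones.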

The results underscore the strategic importance of maintaining CSI above a sustainability threshold to preserve analytical competence, creativity, and epistemic accountability. For executives and policymakers, the framework provides an early-warning system for cognitive debt. For educators and researchers, it offers a foundation for designing curricula and learning environments that cultivate reflective resilience in the age of AI.

Longitudinal studies and digital twin simulations could further refine the model into a predictive instrument for cognitive risk management and AI ethics auditing. Ultimately, this research contributes to the emergence of a quantitative science of cognitive sustainability, where human expertise, organizational intelligence, and artificial cognition evolve together in reflective and regenerative equilibrium.

The transition from cognitive growth and cognitive atrophy toward cognitive balance represents not merely an intellectual construct but a strategic imperative for the digital era. The proposed framework unites analytical rigor, management design, and ethical foresight to offer both a theoretical lens and a practical roadmap that ensure AI-driven progress remains cognitively sustainable, socially responsible, and profoundly human-centered.