Defenses Against Adversarial Attacks on Object Detection: Methods and Future Directions

Abstract

1. Introduction

- We give background on adversarial attacks on object detection and characterize different defenses that protect against them.

- For each defense technique, we list the metrics it is evaluated on, the target datasets used, the types of models that can adopt it, and the attacks it is able to defend against.

- We categorize defenses into different groups, highlight the strengths of each defense, and identify possible directions for future research.

2. Background

2.1. Object Detection

2.2. Adversarial Attacks on Image Classification

- Minimize subject to;

- .

3. Adversarial Attacks on Object Detection

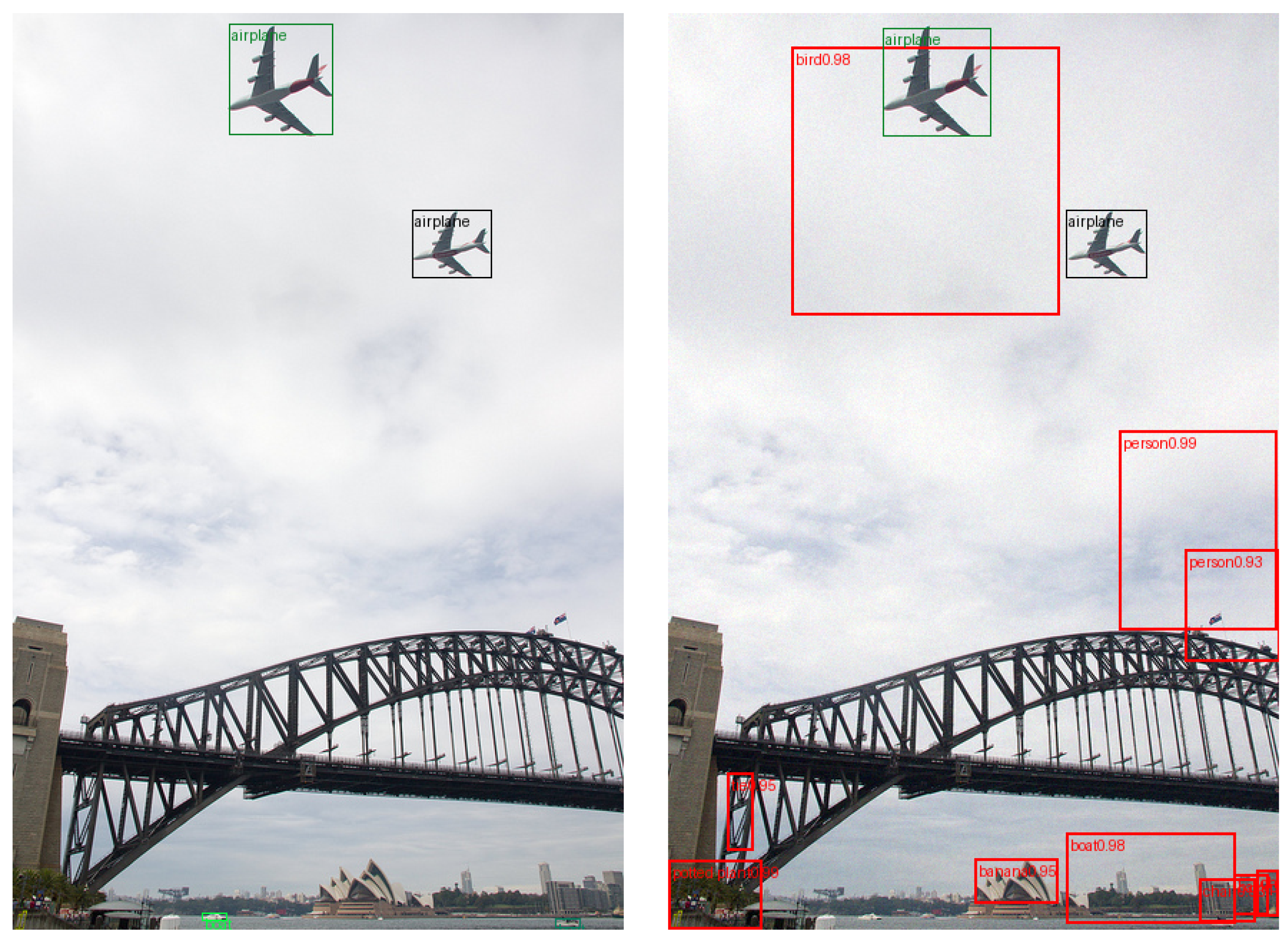

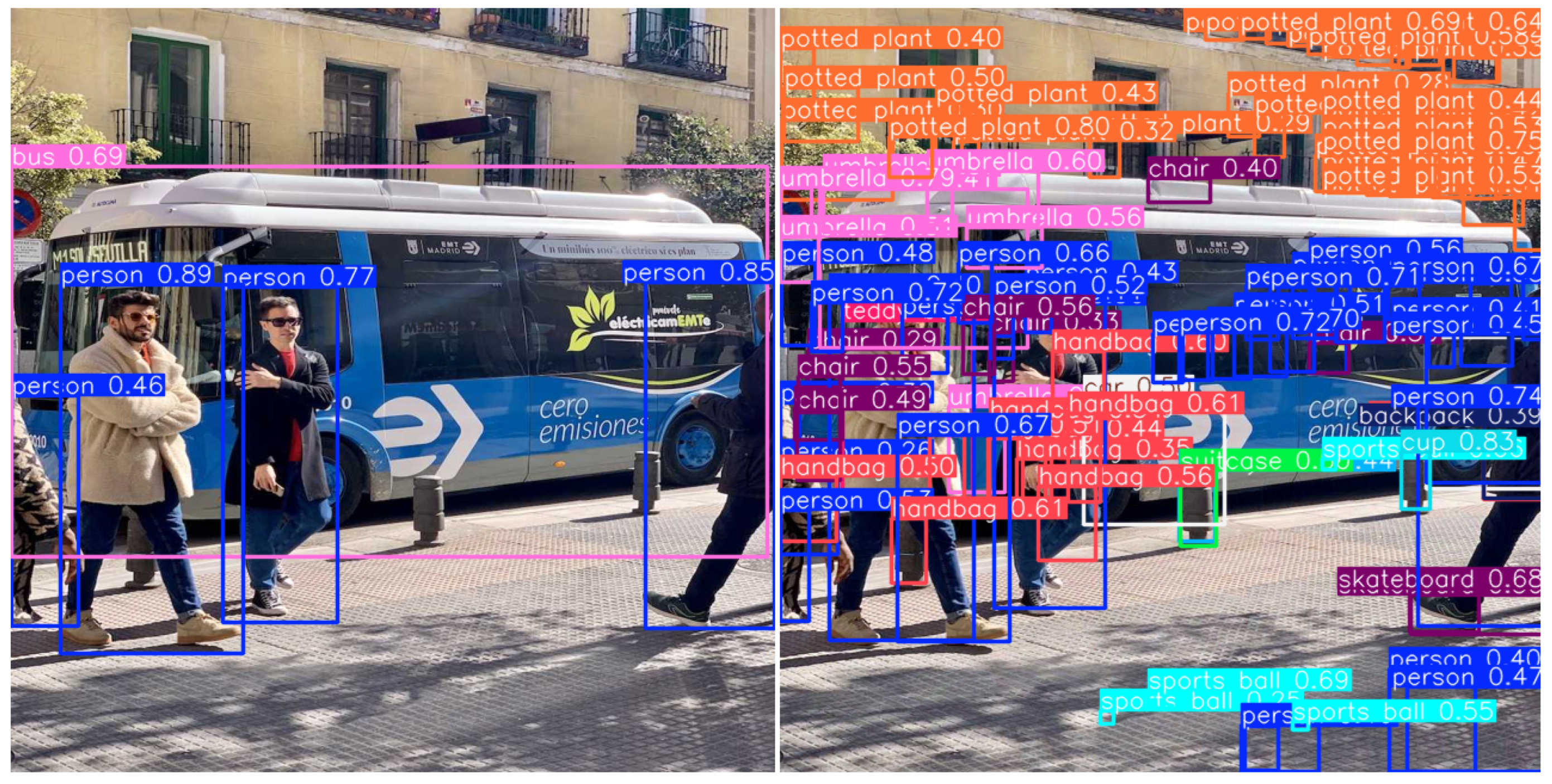

3.1. Mislabeling Attack

3.2. Bounding Box Attack

3.3. Fabrication Attack

4. Defenses Against Object Detection Attacks

4.1. Preprocessing

4.2. Adversarial Training

Algorithm 1. Adversarial Training using k-step PGD [17]
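Adversarial training with k-step PGD [17] alternates an inner maximization (craft a bounded perturbation that raises the loss) with an outer minimization (update the model on the perturbed inputs). The following is a minimal sketch of that loop, not the paper's algorithm listing. It assumes a toy logistic-regression classifier in place of a detector, an L-infinity budget, and hypothetical names and hyperparameters (`pgd_attack`, `adversarial_training`, `epsilon`, `alpha`, `k`, `lr`).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_grads(w, b, x, y):
    """Mean binary cross-entropy and its gradients w.r.t. w, b and the inputs x."""
    p = sigmoid(x @ w + b)
    tiny = 1e-12
    loss = -np.mean(y * np.log(p + tiny) + (1 - y) * np.log(1 - p + tiny))
    err = (p - y) / len(y)                      # dL/dz for each sample
    return loss, x.T @ err, err.sum(), np.outer(err, w)

def pgd_attack(w, b, x, y, epsilon, alpha, k, rng):
    """k-step PGD inside the L-infinity ball of radius epsilon around x."""
    x_adv = x + rng.uniform(-epsilon, epsilon, size=x.shape)   # random start
    for _ in range(k):
        _, _, _, grad_x = loss_and_grads(w, b, x_adv, y)
        x_adv = x_adv + alpha * np.sign(grad_x)                # ascend the loss
        x_adv = np.clip(x_adv, x - epsilon, x + epsilon)       # project back
    return x_adv

def adversarial_training(x, y, epsilon=0.1, alpha=0.025, k=5,
                         lr=0.5, epochs=200, seed=0):
    rng = np.random.default_rng(seed)
    w, b = np.zeros(x.shape[1]), 0.0
    for _ in range(epochs):
        x_adv = pgd_attack(w, b, x, y, epsilon, alpha, k, rng)  # inner maximization
        _, gw, gb, _ = loss_and_grads(w, b, x_adv, y)           # outer minimization
        w, b = w - lr * gw, b - lr * gb
    return w, b

# Toy data (assumption): two well-separated Gaussian blobs.
rng = np.random.default_rng(1)
x = np.vstack([rng.normal(-1.0, 0.3, (50, 2)), rng.normal(1.0, 0.3, (50, 2))])
y = np.concatenate([np.zeros(50), np.ones(50)])

w, b = adversarial_training(x, y)
x_adv = pgd_attack(w, b, x, y, 0.1, 0.025, 5, rng)
robust_acc = np.mean((sigmoid(x_adv @ w + b) > 0.5) == y)
```

For a real detector the loss would combine classification and localization terms and the gradients would come from automatic differentiation. The k forward and backward passes per batch in the inner loop are what make this form of training expensive.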

4.2.1. Changes to Loss Function

Algorithm 2. Adversarial Training using MTD [43]
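As commonly described, MTD [43] crafts one adversarial example from the classification loss and one from the localization loss, then trains on whichever of the two yields the larger overall detection loss. The sketch below illustrates only that selection step, under stated assumptions: a toy linear model with one class logit and one scalar box output stands in for a detector, and every name here (`task_losses`, `pgd_on_task`, `mtd_select`) is hypothetical. It should be checked against [43] before being relied on.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def task_losses(x, y, t, w_cls, w_loc):
    """Per-sample classification (BCE) and localization (squared error) losses."""
    p = np.clip(sigmoid(x @ w_cls), 1e-12, 1 - 1e-12)
    l_cls = -(y * np.log(p) + (1 - y) * np.log(1 - p))
    l_loc = 0.5 * (x @ w_loc - t) ** 2
    return l_cls, l_loc

def task_input_grads(x, y, t, w_cls, w_loc):
    """Gradient of each task loss with respect to the input."""
    g_cls = (sigmoid(x @ w_cls) - y)[:, None] * w_cls
    g_loc = (x @ w_loc - t)[:, None] * w_loc
    return g_cls, g_loc

def pgd_on_task(x, y, t, w_cls, w_loc, task, epsilon, alpha, k):
    """k-step PGD that ascends only one task loss ("cls" or "loc")."""
    x_adv = x.copy()
    for _ in range(k):
        g_cls, g_loc = task_input_grads(x_adv, y, t, w_cls, w_loc)
        g = g_cls if task == "cls" else g_loc
        x_adv = np.clip(x_adv + alpha * np.sign(g), x - epsilon, x + epsilon)
    return x_adv

def mtd_select(x, y, t, w_cls, w_loc, epsilon=0.1, alpha=0.03, k=5):
    """Per sample, keep the task-specific example with the larger total loss."""
    x_cls = pgd_on_task(x, y, t, w_cls, w_loc, "cls", epsilon, alpha, k)
    x_loc = pgd_on_task(x, y, t, w_cls, w_loc, "loc", epsilon, alpha, k)
    total_cls = sum(task_losses(x_cls, y, t, w_cls, w_loc))
    total_loc = sum(task_losses(x_loc, y, t, w_cls, w_loc))
    use_cls = total_cls > total_loc
    return np.where(use_cls[:, None], x_cls, x_loc), use_cls

# Toy inputs (assumption): random features, labels, and box targets.
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4))
y = (rng.random(8) > 0.5).astype(float)
t = rng.normal(size=8)
w_cls, w_loc = rng.normal(size=4), rng.normal(size=4)

x_mtd, use_cls = mtd_select(x, y, t, w_cls, w_loc)
```

The selected batch `x_mtd` would then replace the clean batch in the usual training update. Running two k-step attacks per batch is consistent with the "k-step PGD cls and loc attack time" overhead listed for MTD.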

4.2.2. Changes to Model

4.3. Detection of Adversarial Noise

4.4. Architecture Changes

4.5. Ensemble Defense

4.6. Certified Defenses

5. Discussion and Future Work

5.1. Mapping Between Attacks and Defenses

5.2. Unified Evaluation Pipeline

- Dataset selection: Standard datasets such as PASCAL VOC and MS-COCO should be used. For real-time detectors, a subset of BDD100K or KITTI can be used for efficiency.

- Model preparation: Train or fine-tune representative detectors (e.g., Faster R-CNN, YOLO, SSD, DETR) using standard clean datasets.

- Attack simulation: Apply common norm-bounded attacks (PGD, C&W, TOG, UEA, CWA, DAG, RAP, OOA) and latency-based attacks such as Daedalus, Overload, and Phantom Sponge at multiple perturbation strengths ($\epsilon$ values). Evaluate both white-box and transfer (black-box) settings.

- Integration of defenses: Implement each defense in its respective category: input transformations, adversarial training, noise detection modules, or certified smoothing.

- Evaluation metrics: Measure performance using clean and adversarial mAP, per-class accuracy, detection latency, and certified radius (for certified defenses).

- Visualization: Use Grad-CAM to qualitatively assess robustness and localization fidelity.
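The steps above amount to evaluating every combination of detector, defense, attack, perturbation strength, and threat setting with the same metrics. A minimal sketch of such a grid follows. The `Run` record, the `build_grid` and `run_grid` helpers, the example `epsilon` values, and the placeholder `evaluate` callable are all assumptions for illustration; no measurements are implied.

```python
from dataclasses import dataclass
from itertools import product

@dataclass(frozen=True)
class Run:
    detector: str
    defense: str
    attack: str
    epsilon: float
    setting: str          # "white-box" or "transfer"

def build_grid(detectors, defenses, attacks, epsilons, settings):
    """Enumerate every evaluation configuration exactly once."""
    return [Run(*combo) for combo in
            product(detectors, defenses, attacks, epsilons, settings)]

def run_grid(grid, evaluate):
    """Apply one shared evaluation callable to every configuration."""
    return [{"run": run, **evaluate(run)} for run in grid]

def placeholder_evaluate(run):
    # Stand-in for a real evaluation; returns the metric names only.
    return {"clean_mAP": None, "adv_mAP": None,
            "latency_ms": None, "certified_radius": None}

grid = build_grid(
    detectors=["Faster R-CNN", "SSD", "DETR"],
    defenses=["none", "adversarial training"],
    attacks=["PGD", "DAG", "TOG"],
    epsilons=[2 / 255, 4 / 255, 8 / 255],   # example budgets (assumption)
    settings=["white-box", "transfer"],
)
results = run_grid(grid, placeholder_evaluate)
```

Keeping the configuration explicit makes it easy to see which cells of the defense-versus-attack matrix a study has actually covered.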

5.3. Future Work and Research Directions

- Broadening the attack surface: Table 3 summarizes the empirical defenses along with the attacks they address. Only one defense, Underload [46], specifically targets latency-based attacks such as Daedalus, Overload, and Phantom Sponge, which indicates a clear need for further defenses in this area. Similarly, many defenses are validated only against PGD attacks, and relatively few are tested against stronger or more recent object detection–specific attacks (DAG, TOG, CWA, universal adversarial perturbations). This creates blind spots: a defense that is not evaluated against a broad attack set may fail once deployed.

- Scalability and training efficiency: Table 5 lists the impact of each defense on clean-image mAP and the additional training time it incurs, much of which stems from multi-step PGD adversarial training. Methods that reuse gradients [70] can reduce this cost. The loss of accuracy on clean data can be alleviated by strengthening the backbone classification network [53] or by incorporating a Transformer-Encoder Module [55] to capture broader contextual information.

- Benchmarking and evaluation frameworks: Existing defenses are evaluated under experimental setups that differ in model architecture, dataset, and attack configuration. Many defenses are tested on only a single dataset, such as PASCAL VOC or MS-COCO, and on a single detector, typically YOLO, SSD, or Faster R-CNN. Transferability across datasets, architectures, and deployment scenarios is rarely shown. A shared evaluation pipeline, like the one described in Section 5.2, can help standardize both accuracy- and latency-based metrics. Integrating visualization tools such as Grad-CAM [88] can strengthen interpretability. Further research is also needed to explain both robust and non-robust features.

- Hybrid and cross-domain defenses: Chiang et al. [87] proposed certifying object detection through median smoothing. Their approach relies on Monte Carlo sampling, which requires about 2000 samples per image to approximate the smoothed model and therefore makes inference computationally expensive. A more practical direction would be to leverage data generated with diffusion models, as demonstrated by Altstidl et al. [89], to obtain stronger robustness certificates on PASCAL VOC and MS-COCO under various attacks. A key opportunity for future work lies in developing formal verification techniques for larger architectures such as YOLO and Faster R-CNN, aimed at verifying issues like overdetection, misclassification, and misdetection in multi-object settings. In Section 4.5, we covered four ensemble approaches that leverage diversity (across upstream backbones or downstream detectors) to improve robustness against adversarial attacks. The Daedalus attack [36], however, demonstrated how ensembles of surrogate models can be exploited to bypass defenses. These findings underscore a potential limitation of ensemble defenses: the same diversity that strengthens defenses can be harnessed by adversaries to improve transferability. Future research could therefore explore adaptive ensemble defenses that dynamically reconfigure model diversity in response to evolving attacks, or investigate hybrid strategies that combine ensembles with input transformation.
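The sampling cost of median smoothing [87] can be made concrete with a short sketch. It assumes Gaussian input noise with standard deviation `sigma`, a hypothetical scalar function `f` standing in for one predicted box coordinate, and the usual percentile-smoothing bounds, in which the output under any L2 perturbation of norm at most `epsilon` lies between the Φ(−ε/σ) and Φ(ε/σ) percentiles of the noisy outputs. The bounds here are plain empirical percentiles; a real certificate would also need a finite-sample confidence correction, so this is an illustration rather than the authors' procedure.

```python
import math
import numpy as np

def normal_cdf(z):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def median_smooth(f, x, sigma, epsilon, n_samples=2000, seed=0):
    """Monte Carlo estimate of the median-smoothed output and percentile bounds."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, sigma, size=(n_samples,) + x.shape)
    outputs = np.array([f(x + n) for n in noise])   # one forward pass per sample
    p_low = normal_cdf(-epsilon / sigma)
    p_high = normal_cdf(epsilon / sigma)
    lower, median, upper = np.quantile(outputs, [p_low, 0.5, p_high])
    return median, lower, upper

# Toy regressor (assumption): the "box coordinate" is the sum of the inputs.
def f(z):
    return float(np.sum(z))

x = np.array([0.1, 0.2, 0.3, 0.4])
median, lower, upper = median_smooth(f, x, sigma=0.25, epsilon=0.25)
```

Each call performs `n_samples` forward passes, so with about 2000 samples per image the smoothed detector costs roughly 2000 times a single inference. That is the overhead the text identifies as the main obstacle to practical use.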

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Girshick, R.B.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

2. Girshick, R.B. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

3. Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

4. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

5. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

6. Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 1137–1149.

7. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.E.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

8. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.E.; Fu, C.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

9. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. arXiv 2020, arXiv:2005.12872.

10. Vaswani, A.; Shazeer, N.M.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

11. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

12. Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

13. Cheng, T.; Song, L.; Ge, Y.; Liu, W.; Wang, X.; Shan, Y. YOLO-World: Real-Time Open-Vocabulary Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16901–16911.

14. Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Li, C.; Yang, J.; Su, H.; Zhu, J.; et al. Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection. In Proceedings of the European Conference on Computer Vision, Paris, France, 2–3 October 2023.

15. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. arXiv 2014, arXiv:1412.6572.

16. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.J.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

17. Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. arXiv 2017, arXiv:1706.06083.

18. Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. arXiv 2016, arXiv:1607.02533.

19. Carlini, N.; Wagner, D.A. Towards Evaluating the Robustness of Neural Networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 39–57.

20. Moosavi-Dezfooli, S.; Fawzi, A.; Fawzi, O.; Frossard, P. Universal Adversarial Perturbations. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 86–94.

21. Moosavi-Dezfooli, S.; Fawzi, A.; Frossard, P. DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

22. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

23. Xie, C.; Wang, J.; Zhang, Z.; Zhou, Y.; Xie, L.; Yuille, A.L. Adversarial Examples for Semantic Segmentation and Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1378–1387.

24. Wei, X.; Liang, S.; Cao, X.; Zhu, J. Transferable Adversarial Attacks for Image and Video Object Detection. In Proceedings of the International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018.

25. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.C.; Bengio, Y. Generative Adversarial Networks. In Proceedings of the 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), Delhi, India, 6–8 July 2023; pp. 1–7.

26. Lin, T.; Maire, M.; Belongie, S.J.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

27. Agnew, C.; Eising, C.; Denny, P.; Scanlan, A.G.; Van de Ven, P.; Grua, E.M. Quantifying the Effects of Ground Truth Annotation Quality on Object Detection and Instance Segmentation Performance. IEEE Access 2023, 11, 25174–25188.

28. Chen, P.-C.; Kung, B.-H.; Chen, J.-C. Class-Aware Robust Adversarial Training for Object Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10415–10424.

29. Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

30. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

31. Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A Diverse Driving Dataset for Heterogeneous Multitask Learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 2633–2642.

32. Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object Detection via Region-based Fully Convolutional Networks. In Proceedings of the Neural Information Processing Systems (2016), Barcelona, Spain, 5–10 December 2016.

33. Li, Y.; Tian, D.; Chang, M.; Bian, X.; Lyu, S. Robust Adversarial Perturbation on Deep Proposal-based Models. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018.

34. Wang, Y.; Tan, Y.; Zhang, W.; Zhao, Y.; Kuang, X. An adversarial attack on DNN-based black-box object detectors. J. Netw. Comput. Appl. 2020, 161, 102634.

35. Venter, G.; Sobieszczanski-Sobieski, J. Particle Swarm Optimization. In Advances in Metaheuristic Algorithms for Optimal Design of Structures; Springer: Cham, Switzerland, 2019.

36. Wang, D.; Li, C.; Wen, S.; Nepal, S.; Xiang, Y. Daedalus: Breaking Non-Maximum Suppression in Object Detection via Adversarial Examples. arXiv 2019, arXiv:1902.02067.

37. Shapira, A.; Zolfi, A.; Demetrio, L.; Biggio, B.; Shabtai, A. Phantom Sponges: Exploiting Non-Maximum Suppression to Attack Deep Object Detectors. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–7 January 2023; pp. 4560–4569.

38. Chen, E.; Chen, P.; Chung, I.; Lee, C. Overload: Latency Attacks on Object Detection for Edge Devices. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 24716–24725.

39. Ultralytics. Yolov5. 2022. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 August 2025).

40. Chow, K.; Liu, L.; Loper, M.L.; Bae, J.; Gursoy, M.E.; Truex, S.; Wei, W.; Wu, Y. Adversarial Objectness Gradient Attacks in Real-time Object Detection Systems. In Proceedings of the 2020 Second IEEE International Conference on Trust, Privacy and Security in Intelligent Systems and Applications (TPS-ISA), Atlanta, GA, USA, 28–31 October 2020; pp. 263–272.

41. Nguyen, K.N.; Zhang, W.; Lu, K.; Wu, Y.; Zheng, X.; Tan, H.L.; Zhen, L. A Survey and Evaluation of Adversarial Attacks in Object Detection. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 15706–15722.

42. Zhou, L.; Liu, Q.; Zhou, S. Preprocessing-based Adversarial Defense for Object Detection via Feature Filtration. In Proceedings of the 7th International Conference on Algorithms, Computing and Systems (ICACS ’23). Association for Computing Machinery, New York, NY, USA, 19–21 October 2024; pp. 80–87.

43. Zhang, H.; Wang, J. Towards Adversarially Robust Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 421–430.

44. Choi, J.I.; Tian, Q. Adversarial Attack and Defense of YOLO Detectors in Autonomous Driving Scenarios. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 4–9 June 2022; pp. 1011–1017.

45. Jung, Y.; Song, B.C. Toward Enhanced Adversarial Robustness Generalization in Object Detection: Feature Disentangled Domain Adaptation for Adversarial Training. IEEE Access 2024, 12, 179065–179076.

46. Wang, T.; Wang, Z.; Wang, C.; Shu, Y.; Deng, R.; Cheng, P.; Chen, J. Can’t Slow me Down: Learning Robust and Hardware-Adaptive Object Detectors against Latency Attacks for Edge Devices. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 11–15 June 2025.

47. Chen, X.; Xie, C.; Tan, M.; Zhang, L.; Hsieh, C.; Gong, B. Robust and Accurate Object Detection via Adversarial Learning. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 16617–16626.

48. Dong, Z.; Wei, P.; Lin, L. Adversarially-Aware Robust Object Detector. In Computer Vision—ECCV 2022. Lecture Notes in Computer Science; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; Volume 13669.

49. Cheng, J.; Huang, B.; Fang, Y.; Han, Z.; Wang, Z. Adversarial intensity awareness for robust object detection. Comput. Vis. Image Underst. 2024, 251, 104252.

50. Amirkhani, A.; Karimi, M.P. Adversarial defenses for object detectors based on Gabor convolutional layers. Vis. Comput. 2022, 38, 1929–1944.

51. Zeng, W.; Gao, S.; Zhou, W.; Dong, Y.; Wang, R. Improving the Adversarial Robustness of Object Detection with Contrastive Learning. In Chinese Conference on Pattern Recognition and Computer Vision; Springer: Singapore, 2023.

52. Muhammad, A.; Zhuang, W.; Lyu, L.; Bae, S.-H. FROD: Robust Object Detection for Free. arXiv 2023, arXiv:2308.01888.

53. Li, X.; Chen, H.; Hu, X. On the Importance of Backbone to the Adversarial Robustness of Object Detectors. IEEE Trans. Inf. Forensics Secur. 2025, 20, 2387–2398.

54. Yang, H.; Wang, X.; Chen, Y.; Dou, H.; Zhang, Y. RPU-PVB: Robust object detection based on a unified metric perspective with bilinear interpolation. J. Cloud Comput. 2023, 12, 169.

55. Alamri, F.; Kalkan, S.; Pugeault, N. Transformer-Encoder Detector Module: Using Context to Improve Robustness to Adversarial Attacks on Object Detection. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 9577–9584.

56. Xu, W.; Huang, H.; Pan, S. Using Feature Alignment Can Improve Clean Average Precision and Adversarial Robustness in Object Detection. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 2184–2188.

57. Xu, W.; Chu, P.; Xie, R.; Xiao, X.; Huang, H. Robust and Accurate Object Detection Via Self-Knowledge Distillation. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 91–95.

58. Chow, K.-H. Robust Object Detection Fusion Against Deception. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Singapore, 14–18 August 2021.

59. Chow, K.H.; Liu, L. Boosting Object Detection Ensembles with Error Diversity. In Proceedings of the 2022 IEEE International Conference on Data Mining (ICDM), Orlando, FL, USA, 28 November–1 December 2022; pp. 903–908.

60. Peng, Z.; Chen, X.; Huang, W.; Kong, X.; Li, J.; Xue, S. Shielding Object Detection: Enhancing Adversarial Defense through Ensemble Methods. In Proceedings of the 2024 5th Information Communication Technologies Conference (ICTC), Nanjing, China, 10–12 May 2024; pp. 88–97.

61. Wu, Y.; Chow, K.; Wei, W.; Liu, L. Exploring Model Learning Heterogeneity for Boosting Ensemble Robustness. In Proceedings of the 2023 IEEE International Conference on Data Mining (ICDM), Shanghai, China, 1–4 December 2023; pp. 648–657.

62. Cohen, J.M.; Rosenfeld, E.; Kolter, J.Z. Certified Adversarial Robustness via Randomized Smoothing. arXiv 2019, arXiv:1902.02918.

63. Elboher, Y.Y.; Raviv, A.; Weiss, Y.L.; Cohen, O.; Assa, R.; Katz, G.; Kugler, H. Formal Verification of Deep Neural Networks for Object Detection. arXiv 2024, arXiv:2407.01295.

64. Ilyas, A.; Santurkar, S.; Tsipras, D.; Engstrom, L.; Tran, B.; Madry, A. Adversarial Examples Are Not Bugs, They Are Features. In Proceedings of the Neural Information Processing Systems 2019, Vancouver, BC, Canada, 8–14 December 2019.

65. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. arXiv 2017, arXiv:1701.07875.

66. Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of Wasserstein GANs. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; p. 30.

67. Miyato, T.; Kataoka, T.; Koyama, M.; Yoshida, Y. Spectral Normalization for Generative Adversarial Networks. arXiv 2018, arXiv:1802.05957.

68. Tsipras, D.; Santurkar, S.; Engstrom, L.; Turner, A.; Madry, A. There Is No Free Lunch In Adversarial Robustness (But There Are Unexpected Benefits). arXiv 2018, arXiv:1805.12152.

69. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.S.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2014, 115, 211–252.

70. Shafahi, A.; Najibi, M.; Ghiasi, A.; Xu, Z.; Dickerson, J.P.; Studer, C.; Davis, L.S.; Taylor, G.; Goldstein, T. Adversarial Training for Free! In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 3358–3369.

71. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

72. Xiao, C.; Deng, R.; Li, B.; Yu, F.; Liu, M.; Song, D.X. Characterizing Adversarial Examples Based on Spatial Consistency Information for Semantic Segmentation. arXiv 2018, arXiv:1810.05162.

73. Xie, C.; Tan, M.; Gong, B.; Wang, J.; Yuille, A.L.; Le, Q.V. Adversarial examples improve image recognition. In Proceedings of the Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

74. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

75. Kingma, D.P.; Welling, M. Auto-encoding variational bayes. In Proceedings of the International Conference on Learning Representations (ICLR), Banff, AB, Canada, 14–16 April 2014.

76. Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11030–11039.

77. Pérez, J.C.; Alfarra, M.; Jeanneret, G.; Bibi, A.; Thabet, A.K.; Ghanem, B.; Arbeláez, P. Gabor Layers Enhance Network Robustness. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2019.

78. Yang, H.; Chen, Y.; Dou, H.; Luo, Y.; Tan, C.J.; Zhang, Y. Robust Object Detection Based on a Comparative Learning Perspective. In Proceedings of the 2023 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), Abu Dhabi, United Arab Emirates, 14–17 November 2023; pp. 0557–0563.

79. Wong, E.; Rice, L.; Kolter, J.Z. Fast is better than free: Revisiting adversarial training. arXiv 2020, arXiv:2001.03994.

80. Hinton, G.E.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

81. Thys, S.; Ranst, W.V.; Goedemé, T. Fooling Automated Surveillance Cameras: Adversarial Patches to Attack Person Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 49–55.

82. Liu, X.; Yang, H.; Liu, Z.; Song, L.; Chen, Y.; Li, H.H. DPATCH: An Adversarial Patch Attack on Object Detectors. arXiv 2018, arXiv:1806.02299.

83. Xu, K.; Zhang, G.; Liu, S.; Fan, Q.; Sun, M.; Chen, H.; Chen, P.; Wang, Y.; Lin, X. Adversarial T-Shirt! Evading Person Detectors in a Physical World. In Computer Vision—ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part V; Springer: Berlin/Heidelberg, Germany, 2020; pp. 665–681.

84. Mao, C.; Gupta, A.; Nitin, V.; Ray, B.; Song, S.; Yang, J.; Vondrick, C. Multitask learning strengthens adversarial robustness. In Computer Vision—ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part II, Ser. Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2020; Volume 12347, pp. 158–174.

85. Yeo, T.; Kar, O.F.; Zamir, A. Robustness via cross-domain ensembles. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 12189–12199.

86. Mopuri, K.R.; Garg, U.; Babu, R.V. Fast feature fool: A data independent approach to universal adversarial perturbations. arXiv 2017, arXiv:1707.05572.

87. Chiang, P.Y.; Curry, M.; Abdelkader, A.; Kumar, A.; Dickerson, J.; Goldstein, T. Detection as Regression: Certified Object Detection by Median Smoothing. Adv. Neural Inf. Process. Syst. 2020, 33, 1275–1286.

88. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vis. 2020, 128, 336–359.

89. Altstidl, T.R.; Dobre, D.; Kosmala, A.; Eskofier, B.M.; Gidel, G.; Schwinn, L. On the Scalability of Certified Adversarial Robustness with Generated Data. In Proceedings of the 38th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024.

Table 1. Object detection datasets.

| Dataset | Description |
|---|---|
| PASCAL VOC 2007–2012 [29] | 20 classes in 4 categories: person, animal, vehicle, and indoor. 11,530 training images and 4921 test images. |
| MS-COCO [26] | 80 classes. 80k training images and 40k validation images (115k/5k in 2017), and 40k test images. |
| KITTI [30] | 7481 training images and 7518 test images, containing 80,256 labeled objects. |
| BDD100K [31] | 100k videos with objects belonging to 12 classes (lane, drivable area, car, traffic sign, traffic light, person, train, motor, rider, bike, bus, truck). |

Table 2. Defense techniques and the defenses in each category.

| Defense Technique | Defenses |
|---|---|
| Preprocessing | Feature Filtration [42] |
| Adversarial Training | Changes to loss function: MTD [43], CWAT [28], OOD [44], FDDA [45], Underload [46]. Changes to model: Det-AdvProp [47], RobustDet [48], AIAD [49], Gabor conv layers [50], Contrastive Adv Training [51], FROD [52], Backbone Adv Robustness [53] |
| Detection of Adversarial Noise | RPU-PVB [54] |
| Architecture Changes | Transformer-Encoder Module [55], FA [56], UDFA [57] |
| Ensemble Defense | FUSE [58], Error Diversity Framework (EDI) [59], DEM [60], Two-tier ensemble [61] |
| Certified Defenses | Certified Object Detection by Median Smoothing [87], Formal Verification of Deep Neural Networks for Object Detection [63] |

Table 3. Empirical defenses and the attacks they address.

| Defenses | PGD * | DAG | RAP | CWA | OOA ** | TOG | UEA | FFF [86] | Latency *** |
|---|---|---|---|---|---|---|---|---|---|
| Feature Filtration [42] | x | x | x | x | x | ✓ | ✓ | x | x |
| MTD [43] | ✓ | ✓ | ✓ | x | x | x | x | x | x |
| CWAT [28] | ✓ | ✓ | x | ✓ | x | x | x | x | x |
| OOD [44] | x | x | x | x | ✓ | x | x | x | x |
| FDDA [45] | ✓ | ✓ | x | ✓ | x | x | x | x | x |
| Underload [46] | x | x | x | x | x | x | x | x | ✓ |
| Det-AdvProp [47] | ✓ | x | x | x | x | x | x | x | x |
| RobustDet [48] | ✓ | ✓ | x | ✓ | x | x | x | x | x |
| AIAD [49] | ✓ | x | x | ✓ | x | x | x | x | x |
| Gabor conv layers [50] | x | ✓ | ✓ | x | x | ✓ | ✓ | x | x |
| Contrastive Adv Training [51] | ✓ | x | x | ✓ | x | x | x | x | x |
| FROD [52] | ✓ | x | x | x | x | x | x | x | x |
| Backbone Adv Robustness [53] | ✓ | x | x | ✓ | x | x | x | x | x |
| RPU-PVB [54] | ✓ | ✓ | x | ✓ | x | x | x | x | x |
| Transformer-Encoder Module [55] | x | x | x | x | x | x | x | ✓ | x |
| FA [56] | ✓ | x | x | x | x | x | x | x | x |
| UDFA [57] | ✓ | x | x | x | x | x | x | x | x |
| FUSE [58] | x | ✓ | ✓ | x | x | ✓ | ✓ | x | x |
| EDI [59] | x | x | x | x | x | ✓ | x | x | x |
| DEM [60] | x | ✓ | x | x | x | ✓ | x | x | x |
| Two-tier ensemble [61] | x | x | x | x | x | ✓ | x | x | x |

Table 4. Mapping between attack types and defense categories.

| Attack Type | Preprocessing | Adversarial Training | Noise Detection | Architectural Change | Ensemble | Certified Defense |
|---|---|---|---|---|---|---|
| Mislabeling | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Bounding-box | ✓ | ✓ | ✓ | ✓ | | |
| Fabrication | ✓ | ✓ | ✓ | | | |

Table 5. Impact of defenses on clean-image mAP and additional training time.

| Defenses | mAP on Clean Images | Additional Training Time |
|---|---|---|
| Feature Filtration [42] | 12% decrease for YOLOv3, Faster R-CNN | Time to train the filter and critic networks |
| MTD [43] | Decreases (12 in case of SSD) | k-step PGD cls and loc attack time |
| CWAT [28] | Decreases, but less than MTD | 3.19× faster than MTD |
| OOD [44] | Not mentioned | FGSM attack 3 times for each batch per epoch |
| FDDA [45] | 0.8% decrease on MS-COCO, 0.3–0.5% decrease for PASCAL VOC | 24% increase compared with standard adversarial training |
| Underload [46] | 6 to 13% decrease on PASCAL VOC | k-step PGD loop until the maximum number of candidates is reached |
| Det-AdvProp [47] | Increase of 1.1 mAP | k-step PGD cls and loc attack time |
| RobustDet [48] | Decrease of 2.7% for PASCAL VOC, 6% for MS-COCO | Time to train AID and CFR |
| AIAD [49] | 2% decrease for PASCAL VOC, 10% decrease for MS-COCO | k-step PGD cls and loc attack time |
| Gabor conv layers [50] | <1% drop in accuracy reported | Perturbation generation time and discrete Gabor filter bank generation time |
| Contrastive Adv Training [51] | Higher than MTD, CWAT, FA | Perturbation generation time and contrastive learning module training time |
| FROD [52] | 10% decrease for PASCAL VOC (less than MTD, CWAT) | Minimal; takes advantage of the technique in [70] |
| Backbone Adv Robustness [53] | Increase for PASCAL VOC (Faster R-CNN), slight decrease for MS-COCO | Adversarial training of backbone and object detector (takes advantage of [70]) |
| RPU-PVB [54] | Decrease of 2.8% for PASCAL VOC, 5.8% for MS-COCO | MTD adversarial sample generation time and RCP module training |
| Transformer-Encoder Module [55] | Increases mAP by 4.71 for MS-COCO | No change; a pre-trained Transformer encoder is used |
| FA [56] | Slight decrease | k-step PGD training and distillation time |
| UDFA [57] | Increase of 1.6 AP for PASCAL VOC | k-step PGD cls and loc attack time and feature generation time |
| FUSE [58] | Increases mAP by 1.20× for Faster R-CNN | Time to perform diverse joint training of detection models |
| EDI [59] | Increases mAP by 1.27× for Faster R-CNN | Time to create the best ensemble using EDI |
| DEM [60] | Increases for PASCAL VOC, mild increase for MS-COCO | 9× increase per epoch |
| Two-tier ensemble [61] | Increases mAP by 1.07× for Faster R-CNN, 1.18× for SSD | Time to create ensemble |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Thunuguntla, A.; Tadepalli, P.; Raffa, G. Defenses Against Adversarial Attacks on Object Detection: Methods and Future Directions. Information 2025, 16, 1003. https://doi.org/10.3390/info16111003