Autoencoder-Based Poisoning Attack Detection in Graph Recommender Systems

Abstract

1. Introduction

- First exploration of autoencoder-based poisoning attack detection for graph recommender systems (GRSs). We propose AutoDAP, a detection method designed specifically for GRS poisoning attacks. It targets attacks that disrupt graph neural network (GNN) neighborhood aggregation by injecting fake interaction edges.

- User behavior representation integrating statistical features. During data preprocessing, we extract multi-dimensional statistical features from raw interaction data to build a more comprehensive representation of user behavior. These features capture macroscopic behavioral patterns that are not directly apparent in raw interactions and, once fused with the interaction data, give the subsequent deep feature learning a more discriminative input.

- Dual-objective optimized detection model. We design an autoencoder-based detection model. The encoder extracts deep user features and feeds them into a classification branch that predicts the probability of a user being an attacker. At the same time, the decoder reconstructs each user's original input features, that is, the user's row of the interaction graph together with its statistical features. By jointly optimizing the classification and reconstruction losses, the model fuses a supervised signal (classification probabilities) with an unsupervised signal (feature reconstruction). Combined with a subsequent discrimination mechanism, this improves the detection of attack users, especially stealthy ones that mimic genuine user behavior.

- Extensive experimental validation. Experiments on the MovieLens-10M dataset with various injected poisoning attacks, and on the Amazon dataset with real attack samples, show that AutoDAP outperforms several representative baseline methods across diverse GRS poisoning attacks, demonstrating its effectiveness and robustness.

2. Related Work and Background Knowledge

2.1. Poisoning Attacks in Graph Recommender Systems

2.2. Related Work

2.2.1. Traditional Machine Learning-Based Detection Methods

2.2.2. Deep Learning-Based Detection Methods

2.3. Autoencoder

- Encoder. The encoder maps a high-dimensional input feature vector x to a latent representation vector h, which usually has a lower dimension. This process can be viewed as information compression and feature abstraction of the input data. An encoder usually consists of one or more neural network layers, each typically an affine transformation followed by a non-linear activation function f (e.g., Sigmoid or ReLU) [30,31]:
  $$h = f(W_e x + b_e)$$
  Here, x is the input user feature vector, and $W_e$ and $b_e$ are the weight matrix and bias vector of the encoder, respectively.

- Latent Representation. The latent representation h (also called the code or bottleneck feature) is the output of the encoder and the input to the decoder. It captures the core features and intrinsic structure of the input data. Its dimension $d_h$ is usually much smaller than the input dimension d (i.e., $d_h < d$), which yields effective information compression. In some cases, overcomplete representations ($d_h > d$) combined with sparsity constraints may also be used.

- Decoder. The decoder's goal is the opposite of the encoder's: it attempts to reconstruct the original input from the low-dimensional latent representation h, producing a reconstructed vector $\hat{x}$. Decoders usually employ a multi-layer perceptron structure symmetric to the encoder. Through a series of affine transformations and non-linear activation functions g, they progressively map the latent vector back to the original input space [30,31]:
  $$\hat{x} = g(W_d h + b_d)$$
  Here, $W_d$ and $b_d$ are the weight matrix and bias vector of the decoder, respectively, and $\hat{x}$ is the reconstruction of the original input x. By minimizing the reconstruction loss, the autoencoder learns an encoder and a decoder that extract meaningful features from the data.
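To make the encode, reconstruct, and minimize loop concrete, here is a minimal NumPy sketch of a one-hidden-layer autoencoder with a Sigmoid encoder and a linear decoder, trained by plain full-batch gradient descent on the squared reconstruction error. It is a generic illustration of the mapping described above, not AutoDAP's network; the function name `train_autoencoder`, the row-vector convention (x W instead of W x), and the learning-rate and epoch defaults are our own assumptions.

```python
import numpy as np


def train_autoencoder(X, hidden_dim, lr=0.1, epochs=500, seed=0):
    """Train a 1-hidden-layer autoencoder on the rows of X; return the loss per epoch."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W_e = rng.normal(0.0, 0.1, (d, hidden_dim))
    b_e = np.zeros(hidden_dim)
    W_d = rng.normal(0.0, 0.1, (hidden_dim, d))
    b_d = np.zeros(d)
    losses = []
    for _ in range(epochs):
        # Encoder: affine map followed by a Sigmoid non-linearity.
        h = 1.0 / (1.0 + np.exp(-(X @ W_e + b_e)))
        # Decoder: affine map back to the input space (linear output).
        X_hat = h @ W_d + b_d
        err = X_hat - X
        # Loss: squared reconstruction error per row, averaged over rows.
        losses.append(float(np.mean(np.sum(err ** 2, axis=1))))
        # Backpropagate that loss through the decoder and the encoder.
        g_out = 2.0 * err / n
        g_Wd, g_bd = h.T @ g_out, g_out.sum(axis=0)
        g_pre = (g_out @ W_d.T) * h * (1.0 - h)  # chain rule through the Sigmoid
        g_We, g_be = X.T @ g_pre, g_pre.sum(axis=0)
        # Gradient-descent update of all four parameter arrays.
        W_e -= lr * g_We
        b_e -= lr * g_be
        W_d -= lr * g_Wd
        b_d -= lr * g_bd
    return losses
```

Calling it on, for example, a 50 × 8 random matrix with `hidden_dim=3` should show the loss falling below its initial value as the bottleneck learns a compressed code.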

3. Proposed Detection Method

3.1. Detection Framework

- Data Preprocessing. First, we randomly sample users and their interaction data without replacement from the original rating dataset to construct non-overlapping training, validation, and test sets. These sets then undergo the same preprocessing. The core step is to extract the user–item interaction matrix I by binarizing the raw ratings, so that each entry indicates whether an interaction exists [34]. From I, we compute three statistical features that profile each user's behavior: Interaction Mean, Interaction Variance, and Total Interaction Count. These features are concatenated with the interaction matrix I to form the initial user feature representation. To remove scale differences between features, we standardize the concatenated features, producing a normalized user feature matrix X that serves as input to the encoder.

- Model Training. Model training involves two collaborative core objectives: a supervised classification task and an unsupervised reconstruction task. This dual-objective learning framework aims to simultaneously utilize supervised information for discrimination and unsupervised information for characterizing normal patterns. A similar approach has been used in data visualization by mixing autoencoders and classifiers [32].

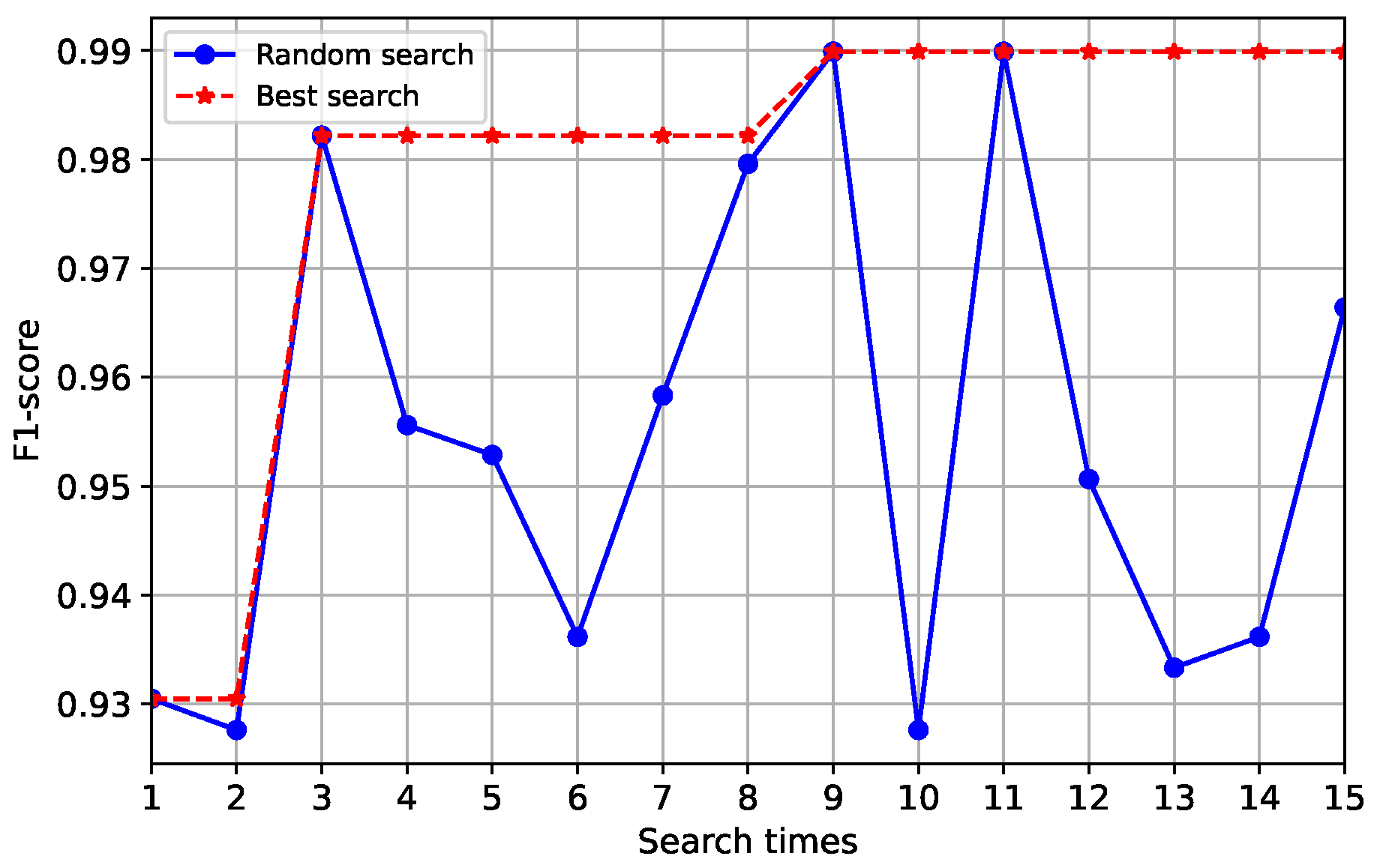

- Validation and Hyperparameter Optimization. The validation set is used to optimize key model hyperparameters, such as the number of training epochs and the loss weighting factors. The goal is to find the hyperparameter setting that performs best on the validation data (e.g., the one that maximizes the F1-score). Based on this setting, a final discrimination threshold F is determined to distinguish attack users from genuine users. The calculation of this threshold F is described in Section 3.2.5.

- Testing and Evaluation. In the testing phase, the model, optimized on the training and validation sets, performs detection on the test set users. For each user, the model outputs their predicted classification probability of being an attacker and the deviation of their behavior pattern from the normal model (i.e., reconstruction error). This information is integrated into a composite attack score s. By comparing this score s with the discrimination threshold F determined during validation, the user is finally classified as an attacker or not. The specific calculation method for score s will be given in Section 3.2.5. Finally, the detection model’s performance metrics are evaluated based on the discrimination results.

3.2. Key Technical Modules

3.2.1. Data Preprocessing

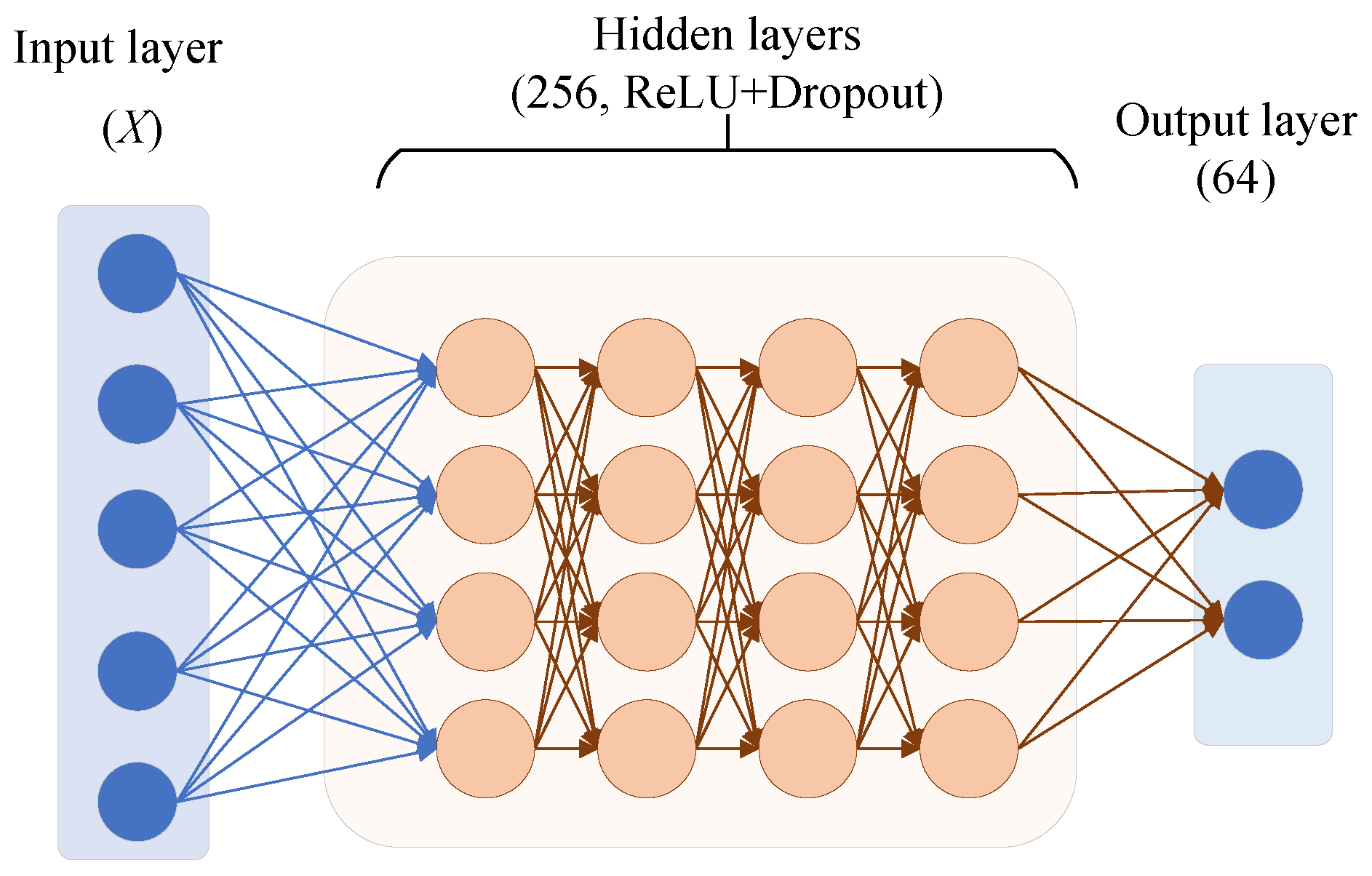
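The preprocessing pipeline described in Section 3.1 (binarize, compute per-user statistics, concatenate, standardize) can be sketched in NumPy as follows. This is an illustration under assumptions, not the authors' code: the name `build_user_features` is hypothetical, the three statistics are computed over each user's binarized row of I as the text states, and the z-score standardization is fitted on the matrix passed in (with a train/validation/test split one would normally reuse the training-set mean and standard deviation).

```python
import numpy as np


def build_user_features(ratings, eps=1e-8):
    """ratings: (n_users, n_items) array with 0 where a user has not rated an item."""
    # Binarize: 1 marks an observed user-item interaction, 0 marks none.
    interactions = (ratings > 0).astype(np.float64)
    # Per-user statistics over the binarized interaction row (assumed definition).
    mean = interactions.mean(axis=1, keepdims=True)
    var = interactions.var(axis=1, keepdims=True)
    count = interactions.sum(axis=1, keepdims=True)
    # Concatenate the interaction row with the three statistics.
    features = np.hstack([interactions, mean, var, count])
    # Column-wise z-score standardization; eps guards against constant columns.
    mu = features.mean(axis=0)
    sigma = features.std(axis=0)
    return (features - mu) / (sigma + eps)
```

On a strictly binary row, the mean equals the count divided by the number of items and the variance equals mean × (1 − mean), so in this sketch the three statistics are functions of the count; if the statistics are instead computed on the raw ratings, they would carry more distinct information.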

3.2.2. Encoder

- Input Layer. The encoder’s input layer directly accepts the user feature matrix X, which has been preprocessed and standardized as described in Section 3.2.1. This matrix encapsulates each user’s original interaction information and derived statistical features.

- Hidden Layers (4 layers). The encoder contains four hidden layers that progressively abstract and reduce the dimensionality of the input features. Its structure is defined as: . The uniform width facilitates capacity control and stable training. ReLU provides non-linear representation, while Dropout mitigates overfitting on sparse dimensions.

- Output Layer. A final operation produces the 64-dimensional latent vector Z. This vector represents the condensed essence of user behavior patterns learned by the encoder. It is designed to capture crucial information for distinguishing between genuine and attack users in a low-dimensional, dense format. This latent representation Z subsequently serves as input for both the classification and reconstruction tasks. In this way, the encoder effectively achieves its dual objectives of feature dimensionality reduction and key information extraction.

3.2.3. Decoder

- Input Layer. Receives the latent vector Z.

- Hidden Layers. The structure is .

- Output Layer. A layer with a linear activation function produces the reconstructed features $\hat{X}$.
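The encoder and decoder described in Sections 3.2.2 and 3.2.3, together with the Sigmoid classification head described in Section 3.2.4, can be sketched as a NumPy forward pass. The layer widths are not recoverable from this text, so the uniform hidden width of 256, the two decoder hidden layers, the linear latent layer, the 64-to-1 affine layer before the Sigmoid, and the function names (`build_model`, `forward`) are all hypothetical. Dropout is omitted because it is active only during training.

```python
import numpy as np


def relu(a):
    return np.maximum(a, 0.0)


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def init_layer(rng, n_in, n_out):
    # Small random weights and a zero bias for one affine layer.
    return rng.normal(0.0, 0.05, (n_in, n_out)), np.zeros(n_out)


def build_model(input_dim, hidden_dim=256, latent_dim=64, n_dec_hidden=2, seed=0):
    """n_dec_hidden must be >= 1; all widths are illustrative assumptions."""
    rng = np.random.default_rng(seed)
    # Encoder: input -> four ReLU hidden layers of uniform width.
    widths = [input_dim] + [hidden_dim] * 4
    encoder = [init_layer(rng, a, b) for a, b in zip(widths[:-1], widths[1:])]
    latent = init_layer(rng, hidden_dim, latent_dim)
    head = init_layer(rng, latent_dim, 1)
    # Decoder: latent -> n_dec_hidden ReLU hidden layers -> linear output.
    dec_widths = [latent_dim] + [hidden_dim] * n_dec_hidden
    decoder = [init_layer(rng, a, b) for a, b in zip(dec_widths[:-1], dec_widths[1:])]
    output = init_layer(rng, hidden_dim, input_dim)
    return encoder, latent, head, decoder, output


def forward(model, X):
    encoder, latent, head, decoder, output = model
    h = X
    for W, b in encoder:
        h = relu(h @ W + b)  # Dropout would follow here during training
    Z = h @ latent[0] + latent[1]                # 64-d latent vector per user
    p = sigmoid(Z @ head[0] + head[1]).ravel()   # attack probability per user
    g = Z
    for W, b in decoder:
        g = relu(g @ W + b)
    X_hat = g @ output[0] + output[1]            # linear output layer
    return Z, p, X_hat
```

Because the same Z feeds both the classification head and the decoder, the classification and reconstruction losses both shape the shared encoder.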

3.2.4. Loss Function

- Classification Loss Function. The latent representation Z output by the encoder is first passed through an additional Sigmoid activation layer. This layer acts as the classification head and predicts the probability $p_u$ that user u is an attacker. The classification loss uses Binary Cross-Entropy (BCE) [37] to measure the difference between $p_u$ and the true label $y_u$ (1 for an attack user, 0 for a genuine user). To improve generalization and prevent overfitting, we add an L2 regularization term [38] over all trainable weights W in the model. The complete classification loss is defined as:
  $$\mathcal{L}_{cls} = \mathcal{L}_{BCE} + \lambda \lVert W \rVert_2^2$$
  where $\mathcal{L}_{BCE}$ is calculated as follows:
  $$\mathcal{L}_{BCE} = -\frac{1}{N}\sum_{u=1}^{N}\left[y_u \log p_u + (1 - y_u)\log(1 - p_u)\right]$$
  Here, N is the number of samples in the current batch, $\lVert W \rVert_2^2$ is the squared L2 norm of all trainable model weights W, and λ is the regularization coefficient. This loss accounts for both prediction accuracy and model complexity, guiding the model toward more robust user behavior representations.

- Reconstruction Loss Function. The reconstruction task trains the decoder to accurately recover a genuine user's original input features X from the latent representation Z produced by the encoder. A key design choice is that the reconstruction loss is computed only on genuine users from the training set. The rationale is that attack users' behavior patterns differ inherently from those of genuine users, so a decoder optimized for genuine behavior is expected to reconstruct attack users with a higher error than genuine users. This difference is an important basis for attack detection. The reconstruction loss uses Mean Squared Error (MSE) [39] to quantify the deviation between the reconstructed output $\hat{x}_i$ and the original input $x_i$:
  $$\mathcal{L}_{rec} = \frac{1}{N_g}\sum_{i=1}^{N_g}\lVert x_i - \hat{x}_i \rVert_2^2$$
  Here, $N_g$ is the number of genuine users involved in reconstruction, $x_i$ is the original feature vector of a genuine user, and $\hat{x}_i$ is its corresponding reconstructed feature vector.

- Total Loss Function. The model's total loss is a weighted sum of the classification loss $\mathcal{L}_{cls}$ and the reconstruction loss $\mathcal{L}_{rec}$, integrating supervised and unsupervised learning signals:
  $$\mathcal{L} = \alpha \mathcal{L}_{cls} + \beta \mathcal{L}_{rec}$$
  Here, α and β are hyperparameters that balance the importance of the classification and reconstruction tasks. Tuning these two weights lets the model balance accurate identification of attack users (high classification accuracy) against faithful modeling of the genuine user distribution (low reconstruction error). This joint optimization lets AutoDAP learn user behavior features more comprehensively, which improves its robustness against complex and stealthy poisoning attacks. Weighting and combining losses from different learning tasks follows the same design philosophy as models that fuse different learning paradigms, such as the data-visualization model in [32], which uses a mixing coefficient to balance autoencoder and classifier losses.
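The three loss terms above can be combined in a single function. This NumPy sketch assumes the reconstruction term is the per-user squared error averaged over genuine users and that label 0 denotes a genuine user; the name `total_loss` and the default values of `alpha`, `beta`, and `lam` are placeholders, not the values selected in the paper.

```python
import numpy as np


def total_loss(p, y, X, X_hat, weights, alpha=0.5, beta=0.5, lam=1e-4, eps=1e-12):
    """alpha * L_cls + beta * L_rec for one batch (labels: 1 = attack, 0 = genuine)."""
    # Classification term: binary cross-entropy on the head's probabilities ...
    p = np.clip(p, eps, 1.0 - eps)  # keep log() finite
    bce = -np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    # ... plus an L2 penalty summed over every trainable weight array.
    l2 = sum(float(np.sum(W ** 2)) for W in weights)
    loss_cls = bce + lam * l2
    # Reconstruction term: squared error per user, genuine users only.
    genuine = y == 0
    if np.any(genuine):
        diff = X[genuine] - X_hat[genuine]
        loss_rec = float(np.mean(np.sum(diff ** 2, axis=1)))
    else:
        loss_rec = 0.0  # a batch with no genuine users gives no reconstruction signal
    return alpha * loss_cls + beta * loss_rec
```

The `y == 0` mask is what keeps attack users out of the reconstruction term: their reconstruction error affects neither the loss value nor, in an automatic-differentiation framework, the decoder's gradients.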

3.2.5. Discrimination Threshold and Attack Score

- Determining the Discrimination Threshold. During validation, we determine a composite discrimination threshold F that combines the predicted probabilities from the classification task with the error measures from the reconstruction task. First, by maximizing the F1-score on the validation set, we separately determine the optimal classification probability threshold $\theta_c^*$ and the optimal percentile $q^*$ used to select genuine users' reconstruction errors. Based on $q^*$, the optimal reconstruction error threshold $\theta_r^*$ is the average reconstruction error of the first $q^*$% of genuine users in the validation set when they are sorted by increasing reconstruction error:
  $$\theta_r^* = \frac{1}{|\mathcal{G}_{q^*}|}\sum_{u \in \mathcal{G}_{q^*}} e_u$$
  where $\mathcal{G}_{q^*}$ denotes that subset of genuine validation users and $e_u$ is user u's reconstruction error. The final discrimination threshold F is calculated as:
  $$F = \alpha^* \theta_c^* + \beta^* \theta_r^*$$
  Here, $\alpha^*$ and $\beta^*$ are the optimal loss weights, selected through hyperparameter optimization during the model training and validation phases.

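- User Attack Score and Final Discrimination. In the testing phase, for any user u to be detected, the model first obtains the classification probability $p_u$ and the reconstruction error $e_u$. The user's composite attack score is then calculated as:
  $$s_u = \alpha^* p_u + \beta^* e_u$$
  where $\alpha^*$ and $\beta^*$ are the optimal loss weights also used in F, and the user is classified as an attacker if $s_u > F$.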
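The validation-time threshold search and the test-time decision described above can be sketched in plain Python. Several details are our assumptions rather than the paper's: the percentile q is scored by flagging users whose reconstruction error exceeds the resulting threshold, candidate values come from user-supplied grids, and F and s are taken as the alpha/beta-weighted sums of their two components. All function names are hypothetical.

```python
def f1(labels, preds):
    """F1 = 2TP / (2TP + FP + FN); labels: 1 = attack, 0 = genuine."""
    tp = sum(1 for y, flag in zip(labels, preds) if y == 1 and flag)
    fp = sum(1 for y, flag in zip(labels, preds) if y == 0 and flag)
    fn = sum(1 for y, flag in zip(labels, preds) if y == 1 and not flag)
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def recon_threshold(errors, labels, q):
    """Mean error of the first q% of genuine users sorted by increasing error."""
    genuine = sorted(e for e, y in zip(errors, labels) if y == 0)
    k = max(1, round(len(genuine) * q / 100))
    return sum(genuine[:k]) / k


def _recon_f1(errors, labels, q):
    # Validation F1 when users above the q-based error threshold are flagged.
    thr = recon_threshold(errors, labels, q)
    return f1(labels, [e > thr for e in errors])


def select_thresholds(probs, errors, labels, prob_grid, q_grid):
    """Pick theta_c and q separately, each maximizing validation F1."""
    theta_c = max(prob_grid, key=lambda t: f1(labels, [p >= t for p in probs]))
    q_best = max(q_grid, key=lambda q: _recon_f1(errors, labels, q))
    return theta_c, recon_threshold(errors, labels, q_best)


def combined_threshold(theta_c, theta_r, alpha, beta):
    # Assumed form: F = alpha * theta_c + beta * theta_r.
    return alpha * theta_c + beta * theta_r


def is_attacker(prob, error, F, alpha, beta):
    # Assumed form: s = alpha * p + beta * e, flagged when s exceeds F.
    return alpha * prob + beta * error > F
```

Because probabilities lie in [0, 1] while reconstruction errors are unbounded, the weights in this combination also absorb the scale difference between the two terms; a practical variant might normalize the errors before combining them.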

3.3. Detection Algorithm

Algorithm 1. Detection Algorithm of AutoDAP.

3.4. Complexity Analysis

- Training Complexity: The complexity of a single training iteration is determined by both the encoder and the decoder. The encoder’s complexity is , and the decoder’s is . In practical applications, and . Therefore, the main contribution to the total training complexity comes from the input and output layers, which can be approximated as . This indicates that the training cost is linear with respect to the number of users and items, ensuring the model’s scalability for large datasets. Notably, our dual-objective design only introduces a constant-level of additional computation (for the decoder part) and does not change the order of complexity.

- Inference Complexity: Detecting a single user requires only one forward pass, with a complexity of . The computational cost is extremely low, fully meeting the real-time requirements for online or near-online detection.
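The order-of-growth argument can be made explicit with a rough multiply-add count. The notation here is ours and partly assumed, since the layer sizes are not stated in this section: n training users, m items (so each input row has width m + 3 after the three statistics are appended), a uniform encoder hidden width h, latent size k = 64, and a decoder with $L_d$ hidden-to-hidden layers of width h; the 64-to-1 classification head is negligible and omitted.

$$C_{\text{enc}} = n\left[(m+3)h + 3h^2 + hk\right], \qquad C_{\text{dec}} = n\left[kh + L_d h^2 + h(m+3)\right]$$

$$C_{\text{enc}} + C_{\text{dec}} = n\left[2(m+3)h + (3 + L_d)h^2 + 2hk\right] = O(nmh) \quad \text{when } h, k \ll m$$

The only terms that grow with m come from the encoder's input layer and the decoder's output layer, so adding the decoder roughly doubles the dominant term without changing its order, and a single user's forward pass costs O(mh).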

3.5. Overfitting Control and Regularization

4. Experiments and Analysis

4.1. Datasets

4.2. Experimental Data Setup

4.2.1. Data Setup on MovieLens-10M Dataset

4.2.2. Data Setup on Amazon Dataset

4.3. Baseline Methods

- FAP [13]. This method is based on the observation that user fraudulent behavior propagates in a network. It constructs a user–item graph and uses a label propagation-like mechanism to identify attack users. This method is representative of early efforts because it attempts to detect attacks from the perspective of behavioral pattern diffusion, enabling generic detection of different attack types and providing early insights for subsequent research.

- Pop-SAD [19]. This method innovatively shifts the focus of poisoning attack detection from traditional “rating patterns” to analyzing users’ “item selection behavior.” Its core insight is that attackers, to achieve their goals, have item selection strategies significantly different from normal users’ preference-based selections. This difference is reflected in their rated item popularity distributions. Thus, Pop-SAD represents a new approach to identifying malicious users by analyzing intrinsic characteristics of user item selection behavior, offering a more robust and computationally cheaper way for attack detection.

- kNN-Mix [20]. This is a mixed-feature detection framework for multi-criteria recommender systems. It combines traditional behavioral features with novel item popularity features and uses a kNN-Mix classifier for attack detection. Its representativeness lies in enhancing detection performance for specific recommender system scenarios (multi-criteria) through carefully designed mixed feature engineering, showcasing innovation in traditional machine learning at the feature level.

- DL-DRA [25]. This method proposes a 2D feature reconstruction-based convolutional detection model. Its core idea is to transform user rating vectors into approximate 2D “images” (square matrices) using techniques like bicubic interpolation, making them suitable for CNN input. This allows CNNs to extract spatial features. This method represents an early attempt to apply mature image processing CNN models to recommender system attack detection, focusing on how to transform data for CNN feature extraction.

- CNN-LSTM [26]. This method designs a spatio-temporal joint learning architecture. The CNN module extracts local spatial patterns in user–item interactions, while the LSTM module models long-term temporal dependencies in user behavior sequences. By combining these two networks, the model can learn implicit feature vectors from user rating data to distinguish between genuine and attack users. This architecture represents a common hybrid model approach in deep learning, aiming to capture both local spatial and sequential temporal characteristics of data.

- SpDetector [27]. This method effectively captures shilling attacks by constructing multiple components such as user spectral features, item similarity offset, and rating prediction error. It then uses these carefully designed features to train a deep learning network for detection. Its representativeness lies in not solely relying on end-to-end automatic feature learning but combining domain knowledge for multi-angle feature engineering, then using a deep learning model for classification, reflecting a strategy that combines feature engineering with deep learning.

- CNN-BAG [28]. This method combines deep learning with ensemble learning. It uses CNN-based deep neural networks as base classifiers to automatically extract and learn shilling attack features. It then employs the Bagging ensemble strategy to improve overall detection performance and robustness. This method represents a research direction that combines the powerful feature representation capabilities of deep learning with the advantages of ensemble learning (e.g., improving stability and generalization) to enhance detection effectiveness.

- CDDPA [33]. This is an advanced GCN-based framework for anomalous user detection. Using teacher–student knowledge distillation and contrastive learning, it learns node representations directly from the user–item interaction graph to identify users with atypical topological connection patterns. It is included as a baseline to benchmark our method against a model specifically designed to exploit graph topology.

4.4. Parameter Settings and Evaluation Metrics

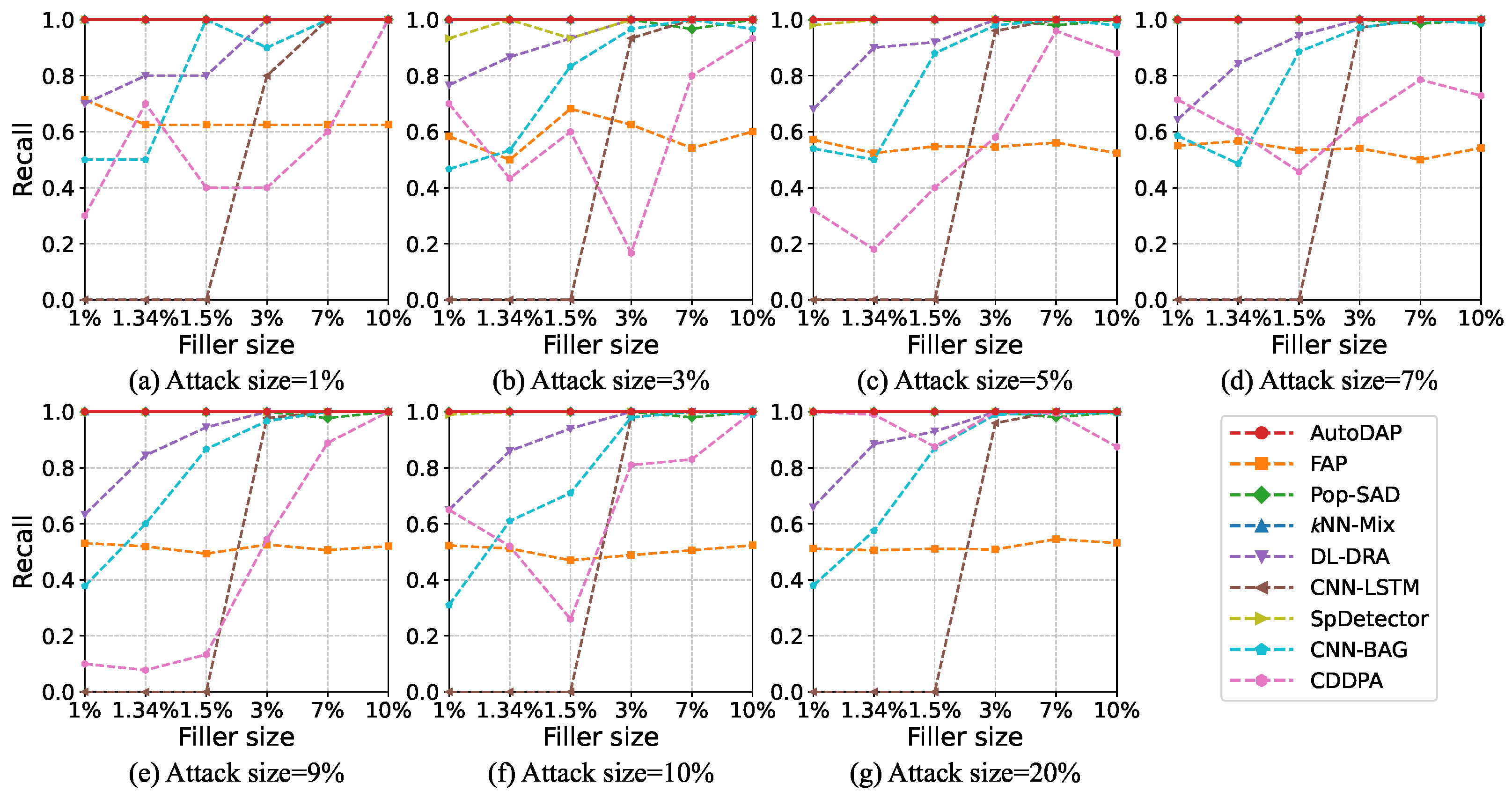

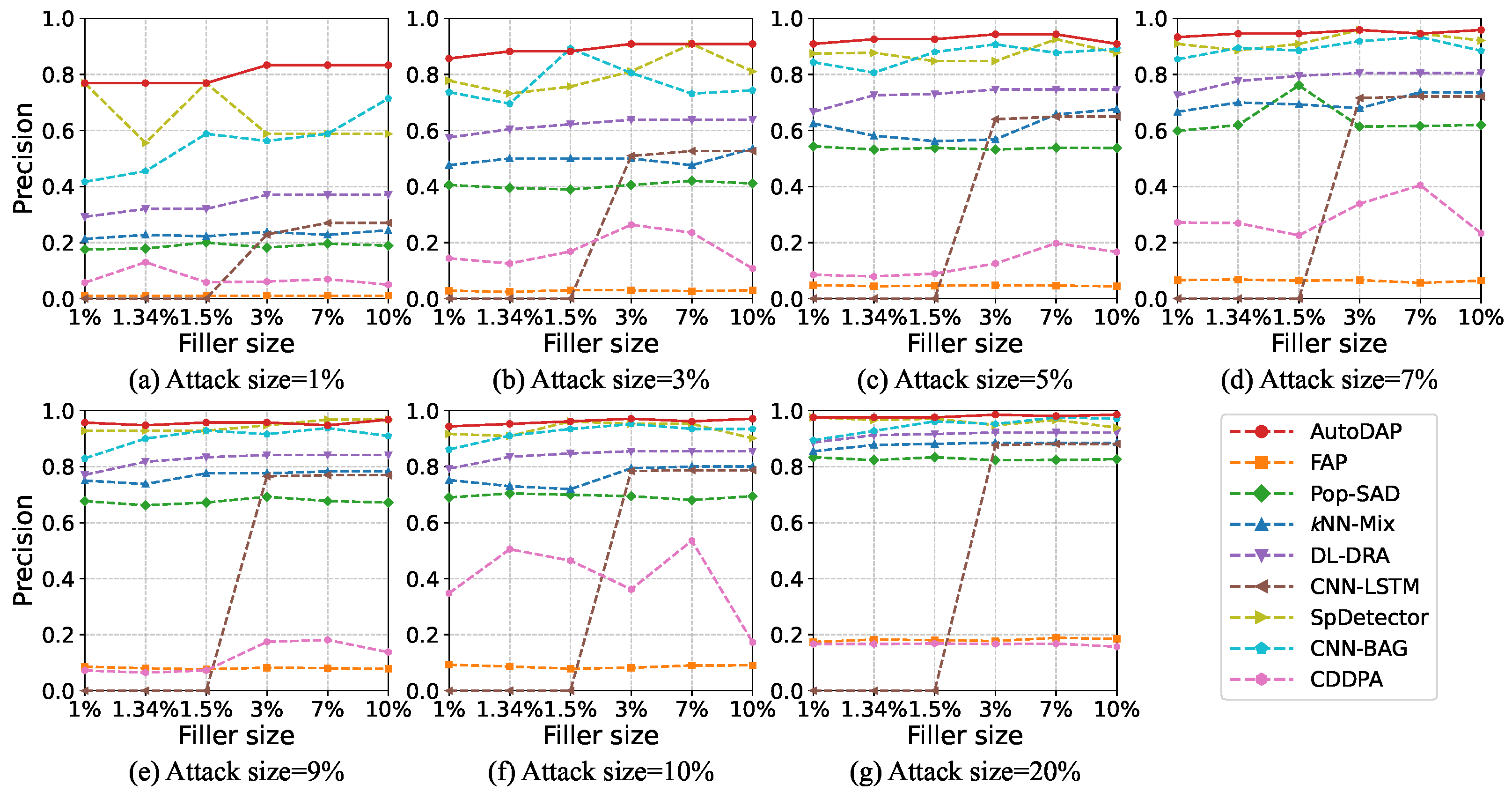

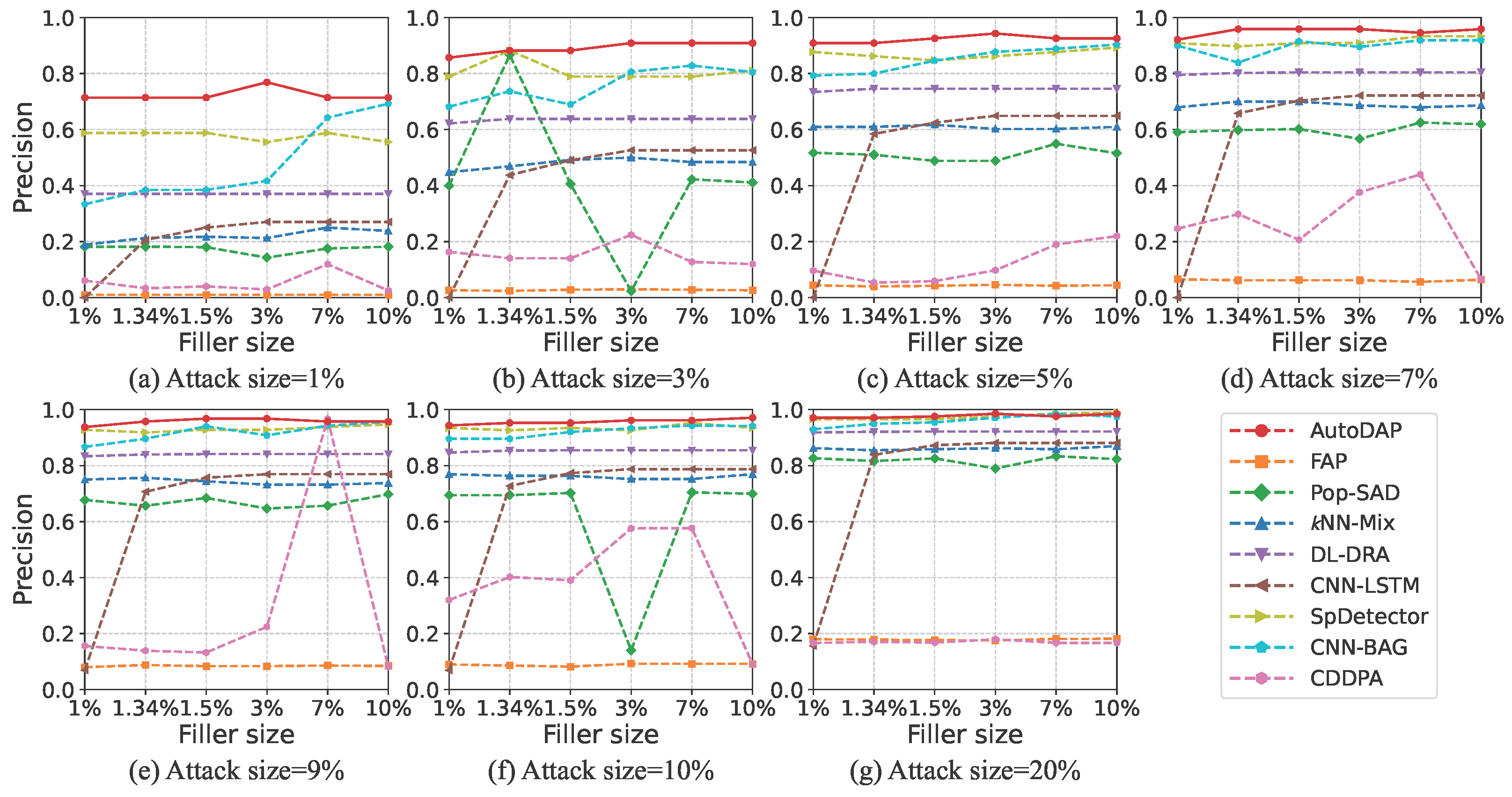

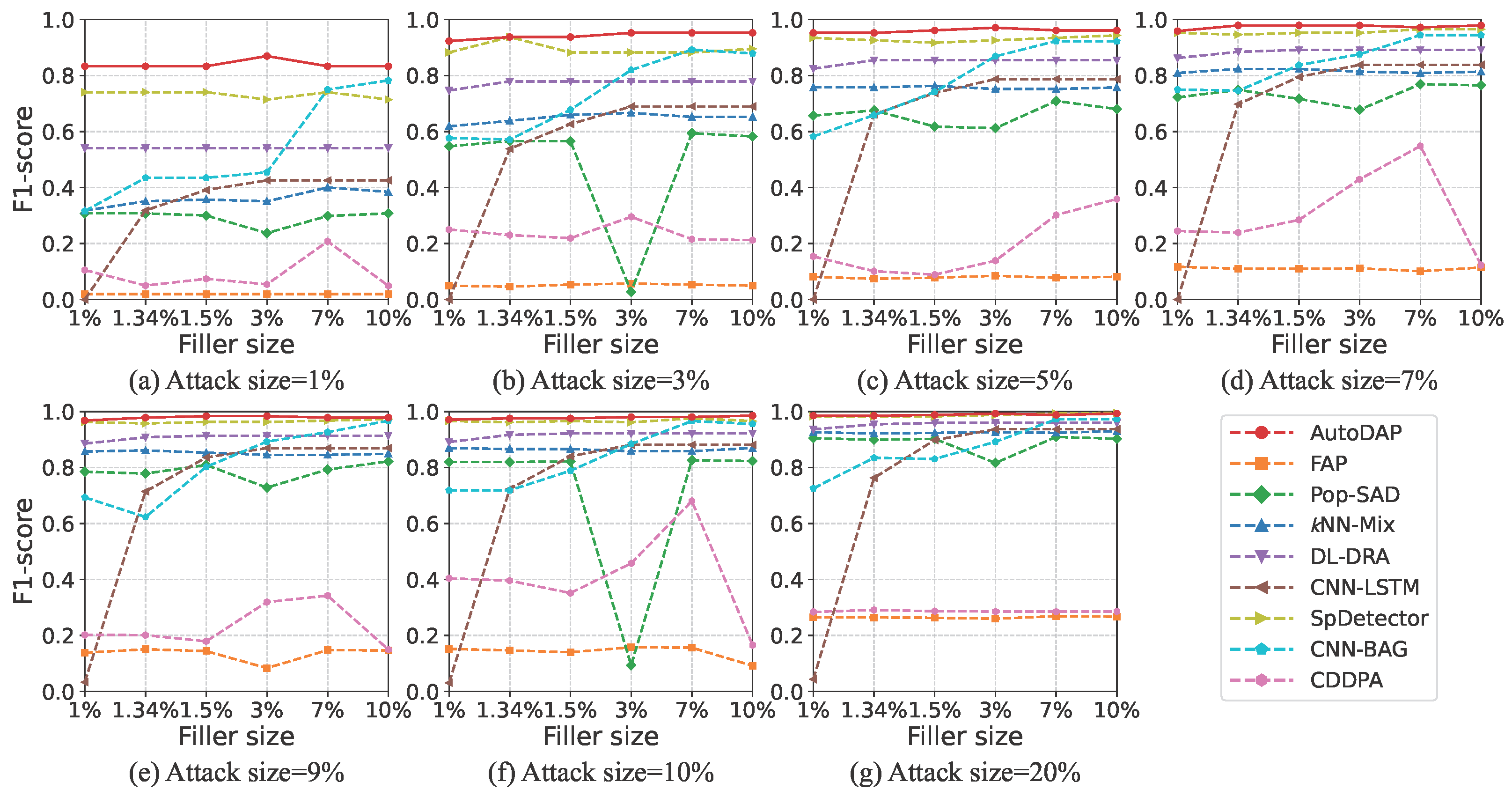

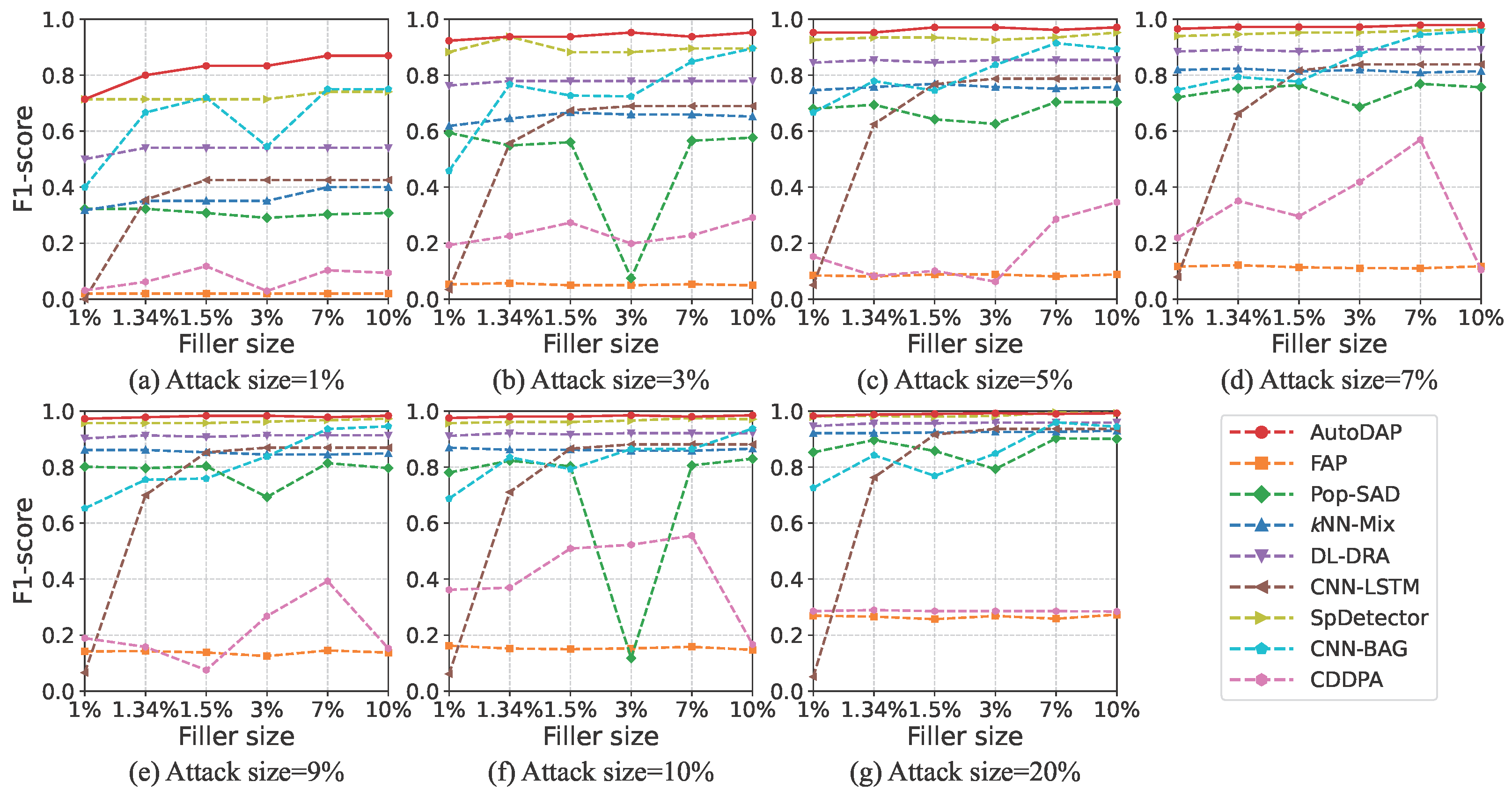

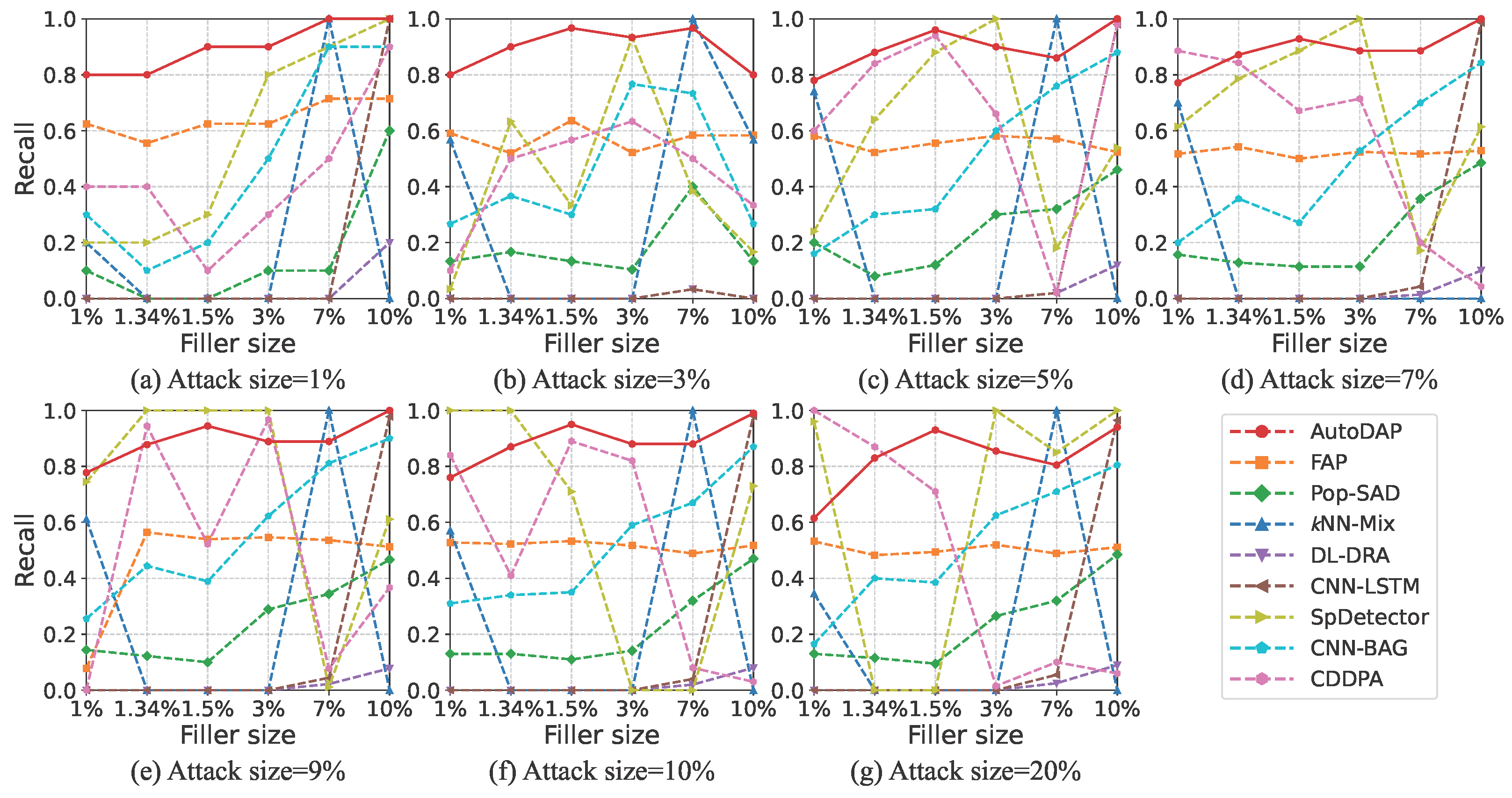

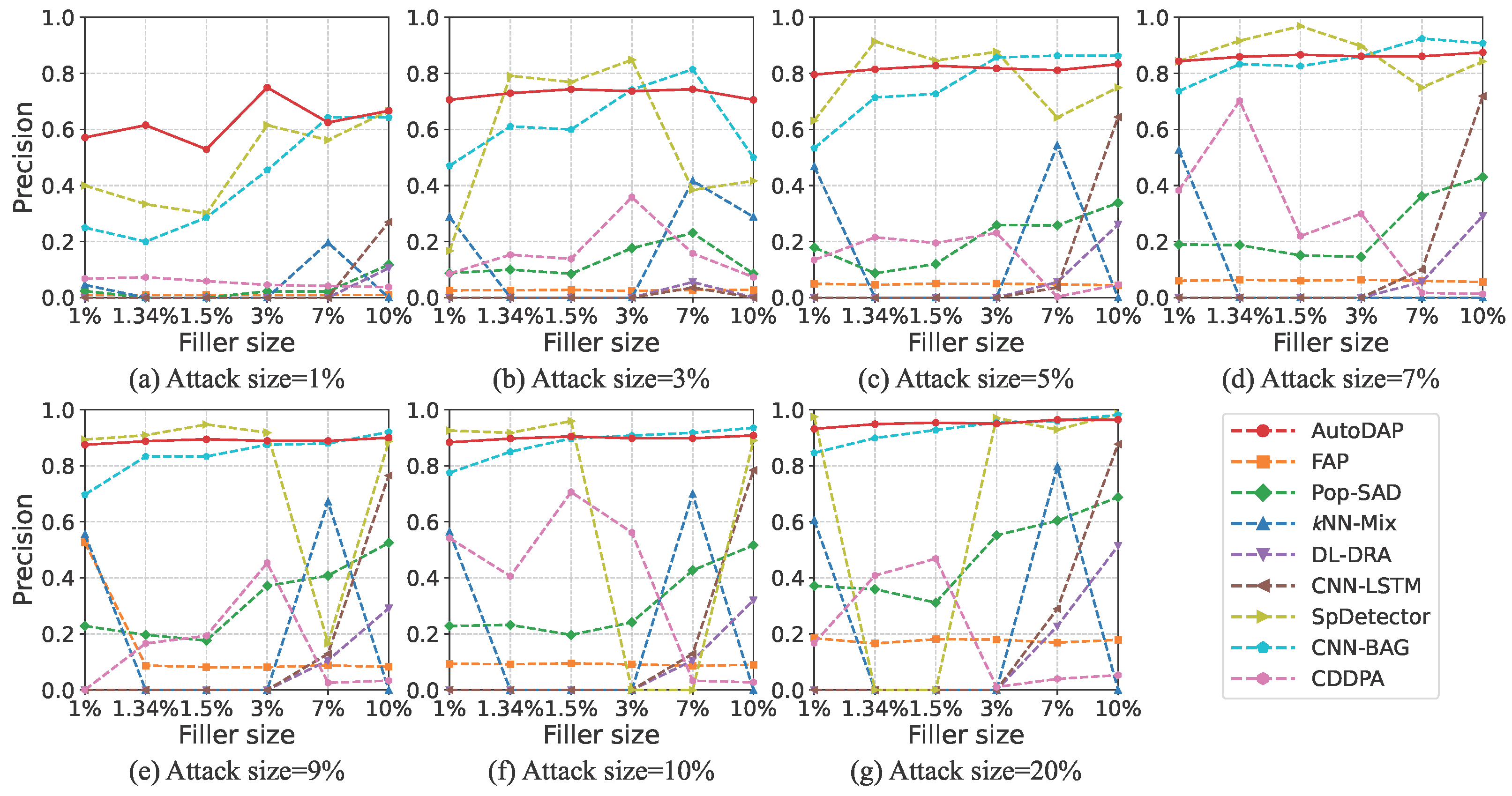

4.5. Experimental Comparison on MovieLens-10M Dataset

4.5.1. Hyperparameter Optimization

4.5.2. Detection Results

4.6. Experimental Comparison on Amazon Dataset

4.6.1. Hyperparameter Optimization

4.6.2. Detection Results

4.7. Ablation Study

4.8. In-Depth Analysis

- How robust is the model to key hyperparameters?

- Is the model’s performance stable against random data perturbations?

- What are the performance limits when facing state-of-the-art, highly stealthy attacks?

- Can the attack pattern recognition ability learned from a source domain generalize to a new target domain?

- How does the model perform in terms of computational efficiency and scalability in practical applications?

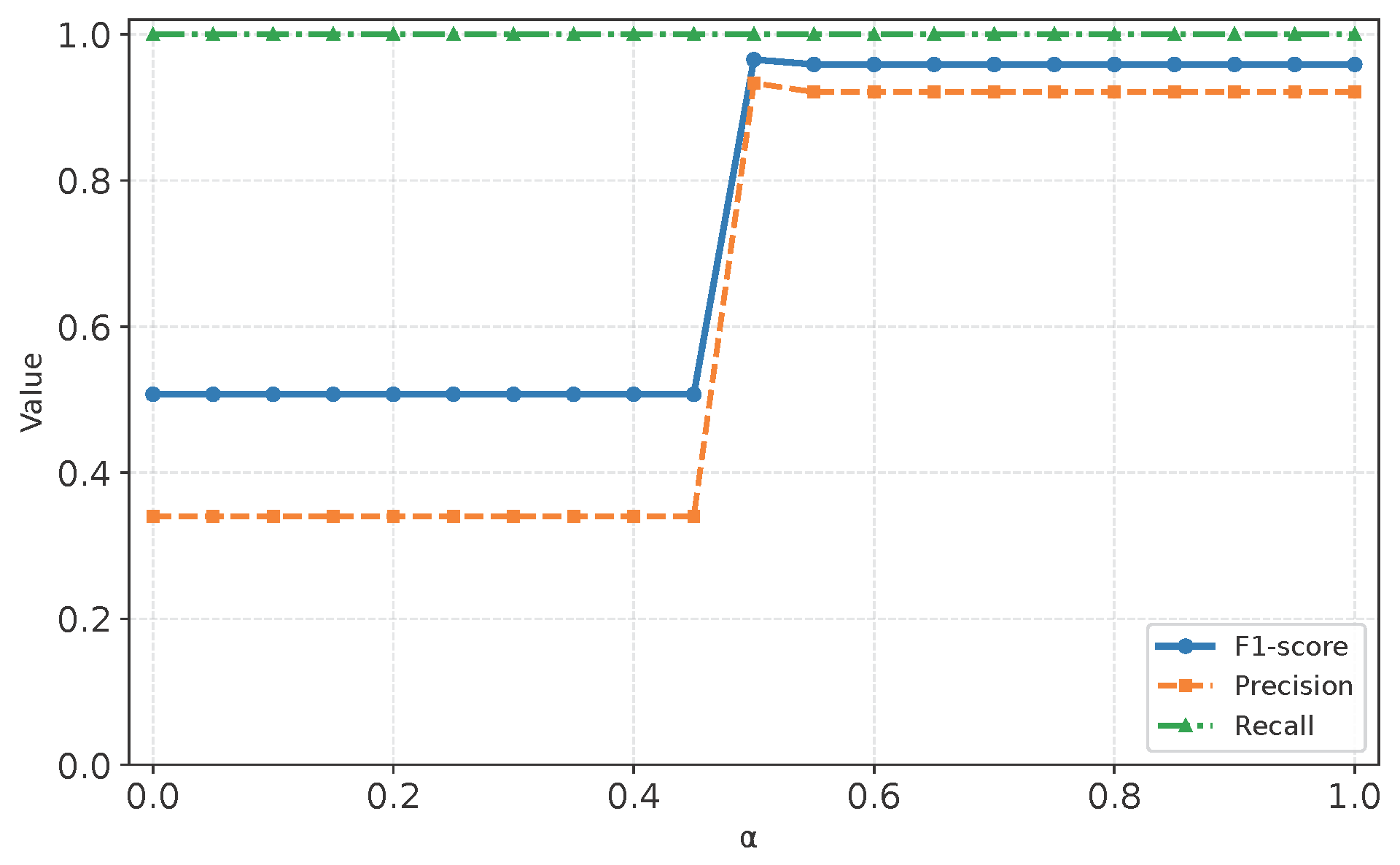

4.8.1. Sensitivity Analysis

4.8.2. Stability Analysis

4.8.3. Analysis of the Highly Stealthy InfoAtk Attack

4.8.4. Cross-Domain Generalization Analysis

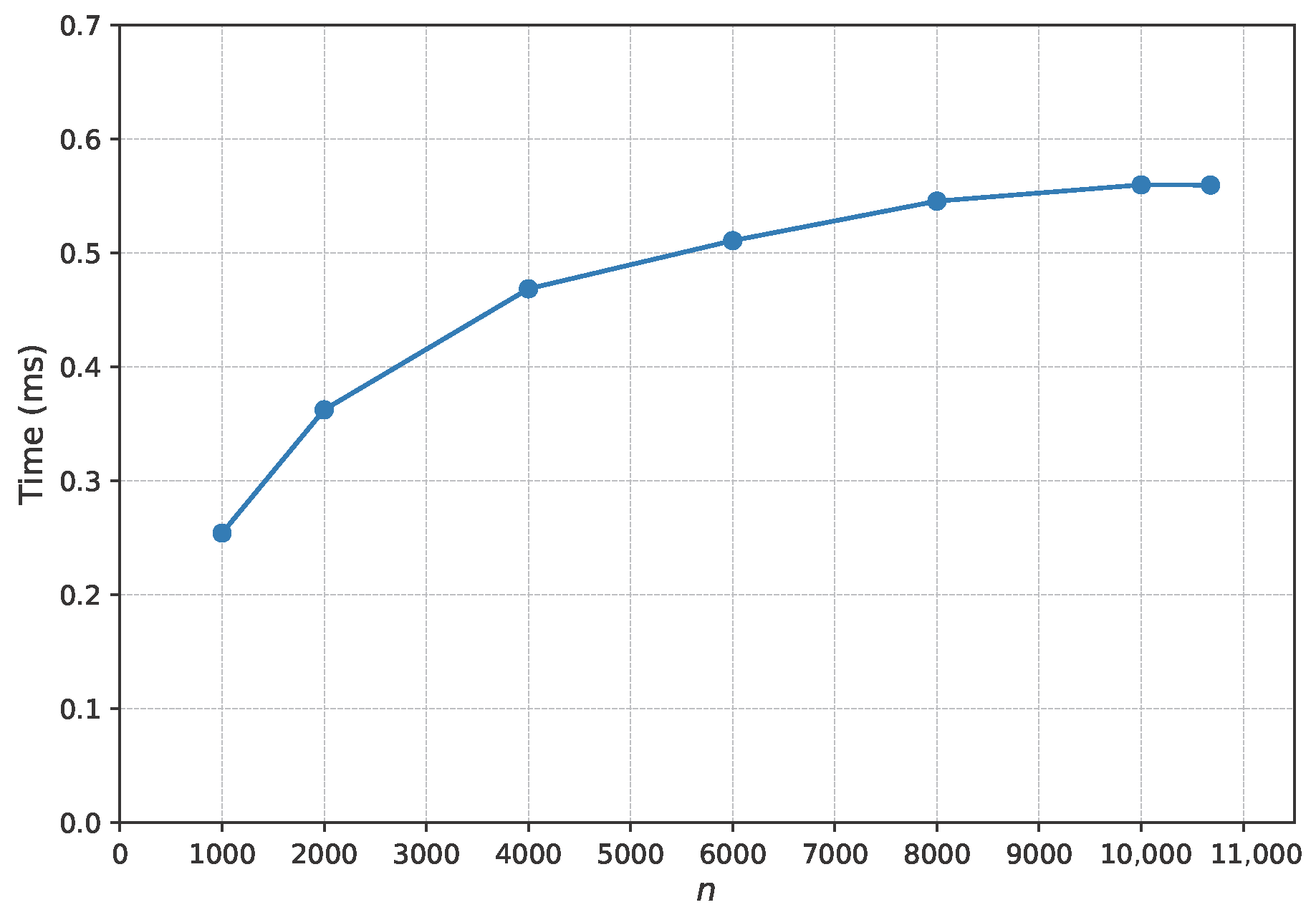

4.8.5. Computational Efficiency and Scalability Analysis

- (1) Exceptional Real-Time Performance: Across all tested scales, even when the number of items exceeds ten thousand, the total average per-user overhead, including all preprocessing, remains below 0.6 milliseconds. This sub-millisecond latency is well below the tens of milliseconds typically required of online services, which supports the model's ability to meet real-time detection needs.

- (2) Strong Scalability: The total latency grows sub-linearly as the number of items increases. The slope of the curve flattens as the item count grows, indicating that the marginal time cost of each additional item decreases as the system scales up. This demonstrates good scalability.

4.9. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Sheng, Z.; Wei, L. HS-SocialRec: A Study on Boosting Social Recommendations with Hard Negative Sampling in LightGCN. Information 2025, 16, 422.

- Huang, J.; Xie, Z.; Zhang, H.; Yang, B.; Di, C.; Huang, R. Enhancing Knowledge-Aware Recommendation with Dual-Graph Contrastive Learning. Information 2024, 15, 534.

- Nguyen, T.T.; Nguyen, Q.V.H.; Nguyen, T.T.; Huynh, T.T.; Nguyen, T.T.; Weidlich, M.; Yin, H. Manipulating Recommender Systems: A Survey of Poisoning Attacks and Countermeasures. ACM Comput. Surv. 2024, 57, 3.

- Lam, S.K.; Riedl, J. Shilling Recommender Systems for Fun and Profit. In Proceedings of the 13th International Conference on World Wide Web, New York, NY, USA, 17–20 May 2004; Feldman, S., Uretsky, M., Najork, M., Wills, C., Eds.; ACM: New York, NY, USA, 2004; pp. 393–402.

- Nawara, D.; Aly, A.; Kashef, R. Shilling Attacks and Fake Reviews Injection: Principles, Models, and Datasets. IEEE Trans. Comput. Soc. Syst. 2024, 12, 362–375.

- Burke, R.; Mobasher, B.; Zabicki, R.; Bhaumik, R. Identifying Attack Models for Secure Recommendation. In Beyond Personalization; Setten, M.V., McNee, S.M., Konstan, J.A., Terveen, L., Ardissono, L., Herlocker, J., Smyth, B., Nijholt, A., Eds.; IUI’05: San Diego, CA, USA, 2005; pp. 347–361. Available online: http://www.grouplens.org/beyond2005/papers.html (accessed on 14 November 2025).

- Fang, M.; Yang, G.; Gong, N.Z.; Liu, J. Poisoning Attacks to Graph-Based Recommender Systems. In Proceedings of the 34th Annual Computer Security Applications Conference, San Juan, PR, USA, 3–7 December 2018; Caballero, J., Gu, G., Eds.; ACM: New York, NY, USA, 2018; pp. 381–392.

- Nguyen, T.T.; Quach, N.D.K.; Nguyen, T.T.; Huynh, T.T.; Vu, V.H.; Nguyen, P.L.; Jo, J.; Nguyen, Q.V.H. Poisoning GNN-based Recommender Systems with Generative Surrogate-based Attacks. ACM Trans. Inf. Syst. 2023, 41, 58.

- Guo, S.; Bai, T.; Deng, W. Targeted Shilling Attacks on GNN-based Recommender Systems. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, Birmingham, UK, 21–25 October 2023; Frommholz, I., Hopfgartner, F., Lee, M., Oakes, M., Lalmas, M., Zhang, M., Santos, R., Eds.; ACM: New York, NY, USA, 2023; pp. 649–658.

- Ma, H.; Gao, M.; Wei, F.; Wang, Z.; Jiang, F.; Zhao, Z.; Yang, Z. Stealthy Attack on Graph Recommendation System. Expert Syst. Appl. 2024, 255, 124476.

- Chirita, P.A.; Nejdl, W.; Zamfir, C. Preventing Shilling Attacks in Online Recommender Systems. In Proceedings of the 7th Annual ACM International Workshop on Web Information and Data Management, New York, NY, USA, 4 November 2005; Bonifati, A., Lee, D., Eds.; ACM: New York, NY, USA, 2005; pp. 67–74.

- Mehta, B.; Hofmann, T.; Fankhauser, P. Lies and propaganda: Detecting Spam Users in Collaborative Filtering. In Proceedings of the 12th International Conference on Intelligent User Interfaces, Honolulu, HI, USA, 28–31 January 2007; ACM: New York, NY, USA, 2007; pp. 14–21.

- Zhang, Y.; Tan, Y.; Zhang, M.; Liu, Y.; Chua, T.S.; Ma, S. Catch the Black Sheep: Unified Framework for Shilling Attack Detection Based on Fraudulent Action Propagation. In Proceedings of the 24th International Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015; Yang, Q., Wooldridge, M., Eds.; AAAI Press: Menlo Park, CA, USA, 2015; pp. 2408–2414. Available online: https://dl.acm.org/doi/10.5555/2832581.2832585 (accessed on 14 November 2025).

- Zhang, F.; Zhang, Z.; Zhang, P.; Wang, S. UD-HMM: An Unsupervised Method for Shilling Attack Detection Based on Hidden Markov Model and Hierarchical Clustering. Knowl.-Based Syst. 2018, 148, 146–166.

- Cai, H.; Zhang, F. BS-SC: An Unsupervised Approach for Detecting Shilling Profiles in Collaborative Recommender Systems. IEEE Trans. Knowl. Data Eng. 2019, 33, 1375–1388.

- Zhang, F.; Chan, P.P.K.; He, Z.M.; Yeung, D.S. Unsupervised Contaminated User Profile Identification Against Shilling Attack in Recommender System. Intell. Data Anal. 2024, 28, 1411–1426.

- Williams, C.A.; Mobasher, B.; Burke, R. Defending Recommender Systems: Detection of Profile Injection Attacks. Serv. Oriented Comput. Appl. 2007, 1, 157–170.

- Zhou, W.; Wen, J.; Xiong, Q.; Gao, M.; Zeng, J. SVM-TIA: A Shilling Attack Detection Method Based on SVM and Target Item Analysis in Recommender Systems. Neurocomputing 2016, 210, 197–205.

- Li, W.; Gao, M.; Li, H.; Zeng, J.; Xiong, Q.; Hirokawa, S. Shilling Attack Detection in Recommender Systems Via Selecting Patterns Analysis. IEICE Trans. Inf. Syst. 2016, 99, 2600–2611.

- Kaya, T.T.; Yalcin, E.; Kaleli, C. A Novel Classification-based Shilling Attack Detection Approach for Multi-criteria Recommender Systems. Comput. Intell. 2023, 39, 499–528.

- Gambhir, S.; Dhawan, S.; Singh, K. Enhancing Recommendation Systems with Skew Deviation Bias for Shilling Attack Detection. Recent Adv. Electr. Electron. Eng. 2025, 18, 212–233.

- Wu, Z.; Wu, J.; Cao, J.; Tao, D. HySAD: A Semi-Supervised Hybrid Shilling Attack Detector for Trustworthy Product Recommendation. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012; Yang, Q., Agarwal, D., Pei, J., Eds.; ACM: New York, NY, USA, 2012; pp. 985–993.

- Zhou, Q.; Duan, L. Semi-supervised Recommendation Attack Detection Based on Co-Forest. Comput. Secur. 2021, 109, 102390.

- Tong, C.; Yin, X.; Li, J.; Zhu, T.; Lv, R.; Sun, L.; Rodrigues, J.J. A Shilling Attack Detector Based on Convolutional Neural Network for Collaborative Recommender System in Social Aware Network. Comput. J. 2018, 61, 949–958.

- Zhou, Q.; Wu, J.; Duan, L. Recommendation Attack Detection Based on Deep Learning. J. Inf. Secur. Appl. 2020, 52, 102493.

- Ebrahimian, M.; Kashef, R. Detecting Shilling Attacks Using Hybrid Deep Learning Models. Symmetry 2020, 12, 1805.

- Li, H.; Gao, M.; Zhou, F.; Zhou, F.; Wang, Y.; Fan, Q.; Yang, L. Fusing Hypergraph Spectral Features for Shilling Attack Detection. J. Inf. Secur. Appl. 2021, 63, 103051.

- Zhou, Q.; Huang, C. A Recommendation Attack Detection Approach Integrating CNN with Bagging. Comput. Secur. 2024, 146, 104030.

- Zhang, Y.; Hao, Q.; Zheng, W.; Xiao, Y. User Similarity-based Graph Convolutional Neural Network for Shilling Attack Detection. Appl. Intell. 2025, 55, 340.

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A.; Bottou, L. Stacked Denoising Autoencoders: Learning Useful Representations In a Deep Network with a Local Denoising Criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408. Available online: https://dl.acm.org/doi/10.5555/1756006.1953039 (accessed on 14 November 2025).

- Li, P.; Pei, Y.; Li, J. A Comprehensive Survey on Design and Application of Autoencoder in Deep Learning. Appl. Soft Comput. 2023, 138, 110176.

- Hartono, P. Mixing Autoencoder with Classifier: Conceptual Data Visualization. IEEE Access 2020, 8, 105301–105310.

- Wang, Z.; Song, W.; Zhang, P.; Ma, R.; Zhang, F. Cross-distillation-based approach for detecting poisoning attacks in recommender systems. J. Intell. Inf. Syst. 2025, 63, 2079–2107.

- Sarridis, I.; Kotropoulos, C. Neural Factorization Applied to Interaction Matrix for Recommendation. In Proceedings of the 2021 29th European Signal Processing Conference (EUSIPCO), Dublin, Ireland, 23–27 August 2021; IEEE: New York, NY, USA, 2021; pp. 1336–1340.

- Fei, N.; Gao, Y.; Lu, Z.; Xiang, T. Z-Score Normalization, Hubness, and Few-Shot Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; IEEE: New York, NY, USA, 2021; pp. 142–151. Available online: https://ieeexplore.ieee.org/document/9710829 (accessed on 14 November 2025).

- Tolstikhin, I.O.; Houlsby, N.; Kolesnikov, A.; Beyer, L.; Zhai, X.; Unterthiner, T.; Yung, J.; Steiner, A.; Keysers, D.; Uszkoreit, J. MLP-mixer: An All-MLP Architecture for Vision. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2021; pp. 32–58. Available online: https://proceedings.neurips.cc/paper_files/paper/2021/file/cba0a4ee5ccd02fda0fe3f9a3e7b89fe-Paper.pdf (accessed on 14 November 2025).

- Zhang, Z.; Sabuncu, M. Generalized Cross Entropy Loss for Training Deep Neural Networks with Noisy Labels. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2018; Available online: https://proceedings.neurips.cc/paper_files/paper/2018/file/f2925f97bc13ad2852a7a551802feea0-Paper.pdf (accessed on 14 November 2025).

- Kosson, A.; Messmer, B.; Jaggi, M. Rotational Equilibrium: How Weight Decay Balances Learning Across Neural Networks. arXiv 2024, arXiv:2305.17212.

- Chicco, D.; Warrens, M.J.; Jurman, G. The Coefficient of Determination R-squared Is More Informative Than SMAPE, MAE, MAPE, MSE and RMSE in Regression Analysis Evaluation. PeerJ Comput. Sci. 2021, 7, e623.

- Harper, F.M.; Konstan, J.A. The MovieLens Datasets: History and Context. ACM Trans. Interact. Intell. Syst. 2015, 5, 19.

- Xu, C.; Zhang, J.; Chang, K.; Long, C. Uncovering Collusive Spammers in Chinese Review Websites. In Proceedings of the 22nd ACM International Conference on Information & Knowledge Management, San Francisco, CA, USA, 27 October–1 November 2013; He, Q., Iyengar, A., Nejdl, W., Pei, J., Rastogi, R., Eds.; ACM: New York, NY, USA, 2013; pp. 979–988.

- Bergstra, J.; Bengio, Y. Random Search for Hyper-parameter Optimization. J. Mach. Learn. Res. 2012, 13, 281–305. Available online: https://dl.acm.org/doi/abs/10.5555/2188385.2188395 (accessed on 14 November 2025).

- Sarvade, V.P.; Kulkarni, S.A.; Raj, C.V. A Hybrid Classical-Quantum Neural Network Model for DDoS Attack Detection in Software-Defined Vehicular Networks. Information 2025, 16, 722.

| Dataset | Users | Items | Ratings | Rating Range | Filler Ratio | Genuine Users | Attack Users |
|---|---|---|---|---|---|---|---|
| MovieLens-10M | 71,567 | 10,681 | 10,000,054 | [0.5–5] | 1.34% | 71,567 | 0 |
| Amazon | 4902 | 16,885 | 51,346 | [1–5] | 0.06% | 2995 | 1907 |

| Attack Model | Genuine Users | Filler Size 1% | Filler Size 1.5% | Filler Size 3% | Filler Size 7% | Filler Size 10% |
|---|---|---|---|---|---|---|
| OGPAttack | 1000 | 10 | 10 | 10 | 10 | 10 |
| GSPAttack | 1000 | 10 | 10 | 10 | 10 | 10 |
| AutoAttack | 1000 | 10 | 10 | 10 | 10 | 10 |
| InfoAtk | 1000 | 10 | 10 | 10 | 10 | 10 |

| Attack Model | Genuine Users | Filler Size 1% | Filler Size 1.5% | Filler Size 3% | Filler Size 7% | Filler Size 10% |
|---|---|---|---|---|---|---|
| OGPAttack | 1000 | 10 | 10 | 10 | 10 | 10 |
| GSPAttack | 1000 | 10 | 10 | 10 | 10 | 10 |
| AutoAttack | 1000 | 10 | 10 | 10 | 10 | 10 |
| InfoAtk | 1000 | 10 | 10 | 10 | 10 | 10 |

| Dataset Type | Genuine Users | Attack Users |
|---|---|---|
| Training Set | 1500 | 1000 |
| Validation Set | 495 | 407 |
| Test Set | 1000 | 500 |

| Dataset | Attack Model | Recall (without statistical features) | Precision (without statistical features) | F1-Score (without statistical features) | Recall (with statistical features) | Precision (with statistical features) | F1-Score (with statistical features) |
|---|---|---|---|---|---|---|---|
| MovieLens-10M | OGPAttack | 1.0000 | 0.9459 | 0.9722 | 1.0000 | 0.9589 | 0.9790 |
| MovieLens-10M | GSPAttack | 1.0000 | 0.9452 | 0.9718 | 1.0000 | 0.9589 | 0.9790 |
| MovieLens-10M | AutoAttack | 1.0000 | 0.9459 | 0.9722 | 1.0000 | 0.9459 | 0.9722 |
| MovieLens-10M | InfoAtk | 0.6286 | 0.9362 | 0.7521 | 0.8857 | 0.8611 | 0.8732 |
| Amazon | Identified attack user | 0.9380 | 0.9214 | 0.9296 | 0.9380 | 0.9269 | 0.9324 |
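As a worked check on how each F1-score in this table follows from its precision P and recall R (their harmonic mean), take InfoAtk on MovieLens-10M with statistical features:

$$F_1 = \frac{2PR}{P + R} = \frac{2 \times 0.8611 \times 0.8857}{0.8611 + 0.8857} = \frac{1.5254}{1.7468} \approx 0.8732$$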

| Attack Size | Precision (Mean ± Std) | Recall (Mean ± Std) | F1-Score (Mean ± Std) |
|---|---|---|---|
| 1% | | | |
| 3% | | | |
| 5% | | | |
| 7% | | | |
| 9% | | | |
| 10% | | | |
| 20% | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, Q.; Zhao, X.; Zhang, X. Autoencoder-Based Poisoning Attack Detection in Graph Recommender Systems. Information 2025, 16, 1004. https://doi.org/10.3390/info16111004
