Mining User Perspectives: Multi Case Study Analysis of Data Quality Characteristics

Abstract

1. Introduction

2. Background

2.1. DQ Assessment Frameworks and Methods

2.2. Consumer-Centric Governance in Data Platforms

2.3. Monetisation Models and Consumer Participation

2.4. Clinical and Business Protocols: Lessons for DQ

3. Materials and Methods

3.1. Case Selection and Participant Profile

- Regular interaction with third-party or external datasets.
- Active use of data for decision-making, modelling, or product development.
- Organisational responsibility for data-related strategy, integration, or evaluation.

- Computer Science and Technology
- Financial Services and Banking
- ESG Consulting and Workplace Strategy
- Urban Infrastructure and Transport Analytics
- Public Research and Statistical Modelling

3.2. Data Collection

- Definitions and relevance of DQ for business goals.
- Challenges in sourcing, cleaning, and integrating datasets.
- Expectations from data providers and platforms.
- Views on metadata, documentation, and standards.
- Perceptions of pricing models, licensing, and monetisation strategies.
- Trust mechanisms, validation practices, and legal concerns in data sharing.

Informed Consent Statement and Approvals

3.3. Data Analysis

- Inductive codes are derived from participant narratives, including terms and concerns specific to the Indian data ecosystem, such as ‘data freshness’, ‘lineage visibility’, ‘manual cleaning burden’, ‘version control gaps’, and ‘APIs without schema’.

- the number of participants from each domain who discussed the theme,
- the depth of participant engagement (detail, multi-point extracts), and
- the diversity of concerns (number of distinct sub-themes or sub-codes addressed).
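
The three criteria above can be read as a simple scoring rule for assigning the High, Medium, and Low ratings reported in the Results tables. The following Python sketch shows one such rule. It is illustrative only: numeric thresholds are not reported here, so the `SectorEvidence` record, the scoring functions, and every cut-off are hypothetical assumptions rather than the procedure actually used in this study.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SectorEvidence:
    """Coded evidence for one theme within one sector (hypothetical record)."""
    sector: str
    participants: set[str]  # IDs of participants who raised the theme
    extracts: int           # number of coded extracts from those participants
    subcodes: set[str] = field(default_factory=set)  # distinct sub-codes touched


def breadth_score(ev: SectorEvidence) -> int:
    """Criterion 1: how many participants from the sector discussed the theme."""
    n = len(ev.participants)
    return 2 if n >= 3 else 1 if n == 2 else 0


def depth_score(ev: SectorEvidence) -> int:
    """Criterion 2: depth, approximated here as extracts per participant."""
    if not ev.participants:
        return 0
    per_participant = ev.extracts / len(ev.participants)
    return 2 if per_participant >= 2 else 1 if per_participant > 1 else 0


def diversity_score(ev: SectorEvidence) -> int:
    """Criterion 3: number of distinct sub-codes addressed."""
    k = len(ev.subcodes)
    return 2 if k >= 3 else 1 if k == 2 else 0


def rate(ev: SectorEvidence) -> str:
    """Sum the three equally weighted scores (0-6) and map them to a rating band."""
    total = breadth_score(ev) + depth_score(ev) + diversity_score(ev)
    if total >= 5:  # hypothetical cut-off
        return "High"
    if total >= 3:  # hypothetical cut-off
        return "Medium"
    return "Low"
```

For example, `rate(SectorEvidence("Sustainability", {"P9", "P23"}, extracts=3, subcodes={"cleanliness", "triangulation"}))` scores 1 on each criterion and returns `"Medium"` (toy values, not the coded data). Summing equally weighted criteria keeps the rule transparent; a weighted or rubric-based scheme would be equally defensible.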

4. Results

4.1. Fit-for-Purpose Quality: Needs Expressed by Data Consumers

| Statement | Participant |
|---|---|
| “If even if, let’s say the accuracy is low, however, we measure the accuracy as long as they help in making business decisions.” | AI Startup (P19) |
| “I think accuracy and newness of data matters because what happens is like there is data available, but those surveys have been done in 2020. Know how relevant they are in 2023 is questionable.” | Mental Health Startup (P20) |
| “There needs to be some reasonable level of accuracy, but the accuracy need not be 100% as long as the data is good enough to generate business decisions, that’s fine.” | AI Startup (P19) |
| “In the open source systems, not really the paid version because the paid version didn’t have all the variety… when we want to buy data, we have to see the quality.” | IT Consulting (P1) |
| “these are the basic things, to basically trust the data it has to be internally consistent.” | AI Startup (P19) |
| “If you take the names of districts in India, it should be consistent within the same data sources and across data sources.” | AI Startup (P19) |
| “Data validation is first thing… the context always changes with my digital footprint” | Technology Private Firm (P6) |
| “If it has been used widely, all of that adds to credibility… if you cannot trust the data then you can’t do anything from it.” | Oil and Gas Multinational (P24) |
| “Data comes in some file format which is not yet standardized. It could be Excel files without exact metadata about when it was collected or what standards it follows. There’s no description of the columns, inconsistent nomenclature, and the data is mostly static.” | Urban Transport Analytics (P7) |
| “World Wide Web Consortium has given guidelines on metadata standards… all G20 countries adopted it except India.” | Finance/Law Non-Profit (P18) |
| “The ability to look at how data has transformed from source till date… whole transformation lineage is critical.” | Independent Consultant (P4) |
| “Publishing data methodology or data cookbook along with the dataset.” | Finance/Law Non-Profit (P18) |
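
P19's point about district names can be made concrete: consistency within a source means one entity is always spelled the same way, and consistency across sources means the two sources share a vocabulary. A minimal Python sketch, assuming labels arrive as plain strings; the function names are hypothetical and the normalisation (case-folding and whitespace collapsing) is deliberately simple.

```python
from __future__ import annotations

import re
from collections import defaultdict


def normalise(label: str) -> str:
    """Case-fold and collapse whitespace so 'North  Goa ' and 'north goa' match."""
    return re.sub(r"\s+", " ", label).strip().casefold()


def spelling_variants(labels: list[str]) -> dict[str, set[str]]:
    """Within one source: normalised labels that occur under more than one raw spelling."""
    groups: dict[str, set[str]] = defaultdict(set)
    for raw in labels:
        groups[normalise(raw)].add(raw)
    return {key: spellings for key, spellings in groups.items() if len(spellings) > 1}


def cross_source_gaps(a: list[str], b: list[str]) -> tuple[set[str], set[str]]:
    """Across sources: normalised labels found only in `a` and only in `b`."""
    norm_a = {normalise(x) for x in a}
    norm_b = {normalise(x) for x in b}
    return norm_a - norm_b, norm_b - norm_a
```

With toy inputs, `cross_source_gaps(["Bengaluru Urban", "Mysuru"], ["Bengaluru  Urban", "Mysore"])` returns `({"mysuru"}, {"mysore"})`: the spacing difference is absorbed by normalisation, but the renamed district is not. This is why cross-source consistency ultimately depends on a shared reference list or alias table rather than on string cleaning alone.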

| Sector | Participants (IDs) | Key Needs/Issues |
|---|---|---|
| IT/Tech and AI | P1, P6, P10, P19, P22 | API documentation, accuracy for decisions, mission-critical data relevance |
| Finance and Analytics | P4, P5, P8, P14 | Data freshness, lineage tracking, real-time updates |
| Urban/Smart Cities | P7, P12, P16 | Standardized formats, metadata completeness, context-aware data |
| Healthcare | P17, P20, P25 | Historical data accuracy, medical subcode specificity |
| Energy/Oil and Gas | P11, P24 | Instrument reliability, credibility vs. real-world observations |
| Retail/Manufacturing | P15, P21 | Large-volume processing, label consistency (e.g., product sizes) |
| Sustainability | P9, P23 | Cross-departmental data cleanliness, triangulation validation |
| Banking/FinTech | P13 | Truthfulness verification, transaction data integrity |
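
The freshness concern raised by P20 (surveys from 2020 being used in 2023) amounts to comparing a dataset's collection date with the maximum age a given use case can tolerate. The Python sketch below assumes the provider publishes a collection date; the use-case names and age limits in `MAX_AGE_DAYS` are hypothetical placeholders, not values reported by participants.

```python
from __future__ import annotations

from datetime import date

# Hypothetical maximum acceptable age, in days, for each use case.
MAX_AGE_DAYS = {
    "real_time_traffic": 1,
    "quarterly_reporting": 120,
    "market_survey": 365,
}


def age_in_days(collected_on: date, as_of: date) -> int:
    """Days elapsed between data collection and the evaluation date."""
    return (as_of - collected_on).days


def is_fresh(collected_on: date, use_case: str, as_of: date) -> bool:
    """True if the data are no older than the policy for `use_case` allows."""
    return age_in_days(collected_on, as_of) <= MAX_AGE_DAYS[use_case]
```

With these placeholder limits, a survey collected on 30 June 2020 is 1095 days old on 30 June 2023 and fails the `market_survey` policy. Making the limit depend on the use case reflects the participants' view that freshness is judged relative to the decision at hand rather than in absolute terms.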

| Sector | Participants (IDs) | Depth/Diversity | Rating |
|---|---|---|---|
| IT/Tech and AI | P1, P6, P10, P19, P22 | Detailed, multi-point | High |
| Finance and Analytics | P4, P5, P8, P14 | Detailed, diverse issues | High |
| Urban/Smart Cities | P7, P12, P16 | Moderate | High |
| Healthcare | P17, P20, P25 | Moderate | High |
| Energy/Oil and Gas | P11, P24 | Brief | Low |
| Retail/Manufacturing | P15, P21 | Brief | Low |
| Sustainability | P9, P23 | Some diversity | Medium |
| Banking/FinTech | P13 | Brief | Low |

4.2. Usability and Format Frictions: Common Problems Encountered

| Statement | Participant |
|---|---|
| “Eighty percent of my team’s effort is spent cleaning data and making sure it is all right—so much time wasted.” | AI Startup (P19) |
| “Data cleaning takes up a lot of time.” | Software (P3) |
| “The data cleaning process is definitely there… more than 50% [of time] goes into data cleaning only.” | Financial Services (P8) |
| “Absence of data as well as missing information.” | IT Consulting (P1) |
| “I do not know whether it is an applicable data source or not… there are missing values.” | AI Startup (P19) |
| “Saving data in an organized format and handling multi-format data are some of the issues that we are facing when it comes to raw data handling.” | Banking (P13) |
| “Standardization is needed to scale and explore and move into the market quickly.” | Urban Transport Analytics (P7) |
| “The issue in most of the manufacturing area in India is that they’re not labelled the same way.” | Banking/FinTech (P25) |
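
The missing-value and multi-format complaints above are typically the first thing a consumer profiles when a dataset arrives. A minimal Python sketch of a per-column completeness check follows. It assumes the data have already been exported to CSV (Excel or other formats would need converting first), and the set of placeholder tokens treated as missing is an assumption that would differ between sources.

```python
import csv
import io

# Tokens treated as "missing" after trimming and case-folding; an assumption to tune per source.
MISSING_TOKENS = {"", "na", "n/a", "null", "none", "-"}


def missing_share(csv_text: str) -> dict[str, float]:
    """Return the fraction of missing cells in each column of a CSV document."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = {name: 0 for name in reader.fieldnames or []}
    rows = 0
    for row in reader:
        rows += 1
        for name in missing:
            value = row.get(name)  # None when a short row lacks this column
            if value is None or value.strip().casefold() in MISSING_TOKENS:
                missing[name] += 1
    return {name: (count / rows if rows else 0.0) for name, count in missing.items()}
```

On a three-row sample in which `population` is blank once and `"NA"` once, the function reports a missing share of 2/3 for that column. Treating placeholders such as `"NA"` or `"-"` as missing matters because, left as strings, they pass naive non-empty checks while still requiring manual cleaning.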

| Sector | Participants (IDs) | Common Frictions |
|---|---|---|
| IT/Tech and AI | P3, P10, P19 | 80% of effort spent cleaning data, missing values, formatting overheads |
| Finance and Data Science | P5, P8, P13, P14 | Multi-format chaos (CSV/Excel), manual noise reduction |
| Urban/Smart Cities | P7, P16 | Non-standardized files, static datasets without metadata |
| Healthcare | P17, P25 | Unfilled fields, inconsistent medical labels (e.g., “Size L” ambiguity) |
| Energy/Oil and Gas | P11 | Sensor drift, instrument calibration issues |
| Retail/Manufacturing | P15, P21 | Harmonization gaps (e.g., product IDs across vendors) |
| General Business | P1, P6, P9 | Duplicate data, lack of documentation |

| Sector | Participants (IDs) | Depth/Diversity | Rating |
|---|---|---|---|
| IT/Tech and AI | P3, P10, P19 | Heavy cleaning, missing values | High |
| Finance and Data Science | P5, P8, P13, P14 | Multi-format issues, manual fixes | High |
| Urban/Smart Cities | P7, P16 | Non-standardized files, metadata | Medium |
| Healthcare | P17, P25 | Unfilled fields, inconsistent labels | Medium |
| Energy/Oil and Gas | P11 | Sensor drift, calibration | Low |
| Retail/Manufacturing | P15, P21 | Harmonization gaps | Low |
| General Business | P1, P6, P9 | Duplication, lack of documentation | High |

4.3. Platform Requirements: Transparency, Traceability, and Governance

5. Discussion and Implications

5.1. DQ as a Consumer-Driven Construct

5.2. Platform Frictions: Usability as a Neglected Priority

5.3. The Central Role of Transparency and Governance

5.4. Toward Consumer-Centric Data Ecosystems

- Governance structures to allow users to shape DQ standards,
- Platform features to promote traceability, offer certification, and provide clear avenues for raising concerns,
- Transparent and flexible pricing systems to reflect demonstrable quality and relevance, and
- Sustained investments in documentation, metadata tools, and automated usability enhancements.
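
As one illustration of the traceability features listed above, a platform could attach to each dataset a version number, an append-only log of transformations, and a content checksum that consumers can recompute on receipt. The Python sketch below is hypothetical: the class and field names are not drawn from any specific platform or standard, although catalogue vocabularies such as DCAT cover related descriptive metadata.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class TransformationStep:
    """One entry in the lineage log (hypothetical structure)."""
    description: str   # what was done, e.g. "removed duplicate readings"
    performed_by: str  # person or job responsible
    at: datetime       # UTC timestamp of the change


@dataclass
class DatasetRecord:
    """Hypothetical platform record exposing version, lineage and a checksum."""
    title: str
    publisher: str
    source: str
    version: int = 0
    lineage: list[TransformationStep] = field(default_factory=list)
    checksum: str = ""

    def publish(self, content: bytes, description: str, actor: str) -> None:
        """Log the change, bump the version and fingerprint the new content."""
        self.lineage.append(
            TransformationStep(description, actor, datetime.now(timezone.utc))
        )
        self.version += 1
        self.checksum = hashlib.sha256(content).hexdigest()

    def verify(self, content: bytes) -> bool:
        """Consumer-side check that received bytes match the latest published version."""
        return hashlib.sha256(content).hexdigest() == self.checksum

    def history(self) -> list[str]:
        """Human-readable lineage, oldest change first."""
        return [
            f"v{i}: {step.description} ({step.performed_by})"
            for i, step in enumerate(self.lineage, start=1)
        ]
```

Keeping the log append-only means consumers can always see how the current version was derived from the source, which addresses the ‘lineage visibility’ and ‘version control gaps’ concerns, while the checksum gives a lightweight way to confirm that what was received is what was certified.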

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| CDISC | Clinical Data Interchange Standards Consortium |
| CEO | Chief Executive Officer |
| CODCA | Consumer-Oriented Data Control and Auditability |
| CTO | Chief Technology Officer |
| DCAT | Data Catalog Vocabulary |
| DQ | Data Quality |
| ESG | Environmental, Social, and Governance |
| IoT | Internet of Things |
| IT | Information Technology |
| ML | Machine Learning |
| OECD | Organisation for Economic Co-operation and Development |
| SLA | Service Level Agreement |
| SPIRIT 2013 | Standard Protocol Items: Recommendations for Interventional Trials 2013 |

Appendix A

| Dimension | Fisher and Kingma, 2001 [55] | Pipino et al., 2002 [13] | Parssian et al., 2004 [56] | Shankaranarayanan and Cai, 2006 [57] | Batini et al., 2009 [58] | Sidi et al., 2009 [59] | Even et al., 2010 [60] | Moges et al., 2013 [61] | Woodall et al., 2013 [62] | Behkamal et al., 2014 [12] | Becker et al., 2015 [63] | Batini et al., 2015 [64] | Rao et al., 2015 [65] | Guo and Liu, 2015 [66] | Taleb et al., 2016 [67] | Vetrò et al., 2016 [68] | Taleb and Serhani, 2017 [69] | Zhang et al., 2018 [33] | Jesiļevska, 2017 [70] | Färber et al., 2018 [71] | Ardagna et al., 2018 [72] | Cichy and Rass, 2019 [73] | Byabazaire et al., 2020 [74] | Gómez-Omella et al., 2022 [18] | Makhoul, 2022 [75] |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Part A | |||||||||||||||||||||||||
| Accessibility | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ||||||||||||||||
| Accuracy | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | |||||||
| Alignment | ✔ | ||||||||||||||||||||||||
| Believability | ✔ | ✔ | ✔ | ||||||||||||||||||||||
| Completeness | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ||||
| Compactness | ✔ | ||||||||||||||||||||||||
| Concise Representation | ✔ | ✔ | ✔ | ✔ | ✔ | ||||||||||||||||||||
| Correctness | ✔ | ||||||||||||||||||||||||
| Consistency | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | |||||||||||||
| Clarity | ✔ | ||||||||||||||||||||||||
| Cohesion | ✔ | ||||||||||||||||||||||||
| Confidentiality | ✔ | ||||||||||||||||||||||||
| Conformity | ✔ | ||||||||||||||||||||||||
| Distinctness | ✔ | ||||||||||||||||||||||||
| Ease of Manipulation | ✔ | ✔ | |||||||||||||||||||||||
| Free of Error | ✔ | ||||||||||||||||||||||||
| Interpretability | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ||||||||||||||||||
| Minimality | ✔ | ||||||||||||||||||||||||
| Objectivity | ✔ | ✔ | |||||||||||||||||||||||
| Part B | |||||||||||||||||||||||||
| Precision | ✔ | ✔ | ✔ | ✔ | |||||||||||||||||||||
| Pertinence | ✔ | ||||||||||||||||||||||||
| Pedigree | ✔ | ||||||||||||||||||||||||
| Relevance | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ||||||||||||||||||
| Reliability | ✔ | ✔ | |||||||||||||||||||||||
| Reputation | ✔ | ✔ | ✔ | ✔ | |||||||||||||||||||||
| Redundancy | ✔ | ||||||||||||||||||||||||
| Simplicity | ✔ | ||||||||||||||||||||||||
| Security | ✔ | ✔ | ✔ | ✔ | |||||||||||||||||||||
| Timeliness | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ||||||||||||
| Traceability | ✔ | ✔ | |||||||||||||||||||||||
| Trust | ✔ | ✔ | |||||||||||||||||||||||
| Understandability | ✔ | ✔ | ✔ | ✔ | |||||||||||||||||||||
| Usability | ✔ | ||||||||||||||||||||||||
| Uniqueness | ✔ | ✔ | ✔ | ||||||||||||||||||||||
| Value-Added | ✔ | ✔ | |||||||||||||||||||||||
| Validity | ✔ | ||||||||||||||||||||||||
| Volume | ✔ | ✔ | |||||||||||||||||||||||

References

- Wang, R.Y.; Strong, D.M. Beyond Accuracy: What Data Quality Means to Data Consumers. J. Manag. Inf. Syst. 1996, 12, 5–33.
- Reinsel, D.; Gantz, J.; Rydning, J. The Digitization of the World From Edge to Core; International Data Corporation: Framingham, MA, USA, 2018; Volume 16, pp. 1–28.
- Richter, H.; Slowinski, P.R. The Data Sharing Economy: On the Emergence of New Intermediaries. IIC Int. Rev. Intellect. Prop. Compet. Law 2019, 50, 4–29.
- Zhang, M.; Beltran, F.; Liu, J. A Survey of Data Pricing for Data Marketplaces. IEEE Trans. Big Data 2023, 9, 1038–1056.
- Koutroumpis, P.; Leiponen, A.; Thomas, L.D.W. Markets for Data. Ind. Corp. Change 2020, 29, 645–660.
- OECD. Smart City Data Governance; OECD: Paris, France, 2023; ISBN 9789264910201.
- Johnson, J.; Hevia, A.; Yergin, R.; Karbassi, S.; Levine, A. Data Governance Frameworks for Smart Cities: Key Considerations for Data Management and Use; Covington: Palo Alto, CA, USA, 2022; Volume 2022.
- Gerli, P.; Mora, L.; Neves, F.; Rocha, D.; Nguyen, H.; Maio, R.; Jansen, M.; Serale, F.; Albin, C.; Biri, F.; et al. World Smart Cities Outlook 2024; ESCAP: Bangkok, Thailand, 2024.
- Urbinati, A.; Bogers, M.; Chiesa, V.; Frattini, F. Creating and Capturing Value from Big Data: A Multiple-Case Study Analysis of Provider Companies. Technovation 2019, 84–85, 21–36.
- Ghosh, D.; Garg, S. India’s Data Imperative: The Pivot towards Quality; 2025. Available online: https://www.niti.gov.in/sites/default/files/2025-06/FTH-Quaterly-Insight-june.pdf (accessed on 27 August 2025).
- Batini, C.; Scannapieco, M. Data Quality: Concepts, Methodologies and Techniques; Springer: Berlin/Heidelberg, Germany, 2006.
- Behkamal, B.; Kahani, M.; Bagheri, E.; Jeremic, Z. A Metrics-Driven Approach for Quality Assessment of Linked Open Data. J. Theor. Appl. Electron. Commer. Res. 2014, 9, 64–79.
- Pipino, L.L.; Lee, Y.W.; Wang, R.Y. Data Quality Assessment. Commun. ACM 2002, 45, 211–218.
- Bovee, M.; Srivastava, R.P.; Mak, B. A Conceptual Framework and Belief-Function Approach to Assessing Overall Information Quality. Int. J. Intell. Syst. 2003, 18, 51–74.
- Ehrlinger, L.; Werth, B.; Wöß, W. Automated Continuous Data Quality Measurement with QuaIIe. Int. J. Adv. Softw. 2018, 11, 400–417.
- Chug, S.; Kaushal, P.; Kumaraguru, P.; Sethi, T. Statistical Learning to Operationalize a Domain Agnostic Data Quality Scoring. arXiv 2021, arXiv:2108.08905.
- Liu, Q.; Feng, G.; Tayi, G.K.; Tian, J. Managing Data Quality of the Data Warehouse: A Chance-Constrained Programming Approach. Inf. Syst. Front. 2021, 23, 375–389.
- Gómez-Omella, M.; Sierra, B.; Ferreiro, S. On the Evaluation, Management and Improvement of Data Quality in Streaming Time Series. IEEE Access 2022, 10, 81458–81475.
- Taleb, I.; Serhani, M.A.; Bouhaddioui, C.; Dssouli, R. Big Data Quality Framework: A Holistic Approach to Continuous Quality Management. J. Big Data 2021, 8, 76.
- Alwan, A.A.; Ciupala, M.A.; Brimicombe, A.J.; Ghorashi, S.A.; Baravalle, A.; Falcarin, P. Data Quality Challenges in Large-Scale Cyber-Physical Systems: A Systematic Review. Inf. Syst. 2022, 105, 101951.
- Sadiq, S.; Indulska, M. Open Data: Quality over Quantity. Int. J. Inf. Manag. 2017, 37, 150–154.
- Cai, L.; Zhu, Y. The Challenges of Data Quality and Data Quality Assessment in the Big Data Era. Data Sci. J. 2015, 14, 2.

- Abedjan, Z.; Golab, L.; Naumann, F. Data Profiling. In Proceedings of the 2017 ACM International Conference on Management of Data, Chicago, IL, USA, 14–19 May 2017; ACM: New York, NY, USA, 2017; pp. 1747–1751.
- Podobnikar, T. Bridging Perceived and Actual Data Quality: Automating the Framework for Governance Reliability. Geosciences 2025, 15, 117.
- Tapsell, J.; Akram, R.N.; Markantonakis, K. Consumer Centric Data Control, Tracking and Transparency—A Position Paper. In Proceedings of the 2018 17th IEEE International Conference on Trust, Security and Privacy in Computing and Communications/12th IEEE International Conference on Big Data Science and Engineering (TrustCom/BigDataSE), New York, NY, USA, 1–3 August 2018.
- Acev, D.; Biyani, S.; Rieder, F.; Aldenhoff, T.T.; Blazevic, M.; Riehle, D.M.; Wimmer, M.A. Systematic Analysis of Data Governance Frameworks and Their Relevance to Data Trusts. In Management Review Quarterly; Springer: Berlin/Heidelberg, Germany, 2025.
- Otto, B.; Jarke, M. Designing a Multi-Sided Data Platform: Findings from the International Data Spaces Case. Electron. Mark. 2019, 29, 561–580.
- Veltri, G.A.; Lupiáñez-Villanueva, F.; Folkvord, F.; Theben, A.; Gaskell, G. The Impact of Online Platform Transparency of Information on Consumers’ Choices. Behav. Public Policy 2023, 7, 55–82.
- Ducuing, C.; Reich, R.H. Data Governance: Digital Product Passports as a Case Study. Compet. Regul. Netw. Ind. 2023, 24, 3–23.
- Agahari, W.; Ofe, H.; de Reuver, M. It Is Not (Only) about Privacy: How Multi-Party Computation Redefines Control, Trust, and Risk in Data Sharing. Electron. Mark. 2022, 32, 1577–1602.
- Yu, H.; Zhang, M. Data Pricing Strategy Based on Data Quality. Comput. Ind. Eng. 2017, 112, 1–10.
- Yang, J.; Zhao, C.; Xing, C. Big Data Market Optimization Pricing Model Based on Data Quality. Complexity 2019, 2019, 1–10.
- Zhang, D.; Wang, H.; Ding, X.; Zhang, Y.; Li, J.; Gao, H. On the Fairness of Quality-Based Data Markets. arXiv 2018, arXiv:1808.01624.
- Pei, J. A Survey on Data Pricing: From Economics to Data Science. IEEE Trans. Knowl. Data Eng. 2022, 34, 4586–4608.
- Miao, X.; Peng, H.; Huang, X.; Chen, L.; Gao, Y.; Yin, J. Modern Data Pricing Models: Taxonomy and Comprehensive Survey. arXiv 2023, arXiv:2306.04945.
- Majumdar, R.; Gurtoo, A.; Malieckal, M. Developing a Data Pricing Framework for Data Exchange. Future Bus. J. 2025, 11, 4.
- Malieckal, M.; Gurtoo, A.; Majumdar, R. Data Pricing for Data Exchange: Technology and AI. In Proceedings of the 2024 7th Artificial Intelligence and Cloud Computing Conference, Tokyo, Japan, 14–16 December 2024; Association for Computing Machinery: New York, NY, USA, 2025; pp. 392–401.
- Chan, A.W.; Tetzlaff, J.M.; Gøtzsche, P.C.; Altman, D.G.; Mann, H.; Berlin, J.A.; Dickersin, K.; Hróbjartsson, A.; Schulz, K.F.; Parulekar, W.R.; et al. SPIRIT 2013 Explanation and Elaboration: Guidance for Protocols of Clinical Trials. BMJ 2013, 346, e7586.
- Juddoo, S.; George, C.; Duquenoy, P.; Windridge, D. Data Governance in the Health Industry: Investigating Data Quality Dimensions within a Big Data Context. Appl. Syst. Innov. 2018, 1, 43.
- Facile, R.; Muhlbradt, E.E.; Gong, M.; Li, Q.; Popat, V.; Pétavy, F.; Cornet, R.; Ruan, Y.; Koide, D.; Saito, T.I.; et al. Use of Clinical Data Interchange Standards Consortium (CDISC) Standards for Real-World Data: Expert Perspectives From a Qualitative Delphi Survey. JMIR Med. Inform. 2022, 10, e30363.
- Aggarwal, R.; Farag, S.; Martin, G.; Ashrafian, H.; Darzi, A. Patient Perceptions on Data Sharing and Applying Artificial Intelligence to Health Care Data: Cross-Sectional Survey. J. Med. Internet Res. 2021, 23, e26162.
- Miller, R.; Whelan, H.; Chrubasik, M.; Whittaker, D.; Duncan, P.; Gregório, J. A Framework for Current and New Data Quality Dimensions: An Overview. Data 2024, 9, 151.
- Etheredge, L.M. A Rapid-Learning Health System. Health Aff. 2007, 26, w107–w118.
- Kaye, J.; Whitley, E.A.; Lund, D.; Morrison, M.; Teare, H.; Melham, K. Dynamic Consent: A Patient Interface for Twenty-First Century Research Networks. Eur. J. Hum. Genet. 2015, 23, 141–146.

- Hoare, C.A.R. Data Reliability. ACM SIGPLAN Not. 1975, 10, 528–533.
- Chapple, J.N. Business Systems Techniques: For the Systems Professional; Longman: London, UK, 1976.
- Fox, C.; Levitin, A.; Redman, T. The Notion of Data and Its Quality Dimensions. Inf. Process. Manag. 1994, 30, 9–19.
- Ballou, D.P.; Pazer, H.L. Modeling Data and Process Quality in Multi-Input, Multi-Output Information Systems. Manag. Sci. 1985, 31, 150–162.
- De Amicis, F.; Barone, D.; Batini, C. An Analytical Framework to Analyze Dependencies Among Data Quality Dimensions. In Proceedings of the 2006 International Conference on Information Quality, ICIQ 2006, Cambridge, MA, USA, 10–12 November 2006; pp. 369–383.
- Chatfield, A.T.; Reddick, C.G. A Framework for Internet of Things-Enabled Smart Government: A Case of IoT Cybersecurity Policies and Use Cases in U.S. Federal Government. Gov. Inf. Q. 2019, 36, 346–357.
- Guest, G.; Bunce, A.; Johnson, L. How Many Interviews Are Enough? An Experiment with Data Saturation and Variability. Field Methods 2006, 18, 59–82.
- Hennink, M.M.; Kaiser, B.N.; Marconi, V.C. Code Saturation Versus Meaning Saturation: How Many Interviews Are Enough? Qual. Health Res. 2016, 27, 591–608.
- Mortier, R.; Haddadi, H.; Henderson, T.; McAuley, D.; Crowcroft, J. Human-Data Interaction: The Human Face of the Data-Driven Society. arXiv 2014, arXiv:1412.6159.
- Sailaja, N.; Jones, R.; McAuley, D. Designing for Human Data Interaction in Data-Driven Media Experiences. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–7.
- Fisher, C.W.; Kingma, B.R. Criticality of Data Quality as Exemplified in Two Disasters. Inf. Manag. 2001, 39, 109–116.
- Parssian, A.; Sarkar, S.; Jacob, V.S. Assessing Data Quality for Information Products: Impact of Selection, Projection, and Cartesian Product. Manag. Sci. 2004, 50, 967–982.
- Shankaranarayanan, G.; Cai, Y. Supporting Data Quality Management in Decision-Making. Decis. Support Syst. 2006, 42, 302–317.
- Batini, C.; Cappiello, C.; Francalanci, C.; Maurino, A. Methodologies for Data Quality Assessment and Improvement. ACM Comput. Surv. 2009, 41, 1–52.
- Sidi, F.; Shariat Panahy, P.H.; Affendey, L.S.; Jabar, M.A.; Ibrahim, H.; Mustapha, A. Data Quality: A Survey of Data Quality Dimensions. In Proceedings of the 2012 International Conference on Information Retrieval & Knowledge Management, Kuala Lumpur, Malaysia, 13–15 March 2012; IEEE: New York, NY, USA, 2012; pp. 300–304.
- Even, A.; Shankaranarayanan, G.; Berger, P.D. Evaluating a Model for Cost-Effective Data Quality Management in a Real-World CRM Setting. Decis. Support Syst. 2010, 50, 152–163.
- Moges, H.-T.; Dejaeger, K.; Lemahieu, W.; Baesens, B. A Multidimensional Analysis of Data Quality for Credit Risk Management: New Insights and Challenges. Inf. Manag. 2013, 50, 43–58.
- Woodall, P.; Koronios, A. An Investigation of How Data Quality Is Affected by Dataset Size in the Context of Big Data Analytics. In Proceedings of the International Conference on Information Quality, Xi’an, China, 1–3 August 2014.
- Becker, D.; King, T.D.; McMullen, B. Big Data, Big Data Quality Problem. In Proceedings of the 2015 IEEE International Conference on Big Data (Big Data), Santa Clara, CA, USA, 29 October–1 November 2015; IEEE: New York, NY, USA, 2015.
- Batini, C.; Rula, A.; Scannapieco, M.; Viscusi, G. From Data Quality to Big Data Quality. J. Database Manag. 2015, 26, 60–82.
- Rao, D.; Gudivada, V.N.; Raghavan, V.V. Data Quality Issues in Big Data. In Proceedings of the 2015 IEEE International Conference on Big Data (Big Data), Santa Clara, CA, USA, 29 October–1 November 2015; IEEE: New York, NY, USA, 2015.
- Guo, J.; Liu, F. Automatic Data Quality Control of Observations in Wireless Sensor Network. IEEE Geosci. Remote Sens. Lett. 2015, 12, 716–720.
- Taleb, I.; Kassabi, H.T.E.; Serhani, M.A.; Dssouli, R.; Bouhaddioui, C. Big Data Quality: A Quality Dimensions Evaluation. In Proceedings of the 2016 Intl IEEE Conferences on Ubiquitous Intelligence & Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Cloud and Big Data Computing, Internet of People, and Smart World Congress (UIC/ATC/ScalCom/CBDCom/IoP/SmartWorld), Toulouse, France, 18–21 July 2016.
- Vetrò, A.; Canova, L.; Torchiano, M.; Minotas, C.O.; Iemma, R.; Morando, F. Open Data Quality Measurement Framework: Definition and Application to Open Government Data. Gov. Inf. Q. 2016, 33, 325–337.
- Taleb, I.; Serhani, M.A. Big Data Pre-Processing: Closing the Data Quality Enforcement Loop. In Proceedings of the 2017 IEEE 6th International Congress on Big Data, BigData Congress 2017, Honolulu, HI, USA, 25–30 June 2017; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2017; pp. 498–501.
- Jesiļevska, S. Data Quality Dimensions to Ensure Optimal Data Quality. Rom. Econ. J. 2017, 20, 89–103.
- Färber, M.; Bartscherer, F.; Menne, C.; Rettinger, A. Linked Data Quality of DBpedia, Freebase, OpenCyc, Wikidata, and YAGO. Semant. Web 2018, 9, 77–129.
- Ardagna, D.; Cappiello, C.; Samá, W.; Vitali, M. Context-Aware Data Quality Assessment for Big Data. Future Gener. Comput. Syst. 2018, 89, 548–562.
- Cichy, C.; Rass, S. An Overview of Data Quality Frameworks. IEEE Access 2019, 7, 24634–24648.
- Byabazaire, J.; O’Hare, G.; Delaney, D. Using Trust as a Measure to Derive Data Quality in Data Shared IoT Deployments. In Proceedings of the 2020 29th International Conference on Computer Communications and Networks (ICCCN), Honolulu, HI, USA, 3–6 August 2020; pp. 1–9.
- Makhoul, N. Review of Data Quality Indicators and Metrics, and Suggestions for Indicators and Metrics for Structural Health Monitoring. Adv. Bridge Eng. 2022, 3, 17.

| Domain of Analysis | Objectives and Benefits | Barriers and Problems | References |
|---|---|---|---|
| DQ in Platforms in India (including Smart City and Real-Time Systems) | Improved access, usability, traceability; real-time, high-quality data for decision-making; context-specific governance and consumer empowerment | Fragmented datasets, poor documentation, limited consumer input; complex real-time governance; regulatory immaturity and disempowerment | Richter & Slowinski 2018 [3]; Koutroumpis et al., 2020 [5]; Ghosh & Garg 2025 [10] |
| DQ Assessment Frameworks and Methods | Multidimensional models; scalable, automated tools; real-time assessment adaptable to fragmented ecosystems | Limited real-world validation; provider-centric; requires structured datasets; consumer-friendly tools are largely missing | Wang & Strong 1996 [1]; Batini & Scannapieco 2006 [11]; Behkamal et al., 2014 [12]; Pipino et al., 2002 [13]; Bovee et al., 2003 [14]; Ehrlinger et al., 2018 [15]; Chug et al., 2021 [16]; Liu et al., 2021 [17]; Gómez-Omella et al., 2022 [18]; Taleb et al., 2021 [19]; Zhang et al., 2023 [4]; Alwan et al., 2022 [20]; Sadiq and Indulska, 2017 [21]; Cai and Zhu, 2015 [22]; Abedjan et al., 2016 [23] |
| Consumer-Centric Governance in Data Platforms | Traceability, fairness, transparency, participatory governance, consumer trust | Weak operationalisation, limited consumer influence, regulatory immaturity | Podobnikar 2025 [24]; Tapsell et al., 2018 [25]; Acev et al., 2025 [26]; Otto & Jarke 2019 [27]; Veltri et al., 2020 [28]; Ducuing & Reich 2023 [29]; Agahari et al., 2022 [30] |
| Monetisation Models and Consumer Participation | Quality-driven, tiered pricing linked to consumer willingness to pay | Pricing opacity, inconsistent service quality, limited research on consumer preferences | Yu and Zhang, 2017 [31]; Yang et al., 2019 [32]; Zhang et al., 2018 [33]; Pei 2020 [34]; Miao et al., 2023 [35]; Zhang et al., 2023 [4]; Majumdar et al., 2024 [36]; Malieckal et al., 2024 [37] |
| Clinical and Business Protocols | Interoperability, continuous improvement, user-centred governance and consent | Regulatory complexity, sector specificity, limited applicability to open platforms | Chan et al., 2013 [38]; Juddoo et al., 2018 [39]; Facile et al., 2022 [40]; Aggarwal et al., 2021 [41]; Miller et al., 2024 [42]; Etheredge 2007 [43]; Kaye et al., 2015 [44] |

| Case ID | Role | Sector | Organization Type |
|---|---|---|---|
| P1 | Partner and Tech Evangelist | IT Consulting | Large Firm |
| P2 | Director | Patent Analytics | Mid-Size Enterprise |
| P3 | Product Owner | Software | Multinational Corporation |
| P4 | Vice President | Independent Consultant | Independent |
| P5 | Senior Data Scientist | Cross-Industry (Retail, Manufacturing) | Independent Consultant |
| P6 | CEO | Technology | Private Firm |
| P7 | Data Architect | Urban Transport Analytics | Government-Affiliated |
| P8 | Quantitative Analyst | Financial Services | Multinational Corporation |
| P9 | CEO | ESG and Workplace Strategy | Consulting Firm |
| P10 | Founder Director | Computer Science and Technology | Startup |
| P11 | Statistical Modelling Specialist | Oil and Gas Consulting | Consulting Firm |
| P12 | Knowledge Management Consultant | Infrastructure | Large Firm |
| P13 | Senior Manager | Banking | Multinational Corporation |
| P14 | Consulting Partner | Financial Analytics | Large IT Firm |
| P15 | Program Manager | Retail and Consumer Goods | IT Services Company |
| P16 | Product Head for Traffic Solutions | Smart Cities/Urban Mobility | Private Firm |
| P17 | CEO | Healthcare | Startup |
| P18 | Founder | Finance and Law | Non-Profit |
| P19 | Founder | Artificial Intelligence | Startup |
| P20 | Founder | Mental Health | Startup |
| P21 | Founder | Human Resources | Startup |
| P22 | Head of Delivery Excellence | Cloud/Tech | Multinational Corporation |
| P23 | CEO, Co-founder | Sustainability | Social Enterprise |
| P24 | Opportunities Evaluation Manager | Oil and Gas | Multinational Corporation |
| P25 | Co-Founder and CTO | Banking/FinTech | Startup |

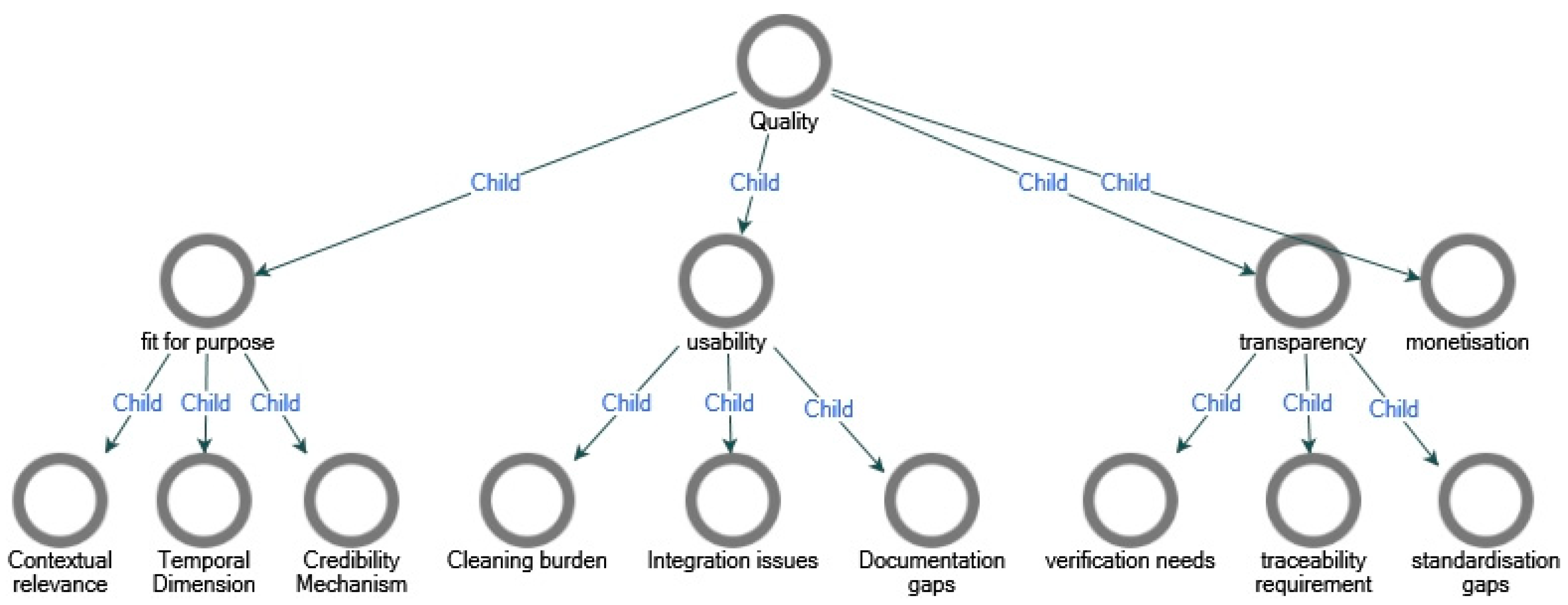

| Quality Theme | Key Concepts and Keywords | Participants | Number of Extracts |
|---|---|---|---|
| Fit-for-Purpose Quality: Needs Expressed by Data Consumers | Consistency in Formats, Data Accuracy, Relevance to Task, Volume, Freshness, Frequency of Updating, Credibility, Completeness, Expert Validation, Documentation, API Documentation, Metadata, Data Handbook, Lineage | 23 (92%) | 92 |
| Usability and Format Frictions: Common Problems Encountered | Missing Data, Time Spent on Data Cleaning, Faulty Instruments, Data Duplication, Multi-Format Inflows (data/file), Lack of Documentation | 19 (76%) | 52 |
| Transparency, Traceability, and Governance: Consumer Expectations and Platform Requirements | Certification, Authentication, Provider-Side Quality Checks, Establishing Common Standards, Version Control, Source Traceability, Transformation Logs | 10 (40%) | 22 |
| Monetisation and Willingness to Pay: Pricing Concerns | Willingness to Pay Linked to Better Quality, High-Quality Data Essential for Monetisation | 3 (12%) | 3 |
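The percentages in the Participants column can be reproduced with simple arithmetic. The sketch below assumes the denominator is the full sample of 25 interviewees (P1 to P25 in the participant table); the names `TOTAL_PARTICIPANTS` and `participant_share` are illustrative, not part of the study.

```python
# Participant share per quality theme, assuming all 25 interviewees
# (P1-P25) form the denominator.
TOTAL_PARTICIPANTS = 25

theme_participants = {
    "Fit-for-Purpose Quality": 23,
    "Usability and Format Frictions": 19,
    "Transparency, Traceability, and Governance": 10,
    "Monetisation and Willingness to Pay": 3,
}


def participant_share(count: int, total: int = TOTAL_PARTICIPANTS) -> float:
    """Percentage of interviewees who raised a theme, rounded to one decimal."""
    return round(100 * count / total, 1)


shares = {theme: participant_share(n) for theme, n in theme_participants.items()}
for theme, pct in shares.items():
    print(f"{theme}: {pct:.0f}%")
```

Each computed share (92%, 76%, 40%, 12%) matches the table, which is consistent with the 25-person denominator assumed here.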

| Code | Subcode | Keywords and Concepts | Participants | Number of Extracts |
|---|---|---|---|---|
| Fit-for-Purpose Quality | Contextual Relevance | Accuracy, Consistency, Data Hygiene, Usefulness, Timeliness, Completeness, Version Control, Metadata, Standards, Lineage, Transparency | 23 | 80 |
| | Temporal Dimensions | Freshness, Relevance, Timeliness, Real-Time Data, Historical Data, Mission-Critical | 9 | 12 |
| | Credibility Mechanisms | Authenticity, Standardization, Traceability, Veracity, Certification, Validation | 7 | 16 |
| Usability and Format Frictions | Cleaning Burden | Data Cleaning, Missing Values, Formatting Issues, Time-Consuming, Accuracy, Duplication, Multi-Format Data, Resource Drain, Value Addition | 10 | 21 |
| | Integration Issues | Data Formats, Synchronization, Interoperability, Labelling, Vendor Lock-In, Standardization, Scalability, Harmonization, IoT Limitations | 9 | 19 |
| | Documentation Gaps | Metadata, Standardization, Missing Information, Data Culture, Unstructured Data, Sampling Issues, Publication Details, Supplementary Fields | 9 | 12 |
| Transparency, Traceability, and Governance | Verification Needs | Authentication, Certification, Triangulation, Data Validation, Trust, Credentials | 5 | 6 |
| | Traceability Requirements | Version Control, Data Lineage, Processing History, Transparency, Documentation, Relevance | 2 | 3 |
| | Standardization Gaps | Multiple Standards, Interoperability, DQ, Sector-Specific, Stakeholder Collaboration, Metadata Standards, Market Scalability | 6 | 13 |
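The Participants and Number of Extracts columns are counts over coded interview excerpts. The following is a minimal sketch of how such counts could be tallied, assuming each excerpt is stored as a (extract ID, participant, code, subcode) record and that distinct IDs are counted; the `CodedExtract` type, the `tally` helper and the toy data are hypothetical and are not the study's coding pipeline or transcripts.

```python
from collections import defaultdict
from typing import NamedTuple


class CodedExtract(NamedTuple):
    """One interview excerpt tagged with a code (theme) and a subcode."""
    extract_id: str
    participant: str
    code: str
    subcode: str


def tally(extracts):
    """Return {code: (participants, extracts)} and {(code, subcode): (...)}.

    Participants and extracts are counted as distinct IDs, so an extract
    tagged with two subcodes counts once at code level but once under each
    subcode (an assumed counting rule; the paper does not state one).
    """
    sub_p, sub_x = defaultdict(set), defaultdict(set)
    code_p, code_x = defaultdict(set), defaultdict(set)
    for e in extracts:
        sub_p[(e.code, e.subcode)].add(e.participant)
        sub_x[(e.code, e.subcode)].add(e.extract_id)
        code_p[e.code].add(e.participant)
        code_x[e.code].add(e.extract_id)
    codes = {c: (len(code_p[c]), len(code_x[c])) for c in code_x}
    subcodes = {k: (len(sub_p[k]), len(sub_x[k])) for k in sub_x}
    return codes, subcodes


# Hypothetical toy extracts, not the study's coded transcripts.
GOV = "Transparency, Traceability, and Governance"
sample = [
    CodedExtract("x1", "P7", GOV, "Standardization Gaps"),
    CodedExtract("x2", "P3", GOV, "Standardization Gaps"),
    CodedExtract("x3", "P18", GOV, "Traceability Requirements"),
    CodedExtract("x3", "P18", GOV, "Verification Needs"),
    CodedExtract("x4", "P22", GOV, "Verification Needs"),
]
codes, subcodes = tally(sample)
```

In this toy data the code-level tally is four participants and four extracts, while the subcode extract counts sum to five, because excerpt `x3` carries two subcodes. Under this counting rule, code-level totals need not equal the sum of their subcode rows.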

| Intensity | Criteria |
|---|---|
| High | ≥3 participants from the domain, with detailed or multi-point discussion (several sub-themes addressed) |
| Medium | 1–2 participants with moderate-to-deep engagement and moderate diversity |
| Low | 1 participant with a brief mention, or 2+ participants but only surface-level comments (minimal diversity/detail) |
| None | No participants from the domain discussed the theme |
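The intensity criteria combine three signals: the number of participants from a domain, the depth of their engagement, and the number of distinct sub-themes they raised. A minimal sketch of the rubric as a decision function follows. The numeric cut-offs for "several" and "moderate" diversity (`several=3`, `moderate=2`) are assumptions, because the rubric gives none. Combinations the rubric does not list (for example, three participants with only moderate depth) fall back to Low in this sketch, which is a choice made here rather than a rule from the study.

```python
from enum import Enum


class Depth(Enum):
    SURFACE = 0   # brief mention or surface-level comment
    MODERATE = 1  # moderate engagement
    DETAILED = 2  # detailed or multi-point discussion


def intensity(participants: int, depth: Depth, subthemes: int,
              several: int = 3, moderate: int = 2) -> str:
    """Map one domain's engagement with a theme to High/Medium/Low/None.

    `several` and `moderate` are assumed thresholds on the number of
    distinct sub-themes; the rubric states no numeric cut-offs.
    """
    if participants == 0:
        return "None"
    if participants >= 3 and depth is Depth.DETAILED and subthemes >= several:
        return "High"
    if participants <= 2 and depth is not Depth.SURFACE and subthemes >= moderate:
        return "Medium"
    # Brief or surface-level engagement, plus any combination the rubric
    # does not list explicitly, is rated Low here.
    return "Low"


print(intensity(2, Depth.MODERATE, 2))  # Medium
print(intensity(1, Depth.SURFACE, 1))   # Low
```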

| Statement | Participant |
|---|---|
| “I need the certification of the data authentication of the data.” | Cloud/Tech (P22) |
| “standardization is needed to scale and explore and move into the market quickly or develop the market quickly.” | Urban Transport Analytics (P7) |
| “the verification of the data is the most important thing.” | ESG and Workplace Strategy (P9) |
| “Checking data quality becomes easier based on fixed parameters of data quality, because those can only be defined data.” | Finance and Law Non-Profit (P18) |
| “Is not easy because if you take any domain, not just one standard, even if you see sensor for sensors only, at least I have come across more than three standards.” | Software Multinational Corporation (P3) |
| “data standardization and the normalization are another challenge that you know I’ve been facing for in case of a data integration kind of thing” | Smart Cities/Urban Mobility (P16) |
| “now that becomes challenging, maintaining that entire, uh, like the history of the version control of the data and understanding what are all the changes that that has gone through over the course in time.” | Finance and Law Non-Profit (P18) |
| “One thing which when we’re talking about version control of the data, one thing which people do often tend to ignore is the sort of processing that has gone from the raw to the finalized data.” | Finance and Law Non-Profit (P18) |

| Sector | Participants (IDs) | Core Expectations |
|---|---|---|
| IT/Tech and Consulting | P1, P22 | Provider-side certification (e.g., API governance checks) |
| Finance and Legal | P4, P14 | Version control, transformation logs for auditability |
| Urban/Smart Cities | P7, P12 | Adoption of DCAT standards, consortium-led quality parameters |
| Sustainability | P9, P23 | Methodology transparency, source validation protocols |
| Healthcare | P10 | Raw-to-finalized data processing history |
| Cross-Industry | P5 | Lineage tracking for regulatory compliance |
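Several of these expectations (version control, transformation logs, raw-to-finalized processing history, lineage tracking) describe one underlying record: which step turned which input into which output. A minimal sketch of such a transformation log follows, assuming datasets small enough to hash as JSON. `TransformationLog`, `fingerprint`, the two cleaning steps and the feed name are all hypothetical illustrations, not a tool used or proposed by participants.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(rows) -> str:
    """SHA-256 of a dataset snapshot, serialised as key-sorted JSON."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class TransformationLog:
    """Append-only record of the processing applied from raw to final data."""
    source: str
    entries: list = field(default_factory=list)

    def apply(self, rows, step, func):
        """Run one processing step and record its version, hashes and row counts."""
        in_hash = fingerprint(rows)  # hash before the step runs
        out = func(rows)
        self.entries.append({
            "version": len(self.entries) + 1,
            "step": step,
            "input_hash": in_hash,
            "output_hash": fingerprint(out),
            "rows_in": len(rows),
            "rows_out": len(out),
        })
        return out

    def is_chained(self) -> bool:
        """True if every step consumed exactly the previous step's output."""
        return all(prev["output_hash"] == cur["input_hash"]
                   for prev, cur in zip(self.entries, self.entries[1:]))


def drop_duplicates(rows):
    """Keep the first occurrence of each identical row, preserving order."""
    seen, out = set(), []
    for r in rows:
        key = json.dumps(r, sort_keys=True)
        if key not in seen:
            seen.add(key)
            out.append(r)
    return out


def drop_missing(rows):
    """Remove rows that contain any missing (None) value."""
    return [r for r in rows if all(v is not None for v in r.values())]


# Hypothetical raw feed; the source name is illustrative only.
raw = [{"id": 1, "speed": 42}, {"id": 1, "speed": 42}, {"id": 2, "speed": None}]
log = TransformationLog(source="hypothetical-traffic-feed")
clean = log.apply(raw, "drop duplicates", drop_duplicates)
final = log.apply(clean, "drop rows with missing values", drop_missing)
```

Because each entry stores both input and output hashes, an auditor can check with `is_chained()` that no undocumented step was applied between logged steps. The row counts also expose how much data each cleaning step removed.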

| Sector | Participants (IDs) | Depth/Diversity | Rating |
|---|---|---|---|
| IT/Tech and Consulting | P1, P22 | Certification, API governance checks | Medium |
| Finance and Legal | P4, P14 | Version control, audit logs, compliance | Medium |
| Urban/Smart Cities | P7, P12 | Standards (DCAT), quality parameters | Medium |
| Sustainability | P9, P23 | Methodology transparency, source validation | Medium |
| Healthcare | P10 | Data processing history | Low |
| Cross-Industry | P5 | Lineage tracking | Low |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Malieckal, M.; Gurtoo, A. Mining User Perspectives: Multi Case Study Analysis of Data Quality Characteristics. Information 2025, 16, 920. https://doi.org/10.3390/info16100920
