Game On: Exploring the Potential for Soft Skill Development Through Video Games

Abstract

1. Introduction

- The specific in-game mechanics and player behavioral patterns that correlate with and contribute to the development of targeted soft skills.

- How player characteristics, such as prior gaming experience and engagement levels, influence the effectiveness of AI-driven soft skill enhancement and assessment within video game environments.

- The development of a novel AI-based stealth assessment methodology that leverages commercial video game data for non-intrusive soft skill measurement addresses a significant gap in standardized evaluation methods. Note that stealth assessment refers to the integration of assessment mechanisms into the game in a discreet and fluid manner.

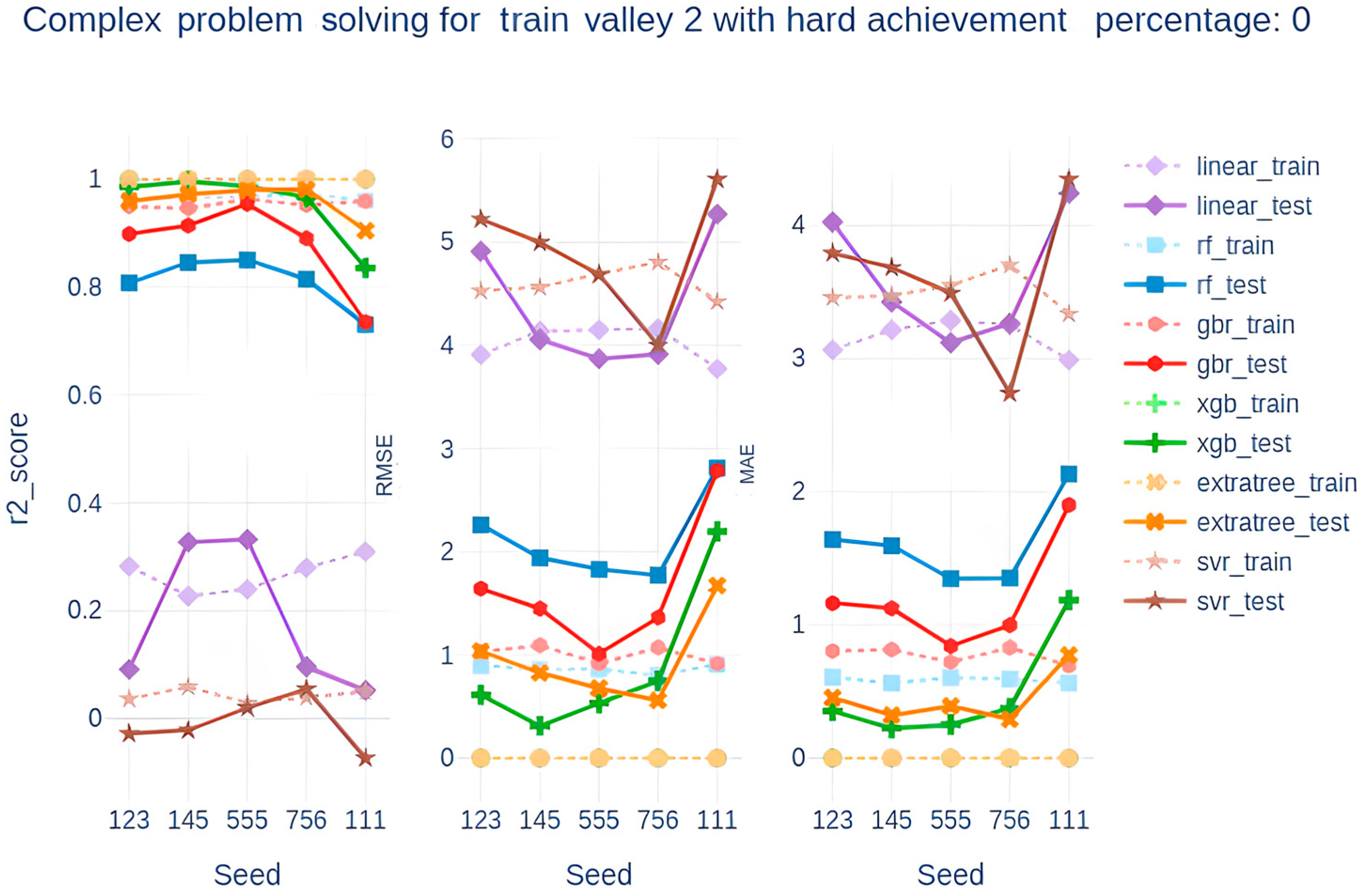

- The empirical validation of the AI model’s (Gradient Boosting Regressor) high efficacy in predicting soft skill improvement, demonstrating a Coefficient of Determination (R²) value of approximately 0.9 and low Root Mean Squared Error (RMSE) values near 1.

- The practical application of this methodology using real player data from the Steam platform showcases a scalable and engaging approach for integrating game-based soft skill enhancement into traditional training programs.

2. Video Games for Soft Skill Development

- Foster a wide range of complex problem-solving skills: improving abilities in problem decomposition, systems thinking, and causal analysis [46]; enhancing spatial reasoning, sequential processing, and overall solution optimization [47]; enhancing strategic planning and analytical reasoning [48]; and improving specific cognitive areas like attention and reasoning [49].

- Enhance time management skills: improving task prioritization, time estimation and project planning skills, and improving executive control skills [54].

3. Stealth Assessment in Video Games

- Player behavior and action logs: Data from players’ actions, such as movement, interaction with objects, and decision-making, provide insights into skills development. These action logs can be analyzed to infer critical soft skills [59]. This information has been applied to profile player behavior and categorize players [60], to perform skill and performance analysis [61], and to visualize and cluster players with similar behaviors [62].

- Response time and decision speed: In games with time-sensitive challenges, response time is a critical metric. These metrics not only reflect the cognitive and perceptual benefits of gaming but also highlight potential areas for training and improvement: reaction times [63], sensorimotor decision-making capabilities [64], balance between speed and precision [65], and processing speed [66].

- Player interaction data: Interaction data, such as chat logs, cooperative task completion, and social dynamics, are important indicators of collaboration and communication skills [67]. Metrics such as interaction frequency, leadership role assumption, and conflict resolution strategies can be tracked.

- Success rates and achievement tracking: These metrics provide valuable insights into player behavior, game content consumption, and performance dynamics, which can inform game development, enhance player retention, and optimize gaming experiences across various genres [68]. Applications include the following: developing performance prediction models to analyze player actions, such as predicting hits or misses [69]; tracking individual performance to understand dynamics within ad hoc teams in team-based games [70]; reporting how cognitive skills are linked to gaming performance [71]; and analyzing player retention by modeling motivations, progression, and churn to predict dropout rates and improve retention strategies [72].

- Behavioral patterns: These are used to measure and enhance player performance by understanding their actions, strategies, and engagement levels [73,74]. This approach not only aids in improving game design and player satisfaction but also provides insights into broader social behaviors that reveal the development of soft skills, such as adaptability or leadership [75,76].

- In-game analytics: Games are often designed with built-in systems that automatically collect and analyze data from players’ in-game behaviors. These analytics are used to enhance game design, improve player engagement, and tailor experiences to different player profiles [81,82]. The integration of machine learning and predictive models further enhances the ability to analyze and predict player actions, making in-game analytics an essential tool for developers and researchers [83].
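As a minimal sketch of how two of the data sources listed above (response time and success rates) might be derived from an action log, the following Python example aggregates per-player metrics. The `Event` record and its field names are hypothetical; commercial games expose different telemetry, and this is not the pipeline used in the study.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Event:
    """One hypothetical log record; real games expose different fields."""
    player: str
    prompt_time: float   # seconds at which the challenge appeared
    action_time: float   # seconds at which the player responded
    success: bool        # whether the action achieved its goal


def summarize(events):
    """Aggregate per-player mean response time and success rate."""
    by_player = {}
    for e in events:
        by_player.setdefault(e.player, []).append(e)
    return {
        player: {
            "mean_response_s": mean(e.action_time - e.prompt_time for e in evs),
            "success_rate": sum(e.success for e in evs) / len(evs),
        }
        for player, evs in by_player.items()
    }
```

Features of this kind could then serve as inputs to a predictive model, alongside the interaction and behavioral indicators described above.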

4. Materials and Methods

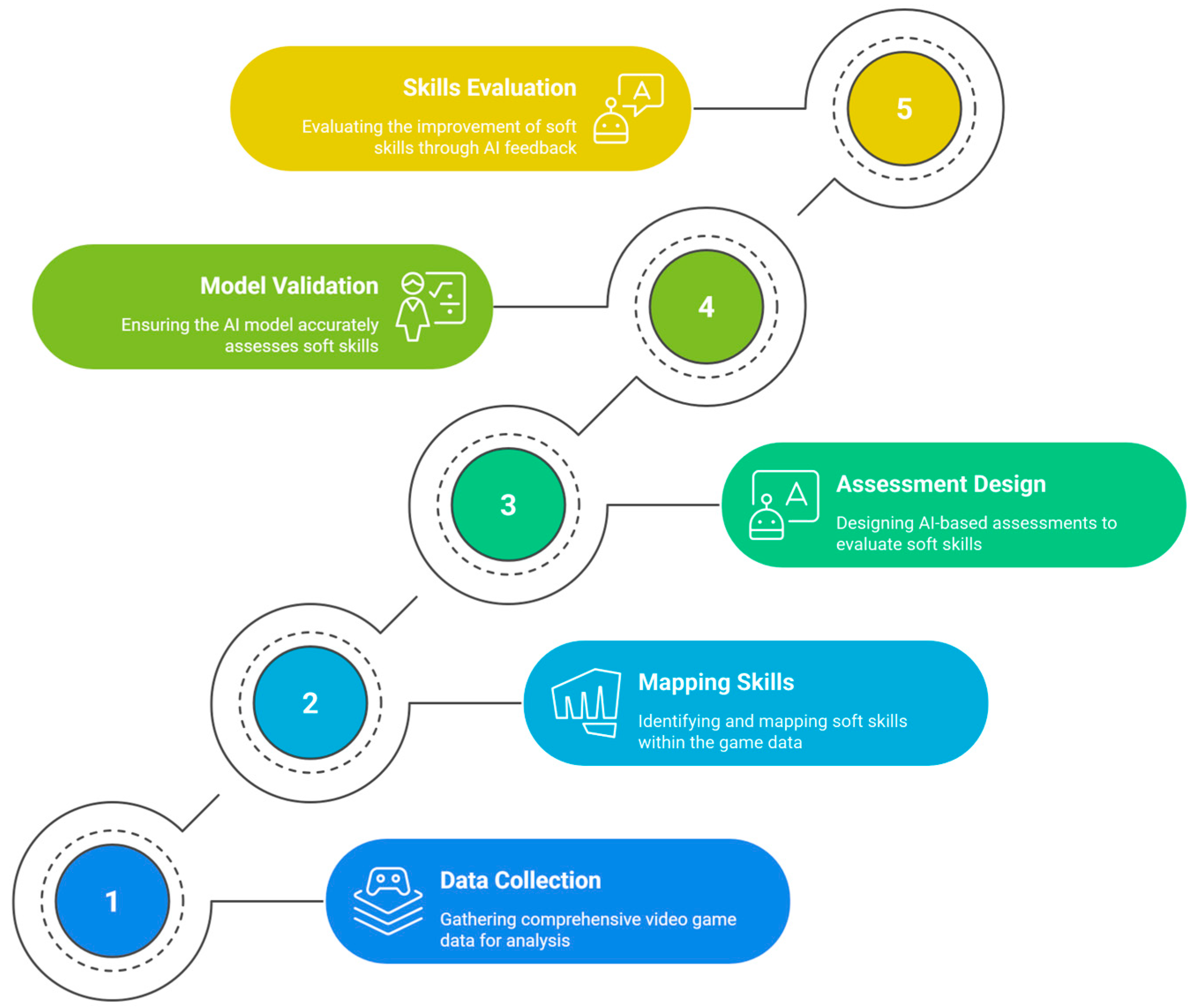

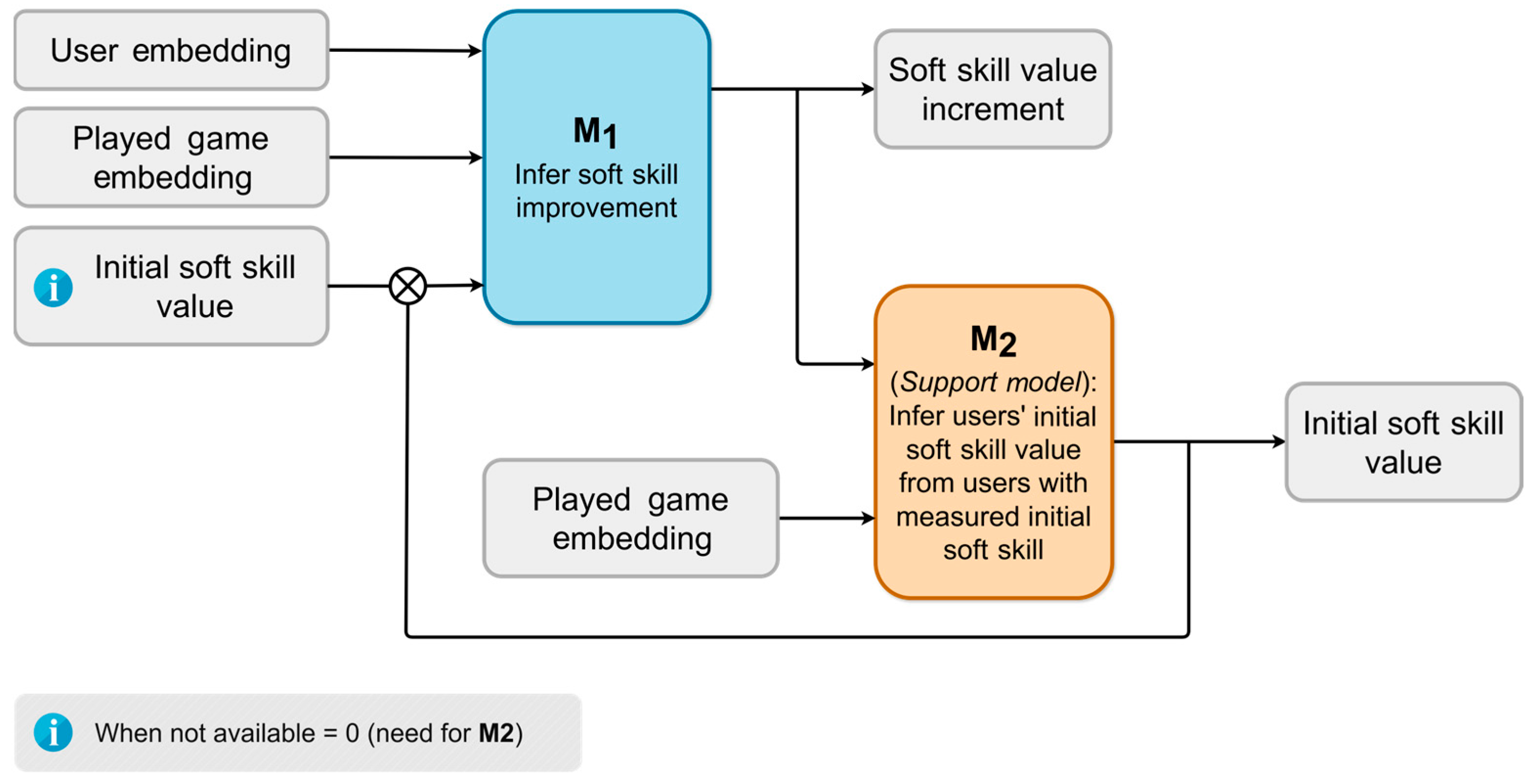

4.1. Methodology

4.2. Sample

4.3. Materials

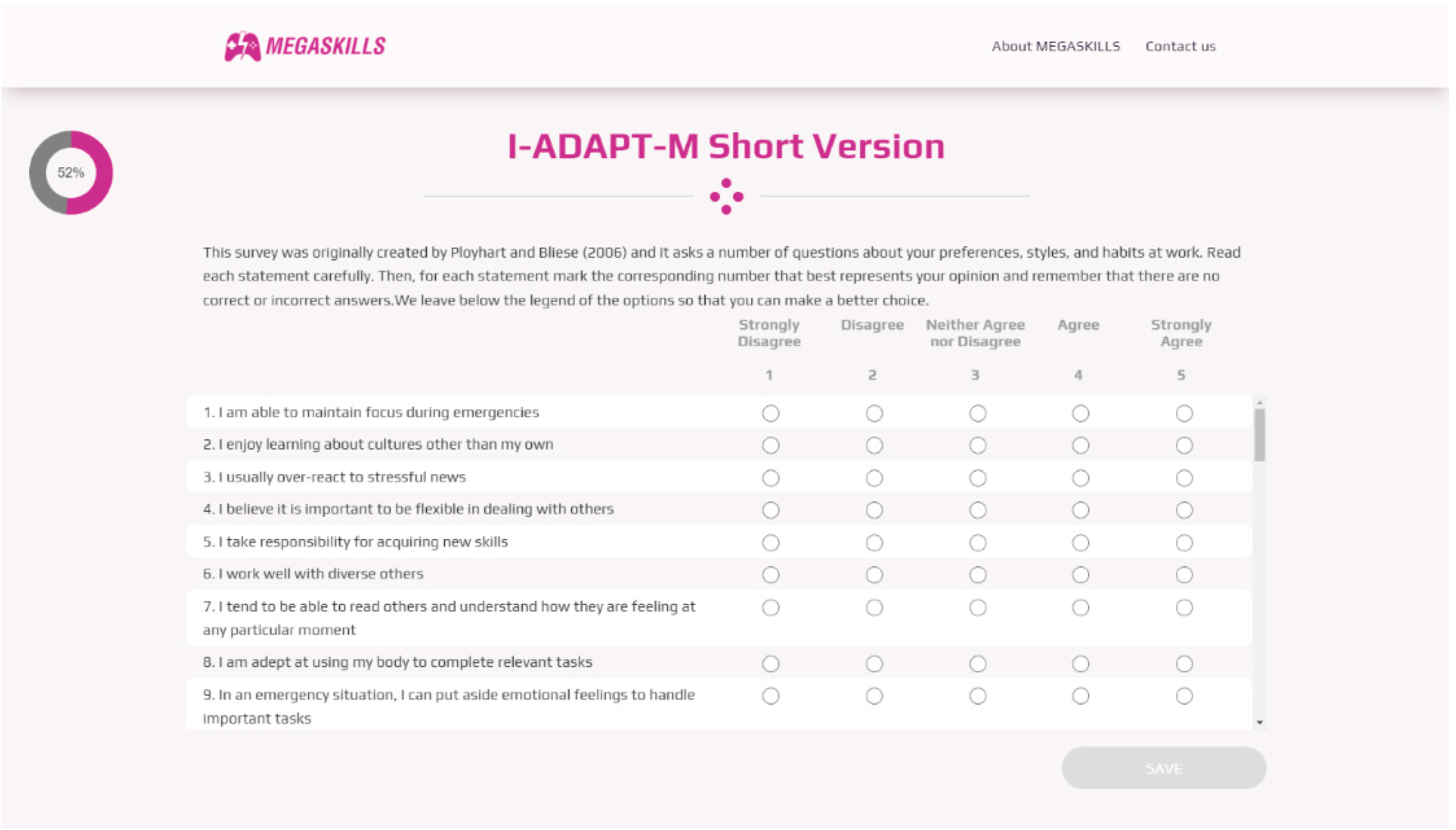

4.3.1. Standardized Tests

4.3.2. Video Games

- Train Valley 2: This game suits a complex problem-solving experiment because it presents several types of problems and variables to consider. The player must learn to manage several train stations, from building the tracks on a limited budget to scheduling train departures. The difficulty, in addition to increasing as the levels progress, lies in anticipating and planning the different train routes, either preventing problems or resolving them after an accident.

- Lightseekers: As a card video game, it meets many of the requirements to stimulate and train critical thinking. Players will have to plan and analyze the different possible compositions of cards or decks to find out which one has the best performance, depending on the environment or context of each game.

- Relic Hunters Zero: A friendly and entertaining top-down roguelike shooter. The genre suits the flexibility experiment because the conditions of each run change once the player dies and progress resets. It therefore allows the use of different weapons and strategies that encourage shifts in paradigm and variables, the main indicator of flexibility, along with parallel learning of each element that may appear in the game.

- Minion Masters: A dual management game. During matches, the player must manage time at both the macro and micro level, taking into account the match countdown as well as the deployment time, movement speed, and attack speed of each unit. Outside matches, the player manages the deck and the collection of units that they gather and upgrade. Gameplay mainly consists of attacking enemy turrets and bases while defending one’s own in 1v1 battles, in which units with different characteristics are deployed to achieve this objective.

4.3.3. MEGASKILLS Platform

4.3.4. Procedure

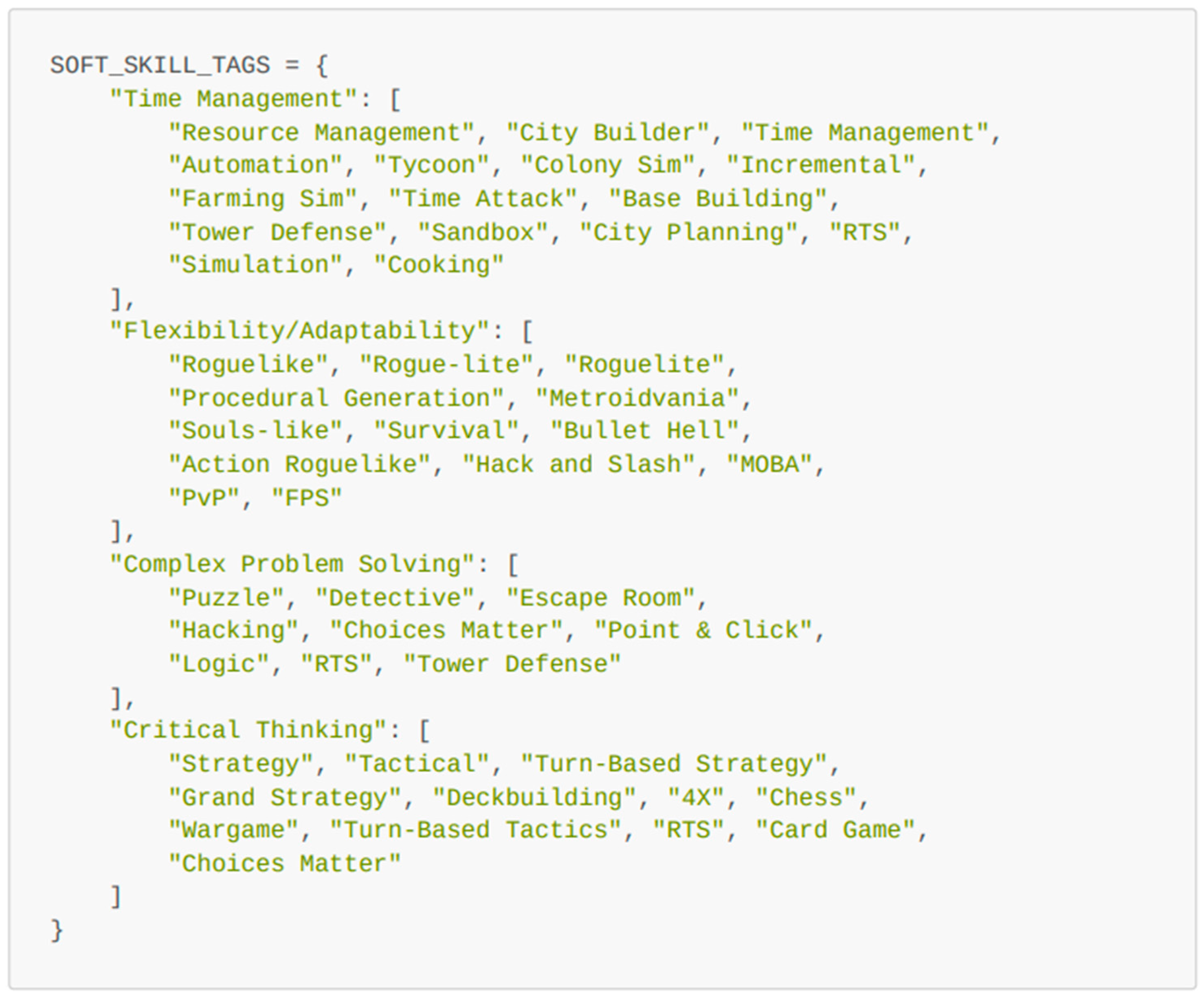

- Remove all games that contain tags suggesting the game does not contribute to the development of soft skills, e.g., “SEXUAL CONTENT”, “NUDITY”, “MATURE”, “NSFW”.

- Remove all tags that belong to groups that do not contribute to the development of soft skills, e.g., “Uncategorized”, “Franchises”, “Hardware”, “Tools”.

- If a game has more than 10 tags, the embedding considers only the first 10. This is performed by a function F(T) that takes the tag list of a game, denoted T, applies the removals and filters described above, and returns a curated list of tags T′.

- If the total number of clicks for a game is below 200, the game is penalized. The check is made when the total is computed: given a function that returns the number of clicks for a tag, the total click amount K is the sum of that function over the tags of the game. This simple rule identifies games whose click total falls below 200, in contrast with games that have fewer tags but many clicks on them.

- The tags of a game are weighted depending on their position on the list (which depends on the clicks). This weighting process is applied for each soft skill as , where and K is the total amount of clicks as introduced previously.

- Finally, a value between 0 and 1 that represents the contribution of the game to a soft skill is computed, considering the number of clicks and the ranking of tags, and the soft skill. For that, a function that returns the weight of a tag (t) for a soft skill is defined as and , further defined as , where is a list of soft skills and is the list of tags under that category, for this study of those described in Figure 4. Finally, the sum of all the values for each soft skill is computed as .
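The first four curation steps above can be sketched in Python as follows. This is an illustrative reading rather than the authors’ implementation: the function name `curate`, the `tag_group` mapping, and the decisions to rank tags by clicks before truncating and to sum clicks over the curated tags only are assumptions. The penalty factor and the per-skill weights are not reproduced, because their formulas are not recoverable from the text.

```python
from dataclasses import dataclass
from typing import Optional

# Example values quoted in the steps above; real lists may be longer.
BLOCKED_TAGS = {"SEXUAL CONTENT", "NUDITY", "MATURE", "NSFW"}
EXCLUDED_GROUPS = {"Uncategorized", "Franchises", "Hardware", "Tools"}
MAX_TAGS = 10
MIN_TOTAL_CLICKS = 200


@dataclass
class CuratedGame:
    tags: list            # curated tag list T'
    total_clicks: int     # total click amount K
    penalized: bool       # True when K is below 200


def curate(tag_clicks: dict, tag_group: dict) -> Optional[CuratedGame]:
    """Curate one game's tags; return None if the game is discarded.

    tag_clicks maps tag -> clicks; tag_group maps tag -> group name
    (hypothetical structure, used only for this sketch).
    """
    # Step 1: discard the whole game if any tag is on the blocked list.
    if any(tag.upper() in BLOCKED_TAGS for tag in tag_clicks):
        return None
    # Step 2: drop tags that belong to an excluded group.
    kept = [t for t in tag_clicks if tag_group.get(t) not in EXCLUDED_GROUPS]
    # Step 3: rank by clicks (assumption) and keep at most the first ten.
    kept.sort(key=lambda t: tag_clicks[t], reverse=True)
    kept = kept[:MAX_TAGS]
    # Step 4: total clicks K, flagged for penalization when below 200.
    total = sum(tag_clicks[t] for t in kept)
    return CuratedGame(kept, total, total < MIN_TOTAL_CLICKS)
```

For example, a game with the tags `{"Strategy": 150, "Puzzle": 30, "Steam Deck": 500}`, where "Steam Deck" is mapped to the "Hardware" group, keeps two tags with K = 180 and is therefore flagged for penalization.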

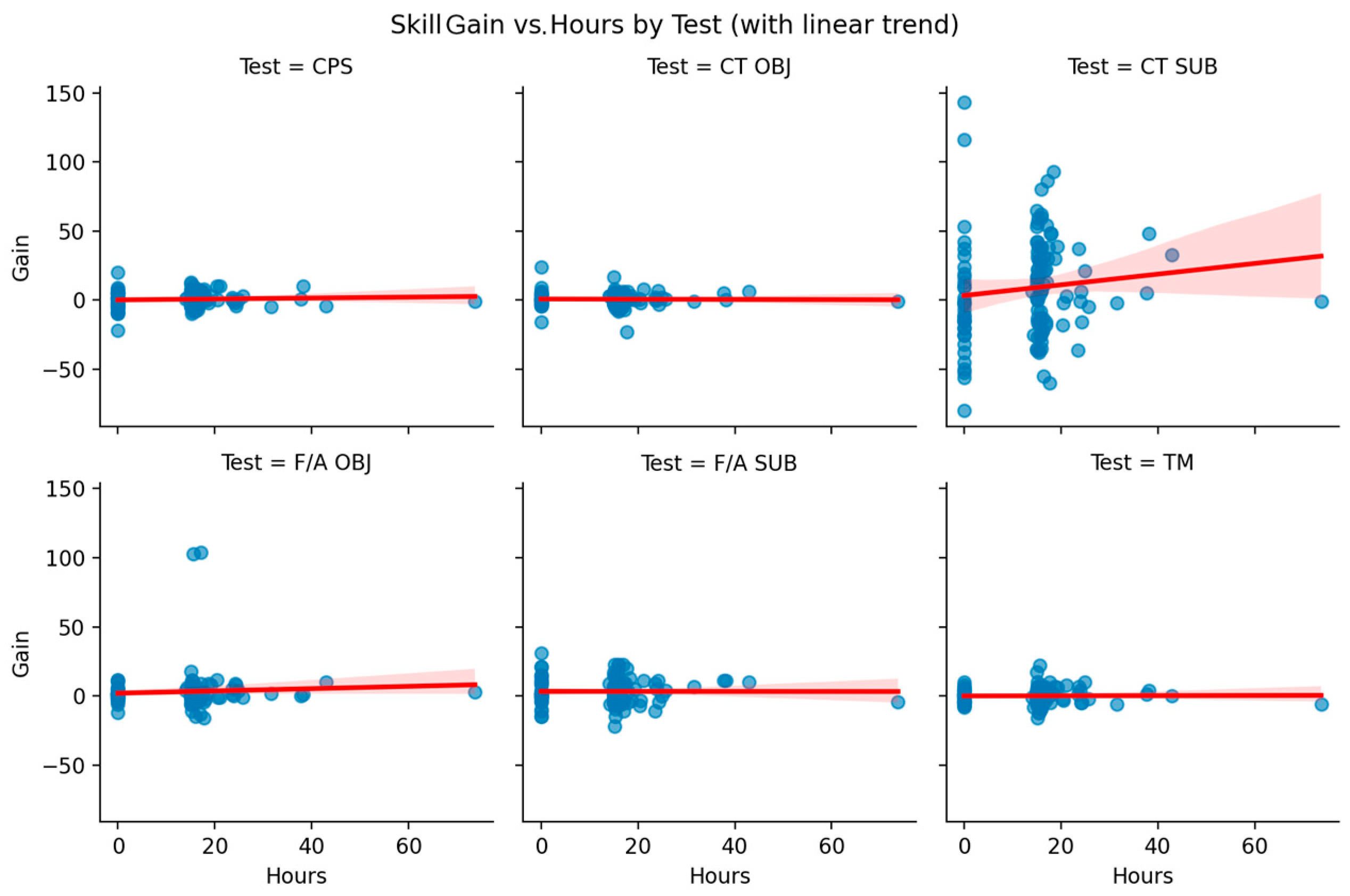

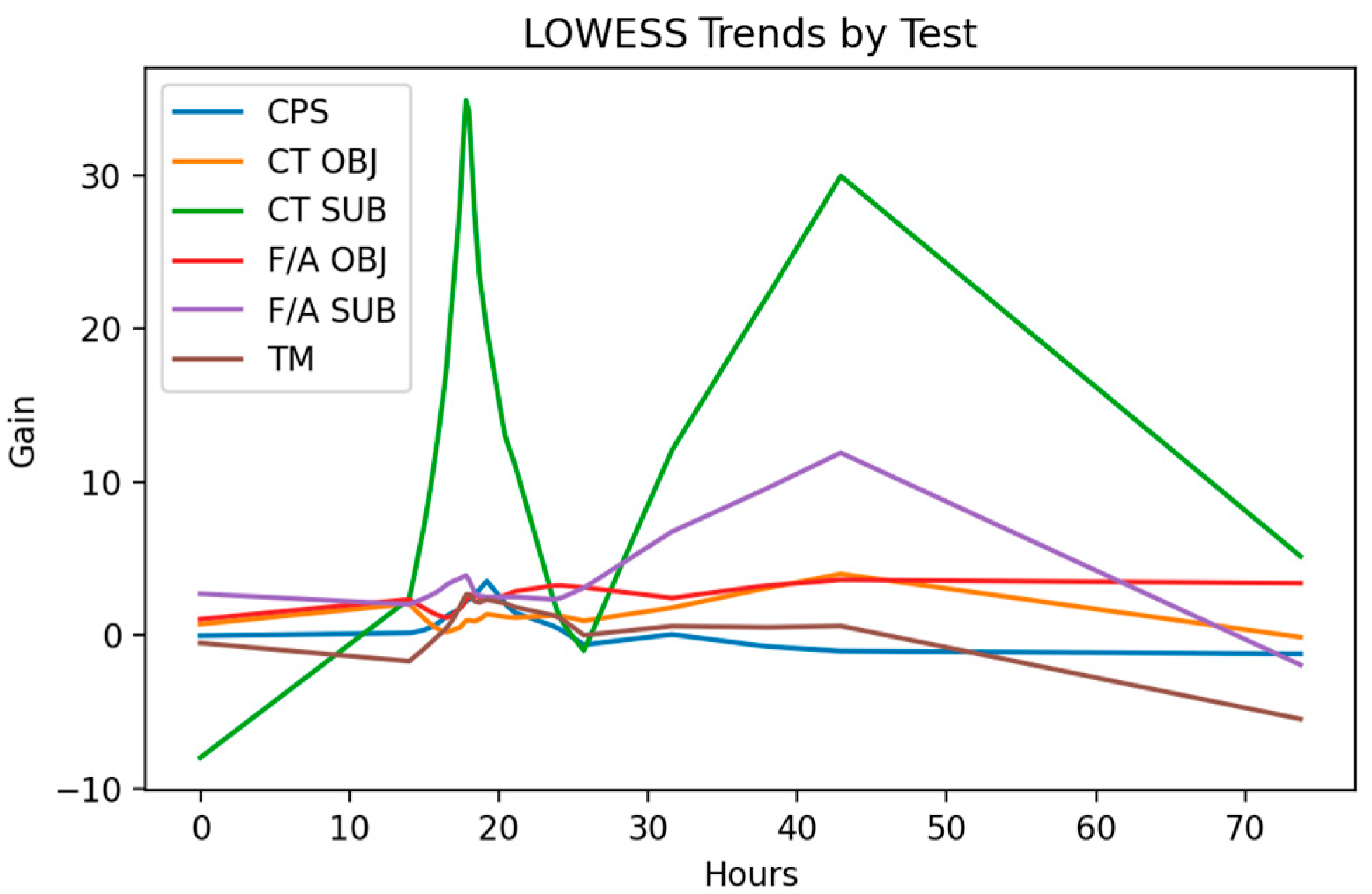

5. Results

- Coefficient of Determination (R²): Indicates the proportion of variance in the observed data that is explained by the model, reflecting how well it fits: $R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$, where $y_i$ are the observed values, $\hat{y}_i$ are the predicted values, $\bar{y}$ is the mean of the observed values, and $n$ is the number of samples.

- Root Mean Squared Error (RMSE): Quantifies the standard deviation of the prediction errors, giving more weight to large deviations and highlighting models with big individual errors.

- Mean Absolute Error (MAE): Measures the average magnitude of the prediction errors, providing guidance on how close the predictions are to the observed values.
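For reference, with observed values $y_i$, predictions $\hat{y}_i$, and $n$ samples, the standard definitions of the last two metrics are $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2}$ and $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}|y_i - \hat{y}_i|$. The sketch below computes all three metrics with the Python standard library only; the function name `regression_metrics` is illustrative and is not taken from the study’s code.

```python
import math


def regression_metrics(observed, predicted):
    """Return (R2, RMSE, MAE) for two equal-length sequences of numbers."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_obs = sum(observed) / n
    residuals = [y - y_hat for y, y_hat in zip(observed, predicted)]
    ss_res = sum(r * r for r in residuals)                # residual sum of squares
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)   # total sum of squares
    if ss_tot == 0:
        raise ValueError("R2 is undefined when all observed values are equal")
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(r) for r in residuals) / n
    return r2, rmse, mae
```

With observed values `[1, 2, 3, 4]` and predictions `[1, 2, 3, 6]`, the single error of 2 gives an MAE of 0.5 but an RMSE of 1.0, which illustrates how RMSE weights large deviations more heavily. R² becomes negative when predictions are worse than always predicting the mean, which is how negative Test_R2 entries such as those in Appendix B can arise.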

6. Discussion

- Implementing longer intervention periods to better understand the temporal aspects of skill development and allow for potentially greater skill gains.

- Collecting significantly larger datasets to improve the robustness and generalization of the AI models used for stealth assessment.

- Conducting more detailed analyses of specific game mechanics within the selected or other commercial games and their relationship to the development of targeted soft skills.

- Developing more sophisticated measures and validation methods for assessing the transfer of soft skills developed in gaming environments to real-world professional and personal contexts.

- Investigating the role of player engagement, motivation, and individual differences in skill development through game-based learning.

- Further refining the AI methodology, including exploring different architectures or input features for the user and game embeddings to better capture nuanced gaming behaviors and their links to soft skills.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PRE | Pre-intervention |
| POST | Post-intervention |
| Obj | Objective |
| Sub | Subjective |

Appendix A

| Soft Skill | Test | Very Low | Low | Moderate | High | Very High |
|---|---|---|---|---|---|---|
| CPS | Reflective Thinking Skill Scale for Problem Solving | 14–27 | 28–41 | 42–55 | 56–63 | 64–70 |
| CT | Critical Thinking Assessment (SUB) | 60–159 | 160–259 | 260–360 | | |
| CT | Test of Critical Thinking (OBJ) | 0–15 | 16–25 | 26–45 | | |
| F/A | I-ADAPT-M (SUB) | 55–128 | 129–202 | 203–275 | | |
| F/A | CAMBIOS (OBJ) | 0–11 | 12–18 | 19–27 | | |
| TM | Time Management Questionnaire | 18–41 | 42–65 | 66–90 | | |
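As a worked example of reading the table, the sketch below maps a raw score on the Reflective Thinking Skill Scale for Problem Solving to its band. Only the CPS row is used, since it is the only row with five unambiguous bands; the function name `cps_band` is illustrative.

```python
from bisect import bisect_right

# Lower bounds and labels copied from the CPS row of the Appendix A table.
CPS_LOWER_BOUNDS = [14, 28, 42, 56, 64]
CPS_LABELS = ["Very Low", "Low", "Moderate", "High", "Very High"]
CPS_MAX = 70


def cps_band(score: int) -> str:
    """Return the band label for a CPS score between 14 and 70 inclusive."""
    if not CPS_LOWER_BOUNDS[0] <= score <= CPS_MAX:
        raise ValueError(f"score {score} is outside the 14-70 range")
    # bisect_right counts how many lower bounds are <= score.
    return CPS_LABELS[bisect_right(CPS_LOWER_BOUNDS, score) - 1]
```

A score of 45, for instance, falls in the 42–55 interval and is therefore labeled "Moderate".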

Appendix B

| Model | Train_R2 | Test_R2 | Train_RMSE | Test_RMSE | Train_MAE | Test_MAE |
|---|---|---|---|---|---|---|
| extratree | 1.0 | 0.9051 | 0.0 | 1.2716 | 0.0 | 0.6673 |
| gbr | 0.9806 | 0.9141 | 0.614 | 1.2145 | 0.4543 | 0.8483 |
| linear | 0.2097 | 0.0549 | 3.956 | 4.3111 | 2.6747 | 3.0102 |
| rf | 0.9735 | 0.8424 | 0.7079 | 1.7153 | 0.4392 | 1.1583 |
| svr | 0.0514 | 0.0018 | 4.3317 | 4.4261 | 2.9084 | 3.0472 |
| xgb | 1.0 | 0.9375 | 0.0014 | 0.9176 | 0.0009 | 0.4411 |

| Model | Train_R2 | Test_R2 | Train_RMSE | Test_RMSE | Train_MAE | Test_MAE |
|---|---|---|---|---|---|---|
| extratree | 1.0 | 0.8505 | 0.0 | 2.0174 | 0.0 | 0.9537 |
| gbr | 0.9464 | 0.7473 | 1.3323 | 2.7669 | 0.9861 | 1.9595 |
| linear | 0.1626 | −0.0662 | 5.2848 | 5.8661 | 4.0393 | 4.5063 |
| rf | 0.9603 | 0.7098 | 1.144 | 2.9992 | 0.7653 | 2.0933 |
| svr | 0.065 | 0.0078 | 5.5839 | 5.6846 | 4.1561 | 4.3284 |
| xgb | 1.0 | 0.851 | 0.0019 | 1.9605 | 0.0012 | 0.9859 |

| Model | Train_R2 | Test_R2 | Train_RMSE | Test_RMSE | Train_MAE | Test_MAE |
|---|---|---|---|---|---|---|
| extratree | 1.0 | 0.761 | 0.0003 | 4.0102 | 0.0 | 2.2805 |
| gbr | 0.9499 | 0.7783 | 1.9313 | 3.9277 | 1.4679 | 2.911 |
| linear | 0.2412 | 0.0454 | 7.5258 | 8.4144 | 6.1603 | 6.8591 |
| rf | 0.9499 | 0.6401 | 1.9279 | 5.1109 | 1.4221 | 3.8847 |
| svr | 0.008 | −0.0362 | 8.607 | 8.8023 | 6.7411 | 6.9645 |
| xgb | 1.0 | 0.8474 | 0.004 | 3.0861 | 0.0026 | 2.0641 |

| Model | Train_R2 | Test_R2 | Train_RMSE | Test_RMSE | Train_MAE | Test_MAE |
|---|---|---|---|---|---|---|
| extratree | 1.0 | 0.9015 | 0.0 | 1.3417 | 0.0 | 0.6102 |
| gbr | 0.9582 | 0.8262 | 0.9646 | 1.8605 | 0.7309 | 1.2738 |
| linear | 0.2777 | 0.1158 | 4.0275 | 4.44 | 3.1751 | 3.5527 |
| rf | 0.9689 | 0.7702 | 0.8302 | 2.2213 | 0.5761 | 1.5885 |
| svr | 0.037 | −0.0016 | 4.6521 | 4.7545 | 3.4996 | 3.643 |
| xgb | 1.0 | 0.9108 | 0.0016 | 1.0959 | 0.001 | 0.5137 |

References

1. Cinque, M. “Lost in translation”. Soft skills development in European countries. Tuning J. High. Educ. 2016, 3, 389–427.

2. Kubátová, J.; Müller, M.; Kosina, D.; Kročil, O.; Slavíčková, P. Soft Skills for the 21st Century: Defining a Framework for Navigating Human-Centered Development in an AI-Driven World; Springer Nature: Berlin/Heidelberg, Germany, 2025.

3. Labobar, J.; Malatuny, Y.G. Soft Skill Development of Stakpn Sentani Christian Religious Education Graduates in the Workplace. In Proceedings of the 4th International Conference on Progressive Education 2022 (ICOPE 2022), Lampung, Indonesia, 15–16 October 2022; Atlantis Press: Dordrecht, The Netherlands, 2022; pp. 226–236.

4. Sejzi, A.A.; Aris, B.; Yuh, C.P. Important soft skills for university students in 21th century. In Proceedings of the 4th International Graduate Conference on Engineering, Science, and Humanities (IGCESH 2013), Johor, Malaysia, 16–17 April 2013.

5. Schulz, B. The importance of soft skills: Education beyond academic knowledge. J. Lang. Commun. 2008, 146–154.

6. Marin-Zapata, S.I.; Román-Calderón, J.P.; Robledo-Ardila, C.; Jaramillo-Serna, M.A. Soft skills, do we know what we are talking about? Rev. Manag. Sci. 2022, 16, 969–1000.

7. Vasanthakumari, S. Soft skills and its application in work place. World J. Adv. Res. Rev. 2019, 3, 066–072.

8. Tripathy, M. Relevance of Soft Skills in Career Success. MIER J. Educ. Stud. Trends Pract. 2020, 10, 91–102.

9. Fletcher, S.; Thornton, K.R. The Top 10 Soft Skills in Business Today Compared to 2012. Bus. Prof. Commun. Q. 2023, 86, 411–426.

10. Robles, M.M. Executive perceptions of the top 10 soft skills needed in today’s workplace. Bus. Commun. Q. 2012, 75, 453–465.

11. Abina, A.; Batkovič, T.; Cestnik, B.; Kikaj, A.; Kovačič Lukman, R.; Kurbus, M.; Zidanšek, A. Decision support concept for improvement of sustainability-related competences. Sustainability 2022, 14, 8539.

12. Farao, C.; Bernuzzi, C.; Ronchetti, C. The crucial role of green soft skills and leadership for sustainability: A case study of an Italian small and medium enterprise operating in the food sector. Sustainability 2023, 15, 15841.

13. Sujová, E.; Čierna, H.; Simanová, Ľ.; Gejdoš, P.; Štefková, J. Soft skills integration into business processes based on the requirements of employers—Approach for sustainable education. Sustainability 2021, 13, 13807.

14. Seetha, N. Are soft skills important in the workplace? A preliminary investigation in Malaysia. Int. J. Acad. Res. Bus. Soc. Sci. 2014, 4, 44.

15. Daly, S.; McCann, C.; Phillips, K. Teaching soft skills in healthcare and higher education: A scoping review protocol. Soc. Sci. Protoc. 2022, 5, 1–8.

16. Ntola, P.; Nevines, E.; Qwabe, L.Q.; Sabela, M.I. A survey of soft skills expectations: A view from work integrated learning students and the chemical industry. J. Chem. Educ. 2024, 101, 984–992.

17. Basir, N.M.; Zubairi, Y.Z.; Jani, R.; Wahab, D.A. Soft skills and graduate employability: Evidence from Malaysian tracer study. Pertanika J. Soc. Sci. Humanit. 2022, 30, 1975–1986.

18. Touloumakos, A.K. Expanded yet restricted: A mini review of the soft skills literature. Front. Psychol. 2020, 11, 2207.

19. Bhati, H.; Khan, P. The importance of soft skills in the workplace. Journal of Student Research 2022. Available online: https://www.jsr.org/hs/index.php/path/article/view/2764 (accessed on 12 October 2025).

20. Tadimeti, V. E-Soft Skills Training: Challenges and Opportunities. IUP J. Soft Ski. 2014, 8, 34–44.

21. Gibb, S. Soft skills assessment: Theory development and the research agenda. Int. J. Lifelong Educ. 2014, 33, 455–471.

22. Cukier, W.; Hodson, J.; Omar, A. “Soft” Skills Are Hard. A Review of the Literature 2015; Ryerson University: Toronto, ON, Canada, 2015.

23. Kutz, M.R.; Stiltner, S. Program Directors’ Perception of the Importance of Soft Skills in Athletic Training. Athl. Train. Educ. J. 2021, 16, 53–58.

24. AbuJbara, N.A.K.; Worley, J.A. Leading toward new horizons with soft skills. Horiz. Int. J. Learn. Futures 2018, 26, 247–259.

25. Dondi, M.; Klier, J.; Panier, F.; Schubert, J. Defining the Skills Citizens Will Need in the Future World of Work; McKinsey & Company: New York, NY, USA, 2021; Volume 25, pp. 1–19.

26. Kyllonen, P.C. Soft skills for the workplace. Change Mag. High. Learn. 2013, 45, 16–23.

27. Snape, P. Enduring Learning: Integrating C21st Soft Skills through Technology Education. Des. Technol. Educ. Int. J. 2017, 22, 48–59.

28. Altomari, L.; Altomari, N.; Iazzolino, G. Gamification and Soft Skills Assessment in the Development of a Serious Game: Design and Feasibility Pilot Study. JMIR Serious Games 2023, 11, e45436.

29. Nihal, K.S.; Pallavi, L.; Raj, R.; Babu, C.M.; Mishra, B. Enhancing Soft Skill Development with ChatGPT and VR: An Exploratory Study. In Proceedings of the 2023 International Conference on Research Methodologies in Knowledge Management, Artificial Intelligence and Telecommunication Engineering (RMKMATE), Chennai, India, 1–2 November 2023; pp. 1–6.

30. European Commission: Directorate-General for Communications Networks, Content and Technology. Understanding the Value of a European Video Games Society—Final Report; Publications Office of the European Union: Luxembourg, 2023; Available online: https://data.europa.eu/doi/10.2759/332575 (accessed on 12 October 2025).

31. Barr, M. Video games can develop graduate skills in higher education students: A randomised trial. Comput. Educ. 2017, 113, 86–97.

32. De Freitas, S. Are games effective learning tools? A review of educational games. J. Educ. Technol. Soc. 2018, 21, 74–84.

33. Qian, M.; Clark, K.R. Game-based Learning and 21st century skills: A review of recent research. Comput. Hum. Behav. 2016, 63, 50–58.

34. McGowan, N.; López-Serrano, A.; Burgos, D. Serious games and soft skills in higher education: A case study of the design of compete! Electronics 2023, 12, 1432.

35. Bezanilla, M.J.; Arranz, S.; Rayón, A.; Rubio, I.; Menchaca, I.; Guenaga, M.; Aguilar, E. A proposal for generic competence assessment in a serious game. J. New Approaches Educ. Res. 2014, 3, 42–51.

36. Connolly, T.M.; Boyle, E.A.; MacArthur, E.; Hainey, T.; Boyle, J.M. A systematic literature review of empirical evidence on computer games and serious games. Comput. Educ. 2012, 59, 661–686.

37. Krath, J.; Schürmann, L.; Von Korflesch, H.F. Revealing the theoretical basis of gamification: A systematic review and analysis of theory in research on gamification, serious games and game-based learning. Comput. Hum. Behav. 2021, 125, 106963.

38. Subhash, S.; Cudney, E.A. Gamified learning in higher education: A systematic review of the literature. Comput. Hum. Behav. 2018, 87, 192–206.

39. Zhonggen, Y. A meta-analysis of use of serious games in education over a decade. Int. J. Comput. Games Technol. 2019, 2019, 4797032.

40. Sutil-Martín, D.L.; Otamendi, F.J. Soft skills training program based on serious games. Sustainability 2021, 13, 8582.

41. Clark, D.B.; Tanner-Smith, E.E.; Killingsworth, S.S. Digital games, design, and learning: A systematic review and meta-analysis. Rev. Educ. Res. 2016, 86, 79–122.

42. Granic, I.; Lobel, A.; Engels, R.C. The benefits of playing video games. Am. Psychol. 2014, 69, 66.

43. Plass, J.L.; Homer, B.D.; Kinzer, C.K. Foundations of game-based learning. Educ. Psychol. 2015, 50, 258–283.

44. Squire, K. From content to context: Videogames as designed experience. Educ. Res. 2006, 35, 19–29.

45. Ryan, R.M.; Deci, E.L. Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am. Psychol. 2000, 55, 68.

46. Eseryel, D.; Law, V.; Ifenthaler, D.; Ge, X.; Miller, R. An investigation of the interrelationships between motivation, engagement, and complex problem solving in game-based learning. J. Educ. Technol. Soc. 2014, 17, 42–53.

47. Shute, V.J.; Wang, L.; Greiff, S.; Zhao, W.; Moore, G. Measuring problem solving skills via stealth assessment in an engaging video game. Comput. Hum. Behav. 2016, 63, 106–117.

48. Lie, A.; Stephen, A.; Supit, L.R.; Achmad, S.; Sutoyo, R. Using strategy video games to improve problem solving and communication skills: A systematic literature review. In Proceedings of the 2022 4th International Conference on Cybernetics and Intelligent System (ICORIS), Prapat, Indonesia, 8–9 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–5.

49. Baniqued, P.L.; Kranz, M.B.; Voss, M.W.; Lee, H.; Cosman, J.D.; Severson, J.; Kramer, A.F. Cognitive training with casual video games: Points to consider. Front. Psychol. 2014, 4, 1010.

50. Barr, M. Graduate Skills and Game-Based Learning: Using Video Games for Employability in Higher Education; Springer Nature: Berlin/Heidelberg, Germany, 2019.

51. Mao, W.; Cui, Y.; Chiu, M.M.; Lei, H. Effects of game-based learning on students’ critical thinking: A meta-analysis. J. Educ. Comput. Res. 2022, 59, 1682–1708.

52. Pusey, M.; Wong, K.W.; Rappa, N.A. Resilience interventions using interactive technology: A scoping review. Interact. Learn. Environ. 2022, 30, 1940–1955.

53. Glass, B.D.; Maddox, W.T.; Love, B.C. Real-time strategy game training: Emergence of a cognitive flexibility trait. PLoS ONE 2013, 8, e70350.

54. Strobach, T.; Frensch, P.A.; Schubert, T. Video game practice optimizes executive control skills in dual-task and task switching situations. Acta Psychol. 2012, 140, 13–24.

55. Shute, V.J. Stealth assessment in computer-based games to support learning. Comput. Games Instr. 2011, 55, 503–524.

56. Rahimi, S.; Shute, V.J. Stealth assessment: A theoretically grounded and psychometrically sound method to assess, support, and investigate learning in technology-rich environments. Educ. Technol. Res. Dev. 2024, 72, 2417–2441.

57. Yannakakis, G.N.; Togelius, J. Artificial Intelligence and Games; Springer: New York, NY, USA, 2018; Volume 2, pp. 1502–2475.

58. Swiecki, Z.; Khosravi, H.; Chen, G.; Martinez-Maldonado, R.; Lodge, J.M.; Milligan, S.; Selwyn, N.; Gašević, D. Assessment in the age of artificial intelligence. Comput. Educ. Artif. Intell. 2022, 3, 100075.

59. Fragoso, L.; Stanley, K.G. Stable: Analyzing player movement similarity using text mining. In Proceedings of the 2021 IEEE Conference on Games (CoG), Copenhagen, Denmark, 17–20 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–8.

60. Melo, S.A.; Kohwalter, T.C.; Clua, E.; Paes, A.; Murta, L. Player behavior profiling through provenance graphs and representation learning. In Proceedings of the 15th International Conference on the Foundations of Digital Games 2020, Bugibba, Malta, 15–18 September 2020; pp. 1–11.

61. Lee, H.; Lee, S.; Nallapati, R.; Uh, Y.; Lee, B. Characterizing and Quantifying Expert Input Behavior in League of Legends. In Proceedings of the CHI Conference on Human Factors in Computing Systems 2024, Honolulu, HI, USA, 11–16 May 2024; pp. 1–21.

62. Thawonmas, R.; Iizuka, K. Visualization of online-game players based on their action behaviors. Int. J. Comput. Games Technol. 2008, 2008, 906931.

63. Dye, M.W.; Green, C.S.; Bavelier, D. Increasing speed of processing with action video games. Curr. Dir. Psychol. Sci. 2009, 18, 321–326.

64. Jordan, T.; Dhamala, M. Enhanced dorsal attention network to salience network interaction in video gamers during sensorimotor decision-making tasks. Brain Connect. 2023, 13, 97–106.

65. McDermott, A.F.; Bavelier, D.; Green, C.S. Memory abilities in action video game players. Comput. Hum. Behav. 2014, 34, 69–78.

66. Mack, D.J.; Wiesmann, H.; Ilg, U.J. Video game players show higher performance but no difference in speed of attention shifts. Acta Psychol. 2016, 169, 11–19.

67. Steinkuehler, C.; Duncan, S. Scientific habits of mind in virtual worlds. J. Sci. Educ. Technol. 2008, 17, 530–543.

68. Bailey, E.; Miyata, K. Improving video game project scope decisions with data: An analysis of achievements and game completion rates. Entertain. Comput. 2019, 31, 100299.

69. Lopez-Gordo, M.A.; Kohlmorgen, N.; Morillas, C.; Pelayo, F. Performance prediction at single-action level to a first-person shooter video game. Virtual Real. 2021, 25, 681–693.

70. Sapienza, A.; Zeng, Y.; Bessi, A.; Lerman, K.; Ferrara, E. Individual performance in team-based online games. R. Soc. Open Sci. 2018, 5, 180329.

71. Cretenoud, A.F.; Barakat, A.; Milliet, A.; Choung, O.H.; Bertamini, M.; Constantin, C.; Herzog, M.H. How do visual skills relate to action video game performance? J. Vis. 2021, 21, 10.

72. Karmakar, B.; Liu, P.; Mukherjee, G.; Che, H.; Dutta, S. Improved retention analysis in freemium role-playing games by jointly modelling players’ motivation, progression and churn. J. R. Stat. Soc. Ser. A Stat. Soc. 2022, 185, 102–133.

73. Lange, A.; Somov, A.; Stepanov, A.; Burnaev, E. Building a behavioral profile and assessing the skill of video game players. IEEE Sens. J. 2021, 22, 481–488.

74. Zhao, S.; Xu, Y.; Luo, Z.; Tao, J.; Li, S.; Fan, C.; Pan, G. Player behavior modeling for enhancing role-playing game engagement. IEEE Trans. Comput. Soc. Syst. 2021, 8, 464–474.

75. Hou, H.T. Integrating cluster and sequential analysis to explore learners’ flow and behavioral patterns in a simulation game with situated-learning context for science courses: A video-based process exploration. Comput. Hum. Behav. 2015, 48, 424–435.

76. Kang, J.; Liu, M.; Qu, W. Using gameplay data to examine learning behavior patterns in a serious game. Comput. Hum. Behav. 2017, 72, 757–770.

77. Han, Y.J.; Moon, J.; Woo, J. Prediction of churning game users based on social activity and churn graph neural networks. IEEE Access 2024, 12, 101971–101984.

78. Loh, C.S.; Sheng, Y. Measuring expert performance for serious games analytics: From data to insights. In Serious Games Analytics: Methodologies for Performance Measurement, Assessment, and Improvement; Springer: Berlin/Heidelberg, Germany, 2015; pp. 101–134.

79. Marczak, R.; Schott, G.; Hanna, P. Postprocessing gameplay metrics for gameplay performance segmentation based on audiovisual analysis. IEEE Trans. Comput. Intell. AI Games 2014, 7, 279–291.

80. Tlili, A.; Chang, M.; Moon, J.; Liu, Z.; Burgos, D.; Chen, N.S. A systematic literature review of empirical studies on learning analytics in educational games. Int. J. Interact. Multimed. Artif. Intell. 2021, 7, 250–261.

81. Afonso, A.P.; Carmo, M.B.; Gonçalves, T.; Vieira, P. VisuaLeague: Player performance analysis using spatial-temporal data. Multimed. Tools Appl. 2019, 78, 33069–33090.

82. El-Nasr, M.S.; Drachen, A.; Canossa, A. Game Analytics; Springer: Berlin/Heidelberg, Germany, 2016; p. 13.

83. Min, W.; Frankosky, M.H.; Mott, B.W.; Rowe, J.P.; Smith, A.; Wiebe, E.; Boyer, K.E.; Lester, J.C. DeepStealth: Game-based learning stealth assessment with deep neural networks. IEEE Trans. Learn. Technol. 2019, 13, 312–325.

84. Smerdov, A.; Somov, A.; Burnaev, E.; Zhou, B.; Lukowicz, P. Detecting video game player burnout with the use of sensor data and machine learning. IEEE Internet Things J. 2021, 8, 16680–16691.

85. de Almeida Rocha, D.; Duarte, J.C. Simulating human behaviour in games using machine learning. In Proceedings of the 2019 18th Brazilian Symposium on Computer Games and Digital Entertainment (SBGames), Rio de Janeiro, Brazil, 28–31 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 163–172.

86. Mustač, K.; Bačić, K.; Skorin-Kapov, L.; Sužnjević, M. Predicting player churn of a Free-to-Play mobile video game using supervised machine learning. Appl. Sci. 2022, 12, 2795.

87. Gee, J.P. What video games have to teach us about learning and literacy. Comput. Entertain. (CIE) 2003, 1, 20.

88. Frommel, J.; Phillips, C.; Mandryk, R.L. Gathering self-report data in games through NPC dialogues: Effects on data quality, data quantity, player experience, and information intimacy. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–12.

89. Stafford, T.; Dewar, M. Tracing the trajectory of skill learning with a very large sample of online game players. Psychol. Sci. 2014, 25, 511–518.

90. Kizilkaya, G.; Askar, P. The development of a reflective thinking skill scale towards problem solving. Egit. Ve Bilim 2009, 34, 82.

91. Payan-Carreira, R.; Sacau-Fontenla, A.; Rebelo, H.; Sebastião, L.; Pnevmatikos, D. Development and Validation of a Critical Thinking Assessment-Scale Short Form. Educ. Sci. 2022, 12, 938.

92. Bracken, B.A.; Bai, W.; Fithian, E.; Lamprecht, M.S.; Little, C.; Quek, C. The Test of Critical Thinking; Center for Gifted Education, College of William & Mary: Williamsburg, VA, USA, 2003.

93. Burke, C.S.; Pierce, L.G.; Salas, E. (Eds.) Understanding Adaptability: A Prerequisite for Effective Performance Within Complex Environments; JAI Press: Stamford, CT, USA, 2006.

94. Seisdedos, N. CAMBIOS Test de Flexibilidad Cognitiva; TEA Ediciones: Madrid, Spain, 2004.

95. Britton, B.K.; Tesser, A. Effects of time-management practices on college grades. J. Educ. Psychol. 1991, 83, 405–410.

96. Oei, A.C.; Patterson, M.D. Enhancing cognition with video games: A multiple game training study. PLoS ONE 2013, 8, e58546.

| Gender | Complex Problem Solving | Critical Thinking | Flexibility/Adaptability | Time Management | Control |

|---|---|---|---|---|---|

| Male | 14 | 9 | 16 | 17 | 19 |

| Female | 11 | 5 | 5 | 9 | 10 |

| Other | 1 | 2 | 0 | 0 | 0 |

| Prefer not to say | 1 | 1 | 0 | 0 | 0 |

| Total | 27 | 17 | 21 | 26 | 29 |

| Soft Skill | Test | Video Games |

|---|---|---|

| CPS | Reflective Thinking Skill Scale for Problem Solving (RTSSPS) (hereafter CPS) | Train Valley 2 |

| CT | Critical Thinking Assessment Scale Short Form (hereafter CTS); Test of Critical Thinking (hereafter CTO) | Lightseekers |

| F/A | I-ADAPT-M (hereafter F/AS); CAMBIOS (hereafter F/AO) | Relic Hunters Zero: Remix |

| TM | Time Management Questionnaire (hereafter TM) | Minion Masters |

| Query | Description |

|---|---|

| GetPlayerSummaries | Returns basic profile information. Some data associated with a Steam account may be hidden if the user has their profile visibility set to “Friends Only” or “Private”. In that case, only public data will be returned. |

| GetFriendList | Returns the friend list of any Steam user, provided their Steam community profile visibility is set to “Public”. |

| GetOwnedGames | Returns a list of games a player owns along with some playtime information. |

| GetRecentlyPlayedGames | Returns a list of games a player has played in the last two weeks. |

| GetPlayerAchievements | Returns a list of achievements for this user by the game identifier (app ID). |

| GetGlobalAchievementPercentagesForApp | Returns an overview of the global achievements of a specific game, expressed as percentages. |
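As a minimal sketch of how the queries listed above might be issued, the following Python snippet builds request URLs for the public Steam Web API. The interface names and version strings follow the public Steam Web API documentation as best understood here and should be re-checked before use; the helper `build_query_url`, the API key, and the Steam ID are hypothetical placeholders and are not taken from the study.

```python
from urllib.parse import urlencode

BASE_URL = "https://api.steampowered.com"

# Interface and version assumed for each query in the table above.
# These are assumptions based on the public Steam Web API documentation.
ENDPOINTS = {
    "GetPlayerSummaries": ("ISteamUser", "v0002"),
    "GetFriendList": ("ISteamUser", "v0001"),
    "GetOwnedGames": ("IPlayerService", "v0001"),
    "GetRecentlyPlayedGames": ("IPlayerService", "v0001"),
    "GetPlayerAchievements": ("ISteamUserStats", "v0001"),
    "GetGlobalAchievementPercentagesForApp": ("ISteamUserStats", "v0002"),
}


def build_query_url(method, **params):
    """Return the request URL for one query (hypothetical helper)."""
    interface, version = ENDPOINTS[method]
    # Ask for a JSON response in addition to the caller's parameters.
    query = urlencode({**params, "format": "json"})
    return f"{BASE_URL}/{interface}/{method}/{version}/?{query}"


# Placeholder credentials: replace with a real key and a 64-bit Steam ID.
url_games = build_query_url(
    "GetOwnedGames",
    key="YOUR_API_KEY",
    steamid="STEAMID64_PLACEHOLDER",
    include_appinfo=1,
)
print(url_games)
```

The resulting URL can be fetched with any HTTP client. Profile visibility settings still apply, so a private profile may return only part of the data.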

| Name | Tags (Top 12) | Expert Assigned Soft Skill | AI Embedding |

|---|---|---|---|

| Lightseekers | Free to Play, Strategy, Card Game | CT | CT-0.2 TM-0.0 CPS-0.0 F/A-0.0 |

| Minion Masters | PvE, Trading Card Game, Stylized, Turn-Based Tactics, Free to Play, Multiplayer, Card Battler, Tactical RPG, PvP, Strategy, Deckbuilding, Card Game | TM | CT-0.5 TM-0.3 CPS-0.2 F/A-0.0 |

| Relic Hunters Zero: Remix | Looter Shooter, Twin Stick Shooter, Action, Top-Down Shooter, Action Roguelike, Adventure, Co-Op, Multiplayer, Free to Play, Bullet Hell, Pixel Graphics, Local Co-Op | F/A | F/A-0.4 CT-0.0 TM-0.0 CPS-0.0 |

| Train Valley 2 | Quick-Time Events, Multiple Endings, Trains, Relaxing, Simulation, Strategy, Singleplayer, Management, Level Editor, Indie, Building, Puzzle | CPS | CT-0.2 TM-0.2 CPS-0.1 F/A-0.0 |

| Video Game | Average Time Played (h) (SD) | Average No. of Achievements (SD) |

|---|---|---|

| Lightseekers (n = 15) | 16.63 (SD = 1.89) | 10.93 (SD = 4.69) |

| Minion Masters (n = 27) | 18.20 (SD = 5.15) | 13.27 (SD = 6.41) |

| Relic Hunters Zero: Remix (n = 19) | 15.6 (SD = 1.14) | 7.19 (SD = 6.54) |

| Train Valley 2 (n = 26) | 20.34 (SD = 12.10) | 21.45 (SD = 8.45) |

| Group | Pre CPS | Pre CTO | Pre CTS | Pre F/AO | Pre F/AS | Pre TM | Post CPS | Post CTO | Post CTS | Post F/AO | Post F/AS | Post TM |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Lightseekers (n = 15) | 42.2 (SD = 5.25) | 32 (SD = 8.41) | 235.26 (SD = 67.03) | 18.86 (SD = 6.71) | 126.73 (SD = 11.32) | 56.26 (SD = 8.48) | 42.06 (SD = 6.85) | 31.53 (SD = 8.36) | 246.93 (SD = 49.02) | 20.13 (SD = 6.78) | 128.46 (SD = 11.07) | 55.13 (SD = 7.16) |

| Minion Masters (n = 27) | 41.29 (SD = 4.85) | 32.07 (SD = 4.67) | 247.81 (SD = 41.41) | 20.29 (SD = 5.80) | 127.18 (SD = 8.52) | 56.66 (SD = 8.41) | 42.81 (SD = 5.09) | 33.03 (SD = 6.09) | 256.88 (SD = 39.77) | 21.88 (SD = 4.61) | 131.81 (SD = 11.37) | 57.81 (SD = 9.81) |

| Relic Hunters Zero: Remix (n = 19) | 41.05 (SD = 5.32) | 34.52 (SD = 3.53) | 232.73 (SD = 44.54) | 17.47 (SD = 7.11) | 129 (SD = 12.45) | 57.94 (SD = 7.74) | 41.52 (SD = 7.23) | 34.05 (SD = 3.59) | 249.89 (SD = 50.01) | 19.42 (SD = 6.51) | 131.15 (SD = 10.95) | 58 (SD = 8.47) |

| Train Valley 2 (n = 26) | 41.65 (SD = 5.48) | 32.34 (SD = 7.00) | 245.42 (SD = 48.89) | 18.30 (SD = 5.94) | 129.84 (SD = 11.10) | 61 (SD = 6.82) | 43 (SD = 4.40) | 33.88 (SD = 5.53) | 256.07 (SD = 45.50) | 19.44 (SD = 6.80) | 133.30 (SD = 9.51) | 61.42 (SD = 7.34) |

| Control Group (n = 29) | 42.17 (SD = 6.39) | 32.31 (SD = 5.49) | 252.48 (SD = 54.34) | 18.10 (SD = 6.69) | 129.89 (SD = 11.75) | 59.10 (SD = 8.67) | 42.13 (SD = 5.78) | 33.27 (SD = 5.60) | 252.20 (SD = 47.64) | 19.24 (SD = 6.18) | 134.79 (SD = 11.06) | 58.82 (SD = 7.62) |

| Test | Game Group | p Post | U Post | R Post | Post ML | Post MH | U Change | p Change | R Change | Change ML | Change MH | Effect Size |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| CPS | Lightseekers | 0.7380 | 263 | 0.0497 | −3 | 6 | 243.5 | 0.9377 | −0.0128 | −6 | 4 | 0.0161 |

| CPS | Minion Masters | 0.4650 | 495 | 0.0949 | −3 | 4 | 514.5 | 0.3067 | 0.1323 | −2 | 4 | −0.1548 |

| CPS | Relic Hunters Zero: Remix | 0.8044 | 300 | −0.0355 | −4 | 4 | 331.0 | 0.7457 | 0.0461 | −5 | 5 | −0.0558 |

| CPS | Train Valley 2 | 0.3086 | 496 | 0.1331 | −2 | 5 | 487.0 | 0.3788 | 0.1152 | −2.5 | 4 | −0.1351 |

| CTO | Lightseekers | 0.8062 | 236 | −0.0369 | −4 | 5 | 200.0 | 0.2941 | −0.1524 | −5 | 2 | 0.1919 |

| CTO | Minion Masters | 0.9169 | 453 | 0.0143 | −3 | 3 | 519.5 | 0.2718 | 0.1419 | −1 | 3 | −0.1661 |

| CTO | Relic Hunters Zero: Remix | 0.6749 | 336 | 0.0592 | −3 | 4 | 260.0 | 0.3119 | −0.1409 | −3 | 2 | 0.1706 |

| CTO | Train Valley 2 | 0.5502 | 468.5 | 0.0785 | −2 | 4 | 462.5 | 0.6129 | 0.0665 | −2 | 3 | −0.0780 |

| CTS | Lightseekers | 0.8674 | 239.5 | −0.0256 | −48 | 50 | 295.0 | 0.2957 | 0.1524 | −16 | 52 | −0.1919 |

| CTS | Minion Masters | 0.5131 | 490 | 0.0853 | −19 | 38 | 538.5 | 0.1692 | 0.1783 | −11 | 37 | −0.2087 |

| CTS | Relic Hunters Zero: Remix | 0.9772 | 315.5 | 0.0052 | −37 | 44 | 416.5 | 0.0513 | 0.2714 | 3 | 42 | −0.3285 |

| CTS | Train Valley 2 | 0.5720 | 466.5 | 0.0745 | −18.5 | 45 | 545.5 | 0.0764 | 0.2315 | −1 | 42 | −0.2715 |

| F/AO | Lightseekers | 0.3548 | 289.5 | 0.1348 | −2 | 7 | 259.5 | 0.7967 | 0.0385 | −2 | 3 | −0.0484 |

| F/AO | Minion Masters | 0.0283 | 593 | 0.2829 | −1 | 8 | 501.5 | 0.4074 | 0.1074 | −2 | 3 | −0.1257 |

| F/AO | Relic Hunters Zero: Remix | 0.7029 | 334 | 0.0540 | −2 | 6 | 349.5 | 0.4970 | 0.0948 | −2 | 3 | −0.1148 |

| F/AO | Train Valley 2 | 0.4449 | 461.5 | 0.1010 | −5 | 7 | 434.0 | 0.7400 | 0.0443 | −1 | 2 | −0.0521 |

| F/AS | Lightseekers | 0.0885 | 170.5 | −0.2472 | −13 | 0 | 212.0 | 0.4356 | −0.1139 | −8 | 4 | 0.1434 |

| F/AS | Minion Masters | 0.1956 | 358 | −0.1678 | −10 | 2 | 509.5 | 0.3447 | 0.1227 | −1 | 7 | −0.1436 |

| F/AS | Relic Hunters Zero: Remix | 0.2820 | 256.5 | −0.1502 | −11 | 3 | 291.0 | 0.6754 | −0.0592 | −7 | 9 | 0.0717 |

| F/AS | Train Valley 2 | 0.5769 | 392 | −0.0735 | −9 | 5 | 430.0 | 0.9939 | 0.0019 | −4.5 | 6 | −0.0023 |

| TM | Lightseekers | 0.2003 | 183.5 | −0.1880 | −8 | 1.5 | 223.5 | 0.7142 | −0.0549 | −6 | 4 | 0.0687 |

| TM | Minion Masters | 0.8789 | 421.5 | −0.0207 | −7 | 6 | 537.0 | 0.1106 | 0.2079 | −1 | 5 | −0.2430 |

| TM | Relic Hunters Zero: Remix | 0.9844 | 302.5 | −0.0040 | −6 | 6 | 289.5 | 0.7843 | −0.0395 | −4 | 4 | 0.0476 |

| TM | Train Valley 2 | 0.1249 | 514.5 | 0.2022 | −0.5 | 7.5 | 448.0 | 0.6213 | 0.0656 | −2 | 4 | −0.0769 |
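The U, p, and R columns above suggest a rank-based comparison of each game group against the control group, such as the Mann-Whitney U test, although this excerpt names neither the test nor the effect size formula. Under that assumption, the sketch below computes U with a normal approximation (no tie or continuity correction) and uses r = z / sqrt(N) as one common effect size. The function names and the sample scores are illustrative only and are not taken from the study.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U for group_a, z, two-sided p, r) via normal approximation."""
    n1, n2 = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n1])
    u_a = rank_sum_a - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u_a - mean_u) / sd_u
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    r = z / math.sqrt(n1 + n2)  # effect size, assumed convention
    return u_a, z, p_two_sided, r


# Illustrative post-test scores (not study data).
game_group = [44, 47, 41, 50, 46]
control_group = [42, 40, 45, 39, 43]
u, z, p, r = mann_whitney_u(game_group, control_group)
print(f"U={u}, z={z:.3f}, p={p:.4f}, r={r:.3f}")
```

For small samples or heavily tied data, an exact or tie-corrected procedure from a statistics library would be the safer choice.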

| | RF: CT | RF: FA | RF: PS | RF: TM | GBR: CT | GBR: FA | GBR: PS | GBR: TM | XGB: CT | XGB: FA | XGB: PS | XGB: TM | EXTRATREE: CT | EXTRATREE: FA | EXTRATREE: PS | EXTRATREE: TM | SVR: CT | SVR: FA | SVR: PS | SVR: TM |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| LINEAR |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| RF | - | - | - | - |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| GBR | - | - | - | - | - | - | - | - |  |  |  |  |  |  |  |  |  |  |  |  |

| | LINEAR | RF | GBR | XGB | EXTRATREE | SVR |

|---|---|---|---|---|---|---|

| CT | 0.055 ± 0.12 | 0.842 ± 0.07 | 0.914 ± 0.06 | 0.937 ± 0.08 | 0.905 ± 0.09 | 0.002 ± 0.06 |

| FA | 0.045 ± 0.13 | 0.640 ± 0.12 | 0.778 ± 0.11 | 0.847 ± 0.14 | 0.761 ± 0.14 | −0.036 ± 0.04 |

| PS | 0.116 ± 0.17 | 0.770 ± 0.12 | 0.826 ± 0.14 | 0.911 ± 0.14 | 0.901 ± 0.09 | −0.002 ± 0.04 |

| TM | −0.066 ± 0.18 | 0.710 ± 0.14 | 0.747 ± 0.14 | 0.851 ± 0.15 | 0.851 ± 0.13 | 0.008 ± 0.06 |
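Assuming the values above are coefficients of determination (R²) reported as mean ± standard deviation, the following sketch shows how R² and RMSE are computed from predictions. It also shows why a weak model can score near zero or below it: predicting the mean of the targets yields R² = 0, and anything worse yields a negative value. The scores used are toy numbers, not study data, and the function names are illustrative.

```python
import math


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Root mean squared error, in the same units as the target."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Toy post-test scores and three sets of predictions.
observed = [40, 42, 44, 46]
good_model = [41, 42, 43, 46]      # close to the observed scores
mean_baseline = [43, 43, 43, 43]   # always predicts the mean
poor_model = [46, 44, 42, 40]      # reverses the trend

print(r2_score(observed, good_model), rmse(observed, good_model))
print(r2_score(observed, mean_baseline))
print(r2_score(observed, poor_model))
```

With these toy values the first model explains 90% of the variance, the mean baseline explains none, and the reversed predictions give a negative R².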

| | No. of Games | Game Playtime (min) | Total Playtime (min) | No. of Achievements | CPS | CT | FA | TM |

|---|---|---|---|---|---|---|---|---|

| Start | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Week1 | 1 | 726 | 726 | 6 | 44.08 | 32.02 | 21.89 | 57.59 |

| Week2 | 1 | 982 | 982 | 9 | 44.08 | 32.02 | 23.34 | 59.04 |

| | No. of Games | Game Playtime (min) | Total Playtime (min) | No. of Achievements | CPS | CT | FA | TM |

|---|---|---|---|---|---|---|---|---|

| Start | 9 | 0 | 108,784 | 1528 | 40.40 | 31.48 | 21.43 | 55.20 |

| Week1 | 10 | 605 | 109,289 | 1537 | 40.40 | 31.48 | 26.10 | 59.87 |

| Week2 | 10 | 850 | 109,634 | 1538 | 40.40 | 31.48 | 28.81 | 62.57 |

| Week3 | 10 | 921 | 109,705 | 1538 | 40.40 | 31.48 | 28.89 | 62.71 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bartolomé, J.; del Río, I.; Martínez, A.; Aranguren, A.; Laña, I.; Alloza, S. Game On: Exploring the Potential for Soft Skill Development Through Video Games. Information 2025, 16, 918. https://doi.org/10.3390/info16100918
