3.1. Heuristic Construction

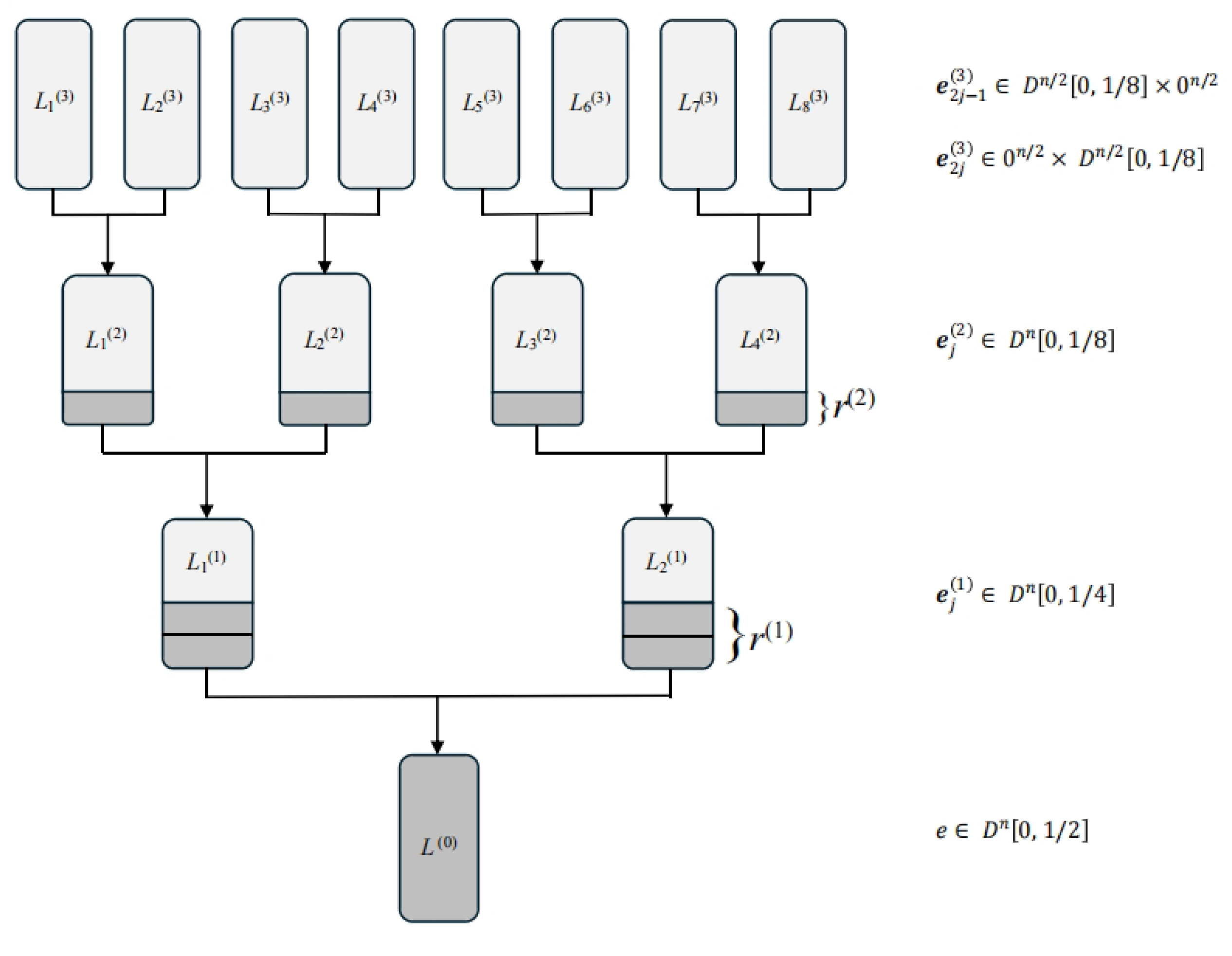

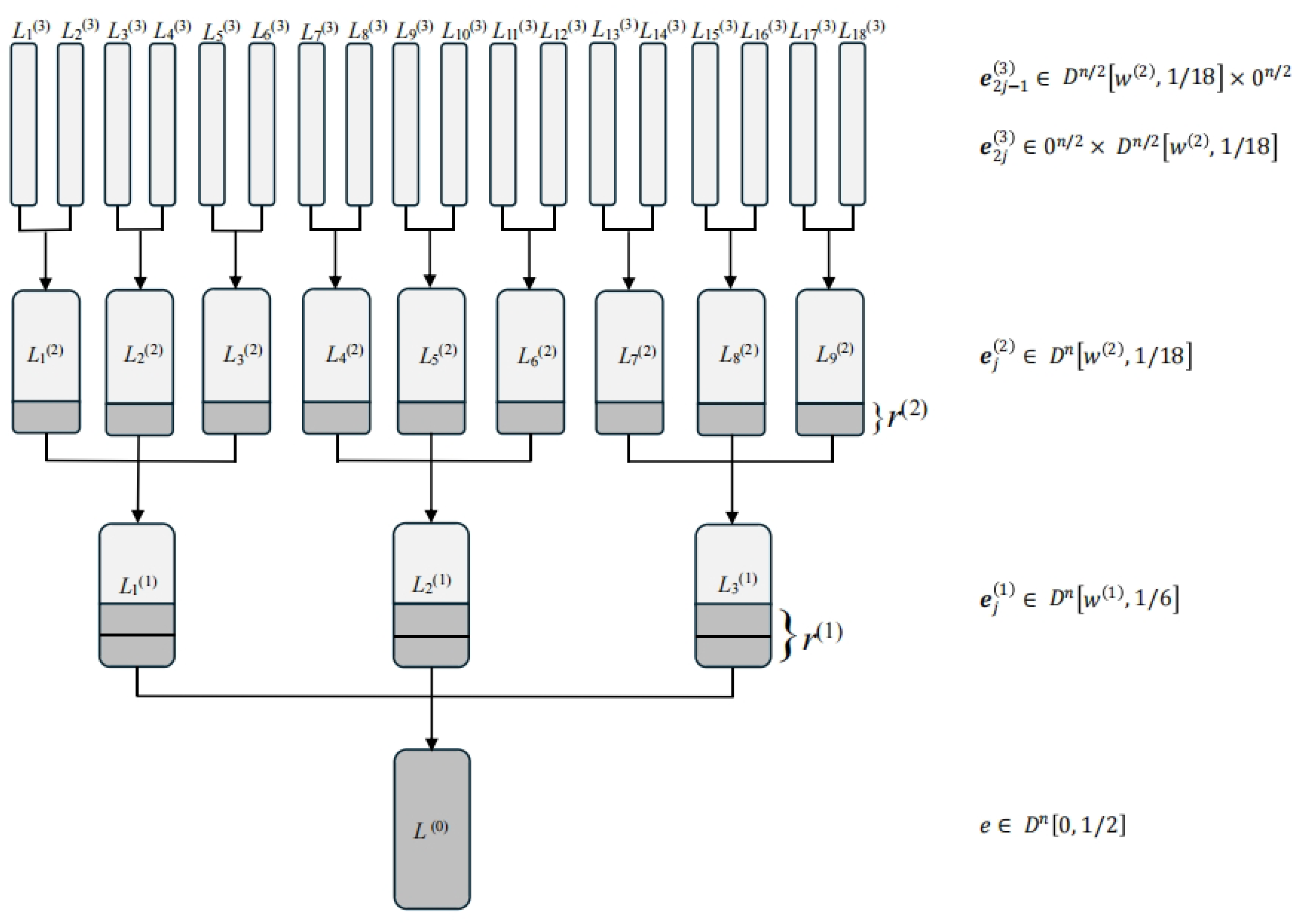

The proposed classical algorithm, which we refer to as TER, employs a ternary tree structure as illustrated in Figure 2. We encourage the reader to consult the figure for a clearer understanding of the algorithmic workflow. The tree has a depth of 3, a value found to be optimal for TER through numerical optimization (see Appendix A for details).

For clarity, we consider a subset-sum instance

with a solution

. The key idea is to represent

as a sum of three vectors in

, i.e.,

where

. This means each of these three vectors contains exactly

entries equal to −1,

entries equal to 1, and

entries equal to 0.

We begin by computing the number of representations and establishing the parameter

. For each 1-coordinate

in

, its decomposition via the k-th coordinates of

must be one of

Let denote the number of representations for each of the last three types. The total number of representations for the first three types is equal to , because has 1-coordinates.

For each 0-coordinate

in

, its decomposition via the k-th coordinates of

must be one of

excluding the trivial case

. Let

denote the number of each of these six nontrivial representations.
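The coordinate-wise case analysis above can be checked by brute force. The following is a minimal sketch, assuming each of the three summand vectors takes entries in {−1, 0, 1}; the helper name `coordinate_decompositions` is hypothetical and not part of the original algorithm description.

```python
from itertools import product

def coordinate_decompositions(target, alphabet=(-1, 0, 1)):
    """Return all ordered triples over `alphabet` whose entries sum to `target`."""
    return [t for t in product(alphabet, repeat=3) if sum(t) == target]

# Decompositions of a 1-coordinate: permutations of (1,0,0) and of (1,1,-1).
ones = coordinate_decompositions(1)
# Decompositions of a 0-coordinate: the trivial (0,0,0) plus permutations of (1,-1,0).
zeros = coordinate_decompositions(0)
nontrivial_zeros = [t for t in zeros if t != (0, 0, 0)]

print(len(ones), len(nontrivial_zeros))
```

The enumeration gives six decomposition types for a 1-coordinate and six nontrivial types for a 0-coordinate, matching the counts used in the text.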

Table 3 summarizes the counts for each type of level-1 representation.

The total number of representations is given by

where the two factors, respectively, account for the number of representations for 1-coordinates and 0-coordinates in the vector

.

The number of (−1)-coordinates of for is and the number of 1-coordinates is . Let , then for .

To capture a representation of

, we choose a modulus

where

, and random targets

. We then define the lists:

where

.

A valid solution is found when and . If no collision occurs, new values are sampled. The failure probability becomes negligible after polynomially many attempts.

The expected size of each list

is

These lists are constructed recursively. For example, to build , we represent as with .

Let

count each of the representations

,

, and

for the 1-coordinates of

, and

count each of the six nontrivial representations of the 0-coordinates. For the (−1)-coordinates, the representations are

Let

count each of the last three types.

Table 4 provides the counts for each type of level-2 representation.

The number of representations at this level is

The number of (−1)-coordinates of

for

is calculated as

and the number of 1-coordinates of

for

is calculated as

Let , then for .

Let

and choose random targets

for

. We define

for

, where

for

.

The expected size of each

is

The level-2 lists are built from the level-3 lists via a Meet-in-the-Middle approach.

for

, each of size

Note that we employ the binary tree only for the top level, because the representation technique is not used in this level.

Algorithm 2 outlines the TER algorithm with a depth of 3. In summary, it recursively constructs three lists that are expected to contain a single representation of . Similar to the HGJ algorithm, this algorithm executes the merge-and-filter operation repeatedly over several levels.
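To make the merge-and-filter step concrete, here is a toy sketch under stated assumptions: the merge keeps triples whose modular sums hit a target residue, and the filter keeps only sum vectors lying in a target set (here, binary vectors). The function names, the toy instance, and the naive triple enumeration are illustrative choices, not the paper's implementation, which would use sorted or hashed lookups rather than a cubic loop.

```python
from itertools import product

def dot_mod(a, x, M):
    """Inner product <a, x> reduced modulo M."""
    return sum(ai * xi for ai, xi in zip(a, x)) % M

def merge_and_filter(a, lists, target, M, in_target_set):
    """Naive merge-and-filter over three lists of vectors (illustrative only)."""
    L1, L2, L3 = lists
    # Merge: keep coordinate-wise sums of triples whose modular sums hit the target.
    merged = [
        tuple(u + v + w for u, v, w in zip(x, y, z))
        for x, y, z in product(L1, L2, L3)
        if (dot_mod(a, x, M) + dot_mod(a, y, M) + dot_mod(a, z, M)) % M == target % M
    ]
    # Filter: discard sums outside the target set and remove duplicates.
    filtered = sorted({s for s in merged if in_target_set(s)})
    return merged, filtered

# Hypothetical toy instance: weights a, modulus M, and three small input lists.
a, M = (3, 5, 7, 11), 13
L1 = [(1, 0, 0, 0), (0, 1, 0, 0)]
L2 = [(0, 0, 1, 0), (0, 1, 0, 0)]
L3 = [(0, 0, 0, 1), (-1, 0, 0, 0)]
is_binary = lambda s: all(c in (0, 1) for c in s)

merged, filtered = merge_and_filter(a, (L1, L2, L3), target=8, M=M, in_target_set=is_binary)
print(merged, filtered)
```

In this toy run two triples satisfy the modular constraint, but one of them sums to a vector with an entry equal to 2 and is removed by the filter, which illustrates why the merged list can be larger than the filtered list.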

Consider three input distributions, , and , with a target distribution . Given three lists , and , we define the following:

Algorithm 2: TER with a depth of 3

- Input: The random subset-sum instance
- Parameters: that need to be optimized.
- Output: A solution or ⊥ if no solution is found.
- 1: Construct all level-3 lists for
- 2: Compute from for
- 3: for down to 0 do
- 4: for to do
- 5: Construct from .
- 6: end for
- 7: end for
- 8: if then
- 9: return any
- 10: else
- 11: return ⊥
- 12: end if

Similar to the standard subset-sum assumption, our heuristic assumes that elements in are independently and uniformly sampled from the distribution D.

Heuristic 2. Suppose the input vectors are uniformly distributed over . Then the filtered pairs of vectors are also uniformly distributed over the target set D—or more precisely, over the subset of vectors in D that satisfy the given modular constraint.

By Heuristic 2, the expected size of the merged list is given by , and the average size of the filtered list equals , where p_f denotes the probability that a uniformly random triple from satisfies

Theorem 1. Let be a random subset-sum instance whose solution is a vector . Under Heuristic 2, Algorithm 2 finds in time and space .

Proof. For each level

, the size of the corresponding lists is denoted by

. Under Heuristic 2, the list sizes concentrate sharply around their expectations, leading to the expressions

where

and

are parameters to be optimized.

Based on the preceding analysis, the time complexity of Algorithm 2 can be derived as follows:

and the space complexity is

. Let us focus on the asymptotic exponent in

and relative to n. Denote

for

. Recall that

and

. Computing the time complexity essentially amounts to solving the following optimization problem, where the objective function is

subject to the following constraints:

Note that Lemma 1 is applied when computing the exponents of the constraints.

Numerical optimization yields the parameters

This results in

which in turn yield list sizes

The time complexity is , determined by the merged list size, and the space complexity is , governed by the filtered list size. □

3.2. Rigorous Analysis of TER

In this subsection, we establish rigorous foundations for Heuristic 2 and present a provable variant of TER that eliminates heuristic assumptions. Our analysis relies on distributional properties of modular sums, as captured by the following fundamental result (Theorem 3.2 in [46]).

Theorem 2. For any set , the identity holds, where denotes the probability that for a random drawn uniformly from , i.e.,

The left-hand side of Equation (3) represents the average squared deviation from uniformity across all coefficient vectors and residues . This quantity measures the overall non-uniformity of the distribution. The right-hand side being constant for fixed implies that if certain subset-sum instances exhibit significant deviation from uniformity, others must be exceptionally uniform to maintain the average.
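The averaging statement can be checked exhaustively on a tiny example. The sketch below assumes the identity takes the standard form for this kind of result, namely that the average over all coefficient vectors a in Z_M^n of the sum over residues c of (P_a(B, c) − 1/M)^2 equals (M − 1)/(M·|B|); this form, the symbol names, and the toy set B are assumptions, since the formula itself is not reproduced above. A prime modulus is used so that every nonzero coordinate difference is invertible.

```python
from fractions import Fraction
from itertools import product

def average_squared_deviation(B, n, M):
    """Average over all a in Z_M^n of sum_c (P_a(B, c) - 1/M)^2, computed exactly."""
    total = Fraction(0)
    for a in product(range(M), repeat=n):
        # counts[c] = number of x in B with <a, x> = c (mod M)
        counts = [0] * M
        for x in B:
            counts[sum(ai * xi for ai, xi in zip(a, x)) % M] += 1
        total += sum((Fraction(k, len(B)) - Fraction(1, M)) ** 2 for k in counts)
    return total / M**n

# Hypothetical toy parameters: n = 3 coordinates, prime modulus M = 5.
n, M = 3, 5
B = [(1, 0, 0), (0, 1, 1), (1, 1, 0), (0, 0, 1)]

lhs = average_squared_deviation(B, n, M)
rhs = Fraction(M - 1, M * len(B))
print(lhs, rhs)
```

Both sides evaluate to 1/5 for this set, independent of which four distinct binary vectors are chosen, which reflects the point made above: the average non-uniformity depends only on M and |B|.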

We now extend this result to the Cartesian product setting relevant to our decomposition approach.

Theorem 3. For any sets and the same coefficient vector , the identity holds, where denotes the probability that and for random drawn uniformly from .

We now analyze pathological instances that cause TER to fail. During ternary decomposition (e.g., representing as ), certain coefficient vectors, such as , prevent constructing valid level-1 lists (Equation (1)) with random targets due to sparse residue coverage.

Let and denote the vector sets in the first two parts of the ternary decomposition. We quantify how many residue pairs remain uncovered by . For parameter , define an instance as Type I bad if either covers fewer than residues or covers fewer than residues.

For such bad instances, at least

residues lead to zero probability:

or

. We now establish a lower bound for the variance under bad instances. Without loss of generality, assume that

covers fewer than

residues. For at least

uncovered residues

,

For the remaining at most

covered residues

, we consider the minimal uniformity scenario: each such residue has a probability of at least

(since the total probability sums to 1), and

is uniform. Then,

Consequently, the lower bound for the variance is

Let

denote the number of Type I bad instances. By Theorem 3,

Since

, the fraction of Type I bad instances is

Another failure mode occurs when intermediate lists grow beyond expected sizes, violating the complexity analysis in Theorem 1. We now rigorously analyze list sizes during TER’s execution.

Let be the filtered list in a merge-and-filter operation with distributions D. Let denote all vectors with distribution D. We want for parameter . Define Type II bad instances as those where more than residues c satisfy .

By Theorem 2,

where

counts Type II bad instances. Thus,

The fraction of Type II bad instances is at most .

Now consider the merged list size. Let

be the merged list in a merge-and-filter operation (defined in Equation (2)). Let

contain all sums

without modular constraints. Decompose

, where

represents active moduli from input lists and

is the supplementary constraint introduced during

construction.

Let denote the target sum for elements in , with , , representing partial sums from the three input lists, respectively, satisfying .

The size of

admits the bound

To estimate this quantity, we require bounds on sums of the form

We can extend the relation from Theorem 2 to third moments:

Define Type III bad instances as those where more than

values

satisfy

Letting

count such instances,

which implies

In TER, for construction, so . The fraction of Type III bad instances is at most .

This analysis provides theoretical guarantees for all intermediate lists in TER. Since depth-3 TER uses four ternary decompositions, the total fraction of bad instances is bounded by

By choosing sufficiently large , this fraction becomes negligible.

Excluding the three types of bad subset-sum instances, we analyze the success probability of TER on the remaining good instances, where modulus values are randomly resampled in each ternary decomposition. For good instances, failures fall into three categories:

- Selecting residues that yield zero probability. With at most such residues, the failure probability is .

- Filtered list overflow. With at most residues, the failure probability is .

- Merged list overflow. For each of the three lists to be merged, at most residues cause overflow, and the failure probability here is .

Thus, the overall failure probability is

Using the limit formula , we repeat each ternary decomposition times to ensure a failure probability of at most . Since , and TER involves four ternary decompositions, the overall failure probability is at most . Hence, the success probability is at least . If this success probability is insufficient, a polynomial number of repetitions yields a success probability exponentially close to 1, excluding the bad subset-sum instances. Therefore, this repetition strategy allows us to construct a provable probabilistic version of TER.