This Special Issue comprises papers on various digital technologies, researched and assessed in diverse contexts. Six of the twelve papers concern the use and impact of Artificial Intelligence (AI), which indicates the increasing deployment of this technology in organisations in recent years. Global events evidence the pace and impact of AI. The war in Ukraine has witnessed a transformation in warfare technology [1] as AI-assisted weapons are complementing or replacing traditional military tools and capabilities. Away from the arena of war, the capabilities of ChatGPT have forced educators, administrators, and the legal profession to assess the potential of AI [2] and the need for new regulations and governance procedures.

In the first paper in this collection, Nell Watson and colleagues examine some of the issues raised in the use of AI-assistants or “agentic AI systems”. Specifically, the authors discuss the need to align the behaviour of such systems with human values, safety requirements, and regulatory compliance. To address this challenge, the authors propose a “Superego” agent to act as an overseer and control mechanism, which can reference user-selected “Creed Constitutions” and thereby support and steer the planning of AI-agent design and deployment. However, in their call for continued research in this field, the authors note that “while the constitutional Superego aims to mitigate adverse behaviours, external steering of an AI does not fully resolve the ‘inner alignment’ problem or eliminate risks from emergent deception or unforeseen capabilities”. There is thus a need for “continued research into interpretability tools, verifiable chain-of-thought mechanisms, and deeper alignment strategies that modify the AI’s intrinsic goal structures” (p. 39).

In the following paper, Bilgin Metin and his fellow authors focus on the potential of AI to identify and resolve flaws in the logic embedded in the design and operation of business processes. Building on a systematic literature review and interviews with industry practitioners, they propose an eight-stage framework for detecting business logic vulnerabilities with the aid of AI tools. This study highlights the shortcomings of the current reliance on mainly manual detection methods and points to the potential of hybrid, multi-layered approaches that integrate human expertise with AI tools. The developed framework, validated by industry practitioners, illustrates how—in addressing the growing challenge of evolving vulnerabilities and threats—AI can transform cybersecurity from a reactive to a proactive process.

The paper by Papathomas et al. studies another AI-related theme, drawing upon the established TAM and UTAUT frameworks to examine the behavioural drivers of AI adoption in banking in Greece. The authors adopt a quantitative research methodology, using partial least squares structural equation modelling (PLS-SEM) to analyse data from 297 respondents. The findings reveal that performance expectancy, effort expectancy, and hedonic motivation are particularly relevant in influencing AI adoption in the Greek banking sector, while occupation and education serve as significant moderators. The authors conclude that “AI adoption in the banking sector presents transformative potential, particularly for improving efficiency, enhancing customer experiences, and driving innovation” (p. 17).

Francesc Font-Cot and colleagues from Spain focus on forecasting the survival chances of startup businesses. Based on an analysis of 20 startup companies, the authors develop a multivariate AI-driven model for predicting the survival of startups. In assessing attributes including team dynamics, market conditions, and financial metrics, the model demonstrates high accuracy and clustering capabilities. In particular, results point to team dynamics and product differentiation as critical to survival. This study makes a valuable contribution to the literature in this field, providing a scalable, adaptable, and practical framework that can be used and adapted by other researchers and practitioners.

Mirko Talajić and his fellow Croatian academics examine workforce diversity and propose a game theory approach to developing an AI-driven management system that fosters a stable workforce. Results suggest that immediately available incentives can increase productivity in the short term, but that long-term rewards are more likely to produce the desired changes in behaviour, which are significant in sustaining organisational performance improvement. This study also demonstrates how AI can be leveraged in conjunction with theoretical models to inform strategic workforce management decisions.

Pérez-Jorge and colleagues from the University of La Laguna, Tenerife, examine how the integration of AI-powered application programming interfaces (APIs) can enhance educational information management and learning processes. Their analysis of 27 peer-reviewed studies, published between 2013 and 2025, suggests five main benefits: data interoperability, personalised learning, automated feedback, real-time student monitoring, and predictive performance analytics. The authors present an outline conceptual model that highlights pedagogical, technological, and ethical perspectives. However, they note that one area worthy of further research is “designing inclusive frameworks for API integration that take into account infrastructure disparities and the need for pedagogical adaptation” (p. 27).

Several key issues and related questions regarding the future of AI are addressed in these papers. For example, how pervasive will AI become in business and society at large? Will agentic AI systems, as discussed in Watson et al.’s paper, become so commonplace and easy to configure that they will be embedded in end-user computing in industry, whereby computer users across the enterprise develop and deploy these tools, much like spreadsheets are used today? And in wider society, will they spread into homes, public arenas, and commercial environments as information providers and decision support units, much like mobile apps have permeated many aspects of daily life over the past two decades? Watson et al. suggest a range of ethical and regulatory, if not technical, challenges remain before this vision of the future can materialise.

Another aspect here is the degree to which AI can address the weaknesses in company IT systems and infrastructure resulting from years of substandard IT strategy development and implementation, which have left many organisations with disparate, non-integrated software packages, islands of inconsistent data, and poorly supported business processes. Clearly, not all organisations are weighed down by these shortcomings, but many are, with problems exacerbated by the need to maintain customer service levels while simultaneously attempting to transition to the new digital age. There have been false dawns before in this context: the data warehouse potentially offered a solution to inconsistent corporate data, APIs were seen by some as the solution to systems integration problems [3], and outsourcing was put forward as a more effective and efficient way to manage IT in the 1990s and beyond. AI, however, constitutes a possible solution on a different level in terms of capability and reach.

These developments also pose questions about the future role of the IT department. Will AI speed the transfer of management responsibility for IT to the business functions, leaving a central IT function with the cross-company roles of cybersecurity, IT governance, and corporate database and network management? Again, this has been predicted before, and to some extent has materialised, particularly in larger organisations, but AI could provide a tipping point that will push this forward at speed. One view may be that AI will indeed help resolve many of these problems in the years to come; another may be that embedded technology and process problems will remain in many organisations, and may even be exacerbated by heightened and unrealistic management expectations of AI projects. If organisations could start with a “blank sheet of paper”, AI as part of a new IT strategy could, if skilfully managed and executed, deliver some stunning benefits; but to bring in AI-powered systems on top of poorly integrated IS and IT portfolios is likely to prove a significant challenge, which may encourage a conservative approach to AI deployment from senior IT management. It is not surprising that the role of the IT Director seems to be in a state of constant flux [4], and realistic timescales are needed for an organisation to transition to a manageable, secure, and stable IT environment that utilises AI effectively.

Other papers in this Special Issue research or review some of the other new information and communications technologies. Data mining, in the context of sports analytics, is researched by Vangelis Sarlis and Christos Tjortjis from the International Hellenic University of Greece. The authors employ feature selection techniques and clustering algorithms to assess the financial implications of injuries and examine the relationship between player age, position, and performance in the National Basketball Association (NBA) in North America. The results provide a clear picture of when peak performance is achieved and the impact of musculoskeletal injuries, offering new insights into player attributes, health, and financial implications. In another paper, Restrepo-Carmona and colleagues also focus on data management, here in the context of a case study of public expenditure in Colombia. The authors conduct a bibliometric literature review to examine data management strategies for enhancing fiscal management within government operations. This study identifies data visualisation as critical in facilitating informed decision-making. The applied case study in Colombia supports the development of a proposed research agenda to improve fiscal control through more effective data management.

The role of blockchain in media copyright management is examined by Roberto García and colleagues from the University of Lleida, in Catalonia, Spain. The authors also employ a bibliometric literature analysis to identify four main areas of activity, namely “Digital Rights Management”, “Copyright Protection”, “Social Media”, and “Intellectual Property Rights”. Relevant cases of blockchain deployment are discussed and illustrated. The review is particularly welcome in an under-researched area of technology application in a fast-moving subject area. The urgency of such research was recently highlighted by Sir Elton John, who observed that “without thorough and robust copyright protection the UK’s future as a leader in arts and popular culture is in serious jeopardy” [5]. Blockchain technology, combined with new regulatory measures, may play a key role in the future in providing such protection.

Bibliometric techniques and qualitative analysis are also employed in the study of digital information in facilitating crowdsourcing, as noted in the paper by Garrigos-Simon and Narangajavana-Kaosiri from universities in Valencia, Spain. In providing a detailed analysis of the existing literature in this field, this article identifies recent shifts in focus, with practical applications, wider stakeholder involvement, specific digital technologies, and entrepreneurship now at the forefront. Managerial, medical, and cultural heritage applications are also featured more in the recent literature, in contrast to the decline in research related to geography and crisis management over the past two years. This study also discusses some of the practical aspects of crowdsourcing and digital information (CDI) initiatives. The authors note that success “depends on effective task analysis and design, contributor selection, and attention to considerations such as privacy” and that “engagement and workers’ motivation also depend on the evolution of online communities and social media” (p. 18). The authors also point out that poorly structured processes or inadequate management can limit the potential impact of crowdsourcing initiatives.

Digital platforms are examined in a paper by Lucilene Bustilho Cardoso and colleagues from Portugal and the USA, who investigate the impact of these platforms and innovations on the health literacy of patients with special needs. A systematic literature review identified five key articles of particular relevance to this specialist area, and these provide the basis for their findings. The authors conclude that there is “consistent evidence from the articles in this systematic review regarding the effectiveness of using new technologies and innovations in promoting oral health literacy in patients with special health needs” (p. 11).

Finally, Wynn and Jones examine the concept and practical application of corporate digital responsibility (CDR) in the digital era. This is a subject of growing interest to both researchers and industry professionals. Based on an analysis of available literature and case studies of two major international companies (Walmart and Deutsche Telekom), the authors discuss the relationship between CDR and corporate social responsibility, and propose a model outlining the key parameters of CDR. The scope of these studies on CDR has since been extended to encompass CDR in specific contexts, including AI [6], the metaverse [7], and quantum computing [8].

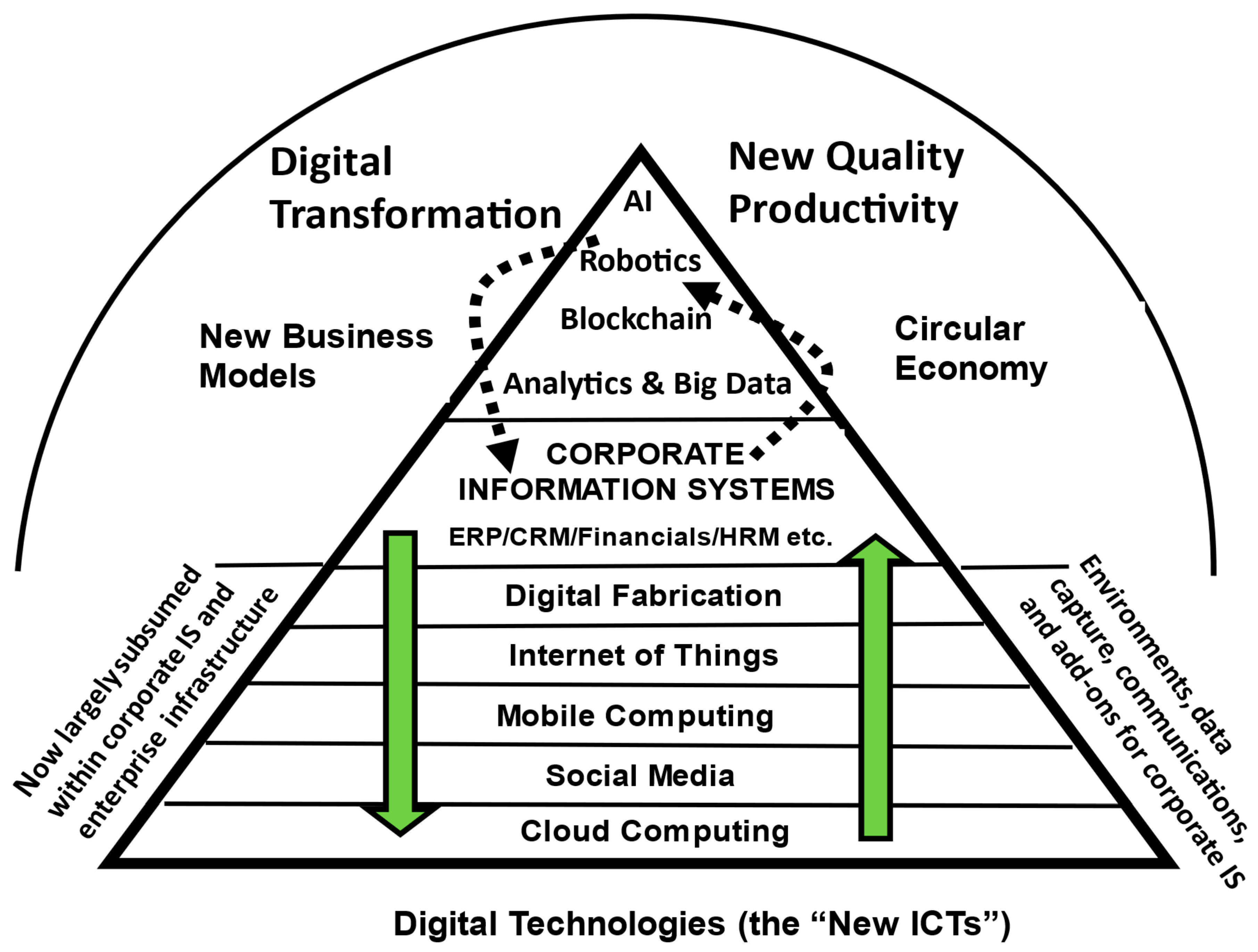

In summary, the themes covered in this Special Issue reflect the evolving significance of the “new ICTs” (or digital technologies). These have hitherto often been represented by the acronyms SMAC (Social Media, Mobile Computing, Analytics/Big Data, Cloud) and BRAID (Blockchain, Robotics, AI, IoT, Digital Fabrication). Five of these nine technologies (Social Media, Cloud, Mobile, IoT, and Digital Fabrication) are now well-embedded in many organisations and across wider society and are probably no longer appropriately classified as “new”; nor are they likely to bring major, unexpected results. However, AI (and associated robotics applications), analytics (and big data), and blockchain remain the primary change technologies now impacting business and society, and are increasingly evident in research studies on digital transformation, new quality productivity [9], new business models, and the circular economy (Figure 1). These technologies will soon be complemented by others that are currently advancing and are likely to arrive on what Gartner [10] terms the “Plateau of Productivity” in their hype cycle model. Such technologies may include the metaverse, quantum computing, and possibly nanotechnology applications.

Ten years ago, Rotolo et al. [11] observed that digital technologies have “the potential to exert a considerable impact on the socio-economic domain(s), which is observed in terms of the composition of actors, institutions and patterns of interactions, along with the associated knowledge production processes”, but forecast that the “most prominent impact, however, lies in the future” (p. 4). Whilst their perspective on future events is now being played out, their statement remains ever more appropriate today. The impact of AI and other emerging technologies in industry, on multiple aspects of society, the global economy, and political activities will continue to increase at a rapid pace.