Speech Recognition and Synthesis Models and Platforms for the Kazakh Language

Abstract

1. Introduction

2. Related Works

2.1. Low-Resource ASR: General Approaches

2.2. Turkic Languages: ASR and TTS

2.3. Kazakh Speech Features in Systems and Resources

2.4. Summary

3. Materials and Methods

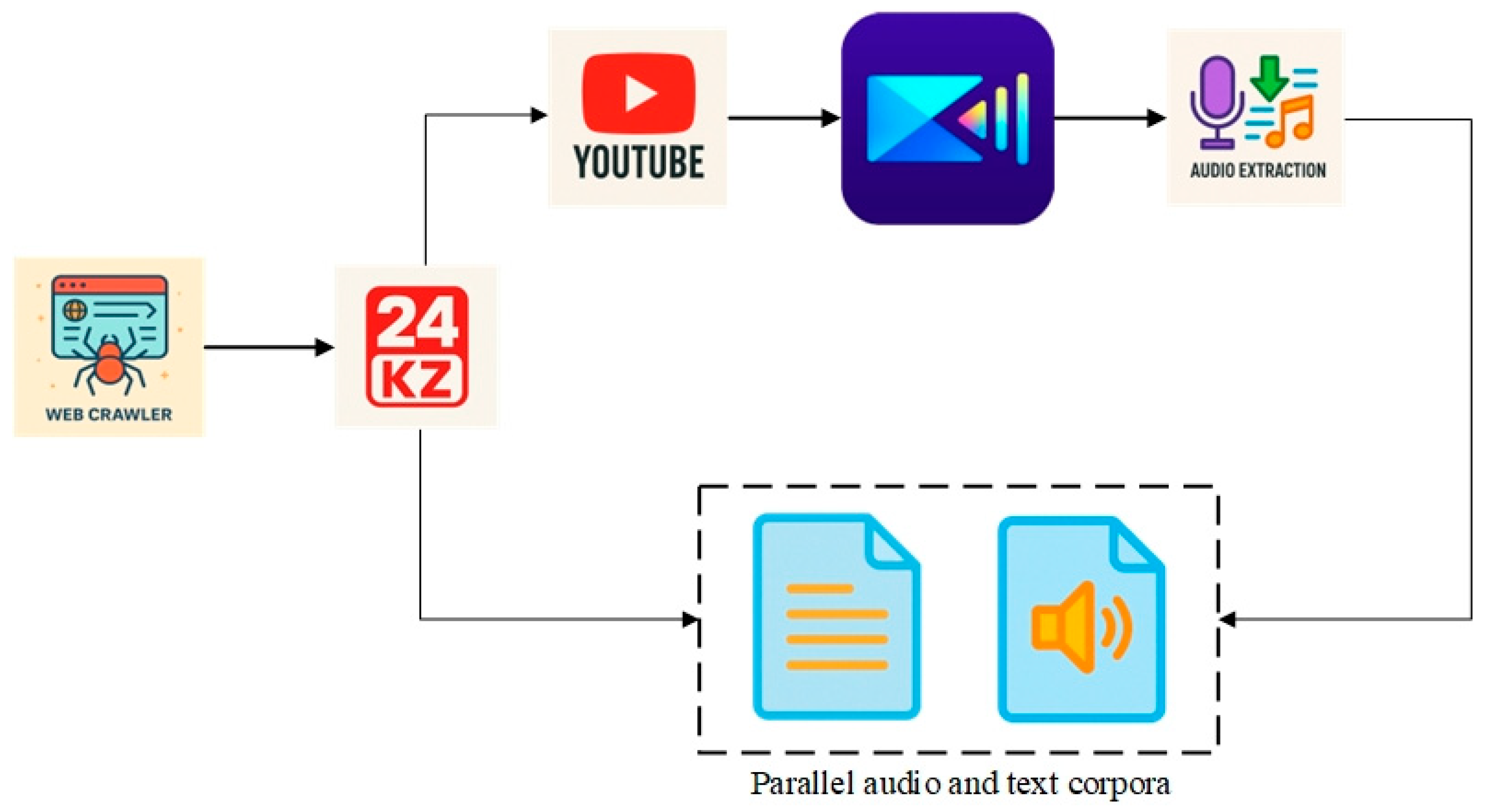

3.1. Audio and Text Dataset Formation

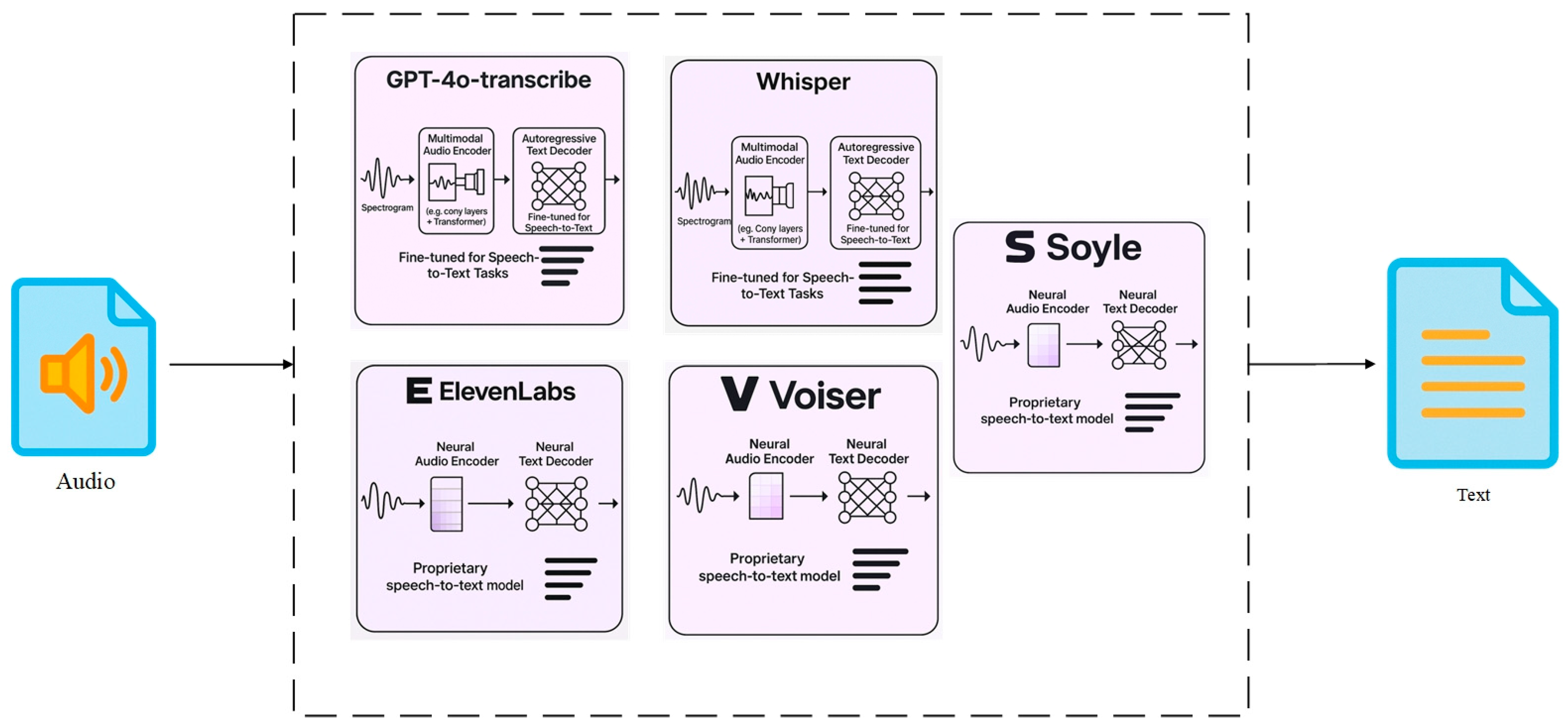

3.2. ASR Systems and Selection Criteria

- Availability: The most important criterion was the system’s availability for large-scale use. This covers both technical availability (the ability to deploy and integrate quickly) and legal openness, such as a free license or access to the source code. Preference was given to open-source solutions that did not require significant financial investment at the implementation stage.

- Recognition quality: A key technical parameter was the linguistic accuracy of the system, that is, its ability to correctly interpret both standard and accented speech while taking into account the language’s morphological and syntactic features. Particular attention was also paid to the contextual relevance of the recognized text: the system’s ability to preserve semantic integrity when converting spoken language into written form.

- Efficiency of subsequent processing: An additional criterion was the system’s ability to handle large volumes of input data, which implies not only accurate recognition but also the possibility of further processing (for example, automatic translation or content categorization). Particular weight was given to the scalability of the architecture and to support for batch processing of audio files, both of which are needed for high throughput.
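The batch-processing requirement in the last criterion can be illustrated with a minimal sketch. It assumes a hypothetical `transcribe` callable that wraps whichever ASR system is under evaluation (a local model or a cloud API); neither that wrapper nor the file names come from the study, and `fake_transcribe` exists only so the example runs without a model.

```python
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, Dict, Iterable


def transcribe_batch(
    audio_paths: Iterable[Path],
    transcribe: Callable[[Path], str],
    max_workers: int = 4,
) -> Dict[str, str]:
    """Run `transcribe` over many files and collect results by file name.

    `transcribe` is a hypothetical wrapper around the ASR system being
    evaluated; it is an assumption of this sketch, not part of the study.
    """
    paths = list(audio_paths)
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() preserves input order, so each transcript lines up with its file.
        for path, text in zip(paths, pool.map(transcribe, paths)):
            results[path.name] = text
    return results


def fake_transcribe(path: Path) -> str:
    # Stand-in for a real ASR call, used only to keep the sketch runnable.
    return f"transcript of {path.stem}"


if __name__ == "__main__":
    files = [Path("clip_001.wav"), Path("clip_002.wav")]
    print(transcribe_batch(files, fake_transcribe))
```

A thread pool suits API-based systems, where most of the time is spent waiting on the network; a locally hosted model would more likely batch inputs on the accelerator instead.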

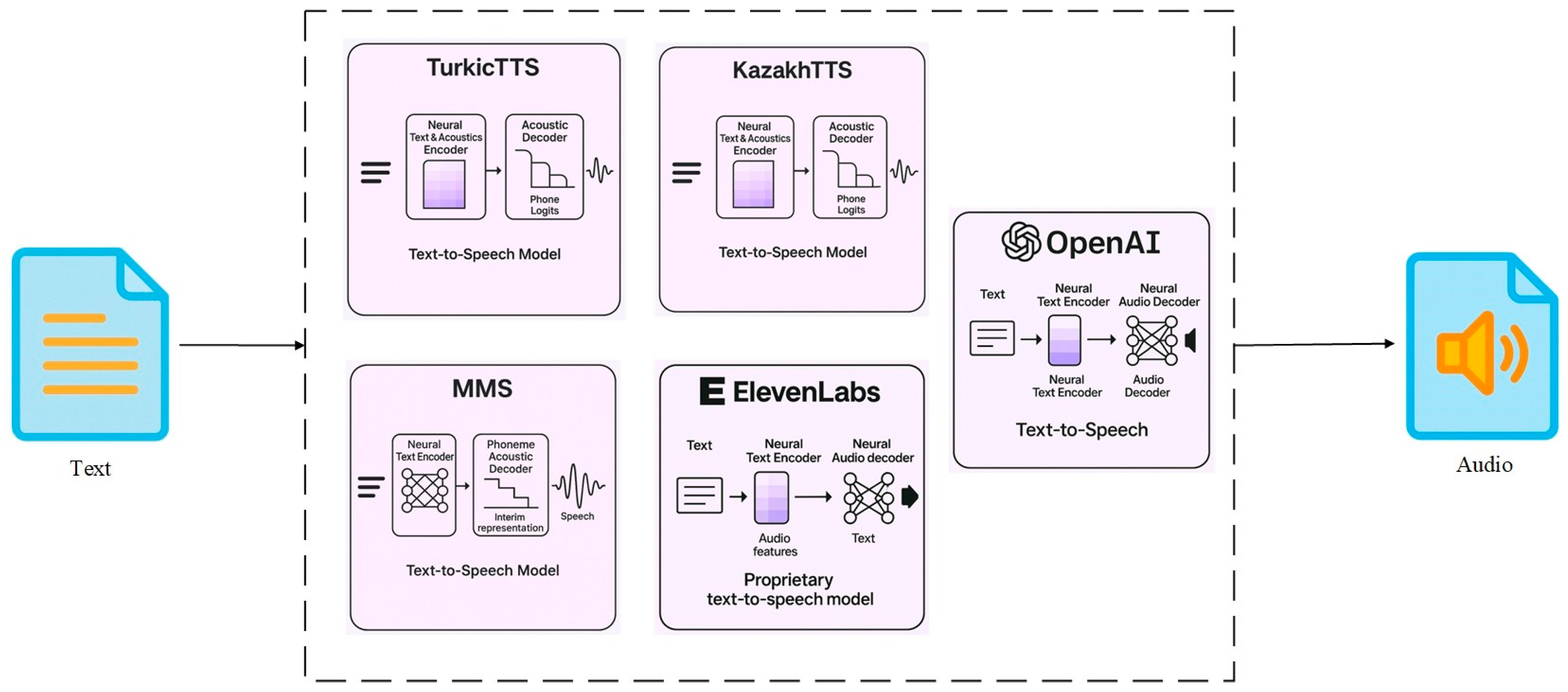

3.3. Text-to-Speech (TTS)

4. Results and Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ASR | Automatic speech recognition |
| TTS | Text-to-speech |
| STT | Speech-to-text |
| E2E | End-to-end |
| WER | Word error rate |
| TER | Translation Edit Rate |
| BLEU | Bilingual Evaluation Understudy |
| chrF | CHaRacter-level F-score |
| LoRA | Low-Rank Adaptation |
| CER | Character error rate |
| KSC | Kazakh Speech Corpus |
| MT | Machine translation |
| HMM | Hidden Markov Models |
| PESQ | Perceptual Evaluation of Speech Quality |
| STOI | Short-Time Objective Intelligibility |
| USM | Universal Speech Model |
| USC | Uzbek Speech Corpus |
| MCD | Mel Cepstral Distortion |
| DNSMOS | Deep Noise Suppression Mean Opinion Score |
| DNS | Deep Noise Suppression |
| MOS | Mean Opinion Score |
| MSE | Mean square error |
| MMS | Massively Multilingual Speech |
| ISSAI | Institute of Intelligent Systems and Artificial Intelligence |
| NU | Nazarbayev University |
| CTC | Connectionist temporal classification |
| KSD | Kazakh Speech Dataset |
| AI | Artificial Intelligence |
| COMET | Crosslingual Optimized Metric for Evaluation of Translation |
| RNN-T | Recurrent neural network-transducer |
| LSTM | Long Short-Term Memory |
| UzLM | Uzbek language model |
| STS | Speech-to-speech |
| LID | Language identifier |
| DL | Deep Learning |
| IPA | International Phonetic Alphabet |
| API | Application Programming Interface |
| GPT | Generative Pre-trained Transformer |
| WebRTC | Web Real-Time Communication |
| HiFi-GAN | Generative Adversarial Networks for Efficient and High-Fidelity Speech Synthesis |
| WaveGAN | Generative adversarial network for unsupervised synthesis of raw-waveform audio |

References

- Vacher, M.; Aman, F.; Rossato, S.; Portet, F. Development of Automatic Speech Recognition Techniques for Elderly Home Support: Applications and Challenges. In Lecture Notes in Computer Science, Proceedings of the International Conference on Human Aspects of IT for the Aged Population; Springer: Los Angeles, CA, USA, 2015; pp. 341–353.

- Bekarystankyzy, A.; Mamyrbayev, O.; Mendes, M.; Fazylzhanova, A.; Assam, M. Multilingual end-to-end ASR for low-resource Turkic languages with common alphabets. Sci. Rep. 2024, 14, 13835.

- Tukeyev, U.; Turganbayeva, A.; Abduali, B.; Rakhimova, D.; Amirova, D.; Karibayeva, A. Inferring the Complete Set of Kazakh Endings as a Language Resource. In Advances in Computational Collective Intelligence: Proceedings of the 12th International Conference, ICCCI 2020, Da Nang, Vietnam, 30 November–3 December 2020; Hernes, M., Wojtkiewicz, K., Szczerbicki, E., Eds.; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2020; Volume 1287, pp. 741–751.

- Tukeyev, U.; Karibayeva, A.; Zhumanov, Z. Morphological Segmentation Method for Turkic Language Neural Machine Translation. Cogent Eng. 2020, 7, 1832403.

- Tukeyev, U.; Karibayeva, A.; Turganbayeva, A.; Amirova, D. Universal Programs for Stemming, Segmentation, Morphological Analysis of Turkic Words. In Computational Collective Intelligence: Proceedings of the International Conference (ICCCI 2021), Rhodes, Greece, 29 September–1 October 2021; Nguyen, N.T., Iliadis, L., Maglogiannis, I., Trawiński, B., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2021; Volume 12876, pp. 643–654.

- Tukeyev, U.; Gabdullina, N.; Karipbayeva, N.; Abdurakhmonova, N.; Balabekova, T.; Karibayeva, A. Computational Model of Morphology and Stemming of Uzbek Words on Complete Set of Endings. In Proceedings of the 2024 IEEE 3rd International Conference on Problems of Informatics, Electronics and Radio Engineering (PIERE), Novosibirsk, Russia, 15–17 November 2024; pp. 1760–1764.

- Kadyrbek, N.; Mansurova, M.; Shomanov, A.; Makharova, G. The Development of a Kazakh Speech Recognition Model Using a Convolutional Neural Network with Fixed Character Level Filters. Big Data Cogn. Comput. 2023, 7, 132.

- Yeshpanov, R.; Mussakhojayeva, S.; Khassanov, Y. Multilingual Text-to-Speech Synthesis for Turkic Languages Using Transliteration. In Proceedings of the INTERSPEECH, 2023, Dublin, Ireland, 20–24 August 2023; pp. 5521–5525.

- Mussakhojayeva, S.; Janaliyeva, A.; Mirzakhmetov, A.; Khassanov, Y.; Varol, H.A. KazakhTTS: An Open-Source Kazakh Text-to-Speech Synthesis Dataset. In Proceedings of the INTERSPEECH, Brno, Czechia, 30 August–3 September 2021; pp. 2786–2790.

- Kuanyshbay, D.; Amirgaliyev, Y.; Baimuratov, O. Development of Automatic Speech Recognition for Kazakh Language Using Transfer Learning. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 5880–5886.

- Orken, M.; Dina, O.; Keylan, A.; Tolganay, T.; Mohamed, O. A study of transformer-based end-to-end speech recognition system for Kazakh language. Sci. Rep. 2022, 12, 8337.

- Ahlawat, H.; Aggarwal, N.; Gupta, D. Automatic Speech Recognition: A Survey of Deep Learning Techniques and Approaches. Int. J. Cogn. Comput. Eng. 2025, 7, 201–237.

- Rosenberg, A.; Zhang, Y.; Ramabhadran, B.; Jia, Y.; Moreno, P.; Wu, Y.; Wu, Z. Speech recognition with augmented synthesized speech. In Proceedings of the 2019 IEEE Automatic Speech Recognition and Understanding Workshop, Singapore, 14–18 December 2019; pp. 996–1002.

- Zhang, C.; Li, B.; Sainath, T.; Strohman, T.; Mavandadi, S.; Chang, S.-Y.; Haghani, P. Streaming end-to-end multilingual speech recognition with joint language identification. In Proceedings of the INTERSPEECH, 2022, Incheon, Korea, 18–22 September 2022.

- Zhang, Y.; Han, W.; Qin, J.; Wang, Y.; Bapna, A.; Chen, Z.; Chen, N.; Li, B.; Axelrod, V.; Wang, G.; et al. Google USM: Scaling automatic speech recognition beyond 100 languages. arXiv 2023, arXiv:2303.01037.

- Liu, Y.; Yang, X.; Qu, D. Exploration of Whisper Fine-Tuning Strategies for Low-Resource ASR. EURASIP J. Audio Speech Music Process. 2024, 2024, 29.

- Metze, F.; Gandhe, A.; Miao, Y.; Sheikh, Z.; Wang, Y.; Xu, D.; Zhang, H.; Kim, J.; Lane, I.; Lee, W.K.; et al. Semi-supervised training in low-resource ASR and KWS. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 5036–5040.

- Du, W.; Maimaitiyiming, Y.; Nijat, M.; Li, L.; Hamdulla, A.; Wang, D. Automatic Speech Recognition for Uyghur, Kazakh, and Kyrgyz: An Overview. Appl. Sci. 2023, 13, 326.

- Mukhamadiyev, A.; Mukhiddinov, M.; Khujayarov, I.; Ochilov, M.; Cho, J. Development of Language Models for Continuous Uzbek Speech Recognition System. Sensors 2023, 23, 1145.

- Veitsman, Y.; Hartmann, M. Recent Advancements and Challenges of Turkic Central Asian Language Processing. In Proceedings of the First Workshop on Language Models for Low-Resource Languages, Abu Dhabi, United Arab Emirates, 19–20 January 2025; pp. 309–324.

- Oyucu, S. A Novel End-to-End Turkish Text-to-Speech (TTS) System via Deep Learning. Electronics 2023, 12, 1900.

- Polat, H.; Turan, A.K.; Koçak, C.; Ulaş, H.B. Implementation of a Whisper Architecture-Based Turkish ASR System and Evaluation of Fine-Tuning with LoRA Adapter. Electronics 2024, 13, 4227.

- Musaev, M.; Mussakhojayeva, S.; Khujayorov, I.; Khassanov, Y.; Ochilov, M.; Atakan Varol, H. USC: An open-source Uzbek speech corpus and initial speech recognition experiments. In Speech and Computer; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2021; pp. 437–447.

- Mussakhojayeva, S.; Dauletbek, K.; Yeshpanov, R.; Varol, H.A. Multilingual Speech Recognition for Turkic Languages. Information 2023, 14, 74.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Gulati, A.; Qin, J.; Chiu, C.C.; Parmar, N.; Zhang, Y.; Yu, J.; Han, W.; Wang, S.; Zhang, Z.; Wu, Y.; et al. Conformer: Convolution-augmented Transformer for Speech Recognition. In Proceedings of the INTERSPEECH, Shanghai, China, 25–29 October 2020; pp. 5036–5040.

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust Speech Recognition via Large-Scale Weak Supervision. arXiv 2022, arXiv:2212.04356.

- Watanabe, S.; Hori, T.; Karita, S.; Hayashi, T.; Nishitoba, J.; Unno, Y.; Soplin, N.E.; Heymann, J.; Wiesner, M.; Chen, N. ESPnet: End-to-End Speech Processing Toolkit. arXiv 2018, arXiv:1804.00015.

- Conneau, A.; Khandelwal, K.; Goyal, N.; Chaudhary, V.; Wenzek, G.; Guzmán, F.; Grave, E.; Ott, M.; Zettlemoyer, L.; Stoyanov, V. Unsupervised Cross-lingual Representation Learning at Scale. In Proceedings of the ACL, Online, 19 July 2020; pp. 8440–8451.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

- ESPnet Toolkit. Available online: https://github.com/espnet/espnet (accessed on 10 June 2025).

- Povey, D.; Ghoshal, A.; Boulianne, G.; Burget, L.; Glembek, O.; Goel, N.; Hannemann, M.; Motlíček, P.; Qian, Y.; Schwarz, P.; et al. The Kaldi speech recognition toolkit. In Proceedings of the ASRU, Hilton Waikoloa Village Resort, Waikoloa, HI, USA, 11–15 December 2011; pp. 1–4.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-art Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; pp. 38–45.

- Shen, J.; Pang, R.; Weiss, R.J.; Schuster, M.; Jaitly, N.; Yang, Z.; Chen, Z.; Zhang, Y.; Wang, Y.; Skerry-Ryan, R.; et al. Natural TTS synthesis by conditioning WaveNet on mel spectrogram predictions. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 4779–4783.

- Ren, Y.; Hu, C.; Tan, X.; Qin, T.; Zhao, S.; Zhao, Z.; Liu, T.Y. FastSpeech 2: Fast and High-Quality End-to-End Text to Speech. arXiv 2020, arXiv:2006.04558.

- Kong, J.; Kim, J.; Bae, J. HiFi-GAN: Generative Adversarial Network for Efficient and High Fidelity Speech Synthesis. arXiv 2020, arXiv:2010.05646.

- Karabaliyev, Y.; Kolesnikova, K. Kazakh Speech and Recognition Methods: Error Analysis and Improvement Prospects. Sci. J. Astana IT Univ. 2024, 20, 62–75.

- Rakhimova, D.; Duisenbekkyzy, Z.; Adali, E. Investigation of ASR Models for Low-Resource Kazakh Child Speech: Corpus Development, Model Adaptation, and Evaluation. Appl. Sci. 2025, 15, 8989.

- Khassanov, Y.; Mussakhojayeva, S.; Mirzakhmetov, A.; Adiyev, A.; Nurpeiissov, M.; Varol, H.A. A Crowdsourced Open-Source Kazakh Speech Corpus and Initial Speech Recognition Baseline. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, EACL, Online, 19–23 April 2021; pp. 697–706.

- Kozhirbayev, Z.; Islamgozhayev, T. Cascade Speech Translation for the Kazakh Language. Appl. Sci. 2023, 13, 8900.

- Kapyshev, G.; Nurtas, M.; Altaibek, A. Speech recognition for Kazakh language: A research paper. Procedia Comput. Sci. 2024, 231, 369–372.

- Mussakhojayeva, S.; Gilmullin, R.; Khakimov, B.; Galimov, M.; Orel, D.; Abilbekov, A.; Varol, H.A. Noise-Robust Multilingual Speech Recognition and the Tatar Speech Corpus. In Proceedings of the 2024 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Osaka, Japan, 19–22 February 2024; pp. 732–737.

- Mussakhojayeva, S.; Khassanov, Y.; Varol, H.A. KSC2: An industrial-scale open-source Kazakh speech corpus. In Proceedings of the INTERSPEECH, Incheon, Korea, 18–22 September 2022; pp. 1367–1371.

- Common Voice. Available online: https://commonvoice.mozilla.org/ru/datasets (accessed on 10 June 2025).

- KazakhTTS. Available online: https://github.com/IS2AI/Kazakh_TTS (accessed on 10 June 2025).

- Kazakh Speech Corpus. Available online: https://www.openslr.org/102/ (accessed on 10 June 2025).

- Kazakh Speech Dataset. Available online: https://www.openslr.org/140/ (accessed on 10 June 2025).

- ISSAI. Available online: https://github.com/IS2AI/ (accessed on 10 September 2025).

- Whisper. Available online: https://github.com/openai/whisper (accessed on 2 June 2025).

- GPT-4o-transcribe (OpenAI). Available online: https://platform.openai.com/docs/models/gpt-4o-transcribe (accessed on 2 July 2025).

- Soyle. Available online: https://github.com/IS2AI/Soyle (accessed on 2 June 2025).

- ElevenLabs Scribe. Available online: https://elevenlabs.io/docs/capabilities/speech-to-text (accessed on 20 June 2025).

- Voiser. Available online: https://voiser.net/ (accessed on 30 June 2025).

- MMS (Massively Multilingual Speech). Available online: https://github.com/facebookresearch/fairseq/tree/main/examples/mms (accessed on 10 June 2025).

- TurkicTTS. Available online: https://github.com/IS2AI/TurkicTTS (accessed on 12 June 2025).

- ElevenLabs TTS. Available online: https://elevenlabs.io/docs/capabilities/text-to-speech (accessed on 2 July 2025).

- OpenAI TTS. Available online: https://platform.openai.com/docs/guides/text-to-speech (accessed on 30 June 2025).

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

- Gillick, L.; Cox, S. Some Statistical Issues in the Comparison of Speech Recognition Algorithms. In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing, Glasgow, UK, 23–26 May 1989; Volume 1, pp. 532–535.

- Snover, M.; Dorr, B.; Schwartz, R.; Micciulla, L.; Makhoul, J. A Study of Translation Edit Rate with Targeted Human Annotation. In Proceedings of the 7th Conference of the Association for Machine Translation in the Americas: Technical Papers, Cambridge, MA, USA, 8–12 August 2006; Available online: https://aclanthology.org/2006.amta-papers.25/ (accessed on 10 June 2025).

- Popović, M. chrF: Character n-gram F-score for automatic MT evaluation. In Proceedings of the Tenth Workshop on Statistical Machine Translation, Lisbon, Portugal, 17–18 September 2015; pp. 392–395.

- Rei, R.; Farinha, A.C.; Martins, A.F.T. COMET: A Neural Framework for MT Evaluation. In Proceedings of the EMNLP, Online, 16–20 November 2020; pp. 2685–2702.

- Kubichek, R. Mel-cepstral distance measure for objective speech quality assessment. In Proceedings of the IEEE Pacific Rim Conference on Communications Computers and Signal Processing, Victoria, BC, Canada, 19–21 May 1993; Volume 1, pp. 125–128.

- Rix, A.W.; Beerends, J.G.; Hollier, M.P.; Hekstra, A.P. Perceptual evaluation of speech quality (PESQ). In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 01CH37221), Salt Lake City, UT, USA, 7–11 May 2001; Volume 2, pp. 749–752.

- Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. An Algorithm for Intelligibility Prediction of Time–Frequency Weighted Noisy Speech. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 2125–2136.

- Reddy, C.K.; Gopal, V.; Cutler, R. DNSMOS: A Non-Intrusive Perceptual Objective Speech Quality Metric to Evaluate Noise Suppressors. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6493–6497.

| Audio Corpus | Data Type | Volume | Accessibility |
|---|---|---|---|
| Common Voice [44] | Audio recordings with transcriptions | 150+ h | Open access |
| KazakhTTS [45] | Audio-text pairs | 271 h | Conditionally open |
| Kazakh Speech Corpus [39,46] | Speech with transcriptions | 330 h | Open access |
| Kazakh Speech Dataset (KSD) [7,47] | Speech | 554 h | Open access |

| Model | Advantages | Drawbacks |
|---|---|---|
| GPT-4o-transcribe | State-of-the-art accuracy; real-time streaming via WebSocket/WebRTC; low word error rate, strong for morphologically rich languages; robust in noisy and complex environments; integration with multimodal GPT-4o | Access limited to the OpenAI API; proprietary, no open-source release; requires a stable internet connection |
| Whisper | Open-source and freely available; supports 99+ languages with multilingual robustness; automatic language detection and translation; pretrained models available in multiple sizes; resilient to noise and accents | Possible lag in low-latency applications; large models are resource-intensive |
| Soyle | Focused on Kazakh and low-resource Turkic languages; trained on local corpora (Kazakh Speech Corpus 2, Common Voice); effective against background noise and speaker variability; locally developed, supports national use | Some language limitations; scarce public documentation; restricted deployment options |
| ElevenLabs | High transcription accuracy for Kazakh; advanced features (speaker diarization, non-verbal event detection); easy access via API and web interface; optimized for low-latency deployment (real-time version in development) | Closed-source, proprietary system; primarily optimized for English; data privacy and reproducibility concerns; transcription quality affected by noisy environments |
| Voiser | High accuracy in Kazakh and Turkish; real-time and batch transcription; punctuation and speaker diarization; flexible cloud-based integration | Proprietary and closed-source; limited global language range; less academic benchmarking and transparency; performance affected by noisy input |

| Model | Advantages | Drawbacks |
|---|---|---|
| MMS | Open-source and publicly available; supports 1100+ languages, including Kazakh; unified model for ASR, TTS, and language identification; trained on a vast amount of data; rapid adaptation to low-resource languages | Less optimized for real-time use; may show degraded performance on specific dialects |
| TurkicTTS | Designed specifically for ten Turkic languages (Azerbaijani, Bashkir, Kazakh, Kyrgyz, Sakha, Tatar, Turkish, Turkmen, Uyghur, and Uzbek); incorporates phonological features of Turkic speech; provides open research resources and benchmarks; Tacotron2 and WaveGAN architecture; zero-shot generalization without parallel corpora | Sometimes misidentifies Turkic languages; limited domain coverage and audio variation; research-focused, minimal production integration |
| KazakhTTS2 | Tailored for high-quality Kazakh TTS; improved naturalness, stress, and prosody; developed for national applications; open-source and free, available via GitHub; Tacotron2 with HiFi-GAN vocoder; focus on Kazakh phonological accuracy; basis for national digital services and education | Limited to Kazakh only; requires fine-tuning for expressive or emotional speech |
| ElevenLabs | High-fidelity, human-like voice synthesis; supports multilingual and emotional speech; user-friendly web and API interfaces; fast inference and low-latency output; speaker cloning and adaptation; optimized for interactive applications | Commercial licensing with usage restrictions; no access to full training data or fine-tuning options |
| OpenAI TTS | Advanced expressiveness; integrated with GPT models for contextual adaptation; robust handling of pauses, emphasis, and emotion; multilingual support, including Kazakh | Closed-source and API-only; limited user customization; subject to quotas and usage caps |

| Model | BLEU (%) | WER (%) | TER (%) | chrF | COMET |
|---|---|---|---|---|---|
| Whisper | 13.22 | 77.10 | 74.87 | 55.30 | 0.42 |
| GPT-4o-transcribe | 45.57 | 43.75 | 42.35 | 76.99 | 0.86 |
| Soyle | 38.66 | 48.14 | 36.30 | 80.35 | 0.97 |
| ElevenLabs | 43.33 | 42.77 | 41.89 | 77.36 | 0.88 |
| Voiser | 38.41 | 40.65 | 31.97 | 80.88 | 1.01 |

| Model | BLEU (%) | WER (%) | TER (%) | chrF | COMET |
|---|---|---|---|---|---|
| Whisper | 21.97 | 60.55 | 54.36 | 68.36 | 0.30 |
| GPT-4o-transcribe | 53.46 | 36.22 | 23.04 | 81.15 | 1.02 |
| Soyle | 74.93 | 18.61 | 18.61 | 95.60 | 1.23 |
| ElevenLabs | 59.45 | 30.84 | 17.27 | 88.04 | 1.13 |
| Voiser | 47.04 | 37.11 | 22.95 | 84.51 | 1.05 |
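For readers unfamiliar with the WER column in the ASR tables above, the following is a minimal sketch of the standard definition: the word-level Levenshtein distance between hypothesis and reference, divided by the number of reference words. CER applies the same computation to characters. The function names and the example sentences are illustrative assumptions, and no text normalization (casing, punctuation) is applied because the source does not state which normalization was used.

```python
from typing import Sequence


def edit_distance(ref: Sequence, hyp: Sequence) -> int:
    """Levenshtein distance counting substitutions, deletions, insertions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate in percent, tokenizing on whitespace."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return 100.0 * edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate in percent (spaces count as characters)."""
    if not reference:
        raise ValueError("reference must not be empty")
    return 100.0 * edit_distance(reference, hypothesis) / len(reference)


if __name__ == "__main__":
    # Illustrative sentences, not taken from the evaluation data.
    ref = "мен мектепке барамын"
    hyp = "мен мектепке бардым"
    print(f"WER = {wer(ref, hyp):.1f}%, CER = {cer(ref, hyp):.1f}%")
```

In an agglutinative language such as Kazakh, a single wrong suffix turns the whole word into an error, which is one reason word-level scores can look considerably worse than character-level ones such as chrF for the same output.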

| Model | STOI | PESQ | MCD | LSD | DNSMOS |
|---|---|---|---|---|---|
| MMS | 0.09 | 1.12 | 145.16 | 1.15 | 4.63 |
| TurkicTTS | 0.11 | 1.16 | 129.54 | 1.06 | 5.92 |
| KazakhTTS2 | 0.10 | 1.09 | 150.53 | 1.11 | 8.79 |
| ElevenLabs | 0.10 | 1.10 | 164.29 | 1.34 | 6.13 |
| OpenAI TTS | 0.09 | 1.12 | 123.44 | 1.16 | 7.43 |

| Model | STOI | PESQ | MCD | LSD | DNSMOS |
|---|---|---|---|---|---|
| MMS | 0.12 | 1.11 | 148.40 | 1.20 | 3.91 |
| TurkicTTS | 0.15 | 1.14 | 145.49 | 1.12 | 6.39 |
| KazakhTTS2 | 0.12 | 1.07 | 137.03 | 1.12 | 8.96 |
| ElevenLabs | 0.13 | 1.08 | 139.75 | 1.29 | 6.38 |
| OpenAI TTS | 0.14 | 1.14 | 117.11 | 1.19 | 7.04 |
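As background for the MCD column in the TTS tables above, the sketch below implements the commonly used per-frame definition, MCD = (10 / ln 10) · sqrt(2 · Σ_d (c_d − c′_d)²), averaged over time-aligned frames. It is an assumption that the reported values follow this form: the source does not specify the number of cepstral coefficients, the alignment method, or any scaling, so the function names and inputs here are purely illustrative.

```python
import math
from typing import Sequence


def mcd_frame(ref_mcep: Sequence[float], syn_mcep: Sequence[float]) -> float:
    """Mel cepstral distortion in dB for one pair of aligned frames.

    The 0th (energy) coefficient is assumed to be excluded by the caller.
    """
    if len(ref_mcep) != len(syn_mcep):
        raise ValueError("frames must have the same number of coefficients")
    squared_diff = sum((r - s) ** 2 for r, s in zip(ref_mcep, syn_mcep))
    return (10.0 / math.log(10.0)) * math.sqrt(2.0 * squared_diff)


def mcd(ref_frames: Sequence[Sequence[float]],
        syn_frames: Sequence[Sequence[float]]) -> float:
    """Mean MCD over frames that are already time-aligned (e.g. by DTW)."""
    if not ref_frames or len(ref_frames) != len(syn_frames):
        raise ValueError("need the same non-zero number of frames")
    total = sum(mcd_frame(r, s) for r, s in zip(ref_frames, syn_frames))
    return total / len(ref_frames)


if __name__ == "__main__":
    # Toy two-frame example with made-up coefficients.
    reference = [[1.0, 0.5], [0.8, 0.4]]
    synthesized = [[1.0, 0.5], [0.7, 0.4]]
    print(f"MCD = {mcd(reference, synthesized):.3f} dB")
```

Lower values indicate that the synthesized spectrum is closer to the reference; identical frames give exactly zero.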

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Karibayeva, A.; Karyukin, V.; Abduali, B.; Amirova, D. Speech Recognition and Synthesis Models and Platforms for the Kazakh Language. Information 2025, 16, 879. https://doi.org/10.3390/info16100879