Advances in Facial Micro-Expression Detection and Recognition: A Comprehensive Review

Abstract

1. Introduction

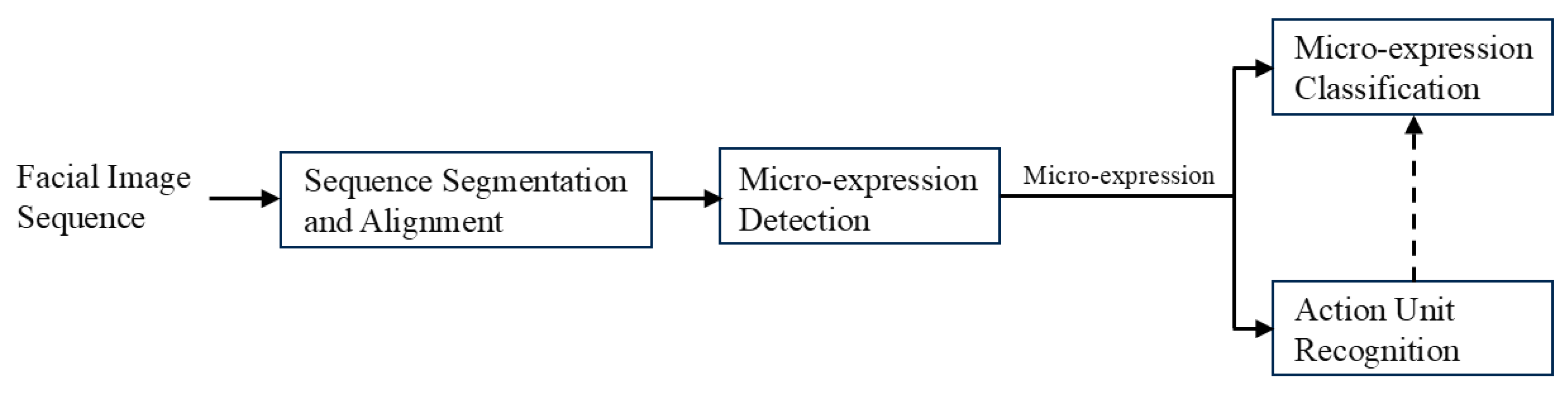

2. Action Unit Modeling for Micro-Expression Recognition

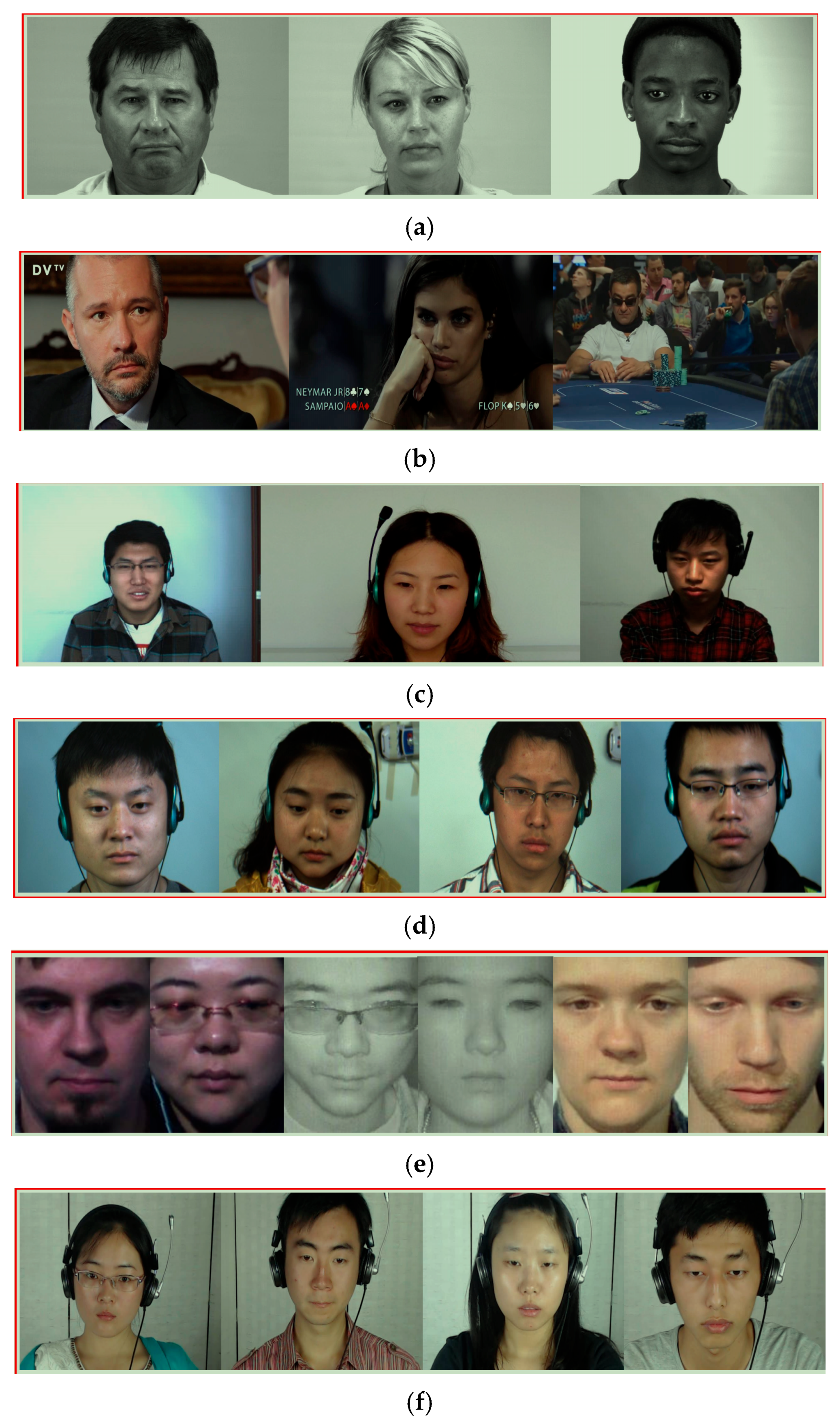

3. Micro-Expression Dataset

3.1. SMIC

3.2. MEVIEW

3.3. CASME and CASME II

3.4. SAMM

3.5. CAS(ME)²

3.6. MMEW

3.7. Comparative Analysis of Micro-Expression Datasets

4. Micro-Expression Recognition and Classification Methods

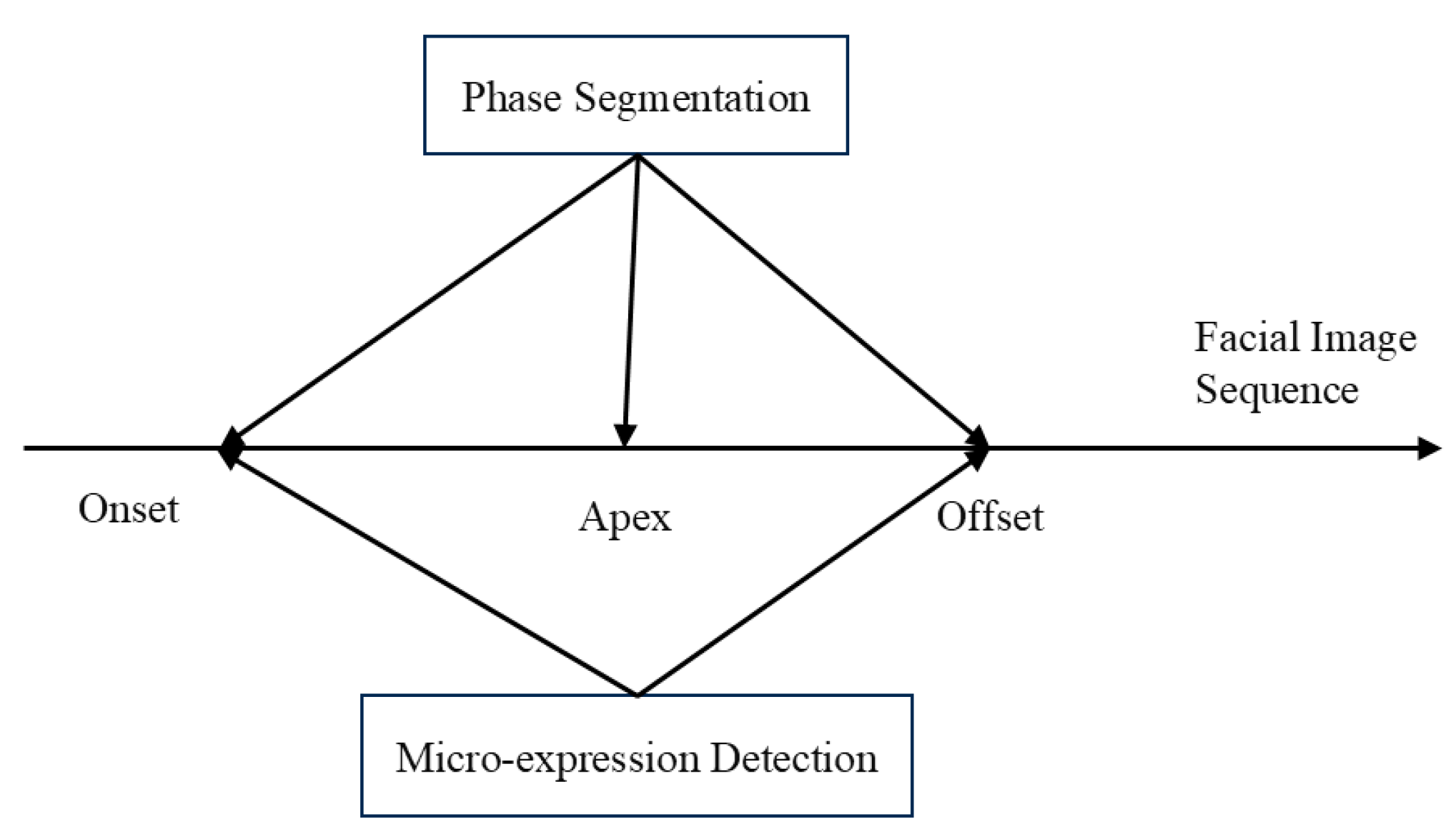

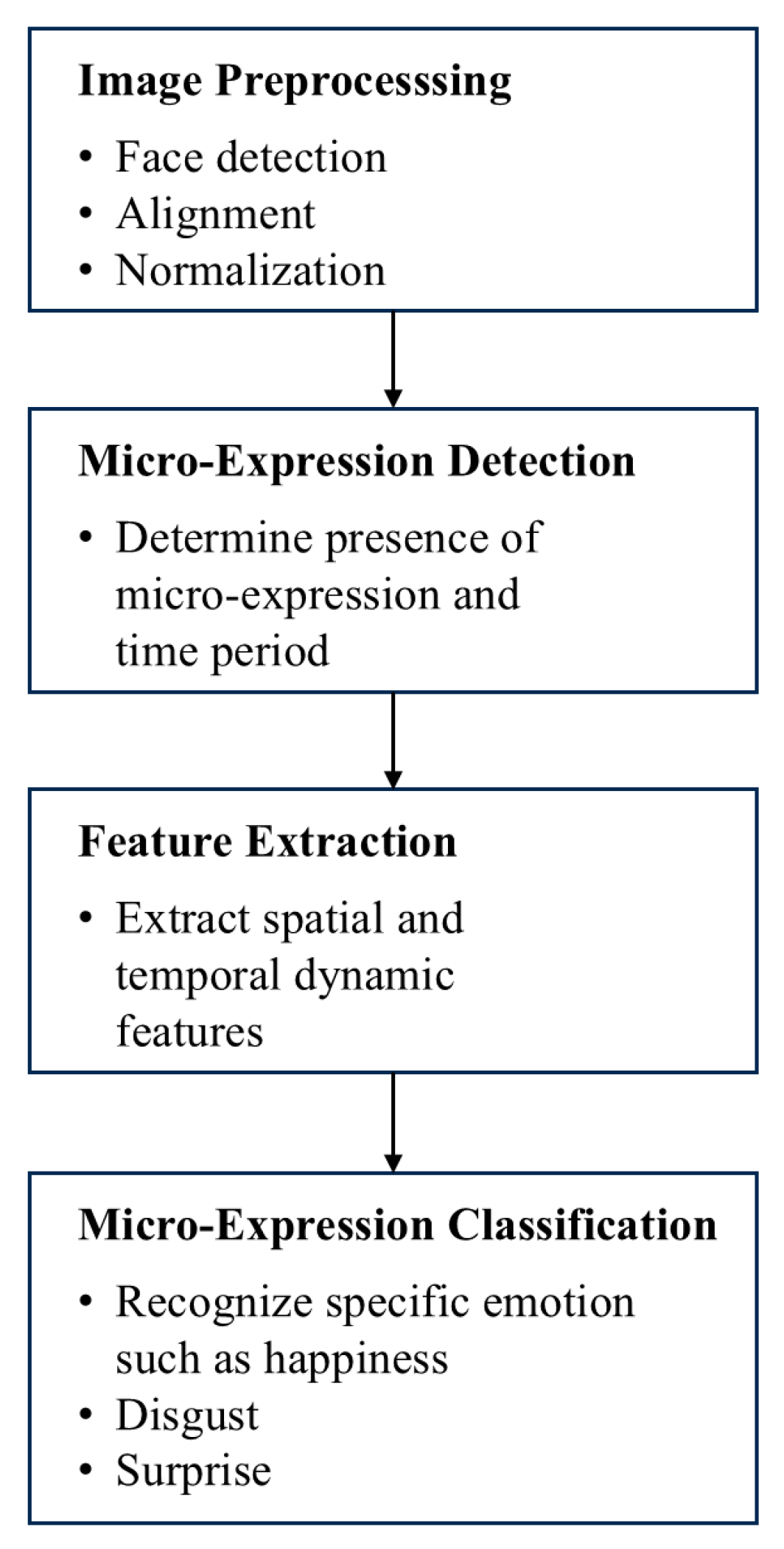

4.1. Micro-Expression Recognition Process

4.2. Micro-Expression Detection Method

- (1) Optical flow-based methods are among the earliest approaches to micro-expression detection. They estimate facial motion by computing pixel displacements between frames. Among them, the Main Directional Mean Optical Flow (MDMO) method captures micro-motion by extracting the mean optical flow direction and magnitude within local facial regions [42]. This approach is effective for capturing short-term motion but is sensitive to illumination changes.

- (2) Dynamic area extraction methods, such as strain maps [43] and frame differences [44], identify regions of interest by highlighting local muscle deformation or intensity changes between consecutive frames [17]. These methods are simple and produce results that are easy to interpret visually. However, they are sensitive to noise and lack robustness under high-frequency interference, which reduces their reliability.

- (3) Temporal sliding window strategies model dynamic patterns over time. A fixed-length window is slid across the frames of a video sequence, and the temporal information within each window is analyzed to determine whether a micro-expression occurs. These strategies are often combined with classifiers such as SVMs or random forests [45]. Their performance depends heavily on the chosen window length, which trades temporal resolution against computational efficiency.

- (4) Deep learning-based detection networks have recently become a dominant research direction. Using CNNs, LSTMs, or hybrid architectures, these methods perform end-to-end spatiotemporal feature extraction and automatic detection. For instance, the two-stream network of Yang et al. [46] combines spatial appearance with temporal optical flow features, while LSTM models capture long-term temporal dependencies to reduce feature omission [12]. Although deep learning achieves higher accuracy, it requires large-scale annotated datasets and substantial computational resources.
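To make the first three families more concrete, the following is a minimal NumPy sketch, not the implementation of any cited work. It assumes that a dense optical-flow field for a facial region has already been computed by some external estimator and that frames are grayscale arrays. The function names, the 8-bin direction histogram, and the threshold are illustrative assumptions. The first function follows a simplified reading of the MDMO idea [42] (average the flow vectors that fall in the dominant direction bin); the other two combine a frame-difference motion signal with a fixed-length sliding window.

```python
import numpy as np


def main_direction_mean_flow(flow, n_bins=8):
    """Simplified MDMO-style descriptor for one facial region (illustrative).

    flow: array of shape (H, W, 2) with per-pixel (dx, dy) displacements.
    Returns (magnitude, angle) of the mean flow vector, computed only over
    pixels whose direction falls in the most populated angular bin.
    """
    dx = flow[..., 0].ravel()
    dy = flow[..., 1].ravel()
    angles = np.mod(np.arctan2(dy, dx), 2 * np.pi)
    # Quantize directions into n_bins sectors; clamp guards against angle == 2*pi.
    bins = np.minimum((angles / (2 * np.pi) * n_bins).astype(int), n_bins - 1)
    main_bin = np.bincount(bins, minlength=n_bins).argmax()
    mask = bins == main_bin
    mean_dx, mean_dy = dx[mask].mean(), dy[mask].mean()
    magnitude = float(np.hypot(mean_dx, mean_dy))
    angle = float(np.mod(np.arctan2(mean_dy, mean_dx), 2 * np.pi))
    return magnitude, angle


def frame_difference_signal(frames):
    """Mean absolute intensity change between consecutive frames.

    frames: array of shape (T, H, W) or (T, H, W, C); returns T - 1 values.
    """
    frames = np.asarray(frames, dtype=np.float64)
    diffs = np.abs(np.diff(frames, axis=0))
    return diffs.mean(axis=tuple(range(1, frames.ndim)))


def sliding_window_spot(signal, window, threshold):
    """Start indices of fixed-length windows whose mean motion exceeds threshold."""
    signal = np.asarray(signal, dtype=np.float64)
    if window < 1 or window > signal.size:
        return []
    # A box filter in "valid" mode gives the mean of signal[i:i + window].
    means = np.convolve(signal, np.ones(window) / window, mode="valid")
    return [int(i) for i in np.flatnonzero(means > threshold)]


# Synthetic usage: a brief intensity change in an otherwise static clip.
frames = np.zeros((20, 4, 4))
frames[8:12] = 10.0
motion = frame_difference_signal(frames)
candidate_starts = sliding_window_spot(motion, window=3, threshold=3.0)
```

The `window` argument makes the trade-off noted in item (3) explicit: a short window localizes brief movements but reacts to noise, while a long window smooths the signal and may dilute a short micro-expression. In practice the thresholding step would typically be replaced by a trained classifier.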

4.3. Feature Extraction

4.3.1. Manual Feature Method

4.3.2. Deep Feature Methods

4.4. Micro-Expression Classification Method

4.4.1. Machine Learning-Based Methods

4.4.2. Deep Learning-Based Methods

4.4.3. AU-Based Methods

4.5. Comparison of Micro-Expression Recognition Methods

5. Comparison and Analysis

6. Future Research Directions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| METT | Micro-Expression Training Tool |
| LBP | Local Binary Patterns |
| MDMO | Main Directional Mean Optical Flow |
| CNN | Convolutional Neural Network |
| AU | Action Unit |
| FACS | Facial Action Coding System |
| LSTM | Long Short-Term Memory |
| 3DCNN | Three-Dimensional Convolutional Neural Network |
| RNN | Recurrent Neural Network |
| ViT | Vision Transformer |
| LBP-TOP | Local Binary Patterns from Three Orthogonal Planes |
| HOOF | Histogram of Oriented Optical Flow |
| KNN | K-Nearest Neighbor |
| GNNs | Graph Neural Networks |
| LOSO | Leave-One-Subject-Out |
| TSDN | Two-Stream Difference Network |
| HSTA | Hierarchical Space-Time Attention |
| GANs | Generative Adversarial Networks |

References

1. Xia, B.; Wang, W.; Wang, S.; Chen, E. Learning from macro-expression: A micro-expression recognition framework. In Proceedings of the 28th ACM International Conference on Multimedia, Online, 12–16 October 2020; pp. 2936–2944.

2. Ekman, P.; Friesen, W.V. Nonverbal leakage and clues to deception. Psychiatry 1969, 32, 88–106.

3. Porter, S.; Ten Brinke, L. Reading between the lies: Identifying concealed and falsified emotions in universal facial expressions. Psychol. Sci. 2008, 19, 508–514.

4. Ekman, P. Micro Expressions Training Tool. Available online: https://www.paulekman.com/micro-expressions-training-tools/ (accessed on 18 June 2025).

5. Frank, M.G.; Ekman, P.; Friesen, W.V. Behavioral markers and recognizability of the smile of enjoyment. J. Personal. Soc. Psychol. 1993, 64, 83.

6. Rahim, M.A.; Hossain, M.N.; Wahid, T.; Azam, M.S. Face recognition using local binary patterns (LBP). Int. Res. J. 2013, 13, 1–8.

7. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: New York, NY, USA, 2005; pp. 886–893.

8. Ben, X.; Ren, Y.; Zhang, J.; Wang, S.-J.; Kpalma, K.; Meng, W.; Liu, Y.-J. Video-based facial micro-expression analysis: A survey of datasets, features and algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5826–5846.

9. Zhao, G.; Pietikainen, M. Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 915–928.

10. Liong, S.-T.; Phan, R.C.-W.; See, J.; Oh, Y.-H.; Wong, K. Optical strain based recognition of subtle emotions. In Proceedings of the 2014 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Kuching, Malaysia, 1–4 December 2014; IEEE: New York, NY, USA, 2014; pp. 180–184.

11. Ben, X.; Zhang, P.; Yan, R.; Yang, M.; Ge, G. Gait recognition and micro-expression recognition based on maximum margin projection with tensor representation. Neural Comput. Appl. 2016, 27, 2629–2646.

12. Khor, H.-Q.; See, J.; Phan, R.C.W.; Lin, W. Enriched long-term recurrent convolutional network for facial micro-expression recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; IEEE: New York, NY, USA, 2018; pp. 667–674.

13. Reddy, S.P.T.; Karri, S.T.; Dubey, S.R.; Mukherjee, S. Spontaneous facial micro-expression recognition using 3D spatiotemporal convolutional neural networks. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; IEEE: New York, NY, USA, 2019.

14. Wang, Z.; Zhang, K.; Luo, W.; Sankaranarayana, R. HTNet for micro-expression recognition. Neurocomputing 2024, 602, 128196.

15. Yan, W.-J.; Li, X.; Wang, S.-J.; Zhao, G.; Liu, Y.-J.; Chen, Y.-H.; Fu, X. CASME II: An improved spontaneous micro-expression database and the baseline evaluation. PLoS ONE 2014, 9, e86041.

16. Li, X.; Hong, X.; Moilanen, A.; Huang, X.; Pfister, T.; Zhao, G.; Pietikainen, M. Reading hidden emotions: Spontaneous micro-expression spotting and recognition. IEEE Trans. Affect. Comput. 2015, 2, 7.

17. Davison, A.K.; Lansley, C.; Costen, N.; Tan, K.; Yap, M.H. SAMM: A spontaneous micro-facial movement dataset. IEEE Trans. Affect. Comput. 2016, 9, 116–129.

18. Tang, M.; Ling, M.; Tang, J.; Hu, J. A micro-expression recognition algorithm based on feature enhancement and attention mechanisms. Virtual Real. 2023, 27, 2405–2416.

19. Peng, M.; Wu, Z.; Zhang, Z.; Chen, T. From macro to micro expression recognition: Deep learning on small datasets using transfer learning. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; IEEE: New York, NY, USA, 2018; pp. 657–661.

20. Zhao, X.; Lv, Y.; Huang, Z. Multimodal fusion-based swin transformer for facial recognition micro-expression recognition. In Proceedings of the 2022 IEEE International Conference on Mechatronics and Automation (ICMA), Guilin, China, 7–10 August 2022; IEEE: New York, NY, USA, 2022; pp. 780–785.

21. Oh, Y.-H.; See, J.; Le Ngo, A.C.; Phan, R.C.-W.; Baskaran, V.M. A survey of automatic facial micro-expression analysis: Databases, methods, and challenges. Front. Psychol. 2018, 9, 1128.

22. Goh, K.M.; Ng, C.H.; Lim, L.L.; Sheikh, U.U. Micro-expression recognition: An updated review of current trends, challenges and solutions. Vis. Comput. 2020, 36, 445–468.

23. Yan, W.-J.; Wu, Q.; Liang, J.; Chen, Y.-H.; Fu, X. How fast are the leaked facial expressions: The duration of micro-expressions. J. Nonverbal Behav. 2013, 37, 217–230.

24. Ekman, P. Telling Lies: Clues to Deceit in the Marketplace, Politics, and Marriage; Revised Edition; WW Norton & Company: New York, NY, USA, 2009.

25. Pfister, T.; Li, X.; Zhao, G.; Pietikäinen, M. Recognising spontaneous facial micro-expressions. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: New York, NY, USA, 2011; pp. 1449–1456.

26. Ekman, P.; Friesen, W.V. Facial Action Coding System; University of California: San Francisco, CA, USA, 1978.

27. Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, San Francisco, CA, USA, 13–18 June 2010; IEEE: New York, NY, USA, 2010; pp. 94–101.

28. Li, X.; Hong, X.; Moilanen, A.; Huang, X.; Pfister, T.; Zhao, G.; Pietikainen, M. Towards reading hidden emotions: A comparative study of spontaneous micro-expression spotting and recognition methods. IEEE Trans. Affect. Comput. 2017, 9, 563–577.

29. Zhang, L.; Hong, X.; Arandjelović, O.; Zhao, G. Short and long range relation based spatio-temporal transformer for micro-expression recognition. IEEE Trans. Affect. Comput. 2022, 13, 1973–1985.

30. Li, X.; Pfister, T.; Huang, X.; Zhao, G.; Pietikäinen, M. A spontaneous micro-expression database: Inducement, collection and baseline. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013; IEEE: New York, NY, USA, 2013.

31. Yan, W.-J.; Wu, Q.; Liu, Y.-J.; Wang, S.-J.; Fu, X. CASME database: A dataset of spontaneous micro-expressions collected from neutralized faces. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013; IEEE: New York, NY, USA, 2013.

32. Qu, F.; Wang, S.-J.; Yan, W.-J.; Fu, X. CAS(ME)²: A Database of Spontaneous Macro-expressions and Micro-expressions. In Proceedings of the Human-Computer Interaction Novel User Experiences: 18th International Conference, HCI International 2016, Toronto, ON, Canada, 17–22 July 2016; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2016; pp. 48–59.

33. Tran, T.-K.; Vo, Q.-N.; Hong, X.; Li, X.; Zhao, G. Micro-expression spotting: A new benchmark. Neurocomputing 2021, 443, 356–368.

34. Li, J.; Yap, M.H.; Cheng, W.-H.; See, J.; Hong, X.; Li, X.; Hong, X.; Wang, S.-J. MEGC2022: ACM multimedia 2022 micro-expression grand challenge. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 7170–7174.

35. Patel, D.; Zhao, G.; Pietikäinen, M. Spatiotemporal integration of optical flow vectors for micro-expression detection. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Catania, Italy, 26–29 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 369–380.

36. Takalkar, M.; Xu, M.; Wu, Q.; Chaczko, Z. A survey: Facial micro-expression recognition. Multimed. Tools Appl. 2018, 77, 19301–19325.

37. Shangguan, Z.; Dong, Y.; Guo, S.; Leung, V.; Deen, M.J.; Hu, X. Facial Expression Analysis and Its Potentials in IoT Systems: A Contemporary Survey. ACM Comput. Surv. 2024, 58, 43.

38. Verma, M.; Vipparthi, S.K.; Singh, G. Deep insights of learning-based micro expression recognition: A perspective on promises, challenges, and research needs. IEEE Trans. Cogn. Dev. Syst. 2022, 15, 1051–1069.

39. Qu, F.; Wang, S.-J.; Yan, W.-J.; Li, H.; Wu, S.; Fu, X. CAS(ME)²: A database for spontaneous macro-expression and micro-expression spotting and recognition. IEEE Trans. Affect. Comput. 2017, 9, 424–436.

40. Zhao, G.; Li, X.; Li, Y.; Pietikäinen, M. Facial micro-expressions: An overview. Proc. IEEE 2023, 111, 1215–1235.

41. Liong, S.-T.; See, J.; Wong, K.; Phan, R.C.-W. Less is more: Micro-expression recognition from video using apex frame. Signal Process. Image Commun. 2018, 62, 82–92.

42. Liu, Y.-J.; Zhang, J.-K.; Yan, W.-J.; Wang, S.-J.; Zhao, G.; Fu, X. A main directional mean optical flow feature for spontaneous micro-expression recognition. IEEE Trans. Affect. Comput. 2015, 7, 299–310.

43. Clocksin, W.F.; da Fonseca, J.Q.; Withers, P.; Torr, P.H. Image processing issues in digital strain mapping. In Proceedings of the Applications of Digital Image Processing XXV, San Diego, CA, USA, 7–11 July 2002; SPIE: Bellingham, WA, USA, 2002; pp. 384–395.

44. Singla, N. Motion detection based on frame difference method. Int. J. Inf. Comput. Technol. 2014, 4, 1559–1565.

45. Tran, T.-K.; Hong, X.; Zhao, G. Sliding window based micro-expression spotting: A benchmark. In Proceedings of the Advanced Concepts for Intelligent Vision Systems: 18th International Conference, ACIVS 2017, Antwerp, Belgium, 18–21 September 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 542–553.

46. Yang, H.; Sun, S.; Chen, J. Deep Learning-Based Micro-Expression Recognition Algorithm Research. Int. J. Comput. Sci. Inf. Technol. 2024, 2, 59–70.

47. Lades, M.; Vorbruggen, J.C.; Buhmann, J.; Lange, J.; Von Der Malsburg, C.; Wurtz, R.P.; Konen, W. Distortion invariant object recognition in the dynamic link architecture. IEEE Trans. Comput. 1993, 42, 300–311.

48. Polikovsky, S.; Kameda, Y.; Ohta, Y. Facial micro-expressions recognition using high speed camera and 3D-gradient descriptor. In Proceedings of the 3rd International Conference on Imaging for Crime Detection and Prevention (ICDP 2009), London, UK, 1–2 September 2009; IET: Stevenage, UK, 2009.

49. Shreve, M.; Godavarthy, S.; Goldgof, D.; Sarkar, S. Macro-and micro-expression spotting in long videos using spatio-temporal strain. In Proceedings of the 2011 IEEE International Conference on Automatic Face & Gesture Recognition (FG), Santa Barbara, CA, USA, 21–25 March 2011; IEEE: New York, NY, USA, 2011; pp. 51–56.

50. Wang, L.; Jia, J.; Mao, N. Micro-expression recognition based on 2D-3D CNN. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; IEEE: New York, NY, USA, 2020; pp. 3152–3157.

51. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

52. Donahue, J.; Anne Hendricks, L.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

53. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

54. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

55. Liong, S.-T.; See, J.; Wong, K.; Le Ngo, A.C.; Oh, Y.-H.; Phan, R. Automatic apex frame spotting in micro-expression database. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; IEEE: New York, NY, USA, 2015; pp. 665–669.

56. Xia, Z.; Hong, X.; Gao, X.; Feng, X.; Zhao, G. Corrections to “spatiotemporal recurrent convolutional networks for recognizing spontaneous micro-expressions”. IEEE Trans. Multimedia 2020, 22, 1111.

57. Guo, Y.; Tian, Y.; Gao, X.; Zhang, X. Micro-expression recognition based on local binary patterns from three orthogonal planes and nearest neighbor method. In Proceedings of the 2014 International Joint Conference on Neural Networks (IJCNN), Beijing, China, 6–11 July 2014; IEEE: New York, NY, USA, 2014; pp. 3473–3479.

58. Li, Q.; Yu, J.; Kurihara, T.; Zhang, H.; Zhan, S. Deep convolutional neural network with optical flow for facial micro-expression recognition. J. Circuits Syst. Comput. 2020, 29, 2050006.

59. Lin, C.; Long, F.; Huang, J.; Li, J. Micro-expression recognition based on spatiotemporal Gabor filters. In Proceedings of the 2018 Eighth International Conference on Information Science and Technology (ICIST), Kaifeng, China, 24–26 March 2018; IEEE: New York, NY, USA, 2018; pp. 487–491.

60. Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

61. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

62. Happy, S.; Routray, A. Automatic facial expression recognition using features of salient facial patches. IEEE Trans. Affect. Comput. 2014, 6, 1–12.

63. Gan, Y.S.; Liong, S.-T.; Yau, W.-C.; Huang, Y.-C.; Tan, L.-K. OFF-ApexNet on micro-expression recognition system. Signal Process. Image Commun. 2019, 74, 129–139.

64. Liong, S.-T.; Gan, Y.S.; See, J.; Khor, H.-Q.; Huang, Y.-C. Shallow triple stream three-dimensional cnn (ststnet) for micro-expression recognition. In Proceedings of the 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14–18 May 2019; IEEE: New York, NY, USA, 2019.

65. Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

66. Qingqing, W. Micro-expression recognition method based on CNN-LSTM hybrid network. Int. J. Wirel. Mob. Comput. 2022, 23, 67–77.

67. Zhu, X.; Toisoul, A.; Perez-Rua, J.-M.; Zhang, L.; Martinez, B.; Xiang, T. Few-shot action recognition with prototype-centered attentive learning. arXiv 2021, arXiv:2101.08085.

68. Zhang, H.; Vorobeychik, Y. Proceedings of the 30th AAAI Conference on Artificial Intelligence. In Proceedings of the AAAI Palo Alto, Phoenix, AZ, USA, 12–17 February 2016.

69. Li, Y.; Peng, W.; Zhao, G. Micro-expression action unit detection with dual-view attentive similarity-preserving knowledge distillation. In Proceedings of the 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), Jodhpur, India, 15–19 December 2021; IEEE: New York, NY, USA, 2021.

70. Kim, Y.; Lee, H.; Provost, E.M. Deep learning for robust feature generation in audiovisual emotion recognition. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; IEEE: New York, NY, USA, 2013; pp. 3687–3691.

71. Bartlett, M.S.; Littlewort, G.; Frank, M.G.; Lainscsek, C.; Fasel, I.R.; Movellan, J.R. Automatic recognition of facial actions in spontaneous expressions. J. Multimed. 2006, 1, 22–35.

72. Kopalidis, T.; Solachidis, V.; Vretos, N.; Daras, P. Advances in facial expression recognition: A survey of methods, benchmarks, models, and datasets. Information 2024, 15, 135.

73. Pan, H.; Xie, L.; Li, J.; Lv, Z.; Wang, Z. Micro-expression recognition by two-stream difference network. IET Comput. Vis. 2021, 15, 440–448.

74. Gomathi, R.; Logeswari, S.; Jothimani, S.N.; Sangeethaa, S.; Sangeetha, A.; LathaJothi, V. MEFNet-Micro Expression Fusion Network Based on Micro-Attention Mechanism and 3D-CNN Fusion Algorithms. Int. J. Intell. Eng. Syst. 2023, 16, 113.

75. Nguyen, X.-B.; Duong, C.N.; Li, X.; Gauch, S.; Seo, H.-S.; Luu, K. Micron-bert: Bert-based facial micro-expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1482–1492.

76. Xing, F.; Wang, Y.-G.; Tang, W.; Zhu, G.; Kwong, S. Starvqa+: Co-training space-time attention for video quality assessment. arXiv 2023, arXiv:2306.12298.

77. Qian, W.; Nielsen, T.D.; Zhao, Y.; Larsen, K.G.; Yu, J. Uncertainty-aware temporal graph convolutional network for traffic speed forecasting. IEEE Trans. Intell. Transp. Syst. 2024, 25, 8578–8590.

78. Zhao, H.; Kim, B.-G.; Slowik, A.; Pan, D. Temporal–spatial correlation and graph attention-guided network for micro-expression recognition in English learning livestreams. Discov. Comput. 2024, 27, 47.

79. Wang, F.; Li, J.; Qi, C.; Wang, L.; Wang, P. Multi-scale multi-modal micro-expression recognition algorithm based on transformer. arXiv 2023, arXiv:2301.02969.

80. Wang, T.; Li, Z.; Xu, Y.; Chen, J.; Genovese, A.; Piuri, V.; Scotti, F. Few-Shot Steel Surface Defect Recognition via Self-Supervised Teacher–Student Model with Min–Max Instances Similarity. IEEE Trans. Instrum. Meas. 2023, 72, 5026016.

81. Zhang, S.; Yang, Y.; Chen, C.; Zhang, X.; Leng, Q.; Zhao, X. Deep learning-based multimodal emotion recognition from audio, visual, and text modalities: A systematic review of recent advancements and future prospects. Expert Syst. Appl. 2024, 237, 121692.

82. Malik, P.; Singh, J.; Ali, F.; Sehra, S.S.; Kwak, D. Action unit based micro-expression recognition framework for driver emotional state detection. Sci. Rep. 2025, 15, 27824.

83. bin Talib, H.K.; Xu, K.; Cao, Y.; Xu, Y.P.; Xu, Z.; Zaman, M.; Akhunzada, A. Micro-Expression Recognition using Convolutional Variational Attention Transformer (ConVAT) with Multihead Attention Mechanism. IEEE Access 2025, 13, 20054–20070.

84. Chefer, H.; Gur, S.; Wolf, L. Transformer interpretability beyond attention visualization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 782–791.

85. Mehta, S.; Rastegari, M. Mobilevit: Lightweight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

| Dataset | Subjects (S) | Samples (N) | Frame Rate, fps (F) | Resolution (R) | Emotion Classes (E) | AU Labels (A) | Labeling (L) | Collection Conditions (C) |
|---|---|---|---|---|---|---|---|---|
| SMIC | 8/16 | 306 | 25/100 | 1280 × 720/640 × 480 | 3 | No | Self-report | VIS, NIR, HS cams |
| CASME | 2 | 195 | 60 | 640 × 480 | 7 to 3 | Yes | FACS experts | Lab-controlled |
| CASME II | 26 | 247 | 200 | 640 × 480 | 7 | Yes | FACS experts | Lab-controlled |
| SAMM | 32 | 159 | 200 | 2040 × 1088 | 7 | Yes | FACS experts | Multi-ethnic lab |
| CAS(ME)² | 22 | 206/300 | 30 | 640 × 480 | Micro + Macro | Yes | Multimodal | Lab setup |
| MMEW | 36 | 3000+ | 90 | 640 × 480 | 6 | No | Multiple annot. | Natural scenes |

| Method Type | Techniques | Advantages | Limitations |
|---|---|---|---|
| Handcrafted Feature Methods [9,25] | LBP-TOP, HOG, Optical Flow | Strong interpretability, low computational cost | Limited expressive power, sensitive to noise and subject variation. |
| Deep Feature Methods [28,70] | CNN, 3D-CNN, Transformer | Automatic extraction of complex features, strong modeling capacity | Requires large datasets, high computational overhead. |
| Deep Learning Classifiers [23] | OFF-ApexNet, STSTNet, ViT | End-to-end learning, high adaptability | Prone to overfitting, requires substantial training data. |
| AU-Structured Modeling [12,71] | FACS, Graph Neural Network (GNN) | High objectivity, suitable for medical/psychological applications | High annotation cost, complex model design. |

| Algorithm | Model Architecture | Dataset | Accuracy (%) | F1 Score (%) |
|---|---|---|---|---|
| OFF-ApexNet [63] | CNN + Optical Flow Difference | CASME II | 74.6 | 71.0 |
| TSDN [73] | Two-Stream Difference Network | CASME II | 71.5 | 70.2 |
| Composite 3D-Fusion [74] | STSTNet + 3D-CNN Fusion | CASME II | 76.0 | 73.5 |
| Micron-BERT [75] | Tiny BERT + Self-Attention | CASME II | 80.1 | 77.2 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shuai, T.; Beng, S.; Khalid, F.B.; Rahmat, R.W.B.O.K. Advances in Facial Micro-Expression Detection and Recognition: A Comprehensive Review. Information 2025, 16, 876. https://doi.org/10.3390/info16100876
