SAM-Based Input Augmentations and Ensemble Strategies for Image Segmentation

Abstract

1. Introduction

- We demonstrate how SAM and SAM 2 can provide valuable information for training segmentation models. Five different input augmentation methods (see Section 2.3) are proposed, each integrating SAM-derived information into images to improve the learning process of segmentation models. These methods outperform the baseline on most datasets; each dataset tends to favor a different method, which suggests that the extracted information is new and meaningful and that its benefit depends on the specific characteristics of each dataset.

- We introduce AuxMix, an ensemble model trained with a combination of SAM-based augmentation techniques, designed to harness the complementary strengths of each method. The rationale behind AuxMix is to integrate diverse and complementary sources of information into a unified ensemble. These include different color representations, the principal directions of variance in the data (via PCA), segmentation logits produced by the SAM model, and stability scores of various segmentation masks; details are provided in Section 2.4.

- We propose a new protocol to evaluate methods trained on the Kvasir-SEG and CVC-ClinicDB datasets by also incorporating the public PolypGen dataset, which is divided across six clinical centers; for details, see Section 2.5.1. Since the training set remains unchanged, this approach does not increase computational demands while expanding the range of unseen datasets used for testing.

2. Materials and Methods

2.1. Base Models: PVT, HSNet

2.2. Foundation Models: SAM and SAM 2

- Two transposed convolutional layers.

- A token-to-image attention block that updates embeddings and feeds them into a three-layer perceptron.

Fine-Tuning SAM

2.3. Input Augmentation

2.3.1. SAMAug

- the first channel contains the grayscale version of x;

- the second channel is populated with the segmentation prior map, providing additional segmentation-related information;

- the third channel accommodates the boundary prior map, enriching the image with boundary details.

Algorithm 1. SAMAug
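The three-channel layout listed above can be illustrated with a minimal NumPy sketch. It assumes masks in the format returned by SAM's automatic mask generator (a list of dictionaries with a boolean `segmentation` array and a `stability_score`). The function names, the 4-neighbour boundary test, the grayscale weights, and the min-max rescaling are assumptions made for illustration and are not taken from the original SAMAug implementation.

```python
import numpy as np

def rescale_to_255(x):
    """Min-max rescale an array to [0, 255] (assumed convention)."""
    lo, hi = float(x.min()), float(x.max())
    if hi <= lo:
        return np.zeros_like(x, dtype=np.float32)
    return (x - lo) / (hi - lo) * 255.0

def mask_boundary(mask):
    """Mask pixels that touch at least one non-mask pixel (4-neighbourhood)."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    return mask & ~interior

def samaug_like(image, masks):
    """Build a 3-channel image: grayscale, segmentation prior, boundary prior."""
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)  # assumed luma weights
    gray = image[..., :3].astype(np.float32) @ weights
    seg_prior = np.zeros(gray.shape, dtype=np.float32)
    bnd_prior = np.zeros(gray.shape, dtype=np.float32)
    for m in masks:
        seg = m["segmentation"].astype(bool)
        seg_prior[seg] += m["stability_score"]  # stability-weighted accumulation
        bnd_prior[mask_boundary(seg)] = 1.0     # mark mask contours
    channels = [gray, rescale_to_255(seg_prior), rescale_to_255(bnd_prior)]
    stacked = np.stack(channels, axis=-1)
    return np.clip(np.rint(stacked), 0, 255).astype(np.uint8)
```

The sketch only reproduces the channel layout; the combined loss used for training is a separate component.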

2.3.2. RG-segPrior

- Segmentation Mask Generation: The algorithm begins by generating segmentation masks for the input image using SamAutomaticMaskGenerator, a class in the SAM library that automatically builds prompts for the given image and outputs a list of binary masks. For all experiments, we used the default parameters of this class; a complete list of the settings is provided in Appendix A.1. Each mask represents a region of interest within the image and is associated with a stability score that indicates the confidence of the segmentation.

- Segmentation Prior Calculation: The segmentation prior matrix is initialized as an empty matrix. The algorithm then iterates over the segmentation masks, adding each mask's stability score to the prior in the regions where that mask indicates the presence of a feature or object. The resulting prior reflects the confidence in the various regions of the image.

- Channel Separation: The image is separated into its red, green, and blue channels. The red and green channels are kept unchanged.

- Segmentation Prior Integration: The blue channel is replaced by the segmentation prior, which is calculated from the segmentation masks. This modification injects segmentation information into the image, highlighting areas of interest as determined by the segmentation model.

- Image Reconstruction: The image is reconstructed by combining the unchanged red and green channels with the modified blue channel. The resulting image now embeds both the original image content and additional segmentation information.

Algorithm 2. Segmentation Prior Modification
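The steps above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the authors' implementation: it assumes an RGB `uint8` image and masks in the format produced by SAM's automatic mask generator (dictionaries with `segmentation` and `stability_score`). The function names and the rescaling of the prior so that its maximum maps to 255 are assumptions.

```python
import numpy as np

def segmentation_prior(masks, shape):
    """Accumulate stability scores over all masks into one prior matrix."""
    prior = np.zeros(shape, dtype=np.float32)
    for m in masks:
        prior[m["segmentation"].astype(bool)] += m["stability_score"]
    return prior

def rg_seg_prior(image, masks):
    """Keep R and G unchanged; replace B with the (rescaled) segmentation prior."""
    prior = segmentation_prior(masks, image.shape[:2])
    peak = float(prior.max())
    # Assumed scaling: the largest accumulated score maps to 255.
    scaled = prior / peak * 255.0 if peak > 0 else prior
    out = image.copy()
    out[..., 2] = np.rint(scaled).astype(image.dtype)
    return out
```

Pixels covered by several masks accumulate a higher value, so overlapping, stable masks appear brightest in the blue channel.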

2.3.3. RG-logits

- Input Image Preparation: The algorithm starts by passing the input image to the SAM model, preparing it for segmentation prediction. This allows the model to process the image and generate the relevant outputs, namely the segmentation mask and the corresponding logits.

- Segmentation Logit Prediction: The SAM model returns the predicted mask along with its associated logits. These logits represent the model’s confidence in different regions of the image, highlighting areas where specific features or objects are present.

- Channel Separation: The image is separated into its red, green, and blue channels. The red and green channels are retained as is; the blue channel is modified based on the segmentation logits (Step 5).

- Logit Normalization: The logits are normalized to fit within the standard image channel range of [0, 255]. This ensures that the logits can be represented as valid pixel values and applied to the blue channel of the image.

- Blue Channel Replacement: The normalized logits replace the original blue channel of the image, resulting in a new image in which the blue channel now reflects the segmentation information. This modification highlights the areas of interest determined by the segmentation model.

- Image Reconstruction: The image is reconstructed by combining the unchanged red and green channels with the modified blue channel. The resulting image now displays the segmentation information while maintaining the original color integrity.

Algorithm 3. Logit-based Modification
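A minimal sketch of the normalization and channel-replacement steps is given below. It assumes that the logits have already been obtained from SAM and resized to the image resolution, so no SAM call appears in the code. The min-max normalization and the function names are assumptions; the text only states that the logits are mapped to [0, 255].

```python
import numpy as np

def normalize_to_uint8(logits):
    """Map raw logits to [0, 255] with min-max scaling (assumed scheme)."""
    logits = np.asarray(logits, dtype=np.float32)
    lo, hi = float(logits.min()), float(logits.max())
    if hi <= lo:
        return np.zeros(logits.shape, dtype=np.uint8)
    return np.rint((logits - lo) / (hi - lo) * 255.0).astype(np.uint8)

def rg_logits(image, logits):
    """Keep R and G unchanged; replace B with the normalized logits."""
    out = image.copy()
    out[..., 2] = normalize_to_uint8(logits)
    return out
```

With this scaling the most negative logit in an image maps to 0 and the most positive to 255, so the blue channel is always stretched over the full range regardless of how confident SAM is in absolute terms.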

2.3.4. SV-segPrior

- Input Image Preparation: The input image is converted to floating-point format to prepare it for processing. This ensures the compatibility of the pixel values with subsequent operations.

- Segmentation Prior Generation: Using the segmentation masks generated by the SAM model, a semantic prior map is created. For each mask, the segmentation region is weighted by its stability score, and these weights are accumulated across all masks to produce the segmentation prior.

- Color Space Conversion: The image is converted from the RGB to the HSV color space. This transformation separates the image into hue (H), saturation (S), and value (V) channels, so that the hue channel can be modified in isolation.

- Channel Separation and Modification: The segmentation prior is assigned to the H channel, effectively encoding the semantic information in the hue component of the HSV image. The S and V channels remain unchanged.

- Image Reconstruction: The modified H channel is recombined with the original S and V channels to reconstruct the modified HSV image. The resulting image integrates semantic information directly into its color representation.

Algorithm 4. HSV-based Segmentation Prior Modification
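The procedure above can be sketched as follows. Because the hue channel is overwritten, only S and V need to be computed from the RGB input, using the standard HSV definitions (V is the maximum of the three channels, S is the chroma divided by V). The function name, the `[0, 1]` value range, and the scaling of the prior by its maximum are assumptions made for this sketch.

```python
import numpy as np

def sv_seg_prior(image, masks):
    """Return an HSV image whose H channel is the stability-weighted prior."""
    rgb = image.astype(np.float32) / 255.0  # floating-point copy in [0, 1]

    # Accumulate stability scores over all SAM masks.
    prior = np.zeros(rgb.shape[:2], dtype=np.float32)
    for m in masks:
        prior[m["segmentation"].astype(bool)] += m["stability_score"]

    # Standard HSV definitions for value and saturation.
    v = rgb.max(axis=-1)
    chroma = v - rgb.min(axis=-1)
    s = np.divide(chroma, v, out=np.zeros_like(v), where=v > 0)

    # Assumed scaling of the prior to the hue range [0, 1].
    peak = float(prior.max())
    h = prior / peak if peak > 0 else prior
    return np.stack([h, s, v], axis=-1)
```

Hue is a cyclic quantity, so in practice one may prefer to scale the prior to a sub-interval of the hue range to keep the lowest and highest values visually distinct; the text does not specify this detail.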

2.3.5. PCA-segPrior

- Segmentation Mask Generation: The algorithm begins by generating segmentation masks using a mask generator. These masks represent regions of interest within the image, and each mask is associated with a stability score, which indicates the confidence in the mask’s correctness.

- Segmentation Prior Calculation: The segmentation prior matrix is initialized as a blank matrix (zeros). Then, for each segmentation mask, the algorithm updates the prior by multiplying the mask by its corresponding stability score and accumulating the result. The segmentation prior thus reflects the confidence in various regions of the image based on the available segmentation masks.

- Dimensionality Reduction via PCA: The image is subjected to PCA to reduce its dimensionality from three channels (RGB) to two channels. The reduced representation captures the key features of the image in fewer dimensions, making it more efficient for further processing.

- Scaling of PCA and Segmentation Data: The PCA output and the segmentation prior are normalized to a common range so that both can be stored as image channels for visualization and processing.

- Image Reconstruction: The image is reconstructed by combining the scaled PCA results and the segmentation prior into the red, green, and blue channels of the image. The red and green channels come from the PCA output, while the blue channel is influenced by the segmentation prior. This modified image now encodes both the reduced-dimensional representation of the image and additional information from the segmentation.

Algorithm 5. PCA and Segmentation Prior Modification

- Image Segmentation: the segmentation prior improves the analysis by injecting spatially relevant data that reflect the structure and boundaries of objects in the image. By modifying the color channels based on segmentation, the image highlights areas of interest, making further analysis or feature extraction more accurate.

- Dimensionality Reduction: reducing the dimensionality of the image using PCA can significantly simplify computational tasks, especially when processing large datasets or when the main features of the image can be captured in fewer dimensions. It also helps to reduce noise by focusing on the principal components of the image.

- Visual Enhancement: the combination of PCA and segmentation can also aid in visual enhancement, where segmentation-prioritized areas are highlighted in the image, making it easier to interpret or present visually.
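The PCA-segPrior steps described in this subsection can be sketched with NumPy alone. PCA is computed here through an eigendecomposition of the 3 × 3 channel covariance matrix, which is one standard way to obtain the principal directions. The function names and the per-channel min-max scaling to [0, 255] are assumptions for illustration.

```python
import numpy as np

def pca_two_channels(image):
    """Project RGB pixels onto their two leading principal directions."""
    h, w, _ = image.shape
    pixels = image.reshape(-1, 3).astype(np.float64)
    centered = pixels - pixels.mean(axis=0)
    cov = np.cov(centered, rowvar=False)
    _, vecs = np.linalg.eigh(cov)   # eigenvalues in ascending order
    top2 = vecs[:, ::-1][:, :2]     # two directions of largest variance
    return (centered @ top2).reshape(h, w, 2)

def scale_channel(x):
    """Min-max scale one channel to [0, 255] (assumed range)."""
    lo, hi = float(x.min()), float(x.max())
    if hi <= lo:
        return np.zeros(x.shape, dtype=np.uint8)
    return np.rint((x - lo) / (hi - lo) * 255.0).astype(np.uint8)

def pca_seg_prior(image, masks):
    """R and G hold the two PCA components; B holds the segmentation prior."""
    prior = np.zeros(image.shape[:2], dtype=np.float64)
    for m in masks:
        prior += m["segmentation"].astype(np.float64) * m["stability_score"]
    pcs = pca_two_channels(image)
    return np.stack([scale_channel(pcs[..., 0]),
                     scale_channel(pcs[..., 1]),
                     scale_channel(prior)], axis=-1)
```

Note that the sign of each principal direction is arbitrary, so two implementations may produce PCA channels that are inverted with respect to each other.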

2.3.6. OurSAMAug

- Prior matrix calculation: In this stage, both the segmentation prior matrix and the boundary prior matrix are calculated as described for SAMAug. An additional area matrix is initialized to a zero matrix; then, for each mask provided by SAM, the corresponding region of the area matrix is filled with a value proportional to the area of the mask.

- Prior Integration: The segmentation prior matrix is added to the G channel and the boundary prior matrix is added to the B channel, as in the SAMAug method. The area matrix is added to the R channel.

- Image Reconstruction: The image is reconstructed by merging the green and blue channels, which contain the same information as in SAMAug (the segmentation prior and the boundary prior), with the red channel, which carries the additional area matrix. The final image then contains the same information as SAMAug, plus additional information on the size of the detected masks.

Algorithm 6. Our simple modification of the SAMAug algorithm
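The area-based matrix that distinguishes OurSAMAug from SAMAug can be sketched as follows. The text only states that each mask region is filled in proportion to the mask area; the normalization by the image size, the handling of overlapping masks (the larger value is kept), and the function name are assumptions made for this sketch.

```python
import numpy as np

def area_prior(masks, shape):
    """Fill each mask region with the fraction of the image that the mask covers."""
    prior = np.zeros(shape, dtype=np.float32)
    total = float(shape[0] * shape[1])
    for m in masks:
        seg = m["segmentation"].astype(bool)
        fraction = seg.sum() / total  # relative mask area in [0, 1]
        # Assumed overlap rule: keep the value of the largest covering mask.
        prior[seg] = np.maximum(prior[seg], fraction)
    return prior
```

The resulting matrix would then be added to the R channel, while the G and B channels receive the segmentation and boundary priors as in SAMAug.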

2.4. Ensembles

- Baseline(KX): K HSNet/PVT models (X = ’H’ for HSNet, X = ’P’ for PVT) are combined using the mean rule, employing standard data augmentation as the augmenting strategy.

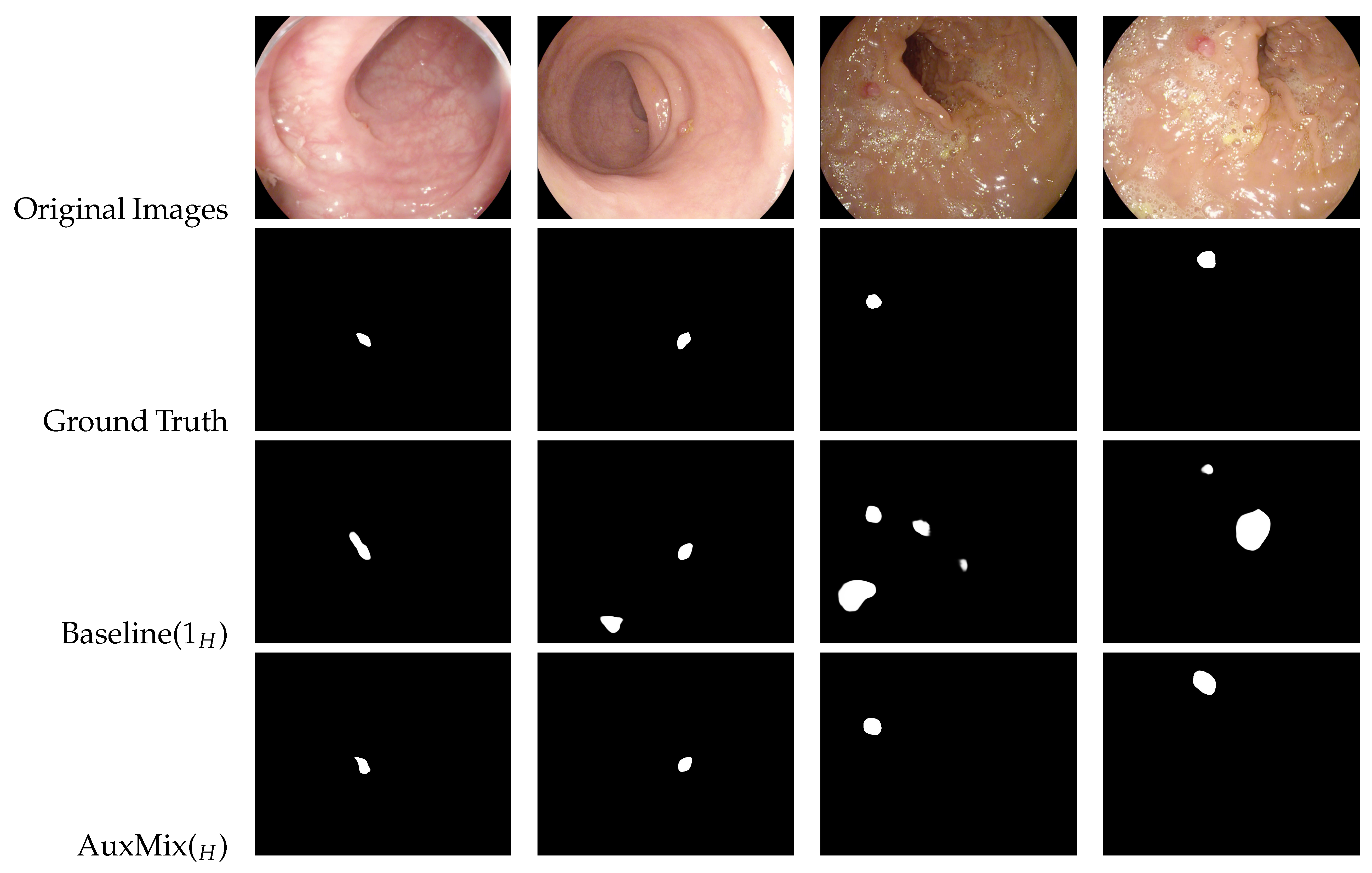

- AuxMix(X): fusion by the mean rule among nine HSNet/PVT models (X = ’H’ for HSNet, X = ’P’ for PVT): three HSNet/PVT models for BaselineX and one HSNet/PVT model for each of the following strategies: SAM1_RG-logits, SAM2_RG-logits, SAM1_SAMAug, SAM2_SAMAug, SAM1_PCA-segPrior and SAM2_PCA-segPrior (see Figure 5).

- AuxMix(H+P): fusion by the mean rule of AuxMix(H) and AuxMix(P).

- HUGE(X): fusion by the mean rule among 27 HSNet/PVT models (X = ’H’ for HSNet, X = ’P’ for PVT): nine HSNet/PVT models for BaselineX; three HSNet/PVT models for each of the following strategies: SAM1_RG-logits, SAM2_RG-logits, SAM1_SAMAug, SAM2_SAMAug, SAM1_PCA-segPrior and SAM2_PCA-segPrior.

- HUGE: fusion by the mean rule of HUGE(H) and HUGE(P).
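Fusion by the mean rule, used by all the ensembles above, amounts to averaging the per-pixel probability maps of the member models. The sketch below illustrates this with NumPy; the function name and the 0.5 binarization threshold are assumptions, and the member list simply restates the AuxMix(X) composition given above.

```python
import numpy as np

# Composition of AuxMix(X) as listed above: three baseline models
# plus one model for each of six SAM-based strategies.
AUXMIX_MEMBERS = ["Baseline"] * 3 + [
    "SAM1_RG-logits", "SAM2_RG-logits",
    "SAM1_SAMAug", "SAM2_SAMAug",
    "SAM1_PCA-segPrior", "SAM2_PCA-segPrior",
]

def mean_rule(prob_maps, threshold=0.5):
    """Average per-pixel probabilities and binarize (threshold is assumed)."""
    mean = np.stack(prob_maps, axis=0).mean(axis=0)
    return mean, mean >= threshold
```

Since every member contributes with the same weight, AuxMix(H+P) and HUGE can be obtained by applying the same function to the concatenated list of HSNet and PVT probability maps when both sub-ensembles have the same size.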

2.5. Datasets

2.5.1. Polyp Segmentation

- CVC-T [34] contains 300 images. It is a test set derived from the larger CVC-EndoSceneStill dataset, which includes colonoscopy images with different polyp presentations.

- CVC-ClinicDB [35] (ClinDB) includes 612 images extracted from 31 videos of colonoscopy procedures. Expert annotations identify polyp regions, and ground-truth data are also available for light reflections. The images in this dataset are uniformly sized at 576 × 768 pixels.

- Kvasir-SEG [36] (Kvasir) contains 1000 images meticulously labeled and verified by medical professionals. This dataset features various segments of the digestive system, including both healthy and diseased tissue. The images have resolutions ranging from 720 × 576 pixels to 1920 × 1072 pixels and are organized into folders based on content. Some images also include a small picture-in-picture display indicating the position of the endoscope within the body.

- CVC-ColonDB [37] (ColDB) contains 380 images and provides a diverse range of polyp appearances to maximize dataset variability and encompass various polyp types and scenarios.

- ETIS-LaribPolypDB [38] (ETIS) consists of 196 colonoscopy images, which are valuable for evaluating segmentation performance due to their variety and quality.

- PolypGen [39] is an open-access resource containing 1537 polyp images. It was collected from six centers across Europe and Africa, offering a total of 3762 positive frames and 4275 negative frames. These images represent diverse populations, endoscopic systems, and surveillance expertise from Norway, France, the United Kingdom, Egypt, and Italy.

2.5.2. Segmentation of X-Ray Images

2.5.3. Camouflaged Object Segmentation

2.5.4. Locust Segmentation

3. Results and Discussion

- First, we tested the effectiveness of our proposed input augmentation strategies without resorting to ensembling, that is, using base models.

- Then, we tested the performance of our proposed ensembles.

- Lastly, we carried out ablation studies to quantify the impact of the different implementation choices in our strategies. Some studies could be performed with the experimental data collected in the second phase, while others required new experiments.

- The model is trained with the combined loss function discussed in Section 2.3.1, meaning it is trained with both raw and augmented images along with the corresponding ground truth.

- During test time, two inferences of the same model are performed and the mask with lower entropy is chosen.

- No other classical augmentation techniques are used alongside SAM-augmentation: each model is trained using exactly one input augmentation algorithm.
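The test-time selection between the two inferences can be sketched as follows. The sketch assumes that each inference yields a per-pixel foreground probability map and that the entropy of a mask is measured as the mean binary entropy over its pixels; both the entropy definition and the function names are assumptions.

```python
import numpy as np

def mean_binary_entropy(prob, eps=1e-7):
    """Mean per-pixel entropy of a foreground probability map."""
    p = np.clip(np.asarray(prob, dtype=np.float64), eps, 1.0 - eps)
    return float(np.mean(-p * np.log(p) - (1.0 - p) * np.log(1.0 - p)))

def select_lower_entropy(prob_raw, prob_aug):
    """Return the prediction (raw or augmented input) with lower entropy."""
    if mean_binary_entropy(prob_raw) <= mean_binary_entropy(prob_aug):
        return prob_raw
    return prob_aug
```

A prediction whose probabilities are close to 0 or 1 has low entropy, so this rule favors the input type for which the model is more decisive.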

3.1. Input Augmentation

- Baseline() obtains the best performance only in two of the five datasets: ETIS and Ribs. For the other datasets, the best results are obtained by an approach proposed in this paper.

- The performance of each method is not stable across the datasets. Baseline() performs very well for ETIS but poorly for ClinDB. SAM1_RG-logits achieves the highest mean performance in the Polyp datasets but in CVC-T, Ribs, and CAMO it performs worse than many other approaches.

3.2. Ensembles

- Ensembling consistently improves the performance of standalone base networks, for both HSNet and PVT topologies.

- While the ensemble approach proposed here yields a statistically significant improvement over the baseline for HSNet (Wilcoxon signed-rank test at the 0.05 significance level), this is not observed for PVT. Based on our results, we hypothesize that the method may have reached a performance plateau. For instance, in polyp datasets, an ensemble combining eight SAM-based methods (PCA-segPrior, RG-logits, RG-segPrior, OurSAMAug for both SAM and SAM 2) achieves the same mean performance as Baseline(). Additionally, merging the two ensembles does not result in any performance gain, suggesting saturation.

- AuxMix(X) offers the best trade-off between performance and computation time.

- The results on PolypGen are also noteworthy: the ensemble method appears to offer limited benefits, even for HSNet, and performance varies significantly across centers.

- The best ensemble models proposed in this work achieve state-of-the-art performance among CNN- and Transformer-based methods. Even some very recent results based on the fusion of CNNs and Transformers [43] are not competitive with those of our ensemble-based models. Table 7 provides an extensive comparison with the literature: the only approach that reports better results than ours is based on Mamba [44] and its implementation has not yet been made public. Nevertheless, the ensemble strategy we introduce in this paper is also compatible with Mamba-based models.

3.3. Ablation Studies

3.3.1. Contribution of Augmented Images

- The model receives only baseline images.

- The model receives only augmented images.

3.3.2. Effect of SAM vs. SAM 2

3.3.3. Effects of Ensembling

3.3.4. Evaluating Input Strategies

- Baseline: we use the HSNet model trained as described in the original paper, with the training set and the standard data augmentation proposed by the authors, and evaluate it on a test set composed exclusively of original images.

- Baseline (aug-only): models trained exclusively on augmented images and evaluated on the same test set. In this case, the entropy-based method is not applied; instead, evaluation is performed directly on the predictions produced when the model receives only augmented images as input.

- Combined Loss: the approach proposed in the SAMAug paper, which trains with both baseline and augmented images, integrating them within a single loss function. At test time, the entropy-based method is applied to combine the predictions from the two input types.

3.3.5. Effect of Excluding Each Augmentation from AuxMix

3.3.6. Evaluating Network Stability

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Experimental Details and Augmentations

Appendix A.1. SAM Mask Generation Parameters

- points_per_side = 32;

- points_per_batch = 64;

- pred_iou_thresh = 0.5;

- stability_score_thresh = 0.95;

- stability_score_offset = 1.0;

- box_nms_thresh = 0.5;

- crop_n_layers = 0;

- crop_nms_thresh = 0.5;

- crop_overlap_ratio = 512/1500;

- crop_n_points_downscale_factor = 1;

- point_grids = None;

- min_mask_region_area = 0;

- output_mode = “binary_mask”.
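For convenience, the settings listed above can be collected in a dictionary and passed as keyword arguments to `SamAutomaticMaskGenerator` from the `segment_anything` package. The sketch below is illustrative: the dictionary and helper names are hypothetical, the values are copied from the list above, and the SAM model is assumed to have been loaded beforehand.

```python
# Settings from the list above, keyed by the constructor argument names
# of SamAutomaticMaskGenerator.
MASK_GENERATOR_KWARGS = {
    "points_per_side": 32,
    "points_per_batch": 64,
    "pred_iou_thresh": 0.5,
    "stability_score_thresh": 0.95,
    "stability_score_offset": 1.0,
    "box_nms_thresh": 0.5,
    "crop_n_layers": 0,
    "crop_nms_thresh": 0.5,
    "crop_overlap_ratio": 512 / 1500,
    "crop_n_points_downscale_factor": 1,
    "point_grids": None,
    "min_mask_region_area": 0,
    "output_mode": "binary_mask",
}

def build_mask_generator(sam_model):
    """Hypothetical helper: wrap an already loaded SAM model."""
    # Imported lazily so that the settings can be inspected without SAM installed.
    from segment_anything import SamAutomaticMaskGenerator
    return SamAutomaticMaskGenerator(sam_model, **MASK_GENERATOR_KWARGS)
```

Calling `generate(image)` on the returned object yields a list of dictionaries, one per mask, whose `segmentation` and `stability_score` entries are the quantities used by the augmentation methods of Section 2.3.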

Appendix A.2. Image Augmentations

Appendix B. Channel Visual Comparison

References

- Nanni, L.; Lumini, A.; Fantozzi, C. Exploring the Potential of Ensembles of Deep Learning Networks for Image Segmentation. Information 2023, 14, 657.

- Elhassan, M.A.; Zhou, C.; Khan, A.; Benabid, A.; Adam, A.B.; Mehmood, A.; Wambugu, N. Real-time semantic segmentation for autonomous driving: A review of CNNs, Transformers, and Beyond. J. King Saud Univ. Comput. Inf. Sci. 2024, 36, 102226.

- Qureshi, I.; Yan, J.; Abbas, Q.; Shaheed, K.; Riaz, A.B.; Wahid, A.; Khan, M.W.J.; Szczuko, P. Medical image segmentation using deep semantic-based methods: A review of techniques, applications and emerging trends. Inf. Fusion 2023, 90, 316–352.

- Hurtado, J.V.; Valada, A. Semantic Scene Segmentation for Robotics. arXiv 2024, arXiv:2401.07589.

- Farhan Audianto, M.; Dwi Sulistiyo, M.; Rachmawati, E.; Hadiyoso, S. Fashion Parsing and Identification for E-Commerce Using Semantic Segmentation. In Proceedings of the 12th International Conference on Information and Communication Technology (ICoICT), Bandung, Indonesia, 7–8 August 2024; pp. 566–571.

- Wang, S.; Mu, X.; Yang, D.; He, H.; Zhao, P. Attention Guided Encoder-Decoder Network With Multi-Scale Context Aggregation for Land Cover Segmentation. IEEE Access 2020, 8, 215299–215309.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Siddique, N.; Paheding, S.; Elkin, C.P.; Devabhaktuni, V. U-Net and Its Variants for Medical Image Segmentation: A Review of Theory and Applications. IEEE Access 2021, 9, 82031–82057.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Hao, S.; Zhou, Y.; Guo, Y. A Brief Survey on Semantic Segmentation with Deep Learning. Neurocomputing 2020, 406, 302–321.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale. In Proceedings of the 9th International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid Vision Transformer: A Versatile Backbone for Dense Prediction without Convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 548–558.

- Mohammed, A.; Kora, R. A comprehensive review on ensemble deep learning: Opportunities and challenges. J. King Saud Univ.-Comput. Inf. Sci. 2023, 35, 757–774.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. arXiv 2023, arXiv:2304.02643.

- Ravi, N.; Gabeur, V.; Hu, Y.T.; Hu, R.; Ryali, C.; Ma, T.; Khedr, H.; Rädle, R.; Rolland, C.; Gustafson, L.; et al. SAM 2: Segment Anything in Images and Videos. arXiv 2024, arXiv:2408.00714.

- Zhang, Y.; Zhou, T.; Wang, S.; Liang, P.; Zhang, Y.; Chen, D.Z. Input Augmentation with SAM: Boosting Medical Image Segmentation with Segmentation Foundation Model. In MICCAI 2023 Workshops, Proceedings of the Medical Image Computing and Computer Assisted Intervention, Vancouver, BC, Canada, 8–12 October 2023. Celebi, M.E., Salekin, M.S., Kim, H., Albarqouni, S., Barata, C., Halpern, A., Tschandl, P., Combalia, M., Liu, Y., Zamzmi, G., et al., Eds.; Springer: Cham, Switzerland, 2023; pp. 129–139.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. PVT v2: Improved baselines with Pyramid Vision Transformer. Comput. Vis. Media 2022, 8, 415–424.

- Zhang, W.; Fu, C.; Zheng, Y.; Zhang, F.; Zhao, Y.; Sham, C.W. HSNet: A hybrid semantic network for polyp segmentation. Comput. Biol. Med. 2022, 150, 106173.

- Li, Y.; Mao, H.; Girshick, R.; He, K. Exploring Plain Vision Transformer Backbones for Object Detection. In Computer Vision—ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022. Proceedings, Part IX; Springer: Berlin/Heidelberg, Germany, 2022; pp. 280–296.

- Tancik, M.; Srinivasan, P.; Mildenhall, B.; Fridovich-Keil, S.; Raghavan, N.; Singhal, U.; Ramamoorthi, R.; Barron, J.; Ng, R. Fourier features let networks learn high frequency functions in low dimensional domains. Adv. Neural Inf. Process. Syst. 2020, 33, 7537–7547.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems. Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Zhang, C.; Liu, L.; Cui, Y.; Huang, G.; Lin, W.; Yang, Y.; Hu, Y. A Comprehensive Survey on Segment Anything Model for Vision and Beyond. arXiv 2023, arXiv:2305.08196.

- Cheng, J.; Ye, J.; Deng, Z.; Chen, J.; Li, T.; Wang, H.; Su, Y.; Huang, Z.; Chen, J.; Sun, L.J.H.; et al. SAM-Med2D. arXiv 2023, arXiv:2308.16184.

- Lin, X.; Xiang, Y.; Zhang, L.; Yang, X.; Yan, Z.; Yu, L. SAMUS: Adapting Segment Anything Model for Clinically-Friendly and Generalizable Ultrasound Image Segmentation. arXiv 2023, arXiv:2309.06824.

- Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment Anything in Medical Images. arXiv 2023, arXiv:2304.12306.

- Zhang, K.; Liu, D. Customized Segment Anything Model for Medical Image Segmentation. arXiv 2023, arXiv:2304.13785.

- Wu, J.; Ji, W.; Liu, Y.; Fu, H.; Xu, M.; Xu, Y.; Jin, Y. Medical SAM Adapter: Adapting Segment Anything Model for Medical Image Segmentation. arXiv 2023, arXiv:2304.12620.

- Ryali, C.; Hu, Y.T.; Bolya, D.; Wei, C.; Fan, H.; Huang, P.Y.; Aggarwal, V.; Chowdhury, A.; Poursaeed, O.; Hoffman, J.; et al. Hiera: A hierarchical vision transformer without the bells-and-whistles. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 29441–29454.

- Curcio, C.A.; Allen, K.A.; Sloan, K.R.; Lerea, C.L.; Hurley, J.B.; Klock, I.B.; Milam, A.H. Distribution and morphology of human cone photoreceptors stained with anti-blue opsin. J. Comp. Neurol. 1991, 312, 610–624.

- Chen, C.; Huang, W.; Zhang, L.; Mow, W.H. Robust and Unobtrusive Display-to-Camera Communications via Blue Channel Embedding. IEEE Trans. Image Process. 2019, 28, 156–169.

- Emre Celebi, M.; Wen, Q.; Hwang, S.; Iyatomi, H.; Schaefer, G. Lesion border detection in dermoscopy images using ensembles of thresholding methods. Skin Res. Technol. 2013, 19, e252–e258.

- Vázquez, D.; Bernal, J.; Sánchez, F.J.; Fernández-Esparrach, G.; López, A.M.; Romero, A.; Drozdzal, M.; Courville, A. A Benchmark for Endoluminal Scene Segmentation of Colonoscopy Images. arXiv 2016, arXiv:1612.00799.

- Bernal, J.; Sánchez, F.J.; Fernández-Esparrach, G.; Gil, D.; Rodríguez, C.; Vilariño, F. WM-DOVA maps for accurate polyp highlighting in colonoscopy: Validation vs. saliency maps from physicians. Comput. Med. Imaging Graph. 2015, 43, 99–111.

- Jha, D.; Smedsrud, P.H.; Riegler, M.A.; Halvorsen, P.; de Lange, T.; Johansen, D.; Johansen, H.D. Kvasir-SEG: A Segmented Polyp Dataset. In Proceedings of the International Conference on Multimedia Modeling, Daejeon, South Korea, 5–8 January 2020; pp. 451–462.

- Bernal, J.; Sánchez, J.; Vilariño, F. Towards automatic polyp detection with a polyp appearance model. Pattern Recognit. 2012, 45, 3166–3182.

- Silva, J.; Histace, A.; Romain, O.; Dray, X.; Granado, B. Toward embedded detection of polyps in WCE images for early diagnosis of colorectal cancer. Int. J. Comput. Assist. Radiol. Surg. 2014, 9, 283–293.

- Ali, S.; Jha, D.; Ghatwary, N.; Realdon, S.; Cannizzaro, R.; Salem, O.E.; Lamarque, D.; Daul, C.; Riegler, M.A.; Anonsen, K.V.; et al. A multi-centre polyp detection and segmentation dataset for generalisability assessment. Sci. Data 2023, 10, 75.

- Nguyen, H.C.; Le, T.T.; Pham, H.H.; Nguyen, H.Q. VinDr-RibCXR: A Benchmark Dataset for Automatic Segmentation and Labeling of Individual Ribs on Chest X-rays. arXiv 2021, arXiv:2107.01327.

- Le, T.N.; Nguyen, T.V.; Nie, Z.; Tran, M.T.; Sugimoto, A. Anabranch network for camouflaged object segmentation. Comput. Vis. Image Underst. 2019, 184, 45–56.

- Liu, L.; Liu, M.; Meng, K.; Yang, L.; Zhao, M.; Mei, S. Camouflaged locust segmentation based on PraNet. Comput. Electron. Agric. 2022, 198, 107061.

- Luo, C.; Wang, Y.; Deng, Z.; Lou, Q.; Zhao, Z.; Ge, Y.; Hu, S. Colonic polyp segmentation based on transformer-convolutional neural networks fusion. Pattern Recognit. 2025, 170, 112116.

- Dutta, T.K.; Majhi, S.; Nayak, D.R.; Jha, D. SAM-Mamba: Mamba Guided SAM Architecture for Generalized Zero-Shot Polyp Segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025; pp. 4655–4664.

- Ren, J.; Zhang, X.; Zhang, L. HiFiSeg: High-Frequency Information Enhanced Polyp Segmentation With Global-Local Vision Transformer. IEEE Access 2025, 13, 38704–38713.

- Xia, Y.; Yun, H.; Liu, Y.; Luan, J.; Li, M. MGCBFormer: The multiscale grid-prior and class-inter boundary-aware transformer for polyp segmentation. Comput. Biol. Med. 2023, 167, 107600.

- Rahman, M.M.; Marculescu, R. Medical Image Segmentation via Cascaded Attention Decoding. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 4–6 January 2023; pp. 6211–6220.

- Yue, G.; Xiao, H.; Zhou, T.; Tan, S.; Liu, Y.; Yan, W. Progressive Feature Enhancement Network for Automated Colorectal Polyp Segmentation. IEEE Trans. Autom. Sci. Eng. 2025, 22, 5792–5803.

- Sanderson, E.; Matuszewski, B.J. FCN-transformer feature fusion for polyp segmentation. In Medical Image Understanding and Analysis, Proceedings of the 26th Annual Conference, Cambridge, UK, 27–29 July 2022. Springer: Cham, Switzerland, 2022; pp. 892–907.

- Xiao, B.; Hu, J.; Li, W.; Pun, C.M.; Bi, X. CTNet: Contrastive Transformer Network for Polyp Segmentation. IEEE Trans. Cybern. 2024, 54, 5040–5053.

- Li, W.; Zhao, Y.; Li, F.; Wang, L. MIA-Net: Multi-information aggregation network combining transformers and convolutional feature learning for polyp segmentation. Knowl.-Based Syst. 2022, 247, 108824.

- Duc, N.T.; Oanh, N.T.; Thuy, N.T.; Triet, T.M.; Dinh, V.S. ColonFormer: An Efficient Transformer Based Method for Colon Polyp Segmentation. IEEE Access 2022, 10, 80575–80586.

- Liu, F.; Hua, Z.; Li, J.; Fan, L. DBMF: Dual Branch Multiscale Feature Fusion Network for polyp segmentation. Comput. Biol. Med. 2022, 151, 106304.

- Dong, B.; Wang, W.; Fan, D.P.; Li, J.; Fu, H.; Shao, L. Polyp-PVT: Polyp Segmentation with Pyramid Vision Transformers. CAAI Artif. Intell. Res. 2023, 2, 9150015.

- Lin, A.; Chen, B.; Xu, J.; Zhang, Z.; Lu, G.; Zhang, D. DS-TransUNet: Dual Swin Transformer U-Net for Medical Image Segmentation. IEEE Trans. Instrum. Meas. 2022, 71, 4005615.

- Yue, G.; Li, Y.; Wu, S.; Jiang, B.; Zhou, T.; Yan, W.; Lin, H.; Wang, T. Dual-Domain Feature Interaction Network for Automatic Colorectal Polyp Segmentation. IEEE Trans. Instrum. Meas. 2024, 73, 5034012.

- Lewis, J.; Cha, Y.J.; Kim, J. Dual encoder–decoder-based deep polyp segmentation network for colonoscopy images. Sci. Rep. 2023, 13, 1183.

- Bui, N.T.; Hoang, D.H.; Nguyen, Q.T.; Tran, M.T.; Le, N. MEGANet: Multi-Scale Edge-Guided Attention Network for Weak Boundary Polyp Segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 1–6 January 2024; pp. 7970–7979.

- Yue, G.; Li, Y.; Jiang, W.; Zhou, W.; Zhou, T. Boundary Refinement Network for Colorectal Polyp Segmentation in Colonoscopy Images. IEEE Signal Process. Lett. 2024, 31, 954–958.

- Shi, W.; Xu, J.; Gao, P. SSformer: A lightweight transformer for semantic segmentation. In Proceedings of the IEEE 24th International Workshop on Multimedia Signal Processing (MMSP), Shanghai, China, 26–28 September 2022; IEEE: New York, NY, USA, 2022; pp. 1–5.

- Kim, T.; Lee, H.; Kim, D. UACANet: Uncertainty Augmented Context Attention for Polyp Segmentation. In Proceedings of the 29th ACM International Conference on Multimedia—MM ’21, New York, NY, USA, 20–24 October 2021; pp. 2167–2175.

- Lou, A.; Guan, S.; Loew, M.H. CaraNet: Context axial reverse attention network for segmentation of small medical objects. J. Med. Imaging 2023, 10, 014005.

- Zhang, Y.; Liu, H.; Hu, Q. TransFuse: Fusing Transformers and CNNs for Medical Image Segmentation. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2021, 24th International Conference, Strasbourg, France, 27 September–1 October 2021. de Bruijne, M., Cattin, P.C., Cotin, S., Padoy, N., Speidel, S., Zheng, Y., Essert, C., Eds.; Springer: Cham, Switzerland, 2021; pp. 14–24.

- Wei, J.; Hu, Y.; Zhang, R.; Li, Z.; Zhou, S.K.; Cui, S. Shallow Attention Network for Polyp Segmentation. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2021, 24th International Conference, Strasbourg, France, 27 September–1 October 2021. Springer: Cham, Switzerland, 2021; Volume 12901, pp. 699–708.

- Zhao, X.; Jia, H.; Pang, Y.; Lv, L.; Tian, F.; Zhang, L.; Sun, W.; Lu, H. M2SNet: Multi-scale in Multi-scale Subtraction Network for Medical Image Segmentation. arXiv 2023, arXiv:2303.10894.

- Zhou, T.; Zhou, Y.; He, K.; Gong, C.; Yang, J.; Fu, H.; Shen, D. Cross-level Feature Aggregation Network for Polyp Segmentation. Pattern Recognit. 2023, 140, 109555.

- Zhao, X.; Zhang, L.; Lu, H. Automatic Polyp Segmentation via Multi-scale Subtraction Network. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2021, Strasbourg, France, 27 September–1 October 2021. de Bruijne, M., Cattin, P.C., Cotin, S., Padoy, N., Speidel, S., Zheng, Y., Essert, C., Eds.; Springer: Cham, Switzerland, 2021; pp. 120–130.

- Carisi, L. Augmentation and Ensembles: Improving Medical Image Segmentation with SAM and Deep Networks. Master’s Thesis, University of Padova, Padova, Italy, 2024. Available online: https://hdl.handle.net/20.500.12608/78065 (accessed on 5 August 2025).

- Chiereghin, F. Exploring SAM-Augmented Ensembles For Image Segmentation Tasks. Master’s Thesis, University of Padova, Padova, Italy, 2025. Available online: https://hdl.handle.net/20.500.12608/84251 (accessed on 5 August 2025).

| Parameter | Value |
|---|---|
| Model | SAM1, ViT-H backbone |
| Trainable layers | Mask decoder only |
| Frozen layers | Vision encoder, Prompt encoder |
| Input resolution | Resized to img_size = 1024 (longest side), aspect ratio preserved |
| Batch size | 1 (single image) |
| Number of epochs | 20 |
| Optimizer | Adam |
| Learning rate | |
| Weight decay | 0 |
| Loss function | DiceCELoss (sigmoid = True, squared_pred = True) |
| Data augmentation | Bounding box perturbation ± 20 px, no additional augmentations |
| Prompt type | Bounding boxes from masks |
| Early stopping | Not implemented |
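
The "Prompt type" and "Data augmentation" rows in the table above can be illustrated with a minimal sketch. It derives a tight bounding box from a binary mask and shifts each coordinate by up to 20 px. The function name `box_prompt_from_mask`, the uniform and independent per-coordinate jitter, and the clipping to the image bounds are assumptions made for illustration; the source only states that boxes are taken from masks and perturbed by ± 20 px.

```python
import numpy as np


def box_prompt_from_mask(mask, max_shift=20, rng=None):
    """Return a jittered [x_min, y_min, x_max, y_max] box around the mask foreground.

    Hypothetical helper: the jitter distribution is an assumption, not the
    authors' documented procedure.
    """
    rng = np.random.default_rng() if rng is None else rng
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask has no foreground pixels")
    height, width = mask.shape

    # Tight box around all foreground pixels.
    box = np.array([xs.min(), ys.min(), xs.max(), ys.max()], dtype=np.int64)

    # Shift every coordinate independently by an integer in [-max_shift, max_shift].
    box += rng.integers(-max_shift, max_shift + 1, size=4)

    # Keep the box inside the image.
    box[[0, 2]] = np.clip(box[[0, 2]], 0, width - 1)
    box[[1, 3]] = np.clip(box[[1, 3]], 0, height - 1)

    # Restore corner ordering in case the jitter inverted a very small box.
    x0, y0, x1, y1 = box
    return np.array([min(x0, x1), min(y0, y1), max(x0, x1), max(y0, y1)])


# Toy example: a rectangular foreground region in a 256 x 256 mask.
mask = np.zeros((256, 256), dtype=np.uint8)
mask[100:150, 80:200] = 1
box = box_prompt_from_mask(mask, rng=np.random.default_rng(0))
print(box)
```

Passing a seeded generator keeps the perturbation reproducible across runs, which helps when comparing fine-tuning configurations.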

| Method | CVC-T | ClinDB | Kvasir | ColDB | ETIS | Average |
|---|---|---|---|---|---|---|
| Baseline() | 0.903 | 0.917 | 0.910 | 0.819 | 0.816 | 0.873 |
| SAM1_RG-segPrior | 0.894 | 0.938 | 0.925 | 0.823 | 0.800 | 0.876 |
| SAM1_RG-logits | 0.898 | 0.944 | 0.917 | 0.816 | 0.808 | 0.877 |
| SAM1_SV-segPrior | 0.899 | 0.943 | 0.910 | 0.811 | 0.756 | 0.864 |
| SAM1_PCA-segPrior | 0.903 | 0.945 | 0.905 | 0.820 | 0.777 | 0.870 |
| SAM1_SAMAug | 0.907 | 0.932 | 0.918 | 0.811 | 0.768 | 0.867 |
| SAM1_OurSAMAug | 0.880 | 0.943 | 0.913 | 0.814 | 0.769 | 0.861 |
| SAM2_RG-segPrior | 0.878 | 0.936 | 0.915 | 0.824 | 0.798 | 0.870 |
| SAM2_RG-logits | 0.890 | 0.934 | 0.910 | 0.814 | 0.781 | 0.866 |
| SAM2_SV-segPrior | 0.890 | 0.927 | 0.901 | 0.807 | 0.729 | 0.851 |
| SAM2_PCA-segPrior | 0.896 | 0.945 | 0.914 | 0.808 | 0.778 | 0.868 |
| SAM2_SAMAug | 0.899 | 0.930 | 0.917 | 0.826 | 0.766 | 0.868 |
| SAM2_OurSAMAug | 0.905 | 0.946 | 0.920 | 0.820 | 0.781 | 0.875 |
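
The source does not state how the Average column is computed. A quick check, assuming it is the unweighted mean of the five per-dataset scores, reproduces the reported value for the Baseline row. The helper name `row_average` is hypothetical. Most other rows agree under the same assumption, some only to within the last digit, which would be consistent with averaging unrounded scores.

```python
# Per-dataset scores copied from the Baseline row of the table above.
baseline = {
    "CVC-T": 0.903,
    "ClinDB": 0.917,
    "Kvasir": 0.910,
    "ColDB": 0.819,
    "ETIS": 0.816,
}


def row_average(scores):
    """Unweighted mean of the per-dataset scores, rounded to three decimals."""
    return round(sum(scores.values()) / len(scores), 3)


average = row_average(baseline)
print(average)  # 0.873, matching the Average column for this row
```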

| Method | Ribs | CAMO | Locust |
|---|---|---|---|
| Baseline() | 0.863 | 0.811 | 0.882 |
| SAM1_RG-segPrior | 0.854 | 0.807 | 0.885 |
| SAM1_RG-logits | 0.850 | 0.796 | 0.873 |
| SAM1_SV-segPrior | 0.855 | 0.805 | 0.867 |
| SAM1_PCA-segPrior | 0.858 | 0.804 | 0.863 |
| SAM1_SAMAug | 0.857 | 0.814 | 0.863 |
| SAM1_OurSAMAug | 0.857 | 0.815 | 0.872 |
| SAM2_RG-segPrior | 0.856 | 0.794 | 0.881 |
| SAM2_RG-logits | 0.849 | 0.795 | 0.871 |
| SAM2_SV-segPrior | 0.855 | 0.796 | 0.866 |
| SAM2_PCA-segPrior | 0.857 | 0.793 | 0.871 |
| SAM2_SAMAug | 0.856 | 0.793 | 0.873 |
| SAM2_OurSAMAug | 0.857 | 0.786 | 0.869 |

| Method | Ribs | CAMO | Locust |
|---|---|---|---|
| Baseline() | 0.843 | 0.772 | 0.876 |
| Baseline() | 0.863 | 0.811 | 0.882 |
| Baseline() | 0.846 | 0.783 | 0.882 |
| Baseline() | 0.863 | 0.812 | 0.876 |
| Baseline(H + P) | 0.865 | 0.811 | 0.886 |
| AuxMix(P) | 0.843 | 0.793 | 0.888 |
| AuxMix(H) | 0.864 | 0.821 | 0.885 |
| AuxMix(H + P) | 0.862 | 0.816 | 0.897 |
| HUGE(H) | 0.864 | 0.821 | 0.890 |
| HUGE | 0.862 | 0.818 | 0.898 |

| Method | CVC-T | ClinDB | Kvasir | ColDB | ETIS | Average |
|---|---|---|---|---|---|---|
| Baseline() | 0.906 | 0.923 | 0.899 | 0.783 | 0.770 | 0.856 |
| Baseline() | 0.903 | 0.917 | 0.910 | 0.819 | 0.816 | 0.873 |
| Baseline() | 0.907 | 0.933 | 0.908 | 0.829 | 0.805 | 0.876 |
| Baseline() | 0.904 | 0.927 | 0.915 | 0.820 | 0.828 | 0.879 |
| Baseline() | 0.907 | 0.927 | 0.911 | 0.832 | 0.838 | 0.883 |
| AuxMix(P) | 0.908 | 0.929 | 0.908 | 0.834 | 0.802 | 0.876 |
| AuxMix(H) | 0.908 | 0.938 | 0.919 | 0.843 | 0.832 | 0.888 |
| AuxMix(H + P) | 0.909 | 0.947 | 0.918 | 0.838 | 0.836 | 0.889 |
| HUGE(H) | 0.905 | 0.949 | 0.920 | 0.846 | 0.829 | 0.890 |
| HUGE | 0.907 | 0.949 | 0.921 | 0.843 | 0.840 | 0.892 |

| Method | C1 | C2 | C3 | C4 | C5 | C6 | Average |
|---|---|---|---|---|---|---|---|
| Baseline() | 0.857 | 0.745 | 0.894 | 0.423 | 0.642 | 0.802 | 0.727 |
| Baseline() | 0.860 | 0.765 | 0.900 | 0.456 | 0.695 | 0.804 | 0.747 |
| Baseline() | 0.863 | 0.748 | 0.901 | 0.440 | 0.666 | 0.790 | 0.735 |
| Baseline() | 0.873 | 0.763 | 0.903 | 0.457 | 0.709 | 0.799 | 0.751 |
| Baseline( + ) | 0.871 | 0.760 | 0.905 | 0.454 | 0.708 | 0.804 | 0.750 |
| AuxMix(P) | 0.861 | 0.751 | 0.900 | 0.437 | 0.672 | 0.801 | 0.737 |
| AuxMix(H) | 0.870 | 0.757 | 0.905 | 0.472 | 0.700 | 0.805 | 0.752 |
| AuxMix(P + H) | 0.871 | 0.756 | 0.906 | 0.456 | 0.699 | 0.805 | 0.749 |
| HUGE(H) | 0.869 | 0.760 | 0.908 | 0.469 | 0.702 | 0.804 | 0.752 |
| HUGE | 0.871 | 0.759 | 0.907 | 0.458 | 0.713 | 0.804 | 0.752 |

| Model | CVC-T | ClinDB | Kvasir | ColDB | ETIS | Average |
|---|---|---|---|---|---|---|
| SAM-Mamba [44] | 0.920 | 0.942 | 0.924 | 0.853 | 0.848 | 0.897 |
| HUGE | 0.907 | 0.949 | 0.921 | 0.843 | 0.840 | 0.892 |
| HUGE(H) | 0.905 | 0.949 | 0.920 | 0.846 | 0.829 | 0.890 |
| AuxMix(H) | 0.908 | 0.938 | 0.919 | 0.843 | 0.832 | 0.888 |
| Ens2 [1] | 0.899 | 0.935 | 0.927 | 0.840 | 0.833 | 0.887 |
| HiFiSeg [45] | 0.905 | 0.942 | 0.933 | 0.826 | 0.822 | 0.886 |
| MGCBFormer [46] | 0.913 | 0.955 | 0.931 | 0.807 | 0.819 | 0.885 |
| PVT-CASCADE [47] | 0.905 | 0.943 | 0.926 | 0.825 | 0.801 | 0.880 |
| HSNet [19] | 0.903 | 0.948 | 0.926 | 0.810 | 0.808 | 0.879 |
| PFENet [48] | 0.896 | 0.940 | 0.931 | 0.821 | 0.809 | 0.879 |
| FCBFormer [49], from ref. [46] | 0.911 | 0.949 | 0.922 | 0.809 | 0.799 | 0.878 |
| CTNet [50] | 0.908 | 0.936 | 0.917 | 0.813 | 0.810 | 0.877 |
| MIA-Net [51] | 0.900 | 0.942 | 0.926 | 0.816 | 0.800 | 0.877 |
| ColonFormer-L [52] | 0.906 | 0.932 | 0.924 | 0.811 | 0.801 | 0.875 |
| DBMF [53] | 0.919 | 0.933 | 0.932 | 0.803 | 0.790 | 0.875 |
| Fu-TransHNet [43] | 0.907 | 0.938 | 0.912 | 0.810 | 0.793 | 0.872 |
| Polyp-PVT [54] | 0.900 | 0.937 | 0.917 | 0.808 | 0.787 | 0.870 |
| DS-TransUNet-L [55] | 0.911 | 0.936 | 0.935 | 0.798 | 0.761 | 0.868 |
| DFINet [56] | 0.886 | 0.937 | 0.924 | 0.799 | 0.791 | 0.867 |
| PSNet [57] | 0.877 | 0.928 | 0.929 | 0.795 | 0.787 | 0.863 |
| MEGANet (ResNet-34) [58] | 0.887 | 0.930 | 0.911 | 0.781 | 0.789 | 0.860 |
| BRNet [59] | 0.898 | 0.921 | 0.918 | 0.795 | 0.760 | 0.858 |
| SSformer [60], from ref. [45] | 0.887 | 0.927 | 0.926 | 0.772 | 0.767 | 0.856 |
| UACANet-L [61], from ref. [53] | 0.913 | 0.929 | 0.915 | 0.753 | 0.769 | 0.856 |
| CaraNet [62] | 0.903 | 0.936 | 0.918 | 0.773 | 0.747 | 0.855 |
| TransFuse-L* [63] | 0.894 | 0.942 | 0.920 | 0.781 | 0.737 | 0.855 |
| UACANet-L [61] | 0.910 | 0.926 | 0.912 | 0.751 | 0.766 | 0.853 |
| TransUNet, from ref. [63] | 0.893 | 0.935 | 0.913 | 0.781 | 0.731 | 0.851 |
| SANet [64], from ref. [53] | 0.899 | 0.922 | 0.909 | 0.759 | 0.763 | 0.850 |
| M2SNet [65] | 0.903 | 0.922 | 0.912 | 0.758 | 0.749 | 0.849 |
| CFA-Net [66] | 0.893 | 0.933 | 0.915 | 0.743 | 0.732 | 0.843 |
| MSNet [67], from ref. [53] | 0.873 | 0.926 | 0.915 | 0.756 | 0.733 | 0.841 |

| Multiplier | CVC-T | ClinDB | Kvasir | ColDB | ETIS | Average |
|---|---|---|---|---|---|---|
| ×1 (9 models) | 0.907 | 0.948 | 0.920 | 0.841 | 0.825 | 0.888 |
| ×2 (18 models) | 0.907 | 0.946 | 0.921 | 0.845 | 0.830 | 0.890 |
| ×3 (27 models) | 0.907 | 0.945 | 0.920 | 0.846 | 0.831 | 0.890 |
| ×4 (36 models) | 0.905 | 0.945 | 0.921 | 0.844 | 0.831 | 0.889 |
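
The table above compares ensembles of 9 to 36 models. As a minimal sketch of how several segmentation outputs can be fused, the example below averages per-pixel probability maps and thresholds the result at 0.5. The averaging rule, the threshold, the function name `fuse_probability_maps`, and the synthetic inputs are all assumptions made for illustration; this part of the document does not specify the fusion rule actually used.

```python
import numpy as np


def fuse_probability_maps(prob_maps, threshold=0.5):
    """Average per-pixel foreground probabilities and binarize the mean map."""
    stacked = np.stack(prob_maps, axis=0)  # shape: (n_models, H, W)
    mean_map = stacked.mean(axis=0)        # shape: (H, W)
    return mean_map, mean_map >= threshold


# Synthetic stand-ins for the outputs of nine ensemble members:
# a square target with mild, independent noise per model.
rng = np.random.default_rng(0)
target = np.zeros((32, 32))
target[8:24, 8:24] = 1.0
maps = [
    np.clip(0.1 + 0.8 * target + rng.normal(0.0, 0.05, target.shape), 0.0, 1.0)
    for _ in range(9)
]

mean_map, mask = fuse_probability_maps(maps)
print(mask.sum())  # number of pixels labeled as foreground
```

Averaging reduces the independent part of each member's error, which is one plausible reason the scores in the table level off once enough models are included.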

| Method | CVC-T | ClinDB | Kvasir | ColDB | ETIS | Average |
|---|---|---|---|---|---|---|
| Baseline | 0.903 | 0.917 | 0.910 | 0.819 | 0.816 | 0.873 |
| SAM1_SAMAug (aug-only) | 0.828 | 0.903 | 0.896 | 0.769 | 0.726 | 0.824 |
| SAM1_SAMAug (combined) | 0.907 | 0.932 | 0.918 | 0.811 | 0.768 | 0.867 |

| Method | CVC-T | ClinDB | Kvasir | ColDB | ETIS | Average |
|---|---|---|---|---|---|---|
| AuxMix(H) | 0.908 | 0.938 | 0.919 | 0.843 | 0.832 | 0.888 |
| w/o SAM1_RG-logits | 0.905 | 0.937 | 0.922 | 0.838 | 0.819 | 0.884 |
| w/o SAM1_PCA-segPrior | 0.903 | 0.934 | 0.922 | 0.842 | 0.821 | 0.884 |
| w/o SAM1_SAMAug | 0.901 | 0.940 | 0.925 | 0.840 | 0.821 | 0.885 |
| w/o SAM2_RG-logits | 0.904 | 0.937 | 0.921 | 0.841 | 0.822 | 0.885 |
| w/o SAM2_PCA-segPrior | 0.902 | 0.938 | 0.924 | 0.838 | 0.827 | 0.886 |
| w/o SAM2_SAMAug | 0.905 | 0.937 | 0.922 | 0.840 | 0.824 | 0.886 |

| Method | CVC-T | ClinDB | Kvasir | ColDB | ETIS |
|---|---|---|---|---|---|
| Baseline() | ±0.008 | ±0.009 | ±0.007 | ±0.006 | ±0.005 |
| SAM1_RG-segPrior | ±0.006 | ±0.010 | ±0.007 | ±0.005 | ±0.008 |
| SAM1_RG-logits | ±0.009 | ±0.008 | ±0.005 | ±0.007 | ±0.009 |
| SAM1_SV-segPrior | ±0.005 | ±0.007 | ±0.009 | ±0.004 | ±0.008 |
| SAM1_PCA-segPrior | ±0.007 | ±0.009 | ±0.008 | ±0.006 | ±0.004 |
| SAM1_SAMAug | ±0.010 | ±0.008 | ±0.005 | ±0.007 | ±0.009 |
| SAM1_OurSAMAug | ±0.004 | ±0.009 | ±0.006 | ±0.010 | ±0.007 |
| SAM2_RG-segPrior | ±0.009 | ±0.006 | ±0.010 | ±0.005 | ±0.007 |
| SAM2_RG-logits | ±0.008 | ±0.005 | ±0.009 | ±0.006 | ±0.010 |
| SAM2_SV-segPrior | ±0.007 | ±0.009 | ±0.006 | ±0.004 | ±0.008 |
| SAM2_PCA-segPrior | ±0.009 | ±0.006 | ±0.008 | ±0.007 | ±0.005 |
| SAM2_SAMAug | ±0.008 | ±0.007 | ±0.009 | ±0.006 | ±0.010 |
| SAM2_OurSAMAug | ±0.010 | ±0.008 | ±0.006 | ±0.004 | ±0.009 |
| AuxMix(H) | ±0.004 | ±0.003 | ±0.004 | ±0.004 | ±0.005 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Carisi, L.; Chiereghin, F.; Fantozzi, C.; Nanni, L. SAM-Based Input Augmentations and Ensemble Strategies for Image Segmentation. Information 2025, 16, 848. https://doi.org/10.3390/info16100848
