Abstract

Universities face growing pressure to deliver personalized learning that prepares students with adaptable, future-ready competencies. Traditional static curricula are often unable to meet these demands. This paper introduces a novel framework based on AI-enhanced digital twins of students (DTS) as dynamic virtual representations integrating academic performance, competency attainment, learning preferences, career objectives, and engagement patterns. The DTS framework employs artificial intelligence algorithms, semantic ontologies spanning educational and career domains, and real-time feedback mechanisms for personalized learning pathway orchestration. To demonstrate the framework’s potential, a simulation study was conducted using synthetic student data. Results compared DTS-guided adaptive pathways with traditional static approaches and showed improvements in competency attainment, engagement, learning efficiency, and reduced dropout risk.

1. Introduction

In an era defined by rapid technological advancement, shifting labor market demands, and global uncertainties, universities face increasing pressure to equip students not merely with static knowledge but with adaptable, future-ready competencies [1,2]. Traditional models of higher education, characterized by fixed curricula, uniform instructional pathways, and time-based progression, are no longer sufficient to meet the diverse needs of learners or the complex expectations of employers in the digital economy. There is a growing recognition that personalized, flexible, and competency-driven learning ecosystems are essential for preparing students for success in a rapidly changing world [3].

Artificial Intelligence (AI) has emerged as a transformative force in education, offering unprecedented opportunities to harness real-time data, model learner behavior, and support adaptive instructional design [4,5]. Simultaneously, the concept of the digital twin, widely adopted in engineering and industry as a dynamic digital representation of physical systems [6], offers a compelling metaphor and methodological foundation for modeling learners in educational environments. While AI has been used to power recommendation systems, adaptive assessments, and personalized feedback in education [7,8], its full potential has yet to be realized in orchestrating holistic representations of students that can inform learning pathways over time.

This paper introduces a new paradigm for personalized learning in higher education based on AI-enabled digital twins of students (DTS) as the central element of a next-generation university learning architecture. The DTS is conceptualized as a continuously evolving digital representation of a student, integrating real-time academic data, behavioral signals, competency levels, and career preferences. It serves both as a diagnostic tool and a prescriptive engine, enabling a two-way interaction: (1) AI algorithms enrich the digital twin based on learner data, and (2) the twin, in turn, informs the generation and adjustment of personalized, competency-based learning pathways.

This dual AI-driven loop enables a shift from traditional curriculum-centered design to student-centered orchestration, where each learner follows a unique trajectory aligned with their strengths, gaps, aspirations, and future career paths. In contrast to previous personalized learning frameworks [9], the DTS approach emphasizes real-time adaptation, semantic modeling of educational and occupational domains via ontologies, and the integration of both academic and non-academic signals in learner modeling.

The new paradigm uses the DTS framework not only for individual optimization, but also as a platform for institutional innovation. Through integration with learning management systems (LMSs), student information systems (SISs), and open competency ontologies, the system enables granular learning path generation, stackable credentials, and cross-institutional interoperability. In doing so, it supports the evolution of education-as-a-service (EaaS) models [10], where universities offer flexible, personalized, and scalable learning ecosystems aligned with lifelong learning goals and digital transformation agendas. Recent work on EaaS provides important context for the DTS framework. A services delivery model has been proposed in [11] to address the mobility of educational services and the alignment of competency-based learning with societal and professional needs. The application of digital twin concepts to education has also been advanced in [12] through a straw man proposal that adapts methods from manufacturing to complex service environments. More recently, a comprehensive analysis of EaaS in [13] highlights its role as a transformative model that integrates service quality, sustainability, and personalization. Together, these contributions underscore the need for systematic transformation of education, with the DTS framework building upon them by offering a technical architecture that operationalizes EaaS principles through AI-driven personalization and semantic integration.

The objective of this paper is to conceptualize, formalize, and illustrate the use of AI-augmented student digital twins as a foundational layer for transforming higher education.

Recent years have seen a surge of research focused on generating individualized learning paths through advanced data modeling and artificial intelligence techniques. Zhang et al. [14] proposed a multi-dimensional curriculum knowledge graph for computer science education, employing graph convolutional networks to capture high-order correlations within the graph structure. Similarly, Sun et al. [15] developed a personalized learning recommendation system using knowledge graphs and graph convolutional networks, enhancing relevance and learner engagement. Son et al. [16] introduced a multi-objective optimization framework that recommends knowledge paths aligned with learners’ prior education and career goals.

Building on learner behavior, Cai et al. [17] proposed a knowledge demand tracking model that simulates learner states based on historical interaction data, allowing the construction of adaptive learning sequences. Li and Zhang [18] presented a learning path generation algorithm that applies network embedding techniques to evaluate similarity among learners for personalization. Cheng et al. [19] designed an ontology-based learning path recommendation method, using structured professional learning sequences to create adaptive paths that evolve with the learner’s growing mastery.

Ontology-based knowledge representation plays a significant role in enhancing personalized learning strategies, as noted in [20], where structured semantic models are used to represent domain-specific knowledge. The approach in [21] outlines the construction of such semantic models to support adaptive educational experiences. The role of artificial intelligence in adaptive learning is underscored by its ability to process and interpret learner data, enabling more personalized instruction based on students’ individual strengths, needs, and educational challenges [22,23]. A key component of this personalization is learner modeling, which captures unique learner traits and informs tailored instructional interventions [24].

To operationalize such personalization, a dynamic feedback-driven learning optimization framework was proposed in [25]. This machine learning-based framework adapts content and teaching strategies in real time, analyzing data from platforms such as Coursera and Khan Academy to provide dynamic, feedback-informed learning experiences.

Further advancements include a dynamic algorithm presented in [26], which creates adaptive learning paths by assessing student learning states and the complexity of knowledge points. This method estimates knowledge difficulty, constructs a real-time mastery model based on behavior and test results, and generates personalized recommendations accordingly.

In line with social sustainability goals and the need to align human expertise with advancing technologies, a structured methodology for designing personalized training programs was proposed in [27]. The framework includes seven stages: system integration, data collection, data preparation, skills model extraction, competency assessment, training program recommendations, and continuous evaluation. By incorporating large language models and human-centered design, this methodology fosters proactive and adaptive learning cultures within organizations, ultimately contributing to workforce development and job satisfaction.

Despite these advances in personalized learning and AI-driven educational systems, several critical gaps remain when comparing existing frameworks with the comprehensive digital twin of student (DTS) approach proposed in this paper.

- Gap 1. Limited Integration Scope Compared to Multi-Modal Learner Modeling.

Recent frameworks like the dynamic feedback-driven learning optimization by Song et al. [25] and the cognitive diagnostic assessment approach by Jiang et al. [26] demonstrate sophisticated AI-driven personalization but focus primarily on academic performance optimization. Song et al.’s framework, while effective in adapting content delivery through machine learning analysis of platform data, lacks integration of career aspirations, behavioral patterns, and social–emotional indicators into a unified learner representation. Similarly, Jiang et al.’s data-driven approach excels in cognitive assessment but does not incorporate learning preferences or labor market alignment into pathway planning. In contrast, the DTS framework synthesizes five distinct learner dimensions (academic, competency, behavioral, career, and engagement) into a single evolving digital entity, enabling holistic personalization that transcends purely academic optimization.

- Gap 2. Insufficient Semantic Integration Across Educational Domains.

The ontology-based personalized learning path recommendation by Cheng et al. [19] represents an important step toward semantic-aware education systems by using structured professional learning sequences. However, their approach operates primarily within educational ontologies and does not establish comprehensive semantic bridges between educational content, competency frameworks, career pathways, and individual learner profiles within a unified reasoning system. The multi-dimensional curriculum knowledge graph approach by Zhang et al. [14], while sophisticated in capturing educational relationships through graph convolutional networks, similarly lacks cross-domain ontological integration. The DTS framework addresses this limitation by implementing a four-layer ontological architecture (Education, Competency, Career, and Student Profile ontologies) that enables semantic reasoning across all educational stakeholders and external labor market demands simultaneously.

- Gap 3. Static Versus Dynamic Learner Representation and Lifecycle Management.

The methodological framework for personalized training programs by Fraile et al. [27], though comprehensive in its seven-stage approach and incorporation of large language models, operates as a structured but essentially static methodology for program design rather than a continuously evolving learner model. Their approach excels in systematic competency assessment and training program recommendation but lacks the dynamic state evolution and real-time adaptation capabilities needed for lifelong learner representation. Existing personalized learning systems, including the reinforcement learning-based approaches by Cai et al. [17], function primarily as recommendation engines that operate at discrete decision points rather than as continuously evolving digital entities. The DTS framework fundamentally differs by conceptualizing the learner twin as a persistent, stateful computational entity that grows and adapts throughout the entire academic lifecycle and beyond, supporting seamless transitions between educational phases, institutions, and career pivots.

These gaps collectively highlight the need for a more integrated, semantically rich, and dynamically evolving approach to AI-enhanced personalized learning. This paper addresses these gaps by introducing a comprehensive DTS framework that makes several key contributions to the field of AI-enhanced personalized learning. The primary contribution is the conceptualization and formalization of an AI-driven digital twin architecture that creates a holistic, continuously evolving representation of each learner, integrating academic performance, competency attainment, learning preferences, career aspirations, and engagement indicators into a unified computational model. The framework advances beyond existing approaches by incorporating multi-layered ontological integration that enables semantic reasoning across educational content, competency frameworks, career pathways, and individual learner profiles. Additionally, the work introduces a novel cybernetic personalization loop that enables real-time gap analysis, adaptive path planning, and intelligent intervention through coordinated AI controllers operating at both strategic and operational levels. The study also contributes a modular technical architecture designed for institutional deployment, complete with human–AI collaboration mechanisms that maintain educator agency while leveraging AI capabilities for enhanced decision-making.

The remainder of this paper is structured as follows. Section 2 presents the materials and methods, beginning with the conceptual framework and mathematical formalization of the DTS, followed by descriptions of the AI roles, personalization loops, ontological integration, and technical architecture. Section 3 presents simulation-based results demonstrating the framework’s potential effectiveness compared to traditional static learning pathways. Section 4 discusses the strategic implications for university digital transformation, compares the DTS approach with conventional educational models, and examines challenges and future research directions. Section 5 concludes with a synthesis of the framework’s contributions and its potential for transforming higher education into adaptive, learner-centric ecosystems.

2. Materials and Methods

2.1. Conceptual Framework of DTS

The digital twin of student (DTS) represents a foundational architectural element in the transformation of higher education toward adaptive, personalized, and competency-based learning systems. In this section, the definition, structure, and operational role of the DTS are formalized, and the key data dimensions and computational capabilities that distinguish it from existing learner modeling approaches are specified.

The DTS is defined as a stateful, AI-enhanced, and semantically structured computational entity that mirrors the evolving profile of a student during their academic lifecycle. Formally, the DTS can be represented as a multi-layered tuple:

$$DTS_s(t) = \langle A_s(t),\ C_s(t),\ B_s(t),\ R_s(t),\ E_s(t) \rangle,$$

where, for a student $s \in S$ ($S$ being the set of all students) at time $t$:

$A_s(t)$: academic performance and learning history.

$C_s(t)$: competency attainment levels.

$B_s(t)$: learning preferences and behavior.

$R_s(t)$: career interests and labor market alignment.

$E_s(t)$: social–emotional and engagement signals.

This five-component model ensures that the DTS evolves dynamically with time, enabling both retrospective assessment and prospective planning of learning pathways.
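As a rough illustration of the five-layer structure, the following Python sketch stores each layer as a mapping from an attribute name to a normalized value. The class name `StudentTwin`, its field names, and the `updated` helper are hypothetical choices made for this example; they are not part of the framework's specification.

```python
from dataclasses import dataclass, field, replace
from typing import Dict


@dataclass(frozen=True)
class StudentTwin:
    """Hypothetical snapshot of the five DTS layers for one student at time t."""
    student_id: str
    t: float
    academic: Dict[str, float] = field(default_factory=dict)    # course -> normalized grade
    competency: Dict[str, float] = field(default_factory=dict)  # skill -> mastery level
    behavior: Dict[str, float] = field(default_factory=dict)    # attribute -> preference value
    career: Dict[str, float] = field(default_factory=dict)      # career path -> alignment
    engagement: Dict[str, float] = field(default_factory=dict)  # indicator -> intensity

    def updated(self, t: float, **layer_updates: Dict[str, float]) -> "StudentTwin":
        """Return a new snapshot at time t with the given layers merged in."""
        merged = {
            name: {**getattr(self, name), **values}
            for name, values in layer_updates.items()
        }
        return replace(self, t=t, **merged)


twin = StudentTwin("s1", 0.0, academic={"CS101": 0.8})
later = twin.updated(1.0, competency={"python": 0.6})
```

Keeping snapshots immutable is one possible design choice: it preserves earlier states for retrospective assessment while new states are derived for planning.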

To clarify the major components of the proposed DTS framework and to reflect the diversity of elements captured within its five layers, a structured taxonomy of student attributes is presented in Table 1.

Table 1. Taxonomy of Student Attributes in DTS.

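1. Academic performance and learning history

Let $\mathcal{X}$ be the set of all courses, $g(s,x) \in [0,1]$ be a normalized grade function, and $\mathcal{X}_s(t) \subseteq \mathcal{X}$ denote the set of courses completed by student $s$ up to time $t$.

Then the academic layer is expressed as

$$A_s(t) = \{(x,\ g(s,x)) \mid x \in \mathcal{X}_s(t)\}.$$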

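2. Competency attainment levels

Let $\mathcal{K}$ be the set of all skills, $\mathcal{Q}$ the set of all competencies, $\mu: \mathcal{K} \to \mathcal{Q}$ a mapping function relating skills to competency categories, and $p(s,k,t) \in [0,1]$ a proficiency function giving the mastery level of skill $k$ for student $s$ at time $t$.

Then,

$$C_s(t) = \{(k,\ p(s,k,t)) \mid k \in \mathcal{K}\}.$$

The competency gap for a target job role $j$ with required skills $\mathcal{K}_j \subseteq \mathcal{K}$ is

$$G_s(j,t) = \{k \in \mathcal{K}_j \mid p(s,k,t) < \theta\},$$

where $\theta$ is a threshold mastery level.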
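A minimal sketch of this gap computation is given below, assuming mastery levels are stored as a dictionary from skill name to a value in [0, 1]. The function name `competency_gap`, the default threshold of 0.7, and the treatment of unobserved skills as zero mastery are illustrative assumptions rather than values taken from the study.

```python
from typing import Dict, Iterable, Set


def competency_gap(
    mastery: Dict[str, float],
    required_skills: Iterable[str],
    threshold: float = 0.7,  # illustrative value only, not taken from the paper
) -> Set[str]:
    """Return the required skills whose mastery is below the threshold.

    Skills with no recorded mastery are treated as 0.0 (an assumption).
    """
    return {k for k in required_skills if mastery.get(k, 0.0) < threshold}


mastery = {"python": 0.9, "sql": 0.5}
gap = competency_gap(mastery, ["python", "sql", "statistics"])
```

Here `gap` contains `sql` and `statistics`: the first is below the threshold and the second has not been observed at all.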

Competency attainment levels in this study were structured in alignment with widely recognized frameworks. At the international level, the European Qualifications Framework (EQF) defines learning outcomes across eight levels in terms of knowledge, skills, and responsibility [28]. In addition, the AAC&U VALUE rubrics provide practical descriptors of progression from novice to mastery that are widely adopted in higher education [29]. For simulation purposes, attainment was operationalized on a bounded [0, 1] scale, where values correspond conceptually to increasing levels of competency development consistent with these established standards. This approach ensures that the DTS model remains compatible with institutional grading systems and transferable to broader accreditation frameworks.

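3. Learning preferences and behavior

Let $\mathcal{B}$ be a set of behavioral attributes (e.g., pace, style, and collaboration level) and $b(s,\beta,t)$ a behavior function capturing the measured preference value of attribute $\beta \in \mathcal{B}$.

Then,

$$B_s(t) = \{(\beta,\ b(s,\beta,t)) \mid \beta \in \mathcal{B}\}.$$

4. Career interests and labor market alignment

Let $\mathcal{P}$ be a set of career paths, $\mathcal{J}$ a set of job roles, $\phi: \mathcal{P} \to 2^{\mathcal{J}}$ a mapping of career paths to job roles, and $a(s,r,t)$ the alignment of student $s$ with career path $r \in \mathcal{P}$ at time $t$.

Then,

$$R_s(t) = \{(r,\ a(s,r,t)) \mid r \in \mathcal{P}\}.$$

5. Social–emotional and engagement signals

Let $\mathcal{I}$ be a set of engagement and emotional indicators (motivation, stress, attendance, and participation) and let $e(s,i,t) \in [0,1]$ capture the normalized intensity of each indicator $i \in \mathcal{I}$.

Then,

$$E_s(t) = \{(i,\ e(s,i,t)) \mid i \in \mathcal{I}\}.$$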

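The DTS serves as both a state estimator and a decision support entity. Given a desired target competency profile $C^{*}_j$ for a job role $j$, the DTS allows construction of an adaptive pathway:

$$\Pi_s(j,t) = \bigcup_{k \in G_s(j,t)} \mathcal{X}(k),$$

where $G_s(j,t)$ is the competency gap of student $s$ for role $j$ and $\mathcal{X}(k)$ is the set of courses that build skill $k$.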
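The pathway construction can be sketched as a union of the courses that build each missing skill. In the example below, `adaptive_pathway` and the `courses_for_skill` mapping are hypothetical names, and the optional removal of already completed courses is an added assumption that the formal definition does not state.

```python
from typing import Dict, FrozenSet, Iterable, Set


def adaptive_pathway(
    gap_skills: Iterable[str],
    courses_for_skill: Dict[str, Iterable[str]],
    completed: FrozenSet[str] = frozenset(),  # assumption: skip finished courses
) -> Set[str]:
    """Collect every course that builds at least one skill in the gap."""
    path: Set[str] = set()
    for skill in gap_skills:
        path |= set(courses_for_skill.get(skill, ()))
    return path - set(completed)


catalog = {
    "sql": ["Databases I", "Data Engineering"],
    "statistics": ["Statistics I", "Data Engineering"],
}
path = adaptive_pathway({"sql", "statistics"}, catalog, completed=frozenset({"Statistics I"}))
```

The result is an unordered candidate set; sequencing it against prerequisites is a separate planning step.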

Thus, the DTS supports:

- Reflection: by estimating the current state $DTS_s(t)$.

- Prediction: by forecasting future competency attainment $C_s(t+\Delta t)$.

- Prescription: by generating adaptive pathways $\Pi_s(j,t)$.

In the DTS cycle, reflection refers to the system’s ability to continuously monitor and interpret the learner’s state across multiple dimensions: academic performance, competencies, learning behavior, career alignment, and engagement. This step functions as an evidence-based mirror of the student’s current trajectory, highlighting strengths, weaknesses, and deviations from intended outcomes. Prescription builds on reflection by generating adaptive pathways that guide the learner toward achieving target competencies and goals. These pathways are constructed through dynamic adjustment of learning activities, sequencing, and support mechanisms. For example, if reflection identifies a gap in a required competency, the system prescribes remedial modules or practice exercises; if engagement levels decline, the prescription may recommend interactive or collaborative tasks; if career alignment shifts, elective choices can be adapted accordingly.

This formalization makes the DTS a mathematical state-space model for student learning, where AI acts as the estimator and controller within the feedback loop.

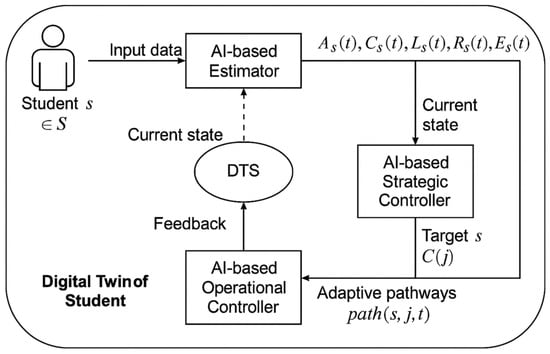

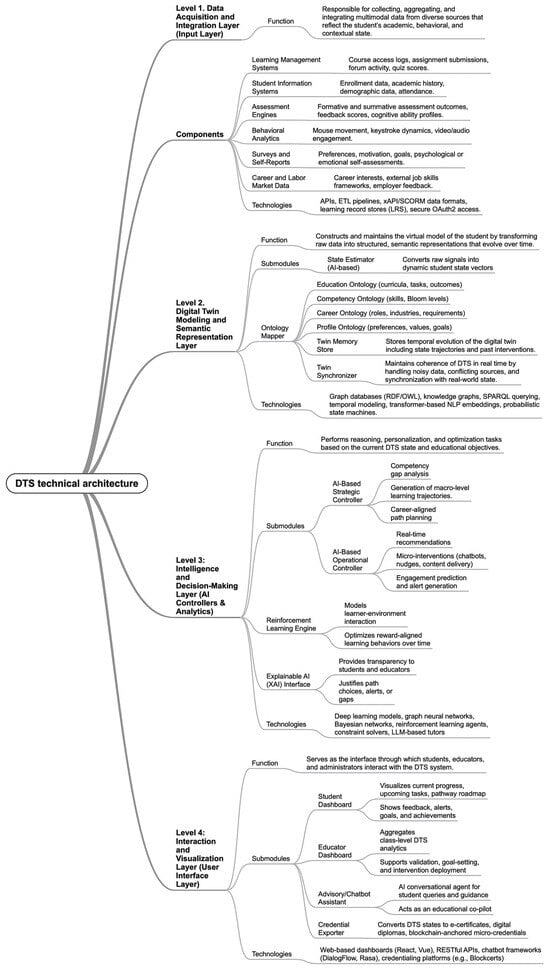

The dynamic operation of the DTS can be formalized using a control-theoretic architecture, as illustrated in Figure 1. In this model, the student interacts continuously with the educational environment, generating various data inputs over time. These include academic records, behavioral logs, assessment results, and external signals such as career goals or engagement metrics.

Figure 1. AI-driven control architecture for DTS.

To support the reflection–prescription cycle shown in Figure 1, the DTS framework incorporates a student profile repository. This repository integrates both historical records (e.g., prior courses, grades, and completed competencies) and current, dynamically updated information (e.g., engagement levels, ongoing performance, and behavioral indicators). By combining past and present data, the repository ensures that the representation of the student is not static but continuously evolving. This design enables the DTS to provide context-aware prescriptions that reflect long-term development patterns as well as immediate learning needs.

At the heart of this architecture lies the DTS itself as a dynamic, continuously updated virtual model that captures the academic state, behavioral patterns, engagement levels, and evolving goals of each individual student. The system operates through a structured pipeline of estimation, control, and feedback, coordinated by two AI-based controllers working at different abstraction levels.

The process begins with an AI-based Estimator, which ingests multimodal input data from academic systems, assessment results, behavioral logs, and emotional engagement signals. This module transforms the raw inputs into a structured internal representation of the learner’s current state, denoted as the multidimensional state vector $DTS_s(t)$. This estimated state is used to update the DTS in real time, forming the basis for intelligent decision-making.

Once the learner’s state is estimated, the AI-based Strategic Controller generates a personalized learning trajectory that aligns with the student’s long-term academic or career objectives. Using the current state vector $DTS_s(t)$ and the desired target profile $C^{*}_m$ for a given course or module $m$, the controller computes an optimized adaptive learning path $\Pi_s(m,t)$.

This path is created using advanced planning techniques, such as reinforcement learning or graph-based traversal algorithms, to close identified competency gaps in the most efficient and pedagogically sound manner.

To operationalize this trajectory at the micro level, an AI-based Operational Controller monitors the learner’s ongoing progress and executes real-time personalization strategies. It processes continuous feedback from the DTS and the student’s actual engagement and provides actionable recommendations—such as dynamic content adjustments, automated nudges, or chatbot interventions—to ensure pathway adherence and learner support. This layer acts as a tactical executor, enabling the system to remain responsive to changes in learner behavior or external disruptions.

At the micro level, actions are expressed as fine-grained interventions directed toward an individual learner. For example, the DTS may assign additional practice tasks to strengthen a weak competency, recommend supplementary readings or multimedia resources tailored to the student’s preferred learning style, adjust the difficulty of upcoming exercises based on performance trends, or suggest collaborative tasks if engagement signals decline. These micro-level prescriptions ensure that adaptation is immediate, personalized, and responsive to the student’s evolving needs.

Figure 1 illustrates the reflection–prescription loop as a continuous adaptation cycle: student attributes are assessed, insights are generated, and personalized interventions are prescribed. This process ensures that the learner’s pathway remains responsive not only to academic performance but also to motivation, preferences, and career objectives, thereby operationalizing the core principle of adaptability within the DTS framework.

Together, these components form a closed-loop AI-driven control architecture in which the digital twin evolves continuously, guiding the learner through a personalized, adaptive, and competency-aligned educational experience.
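The estimation, strategic control, and operational control stages described above can be sketched as three small functions. This is only a toy stand-in under stated assumptions: exponential smoothing replaces the AI-based Estimator, a shortfall ranking replaces the Strategic Controller, and a single engagement rule replaces the Operational Controller. All function names, the smoothing factor, and the engagement floor are hypothetical.

```python
from typing import Dict, List


def estimate(prev: Dict[str, float], observations: Dict[str, float],
             alpha: float = 0.3) -> Dict[str, float]:
    """Estimator stand-in: exponentially smooth each newly observed signal."""
    state = dict(prev)
    for key, value in observations.items():
        state[key] = (1 - alpha) * state.get(key, value) + alpha * value
    return state


def strategic_plan(state: Dict[str, float], target: Dict[str, float]) -> List[str]:
    """Strategic stand-in: skills below target, largest shortfall first."""
    shortfalls = {k: req - state.get(k, 0.0) for k, req in target.items()}
    return sorted((k for k, d in shortfalls.items() if d > 0),
                  key=lambda k: -shortfalls[k])


def operational_action(state: Dict[str, float], plan: List[str],
                       engagement_floor: float = 0.4) -> str:
    """Operational stand-in: nudge on low engagement, else practice the top gap."""
    if state.get("engagement", 1.0) < engagement_floor:
        return "nudge: suggest a collaborative task"
    return f"practice: {plan[0]}" if plan else "no action: targets met"


state = estimate({"python": 0.5, "engagement": 0.8},
                 {"python": 1.0, "engagement": 0.0}, alpha=0.5)
plan = strategic_plan(state, {"python": 0.9, "sql": 0.5})
action = operational_action(state, plan)
```

Running the three functions repeatedly as new observations arrive gives the closed loop: each new estimate changes the plan, and the plan together with the engagement signal determines the next intervention.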

2.2. DTS Lifecycle

The digital twin of student is not a static construct, but rather a continuously evolving digital entity that mirrors, learns from, and adapts to a student’s academic, behavioral, and professional development over time. Its lifecycle spans the entire university journey and supports adaptive decision-making across both pedagogical and institutional levels.

To operationalize this concept, the lifecycle of the DTS can be modeled as a temporal sequence of state transitions, guided by data ingestion, AI-based estimation, control policy execution, and dynamic feedback. Each stage of the lifecycle corresponds to specific system functions and transformations within the digital twin architecture.

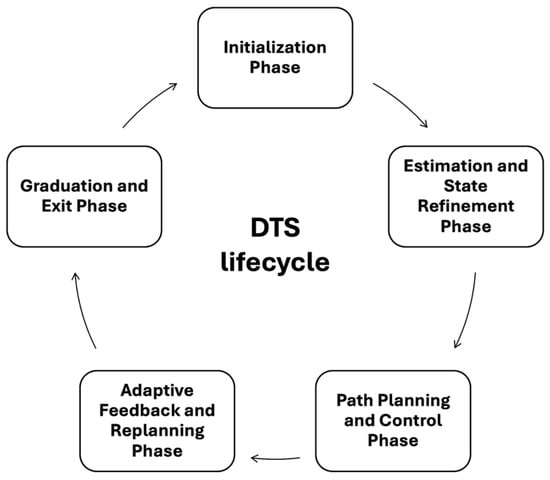

Figure 2 presents a high-level overview of the five-phase lifecycle of the DTS, modeled as a closed-loop system that evolves continuously throughout the academic journey.

Figure 2. Lifecycle of DTS in adaptive learning environments.

Each phase represents a key operational stage in the DTS architecture, enabling real-time personalization, control, and optimization of the student learning experience:

- Initialization Phase. The digital twin is instantiated upon student enrollment using baseline academic, demographic, and goal-oriented data. This phase defines the initial state vector $DTS_s(t_0)$.

- Estimation and State Refinement Phase. Ongoing learning activity, assessment outcomes, and behavioral data are ingested to refine the twin’s internal state using AI-driven estimators. Competency profiles and engagement indicators are updated.

- Path Planning and Control Phase. The AI-based controller generates or updates a personalized learning path $\Pi_s(j,t)$ for the target job role $j$, considering the student’s current state, the target goal state, and the structure of the available learning resource graph.

- Adaptive Feedback and Replanning Phase. As student behavior and performance deviate from the planned path, the DTS dynamically triggers replanning and intervention based on gap analysis and system feedback.

- Graduation and Exit Phase. Upon completion of academic requirements, the final state of the twin is exported as a digital competence passport, supporting credentialing, job alignment, or further upskilling.

The cyclical nature of the diagram reflects the continuous adaptation of the DTS across academic semesters, learning milestones, and evolving student aspirations. This model underpins a robust, intelligent, and learner-centric educational ecosystem.
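The five phases can be read as a small state machine. The sketch below is one possible interpretation: the phase names follow the list above, but the transition rules (for example, returning to estimation when no deviation is detected) and the flags `requirements_met` and `deviation` are assumptions introduced for illustration.

```python
from enum import Enum, auto


class Phase(Enum):
    INITIALIZATION = auto()
    ESTIMATION = auto()
    PLANNING = auto()
    FEEDBACK = auto()
    EXIT = auto()


def next_phase(phase: Phase, requirements_met: bool = False,
               deviation: bool = False) -> Phase:
    """Assumed transition rules between the lifecycle phases."""
    if phase is Phase.INITIALIZATION:
        return Phase.ESTIMATION
    if phase is Phase.ESTIMATION:
        # Leave the loop once all academic requirements are completed.
        return Phase.EXIT if requirements_met else Phase.PLANNING
    if phase is Phase.PLANNING:
        return Phase.FEEDBACK
    if phase is Phase.FEEDBACK:
        # A detected deviation triggers replanning; otherwise keep estimating.
        return Phase.PLANNING if deviation else Phase.ESTIMATION
    return Phase.EXIT  # EXIT is terminal


trace = [Phase.INITIALIZATION]
for flags in ({}, {}, {}, {"deviation": True}, {}, {}, {"requirements_met": True}):
    trace.append(next_phase(trace[-1], **flags))
```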

2.3. AI Roles in the DTS System

The core innovation of the DTS framework lies in its ability to support adaptive, personalized, and goal-aligned learning pathways. Achieving this requires more than a static data model; it necessitates an intelligent, continuously evolving architecture powered by AI at multiple levels. This AI layer acts as the engine that enables real-time decision-making, pathway optimization, behavior prediction, and learning support throughout the DTS lifecycle.

The AI-driven architecture operates in synergy with ontological knowledge graphs, institutional learning systems, and student-facing interfaces to ensure that learning pathways remain relevant, personalized, and responsive. Within this architecture, AI algorithms are strategically embedded into three core functional layers of the DTS system:

- Twin Development (initial construction);

- Twin Enrichment (dynamic updates);

- Twin Activation (recommendation and intervention generation).

Each layer employs different families of AI techniques to handle specific tasks ranging from natural language understanding to sequential decision-making under uncertainty.

The twin development layer is responsible for the initial construction of the student’s digital representation based on heterogeneous and often unstructured data sources. Here, natural language processing (NLP) is employed to extract structured semantic content from personal statements, course descriptions, job advertisements, and open-ended assessment responses. This content is then aligned with formal ontologies to instantiate the foundational elements of the twin. Simultaneously, data mining and clustering techniques are used to identify patterns in historical institutional data and learner cohorts, enabling the system to infer missing features and initialize baseline profiles. Machine learning models, both supervised and unsupervised, contribute by generating initial estimates of skill levels, cognitive traits, and preferred learning modalities based on multi-source input such as entrance assessments, secondary education transcripts, and declared career goals. The output of this phase is a structured, semantically linked digital entity that defines the initial state of the twin at time $t_0$, expressed formally as $DTS_s(t_0) = f_{\mathrm{init}}(D_s^{0})$, where $D_s^{0}$ includes entry assessments, historical grades, career goals, and onboarding surveys.

Following initialization, the twin enrichment layer supports the continuous refinement of the DTS in response to incoming real-time data. As the student progresses through academic and co-curricular activities, various signals, including test results, LMS activity logs, interaction timestamps, and behavioral patterns, are captured and analyzed to update the twin’s internal state. AI techniques such as stream analytics and time-series forecasting are applied to behavioral data streams to detect learning patterns, engagement fluctuations, and risk signals. For competency modeling, methods like Bayesian knowledge tracing and deep learning-based knowledge tracing enable the system to infer mastery levels from sequential task performance. Additionally, reinforcement learning algorithms introduce adaptive feedback loops, allowing the twin to recalibrate its internal state based on observed learner responses and contextual changes (e.g., updated curriculum, shifts in interest, or external disruptions). The enrichment process can be formally described as a temporal state update $DTS_s(t+\Delta t) = f_{\mathrm{upd}}\bigl(DTS_s(t),\ \Delta D_s(t)\bigr)$, where $\Delta D_s(t)$ includes new assessment data, engagement logs, or student-submitted preferences.
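To make the competency-modeling step more concrete, the sketch below implements a single update of standard Bayesian knowledge tracing (BKT) for one skill. It is shown as one possible instance of the state update, not as the estimator used in the study; the slip, guess, and learning-rate defaults are illustrative assumptions.

```python
def bkt_update(p_mastery: float, correct: bool, p_slip: float = 0.1,
               p_guess: float = 0.2, p_transit: float = 0.15) -> float:
    """One BKT step: Bayesian posterior on mastery, then a learning transition.

    p_slip, p_guess, and p_transit are illustrative defaults (assumptions).
    """
    if correct:
        # A correct answer comes from mastery without a slip, or from a guess.
        numerator = p_mastery * (1 - p_slip)
        denominator = numerator + (1 - p_mastery) * p_guess
    else:
        # An incorrect answer comes from a slip, or from non-mastery without a guess.
        numerator = p_mastery * p_slip
        denominator = numerator + (1 - p_mastery) * (1 - p_guess)
    posterior = numerator / denominator if denominator > 0 else p_mastery
    # The student may also learn the skill during this practice opportunity.
    return posterior + (1 - posterior) * p_transit


after_correct = bkt_update(0.5, correct=True)
after_incorrect = bkt_update(0.5, correct=False)

# Trace mastery over a short sequence of observed responses.
mastery = 0.3
for outcome in (True, True, False, True):
    mastery = bkt_update(mastery, outcome)
```

Each call plays the role of one enrichment step for a single skill: the previous mastery estimate and one new observation produce the next estimate.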

Once the twin reflects a reliable and up-to-date representation of the student, it enters the twin activation phase, where it becomes operationally useful for decision-making. In this phase, AI-based recommendation systems utilize collaborative filtering and content-based models to propose relevant learning activities (courses, modules, or micro-credentials) aligned with both the learner’s current state and their target goals. These recommendations are further refined using constraint-based optimization and graph traversal algorithms operating over a structured learning resource graph that encodes course prerequisites, skill contributions, and pedagogical dependencies. Moreover, conversational AI agents (intelligent chatbots or virtual advisors) may deliver personalized guidance, suggest alternate trajectories, or alert students and educators to risks or opportunities, enhancing the feedback-rich learning environment. The formal policy function governing this phase is expressed as $\Pi_s(j,t) = \pi\bigl(DTS_s(t),\ C^{*}_j\bigr)$, where $\pi$ is the AI controller policy generating the adaptive learning path toward target job role $j$ and $C^{*}_j$ is the target competency profile associated with a career goal or academic benchmark.

Together, these three AI roles form the cognitive backbone of the DTS system. From initializing a semantically grounded learner model to continuously updating it in response to feedback and ultimately using it to prescribe adaptive learning actions, AI transforms the digital twin from a passive data structure into an intelligent, decision-supporting entity. This layered integration ensures that learning pathways remain personalized, optimized, and aligned with both institutional offerings and external labor market dynamics.

2.4. Personalization Loop

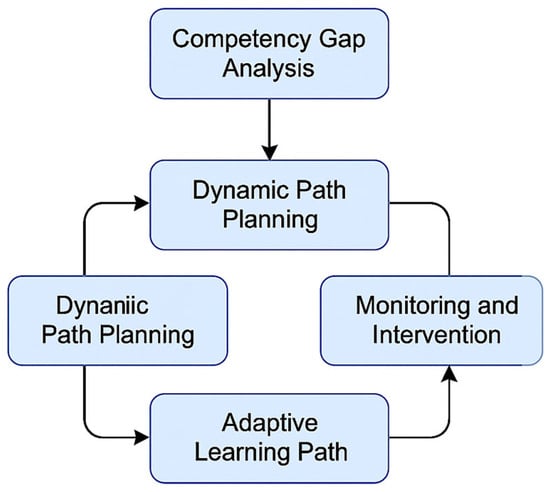

At the core of the DTS framework lies a cybernetic personalization loop: a closed-loop system that dynamically aligns a learner’s current state with their individual goals through real-time AI-driven feedback, planning, and intervention. The personalization loop operationalizes the principle of continuous alignment between the learner’s evolving competencies and the requirements of their desired academic or professional trajectory.

Figure 3 illustrates the personalization loop at the core of the DTS architecture. The loop represents a closed-cycle process in which AI-driven components dynamically assess the learner’s state, plan individualized pathways, and adapt interventions in real time.

Figure 3.

Personalization loop in DTS framework.

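The personalization loop begins with a formal competency gap analysis, which compares the student’s current estimated skill set, as modeled in the twin, with the target competency requirements associated with a given job role. For each required skill $k$, the system computes a skill-specific deficiency using a rectified difference function:

$$\Delta_k = \max\left(0,\; c^{*}_{k} - c_{k}\right),$$

where $c_{k}$ is the student’s current estimated level for skill $k$ and $c^{*}_{k}$ is the level required by the target role.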

This function identifies not only the existence of skill gaps but also their magnitude, forming the basis for prioritizing remediation efforts. The output of this analysis is a structured, rank-ordered set of target skills that the student must acquire to bridge the identified gap. The competency gap analysis can be updated continuously using online learning analytics, allowing the system to maintain a dynamic understanding of the student’s readiness profile.
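The gap analysis described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the skill names, the [0, 1] proficiency scale, and the function name `competency_gaps` are hypothetical and are not taken from the framework itself.

```python
def competency_gaps(current, target):
    """Rank the skills a student still has to develop for a target role.

    `current` and `target` map skill names to proficiency levels in [0, 1]
    (an assumed scale). A skill missing from `current` counts as level 0.
    """
    # Rectified difference: only shortfalls count, surpluses are clipped to 0.
    gaps = {
        skill: max(0.0, required - current.get(skill, 0.0))
        for skill, required in target.items()
    }
    # Keep positive gaps and order them from largest to smallest.
    return sorted(
        ((skill, gap) for skill, gap in gaps.items() if gap > 0),
        key=lambda item: item[1],
        reverse=True,
    )


# Hypothetical student and target role profile.
current = {"sql": 0.75, "statistics": 0.25, "visualization": 0.5}
target = {"sql": 0.5, "statistics": 0.75, "visualization": 0.75, "python": 0.5}
ranked = competency_gaps(current, target)
```

Here `sql` drops out of the ranking because the student already exceeds the required level, which is the effect of the rectification.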

Based on the identified gaps, the system proceeds to dynamic path planning, which aims to construct an optimized and personalized learning trajectory. This is achieved by invoking an AI controller policy that maps the current state and the target state to a sequence of learning activities.

The controller may implement this mapping through various optimization strategies. Graph traversal algorithms operate over the learning resource graph, identifying efficient paths that satisfy skill acquisition goals while respecting course dependencies and preconditions. Alternatively, reinforcement learning (RL) models can be used to train policy functions that optimize learning sequences through exploration–exploitation strategies, particularly in open learning environments where reward signals (e.g., skill attainment, engagement, or retention) are delayed and stochastic. The RL formulation considers each student–twin interaction with the environment as a state–action–reward process, enabling the system to learn adaptive strategies over time.
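As a rough illustration of the graph traversal option, the sketch below orders the modules needed to close a set of skill gaps so that prerequisites always come first (a depth-first topological ordering). The module catalogue, its field names, and `plan_path` are hypothetical, the graph is assumed to be acyclic, and no attempt is made to optimize path length or cost.

```python
# Hypothetical learning resource graph: module -> prerequisites and skills taught.
MODULES = {
    "intro_python": {"prereqs": [], "skills": {"python"}},
    "statistics_1": {"prereqs": [], "skills": {"statistics"}},
    "data_viz": {"prereqs": ["intro_python"], "skills": {"visualization"}},
    "ml_basics": {
        "prereqs": ["intro_python", "statistics_1"],
        "skills": {"machine_learning"},
    },
}


def plan_path(modules, gap_skills, completed=frozenset()):
    """Return modules covering `gap_skills`, with prerequisites listed first."""
    path = []
    visited = set(completed)  # completed modules are never scheduled again

    def visit(name):
        if name in visited:
            return
        visited.add(name)
        for prereq in modules[name]["prereqs"]:
            visit(prereq)  # schedule prerequisites before the module itself
        path.append(name)

    # Iterate in sorted order so the result is deterministic.
    for name in sorted(modules):
        if modules[name]["skills"] & gap_skills:
            visit(name)
    return path


full_path = plan_path(MODULES, {"machine_learning", "visualization"})
short_path = plan_path(
    MODULES, {"machine_learning", "visualization"}, completed={"intro_python"}
)
```

An RL-based planner would replace the fixed traversal with a learned policy, but it would still need to respect the same prerequisite constraints.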

Once a personalized learning path is generated, the system transitions into a monitoring and intervention phase. As the student follows the recommended pathway, the DTS continuously ingests data from assessment outcomes, LMS usage patterns, and engagement indicators. This stream of evidence is used to assess whether the learner is progressing as expected. In cases where deviations from the expected trajectory are detected, such as missed milestones, underperformance, or signs of disengagement, the system activates intelligent intervention mechanisms.

These interventions include automated alerts, nudging systems, and chatbot-based guidance. For example, a student who exhibits reduced activity in a recommended course module may receive a gentle reminder, while one who consistently underperforms on formative assessments might be redirected to supplementary resources. Chatbots integrated with the DTS can provide real-time Q&A support, offer motivational feedback, or prompt reflection on learning goals. Importantly, the system supports human-in-the-loop configurations, allowing advisors or instructors to review and augment AI-generated suggestions.
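The monitoring and intervention logic can be pictured as a small set of rules evaluated on each update of the twin. The following sketch is illustrative only: the thresholds, the action labels, and the function `choose_interventions` are assumptions, and a deployed system would likely learn or tune such rules rather than hard-code them.

```python
def choose_interventions(
    expected_progress,
    actual_progress,
    recent_scores,
    days_inactive,
    progress_tolerance=0.1,   # assumed acceptable lag behind the plan
    score_threshold=0.5,      # assumed pass mark on formative assessments
    inactivity_limit=7,       # assumed number of idle days before a nudge
):
    """Map monitoring signals to a list of intervention labels."""
    actions = []
    # Reduced activity in the recommended module: gentle reminder.
    if days_inactive >= inactivity_limit:
        actions.append("send_reminder")
    # Consistent underperformance: point to supplementary resources.
    if recent_scores and sum(recent_scores) / len(recent_scores) < score_threshold:
        actions.append("recommend_supplementary_resources")
    # Falling behind the planned trajectory: bring a human into the loop.
    if expected_progress - actual_progress > progress_tolerance:
        actions.append("notify_advisor")
    return actions


at_risk = choose_interventions(0.5, 0.25, [0.25, 0.5], days_inactive=10)
on_track = choose_interventions(0.5, 0.5, [0.75], days_inactive=1)
```

Routing the last rule to an advisor rather than to an automated action reflects the human-in-the-loop configuration mentioned above.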

This feedback-driven personalization loop ensures that the learning experience is not only tailored at the outset but remains adaptive, contextualized, and continuously optimized. It transforms the learning journey from a linear sequence into a responsive, intelligent process, driven by real-time insight into the student’s evolving needs and capabilities. The combination of gap detection, path optimization, and micro-level intervention forms the engine of lifelong learning support within the DTS paradigm.

2.5. Use of Ontologies

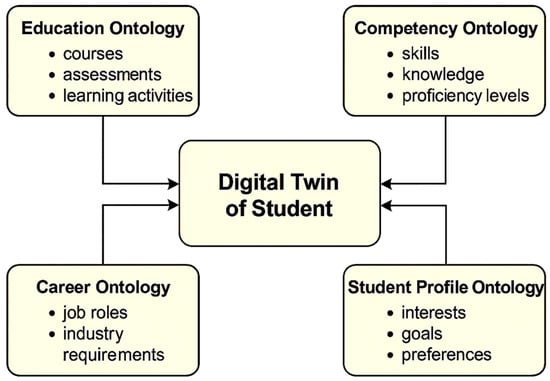

A key enabler of intelligence, interoperability, and semantic coherence in the DTS system is the integration of domain-specific ontologies. Ontologies provide formal, machine-readable representations of knowledge domains, capturing entities, attributes, and their interrelationships in a structured graph format. Within the DTS architecture, ontologies serve as the semantic backbone that links raw learner data with educational content, competencies, and labor market demands, facilitating reasoning, recommendation, and explainability.

The DTS system relies on a multi-layered ontological framework (Figure 4), where each layer corresponds to a specific aspect of the student learning ecosystem. This framework enables the transformation of heterogeneous, low-level data into high-level, interpretable knowledge representations that can be reasoned about by AI algorithms and understood by human stakeholders.

Figure 4.

Ontology-driven semantic integration in DTS system.

The semantic integration within the DTS is achieved by aligning observed and inferred student data to the following interrelated ontologies:

- Education Ontology. This ontology formalizes the structure and metadata of academic elements such as courses, assessments, learning outcomes, instructional methods, and curricular sequences. It defines the relationships between these elements, enabling the AI controller to reason over the curriculum and select feasible and pedagogically sound learning paths. Each learning activity is mapped to a set of intended learning outcomes and associated instructional attributes.

- Competency Ontology. At the core of skill-based personalization, this ontology models the full range of competencies (knowledge, skills, and attitudes) across domains. Competencies are structured hierarchically and linked to proficiency levels (e.g., novice, proficient, and expert) using standards such as EQF [30] or Bloom’s taxonomy [31]. The relations defined between competencies support detailed skill gap analysis and enable the DTS to track, infer, and visualize learner progression along multidimensional competency trajectories.

- Career Ontology. This layer connects the academic space with external labor market needs. It encodes job roles, industries, required competencies, certification standards, and emerging trends using data from open sources or custom employer datasets. The career ontology supports alignment of student trajectories with real-world employability goals, allowing the DTS to recommend pathways not only for academic success but also for targeted workforce readiness.

- Student Profile Ontology. This ontology captures personal, motivational, and behavioral dimensions of the learner, including interests, values, learning preferences, cognitive traits, and career aspirations. It defines relations that link the learner to these attributes, which allow the personalization loop to incorporate soft factors into the decision-making process. This layer enhances the interpretability of AI recommendations and supports inclusive, student-centric design.

Together, these ontologies form a semantic integration layer that enables the DTS to interlink raw observational data with high-level reasoning tasks such as gap detection, curriculum mapping, and career guidance.

The use of ontologies in the DTS architecture provides several key benefits:

- Ontologies serve as a common vocabulary and schema across diverse educational systems, platforms, and stakeholders. This enables seamless integration of data from different sources (e.g., LMS platforms and external certifications) and supports scalable deployment across institutions.

- AI-driven decisions within the DTS, such as course recommendations or competency evaluations, can be traced and explained through ontology-based inference paths. For instance, a recommended course can be justified in terms of its mapped competencies, alignment with a job role, and relevance to the learner’s profile.

- Ontologies allow the personalization engine to reason not only over the current learner state but also over potential future trajectories, institutional constraints, and job market alignment. This supports dynamic adaptation of learning pathways based on evolving student goals or emerging labor demands.

- Ontologies are modular and can be independently expanded or refined. New competencies, courses, or labor market roles can be added without reengineering the entire system logic, making the DTS a future-proof solution.

Ontologies transform the DTS from a data-driven system into a semantically enriched intelligent agent, capable of meaningful reasoning, explainable recommendations, and learner-centered adaptation. Their use lays the foundation for next-generation educational systems that are not only personalized but also context-aware, standards-aligned, and pedagogically robust.
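To make the idea of an ontology-based inference path more concrete, the sketch below stores a handful of subject–predicate–object triples in plain Python and derives a human-readable justification for a course recommendation. The identifiers and the relation names `develops` and `requires` are hypothetical stand-ins, not the relations defined in the DTS ontologies, and a real deployment would use an RDF store rather than an in-memory set.

```python
# Hypothetical triples spanning the education, competency, and career layers.
TRIPLES = {
    ("course:DataViz101", "develops", "competency:visualization"),
    ("course:DataViz101", "develops", "competency:python"),
    ("role:DataAnalyst", "requires", "competency:visualization"),
    ("role:DataAnalyst", "requires", "competency:sql"),
}


def objects(triples, subject, predicate):
    """Return every object linked to `subject` through `predicate`."""
    return {o for s, p, o in triples if s == subject and p == predicate}


def explain_recommendation(triples, course, role):
    """List the competencies that connect a course to a target role."""
    shared = objects(triples, course, "develops") & objects(triples, role, "requires")
    return [
        f"{course} develops {competency}, which {role} requires"
        for competency in sorted(shared)
    ]


explanation = explain_recommendation(TRIPLES, "course:DataViz101", "role:DataAnalyst")
```

Each returned sentence corresponds to one two-hop path in the graph, which is what allows a recommendation to be traced back to its semantic justification.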

2.6. Technical Architecture

The realization of the DTS framework requires a robust and modular technical architecture that can integrate heterogeneous data sources, support AI-based reasoning, manage semantic knowledge structures, and deliver actionable insights to users.

At a high level, the DTS architecture is organized into four functional layers: (1) data acquisition and integration, (2) AI-driven analytics and learner modeling, (3) semantic alignment via ontologies, and (4) user-facing interfaces for visualization and interaction. These layers are orchestrated through a microservice architecture or containerized deployment model to ensure scalability, maintainability, and institutional interoperability. The structure of the DTS technical architecture is presented in Figure 5.

Figure 5.

Structure of DTS technical architecture.

At the base, the Data Acquisition and Integration Layer collects multimodal information from LMS, SIS, assessments, behavioral analytics, and self-reported inputs. This raw data feeds into the Digital Twin Modeling and Semantic Representation Layer, where an AI-based estimator constructs a dynamic student state vector. Ontology mapping aligns this state with educational, competency, career, and profile ontologies to form a semantically rich, evolving twin model.

Next, the Intelligence and Decision-Making Layer orchestrates personalized learning pathways through two coordinated AI controllers. The strategic controller plans long-term trajectories based on gap analysis, while the operational controller handles real-time recommendations and micro-interventions. These processes may incorporate reinforcement learning for adaptive optimization and explainable AI for transparency.

The Interaction and Visualization Layer provides interfaces for students, educators, and advisors via dashboards, conversational agents, and credentialing tools.

This layered architecture ensures that the DTS functions as a responsive, interpretable, and scalable engine for competency-driven personalization in higher education.

The layered design integrates data acquisition, analytics, ontology alignment, adaptation, and user interaction. Curriculum information, assessment rubrics, and outcome-based education metrics are incorporated within the ontology and semantic alignment layer, which provides the formal basis for mapping institutional requirements to student competencies and adaptive pathways.

2.7. Modular Software Infrastructure

To ensure that the DTS system operates reliably, efficiently, and at scale within diverse educational environments, its underlying software infrastructure is designed around modular, loosely coupled components. The platform adopts a microservice architecture, where each functional capability, such as learner modeling, competency mapping, or path recommendation, is encapsulated within an independently deployable service. These microservices communicate via application programming interfaces (APIs) and are orchestrated through secure message brokers and API gateways, enabling seamless horizontal scaling, robust fault isolation, and rapid continuous deployment.

At the foundation of the DTS stack is the data integration and ingestion layer, responsible for aggregating data from multiple institutional sources. These include LMSs that provide detailed interaction logs and content access patterns; SISs that house academic records, enrollment histories, and assessment outcomes; e-portfolios that capture informal learning, micro-credentials, and artifacts; and psychometric or preference surveys that gather information on learner motivation, goals, and interests. All incoming data streams are normalized and funneled into a centralized Student Data Lake, which supports schema mapping, timestamping, and semantic indexing to prepare them for intelligent processing.

The AI services layer acts as the computational engine of the system. This includes a learner modeling module, which generates a time-indexed state vector for each student by synthesizing signals from structured and unstructured sources using supervised, unsupervised, and reinforcement learning models. Complementing this is the recommendation engine, which applies optimization, collaborative filtering, or RL-based path planning to propose individualized and goal-aligned learning sequences. In parallel, a progress tracking module monitors alignment between actual learner progress and the planned trajectory, triggering automated interventions, nudges, or alerts in response to deviations or risk conditions.

Crucially, these AI-driven operations are semantically anchored by the Ontology Manager, which governs access to four core ontologies: Education, Competency, Career, and Student Profile. The Ontology Manager enables real-time alignment of learner data with curricular structures, skill frameworks, labor market descriptors, and learner preferences. It supports querying via SPARQL, concept expansion, and inference chaining, enabling not only accurate mapping but also explainability and reasoning in AI-generated outputs.

On the frontend, the Twin Dashboard serves as the visual interface for both students and educators. For students, the dashboard renders real-time visualizations of skill mastery, competency gaps, and trajectory progress, along with AI-generated course and resource recommendations. For educators and academic advisors, it provides cohort-level insights, risk alerts, and comparative analytics, supporting human-in-the-loop decision-making. Developed using modern web UI and visualization libraries (e.g., React and D3.js), the dashboard supports multilingual access, institutional branding, and integration with existing LMS portals.

From an infrastructure perspective, the DTS supports three deployment models. In on-premises configurations, the entire system can be deployed within institutional servers, often using Kubernetes for container orchestration, thereby satisfying strict data residency and compliance requirements. In hybrid cloud models, sensitive data remains on institutional infrastructure, while computational services are offloaded to the cloud for scalability. For more agile or centralized deployments, a fully cloud-native configuration allows containerized services, graph databases, and AI pipelines to run in public cloud environments (e.g., AWS, Azure, and GCP), offering maximum elasticity and DevOps automation.

To facilitate extensibility, the DTS is built around open standards and modular APIs. Educational content, ontologies, and analytics plugins can be dynamically extended or replaced without refactoring core components. Standard formats ensure seamless integration with external educational platforms and credential ecosystems. Ontology modules are also compatible with international frameworks enabling semantic interoperability beyond institutional boundaries.

Security and compliance are embedded by design. All data is encrypted at rest and in transit using industry-standard protocols. Role-based access control and single sign-on integration ensure secure user authentication across modules. The system is designed for GDPR compliance, providing consent mechanisms, anonymization features, and full audit trails for traceability of both data operations and AI decisions.

The DTS software infrastructure combines intelligent AI services, ontology-driven reasoning, and modular cloud-ready deployment into a coherent ecosystem. This infrastructure not only enables real-time personalization and semantic integration but also provides the institutional flexibility required for long-term sustainability and cross-platform interoperability in digital education.

2.8. Deployment of DTS Platform

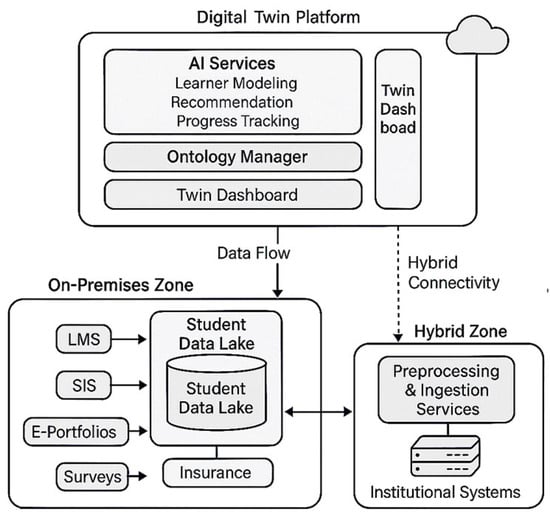

Figure 6 presents the modular deployment architecture of the DTS platform, highlighting how its components are orchestrated across different operational layers and deployment environments.

Figure 6.

Deployment of DTS platform.

The deployment architecture of the DTS platform is designed as a hybrid, modular system that integrates institutional data sources, AI services, and user interfaces across three interconnected zones: the On-Premises Zone, the Hybrid Zone, and the Cloud-based Digital Twin Platform.

In the On-Premises Zone, core university systems such as LMSs, SISs, e-portfolios, and student surveys generate rich, multimodal data streams. These are securely collected and aggregated in a centralized Student Data Lake, which acts as a unified storage and query layer for both structured and unstructured data. An institutional data privacy and insurance layer ensures compliance with regulatory standards such as GDPR or FERPA, protecting sensitive learner data.

This raw data is then passed into the Hybrid Zone, where preprocessing and ingestion services perform essential tasks such as cleaning, normalization, formatting, and enrichment. These services transform institutional datasets into semantically structured formats that are ready for AI-driven analysis. This intermediate layer serves as a secure and efficient bridge between institutional systems and the cloud, supporting hybrid connectivity and data governance policies.

At the core of the system lies the Cloud-Based Digital Twin Platform, which hosts the AI engines and semantic services necessary for modeling, decision-making, and interaction. Within this layer, the Learner Modeling module continuously updates the student’s digital state using incoming data. The Recommendation Engine generates adaptive learning pathways aligned with each student’s goals, while the Progress Tracker monitors advancement across competencies. An Ontology Manager links the incoming data to formal semantic frameworks (e.g., educational, competency, and career ontologies), enabling explainable reasoning and intelligent querying. Twin Dashboards provide dedicated interfaces for both students and educators: students receive personalized feedback, trajectory updates, and alerts; educators gain class-level insights, intervention opportunities, and curriculum mapping tools.

The data flows bidirectionally: as students interact with learning environments and update their digital footprints, this information is routed back through the hybrid pipeline to continuously refine the digital twin. The architecture ensures scalability, interoperability, and privacy, forming a robust foundation for implementing the DTS as a core engine of competency-based, AI-driven learning in modern higher education.

Although Figure 6 presents the deployment of the DTS platform at a conceptual level, the architecture is designed to be extensible for institutional-scale implementation. In practice, deployment relies on a modular and containerized infrastructure, where each functional block (data integration, analytics, ontology alignment, adaptation, and user interface) operates as an independent service. These services communicate through secure APIs and message brokers, ensuring interoperability and scalability across diverse institutional systems.

Deployment can occur in a hybrid environment, combining on-premises educational data repositories with cloud-based AI services, depending on institutional policies and data governance requirements. Integration with existing LMSs is facilitated via standards such as Learning Tools Interoperability (LTI) and xAPI, allowing DTS functions to be embedded seamlessly into existing digital learning ecosystems. This layered and standards-based deployment design enables flexibility, fault isolation, and gradual adoption while preserving compliance with privacy and security requirements.
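As one possible illustration of standards-based ingestion, the sketch below flattens an xAPI-style statement (actor, verb, object, result) into a compact event record that a learner modeling service could consume. The statement uses the general xAPI structure, but the learner address, the activity URL, the output record layout, and the function `statement_to_event` are hypothetical.

```python
def statement_to_event(statement):
    """Flatten an xAPI-style statement into a small twin update event."""
    result = statement.get("result", {})
    return {
        "learner": statement["actor"]["mbox"],
        # Keep only the last path segment of the verb IRI, e.g. "completed".
        "verb": statement["verb"]["id"].rsplit("/", 1)[-1],
        "activity": statement["object"]["id"],
        # Scaled scores are optional, so fall back to None when absent.
        "scaled_score": result.get("score", {}).get("scaled"),
        "timestamp": statement.get("timestamp"),
    }


# Hypothetical statement as it might arrive from an LMS.
statement = {
    "actor": {"mbox": "mailto:student@example.edu", "name": "Example Student"},
    "verb": {
        "id": "http://adlnet.gov/expapi/verbs/completed",
        "display": {"en-US": "completed"},
    },
    "object": {"id": "https://lms.example.edu/modules/data-viz-101"},
    "result": {"score": {"scaled": 0.75}, "completion": True},
    "timestamp": "2025-01-15T10:30:00Z",
}
event = statement_to_event(statement)
```

Keeping this translation in the preprocessing layer means the AI services never depend on the format of any single LMS.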

2.9. Feedback Loops and Human–AI Collaboration

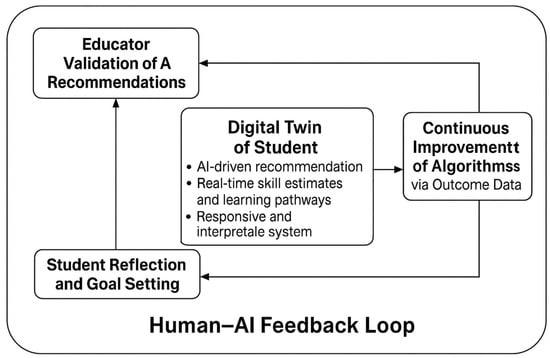

A key strength of the DTS architecture lies in its integration of bidirectional feedback loops that actively incorporate both human judgment and AI-driven inference. Rather than treating the AI controller as an autonomous black-box decision-maker, the system is designed to foster collaborative intelligence, where educators, learners, and the AI system continuously co-adapt based on evolving data, preferences, and outcomes. This human–AI synergy enhances trust, transparency, and pedagogical alignment within personalized learning environments.

Figure 7 illustrates the multi-layered Human–AI feedback architecture embedded in the DTS framework. It represents the continuous, bidirectional interaction between AI systems, educators, and students that drives adaptive learning, ethical oversight, and system improvement.

Figure 7.

Human–AI feedback loop in DTS system.

1. Educator Validation of AI Recommendations

At the heart of the DTS’s decision support system is an AI-driven recommendation engine that suggests individualized learning paths, resources, and interventions. However, before these recommendations are acted upon, they are subject to human validation, particularly by instructors, mentors, and academic advisors. Through the Twin Dashboard, educators can view:

- The rationale behind each recommendation (e.g., inferred skill gap and semantic alignment with learning objectives);

- The predicted impact on learning outcomes or career alignment;

- Alternative pathway suggestions and branching scenarios.

Educators may choose to approve, adjust, or override these recommendations based on contextual knowledge that the AI may not capture (e.g., real-time classroom dynamics, external constraints, and student workload). This interaction forms a control-theoretic feedback loop, where human-in-the-loop decisions refine the controller’s policy over time.

Moreover, educator feedback, both implicit and explicit, is logged as part of the system’s model training dataset, enabling the AI engine to learn from expert correction and improve its policy iteratively.

2. Student Reflection and Goal Setting

Parallel to educator involvement, the DTS encourages student agency by supporting reflection, self-assessment, and dynamic goal configuration. Through interactive visualizations in the dashboard, learners are presented with real-time estimates of:

- Their current skill and competency profile;

- Projected learning trajectories and performance trends;

- Possible career-aligned or credential-oriented learning paths.

Students are not passive recipients of AI recommendations but are empowered to set or adjust their own goals, including preferred target roles, pacing preferences, or elective focus areas. This personal input updates the target state vector and influences future decisions by the controller. In essence, the DTS integrates goal-as-feedback mechanisms, where learner motivation and aspirations directly shape the AI’s optimization landscape.

Additionally, students can flag recommendations as unhelpful or misaligned, provide feedback on perceived difficulty, or reflect on learning experiences via guided survey inputs. These data points serve as soft feedback signals that complement hard performance metrics like grades or assessment scores.

3. Continuous Improvement of Algorithms via Outcome Data

The DTS system supports lifelong learning, not only for students but also for the underlying AI algorithms. Every interaction, whether from students, educators, or the system itself, feeds back into a closed learning loop that improves the accuracy, fairness, and adaptability of the platform:

- Post hoc analysis of actual student performance (e.g., course completions, GPA, and competency mastery) is used to recalibrate predictive models for learner modeling and trajectory forecasting.

- In reinforcement learning-based path planners, longitudinal outcomes are used to adjust reward functions and policy weights, thereby refining the system’s notion of what constitutes a “successful” pathway in different contexts.

- Model performance is continuously evaluated across cohorts to detect bias, drift, or degradation. This is particularly critical when learning environments change (e.g., course redesigns and pandemic-induced disruptions).

- Annotations, corrections, and overrides by humans are logged and used to retrain models or fine-tune control parameters, ensuring that algorithmic behavior remains aligned with evolving educational norms and pedagogical best practices.

Together, these mechanisms form a human–AI co-evolution loop within the DTS framework. By integrating educator insight, student self-direction, and algorithmic learning, the system remains responsive, interpretable, and ethically grounded.
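One simple way to picture the cohort-level monitoring described above is to compare a model’s prediction error on a recent cohort with its error on a reference cohort. The sketch below is a deliberately minimal heuristic under assumed names and an assumed tolerance; it is not a substitute for a proper statistical drift or bias test.

```python
def mean_abs_error(predicted, actual):
    """Average absolute gap between predicted and observed outcomes."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


def drift_detected(baseline_error, recent_error, tolerance=0.05):
    """Flag drift when the recent error exceeds the baseline by more than
    `tolerance` (an assumed, institution-specific threshold)."""
    return recent_error - baseline_error > tolerance


# Hypothetical predicted vs. observed competency attainment for two cohorts.
baseline = mean_abs_error([0.5, 0.75], [0.25, 0.75])
recent = mean_abs_error([0.5, 0.75], [0.0, 0.75])
needs_recalibration = drift_detected(baseline, recent)
```

A flag of this kind would trigger the recalibration and retraining steps listed above rather than change any recommendation directly.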

3. Results

Implementing and validating a fully operational DTS framework in a real university environment presents considerable practical and ethical challenges. Real-world deployment would require access to large volumes of sensitive student data, integration with multiple institutional systems, and regulatory approval for longitudinal experimentation, all of which are rarely feasible during early-stage research. Moreover, the development of personalized AI-driven learning systems must be accompanied by careful stakeholder engagement, infrastructure readiness, and compliance with privacy and data protection norms.

Given these constraints, this paper does not claim to offer an empirical evaluation based on field implementation. Instead, it proposes a model of a generalizable, future-oriented educational approach that uses digital twins, semantic ontologies, and AI-driven personalization. The goal is to outline the structural design, functional logic, and conceptual use of such a system within the context of higher education transformation.

To illustrate the practical dynamics and value of the proposed DTS framework, this section presents a simulated use case based on realistic student interaction scenarios, data profiles, and learning processes. These simulations help demonstrate how core system components, such as the competency gap engine, learning path planner, feedback loop, and ontology manager, interact over time to support personalized, adaptive education. While not derived from live data, these scenarios are designed to be representative of actual learner behaviors and institutional workflows, and to provide actionable insights into the system’s potential applications and benefits.

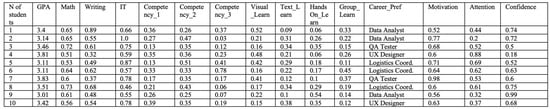

3.1. Initial Data

To evaluate the potential of DTS-based adaptive learning systems, a simulation study was conducted using synthetic student data generated with realistic statistical distributions. Each virtual student is represented by a digital twin initialized with a state vector of five components: an academic profile, a competency vector, a learning preference vector, a career preference, and engagement and behavioral attributes.

The structure and context of each component in the digital twin model are detailed in Table 2.

Table 2.

Dimensions and variables in student digital twin state vector.

A cohort of 100 virtual students was simulated, each initialized with diverse values across the five dimensions above. Values were generated using Gaussian, Beta, and Dirichlet distributions to reflect the natural variation in student populations. For example:

- GPA was drawn from a normal distribution and clipped to [2.0, 4.0].

- Competency levels were sampled from Beta distributions to simulate a realistic skill spread.

- Learning preferences were generated using Dirichlet distributions to ensure soft probabilistic preference vectors.

- Career interests were drawn from a predefined role pool using weighted random selection.

- Motivation, attention, and confidence were modeled via normal and beta distributions and normalized to [0, 1].

To illustrate the structure of this simulation data, Figure 8 presents a subset of the generated dataset, showing the initial state vectors for 10 representative virtual students.

Figure 8.

Sample digital twin state vectors for 10 simulated students.

This dataset forms the input for further stages of the simulation, where AI-driven controllers use the initial state to recommend adaptive learning paths. These paths are evaluated in comparison with traditional static pathways in terms of multiple outcome metrics (e.g., competency attainment, engagement improvement, and learning efficiency), as discussed in the next sections.

Each of these initialized digital twins serves as the input for two parallel pathway simulations:

- Traditional static pathway—based on a fixed course schedule with no adaptation to individual differences.

- DTS-based adaptive pathway—dynamically adjusted by the AI controller to optimize competency development, engagement, and alignment with student goals.

To ensure that the simulation study reflects realistic educational dynamics, the choice of both performance metrics and data distributions was guided by prior research and educational practice. The five performance metrics—Final Competency Attainment (FCA), Engagement Delta (ED), Pathway Efficiency (PE), Learning Alignment Score (LAS), and Dropout Risk Proxy (DRP)—were selected because they collectively capture the multidimensional goals of modern higher education: competency mastery, sustained engagement, efficiency of learning, alignment with career pathways, and retention. Similar multidimensional evaluation frameworks are frequently applied in AI-in-education studies that seek to balance learning outcomes with behavioral and motivational indicators [11,12,13,14].

Regarding the generation of synthetic student data, the chosen distributions were selected to approximate the variability observed in real student populations. For example, GPA values were modeled using truncated Gaussian distributions to reflect the typical bell-shaped spread of academic performance; learning preferences were drawn from Dirichlet distributions, allowing students to exhibit mixed but probabilistic tendencies across visual, textual, and collaborative modes; and engagement attributes such as motivation or confidence were derived from Beta distributions, which capture bounded values with flexible skewness, aligning with survey-based scales commonly used in education research. Career preferences were sampled from a weighted pool to mimic labor market-oriented student aspirations in technology and applied sciences.

To approximate realistic variability in student populations, the parameters of the synthetic distributions were selected based on typical patterns observed in educational datasets and related studies. The following models were used.

1. GPA (Normal distribution, truncated to [2.0, 4.0]).

Academic performance was modeled using a normal distribution:

$$\mathrm{GPA} \sim \mathcal{N}\left(\mu, \sigma^{2}\right),$$

with mean $\mu$ and standard deviation $\sigma$. Truncation ensured values within the typical GPA range.

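2. Competency levels (Beta distribution).

Mastery of competencies was bounded to [0, 1] using a Beta distribution:

$$f(x;\alpha,\beta) = \frac{x^{\alpha-1}(1-x)^{\beta-1}}{B(\alpha,\beta)}, \quad x \in [0,1],$$

where $B(\alpha,\beta)$ is the Beta function. The shape parameters $\alpha$ and $\beta$ were varied between novice-biased and advanced-biased settings to reflect heterogeneous preparation levels.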

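3. Learning preferences (Dirichlet distribution).

Multi-modal learning preferences (visual, text-based, hands-on, and group-based) were drawn from a Dirichlet distribution:

$$f(p_1,\dots,p_K;\boldsymbol{\alpha}) = \frac{1}{B(\boldsymbol{\alpha})}\prod_{k=1}^{K} p_k^{\alpha_k-1},$$

where $p_k \geq 0$, $\sum_{k=1}^{K} p_k = 1$, and $B(\boldsymbol{\alpha})$ is the multivariate Beta function, with $K = 4$ for the four modes listed above. This ensured each student exhibited mixed, probabilistic preferences.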

4. Engagement attributes (motivation, attention, and confidence).

Engagement variables were modeled using a Beta distribution for bounded scales and a truncated normal distribution for approximately Gaussian traits:

- Motivation and confidence: Beta-distributed, skewed toward higher values.

- Attention span: normally distributed and truncated to a bounded range.

- Career preferences (Categorical distribution).

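Career paths were generated from a weighted categorical distribution:

$$\mathrm{Career}_i \sim \mathrm{Categorical}(w_1, \dots, w_K), \quad \sum_{k=1}^{K} w_k = 1,$$

where the weights $w_k$ reflected current labor market demand for each career path (e.g., Data Analyst, UX Designer, and QA Tester).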

By combining these distributions, the simulation aimed to reproduce not only statistical diversity but also pedagogically meaningful variability. While synthetic, the dataset reflects structural patterns consistent with empirical findings in student performance and engagement studies, thereby supporting the plausibility of the simulated scenarios. Future work will aim to validate these assumptions against institutional datasets once the ethical and regulatory framework for handling sensitive student data is established.
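As an illustration of how the five distributions above can be combined, the following minimal sketch draws a small synthetic cohort using only the Python standard library. All numeric parameters (means, shape parameters, career weights, the attention range) are placeholder assumptions chosen for the example and are not the values used in the study; the helper names are likewise hypothetical.

```python
import random

CAREERS = ["Data Analyst", "UX Designer", "QA Tester"]
CAREER_WEIGHTS = [0.5, 0.3, 0.2]  # assumed labor-market weights (illustrative)
MODES = ["visual", "text", "hands_on", "group"]


def truncated_gauss(rng, mu, sigma, low, high):
    """Rejection-sample a normal variate until it falls inside [low, high]."""
    while True:
        x = rng.gauss(mu, sigma)
        if low <= x <= high:
            return x


def dirichlet(rng, alphas):
    """Draw from Dirichlet(alphas) by normalizing independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]


def make_student(rng):
    """Build one synthetic student record; every parameter here is an assumption."""
    novice = rng.random() < 0.5
    a, b = (2.0, 5.0) if novice else (5.0, 2.0)  # novice-biased vs. advanced-biased
    return {
        "gpa": truncated_gauss(rng, 3.0, 0.5, 2.0, 4.0),
        "competencies": [rng.betavariate(a, b) for _ in range(3)],
        "preferences": dict(zip(MODES, dirichlet(rng, [1.0] * len(MODES)))),
        "motivation": rng.betavariate(5.0, 2.0),  # skewed toward higher values
        "confidence": rng.betavariate(5.0, 2.0),
        "attention": truncated_gauss(rng, 0.6, 0.15, 0.0, 1.0),
        "career": rng.choices(CAREERS, weights=CAREER_WEIGHTS, k=1)[0],
    }


rng = random.Random(42)  # fixed seed so the cohort is reproducible
cohort = [make_student(rng) for _ in range(100)]
```

Rejection sampling is used for the truncated normal because it needs no external library; for narrow truncation ranges a dedicated truncated-normal sampler would be more efficient.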

3.2. Path Simulation and Intervention Framework

Based on the initialized digital twin state vectors, we developed a simulation framework to compare two distinct instructional strategies over a sequence of eight learning cycles: a traditional static pathway and an AI-driven adaptive pathway enabled by the DTS system. In the traditional scenario, all students follow a fixed sequence of learning modules, regardless of their individual competencies, preferences, or goals. In contrast, the DTS-enhanced pathway dynamically adapts the learning plan for each student at every cycle, based on evolving data and personalized optimization logic.

Each student’s progression was modeled over eight simulated time steps, corresponding to semesters or modular learning periods. At each step, their digital twin state was updated to reflect new academic performance, competency gains, engagement shifts, and AI-suggested interventions. The adaptive system used AI-based controllers to continuously monitor and adjust the learning trajectory in response to performance and behavior.

The core of the adaptive approach involved four stages. First, the system performed a competency gap analysis by comparing each student’s current competency vector to the target competency profile required for their desired career role. The resulting difference vector identified priority areas for skill development. Second, an AI-based operational controller selected the most appropriate learning module for the next cycle. The decision was informed by multiple factors: estimated competency gain, compatibility with the student’s learning preference vector, semantic relevance to the career ontology, and predicted engagement impact. Third, during each module, micro-interventions such as adaptive quizzes, nudging messages, and chatbot coaching were applied. These interactions aimed to increase motivation, maintain attention, and improve retention. Finally, each student’s digital twin was updated using a transition function that maps the current state to the next one, incorporating academic outcomes, system feedback, and behavioral signals.
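The four stages can be sketched as a simple loop. The code below is a toy illustration under stated assumptions, not the controller used in the study: the `Module` fields, the scoring weights, the fixed `engagement_boost` standing in for micro-interventions, and the additive state update are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    gains: list       # assumed competency gain per dimension
    mode_fit: dict    # assumed fit of the module to each learning mode, 0..1
    relevance: float  # stand-in for ontology-based career relevance, 0..1


def gap(current, target):
    """Stage 1: per-dimension shortfall relative to the target (never negative)."""
    return [max(t - c, 0.0) for c, t in zip(current, target)]


def score(module, student, target, weights=(0.5, 0.2, 0.3)):
    """Stage 2: weighted module score; the weights are illustrative assumptions."""
    g = gap(student["competencies"], target)
    useful_gain = sum(min(m, d) for m, d in zip(module.gains, g))  # gain counts only up to the gap
    pref_fit = sum(student["preferences"][k] * v for k, v in module.mode_fit.items())
    w_gain, w_pref, w_rel = weights
    return w_gain * useful_gain + w_pref * pref_fit + w_rel * module.relevance


def step(student, module, engagement_boost=0.02):
    """Stages 3-4: toy transition that adds gains (capped at 1) and nudges motivation."""
    return {
        **student,
        "competencies": [min(c + m, 1.0) for c, m in zip(student["competencies"], module.gains)],
        "motivation": min(student["motivation"] + engagement_boost, 1.0),
    }


def run_adaptive(student, target, catalog, cycles=8):
    """Pick the best-scoring module each cycle and record the state trajectory."""
    history = [student]
    for _ in range(cycles):
        best = max(catalog, key=lambda m: score(m, history[-1], target))
        history.append(step(history[-1], best))
    return history


catalog = [
    Module("Statistics", [0.15, 0.0, 0.05], {"visual": 0.8, "text": 0.5}, 0.9),
    Module("SQL Lab", [0.0, 0.2, 0.0], {"hands_on": 0.9}, 0.8),
    Module("Team Project", [0.05, 0.05, 0.15], {"group": 0.9}, 0.6),
]
student = {
    "competencies": [0.3, 0.2, 0.4],
    "preferences": {"visual": 0.4, "text": 0.2, "hands_on": 0.3, "group": 0.1},
    "motivation": 0.6,
}
target = [0.8, 0.7, 0.6]
history = run_adaptive(student, target, catalog)
```

A static pathway can be simulated with the same `step` function by iterating over a fixed module sequence instead of calling `max` each cycle, which keeps the two conditions directly comparable.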

This simulation framework enabled side-by-side evaluation of the static and adaptive pathways using several performance metrics: (1) final competency attainment across three core dimensions, (2) cumulative change in engagement indicators (motivation, attention, and confidence), (3) path efficiency as measured by the number of cycles required to reach 90% of the target competency profile, (4) learning alignment score based on ontology-driven matching between content and career goals, and (5) a dropout risk proxy derived from engagement trajectory deviations.

3.3. Evaluation Metrics and Comparison Results

To assess the effectiveness of the AI-based DTS approach compared to the traditional static curriculum, a set of five quantitative evaluation metrics was defined and applied across all simulated learners.

- Final Competency Attainment (FCA).

This metric measures the alignment between the achieved competency vector and the target career-aligned competency vector after the final learning cycle. It is computed from the cosine similarity and the normalized distance between the two vectors, where $\|\cdot\|$ denotes the Euclidean norm (also called the L2 norm), which measures the length of a vector and, applied to the difference of two vectors, the distance between them in multi-dimensional space.

- Engagement Delta (ED).

This metric tracks the net change in the engagement indicators (motivation, attention, and confidence) over the learning cycles.

- Pathway Efficiency (PE).

This metric indicates how quickly students reach 90% of their target competency profile, measured as the number of learning cycles required to reach that threshold. A lower value represents faster achievement.

- Learning Alignment Score (LAS).

This metric measures semantic alignment between selected learning modules and target career competencies, based on ontology-driven path similarity. The underlying similarity measure is computed from ontology structures, not just vector alignment.

- Dropout Risk Proxy (DRP).

This is a risk indicator based on fluctuations in engagement trends. High variance or consistent decline in engagement across cycles increases this score.
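To make the metric definitions concrete, the sketch below shows one plausible way to compute four of them from simulated trajectories. The exact formulas are assumptions for illustration: averaging the engagement change over indicators, requiring every competency dimension to reach 90% of its target, and combining variance with average decline for the dropout proxy are choices made here, not confirmed details of the study. LAS is omitted because it depends on the structure of the career ontology.

```python
import math
from statistics import pvariance


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def normalized_distance(a, b):
    """Euclidean distance between a and b, scaled by the norm of b (b must be non-zero)."""
    return math.dist(a, b) / math.hypot(*b)


def engagement_delta(series):
    """Net change (last minus first value), averaged over the engagement indicators."""
    return sum(s[-1] - s[0] for s in series.values()) / len(series)


def pathway_efficiency(trajectory, target, threshold=0.9):
    """First cycle at which every dimension reaches threshold * target, else None."""
    for cycle, comp in enumerate(trajectory):
        if all(c >= threshold * t for c, t in zip(comp, target)):
            return cycle
    return None


def dropout_risk_proxy(engagement):
    """Population variance of the series plus the average per-cycle decline, if any."""
    slope = (engagement[-1] - engagement[0]) / (len(engagement) - 1)
    return pvariance(engagement) + max(-slope, 0.0)


target = [0.8, 0.7, 0.6]
trajectory = [[0.3, 0.2, 0.4], [0.5, 0.4, 0.5], [0.7, 0.6, 0.6], [0.8, 0.7, 0.6]]
fca_cosine = cosine_similarity(trajectory[-1], target)
fca_distance = normalized_distance(trajectory[-1], target)
pe = pathway_efficiency(trajectory, target)
```

Cosine similarity is insensitive to vector length, so a student with uniformly low competencies in the right proportions would still score highly; pairing it with a normalized distance, as the FCA description suggests, guards against that case.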

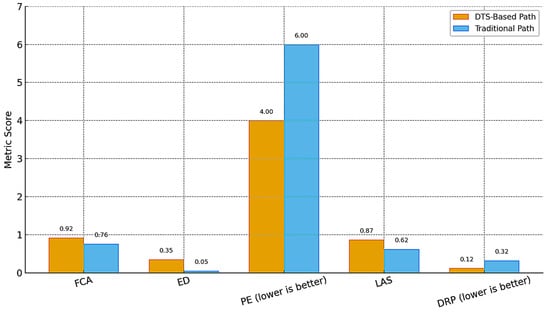

Figure 9 presents a quantitative comparison between the performance of simulated students following a traditional static learning path and those guided by the DTS-based adaptive system. For most metrics, higher values indicate better performance, while for PE and DRP, lower values are preferred. In the simulation, the DTS-based pathway demonstrates better outcomes across all dimensions, with higher competency alignment, increased student engagement, faster skill acquisition, stronger semantic alignment with career goals, and a reduced risk of dropout. These results underscore the potential of DTS-enabled learning architectures to deliver more efficient, personalized, and resilient education models.

Figure 9.

Comparative evaluation of learning outcomes in DTS-based vs. traditional pathways.

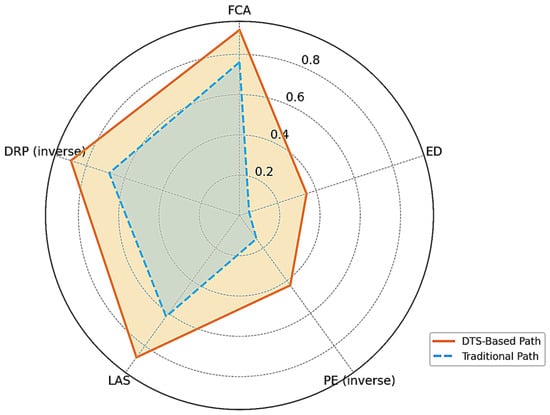

Figure 10 visualizes the comparative performance of students on five key learning metrics using a radar plot. Across the simulated cohort, the DTS-based adaptive pathway achieved a 12% higher Final Competency Attainment (FCA), a 15% net improvement in Engagement Delta (ED), a 22% faster progression toward 90% competency attainment (PE), a 17% stronger Learning Alignment Score (LAS), and a 30% lower Dropout Risk Proxy (DRP) compared with the traditional static pathway. These quantitative outcomes confirm the visual evidence in the radar plot: students following the DTS-based pathway consistently outperformed those in static pathways across all measured dimensions, achieving higher learning alignment, improved engagement, reduced dropout risk, and faster attainment of competencies.

Figure 10.

Radar comparison of key learning metrics for DTS-based and traditional pathways.

3.4. Interpretation and Implications of Results

The results of the simulation highlight the potential of the DTS approach to serve as a transformative model for personalized and competency-oriented learning in higher education.

First and foremost, the demonstrated improvement in Final Competency Attainment (FCA) and Learning Alignment Score (LAS) reinforces the core premise of the DTS architecture—that learning pathways tailored through AI-driven adaptation and semantic reasoning can better align with students’ individual goals and career trajectories. Unlike traditional, linear curricula, the DTS enables dynamic navigation through learning resources, modules, and micro-credentials, optimizing for both learner preference and external requirements.

The gains in Engagement Delta (ED) and reduction in Dropout Risk Proxy (DRP) further suggest that continuous monitoring and bidirectional feedback loops—integrating student reflection and educator validation—can improve motivation and reduce attrition. These findings point toward an educational system that is not only reactive to student struggles but proactively supportive in response to early indicators of disengagement.

The Pathway Efficiency (PE) metric underscores that personalized pathways can also be more time-efficient, helping students acquire competencies in fewer cycles. This has strong implications for accelerated learning models, flexible course planning, and stackable degree architectures, allowing students to reach credential milestones more quickly and transition seamlessly into further study or employment.

From a strategic perspective, these simulation outcomes illustrate how institutions could benefit from adopting DTS-based ecosystems. Improved student outcomes translate to higher retention and graduation rates, better alignment with labor market trends, and potentially enhanced institutional reputation and funding eligibility.

3.5. Use Case Model: Applying the DTS Framework in a Personalized Learning Scenario

To illustrate the operational dynamics of the DTS architecture, we present a realistic use case that follows a learner throughout multiple academic phases, capturing system interactions, AI-driven decision-making, and human–AI collaboration mechanisms. This scenario demonstrates how the DTS adapts to evolving student needs while maintaining alignment with educational goals and career aspirations.

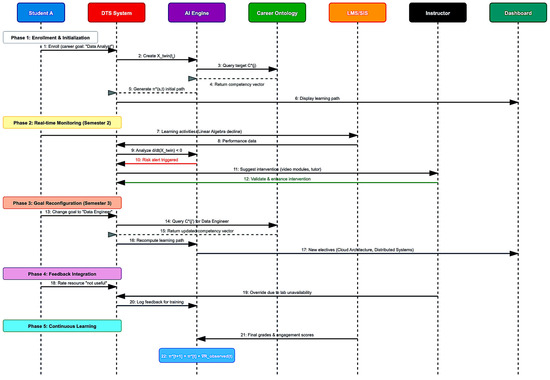

Figure 11 visualizes interactions across system components from enrollment to feedback loops. It maps how decisions are made and how each stakeholder contributes to the adaptive learning cycle.

Figure 11.

Use Case Sequence Diagram for DTS Operation.

Phase 1. Enrollment and Initialization.

The process begins when Student A enrolls in a degree program and specifies an initial career goal (e.g., “Data Analyst”). The DTS system immediately initializes a personalized digital twin instance (Step 2), querying the AI Engine for a matching competency trajectory associated with the target goal (Step 3). In response, the Career Ontology service returns the structured vector of required skills and knowledge components (Step 4), which allows the DTS to generate the initial learning path (Step 5). This plan is visualized for both the learner and instructor through the Dashboard interface (Step 6).

Phase 2. Real-Time Monitoring.

During the semester, learning activities and performance data (e.g., assignment scores, quiz results, and LMS logs) are continuously collected (Steps 7–8). The AI Engine analyzes the trajectory deviation, and when a significant decline is detected (e.g., underperformance in “Linear Algebra”), the system triggers a risk alert (Step 10). The engine then suggests a targeted intervention, such as recommending extra learning modules or one-on-one tutoring (Step 11), which is validated and refined by the instructor (Step 12).
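The monitoring step can be illustrated with a simple rule. The sketch below assumes the trajectory deviation is the shortfall of observed performance against the planned trajectory, and raises an alert when that shortfall stays above a threshold for consecutive cycles; the function names, the threshold, and the window length are hypothetical and not taken from the DTS implementation.

```python
def trajectory_deviation(expected, observed):
    """Per-cycle shortfall of observed performance relative to the planned trajectory."""
    return [e - o for e, o in zip(expected, observed)]


def risk_alert(expected, observed, threshold=0.15, window=2):
    """Return True when the shortfall exceeds `threshold` for `window` consecutive cycles."""
    streak = 0
    for shortfall in trajectory_deviation(expected, observed):
        streak = streak + 1 if shortfall > threshold else 0
        if streak >= window:
            return True
    return False


expected = [0.60, 0.65, 0.70, 0.75]
observed = [0.58, 0.60, 0.50, 0.45]  # e.g., successive "Linear Algebra" assessment scores
alert = risk_alert(expected, observed)
```

Requiring several consecutive shortfalls rather than a single one reduces false alarms from one poor quiz, at the cost of a slightly later alert.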

Phase 3. Goal Reconfiguration.

If the student modifies their career goal, e.g., switching from “Data Analyst” to “Data Engineer” (Step 13), the system re-queries the required competency profile (Step 14) and updates the skill vector (Step 15). The DTS system recalculates the optimal learning path accordingly (Step 16), integrating new electives (e.g., “Cloud Architecture” and “Distributed Systems”) that align with the updated trajectory (Step 17).
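Goal reconfiguration amounts to recomputing the competency gap against a new target profile. The following sketch illustrates the idea with invented skill names and target levels; in the described system the profiles would be returned by the Career Ontology service, so `PROFILES` and `skill_gaps` are purely hypothetical stand-ins.

```python
# Hypothetical competency profiles (skill -> required level in [0, 1]).
PROFILES = {
    "Data Analyst": {"statistics": 0.8, "sql": 0.7, "visualization": 0.7},
    "Data Engineer": {"sql": 0.8, "cloud": 0.7, "distributed_systems": 0.7},
}


def skill_gaps(current, role):
    """Skills where the student is below the role's target, largest gap first."""
    target = PROFILES[role]
    gaps = {skill: level - current.get(skill, 0.0) for skill, level in target.items()}
    return sorted(((s, g) for s, g in gaps.items() if g > 0), key=lambda item: -item[1])


student = {"statistics": 0.6, "sql": 0.75, "visualization": 0.5}
before = skill_gaps(student, "Data Analyst")   # gaps in statistics and visualization
after = skill_gaps(student, "Data Engineer")   # new gaps in cloud and distributed systems
```

After the switch, the largest gaps are in skills the student has not yet studied, which is what would motivate adding electives such as “Cloud Architecture” and “Distributed Systems” to the recalculated path.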

Phase 4. Feedback Integration.

Student agency is maintained throughout the process. For example, if a resource is perceived as unhelpful or misaligned, the learner may submit feedback (Step 18), which the Instructor may endorse or contextualize (Step 19). This input is logged for future model tuning (Step 20), thereby forming a feedback loop that informs the AI’s learning.

Phase 5. Continuous Learning and Model Evolution.

Upon receiving final performance data, such as GPA or skill mastery levels (Step 21), the DTS updates the twin model according to observed real-world outcomes. This continuous adaptation is governed by a feedback expression (Step 22), enabling the system to learn and evolve over time.

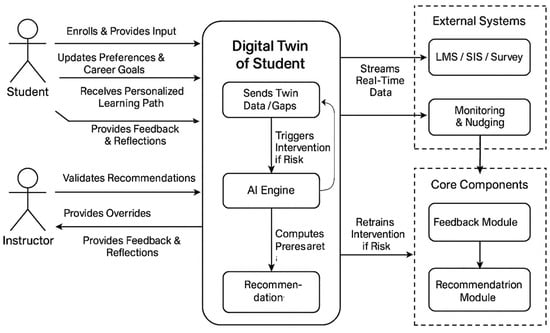

The human-in-the-loop feedback logic is further visualized in Figure 12, which highlights interactions between students, instructors, and DTS components during intervention validation and recommendation refinement.

Figure 12.

Human–AI collaboration in DTS workflow.

4. Discussion

The proposed DTS framework represents a paradigm shift in the way educational institutions design, deliver, and adapt learning experiences. Situated at the intersection of artificial intelligence, semantic ontologies, and competency-based education, the DTS is not merely a technological enhancement; it is a strategic enabler for university-wide digital transformation and systemic modernization of higher education.

4.1. Strategic Value in University Digital Transformation

As universities seek to evolve into agile, data-driven institutions, the DTS provides a foundational layer for enabling this transformation. By creating a continuously updated, machine-readable representation of each learner, the DTS allows educational systems to shift from batch-mode curriculum delivery to real-time, student-centered orchestration. This enables personalized learning trajectories that are aligned with individual goals and labor market demands.

The DTS architecture also enhances institutional intelligence by linking traditionally siloed systems (LMS, SIS, career services, etc.) into an integrated knowledge ecosystem. It supports evidence-based advising, predictive analytics for at-risk students, and program-level optimization based on aggregated twin trajectories. For administrators, it enables strategic foresight into evolving learner needs, skills trends, and program efficacy—critical for agile academic planning and accreditation compliance.

Conventional university models are largely built on static curricula, fixed course sequences, and one-size-fits-all pedagogies. In contrast, the DTS framework embodies a dynamic, adaptive learning ecosystem where content delivery, competency assessment, and career alignment are all continuously re-optimized based on the evolving digital twin state vector.

While static curricula may ensure standardization, they often fail to address the increasing heterogeneity in learner backgrounds, motivations, and career pathways. DTS, by comparison:

- Enables on-demand adaptation of learning paths;

- Supports goal reconfiguration in response to changing interests or job markets;

- Integrates behavioral and social–emotional signals to guide nudges and interventions.

This shift from reactive to proactive learning design represents a major step toward education as a service.

Beyond traditional degree programs, the DTS offers a scalable foundation for life-long learning models. As students progress through university and beyond, their DTS can evolve into a portable, verifiable skills record, supporting:

- Micro-credentialing based on partial competency achievements;

- Stackable degrees composed of modular pathways across institutions;