Abstract

Surface material recognition is a key component in robotic perception and physical interaction, particularly when leveraging both tactile and visual sensory inputs. In this work, we propose Surformer v1, a transformer-based architecture designed for surface classification using structured tactile features and Principal Component Analysis (PCA)-reduced visual embeddings extracted via ResNet-50. The model integrates modality-specific encoders with cross-modal attention layers, enabling rich interactions between vision and touch. Because state-of-the-art deep learning models already achieve remarkable performance on vision tasks, our first set of experiments focused exclusively on tactile-only surface classification. Using feature engineering, we trained and evaluated multiple machine learning models, assessing their accuracy and inference time. We then implemented an encoder-only Transformer model tailored for tactile features. This model not only achieved the highest accuracy but also demonstrated significantly faster inference than the other evaluated models, highlighting its potential for real-time applications. To extend this investigation, we introduced a multimodal fusion setup combining vision and tactile inputs. We trained both Surformer v1 (using structured features) and a Multimodal CNN (using raw images) to examine the impact of feature-based versus image-based multimodal learning on classification accuracy and computational efficiency. The results showed that Surformer v1 achieved 99.4% accuracy with an inference time of 0.7271 ms per sample, while the Multimodal CNN achieved slightly higher accuracy but required significantly more inference time. These findings suggest that Surformer v1 offers a compelling balance between accuracy, efficiency, and computational cost for surface material recognition.
The results also underscore the effectiveness of integrating feature learning, cross-modal attention and transformer-based fusion in capturing the complementary strengths of tactile and visual modalities.

1. Introduction

Robots interacting with the physical world must be able to accurately perceive and classify the surfaces they touch. This capability is vital for safe object manipulation, navigation over varied terrains, and executing fine-grained tasks in unstructured environments. Tactile sensing provides detailed information about surface compliance, friction, and texture, properties that vision alone may struggle to capture reliably, especially under occlusions, poor lighting, or specular reflections. On the other hand, vision offers global context and appearance cues, making the combination of visual and tactile sensing a powerful solution for material recognition and robotic perception.

Recent research has extensively explored both vision-based and tactile-based models. Vision-only models [1,2], particularly those leveraging deep Convolutional Neural Networks (CNNs) and vision transformers, have achieved strong performance on image recognition tasks. However, their reliance on visual features makes them sensitive to occlusion, illumination variations, and specular noise. Dave et al. introduced MViTac [3], a multimodal visual-tactile representation learning approach based on self-supervised contrastive pre-training. It employs dual encoders and contrastive learning within and across the visual and tactile modalities, exploiting intra- and inter-modal contrastive losses to align representations without explicit supervision. This method achieves robust material property classification and grasp success prediction using cross-modal embeddings, exceeding several supervised and unsupervised baselines. However, MViTac, like many self-supervised algorithms, shows reduced grasp prediction performance on smaller datasets, demonstrating sensitivity to dataset size and diversity [3]. Tactile-only models, in contrast, have demonstrated robustness in manipulation, object understanding, grasping and tactile data classification tasks where physical contact is critical [4,5,6,7]. For example, GelSight-based [8] tactile sensors have enabled the extraction of high-resolution surface deformations, which are highly informative for material property inference.

To harness the strengths of both sensing modalities, multimodal learning strategies have been introduced to improve surface and object understanding. Early efforts employed simple feature-level fusion (e.g., concatenation) or decision-level fusion techniques [9,10], but these often failed to capture the nuanced relationships between heterogeneous modalities. More advanced fusion architectures have been proposed, including those using attention-based networks and cross-modal deep representations [11,12,13]. Models such as [14,15,16] have been developed for grasp planning, object exploration, and even navigation while interacting with aliased environments, highlighting the complementary nature of tactile and visual data. In parallel, transformer-based models have emerged as a powerful backbone for multimodal representation learning due to their ability to model long-range dependencies and perform cross-modal reasoning. Architectures such as [17,18] have shown remarkable generalization in vision-language tasks, suggesting their potential for cross-sensory integration in robotics. Moreover, models like MAE [19] and CLIP [20] demonstrate the effectiveness of attention-based learning in fusing distributed representations from different input spaces.

Despite these advances, several critical limitations persist in current surface classification approaches. First, end-to-end deep learning models typically rely on large volumes of labeled data, which are often scarce in tactile domains due to the complexity and cost of sensor setup and manual annotation. This data dependency not only limits scalability but also hampers generalizability in real-world scenarios. Second, many existing multimodal methods employ simplistic fusion strategies, such as feature concatenation or prediction averaging, which are inadequate for modeling the rich, nonlinear relationships and contextual dependencies between tactile and visual modalities. These approaches often ignore the dynamic interactions that naturally occur when humans perceive and interpret materials through both vision and touch. Third, most prior models lack modularity and transparency to incorporate both structured tactile information and learned visual embeddings and often treat tactile and visual inputs as homogeneous data streams without accounting for their distinct structures and complementary roles. As a result, there remains a need for models that are both effective and computationally efficient, particularly for resource-constrained robotic platforms.

To address these limitations, we propose Surformer v1, a transformer-based architecture designed specifically for surface classification. The model processes structured tactile features alongside reduced visual embeddings, combining them through a unified mid-level fusion framework. Unlike earlier methods, Surformer v1 enables cross-modal representation learning while maintaining computational efficiency, ensuring scalability to real-world robotic environments. Our key contributions are as follows:

- We introduce a multimodal architecture that leverages both structured tactile features and PCA-reduced ResNet-50 visual embeddings, making it suitable for robotic deployment and extensible to other sensor combinations.

- We employ a mid-level fusion architecture with multi-head cross-attention layers to learn complementary representations, joint embeddings and bidirectional interaction between tactile and visual inputs.

- We implement a lightweight, encoder-only Transformer for tactile-only classification that achieves the highest accuracy and the lowest latency among the tactile-only models, making it suitable for real-time deployment on embedded robotic systems where computational resources are limited.

- We evaluate our models on the Touch and Go dataset [21] and demonstrate that multimodal fusion improves accuracy over tactile-only baselines.

2. Methods

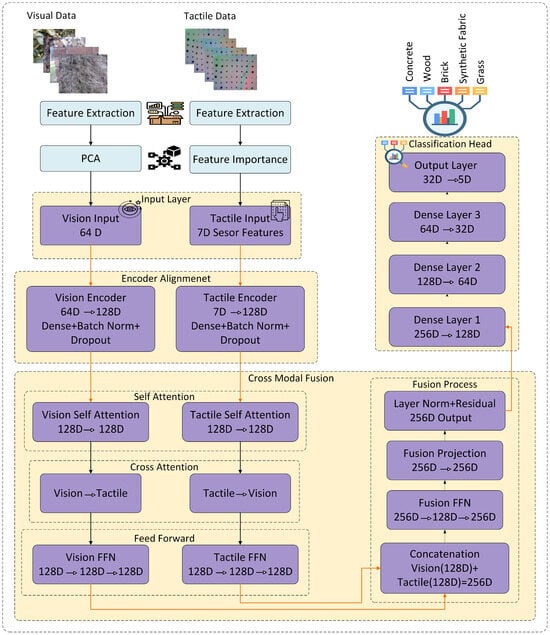

This section describes the Surformer v1 architecture, which is designed for surface material classification and leverages modality-specific encoders and cross-modal attention to jointly learn discriminative representations from tactile and visual inputs. Tactile inputs consist of structured, low-dimensional features extracted from the Touch and Go dataset, which was originally collected using GelSight sensors [22], while visual features are derived from ResNet-50 [23] and reduced via PCA to maintain balance between modalities. As illustrated in Figure 1, the overall model architecture consists of four key stages: (1) feature processing, (2) modality-specific encoders, (3) cross-modal fusion blocks that enable bidirectional information flow and fusion between modalities, and (4) a classification head that outputs surface material probabilities.

Figure 1.

Architecture of Surformer v1 for multi-modal surface classification from tactile and visual data. The model accepts two input modalities: (1) visual features extracted from surface images using a pretrained ResNet-50 model and reduced to 64 dimensions via PCA, and (2) the top seven tactile features selected based on importance analysis. Each input passes through a modality-specific encoder to map it into a unified 128-dimensional latent space using dense layers, batch normalization, and dropout. In the Cross-Modal Fusion stage, both self-attention and bidirectional cross-attention mechanisms are applied to capture intra- and inter-modality relationships. Feed-forward networks refine features within each modality before they are concatenated and passed through a fusion block involving residual connections, projection layers, and normalization. The fused representation is finally processed by a classification head comprising three fully connected layers and a softmax output layer to predict one of five surface material classes. This design enables the model to leverage complementary cues from both modalities, improving surface understanding beyond single-sensor methods.

2.1. Feature Processing

2.1.1. Feature Extraction

For tactile features, GelSight sensor images were first converted to grayscale and processed through a custom feature engineering pipeline designed to extract key characteristics. Specifically, two distinct sets of features were derived: Texture/Roughness Features, which capture surface-level texture properties, and Pressure/Contact Features, which characterize the contact dynamics during tactile interaction. The Texture/Roughness Features include surface roughness (deviation), gradient magnitude, local contrast, edge density and uniformity index. The Pressure/Contact Features consist of average pressure, maximum pressure, contact area, pressure standard deviation and center of pressure deviation. These features were chosen to capture the information most relevant to classification. A detailed description of these features is provided in Table 1.

Table 1.

Description of tactile features used for surface classification.
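As a rough illustration of what such a feature engineering pipeline might look like, the NumPy sketch below computes a handful of descriptors from a grayscale tactile image scaled to [0, 1]. The exact definitions used in this work are those of Table 1; the formulas, the function name `tactile_features`, and both thresholds here are assumptions made purely for illustration.

```python
import numpy as np

def tactile_features(gray, contact_threshold=0.5, edge_threshold=0.1):
    """Illustrative tactile descriptors from a 2D grayscale image in [0, 1].

    All definitions below are assumed for this sketch, not taken from the paper.
    """
    gray = np.asarray(gray, dtype=np.float64)
    gy, gx = np.gradient(gray)            # finite differences along rows, columns
    grad_mag = np.sqrt(gx ** 2 + gy ** 2)

    # Intensity-weighted centroid, used as a proxy for the center of pressure.
    h, w = gray.shape
    total = gray.sum()
    if total > 0:
        rows, cols = np.indices(gray.shape)
        cy = (rows * gray).sum() / total
        cx = (cols * gray).sum() / total
    else:
        cy, cx = (h - 1) / 2, (w - 1) / 2

    return {
        "roughness": float(gray.std()),                       # intensity deviation
        "gradient_magnitude": float(grad_mag.mean()),
        "edge_density": float((grad_mag > edge_threshold).mean()),
        "avg_pressure": float(gray.mean()),
        "max_pressure": float(gray.max()),
        "contact_area": float((gray > contact_threshold).mean()),
        "center_deviation": float(np.hypot(cy - (h - 1) / 2, cx - (w - 1) / 2)),
    }

# Synthetic stand-in for a GelSight frame: one bright, off-center contact patch.
demo = np.zeros((64, 64))
demo[20:40, 24:44] = 0.9
feats = tactile_features(demo)
```

Here intensity is treated as a proxy for pressure, which is itself an assumption; a calibrated sensor model would be needed to recover physical units.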

For the vision modality, raw RGB images are first preprocessed by scaling the pixel values to the [0, 255] range and resizing them to 224 × 224 × 3 to match the input requirements for ResNet50. The images are then passed through a pre-trained ResNet50 backbone (excluding the top classification layers), originally trained on ImageNet, followed by Global Average Pooling to convert the 7 × 7 × 2048 feature maps into 2048-dimensional dense embeddings that capture rich visual representations including textures, colors and structural patterns crucial for material identification.

2.1.2. Feature Selection

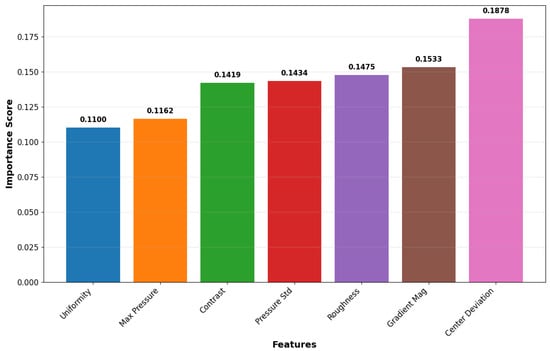

For the tactile modality, both the Texture/Roughness Features and the Pressure/Contact Features were evaluated and ranked with a Random Forest (RF) classifier (see Table 2) to gain insight into their relative importance. The top seven features were selected based on their importance scores, re-evaluated with RF as illustrated in Figure 2, and concatenated into a unified 1D feature vector. This vector served as input for the downstream surface classification tasks.

Table 2.

Feature importance rankings for texture/roughness and pressure/contact sets using Random Forest.

Figure 2.

Top seven tactile feature importance scores.

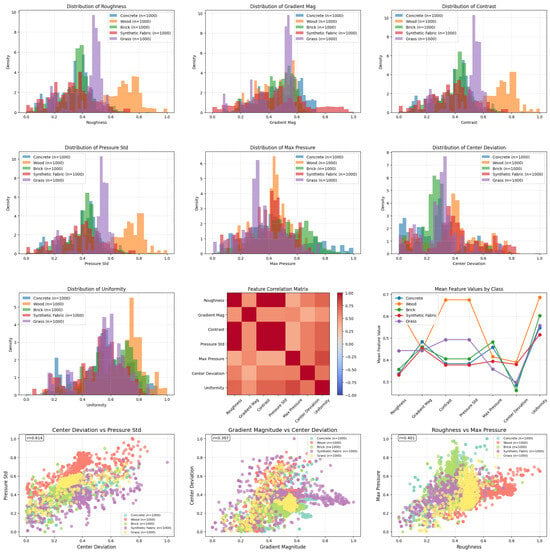

To better understand the discriminative capacity of the selected tactile features and how they capture different physical properties relevant to material classification, we performed a detailed statistical analysis of the top seven features identified via Random Forest-based feature importance ranking. These features include Roughness, Gradient Magnitude, Contrast, Pressure Standard Deviation, Maximum Pressure, Center Deviation and Uniformity. Figure 3 presents the feature distributions across all five surface material classes (Concrete, Wood, Brick, Synthetic Fabric and Grass), highlighting class-specific trends. A pairwise correlation reveals that most features are weakly correlated, indicating that each captures distinct surface properties. The mean feature profile per class confirms that the selected features carry discriminative patterns essential for material classification, thereby justifying their use as structured input for the Surformer v1 model. Additionally, pairwise scatter plots illustrate useful feature interactions, such as the relationship between roughness and maximum pressure or between gradient magnitude and center deviation, which further support the separability of classes in the feature space.

Figure 3.

Tactile features analysis. The figure shows the distribution of each feature’s density across all five classes and includes scatter plots to visualize relationships between pairs of features. These plots help reveal class separability and feature interactions.

For the vision features, PCA was applied to reduce the dimensionality from 2048 to a more compact representation with 64 dimensions. The reduced embeddings retained 90.7% variance. As a result, the final visual embeddings used for downstream tasks had a shape of (5000, 64).
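The reduction step can be sketched with a plain SVD-based PCA, as below. This is a minimal illustration rather than the implementation used here: the helper `pca_reduce` is hypothetical, and the input is a small synthetic matrix standing in for the (5000, 2048) ResNet-50 embeddings, so the retained-variance figure it prints is unrelated to the 90.7% reported above.

```python
import numpy as np

def pca_reduce(X, n_components):
    """Project X onto its top principal components.

    Returns the reduced data and the fraction of total variance retained.
    """
    X = np.asarray(X, dtype=np.float64)
    Xc = X - X.mean(axis=0)                       # center each feature
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]                # rows are principal directions
    Z = Xc @ components.T
    variances = S ** 2                            # proportional to explained variance
    retained = variances[:n_components].sum() / variances.sum()
    return Z, float(retained)

# Synthetic stand-in for the visual embeddings (assumed shape, low-rank plus noise).
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 20))
mixing = rng.normal(size=(20, 256))
X = latent @ mixing + 0.1 * rng.normal(size=(300, 256))

Z, retained = pca_reduce(X, n_components=64)
print(Z.shape, f"retained variance: {retained:.3f}")
```

In practice the projection should be fitted on the training split only and then applied unchanged to validation and test embeddings, so that no test-set statistics leak into the features.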

2.2. Multi-Modal Classification

2.2.1. Cross-Modal Fusion

The cross-modal fusion block is the core of the Surformer v1 architecture, exchanging information between vision and tactile features. Each fusion block performs a multi-stage, attention-based integration of the two modalities, which the model then uses to classify the material. Before the fusion block, each input modality is passed through a dedicated encoder comprising progressive dense layers. These act as input embedding layers, mapping the tactile features (7D) and the PCA-reduced visual features (64D) into a common 128-dimensional latent space. This shared embedding space enables effective interaction in the subsequent attention-based fusion.

- Self Attention and Cross Attention:

The block begins by processing each input independently through self-attention mechanisms, where vision features attend to other vision features and tactile features attend to other tactile features. This self-attention stage allows each modality to refine its internal representations by identifying the most relevant patterns within itself. Following self-attention, the cross-attention mechanism operates bidirectionally: vision features serve as queries that attend to tactile features as keys and values, while simultaneously tactile features serve as queries that attend to vision features. The attention mechanisms within the fusion block leverage multi-head attention with two heads, each operating on 64-dimensional subspaces of the 128-dimensional feature space. This multi-head design is crucial because it allows the model to capture different types of cross-modal relationships simultaneously. The diversity of attention heads ensures comprehensive cross-modal understanding. The mathematical foundation of the cross-modal attention follows the scaled dot-product attention formula, but is adapted for cross-modal interaction. When vision features query tactile information, the attention weights are computed as:

$$
\mathrm{Attention}(Q_v, K_t, V_t) = \mathrm{softmax}\!\left(\frac{Q_v K_t^{\top}}{\sqrt{d_k}}\right) V_t
$$

where $Q_v$ refers to the query matrix derived from the visual modality, $K_t$ denotes the key matrix from the tactile modality, and $V_t$ is the value matrix, also from the tactile modality. The dot product $Q_v K_t^{\top}$ computes the attention scores, scaled by $\sqrt{d_k}$, where $d_k$ is the dimensionality of the keys. The softmax function transforms these scores into attention weights, which are used to compute a weighted sum over the tactile values, enabling cross-modal feature alignment. This formulation allows vision features to selectively attend to relevant tactile patterns based on their current visual understanding, creating a dynamic information exchange that adapts to the specific characteristics of each input sample.
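The bidirectional cross-attention described above can be sketched in NumPy as follows. This is an illustrative sketch only: the token counts (4 vision tokens, 3 tactile tokens), the random projection matrices, the omission of an output projection, and the reuse of one weight set for both directions are simplifying assumptions rather than details of Surformer v1.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query_feats, context_feats, Wq, Wk, Wv, num_heads=2):
    """Multi-head scaled dot-product attention across two modalities.

    Queries come from `query_feats` (n_q, d_model); keys and values come
    from `context_feats` (n_c, d_model).
    """
    n_q, d_model = query_feats.shape
    n_c = context_feats.shape[0]
    d_k = d_model // num_heads                # 64 per head when d_model = 128

    def split_heads(x, n):
        return x.reshape(n, num_heads, d_k).transpose(1, 0, 2)   # (heads, n, d_k)

    Q = split_heads(query_feats @ Wq, n_q)
    K = split_heads(context_feats @ Wk, n_c)
    V = split_heads(context_feats @ Wv, n_c)

    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_k)   # (heads, n_q, n_c)
    weights = softmax(scores, axis=-1)
    out = (weights @ V).transpose(1, 0, 2).reshape(n_q, d_model)
    return out, weights

rng = np.random.default_rng(0)
d_model = 128
vision = rng.normal(size=(4, d_model))    # hypothetical vision tokens
tactile = rng.normal(size=(3, d_model))   # hypothetical tactile tokens
Wq, Wk, Wv = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(3))

# Vision queries attend to tactile keys/values, and vice versa.
vision_from_tactile, w_vt = cross_attention(vision, tactile, Wq, Wk, Wv)
tactile_from_vision, w_tv = cross_attention(tactile, vision, Wq, Wk, Wv)
```

Each row of `w_vt` is a probability distribution over tactile tokens, so it can be inspected directly to see which tactile tokens a given vision token draws on.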

- Feed-Forward Processing and Normalization:

After the attention operations, the fusion block applies feed-forward networks (FFNs) to both modalities independently. Each FFN maps the 128-dimensional features through a 128-dimensional hidden layer with ReLU activation and back to 128 dimensions. Layer normalization and residual connections are applied throughout the fusion block to ensure stable training and effective gradient flow. The normalization is applied before each sub-layer (pre-layer normalization). Residual connections allow the model to learn incremental refinements to the features while preserving the original information, mitigating the vanishing gradient problem in deep networks.
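A pre-layer-normalization feed-forward sub-layer with a residual connection might look like the sketch below. The learnable scale and shift of layer normalization are left out for brevity, and the weights are random placeholders, so this only illustrates the data flow and not the trained model.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    """Normalize each row to zero mean and unit variance (no learnable scale/shift)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def ffn_block(x, W1, b1, W2, b2):
    """Pre-LN feed-forward sub-layer: x + FFN(LayerNorm(x))."""
    hidden = np.maximum(layer_norm(x) @ W1 + b1, 0.0)   # 128 -> 128 with ReLU
    return x + (hidden @ W2 + b2)                       # 128 -> 128 plus residual

rng = np.random.default_rng(0)
d = 128
x = rng.normal(size=(5, d))                 # hypothetical batch of 5 feature vectors
W1 = rng.normal(scale=d ** -0.5, size=(d, d))
W2 = rng.normal(scale=d ** -0.5, size=(d, d))
b1 = np.zeros(d)
b2 = np.zeros(d)

refined = ffn_block(x, W1, b1, W2, b2)
```

Because the residual path carries `x` through unchanged, the sub-layer only has to learn a correction term; with a zero second layer it reduces exactly to the identity.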

- Fusion Process:

After the vision and tactile features have been refined through self-attention, cross-attention, and feed-forward processing, they are then concatenated to create a 256-dimensional combined representation. The concatenation preserves the distinct characteristics of each modality while creating a unified multi-modal feature vector that contains both visual and tactile information along with their correlations. The concatenated features then undergo a fusion feed-forward network that operates on the full 256-dimensional space (256D → 128D → 256D). This fusion FFN learns to integrate the combined multi-modal information. A projection layer then transforms these fused features, and the final output undergoes layer normalization with a residual connection from the original concatenated features, ensuring that the model can learn incremental refinements while preserving essential information.
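The fusion step can be sketched as below, assuming a ReLU activation inside the fusion FFN and a 256-to-256 projection so that the residual from the concatenated features is shape-compatible. Neither assumption is stated explicitly in the text, and the parameter names are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def fuse(vision, tactile, p):
    """Concatenate two 128-D feature sets and refine them with a fusion FFN."""
    z = np.concatenate([vision, tactile], axis=-1)      # (batch, 256)
    h = np.maximum(z @ p["W1"] + p["b1"], 0.0)          # 256 -> 128 (ReLU assumed)
    h = h @ p["W2"] + p["b2"]                           # 128 -> 256
    h = h @ p["Wp"] + p["bp"]                           # projection, 256 -> 256 (assumed)
    return layer_norm(z + h)                            # residual from concatenation

rng = np.random.default_rng(0)
params = {
    "W1": rng.normal(scale=256 ** -0.5, size=(256, 128)), "b1": np.zeros(128),
    "W2": rng.normal(scale=128 ** -0.5, size=(128, 256)), "b2": np.zeros(256),
    "Wp": rng.normal(scale=256 ** -0.5, size=(256, 256)), "bp": np.zeros(256),
}
vision = rng.normal(size=(8, 128))     # hypothetical refined vision features
tactile = rng.normal(size=(8, 128))    # hypothetical refined tactile features

fused = fuse(vision, tactile, params)
```

The first 128 entries of the concatenated vector come from vision and the last 128 from touch, so each modality remains individually addressable up to the point where the fusion FFN mixes them.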

2.2.2. Classification Head

The classification head uses a progressive dimensionality reduction strategy built from multiple dense layers, each followed by batch normalization and dropout. The fused 256-dimensional multi-modal features are reduced through a three-stage pathway: from 256 to 128, then to 64, and finally to 32 dimensions. Each stage incorporates normalization and dropout to enhance training robustness. Finally, a softmax layer maps the 32-dimensional compressed representation to a probability distribution over the five material classes: Concrete, Wood, Brick, Synthetic Fabric and Grass.
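To make the layer sizes concrete, the sketch below runs an inference-time forward pass through a 256 → 128 → 64 → 32 → 5 head and counts its dense-layer parameters. ReLU activations are assumed, batch normalization is omitted, and dropout is treated as inactive at inference, so the count covers weights and biases of the dense layers only.

```python
import numpy as np

LAYER_SIZES = [256, 128, 64, 32, 5]   # fused features -> five material classes

def init_head(rng, sizes=LAYER_SIZES):
    """Random (weight, bias) pairs for each dense layer; placeholder values only."""
    return [(rng.normal(scale=np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def head_forward(x, layers):
    """Dense + ReLU for hidden layers, then a softmax over the class logits."""
    for W, b in layers[:-1]:
        x = np.maximum(x @ W + b, 0.0)
    W, b = layers[-1]
    logits = x @ W + b
    logits = logits - logits.max(axis=-1, keepdims=True)   # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=-1, keepdims=True)

def count_params(layers):
    return sum(W.size + b.size for W, b in layers)

rng = np.random.default_rng(0)
layers = init_head(rng)
probs = head_forward(rng.normal(size=(4, 256)), layers)
n_params = count_params(layers)   # 32,896 + 8,256 + 2,080 + 165
```

Under these assumptions the dense layers of the head account for 43,397 parameters, a small share of the full model.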

3. Experimental Results and Discussion

3.1. Data Preprocessing

The Touch and Go dataset [21] consists of synchronized vision and GelSight sensor videos for more than 20 labeled classes. Thousands of synchronized frames were extracted from both the vision and tactile (GelSight) videos, with each frame labeled according to its corresponding surface class. These images were preprocessed by resizing them to 224 × 224 resolution with three RGB channels and normalizing pixel values between 0 and 1. For this study, a curated subset of the dataset without noise or incorrect labels was selected to ensure clean and reliable input data. The extracted classes included Concrete (288), Wood (364), Brick (661), Synthetic Fabric (364) and Grass (600). To address class imbalance, data augmentation was performed using Keras, resulting in a balanced final dataset of 5000 paired vision and tactile images, with 1000 samples per class.
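The arithmetic behind the balancing step can be checked with a few lines of Python. The sketch assumes that every curated original sample is kept and that augmentation supplies only the remainder for each class; the text does not state this explicitly.

```python
ORIGINAL_COUNTS = {
    "Concrete": 288,
    "Wood": 364,
    "Brick": 661,
    "Synthetic Fabric": 364,
    "Grass": 600,
}
TARGET_PER_CLASS = 1000

def augmentation_plan(counts, target):
    """Number of augmented samples each class needs to reach the target size."""
    return {name: max(target - n, 0) for name, n in counts.items()}

plan = augmentation_plan(ORIGINAL_COUNTS, TARGET_PER_CLASS)
total_original = sum(ORIGINAL_COUNTS.values())
total_final = total_original + sum(plan.values())

for name, extra in plan.items():
    print(f"{name}: {ORIGINAL_COUNTS[name]} original + {extra} augmented")
print(f"total: {total_original} original -> {total_final} after balancing")
```

Under that assumption, Concrete relies most heavily on augmentation (712 of its 1000 samples) and Brick the least (339), which is worth keeping in mind when interpreting per-class results.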

For the vision images, data augmentation was applied using a series of transformations, including a rotation range of 25 degrees, width and height shifts of up to 10% and a zoom range of 0.05. Horizontal flipping was enabled to increase variability, while vertical flipping was avoided to maintain the realism of natural scenes. Brightness variation was constrained within a conservative range of 0.8 to 1.2, and channel shifts were limited to 0.1. All transformations used the nearest fill mode, and no automatic rescaling was applied since preprocessing handled it separately.

For the tactile images, augmentation included a rotation range of 20 degrees, and width and height shifts up to 10%. A zoom range of 0.05 was applied, with horizontal flipping enabled. Brightness adjustments were within a narrow range of 0.9 to 1.1. Similar to the vision image augmentation, no rescaling was performed, and the same “nearest” fill mode was used.
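The two augmentation settings can be summarized as keyword dictionaries. The key names below follow the arguments of Keras's `ImageDataGenerator`, which is presumably the utility used given the terminology above, although the text only says "Keras"; treat the mapping as an assumption.

```python
# Augmentation settings for the vision images, as described in the text.
VISION_AUG = dict(
    rotation_range=25,             # degrees
    width_shift_range=0.1,         # fraction of image width
    height_shift_range=0.1,        # fraction of image height
    zoom_range=0.05,
    horizontal_flip=True,
    vertical_flip=False,           # avoided to keep scenes realistic
    brightness_range=(0.8, 1.2),
    channel_shift_range=0.1,
    fill_mode="nearest",
    rescale=None,                  # scaling handled in preprocessing
)

# Augmentation settings for the tactile (GelSight) images.
TACTILE_AUG = dict(
    rotation_range=20,
    width_shift_range=0.1,
    height_shift_range=0.1,
    zoom_range=0.05,
    horizontal_flip=True,
    brightness_range=(0.9, 1.1),
    fill_mode="nearest",
    rescale=None,
)

# Settings that differ between the two modalities.
differences = {
    key: (VISION_AUG.get(key), TACTILE_AUG.get(key))
    for key in VISION_AUG
    if VISION_AUG.get(key) != TACTILE_AUG.get(key)
}
```

With TensorFlow installed, either dictionary could be unpacked into the generator, for example `ImageDataGenerator(**VISION_AUG)`. The tactile settings are the more conservative of the two, with a smaller rotation range, a narrower brightness range and no channel shift.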

3.2. Training Strategy

For all tactile-only models, including RF [24], XGBoost [25], SVM [26] and the Transformer [27], the dataset was split into 80% training and 20% testing using stratified sampling. For Surformer v1 and the Multimodal CNN, the dataset was split into 80% training, 10% validation and 10% testing to enable hyperparameter tuning. The encoder-only Transformer model was trained for 150 epochs with the AdamW optimizer, a learning rate of 0.001 and a batch size of 64. A learning rate scheduler (ReduceLROnPlateau) adaptively reduced the learning rate based on validation performance, with a patience of 10 and a factor of 0.5. Regularization techniques included a weight decay of 0.01 and dropout with a rate of 0.1 to prevent overfitting.
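The behavior of a reduce-on-plateau schedule with the Transformer settings above (initial learning rate 0.001, factor 0.5, patience 10) can be illustrated with a small stand-alone class. This is a simplified sketch and not the library scheduler: it monitors a validation loss (an assumption, since the text only says "validation performance") and cuts the rate once the number of non-improving epochs exceeds the patience.

```python
class PlateauScheduler:
    """Minimal reduce-on-plateau logic for a monitored loss (lower is better)."""

    def __init__(self, lr=1e-3, factor=0.5, patience=10, min_lr=0.0):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Update the state with this epoch's validation loss; return the current lr."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0
        return self.lr

# Simulated validation losses: improvement for 3 epochs, then a long plateau.
losses = [1.0, 0.8, 0.7] + [0.7] * 11
scheduler = PlateauScheduler()
history = [scheduler.step(loss) for loss in losses]
```

In this simulation the rate stays at 0.001 through the first ten stalled epochs and is halved on the eleventh, which is the behavior the patience parameter is meant to control.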

The multi-modal Surformer v1 was trained with a learning rate of for up to 100 epochs using the Adam optimizer and a batch size of 32 to ensure stable convergence across both vision and tactile modalities. The EarlyStopping callback was used with a patience of 15 epochs based on validation accuracy, and a ReduceLROnPlateau learning rate scheduler was used to adaptively reduce the learning rate based on validation loss, with a patience of 8 epochs and a reduction factor of 0.5. The scheduler also included a minimum learning rate threshold of . Regularization techniques included a dropout rate of 0.1 across all encoders, fusion blocks and classification heads. Batch normalization was employed in all dense layers within the modality-specific encoders.

The Multimodal CNN uses a dual-stream architecture with two parallel ResNet-50 backbones, one for vision and one for tactile input. Both backbones are initialized with ImageNet weights and process 224 × 224 × 3 RGB images. After feature extraction, Global Average Pooling reduces spatial dimensions, followed by stream-specific dense layers with ReLU activation and dropout. The vision and tactile features are concatenated into a 512-dimensional fused representation, which is further processed through an additional dense layer before final classification into five material classes via a softmax layer. The model is optimized using the Adam optimizer with a learning rate of , trained for 100 epochs with a batch size of 32, matching the training settings used for Surformer v1. Regularization techniques include L2 weight decay of , dropout of 0.5, early stopping with a patience of 15 epochs based on validation accuracy, and learning rate reduction on plateau with a factor of 0.5 and a patience of 8 epochs based on validation loss. Most hyperparameters of the Multimodal CNN were kept similar to those of Surformer v1.

Additionally, classical machine learning algorithms were carefully optimized and evaluated. The Random Forest classifier was configured with 200 decision trees, a maximum depth of 15, and additional constraints, including a minimum sample split of 5 and a minimum sample leaf of 2. The XGBoost model, known for its gradient-boosted tree ensembles, was employed with 300 estimators, a maximum depth of 8 and a learning rate of 0.1. To enhance generalization, sub-sampling and column sampling were both set to 0.8 for regularization. Finally, Support Vector Machines (SVMs) were used with two kernel variants. The RBF kernel was configured with C = 10 and gamma = “scale” to capture non-linear decision boundaries, while the linear kernel used C = 1.0 to model high-dimensional linear separability. Both SVM configurations were enabled with probability estimation to support downstream interpretability and confidence analysis. These classical models served as strong baselines and provided valuable insights into the discriminative power of extracted tactile features.

3.3. Results and Discussion

In this section, we analyze both tactile-only and multi-modal classification approaches, comparing their performance based on precision, recall, F1-score and accuracy.

As shown in Table 3, for tactile-only classification, the encoder-only Transformer achieved the highest accuracy of 0.9740, followed closely by XGBoost (0.9670) and Random Forest (0.9560). The SVM with an RBF kernel performed moderately well with an accuracy of 0.8660, while the linear SVM yielded significantly lower results (0.6810 accuracy), highlighting its limited ability to model the complex decision boundaries required for tactile feature classification. In addition to classification performance, inference time and model size were also evaluated. Inference time in this study refers to the time it takes for a trained model to process new, unseen data and produce a classification output. It was evaluated on the test set with a batch size of 100, and is reported in milliseconds per sample for both tactile-only and vision-tactile models. The encoder-only Transformer not only delivered strong accuracy but also demonstrated the lowest inference time (0.0085 ms per sample) among all models, making it highly suitable for real-time applications. In contrast, while Random Forest and XGBoost showed competitive accuracy, they exhibited higher inference times (0.2819 ms and 0.0923 ms, respectively) and, in the case of Random Forest, a substantially larger number of parameters (108,788 parameters).
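A per-sample latency figure of this kind can be obtained with a simple timing loop, sketched below. The helper `per_sample_latency_ms`, the warm-up call, the averaging over five repeats, and the dummy linear classifier are all assumptions for illustration; they are not the measurement code used in this study.

```python
import time
import numpy as np

def per_sample_latency_ms(predict_fn, X, batch_size=100, repeats=5):
    """Average wall-clock inference time per sample, in milliseconds."""
    predict_fn(X[:batch_size])                 # warm-up call, excluded from timing
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        for i in range(0, len(X), batch_size):
            predict_fn(X[i:i + batch_size])
        timings.append(time.perf_counter() - start)
    mean_seconds = sum(timings) / len(timings)
    return 1000.0 * mean_seconds / len(X)

# Dummy stand-in model: a random linear classifier over 7 tactile features.
rng = np.random.default_rng(0)
weights = rng.normal(size=(7, 5))

def predict(batch):
    return (batch @ weights).argmax(axis=1)

X_test = rng.normal(size=(1000, 7))            # 20% of 5000 samples
latency_ms = per_sample_latency_ms(predict, X_test)
print(f"{latency_ms:.4f} ms per sample")
```

Dividing the total time by the number of samples, rather than by the number of batches, is what makes figures comparable across models evaluated with the same batch size of 100.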

Table 3.

Classification performance comparison: Tactile (T) Only vs. Vision and Tactile (V-T) Models.

For multimodal classification, Surformer v1 demonstrated strong performance, highlighting the effectiveness of cross-modal fusion for surface material recognition. It achieved scores of 0.99 in precision, recall, and F1-score, with an accuracy of 0.9940. We further implemented a Multimodal CNN using raw vision and tactile images and compared the results with Surformer v1. The Multimodal CNN achieved the highest scores (1.00 across all metrics) but required a substantially higher inference time (5.0737 ms per sample) and a much larger number of parameters (48.3 million) than Surformer v1, which maintained a faster inference time of 0.7271 ms per sample with only 673,321 parameters. This result demonstrates that while the Multimodal CNN is slightly more accurate, Surformer v1 offers a significantly more efficient and compact solution, making it better suited for real-time robotic applications where computational resources are limited.
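The trade-off can be made explicit by computing ratios from the figures reported above. The parameter count of the Multimodal CNN is taken as 48.3 million, the rounded value given in the text, so the size ratio is approximate.

```python
surformer = {"accuracy": 0.9940, "latency_ms": 0.7271, "params": 673_321}
multimodal_cnn = {"accuracy": 1.00, "latency_ms": 5.0737, "params": 48_300_000}

speedup = multimodal_cnn["latency_ms"] / surformer["latency_ms"]
size_ratio = multimodal_cnn["params"] / surformer["params"]
accuracy_gap_pp = 100 * (multimodal_cnn["accuracy"] - surformer["accuracy"])

print(f"Surformer v1 is about {speedup:.1f}x faster per sample")
print(f"Surformer v1 has about {size_ratio:.0f}x fewer parameters")
print(f"Accuracy gap: {accuracy_gap_pp:.1f} percentage points")
```

In other words, Surformer v1 gives up roughly 0.6 percentage points of accuracy in exchange for about a sevenfold reduction in per-sample latency and a model roughly seventy times smaller.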

4. Conclusions

In this paper, we introduced Surformer v1, a transformer-based model that combines structured tactile features and PCA-reduced visual embeddings from ResNet-50 to achieve robust surface material classification. The model architecture incorporates modality-specific encoders and cross-modal attention mechanisms, facilitating rich interactions between sensory inputs and capturing complementary characteristics of each modality. We investigated surface material classification through both tactile-only and multimodal learning approaches. We began by focusing on tactile-only classification, where feature engineering enabled the training of several classical machine learning models. An encoder-only Transformer tailored for tactile features was also implemented, which, while achieving strong accuracy, demonstrated significantly faster inference times than other models, highlighting its suitability for real-time applications where efficiency is critical. To extend this investigation, we incorporated visual information and introduced a multimodal fusion framework combining both tactile and vision inputs. We implemented and compared two multimodal models: a Multimodal CNN trained on raw images and the proposed Surformer v1, which operates on structured feature representations. The results showed that while the Multimodal CNN achieved slightly higher classification accuracy, Surformer v1 delivered substantially faster inference time and a smaller number of parameters. These findings demonstrate that Surformer v1 provides a compelling trade-off between accuracy, computational efficiency, and real-time applicability. Overall, this study underscores the value of integrating structured feature learning with cross-modal attention for efficient and accurate surface material recognition. 
Future work will explore scaling the approach to larger and more diverse datasets, evaluating generalizability across sensor types, and further optimizing the architecture for deployment in real-world robotic systems.

Author Contributions

Conceptualization, M.K., E.H., N.A.G. and S.R.; methodology, M.K., and N.A.G.; software, M.K. and E.H.; validation, M.K., E.H., N.A.G. and S.R.; formal analysis, M.K.; investigation, M.K., E.H., N.A.G. and S.R.; resources, M.K., N.A.G.; data curation, M.K.; writing—original draft preparation, M.K. and N.A.G.; writing—review and editing, N.A.G. and S.R.; visualization, M.K. and N.A.G.; supervision, N.A.G. and S.R.; project administration, S.R.; funding acquisition, N.A.G. and S.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

We used the publicly available Touch and Go dataset, which is accessible through the original publication [21].

Acknowledgments

The authors acknowledge the support and resources provided by the Predictive Analytics and Technology Integration (PATENT) Laboratory at Mississippi State University and Bioinspired Robotics, AI, Imaging and Neurocognitive Systems (BRAINS) Laboratory at The University of Alabama. The authors also extend their sincere gratitude to Sindhuja Penchala, a graduate student in the Department of Computer Science at The University of Alabama, for her feedback and suggestions during the review of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).