An Automated Domain-Agnostic and Explainable Data Quality Assurance Framework for Energy Analytics and Beyond

Abstract

1. Introduction

- A modular pipeline and metric set for smart-building time series.

- A Building Quality Score with task-aligned weights for completeness, temporal regularity, and statistical stability.

- SHAP-based sensitivity analysis that links metrics to NILM error.

- An LLM module that converts figures into concise diagnostic narratives.
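The second contribution describes the BQS as a weighted combination of completeness, temporal regularity, and statistical stability. This section does not give the exact metric definitions or the task-aligned weights, so the sketch below uses assumed stand-ins. Completeness is the share of non-NaN readings. Temporal regularity is the share of timestamp gaps that match the expected sampling step. Statistical stability is one minus the share of readings outside Tukey's IQR fences. The equal weights are placeholders, not the paper's values.

```python
import math
import statistics
from datetime import datetime, timedelta

def completeness(values):
    """Share of readings that are present (not NaN)."""
    if not values:
        return 0.0
    return sum(1 for v in values if not math.isnan(v)) / len(values)

def temporal_regularity(timestamps, expected_step):
    """Share of consecutive timestamp gaps equal to the expected sampling step."""
    if len(timestamps) < 2:
        return 1.0
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    return sum(1 for g in gaps if g == expected_step) / len(gaps)

def statistical_stability(values, k=1.5):
    """1 minus the share of present readings outside Tukey's IQR fences (assumed metric)."""
    present = sorted(v for v in values if not math.isnan(v))
    if len(present) < 4:
        return 1.0  # too few points to estimate quartiles meaningfully
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    outliers = sum(1 for v in present if v < low or v > high)
    return 1.0 - outliers / len(present)

def building_quality_score(scores, weights):
    """Weighted mean of component scores, each assumed to lie in [0, 1]."""
    total = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total

# Toy hourly series: one missing reading, a 3-hour timestamp jump, one spike.
t0 = datetime(2024, 1, 1)
step = timedelta(hours=1)
timestamps = [t0 + i * step for i in range(6)] + [t0 + 8 * step]
values = [10.0, 11.0, float("nan"), 10.5, 12.0, 11.5, 95.0]

scores = {
    "completeness": completeness(values),
    "temporal_regularity": temporal_regularity(timestamps, step),
    "statistical_stability": statistical_stability(values),
}
# Placeholder weights: equal, NOT the paper's task-aligned weights.
weights = {"completeness": 1.0, "temporal_regularity": 1.0, "statistical_stability": 1.0}
print(scores, building_quality_score(scores, weights))
```

Keeping each component in [0, 1] and normalizing by the weight sum keeps the BQS itself in [0, 1], so task-specific weights can be swapped in without rescaling.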

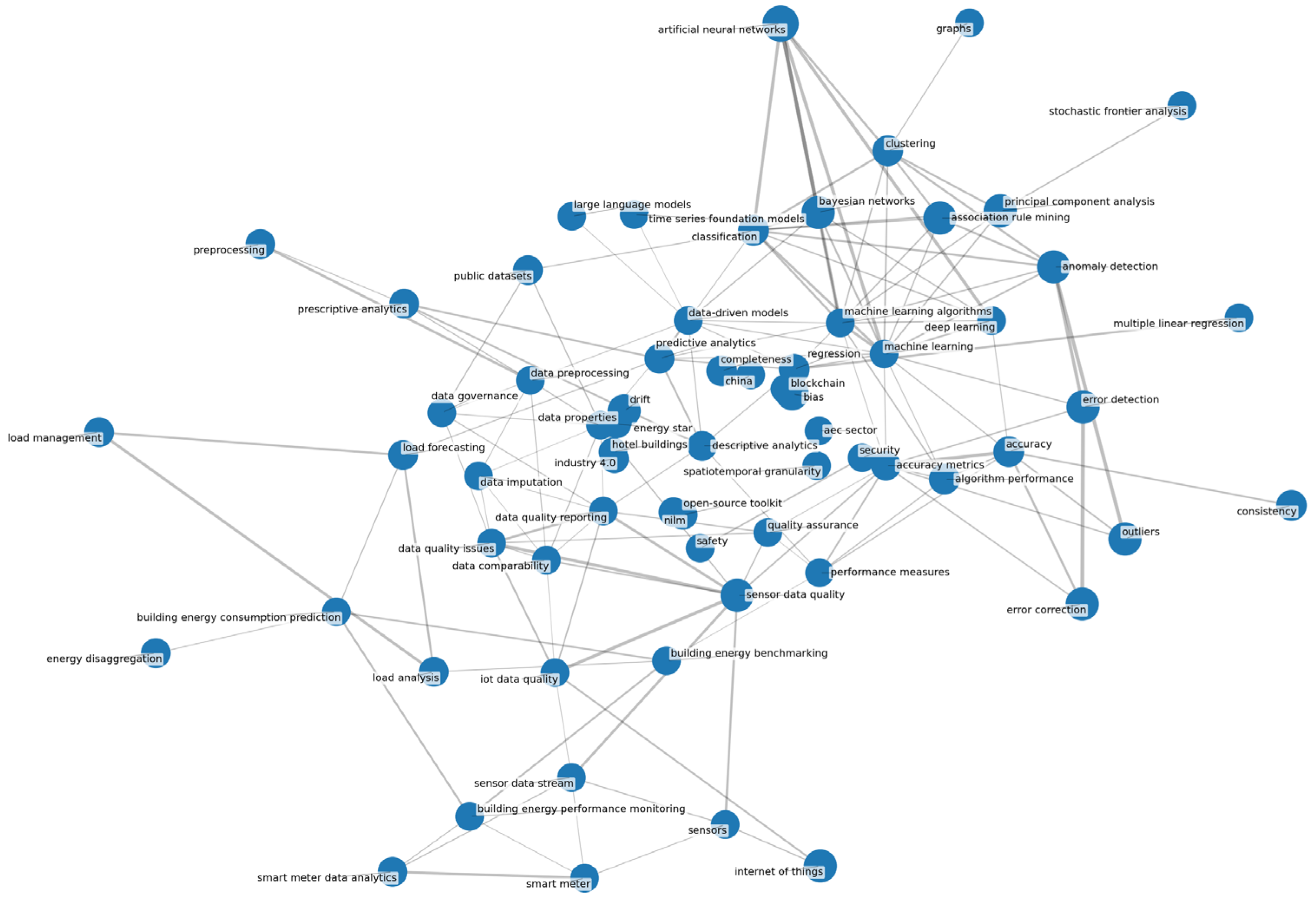

2. Related Works

2.1. Sensor Data Quality and Validation

2.2. Benchmark Datasets and Preprocessing for Energy Systems

2.3. Missing Data Imputation in Time Series

2.4. Real-Time Integrity and System Integration

2.5. Interpretable and Unified Quality Scoring

2.6. LLM Integration and Prompt Engineering

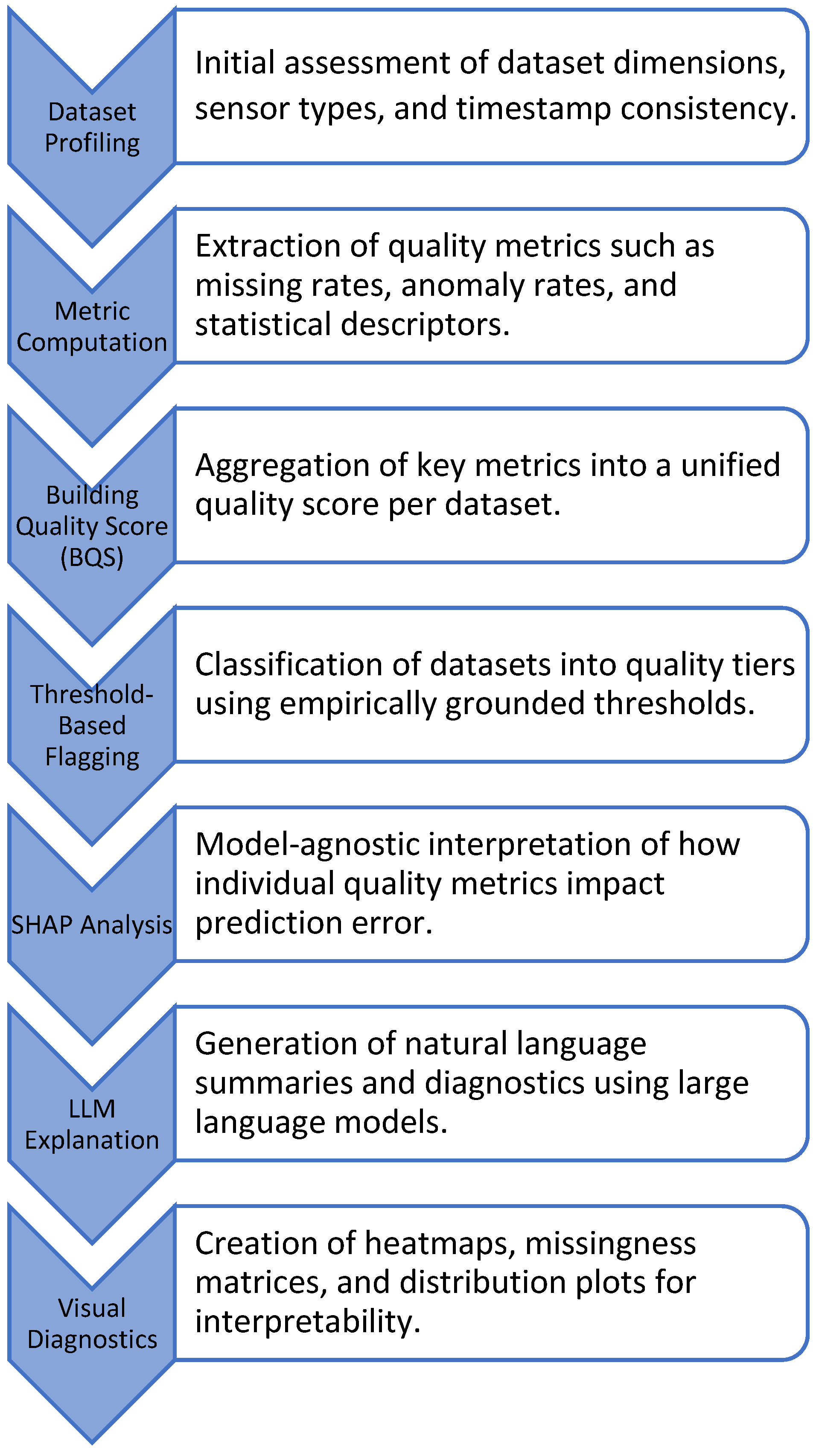

3. Data Quality Assurance Framework

3.1. System Overview and Implementation

3.2. Dataset Profiling

3.3. Quality Metric Evaluation

3.4. Threshold-Based Assessment

3.5. Unified Building Quality Score (BQS)

3.6. SHAP-Based Model Interpretation

3.7. Dataset Comparison and Selection

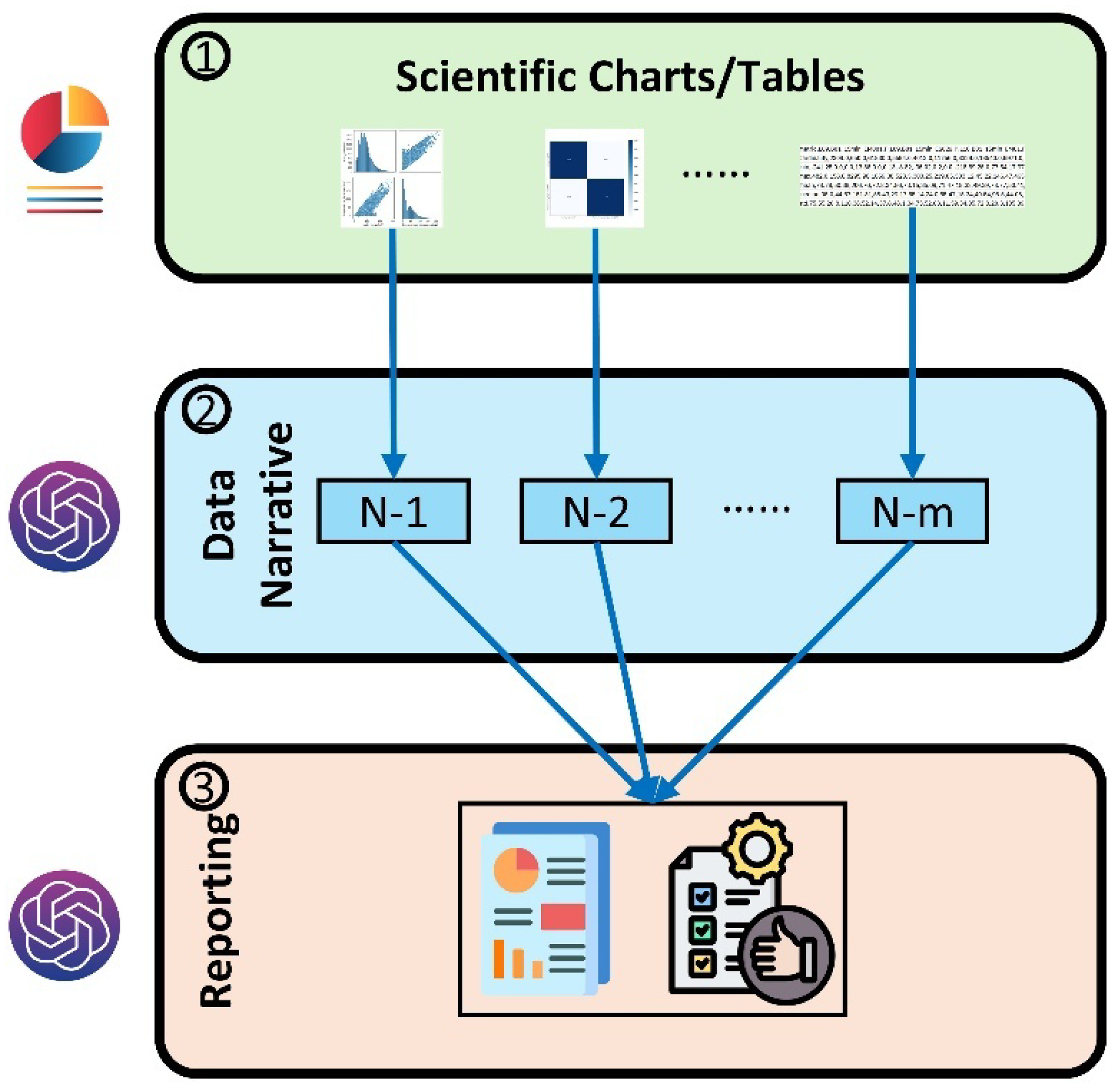

3.8. Prompt Design and Integration

4. Case Study and Results

4.1. Dataset Overview and Evaluation Protocol

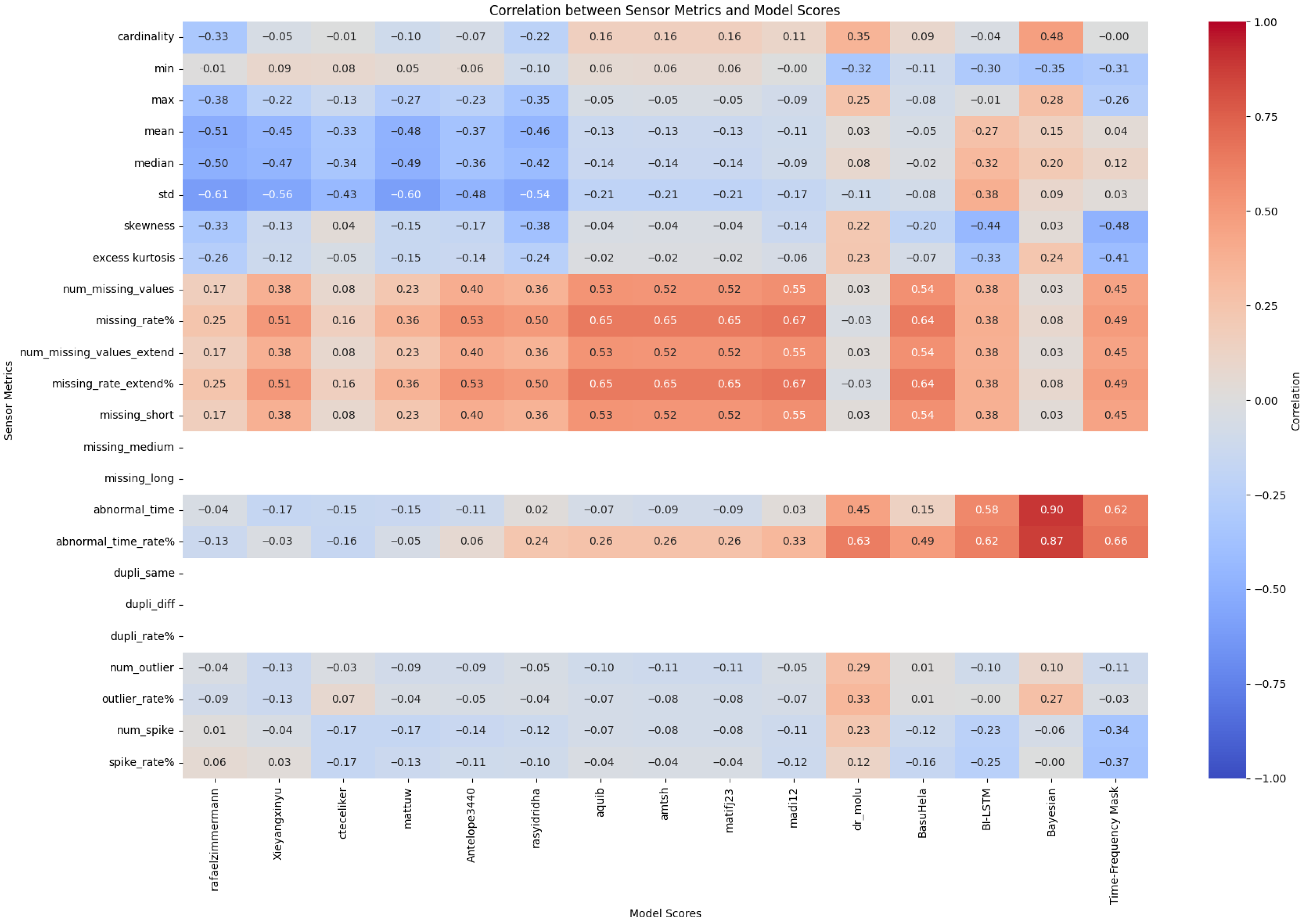

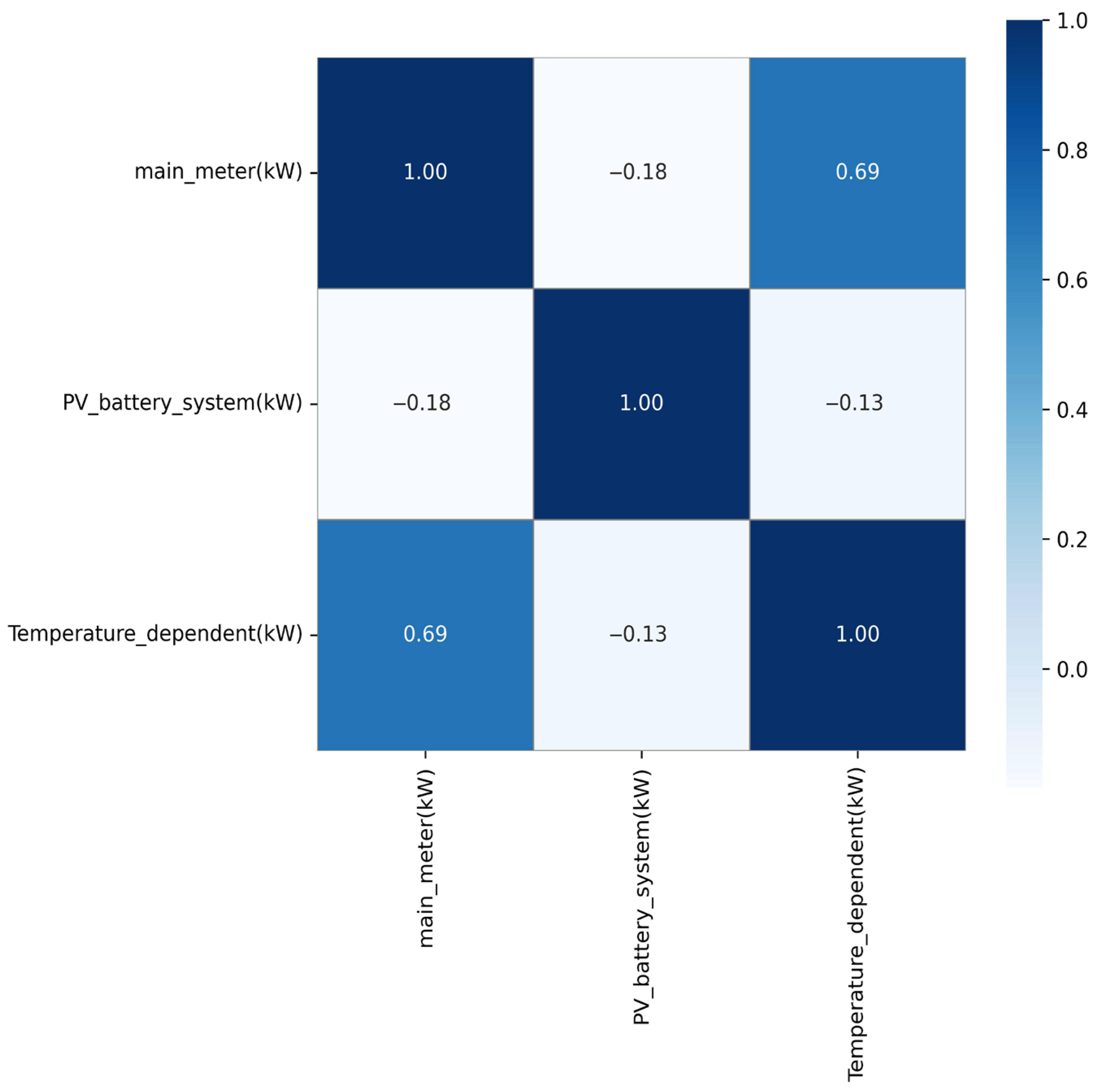

4.2. Correlation Between Data Quality and Model Scores

4.3. BQS of Building L06.B01

4.4. Evaluation and Application of the Building Quality Score (BQS)

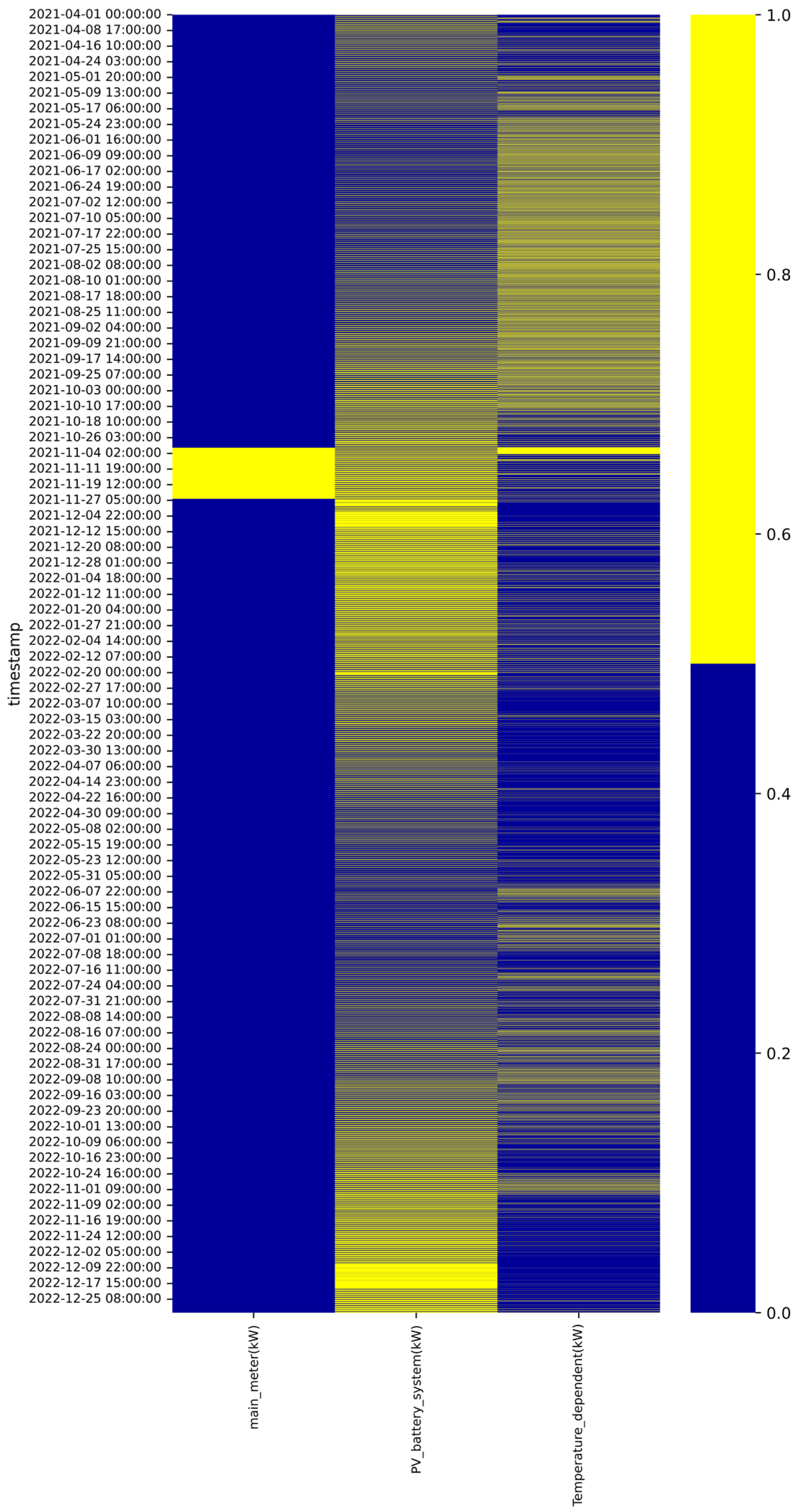

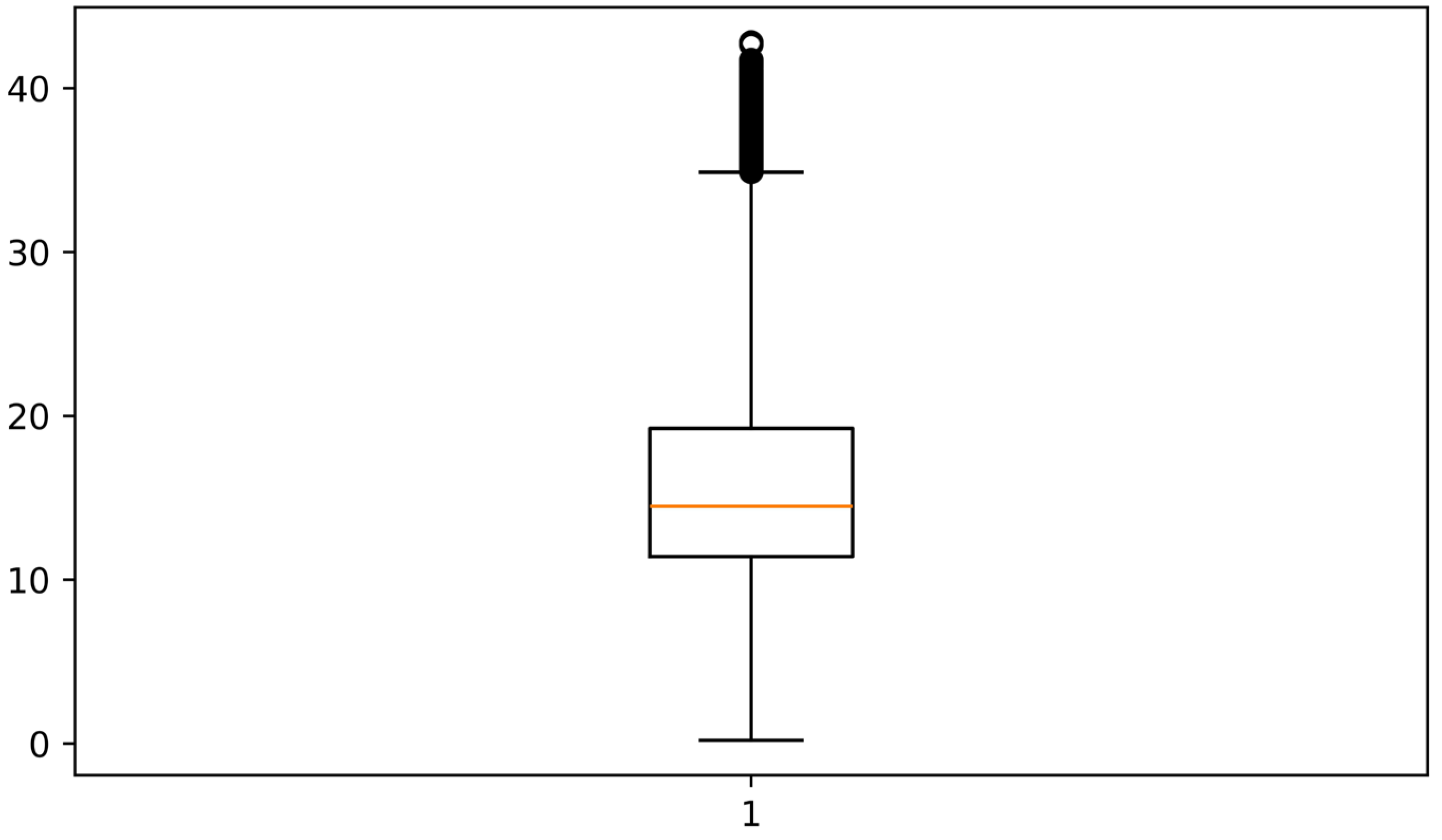

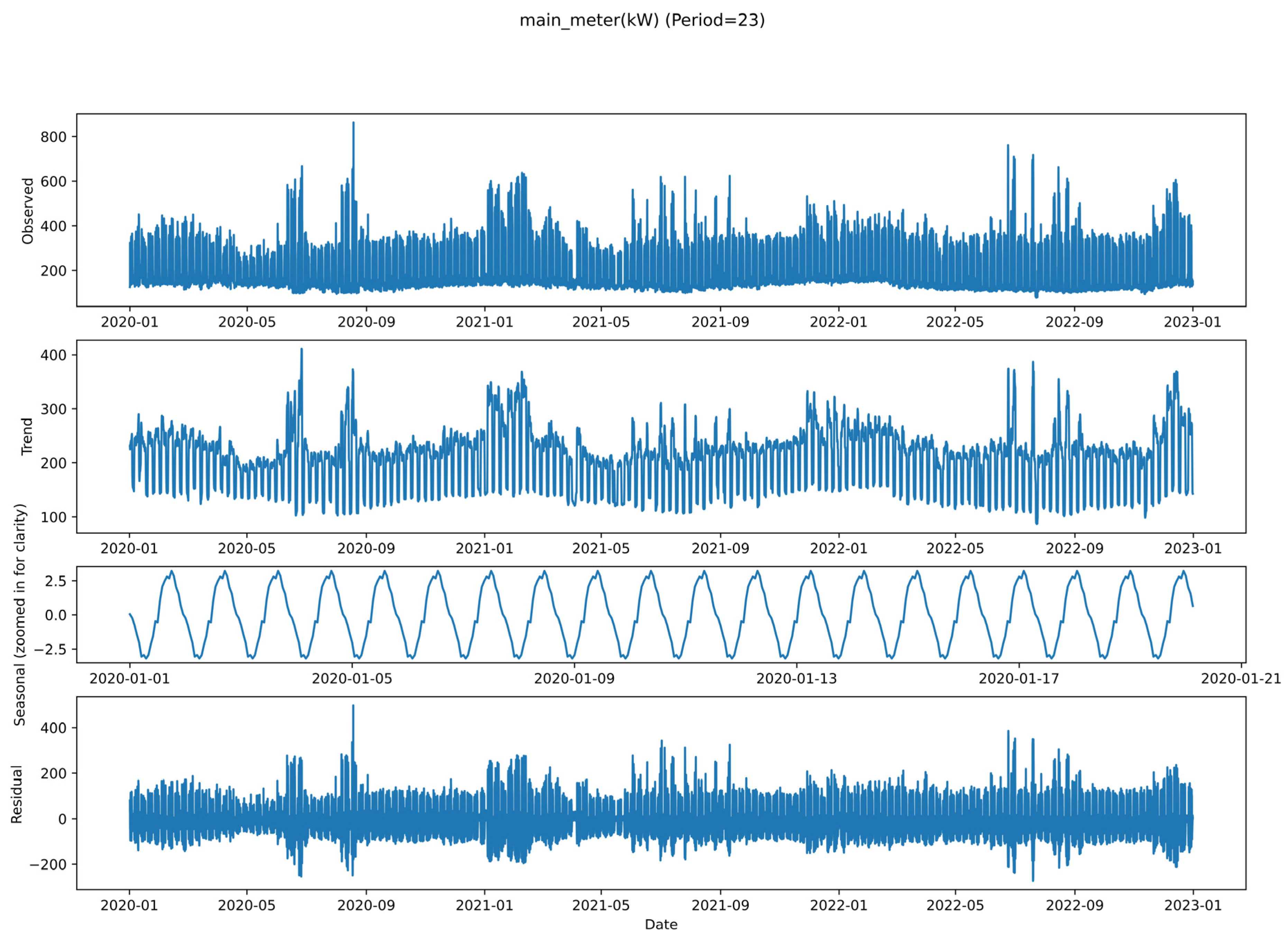

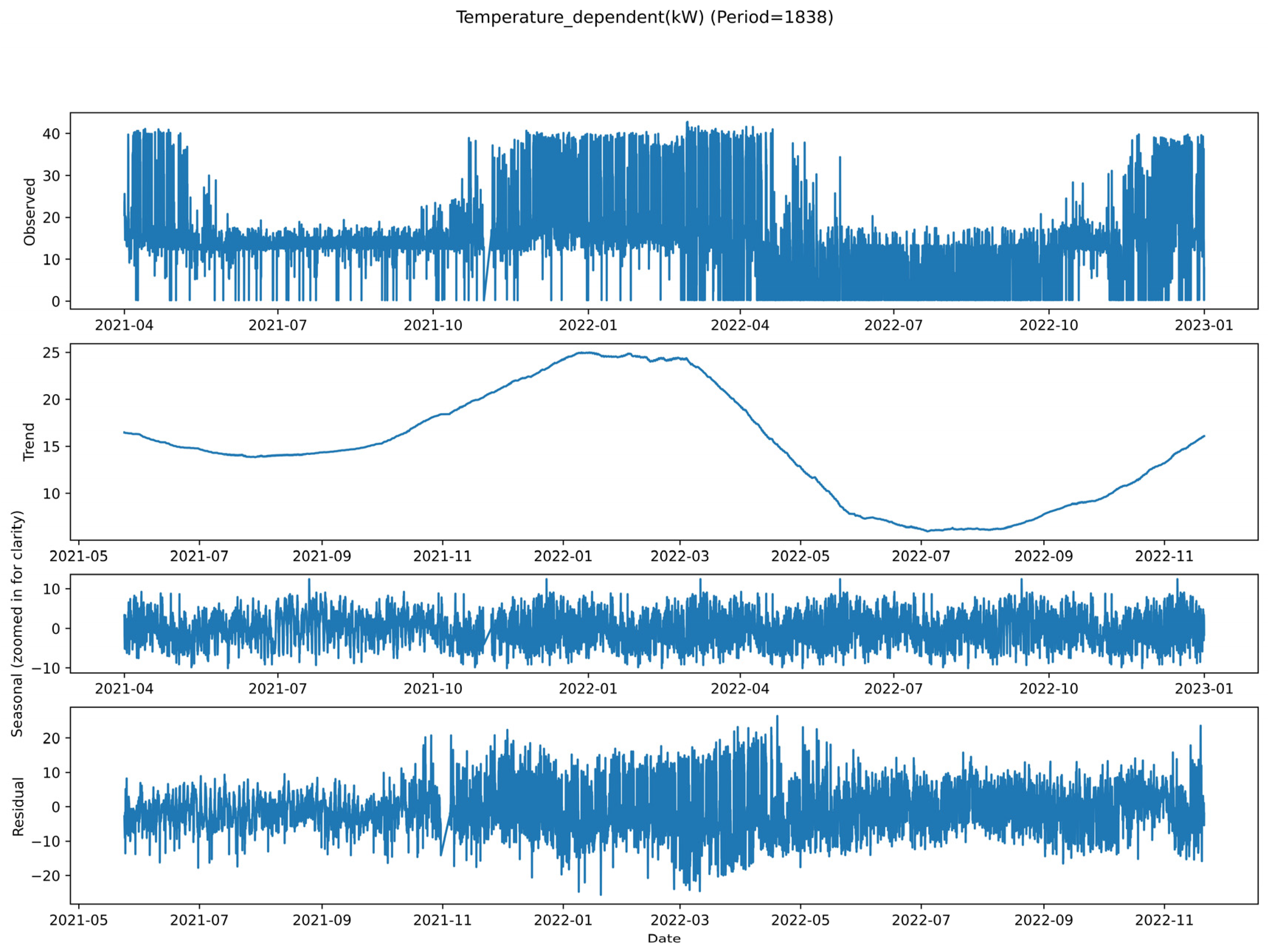

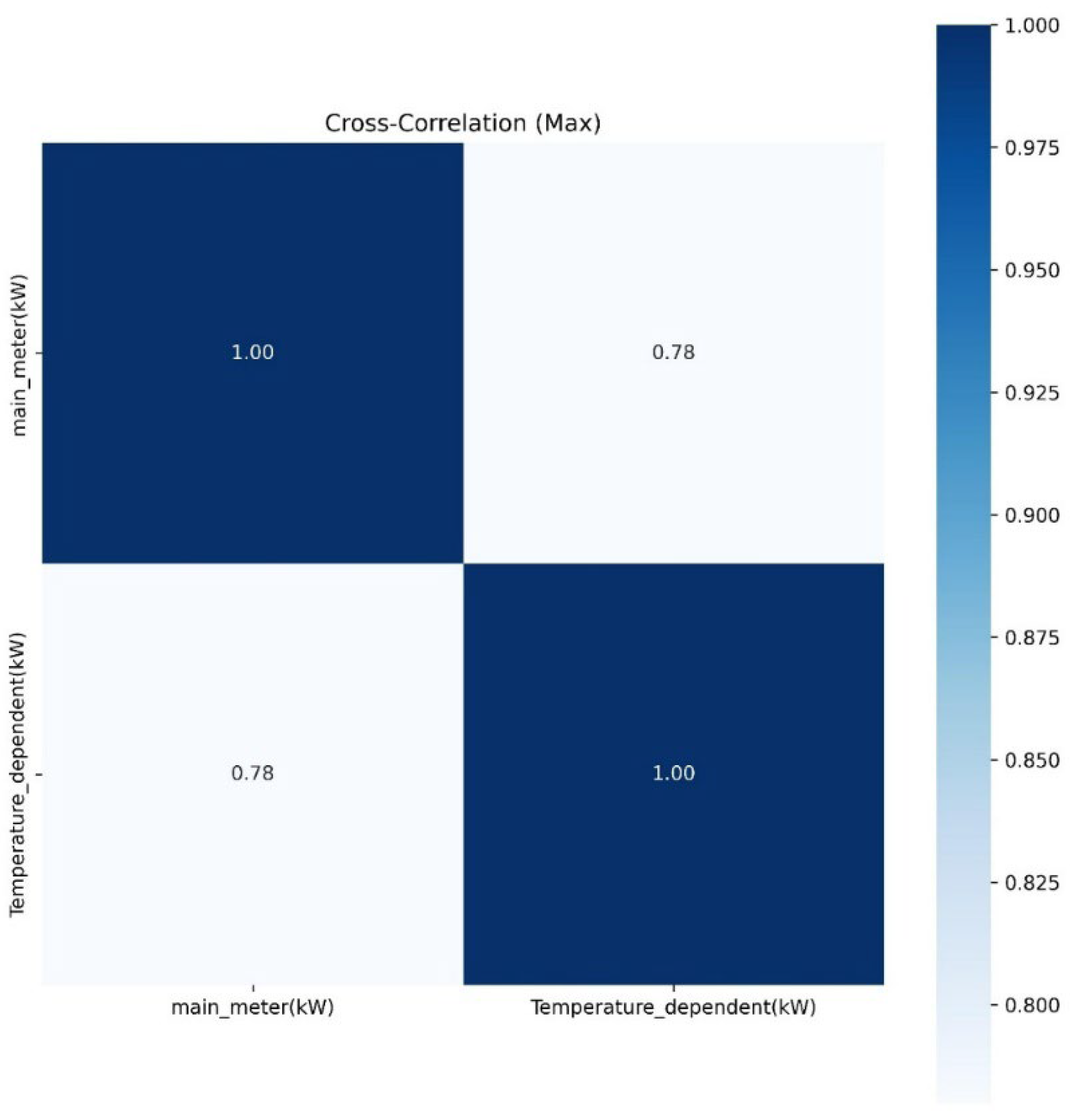

4.5. Data Quality Visualization Analysis

4.6. Impact of NaN Handling on Quality and Performance

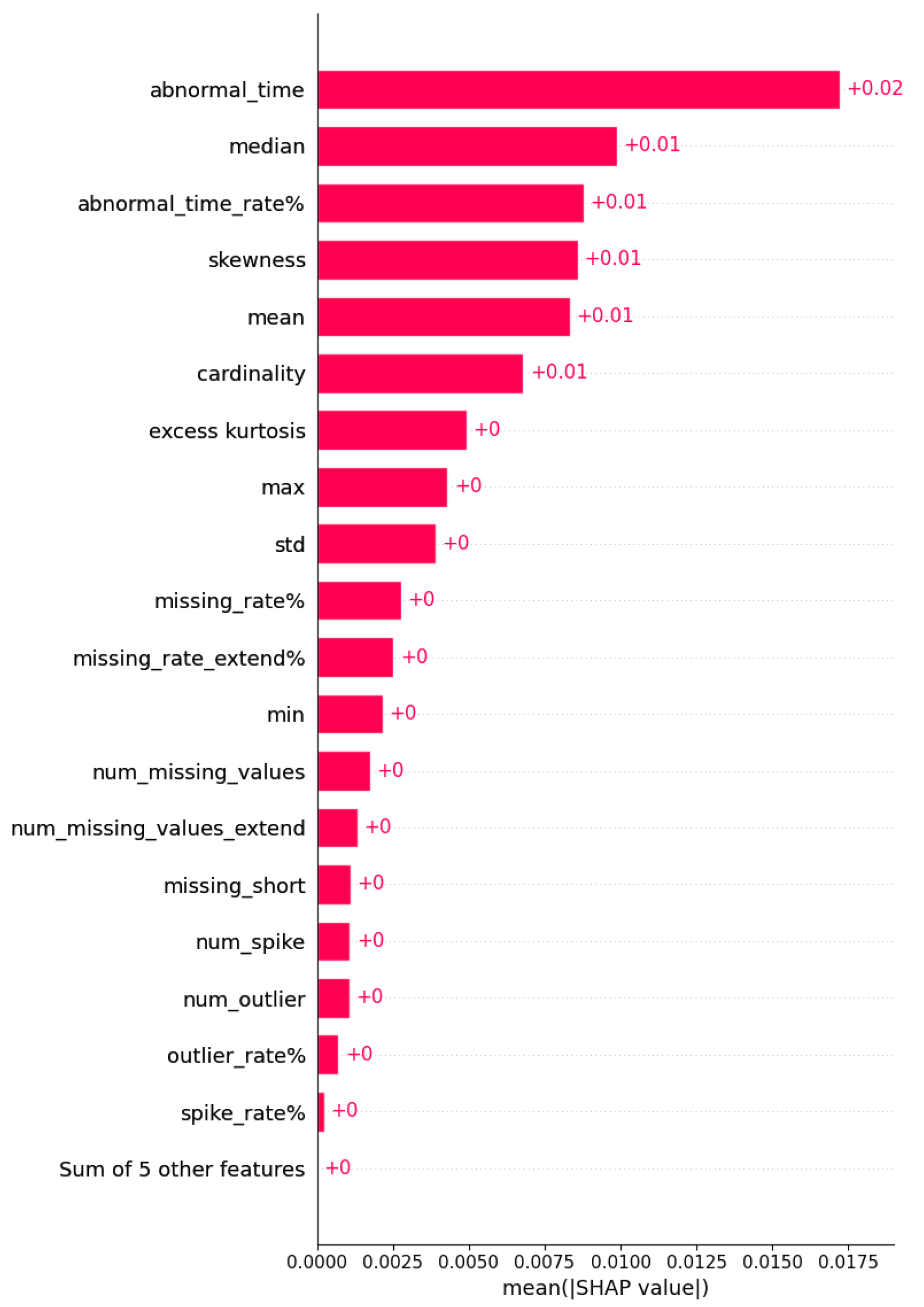

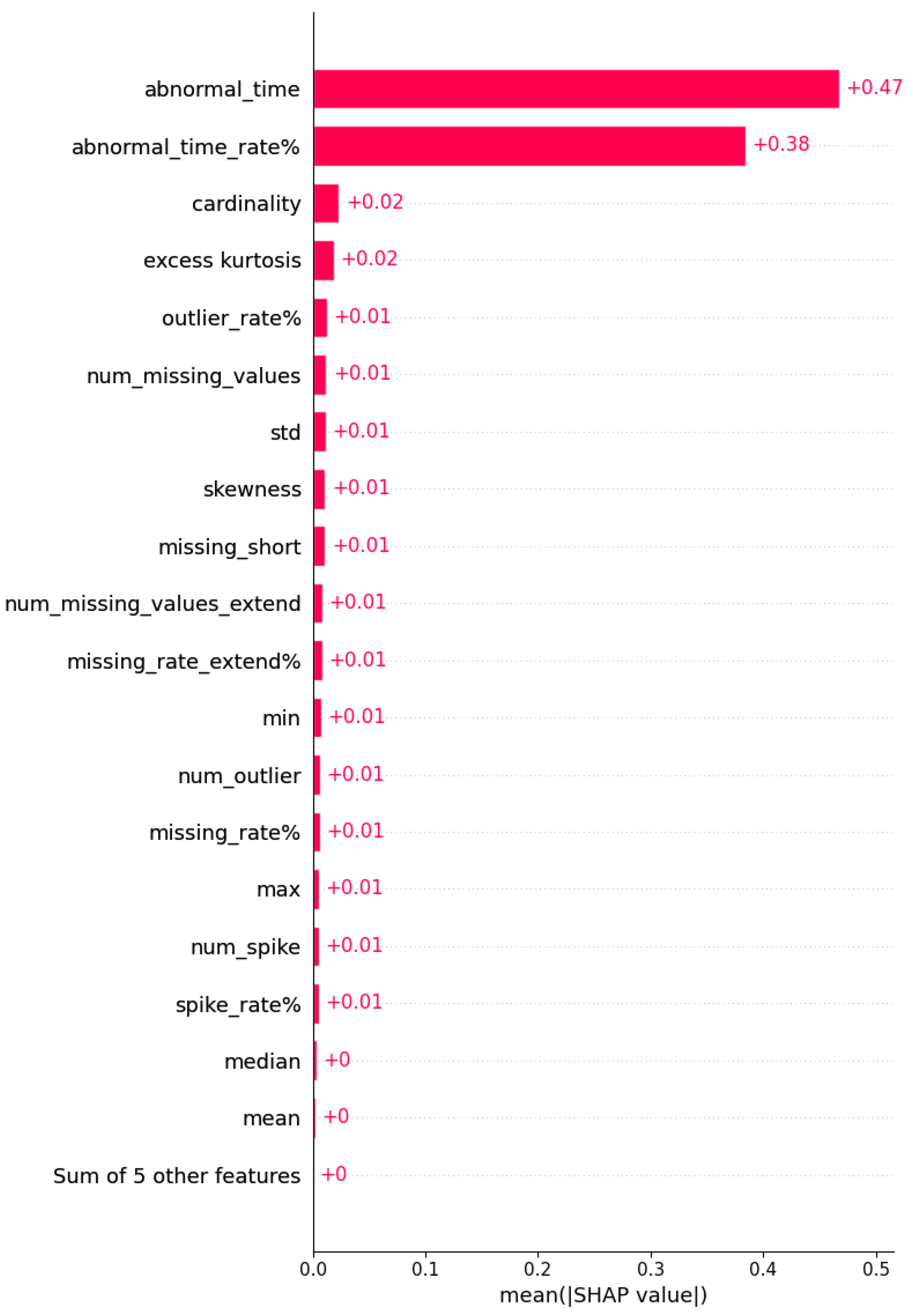

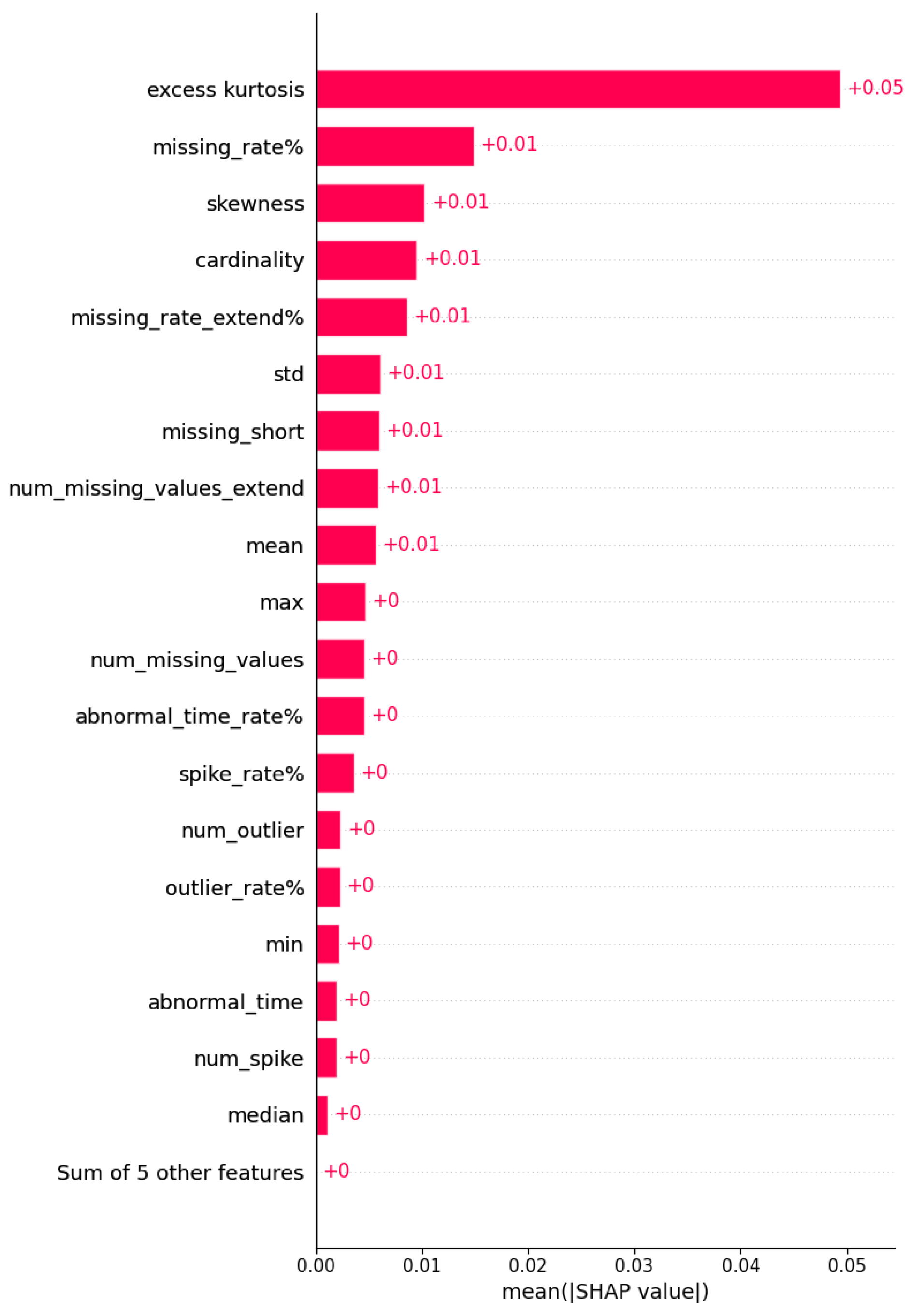

4.7. SHAP-Based Interpretation of Model Sensitivity

4.8. LLM-Based Interpretability and Diagnostics

5. Discussion

5.1. Comparative Positioning of Prior Work

5.2. Impact of Data Quality on Model Performance

5.3. Validity of the BQS Framework

5.4. Interpretability and Explainability in Quality Assessment

5.5. Cross-Domain Applicability

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| NILM | Non-Intrusive Load Monitoring |
| BQS | Building Quality Score |
| LLM | Large Language Model |
| SHAP | SHapley Additive exPlanations |
| NMAE | Normalized Mean Absolute Error |
| AMI | Advanced Metering Infrastructure |
| NILMTK | Non-Intrusive Load Monitoring Toolkit |
| SaQC | System for Automated Quality Control |
| BI-LSTM | Bidirectional Long Short-Term Memory |
| CoT | Chain-of-Thought |
| CoK | Chain-of-Knowledge |
| NaN | Not a Number (denotes missing values in datasets) |
| RNN | Recurrent Neural Network |
| CNN | Convolutional Neural Network |
| IQR | Interquartile Range |
| ASHRAE | American Society of Heating, Refrigerating and Air-Conditioning Engineers |
| BDG2 | Building Data Genome Project 2 |
| EUDP | Energy Technology Development and Demonstration Programme |
| GPT-4o | Generative Pre-trained Transformer 4 Omni |


| Model | Avg. NMAE (Before NaN Handling) | Avg. NMAE (After NaN Handling) |
|---|---|---|
| Bayesian | 0.82 | 0.76 |
| BI-LSTM | 0.71 | 0.64 |
| Time–Frequency Mask | 0.59 | 0.53 |
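The abbreviation list defines NMAE but not its formula. A common NILM convention divides the total absolute disaggregation error by the total true load, and the sketch below assumes that convention (the paper's exact normalization may differ). It also converts the averages in the table above into relative reductions.

```python
def nmae(y_true, y_pred):
    """NMAE as total absolute error over total absolute true load (assumed convention)."""
    denom = sum(abs(y) for y in y_true)
    if denom == 0:
        raise ValueError("true load sums to zero; NMAE is undefined")
    return sum(abs(p - y) for y, p in zip(y_true, y_pred)) / denom

def relative_reduction(before, after):
    """Fractional drop in error from `before` to `after`."""
    return (before - after) / before

# Average NMAE values copied from the table above: (before, after).
table = {
    "Bayesian": (0.82, 0.76),
    "BI-LSTM": (0.71, 0.64),
    "Time–Frequency Mask": (0.59, 0.53),
}
for model, (before, after) in table.items():
    print(f"{model}: {relative_reduction(before, after):.1%} lower NMAE")
```

This yields roughly 7.3% for the Bayesian model, 9.9% for BI-LSTM, and 10.2% for the time–frequency mask. These are reductions of the averages, which need not equal the average of per-building reductions.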

| Study Theme | Typical Methodology | Typical Results Reported | Primary Contribution | Relation to This Work | Impact on Performance | Impact on Interpretability |
|---|---|---|---|---|---|---|
| NILM toolkits and benchmarking | Standardized pipelines, shared datasets, reference metrics and baselines | Cross-model accuracy on multiple datasets; ablations by sampling and features | Reproducible model evaluation and baselines | Quality diagnostics are applied before benchmarking; BQS predicts the sensitivity of models to input defects | Cleaner inputs strengthen baselines and reduce variance | Limited to metric definitions and plots; little causal attribution |
| Data quality and validation for sensor time series | Profiling of gaps, spikes, timestamp integrity, and units; dataset curation workflows | Descriptive defect statistics and rule outcomes | Taxonomy of defects and practical validation procedures | BQS formalizes quality as task-aligned components and links scores to downstream error | Indirect improvement via defect reduction prior to modeling | Typically narrative; no model-agnostic attribution to error |
| Imputation for energy and sensor data | Forward fill, interpolation, seasonal naive, Kalman state-space, KNN multivariate | Reconstruction error on imputed channels; sometimes task deltas | Gap-handling strategies with cost–accuracy trade-offs | NaN handling used as a conservative baseline; protocol defined for broader comparisons | Seasonality-aware or model-based methods better preserve structure for medium gaps | Method-specific; rarely tied to model-agnostic explanations |
| Explainability in time-series models | SHAP or related feature attribution on task models or surrogates | Global and local importances; case studies of drivers | Interpretable drivers of model behavior | SHAP on a Random Forest surrogate attributes NILM error to concrete BQS metrics | Enables targeted remediation that can reduce error | High; direct mapping from metric to error contribution |
| LLM-assisted analytics and reporting | Zero-shot prompts that generate short narratives from figures and metadata | Concise figure-aligned narratives or triage labels | Human-readable reporting at scale | A lightweight LLM converts diagnostics to short narratives; outputs are treated as assistive | Indirect; accelerates triage and iteration on data fixes | Improves accessibility and traceability of diagnostics |
| This work, BQS + SHAP + LLM | Task-aligned scoring, surrogate-model attribution, narrative reporting | Correlation between BQS and NILM error; before/after effects of simple NaN handling | Unified pipeline connecting data quality to task performance with interpretable outputs | BQS predicts error sensitivity, SHAP identifies dominant defect families, and the LLM summarizes diagnostics | Demonstrated error reductions under simple remediation; extensible to stronger imputers | High; model-agnostic linkage from specific defects to expected impact |
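The table credits the attribution step to SHAP applied to a Random Forest surrogate that maps BQS metrics to NILM error. The sketch below illustrates only the underlying idea: it computes exact Shapley values by enumerating metric coalitions, with absent metrics set to a defect-free baseline. The surrogate is a hypothetical hand-written formula standing in for the Random Forest, and its coefficients are invented for illustration. For real tree ensembles, the `shap` package's `TreeExplainer` computes Shapley-based attributions efficiently, although its default handling of absent features differs from the single-baseline choice made here.

```python
from itertools import combinations
from math import factorial

def shapley_values(f, x, baseline):
    """Exact Shapley values of f at point x, relative to a single baseline point.

    A metric outside the coalition is replaced by its baseline value.
    Runtime grows as 2^n, so this only suits a handful of quality metrics.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                members = set(coalition)
                without_i = [x[j] if j in members else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                phi[i] += weight * (f(with_i) - f(without_i))
    return phi

# Hypothetical surrogate: predicted NMAE from (completeness, regularity, stability).
def surrogate_nmae(m):
    c, r, s = m
    return 0.9 - 0.4 * c - 0.2 * r - 0.1 * s + 0.2 * (1 - c) * (1 - r)

building = [0.6, 0.9, 0.95]  # quality metrics of the building being explained
perfect = [1.0, 1.0, 1.0]    # baseline: defect-free data
phi = shapley_values(surrogate_nmae, building, perfect)
for name, value in zip(["completeness", "regularity", "stability"], phi):
    print(f"{name}: {value:+.3f}")
```

Because Shapley values are additive, the three attributions (about 0.164, 0.024, and 0.005) sum to the 0.193 gap between the building's predicted error and the baseline's, and the interaction term is split equally between completeness and regularity.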

| Method | Preserves Diurnal or Weekly Pattern | Handles Long Gaps | Uses Other Sensors | Tuning Burden | Notes |
|---|---|---|---|---|---|
| Forward fill + mean fallback | No | Weak | No | None | Conservative baseline; fast and stable for short gaps |
| Linear interpolation | Partial (short gaps only) | Weak | No | Low | Can oversmooth peaks and transitions |
| Seasonal naive interpolation | Yes | Medium | No | Low | Honors seasonal structure if the seasonal period is known |
| Kalman state-space smoothing | Yes | Medium | Optional | Medium | Captures dynamics; requires simple model selection |
| KNN multivariate imputation | Partial | Medium | Yes | Medium | Leverages correlated channels; sensitive to feature scaling and the choice of k |
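Three of the lower-burden methods in the table are simple enough to sketch directly. The version below is a minimal standard-library sketch, assuming gaps are marked as NaN and that "mean fallback" means using the series mean where forward fill has no earlier value to copy (the table does not spell this out). Kalman smoothing and KNN imputation are omitted because they require model or neighbor choices the table leaves open.

```python
import math

NaN = float("nan")

def is_missing(v):
    """Treat None and float NaN as gaps."""
    return v is None or (isinstance(v, float) and math.isnan(v))

def ffill_mean_fallback(series):
    """Forward-fill gaps; leading gaps with no earlier value get the series mean (assumed)."""
    present = [v for v in series if not is_missing(v)]
    if not present:
        raise ValueError("series has no observed values")
    mean = sum(present) / len(present)
    out, last = [], None
    for v in series:
        if is_missing(v):
            out.append(last if last is not None else mean)
        else:
            last = v
            out.append(v)
    return out

def linear_interpolate(series):
    """Linearly interpolate interior gaps; gaps at either edge stay missing."""
    out = list(series)
    known = [i for i, v in enumerate(series) if not is_missing(v)]
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            frac = (i - a) / (b - a)
            out[i] = series[a] + frac * (series[b] - series[a])
    return out

def seasonal_naive(series, period):
    """Fill a gap with the value one period earlier (e.g. period=24 for hourly data
    with a daily cycle). Reading from the partially filled output lets long gaps
    chain across periods; falls back to one period later, else leaves the gap."""
    out = list(series)
    for i in range(len(out)):
        if not is_missing(out[i]):
            continue
        if i - period >= 0 and not is_missing(out[i - period]):
            out[i] = out[i - period]
        elif i + period < len(series) and not is_missing(series[i + period]):
            out[i] = series[i + period]
    return out

# Toy series with a 4-step cycle and a two-step gap inside the second cycle.
s = [1.0, 5.0, 9.0, 5.0, 1.0, NaN, NaN, 5.0]
print(ffill_mean_fallback(s))
print(linear_interpolate(s))
print(seasonal_naive(s, period=4))
```

On the toy series, forward fill holds the trough value through the gap, linear interpolation draws a straight ramp toward the next reading, and seasonal naive restores the 5, 9 peak pattern from one period earlier, which is the pattern-preservation difference the table's second column describes.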

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tolnai, B.A.; Ma, Z.; Jørgensen, B.N.; Ma, Z.G. An Automated Domain-Agnostic and Explainable Data Quality Assurance Framework for Energy Analytics and Beyond. Information 2025, 16, 836. https://doi.org/10.3390/info16100836

Tolnai BA, Ma Z, Jørgensen BN, Ma ZG. An Automated Domain-Agnostic and Explainable Data Quality Assurance Framework for Energy Analytics and Beyond. Information. 2025; 16(10):836. https://doi.org/10.3390/info16100836

Chicago/Turabian Style: Tolnai, Balázs András, Zhipeng Ma, Bo Nørregaard Jørgensen, and Zheng Grace Ma. 2025. "An Automated Domain-Agnostic and Explainable Data Quality Assurance Framework for Energy Analytics and Beyond" Information 16, no. 10: 836. https://doi.org/10.3390/info16100836

APA Style: Tolnai, B. A., Ma, Z., Jørgensen, B. N., & Ma, Z. G. (2025). An Automated Domain-Agnostic and Explainable Data Quality Assurance Framework for Energy Analytics and Beyond. Information, 16(10), 836. https://doi.org/10.3390/info16100836