Context_Driven Emotion Recognition: Integrating Multi_Cue Fusion and Attention Mechanisms for Enhanced Accuracy on the NCAER_S Dataset

Abstract

1. Introduction

- We employ YOLOv8, in place of Dlib, to extract the main actor's face and body with greater precision.

- We enhance the accuracy of the combined model by applying spatial and channel attention mechanisms after each convolution.

- We address class imbalance using focal loss, which improves the model's ability to learn from underrepresented classes.

- The context image is constructed by removing the face and body of the primary subject, encouraging the model to explore other visual elements within the scene.
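The notation defines the main actor with a simple geometric rule. The main actor's face is the detected face with the largest bounding-box area, and the main actor's body is the detected body whose box overlaps that face the most. The plain-Python sketch below illustrates this rule. It assumes the detections are already available as (x, y, w, h) boxes, for example from a YOLOv8 face/body model. The helper names (`box_area`, `intersection_area`, `select_main_actor`) are hypothetical, and the sketch is not the authors' implementation.

```python
from typing import List, Optional, Tuple

# Hypothetical box format: (x, y, w, h) = top-left corner, width, height in pixels.
Box = Tuple[float, float, float, float]


def box_area(box: Box) -> float:
    """Area w * h of a bounding box."""
    _, _, w, h = box
    return w * h


def intersection_area(a: Box, b: Box) -> float:
    """Overlap area of two axis-aligned boxes (0 when they do not intersect)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    overlap_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    overlap_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    return overlap_w * overlap_h


def select_main_actor(faces: List[Box], bodies: List[Box]) -> Tuple[Optional[int], Optional[int]]:
    """Return (face index, body index) of the main actor; None where no match exists."""
    if not faces:
        return None, None
    # Main face: the largest detected face box (argmax of its area).
    face_idx = max(range(len(faces)), key=lambda j: box_area(faces[j]))
    if not bodies:
        return face_idx, None
    # Main body: the body box with the largest intersection with the main face.
    body_idx = max(range(len(bodies)), key=lambda i: intersection_area(faces[face_idx], bodies[i]))
    if intersection_area(faces[face_idx], bodies[body_idx]) == 0:
        return face_idx, None  # no detected body overlaps the main face
    return face_idx, body_idx


faces = [(10, 10, 20, 20), (100, 40, 40, 50)]
bodies = [(0, 0, 50, 120), (90, 30, 70, 200)]
print(select_main_actor(faces, bodies))  # (1, 1)
```

Selecting the body by overlap with the chosen face, instead of by its own size, keeps the face and body streams tied to the same person when several people appear in the frame.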

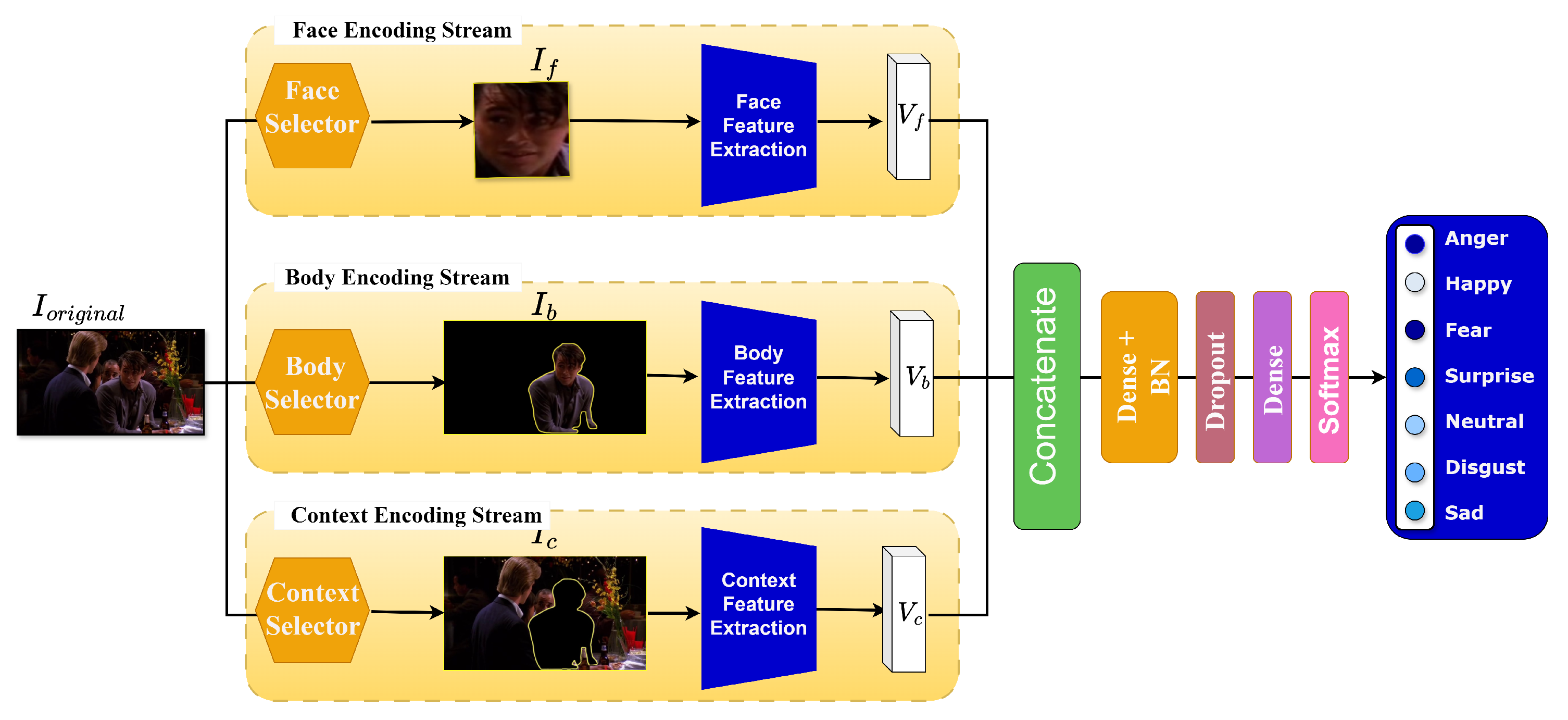

- The proposed framework leverages complementary cues from face, body, and context to address the limitations of single-modality approaches.

- Contextual understanding is enhanced by directing the model’s attention toward background objects and secondary actors in the scene.

- Emotion recognition performance is improved by isolating redundant features and forcing the network to learn from diverse visual signals.
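The context image keeps the whole scene except the main actor, so the context stream must rely on background objects and secondary actors. Given a binary segmentation mask for the main actor's body (the notation lists per-body segmentation masks), the body image and the context image can be sketched with NumPy as complementary masked copies of the input. The function name is hypothetical. Two further choices are assumptions made for illustration: removed pixels are filled with zeros, and the mask is taken to cover the face as well.

```python
import numpy as np


def split_actor_and_context(image: np.ndarray, body_mask: np.ndarray):
    """Return (body_image, context_image) for an H x W x 3 image and an H x W binary mask.

    body_mask is nonzero on the main actor's pixels (assumed to include the face) and zero
    elsewhere. Pixels outside the kept region are filled with 0 (an illustrative choice).
    """
    if image.shape[:2] != body_mask.shape:
        raise ValueError("mask must match the image height and width")
    keep = body_mask.astype(bool)[..., np.newaxis]  # H x W x 1, broadcasts over channels
    body_image = np.where(keep, image, 0).astype(image.dtype)     # main actor only
    context_image = np.where(keep, 0, image).astype(image.dtype)  # scene without the main actor
    return body_image, context_image


# Tiny 2 x 2 RGB example: the main actor occupies the top-left pixel only.
img = (np.arange(12, dtype=np.uint8) + 1).reshape(2, 2, 3)
mask = np.array([[1, 0], [0, 0]])
body, context = split_actor_and_context(img, mask)
print(body[0, 0], context[0, 0])  # [1 2 3] [0 0 0]
```

Because the two outputs are complementary, every pixel of the input appears in exactly one of them. As a result, the face and body cues cannot leak into the context stream.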

2. Related Work

2.1. Emotion Recognition

2.2. Attention Mechanisms

3. Methodology

3.1. Proposed Network Architecture

3.2. Preprocessing Pipeline

3.3. Face-Encoding Stream

3.4. Body-Encoding Stream

3.5. Context-Encoding Stream

3.6. Adaptive Fusion Networks

4. Experimental Setup

4.1. Datasets

4.2. Visualization

4.3. Implementation Details

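We use the multi-class focal loss

$$\mathrm{FL} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C}\alpha_c\, y_{i,c}\,(1-p_{i,c})^{\gamma}\log\left(p_{i,c}\right),$$

where:

- $N$ is the number of samples.

- $C$ is the number of classes.

- $p_{i,c}$ is the predicted probability for sample $i$ and class $c$.

- $y_{i,c}$ is the ground-truth label for sample $i$ and class $c$.

- $\alpha_c$ is a weighting factor that adjusts the importance of each class, and $\gamma$ is the focusing parameter, which controls the degree of emphasis placed on hard-to-classify examples. In our implementation, $\alpha_c$ is set to address the class imbalance, and $\gamma$ is set to direct the model's attention towards more difficult examples.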
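The pure-Python sketch below illustrates the focal loss whose terms are listed above. It computes the mean multi-class focal loss from softmax probabilities and one-hot labels. `alpha` is passed as per-class weights; a single scalar weight corresponds to equal entries. The `alpha` and `gamma` values in the usage line are placeholders, not the values used in this work, and a framework implementation may differ in details such as probability clipping.

```python
import math
from typing import Sequence


def focal_loss(probs: Sequence[Sequence[float]],
               labels: Sequence[Sequence[float]],
               alpha: Sequence[float],
               gamma: float,
               eps: float = 1e-7) -> float:
    """Mean multi-class focal loss over N samples.

    FL = -(1/N) * sum_i sum_c alpha_c * y_ic * (1 - p_ic)**gamma * log(p_ic)

    probs[i][c]  : predicted probability p_ic (e.g. a softmax output)
    labels[i][c] : one-hot ground truth y_ic
    alpha[c]     : per-class weight alpha_c
    gamma        : focusing parameter (gamma = 0 gives alpha-weighted cross-entropy)
    """
    n = len(probs)
    total = 0.0
    for p_row, y_row in zip(probs, labels):
        for c, (p, y) in enumerate(zip(p_row, y_row)):
            p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
            total += alpha[c] * y * (1.0 - p) ** gamma * math.log(p)
    return -total / n


# Placeholder hyperparameters, for illustration only.
print(focal_loss([[0.9, 0.1], [0.2, 0.8]], [[1, 0], [0, 1]], alpha=[0.25, 0.25], gamma=2.0))
```

With `gamma = 0` and every `alpha` entry equal to 1, the function reduces to ordinary cross-entropy. This makes it easy to verify that increasing `gamma` shrinks the contribution of confidently correct (easy) samples.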

5. Results and Discussion

Summary of Achieved Results

- Face and Body Selection: Replacing Dlib with YOLOv8m_200e improved the robustness of actor detection, yielding more accurate face localization under occlusion and complex poses. This improvement also benefited the body-extraction stage, as the body region was derived from the detected face.

- Multimodal Fusion: Combining face, body, and context features led to higher recognition accuracy than any unimodal configuration. Each modality contributed complementary information: body cues improved recognition in challenging scenarios, while context compensated for missing or ambiguous body cues.

- Attention Mechanisms: The integration of channel and spatial attention (CBAM) within the face and context streams refined feature representation. The ablation study (Table 3) showed an accuracy gain from 54.29% (without attention) to 56.42% (with attention).

- Comparison with State-of-the-Art: As shown in Table 4, the proposed model surpassed existing multimodal baselines such as MCF_NET and GLAMOR-Net. It achieved an accuracy of 56.42%, an improvement of 8.02 percentage points over the strongest GLAMOR-Net variant (ResNet-18, 48.40%).

- Per-Class Performance: The confusion matrix (Figure 12) revealed strong recognition of emotions such as Fear (85%) and Disgust (75%), but persistent confusion among visually similar categories such as Happy, Surprise, and Neutral, which we attribute largely to dataset imbalance.

- Loss Function: Employing focal loss instead of cross-entropy accelerated convergence and improved classification for minority classes.
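The comparison figures above are absolute differences in accuracy, that is, percentage points, not relative improvements. The short script below recomputes both the absolute and the relative gains of the full model over each Face + Context baseline in Table 4. It uses only the accuracies reported there.

```python
# Accuracies (%) as reported in Table 4.
BASELINES = {
    "MCF_NET": 45.59,
    "GLAMOR-Net (Original)": 46.91,
    "GLAMOR-Net (MobileNetV2)": 47.52,
    "GLAMOR-Net (ResNet-18)": 48.40,
}
OURS = 56.42  # Face + Context + Body


def accuracy_gains(ours: float, baselines: dict) -> dict:
    """Map each baseline to (absolute gain in percentage points, relative gain in %)."""
    return {name: (ours - acc, 100.0 * (ours - acc) / acc) for name, acc in baselines.items()}


for name, (pp, rel) in accuracy_gains(OURS, BASELINES).items():
    print(f"{name}: +{pp:.2f} pp ({rel:.1f}% relative)")
```

For example, the 8.02-point gain over GLAMOR-Net (ResNet-18) corresponds to a relative improvement of about 16.6%.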

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Randhavane, T.; Bhattacharya, U.; Kapsaskis, K.; Gray, K.; Bera, A.; Manocha, D. Identifying emotions from walking using affective and deep features. arXiv 2019, arXiv:1906.11884.

2. Stathopoulou, I.O.; Tsihrintzis, G.A. Emotion recognition from body movements and gestures. In Intelligent Interactive Multimedia Systems and Services, Proceedings of the 4th International Conference on Intelligent Interactive Multimedia Systems and Services (IIMSS 2011), Piraeus, Greece, 20–22 July 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 295–303.

3. Schindler, K.; Van Gool, L.; De Gelder, B. Recognizing emotions expressed by body pose: A biologically inspired neural model. Neural Netw. 2008, 21, 1238–1246.

4. Han, K.; Yu, D.; Tashev, I. Speech emotion recognition using deep neural network and extreme learning machine. In Proceedings of Interspeech 2014, Singapore, 14–18 September 2014.

5. Clavel, C.; Vasilescu, I.; Devillers, L.; Richard, G.; Ehrette, T. Fear-type emotion recognition for future audio-based surveillance systems. Speech Commun. 2008, 50, 487–503.

6. Yang, D.; Huang, S.; Xu, Z.; Li, Z.; Wang, S.; Li, M.; Wang, Y.; Liu, Y.; Yang, K.; Chen, Z.; et al. AIDE: A vision-driven multi-view, multi-modal, multi-tasking dataset for assistive driving perception. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 20459–20470.

7. Ali, M.; Mosa, A.H.; Al Machot, F.; Kyamakya, K. EEG-based emotion recognition approach for e-healthcare applications. In Proceedings of the 2016 Eighth International Conference on Ubiquitous and Future Networks (ICUFN), Vienna, Austria, 5–8 July 2016; pp. 946–950.

8. Fragopanagos, N.; Taylor, J.G. Emotion recognition in human–computer interaction. Neural Netw. 2005, 18, 389–405.

9. Fukui, K.; Yamaguchi, O. Face recognition using multi-viewpoint patterns for robot vision. In Proceedings of the Robotics Research. The Eleventh International Symposium: With 303 Figures; Springer: Berlin/Heidelberg, Germany, 2005; pp. 192–201.

10. Ma, X.; Lin, W.; Huang, D.; Dong, M.; Li, H. Facial emotion recognition. In Proceedings of the 2017 IEEE 2nd International Conference on Signal and Image Processing (ICSIP), Singapore, 4–6 August 2017; pp. 77–81.

11. Jain, D.K.; Shamsolmoali, P.; Sehdev, P. Extended deep neural network for facial emotion recognition. Pattern Recognit. Lett. 2019, 120, 69–74.

12. Wang, K.; Peng, X.; Yang, J.; Meng, D.; Qiao, Y. Region attention networks for pose and occlusion robust facial expression recognition. IEEE Trans. Image Process. 2020, 29, 4057–4069.

13. Li, Y.; Zeng, J.; Shan, S.; Chen, X. Occlusion Aware Facial Expression Recognition Using CNN with Attention Mechanism. IEEE Trans. Image Process. 2019, 28, 2439–2450.

14. Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Acted Facial Expressions in the Wild Database; ANU Computer Science Technical Report Series; TR-CS-11-02; The Australian National University: Canberra, Australia, 2011; Volume 2.

15. Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three machine learning contests. In Neural Information Processing: 20th International Conference, ICONIP 2013, Daegu, Korea, 3–7 November 2013. Proceedings, Part III 20; Springer: Berlin/Heidelberg, Germany, 2013; pp. 117–124.

16. Piana, S.; Staglianò, A.; Odone, F.; Verri, A.; Camurri, A. Real-time Automatic Emotion Recognition from Body Gestures. arXiv 2014, arXiv:1402.5047.

17. Lee, J.; Kim, S.; Kim, S.; Park, J.; Sohn, K. Context-aware emotion recognition networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10143–10152.

18. Costa, W.L.; Macêdo, D.; Zanchettin, C.; Figueiredo, L.S.; Teichrieb, V. Multi-Cue Adaptive Emotion Recognition Network. arXiv 2021, arXiv:2111.02273.

19. Wang, Z.; Lao, L.; Zhang, X.; Li, Y.; Zhang, T.; Cui, Z. Context-dependent emotion recognition. J. Vis. Commun. Image Represent. 2022, 89, 103679.

20. Meng, D.; Peng, X.; Wang, K.; Qiao, Y. Frame attention networks for facial expression recognition in videos. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3866–3870.

21. Georgescu, M.I.; Ionescu, R.T.; Popescu, M. Local learning with deep and handcrafted features for facial expression recognition. IEEE Access 2019, 7, 64827–64836.

22. Garber-Barron, M.; Si, M. Using body movement and posture for emotion detection in non-acted scenarios. In Proceedings of the 2012 IEEE International Conference on Fuzzy Systems, Brisbane, Australia, 10–15 June 2012; pp. 1–8.

23. Ahmed, F.; Bari, A.H.; Gavrilova, M.L. Emotion recognition from body movement. IEEE Access 2019, 8, 11761–11781.

24. Luna-Jiménez, C.; Griol, D.; Callejas, Z.; Kleinlein, R.; Montero, J.M.; Fernández-Martínez, F. Multimodal emotion recognition on RAVDESS dataset using transfer learning. Sensors 2021, 21, 7665.

25. Caridakis, G.; Castellano, G.; Kessous, L.; Raouzaiou, A.; Malatesta, L.; Asteriadis, S.; Karpouzis, K. Multimodal emotion recognition from expressive faces, body gestures and speech. In Artificial Intelligence and Innovations 2007: From Theory to Applications, Proceedings of the 4th IFIP International Conference on Artificial Intelligence Applications and Innovations (AIAI 2007), Athens, Greece, 19–21 September 2007; Springer: New York, NY, USA, 2007; pp. 375–388.

26. Kosti, R.; Alvarez, J.M.; Recasens, A.; Lapedriza, A. Emotion recognition in context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1667–1675.

27. Kosti, R.; Alvarez, J.M.; Recasens, A.; Lapedriza, A. Context based emotion recognition using EMOTIC dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2755–2766.

28. Yang, D.; Huang, S.; Wang, S.; Liu, Y.; Zhai, P.; Su, L.; Li, M.; Zhang, L. Emotion recognition for multiple context awareness. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 144–162.

29. Le, N.; Nguyen, K.; Nguyen, A.; Le, B. Global-local attention for emotion recognition. Neural Comput. Appl. 2022, 34, 21625–21639.

30. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

31. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30.

32. Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on visual transformer. arXiv 2020, arXiv:2012.12556.

33. Bello, I.; Zoph, B.; Vaswani, A.; Shlens, J.; Le, Q.V. Attention augmented convolutional networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3286–3295.

34. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-excite: Exploiting feature context in convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2018; Volume 31.

35. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154.

36. Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. CCNet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 603–612.

37. Aminbeidokhti, M.; Pedersoli, M.; Cardinal, P.; Granger, E. Emotion Recognition with Spatial Attention and Temporal Softmax Pooling. In Proceedings of the Image Analysis and Recognition, Waterloo, ON, Canada, 27–29 August 2019; pp. 323–331.

38. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

39. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

40. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 14 January 2024).

41. Ramchoun, H.; Ghanou, Y.; Ettaouil, M.; Janati Idrissi, M.A. Multilayer perceptron: Architecture optimization and training. Int. J. Interact. Multimed. Artif. Intell. 2016, 4, 26–30.

42. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

43. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

44. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

45. King, D.E. Dlib-ml: A machine learning toolkit. J. Mach. Learn. Res. 2009, 10, 1755–1758.

46. Dhall, A.; Goecke, R.; Joshi, J.; Hoey, J.; Gedeon, T. EmotiW 2016: Video and group-level emotion recognition challenges. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 427–432.

47. Do, N.T.; Kim, S.H.; Yang, H.J.; Lee, G.S.; Yeom, S. Context-aware emotion recognition in the wild using spatio-temporal and temporal-pyramid models. Sensors 2021, 21, 2344.

48. Xu, H.; Kong, J.; Kong, X.; Li, J.; Wang, J. MCF-Net: Fusion Network of Facial and Scene Features for Expression Recognition in the Wild. Appl. Sci. 2022, 12, 10251.

| Notation | Meaning |
|---|---|
| N | Number of detected faces in an image. |
|  | Bounding box of the j-th detected face, defined by its top-left corner, width, and height. |
|  | Width and height of the j-th bounding box. |
|  | Area of the j-th face bounding box. |
|  | Index of the face with the maximum bounding-box area. |
|  | Bounding box of the main actor's face (the largest detected face). |
|  | Original input image from the dataset. |
|  | Cropped face region of the main actor extracted from the original input image. |
|  | Bounding box of the i-th detected body. |
|  | Bounding box of the main actor's body (the body box with the largest overlap with the main actor's face box). |
|  | Intersection area between the main actor's face bounding box and a body bounding box. |
|  | Binary segmentation mask of the i-th detected body. |
|  | Segmentation mask corresponding to the main actor's body. |
|  | Body image of the main actor. |
|  | Context image obtained by masking out the main actor's body. |
| F | Input feature map of the attention module. |
|  | Channel attention map. |
|  | Refined feature map after applying channel attention. |
|  | Spatial attention map. |
|  | Final refined feature map after applying spatial attention to the channel-refined feature map. |
|  | Feature vector extracted from the face-encoding stream, with components indexed by k. |
|  | Feature vector extracted from the body-encoding stream, with components indexed by k. |
|  | Feature vector extracted from the context-encoding stream, with components indexed by k. |
|  | Concatenation of the face, body, and context feature vectors, used for fusion. |
|  | Dimensions of the face, body, and context feature vectors. |
|  | Sigmoid activation function used in channel and spatial attention. |
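The attention-module rows of the notation (input map F, channel attention map, channel-refined map, spatial attention map, and final refined map) follow CBAM (Woo et al. [39]). The NumPy forward-pass sketch below shows one CBAM block under several assumptions. The feature map is channel-first (C, H, W). The channel branch uses a shared two-layer MLP with reduction ratio r, and the spatial branch uses a single 7 × 7 convolution as in the CBAM paper, with no bias terms. The weights are random placeholders, not trained parameters. A framework implementation would replace the explicit loops with built-in pooling and convolution layers.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(f: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """M_c(F) = sigmoid(MLP(AvgPool(F)) + MLP(MaxPool(F))), returned with shape (C, 1, 1).

    f: (C, H, W); w1: (C // r, C) and w2: (C, C // r) are the shared MLP weights (ReLU between).
    """
    def mlp(v: np.ndarray) -> np.ndarray:
        return w2 @ np.maximum(w1 @ v, 0.0)

    avg = f.mean(axis=(1, 2))  # global average pooling per channel -> (C,)
    mx = f.max(axis=(1, 2))    # global max pooling per channel -> (C,)
    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]


def spatial_attention(f: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """M_s(F) = sigmoid(conv([AvgPool_c(F); MaxPool_c(F)])), returned with shape (1, H, W).

    kernel: (2, k, k) weights of a single-output convolution (k odd), zero 'same' padding.
    """
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])  # pool across channels -> (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    h, w = pooled.shape[1:]
    out = np.empty((h, w))
    for y in range(h):
        for x in range(w):
            # Cross-correlation, as computed by deep-learning convolution layers.
            out[y, x] = np.sum(padded[:, y:y + k, x:x + k] * kernel)
    return sigmoid(out)[None, :, :]


def cbam(f, w1, w2, kernel):
    """One CBAM block: F' = M_c(F) * F, then F'' = M_s(F') * F' (element-wise, broadcast)."""
    f_prime = channel_attention(f, w1, w2) * f
    return spatial_attention(f_prime, kernel) * f_prime


rng = np.random.default_rng(0)
C, H, W, r = 8, 5, 6, 2
F = rng.standard_normal((C, H, W))
W1 = 0.1 * rng.standard_normal((C // r, C))
W2 = 0.1 * rng.standard_normal((C, C // r))
K = 0.1 * rng.standard_normal((2, 7, 7))
print(cbam(F, W1, W2, K).shape)  # (8, 5, 6)
```

Both attention maps lie in (0, 1) and only rescale the input, so the output keeps the shape of F. With all weights set to zero, each map equals 0.5 and the block simply scales F by 0.25.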

| References | Authors | Problems | Solving Methods |
|---|---|---|---|
| [22] | Garber-Barron and Si | Limited emotion recognition when using only facial expressions. | Used posture and body-movement features to detect four emotions (triumph, frustration, defeat, and concentration). |
| [23] | Ahmed et al. | Difficulty of selecting relevant features from a large set of body-movement descriptors. | A two-layer method to extract emotion-specific body features. |
| [24,25] | Luna-Jiménez et al.; Caridakis et al. | Facial cues alone are insufficient for robust recognition of multiple emotions. | (1) Combined speech and facial cues using a late-fusion strategy. (2) Fused facial expressions, body movements, gestures, and speech to improve recognition. |
| [17,18,26,27] | Kosti et al.; Lee et al.; Costa et al. | Lack of datasets with contextual information from real-world scenarios. | (1) Created the EMOTIC dataset and extracted features from both the person and the context for improved recognition. (2) Proposed the CAER benchmark and CAER-Net, integrating facial and contextual features with attention to non-facial elements. (3) Introduced a multimodal approach combining face, context, and body-pose cues. |
| [28,29] | Yang et al.; Le et al. | Context in emotion recognition is inherently vast and multifaceted, making it difficult to model consistently across different environments and scenarios. | (1) Defined four context types (multimodal, scene, surrounding agent, and agent-object) and used attention modules. (2) Proposed global-local attention to extract facial and contextual features separately and then learn them jointly. |

| Method | Body & Face Selector Algorithm (YOLOv8) | Body Enc. (Ch + Sp Att.)¹ | Context Enc. (Ch + Sp Att.)² | Face Enc. (Ch + Sp Att.)³ | Acc (%) |
|---|---|---|---|---|---|
| Our method | ✓ | x | x | x | 54.28 |
|  | ✓ | x | x | ✓ | 55.92 |
|  | ✓ | x | ✓ | ✓ | 56.62 |

| Methods | Accuracy (%) |
|---|---|
| MCF_NET [48] (Face + Context) | 45.59 |
| GLAMOR-Net (Original) [29] (Face + Context) | 46.91 |
| GLAMOR-Net (MobileNetV2) [29] (Face + Context) | 47.52 |
| GLAMOR-Net (ResNet-18) [29] (Face + Context) | 48.40 |
| Our Method (Body + Context) | 49.89 |
| Our Method (Face + Context + Body) | 56.42 |

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Elkorchi, M.; Hdioud, B.; Oulad Haj Thami, R.; Merzouk, S. Context_Driven Emotion Recognition: Integrating Multi_Cue Fusion and Attention Mechanisms for Enhanced Accuracy on the NCAER_S Dataset. Information 2025, 16, 834. https://doi.org/10.3390/info16100834

Elkorchi M, Hdioud B, Oulad Haj Thami R, Merzouk S. Context_Driven Emotion Recognition: Integrating Multi_Cue Fusion and Attention Mechanisms for Enhanced Accuracy on the NCAER_S Dataset. Information. 2025; 16(10):834. https://doi.org/10.3390/info16100834

Chicago/Turabian Style: Elkorchi, Merieme, Boutaina Hdioud, Rachid Oulad Haj Thami, and Safae Merzouk. 2025. "Context_Driven Emotion Recognition: Integrating Multi_Cue Fusion and Attention Mechanisms for Enhanced Accuracy on the NCAER_S Dataset" Information 16, no. 10: 834. https://doi.org/10.3390/info16100834

APA Style: Elkorchi, M., Hdioud, B., Oulad Haj Thami, R., & Merzouk, S. (2025). Context_Driven Emotion Recognition: Integrating Multi_Cue Fusion and Attention Mechanisms for Enhanced Accuracy on the NCAER_S Dataset. Information, 16(10), 834. https://doi.org/10.3390/info16100834