4. Results

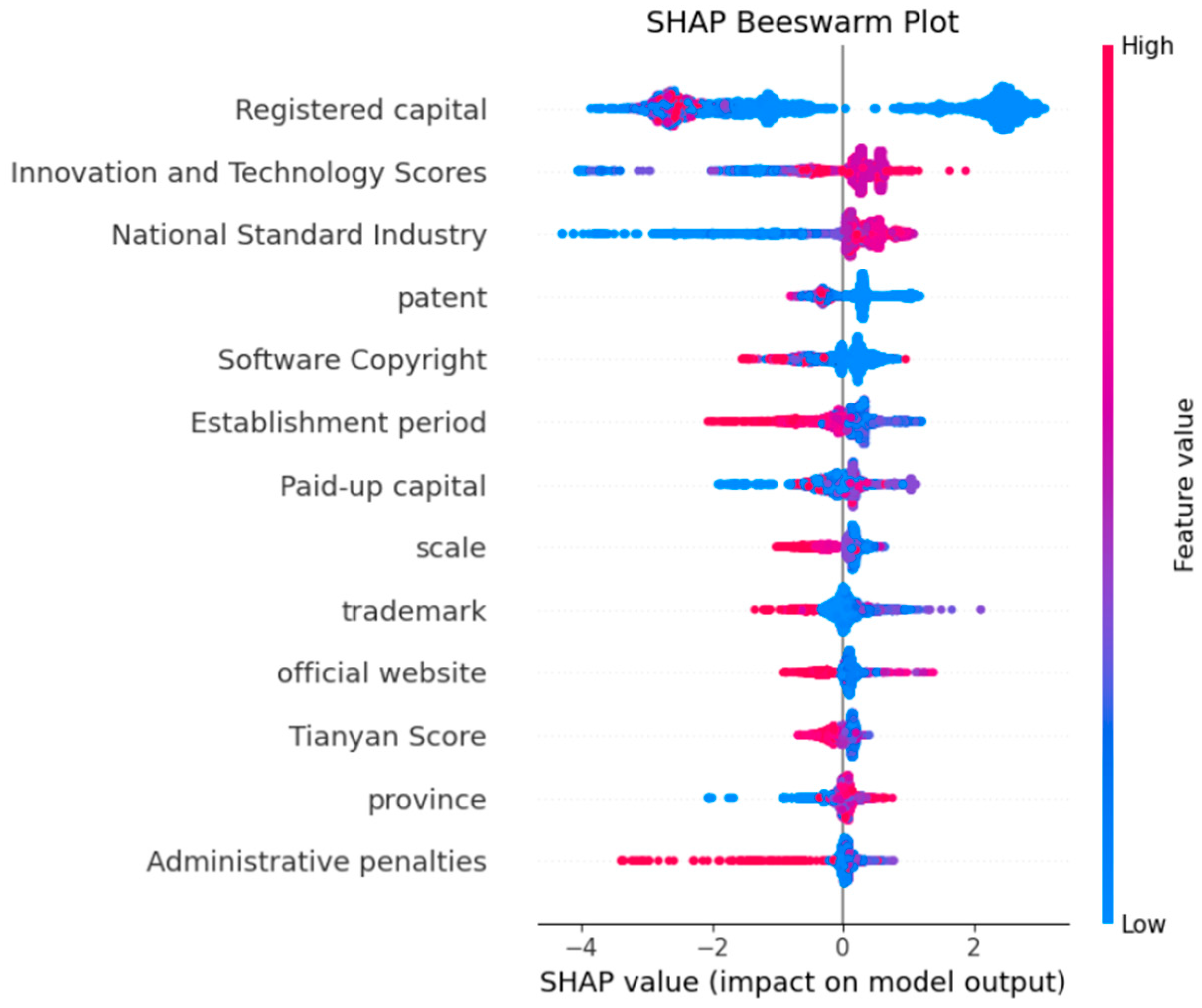

4.2. Identification of Key Risk Characteristics

The features used in this study capture several dimensions of foreign trade enterprises: financial strength, operational stability, compliance behavior, and innovation capacity. Capital-related variables, such as registered and paid-up capital, indicate an enterprise's economic base, while operational features, such as establishment period, business years, enterprise scale, and whether the enterprise has an official website, reflect its maturity and organizational stability. Compliance-related attributes, including taxpayer qualifications and administrative penalties, capture the degree of regulatory adherence, which is especially relevant in foreign trade, where risks often arise from misreporting or tax evasion. Innovation-oriented indicators, such as the numbers of patents, trademarks, and software copyrights and the innovation and technology scores, reflect a company's long-term competitiveness and sustainability. Complementary information, such as industry classification, province, and third-party scores, places enterprise credibility in its external and sectoral context. Together, these features provide a broad basis for evaluating enterprise credit risk beyond traditional financial indicators.

First, SHAP is applied to the three models (XGBoost, LightGBM, and RF) to obtain the SHAP values of each feature and the resulting importance ranking.

Table 3 presents the mean absolute SHAP values for the three models.
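To make the quantity in Table 3 concrete, the following is a minimal brute-force sketch of Shapley values and their per-feature mean absolute value. It assumes a baseline-replacement value function (features outside a coalition take a fixed baseline value) and uses a hypothetical three-feature `toy_model`; its cost is exponential in the number of features, so it only illustrates the definition. For tree ensembles such as XGBoost, LightGBM, and RF, the `shap` library's `TreeExplainer` computes SHAP values efficiently.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance by enumerating all coalitions.

    Assumed value function: features in the coalition keep x's values,
    all other features are replaced by the baseline's values.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def mean_abs_shap(predict, X, baseline):
    """Mean absolute Shapley value of each feature over the rows of X."""
    rows = [shapley_values(predict, x, baseline) for x in X]
    return [sum(abs(r[j]) for r in rows) / len(rows) for j in range(len(X[0]))]


# Hypothetical toy model with one interaction term (weights are arbitrary).
def toy_model(z):
    return 2.0 * z[0] - 1.0 * z[1] + 0.5 * z[0] * z[2]


X = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0], [2.0, 2.0, 0.0]]
baseline = [0.0, 0.0, 0.0]
print(mean_abs_shap(toy_model, X, baseline))
```

By construction, the values for each row sum to `toy_model(x) - toy_model(baseline)` (the efficiency property), which is a convenient sanity check.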

Although the specific SHAP values differ among the XGBoost, LightGBM, and RF models, several features rank highly in all three, highlighting their importance for evaluating the trade credit risk of foreign trade enterprises. Registered capital ranks first in all three models, indicating its strong influence on credit risk assessment. National standard industry classification and patents follow closely, both appearing in the top four. Business years and taxpayer qualifications rank lower, suggesting that these features contribute comparatively little to the evaluation of trade credit risk for foreign trade enterprises.
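Whether a feature ranks highly across all models can be checked mechanically. The helper below is a hypothetical sketch: it ranks features within each model by mean absolute SHAP value and keeps those that fall within the top k for every model. The scores in the example are placeholders, not the values in Table 3.

```python
def consensus_top_k(importances_by_model, k):
    """Features ranked in the top k by every model, ordered by mean rank.

    importances_by_model maps a model name to {feature: mean |SHAP| value}.
    """
    ranks = {}
    for scores in importances_by_model.values():
        ordered = sorted(scores, key=scores.get, reverse=True)  # most important first
        for position, feature in enumerate(ordered, start=1):
            ranks.setdefault(feature, []).append(position)
    n_models = len(importances_by_model)
    shared = [f for f, r in ranks.items() if len(r) == n_models and max(r) <= k]
    return sorted(shared, key=lambda f: sum(ranks[f]) / n_models)


# Placeholder scores for illustration only.
example = {
    "XGBoost": {"a": 0.9, "b": 0.5, "c": 0.4, "d": 0.1},
    "LightGBM": {"a": 0.8, "b": 0.3, "c": 0.6, "d": 0.2},
    "RF": {"a": 0.7, "b": 0.6, "c": 0.1, "d": 0.2},
}
print(consensus_top_k(example, k=3))
```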

Second, LIME is applied to the same models to obtain the LIME values of each feature and the corresponding importance ranking. LIME values are computed for 100 samples in turn, and their mean absolute value is taken as the overall influence of each feature.
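The procedure can be illustrated with a simplified LIME-style sketch in plain Python: perturb an instance with Gaussian noise, weight each perturbed point with an exponential kernel on its distance to the instance, fit a weighted linear surrogate, and average the absolute coefficients over the explained samples. This is a stand-in under stated assumptions, not the `lime` package, which by default discretizes continuous features and draws perturbations from training-data statistics; the noise scale and kernel width here are arbitrary choices, and `linear_model` is hypothetical.

```python
import math
import random


def solve(A, b):
    """Solve A x = b with Gaussian elimination and partial pivoting."""
    n = len(A)
    M = [list(A[i]) + [b[i]] for i in range(n)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def local_surrogate(predict, x, n_samples=500, noise=1.0, kernel_width=2.0, seed=0):
    """Coefficients of a kernel-weighted linear fit to predict() around x."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, noise) for xi in x]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        rows.append([1.0] + z)  # leading 1.0 is the intercept column
        targets.append(predict(z))
        weights.append(math.exp(-dist2 / kernel_width ** 2))
    p = len(x) + 1
    # Weighted least squares normal equations: (Z^T W Z) beta = Z^T W y
    A = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(p)]
         for i in range(p)]
    b = [sum(w * r[i] * t for r, w, t in zip(rows, weights, targets)) for i in range(p)]
    return solve(A, b)[1:]  # drop the intercept


def mean_abs_lime(predict, X, **kwargs):
    """Mean absolute surrogate coefficient of each feature over the rows of X."""
    coefs = [local_surrogate(predict, x, **kwargs) for x in X]
    return [sum(abs(c[j]) for c in coefs) / len(coefs) for j in range(len(X[0]))]


def linear_model(z):  # hypothetical model used only to check the sketch
    return 1.0 + 2.0 * z[0] - 3.0 * z[1]


print(mean_abs_lime(linear_model, [[0.0, 0.0], [1.0, 1.0]]))
```

Because the toy model is itself linear, the surrogate recovers its coefficients (about 2 and -3) at every instance; for a nonlinear model the coefficients vary from instance to instance, which is why averaging over many samples is needed.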

Table 4 displays the mean absolute LIME values for the three models.

As Table 4 shows, the LIME feature importance values differ markedly from the SHAP feature importance values for the XGBoost, LightGBM, and RF models. This discrepancy arises mainly because LIME explains each prediction with a simplified linear surrogate fitted locally around the instance, so its weights are not on the same scale as SHAP attributions of the original model. In these results, the feature importance values computed by LIME are generally lower.
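A simple case shows why the two scores need not agree in magnitude. Assume, purely for illustration, a linear model with independent features and a LIME surrogate fitted on the raw (non-discretized) features. The SHAP value of feature $j$ then scales with how far $x_j$ lies from its mean, whereas the surrogate recovers the coefficient itself:

$$
f(\mathbf{x}) = \beta_0 + \sum_{j=1}^{p} \beta_j x_j, \qquad
\phi_j(\mathbf{x}) = \beta_j \left( x_j - \mathbb{E}[x_j] \right), \qquad
w_j^{\mathrm{LIME}}(\mathbf{x}) = \beta_j .
$$

Averaging absolute values over $N$ explained samples gives

$$
\frac{1}{N}\sum_{i=1}^{N} \left| \phi_j(\mathbf{x}_i) \right|
= |\beta_j| \cdot \frac{1}{N}\sum_{i=1}^{N} \left| x_{ij} - \mathbb{E}[x_j] \right|,
\qquad
\frac{1}{N}\sum_{i=1}^{N} \left| w_j^{\mathrm{LIME}}(\mathbf{x}_i) \right| = |\beta_j| .
$$

The ratio between the two depends on the spread and units of each feature, so the scores sit on different scales and can even order features differently; this toy case alone does not determine which score is larger for the tree models.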

Finally, the SHAP-based and LIME-based importance scores of each feature (weighted_shap and weighted_lime) are combined by weighting to obtain a new ranking of feature contributions, as shown in Table 5. The features are then sorted by this combined score. The purpose of the ranking is to identify the features that contribute most when both explanations are considered together, so that they can be carried into the next round of model development.
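A weighted combination of this kind can be sketched as follows. The blending weight `alpha` and the sum-to-one normalization are assumptions made for illustration, since the excerpt does not state how weighted_shap and weighted_lime are scaled or weighted; normalizing first keeps the larger-scale SHAP scores from dominating the blend.

```python
def combined_ranking(shap_scores, lime_scores, alpha=0.5):
    """Rank features by alpha * SHAP share + (1 - alpha) * LIME share.

    Each input maps feature -> mean absolute importance; alpha is a hypothetical
    blending weight. Scores are rescaled to sum to 1 within each method first.
    """
    def normalize(scores):
        total = sum(scores.values())
        return {f: v / total for f, v in scores.items()}

    shap_s, lime_s = normalize(shap_scores), normalize(lime_scores)
    combined = {f: alpha * shap_s[f] + (1 - alpha) * lime_s[f] for f in shap_s if f in lime_s}
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)


# Placeholder importance scores, not the values in Tables 3 and 4.
shap_scores = {"a": 2.0, "b": 1.0, "c": 1.0}
lime_scores = {"a": 0.1, "b": 0.3, "c": 0.1}
for feature, score in combined_ranking(shap_scores, lime_scores):
    print(feature, round(score, 3))
```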

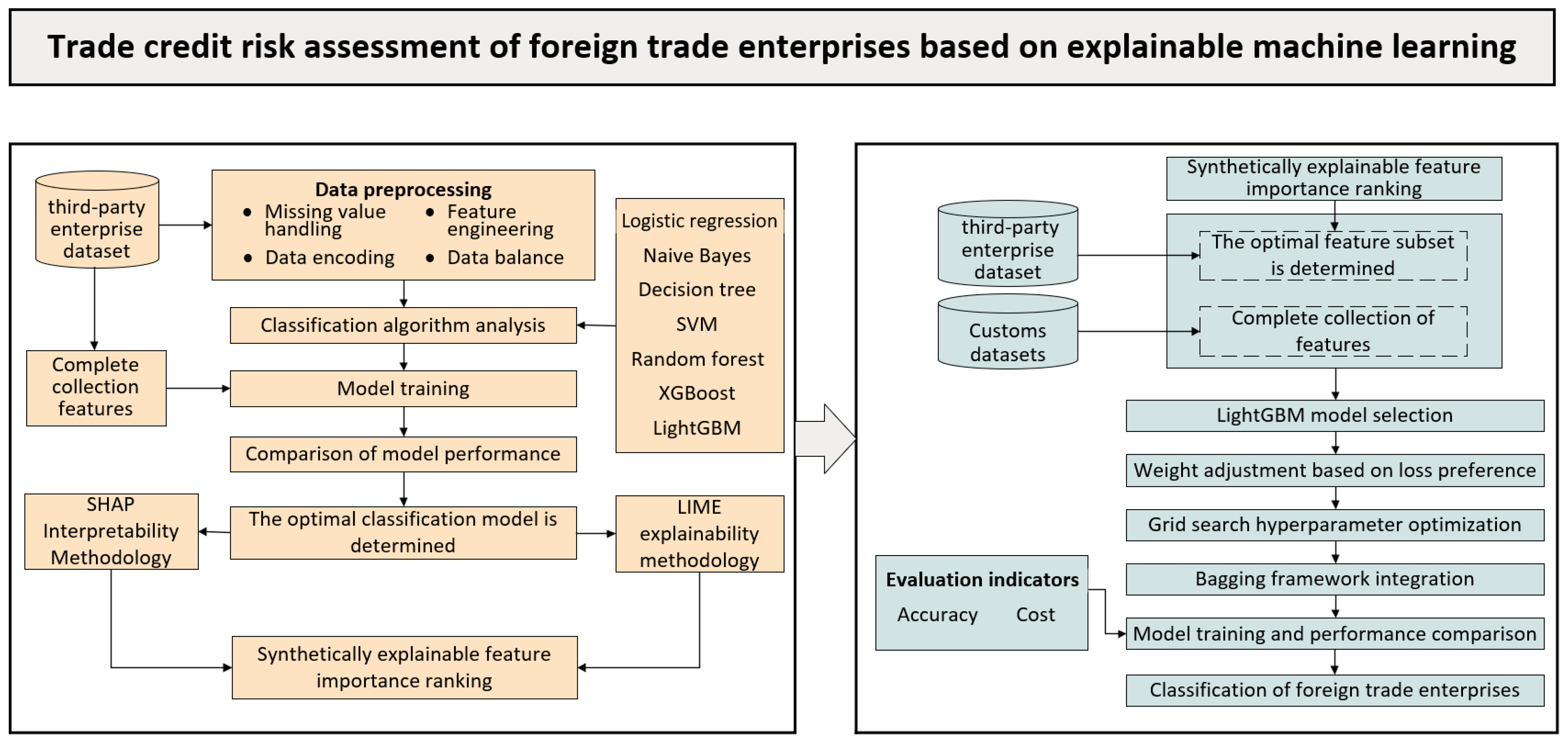

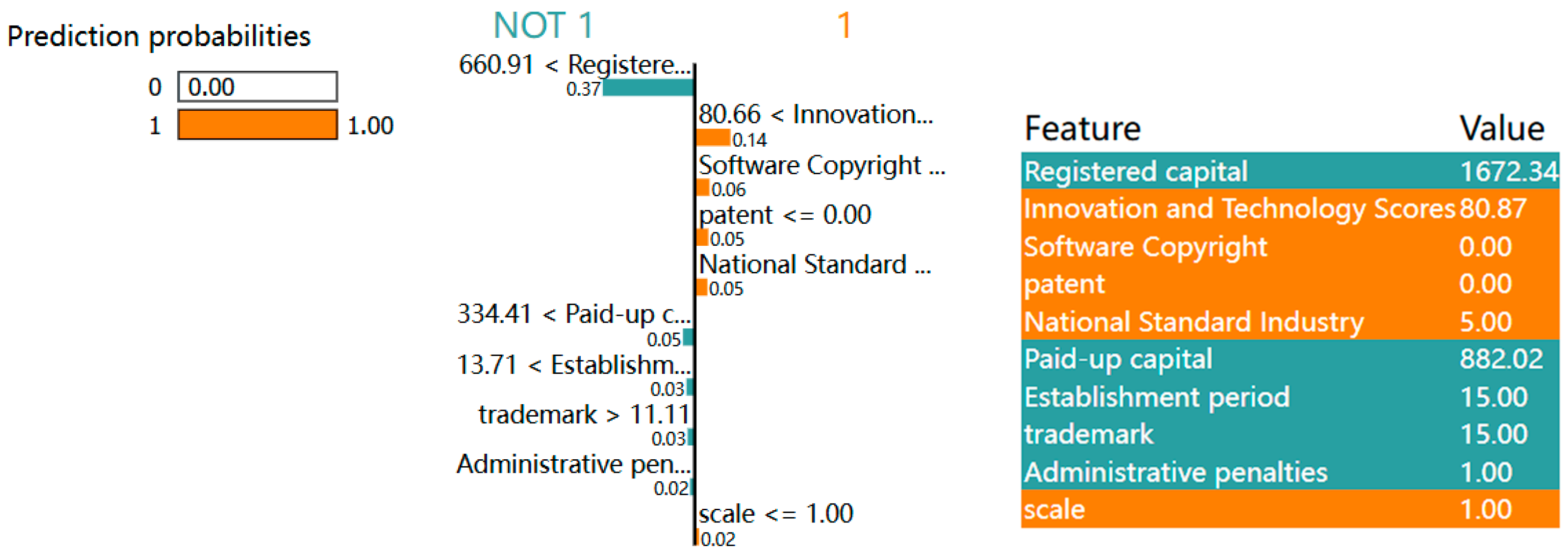

4.3. Analysis of Trade Credit Risk Assessment

Considering its computational efficiency, scalability, and ability to handle large datasets, this study selects LightGBM as the base model for further optimization and integration with interpretable methods. LightGBM is run with GBDT as its boosting type, so decision trees serve as the base classifiers of the ensemble. All algorithms use the same feature subset, and grid search is used for parameter tuning. From the original dataset, 80% of the data is randomly selected as the training set, and the remaining 20% serves as the test set for evaluating model performance. In addition to the third-party enterprise dataset, we use the official dataset to demonstrate the model's applicability.
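The evaluation protocol, a random 80/20 split plus an exhaustive grid search, can be sketched in plain Python. The parameter names `num_leaves` and `learning_rate` are genuine LightGBM parameters, but the grid values and the `toy_score` function are hypothetical placeholders for training LightGBM and scoring it.

```python
import itertools
import random


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Randomly hold out test_fraction of the rows (80/20 by default)."""
    rng = random.Random(seed)
    indices = list(range(len(rows)))
    rng.shuffle(indices)
    n_test = round(len(rows) * test_fraction)
    test = [rows[i] for i in indices[:n_test]]
    train = [rows[i] for i in indices[n_test:]]
    return train, test


def grid_search(param_grid, evaluate):
    """Try every combination in param_grid; return (best_params, best_score).

    evaluate(params) -> score, higher is better.
    """
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical grid and scorer standing in for LightGBM training + validation.
grid = {"num_leaves": [15, 31, 63], "learning_rate": [0.05, 0.1]}


def toy_score(params):
    return -abs(params["num_leaves"] - 31) - 10 * abs(params["learning_rate"] - 0.05)


train, test = train_test_split(list(range(100)))
print(len(train), len(test), grid_search(grid, toy_score))
```

In a real pipeline, `evaluate` would score candidates on the training portion only (for example with cross-validation), so that the 20% test set stays untouched until the final evaluation.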

This study introduces a misclassification cost index to assess the classification effectiveness of the model more comprehensively. In credit evaluation, two types of misclassification can occur: misjudging a low-risk enterprise as high-risk (false positive, FP), and misjudging a high-risk enterprise as low-risk (false negative, FN). The two errors carry different costs: existing research indicates that in traditional offline lending, the loss from the second type of error may be 5 to 20 times that of the first, meaning that failing to identify borrowers with poor credit is far more costly. Therefore, this study sets the cost ratio of the first to the second type of error at 1:10 and constructs a corresponding misclassification cost index to measure the model's overall ability to classify credit groups. The smaller the index, the better the model's overall classification effectiveness.
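The excerpt does not give the formula of the misclassification cost index, so the sketch below assumes one common form: the 1:10 cost ratio applied to the false positive and false negative rates, with label 1 denoting a high-risk enterprise. Under a different normalization (for example, weighted error counts divided by the number of samples), the absolute values would differ from those reported, although lower would still mean better.

```python
def confusion_counts(y_true, y_pred):
    """(TP, TN, FP, FN) with label 1 = high credit risk (the positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def misclassification_cost(y_true, y_pred, cost_fp=1.0, cost_fn=10.0):
    """Assumed cost index: cost_fp * FPR + cost_fn * FNR (lower is better)."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    fpr = fp / (fp + tn) if fp + tn else 0.0
    fnr = fn / (fn + tp) if fn + tp else 0.0
    return cost_fp * fpr + cost_fn * fnr


# One FP among 4 low-risk firms, one FN among 2 high-risk firms.
print(misclassification_cost([0, 0, 0, 0, 1, 1], [0, 0, 0, 1, 1, 0]))  # 5.25
```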

To determine the optimal feature subset for the third-party enterprise dataset, LightGBM was trained on subsets of the features ranked by importance, as shown in Table 6. The results indicate that the model performs best across the evaluation metrics with 13 features. Compared with the full feature set, this subset improves accuracy, recall, and F1 score. As the number of features is reduced further, performance declines, indicating that the loss of feature information has an increasingly large effect on the model. The 13-feature subset therefore offers the best trade-off between predictive power and interpretability while avoiding redundancy.
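The subset search amounts to evaluating the top-k features of the ranking for decreasing k and keeping the best k. In the sketch below, `toy_evaluate` is a hypothetical placeholder for training LightGBM on the given columns and returning a validation metric (higher is better).

```python
def best_feature_subset(ranked_features, evaluate, min_features=1):
    """Score the top-k features for k from all features down to min_features.

    ranked_features: most important first (e.g. the combined SHAP/LIME ranking).
    evaluate(features) -> score, higher is better.
    Ties go to the larger subset, because it is evaluated first.
    """
    scores_by_k = {}
    for k in range(len(ranked_features), min_features - 1, -1):
        scores_by_k[k] = evaluate(ranked_features[:k])
    best_k = max(scores_by_k, key=scores_by_k.get)
    return best_k, scores_by_k[best_k], scores_by_k


# Hypothetical scorer that peaks at four features; a real one would train
# LightGBM on these columns and return, e.g., validation F1.
def toy_evaluate(features):
    return -abs(len(features) - 4)


best_k, best_score, _ = best_feature_subset(list("abcdefg"), toy_evaluate)
print(best_k, best_score)
```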

The experimental results on the third-party enterprise dataset are presented in Table 7. Compared with the traditional LightGBM model, introducing the weight adjustment strategy raises the false positive rate (FPR) but substantially lowers the false negative rate (FNR), improving the model's ability to identify high-risk enterprises. The adjustment also reduces the overall misclassification cost from 0.884 to 0.431, demonstrating the effectiveness of incorporating loss preferences.
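The excerpt does not detail how the weights are adjusted. One common realization, sketched here as an assumption, gives each training sample a weight equal to the cost of misclassifying it (1 for low-risk, 10 for high-risk); LightGBM's scikit-learn interface accepts such weights through the `sample_weight` argument of `fit`. For an idealized, perfectly fitted probability model, training with these weights and thresholding at 0.5 is equivalent to thresholding the unweighted probability at cost_fp / (cost_fp + cost_fn) = 1/11, which shows directly why FPR rises while FNR falls.

```python
def cost_sensitive_weights(y, cost_fp=1.0, cost_fn=10.0):
    """Per-sample weights: high-risk samples (label 1) carry the false-negative cost."""
    return [cost_fn if label == 1 else cost_fp for label in y]


def cost_threshold(cost_fp=1.0, cost_fn=10.0):
    """Flag high risk when p >= cost_fp / (cost_fp + cost_fn); this minimizes
    expected cost when p is a calibrated probability of high risk."""
    return cost_fp / (cost_fp + cost_fn)


def error_rates(y_true, scores, threshold):
    """Return (FPR, FNR) when scores >= threshold are flagged as high risk."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return fp / y_true.count(0), fn / y_true.count(1)


# Hypothetical labels and predicted probabilities of high risk.
y = [0, 0, 0, 0, 1, 1]
p = [0.05, 0.20, 0.30, 0.60, 0.15, 0.80]
print(cost_sensitive_weights(y))
print(error_rates(y, p, 0.5))               # (0.25, 0.5)
print(error_rates(y, p, cost_threshold()))  # (0.75, 0.0)
```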

However, the improvement in risk detection comes at the expense of classification accuracy, which drops from 0.977 to 0.879. This indicates that the model sacrifices some overall accuracy to prioritize minimizing false negatives. After integrating the Bagging framework, accuracy is not only restored but slightly improved to 0.980, while maintaining a comparably low misclassification cost (0.433). These results suggest that the combined strategy achieves a better balance between accuracy and cost sensitivity, effectively enhancing high-risk enterprise detection without significantly increasing overall misclassification costs.
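The combination of cost weighting and Bagging can be sketched end to end in plain Python. To stay self-contained, the sketch swaps the paper's LightGBM base learner for a cost-weighted decision stump (a one-split tree); each stump is trained on a bootstrap resample with assumed 1:10 sample weights, and predictions are combined by majority vote. The number of estimators and the tie rule are illustrative choices, not the paper's settings.

```python
import random


def fit_weighted_stump(X, y, w):
    """One-split classifier minimizing weighted training error (illustrative
    stand-in for the LightGBM base learner)."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            for sign in (1, -1):
                err = sum(
                    wi for xi, yi, wi in zip(X, y, w)
                    if (1 if sign * (xi[j] - t) >= 0 else 0) != yi
                )
                if best is None or err < best[0]:
                    best = (err, j, t, sign)
    _, j, t, sign = best
    return lambda x: 1 if sign * (x[j] - t) >= 0 else 0


def fit_bagging(X, y, n_estimators=25, cost_fp=1.0, cost_fn=10.0, seed=0):
    """Train n_estimators cost-weighted stumps, each on a bootstrap resample."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # n rows drawn with replacement
        Xb = [X[i] for i in idx]
        yb = [y[i] for i in idx]
        wb = [cost_fn if label == 1 else cost_fp for label in yb]
        models.append(fit_weighted_stump(Xb, yb, wb))
    return models


def predict_bagging(models, x):
    """Majority vote of the ensemble; ties go to the high-risk class."""
    votes = sum(m(x) for m in models)
    return 1 if 2 * votes >= len(models) else 0


# Toy one-feature data: firms with x >= 10 are high risk.
X = [[float(i)] for i in range(20)]
y = [1 if i >= 10 else 0 for i in range(20)]
models = fit_bagging(X, y)
print([predict_bagging(models, [v]) for v in (0.0, 5.0, 15.0, 19.0)])
```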

The experimental results on the official dataset are summarized in Table 8. The traditional LightGBM model achieves an accuracy of 0.856 with a misclassification cost of 5.611. With the weight adjustment strategy, accuracy falls to 0.822, but the cost drops substantially to 4.866. Thus, although overall accuracy declines, the reduction in false negatives effectively lowers the overall misclassification cost.

When the Bagging framework is integrated, accuracy improves to 0.848, partially recovering from the decline observed under weight adjustment alone. Meanwhile, the cost increases slightly to 4.964, higher than the 4.866 of the weight-adjusted model but still substantially lower than the 5.611 of the traditional LightGBM. These results demonstrate that Bagging mitigates the accuracy loss caused by weight adjustment while maintaining a lower misclassification cost, thereby enhancing the robustness and practical applicability of the proposed model in credit risk assessment.
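Because the cost indices of the two datasets sit on very different scales, expressing each reported cost relative to the traditional LightGBM baseline makes them easier to compare:

$$
\Delta = \frac{C_{\text{LightGBM}} - C_{\text{model}}}{C_{\text{LightGBM}}}
$$

$$
\begin{aligned}
\text{Third-party, weight adjustment:} &\quad (0.884 - 0.431)/0.884 \approx 51.2\% \\
\text{Third-party, weight adjustment + Bagging:} &\quad (0.884 - 0.433)/0.884 \approx 51.0\% \\
\text{Official, weight adjustment:} &\quad (5.611 - 4.866)/5.611 \approx 13.3\% \\
\text{Official, weight adjustment + Bagging:} &\quad (5.611 - 4.964)/5.611 \approx 11.5\%
\end{aligned}
$$

Adding Bagging therefore gives back about 0.2 percentage points of the relative cost reduction on the third-party dataset and about 1.7 points on the official dataset, in exchange for accuracy gains of 0.101 (0.879 to 0.980) and 0.026 (0.822 to 0.848), respectively.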

In summary, this study demonstrates that integrating a weight adjustment strategy with a Bagging ensemble framework effectively balances accuracy and misclassification cost. The weight adjustment strategy substantially reduces false negatives and improves the detection of high-risk enterprises, at the expense of overall accuracy. Incorporating Bagging restores accuracy on the third-party dataset (0.980 versus 0.977 for the traditional model) and largely recovers it on the official dataset (0.848 versus 0.856), while the misclassification cost remains substantially lower than that of the traditional model. The proposed strategy thus achieves a robust trade-off between accuracy and cost sensitivity, enhancing both the stability and the practical applicability of credit risk assessment in regulatory contexts.

Author Contributions

Conceptualization, M.L. and W.J.; methodology, M.L. and W.J.; software, W.J.; validation, M.L., W.J. and J.Z.; formal analysis, M.L.; investigation, W.J.; resources, M.L. and J.Z.; data curation, W.J.; writing—original draft preparation, W.J.; writing—review and editing, M.L. and W.J.; visualization, M.L.; supervision, M.L.; project administration, J.Z.; funding acquisition, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China (2021YFC3340501).

Data Availability Statement

Part of the data used in this study was collected from publicly available websites (https://www.tianyancha.com/, accessed on 8 January 2025; http://credit.customs.gov.cn/, accessed on 8 January 2025) and processed by the authors. Another dataset was obtained from official authorities and is not publicly available due to confidentiality restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| SHAP | SHapley Additive exPlanations |
| LIME | Local Interpretable Model-agnostic Explanations |
| LR | Logistic Regression |
| NB | Naive Bayes |
| DT | Decision Trees |
| SVM | Support Vector Machines |
| RF | Random Forest |
| XGBoost | eXtreme Gradient Boosting |
| LightGBM | Light Gradient Boosting Machine |
| GBDT | Gradient Boosting Decision Tree |
| AUC | Area Under the Curve |

References

- Wang, G.L. Research on Improving the Credit Classification Supervision of Foreign Trade Enterprises in the Field of Foreign Exchange: A Case Study of Zhoukou City, Henan Province. Credit Ref. 2023, 41, 73–79.

- Wu, Z.Y.; Jin, L.M.; Han, X.L.; Wang, Z.; Wu, B. Research on Financial Crisis Early Warning Model for Foreign Trade Listed Companies Based on SMOTE-XGBoost Algorithm. Comput. Eng. Appl. 2024, 60, 281–289.

- Yao, D.J.; Gu, Y.; Chen, W. Research on Credit Risk Evaluation of Small and Medium-sized Enterprises Based on RF-LSMA-SVM Model. Ind. Technol. Econ. 2023, 42, 85–94.

- Lu, H.; Wei, Y.; Jiao, L.D. Credit Card Post-loan Risk Rating Model and Empirical Research Based on GA-BP Neural Network. Oper. Res. Manag. Sci. 2023, 32, 192–198.

- Liu, H.B.; Liu, J.Y. Research on Credit Risk Evaluation of China’s Real Estate Enterprises. Credit Ref. 2023, 41, 66–72.

- Machado, M.R.; Karray, S. Assessing credit risk of commercial customers using hybrid machine learning algorithms. Expert Syst. Appl. 2022, 200, 116889.

- Sun, Y.C. Research on Banks’ Optimal Credit Strategy for MSMEs Under Information Asymmetry—Default Rate Measurement Model Based on Logistic Regression. J. Financ. Dev. Res. 2021, 6, 78–84.

- Meng, J.; Li, T.; Yuan, Z.M. Credit Risk Assessment of SMES Based on ODR-BADASYN-SVM. J. Financ. Dev. Res. 2018, 1, 24–31.

- Zhang, H.; Shi, Y.; Yang, X. A firefly algorithm modified support vector machine for the credit risk assessment of supply chain finance. Res. Int. Bus. Financ. 2021, 58, 101482.

- Zhang, D.; Tang, Y.; Yan, X. Supply chain risk management of badminton supplies company using decision tree model assisted by fuzzy comprehensive evaluation. Expert Syst. 2024, 41, e13275.

- Wang, J.; Rong, W.; Zhang, Z.; Mei, D. Credit debt default risk assessment based on the XGBoost algorithm: An empirical study from China. Wirel. Commun. Mob. Comput. 2022, 2022, 8005493.

- Mitra, R.; Dongre, A.; Dangare, P.; Goswami, A.; Tiwari, M.K. Knowledge graph driven credit risk assessment for micro, small and medium-sized enterprises. Int. J. Prod. Res. 2024, 62, 4273–4289.

- Li, J.J.; Li, T. Research on Enterprise Credit Risk Assessment from the Perspective of Corporate Governance: Based on BP-Adaboost Model. Commun. Financ. Account. 2018, 05, 100–104.

- Yu, L.A.; Zhang, Y.D. Weight-selected attribute bagging based on association rules for credit dataset classification. Syst. Eng. Theory Pract. 2020, 40, 366–372.

- Liu, C.; Shi, Y.; Xie, W.J.; Bao, X.Z. A novel approach to screening patents for securitization: A machine learning-based predictive analysis of high-quality basic asset. Kybernetes 2024, 53, 763–778.

- Zhang, L.; Song, Q. Credit Evaluation of SMEs Based on GBDT-CNN-LR Hybrid Integrated Model. Wirel. Commun. Mob. Comput. 2022, 2022, 5251228.

- Sun, J.; Li, J.; Fujita, H. Multi-class imbalanced enterprise credit evaluation based on asymmetric bagging combined with light gradient boosting machine. Appl. Soft Comput. 2022, 130, 109637.

- Zhang, L.; Song, Q. Multimodel integrated enterprise credit evaluation method based on attention mechanism. Comput. Intell. Neurosci. 2022, 2022, 8612759.

- Jia, D.; Wu, Z. Application of Machine Learning in Enterprise Risk Management. Secur. Commun. Netw. 2022, 2022, 4323150.

- Zhang, W.; Yan, S.; Li, J.; Peng, R.; Tian, X. Deep reinforcement learning imbalanced credit risk of SMEs in supply chain finance. Ann. Oper. Res. 2024, 1–31.

- Song, Y.; Wang, Y.; Ye, X.; Zaretzki, R.; Liu, C. Loan default prediction using a credit rating-specific and multi-objective ensemble learning scheme. Inf. Sci. 2023, 629, 599–617.

- Salih, A.M.; Raisi-Estabragh, Z.; Galazzo, I.B.; Radeva, P.; Petersen, S.E.; Lekadir, K.; Menegaz, G. A perspective on explainable artificial intelligence methods: SHAP and LIME. Adv. Intell. Syst. 2025, 7, 2400304.

- Teng, H.W.; Kang, M.H.; Lee, I.H.; Bai, L.C. Bridging accuracy and interpretability: A rescaled cluster-then-predict approach for enhanced credit scoring. Int. Rev. Financ. Anal. 2024, 91, 103005.

- Park, S.; Park, K.; Shin, H. Network based Enterprise Profiling with Semi-Supervised Learning. Expert Syst. Appl. 2024, 238, 121716.

- Xia, Y.; Xu, T.; Wei, M.X. Predicting Chain’s Manufacturing SME Credit Risk in Supply Chain Finance Based on Machine Learning Methods. Sustainability 2023, 15, 1087.

- Jiang, H.; Cui, J.; Liu, Y. Credit Risk Measurement of Real Estate Enterprises Based on the Random Forest Model. J. Nonlinear Convex Anal. 2025, 26, 1593–1604.

- Chang, V.; Xu, Q.A.; Akinloye, S.H.; Benson, V.; Hall, K. Prediction of bank credit worthiness through credit risk analysis: An explainable machine learning study. Ann. Oper. Res. 2024, 1–25.

- Xie, X.; Zhang, J.; Luo, Y.; Gu, J.; Li, Y. Enterprise credit risk portrait and evaluation from the perspective of the supply chain. Int. Trans. Oper. Res. 2024, 31, 2765–2795.

- Zhao, L.; Yang, S.; Wang, S.; Shen, J. Research on PPP enterprise credit dynamic prediction model. Appl. Sci. 2022, 12, 10362.

- Meng, Y.; Yang, N.; Qian, Z.; Zhang, G.Y. What makes an online review more helpful: An interpretation framework using XGBoost and SHAP values. J. Theor. Appl. Electron. Commer. Res. 2020, 16, 466–490.

- Abdullah, T.A.; Zahid, M.S.; Turki, A.F.; Ali, W.; Jiman, A.A.; Abdulaal, M.J.; Sobahi, N.M.; Attar, E.T. Sig-lime: A signal-based enhancement of lime explanation technique. IEEE Access 2024, 12, 52641–52658.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).